SAN DIEGO – An automated outbreak detection system was popular among infection preventionists and detected pathogenic clusters about 5 days sooner than did hospitals themselves, researchers said at an annual scientific meeting on infectious diseases.

“The vast majority of hospitals were very pleased to expand surveillance beyond multidrug-resistant organisms, and most thought this system would improve their ability to detect outbreaks and streamline their work,” said Dr. Meghan Baker of Harvard Medical School and Harvard Pilgrim Health Care Institute, Boston.

Patients in acute-care hospitals acquired almost 782,000 healthcare-associated infections (HAIs) in 2011, according to the Centers for Disease Control and Prevention.

Traditionally, infection preventionists at these hospitals look for HAIs by identifying temporal or spatial clusters of “a limited number of prespecified pathogens,” Dr. Baker said. But some real clusters do not meet these empirical rules, leading to more cases and potentially severe consequences for patients. Automated HAI outbreak detection systems can help, but not if they constantly trigger false alarms or, conversely, are so specific that they miss outbreaks.

Using the Premier SafetySurveillor infection control tool and free statistical software, Dr. Baker and her associates analyzed a combined 83 years of historical microbiology data from 44 hospitals. The system signaled for any cluster involving at least three cases, even if cases occurred by chance less than once a year, she said. The researchers compared the results with outbreak data submitted by a convenience sample of hospitals that used their usual surveillance methods.

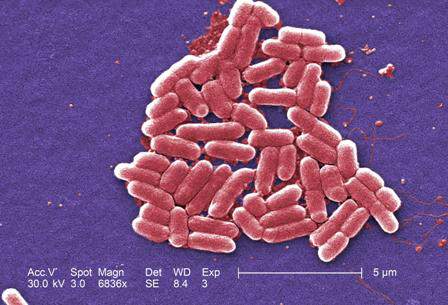

The automated approach identified 230 clusters. Most were detected based on antimicrobial resistance, but others shared the same ward or specialty service, and some produced more than one signal. Clusters most often involved Staphylococcus aureus, Enterococcus, Pseudomonas, Escherichia coli, and Klebsiella, but most organisms were not under routine surveillance. As a result, 89% of clusters detected by the automated tool were not detected by hospitals using their usual methods, she emphasized. “Some were not real outbreaks, as determined later by genetic typing,” she added. “Most real clusters were [methicillin-resistant S. aureus], Clostridium difficile, and some were resistant gram-negative rods.”

When surveyed, 76% of infection preventionists said the automated tool moderately or greatly improved their ability to detect outbreaks, Dr. Baker reported. They would have wanted notification about 81% of the clusters, and considered 47% to be moderately or highly concerning. Notably, 51% of clusters expanded after detection.

“If these had been detected in real time, it would have been possible to intervene and possibly curtail the outbreak,” and 237 (42%) of 559 infections might have been avoided if these interventions were successful, she said.

Dr. Baker and her associates reported their findings at the combined annual meetings of the Infectious Diseases Society of America, the Society for Healthcare Epidemiology of America, the HIV Medicine Association, and the Pediatric Infectious Diseases Society.

Dr. Baker reported no competing interests. Two of her associates reported financial relationships with Premier, Sage, Mölnlycke, and 3M.

AT IDWEEK 2015

Key clinical point: An automated outbreak detection system can augment traditional methods for detecting pathogenic clusters.

Major finding: The software tool identified clusters about 5 days sooner than did hospitals themselves.

Data source: Comparison of an automated outbreak detection tool with usual hospital surveillance methods, and surveys of infection preventionists.

Disclosures: Dr. Baker reported no competing interests. Two of her associates reported financial relationships with Premier, Sage, Mölnlycke, and 3M.