This transcript has been edited for clarity.

If a picture is worth a thousand words, then a CT scan of the chest might as well be Atlas Shrugged. When you think of the sheer information content in one of those scans, it becomes immediately clear that our usual method of CT scan interpretation must be leaving a lot on the table. After all, we can go through all that information and come out with simply “normal” and call it a day.

Of course, radiologists can glean a lot from a CT scan, but they are trained to look for abnormalities. They can find pneumonia, emboli, fractures, and pneumothoraces, but the presence or absence of life-threatening abnormalities is still just a fraction of the data contained within a CT scan.

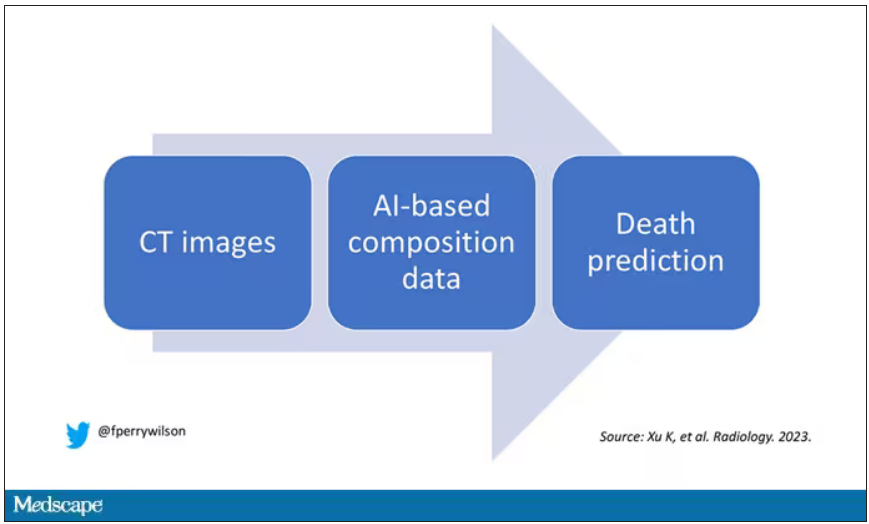

Pulling out more data from those images – data that may not indicate disease per se, but nevertheless tell us something important about patients and their risks – might just fall to those entities that are primed to take a bunch of data and interpret it in new ways: artificial intelligence (AI).

I’m thinking about AI and CT scans this week thanks to this study, appearing in the journal Radiology, from Kaiwen Xu and colleagues at Vanderbilt.

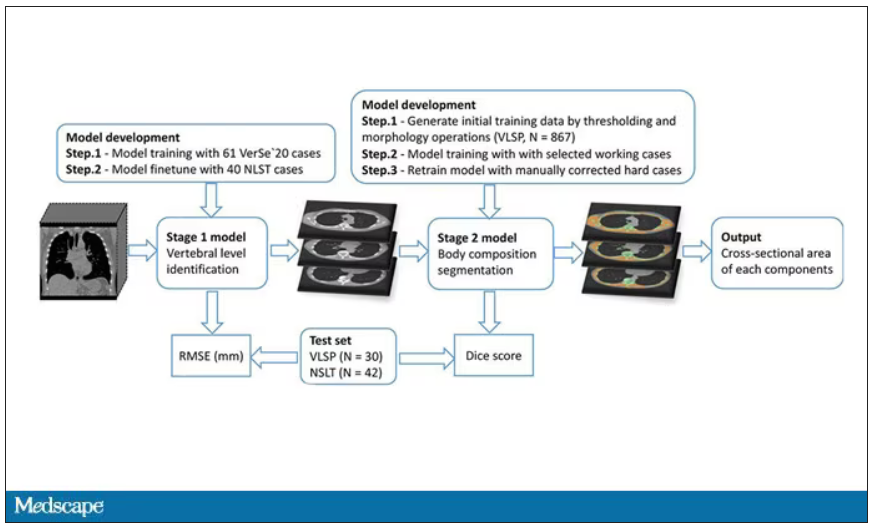

In a previous study, the team had developed an AI algorithm to take chest CT images and convert that data into information about body composition: skeletal muscle mass, fat mass, muscle lipid content – that sort of thing.

While the radiologists are busy looking for cancer or pneumonia, the AI can create a body composition report – two results from one data stream.

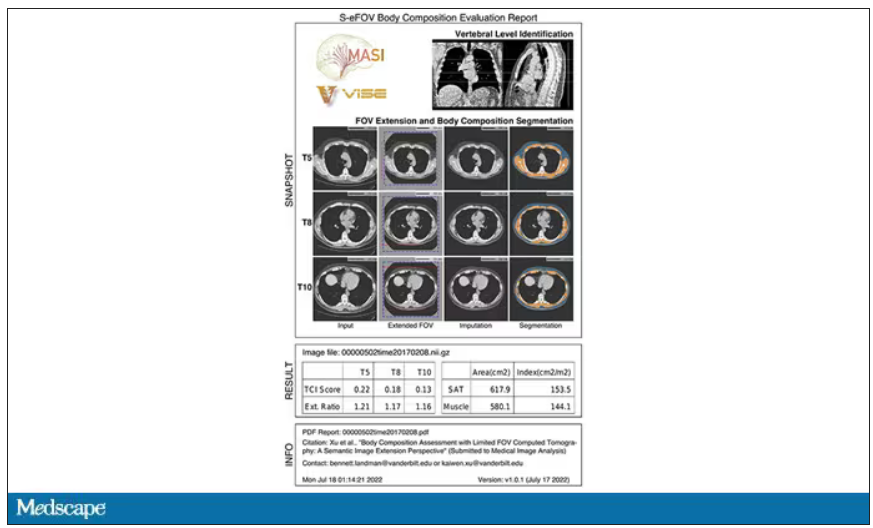

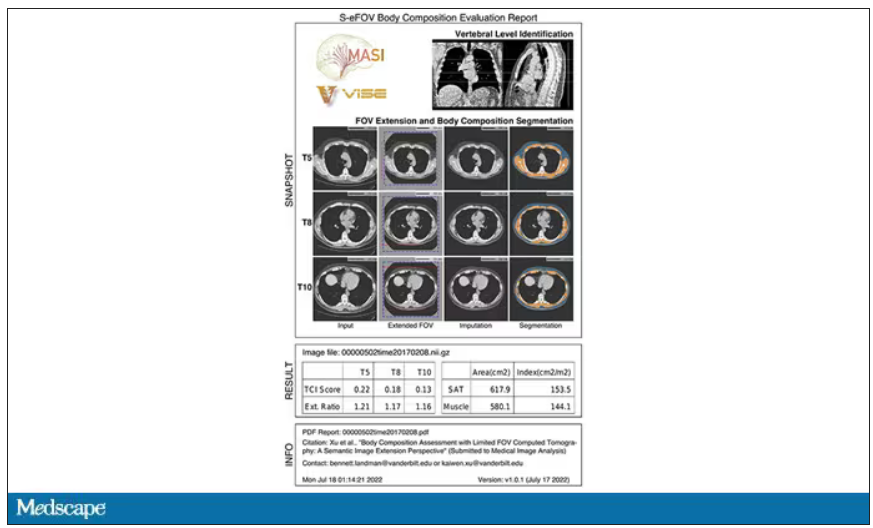

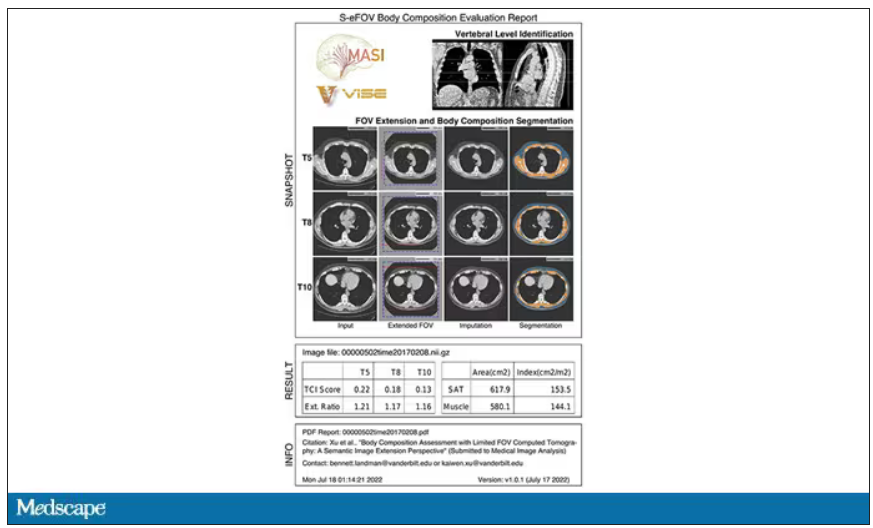

Here’s an example of a report, taken from the authors’ GitHub page, that was generated from a CT scan.

The cool thing here is that this is a clinically collected CT scan of the chest, not a special protocol designed to assess body composition. In fact, this comes from the low-dose lung cancer screening trial dataset.

As you may know, the U.S. Preventive Services Task Force recommends low-dose CT screening of the chest every year for those aged 50-80 with at least a 20 pack-year smoking history. These CT scans form an incredible dataset, actually, as they are all collected with nearly the same parameters. Obviously, the important thing to look for in these CT scans is whether there is early lung cancer. But the new paper asks, as long as we can get information about body composition from these scans, why don’t we? Can it help to risk-stratify these patients?

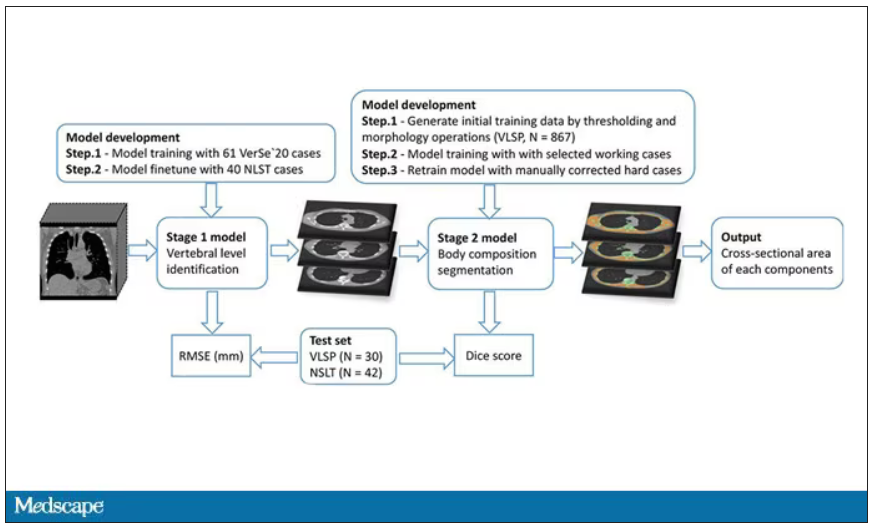

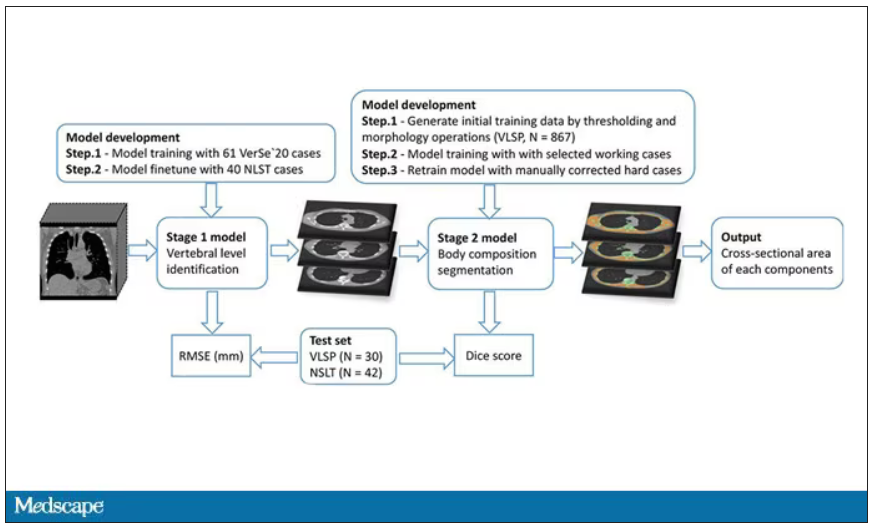

They took 20,768 individuals with CT scans done as part of the low-dose lung cancer screening trial and passed their scans through their automated data pipeline.

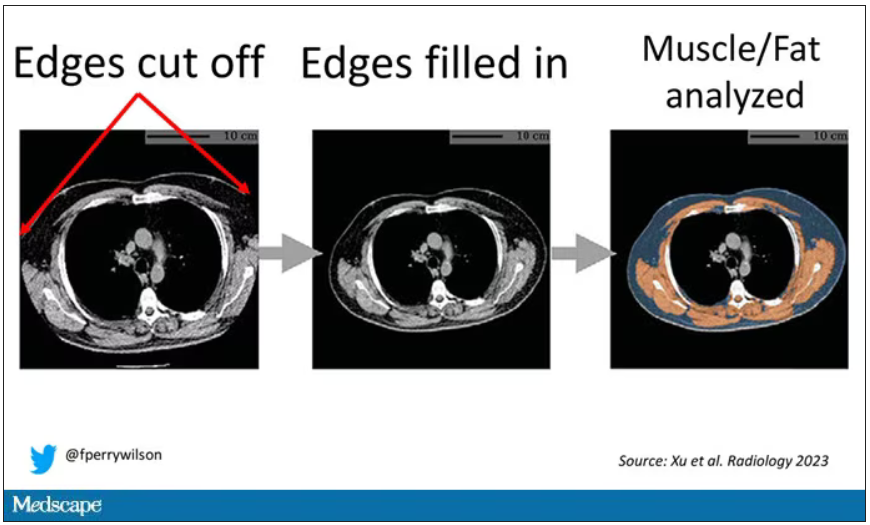

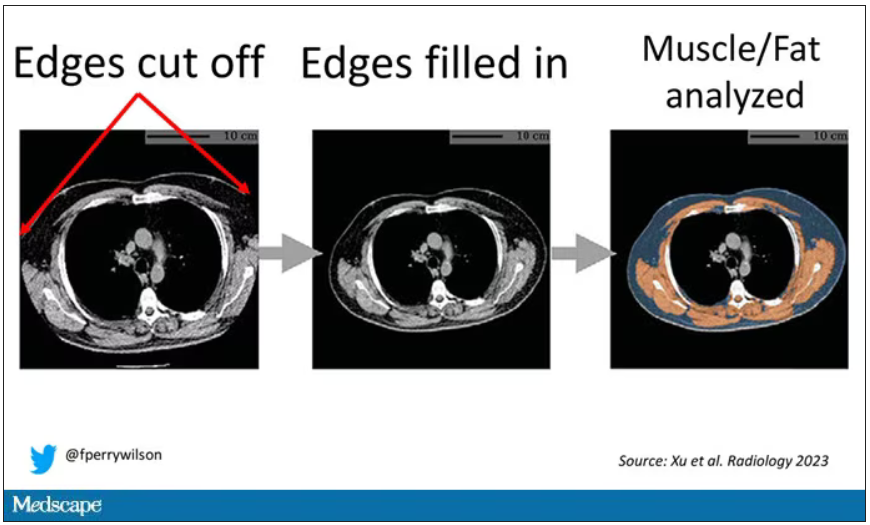

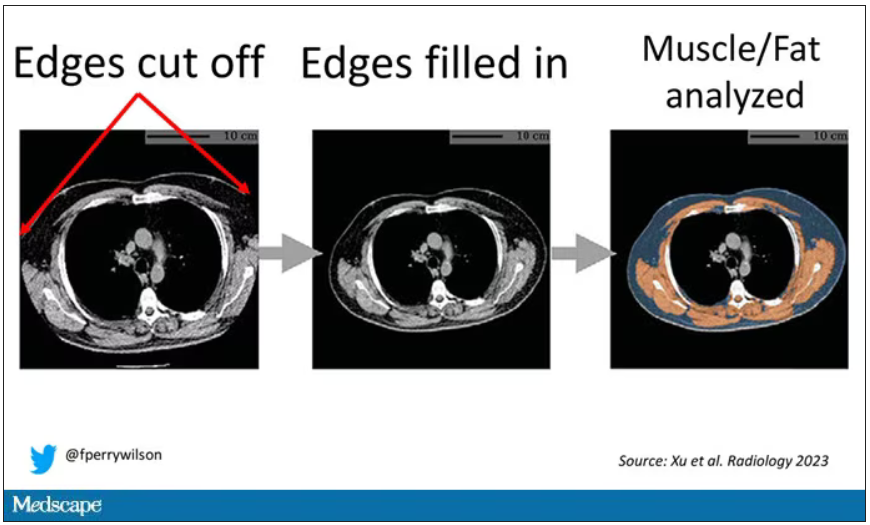

One cool feature here: Depending on body size, sometimes the edges of people in CT scans are not visible. That’s not a big deal for lung-cancer screening as long as you can see both lungs. But it does matter for assessment of muscle and body fat because that stuff lives on the edges of the thoracic cavity. The authors’ data pipeline actually accounts for this, extrapolating what the missing pieces look like from what can be seen. It’s quite clever.

On to some results. Would knowledge about the patient’s body composition help predict their ultimate outcome?

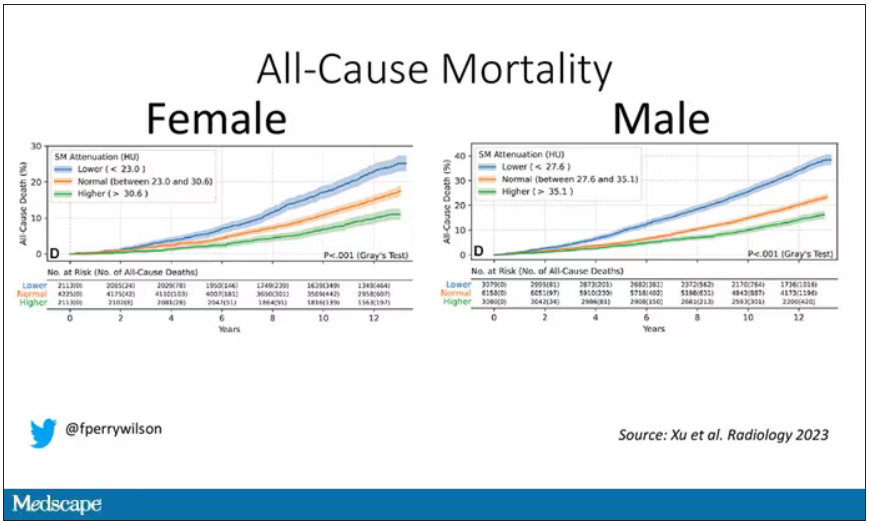

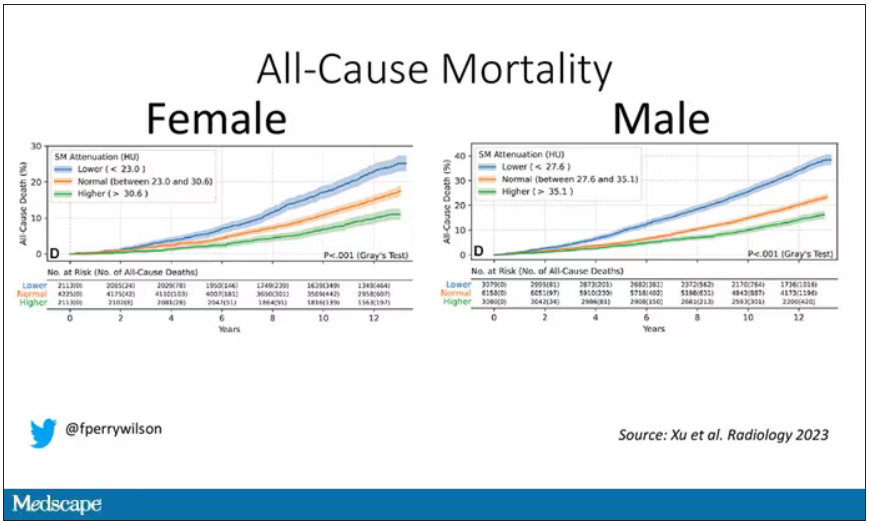

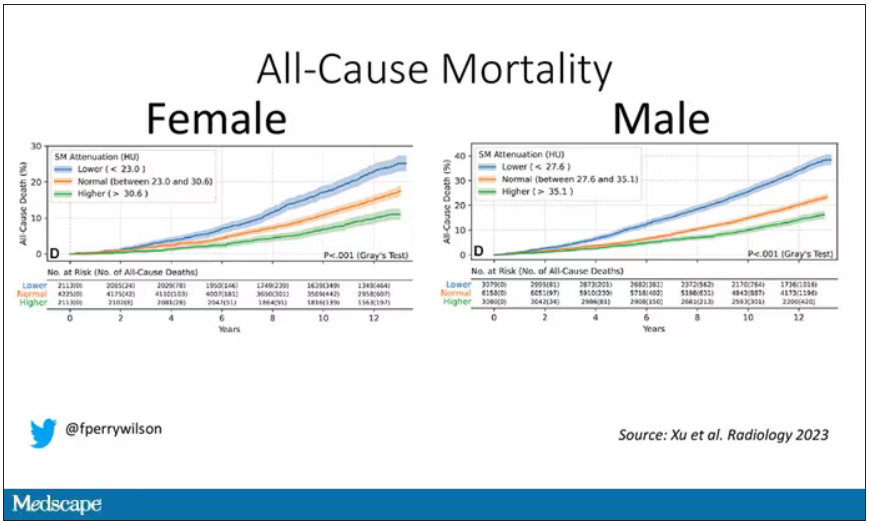

It would. And the best single predictor found was skeletal muscle attenuation – lower levels of skeletal muscle attenuation mean more fat infiltrating the muscle – so lower is worse here. You can see from these all-cause mortality curves that lower levels were associated with substantially worse life expectancy.

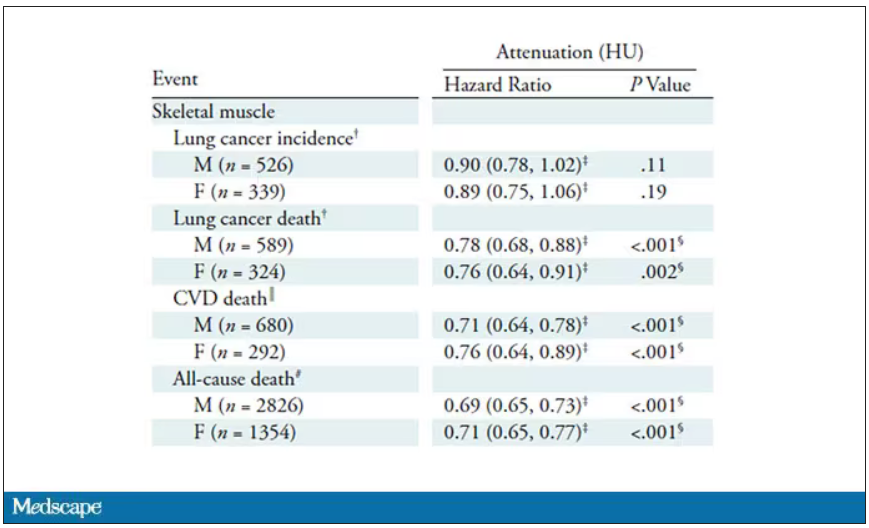

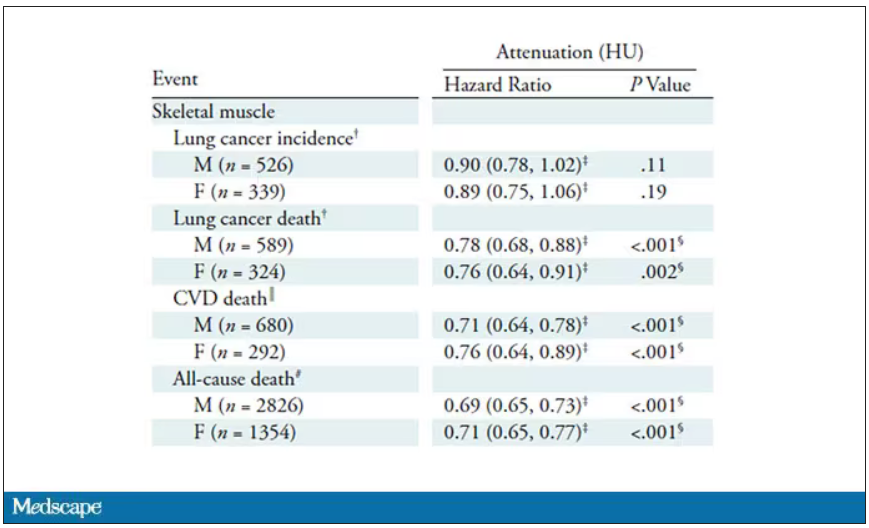

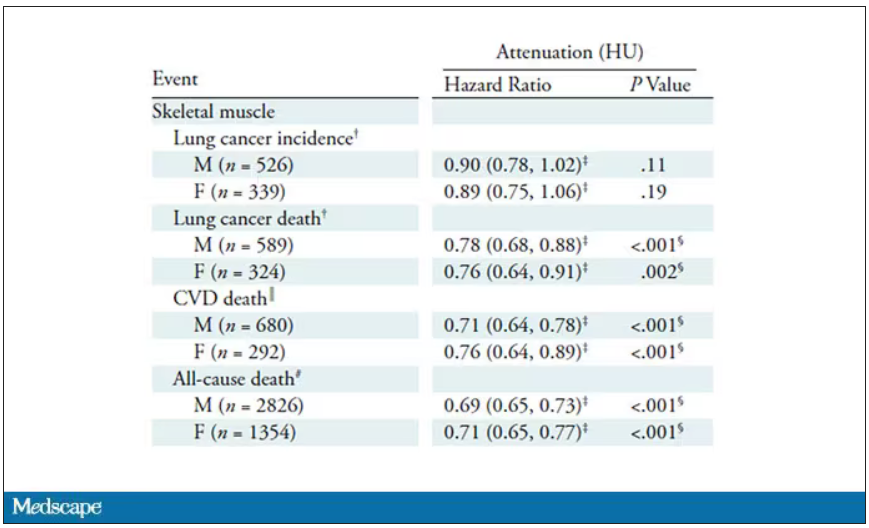

It’s worth noting that these are unadjusted curves. While AI prediction from CT images is very cool, we might be able to make similar predictions knowing, for example, the age of the patient. To account for this, the authors adjusted the findings for age, diabetes, heart disease, stroke, and coronary calcium score (also calculated from those same CT scans). Even after adjustment, skeletal muscle attenuation was significantly associated with all-cause mortality, cardiovascular mortality, and lung-cancer mortality – but not lung cancer incidence.

Those results tell us that there is likely a physiologic significance to skeletal muscle attenuation, and they provide a great proof-of-concept that automated data extraction techniques can be applied broadly to routinely collected radiology images.

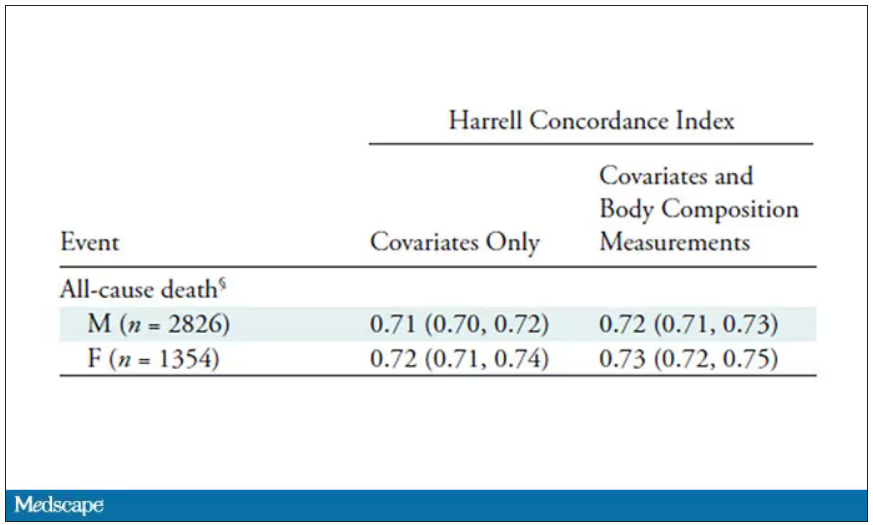

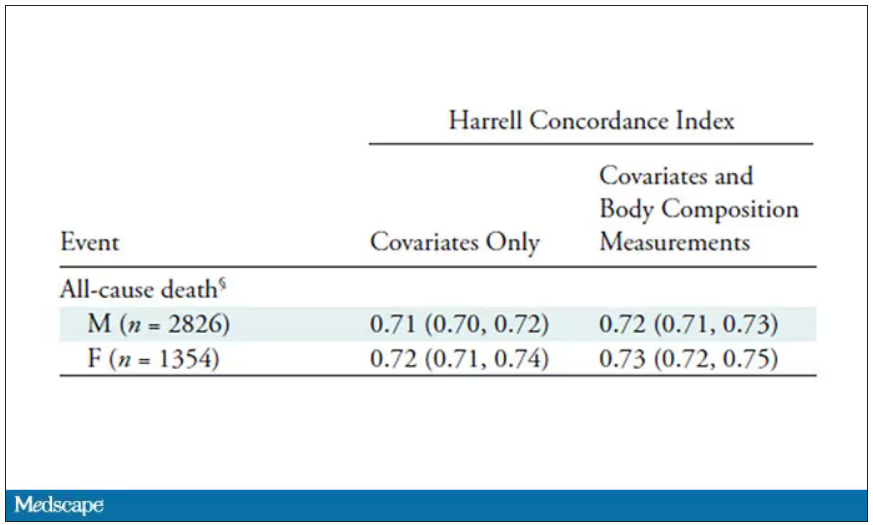

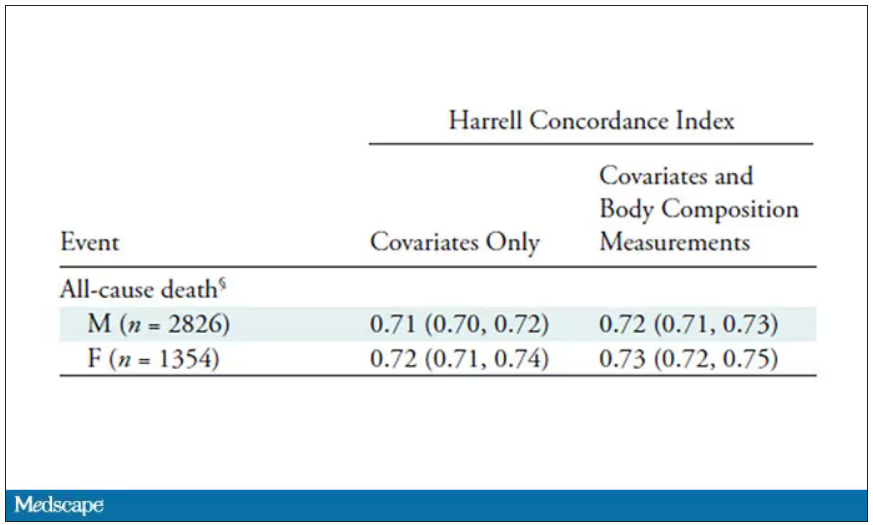

That said, it’s one thing to show that something is physiologically relevant. In terms of actually predicting outcomes, adding this information to a model that contains just those clinical factors like age and diabetes doesn’t actually improve things very much. We measure this with something called the concordance index. Given two individuals, it tells us how often the model correctly identifies the person who has the outcome of interest sooner, if at all. (You can probably guess that a coin flip scores 0.5, so that is effectively the worst score, and the best is 1.) A model without the AI data gives a concordance index for all-cause mortality of 0.71 or 0.72, depending on sex. Adding in the body composition data bumps that up only by a percent or so.
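If you want to see what the concordance index is actually counting, here is a minimal sketch in Python of the pairwise calculation (the version usually attributed to Harrell). To be clear, this is not the authors’ code: the function name and the toy data are made up for illustration, and skipping pairs with tied follow-up times is a simplification I am assuming for brevity.

```python
from itertools import combinations


def concordance_index(times, events, risks):
    """Pairwise concordance index for right-censored survival data.

    times  -- follow-up time for each person
    events -- 1 if the outcome (e.g. death) was observed, 0 if censored
    risks  -- model-predicted risk; higher should mean an earlier outcome
    """
    concordant = 0.0
    comparable = 0
    for i, j in combinations(range(len(times)), 2):
        if times[i] == times[j]:
            continue  # simplification (assumption): ignore tied follow-up times
        first, second = (i, j) if times[i] < times[j] else (j, i)
        if not events[first]:
            # The person with shorter follow-up was censored, so we cannot
            # know who had the outcome sooner; the pair is not comparable.
            continue
        comparable += 1
        if risks[first] > risks[second]:
            concordant += 1.0  # model ranked the earlier outcome as higher risk
        elif risks[first] == risks[second]:
            concordant += 0.5  # tied predictions count as a coin flip
    if comparable == 0:
        raise ValueError("no comparable pairs")
    return concordant / comparable


# Toy data (hypothetical): four people; the third is censored at time 6.
times = [2, 4, 6, 8]
events = [1, 1, 0, 1]

print(concordance_index(times, events, [0.9, 0.7, 0.4, 0.1]))  # 1.0, perfect ranking
print(concordance_index(times, events, [0.5, 0.5, 0.5, 0.5]))  # 0.5, no information
print(concordance_index(times, events, [0.1, 0.4, 0.7, 0.9]))  # 0.0, perfectly backwards
```

A model that is systematically backwards can land below 0.5, but since you could simply flip its predictions, 0.5 is the floor that matters in practice. Going from 0.71 to roughly 0.72 means the model orders about one more comparable pair correctly out of every hundred.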

This honestly feels a bit like a missed opportunity to me. The authors pass the imaging data through an AI to get body composition data and then see how that predicts death.

Why not skip the middleman? Train a model using the imaging data to predict death directly, using whatever signal the AI chooses: body composition, lung size, rib thickness – whatever.

I’d be very curious to see how that model might improve our ability to predict these outcomes. In the end, this is a space where AI can make some massive gains – not by trying to do radiologists’ jobs better than radiologists, but by extracting information that radiologists aren’t looking for in the first place.

F. Perry Wilson, MD, MSCE, is associate professor of medicine and public health and director of Yale’s Clinical and Translational Research Accelerator in New Haven, Conn. He reported no conflicts of interest.

A version of this article first appeared on Medscape.com.
