SAN FRANCISCO – Tumor response assessments differ widely, in ways that may affect treatment decisions, depending on whether Response Evaluation Criteria in Solid Tumors (RECIST) are used, suggests a study reported at the ASCO Gastrointestinal Cancers Symposium.

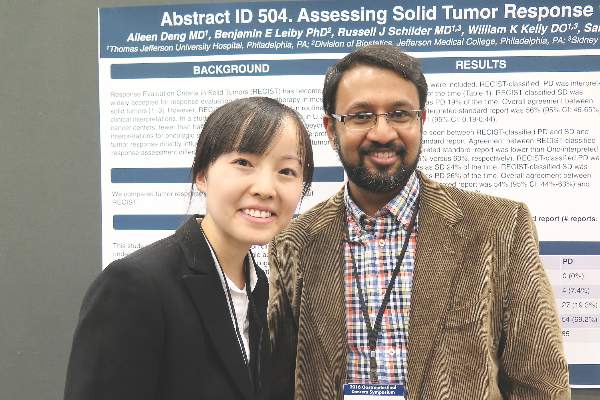

Researchers at Jefferson Medical College, Philadelphia, undertook a study using 292 scans performed in patients with solid tumors who were treated in clinical trials during 2013-2014.

Results presented in a poster session showed that the RECIST report of tumor status agreed with the oncologist’s interpretation of the standard radiology report, generated without these criteria, only about half the time.

“This study basically came about when I saw all of these [standard] reports calling it progression, and I would get the RECIST report back saying it was stable. And we are actually making treatment decisions” based on that, senior author Dr. Ashwin R. Sama of Jefferson Medical College, Philadelphia, said in an interview. “This has never been studied, although everybody knew that there probably is a difference between regular reads and RECIST reads.”

“The correlation was about 50% – that’s like the flip of a coin. And that really has a big impact on treatment,” he added. “You don’t want to take patients off treatment too soon if they have stable disease; and vice versa, if they have progression, you don’t want to keep them on chemo that is not working.”

In the study, a single radiologist read the scans using RECIST criteria and generated a report. Multiple radiologists then read the same scans without using these criteria and generated standard reports. An oncologist and a resident separately interpreted the standard reports to classify patients as having a complete response, a partial response, stable disease, or progressive disease.

Overall agreement between the RECIST report and the oncologist-interpreted standard report was just 56%. In 29% of cases of RECIST-classified progressive disease, the oncologist interpreted the standard report as showing stable disease. On the other hand, in 19% of cases of RECIST-classified stable disease, the oncologist interpreted the standard report as showing progressive disease.

Findings were similar when the resident interpreted the standard report. Overall agreement with the RECIST report was just 54%. In 24% of cases of RECIST-classified progressive disease, the resident interpreted the standard report as showing stable disease. In 26% of cases of RECIST-classified stable disease, the resident interpreted the standard report as showing progressive disease.

“Clinical trials commonly use RECIST as a way to interpret tumor response. However, outside of clinical trial settings, RECIST criteria are less commonly used, especially if you are in a nonacademic institution,” commented first author Dr. Aileen Deng of the Thomas Jefferson University Hospital, Philadelphia.

Variability in standard reports likely contributes to considerable variability in practice, she speculated. “There need to be better ways to standardize how we interpret serial images that are done for our patients with solid tumors,” she concluded.

Dr. Sama and Dr. Deng disclosed that they had no relevant conflicts of interest.

AT THE ASCO GASTROINTESTINAL CANCERS SYMPOSIUM

Key clinical point: Failure to use RECIST criteria may result in inappropriate stopping or continuation of treatment.

Major finding: Agreement between the RECIST report and the oncologist’s interpretation of the standard radiology report was only 56%.

Data source: A single-center study of 292 scans performed in patients with solid tumors who were being treated in clinical trials.

Disclosures: Dr. Sama disclosed that he had no relevant conflicts of interest. Dr. Deng disclosed that she had no relevant conflicts of interest.