AGA issues position statements on reducing CRC burden

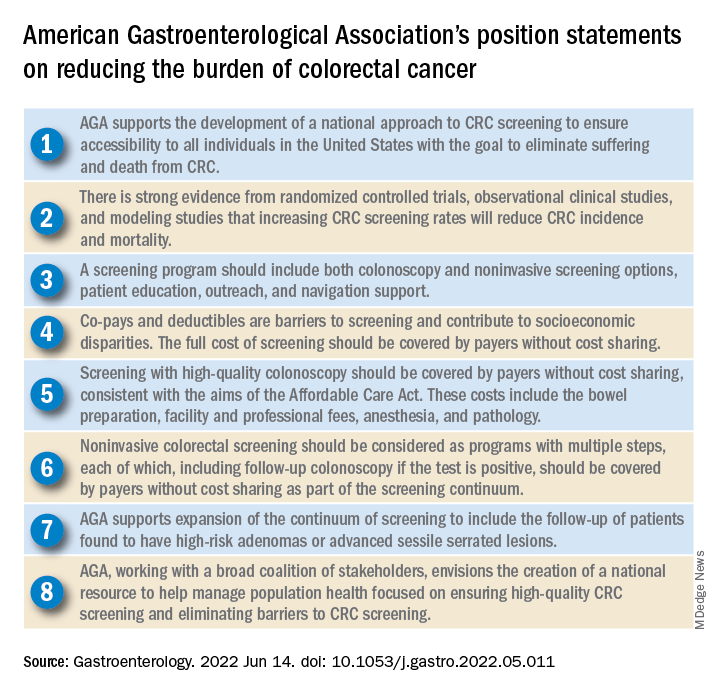

The American Gastroenterological Association has published eight position statements aimed at reducing the burden of colorectal cancer (CRC).

The evidence-based statements, published in Gastroenterology, call for a national approach to CRC screening, outline the elements of a high-quality screening program, and make clear that payers should cover all costs, from bowel prep through pathology, plus follow-up for high-risk patients.

“There is strong evidence that CRC screening is effective [at reducing CRC incidence and mortality] ... but less than 70% of eligible individuals have been screened,” wrote the authors, led by David Lieberman, MD, a member of the AGA Executive Committee on the Screening Continuum, of Oregon Health and Science University, Portland. They also noted the recent expansion of screening eligibility to include individuals aged 45-49 years.

“CRC screening saves lives, but only if people get screened,” Dr. Lieberman said in a press release from the AGA. “Cost sharing is an important barrier to screening, which contributes to racial, ethnic and socioeconomic inequities in colorectal cancer outcomes. The full cost of screening – including noninvasive tests and follow-up colonoscopies – should be covered without cost to patients.”

He added: “AGA wishes to collaborate with stakeholders to eliminate obstacles to screening, which disproportionately impact those with low income and lack of insurance.”

Eliminating disparities in screening

Among the position statements, Dr. Lieberman and colleagues first called for “development of a national approach to CRC screening” to patch gaps in access across the United States.

“Systematic outreach occurs infrequently,” they noted. “CRC screening prevalence is much lower among individuals who do not have access to health care due to lack of insurance, do not have a primary care provider, or are part of a medically underserved community.”

According to Dr. Lieberman and colleagues, the AGA is also “working with a broad coalition of stakeholders,” such as the American Cancer Society, payers, patient advocacy groups, and others, to create a “national resource ... focused on ensuring high-quality CRC screening and eliminating barriers to CRC screening.”

Specifically, the coalition will work to collectively tackle “disparities created by social determinants of health, which includes lack of access to screening, transportation, and even work hours and child care.”

“The AGA recognizes that moving the needle to achieve a CRC screening participation goal of 80% will take a village,” they wrote.

Elements of high-quality CRC screening

The investigators went on to describe the key features of a high-quality CRC screening program, including “colonoscopy and noninvasive screening options, patient education, outreach, and navigation support.”

Dr. Lieberman and colleagues pointed out that offering more than one type of screening test “acknowledges patient preferences and improves participation.”

Certain noninvasive methods, such as fecal immunochemical testing (FIT), eliminate “important barriers” to screening, they noted, such as the need for special preparation, time off work, and transportation to a medical facility.

For individuals who have high-risk adenomas (HRAs) or advanced sessile serrated lesions (SSLs), screening should be expanded to include follow-up, the investigators added.

“Evidence from a systematic review demonstrates that individuals with HRAs at baseline have a 3- to 4-fold higher risk of incident CRC during follow-up compared with individuals with no adenoma or low-risk adenomas,” they wrote. “There is also evidence that individuals with advanced SSLs have a three- to fourfold higher risk of CRC, compared with individuals with nonadvanced SSLs.”

Payers should cover costs

To further improve access to care, payers should cover the full costs of CRC screening because “copays and deductibles are barriers to screening and contribute to socioeconomic disparities” that “disproportionately impact those with low income and lack of insurance,” according to Dr. Lieberman and colleagues.

They noted that the Affordable Care Act “eliminated copayments for preventive services,” yet a recent study showed that almost half of patients with commercial insurance and more than three-quarters of patients with Medicare still bear some of the cost of CRC screening.

The investigators made clear that payers need to cover costs from start to finish, including “bowel preparation, facility and professional fees, anesthesia, and pathology,” as well as follow-up screening for high-risk patients identified by noninvasive methods.

“Noninvasive colorectal screening should be considered as programs with multiple steps, each of which, including follow-up colonoscopy if the test is positive, should be covered by payers without cost sharing as part of the screening continuum,” Dr. Lieberman and colleagues wrote.

Changes underway

According to Steven Itzkowitz, MD, professor of medicine and oncological sciences and director of the gastroenterology fellowship training program at the Icahn School of Medicine at Mount Sinai, New York, the AGA publication is important because it “consolidates many of the critical issues related to decreasing the burden of colorectal cancer in the United States.”

Dr. Itzkowitz noted that changes are already underway to eliminate cost as a barrier to screening.

“The good news is that, in the past year, the Departments of Health & Human Services, Labor, and Treasury declared that cost sharing should not be imposed, and plans are required to cover screening colonoscopy with polyp removal and colonoscopy that is performed to follow up after an abnormal noninvasive CRC screening test,” Dr. Itzkowitz said in an interview. “Many plans are following suit, but it will take time for this coverage to take effect across all plans.”

For individual gastroenterologists who would like to do their part in reducing screening inequity, Dr. Itzkowitz suggested leveraging noninvasive testing, as the AGA recommends.

“This publication is the latest to call for using noninvasive, stool-based testing in addition to colonoscopy,” Dr. Itzkowitz said. “FIT and multitarget stool DNA tests all have proven efficacy in this regard, so gastroenterologists should have those conversations with their patients. GIs can also make it easier for patients to complete colonoscopy by developing patient navigation programs, direct access referrals, and systems for communicating with primary care providers for easier referrals and communicating colonoscopy results.”

Many practices are already instituting such improvements in response to the restrictions imposed by the COVID-19 pandemic, according to Dr. Itzkowitz. “These changes, plus better coverage by payers, will make a huge impact on health equity when it comes to colorectal cancer screening,” he said.

The publication was supported by the AGA. The investigators disclosed relationships with Geneoscopy, ColoWrap, UniversalDx, and others. Dr. Itzkowitz disclosed no relevant conflicts of interest.

Groups interested in collaborating with AGA should contact Kathleen Teixeira, AGA Vice President, Public Policy and Advocacy, at [email protected].

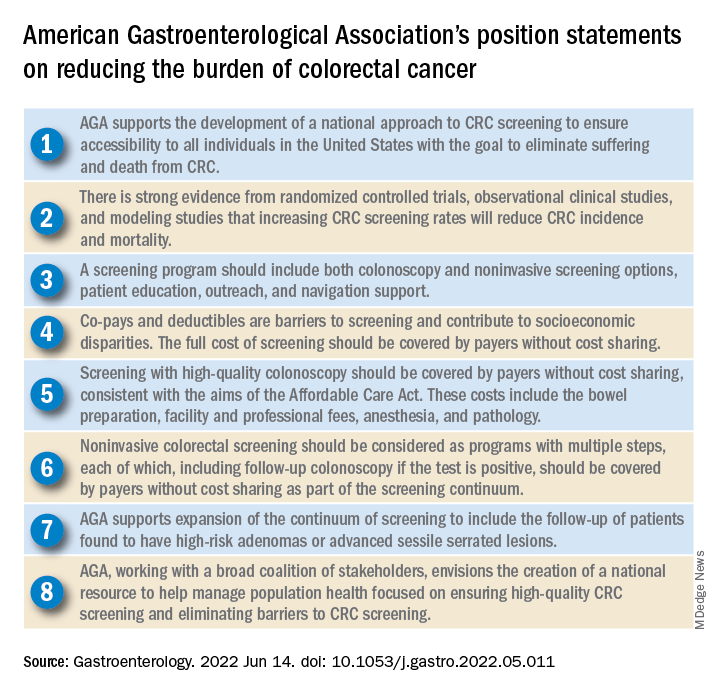

The American Gastroenterological Association has published eight position statements aimed at reducing the burden of colorectal cancer (CRC).

The evidence-based statements, published in Gastroenterology, call for a national approach to CRC screening, outline the elements of a high-quality screening program, and make clear that payers should cover all costs, from bowel prep through pathology, plus follow-up for high-risk patients.

“There is strong evidence that CRC screening is effective [at reducing CRC incidence and mortality] ... but less than 70% of eligible individuals have been screened,” wrote authors led by David Lieberman, MD, who is on the AGA Executive Committee on the Screening Continuum and affiliated with Oregon Health and Science University, Portland, noting the recent expansion of eligibility to include individuals in the 45- to 49-year age group.

“CRC screening saves lives, but only if people get screened,” Dr. Lieberman said in a press release from the AGA. “Cost sharing is an important barrier to screening, which contributes to racial, ethnic and socioeconomic inequities in colorectal cancer outcomes. The full cost of screening – including noninvasive tests and follow-up colonoscopies – should be covered without cost to patients.”

He added: “AGA wishes to collaborate with stakeholders to eliminate obstacles to screening, which disproportionately impact those with low income and lack of insurance.”

Eliminating disparities in screening

Among the position statements, Dr. Lieberman and colleagues first called for “development of a national approach to CRC screening” to patch gaps in access across the United States.

“Systematic outreach occurs infrequently,” they noted. “CRC screening prevalence is much lower among individuals who do not have access to health care due to lack of insurance, do not have a primary care provider, or are part of a medically underserved community.”

According to Dr. Lieberman and colleagues, the AGA is also “working with a broad coalition of stakeholders,” such as the American Cancer Society, payers, patient advocacy groups, and others, to create a “national resource ... focused on ensuring high-quality CRC screening and eliminating barriers to CRC screening.”

Specifically, the coalition will work to collectively tackle “disparities created by social determinants of health, which includes lack of access to screening, transportation, and even work hours and child care.

“The AGA recognizes that moving the needle to achieve a CRC screening participation goal of 80% will take a village,” they wrote.

Elements of high-quality CRC screening

The investigators went on to describe the key features of a high-quality CRC screening program, including “colonoscopy and noninvasive screening options, patient education, outreach, and navigation support.”

Dr. Lieberman and colleagues pointed out that offering more than one type of screening test “acknowledges patient preferences and improves participation.”

Certain noninvasive methods, such as fecal immunochemical testing (FIT), eliminate “important barriers” to screening, they noted, such as the need for special preparation, time off work, and transportation to a medical facility.

For individuals who have high-risk adenomas (HRAs) or advanced sessile serrated lesions (SSLs), screening should be expanded to include follow-up, the investigators added.

“Evidence from a systematic review demonstrates that individuals with HRAs at baseline have a 3- to 4-fold higher risk of incident CRC during follow-up compared with individuals with no adenoma or low-risk adenomas,” they wrote. “There is also evidence that individuals with advanced SSLs have a three= to fourfold higher risk of CRC, compared with individuals with nonadvanced SSLs.”

Payers should cover costs

To further improve access to care, payers should cover the full costs of CRC screening because “copays and deductibles are barriers to screening and contribute to socioeconomic disparities,” that “disproportionately impact those with low income and lack of insurance,” according to Dr. Lieberman and colleagues.

They noted that the Affordable Care Act “eliminated copayments for preventive services,” yet a recent study showed that almost half of patients with commercial insurance and more than three-quarters of patients with Medicare still share some cost of CRC screening.

The investigators made clear that payers need to cover costs from start to finish, including “bowel preparation, facility and professional fees, anesthesia, and pathology,” as well as follow-up screening for high-risk patients identified by noninvasive methods.

“Noninvasive colorectal screening should be considered as programs with multiple steps, each of which, including follow-up colonoscopy if the test is positive, should be covered by payers without cost sharing as part of the screening continuum,” Dr. Lieberman and colleagues wrote.

Changes underway

According to Steven Itzkowitz, MD, professor of medicine and oncological sciences and director of the gastroenterology fellowship training program at the Icahn School of Medicine at Mount Sinai, New York, the AGA publication is important because it “consolidates many of the critical issues related to decreasing the burden of colorectal cancer in the United States.”

Dr. Itzkowitz noted that changes are already underway to eliminate cost as a barrier to screening.

“The good news is that, in the past year, the Departments of Health & Human Services, Labor, and Treasury declared that cost sharing should not be imposed, and plans are required to cover screening colonoscopy with polyp removal and colonoscopy that is performed to follow-up after an abnormal noninvasive CRC screening test,” Dr. Itzkowitz said in an interview. “Many plans are following suit, but it will take time for this coverage to take effect across all plans.”

For individual gastroenterologists who would like to do their part in reducing screening inequity, Dr. Itzkowitz suggested leveraging noninvasive testing, as the AGA recommends.

“This publication is the latest to call for using noninvasive, stool-based testing in addition to colonoscopy,” Dr. Itzkowitz said. “FIT and multitarget stool DNA tests all have proven efficacy in this regard, so gastroenterologists should have those conversations with their patients. GIs can also make it easier for patients to complete colonoscopy by developing patient navigation programs, direct access referrals, and systems for communicating with primary care providers for easier referrals and communicating colonoscopy results.”

Many practices are already instituting such improvements in response to the restrictions imposed by the COVID-19 pandemic, according to Dr. Itzkowitz.“These changes, plus better coverage by payers, will make a huge impact on health equity when it comes to colorectal cancer screening.”

The publication was supported by the AGA. The investigators disclosed relationships with Geneoscopy, ColoWrap, UniversalDx, and others. Dr. Itzkowitz disclosed no relevant conflicts of interest.

Groups interested in collaborating with AGA should contact Kathleen Teixeira, AGA Vice President, Public Policy and Advocacy, at [email protected].

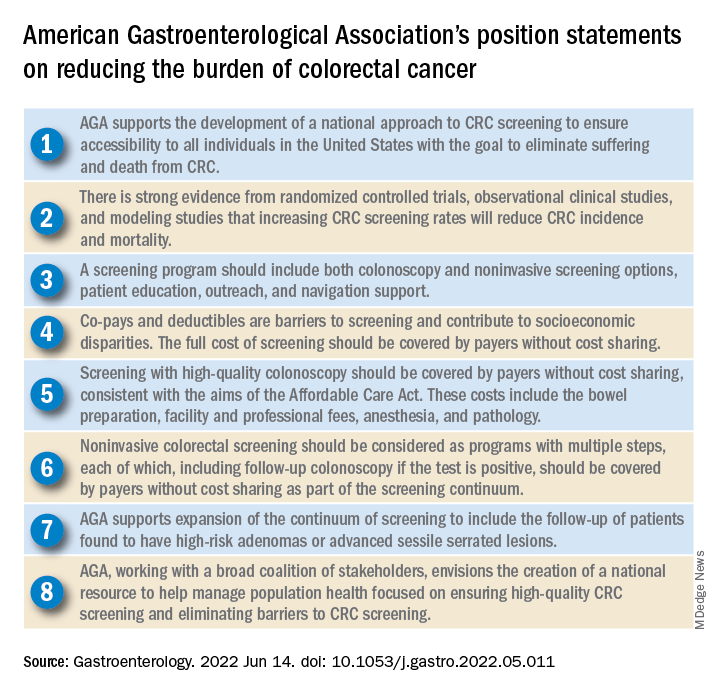

The American Gastroenterological Association has published eight position statements aimed at reducing the burden of colorectal cancer (CRC).

The evidence-based statements, published in Gastroenterology, call for a national approach to CRC screening, outline the elements of a high-quality screening program, and make clear that payers should cover all costs, from bowel prep through pathology, plus follow-up for high-risk patients.

“There is strong evidence that CRC screening is effective [at reducing CRC incidence and mortality] ... but less than 70% of eligible individuals have been screened,” wrote authors led by David Lieberman, MD, who is on the AGA Executive Committee on the Screening Continuum and affiliated with Oregon Health and Science University, Portland, noting the recent expansion of eligibility to include individuals in the 45- to 49-year age group.

“CRC screening saves lives, but only if people get screened,” Dr. Lieberman said in a press release from the AGA. “Cost sharing is an important barrier to screening, which contributes to racial, ethnic and socioeconomic inequities in colorectal cancer outcomes. The full cost of screening – including noninvasive tests and follow-up colonoscopies – should be covered without cost to patients.”

He added: “AGA wishes to collaborate with stakeholders to eliminate obstacles to screening, which disproportionately impact those with low income and lack of insurance.”

Eliminating disparities in screening

Among the position statements, Dr. Lieberman and colleagues first called for “development of a national approach to CRC screening” to patch gaps in access across the United States.

“Systematic outreach occurs infrequently,” they noted. “CRC screening prevalence is much lower among individuals who do not have access to health care due to lack of insurance, do not have a primary care provider, or are part of a medically underserved community.”

According to Dr. Lieberman and colleagues, the AGA is also “working with a broad coalition of stakeholders,” such as the American Cancer Society, payers, patient advocacy groups, and others, to create a “national resource ... focused on ensuring high-quality CRC screening and eliminating barriers to CRC screening.”

Specifically, the coalition will work to collectively tackle “disparities created by social determinants of health, which includes lack of access to screening, transportation, and even work hours and child care.

“The AGA recognizes that moving the needle to achieve a CRC screening participation goal of 80% will take a village,” they wrote.

Elements of high-quality CRC screening

The investigators went on to describe the key features of a high-quality CRC screening program, including “colonoscopy and noninvasive screening options, patient education, outreach, and navigation support.”

Dr. Lieberman and colleagues pointed out that offering more than one type of screening test “acknowledges patient preferences and improves participation.”

Certain noninvasive methods, such as fecal immunochemical testing (FIT), eliminate “important barriers” to screening, they noted, such as the need for special preparation, time off work, and transportation to a medical facility.

For individuals who have high-risk adenomas (HRAs) or advanced sessile serrated lesions (SSLs), screening should be expanded to include follow-up, the investigators added.

“Evidence from a systematic review demonstrates that individuals with HRAs at baseline have a 3- to 4-fold higher risk of incident CRC during follow-up compared with individuals with no adenoma or low-risk adenomas,” they wrote. “There is also evidence that individuals with advanced SSLs have a three= to fourfold higher risk of CRC, compared with individuals with nonadvanced SSLs.”

Payers should cover costs

To further improve access to care, payers should cover the full costs of CRC screening because “copays and deductibles are barriers to screening and contribute to socioeconomic disparities,” that “disproportionately impact those with low income and lack of insurance,” according to Dr. Lieberman and colleagues.

They noted that the Affordable Care Act “eliminated copayments for preventive services,” yet a recent study showed that almost half of patients with commercial insurance and more than three-quarters of patients with Medicare still share some cost of CRC screening.

The investigators made clear that payers need to cover costs from start to finish, including “bowel preparation, facility and professional fees, anesthesia, and pathology,” as well as follow-up screening for high-risk patients identified by noninvasive methods.

“Noninvasive colorectal screening should be considered as programs with multiple steps, each of which, including follow-up colonoscopy if the test is positive, should be covered by payers without cost sharing as part of the screening continuum,” Dr. Lieberman and colleagues wrote.

Changes underway

According to Steven Itzkowitz, MD, professor of medicine and oncological sciences and director of the gastroenterology fellowship training program at the Icahn School of Medicine at Mount Sinai, New York, the AGA publication is important because it “consolidates many of the critical issues related to decreasing the burden of colorectal cancer in the United States.”

Dr. Itzkowitz noted that changes are already underway to eliminate cost as a barrier to screening.

“The good news is that, in the past year, the Departments of Health & Human Services, Labor, and Treasury declared that cost sharing should not be imposed, and plans are required to cover screening colonoscopy with polyp removal and colonoscopy that is performed to follow-up after an abnormal noninvasive CRC screening test,” Dr. Itzkowitz said in an interview. “Many plans are following suit, but it will take time for this coverage to take effect across all plans.”

For individual gastroenterologists who would like to do their part in reducing screening inequity, Dr. Itzkowitz suggested leveraging noninvasive testing, as the AGA recommends.

“This publication is the latest to call for using noninvasive, stool-based testing in addition to colonoscopy,” Dr. Itzkowitz said. “FIT and multitarget stool DNA tests all have proven efficacy in this regard, so gastroenterologists should have those conversations with their patients. GIs can also make it easier for patients to complete colonoscopy by developing patient navigation programs, direct access referrals, and systems for communicating with primary care providers for easier referrals and communicating colonoscopy results.”

Many practices are already instituting such improvements in response to the restrictions imposed by the COVID-19 pandemic, according to Dr. Itzkowitz.“These changes, plus better coverage by payers, will make a huge impact on health equity when it comes to colorectal cancer screening.”

The publication was supported by the AGA. The investigators disclosed relationships with Geneoscopy, ColoWrap, UniversalDx, and others. Dr. Itzkowitz disclosed no relevant conflicts of interest.

Groups interested in collaborating with AGA should contact Kathleen Teixeira, AGA Vice President, Public Policy and Advocacy, at [email protected].

FROM GASTROENTEROLOGY

AI-based CADe outperforms high-definition white light in colonoscopy

Colonoscopy with an artificial intelligence (AI)–based computer-aided polyp detection (CADe) system missed fewer adenomas, polyps, and sessile serrated lesions and identified more adenomas per colonoscopy than high-definition white light (HDWL) colonoscopy, according to findings from a randomized study.

While adenoma detection by colonoscopy is associated with a reduced risk of interval colon cancer, detection rates of adenomas vary among physicians. AI approaches, such as machine learning and deep learning, may improve adenoma detection rates during colonoscopy and thus potentially improve outcomes for patients, suggested study authors led by Jeremy R. Glissen Brown, MD, of the Beth Israel Deaconess Medical Center and Harvard Medical School, Boston, who reported their trial findings in Clinical Gastroenterology and Hepatology.

The investigators explained that, although AI approaches may offer benefits in adenoma detection, there have been no prospective data for U.S. populations on the efficacy of an AI-based CADe system for improving adenoma detection rates (ADRs) and reducing adenoma miss rates (AMRs). To address this gap, the investigators performed a prospective, multicenter, single-blind randomized tandem colonoscopy study that assessed a deep learning–based CADe system in 232 patients.

Individuals who presented to one of four participating U.S. medical centers for colorectal cancer screening or surveillance were randomly assigned to undergo CADe colonoscopy first (n = 116) or HDWL colonoscopy first (n = 116), immediately followed by the other procedure, in tandem fashion, performed by the same endoscopist. AMR was the primary outcome; secondary outcomes were adenomas per colonoscopy (APC) and the miss rate of sessile serrated lesions (SSL).
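For readers unfamiliar with tandem designs, the outcome measures can be illustrated with a small sketch. It assumes the conventional pooled definitions: AMR is the number of adenomas found only on the second pass divided by all adenomas found on either pass; first-pass APC is first-pass adenomas divided by the number of patients; and first-pass ADR is the share of patients with at least one adenoma on the first pass. The paper's exact computation may differ. The `TandemPatient` class and the counts below are hypothetical and are not study data.

```python
from dataclasses import dataclass


@dataclass
class TandemPatient:
    """Hypothetical per-patient adenoma counts from one tandem colonoscopy."""
    first_pass: int   # adenomas found on the first (index) colonoscopy
    second_pass: int  # additional adenomas found only on the second colonoscopy


def adenoma_miss_rate(patients):
    """AMR: adenomas found only on the second pass / all adenomas from both passes."""
    missed = sum(p.second_pass for p in patients)
    total = sum(p.first_pass + p.second_pass for p in patients)
    return missed / total if total else 0.0


def adenomas_per_colonoscopy(patients):
    """First-pass APC: first-pass adenomas / number of patients."""
    return sum(p.first_pass for p in patients) / len(patients)


def adenoma_detection_rate(patients):
    """First-pass ADR: share of patients with at least one first-pass adenoma."""
    return sum(1 for p in patients if p.first_pass > 0) / len(patients)


# Hypothetical four-patient arm (illustrative numbers only)
arm = [TandemPatient(2, 1), TandemPatient(0, 0), TandemPatient(1, 0), TandemPatient(0, 1)]
print(f"AMR: {adenoma_miss_rate(arm):.2%}")
print(f"APC: {adenomas_per_colonoscopy(arm):.2f}")
print(f"ADR: {adenoma_detection_rate(arm):.2%}")
```

In this toy arm, 2 of 5 adenomas were found only on the second pass, giving an AMR of 40%. The example also shows why APC and ADR can diverge: APC counts every adenoma, while ADR only records whether a patient had any.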

After 9 patients were excluded, 223 patients were included in the analysis. Approximately 45.3% of the cohort was female, 67.7% were White, and 21% were Black. For most patients (60%), the indication was primary colorectal cancer screening.

The AMR was significantly lower in the CADe-first group than in the HDWL-first group (20.12% vs. 31.25%; P = .0247). The researchers commented that, although the reduction was statistically significant, adenomas were still missed with the CADe system: an AMR of 20.12% means roughly one in five adenomas went undetected on the first pass.
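To put the AMR result in perspective, the absolute and relative reductions can be computed directly from the two reported rates (31.25% for HDWL first, 20.12% for CADe first). The sketch below is only arithmetic on those published figures. The function name is hypothetical, and the result should not be read as an effect estimate from the paper's own analysis.

```python
def rate_reductions(control_rate, intervention_rate):
    """Return (absolute, relative) reduction of intervention vs. control rate."""
    absolute = control_rate - intervention_rate
    relative = absolute / control_rate
    return absolute, relative


# Reported adenoma miss rates: HDWL first (control) vs. CADe first
abs_diff, rel_diff = rate_reductions(0.3125, 0.2012)
print(f"Absolute reduction: {abs_diff * 100:.2f} percentage points")
print(f"Relative reduction: {rel_diff:.1%}")
```

This gives an absolute reduction of about 11.1 percentage points, which corresponds to a relative reduction of roughly 36% in the proportion of missed adenomas.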

Additionally, the CADe-first group had a lower SSL miss rate than the HDWL-first group (7.14% vs. 42.11%, respectively; P = .0482). The researchers noted that their study is one of the first to show that a computer-assisted polyp detection system can reduce the SSL miss rate. First-pass APC was also significantly higher in the CADe-first group (1.19 vs. 0.90; P = .0323). First-pass ADR did not differ significantly between the groups (50.44% for the CADe-first group vs. 43.64% for the HDWL-first group; P = .3091).

A multivariate logistic regression analysis identified three significant factors predictive of missed polyps: use of HDWL first vs. the computer-assisted detection system first (odds ratio, 1.8830; P = .0214), age 65 years or younger (OR, 1.7390; P = .0451), and right colon vs. other location (OR, 1.7865; P = .0436).
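Odds ratios are easy to misread as "X times the risk." The sketch below shows how an odds ratio rescales a probability. It assumes a hypothetical 20% baseline probability that a given polyp is missed, which is not a figure from the study. It also ignores the other covariates in the multivariable model, so it only illustrates the arithmetic.

```python
def apply_odds_ratio(baseline_probability, odds_ratio):
    """Convert a baseline probability to the probability implied by an odds ratio."""
    baseline_odds = baseline_probability / (1 - baseline_probability)
    new_odds = baseline_odds * odds_ratio
    return new_odds / (1 + new_odds)


# Hypothetical baseline miss probability of 20% (illustrative, not study data),
# scaled by the reported OR of 1.8830 for HDWL first vs. CADe first
p = apply_odds_ratio(0.20, 1.8830)
print(f"Implied miss probability: {p:.1%}")
```

Under this assumption, an odds ratio of about 1.88 raises the miss probability from 20% to about 32%. That is an increase of roughly 1.6-fold in probability, not 1.88-fold, because odds and probabilities diverge as the baseline rate grows.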

According to the researchers, the study was not powered to detect differences in ADR, which limits interpretation of that analysis. The investigators also noted that the tandem colonoscopy design limits generalizability to real-world clinical settings. In addition, because endoscopists were not blinded to group assignment during each withdrawal, the researchers commented that “it is possible that endoscopist performance was influenced by being observed or that endoscopists who participated for the length of the study became over-reliant on” the CADe system during withdrawal, which could have led to either underestimation or overestimation of the system’s performance.

The authors concluded that their findings suggest that an AI-based CADe system with colonoscopy “has the potential to decrease interprovider variability in colonoscopy quality by reducing AMR, even in experienced providers.”

This was an investigator-initiated study, with research software and study funding provided by Wision AI. The investigators reported relationships with Wision AI, as well as Olympus, Fujifilm, and Medtronic.

Several randomized trials of artificial intelligence (AI)–assisted colonoscopy have shown improvements in adenoma detection. This study adds to the growing body of evidence that computer-aided detection (CADe) systems increase adenoma detection rates (ADRs), even among highly skilled endoscopists whose baseline ADRs are well above the currently recommended quality threshold of 25%.

This study also highlights the usefulness of CADe in detecting sessile serrated lesions (SSLs). SSLs appear to be difficult for trainees to recognize, and they are the type of large adenoma most likely to be missed overall.

AI-based systems will enhance, but not replace, the highly skilled operator. As this study showed, despite the superior ADR, adenomas were still missed with CADe, mainly because the missed polyps were never brought into the visual field by the operator. Combining a CADe program with a distal attachment mucosal exposure device in the hands of an experienced endoscopist might yield the best results.

Monika Fischer, MD, is an associate professor of medicine at Indiana University, Indianapolis. She reported no relevant conflicts of interest.

Several randomized trials testing artificial intelligence (AI)–assisted colonoscopy showed improvement in adenoma detection. This study adds to the growing body of evidence that computer-aided detection (CADe) systems for adenoma augment adenoma detection rates, even among highly skilled endoscopists whose baseline ADRs are much higher than the currently recommended threshold for quality colonoscopy (25%).

This study also highlights the usefulness of CADe in aiding detection of sessile serrated lesions (SSL). Recognition of SSL appears to be challenging for trainees and the most likely type of missed large adenomas overall.

AI-based systems will enhance but will not replace the highly skilled operator. As this study pointed out, despite the superior ADR, adenomas were still missed by CADe. The main reason for this was that the missed polyps were not brought into the visual field by the operator. A combination of a CADe program and a distal attachment mucosa exposure device in the hands of an experienced endoscopists might bring the best results.

Monika Fischer, MD, is an associate professor of medicine at Indiana University, Indianapolis. She reported no relevant conflicts of interest.

Several randomized trials testing artificial intelligence (AI)–assisted colonoscopy showed improvement in adenoma detection. This study adds to the growing body of evidence that computer-aided detection (CADe) systems for adenoma augment adenoma detection rates, even among highly skilled endoscopists whose baseline ADRs are much higher than the currently recommended threshold for quality colonoscopy (25%).

This study also highlights the usefulness of CADe in aiding detection of sessile serrated lesions (SSL). Recognition of SSL appears to be challenging for trainees and the most likely type of missed large adenomas overall.

AI-based systems will enhance but will not replace the highly skilled operator. As this study pointed out, despite the superior ADR, adenomas were still missed by CADe. The main reason for this was that the missed polyps were not brought into the visual field by the operator. A combination of a CADe program and a distal attachment mucosa exposure device in the hands of an experienced endoscopists might bring the best results.

Monika Fischer, MD, is an associate professor of medicine at Indiana University, Indianapolis. She reported no relevant conflicts of interest.

An artificial intelligence (AI)–based computer-aided polyp detection (CADe) system missed fewer adenomas, polyps, and sessile serrated lesions and identified more adenomas per colonoscopy than a high-definition white light (HDWL) colonoscopy, according to findings from a randomized study.

While adenoma detection by colonoscopy is associated with a reduced risk of interval colon cancer, detection rates of adenomas vary among physicians. AI approaches, such as machine learning and deep learning, may improve adenoma detection rates during colonoscopy and thus potentially improve outcomes for patients, suggested study authors led by Jeremy R. Glissen Brown, MD, of the Beth Israel Deaconess Medical Center and Harvard Medical School, Boston, who reported their trial findings in Clinical Gastroenterology and Hepatology.

The investigators explained that, although AI approaches may offer benefits in adenoma detection, there have been no prospective data for U.S. populations on the efficacy of an AI-based CADe system for improving adenoma detection rates (ADRs) and reducing adenoma miss rates (AMRs). To overcome this research gap, the investigators performed a prospective, multicenter, single-blind randomized tandem colonoscopy study which assessed a deep learning–based CADe system in 232 patients.

Individuals who presented to the four included U.S. medical centers for either colorectal cancer screening or surveillance were randomly assigned to the CADe system colonoscopy first (n = 116) or HDWL colonoscopy first (n = 116). This was immediately followed by the other procedure, in tandem fashion, performed by the same endoscopist. AMR was the primary outcome of interest, while secondary outcomes were adenomas per colonoscopy (APC) and the miss rate of sessile serrated lesions (SSL).

The researchers excluded 9 patients, which resulted in a total patient population of 223 patients. Approximately 45.3% of the cohort was female, 67.7% were White, and 21% were Black. Most patients (60%) were indicated for primary colorectal cancer screening.

The AMR was significantly lower in the CADe-first group than in the HDWL-first group (20.12% vs. 31.25%; P = .0247). The researchers commented that, although the CADe system yielded a statistically significantly lower AMR, even the lower rate still reflects missed adenomas.

The CADe-first group also had a lower SSL miss rate than the HDWL-first group (7.14% vs. 42.11%; P = .0482). The researchers noted that their study is one of the first to show that a computer-assisted polyp detection system can reduce the SSL miss rate. First-pass APC was also significantly higher in the CADe-first group (1.19 vs. 0.90; P = .0323). No statistically significant difference was observed between the groups in first-pass ADR (50.44% for the CADe-first group vs. 43.64% for the HDWL-first group; P = .3091).

A multivariate logistic regression analysis identified three significant factors predictive of missed polyps: use of HDWL first vs. the computer-assisted detection system first (odds ratio, 1.8830; P = .0214), age 65 years or younger (OR, 1.7390; P = .0451), and right colon vs. other location (OR, 1.7865; P = .0436).
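An odds ratio multiplies the odds, not the probability, of the outcome while the other model covariates are held fixed. As a minimal sketch, the Python below converts the reported OR of 1.8830 for HDWL first into its log-odds coefficient and into the probability it implies for a hypothetical 20% baseline probability of a missed polyp; the baseline value and the function name are illustrative assumptions, not values from the study.

```python
import math


def apply_odds_ratio(baseline_prob: float, odds_ratio: float) -> float:
    """Probability implied by multiplying a baseline probability's odds by an OR."""
    if not 0 < baseline_prob < 1:
        raise ValueError("baseline_prob must be strictly between 0 and 1")
    odds = baseline_prob / (1 - baseline_prob)   # probability -> odds
    new_odds = odds * odds_ratio                 # the OR scales the odds
    return new_odds / (1 + new_odds)             # odds -> probability


OR_HDWL_FIRST = 1.8830  # reported OR for HDWL first vs. CADe first
baseline = 0.20         # hypothetical baseline probability, not from the study

print(f"log-odds coefficient: {math.log(OR_HDWL_FIRST):.4f}")
print(f"implied probability: {apply_odds_ratio(baseline, OR_HDWL_FIRST):.3f}")
```

The implied probability (about 0.32 here) is not simply 1.883 × 0.20; an odds ratio approximates a risk ratio only when the outcome is uncommon.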

According to the researchers, the study was not powered to detect differences in ADR, which limits interpretation of that analysis. In addition, the investigators noted that the tandem colonoscopy design limits generalizability to real-world clinical settings. Also, because endoscopists were not blinded to group assignment while performing each withdrawal, the researchers commented that “it is possible that endoscopist performance was influenced by being observed or that endoscopists who participated for the length of the study became over-reliant on” the CADe system during withdrawal, which could have led to underestimation or overestimation of the system’s performance.

The authors concluded that their findings suggest that an AI-based CADe system with colonoscopy “has the potential to decrease interprovider variability in colonoscopy quality by reducing AMR, even in experienced providers.”

This was an investigator-initiated study, with research software and study funding provided by Wision AI. The investigators reported relationships with Wision AI, as well as Olympus, Fujifilm, and Medtronic.

FROM CLINICAL GASTROENTEROLOGY AND HEPATOLOGY

Esophageal cancer screening isn’t for everyone: Study

Endoscopic screening for esophageal adenocarcinoma (EAC) may not be a cost-effective strategy for all populations and may even lead to net harm in some, according to a comparative cost-effectiveness analysis.

Several U.S. guidelines suggest endoscopic screening for EAC, yet they vary in which populations should be screened, according to study authors led by Joel H. Rubenstein, MD, of the Lieutenant Charles S. Kettles Veterans Affairs Medical Center, Ann Arbor, Mich. Their findings were published in Gastroenterology. In addition, no randomized trials to date have evaluated endoscopic screening outcomes in different populations, and the population screening recommendations in current guidelines have been informed mostly by observational data and expert opinion.

Existing cost-effectiveness analyses of EAC screening have mostly focused on screening older men with gastroesophageal reflux disease (GERD) at certain ages, and many of these analyses have limited data regarding diverse patient populations.

In their study, Dr. Rubenstein and colleagues performed a comparative cost-effectiveness analysis of endoscopic screening for EAC that was restricted to individuals with GERD symptoms in the general population. The analysis was stratified by race and sex. The primary objective was to identify the optimal age at which to offer endoscopic screening in each of the populations evaluated.

The investigators conducted their comparative cost-effectiveness analyses using three independent simulation models. The independently developed models, which address EAC natural history, screening, surveillance, and treatment, are part of the National Cancer Institute’s Cancer Intervention and Surveillance Modeling Network. Each model included four cohorts, defined by race (White or Black) and sex, which were independently calibrated to reproduce U.S. EAC incidence. The three models differed somewhat in structure and assumptions; for example, two assumed a stable prevalence of GERD symptoms of approximately 20% across ages, while the third assumed a near-linear increase across adulthood. All three assumed that EAC develops only in individuals with Barrett’s esophagus.

In each base case, the researchers simulated cohorts of people in the United States born in 1950, stratified them by race and sex, and followed each individual from age 40 to age 100 years. The researchers considered 42 strategies, including no screening, a single endoscopic screening at one of six specified ages (between 40 and 65 years), and a single screening of individuals with GERD symptoms at one of the same six ages.

Primary results were averaged across all three models. The investigators defined the optimal screening strategy as the most effective strategy with an incremental cost-effectiveness ratio (ICER) below $100,000 per quality-adjusted life-year (QALY) gained.
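The decision rule above can be sketched in Python. This is a minimal illustration of a standard incremental cost-effectiveness workflow (remove dominated strategies, compute ICERs along the efficiency frontier, then pick the most effective strategy under the threshold), not the CISNET models' code; the Strategy type, function names, costs, and QALYs are hypothetical, and only the $100,000-per-QALY threshold comes from the study.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Strategy:
    name: str
    cost: float   # expected lifetime cost per person (USD); hypothetical
    qalys: float  # expected QALYs per person; hypothetical


def efficient_frontier(strategies: list[Strategy]) -> list[tuple[Strategy, float]]:
    """Return non-dominated strategies, each paired with its ICER versus the
    previous (cheaper) frontier strategy; the cheapest gets an ICER of 0.

    Strongly dominated strategies (costing at least as much for no more QALYs)
    and extendedly dominated ones (whose ICER exceeds that of the next, more
    effective strategy) are removed.
    """
    if not strategies:
        return []
    ordered = sorted(strategies, key=lambda s: (s.cost, -s.qalys))
    frontier: list[Strategy] = []
    for s in ordered:
        if frontier and s.qalys <= frontier[-1].qalys:
            continue  # strongly dominated by the last frontier strategy
        frontier.append(s)
        # Extended dominance: drop the middle strategy while ICERs fail to rise.
        while len(frontier) >= 3:
            a, b, c = frontier[-3], frontier[-2], frontier[-1]
            icer_ab = (b.cost - a.cost) / (b.qalys - a.qalys)
            icer_bc = (c.cost - b.cost) / (c.qalys - b.qalys)
            if icer_ab >= icer_bc:
                frontier.pop(-2)
            else:
                break
    result = [(frontier[0], 0.0)]
    for prev, cur in zip(frontier, frontier[1:]):
        result.append((cur, (cur.cost - prev.cost) / (cur.qalys - prev.qalys)))
    return result


def optimal_strategy(strategies: list[Strategy],
                     threshold: float = 100_000.0) -> Optional[Strategy]:
    """Most effective frontier strategy whose ICER is below the threshold."""
    best: Optional[Strategy] = None
    for s, icer in efficient_frontier(strategies):
        if icer < threshold:
            best = s  # ICERs rise along the frontier, so keep the last one below
    return best


candidates = [
    Strategy("No screening", 1_000, 20.000),
    Strategy("Screen A", 1_500, 20.010),
    Strategy("Screen B", 1_600, 20.005),  # strongly dominated by Screen A
    Strategy("Screen D", 2_000, 20.011),  # extendedly dominated
    Strategy("Screen C", 3_000, 20.015),
]
for s, icer in efficient_frontier(candidates):
    print(f"{s.name}: ICER ${icer:,.0f}/QALY")
print("Optimal at $100,000/QALY:", optimal_strategy(candidates).name)
```

In this invented example, Screen C is the most effective strategy, but its ICER versus Screen A (about $300,000/QALY) exceeds the threshold, so Screen A is selected, mirroring how the most effective strategy and the optimal strategy can differ.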

For White men, the most effective, yet most costly, strategies were those that screened all White men once between 40 and 55 years of age. The optimal strategy, however, screened White men with GERD twice, at ages 45 and 60 years. For Black men, the researchers determined that screening those with GERD once at age 55 years was optimal.

By contrast, the optimal strategy for women, whether White or Black, was no screening at all. “In particular, among Black women, screening is, at best, very expensive with little benefit, and some strategies cause net harm,” the authors wrote.

The investigators wrote that empiric, long-term studies are needed “to confirm whether repeated screening has a substantial yield of incident” Barrett’s esophagus. They also noted that their study did not include additional risk factors, such as smoking, obesity, and family history, whose inclusion might have led to different conclusions about specific screening strategies.

“We certainly acknowledge the history of health care inequities, and that race is a social construct that, in the vast majority of medical contexts, has no biological basis. We are circumspect regarding making recommendations based on race or sex if environmental exposures or genetic factors on which to make those recommendations were available,” they wrote.

The study was supported by National Institutes of Health/National Cancer Institute grants. Some authors disclosed relationships with Lucid Diagnostics, Value Analytics Labs, and Cernostics.

Over the past decades, we have seen an alarming rise in the incidence of esophageal adenocarcinoma, which is mostly diagnosed at an advanced stage when curative treatment is no longer an option. Esophageal adenocarcinoma develops from Barrett’s esophagus, which, if known to be present, can be surveilled to detect dysplasia and cancer at an early, curable stage.

Whereas screening for Barrett’s esophagus currently focuses on White men with gastroesophageal reflux, little is known about screening in non-White and female populations. Identifying whom and how to screen is challenging, and real-world studies of varied populations would require many patients, years of follow-up, much effort, and substantial costs. Rubenstein and colleagues used three independent simulation models to simulate many different screening scenarios while taking sex and race into account. The outcomes of this study, which demonstrate that one size does not fit all, will be highly relevant in guiding future strategies for screening for Barrett’s esophagus and early esophageal adenocarcinoma. Although the study focused on endoscopic screening, its insights will also be relevant when considering nonendoscopic screening tools.

R.E. Pouw, MD, PhD, is with Amsterdam University Medical Centers. She disclosed having been a consultant for MicroTech and Medtronic and having received speaker fees from Pentax.

FROM GASTROENTEROLOGY

Confronting endoscopic infection control

Reprocessing endoscopes after gastrointestinal endoscopy is highly effective at mitigating the risk of exogenous infections, yet challenges in duodenoscope reprocessing persist. Although several enhanced reprocessing measures have been developed to reduce duodenoscope-related infection risks, their effectiveness is largely unclear.

Rahul A. Shimpi, MD, and Joshua P. Spaete, MD, from Duke University, Durham, N.C., wrote in a paper in Techniques and Innovations in Gastrointestinal Endoscopy that novel disposable duodenoscope technologies offer promise for reducing infection risk and overcoming current reprocessing challenges. The paper notes that, despite this promise, there is a need to better define the usability, costs, and environmental impact of these disposable technologies.

Current challenges in endoscope reprocessing

According to the authors, reprocessing gastrointestinal endoscopes involves several sequential steps that require “meticulous” attention to detail “to ensure the adequacy of reprocessing.” Human factors and errors are major contributors to suboptimal reprocessing quality, and these errors often reflect variable adherence to reprocessing protocols across centers and reprocessing staff members.

Despite these challenges, infectious complications of gastrointestinal endoscopy are rare, particularly with end-viewing endoscopes. However, many high-profile infectious outbreaks associated with duodenoscopes have been reported in recent years, heightening awareness of, and concern about, endoscope reprocessing. Many of these outbreaks, the authors said, have involved multidrug-resistant organisms.

The complex elevator mechanism, which the authors noted “is relatively inaccessible during the precleaning and manual cleaning steps in reprocessing,” is a paramount challenge in duodenoscope reprocessing, and its inaccessibility may contribute to greater biofilm formation and contamination. Other factors implicated in patient-to-patient transmission of duodenoscope-associated infections include additional design issues, human error in reprocessing, endoscope damage and channel defects, and storage and environmental factors.

“Given the reprocessing challenges posed by duodenoscopes, in 2015 the Food and Drug Administration issued a recommendation that one or more supplemental measures be implemented by facilities as a means to decrease the infectious risk posed by duodenoscopes,” the authors noted, including ethylene oxide (EtO) sterilization, liquid chemical sterilization, and repeat high-level disinfection (HLD). They added, however, that a recent U.S. multisociety reprocessing guideline “does not recommend repeat high-level disinfection over single high-level disinfection, and recommends use of EtO sterilization only for duodenoscopes in infectious outbreak settings.”

New sterilization technologies

Liquid chemical sterilization (LCS) may be a promising alternative to EtO sterilization because it has a shorter cycle time and causes less endoscope wear or damage. However, clinical data on the effectiveness of LCS in endoscope reprocessing remain very limited.

Plasma-activated gas, another novel low-temperature sterilization technology, may overcome the high costs and toxicities associated with EtO sterilization. Its shorter reprocessing time also makes it an attractive option for duodenoscope reprocessing. The authors noted that, although it showed promise in a proof-of-concept study, “plasma-activated gas has not been assessed in working endoscopes or compared directly to existing HLD and EtO sterilization technologies.”

Quality indicators in reprocessing

Recently, several quality indicators have been developed to assess the quality of endoscope reprocessing. The indicators, the authors noted, may theoretically allow “for point-of-care assessment of reprocessing quality.” To date, the data to support these indicators are limited.

Adenosine triphosphate (ATP) testing has been the most widely studied indicator because it can detect biofilms during endoscope reprocessing by comparison with previously established ATP benchmark levels, the authors wrote. However, studies assessing the efficacy of ATP testing are limited by their use of heterogeneous assays, analytical techniques, and cutoffs for identifying contamination.
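To illustrate why heterogeneous cutoffs complicate comparisons across ATP studies, the hypothetical Python sketch below classifies the same set of readings against three different contamination cutoffs. It assumes readings in relative light units (RLU), the usual output of ATP luminometers; the readings, the cutoffs, and the at-or-above convention are invented for illustration and are not validated benchmarks.

```python
# Hypothetical ATP readings (relative light units, RLU) from five duodenoscope
# samples; the values and cutoffs below are invented for illustration only.
readings_rlu = [45, 120, 180, 260, 900]


def flag_contaminated(readings: list[float], cutoff_rlu: float) -> list[bool]:
    """Flag each reading at or above the chosen contamination cutoff."""
    return [r >= cutoff_rlu for r in readings]


# The same samples pass or fail depending on which cutoff a study adopts.
for cutoff in (100, 200, 300):
    flags = flag_contaminated(readings_rlu, cutoff)
    print(f"cutoff {cutoff} RLU: {sum(flags)} of {len(flags)} samples flagged")
```

With these invented values, the number of flagged samples drops from four to one as the cutoff rises, so contamination rates reported under different cutoffs are not directly comparable.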

Hemoglobin, protein, and carbohydrate are other point-of-care indicators that have shown potential for assessing whether manual endoscope cleaning was adequate before high-level disinfection or sterilization.

Novel disposable duodenoscope technologies

Because studies have consistently shown residual duodenoscope contamination after both standard and enhanced reprocessing, increasing attention has turned to novel disposable duodenoscope technologies. In 2019, the FDA recommended a move toward duodenoscopes with disposable components because they could make reprocessing easier, more effective, or altogether unnecessary. According to the authors, six duodenoscopes with disposable components are currently cleared by the FDA: three with a disposable endcap, one with a disposable elevator and endcap, and two that are fully disposable. The authors stated that, while “improved access to the elevator facilitated by a disposable endcap may allow for improved cleaning” and reduce contamination and biofilm formation, no data yet confirm these proposed advantages.

There are several unanswered questions regarding new disposable duodenoscope technologies, including questions related to the usability, costs, and environmental impact of these technologies. The authors summarized several studies discussing these issues; however, a clear definition or consensus regarding how to approach these challenges has yet to be established. In addition to these unanswered questions, the authors also noted that identifying the acceptable rate of infectious risk associated with disposable duodenoscopes is another “important task” that needs to be accomplished in the near future.

Environmental impact

The authors stated that the U.S. health care system is directly responsible for up to 10% of total U.S. greenhouse gas emissions. In addition, the heavy use of chemicals and water in endoscope reprocessing is a “substantial” environmental concern. One estimate suggested that performing a mean of 40 endoscopies per day generates around 15.78 tons of CO2 per year.
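The reported estimate can be roughly translated into a per-procedure footprint. This back-of-the-envelope Python sketch assumes the tons are metric tonnes and that the unit operates either 250 or 365 days per year; neither assumption comes from the source, and the result is only as reliable as the original estimate.

```python
ANNUAL_CO2_TONS = 15.78   # reported annual estimate (assumed metric tonnes)
PROCEDURES_PER_DAY = 40   # reported mean daily endoscopy volume


def co2_per_procedure_kg(operating_days_per_year: int) -> float:
    """Per-procedure CO2 in kg for an assumed number of operating days per year."""
    procedures_per_year = PROCEDURES_PER_DAY * operating_days_per_year
    return ANNUAL_CO2_TONS * 1000 / procedures_per_year  # tonnes -> kg


for days in (250, 365):  # hypothetical operating schedules
    print(f"{days} operating days: {co2_per_procedure_kg(days):.2f} kg CO2 per procedure")
```

Under these assumptions, the estimate works out to roughly 1.1 to 1.6 kg of CO2 per procedure.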

Given the uncertain environmental impact of disposable endoscopes, the authors suggested a clear need for interventions that reduce their potential harm. Proposed strategies include reducing the number of endoscopies performed, increasing recycling and the use of recyclable materials, and using renewable energy sources in endoscopy units.

“The massive environmental impact of gastrointestinal endoscopy as a whole has become increasingly recognized,” the authors wrote, “and further study and interventions directed at improving the environmental footprint of endoscopy will be of foremost importance.”

The authors disclosed no conflicts of interest.

The future remains to be seen

Solutions for proper endoscope reprocessing and infection prevention have become a major focus of investigation and innovation in endoscope design, particularly for duodenoscopes. As multiple infectious outbreaks associated with duodenoscopes have been reported, the complex duodenoscope elevator mechanism has emerged as the target for modification because it is relatively inaccessible and difficult to clean adequately.

One of the major considerations related to disposable duodenoscopes is cost. Currently, the savings from eliminating reprocessing equipment, supplies, and personnel do not offset the cost of the disposable duodenoscope. Studies on the environmental impact of disposable duodenoscopes suggest a major increase in endoscopy-related waste.

In summary, enhanced reprocessing techniques and modified scope design elements may not achieve adequate thresholds for infection prevention. Furthermore, while fully disposable duodenoscopes offer promise, questions remain about overall functionality, cost, and the potentially profound environmental impact. Further research is warranted on feasible solutions for infection prevention, and the issues of cost and environmental impact must be addressed before disposable duodenoscopes are widely adopted.

Jennifer Maranki, MD, MSc, is professor of medicine and director of endoscopy at Penn State Hershey (Pennsylvania) Medical Center. She reports being a consultant for Boston Scientific.

Reprocessing of endoscopes after gastrointestinal endoscopy is highly effective at mitigating the risk of exogenous infections, yet challenges in duodenoscope reprocessing persist. While several enhanced reprocessing measures have been developed to reduce duodenoscope-related infection risks, their effectiveness is largely unclear.

Rahul A. Shimpi, MD, and Joshua P. Spaete, MD, from Duke University, Durham, N.C., wrote in a paper in Techniques and Innovations in Gastrointestinal Endoscopy that novel disposable duodenoscope technologies offer promise for reducing infection risk and overcoming current reprocessing challenges. Despite this promise, the paper notes, the usability, costs, and environmental impact of these disposable technologies still need to be better defined.

Current challenges in endoscope reprocessing

According to the authors, the reprocessing of gastrointestinal endoscopes involves several sequential steps that require “meticulous” attention to detail “to ensure the adequacy of reprocessing.” Human factors and errors are a major contributor to suboptimal reprocessing quality, and these errors are often related to varying adherence to current reprocessing protocols among centers and reprocessing staff members.

Despite these challenges, infectious complications of gastrointestinal endoscopy are rare, particularly with end-viewing endoscopes. However, many high-profile infectious outbreaks associated with duodenoscopes have been reported in recent years, heightening awareness of, and concern about, endoscope reprocessing. Many of these outbreaks, the authors said, have involved multidrug-resistant organisms.

The complex elevator mechanism, which the authors noted “is relatively inaccessible during the precleaning and manual cleaning steps in reprocessing,” represents a paramount challenge in duodenoscope reprocessing. This inaccessibility may contribute to greater biofilm formation and contamination. Other factors implicated in patient-to-patient transmission of duodenoscope-associated infections include additional design issues, human error in reprocessing, endoscope damage and channel defects, and storage and environmental factors.

“Given the reprocessing challenges posed by duodenoscopes, in 2015 the Food and Drug Administration issued a recommendation that one or more supplemental measures be implemented by facilities as a means to decrease the infectious risk posed by duodenoscopes,” the authors noted. These measures include ethylene oxide (EtO) sterilization, liquid chemical sterilization, and repeat high-level disinfection (HLD). However, they added, a recent U.S. multisociety reprocessing guideline “does not recommend repeat high-level disinfection over single high-level disinfection, and recommends use of EtO sterilization only for duodenoscopes in infectious outbreak settings.”

New sterilization technologies

Liquid chemical sterilization (LCS) may be a promising alternative to EtO sterilization because it has a shorter cycle time and causes less endoscope wear and damage. However, clinical data on the effectiveness of LCS in endoscope reprocessing remain very limited.

The high costs and toxicities associated with EtO sterilization may be overcome by plasma-activated gas, another novel low-temperature sterilization technology. It also offers a shorter reprocessing time, making it an attractive option for duodenoscope reprocessing. The authors noted that, although it showed promise in a proof-of-concept study, “plasma-activated gas has not been assessed in working endoscopes or compared directly to existing HLD and EtO sterilization technologies.”

Quality indicators in reprocessing

Several quality indicators have recently been developed to assess the quality of endoscope reprocessing. These indicators, the authors noted, may theoretically allow “for point-of-care assessment of reprocessing quality.” To date, however, data supporting them are limited.

Adenosine triphosphate (ATP) testing has been the most widely studied indicator because it can detect the presence of biofilm during endoscope reprocessing by comparison against previously established ATP benchmark levels, the authors wrote. However, studies assessing the efficacy of ATP testing are limited by their use of heterogeneous assays, analytical techniques, and cutoffs for identifying contamination.
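To illustrate why heterogeneous cutoffs complicate comparisons across studies, the sketch below classifies the same ATP readings against two different pass/fail thresholds. The readings, the cutoff values, the relative light unit (RLU) scale, and the convention that a reading equal to the cutoff counts as a failure are all hypothetical placeholders, not benchmarks from the paper or from any specific assay.

```python
# Illustrative only: how the choice of ATP cutoff changes which scopes are flagged.
# ASSUMPTIONS: readings are in relative light units (RLU); the cutoffs, the sample
# readings, and the ">= cutoff means contaminated" rule are hypothetical.

from typing import Dict, List


def flag_contaminated(readings: Dict[str, float], cutoff_rlu: float) -> List[str]:
    """Return sorted IDs of samples whose ATP reading meets or exceeds the cutoff."""
    return sorted(sample for sample, rlu in readings.items() if rlu >= cutoff_rlu)


if __name__ == "__main__":
    # Hypothetical post-manual-cleaning channel samples (sample ID -> RLU).
    readings = {"scope_A": 45.0, "scope_B": 150.0, "scope_C": 320.0}

    for cutoff in (100.0, 200.0):  # two hypothetical study cutoffs
        print(f"cutoff {cutoff:.0f} RLU -> flagged: {flag_contaminated(readings, cutoff)}")
```

With these made-up numbers, scope_B is flagged under the lower cutoff but passes under the higher one, which is the kind of discrepancy that makes results from studies using different cutoffs hard to pool.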
