Hospitalists Use Online Game to Identify, Manage Sepsis

Teaching trainees to identify and manage sepsis using an online game known as “Septris” earned hospitalists at Stanford University Medical Center in Palo Alto, Calif., a Research, Innovation, and Clinical Vignette category award at HM13.1

“We took third-year medical students and residents in medicine, surgery, and emergency medicine—people who would be sepsis first responders on the floor—and gave them pre- and post-tests that documented improvements in both attitudes and knowledge,” says lead author Lisa Shieh, MD, PhD, Stanford’s medical director of quality in the department of medicine. All participants said they enjoyed playing the game, she reported.

Septris was developed by a multidisciplinary group of physicians, educational technology specialists, and programmers at Stanford. The game offers a case-based interactive learning environment drawn from evidence-based treatment algorithms. Players make treatment decisions and watch as the patient outcome rises or declines. The game’s rapid pace underscores the importance of early diagnosis and treatment.

“We tried to make our game as engaging and real-life as possible,” Dr. Shieh says.

The Stanford team is in touch with the Society of Critical Care Medicine’s Surviving Sepsis Campaign (www.survivingsepsis.org) and with other medical groups internationally. Thousands of players have accessed the game online for free (http://cme.stanford.edu/septris/game/SepsisTetris.html), with a nominal fee for CME credit. It is best played on an iPad or iPhone, Dr. Shieh says.

Larry Beresford is a freelance writer in Alameda, Calif.

Society of Hospital Medicine Creates Self-Assessment Tool for Hospitalist Groups

Are you looking to improve your hospital medicine group (HMG)? Would you like to measure your group against other groups?

The February 2013 issue of the Journal of Hospital Medicine included a seminal article for our specialty, “The Key Principles and Characteristics of an Effective Hospital Medicine Group: an assessment guide for hospitals and hospitalists.” This paper has received a vast amount of attention around the country from hospitalists, hospitalist leaders, HMGs, and hospital executives. The report (www.hospitalmedicine.org/keychar) is a first step for physicians and executives looking to benchmark their practices, and it has stimulated discussions among many HMGs, prompting them to begin a process of self-review and to consider action.

I am coming up on my 20th year as a hospitalist, and the debate over what makes a high-performing HMG has continued that entire time. In the beginning, there were questions about the mere existence of hospital medicine and HMGs. The discussion about what makes a high-performing HMG started among the physicians, medical groups, and hospitals that signed on early to the HM movement. At conferences, HMG leaders debated how to set up a program. A series of pioneer hospitalists, many with only a few years of experience, roamed the country as consultants giving advice on best practices. A professional society, the National Association of Inpatient Physicians, was born and, later, recast as the Society of Hospital Medicine (SHM)—and the discussion continued.

SHM furthered the debate with such important milestones as The Core Competencies in Hospital Medicine: A Framework for Curriculum Development and white papers on career satisfaction and on hospitalist involvement in quality/safety and transitions of care. Different types of practice arrangements developed. Some were hospital-based, some physician practice-centered. Some were local, and others were regional and national. Each of these spawned innovations in HMG processes and contributed to the growing body of best practices.

Over the past five years, a consensus regarding those best practices has seemingly developed, and the discussions are centered on fine details rather than significant differences. To that end, approximately three years ago, a small group of SHM members met and discussed how to capture this information and disseminate it better among hospitalists, HMGs, and hospitals. We had all come to a similar conclusion—high-performing HMGs share common characteristics. Furthermore, every hospital and HMG seeks excellence, striving to be the best that they can be. We settled on a plan to write this up.

After a year of debate, we sought SHM’s help in the development phase and, in early 2012, SHM’s board of directors appointed a workgroup to identify the key principles and characteristics of an effective HMG. The initial group was widened to make sure we included different backgrounds and experiences in hospital medicine. The group had a wide array of involvement in HMG models, including HMG members, HMG leaders, hospital executives, and some involved in consulting. Many of the individuals had multiple experiences. The conversation among these individuals was lively!

The workgroup developed an initial draft of characteristics, which then went through a multi-step process of review and redrafting. More than 200 individuals, representing a broad group of stakeholders in hospital medicine and in the healthcare industry in general, provided comments and feedback. In addition, the workgroup went through a two-step Delphi process to consolidate and/or eliminate characteristics that were redundant or unnecessary.

In the final framework, 47 key characteristics were defined and organized under 10 principles (see Figure 1).

The authors and SHM’s board of directors view this document as an aspirational approach to improvement. We feel it helps to “raise the bar” for the specialty of hospital medicine by laying out a roadmap of potential improvement. These principles and characteristics provide a framework for HMGs seeking to conduct self-assessments, outline a pathway for improvement, and better define the central role of hospitalists in coordinating team-based, patient-centered care in the acute care setting.

In enhancing quality, a gap analysis is a very effective tool. These principles provide an excellent starting point for that review.

So how do you get started? Hopefully, your HMG has a regular meeting. Take a principle and have a conversation. For example, what do we have? What don’t we have?

Other groups may want to tackle the entire document in a daylong strategy review. Some may want an outside facilitator. Bottom line: It doesn’t matter how you do it; just start with a conversation.

Dr. Cawley is CEO of Medical University of South Carolina Medical Center in Charleston. He is past president of SHM.

Society of Hospital Medicine’s Project BOOST Reduces Medicare Penalties and Readmissions

SHM’s Project BOOST is accepting applications for its 2014 cohort, giving hospitalists and hospital-based team members time to complete the application and receive buy-in from hospital executives to participate in the program.

And this year is the best year yet to make the case to hospital leadership for using Project BOOST to reduce hospital readmissions. More than 180 hospitals throughout the U.S. have used Project BOOST to systematically tackle readmissions.

Last year, the first peer-reviewed research on Project BOOST, published in the Journal of Hospital Medicine, showed that the program reduced 30-day readmissions to 12.7% from 14.7% among 11 hospitals participating in the study. In addition, media and government agencies taking a hard look at readmissions rates have also used Project BOOST as an example of programs that can reduce readmissions and avoid Medicare penalties.1
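For readers making the case to hospital leadership, it may help to separate the absolute drop in readmissions from the relative one. The short Python sketch below works through that arithmetic using only the two rates quoted above; the function name is illustrative and is not part of any Project BOOST toolkit.

```python
def readmission_change(before_pct: float, after_pct: float) -> tuple[float, float]:
    """Return (absolute drop in percentage points, relative drop in percent)."""
    absolute = before_pct - after_pct
    relative = absolute / before_pct * 100
    return absolute, relative


# Rates quoted from the Journal of Hospital Medicine study cited above.
absolute, relative = readmission_change(before_pct=14.7, after_pct=12.7)
print(f"Absolute reduction: {absolute:.1f} percentage points")
print(f"Relative reduction: {relative:.1f}%")
```

In other words, a change of 2 percentage points corresponds to roughly a 14% relative reduction in 30-day readmissions among the study hospitals.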

Accepted Project BOOST sites begin the yearlong program with an in-person training conference with other BOOST sites. After the training, participants utilize a comprehensive toolkit to begin implementing their own programs, followed by ongoing mentoring with national experts in reducing readmissions and collaboration with other hospitals tackling similar challenges.

Details, educational resources, and free on-demand webinars are available at www.hospitalmedicine.org/projectboost.

Brendon Shank is SHM’s associate vice president of communications.

The Costs of Quality Care in Pediatric Hospital Medicine

“Dr. Chang? Oh my, it’s Dr. Chang! And his little son!” I called them “mall moments.” I would be at the local shopping mall with my father, picking up new clothes for the upcoming school year, when suddenly an elderly woman would approach. My father, despite his inability to remember my own birthday, would warmly grasp the woman’s hands, gaze into her eyes, ask about her family, then reminisce about her late husband and his last days in the hospital. After a few minutes, she would say something like, “Well, your father is the best doctor in Bakersfield, and you’ll be lucky to grow up to be just like him.”

And this would be fine, except the same scene would replay at the supermarket, the dry cleaners, and the local Chinese restaurant (the only place my father would eat out until he discovered the exotic pleasures of sushi). I wondered how my father ever got any errands done, with all his patients chatting with him along the way. Looking back on these “moments,” it is clear to me that this was my father’s measure of quality—his patients loved him. Other doctors loved him. The nurses—well, maybe not so much. He was a doctor’s doctor.

Quality measures? After working in his office, I only knew of two: The waiting room must be empty before the doors are closed and locked, and no patient ever gets turned away, for any reason. By seven o’clock in the evening, these measures got pretty old. But simple credos made him one of the most beloved physicians in Kern County, Calif.

Quality, in whatever form it takes, has a cost, however. My father divorced twice. My own “quality” time with him was spent making weekend rounds at the seemingly innumerable nursing homes around Bakersfield, Calif., although this was great olfactory training for my future career as a hospitalist. Many a parent’s day was spent with only my mother present, and I would be lying if I said I didn’t envy the other children with both parents doting over their science projects.

As we in pediatric hospital medicine (PHM) embark on a journey to define and promote quality in our care of children, we are well aware that adhering to our defined standards of quality will have a cost. What has been discussed less, but is perhaps even more elementary, is the cost of simply endeavoring to define and measure quality itself. This has not slowed down the onslaught of newly defined quality measures in PHM. Quality measures from the adult HM world, such as readmission rates, adherence to national guidelines, and communication with primary care providers, have been extracted and repurposed.

Attempts to extrapolate these measures to PHM have been less than successful. Alverson and O’Callaghan recently made a compelling case debunking readmission rates as a valid quality measure in PHM.1 Compliance with Children’s Asthma Care (CAC) measures was not found to decrease asthma-related readmissions or subsequent ED visits in a 2011 study, although a study published in 2012 showed an association between compliance with asthma action plans at discharge and lower readmission rates.2,3 Documentation of primary care follow-up for patients discharged from a free-standing children’s hospital actually increased the readmission rate (if that is believed to be a quality measure).4

Yet quality measures continue to be created, espoused, and studied. Payments to accountable care organizations (ACOs), hospitals, and individual providers are being tied to performance on quality measures. Medicare is considering quality measures that can be applied to PHM, which might affect future payments to children’s hospitals. Paciorkowski and colleagues recently described the development of 87 performance indicators specific to PHM that could be used to track quality of care on a division level, 79 of which were provider specific.5 A committee of pediatric hospitalists led by Paul Hain, MD, recently proposed a “dashboard” of metrics pertaining to descriptive, quality, productivity, and other data that could be used to compare PHM groups across the country.6 Many hospitalist groups already have instituted financial incentives tied to provider or group-specific quality measures.7 Pay-for-performance has arrived in adult HM and is now pulling out of the station: next stop, PHM.

Figure 1 source: Crosby PB. Quality Is Free: The Art of Making Quality Certain. New York: McGraw-Hill; 1979.

The Rest of the Cost Story

Like any labor-intensive process in medicine, defining, measuring, and improving quality has a cost. A 2007 survey of four urban teaching hospitals found that core QI activities required 1%-2% of the total operating revenue.8 The QI activity costs fall into the category of the “cost of good quality,” as defined by Philip Crosby in his book, Quality is Free (see Figure 1).9 Although hospital operations with better process “sigma” will have lower prevention and appraisal costs, these can never be fully eliminated.

Despite our attempts at controlling costs, most ongoing QI efforts focused on improving clinical quality alone are doomed to fail with regard to providing bottom-line cost reductions.10 QI efforts that focus on decreasing variability in the use of best practices, such as the National Surgical Quality Improvement Program (NSQIP), have brought both improved outcomes and reduced costs of complications.11 Not only do these QI efforts lower the “cost of poor quality,” but they may also provide less measurable benefits, such as reduced opportunity costs. Whether these efforts can pay for themselves by reducing the cost of poor quality remains speculative. Some HM authorities, such as Duke University Health CMO Thomas Owens, have made the case, especially to hospital administrators, for espousing a more formulaic return on investment (ROI) calculation for HM QI efforts, taking into account reduced opportunity costs.12

But measured costs tell only part of the story. For every new quality measure that is defined, there are also unmeasured costs to measuring and collecting evidence of quality. Being constantly measured and assessed often leads to a perceived loss of autonomy, and this can lead to burnout; more than 40% of respondents from local hospitalist groups in the most recent SHM Career Satisfaction Survey indicated that optimal autonomy was among the four most important factors for job satisfaction.13 The same survey found that hospitalists were least satisfied with organizational climate, autonomy, and availability of personal time.14

As many a hospitalist can relate, although involvement in QI processes is considered a cornerstone of hospitalist practice, increased time spent in a given QI activity rarely translates to increased compensation. Fourteen percent of hospitalists in a recent SHM Focused Survey reported not even having dedicated time for or being compensated for QI.

Which is not to say, of course, that defining and measuring quality is not a worthy pursuit. On the contrary, QI is a pillar of hospital medicine practice. A recent survey showed that 84% of pediatric hospitalists participated in QI initiatives, and 72% considered the variety of pursuits inherent in a PHM career as a factor influencing career choice.15 But just as we are now focused on choosing wisely in diagnosing and treating our patients, we should also be choosing wisely in diagnosing and treating our systems. What is true for our patients is true for our system of care—simply ordering the test can lead to a cascade of interventions that can be not only costly but also potentially dangerous for the patient.

Physician-defined quality measures in adult HM have now been adopted as yardsticks with which to measure all hospitals—and with which to punish those who do not measure up. In 1984, Dr. Earl Steinberg, then a professor of medicine at Johns Hopkins, published a seminal article in the New England Journal of Medicine describing potential cost savings to the Medicare program from reductions in hospital readmissions.16 This was the match that lit the fuse to what is now the Affordable Care Act Hospital Readmissions Reduction Program. Yet, this quality measure might not even be a quality measure of…quality. A 2013 JAMA study showed that readmission rates for acute myocardial infarction and pneumonia were not correlated with mortality, the time-tested gold standard for quality in medicine.17 That has not stopped Medicare from levying $227 million in fines on 2,225 hospitals across the country beginning Oct. 1, 2013 for excess readmissions in Year 2 of the Hospital Readmissions Reduction Program.18 It seems that we have built it, and they have come, and now they won’t leave.
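To put the size of those fines in perspective, the rough Python sketch below divides the total quoted above by the number of penalized hospitals. It is a back-of-the-envelope average only; actual penalties vary from hospital to hospital, and the function name is illustrative.

```python
def average_penalty(total_fines: float, hospitals: int) -> float:
    """Mean penalty per hospital; says nothing about how fines are distributed."""
    return total_fines / hospitals


# Figures quoted above: $227 million levied on 2,225 hospitals.
avg = average_penalty(total_fines=227_000_000, hospitals=2_225)
print(f"Average penalty per hospital: ${avg:,.0f}")
```

That works out to a little over $100,000 per penalized hospital on average.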

In Sum

What is the lesson for PHM? Assessing and improving quality of care remains a necessary cornerstone of PHM, but choosing meaningful quality measures is difficult and can have long-term consequences. The choices we make with regard to the direction of QI will, however, define the future of pediatric healthcare for decades to come. As such, we cannot waste both financial and human resources on defining and assessing quality measures that may sound superficially important but, in the end, are not reflective of the real quality of care provided to our patients.

My father, in his adherence to his own ideal of quality medical care, reaped the unintended consequences of his pursuit of quality medical care. Sometimes, though just sometimes, there are unintended consequences to the unintended consequences. I learned, and was perhaps inspired, just by watching him interact with patients and their families. Somehow I don’t think my own children will learn much by watching me interact with my computer.

Dr. Chang is pediatric editor of The Hospitalist. He is associate clinical professor of medicine and pediatrics at the University of California at San Diego (UCSD) School of Medicine, and a hospitalist at both UCSD Medical Center and Rady Children’s Hospital. Send comments and questions to [email protected].

Likelihood for Readmission of Hospitalized Medicare Patients with Multiple Chronic Conditions Up 600%

600%

The increased likelihood of 30-day hospital readmission for hospitalized Medicare patients who have 10 or more chronic conditions, compared with those who have only one to four chronic conditions.4 These patients with multiple chronic conditions represent only 8.9% of Medicare beneficiaries but account for 50% of all rehospitalizations. The numbers are drawn from a 5% sample of Medicare fee-for-service beneficiaries during the first nine months of 2008. Those with five to nine chronic conditions had 2.5 times the odds for being readmitted.

Larry Beresford is a freelance writer in Alameda, Calif.

- Shieh L, Pummer E, Tsui J, et al. Septris: improving sepsis recognition and management through a mobile educational game [abstract]. J Hosp Med. 2013;8(Suppl 1):1053.

- Mitchell SE, Gardiner PM, Sadikova E, et al. Patient activation and 30-day post-discharge hospital utilization. J Gen Intern Med. 2014;29(2):349-355.

- Daniels KR, Lee GC, Frei CR. Trends in catheter-associated urinary tract infections among a national cohort of hospitalized adults, 2001-2010. Am J Infect Control. 2014;42(1):17-22.

- Berkowitz SA, Anderson GF. Medicare beneficiaries most likely to be readmitted. J Hosp Med. 2013;8(11):639-641.

Campaign Seeks to Improve Small-Bore Tubing Misconnections

The American Society for Parenteral and Enteral Nutrition (ASPEN), the Global Enteral Device Supplier Association (GEDSA), and a number of other quality-oriented groups, including the FDA, Centers for Medicare & Medicaid Services (CMS), and the Joint Commission, are working to address tubing misconnections involving small-bore medical device connectors, which are used for enteral, luer, neuro-cranial, respiratory, and other medical tubing equipment.2

Misconnections, although rare, can be harmful or even fatal to patients. The task force conducted a panel discussion Oct. 22 in Washington, D.C., focused on redesign issues, and is collaborating with the International Organization for Standardization (ISO) to develop new small-bore connector standards.

GEDSA’s “Stay Connected” is an education campaign to inform and prepare the healthcare community for impending changes in standards for small-bore connectors. For more information, visit www.stayconnected2014.org.

Apply Now for Society of Hospital Medicine's Project BOOST

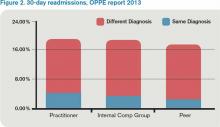

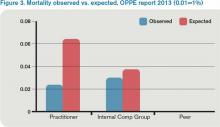

BOOST Makes a Difference

For more info, visit www.hospitalmedicine.org/boost.

Registration Still Open for Quality and Safety Educators Academy

Academic Hospitalists and Program Directors: There Is Still Time to Register for the Quality and Safety Educators Academy

Make sure your hospital is ready to meet the ACGME’s requirements that residency programs integrate quality and safety into their curriculum. The Quality and Safety Educators Academy (QSEA) is May 1-3 at the Tempe Mission Palms in Arizona.

For more info, visit www.hospitalmedicine.org/qsea.

Use SHM’s CODE-H Interactive to Avoid Coding Issues

Coding is a part of every hospitalist’s life, but tips from the experts can make that life easier, more efficient, and more compliant. That’s why SHM’s CODE-H program teaches hospitalists and hospitalist group managers and administrators how to stay up to date with the latest in the best practices of coding and documentation.

On March 20, coding expert Barbara Pierce, CCS-P, ACS-EM, will present an online session on some of the most important coding topics for hospitalists, including:

- Critical care;

- Prolonged services;

- Documentation when working with NPs and PAs;

- Teaching physician rules; and

- Tips to avoid billing issues and potential denials.

This session is the third in a series of seven that cover the full range of coding topics, from developing a compliance plan and internal auditing process to ICD-10, PQRS, and Medicare’s Physician Value-Based Payment Modifier.

CME credits are offered through post-tests following each webinar, and each participant is eligible for up to seven credits throughout the series. Up to 10 individuals in a group can sign up through a single registration.

For more information, visit www.hospitalmedicine.org/codeh.

BOOST Makes a Difference

Want to make a real difference in your hospital’s readmission rates? Now is the time to start compiling applications for SHM’s Project BOOST. Applications are due Aug. 30.

For more info, visit www.hospitalmedicine.org/boost.

Reflections on the Hospital Environment

Six years ago, after I had been in clinical practice for almost a decade, my career took several unusual turns that now have me sitting in the position of president of a 500-bed, full-service, very successful community hospital and referral center. While that has inevitably whittled my clinical time down to a mere fraction of what it used to be, I still spend a lot of time “on the dance floor,” although the steps are different at the bedside.

Whether you spend your day going from patient to patient or meeting to meeting, over time it’s nearly inevitable that you will lose some perspective and appreciation for the hospital settings that we have chosen to spend our careers in. From time to time, whether you are in clinical medicine or administration, take the time to step off that dance floor and get a different perspective, to reflect upon our hospital environment. It’s a critical skill for “systems-based thinkers.” Take a minute to reconnect and appreciate some extraordinary things about the places we work in.

Here are a handful of my own reflections:

Hospitals are remarkable places. Lives are transformed in hospitals—some by the miraculous skills and technology available, and some despite that technology. Last week, I saw a 23-week-old baby in our neonatal ICU, barely a pound, intubated, being tube-fed breast milk, with skin more delicate than tissue paper. When I was a medical student, such prematurity was simply incompatible with life.

We also walk patients and families through the end-of-life journey. To organize families and patients around such issues and help them find a path toward understanding and closure is a remarkable experience as well.

The difference between a good hospital and a great one is culture, not just “quality.” Over Labor Day, I went to my parents’ house outside Cincinnati. When I arrived, near midnight, my mother greeted my three children and me and then announced that she had to take my father to the hospital. Evidently, he had a skin/soft tissue infection that had gotten worse over the last couple of days, and when contacted that evening, his physician had made arrangements for him to be admitted directly to a nearby community hospital. It sure seemed to me that it would make more sense for me to take him to the hospital, so off we went.

I will say at this point that the quality of his care was fine. He was guided from registration to his room promptly. His IV antibiotics were started and were appropriately chosen. A surgeon saw him and debrided a large purulent lesion. The wound was packed, and he started feeling better. His pain was well controlled, and he went home a few days later with correct discharge instructions. There were no medication errors and no “near-misses” or harm events.

Yet, on that first night, no one was introduced by name or role. On the wheelchair ride up to the room, we passed at least six employees—four nurses or aides, a clerk, and a housekeeper. No one broke away from what they were doing (or not doing) to make eye contact, much less to smile or greet us. This hospital has EHR stations right in patient rooms, and the nurse and charge nurse stood in front of the machine, where we could hear them, complaining about the EHR. No one was able to step back from “the dance floor” of the minute-by-minute work and acknowledge the bummer reality that my father was going to spend Labor Day weekend in the hospital. And this is at a well-regarded community hospital, well-appointed with private rooms, in a relatively affluent community, with resources that most hospitals dream of. I left that night disappointed, not in the quality but in the culture.

Empathy matters. At the Cleveland Clinic, all employed physicians are now required to take a course called “Foundations of Healthcare Communication.” I recently took the class with about a dozen others. Our facilitator led us through several workshops and simulations of patients who were struggling with emotions: fear, uncertainty, anxiety. What struck me in participating in these workshops was our natural tendency as physicians, when in these situations, to try to “fix the problem.” We try to reassure, for instance, that a patient has “nothing to worry about,” that “everything will be fine,” or that “you are in good hands.”

While these statements may have a role, jumping to them as an immediate response misses a critical step: the acknowledgement of the fear, anxiety, or sense of hopelessness that our patients feel. It’s terribly difficult, when surrounded by so much sickness, to stay in touch with our ability to express empathy. Therefore, it’s all the more important to be able to step back and appreciate the need to do so.

Change is difficult—and hospitals are not airplanes. In healthcare, we are attempting to apply the principles of high reliability, continuous improvement, and “lean workflows” to our systems and to the bedside. This is absolutely necessary to improve patient safety and the outcomes and lives in our communities, with comparisons to the airline industry and other “high reliability” industries as benchmarks. I couldn’t agree more that our focus should not just be on prevention of errors; we should be eliminating them. Every central line-associated bloodstream infection, every “never event,” every patient who does not feel touched by our empathy—we should think of each of these as our industry’s equivalent of a “plane crash.”

As leaders, however, it’s critical that we step back and remember that healthcare is far behind in terms of integrated technologies and decision support—and more dependent on “human factors.” We are more complex, more variable, and more fallible.

A nurse arriving on his or her shift at my hospital is coming in to care for somewhere between four and seven patients, each of whom has different conditions, different complexities, different levels of understanding and expectation, different provider teams and family support. I am not sure that the comparison to the airline industry is appropriate, unless we level the playing field: How safe and reliable would air travel be if, until he or she sat down in the cockpit, the pilot had no idea what kind of plane he or she would be flying, how many of the flight crew had shown up, what the weather would be like on takeoff, or where the flight was even going? That is more similar to our reality at the bedside.

The answer, of course, is that the airline industry has made the decisions necessary to ensure that pilots, crew, and passengers are never in such situations. We need to re-engineer our own systems, even as they are more reliant upon these human factors. We also need the higher perspective to manage our teams through these extraordinarily difficult changes.

In Sum

I believe that the skills that successful physician leaders need come, either naturally or through self-selection, to many who work in hospital-based environments: teamwork, collaboration, communication, deference to expertise, and a focus on results. I also believe that the physicians who will stand out and become leaders in hospitals, systems, and policy will be those who are able to stand back, gain perspective, and organize teams and systems toward aspirational strategies that engage our idealism and empathy, and continuously raise the bar.

From my 15 years with SHM and hospital medicine, I’ve seen that our organization is full of such individuals. Those of us in administrative and hospital leadership positions are looking to all of you to learn and showcase those skills, and to lead the way forward to improve care for our patients and communities.

Dr. Harte is president of Hillcrest Hospital in Mayfield Heights, Ohio, part of the Cleveland Clinic Health System. He is associate professor of medicine at the Lerner College of Medicine in Cleveland and an SHM board member.
