The link between suicide and sleep

According to the Centers for Disease Control and Prevention, suicide is the 10th leading cause of mortality in the United States, with rates of suicide rising over the past 2 decades. In 2016, completed suicides accounted for approximately 45,000 deaths in the United States (Ivey-Stephenson AZ, et al. MMWR Surveill Summ. 2017;66[18]:1). While progress has been made to lower mortality rates of other leading causes of death, very little progress has been made on reducing the rates of suicide. The term “suicide,” as referred to in this article, encompasses suicidal ideation, suicidal behavior, and suicide death.

Researchers have been investigating potential risk factors and prevention strategies for suicide. The relationship between suicide and sleep disturbances, specifically insomnia and nightmares, has been well documented in the literature. Given that insomnia and nightmares are potentially modifiable risk factors, this relationship continues to be an area of active exploration for suicide rate reduction. While there are many different types of sleep disorders, including excessive daytime sleepiness, parasomnias, obstructive sleep apnea, and restless legs syndrome, this article will focus on the relationship of insomnia and nightmares with suicide.

Insomnia

Insomnia disorder, according to the American Psychiatric Association’s DSM-5, is a dissatisfaction with sleep quantity or quality that occurs at least three nights per week for a minimum of 3 months despite adequate opportunity for sleep. This may present as difficulty with falling asleep, staying asleep, or early morning awakenings. The sleep disturbance results in functional impairment or significant distress in at least one area of life (American Psychiatric Association. Arlington, Virginia: APA; 2013). While insomnia is often a symptom of many psychiatric disorders, research has shown that insomnia is an independent risk factor for suicide, even when controlling for mental illness. Studies have shown that there is up to a 2.4 relative risk of suicide death with insomnia after adjusting for depression severity (McCall W, et al. J Clin Sleep Med. 2013;9[2]:135).

Nightmares

Nightmares, as defined by the American Psychiatric Association’s DSM-5, are “typically lengthy, elaborate, story-like sequences of dream imagery that seem real and incite anxiety, fear, or other dysphoric emotions” (American Psychiatric Association. Arlington, Virginia: APA; 2013). They are common symptoms in posttraumatic stress disorder (PTSD), with up to 90% of individuals with PTSD experiencing nightmares following a traumatic event (Littlewood DL, et al. J Clin Sleep Med. 2016;12[3]:393). Nightmares have also been shown to be an independent risk factor for suicide when controlling for mental illness. Studies have shown that nightmares are associated with a 1.5- to 3-fold elevated risk of suicidal ideation and a 3- to 4-fold elevated risk of suicide attempts. The data suggest that nightmares may be a stronger risk factor for suicide than insomnia (McCall W, et al. Curr Psychiatr Rep. 2013;15[9]:389).

Proposed Mechanism

The mechanism linking insomnia and nightmares with suicide has been theorized and studied by researchers. Two of the most noteworthy proposed psychological mechanisms involve dysfunctional beliefs and attitudes about sleep and deficits in problem-solving capability. Dysfunctional beliefs and attitudes about sleep (DBAS) are negative cognitions pertaining to sleep, and they have been shown to be related to the intensity of suicidal ideation. Many of the DBAS are pessimistic thoughts with a “hopelessness flavor,” which leads to the perpetuation of insomnia. Hopelessness has been found to be a strong risk factor for suicide. In addition to DBAS, insomnia has also been shown to lead to impairments in complex problem solving. The lack of problem-solving skills in these patients may lead to fewer and lower-quality solutions during stressful situations and leave suicide as the perceived best or only option.

The biological theories focus on serotonin and hyperarousal mediated by the hypothalamic-pituitary-adrenal (HPA) axis. Serotonin is a neurotransmitter that is involved in the induction and maintenance of sleep. Of note, low levels of serotonin’s main metabolite, 5-hydroxyindoleacetic acid (5-HIAA), have been found in the cerebrospinal fluid of suicide victims. Evidence has also shown that sleep and the HPA axis are closely related. The HPA axis is activated by stress, leading to a cascade of hormones that can cause susceptibility to hyperarousal, REM alterations, and suicide. Hyperarousal, which is shared by PTSD and insomnia, can lead to hyperactivation of the noradrenergic systems in the medial prefrontal cortex, which can lead to a decrease in executive decision making (McCall W, et al. Curr Psychiatr Rep. 2013;15[9]:389).

Treatment Strategies

The benefit of treating insomnia and nightmares, with regard to reducing suicidality, continues to be an area of active research. Many previous studies have theorized that treating symptoms of insomnia and nightmares may indirectly reduce suicide. Pharmaceutical and nonpharmaceutical treatments are currently being used to treat patients with insomnia and nightmares, but the benefit for reducing suicidality is still unknown.

One of the main treatment modalities for insomnia is hypnotic medication; however, these medications carry their own potential risk for suicide. Reports of suicide death in conjunction with hypnotic medication have led the FDA to add warnings about the increased risk of suicide with these medications. Some of these medications include zolpidem, zaleplon, eszopiclone, doxepin, ramelteon, and suvorexant. A review of research studies and case reports was completed in 2017 and showed that there was an odds ratio of 2 to 3 for hypnotic use in suicide deaths. However, most of the studies that were reviewed reported potential confounding by the individual’s current mental health state. Furthermore, many of the suicide case reports that involved hypnotics also had additional substances detected, such as alcohol. Hypnotic medication has been shown to be an effective treatment for insomnia, but caution needs to be used when prescribing these medications. Strategies that may reduce the risk of an adverse outcome when using hypnotic medication include using the lowest effective dose and educating the patient not to combine the medication with alcohol or other sedative/hypnotics (McCall W, et al. Am J Psychiatry. 2017;174[1]:18).

For patients who have recurrent nightmares in the context of PTSD, the alpha-1 adrenergic receptor antagonist prazosin may provide some benefit; however, the literature is divided. There have been several randomized, placebo-controlled clinical trials with prazosin, which have shown a moderate to large effect for alleviating trauma-related nightmares and improving sleep quality. Some of the limitations of these studies were that the trials were small to moderate in size, and the length of the trials was 15 weeks or less. In 2018, Raskind and colleagues completed a follow-up randomized, placebo-controlled study for 26 weeks with 304 participants and did not find a significant difference between prazosin and placebo with regard to nightmares and sleep quality (Raskind MA, et al. N Engl J Med. 2018;378[6]:507).

Cognitive behavioral therapy for insomnia (CBT-I) and imagery rehearsal therapy (IRT) are two sleep-targeted therapy modalities that are evidence based. CBT-I targets dysfunctional beliefs and attitudes regarding sleep (McCall W, et al. J Clin Sleep Med. 2013;9[2]:135). IRT, on the other hand, specifically targets nightmares by having the patient write out a narrative of the nightmare and then re-script it with an alternative, less distressing ending. The patient then rehearses the new dream narrative before going to sleep. There is still insufficient evidence to determine if these therapies have benefit in reducing suicide (Littlewood DL, et al. J Clin Sleep Med. 2016;12[3]:393).

While the jury is still out on how best to target and treat the risk factors of insomnia and nightmares with regard to suicide, there are still steps that health-care providers can take to help keep their patients safe. During the patient interview, new or worsening insomnia and nightmares should prompt further investigation of suicidal thoughts and behaviors. After a thorough interview, treatment options, with a discussion of risks and benefits, can be tailored to the individual’s needs. Managing insomnia and nightmares may be one avenue of suicide prevention.

Drs. Locrotondo and McCall are with the Department of Psychiatry and Health Behavior at the Medical College of Georgia, Augusta University, Augusta, Georgia.

ECMO for ARDS in the modern era

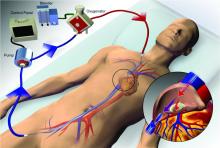

Extracorporeal membrane oxygenation (ECMO) has become increasingly accepted as a rescue therapy for severe respiratory failure from a variety of conditions, most commonly the acute respiratory distress syndrome (ARDS) (Thiagarajan R, et al. ASAIO. 2017;63[1]:60). ECMO can provide respiratory or cardiorespiratory support for failing lungs, heart, or both. The most common ECMO configuration used in ARDS is venovenous ECMO, in which blood is withdrawn from a catheter placed in a central vein, pumped through a gas exchange device known as an oxygenator, and returned to the venous system via another catheter. The blood flowing through the oxygenator is separated from a continuous supply of oxygen-rich sweep gas by a semipermeable membrane, across which diffusion-mediated gas exchange occurs, so that the blood exiting the oxygenator is rich in oxygen and low in carbon dioxide. As venovenous ECMO functions in series with the native circulation, the well-oxygenated blood exiting the ECMO circuit mixes with poorly oxygenated blood flowing through the lungs. Therefore, oxygenation is dependent on native cardiac output to achieve systemic oxygen delivery (Figure 1).

ECMO has been used successfully in adults with ARDS since the early 1970s (Hill JD, et al. N Engl J Med. 1972;286[12]:629-34) but, until recently, was limited to small numbers of patients at select global centers and associated with a high-risk profile. In the last decade, however, ECMO use has markedly increased, driven by improvements in ECMO circuit components that made the device safer and easier to use, encouraging worldwide experience during the 2009 influenza A (H1N1) pandemic (Davies A, et al. JAMA. 2009;302[17]:1888-95), and publication of the Efficacy and Economic Assessment of Conventional Ventilatory Support versus Extracorporeal Membrane Oxygenation for Severe Adult Respiratory Failure (CESAR) trial (Peek GJ, et al. Lancet. 2009;374[9698]:1351-63).

Despite its rapid growth, however, rigorous evidence supporting the use of ECMO has been lacking. The CESAR trial, while impressive in execution, had methodological issues that limited the strength of its conclusions. CESAR was a pragmatic trial that randomized 180 adults with severe respiratory failure from multiple etiologies to conventional management or transfer to an experienced, ECMO-capable center. CESAR met its primary outcome of improved survival without disability in the ECMO-referred group (63% vs 47%, relative risk [RR] 0.69; 95% confidence interval [CI] 0.05 to 0.97, P=.03), but not all patients in that group ultimately received ECMO. In addition, the use of lung protective ventilation was significantly higher in the ECMO-referred group, making it difficult to separate its benefit from that of ECMO. A conservative interpretation is that CESAR showed the clinical benefit of treatment at an ECMO-capable center, experienced in the management of patients with severe respiratory failure.
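The relative risk reported for CESAR is easier to read once the survival percentages are converted into event risks. The short Python sketch below is illustrative only: it uses the rounded percentages quoted above (63% and 47% surviving without disability), and the function name `relative_risk` is a hypothetical helper, not something taken from the trial. Because the inputs are rounded, the result only approximates the published RR of 0.69.

```python
def relative_risk(risk_treated: float, risk_control: float) -> float:
    """Ratio of the event risk in the treated group to that in the control group."""
    if risk_control <= 0:
        raise ValueError("control risk must be positive")
    return risk_treated / risk_control


# Rounded figures quoted in the text: survival without disability.
survival_ecmo_referred = 0.63
survival_conventional = 0.47

# The RR describes the unfavorable outcome (death or disability),
# so survival is converted into event risk first.
event_ecmo_referred = 1 - survival_ecmo_referred
event_conventional = 1 - survival_conventional

rr = relative_risk(event_ecmo_referred, event_conventional)
print(f"Approximate RR for death or disability: {rr:.2f}")
```

An RR below 1 here means the unfavorable outcome was less frequent in the ECMO-referred group than in the conventionally managed group.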

Not until the release of the Extracorporeal Membrane Oxygenation for Severe Acute Respiratory Distress Syndrome (EOLIA) trial earlier this year (Combes A, et al. N Engl J Med. 2018;378[21]:1965-75) did a modern, randomized controlled trial evaluating the use of ECMO itself exist. The EOLIA trial addressed the limitations of CESAR and randomized adult patients with early, severe ARDS to conventional, standard of care management that included a protocolized lung protective strategy in the control group vs immediate initiation of ECMO combined with an ultra-lung protective strategy (targeting end-inspiratory plateau pressure ≤24 cmH2O) in the intervention group. The primary outcome was all-cause mortality at 60 days. Of note, patients enrolled in EOLIA met entry criteria despite greater than 90% of patients receiving neuromuscular blockade and around 60% being treated with prone positioning at the time of randomization (importantly, 90% of control group patients ultimately underwent prone positioning).

EOLIA was powered to detect a 20% decrease in mortality in the ECMO group. Based on trial design and the results of the fourth interim analysis, the trial was stopped for futility after enrollment of 249 of a maximum 331 patients, as it was unlikely to reach that endpoint. Although a 20% mortality reduction was not achieved, 60-day mortality was notably lower in the ECMO-treated group (35% vs 46%, RR 0.76, 95% CI 0.55 to 1.04, P=.09). The key secondary outcome of risk of treatment failure (defined as death in the ECMO group and death or crossover to ECMO in the control group) favored the ECMO group with an RR of 0.62 (95% CI, 0.47 to 0.82; P<.001), as did other secondary endpoints such as days free of renal and other organ failure.
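The 60-day mortality figures can be expressed as relative and absolute differences with a few lines of arithmetic. The Python sketch below uses only the rounded percentages quoted above (35% and 46%); the variable names are illustrative, and the confidence interval is not recomputed because that would require per-group event counts, which are not given here.

```python
# Rounded 60-day mortality figures quoted in the text.
mortality_ecmo = 0.35
mortality_control = 0.46

# Relative risk: mortality with ECMO divided by mortality with conventional care.
relative_risk = mortality_ecmo / mortality_control

# Absolute risk reduction: difference in mortality, in proportion units.
absolute_risk_reduction = mortality_control - mortality_ecmo

# Relative risk reduction: proportional drop relative to the control group.
relative_risk_reduction = 1 - relative_risk

print(f"Relative risk: {relative_risk:.2f}")
print(f"Absolute risk reduction: {absolute_risk_reduction * 100:.0f} percentage points")
print(f"Relative risk reduction: {relative_risk_reduction * 100:.0f}%")
```

With these inputs the relative risk rounds to 0.76, in line with the reported value, and the absolute difference is 11 percentage points.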

A major limitation of the trial was that 35 (28%) of control group patients ultimately crossed over to ECMO, which diluted the effect of ECMO observed in the intention-to-treat analysis. Crossover occurred at clinician discretion an average of 6.5 days after randomization and after stringent criteria for crossover were met. These patients were incredibly ill, with a median oxygen saturation of 77%, rapidly worsening inotropic scores, and lactic acidosis; nine individuals had already suffered cardiac arrest, and six had received ECMO as part of extracorporeal cardiopulmonary resuscitation (ECPR), the initiation of venoarterial ECMO during cardiac arrest in an attempt to restore spontaneous circulation. Mortality was considerably worse in the crossover group than in the conventionally managed cohort overall, though, notably, 33% of patients who crossed over to ECMO still survived.

To estimate the effect of ECMO on survival times if crossover had not occurred, the authors performed a post-hoc, rank-preserving structural failure time analysis. Though this approach relies on assumptions about the effect of the treatment itself, it showed a hazard ratio for mortality in the ECMO group of 0.51 (95% CI 0.24 to 1.02, P=.055). Although the EOLIA trial was not positive by traditional interpretation, all three major analyses and all secondary endpoints suggest some degree of benefit in patients with severe ARDS managed with ECMO.

Importantly, ECMO was well tolerated (at least when performed at expert centers, as done in this trial). There were significantly more bleeding events and cases of severe thrombocytopenia in the ECMO-treated group, but massive hemorrhage, ischemic and hemorrhagic stroke, arrhythmias, and other complications were similar.

Where do we go from here? Based on the totality of information, it is reasonable to consider ECMO for cases of severe ARDS not responsive to conventional measures, such as a lung protective ventilator strategy, neuromuscular blockade, and prone positioning. Initiation of ECMO may also be reasonable before standard-of-care therapies are implemented, in order to permit safe transfer to an experienced center from a center not able to provide them.

Two take-away points: First, it is important to recognize that much of the clinical benefit derived from ECMO may be beyond its ability to normalize gas exchange and be due, at least in part, to the fact that ECMO allows the enhancement of proven lung protective ventilatory strategies. Initiation of ECMO and the “lung rest” it permits reduce the mechanical power applied to the injured alveoli and may attenuate ventilator-induced lung injury, cytokine release, and multiorgan failure that portend poor clinical outcomes in ARDS. Second, ECMO in EOLIA was conducted at expert centers with relatively low rates of complications.

It is too early to know how the critical care community will view ECMO for ARDS in light of EOLIA as well as a growing body of global ECMO experience, or how its wider application may impact the distribution and organization of ECMO centers. Regardless, of paramount importance in using ECMO as a treatment modality is optimizing patient management both prior to and after its initiation.

Dr. Agerstrand is Assistant Professor of Medicine, Director of the Medical ECMO Program, Columbia University College of Physicians and Surgeons, New York-Presbyterian Hospital.

Extracorporeal membrane oxygenation (ECMO) has become increasingly accepted as a rescue therapy for severe respiratory failure from a variety of conditions, though most commonly, the acute respiratory distress syndrome (ARDS) (Thiagarajan R, et al. ASAIO. 2017;63[1]:60). ECMO can provide respiratory or cardiorespiratory support for failing lungs, heart, or both. The most common ECMO configuration used in ARDS is venovenous ECMO, in which blood is withdrawn from a catheter placed in a central vein, pumped through a gas exchange device known as an oxygenator, and returned to the venous system via another catheter. The blood flowing through the oxygenator is separated from a continuous supply of oxygen-rich sweep gas by a semipermeable membrane, across which diffusion-mediated gas exchange occurs, so that the blood exiting it is rich in oxygen and low in carbon dioxide. As venovenous ECMO functions in series with the native circulation, the well-oxygenated blood exiting the ECMO circuit mixes with poorly oxygenated blood flowing through the lungs. Therefore, oxygenation is dependent on native cardiac output to achieve systemic oxygen delivery (Figure 1).

ECMO has been used successfully in adults with ARDS since the early 1970s (Hill JD, et al. N Engl J Med. 1972;286[12]:629-34) but, until recently, was limited to small numbers of patients at select global centers and associated with a high-risk profile. In the last decade, however, driven by improvements in ECMO circuit components making the device safer and easier to use, encouraging worldwide experience during the 2009 influenza A (H1N1) pandemic (Davies A, et al. JAMA. 2009;302[17]:1888-95), and publication of the Efficacy and Economic Assessment of Conventional Ventilatory Support versus Extracorporeal Membrane Oxygenation for Severe Adult Respiratory Failure (CESAR) trial (Peek GJ, et al. Lancet. 2009;374[9698]:1351-63), ECMO use has markedly increased.

Despite its rapid growth, however, rigorous evidence supporting the use of ECMO has been lacking. The CESAR trial, while impressive in execution, had methodological issues that limited the strength of its conclusions. CESAR was a pragmatic trial that randomized 180 adults with severe respiratory failure from multiple etiologies to conventional management or transfer to an experienced, ECMO-capable center. CESAR met its primary outcome of improved survival without disability in the ECMO-referred group (63% vs 47%, relative risk [RR] 0.69; 95% confidence interval [CI] 0.05 to 0.97, P=.03), but not all patients in that group ultimately received ECMO. In addition, the use of lung protective ventilation was significantly higher in the ECMO-referred group, making it difficult to separate its benefit from that of ECMO. A conservative interpretation is that CESAR showed the clinical benefit of treatment at an ECMO-capable center, experienced in the management of patients with severe respiratory failure.

The importance of diversity and inclusion in medicine

Diversity

There is growing appreciation for diversity and inclusion (DI) as drivers of excellence in medicine. CHEST also promotes excellence in medicine. Therefore, it is intuitive that CHEST promote DI. Diversity encompasses differences in gender, race/ethnicity, vocational training, age, sexual orientation, thought processes, etc.

Academic medicine is rich with examples of how diversity is critical to the health of our nation:

– Diverse student populations have been shown to improve our learners’ satisfaction with their educational experience.

– Diverse teams have been shown to be more capable of solving complex problems than homogeneous teams.

– Health care is moving toward a team-based, interprofessional model that values the contributions of a range of providers’ perspectives in improving patient outcomes.

– In biomedical research, investigators ask different research questions based on their own background and experiences. This implies that finding solutions to diseases that affect specific populations will require a diverse pool of biomedical researchers.

– Faculty diversity as a key component of excellence for medical education and research has been documented.

Diversity alone doesn’t drive inclusion. Noted diversity advocate Verna Myers stated, “Diversity is being invited to the party. Inclusion is being asked to dance.” In my opinion, diversity is the commencement of work, but inclusion helps complete the task.

Inclusion

An inclusive environment values the unique contributions all members bring. Teams with diversity of thought are more innovative as individual members with different backgrounds and points of view bring an extensive range of ideas and creativity to scientific discovery and decision-making processes. Inclusion leverages the power of our unique differences to accomplish our mutual goals. By valuing everyone’s perspective, we demonstrate excellence.

I recommend an article from the Harvard Business Review (HBR Feb 2017). The authors suggest several ways to promote inclusiveness: (1) ensuring team members speak up and are heard; (2) making it safe to propose novel ideas; (3) empowering team members to make decisions; (4) taking advice and implementing feedback; (5) giving actionable feedback; and (6) sharing credit for team success. If the team leader possesses at least three of these traits, 87% of team members say they feel welcome and included in their team; 87% say they feel free to express their views and opinions; and 74% say they feel that their ideas are heard and recognized. If the team leader possesses none of these traits, those percentages drop to 51%, 46%, and 37%, respectively. I believe this concept is applicable in medicine also.

Sponsors

What can we do to advance diversity and inclusion individually and in our individual institutions? A sponsor is a senior-level leader who advocates for key assignments, promotes the protégé, and puts his or her reputation on the line for the protégé’s advancement. This invigorates and drives engagement. One key to rising above the playing field for women and people of color is sponsorship. Being a sponsor does not mean one would recommend someone who is not qualified. It means one recommends or supports those who are capable of doing the job but would not otherwise be given the opportunity.

Ask yourself: Have I served as a sponsor? What would prevent me from being a sponsor? Do I believe in this concept?

Cause for Alarm

Numerous publications have recently discussed the crisis of the decline of black men entering medicine. In 1978, there were 1,410 black male applicants to medical school, and in 2014, there were 1,337. Additionally, the number of black male matriculants to medical school over more than 35 years has not surpassed the 1978 numbers. In 1978, there were 542 black male matriculants, and in 2014, there were 515 (J of Racial and Ethnic Health Disparities. 2017, 4:317-321). This report is thorough and insightful and illustrates the work that we must do to help improve this situation.

Dr. Marc Nivet, Association of American Medical Colleges (AAMC) Chief Diversity Officer, stated, “No other minority group has experienced such declines. The inability to find, engage, and develop candidates for careers in medicine from all members of our society limits our ability to improve health care for all.” I recommend you read the 2015 AAMC publication entitled: Altering the Course: Black Males in Medicine.

Health-care Disparities

Research suggests that the overall health of Americans has improved; however, disparities persist among many populations within the United States. Racial and ethnic minority populations have poorer access to care and worse outcomes than their white counterparts. The approximately 20% of the nation living in rural areas is less likely than those living in urban areas to receive preventive care and more likely to experience language barriers.

Individuals identifying as lesbian, gay, bisexual, or transgender are likely to experience discrimination in health-care settings. These individuals often face insurance-based barriers and are less likely to have a usual source of care than patients who identify as straight.

A 2002 report by the Institute of Medicine entitled: Unequal Treatment: What Healthcare Providers Need to Know about Racial and Ethnic Disparities in Healthcare is revealing. Its salient points include the following. It is generally accepted that a diverse workforce is a key component in the delivery of quality, competent care throughout the nation. Physicians from racial and ethnic backgrounds typically underrepresented in medicine are significantly more likely to practice primary care than white physicians and are more likely to practice in impoverished and medically underserved areas. Diversity in the physician workforce impacts the quality of care received by patients. Race concordance between patient and physician results in longer visits and increased patient satisfaction, and language concordance is positively associated with adherence to treatment among certain racial or ethnic groups.

Improving the patient experience or quality of care received also requires attention to education and training on cultural competence. By weaving together a diverse and culturally responsive pool of physicians working collaboratively with other health-care professionals, access and quality of care can improve throughout the nation.

CHEST cannot attain more racial diversity in our organization if we don’t have this diversity in medical education and training. This is why CHEST must be actively involved in addressing these issues.

Unconscious Bias

Despite many examples of how diversity enriches the quality of health care and health research, there is still much work to be done to address the human biases that impede our ability to benefit from diversity in medicine. While academic medicine has made progress toward addressing overt discrimination, unconscious bias (implicit bias) represents another threat. Unconscious bias describes the prejudices we don’t know we have. While unconscious biases vary from person to person, we all possess them. The existence of unconscious bias in academic medicine, while uncomfortable and unsettling, is a reality. The AAMC developed an unconscious bias learning lab for the health professions and produced an oft-cited video about addressing unconscious bias in the faculty advancement, promotion, and tenure process. We must consider this and other ways in which we can help promote the acknowledgment of unconscious bias. The CHEST staff have undergone unconscious bias training, and I recommend it for all faculty in academic medicine.

Summary

Diversity and inclusion in medicine is of paramount importance. It leads to better patient care and better trainee education and will decrease health-care disparities. Progress has been made, but there is more work to be done.

CHEST supports these efforts. It has worked on them previously and, over the past 2 years, with a renewed push, first through the DI Task Force and now through the DI Roundtable, which has representatives from each of the standing committees, including the Board of Regents. This roundtable group will help advance the DI initiatives of the organization. I ask that each person reading this article consider what we as individuals can do to help make DI in medicine a priority.

Dr. Haynes is Professor of Medicine at The University of Mississippi Medical Center in Jackson, MS. He is also the Executive Vice Chair of the Department of Medicine. At CHEST, he is a member of the training and transitions committee and the executive scientific program committee, former chair of the diversity and inclusion task force, and current chair of the diversity and inclusion roundtable.

Diversity

There is growing appreciation for diversity and inclusion (DI) as drivers of excellence in medicine. CHEST also promotes excellence in medicine. Therefore, it is intuitive that CHEST promote DI. Diversity encompasses differences in gender, race/ethnicity, vocational training, age, sexual orientation, thought processes, etc.

Academic medicine is rich with examples of how diversity is critical to the health of our nation:

– Diverse student populations have been shown to improve our learners’ satisfaction with their educational experience.

– Diverse teams have been shown to be more capable of solving complex problems than homogenous teams.

– Health care is moving toward a team-based, interprofessional model that values the contributions of a range of providers’ perspectives in improving patient outcomes.

– In biomedical research, investigators ask different research questions based on their own background and experiences. This implies that finding solutions to diseases that affect specific populations will require a diverse pool of biomedical researchers.

– Faculty diversity as a key component of excellence for medical education and research has been documented.

Diversity alone doesn’t drive inclusion. Noted diversity advocate, Verna Myers, stated, “Diversity is being invited to the party. Inclusion is being asked to dance.” In my opinion, diversity is the commencement of work, but inclusion helps complete the task.

Inclusion

An inclusive environment values the unique contributions all members bring. Teams with diversity of thought are more innovative as individual members with different backgrounds and points of view bring an extensive range of ideas and creativity to scientific discovery and decision-making processes. Inclusion leverages the power of our unique differences to accomplish our mutual goals. By valuing everyone’s perspective, we demonstrate excellence.

I recommend an article from the Harvard Business Review (HBR Feb 2017). The authors suggest several ways to promote inclusiveness: (1) ensuring team members speak up and are heard; (2) making it safe to propose novel ideas; (3) empowering team members to make decisions; (4) taking advice and implementing feedback; (5) giving actionable feedback; and ( 6) sharing credit for team success. If the team leader possesses at least three of these traits, 87% of team members say they feel welcome and included in their team; 87% say they feel free to express their views and opinions; and 74% say they feel that their ideas are heard and recognized. If the team leader possessed none of these traits, those percentages dropped to 51%, 46%, and 37%, respectively. I believe this concept is applicable in medicine also.

Sponsors

What can we do to advance diversity and inclusion individually and in our individual institutions? A sponsor is a senior level leader who advocates for key assignments, promotes for and puts his or her reputation on the line for the protégé’s advancement. This invigorates and drives engagement. One key to rising above the playing field for women and people of color is sponsorship. Being a sponsor does not mean one would recommend someone who is not qualified. It means one recommends or supports those who are capable of doing the job but would not otherwise be given the opportunity.

Ask yourself: Have I served as a sponsor? What would prevent me from being a sponsor? Do I believe in this concept?

Cause for Alarm

Numerous publications have recently discussed the crisis of the decline of black men entering medicine. In 1978, there were 1,410 black male applicants to medical school, and in 2014, there were 1,337. Additionally, the number of black male matriculants to medical school over more than 35 years has not surpassed the 1978 numbers. In 1978, there were 542 black male matriculants, and in 2014, there were 515 (J of Racial and Ethnic Health Disparities. 2017, 4:317-321). This report is thorough and insightful and illustrates the work that we must do to help improve this situation.

Dr. Marc Nivet, Association of American Medical Colleges (AAMC) Chief Diversity Officer, stated “No other minority group has experienced such declines. The inability to find, engage, and develop candidates for careers in medicine from all members of our society limits our ability to improve health care for all.” I recommend you read the 2015 AAMC publication entitled: Altering the Course: Black Males in Medicine.

Health-care Disparities

Research suggests that the overall health of Americans has improved; however, disparities persist among many populations within the United States. Racial and ethnic minority populations have poorer access to care and worse outcomes than their white counterparts. The approximately 20% of the nation’s population living in rural areas is less likely than those living in urban areas to receive preventive care and more likely to experience language barriers.

Individuals identifying as lesbian, gay, bisexual, or transgender are likely to experience discrimination in health-care settings. These individuals often face insurance-based barriers and are less likely to have a usual source of care than patients who identify as straight.

A 2002 report by the Institute of Medicine entitled Unequal Treatment: What Healthcare Providers Need to Know about Racial and Ethnic Disparities in Healthcare is revealing. Its salient points include the following. It is generally accepted that a diverse workforce is a key component in the delivery of quality, competent care throughout the nation. Physicians from racial and ethnic backgrounds typically underrepresented in medicine are significantly more likely to practice primary care than white physicians and are more likely to practice in impoverished and medically underserved areas. Diversity in the physician workforce impacts the quality of care received by patients. Race concordance between patient and physician results in longer visits and increased patient satisfaction, and language concordance is positively associated with adherence to treatment among certain racial or ethnic groups.

Improving the patient experience or quality of care received also requires attention to education and training on cultural competence. By weaving together a diverse and culturally responsive pool of physicians working collaboratively with other health-care professionals, access and quality of care can improve throughout the nation.

CHEST cannot attain more racial diversity in our organization if we don’t have this diversity in medical education and training. This is why CHEST must be actively involved in addressing these issues.

Unconscious Bias

Despite many examples of how diversity enriches the quality of health care and health research, there is still much work to be done to address the human biases that impede our ability to benefit from diversity in medicine. While academic medicine has made progress toward addressing overt discrimination, unconscious bias (implicit bias) represents another threat. Unconscious bias describes the prejudices we don’t know we have. While unconscious biases vary from person to person, we all possess them. The existence of unconscious bias in academic medicine, while uncomfortable and unsettling, is a reality. The AAMC developed an unconscious bias learning lab for the health professions and produced an oft-cited video about addressing unconscious bias in the faculty advancement, promotion, and tenure process. We must consider this and other ways in which we can help promote the acknowledgment of unconscious bias. The CHEST staff have undergone unconscious bias training, and I recommend it for all faculty in academic medicine.

Summary

Diversity and inclusion in medicine are of paramount importance. They lead to better patient care and better trainee education and will decrease health-care disparities. Progress has been made, but there is more work to be done.

CHEST is supportive of these efforts and has worked on them previously. It has made a renewed push in the past 2 years, initially with the DI Task Force and now with the DI Roundtable, which has representatives from each of the standing committees, including the Board of Regents. This roundtable group will help advance the DI initiatives of the organization. I ask that each person reading this article consider what we as individuals can do to help make DI in medicine a priority.

Dr. Haynes is Professor of Medicine at The University of Mississippi Medical Center in Jackson, MS. He is also the Executive Vice Chair of the Department of Medicine. At CHEST, he is a member of the training and transitions committee and the executive scientific program committee, the former chair of the diversity and inclusion task force, and the current chair of the diversity and inclusion roundtable.

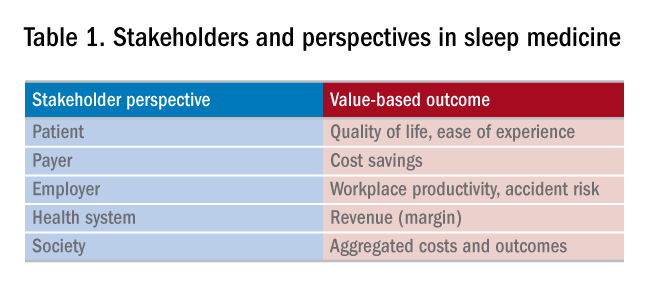

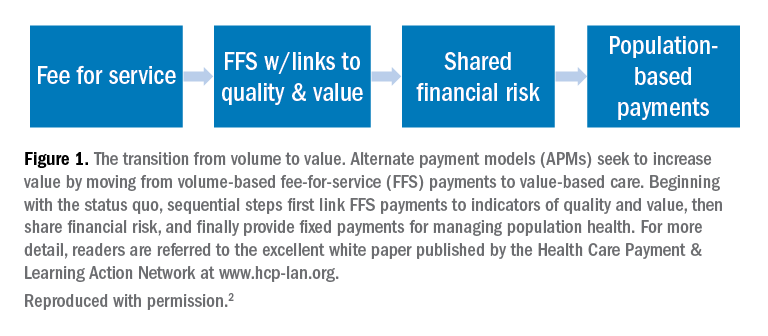

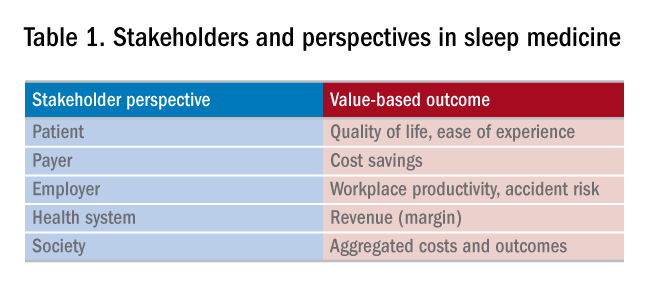

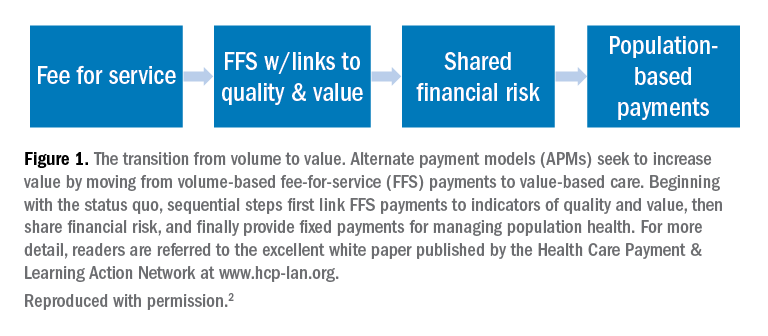

Value-based sleep: understanding and maximizing value in sleep medicine care

In addition to well-documented health consequences, obstructive sleep apnea (OSA) is associated with substantial economic costs borne by patients, payers, employers, and society at large. For example, in a recent white paper commissioned by the American Academy of Sleep Medicine, the total societal-level costs of OSA were estimated to exceed $150 billion per year in the United States alone. In addition to direct costs associated with OSA diagnosis and treatment, indirect costs were estimated at $86.9 billion for lost workplace productivity; $30 billion for increased health-care utilization (HCU); $26.2 billion for motor vehicle crashes (MVC); and $6.5 billion for workplace accidents and injuries.1

More important, evidence suggests that OSA treatments have a positive economic impact, for example, by reducing health-care utilization and days missed from work. Our group at the University of Maryland is currently heavily involved in related research examining the health economic impact of sleep disorders and their treatments.

Value-based sleep is a concept that I created several years ago to guide a greater emphasis on health economic outcomes in order to advance our field. In addition to working with payers, industry partners, employers, and forward-thinking startups, we are investing much effort into provider education regarding the health economic aspects of sleep. This article examines what value-based sleep is, how to increase the value of sleep in your practice setting, and steps to prepare for payment models of the future.