
Recruiting Hospital Patients for Research

Randomized controlled trials (RCTs) generally provide the most rigorous evidence for clinical practice guidelines and quality‐improvement initiatives. However, 2 major shortcomings limit the ability to broadly apply these results to the general population. One has to do with sampling bias (due to subject consent and inclusion/exclusion criteria) and the other with potential differences between participants and eligible nonparticipants. The latter may be of particular importance in trials of behavioral interventions (rather than medication trials), which often require substantial participant effort.

First, individuals who provide written consent to participate in RCTs of behavioral interventions typically represent a minority of those approached and therefore may not be representative of the target population. Although the consenting proportion is often not disclosed, some estimate that only 35%–50% of eligible subjects typically participate.[1, 2, 3] These estimates mirror the authors' prior experience with a 55.2% consent rate among subjects approached for a Medicare quality‐improvement behavioral intervention.[3] Though the literature is sparse, it suggests that eligible individuals who decline to participate in either interventions or usual care may differ from participants in their perception of intervention risks and effort[4] or in their levels of self‐efficacy or confidence in recovery.[5, 6] Relatively low enrollment rates mean that much of the population remains unstudied; however, evidence‐based interventions are often applied to populations broader than those included in the original analyses.

Additionally, although some nonparticipants may correctly decide that they do not need the assistance of a proposed intervention and therefore decline to participate, others may inappropriately judge the intervention's potential benefit and applicability when declining. In other words, electing to not participate in a study, despite eligibility, may reflect more than a refusal of inconvenience, disinterest, or desire to contribute to knowledge; for some individuals it may offer a proxy statement about health knowledge, personal beliefs, attitudes, and needs, including perceived stress,[5] cultural relevance,[7, 8] and literacy/health literacy.[9, 10] Characterizing these patients can help us to modify recruitment approaches and improve participation so that participants better represent the target population. If these differences also relate to patients' adherence to care recommendations, a more nuanced understanding could improve ways to identify and engage potentially nonadherent patients to improve health outcomes.

We hypothesized that we could identify characteristics that differ between behavioral‐intervention participants and eligible nonparticipants using a set of screening questions. We proposed that these characteristics, including constructs related to perceived stress, recovery expectation, health literacy, insight into and action on advance care planning, and confusion by any question, would predict the likelihood of consenting to a behavioral intervention requiring substantial subject engagement. Some of these characteristics may relate to adherence to preventive care or treatment recommendations. We did not specifically hypothesize about the distribution of demographic differences.

METHODS

Study Design

Prospective observational study conducted within a larger behavioral intervention.

Screening Question Design

We adapted our screening questions from several previously validated surveys, selecting questions related to perceived stress and self‐efficacy,[11] recovery expectations, health literacy/medication label interpretation,[12] and discussing advance directives (Table 1). Some of these characteristics may relate to adherence to preventive care or treatment programs[13, 14] or to clinical outcomes.[15, 16]

| Screening Question | Adapted From Original Validated Question | Source | Construct |
|---|---|---|---|
| In the last week, how often have you felt that you are unable to control the important things in your life? (Rarely, sometimes, almost always) | In the last month, how often have you felt that you were unable to control the important things in your life? (Never, almost never, sometimes, fairly often, very often) | Adapted from the Perceived Stress Scale (PSS‐14).[11] | Perceived stress, self‐efficacy |
| In the last week, how often have you felt that difficulties were piling up so high that you could not overcome them? (Rarely, sometimes, almost always) | In the last month, how often have you felt difficulties were piling up so high that you could not overcome them? (Never, almost never, sometimes, fairly often, very often) | Adapted from the Perceived Stress Scale (PSS‐14).[11] | Perceived stress, self‐efficacy |
| How sure are you that you can go back to the way you felt before being hospitalized? (Not sure at all, somewhat sure, very sure) | | Courtesy of Phil Clark, PhD, University of Rhode Island, drawing on research on resilience. Similar questions are used in other studies, including studies of postsurgical recovery.[29, 30, 31] | Recovery expectation, resilience |
| Even if you have not made any decisions, have you talked with your family members or doctor about what you would want for medical care if you could not speak for yourself? (Yes, no) | | Based on consumer‐targeted materials on advance care planning. | Advance care planning |
| (Show patient a picture of prescription label.) How many times a day should someone take this medicine? (Correct, incorrect) | (Show patient a picture of ice cream label.) If you eat the entire container, how many calories will you eat? (Correct, incorrect) | Adapted from Pfizer's Clear Health Communication: The Newest Vital Sign.[12] | Health literacy |

Prior to administering the screening questions, we performed cognitive testing with residents of an assisted‐living facility (N=10), a population that resembles our study's target population. In response to cognitive testing, we eliminated a question not interpreted easily by any of the participants, identified wording changes to clarify questions, simplified answer choices for ease of response (especially because questions are delivered verbally), and moved the most complicated (and potentially most embarrassing) question to the end, with more straightforward questions toward the beginning. We also substantially enlarged the image of a standard medication label to improve readability. Our final tool included 5 questions (Table 1).

The final instrument prompted coaches to record patient confusion. Additionally, the advance‐directive question included a "refused to answer" option and the medication question included "unable to answer (needs glasses, too tired, etc.)," a potential marker of low health literacy if used as an excuse to avoid embarrassment.[17]

Setting

We recruited inpatients at 5 Rhode Island acute‐care hospitals, including 1 community hospital, 3 teaching hospitals, and a tertiary‐care center and teaching hospital, ranging from 174 beds to 719 beds. Recruitment occurred from November 2010 to April 2011. The hospitals' respective institutional review boards approved the screening questions.

Study Population

We recruited a convenience sample of consecutively identified hospitalized Medicare fee‐for‐service beneficiaries, identified as (1) eligible for the subsequent behavioral intervention based on inpatient census lists and (2) willing to discuss an offer for a home‐based behavioral intervention. The behavioral intervention, based on the Care Transitions Intervention and described elsewhere,[3, 18] included a home visit and 2 phone calls (each about 1 hour). Coaches used a personal health record to help patients and/or caregivers better manage their health by (1) being able to list their active medical conditions and medications and (2) understanding warning signs indicating a need to reach out for help, including getting a timely medical appointment after hospitalization. The population for the present study included individuals approached to discuss participation in the behavioral intervention who also agreed to answer the screening questions.

Inclusion/Exclusion Criteria

We included hospitalized Medicare fee‐for‐service beneficiaries. We excluded patients who were current long‐term care residents, were to be discharged to long‐term or skilled care, or had a documented hospice referral. We also excluded patients with limited English proficiency or who were judged to have inadequate cognitive function, unless a caregiver agreed to receive the intervention as a proxy. We made these exclusions when recruiting for the behavioral intervention. Because we presented the screening questions to a subset of those approached for the behavioral intervention, we did not further exclude anyone. In other words, we offered the screening questions to all 295 people we approached during this study time period (100%).

Screening‐Question Study Process

Coaches asked patients to answer the 5 screening questions immediately after offering them the opportunity to participate in the behavioral intervention, regardless of whether or not they accepted the behavioral intervention. This study examines the subset of patients approached for the behavioral intervention who verbally consented to answer the screening questions.

Data Sources and Covariates

We analyzed primary data from the screening questions and behavioral intervention (for those who consented to participate), as well as Medicare claims and Medicaid enrollment data. We matched screening‐question data from November 2010 through April 2011 with Medicare Part A claims from October 2010 through May 2011 to calculate 30‐day readmission rates.

We obtained the following information for patients offered the behavioral intervention: (1) responses to screening questions, (2) whether patients consented to the behavioral intervention, (3) exposure to the behavioral intervention, and (4) recruitment date. Medicare claims data included (1) admission and discharge dates to calculate the length of stay, (2) index diagnosis, (3) hospital, and (4) site of discharge. Medicare enrollment data provided information on (1) Medicaid/Medicare dual‐eligibility status, (2) sex, and (3) patient‐reported race. We matched data based on patient name and date of birth. Our primary outcome was consent to the behavioral intervention. Secondarily, we reviewed posthospital utilization patterns, including hospital readmission, emergency‐department use, and use of home‐health services.

Statistical Analysis

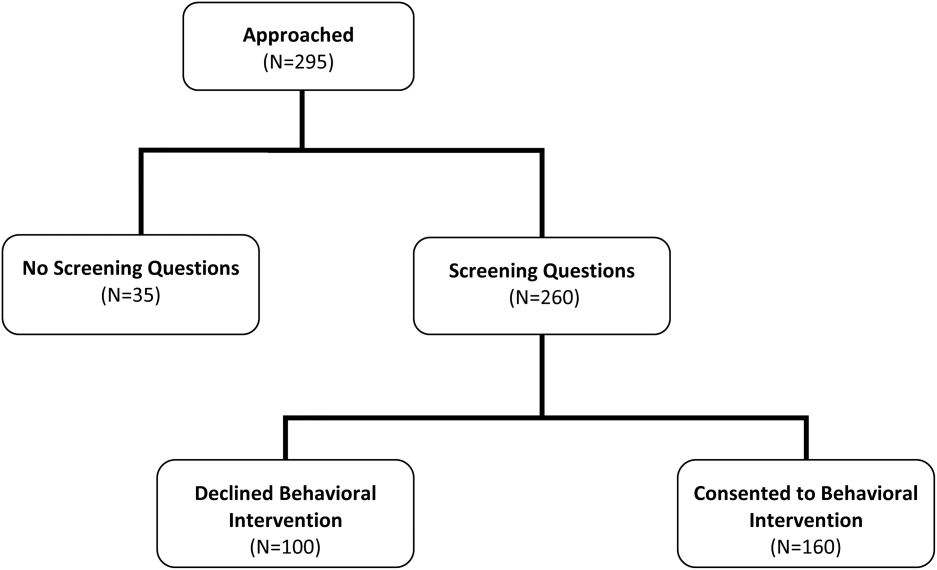

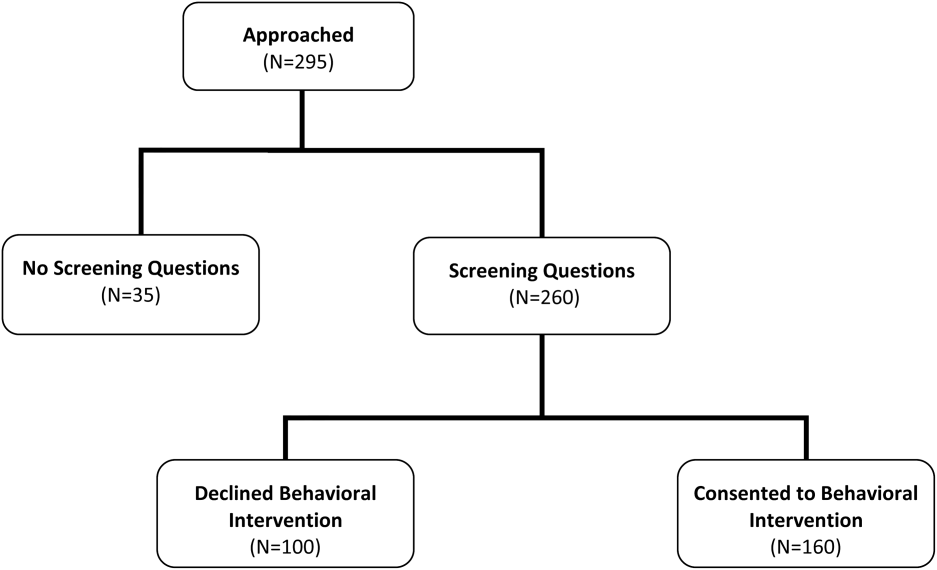

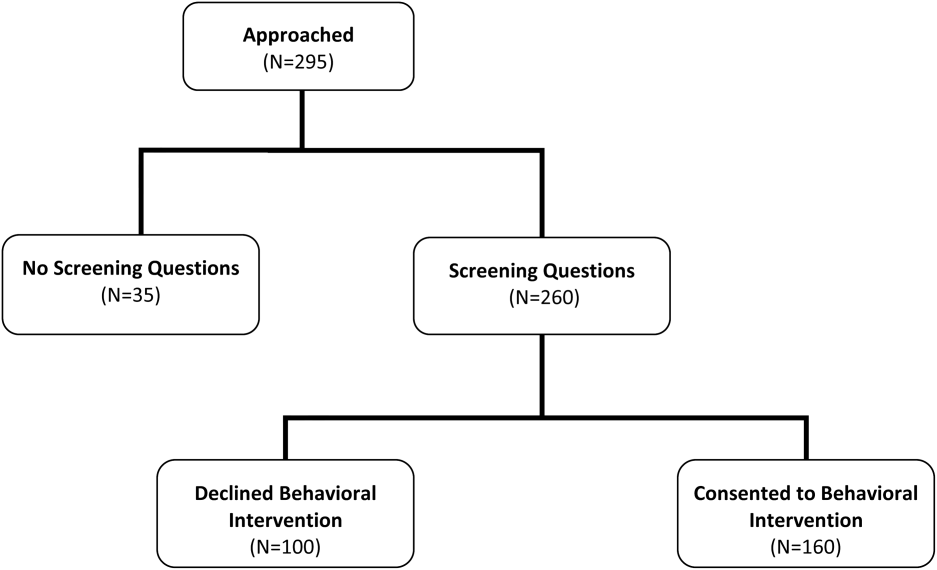

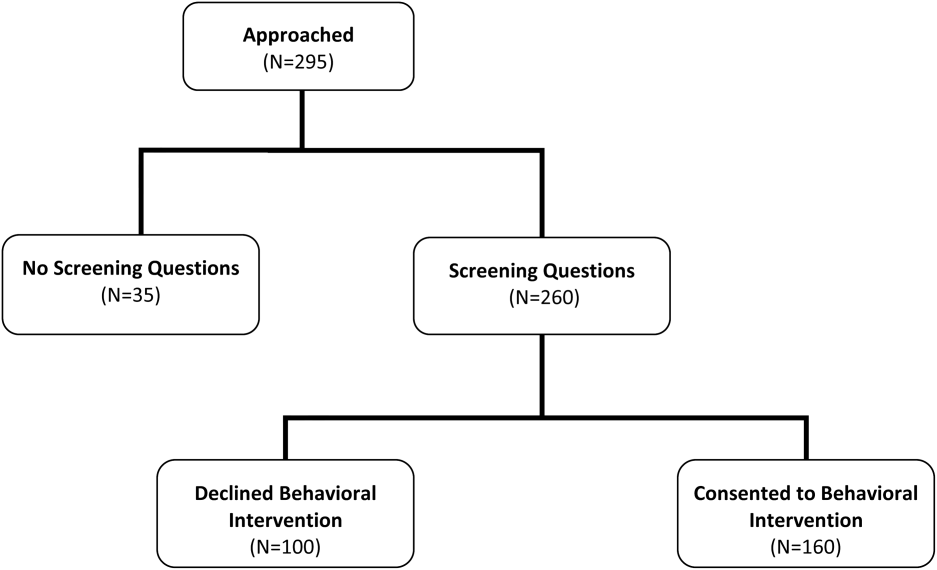

We categorized patients into 2 groups (Figure 1): participants (consented to the behavioral intervention) and nonparticipants (eligible for the behavioral intervention but declined to participate). We excluded responses for those confused by the question (no response). For the response scales "rarely, sometimes, almost always" and "not sure at all, somewhat sure, very sure," we isolated the most negative response, grouping the middle and most positive responses (Table 2). For the medication‐label question, we grouped "incorrect" and "unable to answer (needs glasses, too tired, etc.)" responses. We compared demographic differences between behavioral intervention participants and nonparticipants using χ² tests (categorical variables) and Student t tests (continuous variables). We then used multivariate logistic regression to analyze differences in consent to the behavioral intervention based on screening‐question responses, adjusting for demographics that differed significantly in the bivariate comparisons.
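The response grouping described above can be illustrated with a short Python sketch. This is not the study's SAS code: the question keys, the function name, and the 1/0/None coding are hypothetical choices made only for illustration.

```python
# Hypothetical recoding helper; names and coding are illustrative assumptions,
# not taken from the study's SAS programs.
MOST_NEGATIVE = {
    "control": "almost always",      # unable to control important things
    "overwhelmed": "almost always",  # difficulties piling up
    "recovery": "not sure at all",   # confidence in recovery
}


def dichotomize(question, response, confused=False):
    """Return 1 for the most negative response, 0 otherwise, None if excluded."""
    if confused or response is None:
        # Confused patients (no response) are excluded from that question.
        return None
    answer = response.strip().lower()
    if question == "medication_label":
        # "Incorrect" and "unable to answer" are grouped together.
        return 1 if answer in {"incorrect", "unable to answer"} else 0
    # Middle and most positive responses are grouped as 0.
    return 1 if answer == MOST_NEGATIVE[question] else 0


print(dichotomize("control", "Sometimes"))                  # middle response
print(dichotomize("recovery", "Not sure at all"))           # most negative response
print(dichotomize("medication_label", "Unable to answer"))  # grouped with incorrect
```

Coding the most negative response as 1 is arbitrary; what matters is that the middle and most positive answers share one category.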

| Screening‐Question Response | Adjusted OR (95% CI) | P Value |
|---|---|---|
| In the last week, how often have you felt that you are unable to control the important things in your life? | | |
| Out of control (Almost always) | 0.35 (0.14‐0.92) | 0.034a |
| In control (Sometimes, rarely) | 1.00 (Ref) | |
| In the last week, how often have you felt that difficulties were piling up so high that you could not overcome them? | | |
| Overwhelmed (Almost always) | 0.41 (0.16‐1.07) | 0.069 |
| Not overwhelmed (Sometimes, rarely) | 1.00 (Ref) | |
| How sure are you that you can go back to the way you felt before being hospitalized? | | |
| Not confident (Not sure at all) | 0.17 (0.06‐0.45) | 0.001a |
| Confident (Somewhat sure, very sure) | 1.00 (Ref) | |
| Even if you have not made any decisions, have you talked with your family members or doctor about what you would want for medical care if you could not speak for yourself? | | |
| No | 0.45 (0.13‐1.64) | 0.227 |
| Yes | 1.00 (Ref) | |
| How many times a day should someone take this medicine? (Show patient a medication label) | | |
| Incorrect answer | 3.82 (1.12‐13.03) | 0.033a |
| Correct answer | 1.00 (Ref) | |
| Confused by any question? | | |
| Yes | 0.11 (0.05‐0.24) | 0.001a |
| No | 1.00 (Ref) | |

The authors used SAS version 9.2 (SAS Institute, Inc., Cary, NC) for all analyses.
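For readers less familiar with the method, adjusted odds ratios of the kind reported in Table 2 correspond to a logistic model of the following generic form. The notation is illustrative and does not come from the original analysis: $p_i$ is the probability that patient $i$ consents, $x_i$ indicates the most negative response to a single screening question, and $\mathbf{z}_i$ collects the adjustment covariates. The interval shown is the usual Wald interval, which is an assumption, because the text does not state how the confidence intervals were computed.

```latex
\begin{aligned}
\log\frac{p_i}{1-p_i} &= \beta_0 + \beta_1 x_i + \boldsymbol{\gamma}^{\top}\mathbf{z}_i,\\
\widehat{\mathrm{OR}}_{\mathrm{adj}} &= e^{\hat{\beta}_1},\\
95\%\ \mathrm{CI} &= \exp\!\left(\hat{\beta}_1 \pm 1.96\,\mathrm{SE}(\hat{\beta}_1)\right).
\end{aligned}
```

An interval that lies entirely below or above 1 corresponds to a coefficient that differs significantly from 0 at the 5% level.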

RESULTS

Of the 295 patients asked to complete the screening questions, 260 (88.1%) consented to answer the screening questions and 35 (11.9%) declined. More than half of those who answered the screening questions consented to participate in the behavioral intervention (160; 61.5%) (Figure 1). When compared with nonparticipants, participants in the behavioral intervention were younger (25.6% aged ≥85 years vs 40% aged ≥85 years, P=0.028), had a longer average length of hospital stay (7.9 vs 6.1 days, P=0.008), were more likely to be discharged home without clinical services (35.0% vs 23.0%, P=0.041), and were unevenly distributed between the 5 recruitment‐site hospitals, coming primarily from the teaching hospitals (P<0.001) (Table 3). There were no significant differences based on race, sex, dual‐eligible Medicare/Medicaid status, presence of a caregiver, or index diagnosis.

| Patient Characteristics | Declined (n=100) | Consented (n=160) | P Value |
|---|---|---|---|
| Male, n (%) | 34 (34.0) | 52 (32.5) | 0.803 |
| Race, n (%) | | | |
| White | 94 (94.0) | 151 (94.4) | 0.691 |
| Black | 2 (2.0) | 5 (3.1) | |
| Other | 4 (4.0) | 4 (2.5) | |
| Age, n (%), y | | | |
| <65 | 17 (17.0) | 23 (14.4) | 0.028a |
| 65–74 | 14 (14.0) | 42 (26.3) | |
| 75–84 | 29 (29.0) | 54 (33.8) | |
| ≥85 | 40 (40.0) | 41 (25.6) | |
| Dual eligible, n (%)b | 11 (11.0) | 24 (15.0) | 0.358 |
| Caregiver present, n (%) | 17 (17.0) | 34 (21.3) | 0.401 |
| Length of stay, mean (SD), d | 6.1 (4.1) | 7.9 (4.8) | 0.008a |
| Index diagnosis, n (%) | | | |
| Acute MI | 3 (3.0) | 6 (3.8) | 0.806 |
| CHF | 6 (6.0) | 20 (12.5) | 0.111 |
| Pneumonia | 7 (7.0) | 9 (5.6) | 0.572 |
| COPD | 6 (6.0) | 6 (8.8) | 0.484 |
| Discharged home without clinical services, n (%)c | 23 (23.0) | 56 (35.0) | 0.041a |
| Hospital site | | | |
| Hospital 1 | 15 (15.0) | 43 (26.9) | <0.001a |
| Hospital 2 | 20 (20.0) | 26 (16.3) | |
| Hospital 3 | 15 (15.0) | 23 (14.4) | |
| Hospital 4 | 2 (2.0) | 48 (30.0) | |
| Hospital 5 | 48 (48.0) | 20 (12.5) | |
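As a worked illustration of the bivariate comparisons in Table 3, the Python sketch below computes a Pearson χ² test for one row of the table (discharged home without clinical services). It is a minimal sketch, not the authors' SAS procedure. The function name is hypothetical, and the test is computed without a continuity correction, which is an assumption, because the text does not say whether one was applied.

```python
import math


def chi_square_2x2(a, b, c, d):
    """Pearson chi-square (no continuity correction) for the table [[a, b], [c, d]]."""
    n = a + b + c + d
    row_totals = (a + b, c + d)
    col_totals = (a + c, b + d)
    observed = ((a, b), (c, d))
    stat = 0.0
    for i in range(2):
        for j in range(2):
            expected = row_totals[i] * col_totals[j] / n
            stat += (observed[i][j] - expected) ** 2 / expected
    # With 1 degree of freedom, the upper-tail probability is erfc(sqrt(stat / 2)).
    p_value = math.erfc(math.sqrt(stat / 2))
    return stat, p_value


# Table 3: discharged home without clinical services,
# 23 of 100 who declined vs 56 of 160 who consented.
stat, p_value = chi_square_2x2(23, 100 - 23, 56, 160 - 56)
print(f"chi-square = {stat:.2f}, P = {p_value:.3f}")
```

Under these assumptions the result is close to the P value reported for this row of Table 3.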

Patients who identified themselves as being unable to control important things in their lives had 65% lower odds of consenting to the behavioral intervention than those in control (odds ratio [OR]: 0.35, 95% confidence interval [CI]: 0.14‐0.92), and those who did not feel confident about recovering had 83% lower odds of consenting (OR: 0.17, 95% CI: 0.06‐0.45). Individuals who were confused by any question had 89% lower odds of consenting (OR: 0.11, 95% CI: 0.05‐0.24). Individuals who answered the medication question incorrectly had nearly 4 times the odds of consenting (OR: 3.82, 95% CI: 1.12‐13.03). There were no significant differences in consent for feeling overwhelmed (difficulties piling up) or for having discussed advance care planning with family members or doctors.
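The percentages quoted in the preceding paragraph are changes in the odds of consent, obtained from each odds ratio as |1 − OR| × 100. The Python sketch below applies that arithmetic to the Table 2 estimates and flags whether each 95% CI excludes 1. The function name and output layout are hypothetical and serve only as an illustration.

```python
def describe_odds_ratio(odds_ratio, ci_low, ci_high):
    """Return (percent change in odds, direction, whether the CI excludes 1)."""
    percent_change = round(abs(1 - odds_ratio) * 100)
    direction = "lower" if odds_ratio < 1 else "higher"
    ci_excludes_one = ci_high < 1 or ci_low > 1
    return percent_change, direction, ci_excludes_one


# Adjusted odds ratios and 95% CIs as reported in Table 2.
table_2 = {
    "Out of control": (0.35, 0.14, 0.92),
    "Overwhelmed": (0.41, 0.16, 1.07),
    "Not confident about recovery": (0.17, 0.06, 0.45),
    "No advance care planning discussion": (0.45, 0.13, 1.64),
    "Incorrect medication-label answer": (3.82, 1.12, 13.03),
    "Confused by any question": (0.11, 0.05, 0.24),
}

for label, (odds_ratio, low, high) in table_2.items():
    pct, direction, significant = describe_odds_ratio(odds_ratio, low, high)
    note = "CI excludes 1" if significant else "CI includes 1"
    print(f"{label}: {pct}% {direction} odds of consent ({note})")
```

A change in odds is not the same as a change in probability; the two diverge when the outcome is common, as it is here (61.5% of respondents consented).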

We had insufficient power to detect significant differences in posthospital utilization (including hospital readmission, emergency‐department use, and receipt of home health), based on screening‐question responses (data not shown).

DISCUSSION

We find that patients who declined to participate in the behavioral intervention (eligible nonparticipants) differed from participants in 3 important ways: perceived stress, recovery expectation, and health literacy. As hypothesized, patients with higher perceived stress and lower recovery expectation were less likely to consent to the behavioral intervention, even after adjusting for demographic and healthcare‐utilization differences. Contrary to our hypothesis, patients who incorrectly answered the medication question were more likely to consent to the intervention than those who correctly answered.

Characterizing nonparticipants and participants can offer important insight into the limitations of the research that informs clinical guidelines and behavioral interventions. Such characteristics could also indicate how to better engage patients in interventions or other aspects of their care, if associated with lower rates of adherence to recommended health behaviors or treatment plans. For example, self‐efficacy (closely related to perceived stress) and hopelessness regarding clinical outcomes (similar to low recovery expectation in the present study) are associated with nonadherence to medication plans and other care in some populations.[5, 6] Other more extreme stress, like that following a major medical event, has also been associated with a lower rate of adherence to medication regimens and a resulting higher rate of hospital readmission and mortality.[19, 20] People with low health literacy (compared with adequate health literacy) are more likely to report being confused about their medications, requesting help to read medication labels and missing appointments due to trouble reading reminder cards.[9] Identifying these characteristics may assist providers in helping patients address adherence barriers by first accurately identifying the root of patient issues (eg, where the lack of confidence in recovery is rooted in lack of resources or social support), then potentially referring to community resources where possible. For example, some states (including Rhode Island, this study's location) may have Aging and Disability Resource Centers dedicated to linking elderly people with transportation, decision support, and other resources to support quality care.

The association between health literacy and intervention participation remains uncertain. Our question, which assessed interpretation of a prescription label as a health‐literacy proxy, may have given patients insight into their limited health literacy that motivated them to accept the subsequent behavioral intervention. Others have found that patients with lower health literacy want their providers to know that they did not understand some health words,[9] though they may be less likely to ask questions, request additional services, or seek new information during a medical encounter.[21] In our study, those who correctly answered the medication‐label question were almost mutually exclusive from those who were otherwise stressed (12% overlap; data not shown). Thus, patients who correctly answer this question may correctly realize that they do not need the support offered by the behavioral intervention and decline to participate. For other patients, perceived stress and poor recovery expectations may be more immediate and important determinants of declination, with patients too stressed to volunteer for another task, even if it involves much‐needed assistance.

The frequency with which patients were confused by the questions merits further comment and may also be driven by stress. Though each question seeks to identify the impact of a specific construct (Table 1), being confused by any question may reflect a more general (or subacute) level of cognitive impairment or generalized low health literacy not limited to the applied numeracy of the medication‐label question. We excluded confused responses to demonstrate more clearly the impact of each individual construct.

The impact of these characteristics may be affected by study design or other characteristics. One of the few studies to examine (via RCT) how methods affect consent found that participation decreased with increasing complexity of the consent process: written consent yielded the lowest participation, limited written consent was higher, and verbal consent was the highest.[10] Other tactics to increase consent include monetary incentives,[22] culturally sensitive materials,[7] telephone reminders,[23] an opt‐out instead of opt‐in approach,[23] and an open design where participants know which treatment they are receiving.[23] We do not know how these tactics relate to the characteristics captured in our screening questions, although other characteristics we measured, such as patients' self‐identified race, have been associated with intervention participation and access to care,[8, 24, 25] and patients who perceive that the benefit of the intervention outweighs expected risks and time requirements are more likely to consent.[4] We intentionally minimized the number of screening questions to encourage participation. The high rate of consent to our screening questions compared with consent to the (more involved) behavioral intervention reveals how sensitive patients are to the perceived invasiveness of an intervention.

We note several limitations. First, overall generalizability is limited due to our small sample size, use of consecutive convenience sampling, and exclusion criteria (eg, patients discharged to long‐term or skilled nursing care). In addition, these results may not apply to patients who are not hospitalized; hospitalized patients may have different motivations and stressors regarding their involvement in their care. Additionally, although we included as many people with mild cognitive impairment as possible by proxy through caregivers, we excluded some who did not have caregivers, potentially undermining the accuracy of how cognition impacts the choice to accept the behavioral intervention. Because researchers often explicitly exclude individuals based on cognitive impairment, differences between recruited subjects and the population at large may be particularly large among elderly patients, where up to half of the eligible population may be affected by cognitive impairment.[26] Further research into successfully engaging caregivers as a way to reach otherwise‐excluded patients with cognitive impairment can help to mitigate threats to generalizability. Finally, our screening questions are based on validated questions, but we rearranged our question wording, simplified answer choices, and removed them from their original context. Thus, the questions were not validated in our population or when administered in this manner. Although we conducted cognitive testing, further validity and reliability testing are necessary to translate these questions into a general screening tool. The medication‐label question also requires revision; in data collection and analysis, we assume that patients who were unable to answer (needs glasses, too tired, etc.) were masking an inability to respond correctly. Though the use of this excuse is cited in the literature,[17] we cannot be certain that our treatment of it in these screening questions is generalizable.

Generalizability also applies to how we group responses. Isolating the most negative response (by grouping the middle answer with the most positive answer) most specifically identifies individuals more likely to need assistance and is therefore clinically pertinent, but this also potentially fails to identify individuals who also need help but do not choose the more extreme answer. Further research to refine the screening questions might also consider the timeframe of the perceived stress questions (past week rather than past month); this timeframe may be specific to the acute medical situation rather than general or unrelated perceived stress. Though this study cannot test this hypothesis, individuals with higher pre‐illness perceived stress may be more interested in addressing the issues that were stressors prior to acute illness, rather than the offered behavioral intervention. Additionally, some of the questions were highly correlated (Q1 and Q2) and indicate a potential for shortening the screening questionnaire.

Still, these findings further the discussion of how to identify and consent hospitalized patients for participation in behavioral interventions, both for research and for routine clinical care. Researchers should specifically consider how to engage individuals who are stressed and are not confident about recovery to improve reach and effectiveness. For example, interventions should prospectively collect data on stress and confidence in recovery and include protocols to support people who are positively identified with these characteristics. These characteristics may also offer insight into improving patient and caregiver engagement; more research is needed into characteristics related to patients' willingness to seek assistance in care. We are not the first to suggest that characteristics not observed in medical charts may impact patient completion or response to behavioral interventions,[27, 28] and considering differences between participants and eligible nonparticipants in clinical care delivery and interventions can strengthen the evidence base for clinical improvements, particularly related to patient self‐management. The implications are useful for both practicing clinicians and larger systems examining the comparative effectiveness of patient interventions and generalizing results from RCTs.

Acknowledgments

The authors thank Phil Clark, PhD, and the SENIOR Project (Study of Exercise and Nutrition in Older Rhode Islanders) research team at the University of Rhode Island for formulating 1 of the screening questions, and Marissa Meucci for her assistance with the cognitive testing and formative research for the screening questions.

Disclosures

The analyses on which this study is based were performed by Healthcentric Advisors under contract HHSM‐500‐2011‐RI10C, titled "Utilization and Quality Control Peer Review for the State of Rhode Island," sponsored by the Centers for Medicare and Medicaid Services, US Department of Health and Human Services. The content of this publication does not necessarily reflect the views or policies of the Department of Health and Human Services, nor does mention of trade names, commercial products, or organizations imply endorsement by the US government. The authors report no conflicts of interest.

References

1. Evaluating the 'all‐comers' design: a comparison of participants in two 'all‐comers' PCI trials with non‐participants. Eur Heart J. 2011;32(17):2161–2167.
2. Can the randomized controlled trial literature generalize to nonrandomized patients? J Consult Clin Psychol. 2005;73(1):127–135.
3. The care transitions intervention: translating from efficacy to effectiveness. Arch Intern Med. 2011;171(14):1232–1237.
4. Determinants of patient participation in clinical studies requiring informed consent: why patients enter a clinical trial. Patient Educ Couns. 1998;35(2):111–125.
5. Coping self‐efficacy as a predictor of adherence to antiretroviral therapy in men and women living with HIV in Kenya. AIDS Patient Care STDS. 2011;25(9):557–561.
6. Self‐reported influences of hopelessness, health literacy, lifestyle action, and patient inertia on blood pressure control in a hypertensive emergency department population. Am J Med Sci. 2009;338(5):368–372.
7. Increasing recruitment to randomised trials: a review of randomised controlled trials. BMC Med Res Methodol. 2006;6:34.
8. Age‐, sex‐, and race‐based differences among patients enrolled versus not enrolled in acute lung injury clinical trials. Crit Care Med. 2010;38(6):1450–1457.
9. Patients' shame and attitudes toward discussing the results of literacy screening. J Health Commun. 2007;12(8):721–732.
10. Impact of detailed informed consent on research subjects' participation: a prospective, randomized trial. J Emerg Med. 2008;34(3):269–275.
11. A global measure of perceived stress. J Health Soc Behav. 1983;24:385–396. Available at: http://www.psy.cmu.edu/~scohen/globalmeas83.pdf. Accessed May 10, 2012.
12. Pfizer, Inc. Clear Health Communication: The Newest Vital Sign. Available at: http://www.pfizerhealthliteracy.com/asset/pdf/NVS_Eng/files/nvs_flipbook_english_final.pdf. Accessed May 10, 2012.
13. Relationship of preventive health practices and health literacy: a national study. Am J Health Behav. 2008;32(3):227–242.
14. Predictors of medication self‐management skill in a low‐literacy population. J Gen Intern Med. 2006;21:852–856.
15. Does how you do depend on how you think you'll do? A systematic review of the evidence for a relation between patients' recovery expectations and health outcomes [published correction appears in CMAJ. 2001;165(10):1303]. CMAJ. 2001;165(2):174–179.
16. Health care costs in the last week of life: associations with end‐of‐life conversations. Arch Intern Med. 2009;169(5):480–488.
17. Exploring health literacy competencies in community pharmacy. Health Expect. 2010;15(1):12–22.
18. The care transitions intervention: results of a randomized controlled trial. Arch Intern Med. 2006;166(17):1822–1828.
19. A prospective study of posttraumatic stress symptoms and non‐adherence in survivors of a myocardial infarction (MI). Gen Hosp Psychiatry. 2001;23:215–222.
20. Posttraumatic stress, non‐adherence, and adverse outcomes in survivors of a myocardial infarction. Psychosom Med. 2004;66:521–526.
21. The implications of health literacy on patient‐provider communication. Arch Dis Child. 2008;93:428–432.
22. Strategies to improve recruitment to research studies. Cochrane Database Syst Rev. 2007;2:MR000013.
23. Strategies to improve recruitment to randomised controlled trials. Cochrane Database Syst Rev. 2010;4:MR000013.
24. Addressing diabetes racial and ethnic disparities: lessons learned from quality improvement collaboratives. Diabetes Manag (Lond). 2011;1(6):653–660.
25. Racial differences in eligibility and enrollment in a smoking cessation clinical trial. Health Psychol. 2011;30(1):40–48.
26. The disappearing subject: exclusion of people with cognitive impairment from research. J Am Geriatr Soc. 2012;6:413–419.
27. The role of patient preferences in cost‐effectiveness analysis: a conflict of values? Pharmacoeconomics. 2009;27(9):705–712.
28. A comprehensive care management program to prevent chronic obstructive pulmonary disease hospitalizations. Ann Intern Med. 2012;156(10):673–683.
29. The role of expectations in patients' reports of post‐operative outcomes and improvement following therapy. Med Care. 1993;31:1043–1056.
30. Expectations and outcomes after hip fracture among the elderly. Int J Aging Hum Dev. 1992;34:339–350.
31. Role of patients' view of their illness in predicting return to work and functioning after myocardial infarction: longitudinal study. BMJ. 1996;312:1191–1194.

Randomized controlled trials (RCTs) generally provide the most rigorous evidence for clinical practice guidelines and quality‐improvement initiatives. However, 2 major shortcomings limit the ability to broadly apply these results to the general population. One has to do with sampling bias (due to subject consent and inclusion/exclusion criteria) and the other with potential differences between participants and eligible nonparticipants. The latter may be of particular importance in trials of behavioral interventions (rather than medication trials), which often require substantial participant effort.

First, individuals who provide written consent to participate in RCTs of behavioral interventions typically represent a minority of those approached and therefore may not be representative of the target population. Although the consenting proportion is often not disclosed, some estimate that only 35%–50% of eligible subjects typically participate.[1, 2, 3] These estimates mirror the authors' prior experience with a 55.2% consent rate among subjects approached for a Medicare quality‐improvement behavioral intervention.[3] Though the literature is sparse, it suggests that eligible individuals who decline to participate in either interventions or usual care may differ from participants in their perception of intervention risks and effort[4] or in their levels of self‐efficacy or confidence in recovery.[5, 6] Relatively low enrollment rates mean that much of the population remains unstudied; however, evidence‐based interventions are often applied to populations broader than those included in the original analyses.

Additionally, although some nonparticipants may correctly decide that they do not need the assistance of a proposed intervention and therefore decline to participate, others may inappropriately judge the intervention's potential benefit and applicability when declining. In other words, electing to not participate in a study, despite eligibility, may reflect more than a refusal of inconvenience, disinterest, or desire to contribute to knowledge; for some individuals it may offer a proxy statement about health knowledge, personal beliefs, attitudes, and needs, including perceived stress,[5] cultural relevance,[7, 8] and literacy/health literacy.[9, 10] Characterizing these patients can help us to modify recruitment approaches and improve participation so that participants better represent the target population. If these differences also relate to patients' adherence to care recommendations, a more nuanced understanding could improve ways to identify and engage potentially nonadherent patients to improve health outcomes.

We hypothesized that we could identify characteristics that differ between behavioral‐intervention participants and eligible nonparticipants using a set of screening questions. We proposed that these characteristics, including constructs related to perceived stress, recovery expectation, health literacy, insight into and action on advance care planning, and confusion by any question, would predict the likelihood of consenting to a behavioral intervention requiring substantial subject engagement. Some of these characteristics may relate to adherence to preventive care or treatment recommendations. We did not specifically hypothesize about the distribution of demographic differences.

METHODS

Study Design

Prospective observational study conducted within a larger behavioral intervention.

Screening Question Design

We adapted our screening questions from several previously validated surveys, selecting questions related to perceived stress and self‐efficacy,[11] recovery expectations, health literacy/medication label interpretation,[12] and discussing advance directives (Table 1). Some of these characteristics may relate to adherence to preventive care or treatment programs[13, 14] or to clinical outcomes.[15, 16]

| Screening Question | Adapted From Original Validated Question | Source | Construct |
|---|---|---|---|
| In the last week, how often have you felt that you are unable to control the important things in your life? (Rarely, sometimes, almost always) | In the last month, how often have you felt that you were unable to control the important things in your life? (Never, almost never, sometimes, fairly often, very often) | Adapted from the Perceived Stress Scale (PSS‐14).[11] | Perceived stress, self‐efficacy |
| In the last week, how often have you felt that difficulties were piling up so high that you could not overcome them? (Rarely, sometimes, almost always) | In the last month, how often have you felt difficulties were piling up so high that you could not overcome them? (Never, almost never, sometimes, fairly often, very often) | Adapted from the Perceived Stress Scale (PSS‐14).[11] | Perceived stress, self‐efficacy |
| How sure are you that you can go back to the way you felt before being hospitalized? (Not sure at all, somewhat sure, very sure) | | Courtesy of Phil Clark, PhD, University of Rhode Island, drawing on research on resilience. Similar questions are used in other studies, including studies of postsurgical recovery.[29, 30, 31] | Recovery expectation, resilience |
| Even if you have not made any decisions, have you talked with your family members or doctor about what you would want for medical care if you could not speak for yourself? (Yes, no) | | Based on consumer‐targeted materials on advance care planning. | Advance care planning |
| (Show patient a picture of prescription label.) How many times a day should someone take this medicine? (Correct, incorrect) | (Show patient a picture of ice cream label.) If you eat the entire container, how many calories will you eat? (Correct, incorrect) | Adapted from Pfizer's Clear Health Communication: The Newest Vital Sign.[12] | Health literacy |

Prior to administering the screening questions, we performed cognitive testing with residents of an assisted‐living facility (N=10), a population that resembles our study's target population. In response to cognitive testing, we eliminated a question not interpreted easily by any of the participants, identified wording changes to clarify questions, simplified answer choices for ease of response (especially because questions are delivered verbally), and moved the most complicated (and potentially most embarrassing) question to the end, with more straightforward questions toward the beginning. We also substantially enlarged the image of a standard medication label to improve readability. Our final tool included 5 questions (Table 1).

The final instrument prompted coaches to record patient confusion. Additionally, the advance‐directive question included a "refused to answer" option and the medication question included "unable to answer (needs glasses, too tired, etc.)," a potential marker of low health literacy if used as an excuse to avoid embarrassment.[17]

Setting

We recruited inpatients at 5 Rhode Island acute‐care hospitals, including 1 community hospital, 3 teaching hospitals, and a tertiary‐care center and teaching hospital, ranging from 174 beds to 719 beds. Recruitment occurred from November 2010 to April 2011. The hospitals' respective institutional review boards approved the screening questions.

Study Population

We recruited a convenience sample of consecutively identified hospitalized Medicare fee‐for‐service beneficiaries, identified as (1) eligible for the subsequent behavioral intervention based on inpatient census lists and (2) willing to discuss an offer for a home‐based behavioral intervention. The behavioral intervention, based on the Care Transitions Intervention and described elsewhere,[3, 18] included a home visit and 2 phone calls (each about 1 hour). Coaches used a personal health record to help patients and/or caregivers better manage their health by (1) being able to list their active medical conditions and medications and (2) understanding warning signs indicating a need to reach out for help, including getting a timely medical appointment after hospitalization. The population for the present study included individuals approached to discuss participation in the behavioral intervention who also agreed to answer the screening questions.

Inclusion/Exclusion Criteria

We included hospitalized Medicare fee‐for‐service beneficiaries. We excluded patients who were current long‐term care residents, were to be discharged to long‐term or skilled care, or had a documented hospice referral. We also excluded patients with limited English proficiency or who were judged to have inadequate cognitive function, unless a caregiver agreed to receive the intervention as a proxy. We made these exclusions when recruiting for the behavioral intervention. Because we presented the screening questions to a subset of those approached for the behavioral intervention, we did not further exclude anyone. In other words, we offered the screening questions to all 295 people we approached during this study time period (100%).

Screening‐Question Study Process

Coaches asked patients to answer the 5 screening questions immediately after offering them the opportunity to participate in the behavioral intervention, regardless of whether or not they accepted the behavioral intervention. This study examines the subset of patients approached for the behavioral intervention who verbally consented to answer the screening questions.

Data Sources and Covariates

We analyzed primary data from the screening questions and behavioral intervention (for those who consented to participate), as well as Medicare claims and Medicaid enrollment data. We matched screening‐question data from November 2010 through April 2011 with Medicare Part A claims from October 2010 through May 2011 to calculate 30‐day readmission rates.

We obtained the following information for patients offered the behavioral intervention: (1) responses to screening questions, (2) whether patients consented to the behavioral intervention, (3) exposure to the behavioral intervention, and (4) recruitment date. Medicare claims data included (1) admission and discharge dates to calculate the length of stay, (2) index diagnosis, (3) hospital, and (4) site of discharge. Medicare enrollment data provided information on (1) Medicaid/Medicare dual‐eligibility status, (2) sex, and (3) patient‐reported race. We matched data based on patient name and date of birth. Our primary outcome was consent to the behavioral intervention. Secondarily, we reviewed posthospital utilization patterns, including hospital readmission, emergency‐department use, and use of home‐health services.

Statistical Analysis

We categorized patients into 2 groups (Figure 1): participants (consented to the behavioral intervention) and nonparticipants (eligible for the behavioral intervention but declined to participate). We excluded responses for those confused by the question (no response). For the response scales "rarely, sometimes, almost always" and "not sure at all, somewhat sure, very sure," we isolated the most negative response, grouping the middle and most positive responses (Table 2). For the medication‐label question, we grouped "incorrect" and "unable to answer (needs glasses, too tired, etc.)" responses. We compared demographic differences between behavioral intervention participants and nonparticipants using χ² tests (categorical variables) and Student t tests (continuous variables). We then used multivariate logistic regression to analyze differences in consent to the behavioral intervention based on screening‐question responses, adjusting for demographics that differed significantly in the bivariate comparisons.

| Screening‐Question Response | Adjusted OR (95% CI) | P Value |
|---|---|---|
| In the last week, how often have you felt that you are unable to control the important things in your life? | | |
| Out of control (Almost always) | 0.35 (0.14‐0.92) | 0.034a |
| In control (Sometimes, rarely) | 1.00 (Ref) | |
| In the last week, how often have you felt that difficulties were piling up so high that you could not overcome them? | | |
| Overwhelmed (Almost always) | 0.41 (0.16‐1.07) | 0.069 |
| Not overwhelmed (Sometimes, rarely) | 1.00 (Ref) | |
| How sure are you that you can go back to the way you felt before being hospitalized? | | |
| Not confident (Not sure at all) | 0.17 (0.06‐0.45) | 0.001a |
| Confident (Somewhat sure, very sure) | 1.00 (Ref) | |
| Even if you have not made any decisions, have you talked with your family members or doctor about what you would want for medical care if you could not speak for yourself? | | |
| No | 0.45 (0.13‐1.64) | 0.227 |
| Yes | 1.00 (Ref) | |
| How many times a day should someone take this medicine? (Show patient a medication label) | | |
| Incorrect answer | 3.82 (1.12‐13.03) | 0.033a |
| Correct answer | 1.00 (Ref) | |
| Confused by any question? | | |
| Yes | 0.11 (0.05‐0.24) | 0.001a |
| No | 1.00 (Ref) | |

The authors used SAS version 9.2 (SAS Institute, Inc., Cary, NC) for all analyses.
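
The response grouping described in this section can be illustrated with a short Python sketch. This is not the authors' SAS code; the function name `recode`, the question keys, and the string labels are hypothetical, chosen to mirror the answer choices in Tables 1 and 2. It assumes a confused response is stored as the string "confused" and is excluded (returned as `None`).

```python
# Illustrative recoding of screening-question responses (hypothetical names).
# 1 = most negative answer, 0 = middle or most positive answer, None = excluded.
MOST_NEGATIVE = {
    "control": "almost always",      # unable to control important things
    "overwhelmed": "almost always",  # difficulties piling up
    "recovery": "not sure at all",   # confidence in recovery
}


def recode(question, response):
    """Dichotomize one response, isolating the most negative answer."""
    if response is None or response.strip().lower() == "confused":
        return None  # confused responses are excluded from the analysis
    answer = response.strip().lower()
    if question == "medication":
        # "incorrect" and "unable to answer" are grouped together
        return 1 if answer in {"incorrect", "unable to answer"} else 0
    return 1 if answer == MOST_NEGATIVE[question] else 0


print(recode("control", "Almost always"))        # most negative answer
print(recode("recovery", "Somewhat sure"))       # grouped with "very sure"
print(recode("medication", "unable to answer"))  # grouped with "incorrect"
```

Grouping the middle answer with the most positive one keeps each predictor binary, which matches the two‐level rows (index category vs reference) reported in Table 2.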

RESULTS

Of the 295 patients asked to complete the screening questions, 260 (88.1%) consented to answer the screening questions and 35 (11.9%) declined. More than half of those who answered the screening questions consented to participate in the behavioral intervention (160; 61.5%) (Figure 1). When compared with nonparticipants, participants in the behavioral intervention were younger (25.6% age ≥85 years vs 40.0% age ≥85 years, P=0.028), had a longer average length of hospital stay (7.9 vs 6.1 days, P=0.008), were more likely to be discharged home without clinical services (35.0% vs 23.0%, P=0.041), and were unevenly distributed between the 5 recruitment‐site hospitals, coming primarily from the teaching hospitals (P<0.001) (Table 3). There were no significant differences based on race, sex, dual‐eligible Medicare/Medicaid status, presence of a caregiver, or index diagnosis.

| Patient Characteristics | Declined (n=100) | Consented (n=160) | P Value |
|---|---|---|---|
| Male, n (%) | 34 (34.0) | 52 (32.5) | 0.803 |
| Race, n (%) | | | |
| White | 94 (94.0) | 151 (94.4) | 0.691 |
| Black | 2 (2.0) | 5 (3.1) | |
| Other | 4 (4.0) | 4 (2.5) | |
| Age, n (%), y | | | |
| <65 | 17 (17.0) | 23 (14.4) | 0.028a |
| 65–74 | 14 (14.0) | 42 (26.3) | |
| 75–84 | 29 (29.0) | 54 (33.8) | |
| ≥85 | 40 (40.0) | 41 (25.6) | |
| Dual eligible, n (%)b | 11 (11.0) | 24 (15.0) | 0.358 |
| Caregiver present, n (%) | 17 (17.0) | 34 (21.3) | 0.401 |
| Length of stay, mean (SD), d | 6.1 (4.1) | 7.9 (4.8) | 0.008a |
| Index diagnosis, n (%) | | | |
| Acute MI | 3 (3.0) | 6 (3.8) | 0.806 |
| CHF | 6 (6.0) | 20 (12.5) | 0.111 |
| Pneumonia | 7 (7.0) | 9 (5.6) | 0.572 |
| COPD | 6 (6.0) | 6 (8.8) | 0.484 |
| Discharged home without clinical services, n (%)c | 23 (23.0) | 56 (35.0) | 0.041a |
| Hospital site | | | |
| Hospital 1 | 15 (15.0) | 43 (26.9) | <0.001a |
| Hospital 2 | 20 (20.0) | 26 (16.3) | |
| Hospital 3 | 15 (15.0) | 23 (14.4) | |
| Hospital 4 | 2 (2.0) | 48 (30.0) | |
| Hospital 5 | 48 (48.0) | 20 (12.5) | |
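
As an illustration of the bivariate comparisons in Table 3, the following Python sketch recomputes a χ² test for one row (discharged home without clinical services: 23 of 100 who declined vs 56 of 160 who consented). It is a minimal sketch and not the authors' SAS analysis; the function name `chi_square_2x2` is hypothetical, and it assumes a Pearson χ² test without continuity correction, which the source does not specify.

```python
import math


def chi_square_2x2(a, b, c, d):
    """Pearson chi-square (no continuity correction) for the table [[a, b], [c, d]]."""
    n = a + b + c + d
    row_totals = (a + b, c + d)
    col_totals = (a + c, b + d)
    observed = ((a, b), (c, d))
    stat = 0.0
    for i in range(2):
        for j in range(2):
            expected = row_totals[i] * col_totals[j] / n
            stat += (observed[i][j] - expected) ** 2 / expected
    # For 1 degree of freedom, the upper-tail probability is erfc(sqrt(stat / 2)).
    p_value = math.erfc(math.sqrt(stat / 2))
    return stat, p_value


# Rows: declined, consented. Columns: discharged home without services, other.
stat, p = chi_square_2x2(23, 100 - 23, 56, 160 - 56)
print(f"chi-square = {stat:.2f}, P = {p:.3f}")
```

Under these assumptions the P value comes out close to the 0.041 reported in Table 3 for this row.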

Patients who identified themselves as being unable to control important things in their lives had 65% lower odds of consenting to the behavioral intervention than those in control (odds ratio [OR]: 0.35, 95% confidence interval [CI]: 0.14‐0.92), and those who did not feel confident about recovering had 83% lower odds of consenting (OR: 0.17, 95% CI: 0.06‐0.45). Individuals who were confused by any question had 89% lower odds of consenting (OR: 0.11, 95% CI: 0.05‐0.24). Individuals who answered the medication question incorrectly had nearly 4 times the odds of consenting (OR: 3.82, 95% CI: 1.12‐13.03). There were no significant differences in consent for feeling overwhelmed (difficulties piling up) or for having discussed advance care planning with family members or doctors.
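
The percentages quoted with each odds ratio follow from a simple conversion: the percent change in the odds of consenting is (OR − 1) × 100. The Python sketch below applies it to the adjusted ORs from Table 2; the function name `percent_change_in_odds` is illustrative and not from the source. Note that the result describes a change in odds, which is not the same as a change in probability.

```python
def percent_change_in_odds(odds_ratio):
    """Percent change in odds relative to the reference group (OR = 1.00)."""
    return (odds_ratio - 1.0) * 100.0


# Adjusted odds ratios reported in Table 2.
adjusted_ors = {
    "out of control": 0.35,
    "not confident about recovery": 0.17,
    "confused by any question": 0.11,
    "incorrect medication answer": 3.82,
}

for label, odds_ratio in adjusted_ors.items():
    change = percent_change_in_odds(odds_ratio)
    print(f"{label}: OR {odds_ratio:.2f} -> {change:+.0f}% odds of consenting")
```

An OR of 3.82 therefore corresponds to odds of consenting that are 282% higher, or just under 4 times the odds in the reference group.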

We had insufficient power to detect significant differences in posthospital utilization (including hospital readmission, emergency‐department use, and receipt of home health), based on screening‐question responses (data not shown).

DISCUSSION

We find that patients who declined to participate in the behavioral intervention (eligible nonparticipants) differed from participants in 3 important ways: perceived stress, recovery expectation, and health literacy. As hypothesized, patients with higher perceived stress and lower recovery expectation were less likely to consent to the behavioral intervention, even after adjusting for demographic and healthcare‐utilization differences. Contrary to our hypothesis, patients who incorrectly answered the medication question were more likely to consent to the intervention than those who correctly answered.

Characterizing nonparticipants and participants can offer important insight into the limitations of the research that informs clinical guidelines and behavioral interventions. Such characteristics could also indicate how to better engage patients in interventions or other aspects of their care, if associated with lower rates of adherence to recommended health behaviors or treatment plans. For example, self‐efficacy (closely related to perceived stress) and hopelessness regarding clinical outcomes (similar to low recovery expectation in the present study) are associated with nonadherence to medication plans and other care in some populations.[5, 6] Other more extreme stress, like that following a major medical event, has also been associated with a lower rate of adherence to medication regimens and a resulting higher rate of hospital readmission and mortality.[19, 20] People with low health literacy (compared with adequate health literacy) are more likely to report being confused about their medications, requesting help to read medication labels and missing appointments due to trouble reading reminder cards.[9] Identifying these characteristics may assist providers in helping patients address adherence barriers by first accurately identifying the root of patient issues (eg, where the lack of confidence in recovery is rooted in lack of resources or social support), then potentially referring to community resources where possible. For example, some states (including Rhode Island, this study's location) may have Aging and Disability Resource Centers dedicated to linking elderly people with transportation, decision support, and other resources to support quality care.

The association between health literacy and intervention participation remains uncertain. Our question, which assessed interpretation of a prescription label as a health‐literacy proxy, may have given patients insight into their limited health literacy that motivated them to accept the subsequent behavioral intervention. Others have found that patients with lower health literacy want their providers to know that they did not understand some health words,[9] though they may be less likely to ask questions, request additional services, or seek new information during a medical encounter.[21] In our study, those who correctly answered the medication‐label question were almost mutually exclusive from those who were otherwise stressed (12% overlap; data not shown). Thus, patients who correctly answer this question may correctly realize that they do not need the support offered by the behavioral intervention and decline to participate. For other patients, perceived stress and poor recovery expectations may be more immediate and important determinants of declination, with patients too stressed to volunteer for another task, even if it involves much‐needed assistance.

The frequency with which patients were confused by the questions merits further comment and may also be driven by stress. Though each question seeks to identify the impact of a specific construct (Table 1), being confused by any question may reflect a more general (or subacute) level of cognitive impairment or generalized low health literacy not limited to the applied numeracy of the medication‐label question. We excluded confused responses to demonstrate more clearly the impact of each individual construct.

The impact of these characteristics may be affected by study design or other characteristics. One of the few studies to examine (via RCT) how methods affect consent found that participation decreased with increasing complexity of the consent process: written consent yielded the lowest participation, limited written consent was higher, and verbal consent was the highest.[10] Other tactics to increase consent include monetary incentives,[22] culturally sensitive materials,[7] telephone reminders,[23] an opt‐out instead of opt‐in approach,[23] and an open design where participants know which treatment they are receiving.[23] We do not know how these tactics relate to the characteristics captured in our screening questions, although other characteristics we measured, such as patients' self‐identified race, have been associated with intervention participation and access to care,[8, 24, 25] and patients who perceive that the benefit of the intervention outweighs expected risks and time requirements are more likely to consent.[4] We intentionally minimized the number of screening questions to encourage participation. The high rate of consent to our screening questions compared with consent to the (more involved) behavioral intervention reveals how sensitive patients are to the perceived invasiveness of an intervention.

We note several limitations. First, overall generalizability is limited due to our small sample size, use of consecutive convenience sampling, and exclusion criteria (eg, patients discharged to long‐term or skilled nursing care). And, these results may not apply to patients who are not hospitalized; hospitalized patients may have different motivations and stressors regarding their involvement in their care. Additionally, although we included as many people with mild cognitive impairment as possible by proxy through caregivers, we excluded some that did not have caregivers, potentially undermining the accuracy of how cognition impacts the choice to accept the behavioral intervention. Because researchers often explicitly exclude individuals based on cognitive impairment, differences between recruited subjects and the population at large may be particularly high among elderly patients, where up to half of the eligible population may be affected by cognitive impairment.[26] Further research into successfully engaging caregivers as a way to reach otherwise‐excluded patients with cognitive impairment can help to mitigate threats to generalizability. Finally, our screening questions are based on validated questions, but we rearranged our question wording, simplified answer choices, and removed them from their original context. Thus, the questions were not validated in our population or when administered in this manner. Although we conducted cognitive testing, further validity and reliability testing are necessary to translate these questions into a general screening tool. The medication‐label question also requires revision; in data collection and analysis, we assume that patients who were unable to answer (needs glasses, too tired, etc.) were masking an inability to respond correctly. Though the use of this excuse is cited in the literature,[17] we cannot be certain that our treatment of it in these screening questions is generalizable. 
Generalizability also applies to how we group responses. Isolating the most negative response (by grouping the middle answer with the most positive answer) most specifically identifies individuals more likely to need assistance and is therefore clinically pertinent, but this also potentially fails to identify individuals who also need help but do not choose the more extreme answer. Further research to refine the screening questions might also consider the timeframe of the perceived stress questions (past week rather than past month); this timeframe may be specific to the acute medical situation rather than general or unrelated perceived stress. Though this study cannot test this hypothesis, individuals with higher pre‐illness perceived stress may be more interested in addressing the issues that were stressors prior to acute illness, rather than the offered behavioral intervention. Additionally, some of the questions were highly correlated (Q1 and Q2) and indicate a potential for shortening the screening questionnaire.

Still, these findings further the discussion of how to identify and consent hospitalized patients for participation in behavioral interventions, both for research and for routine clinical care. Researchers should specifically consider how to engage individuals who are stressed and are not confident about recovery to improve reach and effectiveness. For example, interventions should prospectively collect data on stress and confidence in recovery and include protocols to support people who are positively identified with these characteristics. These characteristics may also offer insight into improving patient and caregiver engagement; more research is needed into characteristics related to patients' willingness to seek assistance in care. We are not the first to suggest that characteristics not observed in medical charts may impact patient completion or response to behavioral interventions,[27, 28] and considering differences between participants and eligible nonparticipants in clinical care delivery and interventions can strengthen the evidence base for clinical improvements, particularly related to patient self‐management. The implications are useful for both practicing clinicians and larger systems examining the comparative effectiveness of patient interventions and generalizing results from RCTs.

Acknowledgments

The authors thank Phil Clark, PhD, and the SENIOR Project (Study of Exercise and Nutrition in Older Rhode Islanders) research team at the University of Rhode Island for formulating 1 of the screening questions, and Marissa Meucci for her assistance with the cognitive testing and formative research for the screening questions.

Disclosures

The analyses on which this study is based were performed by Healthcentric Advisors under contract HHSM 5002011‐RI10C, titled Utilization and Quality Control Peer Review for the State of Rhode Island, sponsored by the Centers for Medicare and Medicaid Services, US Department of Health and Human Services. The content of this publication does not necessarily reflect the views or policies of the Department of Health and Human Services, nor does mention of trade names, commercial products, or organizations imply endorsement by the US government. The authors report no conflicts of interest.

Randomized controlled trials (RCTs) generally provide the most rigorous evidence for clinical practice guidelines and quality‐improvement initiatives. However, 2 major shortcomings limit the ability to broadly apply these results to the general population. One has to do with sampling bias (due to subject consent and inclusion/exclusion criteria) and the other with potential differences between participants and eligible nonparticipants. The latter may be of particular importance in trials of behavioral interventions (rather than medication trials), which often require substantial participant effort.

First, individuals who provide written consent to participate in RCTs of behavioral interventions typically represent a minority of those approached and therefore may not be representative of the target population. Although the consenting proportion is often not disclosed, some estimate that only 35%50% of eligible subjects typically participate.[1, 2, 3] These estimates mirror the authors' prior experience with a 55.2% consent rate among subjects approached for a Medicare quality‐improvement behavioral intervention.[3] Though the literature is sparse, it suggests that eligible individuals who decline to participate in either interventions or usual care may differ from participants in their perception of intervention risks and effort[4] or in their levels of self‐efficacy or confidence in recovery.[5, 6] Relatively low enrollment rates mean that much of the population remains unstudied; however, evidence‐based interventions are often applied to populations broader than those included in the original analyses.

Additionally, although some nonparticipants may correctly decide that they do not need the assistance of a proposed intervention and therefore decline to participate, others may inappropriately judge the intervention's potential benefit and applicability when declining. In other words, electing to not participate in a study, despite eligibility, may reflect more than a refusal of inconvenience, disinterest, or desire to contribute to knowledge; for some individuals it may offer a proxy statement about health knowledge, personal beliefs, attitudes, and needs, including perceived stress,[5] cultural relevance,[7, 8] and literacy/health literacy.[9, 10] Characterizing these patients can help us to modify recruitment approaches and improve participation so that participants better represent the target population. If these differences also relate to patients' adherence to care recommendations, a more nuanced understanding could improve ways to identify and engage potentially nonadherent patients to improve health outcomes.

We hypothesized that we could identify characteristics that differ between behavioral‐intervention participants and eligible nonparticipants using a set of screening questions. We proposed that these characteristics, including constructs related to perceived stress, recovery expectation, health literacy, insight, and action into advance care planning and confusion by any question, would predict the likelihood of consenting to a behavioral intervention requiring substantial subject engagement. Some of these characteristics may relate to adherence to preventive care or treatment recommendations. We did not specifically hypothesize about the distribution of demographic differences.

METHODS

Study Design

Prospective observational study conducted within a larger behavioral intervention.

Screening Question Design

We adapted our screening questions from several previously validated surveys, selecting questions related to perceived stress and self‐efficacy,[11] recovery expectations, health literacy/medication label interpretation,[12] and discussing advance directives (Table 1). Some of these characteristics may relate to adherence to preventive care or treatment programs[13, 14] or to clinical outcomes.[15, 16]

| Screening Question | Adapted From Original Validated Question | Source | Construct |

|---|---|---|---|

| In the last week, how often have you felt that you are unable to control the important things in your life? (Rarely, sometimes, almost always) | In the last month, how often have you felt that you were unable to control the important things in your life? (Never, almost never, sometimes, fairly often, very often) | Adapted from the Perceived Stress Scale (PSS‐14).[11] | Perceived stress, self‐efficacy |

| In the last week, how often have you felt that difficulties were piling up so high that you could not overcome them? (Rarely, sometimes, almost always) | In the last month, how often have you felt difficulties were piling up so high that you could not overcome them? (Never, almost never, sometimes, fairly often, very often) | Adapted from the Perceived Stress Scale (PSS‐14).[11] | Perceived stress, self‐efficacy |

| How sure are you that you can go back to the way you felt before being hospitalized? (Not sure at all, somewhat sure, very sure) | Courtesy of Phil Clark, PhD, University of Rhode Island, drawing on research on resilience. Similar questions are used in other studies, including studies of postsurgical recovery.[29, 30, 31] | Recovery expectation, resilience | |

| Even if you have not made any decisions, have you talked with your family members or doctor about what you would want for medical care if you could not speak for yourself? (Yes, no) | Based on consumer‐targeted materials on advance care planning. | Advance care planning | |

| (Show patient a picture of prescription label.) How many times a day should someone take this medicine? (Correct, incorrect) | (Show patient a picture of ice cream label.) If you eat the entire container, how many calories will you eat? (Correct, incorrect) | Adapted from Pfizer's Clear Health Communication: The Newest Vital Sign.[12] | Health literacy |

Prior to administering the screening questions, we performed cognitive testing with residents of an assisted‐living facility (N=10), a population that resembles our study's target population. In response to cognitive testing, we eliminated a question not interpreted easily by any of the participants, identified wording changes to clarify questions, simplified answer choices for ease of response (especially because questions are delivered verbally), and moved the most complicated (and potentially most embarrassing) question to the end, with more straightforward questions toward the beginning. We also substantially enlarged the image of a standard medication label to improve readability. Our final tool included 5 questions (Table 1).

The final instrument prompted coaches to record patient confusion. Additionally, the advance‐directive question included a "refused to answer" option, and the medication question included an "unable to answer (needs glasses, too tired, etc.)" option, a potential marker of low health literacy if used as an excuse to avoid embarrassment.[17]

Setting

We recruited inpatients at 5 Rhode Island acute‐care hospitals, including 1 community hospital, 3 teaching hospitals, and a tertiary‐care center and teaching hospital, ranging from 174 beds to 719 beds. Recruitment occurred from November 2010 to April 2011. The hospitals' respective institutional review boards approved the screening questions.

Study Population

We recruited a convenience sample of consecutively identified hospitalized Medicare fee‐for‐service beneficiaries, identified as (1) eligible for the subsequent behavioral intervention based on inpatient census lists and (2) willing to discuss an offer for a home‐based behavioral intervention. The behavioral intervention, based on the Care Transitions Intervention and described elsewhere,[3, 18] included a home visit and 2 phone calls (each about 1 hour). Coaches used a personal health record to help patients and/or caregivers better manage their health by (1) being able to list their active medical conditions and medications and (2) understanding warning signs indicating a need to reach out for help, including getting a timely medical appointment after hospitalization. The population for the present study included individuals approached to discuss participation in the behavioral intervention who also agreed to answer the screening questions.

Inclusion/Exclusion Criteria

We included hospitalized Medicare fee‐for‐service beneficiaries. We excluded patients who were current long‐term care residents, were to be discharged to long‐term or skilled care, or had a documented hospice referral. We also excluded patients with limited English proficiency or who were judged to have inadequate cognitive function, unless a caregiver agreed to receive the intervention as a proxy. We made these exclusions when recruiting for the behavioral intervention. Because we presented the screening questions to a subset of those approached for the behavioral intervention, we did not further exclude anyone. In other words, we offered the screening questions to all 295 people we approached during this study time period (100%).

Screening‐Question Study Process

Coaches asked patients to answer the 5 screening questions immediately after offering them the opportunity to participate in the behavioral intervention, regardless of whether or not they accepted the behavioral intervention. This study examines the subset of patients approached for the behavioral intervention who verbally consented to answer the screening questions.

Data Sources and Covariates

We analyzed primary data from the screening questions and behavioral intervention (for those who consented to participate), as well as Medicare claims and Medicaid enrollment data. We matched screening‐question data from November 2010 through April 2011 with Medicare Part A claims from October 2010 through May 2011 to calculate 30‐day readmission rates.
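The claims window runs one month past the recruitment window, presumably so that discharges late in the recruitment period still have 30 days of follow‐up. The sketch below illustrates that logic in Python. The function name, the 1‐to‐30‐day definition of a readmission, and the example dates are illustrative assumptions, not the study's SAS code.

```python
from datetime import date, timedelta

FOLLOW_UP_DAYS = 30  # assumed readmission window


def readmitted_within_window(index_discharge, later_admissions,
                             window_days=FOLLOW_UP_DAYS):
    """Return True if any later admission begins 1..window_days days
    after the index discharge (an assumed definition)."""
    return any(
        0 < (admit - index_discharge).days <= window_days
        for admit in later_admissions
    )


# A discharge on the last day of recruitment still has its full
# follow-up period inside claims data that run through May 2011.
last_recruitment_day = date(2011, 4, 30)
follow_up_end = last_recruitment_day + timedelta(days=FOLLOW_UP_DAYS)
claims_end = date(2011, 5, 31)
follow_up_covered = follow_up_end <= claims_end

# Hypothetical patients: one readmitted after 25 days, one after 35 days.
early = readmitted_within_window(date(2011, 4, 15), [date(2011, 5, 10)])
late = readmitted_within_window(date(2011, 4, 15), [date(2011, 5, 20)])
```

Patients discharged after the recruitment period ended would need claims beyond this window, which this sketch does not address.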

We obtained the following information for patients offered the behavioral intervention: (1) responses to screening questions, (2) whether patients consented to the behavioral intervention, (3) exposure to the behavioral intervention, and (4) recruitment date. Medicare claims data included (1) admission and discharge dates to calculate the length of stay, (2) index diagnosis, (3) hospital, and (4) site of discharge. Medicare enrollment data provided information on (1) Medicaid/Medicare dual‐eligibility status, (2) sex, and (3) patient‐reported race. We matched data based on patient name and date of birth. Our primary outcome was consent to the behavioral intervention. Secondarily, we reviewed posthospital utilization patterns, including hospital readmission, emergency‐department use, and use of home‐health services.

Statistical Analysis

We categorized patients into 2 groups (Figure 1): participants (consented to the behavioral intervention) and nonparticipants (eligible for the behavioral intervention but declined to participate). We excluded responses for those confused by the question (no response). For the response scales "rarely, sometimes, almost always" and "not sure at all, somewhat sure, very sure," we isolated the most negative response, grouping the middle and most positive responses (Table 2). For the medication‐label question, we grouped "incorrect" and "unable to answer (needs glasses, too tired, etc.)" responses. We compared demographic differences between behavioral intervention participants and nonparticipants using χ2 tests (categorical variables) and Student t tests (continuous variables). We then used multivariate logistic regression to analyze differences in consent to the behavioral intervention based on screening‐question responses, adjusting for demographics that differed significantly in the bivariate comparisons.
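The response grouping described above can be sketched in Python as follows. The question keys, response labels, and function name are hypothetical stand‐ins chosen for illustration; the study's analysis was run in SAS, and its variable coding is not reported.

```python
# Hypothetical question keys mapped to the single most negative response.
MOST_NEGATIVE = {
    "control": "almost always",
    "overwhelmed": "almost always",
    "recovery": "not sure at all",
    "advance_care": "no",
}

# For the medication-label question, two responses are grouped together.
MEDICATION_FLAGGED = {"incorrect", "unable to answer"}


def dichotomize(question, response):
    """Return True for the flagged (most negative) group, False for the
    reference group, and None when the response is excluded because the
    patient was confused by the question or gave no response."""
    if response is None or response == "confused":
        return None
    if question == "medication":
        return response in MEDICATION_FLAGGED
    return response == MOST_NEGATIVE[question]
```

Under these assumptions, `dichotomize("control", "sometimes")` falls in the reference group, while `dichotomize("medication", "unable to answer")` is grouped with incorrect answers.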

| Screening‐Question Response | Adjusted OR (95% CI) | P Value |

|---|---|---|

| In the last week, how often have you felt that you are unable to control the important things in your life? | ||

| Out of control (Almost always) | 0.35 (0.14‐0.92) | 0.034a |

| In control (Sometimes, rarely) | 1.00 (Ref) | |

| In the last week, how often have you felt that difficulties were piling up so high that you could not overcome them? | ||

| Overwhelmed (Almost always) | 0.41 (0.16‐1.07) | 0.069 |

| Not overwhelmed (Sometimes, rarely) | 1.00 (Ref) | |

| How sure are you that you can go back to the way you felt before being hospitalized? | ||

| Not confident (Not sure at all) | 0.17 (0.06‐0.45) | 0.001a |

| Confident (Somewhat sure, very sure) | 1.00 (Ref) | |

| Even if you have not made any decisions, have you talked with your family members or doctor about what you would want for medical care if you could not speak for yourself? | ||

| No | 0.45 (0.13‐1.64) | 0.227 |

| Yes | 1.00 (Ref) | |

| How many times a day should someone take this medicine? (Show patient a medication label) | ||

| Incorrect answer | 3.82 (1.12‐13.03) | 0.033a |

| Correct answer | 1.00 (Ref) | |

| Confused by any question? | ||

| Yes | 0.11 (0.05‐0.24) | 0.001a |

| No | 1.00 (Ref) | |

The authors used SAS version 9.2 (SAS Institute, Inc., Cary, NC) for all analyses.

RESULTS

Of the 295 patients asked to complete the screening questions, 260 (88.1%) consented to answer the screening questions and 35 (11.9%) declined. More than half of those who answered the screening questions consented to participate in the behavioral intervention (160; 61.5%) (Figure 1). When compared with nonparticipants, participants in the behavioral intervention were younger (25.6% age ≥85 years vs 40.0% age ≥85 years, P=0.028), had a longer average length of hospital stay (7.9 vs 6.1 days, P=0.008), were more likely to be discharged home without clinical services (35.0% vs 23.0%, P=0.041), and were unevenly distributed between the 5 recruitment‐site hospitals, coming primarily from the teaching hospitals (P<0.001) (Table 3). There were no significant differences based on race, sex, dual‐eligible Medicare/Medicaid status, presence of a caregiver, or index diagnosis.
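The recruitment percentages reported here can be reproduced from the raw counts. The short Python check below uses only the counts stated in the text; the variable and function names are illustrative.

```python
approached = 295          # patients asked to complete the screening questions
answered = 260            # consented to answer the screening questions
consented = 160           # then consented to the behavioral intervention

declined_questions = approached - answered   # declined the screening questions
nonparticipants = answered - consented       # answered but declined the intervention


def percent(part, whole):
    """Percentage rounded to 1 decimal place, as reported in the text."""
    return round(100 * part / whole, 1)


pct_answered = percent(answered, approached)
pct_declined_questions = percent(declined_questions, approached)
pct_consented = percent(consented, answered)
```

The derived count of 100 nonparticipants matches the "Declined" column size in Table 3.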

| Patient Characteristics | Declined (n=100) | Consented (n=160) | P Value |

|---|---|---|---|

| Male, n (%) | 34 (34.0) | 52 (32.5) | 0.803 |

| Race, n (%) | |||

| White | 94 (94.0) | 151 (94.4) | 0.691 |

| Black | 2 (2.0) | 5 (3.1) | |

| Other | 4 (4.0) | 4 (2.5) | |

| Age, n (%), y | |||

| <65 | 17 (17.0) | 23 (14.4) | 0.028a |

| 65‐74 | 14 (14.0) | 42 (26.3) | |

| 75‐84 | 29 (29.0) | 54 (33.8) | |

| ≥85 | 40 (40.0) | 41 (25.6) | |

| Dual eligible, n (%)b | 11 (11.0) | 24 (15.0) | 0.358 |

| Caregiver present, n (%) | 17 (17.0) | 34 (21.3) | 0.401 |

| Length of stay, mean (SD), d | 6.1 (4.1) | 7.9 (4.8) | 0.008a |

| Index diagnosis, n (%) | |||

| Acute MI | 3 (3.0) | 6 (3.8) | 0.806 |

| CHF | 6 (6.0) | 20 (12.5) | 0.111 |

| Pneumonia | 7 (7.0) | 9 (5.6) | 0.572 |

| COPD | 6 (6.0) | 6 (8.8) | 0.484 |

| Discharged home without clinical services, n (%)c | 23 (23.0) | 56 (35.0) | 0.041a |

| Hospital site | |||

| Hospital 1 | 15 (15.0) | 43 (26.9) | <0.001a |

| Hospital 2 | 20 (20.0) | 26 (16.3) | |

| Hospital 3 | 15 (15.0) | 23 (14.4) | |

| Hospital 4 | 2 (2.0) | 48 (30.0) | |

| Hospital 5 | 48 (48.0) | 20 (12.5) | |
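As an illustration of the bivariate comparisons, the Pearson χ2 test can be recomputed from the counts in Table 3. The Python sketch below uses only the standard library. The closed‐form P value expressions cover only 1 and 3 degrees of freedom, which is all these two comparisons need. This is a reader's check under those assumptions, not the authors' SAS code.

```python
import math


def pearson_chi_square(table):
    """Pearson chi-square statistic and degrees of freedom for a
    contingency table given as a list of rows of observed counts."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n
            stat += (observed - expected) ** 2 / expected
    df = (len(table) - 1) * (len(table[0]) - 1)
    return stat, df


def chi_square_p_value(stat, df):
    """Upper-tail P value; closed forms for df = 1 and df = 3 only."""
    tail = math.erfc(math.sqrt(stat / 2))
    if df == 1:
        return tail
    if df == 3:
        return tail + math.sqrt(2 * stat / math.pi) * math.exp(-stat / 2)
    raise ValueError("this sketch supports only df = 1 or df = 3")


# Rows are age bands (<65, 65-74, 75-84, >=85); columns are declined, consented.
age_table = [[17, 23], [14, 42], [29, 54], [40, 41]]
age_stat, age_df = pearson_chi_square(age_table)
age_p = chi_square_p_value(age_stat, age_df)

# Rows are declined, consented; columns are discharged home without
# clinical services (yes, no), derived from 23 of 100 and 56 of 160.
home_table = [[23, 77], [56, 104]]
home_stat, home_df = pearson_chi_square(home_table)
home_p = chi_square_p_value(home_stat, home_df)
```

Both results round to the P values shown in the table (0.028 for age and 0.041 for discharge home without clinical services).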

Patients who identified themselves as being unable to control important things in their lives had 65% lower odds of consenting to the behavioral intervention than those in control (odds ratio [OR]: 0.35, 95% confidence interval [CI]: 0.14‐0.92), and those who did not feel confident about recovering had 83% lower odds of consenting (OR: 0.17, 95% CI: 0.06‐0.45). Individuals who were confused by any question had 89% lower odds of consenting (OR: 0.11, 95% CI: 0.05‐0.24). Individuals who answered the medication question incorrectly had nearly 4 times the odds of consenting (OR: 3.82, 95% CI: 1.12‐13.03). There were no significant differences in consent for feeling overwhelmed (difficulties piling up) or for having discussed advance care planning with family members or doctors.
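The percentages in this paragraph follow directly from the adjusted odds ratios: the percent change in odds is (OR − 1) × 100. The Python sketch below applies that conversion to the reported values. The function and dictionary names are illustrative. Note that the result describes a change in odds, which is not the same as a change in probability.

```python
def percent_change_in_odds(odds_ratio):
    """Percent change in the odds of consenting relative to the
    reference group; negative values mean lower odds."""
    return round((odds_ratio - 1) * 100)


# Adjusted odds ratios as reported in Table 2.
reported_odds_ratios = {
    "out of control": 0.35,
    "not confident in recovery": 0.17,
    "confused by any question": 0.11,
    "incorrect medication answer": 3.82,
}

changes = {
    label: percent_change_in_odds(odds_ratio)
    for label, odds_ratio in reported_odds_ratios.items()
}
```

An OR of 3.82 corresponds to odds 282% higher than the reference group, that is, nearly 4 times the odds of consenting.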

We had insufficient power to detect significant differences in posthospital utilization (including hospital readmission, emergency‐department use, and receipt of home health), based on screening‐question responses (data not shown).

DISCUSSION

We find that patients who declined to participate in the behavioral intervention (eligible nonparticipants) differed from participants in 3 important ways: perceived stress, recovery expectation, and health literacy. As hypothesized, patients with higher perceived stress and lower recovery expectation were less likely to consent to the behavioral intervention, even after adjusting for demographic and healthcare‐utilization differences. Contrary to our hypothesis, patients who incorrectly answered the medication question were more likely to consent to the intervention than those who correctly answered.

Characterizing nonparticipants and participants can offer important insight into the limitations of the research that informs clinical guidelines and behavioral interventions. Such characteristics could also indicate how to better engage patients in interventions or other aspects of their care, if associated with lower rates of adherence to recommended health behaviors or treatment plans. For example, self‐efficacy (closely related to perceived stress) and hopelessness regarding clinical outcomes (similar to low recovery expectation in the present study) are associated with nonadherence to medication plans and other care in some populations.[5, 6] More extreme stress, such as that following a major medical event, has also been associated with a lower rate of adherence to medication regimens and a resulting higher rate of hospital readmission and mortality.[19, 20] People with low health literacy (compared with adequate health literacy) are more likely to report being confused about their medications, requesting help to read medication labels, and missing appointments due to trouble reading reminder cards.[9] Identifying these characteristics may assist providers in helping patients address adherence barriers by first accurately identifying the root of patient issues (eg, where the lack of confidence in recovery is rooted in lack of resources or social support), then potentially referring to community resources where possible. For example, some states (including Rhode Island, this study's location) may have Aging and Disability Resource Centers dedicated to linking elderly people with transportation, decision support, and other resources to support quality care.

The association between health literacy and intervention participation remains uncertain. Our question, which assessed interpretation of a prescription label as a health‐literacy proxy, may have given patients insight into their limited health literacy that motivated them to accept the subsequent behavioral intervention. Others have found that patients with lower health literacy want their providers to know that they did not understand some health words,[9] though they may be less likely to ask questions, request additional services, or seek new information during a medical encounter.[21] In our study, those who correctly answered the medication‐label question were almost mutually exclusive from those who were otherwise stressed (12% overlap; data not shown). Thus, patients who correctly answer this question may correctly realize that they do not need the support offered by the behavioral intervention and decline to participate. For other patients, perceived stress and poor recovery expectations may be more immediate and important determinants of declination, with patients too stressed to volunteer for another task, even if it involves much‐needed assistance.

The frequency with which patients were confused by the questions merits further comment and may also be driven by stress. Though each question seeks to identify the impact of a specific construct (Table 1), being confused by any question may reflect a more general (or subacute) level of cognitive impairment or generalized low health literacy not limited to the applied numeracy of the medication‐label question. We excluded confused responses to demonstrate more clearly the impact of each individual construct.