Automating Measurement of Trainee Work Hours

Across the country, residents are bound to a set of rules from the Accreditation Council for Graduate Medical Education (ACGME) designed to minimize fatigue, maintain quality of life, and reduce fatigue-related patient safety events. Adherence to work hours regulations is required to maintain accreditation. Among other guidelines, residents are required to work fewer than 80 hours per week on average over 4 consecutive weeks.1 When work hour violations occur, programs risk citation, penalties, and harm to the program’s reputation.

Residents self-report their adherence to program regulations in an annual survey conducted by the ACGME.2 To collect more frequent data, most training programs monitor resident work hours through self-report on an electronic tracking platform.3 These data generally are used internally to identify problems and opportunities for improvement. However, self-report approaches are subject to imperfect recall and incomplete reporting, and require time and effort to complete.4

The widespread adoption of electronic health records (EHRs) brings a new opportunity to measure and promote adherence to work hours. EHR log data capture when users log in and out of the system, along with their location and specific actions. These data offer a compelling alternative to self-report because they are already being collected and can be analyzed almost immediately. Recent studies using EHR log data to estimate resident work hours in a pediatric hospital successfully approximated scheduled hours, but the approach was customized to that hospital’s workflows and might not generalize to other settings.5 Furthermore, earlier studies have not captured evening out-of-hospital work, which contributes to total work hours and is associated with physician burnout.6

We developed a computational method that sought to accurately capture work hours, including out-of-hospital work, which could be used as a screening tool to identify at-risk residents and rotations in near real-time. We estimated work hours, including EHR and non-EHR work, from these EHR data and compared these daily estimations to self-report. We then used a heuristic to estimate the frequency of exceeding the 80-hour workweek in a large internal medicine residency program.

METHODS

The population included 82 internal medicine interns (PGY-1) and 121 residents (PGY-2 = 60, PGY-3 = 61) who rotated through University of California, San Francisco Medical Center (UCSFMC) between July 1, 2018, and June 30, 2019, on inpatient rotations. In the UCSF internal medicine residency program, interns spend an average of 5 months per year and residents spend an average of 2 months per year on inpatient rotations at UCSFMC. Scheduled inpatient rotations generally are in 1-month blocks and include general medical wards, cardiology, liver transplant, night-float, and a procedures and jeopardy rotation where interns perform procedures at UCSFMC and serve as backup for their colleagues across sites. Although expected shift duration differs by rotation, types of shifts include regular length days, call days that are not overnight (but expected duration of work is into the late evening), 28-hour overnight call (PGY-2 and PGY-3), and night-float.

Data Source

This computational method was developed at UCSFMC. This study was approved by the University of California, San Francisco institutional review board. Using the UCSF Epic Clarity database, EHR access log data were obtained, including all Epic logins/logoffs, times, and access devices. Access devices identified included medical center computers, personal computers, and mobile devices.

Trainees self-report their work hours in MedHub, a widely used electronic tracking platform for self-report of resident work hours.7 Data were extracted from this database for interns and residents who matched the criteria above. The self-report data were considered the gold standard for comparison because they are the best available data despite their known limitations.

We used data collected from UCSF’s physician scheduling platform, AMiON, to identify interns and residents assigned to rotations at UCSF hospitals.8 AMiON also was used to capture half-days of off-site scheduled clinics and teaching, which count toward the workday but would not be associated with on-campus logins.

Developing a Computational Method to Measure Work Hours

We developed a heuristic to accomplish two goals: (1) infer the duration of continuous in-hospital work hours while providing clinical care and (2) measure “out-of-hospital” work. Logins from medical center computers were considered to be “on-campus” work. Logins from personal computers were considered to be “out-of-hospital.” “Out-of-hospital” login sessions were further subdivided into “out-of-hospital work” and “out-of-hospital study” based on activity during the session; if any work activities listed in Appendix Table 1 were performed, the session was attributed to work. If only chart review was performed, the session was attributed to study and did not count towards total hours worked. Logins from mobile devices also did not count towards total hours worked.
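The classification rules above can be illustrated with a minimal Python sketch. It is not the study's implementation: the device labels, the `Session` type, and the contents of `WORK_ACTIVITIES` are hypothetical stand-ins, because Appendix Table 1 and the Epic Clarity field names are not reproduced here.

```python
from dataclasses import dataclass
from typing import FrozenSet

# Hypothetical placeholders for the work activities in Appendix Table 1.
WORK_ACTIVITIES: FrozenSet[str] = frozenset({"write_note", "place_order"})


@dataclass(frozen=True)
class Session:
    """One EHR login session (illustrative structure, not the Clarity schema)."""
    device: str  # assumed labels: "medical_center", "personal", "mobile"
    activities: FrozenSet[str] = frozenset()


def classify_session(session: Session) -> str:
    """Assign a session to one of the categories described in the text."""
    if session.device == "medical_center":
        return "on_campus"
    if session.device == "personal":
        # Any listed work activity makes the whole session count as work;
        # a session with chart review only is treated as study.
        if session.activities & WORK_ACTIVITIES:
            return "out_of_hospital_work"
        return "out_of_hospital_study"
    if session.device == "mobile":
        return "mobile"
    raise ValueError(f"unknown device type: {session.device!r}")


def counts_toward_hours(category: str) -> bool:
    """Only on-campus sessions and out-of-hospital work add to total hours."""
    return category in {"on_campus", "out_of_hospital_work"}
```

Under these assumptions, a personal-computer session containing only chart review is labeled study and excluded, whereas the same session with a single listed work activity is labeled work.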

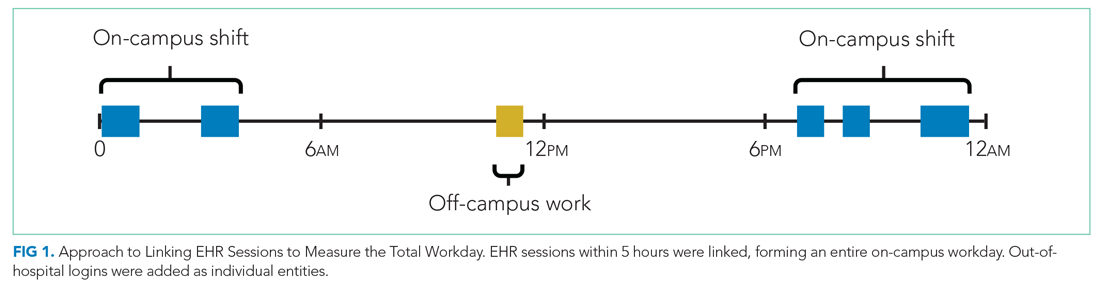

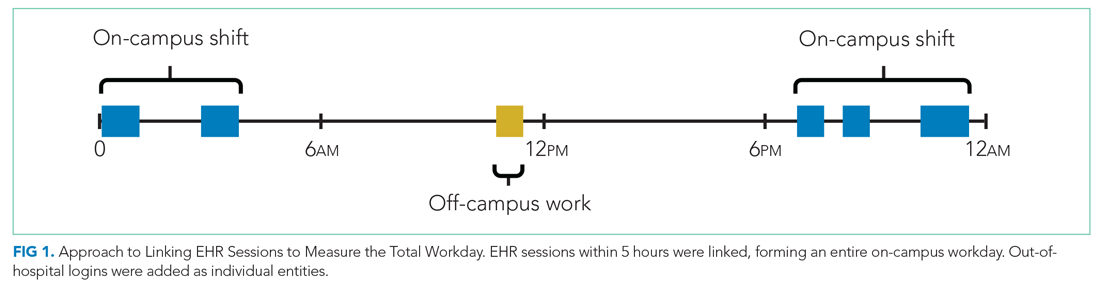

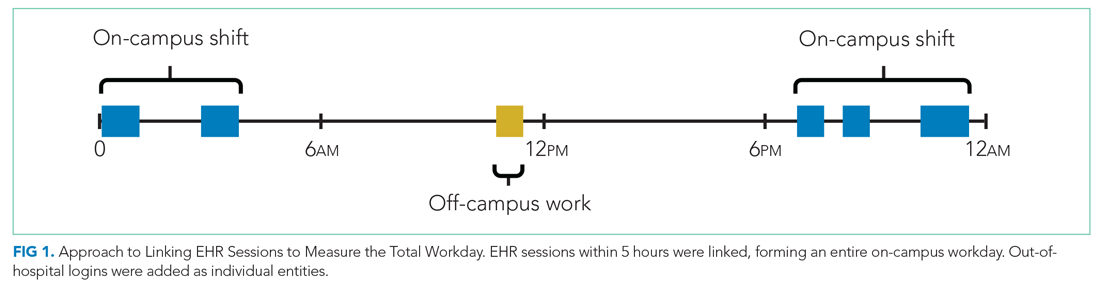

We inferred continuous in-hospital work by linking on-campus EHR sessions from the first on-campus login until the last on-campus logoff (Figure 1).
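One way to sketch this linking step in Python is to merge on-campus sessions whenever the gap between one logoff and the next login stays within a threshold. The gap-based merge rule and the function name are assumptions made for illustration. The 5-hour default is the threshold reported for these data in the Discussion, and it might differ at other sites.

```python
from datetime import datetime, timedelta
from typing import List, Tuple

Interval = Tuple[datetime, datetime]  # (login time, logoff time)


def link_on_campus_sessions(
    sessions: List[Interval],
    max_gap: timedelta = timedelta(hours=5),  # value reported for this data set
) -> List[Interval]:
    """Merge on-campus sessions into continuous in-hospital work periods.

    A session joins the current period when it starts no more than
    `max_gap` after that period's latest logoff; otherwise it opens a
    new period.
    """
    periods: List[Interval] = []
    for login, logoff in sorted(sessions):
        if periods and login - periods[-1][1] <= max_gap:
            start, end = periods[-1]
            periods[-1] = (start, max(end, logoff))
        else:
            periods.append((login, logoff))
    return periods
```

With this rule, brief logins spread through a day collapse into a single period running from the first login to the last logoff, while an overnight gap longer than the threshold starts a new period.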

If time measured as on-campus work overlapped with personal computer logins (for example, a resident was inferred to be doing on-campus work based on frequent medical center computer logins but there were also logins from personal computers), we inferred that a personal device had been brought on-campus. The time was attributed only to on-campus work and was not double counted as out-of-hospital work. Out-of-hospital work that did not overlap with inferred on-campus work time contributed to the total hours worked in a week, consistent with ACGME guidelines.
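The rule against double counting can be sketched as an interval subtraction. The Python example below is illustrative only: the function name is hypothetical, and it assumes the inferred on-campus periods do not overlap one another.

```python
from datetime import datetime, timedelta
from typing import List, Tuple

Interval = Tuple[datetime, datetime]  # (start, end)


def non_overlapping_duration(
    session: Interval, on_campus_periods: List[Interval]
) -> timedelta:
    """Time in a personal-computer session that lies outside on-campus work.

    Assumes `on_campus_periods` are mutually disjoint, so each overlap
    can be subtracted independently.
    """
    start, end = session
    remaining = end - start
    for p_start, p_end in on_campus_periods:
        overlap = min(end, p_end) - max(start, p_start)
        if overlap > timedelta(0):
            remaining -= overlap
    return remaining
```

For example, a personal-computer session from 17:00 to 19:00 on a day with inferred on-campus work from 07:00 to 18:00 would contribute 1 hour of out-of-hospital time, because the first hour is already counted as on-campus work.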

Our internal medicine residents work at three hospitals: UCSFMC and two affiliated teaching hospitals. Although this study measured work hours while the residents were on an inpatient rotation at UCSFMC, trainees also might have occasional half-day clinics or teaching activities at other sites not captured by these EHR log data. The allocated time for that scheduled activity (extracted from AMiON) was counted as work hours. If the trainee was assigned to a morning half-day of off-site work (eg, didactics), this was counted the same as an 8

Comparison of EHR-Derived Work Hours Heuristic to Self-Report

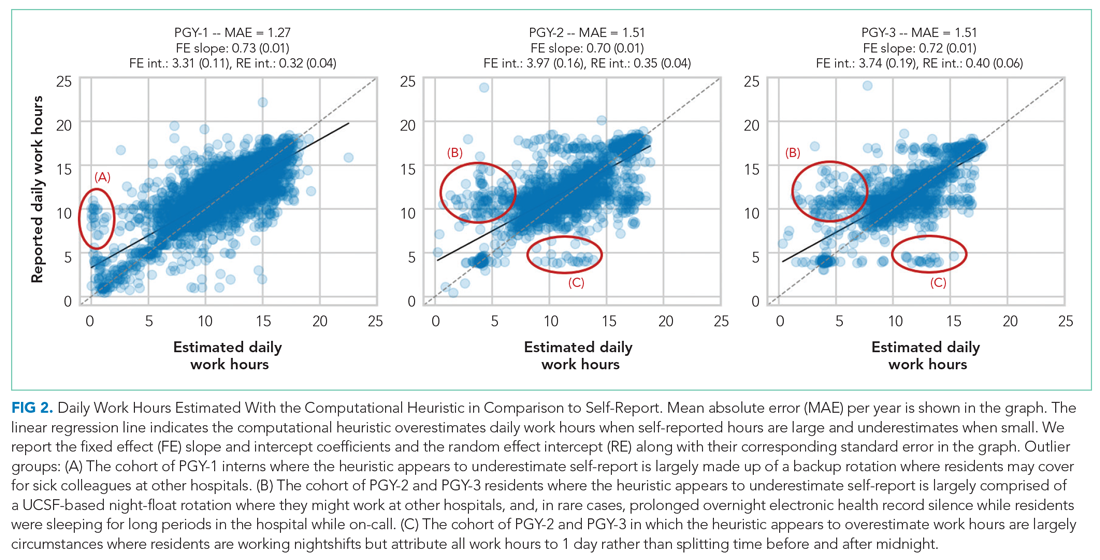

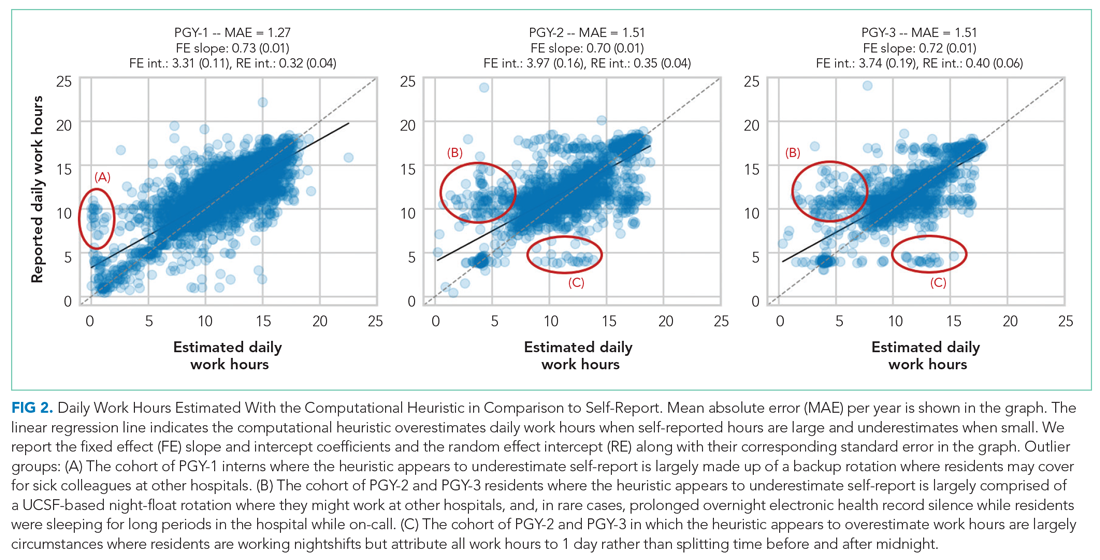

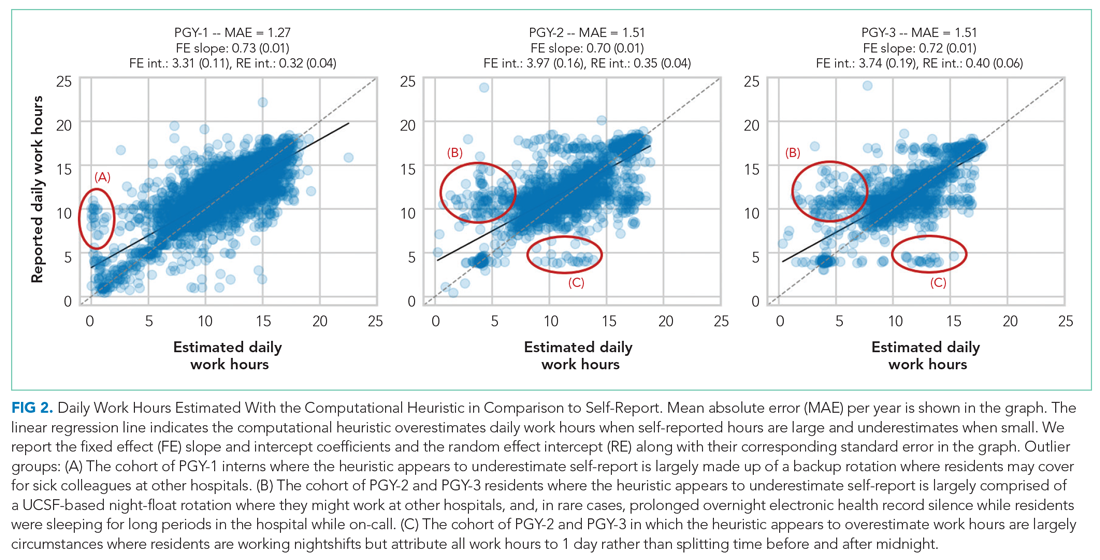

Because resident adherence to daily self-report is imperfect, we compared EHR-derived work to self-report on days when both were available. We generated scatter plots of EHR-derived work hours compared with self-report and calculated the mean absolute error of estimation. We fit a linear mixed-effects model for each PGY, modeling self-reported hours as a linear function of estimated hours (fixed effect) with a random intercept (random effect) for each trainee to account for variations among individuals. StatsModels, version 0.11.1, was used for statistical analyses.9
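The mean absolute error computation, restricted to days with both values, can be sketched in a few lines of Python. This is an illustration under assumed inputs rather than the analysis code: each day is represented as a hypothetical pair of EHR-derived and self-reported hours, with `None` marking a missing value. The mixed-effects model is not reproduced here.

```python
from typing import Iterable, Optional, Tuple

DayRecord = Tuple[Optional[float], Optional[float]]  # (EHR-derived, self-reported)


def mean_absolute_error(days: Iterable[DayRecord]) -> float:
    """Mean absolute difference between EHR-derived and self-reported hours.

    Days missing either value are skipped, mirroring the comparison on
    days when both measurements were available.
    """
    errors = [
        abs(estimated - reported)
        for estimated, reported in days
        if estimated is not None and reported is not None
    ]
    if not errors:
        raise ValueError("no days with both EHR-derived and self-reported hours")
    return sum(errors) / len(errors)
```

A day with an EHR-derived estimate but no self-report does not affect the result, so incomplete reporting shrinks the comparison set rather than biasing the error toward zero.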

We reviewed detailed data from outlier clusters to understand situations where the heuristic might not perform optimally. To assess whether EHR-derived work hours reasonably overlapped with expected shifts, twenty 8-day blocks from separate interns and residents were randomly selected for detailed qualitative review in comparison with AMiON schedule data.

Estimating Hours Worked and Work Hours Violations

After validating against self-report on a daily basis, we used our heuristic to infer the average rate at which the 80-hour workweek was exceeded across all inpatient rotations at UCSFMC. This was determined both including “out-of-hospital” work as derived from logins on personal computers and excluding it. Using the estimated daily hours worked, we built a near real-time dashboard to assist program leadership with identifying at-risk trainees and trends across the program.
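The screening check can be sketched as an average over a rotation's estimated daily hours. The Python example below is a simplified illustration: it assumes one entry per calendar day of the rotation (with 0 for days off), and the function names are hypothetical.

```python
from typing import Sequence


def average_weekly_hours(daily_hours: Sequence[float]) -> float:
    """Average hours per 7-day week, given one entry per calendar day."""
    if not daily_hours:
        raise ValueError("daily_hours must contain at least one day")
    return sum(daily_hours) * 7 / len(daily_hours)


def exceeds_weekly_limit(daily_hours: Sequence[float], limit: float = 80.0) -> bool:
    """Flag a rotation whose average weekly hours exceed the limit."""
    return average_weekly_hours(daily_hours) > limit
```

Running the check twice, once on daily totals that include out-of-hospital work and once on totals that exclude it, would mirror the two rates described above.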

RESULTS

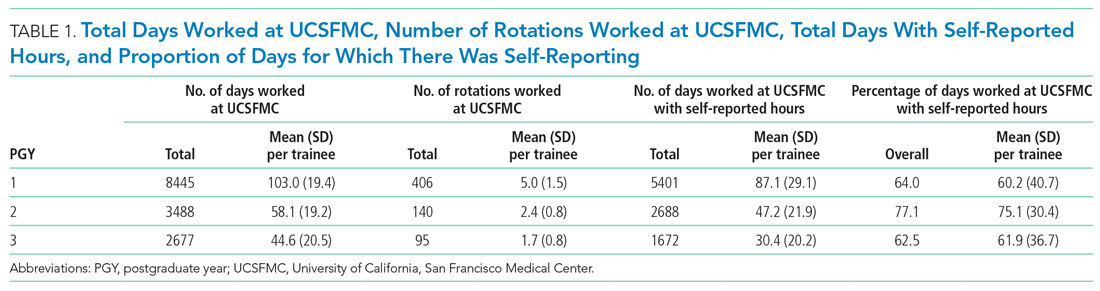

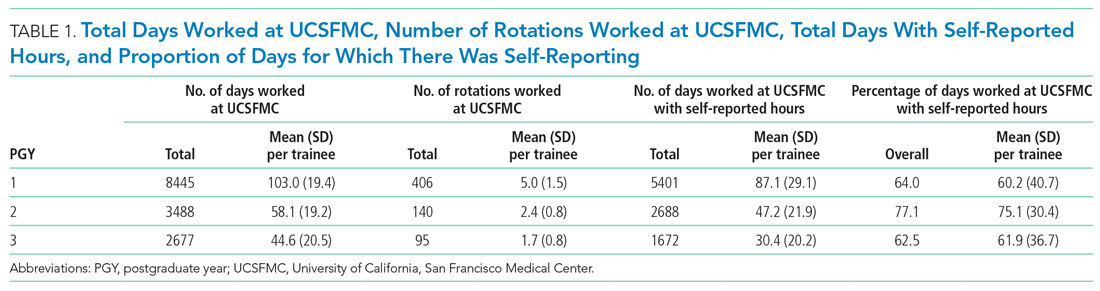

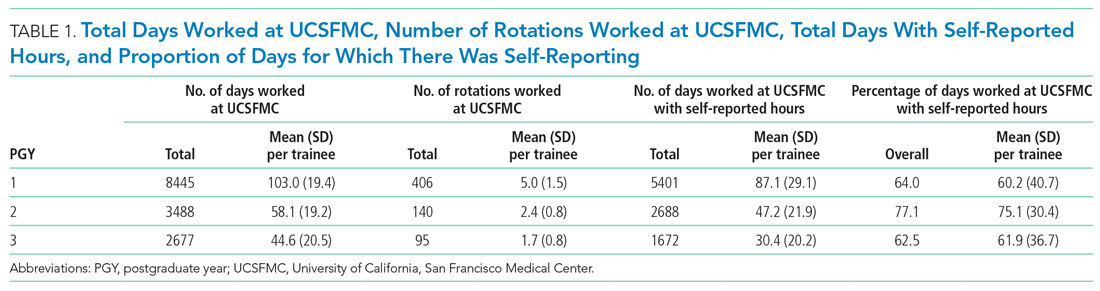

Data from 82 interns (PGY-1) and 121 internal medicine residents (PGY-2 and PGY-3) who rotated at UCSFMC between July 1, 2018, and June 30, 2019, were included in the study. Table 1 shows the number of days and rotations worked at UCSFMC as well as the frequency of self-report of work hours according to program year.

Qualitative review of EHR-derived data compared with schedule data showed that, although residents often reported homogeneous daily work hours, EHR-derived work hours often varied as expected on a day-to-day basis according to the schedule (Appendix Table 2).

Because out-of-hospital EHR use does not count as work if done for educational purposes, we evaluated the proportion of out-of-hospital EHR use that is considered work and found that 67% of PGY-1, 50% of PGY-2, and 53% of PGY-3 out-of-hospital sessions included at least one work activity, as denoted in Appendix Table 1. Out-of-hospital work therefore represented 85% of PGY-1, 66% of PGY-2, and 73% of PGY-3 time spent in the EHR out-of-hospital. These sessions were counted towards work hours in accordance with ACGME rules and included 29% of PGY-1 workdays and 21% of PGY-2 and PGY-3 workdays. This amounted to a median of 1.0 hours per day (95% CI, 0.1-4.6 hours) of out-of-hospital work for PGY-1, 0.9 hours per day (95% CI, 0.1-4.1 hours) for PGY-2, and 0.8 hours per day (95% CI, 0.1-4.7 hours) for PGY-3 residents. Out-of-hospital logins that did not include work activities, as denoted in Appendix Table 1, were labeled out-of-hospital study and did not count towards work hours; this amounted to a median of 0.3 hours per day (95% CI, 0.02-1.6 hours) for PGY-1, 0.5 hours per day (95% CI, 0.04-0.25 hours) for PGY-2, and 0.3 hours per day (95% CI, 0.03-1.7 hours) for PGY-3. Mobile device logins also were not counted towards total work hours, with a median of 3 minutes per day for PGY-1, 6 minutes per day for PGY-2, and 5 minutes per day for PGY-3.

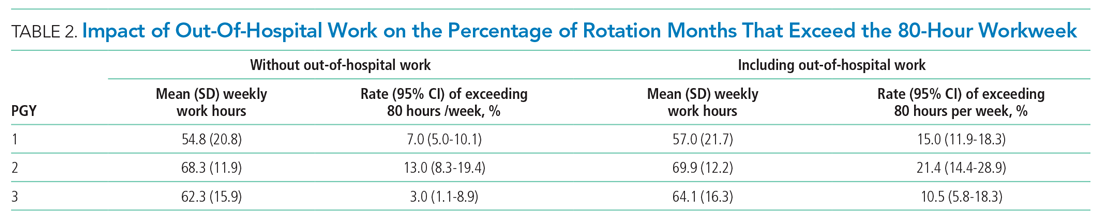

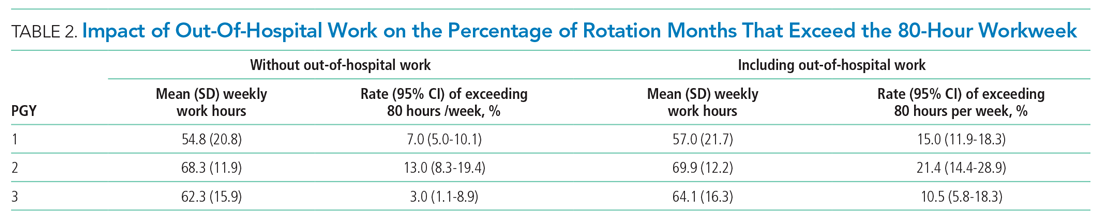

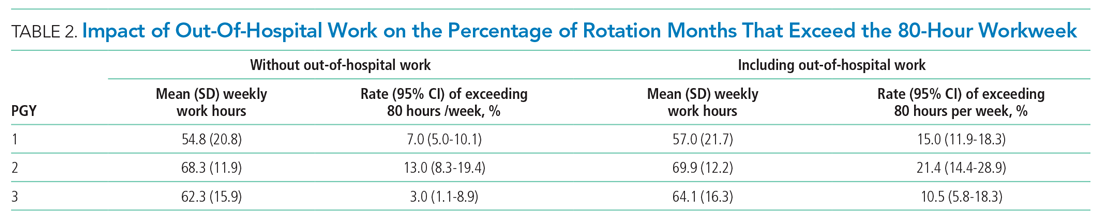

The percentage of rotation months where average hours worked exceeded 80 hours weekly is shown in Table 2. Inclusion of out-of-hospital work hours substantially increased the frequency at which the 80-hour workweek was exceeded. The frequency of individual residents working more than 80 hours weekly on average is shown in Appendix Figure 3. A narrow majority of PGY-1 and PGY-2 trainees and a larger majority of PGY-3 trainees never worked in excess of 80 hours per week when averaged over the course of a rotation, but several trainees did on several occasions.

Estimations from the computational method were built into a dashboard for use as a screening tool by residency program directors (Appendix Figure 4).

DISCUSSION

EHR log data can be used to automate measurement of trainee work hours, providing timely data to program directors for identifying residents at risk of exceeding work hours limits. We demonstrated this by developing a data-driven approach to link on-campus logins that can be replicated in other training programs. We further demonstrated that out-of-hospital work substantially contributed to resident work hours and the frequency with which they exceed the 80-hour workweek, making it a critical component of any work hour estimation approach. Inclusive of out-of-hospital work, our computational method found that residents exceeded the 80-hour workweek 10% to 21% of the time, depending on their year in residency, with a small majority of residents never exceeding the 80-hour workweek.

Historically, most ACGME residency programs have relied on resident self-report to determine work hours.3 The validity of this method has been extensively studied and results remain mixed; in some surveys, residents admit to underreporting their hours while other validation studies, including the use of clock-in and clock-out or time-stamped parking data, align with self-report relatively well.10-12 Regardless of the reliability of self-report, it is a cumbersome task that residents have difficulty adhering to, as shown in our study, where only slightly more than one-half of the days worked had associated self-report. By relying on resident self-report, we are adding to the burden of clerical work, which is associated with physician burnout.13 Furthermore, because self-report typically does not happen in real-time, it limits a program’s ability to intervene on recent or impending work-hour violations. Our computational method enabled us to build a dashboard that is updated daily and provides critical insight into resident work hours at any time, without waiting for retrospective self-report.

Our study builds on previous work by Dziorny et al using EHR log data to algorithmically measure in-hospital work.5 In their study, the authors isolated shifts with a login gap of 4 hours and then combined shifts according to a set of heuristics. However, their logic integrated an extensive workflow analysis of trainee shifts, which might limit generalizability.5 Our approach computationally derives the temporal threshold for linking EHR sessions, which in our data was 5 hours but might differ at other sites. Automated derivation of this threshold will support generalizability to other programs and sites, although programs will still need to manually account for off-site work such as didactics. In a subsequent study evaluating the 80-hour workweek, Dziorny et al evaluated shift duration and appropriate time-off between shifts and found systematic underreporting of work.14 In our study, we prioritized evaluation of the 80-hour workweek and found general alignment between self-report and EHR-derived work-hour estimates, with a tendency to underestimate at lower reported work hours and overestimate at higher reported work hours (potentially because of underreporting as illustrated by Dziorny et al). We included the important out-of-hospital logins as discrete work events because out-of-hospital work contributes to the total hours worked and to the number of workweeks that exceed the 80-hour workweek, and might contribute to burnout.15 The incidence of exceeding the 80-hour workweek increased by 7% to 8% across all residents when out-of-hospital work was included, demonstrating that tools such as ResQ (ResQ Medical) that rely primarily on geolocation data might not sufficiently capture the ways in which residents spend their time working.16

Our approach has limitations. We determined on-campus vs out-of-hospital locations based on whether the login device belonged to the medical center or was a personal computer. Consequently, if trainees exclusively used a personal computer while on-campus and never used a medical center computer, we would have captured this work done while logged into the EHR but would not have inferred on-campus work. Although nearly all trainees in our organization use medical center computers throughout the day, this might impact generalizability for programs where trainees use personal computers exclusively in the hospital. Our approach also assumes trainees will use the EHR at the beginning and end of their workdays, which could lead to underestimation of work hours in trainees who do not employ this practice. With regard to work done on personal computers, our heuristic required that at least one work activity (as denoted in Appendix Table 1) be included in the session for it to count as work. Although this approach allows us to exclude sessions where trainees might be reviewing charts exclusively for educational purposes, it is difficult to infer the true intent of chart review.

There might be periods of time where residents are doing in-hospital work but more than 5 hours elapsed between EHR user sessions. As we have started adapting this computational method for other residency programs, we have added logic that allows for long periods of time in the operating room to be considered part of a continuous workday. There also are limitations to assigning blocks of time to off-site clinics; clinics that are associated with after-hours work but use a different EHR would not be captured in total out-of-hospital work.

Although correlation with self-report was good, we identified clusters of inaccuracy. This likely resulted from our residency program covering three medical centers, two of which were not included in the data set. For example, if a resident had an off-site clinic that was not accounted for in AMiON, EHR-derived work hours might have been underestimated relative to self-report. Operationally leveraging an automated system for measuring work hours in the form of dashboards and other tools could provide the impetus to ensure accurate documentation of schedule anomalies.

CONCLUSION

Implementation of our EHR-derived work-hour model will allow ACGME residency programs to understand and act upon trainee work-hour violations closer to real time, as the data extraction is daily and automated. Automation will save busy residents a cumbersome task, provide more complete data than self-report, and empower residency programs to intervene quickly to support overworked trainees.

Acknowledgments

The authors thank Drs Bradley Monash, Larissa Thomas, and Rebecca Berman for providing residency program input.

1. Accreditation Council for Graduate Medical Education. Common program requirements. Accessed August 12, 2020. https://www.acgme.org/What-We-Do/Accreditation/Common-Program-Requirements

2. Accreditation Council for Graduate Medical Education. Resident/fellow and faculty surveys. Accessed August 12, 2020. https://www.acgme.org/Data-Collection-Systems/Resident-Fellow-and-Faculty-Surveys

3. Petre M, Geana R, Cipparrone N, et al. Comparing electronic and manual tracking systems for monitoring resident duty hours. Ochsner J. 2016;16(1):16-21.

4. Gonzalo JD, Yang JJ, Ngo L, Clark A, Reynolds EE, Herzig SJ. Accuracy of residents’ retrospective perceptions of 16-hour call admitting shift compliance and characteristics. J Grad Med Educ. 2013;5(4):630-633. https://doi.org/10.4300/jgme-d-12-00311.1

5. Dziorny AC, Orenstein EW, Lindell RB, Hames NA, Washington N, Desai B. Automatic detection of front-line clinician hospital shifts: a novel use of electronic health record timestamp data. Appl Clin Inform. 2019;10(1):28-37. https://doi.org/10.1055/s-0038-1676819

6. Gardner RL, Cooper E, Haskell J, et al. Physician stress and burnout: the impact of health information technology. J Am Med Inform Assoc. 2019;26(2):106-114. https://doi.org/10.1093/jamia/ocy145

7. MedHub. Accessed April 7, 2021. https://www.medhub.com

8. AMiON. Accessed April 7, 2021. https://www.amion.com

9. Seabold S, Perktold J. Statsmodels: econometric and statistical modeling with python. Proceedings of the 9th Python in Science Conference. https://conference.scipy.org/proceedings/scipy2010/pdfs/seabold.pdf

10. Todd SR, Fahy BN, Paukert JL, Mersinger D, Johnson ML, Bass BL. How accurate are self-reported resident duty hours? J Surg Educ. 2010;67(2):103-107. https://doi.org/10.1016/j.jsurg.2009.08.004

11. Chadaga SR, Keniston A, Casey D, Albert RK. Correlation between self-reported resident duty hours and time-stamped parking data. J Grad Med Educ. 2012;4(2):254-256. https://doi.org/10.4300/JGME-D-11-00142.1

12. Drolet BC, Schwede M, Bishop KD, Fischer SA. Compliance and falsification of duty hours: reports from residents and program directors. J Grad Med Educ. 2013;5(3):368-373. https://doi.org/10.4300/JGME-D-12-00375.1

13. Shanafelt TD, Dyrbye LN, West CP. Addressing physician burnout: the way forward. JAMA. 2017;317(9):901. https://doi.org/10.1001/jama.2017.0076

14. Dziorny AC, Orenstein EW, Lindell RB, Hames NA, Washington N, Desai B. Pediatric trainees systematically under-report duty hour violations compared to electronic health record defined shifts. PLOS ONE. 2019;14(12):e0226493. https://doi.org/10.1371/journal.pone.0226493

15. Saag HS, Shah K, Jones SA, Testa PA, Horwitz LI. Pajama time: working after work in the electronic health record. J Gen Intern Med. 2019;34(9):1695-1696. https://doi.org/10.1007/s11606-019-05055-x

16. ResQ Medical. Accessed April 7, 2021. https://resqmedical.com

Across the country, residents are bound to a set of rules from the Accreditation Council for Graduate Medical Education (ACGME) designed to mini mize fatigue, maintain quality of life, and reduce fatigue-related patient safety events. Adherence to work hours regulations is required to maintain accreditation. Among other guidelines, residents are required to work fewer than 80 hours per week on average over 4 consecutive weeks.1 When work hour violations occur, programs risk citation, penalties, and harm to the program’s reputation.

Residents self-report their adherence to program regulations in an annual survey conducted by the ACGME.2 To collect more frequent data, most training programs monitor resident work hours through self-report on an electronic tracking platform.3 These data generally are used internally to identify problems and opportunities for improvement. However, self-report approaches are subject to imperfect recall and incomplete reporting, and require time and effort to complete.4

The widespread adoption of electronic health records (EHRs) brings new opportunity to measure and promote adherence to work hours. EHR log data capture when users log in and out of the system, along with their location and specific actions. These data offer a compelling alternative to self-report because they are already being collected and can be analyzed almost immediately. Recent studies using EHR log data to approximate resident work hours in a pediatric hospital successfully approximated scheduled hours, but the approach was customized to their hospital’s workflows and might not generalize to other settings.5 Furthermore, earlier studies have not captured evening out-of-hospital work, which contributes to total work hours and is associated with physician burnout.6

We developed a computational method that sought to accurately capture work hours, including out-of-hospital work, which could be used as a screening tool to identify at-risk residents and rotations in near real-time. We estimated work hours, including EHR and non-EHR work, from these EHR data and compared these daily estimations to self-report. We then used a heuristic to estimate the frequency of exceeding the 80-hour workweek in a large internal medicine residency program.

METHODS

The population included 82 internal medicine interns (PGY-1) and 121 residents (PGY-2 = 60, PGY-3 = 61) who rotated through University of California, San Francisco Medical Center (UCSFMC) between July 1, 2018, and June 30, 2019, on inpatient rotations. In the UCSF internal medicine residency program, interns spend an average of 5 months per year and residents spend an average of 2 months per year on inpatient rotations at UCSFMC. Scheduled inpatient rotations generally are in 1-month blocks and include general medical wards, cardiology, liver transplant, night-float, and a procedures and jeopardy rotation where interns perform procedures at UCSFMC and serve as backup for their colleagues across sites. Although expected shift duration differs by rotation, types of shifts include regular length days, call days that are not overnight (but expected duration of work is into the late evening), 28-hour overnight call (PGY-2 and PGY-3), and night-float.

Data Source

This computational method was developed at UCSFMC. This study was approved by the University of California, San Francisco institutional review board. Using the UCSF Epic Clarity database, EHR access log data were obtained, including all Epic logins/logoffs, times, and access devices. Access devices identified included medical center computers, personal computers, and mobile devices.

Trainees self-report their work hours in MedHub, a widely used electronic tracking platform for self-report of resident work hours.7 Data were extracted from this database for interns and residents who matched the criteria above. The self-report data were considered the gold standard for comparison, because it is the best available despite its known limitations.

We used data collected from UCSF’s physician scheduling platform, AMiON, to identify interns and residents assigned to rotations at UCSF hospitals.8 AMiON also was used to capture half-days of off-site scheduled clinics and teaching, which count toward the workday but would not be associated with on-campus logins.

Developing a Computational Method to Measure Work Hours

We developed a heuristic to accomplish two goals: (1) infer the duration of continuous in-hospital work hours while providing clinical care and (2) measure “out-of-hospital” work. Logins from medical center computers were considered to be “on-campus” work. Logins from personal computers were considered to be “out-of-hospital.” “Out-of-hospital” login sessions were further subdivided into “out-of-hospital work” and “out-of-hospital study” based on activity during the session; if any work activities listed in Appendix Table 1 were performed, the session was attributed to work. If only chart review was performed, the session was attributed to study and did not count towards total hours worked. Logins from mobile devices also did not count towards total hours worked.

We inferred continuous in-hospital work by linking on-campus EHR sessions from the first on-campus login until the last on-campus logoff (Figure 1).

If there was overlapping time measurement between on-campus work and personal computer logins (for example, a resident was inferred to be doing on-campus work based on frequent medical center computer logins but there were also logins from personal computers), we inferred this to indicate that a personal device had been brought on-campus and the time was only attributed to on-campus work and was not double counted as out-of-hospital work. Out-of-hospital work that did not overlap with inferred on-campus work time contributed to the total hours worked in a week, consistent with ACGME guidelines.

Our internal medicine residents work at three hospitals: UCSFMC and two affiliated teaching hospitals. Although this study measured work hours while the residents were on an inpatient rotation at UCSFMC, trainees also might have occasional half-day clinics or teaching activities at other sites not captured by these EHR log data. The allocated time for that scheduled activity (extracted from AMiON) was counted as work hours. If the trainee was assigned to a morning half-day of off-site work (eg, didactics), this was counted the same as an 8

Comparison of EHR-Derived Work Hours Heuristic to Self-Report

Because resident adherence with daily self-report is imperfect, we compared EHR-derived work to self-report on days when both were available. We generated scatter plots of EHR-derived work hours compared with self-report and calculated the mean absolute error of estimation. We fit a linear mixed-effect model for each PGY, modeling self-reported hours as a linear function of estimated hours (fixed effect) with a random intercept (random effect) for each trainee to account for variations among individuals. StatsModels, version 0.11.1, was used for statistical analyses.9

We reviewed detailed data from outlier clusters to understand situations where the heuristic might not perform optimally. To assess whether EHR-derived work hours reasonably overlapped with expected shifts, 20 8-day blocks from separate interns and residents were randomly selected for qualitative detail review in comparison with AMiON schedule data.

Estimating Hours Worked and Work Hours Violations

After validating against self-report on a daily basis, we used our heuristic to infer the average rate at which the 80-hour workweek was exceeded across all inpatient rotations at UCSFMC. This was determined both including “out-of-hospital” work as derived from logins on personal computers and excluding it. Using the estimated daily hours worked, we built a near real-time dashboard to assist program leadership with identifying at-risk trainees and trends across the program.

RESULTS

Data from 82 interns (PGY-1) and 121 internal medicine residents (PGY-2 and PGY-3) who rotated at UCSFMC between July 1, 2018, and June 30, 2019, were included in the study. Table 1 shows the number of days and rotations worked at UCSFMC as well as the frequency of self-report of work hours according to program year.

Qualitative review of EHR-derived data compared with schedule data showed that, although residents often reported homogenous daily work hours, EHR-derived work hours often varied as expected on a day-to-day basis according to the schedule (Appendix Table 2).

Because out-of-hospital EHR use does not count as work if done for educational purposes, we evaluated the proportion of out-of-hospital EHR use that is considered work and found that 67% of PGY-1, 50% of PGY-2, and 53% of PGY-3 out-of-hospital sessions included at least one work activity, as denoted in Appendix Table 1. Out-of-hospital work therefore represented 85% of PGY-1, 66% of PGY-2, and 73% of PGY-3 time spent in the EHR out-of-hospital. These sessions were counted towards work hours in accordance with ACGME rules and included 29% of PGY-1 workdays and 21% of PGY-2 and PGY-3 workdays. This amounted to a median of 1.0 hours per day (95% CI, 0.1-4.6 hours) of out-of-hospital work for PGY-1, 0.9 hours per day (95% CI, 0.1-4.1 hours) for PGY-2, and 0.8 hours per day (95% CI, 0.1-4.7 hours) for PGY-3 residents. Out-of-hospital logins that did not include work activities, as denoted in Appendix Table 1, were labeled out-of-hospital study and did not count towards work hours; this amounted to a median of 0.3 hours per day (95% CI, 0.02-1.6 hours) for PGY-1, 0.5 hours per day (95% CI, 0.04-0.25 hours) for PGY-2, and 0.3 hours per day (95% CI, 0.03-1.7 hours) for PGY-3. Mobile device logins also were not counted towards total work hours, with a median of 3 minutes per day for PGY-1, 6 minutes per day for PGY-2, and 5 minutes per day for PGY-3.

The percentage of rotation months where average hours worked exceeded 80 hours weekly is shown in Table 2. Inclusion of out-of-hospital work hours substantially increased the frequency at which the 80-hour workweek was exceeded. The frequency of individual residents working more than 80 hours weekly on average is shown in Appendix Figure 3. A narrow majority of PGY-1 and PGY-2 trainees and a larger majority of PGY-3 trainees never worked in excess of 80 hours per week when averaged over the course of a rotation, but several trainees did on several occasions.

Estimations from the computational method were built into a dashboard for use as screening tool by residency program directors (Appendix Figure 4).

DISCUSSION

EHR log data can be used to automate measurement of trainee work hours, providing timely data to program directors for identifying residents at risk of exceeding work hours limits. We demonstrated this by developing a data-driven approach to link on-campus logins that can be replicated in other training programs. We further demonstrated that out-of-hospital work substantially contributed to resident work hours and the frequency with which they exceed the 80-hour workweek, making it a critical component of any work hour estimation approach. Inclusive of out-of-hospital work, our computational method found that residents exceeded the 80-hour workweek 10% to 21% of the time, depending on their year in residency, with a small majority of residents never exceeding the 80-hour workweek.

Historically, most ACGME residency programs have relied on resident self-report to determine work hours.3 The validity of this method has been extensively studied and results remain mixed; in some surveys, residents admit to underreporting their hours while other validation studies, including the use of clock-in and clock-out or time-stamped parking data, align with self-report relatively well.10-12 Regardless of the reliability of self-report, it is a cumbersome task that residents have difficulty adhering to, as shown in our study, where only slightly more than one-half of the days worked had associated self-report. By relying on resident self-report, we are adding to the burden of clerical work, which is associated with physician burnout.13 Furthermore, because self-report typically does not happen in real-time, it limits a program’s ability to intervene on recent or impending work-hour violations. Our computational method enabled us to build a dashboard that is updated daily and provides critical insight into resident work hours at any time, without waiting for retrospective self-report.

Our study builds on previous work by Dziorny et al using EHR log data to algorithmically measure in-hospital work.5 In their study, the authors isolated shifts with a login gap of 4 hours and then combined shifts according to a set of heuristics. However, their logic integrated an extensive workflow analysis of trainee shifts, which might limit generalizability.5 Our approach computationally derives the temporal threshold for linking EHR sessions, which in our data was 5 hours but might differ at other sites. Automated derivation of this threshold will support generalizability to other programs and sites, although programs will still need to manually account for off-site work such as didactics. In a subsequent study evaluating the 80-hour workweek, Dziorny et al evaluated shift duration and appropriate time-off between shifts and found systematic underreporting of work.14 In our study, we prioritized evaluation of the 80-hour workweek and found general alignment between self-report and EHR-derived work-hour estimates, with a tendency to underestimate at lower reported work hours and overestimate at higher reported work hours (potentially because of underreporting as illustrated by Dziorny et al). We included the important out-of-hospital logins as discrete work events because out-of-hospital work contributes to the total hours worked and to the number of workweeks that exceed the 80-hour workweek, and might contribute to burnout.15 The incidence of exceeding the 80-hour workweek increased by 7% to 8% across all residents when out-of-hospital work was included, demonstrating that tools such as ResQ (ResQ Medical) that rely primarily on geolocation data might not sufficiently capture the ways in which residents spend their time working.16

Our approach has limitations. We determined on-campus vs out-of-hospital locations based on whether the login device belonged to the medical center or was a personal computer. Consequently, if trainees exclusively used a personal computer while on-campus and never used a medical center computer, we would have captured this work done while logged into the EHR but would not have inferred on-campus work. Although nearly all trainees in our organization use medical center computers throughout the day, this might impact generalizability for programs where trainees use personal computers exclusively in the hospital. Our approach also assumes trainees will use the EHR at the beginning and end of their workdays, which could lead to underestimation of work hours in trainees who do not employ this practice. With regard to work done on personal computers, our heuristic required that at least one work activity (as denoted in Appendix Table 1) be included in the session for it to count as work. Although this approach allows us to exclude sessions where trainees might be reviewing charts exclusively for educational purposes, it is difficult to infer the true intent of chart review.

There might be periods of time where residents are doing in-hospital work but more than 5 hours elapse between EHR user sessions. As we have started adapting this computational method for other residency programs, we have added logic that allows for long periods of time in the operating room to be considered part of a continuous workday. There also are limitations to assigning blocks of time to off-site clinics; clinics that are associated with after-hours work but use a different EHR would not be captured in total out-of-hospital work.

Although correlation with self-report was good, we identified clusters of inaccuracy. This likely resulted from our residency program covering three medical centers, two of which were not included in the data set. For example, if a resident had an off-site clinic that was not accounted for in AMiON, EHR-derived work hours might have been underestimated relative to self-report. Operationally leveraging an automated system for measuring work hours in the form of dashboards and other tools could provide the impetus to ensure accurate documentation of schedule anomalies.

CONCLUSION

Implementation of our EHR-derived work-hour model will allow ACGME residency programs to understand and act upon trainee work-hour violations closer to real time, as the data extraction is daily and automated. Automation will save busy residents a cumbersome task, provide more complete data than self-report, and empower residency programs to intervene quickly to support overworked trainees.

Acknowledgments

The authors thank Drs Bradley Monash, Larissa Thomas, and Rebecca Berman for providing residency program input.

METHODS

The population included 82 internal medicine interns (PGY-1) and 121 residents (PGY-2 = 60, PGY-3 = 61) who rotated through University of California, San Francisco Medical Center (UCSFMC) between July 1, 2018, and June 30, 2019, on inpatient rotations. In the UCSF internal medicine residency program, interns spend an average of 5 months per year and residents spend an average of 2 months per year on inpatient rotations at UCSFMC. Scheduled inpatient rotations generally are in 1-month blocks and include general medical wards, cardiology, liver transplant, night-float, and a procedures and jeopardy rotation where interns perform procedures at UCSFMC and serve as backup for their colleagues across sites. Although expected shift duration differs by rotation, types of shifts include regular length days, call days that are not overnight (but expected duration of work is into the late evening), 28-hour overnight call (PGY-2 and PGY-3), and night-float.

Data Source

This computational method was developed at UCSFMC. This study was approved by the University of California, San Francisco institutional review board. Using the UCSF Epic Clarity database, EHR access log data were obtained, including all Epic logins/logoffs, times, and access devices. Access devices identified included medical center computers, personal computers, and mobile devices.

Trainees self-report their work hours in MedHub, a widely used electronic tracking platform for self-report of resident work hours.7 Data were extracted from this database for interns and residents who matched the criteria above. The self-report data were considered the gold standard for comparison because they are the best available despite their known limitations.

We used data collected from UCSF’s physician scheduling platform, AMiON, to identify interns and residents assigned to rotations at UCSF hospitals.8 AMiON also was used to capture half-days of off-site scheduled clinics and teaching, which count toward the workday but would not be associated with on-campus logins.

Developing a Computational Method to Measure Work Hours

We developed a heuristic to accomplish two goals: (1) infer the duration of continuous in-hospital work hours while providing clinical care and (2) measure “out-of-hospital” work. Logins from medical center computers were considered to be “on-campus” work. Logins from personal computers were considered to be “out-of-hospital.” “Out-of-hospital” login sessions were further subdivided into “out-of-hospital work” and “out-of-hospital study” based on activity during the session; if any work activities listed in Appendix Table 1 were performed, the session was attributed to work. If only chart review was performed, the session was attributed to study and did not count towards total hours worked. Logins from mobile devices also did not count towards total hours worked.
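The session rules above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `Session` type, the device labels, and the entries in `WORK_ACTIVITIES` are hypothetical stand-ins, and the study's actual list of work activities is the one in Appendix Table 1.

```python
from dataclasses import dataclass, field

# Hypothetical activity names for illustration only; the real list is in
# Appendix Table 1 of the study.
WORK_ACTIVITIES = frozenset({"note_writing", "order_entry", "inbox_message"})


@dataclass(frozen=True)
class Session:
    device: str  # assumed labels: "medical_center", "personal", or "mobile"
    activities: frozenset = field(default_factory=frozenset)


def classify(session: Session) -> str:
    """Label one EHR login session following the rules described above."""
    if session.device == "medical_center":
        return "on_campus"
    if session.device == "mobile":
        return "mobile"  # mobile logins do not count towards hours worked
    # Personal computer: work if any listed work activity occurred, else study.
    if session.activities & WORK_ACTIVITIES:
        return "out_of_hospital_work"
    return "out_of_hospital_study"


def counts_toward_hours(label: str) -> bool:
    """Only on-campus work and out-of-hospital work add to total hours."""
    return label in {"on_campus", "out_of_hospital_work"}
```

A personal-computer session containing only chart review would be labeled `out_of_hospital_study` and excluded from the total, whereas the same session with a single order entered would count as work.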

We inferred continuous in-hospital work by linking on-campus EHR sessions from the first on-campus login until the last on-campus logoff (Figure 1).

If on-campus work and personal computer logins overlapped in time (for example, a resident was inferred to be working on-campus based on frequent medical center computer logins, but logins from personal computers occurred during the same period), we inferred that a personal device had been brought on-campus. That time was attributed only to on-campus work and was not double counted as out-of-hospital work. Out-of-hospital work that did not overlap with inferred on-campus work time contributed to the total hours worked in a week, consistent with ACGME guidelines.
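A minimal sketch of the linking and overlap logic follows, assuming each session is a `(start, end)` pair of `datetime` values. The function names are hypothetical, and the 5-hour default for `max_gap` is the linking threshold reported in the Discussion for these data; other sites might need a different value.

```python
from datetime import datetime, timedelta


def link_on_campus(sessions, max_gap=timedelta(hours=5)):
    """Merge on-campus (start, end) sessions into continuous work intervals.

    Sessions separated by no more than max_gap are treated as one workday.
    """
    intervals = []
    for start, end in sorted(sessions):
        if intervals and start - intervals[-1][1] <= max_gap:
            # Close enough to the previous interval: extend it.
            intervals[-1][1] = max(intervals[-1][1], end)
        else:
            intervals.append([start, end])
    return [(s, e) for s, e in intervals]


def non_overlapping_hours(session, on_campus):
    """Hours of one out-of-hospital work session outside on-campus intervals.

    The merged on-campus intervals are disjoint, so overlaps can simply be
    subtracted one by one without double counting.
    """
    start, end = session
    remaining = end - start
    for s, e in on_campus:
        overlap = min(end, e) - max(start, s)
        if overlap > timedelta(0):
            remaining -= overlap
    return remaining.total_seconds() / 3600


# Made-up example day: two on-campus sessions 3 hours apart, plus a
# personal-computer work session that starts before the last logoff.
day = datetime(2019, 1, 7)
on_campus = link_on_campus([
    (day.replace(hour=7), day.replace(hour=9)),
    (day.replace(hour=12), day.replace(hour=18)),
])
home = (day.replace(hour=17, minute=30), day.replace(hour=19))
extra_hours = non_overlapping_hours(home, on_campus)
```

In this example the two on-campus sessions merge into a single 07:00 to 18:00 interval, and only the part of the personal-computer session after 18:00 is added as out-of-hospital work.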

Our internal medicine residents work at three hospitals: UCSFMC and two affiliated teaching hospitals. Although this study measured work hours while the residents were on an inpatient rotation at UCSFMC, trainees also might have occasional half-day clinics or teaching activities at other sites not captured by these EHR log data. The allocated time for that scheduled activity (extracted from AMiON) was counted as work hours. If the trainee was assigned to a morning half-day of off-site work (eg, didactics), this was counted the same as an 8

Comparison of EHR-Derived Work Hours Heuristic to Self-Report

Because resident adherence to daily self-report is imperfect, we compared EHR-derived work to self-report on days when both were available. We generated scatter plots of EHR-derived work hours compared with self-report and calculated the mean absolute error of estimation. We fit a linear mixed-effect model for each PGY, modeling self-reported hours as a linear function of estimated hours (fixed effect) with a random intercept (random effect) for each trainee to account for variations among individuals. StatsModels, version 0.11.1, was used for statistical analyses.9
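Written out, the two quantities described above take the following form. The notation is an assumption introduced here for illustration, not the authors': y_ij is the self-reported hours for trainee i on day j, x_ij is the corresponding EHR-derived estimate, and N is the number of trainee-days with both values. The normal distributions are the usual assumption for a linear mixed model rather than something stated in the text.

```latex
\mathrm{MAE} = \frac{1}{N} \sum_{i} \sum_{j} \left| y_{ij} - x_{ij} \right|

y_{ij} = \beta_0 + \beta_1 x_{ij} + u_i + \varepsilon_{ij},
\qquad u_i \sim \mathcal{N}(0, \sigma_u^2),
\quad \varepsilon_{ij} \sim \mathcal{N}(0, \sigma^2)
```

Here \beta_0 and \beta_1 are the fixed intercept and slope, and u_i is the random intercept that lets each trainee's self-report sit consistently above or below the estimate.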

We reviewed detailed data from outlier clusters to understand situations where the heuristic might not perform optimally. To assess whether EHR-derived work hours reasonably overlapped with expected shifts, 20 8-day blocks from separate interns and residents were randomly selected for qualitative detail review in comparison with AMiON schedule data.

Estimating Hours Worked and Work Hours Violations

After validating against self-report on a daily basis, we used our heuristic to infer the average rate at which the 80-hour workweek was exceeded across all inpatient rotations at UCSFMC. This was determined both including “out-of-hospital” work as derived from logins on personal computers and excluding it. Using the estimated daily hours worked, we built a near real-time dashboard to assist program leadership with identifying at-risk trainees and trends across the program.
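The weekly-average check can be illustrated as below, assuming one estimated value per calendar day of a rotation (days off included as zero). The function names and the daily figures are made up for the example; only the 80-hour limit and the idea of comparing results with and without out-of-hospital work come from the text.

```python
def average_weekly_hours(daily_hours):
    """Mean hours per 7-day week, given one value per calendar day."""
    if not daily_hours:
        raise ValueError("need at least one day of data")
    return sum(daily_hours) * 7 / len(daily_hours)


def exceeds_limit(daily_hours, limit=80.0):
    """True when the averaged workweek is above the limit."""
    return average_weekly_hours(daily_hours) > limit


# Made-up 28-day rotation: six 13-hour on-campus days and one day off per
# week, plus 1 hour of out-of-hospital work on each working day.
on_campus = ([13.0] * 6 + [0.0]) * 4
out_of_hospital = ([1.0] * 6 + [0.0]) * 4
combined = [a + b for a, b in zip(on_campus, out_of_hospital)]

excluding = average_weekly_hours(on_campus)   # on-campus work only
including = average_weekly_hours(combined)    # with out-of-hospital work
```

With these invented numbers the rotation averages 78 hours per week on-campus and 84 hours once out-of-hospital work is added, so it is flagged only in the inclusive calculation.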

RESULTS

Data from 82 interns (PGY-1) and 121 internal medicine residents (PGY-2 and PGY-3) who rotated at UCSFMC between July 1, 2018, and June 30, 2019, were included in the study. Table 1 shows the number of days and rotations worked at UCSFMC as well as the frequency of self-report of work hours according to program year.

Qualitative review of EHR-derived data compared with schedule data showed that, although residents often reported homogenous daily work hours, EHR-derived work hours often varied as expected on a day-to-day basis according to the schedule (Appendix Table 2).

Because out-of-hospital EHR use does not count as work if done for educational purposes, we evaluated the proportion of out-of-hospital EHR use that is considered work and found that 67% of PGY-1, 50% of PGY-2, and 53% of PGY-3 out-of-hospital sessions included at least one work activity, as denoted in Appendix Table 1. Out-of-hospital work therefore represented 85% of PGY-1, 66% of PGY-2, and 73% of PGY-3 time spent in the EHR out-of-hospital. These sessions were counted towards work hours in accordance with ACGME rules and included 29% of PGY-1 workdays and 21% of PGY-2 and PGY-3 workdays. This amounted to a median of 1.0 hours per day (95% CI, 0.1-4.6 hours) of out-of-hospital work for PGY-1, 0.9 hours per day (95% CI, 0.1-4.1 hours) for PGY-2, and 0.8 hours per day (95% CI, 0.1-4.7 hours) for PGY-3 residents. Out-of-hospital logins that did not include work activities, as denoted in Appendix Table 1, were labeled out-of-hospital study and did not count towards work hours; this amounted to a median of 0.3 hours per day (95% CI, 0.02-1.6 hours) for PGY-1, 0.5 hours per day (95% CI, 0.04-0.25 hours) for PGY-2, and 0.3 hours per day (95% CI, 0.03-1.7 hours) for PGY-3. Mobile device logins also were not counted towards total work hours, with a median of 3 minutes per day for PGY-1, 6 minutes per day for PGY-2, and 5 minutes per day for PGY-3.

The percentage of rotation months where average hours worked exceeded 80 hours weekly is shown in Table 2. Inclusion of out-of-hospital work hours substantially increased the frequency at which the 80-hour workweek was exceeded. The frequency of individual residents working more than 80 hours weekly on average is shown in Appendix Figure 3. A narrow majority of PGY-1 and PGY-2 trainees and a larger majority of PGY-3 trainees never worked in excess of 80 hours per week when averaged over the course of a rotation, but several trainees did on several occasions.

1. Accreditation Council for Graduate Medical Education. Common program requirements. Accessed August 12, 2020. https://www.acgme.org/What-We-Do/Accreditation/Common-Program-Requirements

2. Accreditation Council for Graduate Medical Education. Resident/fellow and faculty surveys. Accessed August 12, 2020. https://www.acgme.org/Data-Collection-Systems/Resident-Fellow-and-Faculty-Surveys

3. Petre M, Geana R, Cipparrone N, et al. Comparing electronic and manual tracking systems for monitoring resident duty hours. Ochsner J. 2016;16(1):16-21.

4. Gonzalo JD, Yang JJ, Ngo L, Clark A, Reynolds EE, Herzig SJ. Accuracy of residents’ retrospective perceptions of 16-hour call admitting shift compliance and characteristics. J Grad Med Educ. 2013;5(4):630-633. https://doi.org/10.4300/jgme-d-12-00311.1

5. Dziorny AC, Orenstein EW, Lindell RB, Hames NA, Washington N, Desai B. Automatic detection of front-line clinician hospital shifts: a novel use of electronic health record timestamp data. Appl Clin Inform. 2019;10(1):28-37. https://doi.org/10.1055/s-0038-1676819

6. Gardner RL, Cooper E, Haskell J, et al. Physician stress and burnout: the impact of health information technology. J Am Med Inform Assoc. 2019;26(2):106-114. https://doi.org/10.1093/jamia/ocy145

7. MedHub. Accessed April 7, 2021. https://www.medhub.com

8. AMiON. Accessed April 7, 2021. https://www.amion.com

9. Seabold S, Perktold J. Statsmodels: econometric and statistical modeling with python. Proceedings of the 9th Python in Science Conference. https://conference.scipy.org/proceedings/scipy2010/pdfs/seabold.pdf

10. Todd SR, Fahy BN, Paukert JL, Mersinger D, Johnson ML, Bass BL. How accurate are self-reported resident duty hours? J Surg Educ. 2010;67(2):103-107. https://doi.org/10.1016/j.jsurg.2009.08.004

11. Chadaga SR, Keniston A, Casey D, Albert RK. Correlation between self-reported resident duty hours and time-stamped parking data. J Grad Med Educ. 2012;4(2):254-256. https://doi.org/10.4300/JGME-D-11-00142.1

12. Drolet BC, Schwede M, Bishop KD, Fischer SA. Compliance and falsification of duty hours: reports from residents and program directors. J Grad Med Educ. 2013;5(3):368-373. https://doi.org/10.4300/JGME-D-12-00375.1

13. Shanafelt TD, Dyrbye LN, West CP. Addressing physician burnout: the way forward. JAMA. 2017;317(9):901. https://doi.org/10.1001/jama.2017.0076

14. Dziorny AC, Orenstein EW, Lindell RB, Hames NA, Washington N, Desai B. Pediatric trainees systematically under-report duty hour violations compared to electronic health record defined shifts. PLOS ONE. 2019;14(12):e0226493. https://doi.org/10.1371/journal.pone.0226493

15. Saag HS, Shah K, Jones SA, Testa PA, Horwitz LI. Pajama time: working after work in the electronic health record. J Gen Intern Med. 2019;34(9):1695-1696. https://doi.org/10.1007/s11606-019-05055-x

16. ResQ Medical. Accessed April 7, 2021. https://resqmedical.com

© 2021 Society of Hospital Medicine

Health information exchange in US hospitals: The current landscape and a path to improved information sharing

The US healthcare system is highly fragmented, with patients typically receiving treatment from multiple providers during an episode of care and from many more providers over their lifetime.1,2 As patients move between care delivery settings, whether and how their information follows them is determined by a haphazard and error-prone patchwork of telephone, fax, and electronic communication channels.3 The existence of more robust electronic communication channels is often dictated by factors such as which providers share the same electronic health record (EHR) vendor rather than which providers share the highest volume of patients. As a result, providers often make clinical decisions with incomplete information, increasing the chances of misdiagnosis, unsafe or suboptimal treatment, and duplicative utilization.

Providers across the continuum of care encounter challenges to optimal clinical decision-making as a result of incomplete information. These are particularly problematic among clinicians in hospitals and emergency departments (EDs). Clinical decision-making in EDs often involves urgent and critical conditions in which decisions are made under pressure. Time constraints limit provider ability to find key clinical information to accurately diagnose and safely treat patients.4-6 Even for planned inpatient care, providers are often unfamiliar with patients, and they make safer decisions when they have full access to information from outside providers.7,8

Transitions of care between hospitals and primary care settings are also fraught with gaps in information sharing. Clinical decisions made in primary care can set patients on treatment trajectories that are greatly affected by the quality of information available to the care team at the time of initial diagnosis as well as in their subsequent treatment. Primary care physicians are not universally notified when their patients are hospitalized and may not have access to detailed information about the hospitalization, which can impair their ability to provide high quality care.9-11

Widespread and effective electronic health information exchange (HIE) holds the potential to address these challenges.3 With robust, interconnected electronic systems, key pieces of a patient’s health record can be electronically accessed and reconciled during planned and unplanned care transitions. The concept of HIE is simple—make all relevant patient data available to the clinical care team at the point of care, regardless of where that information was generated. The estimated value of nationwide interoperable EHR adoption suggests large savings from the more efficient, less duplicative, and higher quality care that likely results.12,13

There has been substantial funding and activity at federal, state, and local levels to promote the development of HIE in the US. The 2009 Health Information Technology for Economic and Clinical Health (HITECH) Act has the specific goal of accelerating adoption and use of certified EHR technology coupled with the ability to exchange clinical information to support patient care.14 The HITECH programs supported specific types of HIE that were believed to be particularly critical to improving patient care and included them in the federally-defined criteria for Meaningful Use (MU) of EHRs (ie, providers receive financial incentives for achieving specific objectives). The MU criteria evolve, moving from data capture in stage 1 to improved patient outcomes in stage 3.15 The HIE criteria focus on sending and receiving summary-of-care records during care transitions.

Despite the clear benefits of HIE and substantial support stated in policy initiatives, the spread of national HIE has been slow. Today, HIE in the US is highly heterogeneous: as a result of multiple federal-, state-, community-, enterprise- and EHR vendor-level efforts, only some provider organizations are able to engage in HIE with the other provider organizations with which they routinely share patients. In this review, we offer a framework and a corresponding set of definitions to understand the current state of HIE in the US. We describe key challenges to HIE progress and offer insights into the likely path to ensure that clinicians have routine, electronic access to patient information.

FOUR KEY DIMENSIONS OF HEALTH INFORMATION EXCHANGE

While the concept of HIE is simple—electronic access to clinical information across healthcare settings—the operationalization of HIE occurs in many different ways.16 While the terms “health information exchange” and “interoperability” are often used interchangeably, they can have different meanings. In this section, we describe 4 important dimensions that serve as a framework for understanding any given effort to enable HIE (Table).

(1) What Is Exchanged? Types of Information

The term “health information exchange” is ambiguous with respect to the type(s) of information that are accessible. Health information exchange may refer to the process of 2 providers electronically sharing a wide range of data, from a single type of information (eg, lab test results) to summary of care records to complete patient records.17 Part of this ambiguity may stem from uncertainty about the scope of information that should be shared, and how this varies based on the type of clinical encounter. For example, critical types of information in the ED setting may differ from those relevant to a primary care team after a referral. While the ability to access only particular types of information will not address all information gaps, providing access to complete patient records may result in information overload that inhibits the ability to find the subset of information relevant in a given clinical encounter.

(2) Who is Exchanging? Relationship Between Provider Organizations

The types of information accessed electronically are effectively agnostic to the relationship between the provider organizations that are sharing information. Traditionally, HIE has been considered as information that is electronically shared among 2 or more unaffiliated organizations. However, there is increasing recognition that some providers may not have electronic access to all information about their patients that exists within their organization, often after a merger or acquisition between 2 providers with different EHR systems.18,19 In these cases, a primary care team in a large integrated delivery system may have as many information gaps as a primary care team in a small, independent practice. Fulfilling clinical information needs may require both intra- and interorganizational HIE, which complicates the design of HIE processes and how the care team approaches incorporating information from both types of organizations into their decision-making. It is also important to recognize that some provider organizations, particularly small, rural practices, may not have the information technology and connectivity infrastructure required to engage in HIE.

(3) How Is Information Exchanged? Types of Electronic Access: Push vs Pull Exchange

To minimize information gaps, electronic access to information from external settings needs to offer both “push” and “pull” options. Push exchange, which can direct information electronically to a targeted recipient, works in scenarios in which there is a known information gap and known information source. The classic use for push exchange is care coordination, such as primary care physician-specialist referrals or hospital-primary care physician transitions postdischarge. Pull exchange accommodates scenarios in which there is a known information gap but the source(s) of information are unknown; it requires that clinical care teams search for and locate the clinical information that exists about the patient in external settings. Here, the classic use is emergency care in which the care team may encounter a new patient and want to retrieve records.
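The contrast between the two access patterns can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a description of any real HIE product or standard: the `Document` and `Organization` classes and the `push` and `pull` functions are hypothetical names introduced here, and real exchange would also involve patient matching, consent, and secure transport.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Document:
    patient_id: str
    source: str    # organization that generated the record (hypothetical field)
    summary: str


@dataclass
class Organization:
    name: str
    records: List[Document] = field(default_factory=list)  # generated locally
    inbox: List[Document] = field(default_factory=list)    # pushed by others


def push(doc: Document, recipient: Organization) -> None:
    """Push: the information gap and its source are both known, so the
    sender delivers the document directly to a targeted recipient."""
    recipient.inbox.append(doc)


def pull(patient_id: str, network: List[Organization]) -> List[Document]:
    """Pull: the gap is known but the sources are not, so the requester
    searches every organization in the network for the patient's records."""
    return [
        doc
        for org in network
        for doc in org.records
        if doc.patient_id == patient_id
    ]
```

In this sketch, a hospital sending a discharge summary to a known primary care practice would call `push`, whereas an ED team seeing an unfamiliar patient would call `pull` across all reachable organizations.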

Widespread use of provider portals that offer view-only access into EHRs and other clinical data repositories maintained by external organizations complicates the picture. Portals are commonly used by hospitals to enable community providers to view information from a hospitalization.21 While this does not fall under the commonly held notion of HIE because no exchange occurs, portals support a pull approach to accessing information electronically among care settings that treat the same patients but use different EHRs.

Regardless of whether information is pushed or pulled, this may happen with varying degrees of human effort. This distinction gives rise to the difference between HIE and interoperability. Health information exchange reflects the ability of EHRs to exchange information, while interoperability additionally requires that EHRs be able to use exchanged information. From an operational perspective, the key distinction between HIE and interoperability is the extent of human involvement. Health information exchange requires that a human read and decide how to enter information from external settings (eg, a chart in PDF format sent between 2 EHRs), while interoperability enables the EHR that receives the information to understand the content and automatically triage or reconcile information, such as a medication list, without any human action.21 Health information exchange, therefore, relies on the diligence of the receiving clinician, while interoperability does not.
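The operational distinction can be illustrated with a short Python sketch. It assumes a hypothetical payload dictionary with a `format` key, and it treats reconciliation as a simple set union, which is a deliberate simplification; the `receive` function and its return strings are illustrative only.

```python
from typing import List, Set


def receive(payload: dict, medication_list: Set[str], review_queue: List[dict]) -> str:
    """Route an incoming payload and report how it was handled."""
    if payload.get("format") == "structured":
        # Interoperability: the receiving EHR understands the content and
        # merges it into the medication list without human action.
        medication_list.update(payload["medications"])
        return "reconciled automatically"
    # Exchange without interoperability (eg, a chart in PDF format):
    # a clinician must read the document and decide what to enter.
    review_queue.append(payload)
    return "queued for clinician review"
```

In the second branch, whether the information ever reaches the chart depends on someone working through the review queue, which is the reliance on clinician diligence described above.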

(4) What Governance Entity Defines the “Rules” of Exchange?

When more than 1 provider organization shares patient-identified data, a governance entity must specify the framework that governs the exchange. While the specifics of HIE governance vary, there are 3 predominant types of HIE networks, based on the type of organization that governs exchange: enterprise HIE networks, EHR vendor HIE networks, and community HIE networks.

Enterprise HIE networks exist when 1 or more provider organizations electronically share clinical information to support patient care with some restriction, beyond geography, that dictates which organizations are involved. Typically, restrictions are driven by strategic, proprietary interests.22,23 Although broad-based information access across settings would be in the best interest of the patient, provider organizations are sensitive to the competitive implications of sharing data and may pursue such sharing in a strategic way.24 A common scenario is when hospitals choose to strategically affiliate with select ambulatory providers and exclusively exchange information with them. This should facilitate better care coordination for patients shared by the hospital and those providers but can also benefit the hospital by increasing the referrals from those providers. While there is little direct evidence quantifying the extent to which this type of strategic sharing takes place, there have been anecdotal reports as well as indirect findings that for-profit hospitals in competitive markets are less likely to share patient data.19,25

EHR vendor HIE networks exist when exchange occurs within a community of provider organizations that use an EHR from the same vendor. A subset of EHR vendors have made this capability available; EPIC’s CareEverywhere solution27 is the best-known example. Providers with an EPIC EHR are able to query for and retrieve summary of care records and other documents from any provider organization with EPIC that has activated this functionality. There are also multivendor efforts, such as CommonWell27 and the Sequoia Project’s Carequality collaborative,28 which are initiatives that seek to provide a common interoperability framework across a diverse set of stakeholders, including provider organizations with different EHR systems, in a similar fashion to HIE modules like CareEverywhere. To date, growth in these cross-vendor collaborations has been slow, and they have limited participation. While HIE networks that involve EHR vendors are likely to grow, it is difficult to predict how quickly because they are still in an early phase of development, and face nontechnical barriers such as patient consent policies that vary between providers and across states.

Community HIE networks (also referred to as health information organizations [HIOs] or regional health information organizations [RHIOs]) exist when provider organizations in a community, frequently state-level organizations that were funded through HITECH grants,14 set up the technical infrastructure and governance approach to engage in HIE to improve patient care. In contrast to enterprise or vendor HIE networks that have pursued HIE in ways that appear strategically beneficial, the only restriction on participation in community and state HIE networks is usually geography because they view information exchange as a public good. Seventy-one percent of hospital service areas (HSAs) are covered by at least 1 of the 106 operational HIOs, with 309,793 clinicians (licensed prescribers) participating in those exchange networks. Even with early infusions of public and other grant funding, community HIE networks have experienced significant challenges to sustained operation, and many have ceased operating.29

Thus, for any given provider organization, available HIE networks are primarily shaped by 3 factors:

1. Geographic location, which determines the available community and state HIE networks (as well as other basic information technology and connectivity infrastructure); providers located outside the service areas covered by an operational HIE network have little incentive to participate because such a network does not connect them to providers with whom they share patients. Providers in rural areas may simply not have the needed infrastructure to pursue HIE.

2. Type of organization to which they belong, which determines the available enterprise HIE networks; providers who are not members of large health systems may be excluded from participation in these types of networks.

3. EHR vendor, which determines whether they have access to an EHR vendor HIE network.
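As a rough illustration, the 3 factors above can be expressed as a simple lookup in Python. Everything in this sketch is hypothetical: the `Provider` fields, the `available_networks` function, and the 3 dictionaries stand in for information that in practice would come from local knowledge of which networks operate where.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Provider:
    service_area: str             # factor 1: geographic location
    health_system: Optional[str]  # factor 2: organization type or membership
    ehr_vendor: str               # factor 3: EHR vendor


def available_networks(
    provider: Provider,
    community_by_area: Dict[str, List[str]],
    enterprise_by_system: Dict[str, str],
    vendor_by_ehr: Dict[str, str],
) -> List[str]:
    """Return the HIE networks a provider could join under the 3 factors."""
    # Community networks depend only on where the provider is located.
    networks = list(community_by_area.get(provider.service_area, []))
    # Enterprise networks are open only to members of the governing system.
    if provider.health_system in enterprise_by_system:
        networks.append(enterprise_by_system[provider.health_system])
    # Vendor networks are open only to users of that vendor's EHR.
    if provider.ehr_vendor in vendor_by_ehr:
        networks.append(vendor_by_ehr[provider.ehr_vendor])
    return networks
```

An independent rural practice whose service area has no operational community network and whose vendor offers no exchange network would receive an empty list, which mirrors the access gaps described above.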

ONGOING CHALLENGES

Despite agreement about the substantial potential of HIE to reduce costs and increase the quality of care delivered across a broad range of providers, HIE progress has been slow. While HITECH has successfully increased EHR adoption in hospitals and ambulatory practices,30 HIE has lagged. This is largely because many complex, intertwined barriers must be addressed for HIE to be widespread.

Lack of a Defined Goal

The cost and complexity associated with the exchange of a single type of data (eg, medications) are substantially lower than the cost and complexity of sharing complete patient records. There has been little industry consensus on the target goal: do we need to enable sharing of complete patient records across all providers, or will summary of care records suffice? If the latter, as is the focus of the current MU criteria, what types of information should be included in a summary of care record, and should content and/or structure vary depending on the type of care transition? While the MU criteria require the exchange of a summary of care record with defined data fields, it remains unclear whether this is the end state or whether we should continue to push towards broad-based sharing of all patient data as structured elements. Without a clear picture of the ideal end state, there has been significant heterogeneity in the development of HIE capabilities across providers and vendors, and difficulty coordinating efforts to continue to advance towards a nationwide approach. Addressing this issue also requires progress to define HIE usability, that is, how information from external organizations should be presented and integrated into clinical workflow and clinical decisions. Currently, where HIE is occurring and clinicians are receiving summary of care records, they find the records long and cluttered, which makes key information difficult to locate.

Numerous, Complex Barriers Spanning Multiple Stakeholders

In the context of any individual HIE effort, even after the goal is defined, there are myriad challenges. In a recent survey of HIO efforts, many identified the following barriers as substantially impeding their development: establishing a sustainable business model, lack of funding, integration of HIE into provider workflow, limitations of current data standards, and working with governmental policy and mandates.30 What is notable about this list is that the barriers span an array of areas, including financial incentives and identifying a sustainable business model, technical barriers such as working within the limitations of data standards, and regulatory issues such as state laws that govern the requirements for patient consent to exchange personal health information. Overcoming any of these issues is challenging, but trying to tackle all of them simultaneously clearly reveals why progress has been slow. Further, resolving many of the issues involves different groups of stakeholders. For example, implementing appropriate patient consent procedures can require engaging with and harmonizing the regulations of multiple states, as well as the Health Insurance Portability and Accountability Act (HIPAA) and regulations specific to substance abuse data.

Weak or Misaligned Incentives