Utilizing Telesimulation for Advanced Skills Training in Consultation and Handoff Communication: A Post-COVID-19 GME Bootcamp Experience

Events requiring communication among and within teams are vulnerable points in patient care in hospital medicine, with communication failures representing important contributors to adverse events.1-4 Consultations and handoffs are exceptionally common inpatient practices, yet training in these practices is variable across educational and practice domains.5,6 Advanced inpatient communication-skills training requires an effective, feasible, and scalable format. Simulation-based bootcamps can effectively support clinical skills training, often in procedural domains, and have been increasingly utilized for communication skills.7,8 We previously described the development and implementation of an in-person bootcamp for training and feedback in consultation and handoff communication.5,8

As hospitalist leaders grapple with how to systematically support and assess essential clinical skills, the COVID-19 pandemic has presented another impetus to rethink current processes. The rapid shift to virtual activities met immediate needs of the pandemic, but also inspired creativity in applying new methodologies to improve teaching strategies and implementation long-term.9,10 One such strategy, telesimulation, offers a way to continue simulation-based training limited by the need for physical distancing.10 Furthermore, recent calls to study the efficacy of virtual bootcamp structures have acknowledged potential benefits, even outside of the pandemic.11

The primary objective of this feasibility study was to convert our previously described consultation and handoff bootcamp to a telesimulation bootcamp (TBC), preserving rigorous performance evaluation and opportunities for skills-based feedback. We additionally compared evaluation between virtual and in-person formats to understand the utility of telesimulation for bootcamp-based clinical education moving forward.

METHODS

Setting and Participants

The TBC occurred in June 2020 during the University of Chicago institution-wide graduate medical education (GME) orientation; 130 interns entering 13 residency programs participated. The comparison group was 128 interns who underwent the traditional University of Chicago GME orientation “Advanced Communication Skills Bootcamp” (ACSBC) in 2019.5,8

Program Description

To develop TBC, we adapted observed structured clinical experiences (OSCEs) created for ACSBC. Until 2020, ACSBC included three in-person OSCEs: (1) requesting a consultation; (2) conducting handoffs; and (3) acquiring informed consent. COVID-19 necessitated conversion of ACSBC to a virtual format in June 2020. For this, we selected the consultation and handoff OSCEs, as these skills require near-universal and immediate application in clinical practice. Additionally, they required only trained facilitators (TFs), whereas informed consent required standardized patients. Hospitalist and emergency medicine faculty were recruited as TFs; 7 of 12 TFs were hospitalists. Each OSCE had two parts: an asynchronous, mandatory training module and a clinical simulation. For TBC, we adapted the simulations, previously separate experiences, into a 20-minute combined handoff/consultation telesimulation using the Zoom® video platform. Each intern was paired with one TF, who served as both standardized consultant (for one mock case) and handoff receiver (for three mock cases, including the consultation case). TFs rated intern performance and provided feedback.

TBC occurred on June 17 and 18, 2020. Interns were emailed asynchronous modules on June 1, and mock cases and instructions on June 12. When TBC began, GME staff proctors oriented interns to the Zoom® platform. Proctors placed TFs into private breakout rooms, through which interns rotated in 20-minute time slots. Faculty received copies of all TBC materials for review (Appendix 1) and underwent Zoom®-based training 1 to 2 weeks prior.

We evaluated TBC using several methods: (1) consultation and handoff skills performance measured by two validated checklists5,8; (2) survey of intern self-reported preparedness to practice consultations and handoffs; and (3) survey of intern satisfaction. Surveys were administered both immediately post bootcamp (Appendix 2) and 8 weeks into internship (Appendix 3). Skills performance checklists were a 12-item consultation checklist5 and 6-item handoff checklist.8 The handoff checklist was modified to remove activities impossible to assess virtually (ie, orienting sign-outs in a shared space) and to add a three-level rating scale of “outstanding,” “satisfactory,” and “needs improvement.” This was done based on feedback from ACSBC to allow more nuanced feedback for interns. A rating of “outstanding” was used to define successful completion of the item (Appendix 1). Interns rated preparedness and satisfaction on 5-point Likert-type items. All measures were compared to the 2019 in-person ACSBC cohort.

Data Analysis

Stata 16.1 (StataCorp LP) was used for analysis. We dichotomized preparedness and satisfaction scores, defining ratings of “4” or “5” as “prepared” or “satisfied.” As previously described,5 we created a composite score averaging both checklist scores for each intern. We normalized this score by rater to a z score (mean, 0; SD, 1) to account for rater differences. “Poor” and “outstanding” performances were defined as z scores more than 1 SD below and more than 1 SD above the mean, respectively. Fisher’s exact test was used to compare proportions, and the Pearson correlation test was used to correlate z scores. The University of Chicago Institutional Review Board granted exemption.
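The scoring steps in this section can be illustrated with a minimal Python sketch. It is not the Stata code used in the study. It assumes each checklist score is expressed as the proportion of items completed, uses the population SD within each rater, and introduces hypothetical names (`z_scores_by_rater`, `classify`, and the label "average" for the middle band) that do not appear in the study.

```python
from collections import defaultdict
from statistics import mean, pstdev


def dichotomize_likert(rating, threshold=4):
    """Return True when a 5-point rating is 4 or 5 ("prepared"/"satisfied")."""
    return rating >= threshold


def z_scores_by_rater(records):
    """Normalize composite scores within each rater.

    records: iterable of (intern_id, rater_id, consult_score, handoff_score),
    where each score is assumed to be a proportion of checklist items completed.
    Returns {intern_id: z score relative to that rater's mean and SD}.
    """
    by_rater = defaultdict(list)
    for intern, rater, consult, handoff in records:
        composite = (consult + handoff) / 2  # average of the two checklist scores
        by_rater[rater].append((intern, composite))

    z = {}
    for rows in by_rater.values():
        scores = [score for _, score in rows]
        mu = mean(scores)
        sd = pstdev(scores)  # assumption: population SD; the study does not specify
        for intern, score in rows:
            # A rater who gave identical scores has SD 0; treat those as z = 0.
            z[intern] = 0.0 if sd == 0 else (score - mu) / sd
    return z


def classify(z):
    """Label a z score; "average" is a hypothetical name for the middle band."""
    if z > 1:
        return "outstanding"
    if z < -1:
        return "poor"
    return "average"


# Hypothetical data: three interns rated by the same facilitator.
records = [
    ("intern_1", "rater_A", 0.50, 0.50),
    ("intern_2", "rater_A", 0.75, 0.75),
    ("intern_3", "rater_A", 1.00, 1.00),
]
z = z_scores_by_rater(records)
labels = {intern: classify(score) for intern, score in z.items()}
```

Normalizing within rater means an intern is compared only with others scored by the same facilitator, so a consistently strict or lenient rater does not by itself push interns into the "poor" or "outstanding" bands.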

RESULTS

All 130 entering interns participated in TBC. Internal medicine (IM) was the largest specialty (n = 37), followed by pediatrics (n = 22), emergency medicine (EM) (n = 16), and anesthesiology (n = 12). The remaining 9 programs ranged from 2 to 10 interns per program. The 128 interns in ACSBC were similar, including 40 IM, 23 pediatrics, 14 EM, and 12 anesthesia interns, with 2 to 10 interns in remaining programs.

TBC skills performance evaluations were compared to ACSBC (Table 1). The TBC intern cohort’s consultation performance was the same as or better than the ACSBC intern cohort’s. For handoffs, TBC interns completed significantly fewer checklist items compared to ACSBC. Performance in the two exercises was moderately correlated (r = 0.39, P < .05). For z scores, 14 TBC interns (10.8%) had “outstanding” and 15 (11.6%) had “poor” performances, compared to ACSBC interns with 7 (5.5%) “outstanding” and 10 (7.8%) “poor” performances (P = .15).

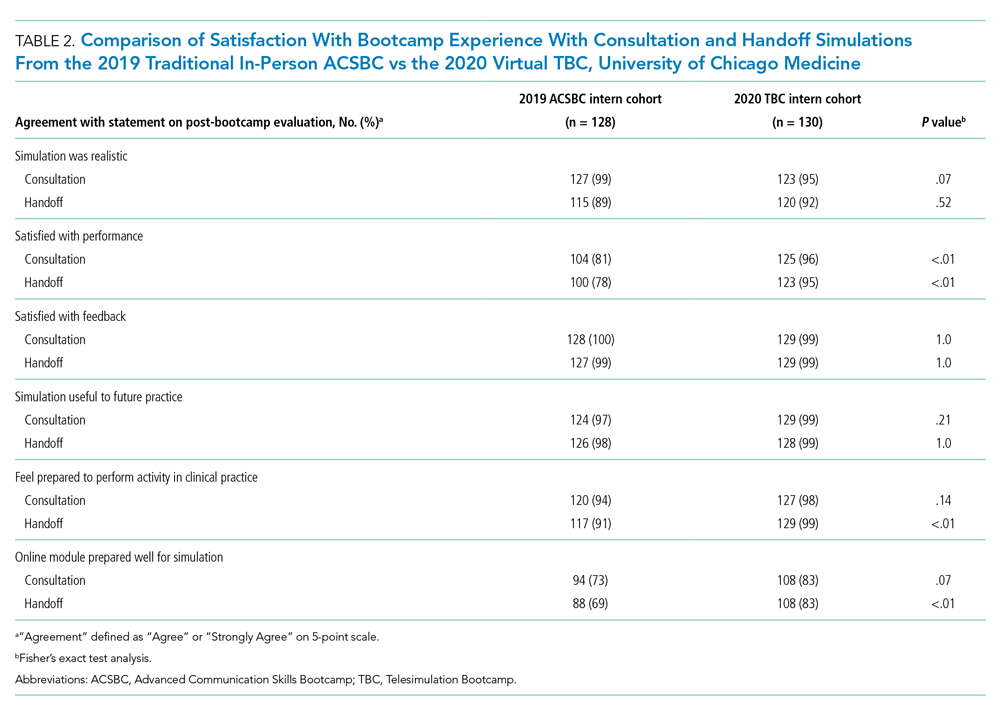

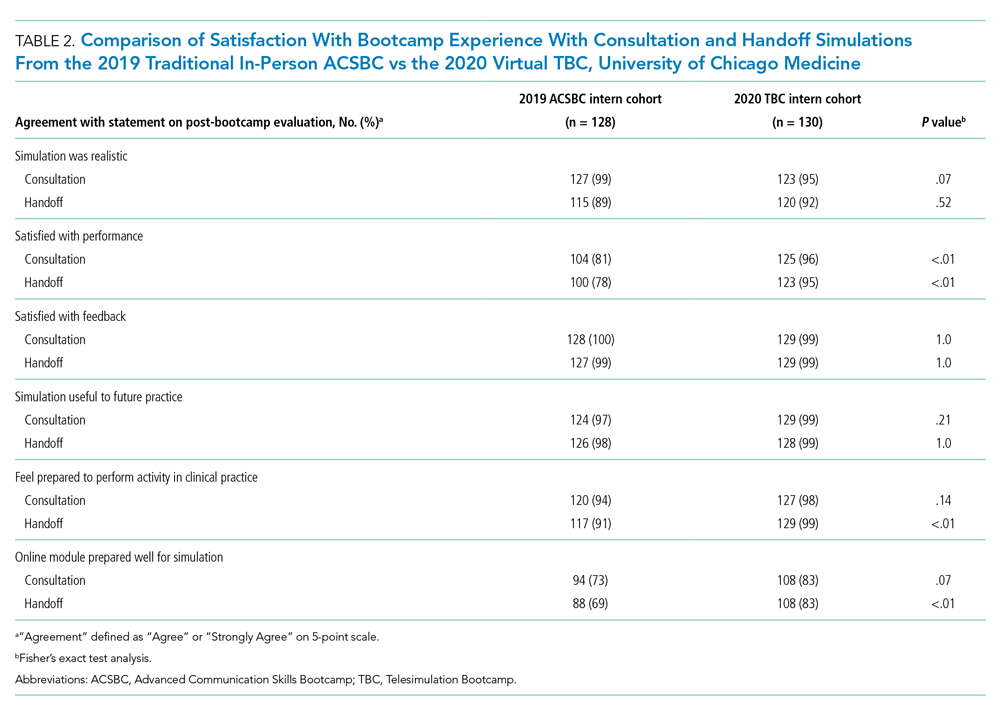

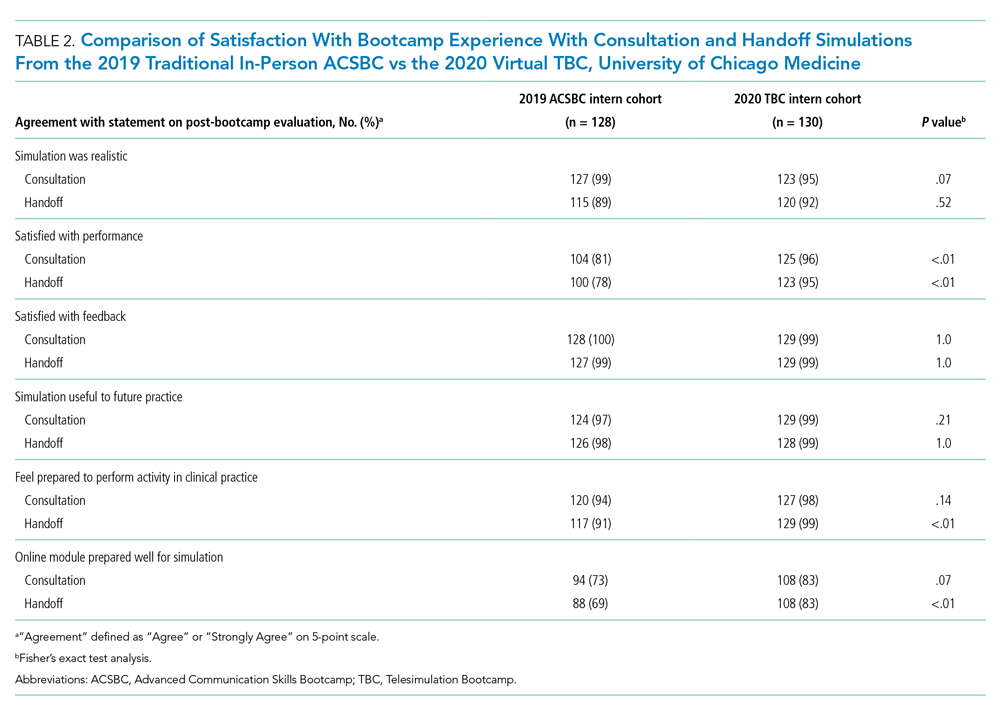

All 130 interns (100%) completed the immediate post-TBC survey. Overall, TBC satisfaction was comparable to ACSBC, and significantly improved for satisfaction with performance (Table 2). Compared to ACSBC, TBC interns felt more prepared for simulation and handoff clinical practice. Nearly all interns would recommend TBC (99% vs 96% of ACSBC interns, P = .28), and 99% felt the software used for the simulation ran smoothly.

The 8-week post-TBC survey had a response rate of 88% (115/130); 69% of interns reported conducting more effective handoffs due to TBC, and 79% felt confident in handoff skills. Similarly, 73% felt more effective at calling consultations, and 75% reported retained knowledge of consultation frameworks taught during TBC. Additionally, 71% of interns reported that TBC helped identify areas for self-directed improvement. There were no significant differences in 8-week postsurvey ratings between ACSBC and TBC.

DISCUSSION

In converting the advanced communication skills bootcamp from an in-person to a virtual format, telesimulation was well-received by interns and rated similarly to in-person bootcamp in most respects. Nearly all interns agreed the experience was realistic, provided useful feedback, and prepared them for clinical practice. Although we shifted to virtual out of necessity, our results demonstrate a high-quality, streamlined bootcamp experience that was less labor-intensive for interns, staff, and faculty. Telesimulation may represent an effective strategy beyond the COVID-19 pandemic to increase ease of administration and scale the use of bootcamps in supporting advanced clinical skill training for hospital-based practice.

TBC interns felt better prepared for simulation and more satisfied with their performance than ACSBC interns, potentially due to the revised format. The mock cases were adapted and consolidated for TBC, such that the handoff and consultation simulations shared a common case, whereas previously they were separate. Thus, intern preparation for TBC required familiarity with fewer overall cases. Ultimately, TBC maintained the quality of training but required review of less information.

In comparing performance, TBC interns were rated as well or better during consultation simulation compared to ACSBC, but handoffs were rated lower. This was likely due to the change in the handoff checklist from a dichotomous to a three-level rating scale. This change was made after receiving feedback from ACSBC TFs that a rating scale allowing for more nuance was needed to provide adequate feedback to interns. Although we defined handoff item completion for TBC interns as being rated “outstanding,” if the top two ratings, “outstanding” and “satisfactory,” are combined to reflect completion, TBC handoff performance is equivalent to or better than ACSBC. TF recruitment additionally differed between TBC and ACSBC cohorts. In ACSBC, resident physicians served as handoff TFs, whereas only faculty were recruited for TBC. Faculty were primarily clinically active hospitalists, whose expertise in handoffs may have resulted in more stringent performance ratings, contributing to differences seen.
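How much the definition of "completion" matters can be seen in a small Python sketch of the counting rule described above. The ratings below are hypothetical values for a single intern, not study data, and the helper name `items_completed` is illustrative only.

```python
# Two ways to collapse the three-level scale into "completed" vs "not completed".
STRICT_COMPLETION = {"outstanding"}                   # definition used for TBC
LENIENT_COMPLETION = {"outstanding", "satisfactory"}  # top two ratings combined


def items_completed(ratings, completing_levels):
    """Count checklist items whose rating falls in the set that counts as completed."""
    return sum(1 for rating in ratings if rating in completing_levels)


# Hypothetical ratings for one intern across six handoff checklist items.
ratings = [
    "outstanding",
    "satisfactory",
    "satisfactory",
    "outstanding",
    "needs improvement",
    "satisfactory",
]

strict_count = items_completed(ratings, STRICT_COMPLETION)    # 2 items
lenient_count = items_completed(ratings, LENIENT_COMPLETION)  # 5 items
```

In this made-up case the same performance counts as 2 completed items under the strict rule and 5 under the lenient rule, which is the kind of shift that could account for lower handoff scores when a dichotomous checklist is replaced by a three-level scale.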

Hospitalist groups require clinicians to be immediately proficient in essential communication skills like consultation and handoffs, potentially requiring just-in-time training and feedback for large cohorts.12 Bootcamps can meet this need but require participation and time investment by many faculty members, staff, and administrators.5,8 Combining TBC into one virtual handoff/consultation simulation required recruitment and training of 50% fewer TFs and reduced administrative burden. ACSBC consultation simulations were high-fidelity but resource-heavy, requiring two-way telephones with reliable connections and separate spaces for simulation and feedback.5 Conversely, TBC only required consultations to be “called” via audio-only Zoom® discussion, after which both individuals turned on cameras for feedback. The slight decrease in perceived fidelity was certainly outweighed by ease of administration. TBC’s more efficient and less labor-intensive format is an appealing strategy for hospitalist groups looking to train clinicians, including those operating across multiple or geographically distant sites.

Our study has limitations. It occurred with one group of learners at a single site with consistent consultation and handoff communication practices, which may not be the case elsewhere. Our comparison group was a separate cohort, and groups were not randomized; thus, differences seen may reflect inherent dissimilarities in these groups. Changes to the handoff checklist rating scale between 2019 and 2020 additionally may limit the direct comparison of handoff performance between cohorts. While overall fewer resources were required, TBC implementation did require time and institutional support, along with full virtual platform capability without user or time limitations. Our preparedness outcomes were self-reported without direct measurement of clinical performance, which is an area for future work.

We describe a feasible implementation of an adapted telesimulation communication bootcamp, with comparison to a previous in-person cohort’s skills performance and satisfaction. While COVID-19 has made the future of in-person training activities uncertain, it also served as a catalyst for educational innovation that may be sustained beyond the pandemic. Although developed out of necessity, the telesimulation communication bootcamp was effective and well-received. Telesimulation represents an opportunity for hospital medicine groups to implement advanced communication skills training and assessment in a more efficient, flexible, and potentially preferable way, even after the pandemic ends.

Acknowledgments

The authors thank the staff at the University of Chicago Office of Graduate Medical Education and the UChicago Medicine Simulation Center.

1. Sutcliffe KM, Lewton E, Rosenthal MM. Communication failures: an insidious contributor to medical mishaps. Acad Med. 2004;79(2):186-194. https://doi.org/10.1097/00001888-200402000-00019

2. Inadequate hand-off communication. Sentinel Event Alert. 2017;(58):1-6.

3. Horwitz LI, Meredith T, Schuur JD, Shah NR, Kulkarni RG, Jenq JY. Dropping the baton: a qualitative analysis of failures during the transition from emergency department to inpatient care. Ann Emerg Med. 2009;53(6):701-710. https://doi.org/10.1016/j.annemergmed.2008.05.007

4. Jagsi R, Kitch BT, Weinstein DF, Campbell EG, Hutter M, Weissman JS. Residents report on adverse events and their causes. Arch Intern Med. 2005;165(22):2607-2613. https://doi.org/10.1001/archinte.165.22.2607

5. Martin SK, Carter K, Hellerman N, et al. The consultation observed simulated clinical experience: training, assessment, and feedback for incoming interns on requesting consultations. Acad Med. 2018;93(12):1814-1820. https://doi.org/10.1097/ACM.0000000000002337

6. Lopez MA, Campbell J. Developing a communication curriculum for primary and consulting services. Med Educ Online. 2020;25(1):1794341. https://doi.org/10.1080/10872981.2020

7. Cohen ER, Barsuk JH, Moazed F, et al. Making July safer: simulation-based mastery learning during intern bootcamp. Acad Med. 2013;88(2):233-239. https://doi.org/10.1097/ACM.0b013e31827bfc0a

8. Gaffney S, Farnan JM, Hirsch K, McGinty M, Arora VM. The Modified, Multi-patient Observed Simulated Handoff Experience (M-OSHE): assessment and feedback for entering residents on handoff performance. J Gen Intern Med. 2016;31(4):438-441. https://doi.org/10.1007/s11606-016-3591-8

9. Woolliscroft J. Innovation in response to the COVID-19 pandemic crisis. Acad Med. 2020;95(8):1140-1142. https://doi.org/10.1097/ACM.0000000000003402

10. Anderson ML, Turbow S, Willgerodt MA, Ruhnke G. Education in a crisis: the opportunity of our lives. J Hosp Med. 2020;15(5):287-291. https://doi.org/10.12788/jhm.3431

11. Farr DE, Zeh HJ, Abdelfattah KR. Virtual bootcamps—an emerging solution to the undergraduate medical education-graduate medical education transition. JAMA Surg. 2021;156(3):282-283. https://doi.org/10.1001/jamasurg.2020.6162

12. Hepps JH, Yu CE, Calaman S. Simulation in medical education for the hospitalist: moving beyond the mock code. Pediatr Clin North Am. 2019;66(4):855-866. https://doi.org/10.1016/j.pcl.2019.03.014

Events requiring communication among and within teams are vulnerable points in patient care in hospital medicine, with communication failures representing important contributors to adverse events.1-4 Consultations and handoffs are exceptionally common inpatient practices, yet training in these practices is variable across educational and practice domains.5,6 Advanced inpatient communication-skills training requires an effective, feasible, and scalable format. Simulation-based bootcamps can effectively support clinical skills training, often in procedural domains, and have been increasingly utilized for communication skills.7,8 We previously described the development and implementation of an in-person bootcamp for training and feedback in consultation and handoff communication.5,8

As hospitalist leaders grapple with how to systematically support and assess essential clinical skills, the COVID-19 pandemic has presented another impetus to rethink current processes. The rapid shift to virtual activities met immediate needs of the pandemic, but also inspired creativity in applying new methodologies to improve teaching strategies and implementation long-term.9,10 One such strategy, telesimulation, offers a way to continue simulation-based training limited by the need for physical distancing.10 Furthermore, recent calls to study the efficacy of virtual bootcamp structures have acknowledged potential benefits, even outside of the pandemic.11

The primary objective of this feasibility study was to convert our previously described consultation and handoff bootcamp to a telesimulation bootcamp (TBC), preserving rigorous performance evaluation and opportunities for skills-based feedback. We additionally compared evaluation between virtual and in-person formats to understand the utility of telesimulation for bootcamp-based clinical education moving forward.

METHODS

Setting and Participants

The TBC occurred in June 2020 during the University of Chicago institution-wide graduate medical education (GME) orientation; 130 interns entering 13 residency programs participated. The comparison group was 128 interns who underwent the traditional University of Chicago GME orientation “Advanced Communication Skills Bootcamp” (ACSBC) in 2019.5,8

Program Description

To develop TBC, we adapted observed structured clinical experiences (OSCEs) created for ACSBC. Until 2020, ACSBC included three in-person OSCEs: (1) requesting a consultation; (2) conducting handoffs; and (3) acquiring informed consent. COVID-19 necessitated conversion of ACSBC to virtual in June 2020. For this, we selected the consultation and handoff OSCEs, as these skills require near-universal and immediate application in clinical practice. Additionally, they required only trained facilitators (TFs), whereas informed consent required standardized patients. Hospitalist and emergency medicine faculty were recruited as TFs; 7 of 12 TFs were hospitalists. Each OSCE had two parts: an asynchronous, mandatory training module and a clinical simulation. For TBC, we adapted the simulations, previously separate experiences, into a 20-minute combined handoff/consultation telesimulation using the Zoom® video platform. Interns were paired with one TF who served as both standardized consultant (for one mock case) and handoff receiver (for three mock cases, including the consultation case). TFs rated intern performance and provided feedback.

TBC occurred on June 17 and 18, 2020. Interns were emailed asynchronous modules on June 1, and mock cases and instructions on June 12. When TBC began, GME staff proctors oriented interns in the Zoom® platform. Proctors placed TFs into private breakout rooms into which interns rotated through 20-minute timeslots. Faculty received copies of all TBC materials for review (Appendix 1) and underwent Zoom®-based training 1 to 2 weeks prior.

We evaluated TBC using several methods: (1) consultation and handoff skills performance measured by two validated checklists5,8; (2) survey of intern self-reported preparedness to practice consultations and handoffs; and (3) survey of intern satisfaction. Surveys were administered both immediately post bootcamp (Appendix 2) and 8 weeks into internship (Appendix 3). Skills performance checklists were a 12-item consultation checklist5 and 6-item handoff checklist.8 The handoff checklist was modified to remove activities impossible to assess virtually (ie, orienting sign-outs in a shared space) and to add a three-level rating scale of “outstanding,” “satisfactory,” and “needs improvement.” This was done based on feedback from ACSBC to allow more nuanced feedback for interns. A rating of “outstanding” was used to define successful completion of the item (Appendix 1). Interns rated preparedness and satisfaction on 5-point Likert-type items. All measures were compared to the 2019 in-person ACSBC cohort.

Data Analysis

Stata 16.1 (StataCorp LP) was used for analysis. We dichotomized preparedness and satisfaction scores, defining ratings of “4” or “5” as “prepared” or “satisfied.” As previously described,5 we created a composite score averaging both checklist scores for each intern. We normalized this score by rater to a z score (mean, 0; SD, 1) to account for rater differences. “Poor” and “outstanding” performances were defined as z scores below and above 1 SD, respectively. Fisher’s exact test was used to compare proportions, and Pearson correlation test to correlate z scores. The University of Chicago Institutional Review Board granted exemption.

RESULTS

All 130 entering interns participated in TBC. Internal medicine (IM) was the largest specialty (n = 37), followed by pediatrics (n = 22), emergency medicine (EM) (n = 16), and anesthesiology (n = 12). The remaining 9 programs ranged from 2 to 10 interns per program. The 128 interns in ACSBC were similar, including 40 IM, 23 pediatrics, 14 EM, and 12 anesthesia interns, with 2 to 10 interns in remaining programs.

TBC skills performance evaluations were compared to ACSBC (Table 1). The TBC intern cohort’s consultation performance was the same or better than the ACSBC intern cohort’s. For handoffs, TBC interns completed significantly fewer checklist items compared to ACSBC. Performance in each exercise was moderately correlated (r = 0.39, P < .05). For z scores, 14 TBC interns (10.8%) had “outstanding” and 15 (11.6%) had “poor” performances, compared to ACSBC interns with 7 (5.5%) “outstanding” and 10 (7.81%) “poor” performances (P = .15).

All 130 interns (100%) completed the immediate post-TBC survey. Overall, TBC satisfaction was comparable to ACSBC, and significantly improved for satisfaction with performance (Table 2). Compared to ACSBC, TBC interns felt more prepared for simulation and handoff clinical practice. Nearly all interns would recommend TBC (99% vs 96% of ACSBC interns, P = 0.28), and 99% felt the software used for the simulation ran smoothly.

The 8-week post-TBC survey had a response rate of 88% (115/130); 69% of interns reported conducting more effective handoffs due to TBC, and 79% felt confident in handoff skills. Similarly, 73% felt more effective at calling consultations, and 75% reported retained knowledge of consultation frameworks taught during TBC. Additionally, 71% of interns reported that TBC helped identify areas for self-directed improvement. There were no significant differences in 8-week postsurvey ratings between ACSBC and TBC.

DISCUSSION

In converting the advanced communication skills bootcamp from an in-person to a virtual format, telesimulation was well-received by interns and rated similarly to in-person bootcamp in most respects. Nearly all interns agreed the experience was realistic, provided useful feedback, and prepared them for clinical practice. Although we shifted to virtual out of necessity, our results demonstrate a high-quality, streamlined bootcamp experience that was less labor-intensive for interns, staff, and faculty. Telesimulation may represent an effective strategy beyond the COVID-19 pandemic to increase ease of administration and scale the use of bootcamps in supporting advanced clinical skill training for hospital-based practice.

TBC interns felt better prepared for simulation and more satisfied with their performance than ACSBC interns, potentially due to the revised format. The mock cases were adapted and consolidated for TBC, such that the handoff and consultation simulations shared a common case, whereas previously they were separate. Thus, intern preparation for TBC required familiarity with fewer overall cases. Ultimately, TBC maintained the quality of training but required review of less information.

In comparing performance, TBC interns were rated as well or better during consultation simulation compared to ASCBC, but handoffs were rated lower. This was likely due to the change in the handoff checklist from a dichotomous to a three-level rating scale. This change was made after receiving feedback from ACSBC TFs that a rating scale allowing for more nuance was needed to provide adequate feedback to interns. Although we defined handoff item completion for TBC interns as being rated “outstanding,” if the top two rankings, “outstanding” and “satisfactory,” are dichotomized to reflect completion, TBC handoff performance is equivalent or better than ACSBC. TF recruitment additionally differed between TBC and ACSBC cohorts. In ACSBC, resident physicians served as handoff TFs, whereas only faculty were recruited for TBC. Faculty were primarily clinically active hospitalists, whose expertise in handoffs may resulted in more stringent performance ratings, contributing to differences seen.

Hospitalist groups require clinicians to be immediately proficient in essential communication skills like consultation and handoffs, potentially requiring just-in-time training and feedback for large cohorts.12 Bootcamps can meet this need but require participation and time investment by many faculty members, staff, and administrators.5,8 Combining TBC into one virtual handoff/consultation simulation required recruitment and training of 50% fewer TFs and reduced administrative burden. ACSBC consultation simulations were high-fidelity but resource-heavy, requiring reliable two-way telephones with reliable connections and separate spaces for simulation and feedback.5 Conversely, TBC only required consultations to be “called” via audio-only Zoom® discussion, then both individuals turned on cameras for feedback. The slight decrease in perceived fidelity was certainly outweighed by ease of administration. TBC’s more efficient and less labor-intensive format is an appealing strategy for hospitalist groups looking to train up clinicians, including those operating across multiple or geographically distant sites.

Our study has limitations. It occurred with one group of learners at a single site with consistent consultation and handoff communication practices, which may not be the case elsewhere. Our comparison group was a separate cohort, and groups were not randomized; thus, differences seen may reflect inherent dissimilarities in these groups. Changes to the handoff checklist rating scale between 2019 and 2020 additionally may limit the direct comparison of handoff performance between cohorts. While overall fewer resources were required, TBC implementation did require time and institutional support, along with full virtual platform capability without user or time limitations. Our preparedness outcomes were self-reported without direct measurement of clinical performance, which is an area for future work.

We describe a feasible implementation of an adapted telesimulation communication bootcamp, with comparison to a previous in-person cohort’s skills performance and satisfaction. While COVID-19 has made the future of in-person training activities uncertain, it also served as a catalyst for educational innovation that may be sustained beyond the pandemic. Although developed out of necessity, the telesimulation communication bootcamp was effective and well-received. Telesimulation represents an opportunity for hospital medicine groups to implement advanced communication skills training and assessment in a more efficient, flexible, and potentially preferable way, even after the pandemic ends.

Acknowledgments

The authors thank the staff at the University of Chicago Office of Graduate Medical Education and the UChicago Medicine Simulation Center.

Events requiring communication among and within teams are vulnerable points in patient care in hospital medicine, with communication failures representing important contributors to adverse events.1-4 Consultations and handoffs are exceptionally common inpatient practices, yet training in these practices is variable across educational and practice domains.5,6 Advanced inpatient communication-skills training requires an effective, feasible, and scalable format. Simulation-based bootcamps can effectively support clinical skills training, often in procedural domains, and have been increasingly utilized for communication skills.7,8 We previously described the development and implementation of an in-person bootcamp for training and feedback in consultation and handoff communication.5,8

As hospitalist leaders grapple with how to systematically support and assess essential clinical skills, the COVID-19 pandemic has presented another impetus to rethink current processes. The rapid shift to virtual activities met immediate needs of the pandemic, but also inspired creativity in applying new methodologies to improve teaching strategies and implementation long-term.9,10 One such strategy, telesimulation, offers a way to continue simulation-based training limited by the need for physical distancing.10 Furthermore, recent calls to study the efficacy of virtual bootcamp structures have acknowledged potential benefits, even outside of the pandemic.11

The primary objective of this feasibility study was to convert our previously described consultation and handoff bootcamp to a telesimulation bootcamp (TBC), preserving rigorous performance evaluation and opportunities for skills-based feedback. We additionally compared evaluation between virtual and in-person formats to understand the utility of telesimulation for bootcamp-based clinical education moving forward.

METHODS

Setting and Participants

The TBC occurred in June 2020 during the University of Chicago institution-wide graduate medical education (GME) orientation; 130 interns entering 13 residency programs participated. The comparison group was 128 interns who underwent the traditional University of Chicago GME orientation “Advanced Communication Skills Bootcamp” (ACSBC) in 2019.5,8

Program Description

To develop TBC, we adapted observed structured clinical experiences (OSCEs) created for ACSBC. Until 2020, ACSBC included three in-person OSCEs: (1) requesting a consultation; (2) conducting handoffs; and (3) acquiring informed consent. COVID-19 necessitated conversion of ACSBC to virtual in June 2020. For this, we selected the consultation and handoff OSCEs, as these skills require near-universal and immediate application in clinical practice. Additionally, they required only trained facilitators (TFs), whereas informed consent required standardized patients. Hospitalist and emergency medicine faculty were recruited as TFs; 7 of 12 TFs were hospitalists. Each OSCE had two parts: an asynchronous, mandatory training module and a clinical simulation. For TBC, we adapted the simulations, previously separate experiences, into a 20-minute combined handoff/consultation telesimulation using the Zoom® video platform. Interns were paired with one TF who served as both standardized consultant (for one mock case) and handoff receiver (for three mock cases, including the consultation case). TFs rated intern performance and provided feedback.

TBC occurred on June 17 and 18, 2020. Interns were emailed asynchronous modules on June 1, and mock cases and instructions on June 12. When TBC began, GME staff proctors oriented interns in the Zoom® platform. Proctors placed TFs into private breakout rooms into which interns rotated through 20-minute timeslots. Faculty received copies of all TBC materials for review (Appendix 1) and underwent Zoom®-based training 1 to 2 weeks prior.

We evaluated TBC using several methods: (1) consultation and handoff skills performance measured by two validated checklists5,8; (2) survey of intern self-reported preparedness to practice consultations and handoffs; and (3) survey of intern satisfaction. Surveys were administered both immediately post bootcamp (Appendix 2) and 8 weeks into internship (Appendix 3). Skills performance checklists were a 12-item consultation checklist5 and 6-item handoff checklist.8 The handoff checklist was modified to remove activities impossible to assess virtually (ie, orienting sign-outs in a shared space) and to add a three-level rating scale of “outstanding,” “satisfactory,” and “needs improvement.” This was done based on feedback from ACSBC to allow more nuanced feedback for interns. A rating of “outstanding” was used to define successful completion of the item (Appendix 1). Interns rated preparedness and satisfaction on 5-point Likert-type items. All measures were compared to the 2019 in-person ACSBC cohort.

Data Analysis

Stata 16.1 (StataCorp LP) was used for analysis. We dichotomized preparedness and satisfaction scores, defining ratings of “4” or “5” as “prepared” or “satisfied.” As previously described,5 we created a composite score averaging both checklist scores for each intern. We normalized this score by rater to a z score (mean, 0; SD, 1) to account for rater differences. “Poor” and “outstanding” performances were defined as z scores more than 1 SD below and more than 1 SD above the mean, respectively. Fisher’s exact test was used to compare proportions, and Pearson correlation was used to correlate z scores. The University of Chicago Institutional Review Board granted exemption.
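The rater normalization described above can be sketched in a few lines. This is a minimal illustration rather than the authors' Stata code: the function names, the record layout, the use of checklist scores expressed as fractions of items completed, and the choice of the sample SD are all assumptions made for the example.

```python
from collections import defaultdict
from statistics import mean, stdev


def z_scores_by_rater(records):
    """Return {intern_id: z score}, normalizing composite scores within each rater.

    records: iterable of (intern_id, rater_id, consult_score, handoff_score),
    where each score is assumed to be the fraction of checklist items completed.
    """
    by_rater = defaultdict(list)
    for intern, rater, consult, handoff in records:
        composite = (consult + handoff) / 2  # average of the two checklist scores
        by_rater[rater].append((intern, composite))

    z = {}
    for items in by_rater.values():
        scores = [score for _, score in items]
        mu = mean(scores)
        # Assumption: sample SD; a rater with one intern (or no spread) yields z = 0.
        sd = stdev(scores) if len(scores) > 1 else 0.0
        for intern, score in items:
            z[intern] = (score - mu) / sd if sd > 0 else 0.0
    return z


def classify(z):
    """Label a z score using the 1-SD cutoffs described in the text."""
    if z < -1:
        return "poor"
    if z > 1:
        return "outstanding"
    return "typical"  # hypothetical label for everything in between
```

Normalizing within rater means an intern is compared only with peers scored by the same facilitator, so a consistently strict or lenient rater does not shift an intern's classification.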

RESULTS

All 130 entering interns participated in TBC. Internal medicine (IM) was the largest specialty (n = 37), followed by pediatrics (n = 22), emergency medicine (EM) (n = 16), and anesthesiology (n = 12). The remaining 9 programs ranged from 2 to 10 interns per program. The 128 interns in ACSBC were similar, including 40 IM, 23 pediatrics, 14 EM, and 12 anesthesia interns, with 2 to 10 interns in remaining programs.

TBC skills performance evaluations were compared to ACSBC (Table 1). The TBC intern cohort’s consultation performance was the same as or better than the ACSBC intern cohort’s. For handoffs, TBC interns completed significantly fewer checklist items than ACSBC interns. Performance in the two exercises was moderately correlated (r = 0.39, P < .05). For z scores, 14 TBC interns (10.8%) had “outstanding” and 15 (11.6%) had “poor” performances, compared to ACSBC interns with 7 (5.5%) “outstanding” and 10 (7.8%) “poor” performances (P = .15).
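For readers unfamiliar with Fisher's exact test, the sketch below implements the two-sided version for a 2×2 table from the hypergeometric distribution. The function name is hypothetical, and the 2×2 layout (outstanding vs not outstanding, using the counts 14 of 130 and 7 of 128 reported above) is an assumption chosen for illustration. The reported P = .15 may come from a different tabulation, so this output is not expected to reproduce it.

```python
from math import comb


def fisher_exact_two_sided(a, b, c, d):
    """Two-sided Fisher's exact P value for the 2x2 table [[a, b], [c, d]].

    Sums the hypergeometric probabilities of every table with the same
    margins that is no more likely than the observed table.
    """
    row1, row2, col1 = a + b, c + d, a + c
    total = row1 + row2

    def prob(x):
        # Probability that the top-left cell equals x given fixed margins.
        return comb(row1, x) * comb(row2, col1 - x) / comb(total, col1)

    p_obs = prob(a)
    lo, hi = max(0, col1 - row2), min(row1, col1)
    # Small relative tolerance guards against floating-point ties.
    return sum(p for p in (prob(x) for x in range(lo, hi + 1))
               if p <= p_obs * (1 + 1e-9))


# Illustrative layout only: "outstanding" vs all other interns in each cohort.
p_value = fisher_exact_two_sided(14, 130 - 14, 7, 128 - 7)
print(f"Two-sided P = {p_value:.3f}")
```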

All 130 interns (100%) completed the immediate post-TBC survey. Overall, TBC satisfaction was comparable to ACSBC, and significantly improved for satisfaction with performance (Table 2). Compared to ACSBC, TBC interns felt more prepared for simulation and handoff clinical practice. Nearly all interns would recommend TBC (99% vs 96% of ACSBC interns, P = .28), and 99% felt the software used for the simulation ran smoothly.

The 8-week post-TBC survey had a response rate of 88% (115/130); 69% of interns reported conducting more effective handoffs due to TBC, and 79% felt confident in handoff skills. Similarly, 73% felt more effective at calling consultations, and 75% reported retained knowledge of consultation frameworks taught during TBC. Additionally, 71% of interns reported that TBC helped identify areas for self-directed improvement. There were no significant differences in 8-week postsurvey ratings between ACSBC and TBC.

DISCUSSION

In converting the advanced communication skills bootcamp from an in-person to a virtual format, telesimulation was well-received by interns and rated similarly to in-person bootcamp in most respects. Nearly all interns agreed the experience was realistic, provided useful feedback, and prepared them for clinical practice. Although we shifted to virtual out of necessity, our results demonstrate a high-quality, streamlined bootcamp experience that was less labor-intensive for interns, staff, and faculty. Telesimulation may represent an effective strategy beyond the COVID-19 pandemic to increase ease of administration and scale the use of bootcamps in supporting advanced clinical skill training for hospital-based practice.

TBC interns felt better prepared for simulation and more satisfied with their performance than ACSBC interns, potentially due to the revised format. The mock cases were adapted and consolidated for TBC, such that the handoff and consultation simulations shared a common case, whereas previously they were separate. Thus, intern preparation for TBC required familiarity with fewer overall cases. Ultimately, TBC maintained the quality of training but required review of less information.

In comparing performance, TBC interns were rated as well or better during consultation simulation compared to ACSBC, but handoffs were rated lower. This was likely due to the change in the handoff checklist from a dichotomous to a three-level rating scale. This change was made after receiving feedback from ACSBC TFs that a rating scale allowing for more nuance was needed to provide adequate feedback to interns. Although we defined handoff item completion for TBC interns as being rated “outstanding,” if the top two ratings, “outstanding” and “satisfactory,” are dichotomized to reflect completion, TBC handoff performance is equivalent to or better than ACSBC. TF recruitment additionally differed between TBC and ACSBC cohorts. In ACSBC, resident physicians served as handoff TFs, whereas only faculty were recruited for TBC. Faculty were primarily clinically active hospitalists, whose expertise in handoffs may have resulted in more stringent performance ratings, contributing to differences seen.

Hospitalist groups require clinicians to be immediately proficient in essential communication skills like consultation and handoffs, potentially requiring just-in-time training and feedback for large cohorts.12 Bootcamps can meet this need but require participation and time investment by many faculty members, staff, and administrators.5,8 Combining TBC into one virtual handoff/consultation simulation required recruitment and training of 50% fewer TFs and reduced administrative burden. ACSBC consultation simulations were high-fidelity but resource-heavy, requiring two-way telephones with reliable connections and separate spaces for simulation and feedback.5 Conversely, TBC only required consultations to be “called” via audio-only Zoom® discussion, after which both individuals turned on cameras for feedback. The slight decrease in perceived fidelity was certainly outweighed by ease of administration. TBC’s more efficient and less labor-intensive format is an appealing strategy for hospitalist groups looking to train clinicians, including those operating across multiple or geographically distant sites.

Our study has limitations. It occurred with one group of learners at a single site with consistent consultation and handoff communication practices, which may not be the case elsewhere. Our comparison group was a separate cohort, and groups were not randomized; thus, differences seen may reflect inherent dissimilarities in these groups. Changes to the handoff checklist rating scale between 2019 and 2020 additionally may limit the direct comparison of handoff performance between cohorts. While overall fewer resources were required, TBC implementation did require time and institutional support, along with full virtual platform capability without user or time limitations. Our preparedness outcomes were self-reported without direct measurement of clinical performance, which is an area for future work.

We describe a feasible implementation of an adapted telesimulation communication bootcamp, with comparison to a previous in-person cohort’s skills performance and satisfaction. While COVID-19 has made the future of in-person training activities uncertain, it also served as a catalyst for educational innovation that may be sustained beyond the pandemic. Although developed out of necessity, the telesimulation communication bootcamp was effective and well-received. Telesimulation represents an opportunity for hospital medicine groups to implement advanced communication skills training and assessment in a more efficient, flexible, and potentially preferable way, even after the pandemic ends.

Acknowledgments

The authors thank the staff at the University of Chicago Office of Graduate Medical Education and the UChicago Medicine Simulation Center.

1. Sutcliffe KM, Lewton E, Rosenthal MM. Communication failures: an insidious contributor to medical mishaps. Acad Med. 2004;79(2):186-194. https://doi.org/10.1097/00001888-200402000-00019

2. Inadequate hand-off communication. Sentinel Event Alert. 2017;(58):1-6.

3. Horwitz LI, Meredith T, Schuur JD, Shah NR, Kulkarni RG, Jenq JY. Dropping the baton: a qualitative analysis of failures during the transition from emergency department to inpatient care. Ann Emerg Med. 2009;53(6):701-710. https://doi.org/10.1016/j.annemergmed.2008.05.007

4. Jagsi R, Kitch BT, Weinstein DF, Campbell EG, Hutter M, Weissman JS. Residents report on adverse events and their causes. Arch Intern Med. 2005;165(22):2607-2613. https://doi.org/10.1001/archinte.165.22.2607

5. Martin SK, Carter K, Hellerman N, et al. The consultation observed simulated clinical experience: training, assessment, and feedback for incoming interns on requesting consultations. Acad Med. 2018;93(12):1814-1820. https://doi.org/10.1097/ACM.0000000000002337

6. Lopez MA, Campbell J. Developing a communication curriculum for primary and consulting services. Med Educ Online. 2020;25(1):1794341. https://doi.org/10.1080/10872981.2020

7. Cohen ER, Barsuk JH, Moazed F, et al. Making July safer: simulation-based mastery learning during intern bootcamp. Acad Med. 2013;88(2):233-239. https://doi.org/10.1097/ACM.0b013e31827bfc0a

8. Gaffney S, Farnan JM, Hirsch K, McGinty M, Arora VM. The Modified, Multi-patient Observed Simulated Handoff Experience (M-OSHE): assessment and feedback for entering residents on handoff performance. J Gen Intern Med. 2016;31(4):438-441. https://doi.org/10.1007/s11606-016-3591-8

9. Woolliscroft J. Innovation in response to the COVID-19 pandemic crisis. Acad Med. 2020;95(8):1140-1142. https://doi.org/10.1097/ACM.0000000000003402

10. Anderson ML, Turbow S, Willgerodt MA, Ruhnke G. Education in a crisis: the opportunity of our lives. J Hosp Med. 2020;15(5):287-291. https://doi.org/10.12788/jhm.3431

11. Farr DE, Zeh HJ, Abdelfattah KR. Virtual bootcamps—an emerging solution to the undergraduate medical education-graduate medical education transition. JAMA Surg. 2021;156(3):282-283. https://doi.org/10.1001/jamasurg.2020.6162

12. Hepps JH, Yu CE, Calaman S. Simulation in medical education for the hospitalist: moving beyond the mock code. Pediatr Clin North Am. 2019;66(4):855-866. https://doi.org/10.1016/j.pcl.2019.03.014

© 2021 Society of Hospital Medicine

Describing Variability of Inpatient Consultation Practices: Physician, Patient, and Admission Factors

Inpatient consultation is an extremely common practice with the potential to improve patient outcomes significantly.1-3 However, variability in consultation practices may be risky for patients. In addition to underuse when the benefit is clear, the overuse of consultation may lead to additional testing and therapies, increased length of stay (LOS) and costs, conflicting recommendations, and opportunities for communication breakdown.

Consultation use is often at the discretion of individual providers. While this decision is frequently driven by patient needs, there is significant variation in consultation practices that is not fully explained by patient factors.1 Prior work has described hospital-level variation1 and has shown that primary care physicians use more consultation than hospitalists.4 However, other factors affecting consultation remain unknown. We sought to explore physician-, patient-, and admission-level factors associated with consultation use on inpatient general medicine services.

METHODS

Study Design

We conducted a retrospective analysis of data from the University of Chicago Hospitalist Project (UCHP). UCHP is a longstanding study of the care of hospitalized patients admitted to the University of Chicago general medicine services, involving both patient data collection and physician experience surveys.5 Data were obtained for enrolled UCHP patients between 2011-2016 from the Center for Research Informatics (CRI). The University of Chicago Institutional Review Board approved this study.

Data Collection

Attendings and patients consented to UCHP participation. Data collection details are described elsewhere.5,6 Data from EpicCare (EpicSystems Corp, Wisconsin) and Centricity Billing (GE Healthcare, Illinois) were obtained via CRI for all encounters of enrolled UCHP patients during the study period (N = 218,591).

Attending Attribution

We determined attending attribution for admissions as follows: the attending author of the first history and physical (H&P) was assigned. If this was unavailable, the attending author of the first progress note (PN) was assigned. For patients admitted by hospitalists on admitting shifts to nonteaching services (ie, service without residents/students), the author of the first PN was assigned if different from H&P. Where available, attribution was corroborated with call schedules.
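The attribution rules above amount to a short decision procedure, sketched here for clarity. The function and parameter names are hypothetical, and the sketch omits the corroboration against call schedules; it is an illustration of the stated rules, not the authors' implementation.

```python
from typing import Optional


def attribute_attending(hp_author: Optional[str],
                        first_pn_author: Optional[str],
                        hospitalist_nonteaching_admit: bool = False) -> Optional[str]:
    """Return the attending attributed to an admission, or None if unattributable.

    hp_author: attending author of the first history and physical (H&P), if any.
    first_pn_author: attending author of the first progress note (PN), if any.
    hospitalist_nonteaching_admit: True when a hospitalist on an admitting shift
        admitted the patient to a nonteaching service.
    """
    # Nonteaching admitting-shift case: prefer the first PN author when it
    # differs from the H&P author.
    if (hospitalist_nonteaching_admit and first_pn_author
            and first_pn_author != hp_author):
        return first_pn_author
    # Default rule: the first H&P author.
    if hp_author:
        return hp_author
    # Fallback: the first PN author. None means the admission would be
    # excluded under the sample criteria.
    return first_pn_author
```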

Sample and Variables

All encounters containing inpatient admissions to the University of Chicago from May 10, 2011 (Electronic Health Record activation date), through December 31, 2016, were considered for inclusion (N = 51,171, Appendix 1). Admissions including only documentation from ancillary services were excluded (eg, encounters for hemodialysis or physical therapy). Admissions were limited to a length of stay (LOS) ≤ 5 days, corresponding to the average US inpatient LOS of 4.6 days,7 to minimize the likelihood of attending handoffs (N = 31,592). If attending attribution was not possible via the above-described methods, the admission was eliminated (N = 3,103; 10.9% of admissions with LOS ≤ 5 days). Finally, the sample was restricted to general medicine service admissions under attendings enrolled in UCHP who completed surveys. After the application of all criteria, 6,153 admissions remained for analysis.

The outcome variable was the number of consultations per admission, determined by counting the unique number of services creating clinical documentation, and subtracting one for the primary team. If the Medical/Surgical intensive care unit (ICU) was a service, then two were subtracted to account for the ICU transfer.
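As a concrete illustration of this outcome definition, the sketch below counts consultations from the list of services that documented on an admission. The function name and the ICU label string are assumptions, as is the floor at zero, which the text does not specify.

```python
def count_consultations(documenting_services, icu_label="Medical/Surgical ICU"):
    """Count consultations for one admission.

    documenting_services: iterable of service names that created clinical
    documentation during the admission (duplicates allowed).
    """
    unique_services = set(documenting_services)
    count = len(unique_services) - 1      # subtract one for the primary team
    if icu_label in unique_services:
        count -= 1                        # ICU present: subtract two in total
    return max(count, 0)                  # assumption: never report a negative count


# Example: primary team plus cardiology and nephrology, with repeated notes.
notes = ["General Medicine", "Cardiology", "Nephrology", "Cardiology"]
print(count_consultations(notes))  # 2
```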

Attending years in practice (ie, years since medical school graduation) and gender were determined from public resources. Practice characteristics were determined from UCHP attending surveys, which address perceptions of workload and satisfaction (Appendix 2).

Patient characteristics (gender, age, Elixhauser Indices) and admission characteristics (LOS, season of admission, payor) were determined from UCHP and CRI data. The Elixhauser Index uses a well-validated system combining the presence/absence of 31 comorbidities to predict mortality and 30-day readmission.8 Elixhauser Indices were calculated using the “Creation of Elixhauser Comorbidity Index Scores 1.0” software.9 For admissions under hospitalist attendings, teaching/nonteaching team was ascertained via internal teaching service calendars.

Analysis

We used descriptive statistics to examine demographic characteristics. The difference between the lowest and highest quartile consultation use was determined via a two-sample t test. Given the multilevel nature of our count data, we used a mixed-effects Poisson model accounting for within-group variation by clustering on attending and patient (3-level random-effects model). The analysis was done using Stata 15 (StataCorp, Texas).

RESULTS

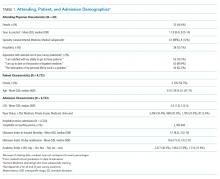

From 2011 to 2016, 14,848 patients and 88 attendings were enrolled in UCHP; 4,772 patients (32%) and 69 attendings (59.4%) had data available and were included. Mean LOS was 3.0 days (SD = 1.3). Table 1 describes the characteristics of attendings, patients, and admissions.

Seventy-six percent of admissions included at least one consultation. Consultation use varied widely, ranging from 0 to 10 per admission (mean = 1.39, median = 1; standard deviation [SD] = 1.17). The number of consultations per admission in the highest quartile of consultation frequency (mean = 3.47, median = 3) was 5.7-fold that of the lowest quartile (mean = 0.613, median = 1; P <.001).

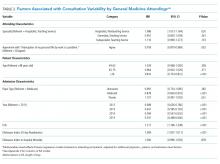

In multivariable regression, physician-, patient-, and admission-level characteristics were associated with the differential use of consultation (Table 2). On teaching services, consultations called by hospitalist vs nonhospitalist generalists did not differ (P =.361). However, hospitalists on nonteaching services called 8.6% more consultations than hospitalists on teaching services (P =.02). Attending agreement with survey item “The interruption of my personal life by work is a problem” was associated with 8.2% fewer consultations per admission (P =.002).

Patients older than 75 years received 19% fewer consultations compared with patients younger than 49 years (P <.001). Compared with Medicare, Medicaid admissions had 12.2% fewer consultations (P <.001), whereas privately insured admissions had 10.7% more (P =.001). The number of consultations per admission decreased every year, with 45.3% fewer consultations in 2015 than in 2011 (P <.001). Consultations increased by 22% for each additional day of LOS (P <.001).
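Percentage differences from a Poisson model are usually read as rate ratios, so per-unit effects compound multiplicatively rather than add. The sketch below shows that conversion. It assumes the reported percentages correspond to incidence rate ratios from a log-link model (for example, 22% more per day as a ratio of 1.22), which the text does not state explicitly, and the function names are hypothetical.

```python
def ratio_from_percent(percent_change):
    """Convert a percentage difference into a rate ratio (22 -> 1.22)."""
    return 1 + percent_change / 100


def percent_from_ratio(rate_ratio):
    """Convert a rate ratio into a percentage difference (0.918 -> about -8.2)."""
    return (rate_ratio - 1) * 100


def compounded_ratio(percent_per_unit, units):
    """Rate ratio implied by a per-unit percentage change over several units."""
    return ratio_from_percent(percent_per_unit) ** units


# Under the stated assumption, two additional days of LOS imply a ratio of
# 1.22 ** 2, or roughly 49% more consultations, not 44%.
two_day_ratio = compounded_ratio(22, 2)
print(f"{percent_from_ratio(two_day_ratio):.1f}% more consultations")
```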

DISCUSSION

Our analysis described several physician-, patient-, and admission-level characteristics associated with the use of inpatient consultation. Our results strengthen prior work demonstrating that patient-level factors alone are insufficient to explain consultation variability.1

Hospitalists on nonteaching services called more consultations, which may reflect a higher workload on these services. Busy hospitalists on nonteaching teams may lack time to delve deeply into clinical problems and require more consultations, especially for work with heavy cognitive loads such as diagnosis. “Outsourcing” tasks when workload increases occurs in other cognitive activities such as teaching.10 The association between work interrupting personal life and fewer consultations may also implicate the effects of time. Attendings who are experiencing work encroaching on their personal lives may be those spending more time with patients and consulting less. This finding merits further study, especially with increasing concern about balancing time spent in meaningful patient care activities with risk of physician burnout.

The higher consultation use on nonteaching services could also indicate that trainee participation modifies consultation use for hospitalists. Teaching service teams with more individual members may allow a greater pool of collective knowledge, decreasing the need for consultation to answer clinical questions.11 Interestingly, there was no difference in consultation use between generalists or subspecialists and hospitalists on teaching services, possibly suggesting a unique effect in hospitalists who vary clinical practice depending on team structure. These differences deserve further investigation, with implications for education and resource utilization.

We were surprised by the finding that consultations decreased each year, despite increasing patient complexity and availability of consultation services. This could be explained by a growing emphasis on shortening LOS in our institution, thus shifting consultative care to outpatient settings. Understanding these effects is critically important with growing evidence that consultation improves patient outcomes because these external pressures could lead to unintended consequences for quality or access to care.

Several findings related to patient factors additionally emerged, including age and insurance status. Although related to medical complexity, these effects persist despite adjustment, which raises the question of whether they contribute to the decision to seek consultation. Older patients received fewer consultations, which could reflect the use of more conservative practice models in the elderly,12 or ageism, which is associated with undertreatment.13 With respect to insurance status, Medicaid coverage was associated with fewer consultations. This finding is consistent with previous work showing the decreased intensity of hospital services used for Medicaid patients.14

Our study has limitations. Our data were from one large urban academic center, which limits generalizability. Although systematic and redundant, attending attribution may have been flawed: incomplete or erroneous documentation could have led to attribution error, and we cannot rule out the possibility of service handoffs. We used a LOS ≤ 5 days to minimize this possibility, but this limits the applicability of our findings to longer admissions. Unsurprisingly, longer LOS correlated with the increased use of consultation even within our restricted sample, and future work should examine the effects of prolonged LOS. As a retrospective analysis, unmeasured confounders due to our limited adjustment will likely explain some findings, although we took steps to address this in our statistical design. Finally, we could not measure patient outcomes and, therefore, cannot determine the value of more or fewer consultations for specific patients or illnesses. Positive and negative outcomes of increased consultation are described, and understanding the impact of consultation is critical for further study.2,3

CONCLUSION

We found that the use of consultation on general medicine services varies widely between admissions, with large differences between the highest and lowest frequencies of use. This variation can be partially explained by several physician-, patient-, and admission-level characteristics. Our work may help identify patient and attending groups at high risk for under- or overuse of consultation and guide the subsequent development of interventions to improve value in consultation. One additional consultation over the average LOS of 4.6 days adds $420 per admission or $4.8 billion to the 11.5 million annual Medicare admissions.15 Increasing research, guidelines, and education on the judicious use of inpatient consultation will be key in maximizing high-value care and improving patient outcomes.
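The national cost figure above follows from a single multiplication, reproduced here as a check. The constant and function names are illustrative; the two input values are those quoted in the text.

```python
COST_PER_EXTRA_CONSULT_USD = 420          # per admission, as quoted in the text
ANNUAL_MEDICARE_ADMISSIONS = 11_500_000   # as quoted in the text


def national_cost(cost_per_admission=COST_PER_EXTRA_CONSULT_USD,
                  admissions=ANNUAL_MEDICARE_ADMISSIONS):
    """Total annual cost if every admission carried one additional consultation."""
    return cost_per_admission * admissions


total = national_cost()
print(f"${total / 1e9:.2f} billion")  # $4.83 billion
```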

Acknowledgments

The authors would like to acknowledge the invaluable support and assistance of the University of Chicago Hospitalist Project, the Pritzker School of Medicine Summer Research Program, the University of Chicago Center for Quality, and the University of Chicago Center for Health and the Social Sciences (CHeSS). The authors would additionally like to thank John Cursio, PhD, for his support and guidance in statistical analysis for this project.

Disclaimer

The content is solely the responsibility of the authors and does not necessarily represent the official views of the NIH. The funders had no role in the design of the study; the collection, analysis, and interpretation of the data; or the decision to approve publication of the finished manuscript. Preliminary results of this analysis were presented at the 2018 Society of Hospital Medicine Annual Meeting in Orlando, Florida. All coauthors have seen and agree with the contents of the manuscript. The submission is not under review by any other publication.

1. Stevens JP, Nyweide D, Maresh S, et al. Variation in inpatient consultation among older adults in the United States. J Gen Intern Med. 2015;30(7):992-999. https://doi.org/10.1007/s11606-015-3216-7.

2. Lahey T, Shah R, Gittzus J, Schwartzman J, Kirkland K. Infectious diseases consultation lowers mortality from Staphylococcus aureus bacteremia. Medicine (Baltimore). 2009;88(5):263-267. https://doi.org/10.1097/MD.0b013e3181b8fccb.

3. Morrison RS, Dietrich J, Ladwig S, et al. Palliative care consultation teams cut hospital costs for Medicaid beneficiaries. Health Aff Proj Hope. 2011;30(3):454-463. https://doi.org/10.1377/hlthaff.2010.0929.

4. Stevens JP, Nyweide DJ, Maresh S, Hatfield LA, Howell MD, Landon BE. Comparison of hospital resource use and outcomes among hospitalists, primary care physicians, and other generalists. JAMA Intern Med. 2017;177(12):1781. https://doi.org/10.1001/jamainternmed.2017.5824.

5. Meltzer D. Effects of physician experience on costs and outcomes on an academic general medicine service: Results of a trial of hospitalists. Ann Intern Med. 2002;137(11):866. https://doi.org/10.7326/0003-4819-137-11-200212030-00007.

6. Martin SK, Farnan JM, Flores A, Kurina LM, Meltzer DO, Arora VM. Exploring entrustment: Housestaff autonomy and patient readmission. Am J Med. 2014;127(8):791-797. https://doi.org/10.1016/j.amjmed.2014.04.013.

7. HCUP-US NIS Overview. https://www.hcup-us.ahrq.gov/nisoverview.jsp. Accessed July 7, 2017.

8. Austin SR, Wong Y-N, Uzzo RG, Beck JR, Egleston BL. Why summary comorbidity measures such as the Charlson Comorbidity Index and Elixhauser Score work. Med Care. 2015;53(9):e65-e72. https://doi.org/10.1097/MLR.0b013e318297429c.

9. Elixhauser Comorbidity Software. https://www.hcup-us.ahrq.gov/toolssoftware/comorbidity/comorbidity.jsp#references. Accessed May 13, 2019.

10. Roshetsky LM, Coltri A, Flores A, et al. No time for teaching? Inpatient attending physicians’ workload and teaching before and after the implementation of the 2003 duty hours regulations. Acad Med J Assoc Am Med Coll. 2013;88(9):1293-1298. https://doi.org/10.1097/ACM.0b013e31829eb795.

11. Barnett ML, Boddupalli D, Nundy S, Bates DW. Comparative accuracy of diagnosis by collective intelligence of multiple physicians vs individual physicians. JAMA Netw Open. 2019;2(3):e190096. https://doi.org/10.1001/jamanetworkopen.2019.0096.

12. Aoyama T, Kunisawa S, Fushimi K, Sawa T, Imanaka Y. Comparison of surgical and conservative treatment outcomes for type A aortic dissection in elderly patients. J Cardiothorac Surg. 2018;13(1):129. https://doi.org/10.1186/s13019-018-0814-6.

13. Lindau ST, Schumm LP, Laumann EO, Levinson W, O’Muircheartaigh CA, Waite LJ. A study of sexuality and health among older adults in the United States. N Engl J Med. 2007;357(8):762-774. https://doi.org/10.1056/NEJMoa067423.

14. Yergan J, Flood AB, Diehr P, LoGerfo JP. Relationship between patient source of payment and the intensity of hospital services. Med Care. 1988;26(11):1111-1114. https://doi.org/10.1097/00005650-198811000-00009.

15. Center for Medicare and Medicaid Services. MDCR INPT HOSP 1.; 2008. https://www.cms.gov/Research-Statistics-Data-and-Systems/Statistics-Trends-and-Reports/CMSProgramStatistics/2013/Downloads/MDCR_UTIL/CPS_MDCR_INPT_HOSP_1.pdf. Accessed April 15, 2018.

Inpatient consultation is an extremely common practice with the potential to improve patient outcomes significantly.1-3 However, variability in consultation practices may be risky for patients. In addition to underuse when the benefit is clear, the overuse of consultation may lead to additional testing and therapies, increased length of stay (LOS) and costs, conflicting recommendations, and opportunities for communication breakdown.

Consultation use is often at the discretion of individual providers. While this decision is frequently driven by patient needs, significant variation in consultation practices not fully explained by patient factors exists.1 Prior work has described hospital-level variation1 and that primary care physicians use more consultation than hospitalists.4 However, other factors affecting consultation remain unknown. We sought to explore physician-, patient-, and admission-level factors associated with consultation use on inpatient general medicine services.

METHODS

Study Design

We conducted a retrospective analysis of data from the University of Chicago Hospitalist Project (UCHP). UCHP is a longstanding study of the care of hospitalized patients admitted to the University of Chicago general medicine services, involving both patient data collection and physician experience surveys.5 Data were obtained for enrolled UCHP patients between 2011-2016 from the Center for Research Informatics (CRI). The University of Chicago Institutional Review Board approved this study.

Data Collection

Attendings and patients consented to UCHP participation. Data collection details are described elsewhere.5,6 Data from EpicCare (EpicSystems Corp, Wisconsin) and Centricity Billing (GE Healthcare, Illinois) were obtained via CRI for all encounters of enrolled UCHP patients during the study period (N = 218,591).

Attending Attribution

We determined attending attribution for admissions as follows: the attending author of the first history and physical (H&P) was assigned. If this was unavailable, the attending author of the first progress note (PN) was assigned. For patients admitted by hospitalists on admitting shifts to nonteaching services (ie, service without residents/students), the author of the first PN was assigned if different from H&P. Where available, attribution was corroborated with call schedules.

Sample and Variables

All encounters containing inpatient admissions to the University of Chicago from May 10, 2011 (Electronic Health Record activation date), through December 31, 2016, were considered for inclusion (N = 51,171, Appendix 1). Admissions including only documentation from ancillary services were excluded (eg, encounters for hemodialysis or physical therapy). Admissions were limited to a length of stay (LOS) ≤ 5 days, corresponding to the average US inpatient LOS of 4.6 days,7 to minimize the likelihood of attending handoffs (N = 31,592). If attending attribution was not possible via the above-described methods, the admission was eliminated (N = 3,103; 10.9% of admissions with LOS ≤ 5 days). Finally, the sample was restricted to general medicine service admissions under attendings enrolled in UCHP who completed surveys. After the application of all criteria, 6,153 admissions remained for analysis.

The outcome variable was the number of consultations per admission, determined by counting the unique number of services creating clinical documentation, and subtracting one for the primary team. If the Medical/Surgical intensive care unit (ICU) was a service, then two were subtracted to account for the ICU transfer.

Attending years in practice (ie, years since medical school graduation) and gender were determined from public resources. Practice characteristics were determined from UCHP attending surveys, which address perceptions of workload and satisfaction (Appendix 2).

Patient characteristics (gender, age, Elixhauser Indices) and admission characteristics (LOS, season of admission, payor) were determined from UCHP and CRI data. The Elixhauser Index uses a well-validated system combining the presence/absence of 31 comorbidities to predict mortality and 30-day readmission.8 Elixhauser Indices were calculated using the “Creation of Elixhauser Comorbidity Index Scores 1.0” software.9 For admissions under hospitalist attendings, teaching/nonteaching team was ascertained via internal teaching service calendars.

Analysis

We used descriptive statistics to examine demographic characteristics. The difference between the lowest and highest quartile consultation use was determined via a two-sample t test. Given the multilevel nature of our count data, we used a mixed-effects Poisson model accounting for within-group variation by clustering on attending and patient (3-level random-effects model). The analysis was done using Stata 15 (StataCorp, Texas).
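One common way to write a three-level random-intercept Poisson model of this kind is sketched below. The notation is an assumption for illustration rather than the authors' Stata specification: $Y_{ijk}$ is the consultation count for admission $i$ of patient $j$ under attending $k$, $\mathbf{x}_{ijk}$ collects the physician-, patient-, and admission-level covariates, and patients are treated as nested within attendings.

```latex
\begin{aligned}
Y_{ijk} \mid u_k, v_{jk} &\sim \operatorname{Poisson}(\mu_{ijk}) \\
\log \mu_{ijk} &= \beta_0 + \mathbf{x}_{ijk}^{\top}\boldsymbol{\beta} + u_k + v_{jk} \\
u_k &\sim \mathcal{N}(0, \sigma_u^2), \qquad v_{jk} \sim \mathcal{N}(0, \sigma_v^2)
\end{aligned}
```

Under this form, a coefficient $\beta_m$ corresponds to a percentage change in expected consultations of $100\,(e^{\beta_m} - 1)$ per unit change in covariate $m$, holding the other covariates and random effects fixed.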

RESULTS

From 2011 to 2016, 14,848 patients and 88 attendings were enrolled in UCHP; 4,772 patients (32%) and 69 attendings (78.4%) had data available and were included. Mean LOS was 3.0 days (SD = 1.3). Table 1 describes the characteristics of attendings, patients, and admissions.

Seventy-six percent of admissions included at least one consultation. Consultation use varied widely, ranging from 0 to 10 per admission (mean = 1.39, median = 1; standard deviation [SD] = 1.17). The number of consultations per admission in the highest quartile of consultation frequency (mean = 3.47, median = 3) was 5.7-fold that of the lowest quartile (mean = 0.613, median = 1; P <.001).

In multivariable regression, physician-, patient-, and admission-level characteristics were associated with the differential use of consultation (Table 2). On teaching services, consultations called by hospitalist vs nonhospitalist generalists did not differ (P =.361). However, hospitalists on nonteaching services called 8.6% more consultations than hospitalists on teaching services (P =.02). Attending agreement with survey item “The interruption of my personal life by work is a problem” was associated with 8.2% fewer consultations per admission (P =.002).

Patients older than 75 years received 19% fewer consultations compared with patients younger than 49 years (P <.001). Compared with Medicare, Medicaid admissions had 12.2% fewer consultations (P <.001), whereas privately insured admissions had 10.7% more (P =.001). The number of consultations per admission decreased every year, with 45.3% fewer consultations in 2015 than 2011 (P <.001). Consultations increased by 22% for each additional day of LOS (P <.001).

DISCUSSION

Our analysis described several physician-, patient-, and admission-level characteristics associated with the use of inpatient consultation. Our results strengthen prior work demonstrating that patient-level factors alone are insufficient to explain consultation variability.1

Hospitalists on nonteaching services called more consultations, which may reflect a higher workload on these services. Busy hospitalists on nonteaching teams may lack time to delve deeply into clinical problems and require more consultations, especially for work with heavy cognitive loads such as diagnosis. “Outsourcing” tasks when workload increases occurs in other cognitive activities such as teaching.10 The association between work interrupting personal life and fewer consultations may also implicate the effects of time. Attendings who are experiencing work encroaching on their personal lives may be those spending more time with patients and consulting less. This finding merits further study, especially with increasing concern about balancing time spent in meaningful patient care activities with risk of physician burnout.

The higher consultation use on nonteaching services could also indicate that trainee participation modifies consultation use for hospitalists. Teaching service teams with more individual members may allow a greater pool of collective knowledge, decreasing the need for consultation to answer clinical questions.11 Interestingly, there was no difference in consultation use between generalists or subspecialists and hospitalists on teaching services, possibly suggesting a unique effect in hospitalists who vary clinical practice depending on team structure. These differences deserve further investigation, with implications for education and resource utilization.

We were surprised by the finding that consultations decreased each year, despite increasing patient complexity and availability of consultation services. This could be explained by a growing emphasis on shortening LOS in our institution, thus shifting consultative care to outpatient settings. Given growing evidence that consultation improves patient outcomes, understanding these effects is critically important because these external pressures could lead to unintended consequences for quality or access to care.

Several findings related to patient factors additionally emerged, including age and insurance status. Although related to medical complexity, these effects persist despite adjustment, which raises the question of whether they contribute to the decision to seek consultation. Older patients received fewer consultations, which could reflect the use of more conservative practice models in the elderly,12 or ageism, which is associated with undertreatment.13 With respect to insurance status, Medicaid admissions were associated with fewer consultations. This finding is consistent with previous work showing the decreased intensity of hospital services used for Medicaid patients.14

Our study has limitations. Our data were from one large urban academic center, which limits generalizability. Although systematic and redundant, attending attribution may have been flawed: incomplete or erroneous documentation could have led to attribution error, and we cannot rule out the possibility of service handoffs. We used a LOS ≤ 5 days to minimize this possibility, but this limits the applicability of our findings to longer admissions. Unsurprisingly, longer LOS correlated with the increased use of consultation even within our restricted sample, and future work should examine the effects of prolonged LOS. As a retrospective analysis, unmeasured confounders due to our limited adjustment will likely explain some findings, although we took steps to address this in our statistical design. Finally, we could not measure patient outcomes and, therefore, cannot determine the value of more or fewer consultations for specific patients or illnesses. Both positive and negative outcomes of increased consultation have been described, and understanding the impact of consultation is critical for further study.2,3

CONCLUSION

We found that the use of consultation on general medicine services varies widely between admissions, with large differences between the highest and lowest frequencies of use. This variation can be partially explained by several physician-, patient-, and admission-level characteristics. Our work may help identify patient and attending groups at high risk for under- or overuse of consultation and guide the subsequent development of interventions to improve value in consultation. One additional consultation over the average LOS of 4.6 days adds $420 per admission or $4.8 billion to the 11.5 million annual Medicare admissions.15 Increasing research, guidelines, and education on the judicious use of inpatient consultation will be key in maximizing high-value care and improving patient outcomes.
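The national cost figure follows from simple multiplication of the two numbers quoted above. The short check below reproduces that arithmetic; the function and parameter names are hypothetical, and the inputs are only the $420 per-admission cost and 11.5 million annual Medicare admissions stated in the text.

```python
def national_consultation_cost(
    cost_per_admission_usd: int = 420,            # per-admission cost quoted in the text
    annual_medicare_admissions: int = 11_500_000,  # annual admissions quoted in the text
) -> int:
    """Total annual cost in US dollars of one extra consultation per admission."""
    return cost_per_admission_usd * annual_medicare_admissions


total = national_consultation_cost()
# Express the total in billions of dollars, rounded to one decimal place.
print(f"${total / 1e9:.1f} billion")
```

The product is $4.83 billion, which rounds to the $4.8 billion reported.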

Acknowledgments

The authors would like to acknowledge the invaluable support and assistance of the University of Chicago Hospitalist Project, the Pritzker School of Medicine Summer Research Program, the University of Chicago Center for Quality, and the University of Chicago Center for Health and the Social Sciences (CHeSS). The authors would additionally like to thank John Cursio, PhD, for his support and guidance in statistical analysis for this project.

Disclaimer

The content is solely the responsibility of the authors and does not necessarily represent the official views of the NIH. The funders had no role in the design of the study; the collection, analysis, and interpretation of the data; or the decision to approve publication of the finished manuscript. Preliminary results of this analysis were presented at the 2018 Society of Hospital Medicine Annual Meeting in Orlando, Florida. All coauthors have seen and agree with the contents of the manuscript. The submission is not under review by any other publication.

Inpatient consultation is an extremely common practice with the potential to improve patient outcomes significantly.1-3 However, variability in consultation practices may be risky for patients. In addition to underuse when the benefit is clear, the overuse of consultation may lead to additional testing and therapies, increased length of stay (LOS) and costs, conflicting recommendations, and opportunities for communication breakdown.

Consultation use is often at the discretion of individual providers. While this decision is frequently driven by patient needs, significant variation in consultation practices not fully explained by patient factors exists.1 Prior work has described hospital-level variation1 and that primary care physicians use more consultation than hospitalists.4 However, other factors affecting consultation remain unknown. We sought to explore physician-, patient-, and admission-level factors associated with consultation use on inpatient general medicine services.

METHODS

Study Design

We conducted a retrospective analysis of data from the University of Chicago Hospitalist Project (UCHP). UCHP is a longstanding study of the care of hospitalized patients admitted to the University of Chicago general medicine services, involving both patient data collection and physician experience surveys.5 Data were obtained for enrolled UCHP patients between 2011-2016 from the Center for Research Informatics (CRI). The University of Chicago Institutional Review Board approved this study.
