Utilizing Telesimulation for Advanced Skills Training in Consultation and Handoff Communication: A Post-COVID-19 GME Bootcamp Experience

Events requiring communication among and within teams are vulnerable points in patient care in hospital medicine, with communication failures representing important contributors to adverse events.1-4 Consultations and handoffs are exceptionally common inpatient practices, yet training in these practices is variable across educational and practice domains.5,6 Advanced inpatient communication-skills training requires an effective, feasible, and scalable format. Simulation-based bootcamps can effectively support clinical skills training, often in procedural domains, and have been increasingly utilized for communication skills.7,8 We previously described the development and implementation of an in-person bootcamp for training and feedback in consultation and handoff communication.5,8

As hospitalist leaders grapple with how to systematically support and assess essential clinical skills, the COVID-19 pandemic has provided another impetus to rethink current processes. The rapid shift to virtual activities met the immediate needs of the pandemic but also inspired creativity in applying new methods to improve teaching strategies and implementation over the long term.9,10 One such strategy, telesimulation, offers a way to continue simulation-based training when the need for physical distancing limits in-person sessions.10 Furthermore, recent calls to study the efficacy of virtual bootcamp structures have acknowledged potential benefits even outside of the pandemic.11

The primary objective of this feasibility study was to convert our previously described consultation and handoff bootcamp to a telesimulation bootcamp (TBC) while preserving rigorous performance evaluation and opportunities for skills-based feedback. We also compared evaluation outcomes between the virtual and in-person formats to understand the utility of telesimulation for future bootcamp-based clinical education.

METHODS

Setting and Participants

The TBC occurred in June 2020 during the University of Chicago institution-wide graduate medical education (GME) orientation; 130 interns entering 13 residency programs participated. The comparison group was 128 interns who underwent the traditional University of Chicago GME orientation “Advanced Communication Skills Bootcamp” (ACSBC) in 2019.5,8

Program Description

To develop TBC, we adapted the observed simulated clinical experiences (OSCEs) created for ACSBC. Until 2020, ACSBC included three in-person OSCEs: (1) requesting a consultation; (2) conducting handoffs; and (3) obtaining informed consent. COVID-19 necessitated converting ACSBC to a virtual format in June 2020. For the virtual format, we selected the consultation and handoff OSCEs because these skills are needed almost universally and immediately in clinical practice. Additionally, these OSCEs required only trained facilitators (TFs), whereas the informed consent OSCE required standardized patients. Hospitalist and emergency medicine faculty were recruited as TFs; 7 of 12 TFs were hospitalists. Each OSCE had two parts: a mandatory asynchronous training module and a clinical simulation. For TBC, we combined the simulations, previously separate experiences, into a single 20-minute handoff/consultation telesimulation on the Zoom® video platform. Each intern was paired with one TF, who served as both the standardized consultant (for one mock case) and the handoff receiver (for three mock cases, including the consultation case). TFs rated intern performance and provided feedback.

TBC occurred on June 17 and 18, 2020. Interns were emailed the asynchronous modules on June 1 and the mock cases and instructions on June 12. When TBC began, GME staff proctors oriented interns to the Zoom® platform. Proctors assigned each TF to a private breakout room, and interns rotated through these rooms in 20-minute timeslots. Faculty received copies of all TBC materials for review (Appendix 1) and completed Zoom®-based training 1 to 2 weeks before the bootcamp.

We evaluated TBC using three methods: (1) consultation and handoff skills performance, measured with two validated checklists5,8; (2) a survey of intern self-reported preparedness to perform consultations and handoffs; and (3) a survey of intern satisfaction. Surveys were administered immediately after the bootcamp (Appendix 2) and 8 weeks into internship (Appendix 3). The skills performance checklists were a 12-item consultation checklist5 and a 6-item handoff checklist.8 We modified the handoff checklist to remove activities that could not be assessed virtually (ie, orienting sign-outs in a shared space) and to add a three-level rating scale of “outstanding,” “satisfactory,” and “needs improvement.” This change responded to feedback from ACSBC TFs and allowed more nuanced feedback for interns. A rating of “outstanding” defined successful completion of an item (Appendix 1). Interns rated preparedness and satisfaction on 5-point Likert-type items. All measures were compared with those of the 2019 in-person ACSBC cohort.
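As a minimal sketch, assuming each handoff item's rating is recorded as one of the three strings on the modified scale, item completion and a per-intern checklist score could be computed as follows. The function names and the percentage scoring are illustrative assumptions, not the authors' implementation (analysis was done in Stata). The hypothetical `lenient` flag shows the alternative of also counting “satisfactory” as complete.

```python
from typing import Sequence

# Three-level scale added to the handoff checklist for the virtual bootcamp,
# ordered from best to worst.
RATING_LEVELS = ("outstanding", "satisfactory", "needs improvement")


def item_completed(rating: str, lenient: bool = False) -> bool:
    """Return True if one checklist item counts as completed.

    strict (default): only "outstanding" counts, as in the methods.
    lenient (hypothetical alternative): "outstanding" or "satisfactory" counts.
    """
    if rating not in RATING_LEVELS:
        raise ValueError(f"unknown rating: {rating!r}")
    accepted = RATING_LEVELS[:2] if lenient else RATING_LEVELS[:1]
    return rating in accepted


def checklist_percent(ratings: Sequence[str], lenient: bool = False) -> float:
    """Percentage (0-100) of checklist items one intern completed."""
    if not ratings:
        raise ValueError("no ratings supplied")
    completed = sum(item_completed(r, lenient) for r in ratings)
    return 100.0 * completed / len(ratings)


if __name__ == "__main__":
    # Hypothetical ratings for one intern; not study data.
    example = ["outstanding", "satisfactory", "outstanding",
               "needs improvement", "satisfactory"]
    print(checklist_percent(example))                # 40.0
    print(checklist_percent(example, lenient=True))  # 80.0
```

The same ratings can yield very different completion scores depending on where the cutoff sits, which is why a change in scale can shift cohort-level handoff results.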

Data Analysis

Stata 16.1 (StataCorp LP) was used for analysis. We dichotomized preparedness and satisfaction scores, defining ratings of “4” or “5” as “prepared” or “satisfied.” As previously described,5 we created a composite score for each intern by averaging the two checklist scores. We normalized this score by rater to a z score (mean, 0; SD, 1) to account for differences between raters. “Poor” and “outstanding” performances were defined as z scores more than 1 SD below and above the mean, respectively. Fisher’s exact test was used to compare proportions, and Pearson’s correlation coefficient was used to assess correlation between z scores. The University of Chicago Institutional Review Board granted exemption.

RESULTS

All 130 entering interns participated in TBC. Internal medicine (IM) was the largest specialty (n = 37), followed by pediatrics (n = 22), emergency medicine (EM) (n = 16), and anesthesiology (n = 12). Each of the remaining 9 programs had 2 to 10 interns. The 128 ACSBC interns were similar in composition, including 40 IM, 23 pediatrics, 14 EM, and 12 anesthesiology interns, with 2 to 10 interns in each remaining program.

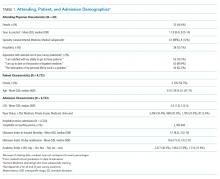

TBC skills performance evaluations were compared with those of ACSBC (Table 1). The TBC cohort’s consultation performance was similar to or better than that of the ACSBC cohort. For handoffs, TBC interns completed significantly fewer checklist items than ACSBC interns. Performance on the two exercises was moderately correlated (r = 0.39, P < .05). Based on z scores, 14 TBC interns (10.8%) had “outstanding” and 15 (11.6%) had “poor” performances, compared with 7 (5.5%) “outstanding” and 10 (7.8%) “poor” performances among ACSBC interns (P = .15).

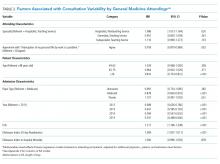

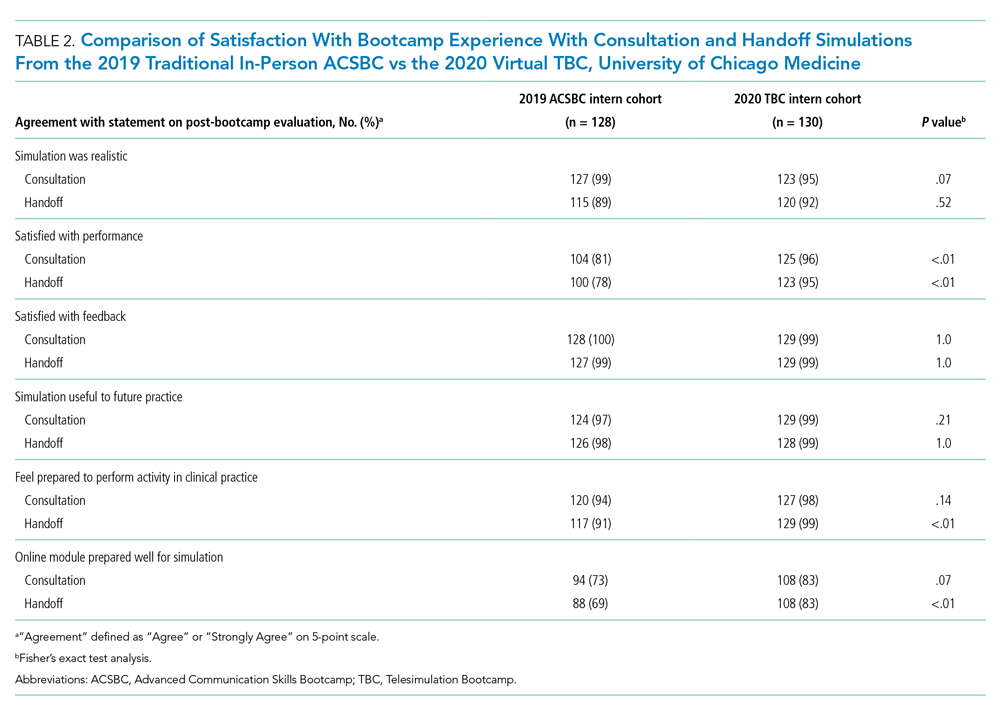

All 130 interns (100%) completed the immediate post-TBC survey. Overall satisfaction with TBC was comparable to that with ACSBC, and satisfaction with one’s own performance was significantly higher (Table 2). Compared with ACSBC interns, TBC interns felt more prepared for the simulation and for handoffs in clinical practice. Nearly all interns would recommend TBC (99% vs 96% of ACSBC interns, P = .28), and 99% felt the simulation software ran smoothly.

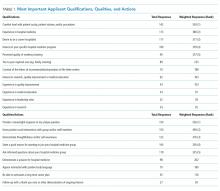

The 8-week post-TBC survey had a response rate of 88% (115/130); 69% of interns reported conducting more effective handoffs because of TBC, and 79% felt confident in their handoff skills. Similarly, 73% felt more effective at calling consultations, and 75% reported retaining knowledge of the consultation frameworks taught during TBC. Additionally, 71% of interns reported that TBC helped them identify areas for self-directed improvement. There were no significant differences in 8-week survey ratings between ACSBC and TBC.

DISCUSSION

In converting the advanced communication skills bootcamp from an in-person to a virtual format, we found that telesimulation was well received by interns and rated similarly to the in-person bootcamp in most respects. Nearly all interns agreed that the experience was realistic, provided useful feedback, and prepared them for clinical practice. Although we shifted to a virtual format out of necessity, our results demonstrate a high-quality, streamlined bootcamp experience that was less labor-intensive for interns, staff, and faculty. Telesimulation may represent an effective strategy beyond the COVID-19 pandemic to ease administration and scale the use of bootcamps that support advanced clinical skills training for hospital-based practice.

TBC interns felt better prepared for the simulation and more satisfied with their performance than ACSBC interns, potentially because of the revised format. The mock cases were adapted and consolidated for TBC so that the handoff and consultation simulations shared a common case, whereas previously they were separate. Thus, preparing for TBC required familiarity with fewer cases overall. Ultimately, TBC maintained the quality of training while requiring interns to review less information.

In comparing performance, TBC interns were rated as well as or better than ACSBC interns during the consultation simulation, but their handoffs were rated lower. This was likely due to the change in the handoff checklist from a dichotomous to a three-level rating scale, which was made after ACSBC TFs reported that a more nuanced scale was needed to give interns adequate feedback. Although we defined handoff item completion for TBC interns as a rating of “outstanding,” if the top two ratings, “outstanding” and “satisfactory,” are both counted as completion, TBC handoff performance is equivalent to or better than that of ACSBC. TF recruitment also differed between the TBC and ACSBC cohorts. In ACSBC, resident physicians served as handoff TFs, whereas only faculty were recruited for TBC. These faculty were primarily clinically active hospitalists, whose expertise in handoffs may have resulted in more stringent performance ratings, contributing to the observed differences.

Hospitalist groups require clinicians to be immediately proficient in essential communication skills such as consultations and handoffs, which may require just-in-time training and feedback for large cohorts.12 Bootcamps can meet this need but require participation and time from many faculty members, staff, and administrators.5,8 Combining the handoff and consultation simulations into one virtual telesimulation required recruiting and training 50% fewer TFs and reduced administrative burden. ACSBC consultation simulations were high fidelity but resource heavy, requiring two-way telephones with reliable connections and separate spaces for simulation and feedback.5 Conversely, TBC required only that consultations be “called” via an audio-only Zoom® discussion, after which both individuals turned on their cameras for feedback. In our experience, the slight decrease in perceived fidelity was outweighed by the ease of administration. TBC’s more efficient, less labor-intensive format is an appealing strategy for hospitalist groups seeking to train clinicians, including groups operating across multiple or geographically distant sites.

Our study has limitations. It involved one group of learners at a single site with consistent consultation and handoff communication practices, which may not be the case elsewhere. Our comparison group was a separate cohort, and groups were not randomized; thus, observed differences may reflect inherent dissimilarities between the groups. Changes to the handoff checklist rating scale between 2019 and 2020 may also limit direct comparison of handoff performance between cohorts. Although TBC required fewer resources overall, its implementation did require time and institutional support, as well as full virtual platform capability without user or time limits. Finally, our preparedness outcomes were self-reported, without direct measurement of clinical performance, which is an area for future work.

We describe a feasible implementation of an adapted telesimulation communication bootcamp, with comparison to a previous in-person cohort’s skills performance and satisfaction. While COVID-19 has made the future of in-person training activities uncertain, it also served as a catalyst for educational innovation that may be sustained beyond the pandemic. Although developed out of necessity, the telesimulation communication bootcamp was effective and well-received. Telesimulation represents an opportunity for hospital medicine groups to implement advanced communication skills training and assessment in a more efficient, flexible, and potentially preferable way, even after the pandemic ends.

Acknowledgments

The authors thank the staff at the University of Chicago Office of Graduate Medical Education and the UChicago Medicine Simulation Center.

1. Sutcliffe KM, Lewton E, Rosenthal MM. Communication failures: an insidious contributor to medical mishaps. Acad Med. 2004;79(2):186-194. https://doi.org/10.1097/00001888-200402000-00019

2. Inadequate hand-off communication. Sentinel Event Alert. 2017;(58):1-6.

3. Horwitz LI, Meredith T, Schuur JD, Shah NR, Kulkarni RG, Jenq JY. Dropping the baton: a qualitative analysis of failures during the transition from emergency department to inpatient care. Ann Emerg Med. 2009;53(6):701-710. https://doi.org/10.1016/j.annemergmed.2008.05.007

4. Jagsi R, Kitch BT, Weinstein DF, Campbell EG, Hutter M, Weissman JS. Residents report on adverse events and their causes. Arch Intern Med. 2005;165(22):2607-2613. https://doi.org/10.1001/archinte.165.22.2607

5. Martin SK, Carter K, Hellerman N, et al. The consultation observed simulated clinical experience: training, assessment, and feedback for incoming interns on requesting consultations. Acad Med. 2018;93(12):1814-1820. https://doi.org/10.1097/ACM.0000000000002337

6. Lopez MA, Campbell J. Developing a communication curriculum for primary and consulting services. Med Educ Online. 2020;25(1):1794341. https://doi.org/10.1080/10872981.2020

7. Cohen ER, Barsuk JH, Moazed F, et al. Making July safer: simulation-based mastery learning during intern bootcamp. Acad Med. 2013;88(2):233-239. https://doi.org/10.1097/ACM.0b013e31827bfc0a

8. Gaffney S, Farnan JM, Hirsch K, McGinty M, Arora VM. The Modified, Multi-patient Observed Simulated Handoff Experience (M-OSHE): assessment and feedback for entering residents on handoff performance. J Gen Intern Med. 2016;31(4):438-441. https://doi.org/10.1007/s11606-016-3591-8

9. Woolliscroft J. Innovation in response to the COVID-19 pandemic crisis. Acad Med. 2020;95(8):1140-1142. https://doi.org/10.1097/ACM.0000000000003402

10. Anderson ML, Turbow S, Willgerodt MA, Ruhnke G. Education in a crisis: the opportunity of our lives. J Hosp Med. 2020;15(5):287-291. https://doi.org/10.12788/jhm.3431

11. Farr DE, Zeh HJ, Abdelfattah KR. Virtual bootcamps—an emerging solution to the undergraduate medical education-graduate medical education transition. JAMA Surg. 2021;156(3):282-283. https://doi.org/10.1001/jamasurg.2020.6162

12. Hepps JH, Yu CE, Calaman S. Simulation in medical education for the hospitalist: moving beyond the mock code. Pediatr Clin North Am. 2019;66(4):855-866. https://doi.org/10.1016/j.pcl.2019.03.014

Events requiring communication among and within teams are vulnerable points in patient care in hospital medicine, with communication failures representing important contributors to adverse events.1-4 Consultations and handoffs are exceptionally common inpatient practices, yet training in these practices is variable across educational and practice domains.5,6 Advanced inpatient communication-skills training requires an effective, feasible, and scalable format. Simulation-based bootcamps can effectively support clinical skills training, often in procedural domains, and have been increasingly utilized for communication skills.7,8 We previously described the development and implementation of an in-person bootcamp for training and feedback in consultation and handoff communication.5,8

As hospitalist leaders grapple with how to systematically support and assess essential clinical skills, the COVID-19 pandemic has presented another impetus to rethink current processes. The rapid shift to virtual activities met immediate needs of the pandemic, but also inspired creativity in applying new methodologies to improve teaching strategies and implementation long-term.9,10 One such strategy, telesimulation, offers a way to continue simulation-based training limited by the need for physical distancing.10 Furthermore, recent calls to study the efficacy of virtual bootcamp structures have acknowledged potential benefits, even outside of the pandemic.11

The primary objective of this feasibility study was to convert our previously described consultation and handoff bootcamp to a telesimulation bootcamp (TBC), preserving rigorous performance evaluation and opportunities for skills-based feedback. We additionally compared evaluation between virtual and in-person formats to understand the utility of telesimulation for bootcamp-based clinical education moving forward.

METHODS

Setting and Participants

The TBC occurred in June 2020 during the University of Chicago institution-wide graduate medical education (GME) orientation; 130 interns entering 13 residency programs participated. The comparison group was 128 interns who underwent the traditional University of Chicago GME orientation “Advanced Communication Skills Bootcamp” (ACSBC) in 2019.5,8

Program Description

To develop TBC, we adapted observed structured clinical experiences (OSCEs) created for ACSBC. Until 2020, ACSBC included three in-person OSCEs: (1) requesting a consultation; (2) conducting handoffs; and (3) acquiring informed consent. COVID-19 necessitated conversion of ACSBC to virtual in June 2020. For this, we selected the consultation and handoff OSCEs, as these skills require near-universal and immediate application in clinical practice. Additionally, they required only trained facilitators (TFs), whereas informed consent required standardized patients. Hospitalist and emergency medicine faculty were recruited as TFs; 7 of 12 TFs were hospitalists. Each OSCE had two parts: an asynchronous, mandatory training module and a clinical simulation. For TBC, we adapted the simulations, previously separate experiences, into a 20-minute combined handoff/consultation telesimulation using the Zoom® video platform. Interns were paired with one TF who served as both standardized consultant (for one mock case) and handoff receiver (for three mock cases, including the consultation case). TFs rated intern performance and provided feedback.

TBC occurred on June 17 and 18, 2020. Interns were emailed asynchronous modules on June 1, and mock cases and instructions on June 12. When TBC began, GME staff proctors oriented interns in the Zoom® platform. Proctors placed TFs into private breakout rooms into which interns rotated through 20-minute timeslots. Faculty received copies of all TBC materials for review (Appendix 1) and underwent Zoom®-based training 1 to 2 weeks prior.

We evaluated TBC using several methods: (1) consultation and handoff skills performance measured by two validated checklists5,8; (2) survey of intern self-reported preparedness to practice consultations and handoffs; and (3) survey of intern satisfaction. Surveys were administered both immediately post bootcamp (Appendix 2) and 8 weeks into internship (Appendix 3). Skills performance checklists were a 12-item consultation checklist5 and 6-item handoff checklist.8 The handoff checklist was modified to remove activities impossible to assess virtually (ie, orienting sign-outs in a shared space) and to add a three-level rating scale of “outstanding,” “satisfactory,” and “needs improvement.” This was done based on feedback from ACSBC to allow more nuanced feedback for interns. A rating of “outstanding” was used to define successful completion of the item (Appendix 1). Interns rated preparedness and satisfaction on 5-point Likert-type items. All measures were compared to the 2019 in-person ACSBC cohort.

Data Analysis

Stata 16.1 (StataCorp LP) was used for analysis. We dichotomized preparedness and satisfaction scores, defining ratings of “4” or “5” as “prepared” or “satisfied.” As previously described,5 we created a composite score averaging both checklist scores for each intern. We normalized this score by rater to a z score (mean, 0; SD, 1) to account for rater differences. “Poor” and “outstanding” performances were defined as z scores below and above 1 SD, respectively. Fisher’s exact test was used to compare proportions, and Pearson correlation test to correlate z scores. The University of Chicago Institutional Review Board granted exemption.

RESULTS

All 130 entering interns participated in TBC. Internal medicine (IM) was the largest specialty (n = 37), followed by pediatrics (n = 22), emergency medicine (EM) (n = 16), and anesthesiology (n = 12). The remaining 9 programs ranged from 2 to 10 interns per program. The 128 interns in ACSBC were similar, including 40 IM, 23 pediatrics, 14 EM, and 12 anesthesia interns, with 2 to 10 interns in remaining programs.

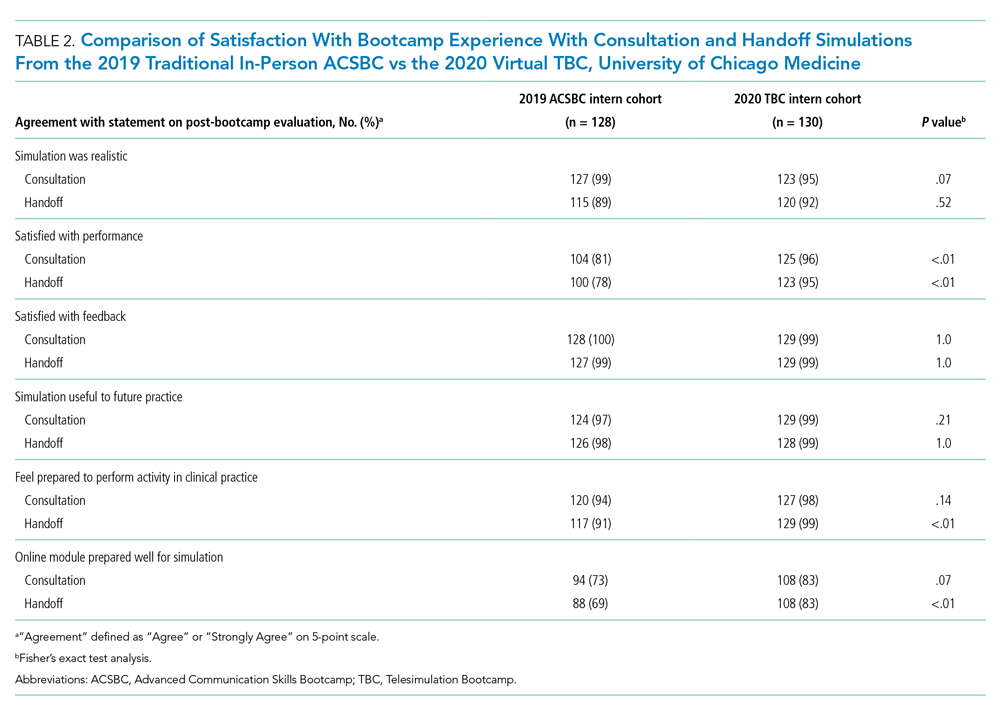

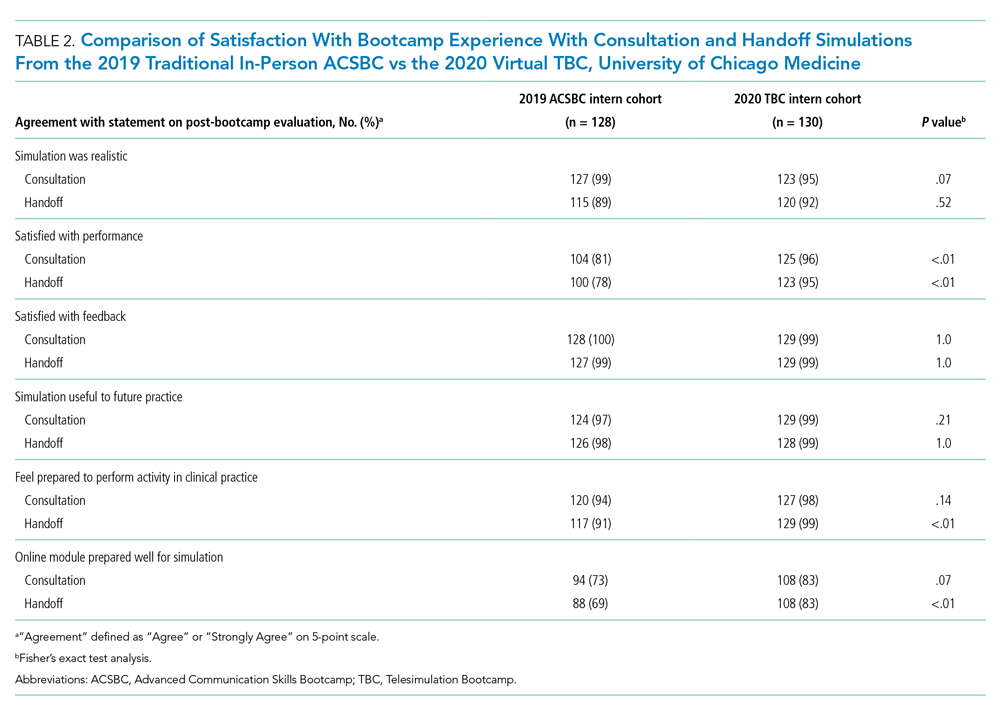

TBC skills performance evaluations were compared to ACSBC (Table 1). The TBC intern cohort’s consultation performance was the same or better than the ACSBC intern cohort’s. For handoffs, TBC interns completed significantly fewer checklist items compared to ACSBC. Performance in each exercise was moderately correlated (r = 0.39, P < .05). For z scores, 14 TBC interns (10.8%) had “outstanding” and 15 (11.6%) had “poor” performances, compared to ACSBC interns with 7 (5.5%) “outstanding” and 10 (7.81%) “poor” performances (P = .15).

All 130 interns (100%) completed the immediate post-TBC survey. Overall, TBC satisfaction was comparable to ACSBC, and significantly improved for satisfaction with performance (Table 2). Compared to ACSBC, TBC interns felt more prepared for simulation and handoff clinical practice. Nearly all interns would recommend TBC (99% vs 96% of ACSBC interns, P = 0.28), and 99% felt the software used for the simulation ran smoothly.

The 8-week post-TBC survey had a response rate of 88% (115/130); 69% of interns reported conducting more effective handoffs due to TBC, and 79% felt confident in handoff skills. Similarly, 73% felt more effective at calling consultations, and 75% reported retained knowledge of consultation frameworks taught during TBC. Additionally, 71% of interns reported that TBC helped identify areas for self-directed improvement. There were no significant differences in 8-week postsurvey ratings between ACSBC and TBC.

DISCUSSION

In converting the advanced communication skills bootcamp from an in-person to a virtual format, telesimulation was well-received by interns and rated similarly to in-person bootcamp in most respects. Nearly all interns agreed the experience was realistic, provided useful feedback, and prepared them for clinical practice. Although we shifted to virtual out of necessity, our results demonstrate a high-quality, streamlined bootcamp experience that was less labor-intensive for interns, staff, and faculty. Telesimulation may represent an effective strategy beyond the COVID-19 pandemic to increase ease of administration and scale the use of bootcamps in supporting advanced clinical skill training for hospital-based practice.

TBC interns felt better prepared for simulation and more satisfied with their performance than ACSBC interns, potentially due to the revised format. The mock cases were adapted and consolidated for TBC, such that the handoff and consultation simulations shared a common case, whereas previously they were separate. Thus, intern preparation for TBC required familiarity with fewer overall cases. Ultimately, TBC maintained the quality of training but required review of less information.

In comparing performance, TBC interns were rated as well or better during consultation simulation compared to ASCBC, but handoffs were rated lower. This was likely due to the change in the handoff checklist from a dichotomous to a three-level rating scale. This change was made after receiving feedback from ACSBC TFs that a rating scale allowing for more nuance was needed to provide adequate feedback to interns. Although we defined handoff item completion for TBC interns as being rated “outstanding,” if the top two rankings, “outstanding” and “satisfactory,” are dichotomized to reflect completion, TBC handoff performance is equivalent or better than ACSBC. TF recruitment additionally differed between TBC and ACSBC cohorts. In ACSBC, resident physicians served as handoff TFs, whereas only faculty were recruited for TBC. Faculty were primarily clinically active hospitalists, whose expertise in handoffs may resulted in more stringent performance ratings, contributing to differences seen.

Hospitalist groups require clinicians to be immediately proficient in essential communication skills like consultation and handoffs, potentially requiring just-in-time training and feedback for large cohorts.12 Bootcamps can meet this need but require participation and time investment by many faculty members, staff, and administrators.5,8 Combining TBC into one virtual handoff/consultation simulation required recruitment and training of 50% fewer TFs and reduced administrative burden. ACSBC consultation simulations were high-fidelity but resource-heavy, requiring reliable two-way telephones with reliable connections and separate spaces for simulation and feedback.5 Conversely, TBC only required consultations to be “called” via audio-only Zoom® discussion, then both individuals turned on cameras for feedback. The slight decrease in perceived fidelity was certainly outweighed by ease of administration. TBC’s more efficient and less labor-intensive format is an appealing strategy for hospitalist groups looking to train up clinicians, including those operating across multiple or geographically distant sites.

Our study has limitations. It occurred with one group of learners at a single site with consistent consultation and handoff communication practices, which may not be the case elsewhere. Our comparison group was a separate cohort, and groups were not randomized; thus, differences seen may reflect inherent dissimilarities in these groups. Changes to the handoff checklist rating scale between 2019 and 2020 additionally may limit the direct comparison of handoff performance between cohorts. While overall fewer resources were required, TBC implementation did require time and institutional support, along with full virtual platform capability without user or time limitations. Our preparedness outcomes were self-reported without direct measurement of clinical performance, which is an area for future work.

We describe a feasible implementation of an adapted telesimulation communication bootcamp, with comparison to a previous in-person cohort’s skills performance and satisfaction. While COVID-19 has made the future of in-person training activities uncertain, it also served as a catalyst for educational innovation that may be sustained beyond the pandemic. Although developed out of necessity, the telesimulation communication bootcamp was effective and well-received. Telesimulation represents an opportunity for hospital medicine groups to implement advanced communication skills training and assessment in a more efficient, flexible, and potentially preferable way, even after the pandemic ends.

Acknowledgments

The authors thank the staff at the University of Chicago Office of Graduate Medical Education and the UChicago Medicine Simulation Center.

Events requiring communication among and within teams are vulnerable points in patient care in hospital medicine, with communication failures representing important contributors to adverse events.1-4 Consultations and handoffs are exceptionally common inpatient practices, yet training in these practices is variable across educational and practice domains.5,6 Advanced inpatient communication-skills training requires an effective, feasible, and scalable format. Simulation-based bootcamps can effectively support clinical skills training, often in procedural domains, and have been increasingly utilized for communication skills.7,8 We previously described the development and implementation of an in-person bootcamp for training and feedback in consultation and handoff communication.5,8

As hospitalist leaders grapple with how to systematically support and assess essential clinical skills, the COVID-19 pandemic has presented another impetus to rethink current processes. The rapid shift to virtual activities met immediate needs of the pandemic, but also inspired creativity in applying new methodologies to improve teaching strategies and implementation long-term.9,10 One such strategy, telesimulation, offers a way to continue simulation-based training limited by the need for physical distancing.10 Furthermore, recent calls to study the efficacy of virtual bootcamp structures have acknowledged potential benefits, even outside of the pandemic.11

The primary objective of this feasibility study was to convert our previously described consultation and handoff bootcamp to a telesimulation bootcamp (TBC), preserving rigorous performance evaluation and opportunities for skills-based feedback. We additionally compared evaluation between virtual and in-person formats to understand the utility of telesimulation for bootcamp-based clinical education moving forward.

METHODS

Setting and Participants

The TBC occurred in June 2020 during the University of Chicago institution-wide graduate medical education (GME) orientation; 130 interns entering 13 residency programs participated. The comparison group was 128 interns who underwent the traditional University of Chicago GME orientation “Advanced Communication Skills Bootcamp” (ACSBC) in 2019.5,8

Program Description

To develop TBC, we adapted observed structured clinical experiences (OSCEs) created for ACSBC. Until 2020, ACSBC included three in-person OSCEs: (1) requesting a consultation; (2) conducting handoffs; and (3) acquiring informed consent. COVID-19 necessitated conversion of ACSBC to virtual in June 2020. For this, we selected the consultation and handoff OSCEs, as these skills require near-universal and immediate application in clinical practice. Additionally, they required only trained facilitators (TFs), whereas informed consent required standardized patients. Hospitalist and emergency medicine faculty were recruited as TFs; 7 of 12 TFs were hospitalists. Each OSCE had two parts: an asynchronous, mandatory training module and a clinical simulation. For TBC, we adapted the simulations, previously separate experiences, into a 20-minute combined handoff/consultation telesimulation using the Zoom® video platform. Interns were paired with one TF who served as both standardized consultant (for one mock case) and handoff receiver (for three mock cases, including the consultation case). TFs rated intern performance and provided feedback.

TBC occurred on June 17 and 18, 2020. Interns were emailed asynchronous modules on June 1, and mock cases and instructions on June 12. When TBC began, GME staff proctors oriented interns in the Zoom® platform. Proctors placed TFs into private breakout rooms into which interns rotated through 20-minute timeslots. Faculty received copies of all TBC materials for review (Appendix 1) and underwent Zoom®-based training 1 to 2 weeks prior.

We evaluated TBC using several methods: (1) consultation and handoff skills performance measured by two validated checklists5,8; (2) survey of intern self-reported preparedness to practice consultations and handoffs; and (3) survey of intern satisfaction. Surveys were administered both immediately post bootcamp (Appendix 2) and 8 weeks into internship (Appendix 3). Skills performance checklists were a 12-item consultation checklist5 and 6-item handoff checklist.8 The handoff checklist was modified to remove activities impossible to assess virtually (ie, orienting sign-outs in a shared space) and to add a three-level rating scale of “outstanding,” “satisfactory,” and “needs improvement.” This was done based on feedback from ACSBC to allow more nuanced feedback for interns. A rating of “outstanding” was used to define successful completion of the item (Appendix 1). Interns rated preparedness and satisfaction on 5-point Likert-type items. All measures were compared to the 2019 in-person ACSBC cohort.

Data Analysis

Stata 16.1 (StataCorp LP) was used for analysis. We dichotomized preparedness and satisfaction scores, defining ratings of “4” or “5” as “prepared” or “satisfied,” respectively. As previously described,5 we created a composite score for each intern by averaging the two checklist scores. To account for differences between raters, we normalized this score within each rater to a z score (mean, 0; SD, 1). “Poor” and “outstanding” performances were defined as z scores more than 1 SD below and above the mean, respectively. Fisher’s exact test was used to compare proportions, and the Pearson correlation coefficient to assess correlation between z scores. The University of Chicago Institutional Review Board granted exemption.
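The scoring steps above can be sketched in Python. This is a minimal illustration under stated assumptions, not the authors' Stata code. Each checklist score is assumed to be the percentage of items completed, and within-rater normalization is assumed to use the sample SD. The label "middle" for interns between -1 and +1 SD is hypothetical, as are all identifiers in the usage example. The two-sided Fisher's exact test shown here adds up the probabilities of every 2×2 table with the observed margins that is no more likely than the observed table. This is a common definition of the two-sided P value.

```python
from collections import defaultdict
from math import comb
from statistics import mean, stdev


def composite_score(consult_pct: float, handoff_pct: float) -> float:
    """Average of the two checklist scores (each assumed to be % of items completed)."""
    return (consult_pct + handoff_pct) / 2


def z_scores_by_rater(records):
    """Normalize composite scores within each rater.

    records: iterable of (intern_id, rater_id, composite) tuples. Each rater
    needs at least two interns with differing scores, otherwise the
    within-rater SD is undefined or zero.
    """
    records = list(records)
    by_rater = defaultdict(list)
    for _, rater, score in records:
        by_rater[rater].append(score)
    stats = {rater: (mean(s), stdev(s)) for rater, s in by_rater.items()}
    return {
        intern: (score - stats[rater][0]) / stats[rater][1]
        for intern, rater, score in records
    }


def performance_band(z: float) -> str:
    """'poor' below -1 SD, 'outstanding' above +1 SD; 'middle' is a hypothetical label."""
    if z < -1:
        return "poor"
    if z > 1:
        return "outstanding"
    return "middle"


def is_favorable(likert: int) -> bool:
    """Dichotomize a 5-point Likert rating: 4 or 5 counts as prepared/satisfied."""
    return likert >= 4


def fisher_exact_two_sided(a: int, b: int, c: int, d: int) -> float:
    """Two-sided Fisher's exact test for the 2x2 table [[a, b], [c, d]]."""
    row1, col1, n = a + b, a + c, a + b + c + d

    def prob(x: int) -> float:
        # Hypergeometric P(top-left cell == x) with all margins fixed.
        return comb(col1, x) * comb(n - col1, row1 - x) / comb(n, row1)

    p_obs = prob(a)
    lo, hi = max(0, row1 + col1 - n), min(row1, col1)
    # Small relative tolerance so tables tied with the observed one are included.
    total = sum(p for p in map(prob, range(lo, hi + 1)) if p <= p_obs * (1 + 1e-7))
    return min(1.0, total)


if __name__ == "__main__":
    # Hypothetical interns scored by two hypothetical raters.
    demo = [("i1", "A", 80.0), ("i2", "A", 90.0), ("i3", "A", 100.0),
            ("i4", "B", 50.0), ("i5", "B", 70.0)]
    for intern, z in z_scores_by_rater(demo).items():
        print(intern, round(z, 2), performance_band(z))
```

Normalizing within each rater means a lenient or harsh rater does not shift that rater's interns into the "poor" or "outstanding" bands. This is the purpose the text gives for the rater-level z score.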

RESULTS

All 130 entering interns participated in TBC. Internal medicine (IM) was the largest specialty (n = 37), followed by pediatrics (n = 22), emergency medicine (EM) (n = 16), and anesthesiology (n = 12). The remaining 9 programs had 2 to 10 interns each. The 128 interns in ACSBC had a similar specialty mix: 40 IM, 23 pediatrics, 14 EM, and 12 anesthesiology interns, with 2 to 10 interns in each remaining program.

TBC skills performance evaluations were compared with those of ACSBC (Table 1). The TBC cohort’s consultation performance was the same as or better than that of the ACSBC cohort. For handoffs, TBC interns completed significantly fewer checklist items than ACSBC interns. Performance on the two exercises was moderately correlated (r = 0.39, P < .05). Based on z scores, 14 TBC interns (10.8%) had “outstanding” and 15 (11.6%) had “poor” performances, compared with 7 (5.5%) “outstanding” and 10 (7.8%) “poor” performances among ACSBC interns (P = .15).

All 130 interns (100%) completed the immediate post-TBC survey. Overall satisfaction with TBC was comparable to ACSBC, and satisfaction with one’s own performance was significantly higher (Table 2). Compared with ACSBC interns, TBC interns felt more prepared for the simulation and for handoffs in clinical practice. Nearly all interns would recommend TBC (99% vs 96% of ACSBC interns, P = .28), and 99% felt the simulation software ran smoothly.

The 8-week post-TBC survey had a response rate of 88% (115/130); 69% of interns reported conducting more effective handoffs because of TBC, and 79% felt confident in their handoff skills. Similarly, 73% felt more effective at calling consultations, and 75% reported retaining knowledge of the consultation frameworks taught during TBC. Additionally, 71% of interns reported that TBC helped them identify areas for self-directed improvement. There were no significant differences in 8-week survey ratings between ACSBC and TBC.

DISCUSSION

In converting the advanced communication skills bootcamp from an in-person to a virtual format, we found that telesimulation was well received by interns and rated similarly to the in-person bootcamp in most respects. Nearly all interns agreed the experience was realistic, provided useful feedback, and prepared them for clinical practice. Although we shifted to a virtual format out of necessity, our results demonstrate a high-quality, streamlined bootcamp experience that was less labor-intensive for interns, staff, and faculty. Telesimulation may represent an effective strategy beyond the COVID-19 pandemic to ease administration and scale the use of bootcamps in supporting advanced clinical skills training for hospital-based practice.

TBC interns felt better prepared for simulation and more satisfied with their performance than ACSBC interns, potentially due to the revised format. The mock cases were adapted and consolidated for TBC, such that the handoff and consultation simulations shared a common case, whereas previously they were separate. Thus, intern preparation for TBC required familiarity with fewer overall cases. Ultimately, TBC maintained the quality of training but required review of less information.

In comparing performance, TBC interns were rated as well as or better than ACSBC interns during the consultation simulation but lower during handoffs. This difference was likely due to the change in the handoff checklist from a dichotomous to a three-level rating scale, which was made after ACSBC TFs reported that a more nuanced scale was needed to provide adequate feedback to interns. Although we defined handoff item completion for TBC interns as a rating of “outstanding,” if the top two ratings, “outstanding” and “satisfactory,” are both counted as completion, TBC handoff performance is equivalent to or better than that of ACSBC. TF recruitment also differed between the TBC and ACSBC cohorts. In ACSBC, resident physicians served as handoff TFs, whereas only faculty were recruited for TBC. Faculty were primarily clinically active hospitalists, whose expertise in handoffs may have resulted in more stringent performance ratings, contributing to the differences seen.

Hospitalist groups require clinicians to be immediately proficient in essential communication skills like consultation and handoffs, potentially requiring just-in-time training and feedback for large cohorts.12 Bootcamps can meet this need but require participation and time investment from many faculty members, staff, and administrators.5,8 Combining the handoff and consultation exercises into one virtual simulation required recruiting and training 50% fewer TFs and reduced administrative burden. ACSBC consultation simulations were high-fidelity but resource-heavy, requiring two-way telephones with reliable connections and separate spaces for simulation and feedback.5 Conversely, TBC required only that consultations be “called” via an audio-only Zoom® discussion, after which both individuals turned on their cameras for feedback. In our experience, the slight decrease in perceived fidelity was outweighed by the ease of administration. TBC’s more efficient, less labor-intensive format is an appealing strategy for hospitalist groups looking to train clinicians, including those operating across multiple or geographically distant sites.

Our study has limitations. It occurred with one group of learners at a single site with consistent consultation and handoff communication practices, which may not be the case elsewhere. Our comparison group was a separate cohort, and groups were not randomized; thus, differences seen may reflect inherent dissimilarities in these groups. Changes to the handoff checklist rating scale between 2019 and 2020 additionally may limit the direct comparison of handoff performance between cohorts. While overall fewer resources were required, TBC implementation did require time and institutional support, along with full virtual platform capability without user or time limitations. Our preparedness outcomes were self-reported without direct measurement of clinical performance, which is an area for future work.

We describe a feasible implementation of an adapted telesimulation communication bootcamp, with comparison to a previous in-person cohort’s skills performance and satisfaction. While COVID-19 has made the future of in-person training activities uncertain, it also served as a catalyst for educational innovation that may be sustained beyond the pandemic. Although developed out of necessity, the telesimulation communication bootcamp was effective and well-received. Telesimulation represents an opportunity for hospital medicine groups to implement advanced communication skills training and assessment in a more efficient, flexible, and potentially preferable way, even after the pandemic ends.

Acknowledgments

The authors thank the staff at the University of Chicago Office of Graduate Medical Education and the UChicago Medicine Simulation Center.

1. Sutcliffe KM, Lewton E, Rosenthal MM. Communication failures: an insidious contributor to medical mishaps. Acad Med. 2004;79(2):186-194. https://doi.org/10.1097/00001888-200402000-00019

2. Inadequate hand-off communication. Sentinel Event Alert. 2017;(58):1-6.

3. Horwitz LI, Meredith T, Schuur JD, Shah NR, Kulkarni RG, Jenq JY. Dropping the baton: a qualitative analysis of failures during the transition from emergency department to inpatient care. Ann Emerg Med. 2009;53(6):701-710. https://doi.org/10.1016/j.annemergmed.2008.05.007

4. Jagsi R, Kitch BT, Weinstein DF, Campbell EG, Hutter M, Weissman JS. Residents report on adverse events and their causes. Arch Intern Med. 2005;165(22):2607-2613. https://doi.org/10.1001/archinte.165.22.2607

5. Martin SK, Carter K, Hellerman N, et al. The consultation observed simulated clinical experience: training, assessment, and feedback for incoming interns on requesting consultations. Acad Med. 2018;93(12):1814-1820. https://doi.org/10.1097/ACM.0000000000002337

6. Lopez MA, Campbell J. Developing a communication curriculum for primary and consulting services. Med Educ Online. 2020;25(1):1794341. https://doi.org/10.1080/10872981.2020

7. Cohen ER, Barsuk JH, Moazed F, et al. Making July safer: simulation-based mastery learning during intern bootcamp. Acad Med. 2013;88(2):233-239. https://doi.org/10.1097/ACM.0b013e31827bfc0a

8. Gaffney S, Farnan JM, Hirsch K, McGinty M, Arora VM. The Modified, Multi-patient Observed Simulated Handoff Experience (M-OSHE): assessment and feedback for entering residents on handoff performance. J Gen Intern Med. 2016;31(4):438-441. https://doi.org/10.1007/s11606-016-3591-8

9. Woolliscroft J. Innovation in response to the COVID-19 pandemic crisis. Acad Med. 2020;95(8):1140-1142. https://doi.org/10.1097/ACM.0000000000003402

10. Anderson ML, Turbow S, Willgerodt MA, Ruhnke G. Education in a crisis: the opportunity of our lives. J Hosp Med. 2020;15(5):287-291. https://doi.org/10.12788/jhm.3431

11. Farr DE, Zeh HJ, Abdelfattah KR. Virtual bootcamps—an emerging solution to the undergraduate medical education-graduate medical education transition. JAMA Surg. 2021;156(3):282-283. https://doi.org/10.1001/jamasurg.2020.6162

12. Hepps JH, Yu CE, Calaman S. Simulation in medical education for the hospitalist: moving beyond the mock code. Pediatr Clin North Am. 2019;66(4):855-866. https://doi.org/10.1016/j.pcl.2019.03.014


© 2021 Society of Hospital Medicine

Evaluation of the Order SMARTT: An Initiative to Reduce Phlebotomy and Improve Sleep-Friendly Labs on General Medicine Services

Frequent daily laboratory testing for inpatients contributes to excessive costs,1 anemia,2 and unnecessary testing.3 The ABIM Foundation’s Choosing Wisely® campaign recommends avoiding routine labs, like complete blood counts (CBCs) and basic metabolic panels (BMPs), in the face of clinical and laboratory stability.4,5 Prior interventions have reduced unnecessary labs without adverse outcomes.6-8

In addition to lab frequency, hospitalized patients face suboptimal lab timing. Labs are often ordered as early as 4

METHODS

Setting

This study was conducted on the University of Chicago Medicine (UCM) general medicine services, which consisted of a resident-covered service supervised by general medicine, subspecialist, or hospitalist attendings and a hospitalist service staffed by hospitalists and advanced practice providers.

Development of Order SMARTT

To inform intervention development, we surveyed providers about lab-ordering preferences with use of questions from a prior survey to provide a benchmark (Appendix Table 2).15 While reducing lab frequency was supported, the modal response for how frequently a stable patient should receive routine labs was every 48 hours (Appendix Table 2). Therefore, we hypothesized that labs ordered every 48 hours may be popular. Taking labs every 48 hours would not require an urgent 4

Physician Education

We created a 20-minute presentation on the harms of excessive labs and the benefits of sleep-friendly ordering. Instructional Order SMARTT posters, which emphasized forgoing labs on stable patients and using the “Order Sleep” shortcut when nonurgent labs were needed, were displayed in clinician workrooms.

Labs Utilization Data

We used Epic Systems software (Verona, Wisconsin) and our institutional Tableau scorecard to obtain data on CBC and BMP ordering, patient census, and demographics for medical inpatients between July 1, 2017, and November 1, 2018.

Cost Analysis

Lab test costs (the actual cost to our institution) were obtained from our institutional phlebotomy service’s estimates of direct variable labor and benefits costs and direct variable supply costs.

Statistical Analysis

Data analysis was performed with SAS version 9.4 statistical software (Cary, North Carolina, USA) and R version 3.6.2 (Vienna, Austria). Descriptive statistics were used to summarize data. Surveys were analyzed using chi-square tests for categorical variables and two-sample t tests for continuous variables. For lab ordering data, interrupted time series analyses (ITSA) were used to assess changes in ordering practices associated with implementation of the two interventions, controlling for service line (resident vs hospitalist service). ITSA enables examination of changes in lab ordering while controlling for underlying time trends. The AUTOREG procedure in SAS was used to build the model and estimate final parameters; this procedure automatically tests for autocorrelation and heteroscedasticity and estimates any autoregressive parameters required in the model. Our main model tested the association of each of the two interventions with ordering practices, controlling for service (hospitalist or resident).16
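The segmented-regression idea behind ITSA can be sketched in Python. This is a simplified illustration, not the authors' SAS model. It fits ordinary least squares with one level-change term and one slope-change term per intervention. Unlike PROC AUTOREG, it makes no correction for autocorrelated or heteroscedastic errors, and it omits the service covariate. The weekly series and intervention weeks in the test are hypothetical.

```python
from typing import List, Sequence


def design_row(t: int, starts: Sequence[int]) -> List[float]:
    """One design-matrix row: intercept, baseline trend, then a level-change
    and slope-change term for each intervention starting at week `start`."""
    row = [1.0, float(t)]
    for start in starts:
        active = 1.0 if t >= start else 0.0
        row.append(active)                # immediate level change at launch
        row.append(active * (t - start))  # change in weekly slope after launch
    return row


def solve(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        acc = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - acc) / m[r][r]
    return x


def fit_itsa(y: Sequence[float], starts: Sequence[int]) -> List[float]:
    """OLS coefficients [b0, trend, level_1, slope_1, level_2, slope_2, ...]."""
    x = [design_row(t, starts) for t in range(len(y))]
    k = len(x[0])
    # Normal equations: (X'X) beta = X'y
    xtx = [[sum(row[i] * row[j] for row in x) for j in range(k)] for i in range(k)]
    xty = [sum(row[i] * yi for row, yi in zip(x, y)) for i in range(k)]
    return solve(xtx, xty)
```

The level-change terms correspond to the immediate jumps the text reports after each launch. The slope-change terms capture later drift, such as the decline that followed the initial "Order Sleep" increase on resident services.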

RESULTS

Of 125 residents, 82 (65.6%) attended the session and completed the survey. The attendance and response rate for hospitalists was 80% (16 of 20). Similar to a prior study, many residents (73.1%) reported they would be comfortable if patients received less frequent daily laboratory testing (Appendix Table 2).

We reviewed data from 7,045 total patients over 50,951 total patient-days between July 1, 2017, and November 1, 2018 (Appendix Table 3).

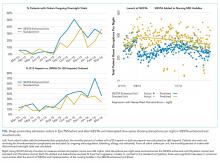

Total Lab Draws

After accounting for total patient-days, we observed an average 26.3% reduction in total lab draws per patient-day per week postintervention (4.68 before vs 3.45 after; difference, 1.23; 95% CI, 0.82-1.63; P < .05; Appendix Table 3). When total lab draws were stratified by service, we observed an average 28% reduction in total lab draws per patient-day per week on resident services (4.67 before vs 3.36 after; difference, 1.31; 95% CI, 0.88-1.74; P < .05) and an average 23.9% reduction on the hospitalist service (4.73 before vs 3.60 after; difference, 1.13; 95% CI, 0.61-1.64; P < .05; Appendix Table 3).
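The relative reductions above follow directly from the reported pre- and postintervention means. A short Python check reproduces them from those values alone. The resident-service figure works out to 28.1% at one decimal place and appears above rounded to 28%.

```python
def percent_reduction(before: float, after: float) -> float:
    """Relative reduction, in percent, from a pre- to a postintervention mean."""
    return 100 * (before - after) / before


# Mean total lab draws per patient-day per week, as reported in the text.
REPORTED = {
    "overall": (4.68, 3.45),
    "resident services": (4.67, 3.36),
    "hospitalist service": (4.73, 3.60),
}

if __name__ == "__main__":
    for label, (before, after) in REPORTED.items():
        print(f"{label}: difference {before - after:.2f}, "
              f"reduction {percent_reduction(before, after):.1f}%")
```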

Sleep-Friendly Labs by Intervention

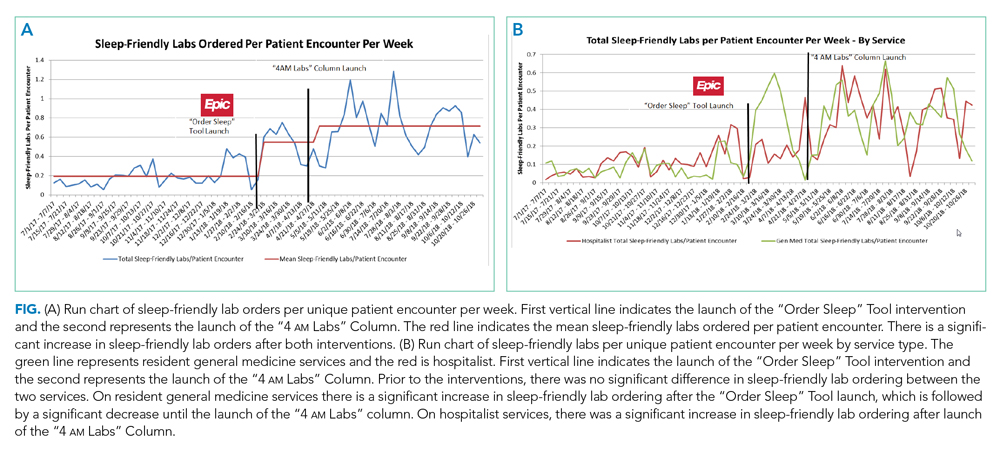

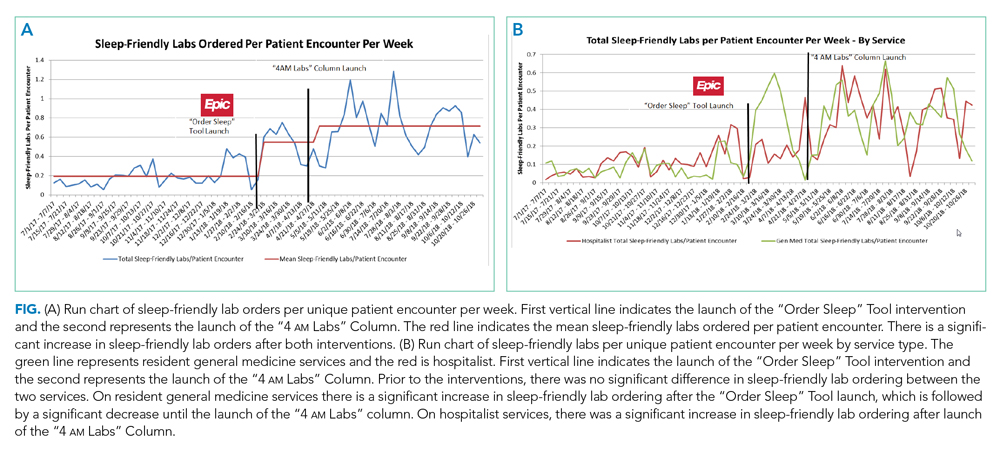

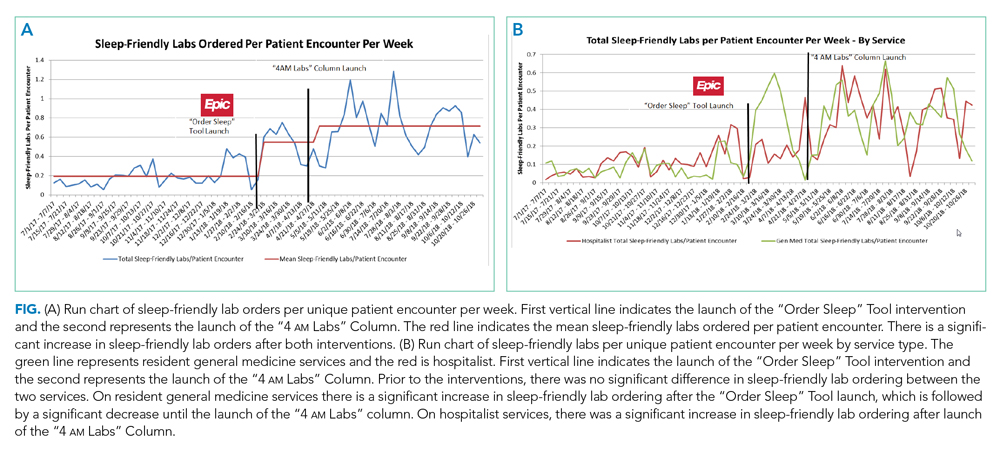

For patients with routine labs, the proportion of sleep-friendly labs drawn per patient-day increased from 6% preintervention to 21% postintervention (P < .001). ITSA showed that both interventions were associated with improved lab timing. There was a statistically significant increase in sleep-friendly labs ordered per patient encounter per week immediately after the launch of “Order Sleep” (intercept, 0.49; standard error [SE], 0.14; P = .001) and the “4

Sleep-Friendly Lab Orders by Service

Over the study period, there was no significant difference in total sleep-friendly labs ordered per month between resident and hospitalist services (84.88 vs 86.19; P = .95).

In ITSA, “Order Sleep” was associated with a statistically significant immediate increase in sleep-friendly lab orders per patient encounter per week on resident services (intercept, 1.03; SE, 0.29; P < .001). However, this initial increase was followed by a decrease over time in sleep-friendly lab orders per week (slope change, –0.1; SE, 0.04; P = .02; Table, Figure B). There was no statistically significant change observed on the hospitalist service with “Order Sleep.”

In contrast, the “4

Cost Savings

Based on estimated costs from our laboratory of $7.70 per CBC and $8.01 per BMP, our intervention saved an estimated $60,278 in lab costs alone over the 16-month study period (Appendix Table 4).
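The cost estimate can be expressed as averted tests multiplied by unit cost. The sketch below assumes that formula. The article does not state how the $60,278 figure was derived beyond the unit costs (see Appendix Table 4), and the averted-test counts in the usage example are hypothetical, not study data. Decimal arithmetic keeps currency amounts exact rather than subject to binary floating-point rounding.

```python
from decimal import Decimal
from typing import Dict

# Per-test institutional costs reported in the text.
UNIT_COST: Dict[str, Decimal] = {"CBC": Decimal("7.70"), "BMP": Decimal("8.01")}


def estimated_savings(averted: Dict[str, int]) -> Decimal:
    """Assumed model: savings = sum over test types of (tests averted x unit cost)."""
    return sum((UNIT_COST[test] * count for test, count in averted.items()),
               Decimal("0"))


if __name__ == "__main__":
    # Hypothetical counts of averted tests, for illustration only.
    print(estimated_savings({"CBC": 1000, "BMP": 1000}))
```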

DISCUSSION

To our knowledge, this is the first study to show that a multicomponent intervention using EHR tools can both reduce the frequency and optimize the timing of routine lab ordering. Our project had two interventions implemented at two different times: First, an “Order Sleep” shortcut was introduced to select sleep-friendly lab timing, including a 6

While the “Order Sleep” tool was initially associated with significant increases in sleep-friendly orders on resident services, this change was not sustained. This may reflect a short-lived effect of the education rather than sustained adoption of the tool. In contrast, the “4

The “4

While other institutions have attempted to shift lab-timing by altering phlebotomy workflows10 or via conscious decision-making on rounds,9 our study differs in several ways. We avoided default options and allowed clinicians to select sleep-friendly labs to promote buy-in. It is sometimes necessary to order 4

Our study had several limitations. First, this was a single-center study on adult medicine services, which limits generalizability. Although we considered surgical services, their early rounds made deviations from 4

In conclusion, a multicomponent intervention using EHR tools can reduce inpatient daily lab frequency and optimize lab timing to help promote patient sleep.

Acknowledgments

The authors would like to thank The University of Chicago Center for Healthcare Delivery Science and Innovation for sponsoring their annual Choosing Wisely Challenge, which allowed for access to institutional support and resources for this study. We would also like to thank Mary Kate Springman, MHA, and John Fahrenbach, PhD, for their assistance with this project. Dr Tapaskar also received mentorship through the Future Leader Program for the High Value Practice Academic Alliance.

1. Eaton KP, Levy K, Soong C, et al. Evidence-based guidelines to eliminate repetitive laboratory testing. JAMA Intern Med. 2017;177(12):1833-1839. https://doi.org/10.1001/jamainternmed.2017.5152

2. Thavendiranathan P, Bagai A, Ebidia A, Detsky AS, Choudhry NK. Do blood tests cause anemia in hospitalized patients? J Gen Intern Med. 2005;20(6):520-524. https://doi.org/10.1111/j.1525-1497.2005.0094.x

3. Korenstein D, Husain S, Gennarelli RL, White C, Masciale JN, Roman BR. Impact of clinical specialty on attitudes regarding overuse of inpatient laboratory testing. J Hosp Med. 2018;13(12):844-847. https://doi.org/10.12788/jhm.2978

4. Choosing Wisely. 2020. Accessed January 10, 2020. http://www.choosingwisely.org/getting-started/

5. Bulger J, Nickel W, Messler J, et al. Choosing wisely in adult hospital medicine: five opportunities for improved healthcare value. J Hosp Med. 2013;8(9):486-492. https://doi.org/10.1002/jhm.2063

6. Stuebing EA, Miner TJ. Surgical vampires and rising health care expenditure: reducing the cost of daily phlebotomy. Arch Surg. 2011;146(5):524-527. https://doi.org/10.1001/archsurg.2011.103

7. Attali M, Barel Y, Somin M, et al. A cost-effective method for reducing the volume of laboratory tests in a university-associated teaching hospital. Mt Sinai J Med. 2006;73(5):787-794.

8. Vidyarthi AR, Hamill T, Green AL, Rosenbluth G, Baron RB. Changing resident test ordering behavior: a multilevel intervention to decrease laboratory utilization at an academic medical center. Am J Med Qual. 2015;30(1):81-87. https://doi.org/10.1177/1062860613517502

9. Krafft CA, Biondi EA, Leonard MS, et al. Ending the 4 AM Blood Draw. Presented at: American Academy of Pediatrics Experience; October 25, 2015, Washington, DC. Accessed January 10, 2020. https://aap.confex.com/aap/2015/webprogrampress/Paper31640.html

10. Ramarajan V, Chima HS, Young L. Implementation of later morning specimen draws to improve patient health and satisfaction. Lab Med. 2016;47(1):e1-e4. https://doi.org/10.1093/labmed/lmv013

11. Delaney LJ, Van Haren F, Lopez V. Sleeping on a problem: the impact of sleep disturbance on intensive care patients - a clinical review. Ann Intensive Care. 2015;5:3. https://doi.org/10.1186/s13613-015-0043-2

12. Knutson KL, Spiegel K, Penev P, Van Cauter E. The metabolic consequences of sleep deprivation. Sleep Med Rev. 2007;11(3):163-178. https://doi.org/10.1016/j.smrv.2007.01.002

13. Ho A, Raja B, Waldhorn R, Baez V, Mohammed I. New onset of insomnia in hospitalized patients in general medical wards: incidence, causes, and resolution rate. J Community Hosp Int. 2017;7(5):309-313. https://doi.org/10.1080/20009666.2017.1374108

14. Arora VM, Machado N, Anderson SL, et al. Effectiveness of SIESTA on objective and subjective metrics of nighttime hospital sleep disruptors. J Hosp Med. 2019;14(1):38-41. https://doi.org/10.12788/jhm.3091

15. Roman BR, Yang A, Masciale J, Korenstein D. Association of Attitudes Regarding Overuse of Inpatient Laboratory Testing With Health Care Provider Type. JAMA Intern Med. 2017;177(8):1205-1207. https://doi.org/10.1001/jamainternmed.2017.1634

16. Penfold RB, Zhang F. Use of interrupted time series analysis in evaluating health care quality improvements. Acad Pediatr. 2013;13(6 Suppl):S38-S44. https://doi.org/10.1016/j.acap.2013.08.002

Frequent daily laboratory testing for inpatients contributes to excessive costs,1 anemia,2 and unnecessary testing.3 The ABIM Foundation’s Choosing Wisely® campaign recommends avoiding routine labs, like complete blood counts (CBCs) and basic metabolic panels (BMP), in the face of clinical and laboratory stability.4,5 Prior interventions have reduced unnecessary labs without adverse outcomes.6-8

In addition to lab frequency, hospitalized patients face suboptimal lab timing. Labs are often ordered as early as 4

METHODS

Setting

This study was conducted on the University of Chicago Medicine (UCM) general medicine services, which consisted of a resident-covered service supervised by general medicine, subspecialist, or hospitalist attendings and a hospitalist service staffed by hospitalists and advanced practice providers.

Development of Order SMARTT

To inform intervention development, we surveyed providers about lab-ordering preferences with use of questions from a prior survey to provide a benchmark (Appendix Table 2).15 While reducing lab frequency was supported, the modal response for how frequently a stable patient should receive routine labs was every 48 hours (Appendix Table 2). Therefore, we hypothesized that labs ordered every 48 hours may be popular. Taking labs every 48 hours would not require an urgent 4

Physician Education

We created a 20-minute presentation on the harms of excessive labs and the benefits of sleep-friendly ordering. Instructional Order SMARTT posters were posted in clinician workrooms that emphasized forgoing labs on stable patients and using the “Order Sleep” shortcut when nonurgent labs were needed.

Labs Utilization Data

We used Epic Systems software (Verona, Wisconsin) and our institutional Tableau scorecard to obtain data on CBC and BMP ordering, patient census, and demographics for medical inpatients between July 1, 2017, and November 1, 2018.

Cost Analysis

Costs of lab tests (actual cost to our institution) were obtained from our institutional phlebotomy services’ estimates of direct variable labor and benefits costs and direct variable supplies cost.

Statistical Analysis

Data analysis was performed with SAS version 9.4 statistical software (Cary, North Carolina, USA) and R version 3.6.2 (Vienna, Austria). Descriptive statistics were used to summarize data. Surveys were analyzed using chi-square tests for categorical variables and two-sample t tests for continuous variables. For lab ordering data, interrupted time series analyses (ITSA) were used to determine the changes in ordering practices with the implementation of the two interventions controlling for service lines (resident vs hospitalist service). ITSA enables examination of changes in lab ordering while controlling for time. The AUTOREG function in SAS was used to build the model and estimate final parameters. This function automatically tests for autocorrelation, heteroscedasticity, and estimates any autoregressive parameters required in the model. Our main model tested the association between our two separate interventions on ordering practices, controlling for service (hospitalist or resident).16

RESULTS

Of 125 residents, 82 (65.6%) attended the session and completed the survey. Attendance and response rate for hospitalists was 80% (16 of 20). Similar to a prior study, many residents (73.1%) reported they would be comfortable if patients received less daily laboratory testing (Appendix Table 2).

We reviewed data from 7,045 total patients over 50,951 total patient days between July1, 2017, and November 1, 2018 (Appendix Table 3).

Total Lab Draws

After accounting for total patient days, we saw 26.3% reduction on average in total lab draws per patient-day per week postintervention (4.68 before vs 3.45 after; difference, 1.23; 95% CI, 0.82-1.63; P < .05; Appendix Table 3). When total lab draws were stratified by service, we saw 28% reduction on average in total lab draws per patient-day per week on resident services (4.67 before vs 3.36 after; difference, 1.31; 95% CI, 0.88-1.74; P < .05) and 23.9% reduction on average in lab draws/patient-day per week on the hospitalist service (4.73 before vs 3.60 after; difference, 1.13; 95% CI, 0.61-1.64; P < .05; Appendix Table 3).

Sleep-Friendly Labs by Intervention

For patients with routine labs, the proportion of sleep-friendly labs drawn per patient-day increased from 6% preintervention to 21% postintervention (P < .001). ITSA demonstrated both interventions were associated with improving lab timing. There was a statistically significant increase in sleep-friendly labs ordered per patient encounter per week immediately after the launch of “Order Sleep” (intercept, 0.49; standard error (SE), 0.14; P = .001) and the “4

Sleep-Friendly Lab Orders by Service

Over the study period, there was no significant difference in total sleep-friendly labs ordered/month between resident and hospitalist services (84.88 vs 86.19; P = .95).

In ITSA, “Order Sleep” was associated with a statistically significant immediate increase in sleep-friendly lab orders per patient encounter per week on resident services (intercept, 1.03; SE, 0.29; P < .001). However, this initial increase was followed by a decrease over time in sleep-friendly lab orders per week (slope change, –0.1; SE, 0.04; P = .02; Table, Figure B). There was no statistically significant change observed on the hospitalist service with “Order Sleep.”

In contrast, the “4

Cost Savings

Using an estimated cost of $7.70 for CBCs and $8.01 for BMPs from our laboratory, our intervention saved an estimated $60,278 in lab costs alone over the 16-month study period (Appendix Table 4).

DISCUSSION

To our knowledge, this is the first study showing a multicomponent intervention using EHR tools can both reduce frequency and optimize timing of routine lab ordering. Our project had two interventions implemented at two different times: First, an “Order Sleep” shortcut was introduced to select sleep-friendly lab timing, including a 6

While the “Order Sleep” tool was initially associated with significant increases in sleep-friendly orders on resident services, this change was not sustained. This could have been caused by the short-lived effect of education more than sustained adoption of the tool. In contrast, the “4

The “4

While other institutions have attempted to shift lab-timing by altering phlebotomy workflows10 or via conscious decision-making on rounds,9 our study differs in several ways. We avoided default options and allowed clinicians to select sleep-friendly labs to promote buy-in. It is sometimes necessary to order 4

Our study had several limitations. First, this was a single center study on adult medicine services, which limits generalizability. Although we considered surgical services, their early rounds made deviations from 4

In conclusion, a multicomponent intervention using EHR tools can reduce inpatient daily lab frequency and optimize lab timing to help promote patient sleep.

Acknowledgments

The authors would like to thank The University of Chicago Center for Healthcare Delivery Science and Innovation for sponsoring their annual Choosing Wisely Challenge, which allowed for access to institutional support and resources for this study. We would also like to thank Mary Kate Springman, MHA, and John Fahrenbach, PhD, for their assistance with this project. Dr Tapaskar also received mentorship through the Future Leader Program for the High Value Practice Academic Alliance.

Frequent daily laboratory testing for inpatients contributes to excessive costs,1 anemia,2 and unnecessary testing.3 The ABIM Foundation’s Choosing Wisely® campaign recommends avoiding routine labs, like complete blood counts (CBCs) and basic metabolic panels (BMP), in the face of clinical and laboratory stability.4,5 Prior interventions have reduced unnecessary labs without adverse outcomes.6-8

In addition to lab frequency, hospitalized patients face suboptimal lab timing. Labs are often ordered as early as 4

METHODS

Setting

This study was conducted on the University of Chicago Medicine (UCM) general medicine services, which consisted of a resident-covered service supervised by general medicine, subspecialist, or hospitalist attendings and a hospitalist service staffed by hospitalists and advanced practice providers.

Development of Order SMARTT

To inform intervention development, we surveyed providers about lab-ordering preferences with use of questions from a prior survey to provide a benchmark (Appendix Table 2).15 While reducing lab frequency was supported, the modal response for how frequently a stable patient should receive routine labs was every 48 hours (Appendix Table 2). Therefore, we hypothesized that labs ordered every 48 hours may be popular. Taking labs every 48 hours would not require an urgent 4

Physician Education

We created a 20-minute presentation on the harms of excessive labs and the benefits of sleep-friendly ordering. We also placed instructional Order SMARTT posters in clinician workrooms; the posters emphasized forgoing labs on stable patients and using the “Order Sleep” shortcut when nonurgent labs were needed.

Lab Utilization Data

We used Epic Systems software (Verona, Wisconsin) and our institutional Tableau scorecard to obtain data on CBC and BMP ordering, patient census, and demographics for medical inpatients between July 1, 2017, and November 1, 2018.

Cost Analysis

Costs of lab tests (actual cost to our institution) were obtained from our institutional phlebotomy services’ estimates of direct variable labor and benefits costs and direct variable supplies cost.

Statistical Analysis

Data analysis was performed with SAS version 9.4 statistical software (Cary, North Carolina, USA) and R version 3.6.2 (Vienna, Austria). Descriptive statistics were used to summarize data. Surveys were analyzed using chi-square tests for categorical variables and two-sample t tests for continuous variables. For lab ordering data, interrupted time series analyses (ITSA) were used to determine changes in ordering practices after implementation of the two interventions, controlling for service line (resident vs hospitalist service). ITSA enables examination of changes in lab ordering while controlling for underlying time trends. The AUTOREG procedure in SAS was used to build the model and estimate final parameters. This procedure tests for autocorrelation and heteroscedasticity and estimates any autoregressive parameters required in the model. Our main model tested the association of each of our two separate interventions with ordering practices, controlling for service (hospitalist or resident).16
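To make the segmented-regression structure of an ITSA with two interventions concrete, the sketch below builds a design matrix containing a baseline trend, a level change and a slope change for each intervention, and a service-line indicator, then fits it by ordinary least squares with NumPy. It is a minimal illustration under stated assumptions, not the authors' model: the week indices, intervention start weeks, coefficients, and helper names (`design_matrix`, `fit_itsa`) are hypothetical, and plain OLS does not model the autoregressive errors that SAS PROC AUTOREG estimates.

```python
import numpy as np


def design_matrix(weeks, start1, start2, resident):
    """Segmented-regression design matrix for two sequential interventions.

    weeks    -- week index of each observation (0, 1, 2, ...)
    start1   -- hypothetical first week of intervention 1
    start2   -- hypothetical first week of intervention 2
    resident -- 1 for resident service, 0 for hospitalist service
    """
    weeks = np.asarray(weeks, dtype=float)
    return np.column_stack([
        np.ones_like(weeks),                # baseline intercept
        weeks,                              # pre-existing secular trend
        (weeks >= start1).astype(float),    # level change after intervention 1
        np.maximum(weeks - start1, 0.0),    # slope change after intervention 1
        (weeks >= start2).astype(float),    # level change after intervention 2
        np.maximum(weeks - start2, 0.0),    # slope change after intervention 2
        np.asarray(resident, dtype=float),  # service-line control
    ])


def fit_itsa(y, X):
    """Ordinary least squares estimates, in the column order of X."""
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    return coef


# Synthetic example: 70 weeks observed on each of two service lines.
weeks = np.tile(np.arange(70), 2)
resident = np.repeat([1, 0], 70)
X = design_matrix(weeks, start1=20, start2=45, resident=resident)
true_coef = np.array([0.2, 0.0, 0.5, -0.01, 0.8, 0.0, 0.1])
y = X @ true_coef  # noiseless outcome, so OLS should recover true_coef
print(np.round(fit_itsa(y, X), 3))
```

Because the synthetic outcome is noiseless, the fitted coefficients should match the generating values; with real weekly data, residual autocorrelation would need to be checked and modeled before interpreting standard errors.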

RESULTS

Of 125 residents, 82 (65.6%) attended the session and completed the survey. Attendance and response rate for hospitalists was 80% (16 of 20). Similar to a prior study, many residents (73.1%) reported they would be comfortable if patients received less daily laboratory testing (Appendix Table 2).

We reviewed data from 7,045 total patients over 50,951 total patient days between July 1, 2017, and November 1, 2018 (Appendix Table 3).

Total Lab Draws

After accounting for total patient days, we observed an average 26.3% reduction in total lab draws per patient-day per week postintervention (4.68 before vs 3.45 after; difference, 1.23; 95% CI, 0.82-1.63; P < .05; Appendix Table 3). When total lab draws were stratified by service, we observed an average 28% reduction in total lab draws per patient-day per week on resident services (4.67 before vs 3.36 after; difference, 1.31; 95% CI, 0.88-1.74; P < .05) and an average 23.9% reduction in lab draws per patient-day per week on the hospitalist service (4.73 before vs 3.60 after; difference, 1.13; 95% CI, 0.61-1.64; P < .05; Appendix Table 3).
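The percentages above are relative reductions, that is, (before − after) / before. The short Python check below recomputes them from the rounded means reported in this paragraph. It is only a consistency check on the arithmetic, since the published estimates and CIs came from the underlying data; the helper name `relative_reduction` is hypothetical.

```python
def relative_reduction(before: float, after: float) -> tuple[float, float]:
    """Return (absolute difference, percent reduction relative to baseline)."""
    diff = before - after
    return diff, 100.0 * diff / before


# Mean lab draws per patient-day per week (before, after), as reported above.
reported = {
    "all services": (4.68, 3.45),
    "resident services": (4.67, 3.36),
    "hospitalist service": (4.73, 3.60),
}

for label, (pre, post) in reported.items():
    diff, pct = relative_reduction(pre, post)
    print(f"{label}: difference {diff:.2f}, reduction {pct:.1f}%")
```

From the rounded means, the resident-service reduction comes out at about 28.1%, which the text reports as 28%.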

Sleep-Friendly Labs by Intervention

For patients with routine labs, the proportion of sleep-friendly labs drawn per patient-day increased from 6% preintervention to 21% postintervention (P < .001). ITSA demonstrated both interventions were associated with improving lab timing. There was a statistically significant increase in sleep-friendly labs ordered per patient encounter per week immediately after the launch of “Order Sleep” (intercept, 0.49; standard error (SE), 0.14; P = .001) and the “4

Sleep-Friendly Lab Orders by Service

Over the study period, there was no significant difference in total sleep-friendly labs ordered per month between resident and hospitalist services (84.88 vs 86.19; P = .95).

In ITSA, “Order Sleep” was associated with a statistically significant immediate increase in sleep-friendly lab orders per patient encounter per week on resident services (intercept, 1.03; SE, 0.29; P < .001). However, this initial increase was followed by a decrease over time in sleep-friendly lab orders per week (slope change, –0.1; SE, 0.04; P = .02; Table, Figure B). There was no statistically significant change observed on the hospitalist service with “Order Sleep.”
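In a segmented regression, the estimated effect relative to the pre-intervention trend k weeks after launch is the level change plus the slope change multiplied by k. As a rough illustration using the resident-service point estimates for “Order Sleep” (level change 1.03, slope change −0.1), the sketch below finds where that net effect reaches zero. It assumes the slope-change term counts weeks from zero at launch and that the linear trend holds; the estimates are rounded and carry sizable standard errors, so the crossover point is approximate and is not a figure reported by the study. The function names are hypothetical.

```python
def net_effect(level_change: float, slope_change: float, weeks_since: float) -> float:
    """Estimated difference from the pre-intervention trend, k weeks after launch."""
    return level_change + slope_change * weeks_since


def weeks_until_offset(level_change: float, slope_change: float) -> float:
    """Weeks after launch at which a negative slope change cancels a positive level jump."""
    if slope_change >= 0:
        raise ValueError("slope change must be negative for the jump to be offset")
    return -level_change / slope_change


# Resident-service point estimates for "Order Sleep" from the Results.
k = weeks_until_offset(1.03, -0.1)
print(f"net effect reaches zero about {k:.1f} weeks after launch")
```

With these inputs the crossover falls at about 10.3 weeks after launch, which is consistent with the text's description of an initial increase that was not sustained.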

In contrast, the “4

Cost Savings

Using our laboratory's estimated costs of $7.70 per CBC and $8.01 per BMP, we estimated that the intervention saved $60,278 in lab costs alone over the 16-month study period (Appendix Table 4).
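Presumably the savings estimate multiplies the number of avoided tests by the per-test institutional cost. The sketch below shows that arithmetic with the per-test costs stated above; the test counts in the example are hypothetical placeholders (the actual counts are in Appendix Table 4), so the output is not the study's $60,278 figure, and the function name `lab_cost_savings` is likewise illustrative.

```python
CBC_COST = 7.70  # institutional cost per CBC, from the Results
BMP_COST = 8.01  # institutional cost per BMP, from the Results


def lab_cost_savings(avoided_cbcs: int, avoided_bmps: int) -> float:
    """Estimated savings: avoided tests multiplied by per-test institutional cost."""
    return avoided_cbcs * CBC_COST + avoided_bmps * BMP_COST


# Hypothetical counts for illustration only (see Appendix Table 4 for the real ones).
print(f"${lab_cost_savings(1000, 1000):,.2f}")  # prints $15,710.00
```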

DISCUSSION

To our knowledge, this is the first study showing a multicomponent intervention using EHR tools can both reduce frequency and optimize timing of routine lab ordering. Our project had two interventions implemented at two different times: First, an “Order Sleep” shortcut was introduced to select sleep-friendly lab timing, including a 6

While the “Order Sleep” tool was initially associated with significant increases in sleep-friendly orders on resident services, this change was not sustained. This pattern may reflect a short-lived effect of education rather than sustained adoption of the tool. In contrast, the “4

The “4

While other institutions have attempted to shift lab-timing by altering phlebotomy workflows10 or via conscious decision-making on rounds,9 our study differs in several ways. We avoided default options and allowed clinicians to select sleep-friendly labs to promote buy-in. It is sometimes necessary to order 4

Our study had several limitations. First, this was a single-center study on adult medicine services, which limits generalizability. Although we considered surgical services, their early rounds made deviations from 4

In conclusion, a multicomponent intervention using EHR tools can reduce inpatient daily lab frequency and optimize lab timing to help promote patient sleep.

Acknowledgments

The authors would like to thank The University of Chicago Center for Healthcare Delivery Science and Innovation for sponsoring their annual Choosing Wisely Challenge, which allowed for access to institutional support and resources for this study. We would also like to thank Mary Kate Springman, MHA, and John Fahrenbach, PhD, for their assistance with this project. Dr Tapaskar also received mentorship through the Future Leader Program for the High Value Practice Academic Alliance.

1. Eaton KP, Levy K, Soong C, et al. Evidence-based guidelines to eliminate repetitive laboratory testing. JAMA Intern Med. 2017;177(12):1833-1839. https://doi.org/10.1001/jamainternmed.2017.5152

2. Thavendiranathan P, Bagai A, Ebidia A, Detsky AS, Choudhry NK. Do blood tests cause anemia in hospitalized patients? J Gen Intern Med. 2005;20(6):520-524. https://doi.org/10.1111/j.1525-1497.2005.0094.x

3. Korenstein D, Husain S, Gennarelli RL, White C, Masciale JN, Roman BR. Impact of clinical specialty on attitudes regarding overuse of inpatient laboratory testing. J Hosp Med. 2018;13(12):844-847. https://doi.org/10.12788/jhm.2978

4. Choosing Wisely. 2020. Accessed January 10, 2020. http://www.choosingwisely.org/getting-started/

5. Bulger J, Nickel W, Messler J, et al. Choosing wisely in adult hospital medicine: five opportunities for improved healthcare value. J Hosp Med. 2013;8(9):486-492. https://doi.org/10.1002/jhm.2063

6. Stuebing EA, Miner TJ. Surgical vampires and rising health care expenditure: reducing the cost of daily phlebotomy. Arch Surg. 2011;146(5):524-527. https://doi.org/10.1001/archsurg.2011.103

7. Attali M, Barel Y, Somin M, et al. A cost-effective method for reducing the volume of laboratory tests in a university-associated teaching hospital. Mt Sinai J Med. 2006;73(5):787-794.

8. Vidyarthi AR, Hamill T, Green AL, Rosenbluth G, Baron RB. Changing resident test ordering behavior: a multilevel intervention to decrease laboratory utilization at an academic medical center. Am J Med Qual. 2015;30(1):81-87. https://doi.org/10.1177/1062860613517502

9. Krafft CA, Biondi EA, Leonard MS, et al. Ending the 4 AM Blood Draw. Presented at: American Academy of Pediatrics Experience; October 25, 2015, Washington, DC. Accessed January 10, 2020. https://aap.confex.com/aap/2015/webprogrampress/Paper31640.html

10. Ramarajan V, Chima HS, Young L. Implementation of later morning specimen draws to improve patient health and satisfaction. Lab Med. 2016;47(1):e1-e4. https://doi.org/10.1093/labmed/lmv013

11. Delaney LJ, Van Haren F, Lopez V. Sleeping on a problem: the impact of sleep disturbance on intensive care patients - a clinical review. Ann Intensive Care. 2015;5:3. https://doi.org/10.1186/s13613-015-0043-2

12. Knutson KL, Spiegel K, Penev P, Van Cauter E. The metabolic consequences of sleep deprivation. Sleep Med Rev. 2007;11(3):163-178. https://doi.org/10.1016/j.smrv.2007.01.002

13. Ho A, Raja B, Waldhorn R, Baez V, Mohammed I. New onset of insomnia in hospitalized patients in general medical wards: incidence, causes, and resolution rate. J Community Hosp Int. 2017;7(5):309-313. https://doi.org/10.1080/20009666.2017.1374108

14. Arora VM, Machado N, Anderson SL, et al. Effectiveness of SIESTA on objective and subjective metrics of nighttime hospital sleep disruptors. J Hosp Med. 2019;14(1):38-41. https://doi.org/10.12788/jhm.3091

15. Roman BR, Yang A, Masciale J, Korenstein D. Association of attitudes regarding overuse of inpatient laboratory testing with health care provider type. JAMA Intern Med. 2017;177(8):1205-1207. https://doi.org/10.1001/jamainternmed.2017.1634

16. Penfold RB, Zhang F. Use of interrupted time series analysis in evaluating health care quality improvements. Acad Pediatr. 2013;13(6 Suppl):S38-S44. https://doi.org/10.1016/j.acap.2013.08.002

© 2020 Society of Hospital Medicine

Describing Variability of Inpatient Consultation Practices: Physician, Patient, and Admission Factors

Inpatient consultation is an extremely common practice with the potential to improve patient outcomes significantly.1-3 However, variability in consultation practices may be risky for patients. In addition to underuse when the benefit is clear, the overuse of consultation may lead to additional testing and therapies, increased length of stay (LOS) and costs, conflicting recommendations, and opportunities for communication breakdown.

Consultation use is often at the discretion of individual providers. While this decision is frequently driven by patient needs, consultation practices vary significantly in ways not fully explained by patient factors.1 Prior work has described hospital-level variation1 and has shown that primary care physicians use more consultation than hospitalists.4 However, other factors affecting consultation remain unknown. We sought to explore physician-, patient-, and admission-level factors associated with consultation use on inpatient general medicine services.

METHODS