Decrease in Inpatient Telemetry Utilization Through a System-Wide Electronic Health Record Change and a Multifaceted Hospitalist Intervention

Wasteful care may account for between 21% and 34% of the United States’ $3.2 trillion in annual healthcare expenditures, making it a prime target for cost-saving initiatives.1,2 Telemetry is a target for value improvement strategies because it is overutilized, rarely leads to a change in management, and has associated guidelines on appropriate use.3-10 Telemetry use has been a focus of the Joint Commission’s National Patient Safety Goals since 2014, and it is also a focus of the Society of Hospital Medicine’s Choosing Wisely® campaign.11-13

Previous initiatives have evaluated how changes to telemetry orders or education and feedback affect telemetry use. Few studies have compared a system-wide electronic health record (EHR) approach to a multifaceted intervention. In seeking to address this gap, we adapted published guidelines from the American Heart Association (AHA) and incorporated them into our EHR ordering process.3 Simultaneously, we implemented a multifaceted quality improvement initiative and compared this combined program’s effectiveness to that of the EHR approach alone.

METHODS

Study Design, Setting, and Population

We performed a 2-group observational pre- to postintervention study at University of Utah Health. We included hospital encounters of patients 18 years and older who had at least 1 inpatient acute care (non-intensive care unit [ICU]) room charge and an admission date between January 1, 2014, and July 31, 2016. Patient encounters with missing encounter-level covariates, such as case mix index (CMI) or attending provider identification, were excluded. The Institutional Review Board classified this project as quality improvement and did not require review and oversight.

Intervention

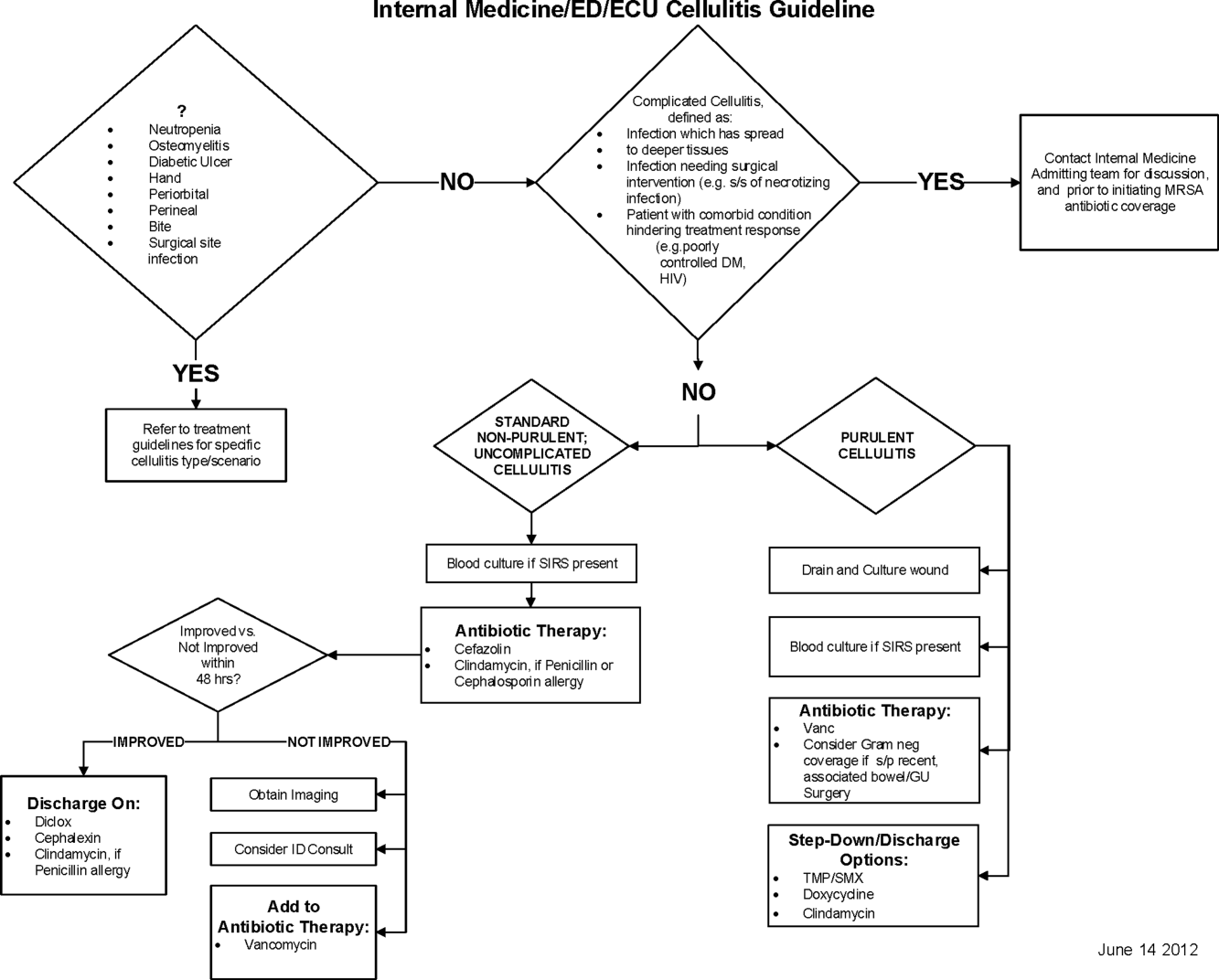

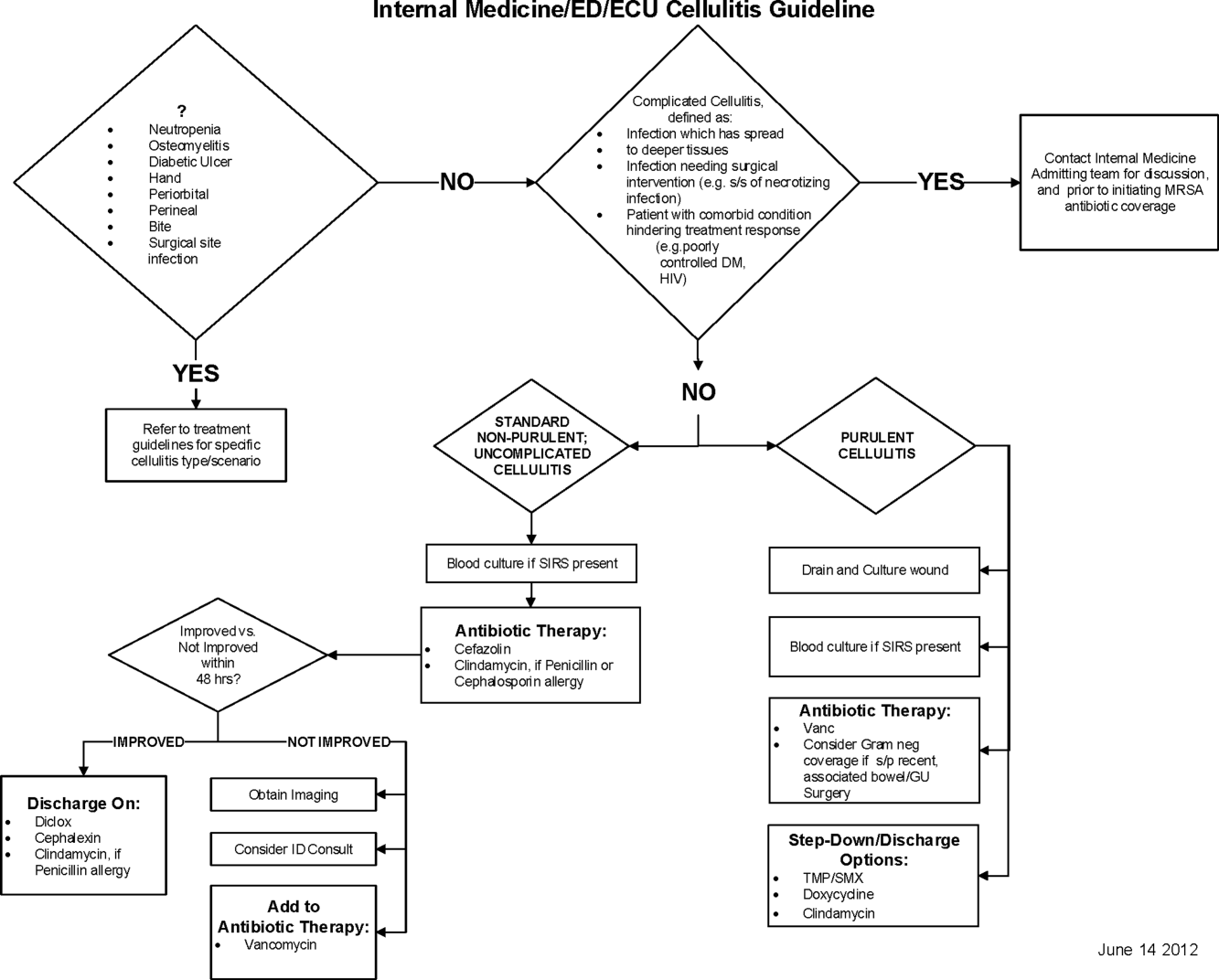

On July 6, 2015, our Epic (Epic Systems Corporation, Madison, WI) EHR telemetry order was modified to discourage unnecessary telemetry monitoring. The new order required providers ordering telemetry to choose a clinical indication and select a duration for monitoring, after which the order would expire and require physician renewal or discontinuation. These were the only changes that occurred for nonhospitalist providers. The nonhospitalist group included all admitting providers who were not hospitalists. This group included neurology (6.98%); cardiology (8.13%); other medical specialties such as pulmonology, hematology, and oncology (21.30%); cardiothoracic surgery (3.72%); orthopedic surgery (14.84%); general surgery (11.11%); neurosurgery (11.07%); and other surgical specialties, including urology, transplant, vascular surgery, and plastics (16.68%).

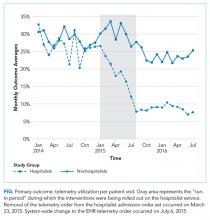

Between January 2015 and June 2015, we implemented a multicomponent program among our hospitalist service. The hospitalist service is composed of 4 teams with internal medicine residents and 2 teams with advanced practice providers, all staffed by academic hospitalists. Our program was composed of 5 elements, all of which were implemented before the hospital-wide changes to electronic telemetry orders and maintained throughout the study period, as follows: (1) a single provider education session reviewing available evidence (eg, AHA guidelines, Choosing Wisely® campaign), (2) removal of the telemetry order from the hospitalist admission order set on March 23, 2015, (3) inclusion of telemetry discussion in the hospitalist group’s daily “Rounding Checklist,”14 (4) monthly feedback provided as part of hospitalist group meetings, and (5) a financial incentive, awarded to the division (no individual provider payment) if performance targets were met. See supplementary Appendix (“Implementation Manual”) for further details.

Data Source

We obtained data on patient age, gender, Medicare Severity-Diagnosis Related Group, Charlson comorbidity index (CCI), CMI, admitting unit, attending physician, admission and discharge dates, length of stay (LOS), 30-day readmission, bed charge (telemetry or nontelemetry), ICU stay, and inpatient mortality from the enterprise data warehouse. Telemetry days were determined through room billing charges, which are assigned based on the presence or absence of an active telemetry order at midnight. Code events came from a log kept by the hospital telephone operator, who is responsible for sending out all calls to the code team. Code event data were available starting July 19, 2014.

Measures

Our primary outcome was the percentage of hospital days that had telemetry charges for individual patients. All billed telemetry days on acute care floors were included regardless of admission status (inpatient vs observation), service, indication, or ordering provider. Secondary outcomes were inpatient mortality, escalation of care, code event rates, and appropriate telemetry utilization rates. Escalation of care was defined as transfer to an ICU after initially being admitted to an acute care floor. The code event rate was defined as the ratio of the number of code team activations to the number of patient days. Appropriate telemetry utilization rates were determined via chart review, as detailed below.
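The two rate definitions above can be illustrated with a minimal Python sketch. It is not the authors' analysis code (the study used SAS); the `Visit` fields, the helper names, the per-1000-patient-days scaling, and all numbers are hypothetical and chosen only to make the arithmetic visible.

```python
from dataclasses import dataclass


@dataclass
class Visit:
    acute_care_days: int  # billed non-ICU acute care days (hypothetical field name)
    telemetry_days: int   # billed days with a telemetry room charge (hypothetical field name)


def telemetry_utilization_pct(visit: Visit) -> float:
    """Percentage of a visit's acute care days that carried a telemetry charge."""
    return 100.0 * visit.telemetry_days / visit.acute_care_days


def code_event_rate(code_activations: int, patient_days: int, per: int = 1000) -> float:
    """Code team activations per `per` patient days (the scaling is an assumption)."""
    return per * code_activations / patient_days


# Hypothetical data: a 4-day visit with 2 telemetry days,
# and 3 code activations over 1500 patient days.
example_utilization = telemetry_utilization_pct(Visit(acute_care_days=4, telemetry_days=2))
example_code_rate = code_event_rate(code_activations=3, patient_days=1500)
```

Because every included visit has at least 1 acute care day, the denominator of the utilization percentage is never zero.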

To evaluate changes in the appropriateness of telemetry monitoring, 4 of the authors who are internal medicine physicians (KE, CC, JC, DG) reviewed the charts of 25 randomly selected patients who received at least 1 day of telemetry monitoring in each group (hospitalist and nonhospitalist), both before and after the intervention. Each reviewer was provided a key based on AHA guidelines for monitoring indications and associated maximum allowable durations.3 Chart reviews were performed to determine the indication (if any) for monitoring, as well as the number of days that were indicated. The number of indicated days was compared to the number of telemetry days the patient received to determine the overall proportion of days that were indicated (“Telemetry appropriateness per visit”). Three reviewers (KE, AR, CC) also evaluated 100 patients on the hospitalist service who did not receive any telemetry monitoring after the intervention, to assess whether patients with indications for telemetry monitoring were going unmonitored. For patients who had a possible indication, the indication was classified as Class I (“Cardiac monitoring is indicated in most, if not all, patients in this group”) or Class II (“Cardiac monitoring may be of benefit in some patients but is not considered essential for all patients”).3
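As a rough illustration of "Telemetry appropriateness per visit," the Python sketch below divides indicated days by telemetry days received and then pools visits with telemetry days as weights. Capping indicated days at the number of telemetry days received is an assumption of this sketch, not something the text specifies, and the chart-review numbers are invented.

```python
def appropriateness_per_visit(indicated_days: int, telemetry_days: int) -> float:
    """Proportion of a visit's telemetry days that were indicated.

    Assumption: indicated days are capped at the telemetry days received,
    so the proportion cannot exceed 1.
    """
    return min(indicated_days, telemetry_days) / telemetry_days


def weighted_appropriateness(reviews: list[tuple[int, int]]) -> float:
    """Mean per-visit appropriateness, weighted by telemetry days per visit."""
    total_weight = sum(tele for _, tele in reviews)
    weighted_sum = sum(tele * appropriateness_per_visit(ind, tele) for ind, tele in reviews)
    return weighted_sum / total_weight


# Hypothetical chart reviews as (indicated_days, telemetry_days) pairs.
reviews = [(2, 4), (3, 3), (0, 1)]
pooled = weighted_appropriateness(reviews)  # (2 + 3 + 0) indicated of 8 telemetry days
```

Weighting by telemetry days means the pooled figure equals total indicated days divided by total telemetry days, so long monitoring episodes count for more than short ones.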

Adjustment Variables

To account for differences in patient characteristics between hospitalist and nonhospitalist groups, we included age, gender, CMI, and CCI in statistical models. CCI was calculated according to the algorithm specified by Quan et al.15 using all patient diagnoses from previous visits and the index visit identified from the facility billing system.

Statistical Analysis

We computed descriptive statistics for study outcomes and visit characteristics for hospitalist and nonhospitalist visits for pre- and postintervention periods. Descriptive statistics were expressed as n (%) for categorical patient characteristics and outcome variables. For continuous patient characteristics, we expressed the variability of individual observations as the mean ± the standard deviation. For continuous outcomes, we expressed the precision of the mean estimates using standard error. Telemetry utilization per visit was weighted by the number of total acute care days per visit. Telemetry appropriateness per visit was weighted by the number of telemetry days per visit. Patients who did not receive any telemetry monitoring were included in the analysis and noted to have 0 telemetry days. All patients had at least 1 acute care day. Categorical variables were compared using χ2 tests, and continuous variables were compared using t tests. Code event rates were compared using the binomial probability mid-p exact test for person-time data.16
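For readers unfamiliar with the mid-p exact test for person-time data, the following Python sketch shows one common formulation: conditional on the total number of events, the count in the first period is treated as binomial with success probability equal to that period's share of the person-time, and half the probability of the observed count is assigned to each tail. The function name and inputs are hypothetical, and this is not necessarily the exact computation the authors performed.

```python
from math import comb


def midp_person_time(events_1: int, time_1: float, events_0: int, time_0: float) -> float:
    """Two-sided mid-p value comparing two event rates from person-time data."""
    total_events = events_1 + events_0
    # Under the null of equal rates, each event falls in period 1
    # with probability proportional to that period's person-time.
    p_null = time_1 / (time_1 + time_0)
    pmf = [
        comb(total_events, k) * p_null**k * (1 - p_null) ** (total_events - k)
        for k in range(total_events + 1)
    ]
    # Mid-p: count only half of the observed outcome's probability in each tail.
    lower = sum(pmf[:events_1]) + 0.5 * pmf[events_1]
    upper = sum(pmf[events_1 + 1:]) + 0.5 * pmf[events_1]
    return min(1.0, 2.0 * min(lower, upper))


# Hypothetical counts: identical rates in both periods give no evidence of a change.
p_equal = midp_person_time(events_1=10, time_1=1000, events_0=10, time_0=1000)
```

The mid-p adjustment makes the test less conservative than a conventional exact test, which matters when events such as code team activations are rare.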

We fitted generalized linear regression models using generalized estimating equations to evaluate the relative change in outcomes of interest in the postintervention period compared with the preintervention period after adjusting for study covariates. The models included study group (hospitalist and nonhospitalist), time period (pre- and postintervention), an interaction term between study group and time period, and study covariates (age, gender, CMI, and CCI). The models were defined using a binomial distributional assumption and logit link function for mortality, escalation of care, and whether patients had at least 1 telemetry day. A gamma distributional assumption and log link function were used for LOS, telemetry acute care days per visit, and total acute care days per visit. A negative binomial distributional assumption and log link function were used for telemetry utilization and telemetry appropriateness. We used the log of the acute care days as an offset for telemetry utilization and the log of the telemetry days per visit as an offset for telemetry appropriateness. An exchangeable working correlation matrix was used to account for physician-level clustering for all outcomes. Intervention effects, representing the relative difference in odds for categorical variables and in amount for continuous variables, were calculated by exponentiating the beta parameter for the covariate and subtracting 1.
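The conversion from a model coefficient to an intervention effect can be sketched in a few lines of Python. The coefficient values are invented for illustration, and treating the nonhospitalist group as the reference level of the group-by-period interaction is an assumption of this sketch rather than a detail stated in the text.

```python
from math import exp, log


def relative_change(beta: float) -> float:
    """Relative change implied by a coefficient on the logit or log scale: exp(beta) - 1."""
    return exp(beta) - 1.0


# Hypothetical coefficients, not estimates from the study.
beta_post = log(0.8)         # postintervention vs preintervention, reference group
beta_interaction = log(0.5)  # additional post-period change in the hospitalist group

# Reference (assumed nonhospitalist) group: effect of the period term alone.
nonhospitalist_change = relative_change(beta_post)
# Hospitalist group: period and interaction terms add on the link scale.
hospitalist_change = relative_change(beta_post + beta_interaction)
```

With these made-up values the reference group shows a 20% relative decrease and the hospitalist group a 60% relative decrease, which illustrates how the interaction term carries the extra effect attributable to the multicomponent program.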

P values <.05 were considered significant. We used SAS version 9.4 statistical software (SAS Institute Inc., Cary, NC) for data analysis.

RESULTS

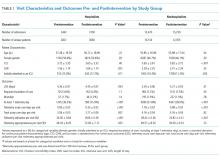

The percentage of patients who had any telemetry charges decreased from 36.2% to 15.9% in the hospitalist group (P < .001) and from 31.8% to 28.0% in the nonhospitalist group (P < .001; Table 1). Rates of code events did not change over time (P = .9).

In the randomly selected sample of patients pre- and postintervention who received telemetry monitoring, there was an increase in telemetry appropriateness on the hospitalist service (46% to 72%, P = .025; Table 1). In the nonhospitalist group, appropriate telemetry utilization did not change significantly. Of the 100 randomly selected patients in the hospitalist group after the intervention who did not receive telemetry, no patient had an AHA Class I indication, and only 4 patients had a Class II indication.3,17

DISCUSSION

In this study, implementing a change in the EHR telemetry order produced reductions in telemetry days. However, when this change was combined with a multicomponent program including education, audit and feedback, financial incentives, and removal of telemetry orders from admission order sets, an even more marked improvement was seen. Neither intervention reduced LOS, increased code event rates, or increased rates of escalation of care.

Prior studies have evaluated interventions to reduce unnecessary telemetry monitoring with varying degrees of success. The most successful EHR intervention to date, from Dressler et al.,18 achieved a 70% reduction in overall telemetry use by integrating the AHA guidelines into their EHR and incorporating nursing discontinuation guidelines to ensure that telemetry discontinuation was both safe and timely. Other studies using stewardship approaches and standardized protocols have been less successful.19,20 One study utilizing a multidisciplinary approach but not including an EHR component showed modest improvements in telemetry.21

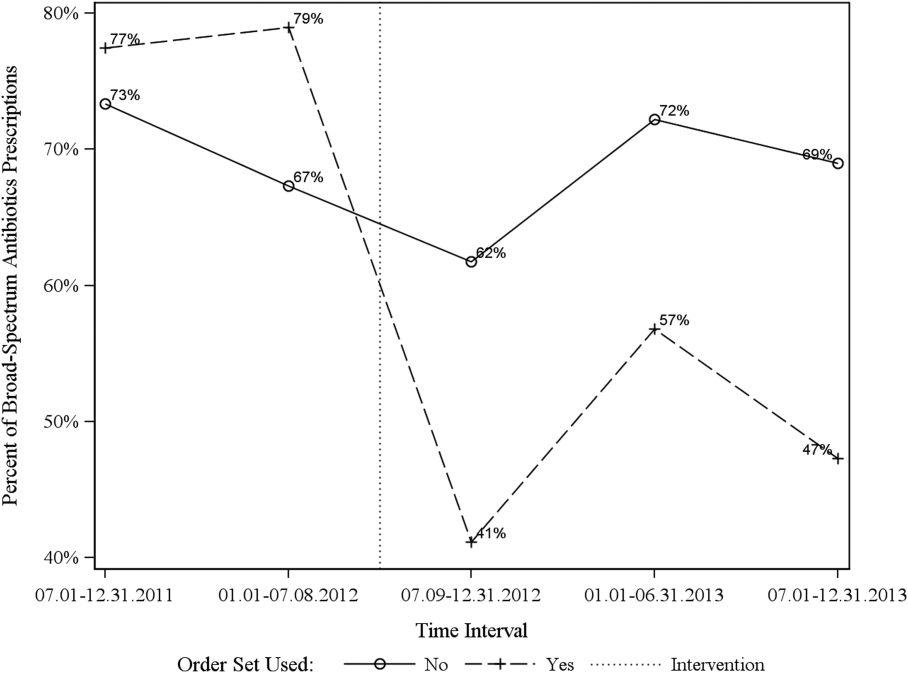

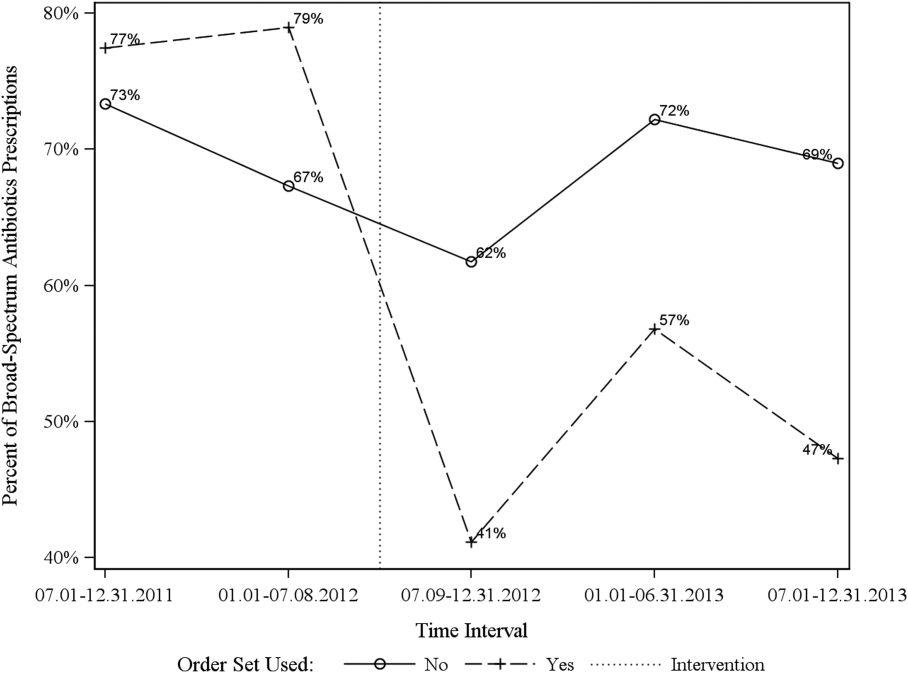

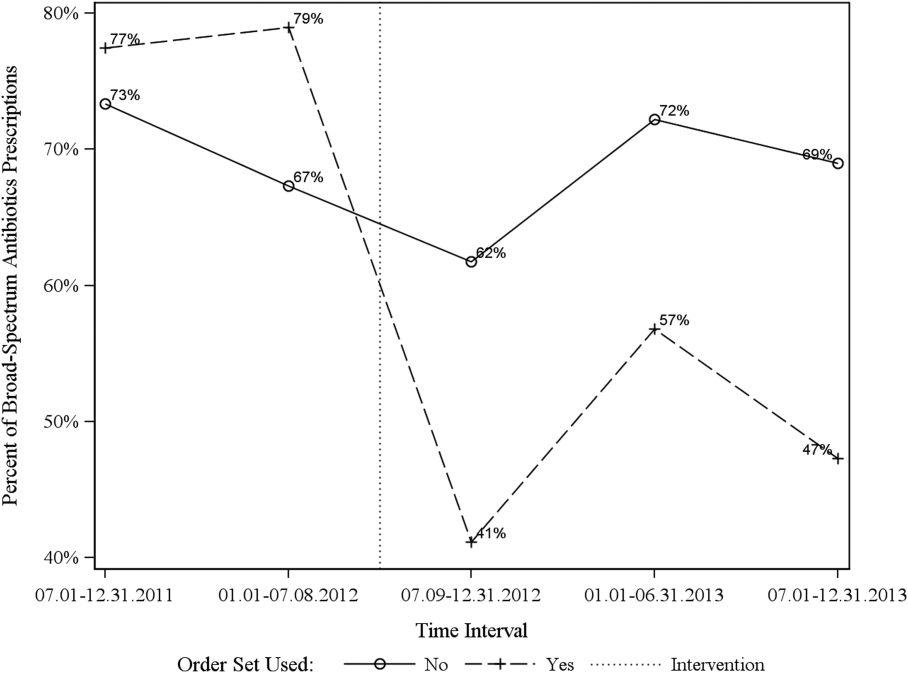

Although we are unable to differentiate the exact effect of each component of the intervention, we did note an immediate decrease in telemetry orders after removing the telemetry order from our admission order set, a trend that was magnified after the addition of broader EHR changes (Figure 1). Important additional contributors to our success seem to have been the standardization of rounds to include daily discussion of telemetry and the provision of routine feedback. We cannot discern whether other components of our program (such as the financial incentives) contributed more or less to our program, though the sum of these interventions produced an overall program that required substantial buy-in and sustained focus from the hospitalist group. The importance of the hospitalist program is highlighted by the relatively large differences in improvement compared with the nonhospitalist group.

Our study has several limitations. First, the study was conducted at a single center, which may limit its generalizability. Second, the intervention was multifaceted, diminishing our ability to discern which aspects beyond the system-wide change in the telemetry order were most responsible for the observed effect among hospitalists. Third, we are unable to fully account for baseline differences in telemetry utilization between hospitalist and nonhospitalist groups. It is likely that different services utilize telemetry monitoring in different ways, and the hospitalist group may have been more aware of the existing guidelines for monitoring prior to the intervention. Furthermore, we had a limited sample size for the chart audits, which reduced the available statistical power for determining changes in the appropriateness of telemetry utilization. Additionally, because internal medicine residents rotate through various services, it is possible that the education they received on their hospitalist rotation as part of our intervention had a spillover effect in the nonhospitalist group. However, any effect should have decreased the difference between the groups. Lastly, although our postintervention time period was 1 year, we do not have data beyond that to monitor for sustainability of the results.

CONCLUSION

In this single-site study, combining EHR orders prompting physicians to choose a clinical indication and duration for monitoring with a broader program—including upstream changes in ordering as well as education, audit, and feedback—produced reductions in telemetry usage. Whether this reduction improves the appropriateness of telemetry utilization or reduces other effects of telemetry (eg, alert fatigue, calls for benign arrhythmias) cannot be discerned from our study. However, our results support the idea that multipronged approaches to telemetry use are most likely to produce improvements.

Acknowledgments

The authors thank Dr. Frank Thomas for his assistance with process engineering and Mr. Andrew Wood for his routine provision of data. The statistical analysis was supported by the University of Utah Study Design and Biostatistics Center, with funding in part from the National Center for Research Resources and the National Center for Advancing Translational Sciences, National Institutes of Health, through Grant 5UL1TR001067-05 (formerly 8UL1TR000105 and UL1RR025764).

Disclosure

The authors have no conflicts of interest to report.

1. National Health Expenditure Fact Sheet. 2015; https://www.cms.gov/Research-Statistics-Data-and-Systems/Statistics-Trends-and-Reports/NationalHealthExpendData/NHE-Fact-Sheet.html. Accessed June 27, 2017.

2. Berwick DM, Hackbarth AD. Eliminating waste in US health care. JAMA. 2012;307(14):1513-1516.

3. Drew BJ, Califf RM, Funk M, et al. Practice standards for electrocardiographic monitoring in hospital settings: an American Heart Association scientific statement from the Councils on Cardiovascular Nursing, Clinical Cardiology, and Cardiovascular Disease in the Young: endorsed by the International Society of Computerized Electrocardiology and the American Association of Critical-Care Nurses. Circulation. 2004;110(17):2721-2746.

4. Sandau KE, Funk M, Auerbach A, et al. Update to Practice Standards for Electrocardiographic Monitoring in Hospital Settings: A Scientific Statement From the American Heart Association. Circulation. 2017;136(19):e273-e344.

5. Mohammad R, Shah S, Donath E, et al. Non-critical care telemetry and in-hospital cardiac arrest outcomes. J Electrocardiol. 2015;48(3):426-429.

6. Dhillon SK, Rachko M, Hanon S, Schweitzer P, Bergmann SR. Telemetry monitoring guidelines for efficient and safe delivery of cardiac rhythm monitoring to noncritical hospital inpatients. Crit Pathw Cardiol. 2009;8(3):125-126.

7. Estrada CA, Rosman HS, Prasad NK, et al. Evaluation of guidelines for the use of telemetry in the non-intensive-care setting. J Gen Intern Med. 2000;15(1):51-55.

8. Estrada CA, Prasad NK, Rosman HS, Young MJ. Outcomes of patients hospitalized to a telemetry unit. Am J Cardiol. 1994;74(4):357-362.

9. Atzema C, Schull MJ, Borgundvaag B, Slaughter GR, Lee CK. ALARMED: adverse events in low-risk patients with chest pain receiving continuous electrocardiographic monitoring in the emergency department. A pilot study. Am J Emerg Med. 2006;24(1):62-67.

10. Schull MJ, Redelmeier DA. Continuous electrocardiographic monitoring and cardiac arrest outcomes in 8,932 telemetry ward patients. Acad Emerg Med. 2000;7(6):647-652.

11. The Joint Commission 2017 National Patient Safety Goals. https://www.jointcommission.org/hap_2017_npsgs/. Accessed February 15, 2017.

12. Joint Commission on Accreditation of Healthcare Organizations. The Joint Commission announces 2014 National Patient Safety Goal. Jt Comm Perspect. 2013;33(7):1, 3-4.

13. Bulger J, Nickel W, Messler J, et al. Choosing wisely in adult hospital medicine: five opportunities for improved healthcare value. J Hosp Med. 2013;8(9):486-492.

14. Yarbrough PM, Kukhareva PV, Horton D, Edholm K, Kawamoto K. Multifaceted intervention including education, rounding checklist implementation, cost feedback, and financial incentives reduces inpatient laboratory costs. J Hosp Med. 2016;11(5):348-354.

15. Quan H, Li B, Couris CM, et al. Updating and validating the Charlson comorbidity index and score for risk adjustment in hospital discharge abstracts using data from 6 countries. Am J Epidemiol. 2011;173(6):676-682.

16. Greenland S, Rothman KJ. Introduction to categorical statistics. In: Rothman KJ, Greenland S, Lash TL, eds. Modern Epidemiology. Vol 3. Philadelphia, PA: Lippincott Williams & Wilkins; 2008: 238-257.

17. Henriques-Forsythe MN, Ivonye CC, Jamched U, Kamuguisha LK, Olejeme KA, Onwuanyi AE. Is telemetry overused? Is it as helpful as thought? Cleve Clin J Med. 2009;76(6):368-372.

18. Dressler R, Dryer MM, Coletti C, Mahoney D, Doorey AJ. Altering overuse of cardiac telemetry in non-intensive care unit settings by hardwiring the use of American Heart Association guidelines. JAMA Intern Med. 2014;174(11):1852-1854.

19. Boggan JC, Navar-Boggan AM, Patel V, Schulteis RD, Simel DL. Reductions in telemetry order duration do not reduce telemetry utilization. J Hosp Med. 2014;9(12):795-796.

20. Cantillon DJ, Loy M, Burkle A, et al. Association Between Off-site Central Monitoring Using Standardized Cardiac Telemetry and Clinical Outcomes Among Non-Critically Ill Patients. JAMA. 2016;316(5):519-524.

21. Svec D, Ahuja N, Evans KH, et al. Hospitalist intervention for appropriate use of telemetry reduces length of stay and cost. J Hosp Med. 2015;10(9):627-632.

Wasteful care may account for between 21% and 34% of the United States’ $3.2 trillion in annual healthcare expenditures, making it a prime target for cost-saving initiatives.1,2 Telemetry is a target for value improvement strategies because telemetry is overutilized, rarely leads to a change in management, and has associated guidelines on appropriate use.3-10 Telemetry use has been a focus of the Joint Commission’s National Patient Safety Goals since 2014, and it is also a focus of the Society of Hospital Medicine’s Choosing Wisely® campaign.11-13

Previous initiatives have evaluated how changes to telemetry orders or education and feedback affect telemetry use. Few studies have compared a system-wide electronic health record (EHR) approach to a multifaceted intervention. In seeking to address this gap, we adapted published guidelines from the American Heart Association (AHA) and incorporated them into our EHR ordering process.3 Simultaneously, we implemented a multifaceted quality improvement initiative and compared this combined program’s effectiveness to that of the EHR approach alone.

METHODS

Study Design, Setting, and Population

We performed a 2-group observational pre- to postintervention study at University of Utah Health. Hospital encounters of patients 18 years and older who had at least 1 inpatient acute care, nonintensive care unit (ICU) room charge and an admission date between January 1, 2014, and July 31, 2016, were included. Patient encounters with missing encounter-level covariates, such as case mix index (CMI) or attending provider identification, were excluded. The Institutional Review Board classified this project as quality improvement and did not require review and oversight.

Intervention

On July 6, 2015, our Epic (Epic Systems Corporation, Madison, WI) EHR telemetry order was modified to discourage unnecessary telemetry monitoring. The new order required providers ordering telemetry to choose a clinical indication and select a duration for monitoring, after which the order would expire and require physician renewal or discontinuation. These were the only changes that occurred for nonhospitalist providers. The nonhospitalist group included all admitting providers who were not hospitalists. This group included neurology (6.98%); cardiology (8.13%); other medical specialties such as pulmonology, hematology, and oncology (21.30%); cardiothoracic surgery (3.72%); orthopedic surgery (14.84%); general surgery (11.11%); neurosurgery (11.07%); and other surgical specialties, including urology, transplant, vascular surgery, and plastics (16.68%).

Between January 2015 and June 2015, we implemented a multicomponent program among our hospitalist service. The hospitalist service is composed of 4 teams with internal medicine residents and 2 teams with advanced practice providers, all staffed by academic hospitalists. Our program was composed of 5 elements, all of which were made before the hospital-wide changes to electronic telemetry orders and maintained throughout the study period, as follows: (1) a single provider education session reviewing available evidence (eg, AHA guidelines, Choosing Wisely® campaign), (2) removal of the telemetry order from hospitalist admission order set on March 23, 2015, (3) inclusion of telemetry discussion in the hospitalist group’s daily “Rounding Checklist,”14 (4) monthly feedback provided as part of hospitalist group meetings, and (5) a financial incentive, awarded to the division (no individual provider payment) if performance targets were met. See supplementary Appendix (“Implementation Manual”) for further details.

Data Source

We obtained data on patient age, gender, Medicare Severity-Diagnosis Related Group, Charlson comorbidity index (CCI), CMI, admitting unit, attending physician, admission and discharge dates, length of stay (LOS), 30-day readmission, bed charge (telemetry or nontelemetry), ICU stay, and inpatient mortality from the enterprise data warehouse. Telemetry days were determined through room billing charges, which are assigned based on the presence or absence of an active telemetry order at midnight. Code events came from a log kept by the hospital telephone operator, who is responsible for sending out all calls to the code team. Code event data were available starting July 19, 2014.

Measures

Our primary outcome was the percentage of hospital days that had telemetry charges for individual patients. All billed telemetry days on acute care floors were included regardless of admission status (inpatient vs observation), service, indication, or ordering provider. Secondary outcomes were inpatient mortality, escalation of care, code event rates, and appropriate telemetry utilization rates. Escalation of care was defined as transfer to an ICU after initially being admitted to an acute care floor. The code event rate was defined as the ratio of the number of code team activations to the number of patient days. Appropriate telemetry utilization rates were determined via chart review, as detailed below.

In order to evaluate changes in appropriateness of telemetry monitoring, 4 of the authors who are internal medicine physicians (KE, CC, JC, DG) performed chart reviews of 25 randomly selected patients in each group (hospitalist and nonhospitalist) before and after the intervention who received at least 1 day of telemetry monitoring. Each reviewer was provided a key based on AHA guidelines for monitoring indications and associated maximum allowable durations.3 Chart reviews were performed to determine the indication (if any) for monitoring, as well as the number of days that were indicated. The number of indicated days was compared to the number of telemetry days the patient received to determine the overall proportion of days that were indicated (“Telemetry appropriateness per visit”). Three reviewers (KE, AR, CC) also evaluated 100 patients on the hospitalist service after the intervention who did not receive any telemetry monitoring to evaluate whether patients with indications for telemetry monitoring were not receiving it after the intervention. For patients who had a possible indication, the indication was classified as Class I (“Cardiac monitoring is indicated in most, if not all, patients in this group”) or Class II (“Cardiac monitoring may be of benefit in some patients but is not considered essential for all patients”).3

Adjustment Variables

To account for differences in patient characteristics between hospitalist and nonhospitalist groups, we included age, gender, CMI, and CCI in statistical models. CCI was calculated according to the algorithm specified by Quan et al.15 using all patient diagnoses from previous visits and the index visit identified from the facility billing system.

Statistical Analysis

We computed descriptive statistics for study outcomes and visit characteristics for hospitalist and nonhospitalist visits for pre- and postintervention periods. Descriptive statistics were expressed as n (%) for categorical patient characteristics and outcome variables. For continuous patient characteristics, we expressed the variability of individual observations as the mean ± the standard deviation. For continuous outcomes, we expressed the precision of the mean estimates using standard error. Telemetry utilization per visit was weighted by the number of total acute care days per visit. Telemetry appropriateness per visit was weighted by the number of telemetry days per visit. Patients who did not receive any telemetry monitoring were included in the analysis and noted to have 0 telemetry days. All patients had at least 1 acute care day. Categorical variables were compared using χ2 tests, and continuous variables were compared using t tests. Code event rates were compared using the binomial probability mid-p exact test for person-time data.16

We fitted generalized linear regression models using generalized estimating equations to evaluate the relative change in outcomes of interest in the postintervention period compared with the preintervention period after adjusting for study covariates. The models included study group (hospitalist and nonhospitalist), time period (pre- and postintervention), an interaction term between study group and time period, and study covariates (age, gender, CMI, and CCI). The models were defined using a binomial distributional assumption and logit link function for mortality, escalation of care, and whether patients had at least 1 telemetry day. A gamma distributional assumption and log link function were used for LOS, telemetry acute care days per visit, and total acute care days per visit. A negative binomial distributional assumption and log link function were used for telemetry utilization and telemetry appropriateness. We used the log of the acute care days as an offset for telemetry utilization and the log of the telemetry days per visit as an offset for telemetry appropriateness. An exchangeable working correlation matrix was used to account for physician-level clustering for all outcomes. Intervention effects, representing the difference in odds for categorical variables and in amount for continuous variables, were calculated as exponentiation of the beta parameters for the covariate minus 1.

P values <.05 were considered significant. We used SAS version 9.4 statistical software (SAS Institute Inc., Cary, NC) for data analysis.

RESULTS

The percent of patients who had any telemetry charges decreased from 36.2% to 15.9% (P < .001) in the hospitalist group and from 31.8% to 28.0% in the nonhospitalist group (P < .001; Table 1). Rates of code events did not change over time (P = .9).

In the randomly selected sample of patients pre- and postintervention who received telemetry monitoring, there was an increase in telemetry appropriateness on the hospitalist service (46% to 72%, P = .025; Table 1). In the nonhospitalist group, appropriate telemetry utilization did not change significantly. Of the 100 randomly selected patients in the hospitalist group after the intervention who did not receive telemetry, no patient had an AHA Class I indication, and only 4 patients had a Class II indication.3,17

DISCUSSION

In this study, implementing a change in the EHR telemetry order produced reductions in telemetry days. However, when combined with a multicomponent program including education, audit and feedback, financial incentives, and changes to remove telemetry orders from admission orders sets, an even more marked improvement was seen. Neither intervention reduced LOS, increased code event rates, or increased rates of escalation of care.

Prior studies have evaluated interventions to reduce unnecessary telemetry monitoring with varying degrees of success. The most successful EHR intervention to date, from Dressler et al.,18 achieved a 70% reduction in overall telemetry use by integrating the AHA guidelines into their EHR and incorporating nursing discontinuation guidelines to ensure that telemetry discontinuation was both safe and timely. Other studies using stewardship approaches and standardized protocols have been less successful.19,20 One study utilizing a multidisciplinary approach but not including an EHR component showed modest improvements in telemetry.21

Although we are unable to differentiate the exact effect of each component of the intervention, we did note an immediate decrease in telemetry orders after removing the telemetry order from our admission order set, a trend that was magnified after the addition of broader EHR changes (Figure 1). Important additional contributors to our success seem to have been the standardization of rounds to include daily discussion of telemetry and the provision of routine feedback. We cannot discern whether other components of our program (such as the financial incentives) contributed more or less to our program, though the sum of these interventions produced an overall program that required substantial buy in and sustained focus from the hospitalist group. The importance of the hospitalist program is highlighted by the relatively large differences in improvement compared with the nonhospitalist group.

Our study has several limitations. First, the study was conducted at a single center, which may limit its generalizability. Second, the intervention was multifaceted, diminishing our ability to discern which aspects beyond the system-wide change in the telemetry order were most responsible for the observed effect among hospitalists. Third, we are unable to fully account for baseline differences in telemetry utilization between hospitalist and nonhospitalist groups. It is likely that different services utilize telemetry monitoring in different ways, and the hospitalist group may have been more aware of the existing guidelines for monitoring prior to the intervention. Furthermore, we had a limited sample size for the chart audits, which reduced the available statistical power for determining changes in the appropriateness of telemetry utilization. Additionally, because internal medicine residents rotate through various services, it is possible that the education they received on their hospitalist rotation as part of our intervention had a spillover effect in the nonhospitalist group. However, any effect should have decreased the difference between the groups. Lastly, although our postintervention time period was 1 year, we do not have data beyond that to monitor for sustainability of the results.

CONCLUSION

In this single-site study, combining EHR orders prompting physicians to choose a clinical indication and duration for monitoring with a broader program—including upstream changes in ordering as well as education, audit, and feedback—produced reductions in telemetry usage. Whether this reduction improves the appropriateness of telemetry utilization or reduces other effects of telemetry (eg, alert fatigue, calls for benign arrhythmias) cannot be discerned from our study. However, our results support the idea that multipronged approaches to telemetry use are most likely to produce improvements.

Acknowledgments

The authors thank Dr. Frank Thomas for his assistance with process engineering and Mr. Andrew Wood for his routine provision of data. The statistical analysis was supported by the University of Utah Study Design and Biostatistics Center, with funding in part from the National Center for Research Resources and the National Center for Advancing Translational Sciences, National Institutes of Health, through Grant 5UL1TR001067-05 (formerly 8UL1TR000105 and UL1RR025764).

Disclosure

The authors have no conflicts of interest to report.

1. National Health Expenditure Fact Sheet. 2015; https://www.cms.gov/Research-Statistics-Data-and-Systems/Statistics-Trends-and-Reports/NationalHealthExpendData/NHE-Fact-Sheet.html. Accessed June 27, 2017.

2. Berwick DM, Hackbarth AD. Eliminating waste in US health care. JAMA. 2012;307(14):1513-1516.

3. Drew BJ, Califf RM, Funk M, et al. Practice standards for electrocardiographic monitoring in hospital settings: an American Heart Association scientific statement from the Councils on Cardiovascular Nursing, Clinical Cardiology, and Cardiovascular Disease in the Young: endorsed by the International Society of Computerized Electrocardiology and the American Association of Critical-Care Nurses. Circulation. 2004;110(17):2721-2746.

4. Sandau KE, Funk M, Auerbach A, et al. Update to Practice Standards for Electrocardiographic Monitoring in Hospital Settings: A Scientific Statement From the American Heart Association. Circulation. 2017;136(19):e273-e344.

5. Mohammad R, Shah S, Donath E, et al. Non-critical care telemetry and in-hospital cardiac arrest outcomes. J Electrocardiol. 2015;48(3):426-429.

6. Dhillon SK, Rachko M, Hanon S, Schweitzer P, Bergmann SR. Telemetry monitoring guidelines for efficient and safe delivery of cardiac rhythm monitoring to noncritical hospital inpatients. Crit Pathw Cardiol. 2009;8(3):125-126.

7. Estrada CA, Rosman HS, Prasad NK, et al. Evaluation of guidelines for the use of telemetry in the non-intensive-care setting. J Gen Intern Med. 2000;15(1):51-55.

8. Estrada CA, Prasad NK, Rosman HS, Young MJ. Outcomes of patients hospitalized to a telemetry unit. Am J Cardiol. 1994;74(4):357-362.

9. Atzema C, Schull MJ, Borgundvaag B, Slaughter GR, Lee CK. ALARMED: adverse events in low-risk patients with chest pain receiving continuous electrocardiographic monitoring in the emergency department. A pilot study. Am J Emerg Med. 2006;24(1):62-67.

10. Schull MJ, Redelmeier DA. Continuous electrocardiographic monitoring and cardiac arrest outcomes in 8,932 telemetry ward patients. Acad Emerg Med. 2000;7(6):647-652.

11. The Joint Commission 2017 National Patient Safety Goals. https://www.jointcommission.org/hap_2017_npsgs/. Accessed February 15, 2017.

12. Joint Commission on Accreditation of Healthcare Organizations. The Joint Commission announces 2014 National Patient Safety Goal. Jt Comm Perspect. 2013;33(7):1, 3-4.

13. Bulger J, Nickel W, Messler J, et al. Choosing wisely in adult hospital medicine: five opportunities for improved healthcare value. J Hosp Med. 2013;8(9):486-492.

14. Yarbrough PM, Kukhareva PV, Horton D, Edholm K, Kawamoto K. Multifaceted intervention including education, rounding checklist implementation, cost feedback, and financial incentives reduces inpatient laboratory costs. J Hosp Med. 2016;11(5):348-354.

15. Quan H, Li B, Couris CM, et al. Updating and validating the Charlson comorbidity index and score for risk adjustment in hospital discharge abstracts using data from 6 countries. Am J Epidemiol. 2011;173(6):676-682.

16. Greenland S, Rothman KJ. Introduction to categorical statistics. In: Rothman KJ, Greenland S, Lash TL, eds. Modern Epidemiology. Vol 3. Philadelphia, PA: Lippincott Williams & Wilkins; 2008: 238-257.

17. Henriques-Forsythe MN, Ivonye CC, Jamched U, Kamuguisha LK, Olejeme KA, Onwuanyi AE. Is telemetry overused? Is it as helpful as thought? Cleve Clin J Med. 2009;76(6):368-372.

18. Dressler R, Dryer MM, Coletti C, Mahoney D, Doorey AJ. Altering overuse of cardiac telemetry in non-intensive care unit settings by hardwiring the use of American Heart Association guidelines. JAMA Intern Med. 2014;174(11):1852-1854.

19. Boggan JC, Navar-Boggan AM, Patel V, Schulteis RD, Simel DL. Reductions in telemetry order duration do not reduce telemetry utilization. J Hosp Med. 2014;9(12):795-796.

20. Cantillon DJ, Loy M, Burkle A, et al. Association Between Off-site Central Monitoring Using Standardized Cardiac Telemetry and Clinical Outcomes Among Non-Critically Ill Patients. JAMA. 2016;316(5):519-524.

21. Svec D, Ahuja N, Evans KH, et al. Hospitalist intervention for appropriate use of telemetry reduces length of stay and cost. J Hosp Med. 2015;10(9):627-632.

1. National Health Expenditure Fact Sheet. 2015; https://www.cms.gov/Research-Statistics-Data-and-Systems/Statistics-Trends-and-Reports/NationalHealthExpendData/NHE-Fact-Sheet.html. Accessed June 27, 2017.

2. Berwick DM, Hackbarth AD. Eliminating waste in US health care. JAMA. 2012;307(14):1513-1516. PubMed

3. Drew BJ, Califf RM, Funk M, et al. Practice standards for electrocardiographic monitoring in hospital settings: an American Heart Association scientific statement from the Councils on Cardiovascular Nursing, Clinical Cardiology, and Cardiovascular Disease in the Young: endorsed by the International Society of Computerized Electrocardiology and the American Association of Critical-Care Nurses. Circulation. 2004;110(17):2721-2746. PubMed

4. Sandau KE, Funk M, Auerbach A, et al. Update to Practice Standards for Electrocardiographic Monitoring in Hospital Settings: A Scientific Statement From the American Heart Association. Circulation. 2017;136(19):e273-e344. PubMed

5. Mohammad R, Shah S, Donath E, et al. Non-critical care telemetry and in-hospital cardiac arrest outcomes. J Electrocardiol. 2015;48(3):426-429. PubMed

6. Dhillon SK, Rachko M, Hanon S, Schweitzer P, Bergmann SR. Telemetry monitoring guidelines for efficient and safe delivery of cardiac rhythm monitoring to noncritical hospital inpatients. Crit Pathw Cardiol. 2009;8(3):125-126. PubMed

7. Estrada CA, Rosman HS, Prasad NK, et al. Evaluation of guidelines for the use of telemetry in the non-intensive-care setting. J Gen Intern Med. 2000;15(1):51-55. PubMed

8. Estrada CA, Prasad NK, Rosman HS, Young MJ. Outcomes of patients hospitalized to a telemetry unit. Am J Cardiol. 1994;74(4):357-362. PubMed

9. Atzema C, Schull MJ, Borgundvaag B, Slaughter GR, Lee CK. ALARMED: adverse events in low-risk patients with chest pain receiving continuous electrocardiographic monitoring in the emergency department. A pilot study. Am J Emerg Med. 2006;24(1):62-67. PubMed

10. Schull MJ, Redelmeier DA. Continuous electrocardiographic monitoring and cardiac arrest outcomes in 8,932 telemetry ward patients. Acad Emerg Med. 2000;7(6):647-652. PubMed

11. The Joint Commission 2017 National Patient Safety Goals https://www.jointcommission.org/hap_2017_npsgs/. Accessed on February 15, 2017.

12. Joint Commission on Accreditation of Healthcare Organizations. The Joint Commission announces 2014 National Patient Safety Goal. Jt Comm Perspect. 2013;33(7):1, 3-4. PubMed

13. Bulger J, Nickel W, Messler J, et al. Choosing wisely in adult hospital medicine: five opportunities for improved healthcare value. J Hosp Med. 2013;8(9):486-492. PubMed

14. Yarbrough PM, Kukhareva PV, Horton D, Edholm K, Kawamoto K. Multifaceted intervention including education, rounding checklist implementation, cost feedback, and financial incentives reduces inpatient laboratory costs. J Hosp Med. 2016;11(5):348-354. PubMed

15. Quan H, Li B, Couris CM, et al. Updating and validating the Charlson comorbidity index and score for risk adjustment in hospital discharge abstracts using data from 6 countries. Am J Epidemiol. 2011;173(6):676-682. PubMed

16. Greenland S, Rothman KJ. Introduction to categorical statistics In: Rothman KJ, Greenland S, Lash TL, eds. Modern Epidemiology. Vol 3. Philadelphia, PA: Lippincott Williams & Wilkins; 2008: 238-257.

17. Henriques-Forsythe MN, Ivonye CC, Jamched U, Kamuguisha LK, Olejeme KA, Onwuanyi AE. Is telemetry overused? Is it as helpful as thought? Cleve Clin J Med. 2009;76(6):368-372. PubMed

18. Dressler R, Dryer MM, Coletti C, Mahoney D, Doorey AJ. Altering overuse of cardiac telemetry in non-intensive care unit settings by hardwiring the use of American Heart Association guidelines. JAMA Intern Med. 2014;174(11):1852-1854. PubMed

19. Boggan JC, Navar-Boggan AM, Patel V, Schulteis RD, Simel DL. Reductions in telemetry order duration do not reduce telemetry utilization. J Hosp Med. 2014;9(12):795-796. PubMed

20. Cantillon DJ, Loy M, Burkle A, et al. Association Between Off-site Central Monitoring Using Standardized Cardiac Telemetry and Clinical Outcomes Among Non-Critically Ill Patients. JAMA. 2016;316(5):519-524. PubMed

21. Svec D, Ahuja N, Evans KH, et al. Hospitalist intervention for appropriate use of telemetry reduces length of stay and cost. J Hosp Med. 2015;10(9):627-632. PubMed

© 2018 Society of Hospital Medicine

Multifaceted Intervention Reduces Cost

Healthcare costs continue to increase and are estimated to be approximately $3.1 trillion per year in the United States.[1] Waste is a major contributor to this cost, accounting for an estimated $910 billion/year.[2] Laboratory tests are well documented to contribute to healthcare waste, with an estimated 30% to 50% of tests for hospitalized patients being unnecessary.[3, 4, 5] This issue has been highlighted by the American Board of Internal Medicine Foundation's Choosing Wisely campaign as an area to reduce waste.[6] Evaluating this concern locally, a University Health Systems Consortium 2011 analysis indicated that the University of Utah general internal medicine hospitalist service had a higher average direct lab cost per discharge compared to top performers, indicating an opportunity for improvement.

Multiple interventions have been described in the literature to address excessive laboratory utilization, including physician education, audit and feedback, cost information display, and administrative rules restricting certain types of ordering.[7, 8, 9, 10, 11] Despite these interventions, barriers remain common and not all interventions are sustained. For example, interventions focused mainly on education see a small improvement initially that is not sustained.[4, 12, 13] Additionally, although most studies focus on individual interventions, those that target multiple factors have been found to be more successful at producing and sustaining change.[14] Therefore, an opportunity existed to address multiple contributing factors in a single intervention and to apply a checklist to laboratory ordering, to see whether combined modalities could sustainably reduce laboratory costs.

In addition to cost, there is potential patient harm resulting from unnecessary laboratory testing. For prolonged hospitalizations, anemia is a well‐recognized side effect of phlebotomy,[15, 16] and a recent evaluation of cardiac surgery patients found an average cumulative blood loss due to phlebotomy of 454 mL/hospital stay.[17] The sheer number of tests ordered can lead to false positive tests that result in additional testing and monitoring. Furthermore, patients subjected to laboratory blood draws are often awakened early in the morning, which is unpleasant and could adversely affect the patient experience.

Recognizing laboratory cost as a problem, the University of Utah general internal medicine hospitalist service implemented a multifaceted quality‐improvement initiative with a goal to reduce laboratory testing. At the time of this project, University of Utah Health Care (UUHC) developed a Value Driven Outcomes (VDO) tool to give direct data related to costs of care, including the actual cost paid by the hospital to the university‐owned laboratory vendor (ARUP Laboratories, Salt Lake City, UT) for testing.[18] The hospitalist group incorporated VDO into the initiative for routine cost feedback. This study evaluates the impact of this intervention on laboratory costs.

METHODS

Design

A retrospective, controlled, interrupted time series (ITS) study was performed to compare changes in lab costs between hospitalists (intervention study group) and other providers (control study group). The intervention initiation date was February 1, 2013. The baseline period was July 1, 2012 to January 31, 2013, as that was the period in which the VDO tool became available for cost analysis prior to intervention. The intervention period was February 1, 2013 to April 30, 2014, as there was a change in the electronic health record (EHR) in May 2014 that affected data flow and could act as a major confounder. The institutional review board classified this project as quality improvement and did not require review and oversight.

Setting

UUHC is a 500‐bed academic medical center in Salt Lake City, Utah. The hospitalist service is a teaching service composed of 4 teams with internal medicine residents and medical students. The nonhospitalist services include all surgical services, as well as pulmonary, cardiology, hematology, and oncology services on which internal medicine residents rotate. All services at UUHC are staffed by academic physicians affiliated with the University of Utah School of Medicine.

Population

All patients 18 years and older admitted to the hospital to a service other than obstetrics, rehabilitation, or psychiatry between July 1, 2012 and April 30, 2014 were evaluated. Patients with missing data for outcomes or covariates were excluded.

Intervention

Initial evaluation included an informal review of patient charts and discussion with hospitalist group members, both indicating laboratory overuse. A working group was then established including hospitalists and process engineers to evaluate the workflow by which laboratory tests were ordered. Concurrently, a literature review was performed to help identify the scope of the problem and evaluate methods that had been successful at other institutions. Through this review, it was noted that interns were the most frequent orderers of tests and the largest contributors to variation of testing for inpatients.[19] Two specific studies with direct applicability to this project demonstrated that discussion of costs with attendings in a trauma intensive care unit resulted in a 30% reduction of tests ordered,[20] and discussion of testing with a senior resident in an internal medicine inpatient setting demonstrated a 20% reduction in laboratory testing.[21]

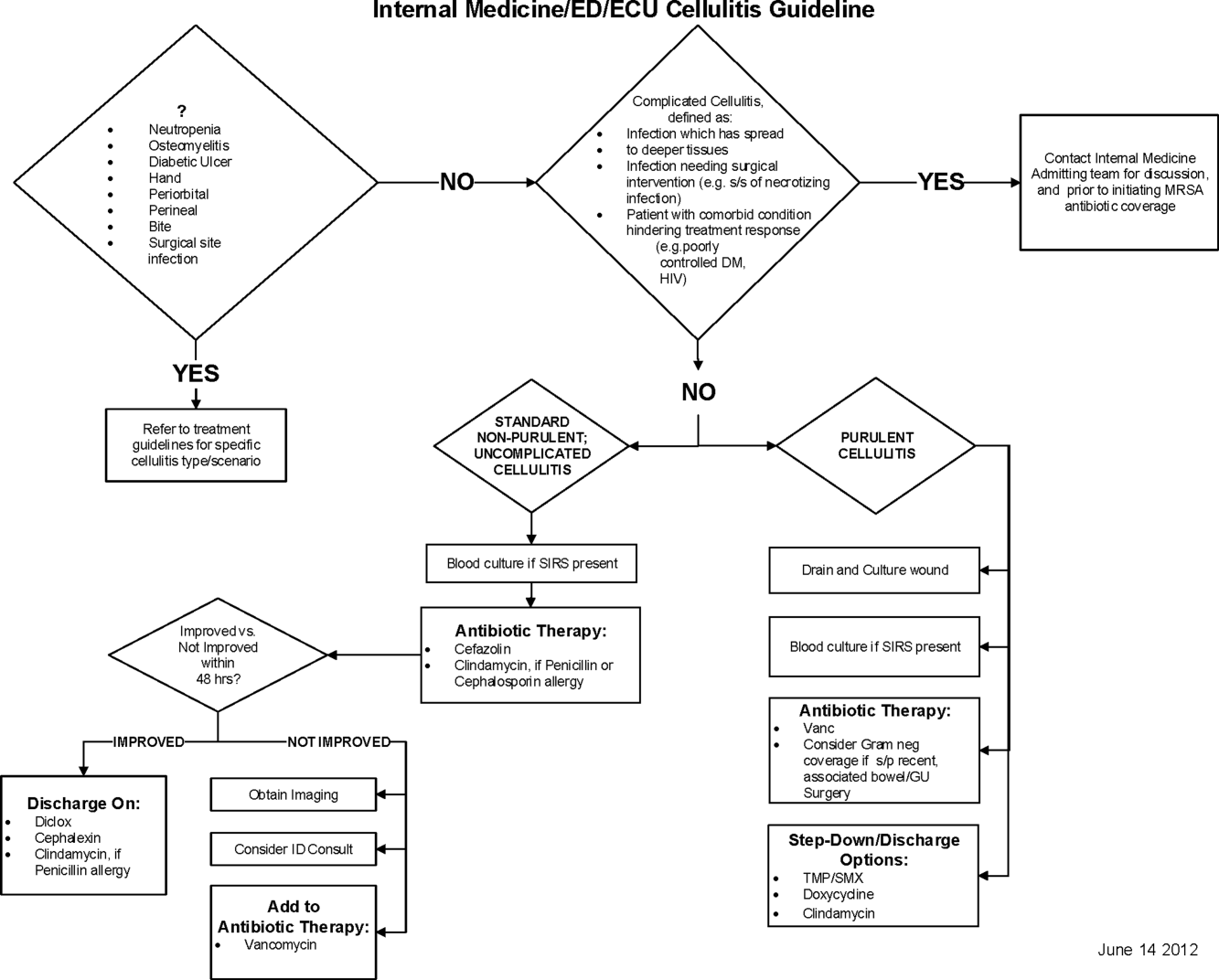

Our laboratory reduction intervention expanded on the current literature to incorporate education, process change, cost feedback, and financial incentives. Specifically, starting February 1, 2013, the following interventions were performed:

- Education of all providers involved, including the hospitalist group and all internal medicine residents at the start of their rotation with the hospitalist service. Education included a 30‐minute discussion of laboratory overuse, costs associated with laboratory overuse, previous interventions and their success, and current intervention with goals. Each resident was provided a pocket card with the most common lab tests and associated charges. Charges were used instead of costs due to concerns regarding the possible public dissemination of institutional costs.

- Standardization of the rounding process including a checklist review (see Supporting Information, Appendix, in the online version of this article) for all patients that ensured discussion of labs, telemetry, pain, lines/tubes, nursing presence, and follow‐up needed. The expectation was that all plans for lab testing would be discussed during rounds. The third‐year medical student was responsible for ensuring that all items were covered daily on each patient.

- Monthly feedback at the hospitalist group meeting regarding laboratory costs using the VDO tool. Data were presented as a monthly group average and compared to preintervention baseline costs. Individual performance could be viewed and compared to other providers within the group.

- Financial incentive through a program that shares 50% of cost savings realized by the hospital with the Division of General Internal Medicine. The incentive could be used to support future quality‐improvement projects, but there was no individual physician incentive.

Data Collection and Preparation

Clinical data were collected in the inpatient EHR (Cerner Corp., Kansas City, MO) and later imported into the enterprise data warehouse (EDW) as part of the normal data flow. Billing data were imported into the EDW from the billing system. Cost data were estimated using the VDO tool developed by the University of Utah to identify clinical costs to the UUHC system.[18]

Clinical and Cost Outcomes

We hypothesized that, following the intervention, the number of tests and lab costs would decrease more for patients in the intervention group than for those in the control group, with no adverse effect on length of stay (LOS) or 30‐day readmissions.

Lab cost per day was calculated as the total lab cost per visit divided by the LOS. We adjusted all lab costs to 2013 US dollars using Consumer Price Index inflation data.[22] To account for different LOS, we used LOS as a weight variable when estimating descriptive characteristics and P values for lab cost per day and the number of tests. Thirty‐day readmissions included inpatient encounters followed by another inpatient encounter within 30 days excluding obstetrics, rehabilitation, and psychiatry visits.
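
The per-day calculation and LOS weighting described above can be illustrated with a minimal sketch. The function names, the two example visits, and the index values passed to the inflation helper are hypothetical and are not taken from the study data; the sketch only shows the arithmetic, not the authors' SAS code.

```python
def adjust_to_base_year(cost, cpi_cost_year, cpi_base_year):
    """Express a cost in base-year dollars using a price-index ratio."""
    return cost * cpi_base_year / cpi_cost_year


def lab_cost_per_day(total_lab_cost, los_days):
    """Total lab cost for a visit divided by its length of stay."""
    return total_lab_cost / los_days


def los_weighted_mean(values, los_days):
    """Mean of per-visit values, weighting each visit by its LOS."""
    return sum(v * d for v, d in zip(values, los_days)) / sum(los_days)


# Hypothetical visits: (total lab cost in dollars, LOS in days).
visits = [(100.0, 1), (600.0, 5)]
per_day = [lab_cost_per_day(cost, days) for cost, days in visits]
days = [d for _, d in visits]

weighted = los_weighted_mean(per_day, days)
unweighted = sum(per_day) / len(per_day)
print(f"LOS-weighted: {weighted:.2f}, unweighted: {unweighted:.2f}")
```

With LOS as the weight, the mean cost per day equals total lab cost divided by total patient-days, so long stays are not underrepresented relative to short ones.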

Descriptive Variables

We included information on age at admission in years and Charlson Comorbidity Index (CCI) to evaluate differences in control and intervention groups.[23]

Statistical Analysis

First, unadjusted descriptive statistics were calculated for study outcomes and visit characteristics. Descriptive statistics were expressed as n (%) and mean ± standard deviation. Simple comparisons were performed based on χ² tests of homogeneity for categorical variables and on t tests for continuous variables.

Second, an ITS analysis was conducted to evaluate the impact of the intervention while accounting for baseline trends.[24] In this analysis, the dependent variable ($y_t$) was the difference in aggregated outcome measures between the intervention and control groups every 2 weeks (eg, difference in average lab costs in a given 2‐week period between the 2 groups). Intervention impact was then evaluated in terms of changes in the level of the outcome ($b_2$) as well as in the trend over time ($b_3$) compared to the initial difference in means ($b_0$) and baseline trend ($b_1$). The following difference‐in‐differences segmented regression model was fitted using the autoreg procedure in SAS:

$$y_t = b_0 + b_1 \cdot \text{time}_t + b_2 \cdot \text{study period}_t + b_3 \cdot \text{time after the intervention}_t + \text{error}_t$$

where $\text{time}_t$ is biweekly intervals after the beginning of the study, $\text{time after the intervention}_t$ is biweekly intervals after the intervention date, and $\text{study period}_t$ is 1 postintervention and 0 preintervention. The models were fitted using maximum likelihood and stepwise autoregression to test 24 lags.
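
To make the structure of the segmented regression concrete, the following is a minimal sketch that builds the four regressors and recovers the coefficients from a synthetic, noise-free series. It uses ordinary least squares rather than the maximum likelihood fit with autoregressive errors described above, so it is not a reimplementation of the SAS autoreg analysis. The coefficient values, the number of periods, the intervention period, and the coding of time after the intervention (0 before, then 1, 2, 3, ...) are all assumptions made for illustration.

```python
def design_row(t, first_post_period):
    """Regressors [intercept, time, study period, time after intervention]."""
    post = 1.0 if t >= first_post_period else 0.0
    # Assumed coding: 0 before the intervention, then 1, 2, 3, ... afterwards.
    time_after = float(t - first_post_period + 1) if post else 0.0
    return [1.0, float(t), post, time_after]


def fit_ols(rows, y):
    """Solve the normal equations (X'X) b = X'y by Gauss-Jordan elimination."""
    k = len(rows[0])
    aug = [
        [sum(r[i] * r[j] for r in rows) for j in range(k)]
        + [sum(r[i] * yi for r, yi in zip(rows, y))]
        for i in range(k)
    ]
    for col in range(k):
        # Partial pivoting: move the largest remaining entry onto the diagonal.
        pivot = max(range(col, k), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(k):
            if r != col:
                factor = aug[r][col] / aug[col][col]
                aug[r] = [x - factor * p for x, p in zip(aug[r], aug[col])]
    return [aug[i][k] / aug[i][i] for i in range(k)]


# Synthetic biweekly differences (intervention minus control), no noise.
true_b = [9.0, 0.2, -16.0, -0.25]  # made-up b0, b1, b2, b3
first_post = 16                    # hypothetical first postintervention period
X = [design_row(t, first_post) for t in range(1, 41)]
y = [sum(b * x for b, x in zip(true_b, row)) for row in X]

b0, b1, b2, b3 = fit_ols(X, y)
print(f"level change b2 = {b2:.2f}, trend change b3 = {b3:.2f}")
```

In this coding, b2 captures the immediate shift in the between-group difference at the intervention, and b3 captures any change in its slope afterward.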

P values <0.05 were considered significant. SAS (version 9.3; SAS Institute Inc., Cary, NC) was used for data analysis.

RESULTS

We analyzed 48,327 inpatient visits that met inclusion criteria. We excluded 15,659 obstetrics, rehabilitation, and psychiatry visits. Seven hundred seventy‐two (2.4%) of the remaining visits were excluded due to missing data. A total of 31,896 inpatient visits by 22,545 patients were included in the analysis. There were 10,136 visits before the intervention and 21,760 visits after. Characteristics of the study groups for the full study timeframe (July 1, 2012, to April 30, 2014) are summarized in Table 1.

| Characteristic | Study Group* | | | |
|---|---|---|---|---|
| | Overall, N = 31,896 | Control, N = 25,586 | Intervention, N = 6,310 | P Value |
| Patient characteristics | | | | |
| Age, y | 55.47 ± 17.61 | 55.27 ± 17.13 | 56.30 ± 19.39 | <0.001 |
| Female gender | 14,995 (47%) | 11,753 (46%) | 3,242 (51%) | <0.001 |
| CCI | 3.73 ± 3.25 | 3.61 ± 3.17 | 4.20 ± 3.54 | <0.001 |
| Outcomes | | | | |
| Cost per day, $ | 130.95 ± 392.16 | 131.57 ± 423.94 | 127.68 ± 220.40 | 0.022 |
| Cost per visit, $ | 733.75 ± 1,693.98 | 772.30 ± 1,847.65 | 577.40 ± 795.29 | <0.001 |
| BMP tests per day | 0.73 ± 1.17 | 0.74 ± 1.19 | 0.67 ± 1.05 | <0.001 |
| CMP tests per day | 0.20 ± 0.67 | 0.19 ± 0.68 | 0.26 ± 0.62 | <0.001 |
| CBC tests per day | 0.83 ± 1.10 | 0.84 ± 1.15 | 0.73 ± 0.82 | <0.001 |
| PT/INR tests per day | 0.36 ± 1.03 | 0.36 ± 1.07 | 0.34 ± 0.83 | <0.001 |
| LOS, d | 5.60 ± 7.12 | 5.87 ± 7.55 | 4.52 ± 4.82 | <0.001 |
| 30‐day readmissions | 4,374 (14%) | 3,603 (14%) | 771 (12%) | <0.001 |

During the study period, there were 25,586 visits in the control group and 6,310 visits in the intervention group. Patients in the intervention group were on average older than patients in the control group. There were more female patients in the intervention group. Mean CCI was 4.2 in the intervention group and 3.6 in the control group. The intervention group had a shorter LOS and fewer 30‐day readmissions than the control group.

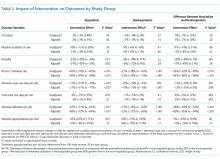

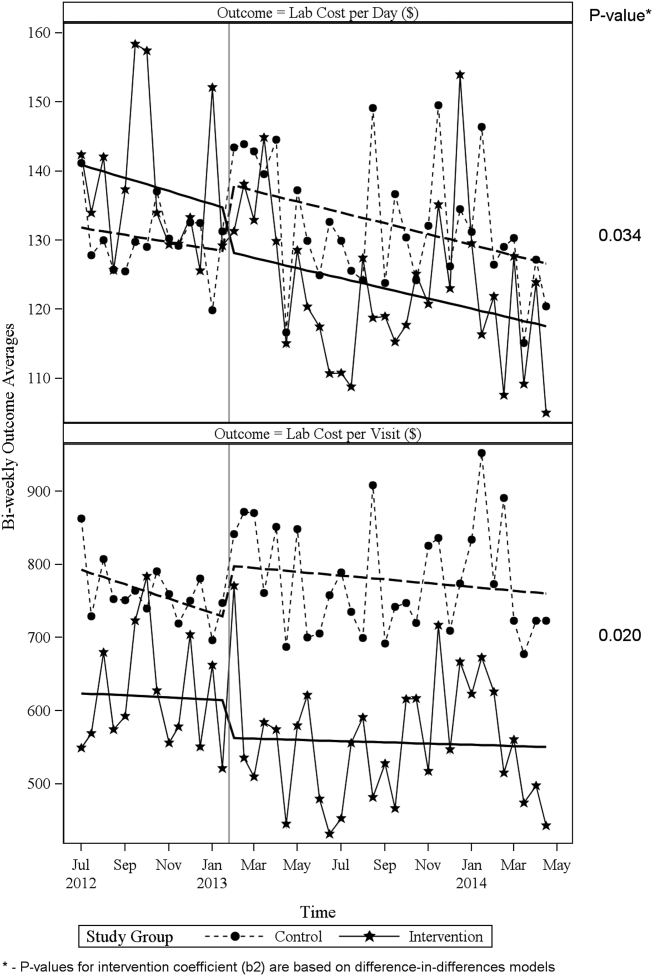

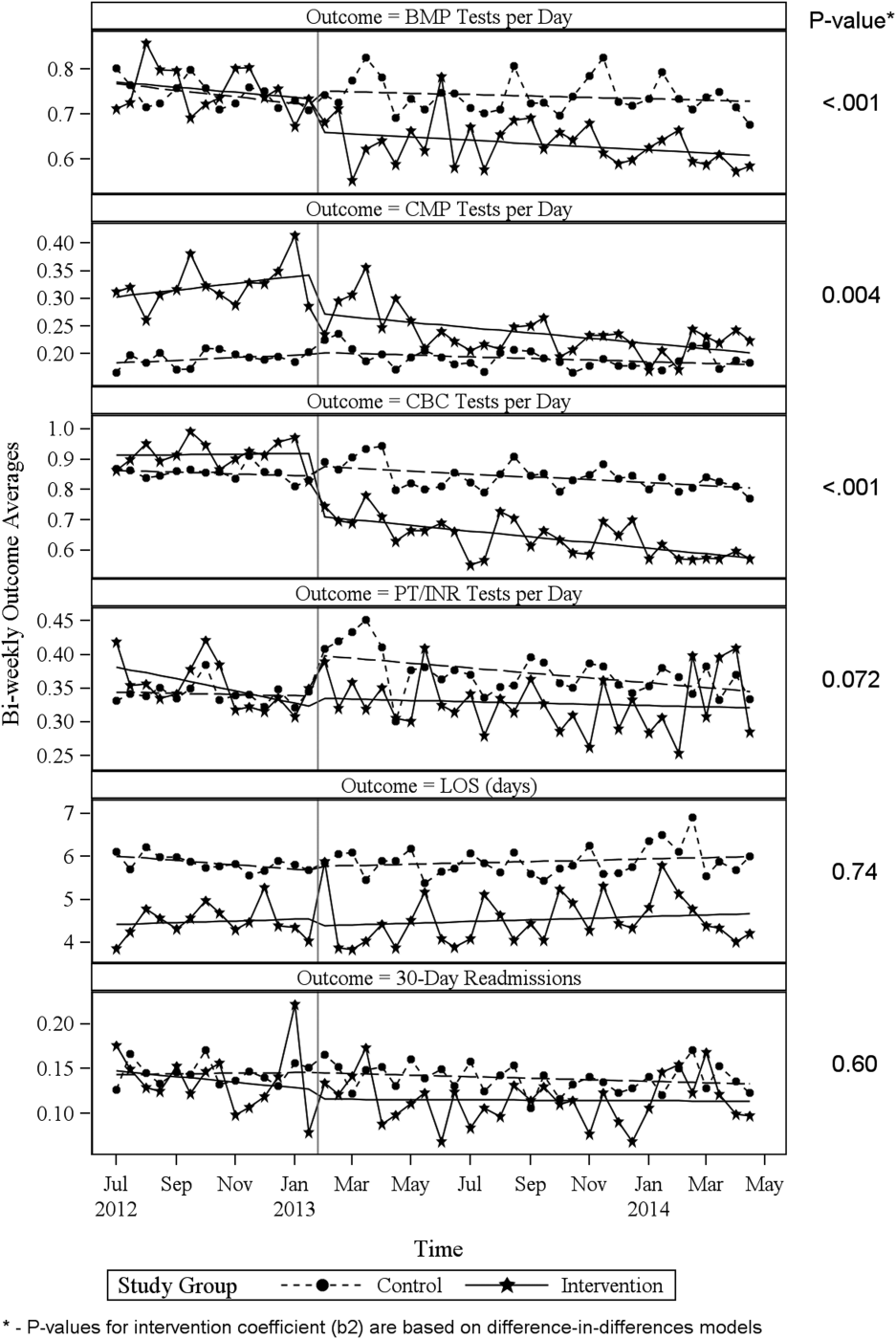

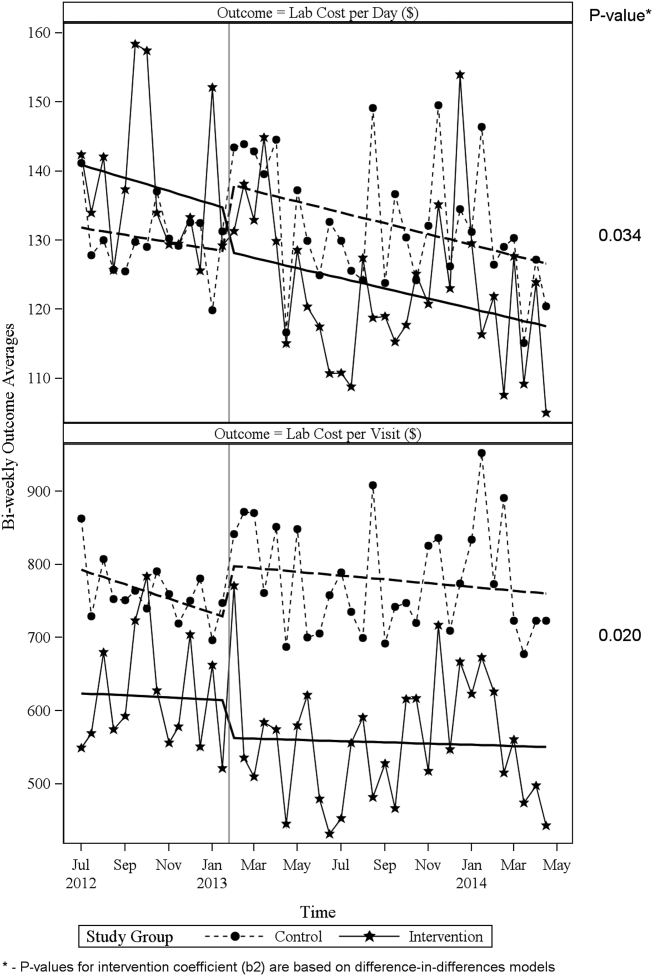

Descriptive statistics and simple comparisons of covariates and outcomes before and after the intervention are shown in Table 2. Age and gender distributions remained unchanged in both groups. CCI increased in the control group by 0.24 (P < 0.001) and remained unchanged in the intervention group. In the intervention group, lab cost per day was reduced from $138 before the intervention to $123 after the intervention (P < 0.001). In contrast, among control patients, cost per day increased nonsignificantly from $130 preintervention to $132 postintervention (P = 0.37). Number of tests per day significantly decreased for all specific tests in the intervention group. Readmission rates decreased significantly from 14% to 11% in the intervention group (P = 0.01). LOS remained constant in both groups.

| Characteristic* | Control | | | Intervention | | |
|---|---|---|---|---|---|---|
| | Preintervention, N = 8,102 | Postintervention, N = 17,484 | P Value | Preintervention, N = 2,034 | Postintervention, N = 4,276 | P Value |
| Patient characteristics | | | | | | |
| Age, y | 55.17 ± 17.46 | 55.31 ± 16.98 | 0.55 | 55.90 ± 19.47 | 56.50 ± 19.35 | 0.25 |
| Female gender | 3,707 (46%) | 8,046 (46%) | 0.69 | 1,039 (51%) | 2,203 (52%) | 0.74 |
| CCI | 3.45 ± 3.06 | 3.69 ± 3.21 | <0.001 | 4.19 ± 3.51 | 4.20 ± 3.56 | 0.89 |
| Outcomes | | | | | | |
| Cost per day, $ | 130.1 ± 431.8 | 132.2 ± 420.3 | 0.37 | 137.9 ± 232.9 | 122.9 ± 213.5 | <0.001 |
| Cost per visit, $ | 760.4 ± 1,813.6 | 777.8 ± 1,863.3 | 0.48 | 617.8 ± 844.1 | 558.2 ± 770.3 | 0.005 |
| BMP tests per day | 0.74 ± 1.21 | 0.74 ± 1.18 | 0.67 | 0.75 ± 1.03 | 0.63 ± 1.05 | <0.001 |
| CMP tests per day | 0.19 ± 0.68 | 0.19 ± 0.68 | 0.85 | 0.32 ± 0.68 | 0.23 ± 0.58 | <0.001 |
| CBC tests per day | 0.85 ± 1.14 | 0.84 ± 1.15 | 0.045 | 0.92 ± 0.79 | 0.64 ± 0.76 | <0.001 |
| PT/INR tests per day | 0.34 ± 1.04 | 0.37 ± 1.08 | <0.001 | 0.35 ± 0.82 | 0.33 ± 0.84 | 0.020 |
| LOS, d | 5.84 ± 7.66 | 5.88 ± 7.50 | 0.71 | 4.48 ± 5.12 | 4.54 ± 4.67 | 0.63 |
| 30‐day readmissions | 1,173 (14%) | 2,430 (14%) | 0.22 | 280 (14%) | 491 (11%) | 0.010 |

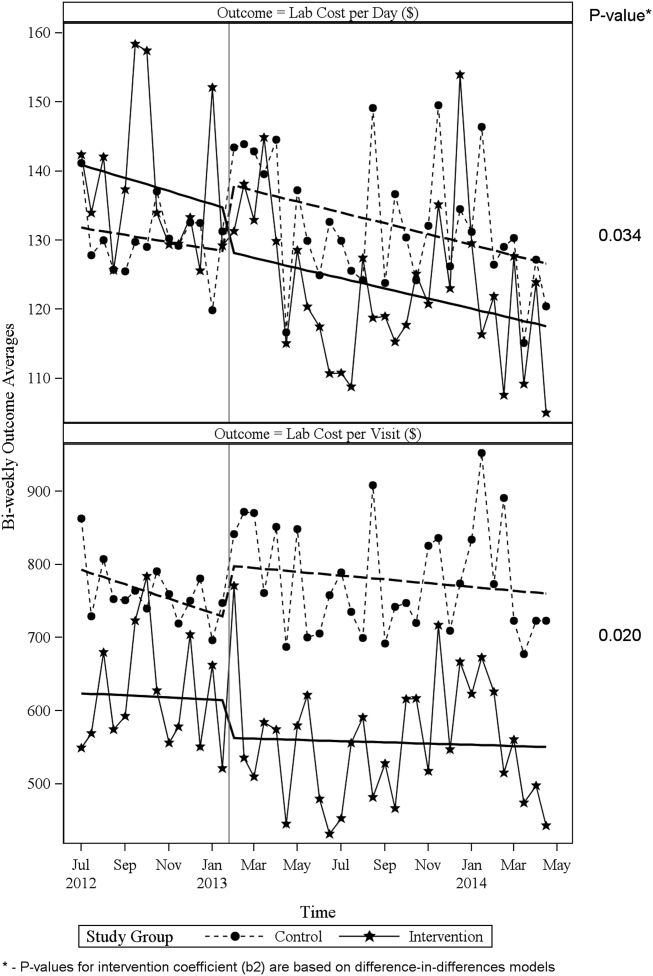

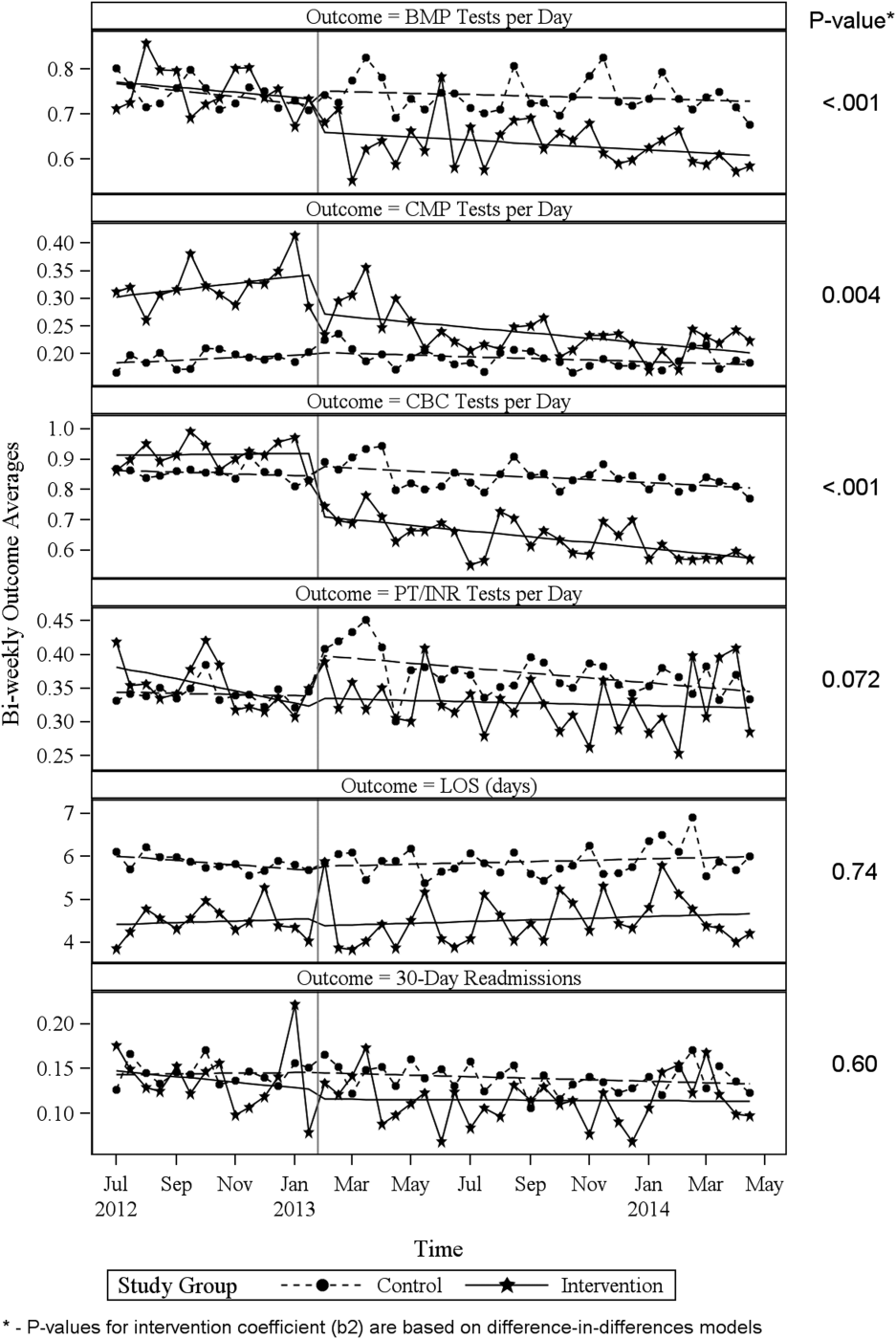

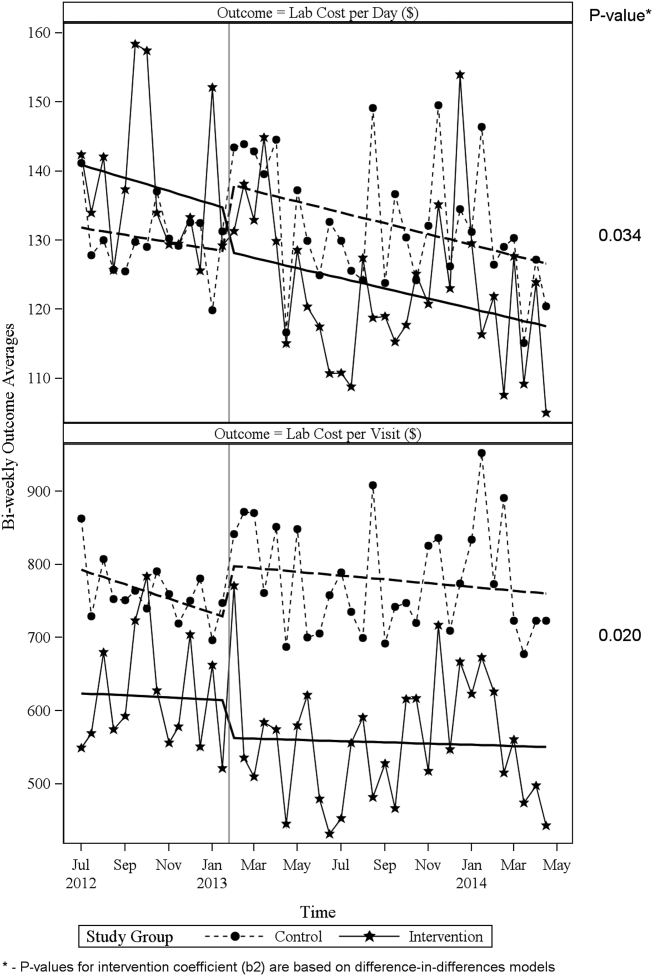

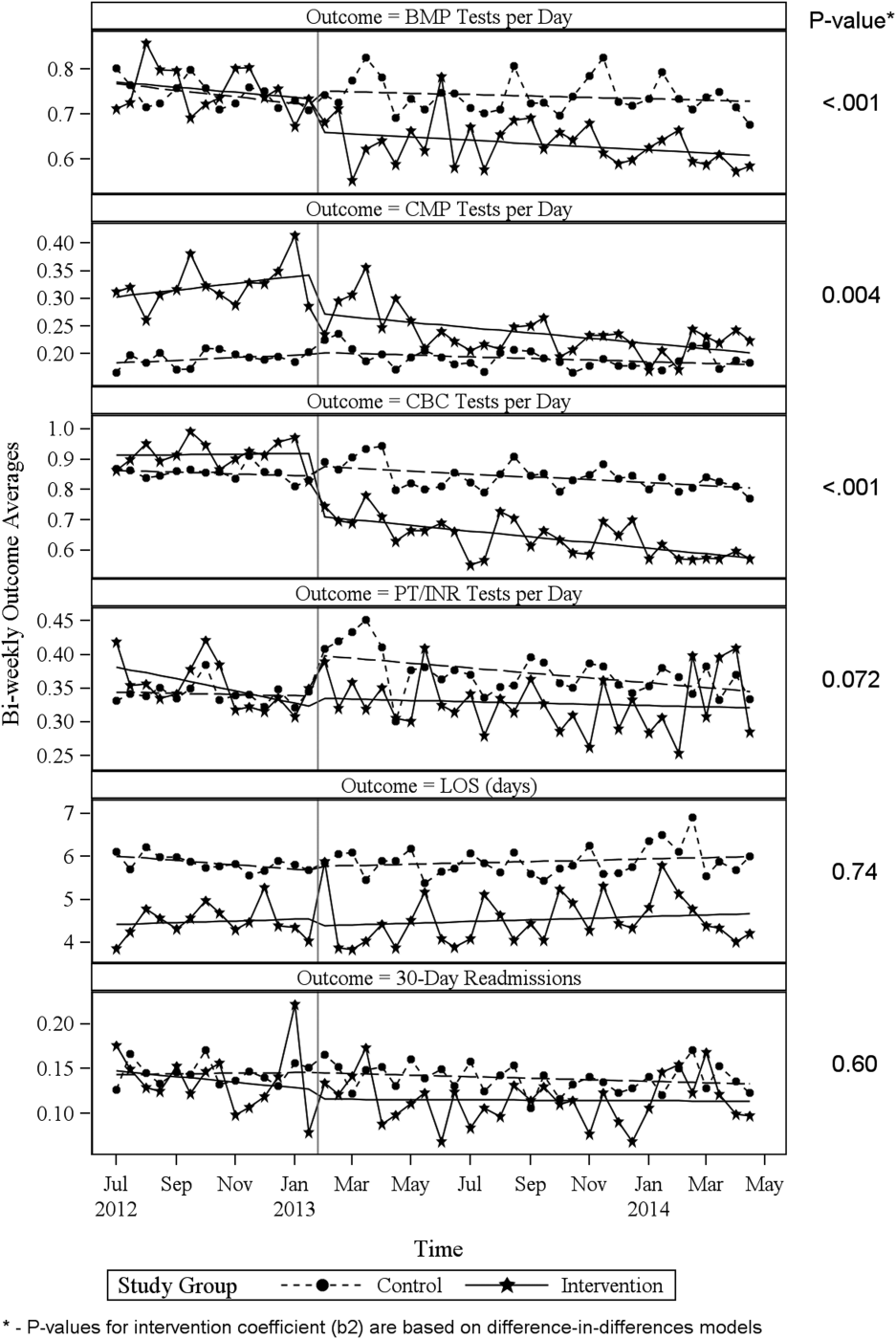

ITS analysis results are shown in Table 3. After the intervention, the difference in monthly means between the 2 groups dropped by $16 for cost per day (P = 0.034) and by $128 for cost per visit (P = 0.02). The decreased cost in the intervention group amounts to approximately $251,427 (95% confidence interval [CI]: $20,370‐$482,484) in savings over the first year. If the intervention had been rolled out for the control group and had a similar impact, it could have led to additional cost savings of $1,321,669 (95% CI: $107,081‐$2,536,256). Moreover, the numbers of basic metabolic panel, comprehensive metabolic panel, and complete blood count tests per day were reduced significantly more in the intervention group than in the control group (P < 0.001, P = 0.004, and P < 0.001, respectively).

| Outcome | Parameter* | Parameter Estimate | Standard Error | t Value | Pr > \|t\| |
|---|---|---|---|---|---|
| Lab cost per day ($) | Baseline difference level (b0) | 9.3450 | 6.5640 | 1.4237 | 0.16 |
| | Baseline difference trend (b1) | 0.2150 | 0.7709 | 0.2789 | 0.78 |
| | Change in difference level after intervention (b2) | 16.1200 | 7.3297 | 2.1993 | 0.034 |
| | Change in difference trend after intervention (b3) | 0.2388 | 0.8090 | 0.2952 | 0.77 |
| Lab cost per visit ($) | Baseline difference level (b0) | 166.081 | 48.3425 | 3.4355 | 0.001 |
| | Baseline difference trend (b1) | 3.6663 | 5.8571 | 0.6260 | 0.53 |
| | Change in difference level after intervention (b2) | 128.527 | 53.0278 | 2.4238 | 0.020 |
| | Change in difference trend after intervention (b3) | 2.2586 | 5.8463 | 0.3863 | 0.70 |
| BMP tests per day | Baseline difference level (b0) | 0.0061 | 0.0250 | 0.2439 | 0.81 |
| | Baseline difference trend (b1) | 0.0004 | 0.0030 | 0.1449 | 0.89 |
| | Change in difference level after intervention (b2) | 0.1034 | 0.0276 | 3.7426 | <0.001 |
| | Change in difference trend after intervention (b3) | 0.0014 | 0.0030 | 0.4588 | 0.65 |
| CMP tests per day | Baseline difference level (b0) | 0.1226 | 0.0226 | 5.4302 | <0.001 |
| | Baseline difference trend (b1) | 0.0015 | 0.0028 | 0.5539 | 0.58 |
| | Change in difference level after intervention (b2) | 0.0754 | 0.0248 | 3.0397 | 0.004 |
| | Change in difference trend after intervention (b3) | 0.0030 | 0.0028 | 1.0937 | 0.28 |
| CBC tests per day | Baseline difference level (b0) | 0.0539 | 0.0190 | 2.8338 | 0.007 |
| | Baseline difference trend (b1) | 0.0013 | 0.0023 | 0.5594 | 0.58 |
| | Change in difference level after intervention (b2) | 0.2343 | 0.0213 | 10.997 | <0.001 |
| | Change in difference trend after intervention (b3) | 0.0036 | 0.0023 | 1.5539 | 0.13 |
| PT/INR tests per day | Baseline difference level (b0) | 0.0413 | 0.0242 | 1.7063 | 0.096 |
| | Baseline difference trend (b1) | 0.0040 | 0.0028 | 1.4095 | 0.17 |
| | Change in difference level after intervention (b2) | 0.0500 | 0.0270 | 1.8507 | 0.072 |
| | Change in difference trend after intervention (b3) | 0.0054 | 0.0030 | 1.7940 | 0.080 |
| LOS, d | Baseline difference level (b0) | 1.4211 | 0.2746 | 5.1743 | <0.001 |
| | Baseline difference trend (b1) | 0.0093 | 0.0333 | 0.2807 | 0.78 |
| | Change in difference level after intervention (b2) | 0.1007 | 0.2988 | 0.3368 | 0.74 |
| | Change in difference trend after intervention (b3) | 0.0053 | 0.0331 | 0.1588 | 0.87 |
| 30‐day readmissions | Baseline difference level (b0) | 0.0057 | 0.0185 | 0.3084 | 0.76 |
| | Baseline difference trend (b1) | 0.0017 | 0.0022 | 0.8016 | 0.43 |
| | Change in difference level after intervention (b2) | 0.0110 | 0.0206 | 0.5315 | 0.60 |
| | Change in difference trend after intervention (b3) | 0.0021 | 0.0023 | 0.9111 | 0.37 |
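
As a reading aid for Table 3, each t value is the parameter estimate divided by its standard error. The short sketch below checks this for the two cost outcomes using the magnitudes as printed in the table; the function name and dictionary layout are illustrative only.

```python
def t_value(estimate, standard_error):
    """t statistic for a regression coefficient: estimate / standard error."""
    return estimate / standard_error


# (estimate, standard error, reported t value) for the b2 rows of Table 3.
b2_rows = {
    "Lab cost per day": (16.1200, 7.3297, 2.1993),
    "Lab cost per visit": (128.527, 53.0278, 2.4238),
}

for outcome, (estimate, se, reported) in b2_rows.items():
    computed = t_value(estimate, se)
    print(f"{outcome}: computed t = {computed:.4f}, reported t = {reported}")
```

Small differences in the last digit can arise because the published estimates and standard errors are themselves rounded.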

Figure 1 shows a graphical representation of the biweekly means for the 2 primary outcomes: lab cost per day and lab cost per visit. Figure 2 shows all other outcomes. To the right of each figure, P values are provided for the b2 coefficients from Table 3.

DISCUSSION

Through a multifaceted quality‐improvement initiative, the UUHC hospitalist group was able to reduce lab cost per day and per visit as well as commonly ordered routine labs as compared to an institutional control group. A multifaceted approach was selected given the literature supporting this approach as the most likely method to sustain improvement.[14] At the same time, the use of a multifaceted intervention makes it difficult to rigorously determine the relative impact of different components of the intervention. In discussing this issue, however, the hospitalist group felt that the driving factors for change were those related to process change, specifically, the use of a standardized rounding checklist to discuss lab testing and the routine review of lab costs at group meetings. The ultimate goal was to change the culture of routine test ordering into a thoughtful process of needed tests and thereby reduce costs. Prior to this intervention, the least experienced person on this team (the intern) ordered any test he or she wanted, usually without discussion. The intervention focused on this issue through standardized supervision and explicit discussion of laboratory tests. Importantly, although improvements from education initiatives typically decrease over time, the incorporation of process change in this intervention was felt to likely contribute to the sustained reduction seen at 15 months. Although use of the rounding checklist added another step to daily rounds, the routine cost feedback, including comparisons to peers, helped encourage use of the checklist. Thus, we feel that routine feedback was essential to sustaining the intervention and its impact.

Inappropriate and unnecessary testing has been recognized for decades, and multiple interventions have been attempted, including a recent article that demonstrated a 10% reduction in common laboratory ordering through an initiative mainly focused on education and ordering feedback.[25] Despite reported success of several interventions, none have combined multiple interventions and explicitly required discussion of laboratory tests on rounds. For example, although the UUHC intervention used Attali et al.[21] and Barie and Hydo's[20] work to develop the intervention, neither of these studies described how laboratory testing was discussed with the attending or supervising resident. The UUHC intervention thus builds on the current literature by combining other successful modalities with explicit discussion of laboratory testing via a rounding checklist and feedback with the novel VDO tool to reduce laboratory costs. A major strength of this intervention is the relatively low cost and the generalizability of implementing rounding checklists. Initial support from the hospital was needed to provide accurate VDO information to the hospitalist group. However, ongoing costs were minimal and related to any additional time spent during rounds to discuss laboratory tests. Thus, we feel that this intervention is feasible for wide replication.