Portable Ultrasound Device Usage and Learning Outcomes Among Internal Medicine Trainees: A Parallel-Group Randomized Trial

Point-of-care ultrasonography (POCUS) can transform healthcare delivery through its diagnostic and therapeutic expediency.1 POCUS has been shown to bolster diagnostic accuracy, reduce procedural complications, decrease inpatient length of stay, and improve patient satisfaction by encouraging the physician to be present at the bedside.2-8

POCUS has become widespread across a variety of clinical settings as more investigations have demonstrated its positive impact on patient care.1,9-12 This includes the use of POCUS by trainees, who are now utilizing this technology as part of their assessments of patients.13,14 However, trainees may be performing these examinations with minimal oversight, and outside of emergency medicine, there are few guidelines on how to effectively teach POCUS or measure competency.13,14 While POCUS is rapidly becoming a part of inpatient care, teaching physicians may have little experience in ultrasound or the expertise to adequately supervise trainees.14 There is a growing need to study what trainees can learn and how this knowledge is acquired.

Previous investigations have demonstrated that inexperienced users can be taught to use POCUS to identify a variety of pathological states.2,3,15-23 Most of these curricula used a single lecture series as their pedagogical vehicle, and they variably included junior medical trainees. More importantly, the investigations did not explore whether personal access to handheld ultrasound devices (HUDs) improved learning. In theory, improved access to POCUS devices increases opportunities for authentic and deliberate practice, which may be needed to improve trainee skill with POCUS beyond the classroom setting.14

This study aimed to address several ongoing gaps in knowledge related to learning POCUS. First, we hypothesized that personal HUD access would improve trainees’ POCUS-related knowledge and interpretive ability as a result of increased practice opportunities. Second, we hypothesized that trainees who receive personal access to HUDs would be more likely to perform POCUS examinations and feel more confident in their interpretations. Finally, we hypothesized that repeated exposure to POCUS-related lectures would result in greater improvements in knowledge as compared with a single lecture series.

METHODS

Participants and Setting

The 2017 intern class (n = 47) at an academic internal medicine residency program participated in the study. Control data were obtained from the 2016 intern class (historical control; n = 50) and the 2018 intern class (contemporaneous control; n = 52). The Stanford University Institutional Review Board approved this study.

Study Design

The 2017 intern class (n = 47) received POCUS didactics from June 2017 to June 2018. To evaluate if increased access to HUDs improved learning outcomes, the 2017 interns were randomized 1:1 to receive their own personal HUD that could be used for patient care and/or self-directed learning (n = 24) vs no-HUD (n = 23; Figure). Learning outcomes were assessed over the course of 1 year (see “Outcomes” below) and were compared with the 2016 and 2018 controls. The 2016 intern class had completed a year of training but had not received formalized POCUS didactics (historical control), whereas the 2018 intern class was assessed at the beginning of their year (contemporaneous control; Figure). In order to make comparisons based on intern experience, baseline data for the 2017 intern class were compared with the 2018 intern class, whereas end-of-study data for 2017 interns were compared with 2016 interns.

Outcomes

The primary outcome was the difference in assessment scores at the end of the study period between interns randomized to receive a HUD and those who were not. Secondary outcomes included differences in HUD usage rates, lecture attendance, and assessment scores. To assess whether repeated lecture exposure resulted in greater amounts of learning, this study evaluated assessment score improvements after each lecture block. Finally, trainee attitudes toward POCUS and their confidence in their interpretative ability were measured at the beginning and end of the study period.

Curriculum Implementation

The lectures were administered as once-weekly didactics of 1-hour duration to interns on the inpatient wards rotation. This rotation is 4 weeks long, and each intern completes the rotation two to four times per year. Each lecture contained two parts: (1) 20-30 minutes of didactics via Microsoft PowerPoint™ and (2) 30-40 minutes of supervised practice using HUDs on standardized patients. Four lectures were given each month: (1) introduction to POCUS and ultrasound physics, (2) thoracic/lung ultrasound, (3) echocardiography, and (4) abdominal POCUS. The lectures consisted of contrasting cases of normal/abnormal videos and clinical vignettes. These four lectures were repeated each month as new interns rotated on service. Some interns experienced the same content multiple times, which was intentional in order to assess their rates of learning over time. Lecture contents were based on previously published guidelines and expert consensus for teaching POCUS in internal medicine.13,24-26 Content from the Accreditation Council for Graduate Medical Education (ACGME) and the American College of Emergency Physicians (ACEP) was also incorporated because these organizations had published relevant guidelines for teaching POCUS.13,26 Further development of the lectures occurred through review of previously described POCUS-relevant curricula.27-32

Handheld Ultrasound Devices

This study used the Philips Lumify™, a United States Food and Drug Administration–approved device. Interns randomized to HUDs received their own device at the start of the rotation. It was at their discretion to use the device outside of the course. All devices were approved for patient use and were encrypted in compliance with our information security office. For privacy reasons, any saved patient images were not reviewed by the researchers. Interns were encouraged to share their findings with supervising physicians during rounds, but actual oversight was not measured. Interns not randomized to HUDs could access a single community device that was shared among all residents and fellows in the hospital. Interns reported the average number of POCUS examinations performed each week via a survey sent during the last week of the rotation.

Assessment Design and Implementation

Assessments evaluating trainee knowledge were administered before, during, and after the study period (Figure). For the 2017 cohort, assessments were also administered at the start and end of the ward month to track knowledge acquisition. Assessment contents were selected from POCUS guidelines for internal medicine and adaptation of the ACGME and ACEP guidelines.13,24,26 Additional content was obtained from major society POCUS tutorials and deidentified images collected by the study authors.13,24,33 In keeping with previously described methodology, the images were shown for approximately 12 seconds, followed by five additional seconds to allow the learner to answer the question.32 Final assessment contents were determined by the authors using the Delphi method.34 A sample assessment can be found in the Appendix Material.

Surveys

Surveys were administered alongside the assessments to the 2016-2018 intern classes. These surveys assessed trainee attitudes toward POCUS and were based on previously validated assessments.27,28,30 Attitudes were measured using 5-point Likert scales.

Statistical Analysis

For the primary outcome, we performed generalized binomial mixed-effect regressions using the survey periods, randomization group, and the interaction of the two as independent variables, adjusting for attendance and controlling for intra-intern correlations. A bivariate unadjusted analysis was performed to display the distribution of overall correctness on the assessments. The Wilcoxon signed rank test was used to determine score significance for dependent score variables (R Statistical Programming Language, Vienna, Austria).
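For readers less familiar with the Wilcoxon signed rank test, the following minimal Python sketch illustrates how paired scores (for example, preblock vs postblock) are compared. It is not the authors' analysis code, which was written in R. The function name `wilcoxon_signed_rank`, the normal approximation for the P value, and the example scores are all assumptions made for illustration only.

```python
import math


def wilcoxon_signed_rank(pre, post):
    """Paired Wilcoxon signed rank test (hypothetical helper).

    Returns (W_plus, W_minus, two-sided p) using a normal approximation
    with a tie correction; zero differences are dropped.
    """
    diffs = [b - a for a, b in zip(pre, post) if b != a]
    n = len(diffs)
    if n == 0:
        raise ValueError("all paired differences are zero")

    # Rank the absolute differences, giving tied values their average rank.
    order = sorted(range(n), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * n
    tie_term = 0.0
    i = 0
    while i < n:
        j = i
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        avg_rank = (i + j) / 2 + 1  # mean of ranks i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg_rank
        t = j - i + 1
        tie_term += t**3 - t
        i = j + 1

    # Sum the ranks separately for positive and negative differences.
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    w_minus = sum(r for r, d in zip(ranks, diffs) if d < 0)

    # Normal approximation to the null distribution of the rank sum.
    mean = n * (n + 1) / 4
    var = n * (n + 1) * (2 * n + 1) / 24 - tie_term / 48
    z = (min(w_plus, w_minus) - mean) / math.sqrt(var)
    p = math.erfc(abs(z) / math.sqrt(2))
    return w_plus, w_minus, p


# Hypothetical data: number of correct items per intern before and after a block.
pre_scores = [11, 12, 13, 14, 15]
post_scores = [12, 14, 16, 18, 20]
w_plus, w_minus, p_value = wilcoxon_signed_rank(pre_scores, post_scores)
print(w_plus, w_minus, round(p_value, 3))
```

With samples as small as this example, an exact P value would usually be preferred over the normal approximation; the approximation is used here only to keep the sketch short.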

RESULTS

Baseline Characteristics

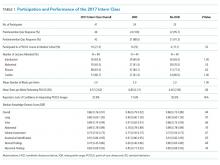

There were 149 interns who participated in this study (Figure). Assessment/survey completion rates were as follows: 2016 control: 68.0%; 2017 preintervention: 97.9%; 2017 postintervention: 89.4%; and 2018 control: 100%. The 2017 interns reported similar amounts of prior POCUS exposure in medical school (Table 1).
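The completion rates above can be cross-checked against the class sizes reported in the Methods. The short Python sketch below assumes that each rate was calculated over the full enrolled class, which the text does not state explicitly. The implied respondent counts are therefore illustrative and are not figures reported by the authors.

```python
# Class sizes as reported in "Participants and Setting".
class_sizes = {
    "2016 control": 50,
    "2017 preintervention": 47,
    "2017 postintervention": 47,
    "2018 control": 52,
}

# Completion rates (%) as reported in "Baseline Characteristics".
reported_pct = {
    "2016 control": 68.0,
    "2017 preintervention": 97.9,
    "2017 postintervention": 89.4,
    "2018 control": 100.0,
}

# Implied number of completers, ASSUMING the denominator is the class size.
implied_completers = {
    group: round(class_sizes[group] * reported_pct[group] / 100)
    for group in class_sizes
}

# Consistency check: each implied count should reproduce the reported rate.
for group, k in implied_completers.items():
    recomputed = round(100 * k / class_sizes[group], 1)
    print(group, k, recomputed, recomputed == reported_pct[group])

# Total participants: the three intern classes (2016, 2017, 2018).
total_participants = 50 + 47 + 52
print(total_participants)
```

Under this assumption, the reported percentages are consistent with whole-number respondent counts, and the three class sizes sum to the 149 participants stated in the text.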

Primary Outcome: Assessment Scores (HUD vs no HUD)

There were no significant differences in assessment scores at the end of the study between interns randomized to personal HUD access vs those to no-HUD access (Table 1). HUD interns reported performing POCUS assessments on patients a mean 6.8 (standard deviation [SD] 2.2) times per week vs 6.4 (SD 2.9) times per week in the no-HUD arm (P = .66). The mean lecture attendance was 75.0% and did not significantly differ between the HUD and no-HUD arms (Table 1).

Secondary Outcomes

Impact of Repeating Lectures

The 2017 interns demonstrated significant increases in preblock vs postblock assessment scores after first-time exposure to the lectures (median preblock score 0.61 [interquartile range (IQR), 0.53-0.70] vs postblock score 0.81 [IQR, 0.72-0.86]; P < .001; Table 2). However, intern performance on the preblock vs postblock assessments after second-time exposure to the curriculum failed to improve (median second preblock score 0.78 [IQR, 0.69-0.83] vs postblock score 0.81 [IQR, 0.64-0.89]; P = .94). Intern performance on individual domains of knowledge for each block is listed in Appendix Table 1.

Intervention Performance vs Controls

The 2016 historical control had significantly higher scores compared with the 2017 preintervention group (P < .001; Appendix Table 2). The year-long lecture series resulted in significant increases in median scores for the 2017 group (median preintervention score 0.55 [0.41-0.61] vs median postintervention score 0.84 [0.71-0.90]; P = .006; Appendix Table 1). At the end of the study, the 2017 postintervention scores were significantly higher across multiple knowledge domains compared with the 2016 historical control (Appendix Table 2).

Survey Results

Notably, the 2017 intern class at the end of the intervention did not have significantly different assessment scores for several disease-specific domains, compared with the 2016 control (Appendix Table 2). Nonetheless, the 2017 intern class reported higher levels of confidence in these same domains despite similar scores (Supplementary Figure). The HUD group less often cited a lack of confidence in their abilities as a barrier to performing POCUS examinations (17.6%), compared with the no-HUD group (50.0%), despite nearly identical assessment scores between the two groups (Table 1).

DISCUSSION

Previous guidelines have recommended increased HUD access for learners,13,24,35,36 but there have been few investigations that have evaluated the impact of such access on learning POCUS. One previous investigation found that hospitalists who carried HUDs were more likely to identify heart failure on bedside examination.37 In contrast, our study found no improvement in interpretative ability when randomizing interns to carry HUDs for patient care. Notably, interns did not perform more POCUS examinations when given HUDs. We offer several explanations for this finding. First, time-motion studies have demonstrated that internal medicine interns spend less than 15% of their time on direct patient care.38 It is possible that the demands of being an intern impeded their ability to perform more POCUS examinations on their patients, regardless of HUD access. Alternatively, the interns randomized to no personal access may have used the community device more frequently as a result of the lecture series. Given the cost of HUDs, further studies are needed to assess the degree to which HUD access will improve trainee interpretive ability, especially as more training programs consider the creation of ultrasound curricula.10,11,24,39,40

This study was unique because it followed interns over a year-long course that repeated the same material to assess rates of learning with repeated exposure. Learners improved their scores after the first, but not second, block. Furthermore, the median scores were nearly identical between the first postblock assessment and second preblock assessment (0.81 vs 0.78), suggesting that knowledge was retained between blocks. Together, these findings suggest there may be limitations of traditional lectures that use standardized patient models for practice. Supplementary pedagogies, such as in-the-moment feedback with actual patients, may be needed to promote mastery.14,35

Despite no formal curriculum, the 2016 intern class (historical control) had learned POCUS to some degree based on their higher assessment scores compared with the 2017 intern class during the preintervention period. Such learning may be informal, and yet, trainees may feel confident in making clinical decisions without formalized training, accreditation, or oversight. As suggested by this study, adding regular didactics or giving trainees HUDs may not immediately solve this issue. For assessment items in which the 2017 interns did not significantly differ from the controls, they nonetheless reported higher confidence in their abilities. Similarly, interns randomized to HUDs less frequently cited a lack of confidence in their abilities, despite similar scores to the no-HUD group. Such confidence may be incongruent with their actual knowledge or ability to safely use POCUS. This phenomenon of misplaced confidence is known as the Dunning–Kruger effect, and it may be common with ultrasound learning.41 While confidence can be part of a holistic definition of competency,14 these results raise the concern that trainees may have difficulty assessing their own competency level with POCUS.35

There are several limitations to this study. It was performed at a single institution with limited sample size. It examined only intern physicians because of funding constraints, which limits the generalizability of these findings among medical trainees. Technical ability assessments (including obtaining and interpreting images) were not included. We were unable to track the timing or location of the devices’ usage, and the interns’ self-reported usage rates may be subject to recall bias. To our knowledge, there were no significant lapses in device availability/functionality. Intern physicians in the HUD arm did not receive formal feedback on personally acquired patient images, which may have limited the intervention’s impact.

In conclusion, internal medicine interns who received personal HUDs were not better at recognizing normal/abnormal findings on image assessments, and they did not report performing more POCUS examinations. Because only a minority of a trainee’s time is spent on direct patient care, offering trainees HUDs without substantial guidance may not be enough to promote mastery. Notably, trainees who received HUDs felt more confident in their abilities, despite no objective increase in their actual skill. Finally, interns who received POCUS-related lectures experienced significant benefit upon first exposure to the material, while repeated exposures did not improve performance. Future investigations should stringently track trainee POCUS usage rates with HUDs and assess whether image acquisition ability improves as a result of personal access.

1. Moore CL, Copel JA. Point-of-care ultrasonography. N Engl J Med. 2011;364(8):749-757. https://doi.org/10.1056/NEJMra0909487.

2. Akkaya A, Yesilaras M, Aksay E, Sever M, Atilla OD. The interrater reliability of ultrasound imaging of the inferior vena cava performed by emergency residents. Am J Emerg Med. 2013;31(10):1509-1511. https://doi.org/10.1016/j.ajem.2013.07.006.

3. Razi R, Estrada JR, Doll J, Spencer KT. Bedside hand-carried ultrasound by internal medicine residents versus traditional clinical assessment for the identification of systolic dysfunction in patients admitted with decompensated heart failure. J Am Soc Echocardiogr. 2011;24(12):1319-1324. https://doi.org/10.1016/j.echo.2011.07.013.

4. Dodge KL, Lynch CA, Moore CL, Biroscak BJ, Evans LV. Use of ultrasound guidance improves central venous catheter insertion success rates among junior residents. J Ultrasound Med. 2012;31(10):1519-1526. https://doi.org/10.7863/jum.2012.31.10.1519.

5. Cavanna L, Mordenti P, Bertè R, et al. Ultrasound guidance reduces pneumothorax rate and improves safety of thoracentesis in malignant pleural effusion: Report on 445 consecutive patients with advanced cancer. World J Surg Oncol. 2014;12:139. https://doi.org/10.1186/1477-7819-12-139.

6. Testa A, Francesconi A, Giannuzzi R, Berardi S, Sbraccia P. Economic analysis of bedside ultrasonography (US) implementation in an Internal Medicine department. Intern Emerg Med. 2015;10(8):1015-1024. https://doi.org/10.1007/s11739-015-1320-7.

7. Howard ZD, Noble VE, Marill KA, et al. Bedside ultrasound maximizes patient satisfaction. J Emerg Med. 2014;46(1):46-53. https://doi.org/10.1016/j.jemermed.2013.05.044.

8. Park YH, Jung RB, Lee YG, et al. Does the use of bedside ultrasonography reduce emergency department length of stay for patients with renal colic? A pilot study. Clin Exp Emerg Med. 2016;3(4):197-203. https://doi.org/10.15441/ceem.15.109.

9. Glomb N, D’Amico B, Rus M, Chen C. Point-of-care ultrasound in resource-limited settings. Clin Pediatr Emerg Med. 2015;16(4):256-261. https://doi.org/10.1016/j.cpem.2015.10.001.

10. Bahner DP, Goldman E, Way D, Royall NA, Liu YT. The state of ultrasound education in U.S. medical schools: results of a national survey. Acad Med. 2014;89(12):1681-1686. https://doi.org/10.1097/ACM.0000000000000414.

11. Hall JWW, Holman H, Bornemann P, et al. Point of care ultrasound in family medicine residency programs: A CERA study. Fam Med. 2015;47(9):706-711.

12. Schnobrich DJ, Gladding S, Olson APJ, Duran-Nelson A. Point-of-care ultrasound in internal medicine: A national survey of educational leadership. J Grad Med Educ. 2013;5(3):498-502. https://doi.org/10.4300/JGME-D-12-00215.1.

13. Stolz LA, Stolz U, Fields JM, et al. Emergency medicine resident assessment of the emergency ultrasound milestones and current training recommendations. Acad Emerg Med. 2017;24(3):353-361. https://doi.org/10.1111/acem.13113.

14. Kumar A, Jensen T, Kugler J. Evaluation of trainee competency with point-of-care ultrasonography (POCUS): A conceptual framework and review of existing assessments. J Gen Intern Med. 2019;34(6):1025-1031. https://doi.org/10.1007/s11606-019-04945-4.

15. Levitov A, Frankel HL, Blaivas M, et al. Guidelines for the appropriate use of bedside general and cardiac ultrasonography in the evaluation of critically ill patients—part ii: Cardiac ultrasonography. Crit Care Med. 2016;44(6):1206-1227. https://doi.org/10.1097/CCM.0000000000001847.

16. Kobal SL, Trento L, Baharami S, et al. Comparison of effectiveness of hand-carried ultrasound to bedside cardiovascular physical examination. Am J Cardiol. 2005;96(7):1002-1006. https://doi.org/10.1016/j.amjcard.2005.05.060.

17. Ceriani E, Cogliati C. Update on bedside ultrasound diagnosis of pericardial effusion. Intern Emerg Med. 2016;11(3):477-480. https://doi.org/10.1007/s11739-015-1372-8.

18. Labovitz AJ, Noble VE, Bierig M, et al. Focused cardiac ultrasound in the emergent setting: A consensus statement of the American Society of Echocardiography and American College of Emergency Physicians. J Am Soc Echocardiogr. 2010;23(12):1225-1230. https://doi.org/10.1016/j.echo.2010.10.005.

19. Keil-Ríos D, Terrazas-Solís H, González-Garay A, Sánchez-Ávila JF, García-Juárez I. Pocket ultrasound device as a complement to physical examination for ascites evaluation and guided paracentesis. Intern Emerg Med. 2016;11(3):461-466. https://doi.org/10.1007/s11739-016-1406-x.

20. Riddell J, Case A, Wopat R, et al. Sensitivity of emergency bedside ultrasound to detect hydronephrosis in patients with computed tomography–proven stones. West J Emerg Med. 2014;15(1):96-100. https://doi.org/10.5811/westjem.2013.9.15874.

21. Dalziel PJ, Noble VE. Bedside ultrasound and the assessment of renal colic: A review. Emerg Med J. 2013;30(1):3-8. https://doi.org/10.1136/emermed-2012-201375.

22. Whitson MR, Mayo PH. Ultrasonography in the emergency department. Crit Care. 2016;20(1):227. https://doi.org/10.1186/s13054-016-1399-x.

23. Kumar A, Liu G, Chi J, Kugler J. The role of technology in the bedside encounter. Med Clin North Am. 2018;102(3):443-451. https://doi.org/10.1016/j.mcna.2017.12.006.

24. Ma IWY, Arishenkoff S, Wiseman J, et al. Internal medicine point-of-care ultrasound curriculum: Consensus recommendations from the Canadian Internal Medicine Ultrasound (CIMUS) Group. J Gen Intern Med. 2017;32(9):1052-1057. https://doi.org/10.1007/s11606-017-4071-5.

25. Sabath BF, Singh G. Point-of-care ultrasonography as a training milestone for internal medicine residents: The time is now. J Community Hosp Intern Med Perspect. 2016;6(5):33094. https://doi.org/10.3402/jchimp.v6.33094.

26. American College of Emergency Physicians. Ultrasound guidelines: emergency, point-of-care and clinical ultrasound guidelines in medicine. Ann Emerg Med. 2017;69(5):e27-e54. https://doi.org/10.1016/j.annemergmed.2016.08.457.

27. Ramsingh D, Rinehart J, Kain Z, et al. Impact assessment of perioperative point-of-care ultrasound training on anesthesiology residents. Anesthesiology. 2015;123(3):670-682. https://doi.org/10.1097/ALN.0000000000000776.

28. Keddis MT, Cullen MW, Reed DA, et al. Effectiveness of an ultrasound training module for internal medicine residents. BMC Med Educ. 2011;11:75. https://doi.org/10.1186/1472-6920-11-75.

29. Townsend NT, Kendall J, Barnett C, Robinson T. An effective curriculum for focused assessment diagnostic echocardiography: Establishing the learning curve in surgical residents. J Surg Educ. 2016;73(2):190-196. https://doi.org/10.1016/j.jsurg.2015.10.009.

30. Hoppmann RA, Rao VV, Bell F, et al. The evolution of an integrated ultrasound curriculum (iUSC) for medical students: 9-year experience. Crit Ultrasound J. 2015;7(1):18. https://doi.org/10.1186/s13089-015-0035-3.

31. Skalski JH, Elrashidi M, Reed DA, McDonald FS, Bhagra A. Using standardized patients to teach point-of-care ultrasound–guided physical examination skills to internal medicine residents. J Grad Med Educ. 2015;7(1):95-97. https://doi.org/10.4300/JGME-D-14-00178.1.

32. Chisholm CB, Dodge WR, Balise RR, Williams SR, Gharahbaghian L, Beraud A-S. Focused cardiac ultrasound training: How much is enough? J Emerg Med. 2013;44(4):818-822. https://doi.org/10.1016/j.jemermed.2012.07.092.

33. Schmidt GA, Schraufnagel D. Introduction to ATS seminars: Intensive care ultrasound. Ann Am Thorac Soc. 2013;10(5):538-539. https://doi.org/10.1513/AnnalsATS.201306-203ED.

34. Skaarup SH, Laursen CB, Bjerrum AS, Hilberg O. Objective and structured assessment of lung ultrasound competence. A multispecialty Delphi consensus and construct validity study. Ann Am Thorac Soc. 2017;14(4):555-560. https://doi.org/10.1513/AnnalsATS.201611-894OC.

35. Lucas BP, Tierney DM, Jensen TP, et al. Credentialing of hospitalists in ultrasound-guided bedside procedures: A position statement of the Society of Hospital Medicine. J Hosp Med. 2018;13(2):117-125. https://doi.org/10.12788/jhm.2917.

36. Frankel HL, Kirkpatrick AW, Elbarbary M, et al. Guidelines for the appropriate use of bedside general and cardiac ultrasonography in the evaluation of critically ill patients-part i: General ultrasonography. Crit Care Med. 2015;43(11):2479-2502. https://doi.org/10.1097/CCM.0000000000001216.

37. Martin LD, Howell EE, Ziegelstein RC, et al. Hand-carried ultrasound performed by hospitalists: Does it improve the cardiac physical examination? Am J Med. 2009;122(1):35-41. https://doi.org/10.1016/j.amjmed.2008.07.022.

38. Desai SV, Asch DA, Bellini LM, et al. Education outcomes in a duty-hour flexibility trial in internal medicine. N Engl J Med. 2018;378(16):1494-1508. https://doi.org/10.1056/NEJMoa1800965.

39. Baltarowich OH, Di Salvo DN, Scoutt LM, et al. National ultrasound curriculum for medical students. Ultrasound Q. 2014;30(1):13-19. https://doi.org/10.1097/RUQ.0000000000000066.

40. Beal EW, Sigmond BR, Sage-Silski L, Lahey S, Nguyen V, Bahner DP. Point-of-care ultrasound in general surgery residency training: A proposal for milestones in graduate medical education ultrasound. J Ultrasound Med. 2017;36(12):2577-2584. https://doi.org/10.1002/jum.14298.

41. Kruger J, Dunning D. Unskilled and unaware of it: how difficulties in recognizing one’s own incompetence lead to inflated self-assessments. J Pers Soc Psychol. 1999;77(6):1121-1134. https://doi.org/10.1037//0022-3514.77.6.1121.

Point-of-care ultrasonography (POCUS) can transform healthcare delivery through its diagnostic and therapeutic expediency.1 POCUS has been shown to bolster diagnostic accuracy, reduce procedural complications, decrease inpatient length of stay, and improve patient satisfaction by encouraging the physician to be present at the bedside.2-8

POCUS has become widespread across a variety of clinical settings as more investigations have demonstrated its positive impact on patient care.1,9-12 This includes the use of POCUS by trainees, who are now utilizing this technology as part of their assessments of patients.13,14 However, trainees may be performing these examinations with minimal oversight, and outside of emergency medicine, there are few guidelines on how to effectively teach POCUS or measure competency.13,14 While POCUS is rapidly becoming a part of inpatient care, teaching physicians may have little experience in ultrasound or the expertise to adequately supervise trainees.14 There is a growing need to study what trainees can learn and how this knowledge is acquired.

Previous investigations have demonstrated that inexperienced users can be taught to use POCUS to identify a variety of pathological states.2,3,15-23 Most of these curricula used a single lecture series as their pedagogical vehicle, and they variably included junior medical trainees. More importantly, the investigations did not explore whether personal access to handheld ultrasound devices (HUDs) improved learning. In theory, improved access to POCUS devices increases opportunities for authentic and deliberate practice, which may be needed to improve trainee skill with POCUS beyond the classroom setting.14

This study aimed to address several ongoing gaps in knowledge related to learning POCUS. First, we hypothesized that personal HUD access would improve trainees’ POCUS-related knowledge and interpretive ability as a result of increased practice opportunities. Second, we hypothesized that trainees who receive personal access to HUDs would be more likely to perform POCUS examinations and feel more confident in their interpretations. Finally, we hypothesized that repeated exposure to POCUS-related lectures would result in greater improvements in knowledge as compared with a single lecture series.

METHODS

Participants and Setting

The 2017 intern class (n = 47) at an academic internal medicine residency program participated in the study. Control data were obtained from the 2016 intern class (historical control; n = 50) and the 2018 intern class (contemporaneous control; n = 52). The Stanford University Institutional Review Board approved this study.

Study Design

The 2017 intern class (n = 47) received POCUS didactics from June 2017 to June 2018. To evaluate if increased access to HUDs improved learning outcomes, the 2017 interns were randomized 1:1 to receive their own personal HUD that could be used for patient care and/or self-directed learning (n = 24) vs no-HUD (n = 23; Figure). Learning outcomes were assessed over the course of 1 year (see “Outcomes” below) and were compared with the 2016 and 2018 controls. The 2016 intern class had completed a year of training but had not received formalized POCUS didactics (historical control), whereas the 2018 intern class was assessed at the beginning of their year (contemporaneous control; Figure). In order to make comparisons based on intern experience, baseline data for the 2017 intern class were compared with the 2018 intern class, whereas end-of-study data for 2017 interns were compared with 2016 interns.

Outcomes

The primary outcome was the difference in assessment scores at the end of the study period between interns randomized to receive a HUD and those who were not. Secondary outcomes included differences in HUD usage rates, lecture attendance, and assessment scores. To assess whether repeated lecture exposure resulted in greater amounts of learning, this study evaluated for assessment score improvements after each lecture block. Finally, trainee attitudes toward POCUS and their confidence in their interpretative ability were measured at the beginning and end of the study period.

Curriculum Implementation

The lectures were administered as once-weekly didactics of 1-hour duration to interns rotating on the inpatient wards rotation. This rotation is 4 weeks long, and each intern will experience the rotation two to four times per year. Each lecture contained two parts: (1) 20-30 minutes of didactics via Microsoft PowerPointTM and (2) 30-40 minutes of supervised practice using HUDs on standardized patients. Four lectures were given each month: (1) introduction to POCUS and ultrasound physics, (2) thoracic/lung ultrasound, (3) echocardiography, and (4) abdominal POCUS. The lectures consisted of contrasting cases of normal/abnormal videos and clinical vignettes. These four lectures were repeated each month as new interns rotated on service. Some interns experienced the same content multiple times, which was intentional in order to assess their rates of learning over time. Lecture contents were based on previously published guidelines and expert consensus for teaching POCUS in internal medicine.13, 24-26 Content from the Accreditation Council for Graduate Medical Education (ACGME) and the American College of Emergency Physicians (ACEP) was also incorporated because these organizations had published relevant guidelines for teaching POCUS.13,26 Further development of the lectures occurred through review of previously described POCUS-relevant curricula.27-32

Handheld Ultrasound Devices

This study used the Philips LumifyTM, a United States Food and Drug Administration–approved device. Interns randomized to HUDs received their own device at the start of the rotation. It was at their discretion to use the device outside of the course. All devices were approved for patient use and were encrypted in compliance with our information security office. For privacy reasons, any saved patient images were not reviewed by the researchers. Interns were encouraged to share their findings with supervising physicians during rounds, but actual oversight was not measured. Interns not randomized to HUDs could access a single community device that was shared among all residents and fellows in the hospital. Interns reported the average number of POCUS examinations performed each week via a survey sent during the last week of the rotation.

Assessment Design and Implementation

Assessments evaluating trainee knowledge were administered before, during, and after the study period (Figure). For the 2017 cohort, assessments were also administered at the start and end of the ward month to track knowledge acquisition. Assessment contents were selected from POCUS guidelines for internal medicine and adaptation of the ACGME and ACEP guidelines.13,24,26 Additional content was obtained from major society POCUS tutorials and deidentified images collected by the study authors.13,24,33 In keeping with previously described methodology, the images were shown for approximately 12 seconds, followed by five additional seconds to allow the learner to answer the question.32 Final assessment contents were determined by the authors using the Delphi method.34 A sample assessment can be found in the Appendix Material.

Surveys

Surveys were administered alongside the assessments to the 2016-2018 intern classes. These surveys assessed trainee attitudes toward POCUS and were based on previously validated assessments.27,28,30 Attitudes were measured using 5-point Likert scales.

Statistical Analysis

For the primary outcome, we performed generalized binomial mixed-effect regressions using the survey periods, randomization group, and the interaction of the two as independent variables, adjusting for attendance and controlling for intra-intern correlations. A bivariate unadjusted analysis was performed to display the distribution of overall correctness on the assessments. The Wilcoxon signed rank test was used to assess the significance of score differences for dependent (paired) score variables. Analyses were performed using the R statistical programming language (Vienna, Austria).
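For readers unfamiliar with the Wilcoxon signed rank test named above, the following is a minimal sketch of how it compares paired preblock and postblock scores. It is not the authors' analysis code (the study used R); the function name `wilcoxon_signed_rank` and the example scores are hypothetical, and the P value uses the large-sample normal approximation without a continuity correction, which an exact test would replace for small samples.

```python
import math


def wilcoxon_signed_rank(pre, post):
    """Return (W_plus, W_minus, two-sided p) for paired samples.

    Uses the normal approximation with a tie correction; zero
    differences are dropped, following the classic Wilcoxon convention.
    """
    diffs = [b - a for a, b in zip(pre, post) if b != a]
    n = len(diffs)
    if n == 0:
        raise ValueError("all paired differences are zero")

    # Rank the absolute differences, giving tied values their average rank.
    order = sorted(range(n), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * n
    tie_term = 0.0
    i = 0
    while i < n:
        j = i
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        avg_rank = (i + j) / 2 + 1  # mean of the 1-based ranks i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg_rank
        t = j - i + 1
        tie_term += t**3 - t
        i = j + 1

    # Sum the ranks separately for positive and negative differences.
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    w_minus = sum(r for r, d in zip(ranks, diffs) if d < 0)

    # Null mean and variance of the rank sum, with tie correction.
    mean = n * (n + 1) / 4
    var = n * (n + 1) * (2 * n + 1) / 24 - tie_term / 48
    z = (min(w_plus, w_minus) - mean) / math.sqrt(var)
    p_two_sided = math.erfc(abs(z) / math.sqrt(2))
    return w_plus, w_minus, p_two_sided


# Hypothetical percent-correct scores for eight interns (not study data).
example_pre = [61, 53, 70, 58, 65, 49, 72, 60]
example_post = [81, 72, 86, 75, 80, 70, 85, 78]
w_plus, w_minus, p_value = wilcoxon_signed_rank(example_pre, example_post)
print(w_plus, w_minus, round(p_value, 4))
```

Integer percent scores are used in the example so that tied absolute differences compare exactly; with fractional scores, rounding before ranking would serve the same purpose.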

RESULTS

Baseline Characteristics

There were 149 interns who participated in this study (Figure). Assessment/survey completion rates were as follows: 2016 control: 68.0%; 2017 preintervention: 97.9%; 2017 postintervention: 89.4%; and 2018 control: 100%. The 2017 interns reported similar amounts of prior POCUS exposure in medical school (Table 1).
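As a rough consistency check on these figures, the sketch below recovers the number of completers implied by each reported rate. The cohort sizes (50, 47, and 52) are those given under Participants and Setting; the completer counts themselves are not reported in the text and are inferred here, so they should be read as an assumption. The helper name `implied_completers` is hypothetical.

```python
# Cohort sizes as stated under Participants and Setting.
cohort_sizes = {
    "2016 control": 50,
    "2017 preintervention": 47,
    "2017 postintervention": 47,
    "2018 control": 52,
}

# Completion rates (percent) as reported in Baseline Characteristics.
reported_rates = {
    "2016 control": 68.0,
    "2017 preintervention": 97.9,
    "2017 postintervention": 89.4,
    "2018 control": 100.0,
}


def implied_completers(n, rate_percent):
    """Return every count k (0..n) whose rate rounds to rate_percent at one decimal."""
    return [k for k in range(n + 1) if round(100 * k / n, 1) == rate_percent]


# Inferred counts per group (an assumption, not a reported result).
implied = {
    group: implied_completers(cohort_sizes[group], reported_rates[group])
    for group in cohort_sizes
}

# The three distinct intern classes should add up to the reported total.
total_interns = sum(
    cohort_sizes[g] for g in ("2016 control", "2017 preintervention", "2018 control")
)

for group, counts in implied.items():
    print(group, counts)
print("total interns:", total_interns)
```

Each reported rate maps to exactly one whole-number count under these cohort sizes, and the three classes sum to the 149 interns stated above.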

Primary Outcome: Assessment Scores (HUD vs no HUD)

There were no significant differences in assessment scores at the end of the study between interns randomized to personal HUD access and those randomized to no-HUD access (Table 1). HUD interns reported performing POCUS assessments on patients a mean of 6.8 (standard deviation [SD] 2.2) times per week vs 6.4 (SD 2.9) times per week in the no-HUD arm (P = .66). The mean lecture attendance was 75.0% and did not significantly differ between the HUD and no-HUD arms (Table 1).

Secondary Outcomes

Impact of Repeating Lectures

The 2017 interns demonstrated significant increases in preblock vs postblock assessment scores after first-time exposure to the lectures (median preblock score 0.61 [interquartile range (IQR), 0.53-0.70] vs postblock score 0.81 [IQR, 0.72-0.86]; P < .001; Table 2). However, intern performance on the preblock vs postblock assessments after second-time exposure to the curriculum failed to improve (median second preblock score 0.78 [IQR, 0.69-0.83] vs postblock score 0.81 [IQR, 0.64-0.89]; P = .94). Intern performance on individual domains of knowledge for each block is listed in Appendix Table 1.

Intervention Performance vs Controls

The 2016 historical control had significantly higher scores compared with the 2017 preintervention group (P < .001; Appendix Table 2). The year-long lecture series resulted in significant increases in median scores for the 2017 group (median preintervention score 0.55 [0.41-0.61] vs median postintervention score 0.84 [0.71-0.90]; P = .006; Appendix Table 1). At the end of the study, the 2017 postintervention scores were significantly higher across multiple knowledge domains compared with the 2016 historical control (Appendix Table 2).

Survey Results

Notably, the 2017 intern class at the end of the intervention did not have significantly different assessment scores for several disease-specific domains, compared with the 2016 control (Appendix Table 2). Nonetheless, the 2017 intern class reported higher levels of confidence in these same domains despite similar scores (Supplementary Figure). The HUD group less often cited a lack of confidence in their abilities as a barrier to performing POCUS examinations (17.6%) than the no-HUD group (50.0%), despite nearly identical assessment scores between the two groups (Table 1).

DISCUSSION

Previous guidelines have recommended increased HUD access for learners,13,24,35,36 but few investigations have evaluated the impact of such access on learning POCUS. One previous investigation found that hospitalists who carried HUDs were more likely to identify heart failure on bedside examination.37 In contrast, our study found no improvement in interpretative ability when randomizing interns to carry HUDs for patient care. Notably, interns did not perform more POCUS examinations when given HUDs. We offer several explanations for this finding. First, time-motion studies have demonstrated that internal medicine interns spend less than 15% of their time on direct patient care.38 It is possible that the demands of being an intern impeded their ability to perform more POCUS examinations on their patients, regardless of HUD access. Alternatively, the interns randomized to no personal access may have used the community device more frequently as a result of the lecture series. Given the cost of HUDs, further studies are needed to assess the degree to which HUD access will improve trainee interpretive ability, especially as more training programs consider the creation of ultrasound curricula.10,11,24,39,40

This study was unique because it followed interns over a year-long course that repeated the same material to assess rates of learning with repeated exposure. Learners improved their scores after the first, but not second, block. Furthermore, the median scores were nearly identical between the first postblock assessment and second preblock assessment (0.81 vs 0.78), suggesting that knowledge was retained between blocks. Together, these findings suggest there may be limitations of traditional lectures that use standardized patient models for practice. Supplementary pedagogies, such as in-the-moment feedback with actual patients, may be needed to promote mastery.14,35

Despite having no formal curriculum, the 2016 intern class (historical control) appeared to have learned POCUS to some degree, as suggested by their higher assessment scores compared with the 2017 intern class during the preintervention period. Such learning may be informal, and yet, trainees may feel confident in making clinical decisions without formalized training, accreditation, or oversight. As suggested by this study, adding regular didactics or giving trainees HUDs may not immediately solve this issue. For assessment items in which the 2017 interns did not significantly differ from the controls, they nonetheless reported higher confidence in their abilities. Similarly, interns randomized to HUDs less frequently cited a lack of confidence in their abilities, despite similar scores to the no-HUD group. Such confidence may be incongruent with their actual knowledge or ability to safely use POCUS. This phenomenon of misplaced confidence is known as the Dunning–Kruger effect, and it may be common with ultrasound learning.41 While confidence can be part of a holistic definition of competency,14 these results raise the concern that trainees may have difficulty assessing their own competency level with POCUS.35

There are several limitations to this study. It was performed at a single institution with limited sample size. It examined only intern physicians because of funding constraints, which limits the generalizability of these findings among medical trainees. Technical ability assessments (including obtaining and interpreting images) were not included. We were unable to track the timing or location of the devices’ usage, and the interns’ self-reported usage rates may be subject to recall bias. To our knowledge, there were no significant lapses in device availability/functionality. Intern physicians in the HUD arm did not receive formal feedback on personally acquired patient images, which may have limited the intervention’s impact.

In conclusion, internal medicine interns who received personal HUDs were not better at recognizing normal/abnormal findings on image assessments, and they did not report performing more POCUS examinations. Because only a minority of a trainee’s time is spent on direct patient care, offering trainees HUDs without substantial guidance may not be enough to promote mastery. Notably, trainees who received HUDs felt more confident in their abilities, despite no objective increase in their actual skill. Finally, interns who received POCUS-related lectures experienced significant benefit upon first exposure to the material, while repeated exposures did not improve performance. Future investigations should stringently track trainee POCUS usage rates with HUDs and assess whether image acquisition ability improves as a result of personal access.

1. Moore CL, Copel JA. Point-of-care ultrasonography. N Engl J Med. 2011;364(8):749-757. https://doi.org/10.1056/NEJMra0909487.

2. Akkaya A, Yesilaras M, Aksay E, Sever M, Atilla OD. The interrater reliability of ultrasound imaging of the inferior vena cava performed by emergency residents. Am J Emerg Med. 2013;31(10):1509-1511. https://doi.org/10.1016/j.ajem.2013.07.006.

3. Razi R, Estrada JR, Doll J, Spencer KT. Bedside hand-carried ultrasound by internal medicine residents versus traditional clinical assessment for the identification of systolic dysfunction in patients admitted with decompensated heart failure. J Am Soc Echocardiogr. 2011;24(12):1319-1324. https://doi.org/10.1016/j.echo.2011.07.013.

4. Dodge KL, Lynch CA, Moore CL, Biroscak BJ, Evans LV. Use of ultrasound guidance improves central venous catheter insertion success rates among junior residents. J Ultrasound Med. 2012;31(10):1519-1526. https://doi.org/10.7863/jum.2012.31.10.1519.

5. Cavanna L, Mordenti P, Bertè R, et al. Ultrasound guidance reduces pneumothorax rate and improves safety of thoracentesis in malignant pleural effusion: Report on 445 consecutive patients with advanced cancer. World J Surg Oncol. 2014;12:139. https://doi.org/10.1186/1477-7819-12-139.

6. Testa A, Francesconi A, Giannuzzi R, Berardi S, Sbraccia P. Economic analysis of bedside ultrasonography (US) implementation in an Internal Medicine department. Intern Emerg Med. 2015;10(8):1015-1024. https://doi.org/10.1007/s11739-015-1320-7.

7. Howard ZD, Noble VE, Marill KA, et al. Bedside ultrasound maximizes patient satisfaction. J Emerg Med. 2014;46(1):46-53. https://doi.org/10.1016/j.jemermed.2013.05.044.

8. Park YH, Jung RB, Lee YG, et al. Does the use of bedside ultrasonography reduce emergency department length of stay for patients with renal colic? A pilot study. Clin Exp Emerg Med. 2016;3(4):197-203. https://doi.org/10.15441/ceem.15.109.

9. Glomb N, D’Amico B, Rus M, Chen C. Point-of-care ultrasound in resource-limited settings. Clin Pediatr Emerg Med. 2015;16(4):256-261. https://doi.org/10.1016/j.cpem.2015.10.001.

10. Bahner DP, Goldman E, Way D, Royall NA, Liu YT. The state of ultrasound education in U.S. medical schools: results of a national survey. Acad Med. 2014;89(12):1681-1686. https://doi.org/10.1097/ACM.0000000000000414.

11. Hall JWW, Holman H, Bornemann P, et al. Point of care ultrasound in family medicine residency programs: A CERA study. Fam Med. 2015;47(9):706-711.

12. Schnobrich DJ, Gladding S, Olson APJ, Duran-Nelson A. Point-of-care ultrasound in internal medicine: A national survey of educational leadership. J Grad Med Educ. 2013;5(3):498-502. https://doi.org/10.4300/JGME-D-12-00215.1.

13. Stolz LA, Stolz U, Fields JM, et al. Emergency medicine resident assessment of the emergency ultrasound milestones and current training recommendations. Acad Emerg Med. 2017;24(3):353-361. https://doi.org/10.1111/acem.13113.

14. Kumar A, Jensen T, Kugler J. Evaluation of trainee competency with point-of-care ultrasonography (POCUS): A conceptual framework and review of existing assessments. J Gen Intern Med. 2019;34(6):1025-1031. https://doi.org/10.1007/s11606-019-04945-4.

15. Levitov A, Frankel HL, Blaivas M, et al. Guidelines for the appropriate use of bedside general and cardiac ultrasonography in the evaluation of critically ill patients—part ii: Cardiac ultrasonography. Crit Care Med. 2016;44(6):1206-1227. https://doi.org/10.1097/CCM.0000000000001847.

16. Kobal SL, Trento L, Baharami S, et al. Comparison of effectiveness of hand-carried ultrasound to bedside cardiovascular physical examination. Am J Cardiol. 2005;96(7):1002-1006. https://doi.org/10.1016/j.amjcard.2005.05.060.

17. Ceriani E, Cogliati C. Update on bedside ultrasound diagnosis of pericardial effusion. Intern Emerg Med. 2016;11(3):477-480. https://doi.org/10.1007/s11739-015-1372-8.

18. Labovitz AJ, Noble VE, Bierig M, et al. Focused cardiac ultrasound in the emergent setting: A consensus statement of the American Society of Echocardiography and American College of Emergency Physicians. J Am Soc Echocardiogr. 2010;23(12):1225-1230. https://doi.org/10.1016/j.echo.2010.10.005.

19. Keil-Ríos D, Terrazas-Solís H, González-Garay A, Sánchez-Ávila JF, García-Juárez I. Pocket ultrasound device as a complement to physical examination for ascites evaluation and guided paracentesis. Intern Emerg Med. 2016;11(3):461-466. https://doi.org/10.1007/s11739-016-1406-x.

20. Riddell J, Case A, Wopat R, et al. Sensitivity of emergency bedside ultrasound to detect hydronephrosis in patients with computed tomography–proven stones. West J Emerg Med. 2014;15(1):96-100. https://doi.org/10.5811/westjem.2013.9.15874.

21. Dalziel PJ, Noble VE. Bedside ultrasound and the assessment of renal colic: A review. Emerg Med J. 2013;30(1):3-8. https://doi.org/10.1136/emermed-2012-201375.

22. Whitson MR, Mayo PH. Ultrasonography in the emergency department. Crit Care. 2016;20(1):227. https://doi.org/10.1186/s13054-016-1399-x.

23. Kumar A, Liu G, Chi J, Kugler J. The role of technology in the bedside encounter. Med Clin North Am. 2018;102(3):443-451. https://doi.org/10.1016/j.mcna.2017.12.006.

24. Ma IWY, Arishenkoff S, Wiseman J, et al. Internal medicine point-of-care ultrasound curriculum: Consensus recommendations from the Canadian Internal Medicine Ultrasound (CIMUS) Group. J Gen Intern Med. 2017;32(9):1052-1057. https://doi.org/10.1007/s11606-017-4071-5.

25. Sabath BF, Singh G. Point-of-care ultrasonography as a training milestone for internal medicine residents: The time is now. J Community Hosp Intern Med Perspect. 2016;6(5):33094. https://doi.org/10.3402/jchimp.v6.33094.

26. American College of Emergency Physicians. Ultrasound guidelines: emergency, point-of-care and clinical ultrasound guidelines in medicine. Ann Emerg Med. 2017;69(5):e27-e54. https://doi.org/10.1016/j.annemergmed.2016.08.457.

27. Ramsingh D, Rinehart J, Kain Z, et al. Impact assessment of perioperative point-of-care ultrasound training on anesthesiology residents. Anesthesiology. 2015;123(3):670-682. https://doi.org/10.1097/ALN.0000000000000776.

28. Keddis MT, Cullen MW, Reed DA, et al. Effectiveness of an ultrasound training module for internal medicine residents. BMC Med Educ. 2011;11:75. https://doi.org/10.1186/1472-6920-11-75.

29. Townsend NT, Kendall J, Barnett C, Robinson T. An effective curriculum for focused assessment diagnostic echocardiography: Establishing the learning curve in surgical residents. J Surg Educ. 2016;73(2):190-196. https://doi.org/10.1016/j.jsurg.2015.10.009.

30. Hoppmann RA, Rao VV, Bell F, et al. The evolution of an integrated ultrasound curriculum (iUSC) for medical students: 9-year experience. Crit Ultrasound J. 2015;7(1):18. https://doi.org/10.1186/s13089-015-0035-3.

31. Skalski JH, Elrashidi M, Reed DA, McDonald FS, Bhagra A. Using standardized patients to teach point-of-care ultrasound–guided physical examination skills to internal medicine residents. J Grad Med Educ. 2015;7(1):95-97. https://doi.org/10.4300/JGME-D-14-00178.1.

32. Chisholm CB, Dodge WR, Balise RR, Williams SR, Gharahbaghian L, Beraud A-S. Focused cardiac ultrasound training: How much is enough? J Emerg Med. 2013;44(4):818-822. https://doi.org/10.1016/j.jemermed.2012.07.092.

33. Schmidt GA, Schraufnagel D. Introduction to ATS seminars: Intensive care ultrasound. Ann Am Thorac Soc. 2013;10(5):538-539. https://doi.org/10.1513/AnnalsATS.201306-203ED.

34. Skaarup SH, Laursen CB, Bjerrum AS, Hilberg O. Objective and structured assessment of lung ultrasound competence. A multispecialty Delphi consensus and construct validity study. Ann Am Thorac Soc. 2017;14(4):555-560. https://doi.org/10.1513/AnnalsATS.201611-894OC.

35. Lucas BP, Tierney DM, Jensen TP, et al. Credentialing of hospitalists in ultrasound-guided bedside procedures: A position statement of the Society of Hospital Medicine. J Hosp Med. 2018;13(2):117-125. https://doi.org/10.12788/jhm.2917.

36. Frankel HL, Kirkpatrick AW, Elbarbary M, et al. Guidelines for the appropriate use of bedside general and cardiac ultrasonography in the evaluation of critically ill patients-part i: General ultrasonography. Crit Care Med. 2015;43(11):2479-2502. https://doi.org/10.1097/CCM.0000000000001216.

37. Martin LD, Howell EE, Ziegelstein RC, et al. Hand-carried ultrasound performed by hospitalists: Does it improve the cardiac physical examination? Am J Med. 2009;122(1):35-41. https://doi.org/10.1016/j.amjmed.2008.07.022.

38. Desai SV, Asch DA, Bellini LM, et al. Education outcomes in a duty-hour flexibility trial in internal medicine. N Engl J Med. 2018;378(16):1494-1508. https://doi.org/10.1056/NEJMoa1800965.

39. Baltarowich OH, Di Salvo DN, Scoutt LM, et al. National ultrasound curriculum for medical students. Ultrasound Q. 2014;30(1):13-19. https://doi.org/10.1097/RUQ.0000000000000066.

40. Beal EW, Sigmond BR, Sage-Silski L, Lahey S, Nguyen V, Bahner DP. Point-of-care ultrasound in general surgery residency training: A proposal for milestones in graduate medical education ultrasound. J Ultrasound Med. 2017;36(12):2577-2584. https://doi.org/10.1002/jum.14298.

41. Kruger J, Dunning D. Unskilled and unaware of it: how difficulties in recognizing one’s own incompetence lead to inflated self-assessments. J Pers Soc Psychol. 1999;77(6):1121-1134. https://doi.org/10.1037//0022-3514.77.6.1121.

© 2020 Society of Hospital Medicine

Surgical Comanagement by Hospitalists: Continued Improvement Over 5 Years

In surgical comanagement (SCM), surgeons and hospitalists share responsibility for the care of surgical patients. While SCM has been increasingly utilized, many of the reported models are modifications of the consultation model, in which a group of rotating hospitalists, internists, or geriatricians cares for surgical patients, often after medical complications have occurred.1-4

In August 2012, we implemented SCM in Orthopedic and Neurosurgery services at our institution.5 This model is unique because the same Internal Medicine hospitalists are dedicated year round to the same surgical service. SCM hospitalists see patients on their assigned surgical service only; they do not see patients on the Internal Medicine service. After the first year of implementing SCM, we conducted a propensity score–weighted study with 17,057 discharges in the pre-SCM group (January 2009 to July 2012) and 5,533 discharges in the post-SCM group (September 2012 to September 2013).5 In this study, SCM was associated with a decrease in medical complications, length of stay (LOS), medical consultations, 30-day readmissions, and cost.5

Since SCM requires ongoing investment by institutions, we now report a follow-up study to explore whether patient outcomes continued to improve with SCM. In this study, we evaluate whether there was a decrease in medical complications, LOS, number of medical consultations, rapid response team calls, and code blues, and an increase in patient satisfaction, with SCM in Orthopedic and Neurosurgery services between 2012 and 2018.

METHODS

We included 26,380 discharges from Orthopedic and Neurosurgery services between September 1, 2012, and June 30, 2018, at our academic medical center. We excluded patients discharged in August 2012 as we transitioned to the SCM model. Our Institutional Review Board exempted this study from further review.

SCM Structure

SCM structure was detailed in a prior article.5 We have 3.0 clinical full-time equivalents on the Orthopedic surgery SCM service and 1.2 on the Neurosurgery SCM service. On weekdays, during the day (8

During the day, SCM hospitalists receive the first call for medical issues. After 5

SCM hospitalists screen the entire patient list on their assigned surgery service each day. After screening the list, they formally see selected patients with preventable or active medical conditions and write notes in the patient’s chart. There are no set criteria to determine which patients are seen by SCM hospitalists, because surgery can cause stable medical conditions to decompensate and new, unexpected medical complications may occur. Additionally, in our prior study, we reported that SCM reduced medical complications and LOS regardless of age or patient acuity.5

Outcomes

Our primary outcome was proportion of patients with ≥1 medical complication (sepsis, pneumonia, urinary tract infection, delirium, acute kidney injury, atrial fibrillation, or ileus). Our secondary outcomes included mean LOS, proportion of patients with ≥2 medical consultations, rapid response team calls, code blues, and top-box patient satisfaction score. Though cost is an important consideration in implementing SCM, limited financial data were available. However, since LOS is a key component in calculating direct costs,6 we estimated the cost savings per discharge using mean direct cost per day and the difference in mean LOS between pre- and post-SCM groups.5
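The cost estimate described above reduces to simple arithmetic: mean direct cost per day multiplied by the reduction in mean LOS. The following is a minimal sketch of that calculation, not the authors' analysis code; the function name and the example inputs are hypothetical and are not study data.

```python
def cost_savings_per_discharge(mean_direct_cost_per_day, mean_los_pre, mean_los_post):
    """Estimate savings per discharge as cost per day times the drop in mean LOS (days).

    Assumes LOS is expressed in days and that direct cost scales linearly with LOS.
    """
    return mean_direct_cost_per_day * (mean_los_pre - mean_los_post)


# Hypothetical inputs for illustration only (not values from the study).
example = cost_savings_per_discharge(
    mean_direct_cost_per_day=2000.0,
    mean_los_pre=5.0,
    mean_los_post=4.5,
)
print(example)  # 1000.0
```

A positive result indicates estimated savings per discharge; a negative result would indicate that mean LOS rose in the post-SCM group.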