
A STEEEP Hill to Climb: A Scoping Review of Assessments of Individual Hospitalist Performance

Healthcare quality is defined as the extent to which healthcare services result in desired outcomes.1 Quality of care depends on how the healthcare system’s various components, including healthcare practitioners, interact to meet each patient’s needs.2 These components can be shaped to achieve desired outcomes through rules, incentives, and other approaches, but influencing the behaviors of each component, such as the performance of hospitalists, requires defining goals for performance and implementing measurement approaches to assess progress toward these goals.

One set of principles to define goals for quality and guide assessment of desired behaviors is the multidimensional STEEEP framework. This framework, created by the Institute of Medicine, identifies six domains of quality: Safe, Timely, Effective, Efficient, Equitable, and Patient Centered.2 Briefly, “Safe” means avoiding injuries to patients, “Timely” means reducing waits and delays in care, “Effective” means providing care based on evidence, “Efficient” means avoiding waste, “Equitable” means ensuring quality does not vary based on personal characteristics such as race and gender, and “Patient Centered” means providing care that is responsive to patients’ values and preferences. The STEEEP domains are not coequal; rather, together they ensure that quality is considered broadly and help avoid errors such as measuring an intervention’s impact on effectiveness alone without assessing its impact on other domains of quality, such as how patient centered, efficient (cost effective), or equitable the resulting care is.

Based on our review of the literature, a multidimensional framework like STEEEP has not been used to define and assess the quality of individual hospitalists’ performance. Some hospital-level quality metrics reflect several dimensions simultaneously, such as door-to-balloon time for acute myocardial infarction, which measures both the effectiveness and the timeliness of care. Programs like pay-for-performance, Hospital Consumer Assessment of Healthcare Providers and Systems (HCAHPS), and the Merit-Based Incentive Payment System (MIPS) have tied reimbursement to assessments aligned with several STEEEP domains at both individual and institutional levels but lack a holistic approach to quality.3-6 The biennial State of Hospital Medicine Report, the most widely used description of hospitalist performance, reports group-level data, including relative value units and whether groups are held accountable for quality measures such as performance on core measures, timely documentation, and “citizenship” (eg, committee participation or academic work).7 While these are useful benchmarks, the report focuses on performance at the group level. In addition, several academic groups have described more complete dashboards or scorecards to assess individual hospitalist performance, primarily designed to facilitate comparison across hospitalist groups or to incentivize overall group performance.8-10 However, these efforts are not guided by an overarching framework and are structured after traditional academic models, with components related to teaching and scholarship that may not translate to nonacademic environments. Finally, the Core Competencies in Hospital Medicine outline some goals for hospitalist performance but do not address specific measurement approaches.11

Overall, assessing individual hospitalist performance is hindered by a lack of consensus on which concepts are important to measure, a limited number of valid measures, and challenges in data collection such as resource limitations and feasibility. Developing and refining measures grounded in the STEEEP framework may provide a more comprehensive assessment of hospitalist quality and identify approaches to improve overall health outcomes. Comparative data could help individual hospitalists improve their performance; leaders of hospitalist groups could use these data to guide faculty development and advancement as they ensure quality care at the individual, group, and system levels.

To better inform quality measurement of individual hospitalists, we sought to identify existing publications on individual hospitalist quality. Our goal was to characterize the published literature on quality measurement at the individual hospitalist level, relate these publications to the domains of quality defined by the STEEEP framework, and identify directions for assessment or further research that could improve the overall quality of care.

METHODS

We conducted a scoping review following methods outlined by Arksey and O’Malley12 and Tricco et al.13 The goal of a scoping review is to map the extent of research within a specific field; this methodology is well suited to characterizing existing research on the quality of hospitalist care at the individual level. A protocol for the scoping review was not registered.

Evidence Search

A systematic search for published English-language literature on hospitalist care was conducted in Medline (Ovid; 1946 to June 4, 2019) on June 5, 2019. The search combined keywords and controlled vocabulary for the concept of hospitalists or hospital medicine. The full search strategy is described in the Appendix. In addition, reference lists of articles were hand searched to identify publications not retrieved by the database search.

Study Selection

All references were uploaded to Covidence systematic review software (www.covidence.org; Covidence), and duplicates were removed. Four reviewers (A.D., B.C., L.H., R.Q.) conducted title and abstract review and full-text review to identify studies that measured differences in the performance of hospitalists at the individual level. Disagreements among reviewers were resolved by consensus. Studies of both adult and pediatric populations were eligible. Articles that focused on group-level outcomes could be included if nonpooled individual-level data were also reported. Studies were excluded if they did not focus on individual quality-of-care indicators or were not published in English.

Data Charting and Synthesis

We extracted the following information using a standardized data collection form: author, title, year of publication, study design, intervention, and outcome measures. Original manuscripts were consulted as needed to supplement the analysis. Critical appraisal of individual studies was not conducted because the goal of this review was to analyze which quality indicators have been studied and how they were measured. Two reviewers (A.D. and B.C.) then coded each article for its alignment with the STEEEP framework. After initial coding, the reviewers met to consolidate codes and resolve any disagreements by consensus. Results were summarized in text and tabular form, with studies grouped by focus of assessment and the methods of assessment listed for each study.

RESULTS

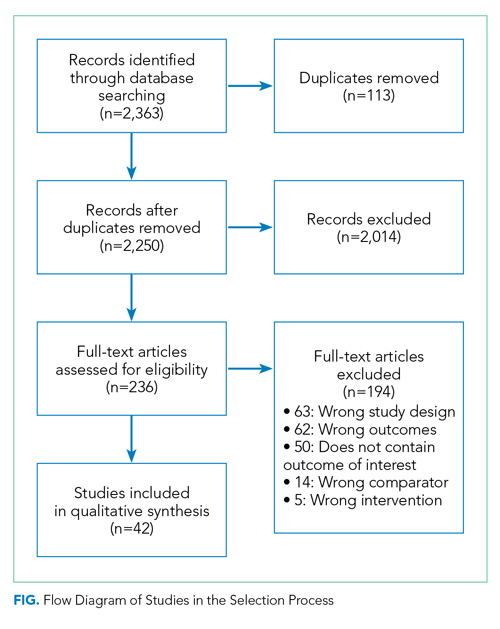

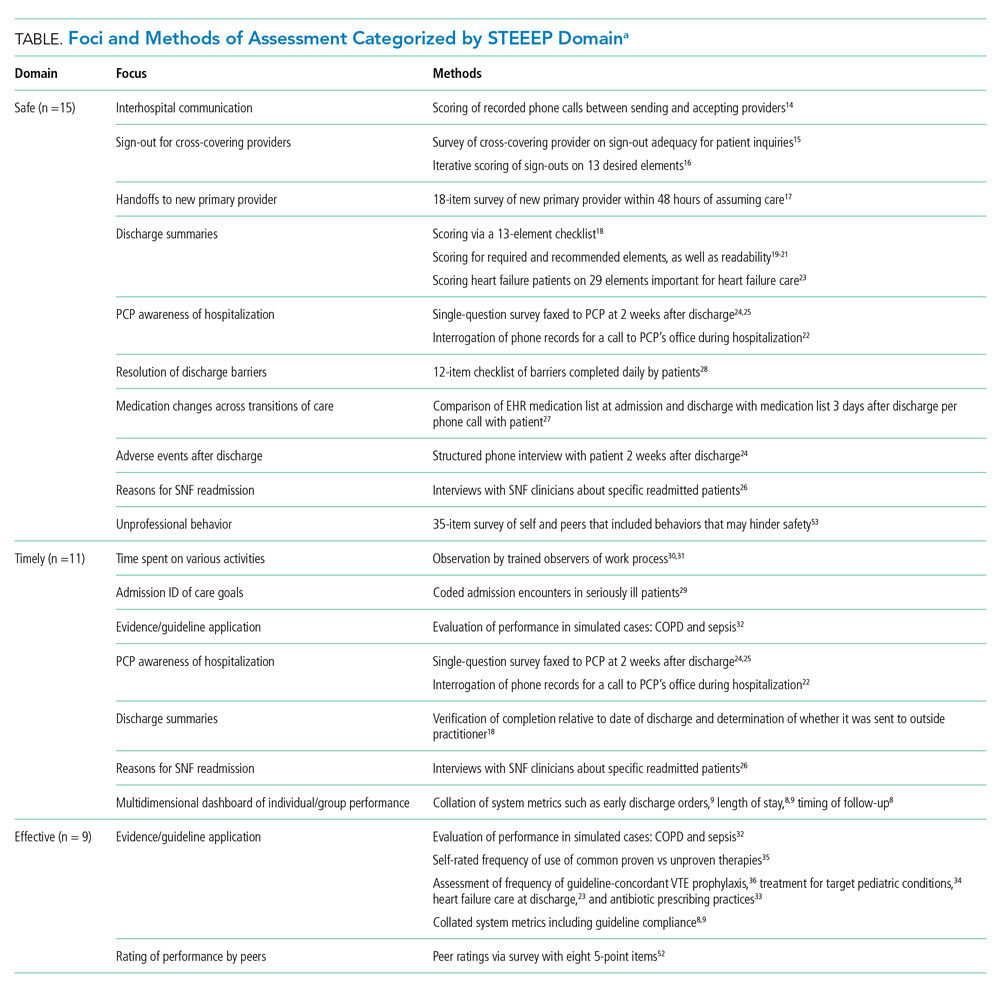

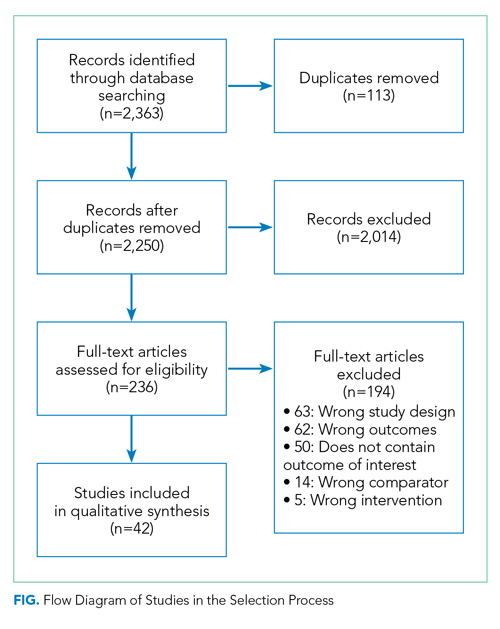

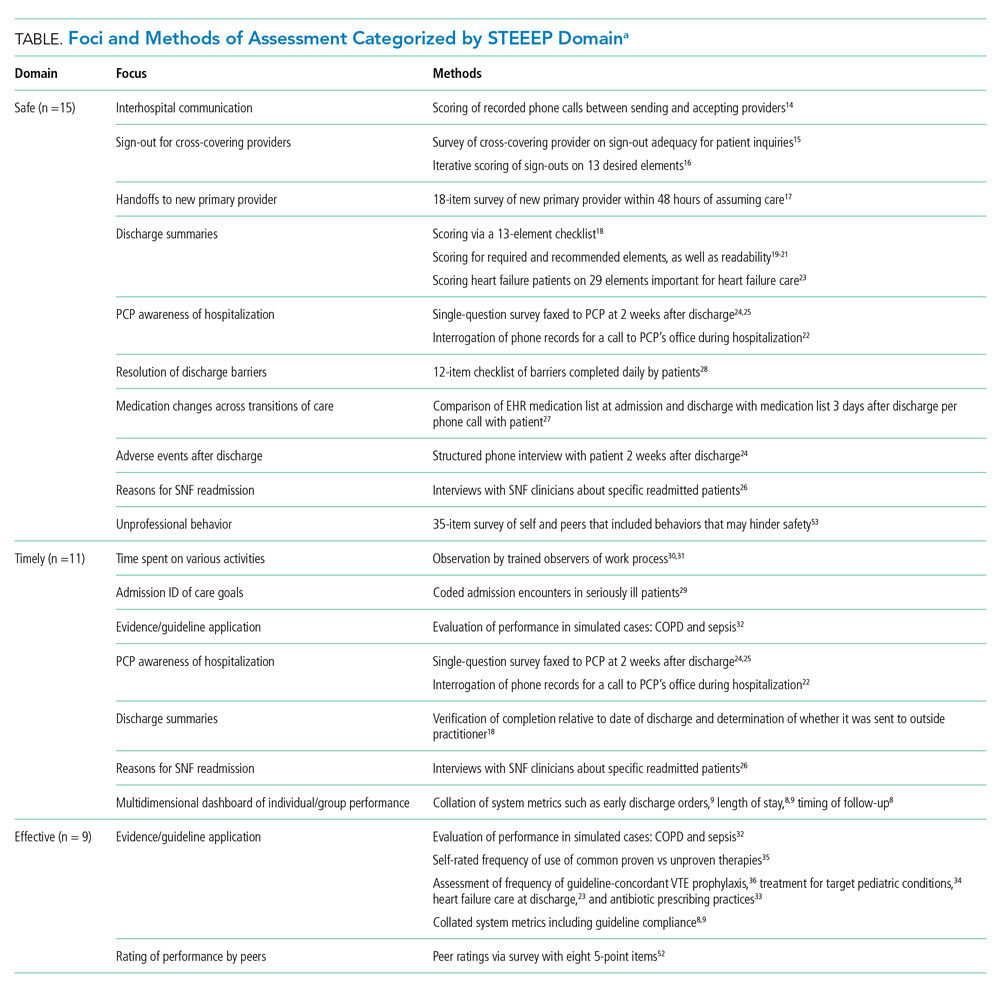

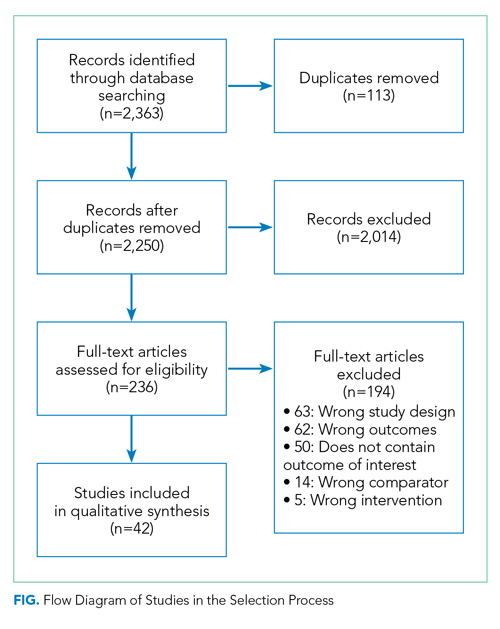

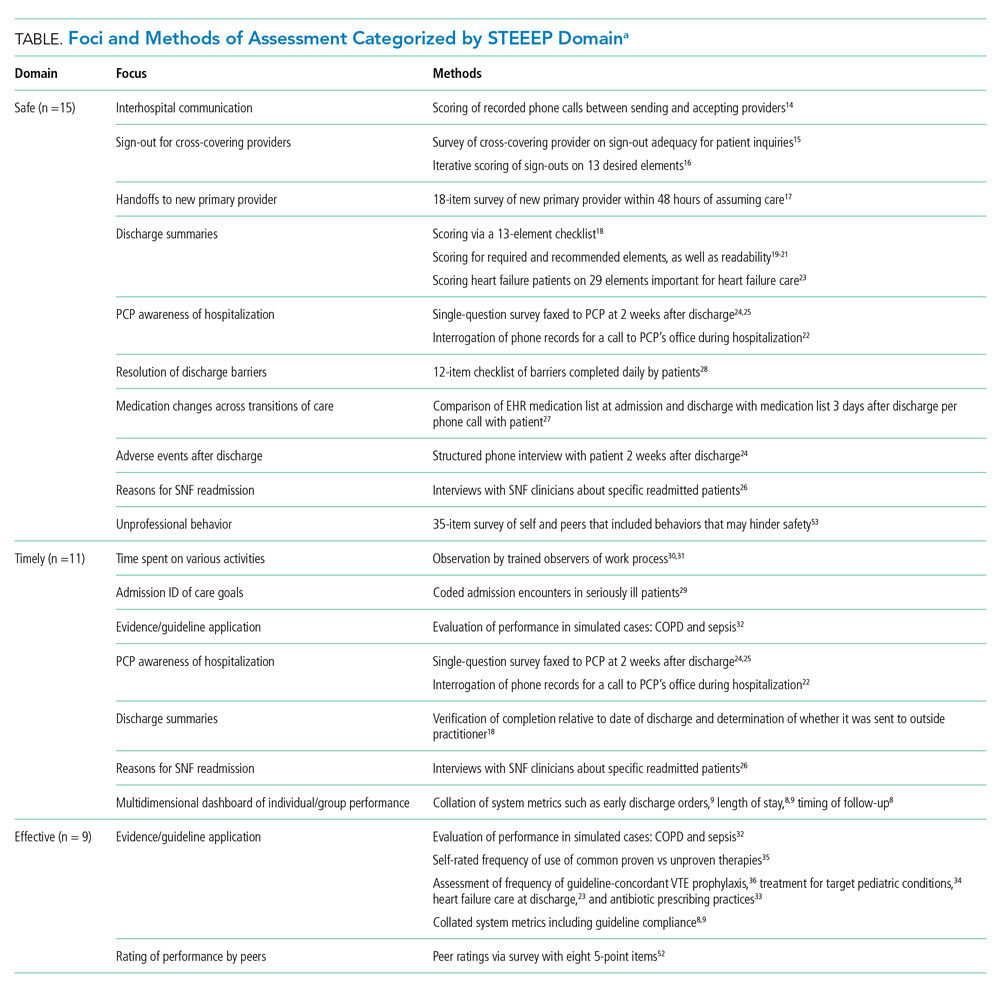

Results of the search strategy are shown in the Figure. The search retrieved a total of 2,363 references, of which 113 were duplicates, leaving 2,250 to be screened. After title and abstract screening and full-text review, 42 studies were included in the review and coded for alignment with the STEEEP framework. The Table displays the focus of assessment and methods of assessment within each STEEEP domain.

Eighteen studies were coded into a single domain, and the remaining 24 were coded into two or more domains. The Patient Centered domain included the most studies (n = 23), followed by the Safe domain (n = 15). The Timely, Effective, and Efficient domains included 11, 9, and 12 studies, respectively. No studies were coded into the Equitable domain.
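Because a study coded into more than one domain is counted once in each, the domain counts above sum to more than the 42 included studies. The arithmetic below is derived only from the totals reported in this paragraph, not from the per-study coding, and is offered as an illustrative reconciliation of those figures rather than as an additional result:

```latex
\begin{aligned}
\underbrace{23}_{\text{Patient Centered}} + \underbrace{15}_{\text{Safe}} + \underbrace{11}_{\text{Timely}} + \underbrace{9}_{\text{Effective}} + \underbrace{12}_{\text{Efficient}} + \underbrace{0}_{\text{Equitable}} &= 70 \ \text{domain codes} \\
42 - 18 &= 24 \ \text{multidomain studies} \\
70 - 18 &= 52 \ \text{codes among multidomain studies} \;\ge\; 2 \times 24 = 48 \\
52 / 24 &\approx 2.2 \ \text{domains per multidomain study}
\end{aligned}
```

The inequality in the third line is consistent with each of the 24 multidomain studies having been coded into at least two domains.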

Safe

Nearly all studies coded into the Safe domain focused on transitions of care. These included transfers into a hospital from other hospitals,14 transitions of care to cross-covering providers15,16 and new primary providers,17 and transitions out of the acute care setting.18-28 Measures related to hospital discharge assessed both processes18-22 and outcomes.23-27 Methods of assessment ranged from trained observers or scorers to surveys of individuals and colleagues about performance. Although a few approaches leveraged informatics,22,27 all relied on human interaction, and none were automated.

Timely

All studies coded into the Timely domain were also coded into at least one other domain. For example, Anderson et al examined how hospitalists communicated about potential life-limiting illness at the time of hospital admission and the subsequent effects on plans of care29; this study was coded as both Timely and Patient Centered. Likewise, studies centered on the application of evidence-based guidelines, such as giving antibiotics within a specified time interval for sepsis, were coded as both Timely and Effective. Other authors described dashboards or scorecards that captured a number of group-level process-of-care metrics spanning STEEEP domains that may be applicable to individuals, including Fox et al for pediatrics9 and Hwa et al for an adult academic hospitalist group.8 Methods of assessment varied widely across studies and included observations in the clinical environment,28,30,31 performance in simulations,32 and surveys about performance.22-26 A handful of approaches were more automated and made use of informatics8,9,22 or data collected for other health system purposes.8,9

Effective

Effectiveness was most often assessed through adherence to consensus and evidence-based guidelines. Examples included processes of care related to sepsis, venous thromboembolism prophylaxis, COPD, heart failure, pediatric asthma, and antibiotic appropriateness.8,9,23,32-36 During the review, multiple other studies that included group-level measures of effectiveness for a variety of health conditions were excluded because they did not report data on individual-level variation. Methods of assessment included expert review of cases or discharge summaries, compliance with core measures, performance in simulation, and self-assessment of practice behaviors. Other than efforts aligned with institutional data collection, most approaches were resource intensive.

Efficient

As with those in the Timely domain, most studies coded into the Efficient domain were also coded into at least one other domain. One exception measured unnecessary repeat daily laboratory testing; it showed provider-level variation and demonstrated improvement in quality after an intervention.37 Another paper, also coded into the Effective domain, evaluated adherence to components of the Choosing Wisely® recommendations.34 In addition to these two studies focused on cost-effective care, other studies coded to this domain assessed concepts such as ensuring more efficient care from other providers by optimizing transitions of care15-17 and clarifying patients’ goals for care.38 Although integrating insurer information into care plans is emphasized in the Core Competencies in Hospital Medicine,11 this concept was not represented in any of the identified articles. Methods of assessment varied and mostly relied on observation of behaviors or surveys of providers. Several approaches were more automated or used Medicare claims data to assess the efficiency of individual providers relative to their peers.34,37,39

Equitable

None of the reviewed studies were coded into the Equitable domain, even though care of vulnerable populations is identified as a core competency in hospital medicine.40

Patient Centered

Studies coded to the Patient Centered domain assessed hospitalist performance through ratings of patient satisfaction,8,9,41-44 ratings of communication between hospitalists and patients,19-21,29,45-51 identification of patient preferences,38,52 outcomes of patient-centered care activities,27,28 and peer ratings.53,54 Authors applied several theoretical constructs to these assessments, including shared decision-making,50 etiquette-based medicine,47,48 empathetic responsiveness,45 agreement about the goals of care between the patient and healthcare team members,52 and lapses in professionalism.53 Studies often crossed STEEEP domains; for example, those assessing the quality of discharge information provided to patients were coded as both Safe and Patient Centered.19-21 In addition to coded or observed performance in the clinical setting, studies in this domain also used patient ratings as a method of assessment.8,9,28,41-44,49,50 Only a few of these approaches aligned with existing health system performance measures and were more automated.8,9

DISCUSSION

This scoping review of performance data for individual hospitalists coded to the STEEEP framework identified robust areas in the published literature, as well as opportunities to develop new approaches or refine existing measures. Transitions of care, both intrahospital and at discharge, and adherence to evidence-based guidelines are areas for which current research has created a foundation for care that is Safe, Timely, Effective, and Efficient. The Patient Centered domain also has several measures described, though the conceptual underpinnings are heterogeneous, and consensus appears necessary to compare performance across groups. No studies were coded to the Equitable domain. Across domains, approaches to measurement varied in resource intensity from simple ones, like integrating existing data collected by hospitals, to more complex ones, like shadowing physicians or coding interactions.

Methods of assessment coded into the Safe domain focused on communication around transitions of care and, to a lesser extent, on patient outcomes. Transitions of care that were evaluated included transfer of patients into a new facility, sign-out to new physicians for both cross-cover responsibilities and newly assuming the role of primary attending, and discharge from the hospital. Most measures rated the quality of communication, although several23-27 examined patient outcomes. Approaches that survey individuals downstream of a transition of care15,17,24-26 may be the simplest and most feasible to implement in the future but, as described to date, do not cover all transitions of care and may miss patient outcomes. Important core competencies in hospital medicine related to the Safe domain that were not identified in this review include diagnostic error, hospital-acquired infections, error reporting, and medication safety.11 These are potential areas for future measure development.

The assessments in many studies were coded into more than one domain; for example, measures of the application of evidence-based guidelines were coded into the Effective, Timely, and Efficient domains, among others. Applying the six domains of the STEEEP framework revealed the multidimensional outcomes of hospitalist work and could guide more meaningful quality assessments of individual hospitalist performance. For example, adherence to evidence-based guidelines, the Core Competencies in Hospital Medicine, and the recommendations of the Choosing Wisely® campaign are promising areas for measurement and may align with existing hospital metrics. Notably, several reviewed studies measured group-level adherence to guidelines but were excluded because they did not examine variation at the individual level. Future measures based on evidence-based guidelines could center on the Effective domain while also integrating assessment of domains such as Efficient, Timely, and Patient Centered and, in so doing, provide a richer assessment of the diverse aspects of quality.

Several other approaches in the Timely, Effective, and Efficient domains were described in only a few studies but deserve consideration for further development. Two time-motion studies30,31 were coded into the Timely and Efficient domains; this approach would be cumbersome in routine practice but could become more feasible with advances in wearable technology and electronic health records. Another approach used Medicare payment data to detect provider-level variation.39 Other large datasets (“big data”) could potentially be analyzed in similar ways to compare the performance of individual hospitalists.

The lack of studies coded into the Equitable domain may seem surprising, but the Institute for Healthcare Improvement identifies Equitable as the “forgotten aim” of the STEEEP framework. This organization has developed a guide for health care organizations to promote equitable care.55 While this guide focuses mostly on organizational-level actions, some recommendations address individual providers, such as training in implicit bias. Future research should seek to identify disparities in care by individual providers and develop interventions to address any gaps that are found.

The “Patient Centered” domain was the most frequently coded and had the most heterogeneous conceptual underpinnings for assessment. Studies varied widely in terminology and conceptual foundations. The field would benefit from future work to conceptualize “Patient Centered” care more clearly, guided by studies that compare assessment approaches to identify the most valid and feasible ones.

The overarching goal of measuring individual hospitalist quality should be to improve the delivery of patient care in a supportive and formative way. To further this goal, adding or expanding on the metrics identified in this article may provide a more complete description of performance. As a future direction, groups should consider partnering with one another to define measurement approaches, draw on existing data sources, and even share deidentified individual data to establish performance benchmarks at the individual and group levels.

While this study used broad search terms to support completeness, the search process could have missed important studies. Grey literature, non–English language studies, and industry reports were not included in this review. Groups may also be using other assessments of individual hospitalist performance that are not published in the peer-reviewed literature. Coding of study assessments was achieved through consensus reconciliation; other coders might have classified studies differently.

CONCLUSION

This scoping review describes the peer-reviewed literature on individual hospitalist performance and is the first to link it to the STEEEP quality framework. Assessments of transitions of care, evidence-based care, and cost-effective care are exemplars in the published literature. Patient-centered care is well studied but assessed in a heterogeneous fashion. Assessments of equity in care are notably absent. The STEEEP framework provides a model to structure assessment of individual performance. Future research should build on this framework to define meaningful assessment approaches that are actionable and improve the welfare of our patients and our system.

Disclosures

The authors have nothing to disclose.

1. Quality of Care: A Process for Making Strategic Choices in Health Systems. World Health Organization; 2006.

2. Institute of Medicine (US) Committee on Quality of Health Care in America. Crossing the Quality Chasm: A New Health System for the 21st Century. National Academies Press; 2001. Accessed December 20, 2019. http://www.ncbi.nlm.nih.gov/books/NBK222274/

3. Wadhera RK, Joynt Maddox KE, Wasfy JH, Haneuse S, Shen C, Yeh RW. Association of the hospital readmissions reduction program with mortality among Medicare beneficiaries hospitalized for heart failure, acute myocardial infarction, and pneumonia. JAMA. 2018;320(24):2542-2552. https://doi.org/10.1001/jama.2018.19232

4. Kondo KK, Damberg CL, Mendelson A, et al. Implementation processes and pay for performance in healthcare: a systematic review. J Gen Intern Med. 2016;31(Suppl 1):61-69. https://doi.org/10.1007/s11606-015-3567-0

5. Fung CH, Lim Y-W, Mattke S, Damberg C, Shekelle PG. Systematic review: the evidence that publishing patient care performance data improves quality of care. Ann Intern Med. 2008;148(2):111-123. https://doi.org/10.7326/0003-4819-148-2-200801150-00006

6. Jha AK, Orav EJ, Epstein AM. Public reporting of discharge planning and rates of readmissions. N Engl J Med. 2009;361(27):2637-2645. https://doi.org/10.1056/NEJMsa0904859

7. Society of Hospital Medicine. State of Hospital Medicine Report; 2018. Accessed December 20, 2019. https://www.hospitalmedicine.org/practice-management/shms-state-of-hospital-medicine/

8. Hwa M, Sharpe BA, Wachter RM. Development and implementation of a balanced scorecard in an academic hospitalist group. J Hosp Med. 2013;8(3):148-153. https://doi.org/10.1002/jhm.2006

9. Fox LA, Walsh KE, Schainker EG. The creation of a pediatric hospital medicine dashboard: performance assessment for improvement. Hosp Pediatr. 2016;6(7):412-419. https://doi.org/10.1542/hpeds.2015-0222

10. Hain PD, Daru J, Robbins E, et al. A proposed dashboard for pediatric hospital medicine groups. Hosp Pediatr. 2012;2(2):59-68. https://doi.org/10.1542/hpeds.2012-0004

11. Nichani S, Crocker J, Fitterman N, Lukela M. Updating the core competencies in hospital medicine--2017 revision: introduction and methodology. J Hosp Med. 2017;12(4):283-287. https://doi.org/10.12788/jhm.2715

12. Arksey H, O’Malley L. Scoping studies: towards a methodological framework. Int J Soc Res Methodol. 2005;8:19-32. https://doi.org/10.1080/1364557032000119616

13. Tricco AC, Lillie E, Zarin W, et al. PRISMA extension for scoping reviews (PRISMA-ScR): checklist and explanation. Ann Intern Med. 2018;169(7):467-473. https://doi.org/10.7326/m18-0850

14. Borofsky JS, Bartsch JC, Howard AB, Repp AB. Quality of interhospital transfer communication practices and association with adverse events on an internal medicine hospitalist service. J Healthc Qual. 2017;39(3):177-185. https://doi.org/10.1097/01.JHQ.0000462682.32512.ad

15. Fogerty RL, Schoenfeld A, Salim Al-Damluji M, Horwitz LI. Effectiveness of written hospitalist sign-outs in answering overnight inquiries. J Hosp Med. 2013;8(11):609-614. https://doi.org/10.1002/jhm.2090

16. Miller DM, Schapira MM, Visotcky AM, et al. Changes in written sign-out composition across hospitalization. J Hosp Med. 2015;10(8):534-536. https://doi.org/10.1002/jhm.2390

17. Hinami K, Farnan JM, Meltzer DO, Arora VM. Understanding communication during hospitalist service changes: a mixed methods study. J Hosp Med. 2009;4(9):535-540. https://doi.org/10.1002/jhm.523

18. Horwitz LI, Jenq GY, Brewster UC, et al. Comprehensive quality of discharge summaries at an academic medical center. J Hosp Med. 2013;8(8):436-443. https://doi.org/10.1002/jhm.2021

19. Sarzynski E, Hashmi H, Subramanian J, et al. Opportunities to improve clinical summaries for patients at hospital discharge. BMJ Qual Saf. 2017;26(5):372-380. https://doi.org/10.1136/bmjqs-2015-005201

20. Unaka NI, Statile A, Haney J, Beck AF, Brady PW, Jerardi KE. Assessment of readability, understandability, and completeness of pediatric hospital medicine discharge instructions. J Hosp Med. 2017;12(2):98-101. https://doi.org/10.12788/jhm.2688

21. Unaka N, Statile A, Jerardi K, et al. Improving the readability of pediatric hospital medicine discharge instructions. J Hosp Med. 2017;12(7):551-557. https://doi.org/10.12788/jhm.2770

22. Zackoff MW, Graham C, Warrick D, et al. Increasing PCP and hospital medicine physician verbal communication during hospital admissions. Hosp Pediatr. 2018;8(4):220-226. https://doi.org/10.1542/hpeds.2017-0119

23. Salata BM, Sterling MR, Beecy AN, et al. Discharge processes and 30-day readmission rates of patients hospitalized for heart failure on general medicine and cardiology services. Am J Cardiol. 2018;121(9):1076-1080. https://doi.org/10.1016/j.amjcard.2018.01.027

24. Arora VM, Prochaska ML, Farnan JM, et al. Problems after discharge and understanding of communication with their primary care physicians among hospitalized seniors: a mixed methods study. J Hosp Med. 2010;5(7):385-391. https://doi.org/10.1002/jhm.668

25. Bell CM, Schnipper JL, Auerbach AD, et al. Association of communication between hospital-based physicians and primary care providers with patient outcomes. J Gen Intern Med. 2009;24(3):381-386. https://doi.org/10.1007/s11606-008-0882-8

26. Clark B, Baron K, Tynan-McKiernan K, Britton M, Minges K, Chaudhry S. Perspectives of clinicians at skilled nursing facilities on 30-day hospital readmissions: a qualitative study. J Hosp Med. 2017;12(8):632-638. https://doi.org/10.12788/jhm.2785

27. Harris CM, Sridharan A, Landis R, Howell E, Wright S. What happens to the medication regimens of older adults during and after an acute hospitalization? J Patient Saf. 2013;9(3):150-153. https://doi.org/10.1097/PTS.0b013e318286f87d

28. Harrison JD, Greysen RS, Jacolbia R, Nguyen A, Auerbach AD. Not ready, not set...discharge: patient-reported barriers to discharge readiness at an academic medical center. J Hosp Med. 2016;11(9):610-614. https://doi.org/10.1002/jhm.2591

29. Anderson WG, Kools S, Lyndon A. Dancing around death: hospitalist-patient communication about serious illness. Qual Health Res. 2013;23(1):3-13. https://doi.org/10.1177/1049732312461728

30. Tipping MD, Forth VE, Magill DB, Englert K, Williams MV. Systematic review of time studies evaluating physicians in the hospital setting. J Hosp Med. 2010;5(6):353-359. https://doi.org/10.1002/jhm.647

31. Tipping MD, Forth VE, O’Leary KJ, et al. Where did the day go?--a time-motion study of hospitalists. J Hosp Med. 2010;5(6):323-328. https://doi.org/10.1002/jhm.790

32. Bergmann S, Tran M, Robison K, et al. Standardising hospitalist practice in sepsis and COPD care. BMJ Qual Saf. 2019;28(10):800-808. https://doi.org/10.1136/bmjqs-2018-008829

33. Kisuule F, Wright S, Barreto J, Zenilman J. Improving antibiotic utilization among hospitalists: a pilot academic detailing project with a public health approach. J Hosp Med. 2008;3(1):64-70. https://doi.org/10.1002/jhm.278

34. Reyes M, Paulus E, Hronek C, et al. Choosing Wisely campaign: report card and achievable benchmarks of care for children’s hospitals. Hosp Pediatr. 2017;7(11):633-641. https://doi.org/10.1542/hpeds.2017-0029

35. Landrigan CP, Conway PH, Stucky ER, et al. Variation in pediatric hospitalists’ use of proven and unproven therapies: a study from the Pediatric Research in Inpatient Settings (PRIS) network. J Hosp Med. 2008;3(4):292-298. https://doi.org/10.1002/jhm.347

36. Michtalik HJ, Carolan HT, Haut ER, et al. Use of provider-level dashboards and pay-for-performance in venous thromboprophylaxis. J Hosp Med. 2015;10(3):172-178. https://doi.org/10.1002/jhm.2303

37. Johnson DP, Lind C, Parker SE, et al. Toward high-value care: a quality improvement initiative to reduce unnecessary repeat complete blood counts and basic metabolic panels on a pediatric hospitalist service. Hosp Pediatr. 2016;6(1):1-8. https://doi.org/10.1542/hpeds.2015-0099

38. Auerbach AD, Katz R, Pantilat SZ, et al. Factors associated with discussion of care plans and code status at the time of hospital admission: results from the Multicenter Hospitalist Study. J Hosp Med. 2008;3(6):437-445. https://doi.org/10.1002/jhm.369

39. Tsugawa Y, Jha AK, Newhouse JP, Zaslavsky AM, Jena AB. Variation in physician spending and association with patient outcomes. JAMA Intern Med. 2017;177(5):675-682. https://doi.org/10.1001/jamainternmed.2017.0059

40. Nichani S, Fitterman N, Lukela M, Crocker J. Equitable allocation of resources. 2017 hospital medicine revised core competencies. J Hosp Med. 2017;12(4):S62. https://doi.org/10.12788/jhm.3016

41. Blanden AR, Rohr RE. Cognitive interview techniques reveal specific behaviors and issues that could affect patient satisfaction relative to hospitalists. J Hosp Med. 2009;4(9):E1-E6. https://doi.org/10.1002/jhm.524

42. Torok H, Ghazarian SR, Kotwal S, Landis R, Wright S, Howell E. Development and validation of the tool to assess inpatient satisfaction with care from hospitalists. J Hosp Med. 2014;9(9):553-558. https://doi.org/10.1002/jhm.2220

43. Torok H, Kotwal S, Landis R, Ozumba U, Howell E, Wright S. Providing feedback on clinical performance to hospitalists: Experience using a new metric tool to assess inpatient satisfaction with care from hospitalists. J Contin Educ Health Prof. 2016;36(1):61-68. https://doi.org/10.1097/CEH.0000000000000060

44. Indovina K, Keniston A, Reid M, et al. Real-time patient experience surveys of hospitalized medical patients. J Hosp Med. 2016;11(4):251-256. https://doi.org/10.1002/jhm.2533

45. Weiss R, Vittinghoff E, Fang MC, et al. Associations of physician empathy with patient anxiety and ratings of communication in hospital admission encounters. J Hosp Med. 2017;12(10):805-810. https://doi.org/10.12788/jhm.2828

46. Apker J, Baker M, Shank S, Hatten K, VanSweden S. Optimizing hospitalist-patient communication: an observation study of medical encounter quality. Jt Comm J Qual Patient Saf. 2018;44(4):196-203. https://doi.org/10.1016/j.jcjq.2017.08.011

47. Kotwal S, Torok H, Khaliq W, Landis R, Howell E, Wright S. Comportment and communication patterns among hospitalist physicians: insight gleaned through observation. South Med J. 2015;108(8):496-501. https://doi.org/10.14423/SMJ.0000000000000328

48. Tackett S, Tad-y D, Rios R, Kisuule F, Wright S. Appraising the practice of etiquette-based medicine in the inpatient setting. J Gen Intern Med. 2013;28(7):908-913. https://doi.org/10.1007/s11606-012-2328-6

49. Ferranti DE, Makoul G, Forth VE, Rauworth J, Lee J, Williams MV. Assessing patient perceptions of hospitalist communication skills using the Communication Assessment Tool (CAT). J Hosp Med. 2010;5(9):522-527. https://doi.org/10.1002/jhm.787

50. Blankenburg R, Hilton JF, Yuan P, et al. Shared decision-making during inpatient rounds: opportunities for improvement in patient engagement and communication. J Hosp Med. 2018;13(7):453-461. https://doi.org/10.12788/jhm.2909

51. Chang D, Mann M, Sommer T, Fallar R, Weinberg A, Friedman E. Using standardized patients to assess hospitalist communication skills. J Hosp Med. 2017;12(7):562-566. https://doi.org/10.12788/jhm.2772

52. Figueroa JF, Schnipper JL, McNally K, Stade D, Lipsitz SR, Dalal AK. How often are hospitalized patients and providers on the same page with regard to the patient’s primary recovery goal for hospitalization? J Hosp Med. 2016;11(9):615-619. https://doi.org/10.1002/jhm.2569

53. Reddy ST, Iwaz JA, Didwania AK, et al. Participation in unprofessional behaviors among hospitalists: a multicenter study. J Hosp Med. 2012;7(7):543-550. https://doi.org/10.1002/jhm.1946

54. Bhogal HK, Howe E, Torok H, Knight AM, Howell E, Wright S. Peer assessment of professional performance by hospitalist physicians. South Med J. 2012;105(5):254-258. https://doi.org/10.1097/SMJ.0b013e318252d602

55. Wyatt R, Laderman M, Botwinick L, Mate K, Whittington J. Achieving health equity: a guide for health care organizations. IHI White Paper. Institute for Healthcare Improvement; 2016. https://www.ihi.org

Healthcare quality is defined as the extent to which healthcare services result in desired outcomes.1 Quality of care depends on how the healthcare system’s various components, including healthcare practitioners, interact to meet each patient’s needs.2 These components can be shaped to achieve desired outcomes through rules, incentives, and other approaches, but influencing the behaviors of each component, such as the performance of hospitalists, requires defining goals for performance and implementing measurement approaches to assess progress toward these goals.

One set of principles to define goals for quality and guide assessment of desired behaviors is the multidimensional STEEEP framework. This framework, created by the Institute of Medicine, identifies six domains of quality: Safe, Timely, Effective, Efficient, Equitable, and Patient Centered.2 Briefly, “Safe” means avoiding injuries to patients, “Timely” means reducing waits and delays in care, “Effective” means providing care based on evidence, “Efficient” means avoiding waste, “Equitable” means ensuring quality does not vary based on personal characteristics such as race and gender, and “Patient Centered” means providing care that is responsive to patients’ values and preferences. The STEEEP domains are not coequal; rather, they ensure that quality is considered broadly, while avoiding errors such as measuring only an intervention’s impact on effectiveness but not assessing its impact on multiple domains of quality, such as how patient centered, efficient (cost effective), or equitable the resulting care is.

Based on our review of the literature, a multidimensional framework like STEEEP has not been used in defining and assessing the quality of individual hospitalists’ performance. Some quality metrics at the hospital level impact several dimensions simultaneously, such as door to balloon time for acute myocardial infarction, which measures effectiveness and timeliness of care. Programs like pay-for-performance, Hospital Consumer Assessment of Healthcare Providers and Systems (HCAHPS), and the Merit-Based Incentive Payment System (MIPS) have tied reimbursement to assessments aligned with several STEEEP domains at both individual and institutional levels but lack a holistic approach to quality.3-6 The every-other-year State of Hospital Medicine Report, the most widely used description of individual hospitalist performance, reports group-level performance including relative value units and whether groups are accountable for measures of quality such as performance on core measures, timely documentation, and “citizenship” (eg, committee participation or academic work).7 While these are useful benchmarks, the report focuses on performance at the group level. Concurrently, several academic groups have described more complete dashboards or scorecards to assess individual hospitalist performance, primarily designed to facilitate comparison across hospitalist groups or to incentivize overall group performance.8-10 However, these efforts are not guided by an overarching framework and are structured after traditional academic models with components related to teaching and scholarship, which may not translate to nonacademic environments. Finally, the Core Competencies for Hospital Medicine outlines some goals for hospitalist performance but does not speak to specific measurement approaches.11

Overall, assessing individual hospitalist performance is hindered by lack of consensus on important concepts to measure, a limited number of valid measures, and challenges in data collection such as resource limitations and feasibility. Developing and refining measures grounded in the STEEEP framework may provide a more comprehensive assessment of hospitalist quality and identify approaches to improve overall health outcomes. Comparative data could help individual hospitalists improve performance; leaders of hospitalist groups could use this data to guide faculty development and advancement as they ensure quality care at the individual, group, and system levels.

To better inform quality measurement of individual hospitalists, we sought to identify existing publications on individual hospitalist quality. Our goal was to define the published literature about quality measurement at the individual hospitalist level, relate these publications to domains of quality defined by the STEEEP framework, and identify directions for assessment or further research that could affect the overall quality of care.

METHODS

We conducted a scoping review following methods outlined by Arksey and O’Malley12 and Tricco.13 The goal of a scoping review is to map the extent of research within a specific field. This methodology is well suited to characterizing the existing research related to the quality of hospitalist care at the individual level. A protocol for the scoping review was not registered.

Evidence Search

A systematic search for published, English-language literature on hospitalist care was conducted in Medline (Ovid; 1946 - June 4, 2019) on June 5, 2019. The search used a combination of keywords and controlled vocabulary for the concept of hospitalists or hospital medicine. The search strategy used in this review is described in the Appendix. In addition, a hand search of reference lists of articles was used to discover publications not identified in the database searches.

Study Selection

All references were uploaded to Covidence systematic review software (www.covidence.org; Covidence), and duplicates were removed. Four reviewers (A.D., B.C., L.H., R.Q.) conducted title and abstract, as well as full-text, review to identify studies that measured differences in the performance of hospitalists at the individual level. Any disagreements among reviewers were resolved by consensus. Articles included both adult and pediatric populations. Articles that focused on group-level outcomes could be included if nonpooled data at the individual level was also reported. Studies were excluded if they did not focus on individual quality of care indicators or were not published in English.

Data Charting and Synthesis

We extracted the following information using a standardized data collection form: author, title, year of publication, study design, intervention, and outcome measures. Original manuscripts were accessed as needed to supplement analysis. Critical appraisal of individual studies was not conducted in this review because the goal of this review was to analyze which quality indicators have been studied and how they were measured. Articles were then coded for their alignment to the STEEEP framework by two reviewers (AD and BC). After initial coding was conducted, the reviewers met to consolidate codes and resolve any disagreement by consensus. The results of the analysis were summarized in both text and tabular format with studies grouped by focus of assessment with each one’s methods of assessment listed.

RESULTS

Results of the search strategy are shown in the Figure. The search retrieved a total of 2,363 references of which 113 were duplicates, leaving 2,250 to be screened. After title and abstract and full-text screening, 42 studies were included in the review. The final 42 studies were coded for alignment with the STEEEP framework. The Table displays the focus of assessment and methods of assessment within each STEEEP domain.

Eighteen studies were coded into a single domain while the rest were coded into at least two domains. The domain Patient Centered was coded as having the most studies (n = 23), followed by the domain of Safe (n = 15). Timely, Effective, and Efficient domains had 11, 9, and 12 studies, respectively. No studies were coded into the domain of Equitable.

Safe

Nearly all studies coded into the Safe domain focused on transitions of care. These included transfers into a hospital from other hospitals,14 transitions of care to cross-covering providers15,16 and new primary providers,17 and transition out from the acute care setting.18-28 Measures of hospital discharge included measures of both processes18-22 and outcomes.23-27 Methods of assessment varied from use of trained observers or scorers to surveys of individuals and colleagues about performance. Though a few leveraged informatics,22,27 all approaches relied on human interaction, and none were automated.

Timely

All studies coded into the Timely domain were coded into at least one other domain. For example, Anderson et al looked at how hospitalists communicated about potential life-limiting illness at the time of hospital admission and the subsequent effects on plans of care29; this was coded as both Timely and Patient Centered. Likewise, another group of studies centered on application of evidence-based guidelines, such as giving antibiotics within a certain time interval for sepsis and were coded as both Timely and Effective. Another set of authors described dashboards or scorecards that captured a number of group-level metrics of processes of care that span STEEEP domains and may be applicable to individuals, including Fox et al for pediatrics8 and Hwa et al for an adult academic hospitalist group.9 Methods of assessment varied widely across studies and included observations in the clinical environment,28,30,31 performance in simulations,32 and surveys about performance.22-26 A handful of approaches were more automated and made use of informatics8,9,22 or data collected for other health system purposes.8,9

Effective

Effectiveness was most often assessed through adherence to consensus and evidence-based guidelines. Examples included processes of care related to sepsis, venous thromboembolism prophylaxis, COPD, heart failure, pediatric asthma, and antibiotic appropriateness.8,9,23,32-36 Through the review, multiple other studies that included group-level measures of effectiveness for a variety of health conditions were excluded because data on individual-level variation were not reported. Methods of assessment included expert review of cases or discharge summaries, compliance with core measures, performance in simulation, and self-assessment on practice behaviors. Other than those efforts aligned with institutional data collection, most approaches were resource intensive.

Efficient

As with those in the Timely domain, most studies coded into the Efficient domain were coded into at least one other domain. One exception measured unnecessary daily lab work and both showed provider-level variation and demonstrated improvement in quality based on an intervention.37 Another paper coded into the Effective domain evaluated adherence to components of the Choosing Wisely® recommendations.34 In addition to these two studies focusing on cost efficacy, other studies coded to this domain assessed concepts such as ensuring more efficient care from other providers by optimizing transitions of care15-17 and clarifying patients’ goals for care.38 Although integrating insurer information into care plans is emphasized in the Core Competencies of Hospital Medicine,11 this concept was not represented in any of the identified articles. Methods of assessment varied and mostly relied on observation of behaviors or survey of providers. Several approaches were more automated or used Medicare claims data to assess the efficiency of individual providers relative to peers.34,37,39

Equitable

Among the studies reviewed, none were coded into the Equitable domain despite care of vulnerable populations being identified as a core competency of hospital medicine.40

Patient Centered

Studies coded to the Patient Centered domain assessed hospitalist performance through ratings of patient satisfaction,8,9,41-44 rating of communication between hospitalists and patients,19-21,29,45-51 identification of patient preferences,38,52 outcomes of patient-centered care activities,27,28 and peer ratings.53,54 Authors applied several theoretical constructs to these assessments including shared decision-making,50 etiquette-based medicine,47,48 empathetic responsiveness,45 agreement about the goals of care between the patient and healthcare team members,52 and lapses in professionalism.53 Studies often crossed STEEEP domains, such as those assessing quality of discharge information provided to patients, which were coded as both Safe and Patient Centered.19-21 In addition to coded or observed performance in the clinical setting, studies in this domain also used patient ratings as a method of assessment.8,9,28,41-44,49,50 Only a few of these approaches aligned with existing performance measures of health systems and were more automated.8,9

DISCUSSION

This scoping review of performance data for individual hospitalists coded to the STEEEP framework identified robust areas in the published literature, as well as opportunities to develop new approaches or refine existing measures. Transitions of care, both intrahospital and at discharge, and adherence to evidence-based guidelines are areas for which current research has created a foundation for care that is Safe, Timely, Effective, and Efficient. The Patient Centered domain also has several measures described, though the conceptual underpinnings are heterogeneous, and consensus appears necessary to compare performance across groups. No studies were coded to the Equitable domain. Across domains, approaches to measurement varied in resource intensity from simple ones, like integrating existing data collected by hospitals, to more complex ones, like shadowing physicians or coding interactions.

Methods of assessment coded into the Safe domain focused on communication and, less so, patient outcomes around transitions of care. Transitions of care that were evaluated included transfer of patients into a new facility, sign-out to new physicians for both cross-cover responsibilities and for newly assuming the role of primary attending, and discharge from the hospital. Most measures rated the quality of communication, although several23-27 examined patient outcomes. Approaches that survey individuals downstream from a transition of care15,17,24-26 may be the simplest and most feasible approach to implement in the future but, as described to date, do not include all transitions of care and may miss patient outcomes. Important core competencies for hospital medicine under the Safe domain that were not identified in this review include areas such as diagnostic error, hospital-acquired infections, error reporting, and medication safety.11 These are potential areas for future measure development.

The assessments in many studies were coded across more than one domain; for example, measures of the application of evidence-based guidelines were coded into domains of Effective, Timely, Efficient, and others. Applying the six domains of the STEEEP framework revealed the multidimensional outcomes of hospitalist work and could guide more meaningful quality assessments of individual hospitalist performance. For example, assessing adherence to evidence-based guidelines, as well as consideration of the Core Competencies of Hospital Medicine and recommendations of the Choosing Wisely® campaign, are promising areas for measurement and may align with existing hospital metrics. Notably, several reviewed studies measured group-level adherence to guidelines but were excluded because they did not examine variation at the individual level. Future measures based on evidence-based guidelines could center on the Effective domain while also integrating assessment of domains such as Efficient, Timely, and Patient Centered and, in so doing, provide a richer assessment of the diverse aspects of quality.

Several other approaches in the Timely, Effective, and Efficient domains were each described in only a few studies but deserve consideration for further development. Two time-motion studies30,31 were coded into the Timely and Efficient domains; such studies would be cumbersome in routine practice but could become more feasible with advances in wearable technology and electronic health records. Another approach used Medicare payment data to detect provider-level variation.39 “Big data” could potentially be analyzed in other ways to compare the performance of individual hospitalists.

The lack of studies coded into the Equitable domain may seem surprising, but the Institute for Healthcare Improvement identifies Equitable as the “forgotten aim” of the STEEEP framework. This organization has developed a guide for healthcare organizations to promote equitable care.55 While this guide focuses mostly on organizational-level actions, some actions address individual providers, such as training in implicit bias. Future research should seek to identify disparities in the care delivered by individual providers and develop interventions to address any gaps found.

The “Patient Centered” domain was the most frequently coded and had the most heterogeneous underpinnings for assessment. Studies varied widely in terminology and conceptual foundations. The field would benefit from future work to clarify how “Patient Centered” care should be conceptualized, guided by comparative studies of different assessment approaches to identify those that are most valid and feasible.

The overarching goal for measuring individual hospitalist quality should be to improve the delivery of patient care in a supportive and formative way. To further this goal, adding or expanding on the metrics identified in this article may provide a more complete description of performance. As a future direction, groups should consider partnering with one another to define measurement approaches, draw on existing data sources, and even share deidentified individual data to establish performance benchmarks at the individual and group levels.

While this study used broad search terms to support completeness, the search process could have missed important studies. Grey literature, non–English language studies, and industry reports were not included in this review. Groups may also be using other assessments of individual hospitalist performance that are not published in the peer-reviewed literature. Coding of study assessments was achieved through consensus reconciliation; other coders might have classified studies differently.

CONCLUSION

This scoping review describes the peer-reviewed literature on assessments of individual hospitalist performance and is the first to link this literature to the STEEEP quality framework. Assessments of transitions of care, evidence-based care, and cost-effective care are exemplars in the published literature. Patient-centered care is well studied but assessed in a heterogeneous fashion. Assessments of equity in care are notably absent. The STEEEP framework provides a model to structure assessment of individual performance. Future research should build on this framework to define meaningful assessment approaches that are actionable and improve the welfare of our patients and our system.

Disclosures

The authors have nothing to disclose.

1. Quality of Care: A Process for Making Strategic Choices in Health Systems. World Health Organization; 2006.

2. Institute of Medicine (US) Committee on Quality of Health Care in America. Crossing the Quality Chasm: A New Health System for the 21st Century. National Academies Press; 2001. Accessed December 20, 2019. http://www.ncbi.nlm.nih.gov/books/NBK222274/

3. Wadhera RK, Joynt Maddox KE, Wasfy JH, Haneuse S, Shen C, Yeh RW. Association of the hospital readmissions reduction program with mortality among Medicare beneficiaries hospitalized for heart failure, acute myocardial infarction, and pneumonia. JAMA. 2018;320(24):2542-2552. https://doi.org/10.1001/jama.2018.19232

4. Kondo KK, Damberg CL, Mendelson A, et al. Implementation processes and pay for performance in healthcare: a systematic review. J Gen Intern Med. 2016;31(Suppl 1):61-69. https://doi.org/10.1007/s11606-015-3567-0

5. Fung CH, Lim Y-W, Mattke S, Damberg C, Shekelle PG. Systematic review: the evidence that publishing patient care performance data improves quality of care. Ann Intern Med. 2008;148(2):111-123. https://doi.org/10.7326/0003-4819-148-2-200801150-00006

6. Jha AK, Orav EJ, Epstein AM. Public reporting of discharge planning and rates of readmissions. N Engl J Med. 2009;361(27):2637-2645. https://doi.org/10.1056/NEJMsa0904859

7. Society of Hospital Medicine. State of Hospital Medicine Report; 2018. Accessed December 20, 2019. https://www.hospitalmedicine.org/practice-management/shms-state-of-hospital-medicine/

8. Hwa M, Sharpe BA, Wachter RM. Development and implementation of a balanced scorecard in an academic hospitalist group. J Hosp Med. 2013;8(3):148-153. https://doi.org/10.1002/jhm.2006

9. Fox LA, Walsh KE, Schainker EG. The creation of a pediatric hospital medicine dashboard: performance assessment for improvement. Hosp Pediatr. 2016;6(7):412-419. https://doi.org/10.1542/hpeds.2015-0222

10. Hain PD, Daru J, Robbins E, et al. A proposed dashboard for pediatric hospital medicine groups. Hosp Pediatr. 2012;2(2):59-68. https://doi.org/10.1542/hpeds.2012-0004

11. Nichani S, Crocker J, Fitterman N, Lukela M. Updating the core competencies in hospital medicine--2017 revision: introduction and methodology. J Hosp Med. 2017;12(4):283-287. https://doi.org/10.12788/jhm.2715

12. Arksey H, O’Malley L. Scoping studies: towards a methodological framework. Int J Soc Res Methodol. 2005;8:19-32. https://doi.org/10.1080/1364557032000119616

13. Tricco AC, Lillie E, Zarin W, et al. PRISMA extension for scoping reviews (PRISMA-ScR): checklist and explanation. Ann Intern Med. 2018;169(7):467-473. https://doi.org/10.7326/m18-0850

14. Borofsky JS, Bartsch JC, Howard AB, Repp AB. Quality of interhospital transfer communication practices and association with adverse events on an internal medicine hospitalist service. J Healthc Qual. 2017;39(3):177-185. https://doi.org/10.1097/01.JHQ.0000462682.32512.ad

15. Fogerty RL, Schoenfeld A, Salim Al-Damluji M, Horwitz LI. Effectiveness of written hospitalist sign-outs in answering overnight inquiries. J Hosp Med. 2013;8(11):609-614. https://doi.org/10.1002/jhm.2090

16. Miller DM, Schapira MM, Visotcky AM, et al. Changes in written sign-out composition across hospitalization. J Hosp Med. 2015;10(8):534-536. https://doi.org/10.1002/jhm.2390

17. Hinami K, Farnan JM, Meltzer DO, Arora VM. Understanding communication during hospitalist service changes: a mixed methods study. J Hosp Med. 2009;4(9):535-540. https://doi.org/10.1002/jhm.523

18. Horwitz LI, Jenq GY, Brewster UC, et al. Comprehensive quality of discharge summaries at an academic medical center. J Hosp Med. 2013;8(8):436-443. https://doi.org/10.1002/jhm.2021

19. Sarzynski E, Hashmi H, Subramanian J, et al. Opportunities to improve clinical summaries for patients at hospital discharge. BMJ Qual Saf. 2017;26(5):372-380. https://doi.org/10.1136/bmjqs-2015-005201

20. Unaka NI, Statile A, Haney J, Beck AF, Brady PW, Jerardi KE. Assessment of readability, understandability, and completeness of pediatric hospital medicine discharge instructions. J Hosp Med. 2017;12(2):98-101. https://doi.org/10.12788/jhm.2688

21. Unaka N, Statile A, Jerardi K, et al. Improving the readability of pediatric hospital medicine discharge instructions. J Hosp Med. 2017;12(7):551-557. https://doi.org/10.12788/jhm.2770

22. Zackoff MW, Graham C, Warrick D, et al. Increasing PCP and hospital medicine physician verbal communication during hospital admissions. Hosp Pediatr. 2018;8(4):220-226. https://doi.org/10.1542/hpeds.2017-0119

23. Salata BM, Sterling MR, Beecy AN, et al. Discharge processes and 30-day readmission rates of patients hospitalized for heart failure on general medicine and cardiology services. Am J Cardiol. 2018;121(9):1076-1080. https://doi.org/10.1016/j.amjcard.2018.01.027

24. Arora VM, Prochaska ML, Farnan JM, et al. Problems after discharge and understanding of communication with their primary care physicians among hospitalized seniors: a mixed methods study. J Hosp Med. 2010;5(7):385-391. https://doi.org/10.1002/jhm.668

25. Bell CM, Schnipper JL, Auerbach AD, et al. Association of communication between hospital-based physicians and primary care providers with patient outcomes. J Gen Intern Med. 2009;24(3):381-386. https://doi.org/10.1007/s11606-008-0882-8

26. Clark B, Baron K, Tynan-McKiernan K, Britton M, Minges K, Chaudhry S. Perspectives of clinicians at skilled nursing facilities on 30-day hospital readmissions: a qualitative study. J Hosp Med. 2017;12(8):632-638. https://doi.org/10.12788/jhm.2785

27. Harris CM, Sridharan A, Landis R, Howell E, Wright S. What happens to the medication regimens of older adults during and after an acute hospitalization? J Patient Saf. 2013;9(3):150-153. https://doi.org/10.1097/PTS.0b013e318286f87d

28. Harrison JD, Greysen RS, Jacolbia R, Nguyen A, Auerbach AD. Not ready, not set...discharge: patient-reported barriers to discharge readiness at an academic medical center. J Hosp Med. 2016;11(9):610-614. https://doi.org/10.1002/jhm.2591

29. Anderson WG, Kools S, Lyndon A. Dancing around death: hospitalist-patient communication about serious illness. Qual Health Res. 2013;23(1):3-13. https://doi.org/10.1177/1049732312461728

30. Tipping MD, Forth VE, Magill DB, Englert K, Williams MV. Systematic review of time studies evaluating physicians in the hospital setting. J Hosp Med. 2010;5(6):353-359. https://doi.org/10.1002/jhm.647

31. Tipping MD, Forth VE, O’Leary KJ, et al. Where did the day go?--a time-motion study of hospitalists. J Hosp Med. 2010;5(6):323-328. https://doi.org/10.1002/jhm.790

32. Bergmann S, Tran M, Robison K, et al. Standardising hospitalist practice in sepsis and COPD care. BMJ Qual Saf. 2019;28(10):800-808. https://doi.org/10.1136/bmjqs-2018-008829

33. Kisuule F, Wright S, Barreto J, Zenilman J. Improving antibiotic utilization among hospitalists: a pilot academic detailing project with a public health approach. J Hosp Med. 2008;3(1):64-70. https://doi.org/10.1002/jhm.278

34. Reyes M, Paulus E, Hronek C, et al. Choosing Wisely campaign: report card and achievable benchmarks of care for children’s hospitals. Hosp Pediatr. 2017;7(11):633-641. https://doi.org/10.1542/hpeds.2017-0029

35. Landrigan CP, Conway PH, Stucky ER, et al. Variation in pediatric hospitalists’ use of proven and unproven therapies: a study from the Pediatric Research in Inpatient Settings (PRIS) network. J Hosp Med. 2008;3(4):292-298. https://doi.org/10.1002/jhm.347

36. Michtalik HJ, Carolan HT, Haut ER, et al. Use of provider-level dashboards and pay-for-performance in venous thromboprophylaxis. J Hosp Med. 2015;10(3):172-178. https://doi.org/10.1002/jhm.2303

37. Johnson DP, Lind C, Parker SE, et al. Toward high-value care: a quality improvement initiative to reduce unnecessary repeat complete blood counts and basic metabolic panels on a pediatric hospitalist service. Hosp Pediatr. 2016;6(1):1-8. https://doi.org/10.1542/hpeds.2015-0099

38. Auerbach AD, Katz R, Pantilat SZ, et al. Factors associated with discussion of care plans and code status at the time of hospital admission: results from the Multicenter Hospitalist Study. J Hosp Med. 2008;3(6):437-445. https://doi.org/10.1002/jhm.369

39. Tsugawa Y, Jha AK, Newhouse JP, Zaslavsky AM, Jena AB. Variation in physician spending and association with patient outcomes. JAMA Intern Med. 2017;177(5):675-682. https://doi.org/10.1001/jamainternmed.2017.0059

40. Nichani S, Fitterman N, Lukela M, Crocker J. Equitable allocation of resources. 2017 hospital medicine revised core competencies. J Hosp Med. 2017;12(4):S62. https://doi.org/10.12788/jhm.3016

41. Blanden AR, Rohr RE. Cognitive interview techniques reveal specific behaviors and issues that could affect patient satisfaction relative to hospitalists. J Hosp Med. 2009;4(9):E1-E6. https://doi.org/10.1002/jhm.524

42. Torok H, Ghazarian SR, Kotwal S, Landis R, Wright S, Howell E. Development and validation of the tool to assess inpatient satisfaction with care from hospitalists. J Hosp Med. 2014;9(9):553-558. https://doi.org/10.1002/jhm.2220

43. Torok H, Kotwal S, Landis R, Ozumba U, Howell E, Wright S. Providing feedback on clinical performance to hospitalists: experience using a new metric tool to assess inpatient satisfaction with care from hospitalists. J Contin Educ Health Prof. 2016;36(1):61-68. https://doi.org/10.1097/CEH.0000000000000060

44. Indovina K, Keniston A, Reid M, et al. Real-time patient experience surveys of hospitalized medical patients. J Hosp Med. 2016;11(4):251-256. https://doi.org/10.1002/jhm.2533