Infections, antibiotics more common in type 2 diabetes

People with type 2 diabetes are up to 55% more likely to experience hospital-treated infections and up to 30% more likely to receive an antibiotic prescription in the community setting, compared with the general population, but these associations moderated over an 8-year period – a phenomenon that could be at least partly related to better treatment of diabetes and an overall improvement in mean blood glucose levels, according to results of a large population-based study.

“These findings may be driven by earlier detection and treatment of milder type 2 diabetes cases over time,” or by improved therapy of hyperglycemia and other risk factors, wrote Anil Mor, MD, of Aarhus University Hospital, Denmark, and his associates (Clin Infect Dis. 2016 June 26. doi: 10.1093/cid/ciw345).

The study ran from 2004 to 2012 and used data from the Danish National Patient Registry. It tracked community- and hospital-treated infections in 155,158 patients with type 2 diabetes and 774,017 controls. Patients with diabetes were more likely than controls to have serious medical comorbidities (29% vs. 21%). These included myocardial infarction (5% vs. 3%), heart failure (4% vs. 2%), cerebrovascular diseases (7% vs. 5%), peripheral vascular diseases (4% vs. 2%), and chronic pulmonary disease (6% vs. 2%).

Over the study period, 62% of the diabetes patients received an antibiotic, compared with 55% of the controls – a 24% increased relative risk in a model that adjusted for factors such as alcohol use, Charlson comorbidity index, and cardiovascular and renal comorbidities. Cephalosporins were the most commonly prescribed drugs, followed by antimycobacterial agents, quinolones, and antibiotics commonly used for urinary tract and Staphylococcus aureus infections.

Hospital-treated infections were significantly more common among patients with diabetes, with 19% having at least one such infection compared with 13% of controls (RR 1.55). On a larger scale, at a median follow-up of 2.8 years, the rate of hospital-treated infection among diabetes patients was 58 per 1,000 person-years vs. 39 per 1,000 person-years among controls – a relative risk of 1.49.
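The ratio of the two incidence rates quoted above can be checked with a few lines of arithmetic. The following is a minimal sketch: the function name `rate_ratio` is hypothetical, and the calculation reproduces only the unadjusted ratio of the two quoted rates, not the study's statistical model.

```python
def rate_ratio(exposed_rate: float, reference_rate: float) -> float:
    """Return the ratio of two incidence rates expressed in the same units."""
    if reference_rate <= 0:
        raise ValueError("reference rate must be positive")
    return exposed_rate / reference_rate


# Rates per 1,000 person-years, as quoted in the article.
diabetes_rate = 58.0
control_rate = 39.0

crude_ratio = rate_ratio(diabetes_rate, control_rate)
print(f"Crude rate ratio: {crude_ratio:.2f}")  # prints "Crude rate ratio: 1.49"
```

To two decimal places, the crude ratio agrees with the 1.49 reported in the article.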

Patients with diabetes were at highest risk for emphysematous cholecystitis (adjusted rate ratio 1.74), followed by abscesses, tuberculosis, and meningococcal infections. Pneumonia was approximately 30% more likely among patients with diabetes than among controls.

The risk of a hospital-treated infection was highest among younger patients aged 40-50 years (RR 1.77) and lowest among those older than 80 years (RR 1.29). It was also higher among those with higher comorbidity scores. Statin use appeared to attenuate some of the risk, the authors noted. The authors did not discuss the possible cause of this association.

The annual relative risk of a hospital-treated infection among patients with diabetes, compared with controls, declined from a high of 1.89 in 2004 to 1.59 in 2011. The relative risk of receiving a community-based antibiotic prescription declined as well, from 1.31 in 2004 to 1.26 in 2011.

These changes were not seen in the control group, suggesting that patients with diabetes were experiencing a unique improvement in infections. Earlier detection and treatment of type 2 diabetes and better comorbidity management could be explanations, the investigators speculated.

The study was sponsored by the Danish Centre for Strategic Research in Type 2 Diabetes and the Program for Clinical Research Infrastructure established by the Lundbeck Foundation and the Novo Nordisk Foundation. Several of the coauthors reported financial ties with various pharmaceutical companies.

On Twitter @Alz_Gal

FROM CLINICAL INFECTIOUS DISEASES

Key clinical point: Both hospital-treated infections and community antibiotic prescriptions are more common among people with type 2 diabetes.

Major finding: Hospital-treated infections were 55% more likely; community-dispensed antibiotics, 30% more common.

Data source: An observational study comprising almost 900,000 people in Denmark.

Disclosures: The study was sponsored by the Danish Centre for Strategic Research in Type 2 Diabetes and the Program for Clinical Research Infrastructure established by the Lundbeck Foundation and the Novo Nordisk Foundation. Several coauthors reported financial ties with various pharmaceutical companies.

Experts emphasize scrupulous procedures for duodenoscopes, ERCP

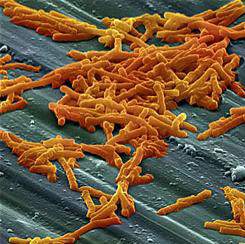

Contaminated duodenoscopes have caused multiple outbreaks of multidrug-resistant infections with sometimes lethal consequences; until these instruments become easier to clean, personnel must strictly follow recommendations for sterilization, surveillance, and unit-by-unit quality control, according to an extensive commentary accompanying an American Gastroenterological Association Clinical Practice Update.

“Patients and physicians want and expect no transmission of infections by any medical instrument,” wrote Bret Petersen, M.D., of the Mayo Clinic, Rochester, Minn., Johannes Koch, M.D., of Virginia Mason Medical Center, Seattle, and Gregory Ginsberg, M.D., of the University of Pennsylvania, Philadelphia. “It is the collective responsibility of endoscope manufacturers, health systems, and providers to ensure endoscope reprocessing is mistake proof, establishing systems to identify and eliminate the risk of infection for patients undergoing flexible endoscopy.”

More than 650,000 endoscopic retrograde cholangiopancreatographies (ERCPs) occur in the United States annually, and “even the lowest reported defect rate of 0.7% will expose 4,500 patients to a preventable risk,” the experts noted. Carbapenem-resistant Enterobacteriaceae (CRE) are becoming more prevalent and have been transmitted during ERCP, even when personnel seem to have followed sterilization protocols to the letter. Clinical CRE infections have a fatality rate of at least 50%, months may elapse between exposure and symptom onset, and infections may involve distant organs. These factors, along with the phenomenon of “silent carriers,” have linked duodenoscopes to at least 250 multidrug-resistant infections and at least 20 fatalities worldwide, the experts wrote (Gastroenterology 2016 May 27. doi: 10.1053/j.gastro.2016.05.040).
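The exposure figure in the quote above follows from multiplying the annual procedure count by the defect rate. A minimal sketch of that arithmetic, using only the two numbers quoted in the article (the variable names are illustrative):

```python
# Figures quoted in the article; 650,000 is stated as a lower bound ("more than").
annual_ercps = 650_000
defect_rate = 0.007  # the "lowest reported defect rate" of 0.7%

# Expected number of patients exposed per year at that defect rate.
exposed_per_year = annual_ercps * defect_rate
print(f"Patients exposed per year: {exposed_per_year:,.0f}")  # prints "Patients exposed per year: 4,550"
```

The result, about 4,550, is consistent with the rounded figure of 4,500 patients cited by the experts.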

Current duodenoscopes can be tough to sterilize. Between 1 billion and 1 trillion organisms typically cover a used instrument. Bedside cleaning cuts this number about 1,000-fold, and manual washing kills about another million organisms, leaving up to 1 billion bugs to be killed by high-level disinfection. That’s “a tall order” that can strain space, time, and staffing resources, especially given the fact that duodenoscopes have “tight crevices and mechanical joints that are exposed repeatedly to highly infectious bioburden,” the experts wrote. Furthermore, slips in processing enable the formation of biofilms that resist both cleaning and high-level disinfection.

The key to stopping duodenoscopes from transmitting dangerous pathogens is manual cleaning, including wiping the outside of the duodenoscope, flushing its channels, and brushing the elevator lever “immediately after use and before the surfaces have become dried,” the experts stressed. Disinfectants should be used at the right concentration and temperature, and for the intended amount of time. Biofilms form on moist surfaces only, so channels should be flushed with alcohol (a desiccant), dried with forced air, and stored in a dry environment.

But recent outbreaks spurred the Food and Drug Administration to recommend further steps. These include better oversight and training of reprocessing staff and closer attention to precleaning, manual cleaning, and manufacturer recommendations for use, including determining whether the company used its own “proprietary” cleaning brushes in its validation studies, the experts noted. “Optional supplemental measures” include surveillance cultures of duodenoscopes, ethylene oxide sterilization, and double reprocessing, in which each scope undergoes two cycles of manual cleaning and high-level disinfection between patients. Double reprocessing might be the simplest and most easily adopted of these measures, the experts said. The AGA, for its part, recommends active surveillance of patients who undergo ERCP, surveillance cultures of scopes, and recording of the serial number of every scope used in every procedure.

Surveillance culture makes sense, but can be costly and hard to conduct and interpret because sampling detects vast numbers of nonpathogenic organisms in addition to any pathogens, the experts noted. The Centers for Disease Control and Prevention recommends that each institution follow its own complex outbreak sampling protocol and quarantine duodenoscopes for 2-3 days, pending negative results. That may mean buying more duodenoscopes. A less costly option is to culture a subset of scopes at the end of every workweek, the experts said. Real-time tests that reliably reflect bacterial culture results remain “elusive,” but testing for adenosine triphosphate after manual washing is easiest and best studied, they added.

Clearly, industry is responsible for making endoscopes that can be reliably disinfected. “Recent submissions by all three manufacturers (Olympus, Pentax, and Fujinon) have validated current reprocessing outcomes in test environments, and the FDA has ruled that postmarket studies of reprocessing in clinical settings are expected, but these results will not be forthcoming for several years,” the experts wrote. Redesigning duodenoscopes may be “the ultimate solution,” but in the meantime, endoscopists should carefully review indications for ERCP and ensure thorough informed consent. Doing so “will uphold the trust that we must achieve and maintain with our patients,” the authors said.

They had no funding sources. Dr. Koch has consulted for Sedasys, and Dr. Ginsberg has consulted for Olympus.

FROM GASTROENTEROLOGY

New antibiotics targeting MDR pathogens are expensive, but not impressive

The U.S. Food and Drug Administration has approved a number of new antibiotics targeting multidrug-resistant bacteria in the past 5 years, but the new drugs have not led to a substantial improvement in patient outcomes when compared with existing antibiotics, according to a recent analysis in the Annals of Internal Medicine.

The eight new antibiotics approved by the FDA between January 2010 and December 2015 were ceftaroline, fidaxomicin, bedaquiline, dalbavancin, tedizolid, oritavancin, ceftolozane/tazobactam, and ceftazidime/avibactam. Of those eight drugs, only three showed in vitro activity against the so-called ESKAPE pathogens (Enterococcus faecium, Staphylococcus aureus, Klebsiella pneumoniae, Acinetobacter baumannii, Pseudomonas aeruginosa, and Enterobacter species). Only one drug, fidaxomicin, demonstrated in vitro activity against Clostridium difficile, which the Centers for Disease Control and Prevention classifies as an urgent-threat pathogen. Bedaquiline was the only new antibiotic specifically indicated for a disease caused by a multidrug-resistant pathogen, although the investigators said most of the drugs demonstrated in vitro activity against gram-positive drug-resistant pathogens.

Importantly, the authors noted that in vitro activity does not necessarily reflect benefits on actual patient clinical outcomes, as exemplified by such drugs as tigecycline and doripenem.

The researchers found what they called “important deficiencies in the clinical trials leading to approval of these new antibiotic products.” Most pivotal trial designs were primarily noninferiority trials, and the antibiotics were not studied to evaluate whether they have substantial benefits in efficacy over what is currently available, they noted. Additionally, none of the trials evaluated direct patient outcomes as primary end points, and some drugs did not have confirmatory evidence from a second independent trial or did not have any confirmatory trials.

Researchers also examined the prices of a course of treatment with the new antibiotics. The prices ranged from $1,195 to $4,183 (4-14 days of ceftolozane/tazobactam for acute pyelonephritis and intra-abdominal infections) to $69,702 (24 weeks of bedaquiline) – quite a premium for antibiotics showing unclear evidence of additional benefit.
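Because the quoted prices cover very different treatment durations, a per-day figure can make them easier to compare. The sketch below is illustrative only: it assumes each quoted price covers the full duration given in parentheses and that cost is spread evenly across days, neither of which the article states. The helper name `price_per_day` is hypothetical.

```python
def price_per_day(total_price: float, days: int) -> float:
    """Average daily price, assuming the total covers the whole duration evenly."""
    if days <= 0:
        raise ValueError("days must be positive")
    return total_price / days


# Prices and durations as quoted in the article.
short_course = price_per_day(1_195, 4)       # ceftolozane/tazobactam, 4 days
long_course = price_per_day(4_183, 14)       # ceftolozane/tazobactam, 14 days
bedaquiline = price_per_day(69_702, 24 * 7)  # bedaquiline, 24 weeks

for label, value in [
    ("ceftolozane/tazobactam, 4 days", short_course),
    ("ceftolozane/tazobactam, 14 days", long_course),
    ("bedaquiline, 24 weeks", bedaquiline),
]:
    print(f"{label}: ${value:,.2f} per day")
```

Under these assumptions, both ceftolozane/tazobactam courses work out to roughly $299 per day and bedaquiline to roughly $415 per day.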

“As antibiotic innovation continues to move forward, greater attention needs to be paid to incentives for developing high-quality new products with demonstrated superiority to existing products on outcomes in patients with multidrug-resistant disease, replacing the current focus on quantity and presumed future benefits,” researchers concluded.

Read the full study in the Annals of Internal Medicine (doi: 10.7326/M16-0291).

FROM ANNALS OF INTERNAL MEDICINE

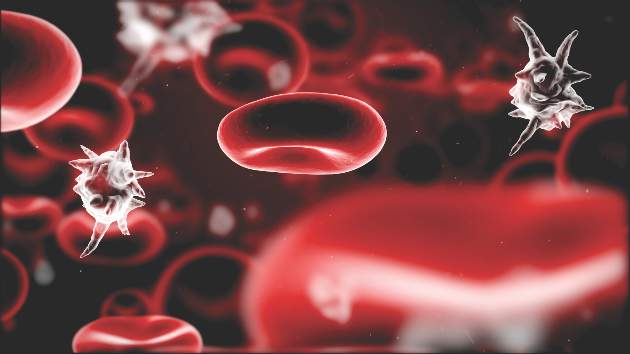

No evidence to show Alzheimer’s or Parkinson’s transmission via blood transfusion

A retrospective study of nearly 1.5 million patients over the course of 44 years showed no evidence to support the claim that neurodegenerative disorders can be transmitted between individuals through blood transfusions.

“Given that the neurodegenerative diseases on the dementia spectrum are relatively common, the misfolded proteins in affected persons have a wide tissue distribution, and the diseases may have a protracted prediagnostic course, potential transmission through transfusion could have important public health implications,” explained study authors led by Gustaf Edgren, MD, PhD, of the Karolinska Institute in Stockholm.

The retrospective cohort study analyzed data on 1,465,845 patients listed in nationwide transfusion registers, all of whom had undergone transfusions between 1968 and 2012 in Sweden, or during 1981-2012 in Denmark. The investigators looked for transfusions in which the donor had a diagnosis – either before or after the transfusion – of Alzheimer’s disease, Parkinson’s disease, or amyotrophic lateral sclerosis, and compared them with transfusions involving two healthy control groups. Creutzfeldt-Jakob disease was not included in the analysis (Ann Intern Med. 2016 Jun 28. doi: 10.7326/M15-2421).

All transfusion recipients were followed from 180 days after the transfusion date to minimize the risk of including patients whose neurodegenerative disorder was present but not yet registered at the time of the transfusion. Follow-up lasted until the first diagnosis of a neurodegenerative disorder, death, emigration, or the end of the study period on Dec. 31, 2012.

If a donor had received a diagnosis of a neurodegenerative disorder within 20 years of the transfusion, all recipients from said donor were considered to be exposed. “The maximum latency of 20 years was used as a compromise between allowing a long preclinical phase – during which donors may still be infectious – and avoiding classifying clearly healthy donors as potentially infectious,” the authors wrote.
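The exposure and follow-up rules described above can be illustrated with a minimal Python sketch. It is not the investigators' code: the function names are hypothetical, the 20-year window is approximated as 7,305 days, and "within 20 years of the transfusion" is assumed to cover donor diagnoses made either before or after the transfusion date.

```python
from datetime import date, timedelta
from typing import Optional

# Assumption: 20 years approximated as 20 * 365.25 days (7,305 days).
MAX_LATENCY = timedelta(days=round(20 * 365.25))
# Follow-up begins 180 days after the transfusion, as described in the article.
FOLLOW_UP_DELAY = timedelta(days=180)


def is_exposed(transfusion_date: date, donor_diagnosis_date: Optional[date]) -> bool:
    """Return True if the donor was diagnosed within 20 years of the transfusion.

    Assumption: the window applies on either side of the transfusion date.
    A donor with no recorded diagnosis never makes the recipient exposed.
    """
    if donor_diagnosis_date is None:
        return False
    return abs(donor_diagnosis_date - transfusion_date) <= MAX_LATENCY


def follow_up_start(transfusion_date: date) -> date:
    """Return the date on which follow-up of a recipient begins."""
    return transfusion_date + FOLLOW_UP_DELAY
```

For example, a recipient transfused on Jan. 1, 2000, from a donor diagnosed in mid-2015 would count as exposed under these assumptions, with follow-up starting on June 29, 2000.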

Only 42,254 (2.9%) of subjects were deemed to be exposed to a neurodegenerative disorder based on their blood transfusion donor. However, there was no evidence of any increased risk of dementia of any type from exposure to blood from affected donors in any of the recipients over the course of the follow-up period (hazard ratio, 1.04; 95% confidence interval, 0.99-1.09), and similar results were obtained individually for Alzheimer’s disease and Parkinson’s disease. As a control measure, the investigators also tested for hepatitis C transmission before and after transfusions, and were “as expected, readily able to detect transmission of viral hepatitis,” despite finding no traces of neurodegenerative conditions.

“Despite accumulating scientific support for horizontal transmissibility of a range of disorders in the neurodegenerative spectrum through various methods of inoculation in animal models and of variant Creutzfeldt-Jakob disease from human and animal model data, this study provides evidence against blood transfusion as an important route of transmission of neurodegenerative diseases between humans,” the authors concluded.

The authors also added that “given that the study was based on the entire computerized blood banking history in two countries with several decades of follow-up, more comprehensive data are unlikely to be available in the foreseeable future.”

Funding for the study was provided by the Swedish Research Council, the Swedish Heart-Lung Foundation, the Swedish Society for Medical Research, and the Danish Council for Independent Research. One coauthor reported receiving a grant from the Danish Council for Independent Research, and another reported receiving grants from the Swedish Research Council and the Swedish Heart-Lung Foundation during the conduct of the study. No other authors reported any relevant financial disclosures.

FROM ANNALS OF INTERNAL MEDICINE

Key clinical point: There are no data to support the notion that human transmission of Alzheimer’s or Parkinson’s diseases can occur via blood transfusions.

Major finding: There was no evidence of any increased risk of dementia of any type from exposure to blood from affected donors in any of the recipients over the course of the follow-up period (HR, 1.04; 95% CI, 0.99-1.09).

Data source: Retrospective cohort study of 1,465,845 transfusion patients in Sweden and Denmark during 1968-2012.

Disclosures: The study was funded by the Swedish Research Council, the Swedish Heart-Lung Foundation, the Swedish Society for Medical Research, and the Danish Council for Independent Research. Two coauthors disclosed receiving grants from the funding institutions.

Seasonal variation not seen in C. difficile rates

BOSTON – No winter spike in Clostridium difficile infection (CDI) rates was seen among hospitalized patients after testing methodologies and frequency were accounted for, according to a large multinational study.

A total of 180 hospitals in five European countries had wide variation in CDI testing methods and testing density. However, among the hospitals that used a currently recommended toxin-detecting testing algorithm, there was no significant seasonal variation in cases, defined as mean cases per 10,000 patient bed-days per hospital per month (C/PBDs/H/M). The hospitals using toxin-detecting algorithms had summer C/PBDs/H/M rates of 9.6, compared to 8.0 in winter months (P = .27).

These results, presented at the annual meeting of the American Society for Microbiology by Kerrie Davies, clinical scientist at the University of Leeds (England), stand in contrast to some other studies that have shown a wintertime peak in CDI incidence. The data presented help in “understanding the context in which published reported rate data have been generated,” said Ms. Davies, enabling a better understanding both of how samples are tested, and who gets tested.

The study enrolled 180 hospitals – 38 each in France and Italy, 37 each in Germany and the United Kingdom, and 30 in Spain. Institutions reported patient demographics, as well as CDI testing data and patient bed-days for CDI cases, for 1 year.

Current European and U.K. CDI testing algorithms, said Ms. Davies, begin either with testing for glutamate dehydrogenase (GDH) or with nucleic acid amplification testing (NAAT), and then proceed to enzyme-linked immunosorbent assay (ELISA) testing for C. difficile toxins A and B.

Other algorithms, for example those that begin with toxin testing, are not recommended, said Ms. Davies. Some institutions may diagnose CDI only by toxin detection, GDH testing, or NAAT testing.

For data analysis, Ms. Davies and her collaborators compared CDI-related PBDs and testing density during June, July, and August to data collected in December, January, and February. Testing methods were dichotomized to toxin-detecting CDI testing algorithms (TCTA, using GDH/toxin or NAAT/toxin), or non-TCTA methods, which included all other algorithms or stand-alone testing methods.

Testing methodologies varied widely between countries. The United Kingdom had the highest rate of TCTA testing, at 89%, while Germany had the lowest, at 8%, with 30 of 37 participating German hospitals (81%) using non–toxin detection methods.

In addition, both testing density and case incidence rates varied between countries. Standardizing test density to mean number of tests per 10,000 PBDs per hospital per month (T/PBDs/H/M), the United Kingdom had the highest density, at 96.0 T/PBDs/H/M, while France had the lowest, at 34.4 T/PBDs/H/M. Overall per-nation case rates ranged from 2.55 C/PBDs/H/M in the United Kingdom to 6.9 C/PBDs/H/M in Spain.

Ms. Davies and her collaborators also analyzed data for all hospitals, regardless of country, according to testing method. That analysis found no significant seasonal variation in testing rates for TCTA-using hospitals (mean T/PBDs/H/M, 119.2 in summer versus 102.4 in winter; P = .11), and no significant seasonal variation in CDI incidence. However, “the largest variation in CDI rates was seen in those hospitals using toxin-only diagnostic methods,” said Ms. Davies.

By contrast, for hospitals using non-TCTA methods, though testing rates did not change significantly, incidence was significantly higher in winter months, at a mean 13.5 wintertime versus 10.0 summertime C/PBDs/H/M (P = .49).

One country, Italy, stood out for having both higher overall wintertime testing (mean 57.2 summertime versus 78.8 wintertime T/PBDs/H/M, P = .041), and higher incidence (mean 6.6 summertime versus 10.1 wintertime C/PBDs/H/M, P = .017).

“Reported CDI rates only increase in winter if testing rates increase concurrently, or if hospitals use nonrecommended testing methods for diagnosis, especially non–toxin detection methods,” said Ms. Davies.

The study investigators reported receiving financial support from Sanofi Pasteur.

On Twitter @karioakes

AT ASM MICROBE 2016

Key clinical point: After researchers accounted for testing frequency and methods, Clostridium difficile infection (CDI) rates were not higher in the winter months.

Major finding: In five European countries, hospitals that used direct toxin-detecting algorithms to test for CDI had no seasonal variation in CDI incidence (mean cases/patient bed-days/hospital/month in summer, 9.6; in winter, 8.0; P = .27).

Data source: Demographic and testing data collection from 180 hospitals in five European countries to ascertain CDI testing methods, rates, cases, and patient bed-days per month.

Disclosures: The study investigators reported financial support from Sanofi Pasteur.

Staffing, work environment drive VAP risk in the ICU

SAN FRANCISCO – The work environment for nurses and the physician staffing model in the intensive care unit influence patients’ likelihood of acquiring ventilator-associated pneumonia (VAP), based on a cohort study of 25 ICUs.

Overall, each 1-point increase in the score for the nurse work environment – indicating that nurses had a greater sense of playing an important role in patient care – was unexpectedly associated with a roughly sixfold higher rate of VAP among the ICU’s patients, according to data reported in a session and press briefing at an international conference of the American Thoracic Society. However, additional analyses showed that the rate of VAP was higher in closed units, where a board-certified critical care physician (intensivist) manages and leads care, than in open units, where care is shared.

“We think that the organization of the ICU is actually influencing nursing practice, which is a really novel finding,” commented first author Deena Kelly Costa, PhD, RN, of the University of Michigan School of Nursing in Ann Arbor. “In closed ICUs, when you have a board-certified physician and an ICU team managing and leading care, even if the work environment is better, nurses may not feel as empowered to standardize their care or practice.”

“ICU nurses are the ones who are primarily responsible for VAP preventive practices: they keep the head of the bed higher than 45 degrees, they conduct oral care, they conduct (patient) surveillance. ICU physicians are involved with writing the orders and ventilator setting management. So how these providers work together could theoretically influence the risk for patients developing VAP,” Dr. Costa said.

“We need to be thinking a little bit more critically about not only the care that’s happening at the bedside... but also at an organizational level. How are these providers organized, and can we work together to improve patient outcomes?”

“I’m not suggesting that we get rid of all closed ICUs because I don’t think that’s the solution,” Dr. Costa maintained. “I think from an administrative perspective, we need to be considering what’s the organization of these clinicians and this unit, and [in a context-specific manner], how can we improve it for better patient outcomes? That may be both working on improving the work environment and making the nurses feel more empowered, or it could be potentially considering other staffing models.”

Some data have already linked a more favorable nurse work environment and the presence of a board-certified critical care physician independently with better patient outcomes in the ICU. But studies of their joint impact are lacking.

The investigators performed a secondary, unit-level analysis of nurse survey data collected during 2005 and 2006 in ICUs in southern Michigan.

In all, 462 nurses working in 25 ICUs completed the Practice Environment Scale of the Nursing Work Index, on which averaged summary scores range between 1 (unfavorable) and 4 (favorable). The scale captures environmental factors such as the adequacy of resources for nurses, support from their managers, and their level of involvement in hospital policy decisions.

The rate of VAP during the same period was assessed using data from more than 1,000 patients from each ICU.

The summary nurse work environment score averaged 2.69 points in the 21 ICUs that had a closed physician staffing model and 2.62 points in the 4 ICUs that had an open physician staffing model. The respective rates of VAP were 7.5% and 2.5%.

In adjusted analysis among all 25 ICUs, each 1-point increase in an ICU’s Practice Environment Scale score was associated with a sharply higher rate of VAP on the unit (adjusted incidence rate ratio, 5.76; P = .02).

However, there was a strong interaction between the score and physician staffing model (P less than .001). In open ICUs, as the score rose, the rate of VAP fell (from about 16% to 5%), whereas in closed ICUs, as the score rose, so did the rate of VAP (from about 3% to 14%).

Dr. Costa disclosed that she had no relevant conflicts of interest. The parent survey was funded by the Blue Cross Blue Shield Foundation of Michigan.

AT ATS 2016

Key clinical point: The impact of nurse work environment on risk of VAP in the ICU depends on the unit’s physician staffing model.

Major finding: A better nurse work environment was associated with a higher rate of VAP overall (incidence rate ratio, 5.76), but there was an interaction whereby it was positively associated with rate in closed units but negatively so in open units.

Data source: A cohort study of 25 ICUs, 462 nurses, and more than 25,000 patients in southern Michigan between 2005 and 2006.

Disclosures: Dr. Costa disclosed that she had no relevant conflicts of interest. The parent study was funded by the Blue Cross Blue Shield Foundation of Michigan.

Universal decolonization reduces CLABSI rates in large health care system

Universal decolonization of adult intensive care unit patients in a large community health system significantly reduced the incidence of central line–associated bloodstream infections (CLABSIs), according to Dr. Edward Septimus and his associates.

A total of 136 ICUs at 95 hospitals were included in the study. Universal decolonization was completed within 6 months. A total of 672 CLABSIs occurred in the 24 months before intervention, and 181 CLABSIs occurred in the 8 months after intervention. The CLABSI rate was 1.1 per 1,000 central-line days before intervention and 0.87 per 1,000 central-line days after intervention, a reduction of 23.5%.

No evidence of a trend over time was found in either the pre- or postintervention period. The postintervention reduction in CLABSIs was not affected by adjustment for seasonality, number of beds in the ICU, or unit type. The gram-positive CLABSI rate was reduced by 28.7% after intervention, and the rate of CLABSI due to Staphylococcus aureus was reduced by 31.9%.

“This rapid dissemination and implementation program demonstrated the utility of a protocol-specific toolkit, coupled with a multistep translation program to implement universal decolonization in a large, complex organization. Doing so demonstrated that use of CHG [chlorhexidine] bathing plus nasal mupirocin in routine practice reduced ICU CLABSIs at a level commensurate with that achieved within the framework of a clinical trial. This supports the use of universal decolonization in a wide variety of acute care facilities across the United States,” the investigators wrote.

Find the full study in Clinical Infectious Diseases (doi: 10.1093/cid/ciw282).
