FDA calls hospital-based Zika test ‘high risk’

Photo by Juan D. Alfonso

The US Food and Drug Administration (FDA) has deemed a hospital-based test for the Zika virus “high risk” because the agency has not cleared the test.

The test was developed by scientists at Texas Children’s Hospital and Houston Methodist Hospital. It has been available at both hospitals since last month.

The FDA has requested more information on the test but has not asked the hospitals to stop using it.

According to the hospitals, the test identifies virus-specific RNA sequences to detect the Zika virus. It can distinguish Zika infection from dengue, West Nile, or chikungunya infections. And it can be performed on blood, amniotic fluid, urine, or spinal fluid.

In a letter to the hospitals, the FDA said this test appears to meet the definition of a device, as defined in section 201(h) of the Federal Food, Drug, and Cosmetic Act. Yet the test has not been granted premarket clearance, approval, or Emergency Use Authorization review by the FDA.

Therefore, the FDA has asked for information on the test’s design, validation, and performance characteristics. The agency said the Centers for Disease Control and Prevention (CDC) and the Centers for Medicare & Medicaid Services asked the FDA to review the science behind the test.

The FDA has not asked the hospitals to stop using the test while the review is underway, according to a statement from Texas Children’s Hospital.

Nevertheless, the Association for Molecular Pathology (AMP) said it is “concerned and disappointed” to see the FDA taking enforcement action regarding this test. The AMP said these types of tests are critical for patient care and should be made available to patients in need.

In fact, the AMP said this is an example of how FDA regulation of laboratory developed procedures would hinder patient access to vital medical services. That’s because the FDA’s Emergency Use Authorization for antibody testing at the CDC or state public health labs does not provide results in the timely fashion needed for immediate patient care.

The FDA recently issued Emergency Use Authorization for the Zika IgM Antibody Capture Enzyme-Linked Immunosorbent Assay (Zika MAC-ELISA), which was developed by the CDC.

The test was distributed to labs in the US and abroad, but it was not made available in US hospitals or other primary care settings.

Financial burdens reduce QOL for cancer survivors

receiving treatment

Photo by Rhoda Baer

An analysis of nearly 20 million cancer survivors showed that almost 29% had financial burdens as a result of their cancer diagnosis and/or treatment.

In other words, they borrowed money, declared bankruptcy, worried about paying large medical bills, were unable to cover the cost of medical visits, or made other financial sacrifices.

Furthermore, such hardships could have lasting effects on a cancer survivor’s quality of life (QOL).

Hrishikesh Kale and Norman Carroll, PhD, both of Virginia Commonwealth University School of Pharmacy in Richmond, reported these findings in Cancer.

The pair analyzed 2011 Medical Expenditure Panel Survey data on 19.6 million cancer survivors, assessing financial burden and QOL.

Subjects were considered to have financial burden if they reported at least 1 of the following problems: borrowed money/declared bankruptcy, worried about paying large medical bills, unable to cover the cost of medical care visits, or other financial sacrifices.

Nearly 29% of the cancer survivors reported at least 1 financial problem resulting from cancer diagnosis, treatment, or lasting effects of that treatment.

Of all the cancer survivors in the analysis, 20.9% worried about paying large medical bills, 11.5% were unable to cover the cost of medical care visits, 7.6% reported borrowing money or going into debt, 1.4% declared bankruptcy, and 8.6% reported other financial sacrifices.

Compared with cancer survivors who did not face financial problems, those who did had lower physical and mental health-related QOL and a higher risk of depressed mood and psychological distress, and they were more likely to worry about cancer recurrence.

In addition, as the number of financial problems reported by cancer survivors increased, their QOL continued to decrease. And their risk for depressed mood, psychological distress, and worries about cancer recurrence continued to increase.

“Our results suggest that policies and practices that minimize cancer patients’ out-of-pocket costs can improve survivors’ health-related quality of life and psychological health,” Dr Carroll said.

“Reducing the financial burden of cancer care requires integrated efforts, and the study findings are useful for survivorship care programs, oncologists, payers, pharmaceutical companies, and patients and their family members.”

Doc laments increasing use of P values

Photo by Rhoda Baer

A review of the biomedical literature indicates an increase in the use of P values in recent years, but researchers say this technique can provide misleading results.

“It’s usually a suboptimal technique, and then it’s used in a biased way, so it can become very misleading,” said John Ioannidis, MD, of Stanford University in California.

He and his colleagues reviewed the use of P values and recounted their findings in JAMA.

The team used automated text-mining analysis to extract data on P values reported in 12,821,790 MEDLINE abstracts and 843,884 abstracts and full-text articles in PubMed Central from 1990 to 2015.
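To give a rough sense of what automated extraction of P values from abstract text can look like, here is a minimal sketch. It is not the researchers' pipeline, which the article does not describe; the regular expression, the function name `extract_p_values`, and the sample sentence are all assumptions made for illustration.

```python
import re

# Hypothetical pattern: the letter P, a comparison operator, then a number
# such as 0.007 or .03. Real abstracts use many more notations than this.
P_VALUE = re.compile(r"\bP\s*(<=|>=|[<>=≤≥])\s*(\d*\.\d+|\d+)", re.IGNORECASE)


def extract_p_values(text: str) -> list[tuple[str, float]]:
    """Return (operator, value) pairs for each P value found in the text."""
    return [(op, float(num)) for op, num in P_VALUE.findall(text)]


# Made-up sample sentence, used only to exercise the function.
sample = "Response improved (P=0.007) and survival did too (P = .03); overall p<0.001."
print(extract_p_values(sample))
```

A pattern this simple would miss many real-world notations (for example, "P value of 0.05" or scientific notation), so it should be read as a starting point only.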

The researchers also assessed the reporting of P values in 151 English-language core clinical journals and specific article types as classified by PubMed.

They manually evaluated a random sample of 1000 MEDLINE abstracts for reporting of P values and other types of statistical information. And of those abstracts reporting empirical data, 100 articles were assessed in their entirety.

The data showed that reporting of P values more than doubled from 1990 to 2014—increasing from 7.3% to 15.6%.

In abstracts from core medical journals, 33% reported P values in 2014. And in the subset of randomized, controlled clinical trials, nearly 55% reported P values in 2014.

Dr Ioannidis noted that some researchers mistakenly think a P value is an estimate of how likely it is that a result is true.

“The P value does not tell you whether something is true,” he explained. “If you get a P value of 0.01, it doesn’t mean you have a 1% chance of something not being true.”

“A P value of 0.01 could mean the result is 20% likely to be true, 80% likely to be true, or 0.1% likely to be true—all with the same P value. The P value alone doesn’t tell you how true your result is.”
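The point that the same P value can correspond to very different chances of a result being true can be illustrated with a short calculation. The sketch below uses Bayes' rule under simplifying assumptions that are not from the article: 0.01 is treated as a significance threshold (alpha) rather than an exact observed P value, and the prior probabilities and the statistical power of 0.8 are hypothetical inputs.

```python
def prob_true_given_significant(prior: float, power: float, alpha: float) -> float:
    """Probability that a statistically significant finding reflects a real effect.

    prior: assumed probability, before the study, that the effect is real
    power: assumed probability of a significant result when the effect is real
    alpha: significance threshold (false-positive rate when there is no effect)
    """
    true_positives = prior * power
    false_positives = (1.0 - prior) * alpha
    return true_positives / (true_positives + false_positives)


# Same threshold (0.01) and power, different hypothetical priors.
for prior in (0.001, 0.1, 0.5):
    prob = prob_true_given_significant(prior, power=0.8, alpha=0.01)
    print(f"prior={prior}: P(effect is real | significant) = {prob:.2f}")
```

With these assumed inputs, the probability that a significant result is real ranges from under 10% for a long-shot hypothesis to nearly 99% for an even-odds one, although the threshold is 0.01 in every case.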

For an actual estimate of how likely a result is to be true or false, Dr Ioannidis said, researchers should instead use false-discovery rates or Bayes factor calculations.

He and his colleagues assessed the use of false-discovery rates, Bayes factor calculations, effect sizes, and confidence intervals in the 796 papers in their review that contained empirical data.

They found that 111 of these papers reported effect sizes, and 18 reported confidence intervals. None of the papers reported Bayes factors or false-discovery rates.

Fewer than 2% of the abstracts the team reviewed reported both an effect size and a confidence interval.

In a manual review of 99 randomly selected full-text articles, the researchers found that 55 articles reported at least 1 P value. But only 4 articles reported confidence intervals for all effect sizes, none used Bayesian methods, and 1 used false-discovery rates.

In light of these findings, Dr Ioannidis advocates more stringent approaches to analyzing data.

“The way to move forward is that P values need to be used more selectively,” he said. “When used, they need to be complemented by effect sizes and uncertainty [confidence intervals]. And it would often be a good idea to use a Bayesian approach or a false-discovery rate to answer the question, ‘How likely is this result to be true?’”

Negative cancer trials have long-term impact

for a clinical trial

Photo by Esther Dyson

Cancer trials with negative results don’t make an immediate splash in the scientific literature, but they do have a long-term impact on research, according to a study published in JAMA Oncology.

Researchers found that first reports of positive phase 3 cancer trials were twice as likely as first reports of negative phase 3 cancer trials to be cited in scientific journals.

But over time, when all articles associated with the trials were considered, the scientific impact of negative trials and positive trials was about the same.

“Negative trials aren’t scientific failures,” said study author Joseph Unger, PhD, of the Fred Hutchinson Cancer Research Center in Seattle, Washington.

“We found that they have a positive, lasting impact on cancer research.”

Dr Unger and his colleagues analyzed every randomized, phase 3 cancer trial completed by the cooperative group SWOG from 1984 to 2014. This amounted to 94 studies involving 46,424 patients.

Of those 94 studies, 26 were positive, meaning that the treatment tested performed measurably better than the standard treatment at the time.

Analyses revealed that primary manuscripts first announcing these encouraging results were published in journals with higher impact factors and were cited twice as often as primary manuscripts of negative trials.

The mean 2-year impact factor of the journals was 28 for positive trials and 18 for negative trials (P=0.007). And the mean number of citations per year was 43 for positive trials and 21 for negative trials (P=0.03).

However, when the researchers looked at the number of citations from all primary and secondary manuscripts, they did not see a significant difference between positive and negative trials. The mean number of citations per year was 55 and 45, respectively (P=0.53).

“Negative trials matter because they tell us what doesn’t work, which can be as important as what does,” said study author Dawn Hershman, MD, of Columbia University Medical Center in New York, New York.

“Negative trials are also critical for secondary research, which mines existing trial data to answer new questions in cancer care and prevention. Negative trials are used frequently in secondary research and add great value to the scientific community.”

Program can predict drug side effects

Photo by Darren Baker

Scientists say they have developed a computer program that can predict whether or not a given pharmaceutical agent will produce certain side effects.

The software takes an “ensemble approach” to assessing the chemical structure of a drug molecule and can determine whether the molecule contains key substructures that are known to give rise to side effects in other drugs.

Md Jamiul Jahid and Jianhua Ruan, PhD, both of the University of Texas at San Antonio, developed the computer program and described it in the International Journal of Computational Biology and Drug Design.

The pair tested the software’s ability to predict 1385 side effects associated with 888 marketed drugs and found that the program outperformed earlier software.

The team also used their new software to test 2883 uncharacterized compounds in the DrugBank database. The program proved capable of predicting a wide variety of side effects, including some effects that were missed by other screening methods.

The scientists believe their software could be used to alert regulatory authorities and healthcare workers as to what side effects might occur when a new drug enters late-stage clinical trials and is ultimately brought to market.

But the program may have an additional benefit as well. By identifying substructures that are associated with particular side effects, the software could be used to help medicinal chemists understand the underlying mechanism by which a side effect arises.

The chemists could then eliminate the offending substructures from drug molecules in the future, thereby reducing the number of drugs that go through the research and development pipeline and then fail in clinical trials due to severe side effects.

Regimen can reduce risk of malaria during pregnancy

Photo by Nina Matthews

A 2-drug prophylactic regimen can reduce the risk of Plasmodium falciparum malaria during pregnancy, according to a study published in NEJM.

Monthly treatment with the regimen, dihydroartemisinin-piperaquine (DP), reduced the rate of symptomatic malaria, placental malaria, and parasitemia, when compared to less frequent dosing with DP or treatment with sulfadoxine-pyrimethamine (SP), the current standard prophylactic regimen.

Researchers therefore believe DP may be a feasible alternative to SP, which has become less effective over time.

“The malaria parasite’s resistance to SP is widespread, especially in sub-Saharan Africa,” said study author Abel Kakuru, MD, of the Infectious Diseases Research Collaboration in Kampala, Uganda.

“But we are still using the same drugs because we have no better alternatives.”

In an attempt to identify a better alternative, Dr Kakuru and his colleagues studied 300 pregnant women from Tororo, Uganda, from June 2014 through October 2014. All of the subjects were age 16 or older and anywhere from 12 to 20 weeks pregnant.

The women were randomized to receive 1 of 3 regimens for malaria prophylaxis:

- DP once a month

- DP at 20, 28, and 36 weeks of pregnancy

- SP at 20, 28, and 36 weeks of pregnancy.

Participants had monthly checkups at the study clinic, where they received regular blood tests for malaria. The researchers also assessed malaria infection in the placenta.

Placental malaria was confirmed in 50% of women in the SP group, 34% in the 3-dose DP group (P=0.03), and 27% in the monthly DP group (P=0.001).

Forty-one percent of the women on SP had malaria parasites in their blood, compared to 17% in the 3-dose DP group (P<0.001), and 5% in the monthly DP group (P<0.001).

None of the women on monthly DP had symptomatic malaria during pregnancy. But there were 41 episodes of malaria during pregnancy in the SP group and 12 episodes in the 3-dose DP group.

The researchers also evaluated the women and infants in the study for a composite adverse birth outcome of spontaneous abortion, stillbirth, low birth weight, preterm delivery, or birth defects.

The risk of any adverse birth outcome was 9% in the monthly DP group, 21% in the 3-dose DP group (P=0.02), and 19% in the SP group (P=0.05).
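The percentages reported above can also be read as relative reductions for monthly DP versus SP. The short Python sketch below is illustrative arithmetic only: the function name `relative_reduction` is hypothetical, the inputs are the rounded percentages quoted in this article, and the results are not figures reported by the researchers.

```python
def relative_reduction(comparator_pct, treatment_pct):
    """Fraction by which the treatment rate is lower than the comparator rate."""
    return (comparator_pct - treatment_pct) / comparator_pct

# (SP group %, monthly DP group %) as quoted in the article
reported = {
    "placental malaria": (50, 27),
    "parasites in blood": (41, 5),
    "any adverse birth outcome": (19, 9),
}

# Relative reduction for each outcome, monthly DP versus SP
reductions = {
    outcome: relative_reduction(sp, dp) for outcome, (sp, dp) in reported.items()
}

for outcome, value in reductions.items():
    print(f"{outcome}: {value:.0%} lower with monthly DP than with SP")
```

Because the inputs are rounded percentages rather than patient counts, the results are approximate and say nothing about statistical significance.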

The researchers concluded that monthly dosing of DP provided the best protection against malaria and called for additional studies to determine if the regimen would provide an effective alternative treatment in other parts of Uganda and elsewhere in Africa.

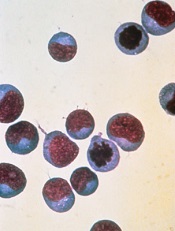

Tool provides insight into T cells’ behavior

Image courtesy of NIAID

A new tool can help scientists determine how different types of T cells detect, destroy, and remember antigens, according to research published in Nature Methods.

The tool is TraCeR, a computational method that allows researchers to reconstruct full-length, paired T-cell receptor sequences from single-cell RNA sequence data.

TraCeR reveals clonal relationships between T cells as well as their transcriptional profiles.

In the current study, TraCeR helped scientists detect T-cell clonotypes in mice infected with Salmonella.

“This new tool for single-cell sequencing gives us a new approach to the study of T cells and opens up new opportunities to explore immune responses in disease, vaccination, cancer, and autoimmunity,” said study author Sarah Teichmann, PhD, of the Wellcome Trust Sanger Institute in Cambridge, UK.

She and her colleagues noted that T-cell receptors are extremely variable, and a combination of paired sequences determines which protein a receptor will detect. So to understand what is happening at the molecular level, it is imperative to find both sequences in each cell.

TraCeR is designed to allow scientists to look at the DNA and RNA profiles of these highly variable T-cell receptors at the same time.

Dr Teichmann and her colleagues found that receptor sequences are unique, unless the T cells have the same parent cell. The presence of “sibling” cells proves that an infection has triggered the division of a particular T cell, which indicates it is multiplying to fight an antigen.

Using TraCeR, the researchers were able to accurately identify sibling T cells and explore their different responses to Salmonella infection in mice.
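The idea of finding “sibling” cells can be illustrated with a minimal Python sketch. It is not TraCeR's code or interface. It assumes each cell already has a reconstructed pair of receptor sequences, and the function name `group_clonotypes`, the cell IDs, and the placeholder sequence strings are all hypothetical. Cells that share the same pair are treated as descendants of one parent cell.

```python
from collections import defaultdict

def group_clonotypes(cells):
    """Return groups of cell IDs that share an identical receptor sequence pair.

    `cells` maps a cell ID to a (first_chain, second_chain) tuple of sequences.
    Only groups with more than one member (candidate siblings) are returned.
    """
    groups = defaultdict(list)
    for cell_id, paired_sequences in cells.items():
        groups[paired_sequences].append(cell_id)
    return {pair: sorted(ids) for pair, ids in groups.items() if len(ids) > 1}

# Placeholder data: the strings stand in for real receptor sequences
cells = {
    "cell_1": ("CHAIN1_SEQ_A", "CHAIN2_SEQ_A"),
    "cell_2": ("CHAIN1_SEQ_B", "CHAIN2_SEQ_B"),
    "cell_3": ("CHAIN1_SEQ_A", "CHAIN2_SEQ_A"),
    "cell_4": ("CHAIN1_SEQ_A", "CHAIN2_SEQ_C"),
}

siblings = group_clonotypes(cells)
print(siblings)
```

In this toy data, only cell_1 and cell_3 match on both sequences. cell_4 shares just one sequence with them and is not grouped, which is why both sequences are needed for each cell.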

“This technique helps us see whether all the ‘children’ of a particular T cell do the same thing at the same time, which is an open question in biology,” said study author Tapio Lönnberg, PhD, of the European Molecular Biology Laboratory-European Bioinformatics Institute in Cambridge, UK.

“We can start to see whether the antigen itself plays a role in how a T cell will respond, and even whether it’s possible to determine what the invader is, just based on the sequence of a T-cell receptor.”

The researchers said their next step is to apply similar methods to the study of B cells to better understand the adaptive immune system as a whole.

EMA initiative aims to accelerate drug development

Photo courtesy of the FDA

The European Medicines Agency (EMA) has launched PRIME (PRIority MEdicines), an initiative intended to accelerate the development of drugs that target unmet medical needs.

These “priority medicines” may offer a major therapeutic advantage over existing treatments or benefit patients who currently have no treatment options in the European Union (EU).

Through PRIME, the EMA will offer early support to drug developers to optimize the generation of robust data on a treatment’s benefits and risks and enable accelerated assessment of an application for marketing authorization.

By engaging with developers early, the EMA aims to strengthen the design of clinical trials to facilitate the generation of high quality data to support an application for marketing authorization.

PRIME builds on the existing regulatory framework and available tools such as scientific advice and accelerated assessment.

To be accepted for PRIME, a treatment has to show the potential to benefit patients with unmet medical needs based on early clinical data.

Once a candidate medicine has been selected for PRIME, the EMA:

- Appoints a rapporteur from the Committee for Medicinal Products for Human Use (CHMP), or from the Committee on Advanced Therapies (CAT) in the case of an advanced therapy, to provide continuous support and help to build knowledge before a company submits an application for marketing authorization

- Organizes a kick-off meeting with the CHMP/CAT rapporteur and a multidisciplinary group of experts from relevant EMA scientific committees and working parties, and provides guidance on the overall development plan and regulatory strategy

- Assigns a dedicated EMA contact point

- Provides scientific advice at key development milestones, involving additional stakeholders such as health technology assessment bodies to facilitate quicker access to the new drug

- Confirms potential for accelerated assessment when an application for marketing authorization is submitted.

PRIME is open to all drug companies on the basis of preliminary clinical evidence. However, micro-, small- and medium-sized enterprises and applicants from the academic sector can apply earlier on the basis of compelling non-clinical data and tolerability data from initial clinical trials. They may also request fee waivers for scientific advice.

The EMA has released guidance documents on PRIME as well as a comprehensive overview of the EU early access regulatory tools—ie, accelerated assessment, conditional marketing authorization, and compassionate use.

The agency has also published revised guidelines on the implementation of accelerated assessment and conditional marketing authorization. These tools are reserved for treatments addressing major public health needs, and the revised guidelines provide more detailed information based on past experience.

Although PRIME is specifically designed to promote accelerated assessment, the EMA said the initiative will also help drug developers make the best use of other EU early access tools and initiatives, which can be combined whenever a drug fulfills the respective criteria.

PRIME was developed in consultation with the EMA’s scientific committees, the European Commission and its expert group on Safe and Timely Access to Medicines for Patients, as well as the European medicines regulatory network.

The main principles of PRIME were released for a 2-month public consultation in 2015, and the comments received were taken into account in the final version.

CDC quantifies threat of resistant HAIs in US

New data from the US Centers for Disease Control and Prevention (CDC) suggest the incidence of certain healthcare-associated infections (HAIs) has fallen in recent years, but antibiotic-resistant bacteria remain a threat.

Therefore, the CDC is advising healthcare workers to use a combination of infection control recommendations to better protect patients from these infections.

“New data show that far too many patients are getting infected with dangerous, drug-resistant bacteria in healthcare settings,” said CDC Director Tom Frieden, MD.

The facts and figures are available in the CDC’s latest Vital Signs report and the agency’s annual progress report on HAI prevention.

The data indicate that 1 in 7 catheter- and surgery-related HAIs in acute care hospitals can be caused by 6 types of antibiotic-resistant bacteria. That number increases to 1 in 4 infections in long-term acute care hospitals.

The 6 antibiotic-resistant threats are carbapenem-resistant Enterobacteriaceae (CRE), methicillin-resistant Staphylococcus aureus (MRSA), extended-spectrum β-lactamase (ESBL)-producing Enterobacteriaceae, vancomycin-resistant Enterococcus (VRE), multidrug-resistant Pseudomonas aeruginosa, and multidrug-resistant Acinetobacter.

Prevention and resistance

According to the CDC’s data, acute care hospitals saw a 50% decrease in central line-associated bloodstream infections between 2008 and 2014. But 1 in 6 remaining central line-associated bloodstream infections is caused by urgent or serious antibiotic-resistant bacteria.

Between 2008 and 2014, acute care hospitals saw a 17% decrease in surgical site infections related to 10 procedures that were tracked in previous HAI progress reports. One in 7 remaining surgical site infections is caused by urgent or serious antibiotic-resistant bacteria.

Acute care hospitals saw no change in the incidence of catheter-associated urinary tract infections (CAUTIs) between 2009 and 2014. However, there was a reduction in CAUTIs between 2013 and 2014. One in 10 CAUTIs is caused by urgent or serious antibiotic-resistant bacteria.

The Vital Signs report also examines Clostridium difficile, which caused almost half a million infections in the US in 2011 alone. The CDC’s annual progress report shows that hospital-onset C difficile infections decreased by 8% between 2011 and 2014.

In addition to the reports, the CDC has released a web app with interactive data on HAIs caused by antibiotic-resistant bacteria.

The tool, known as the Antibiotic Resistance Patient Safety Atlas, provides national, regional, and state map views of superbug/drug combinations showing percent resistance over time. The Atlas uses data that more than 4000 healthcare facilities reported to the CDC’s National Healthcare Safety Network from 2011 to 2014.

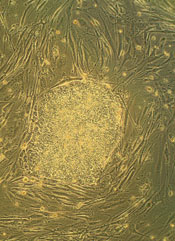

Factors driving blood cell development

Researchers believe they have identified key factors that drive hematopoietic specification and differentiation.

The team performed detailed analyses on cells at 6 consecutive stages of development—starting with embryonic stem cells and ending with macrophages.

They said this revealed the complete set of regulatory elements driving differential gene expression during these stages of development.

The researchers described this work in Developmental Cell.

The 6 stages the team examined were embryonic stem cells, mesoderm cells, hemangioblasts, hemogenic endothelium, hematopoietic precursors, and macrophages.

“We examined how embryonic cells develop towards blood cells by collecting multi-omics data from measuring gene activity, changes in chromosome structure, and the interaction of regulatory factors with the genes themselves,” explained study author Constanze Bonifer, PhD, of the University of Birmingham in the UK.

“Our research shows, in unprecedented detail, how a vast network of interacting genes control blood cell development. It also shows how we can use such data to enhance our knowledge of this process.”

The researchers said their findings help explain how regulatory elements in the DNA work together, driving gene expression and the switch from one developmental stage to another.

The team believes the work also revealed the minimum requirements for generating blood cells from an unrelated, cultured cell type.

The researchers have made their findings available to the public on the following website: http://www.haemopoiesis.leeds.ac.uk/data_analysis/.
