A microfluidic biochip for blood cell counts

Researchers say they have created a biosensor capable of counting blood cells electrically using only a drop of blood.

The microfluidic device can measure red blood cell, platelet, and white blood cell counts using as little as 11 µL of blood.

The device electrically counts the different types of blood cells based on their size and membrane properties.

To count leukocytes and their differentials, red blood cells are selectively lysed, and the remaining white blood cells are counted individually. Specific cell types, such as neutrophils, can be counted using multi-frequency analysis, which probes the membrane properties of the cells.
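In impedance-based counting, each cell passing between the electrodes briefly changes the measured signal, and the size of that change scales roughly with cell volume. The sketch below counts threshold-crossing pulses in a made-up signal and sorts them by peak height. The signal, threshold, bin labels, and bin edges are hypothetical and not taken from the researchers' device, and the multi-frequency membrane analysis mentioned above is not modeled.

```python
from typing import Dict, List, Optional, Tuple

def detect_pulses(signal: List[float], threshold: float) -> List[float]:
    """Return the peak amplitude of each contiguous run of samples above threshold.

    Each run is treated as one particle crossing the electrodes; coincident
    cells and noise spikes are not handled in this simplified sketch.
    """
    peaks: List[float] = []
    current_peak: Optional[float] = None
    for sample in signal:
        if sample > threshold:
            # Inside a pulse: keep the largest sample seen so far.
            current_peak = sample if current_peak is None else max(current_peak, sample)
        elif current_peak is not None:
            # Signal dropped back below threshold: the pulse has ended.
            peaks.append(current_peak)
            current_peak = None
    if current_peak is not None:
        # Close a pulse still open at the end of the recording.
        peaks.append(current_peak)
    return peaks

def classify_by_amplitude(
    peaks: List[float], bins: List[Tuple[str, float, float]]
) -> Dict[str, int]:
    """Count pulses whose peak amplitude falls in each [low, high) bin."""
    counts = {label: 0 for label, _, _ in bins}
    for peak in peaks:
        for label, low, high in bins:
            if low <= peak < high:
                counts[label] += 1
                break
    return counts

# Hypothetical amplitude bins in arbitrary units: larger cells give larger pulses.
BINS = [("small", 0.1, 0.5), ("large", 0.5, 10.0)]

signal = [0.0, 0.3, 0.2, 0.0, 0.0, 1.2, 2.0, 0.9, 0.0, 0.4, 0.0]
peaks = detect_pulses(signal, threshold=0.1)
print(peaks)                               # [0.3, 2.0, 0.4]
print(classify_by_amplitude(peaks, BINS))  # {'small': 2, 'large': 1}
```

A real counter would also need to handle baseline drift and two cells crossing the sensor at once; the sketch only shows the count-and-bin idea.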

For red blood cells and platelets, 1 µL of whole blood is diluted with PBS on-chip, and the cells are counted electrically. The total time for measurement is under 20 minutes.
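Because the red cells and platelets are counted in diluted sample, a raw count has to be scaled back to a whole-blood concentration using the dilution factor and the volume of diluted sample that actually passed the sensor. The article does not give either value, so the numbers below are hypothetical; the sketch only shows the arithmetic.

```python
def cells_per_microliter(counted_cells: int, analyzed_volume_ul: float, dilution_factor: float) -> float:
    """Back-calculate a whole-blood concentration (cells/µL) from a count in diluted sample.

    counted_cells: cells counted as the diluted sample flowed past the electrodes
    analyzed_volume_ul: volume of diluted sample that passed the sensor, in µL
    dilution_factor: total diluted volume / whole-blood volume
        (e.g. 1 µL of blood brought to 1000 µL with PBS -> 1000)
    """
    if analyzed_volume_ul <= 0 or dilution_factor <= 0:
        raise ValueError("volume and dilution factor must be positive")
    return counted_cells / analyzed_volume_ul * dilution_factor

# Hypothetical run: 1 µL of blood diluted 1:1000, 5 µL of the mix analyzed, 24,000 cells counted.
print(cells_per_microliter(24_000, 5.0, 1000.0))  # 4800000.0
```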

The researchers described this device in TECHNOLOGY.

“Our biosensor exhibits the potential to improve patient care in a spectrum of settings,” said Rashid Bashir, PhD, of the University of Illinois at Urbana-Champaign.

He noted that the device could be particularly useful in resource-limited settings, where laboratory tests are often inaccessible because of cost, a scarcity of laboratory facilities, and the difficulty of following up with patients when results take days to process.

“There exists a huge potential to translate our biosensor commercially for blood cell count applications,” added Umer Hassan, PhD, of the University of Illinois at Urbana-Champaign.

“The translation of our technology will result in minimal to no experience requirement for device operation. Even patients can perform the test at the comfort of their home and share the results with their primary care physicians via electronic means too.”

“The technology is scalable, and, in future, we plan to apply it to many other potential applications in the areas of animal diagnostics, blood transfusion analysis, ER/ICU applications, and blood cell counting for chemotherapy management,” Dr Bashir said.

The researchers are now working to further develop a portable prototype of the cell counter.

“The cartridges will be disposable and the size of a credit card,” Dr Hassan said. “The base unit or the reader will be portable and possibly hand-held. Our technology has the potential to reduce the cost of the test to less than $10, as compared to $100 or more currently charged.”

Study reveals decrease in NIH-funded trials

Photo by Esther Dyson

A new study suggests that, in recent years, there has been a decrease in clinical trials funded by the National Institutes of Health (NIH) but an increase in trials with funding from other sources.

Researchers looked at trials newly registered on ClinicalTrials.gov and observed a substantial increase in trial listings from 2006 through 2014.

During that time period, the number of NIH-funded trials declined, but the number of trials funded by other US federal agencies, industry, and other groups (such as universities and organizations) increased.

Stephan Ehrhardt, MD, of Johns Hopkins Bloomberg School of Public Health in Baltimore, Maryland, and his colleagues conducted this study and reported their findings in a letter to JAMA.

The researchers downloaded data from ClinicalTrials.gov, searched for “interventional study,” and obtained counts of newly registered trials by funder type: “NIH,” “industry,” “other US federal agency,” or “all others (individuals, universities, organizations).”

According to the “first received” date (when trials were first registered with ClinicalTrials.gov), the number of newly registered trials increased from 9321 in 2006 to 18,400 in 2014 (97.4%).

During the same period, the number of industry-funded trials increased from 4585 to 6550 (42.9%), and the number of NIH-funded trials decreased from 1376 to 1048 (23.8%).

The number of trials funded by other US federal agencies increased from 263 to 339 (28.9%), and the number of trials funded by “all others” increased from 3240 to 10,597 (227.1%).

The researchers also examined the data according to the trial start date and observed similar patterns. They found the total number of trials increased from 9208 in 2006 to 14,618 in 2014 (58.8%).

The number of industry-funded trials increased from 4516 to 5274 (16.8%), and the number of NIH-funded trials decreased from 1189 to 873 (26.6%).

The number of trials funded by other US federal agencies increased from 229 to 292 (27.5%), and the number of trials funded by “all others” increased from 3397 to 8295 (144.2%).
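Each percentage above is a relative change from the 2006 value, (2014 count − 2006 count) / 2006 count × 100. The short script below recomputes those changes from the counts given in the text, which is a quick way to sanity-check figures like these. Decreases come out negative here, whereas the text reports them as unsigned percentages.

```python
def percent_change(old: int, new: int) -> float:
    """Relative change from old to new, in percent, rounded to one decimal place."""
    return round((new - old) / old * 100, 1)

# (2006 count, 2014 count) as reported in the text.
by_first_received = {
    "all trials": (9321, 18400),
    "industry": (4585, 6550),
    "NIH": (1376, 1048),
    "other US federal": (263, 339),
    "all others": (3240, 10597),
}
by_start_date = {
    "all trials": (9208, 14618),
    "industry": (4516, 5274),
    "NIH": (1189, 873),
    "other US federal": (229, 292),
    "all others": (3397, 8295),
}

for label, table in (("first received", by_first_received), ("start date", by_start_date)):
    print(label)
    for funder, (y2006, y2014) in table.items():
        print(f"  {funder}: {percent_change(y2006, y2014):+.1f}%")
```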

Dr Ehrhardt said he believes the decline in NIH-funded studies can be traced to 2 things: flat NIH funding (the 2014 budget was 14% less than in 2006, after adjusting for inflation) and greater competition for these limited dollars from other, relatively new research areas such as genomic research or personalized medicine studies.

Group finds inconsistencies in genome sequencing procedures

Photo courtesy of NIGMS

Researchers say they have identified substantial differences in the procedures and quality of cancer genome sequencing between sequencing centers.

These differences led to dramatic discrepancies in the number and types of somatic mutations detected when the same cancer genome sequences were analyzed.

The group’s study involved 83 researchers from 78 institutions participating in the International Cancer Genome Consortium (ICGC).

The ICGC is an international effort to establish a comprehensive description of genomic, transcriptomic, and epigenomic changes in 50 different tumor types and/or subtypes that are thought to be of clinical and societal importance across the globe.

The consortium is characterizing more than 25,000 cancer genomes and carrying out 78 projects supported by different national and international funding agencies.

For the current project, which was published in Nature Communications, researchers studied a patient with chronic lymphocytic leukemia and a patient with medulloblastoma.

The team analyzed the entire tumor genome of each patient and compared it to the normal genome of the same patient to decipher the molecular causes of these cancers.

The researchers said they saw “widely varying mutation call rates and low concordance among analysis pipelines.”

The team therefore established a reference mutation dataset to assess analytical procedures. They said this “gold-set” reference database has helped the ICGC community improve procedures for identifying more true somatic mutations in cancer genomes and making fewer false-positive calls.
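Scoring a pipeline against a reference mutation set generally comes down to set comparisons: calls present in both sets are true positives, calls only in the pipeline's output are false positives, and reference mutations the pipeline missed are false negatives. The sketch below computes precision, recall, and F1 on that basis, plus a simple Jaccard overlap as one possible measure of concordance between two pipelines. Keying a mutation as a (chromosome, position, ref, alt) tuple and the example calls are assumptions for illustration, not the ICGC's actual data format or scoring scheme.

```python
from typing import Dict, Set, Tuple

# A somatic single-nucleotide variant keyed as (chromosome, position, ref, alt); illustrative only.
Mutation = Tuple[str, int, str, str]

def score_calls(calls: Set[Mutation], gold: Set[Mutation]) -> Dict[str, float]:
    """Score a pipeline's mutation calls against a reference (gold) set."""
    tp = len(calls & gold)   # called and present in the gold set
    fp = len(calls - gold)   # called but absent from the gold set
    fn = len(gold - calls)   # in the gold set but missed by the pipeline
    precision = tp / (tp + fp) if calls else 0.0
    recall = tp / (tp + fn) if gold else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"tp": tp, "fp": fp, "fn": fn, "precision": precision, "recall": recall, "f1": f1}

def concordance(a: Set[Mutation], b: Set[Mutation]) -> float:
    """Jaccard overlap between two pipelines' call sets (1.0 means identical)."""
    union = a | b
    return len(a & b) / len(union) if union else 1.0

# Hypothetical calls at arbitrary positions.
gold = {("chr1", 1000, "C", "T"), ("chr2", 5000, "G", "A"), ("chr3", 12345, "C", "T")}
pipeline_a = {("chr1", 1000, "C", "T"), ("chr2", 5000, "G", "A"), ("chr4", 42, "A", "G")}
pipeline_b = {("chr1", 1000, "C", "T")}

print(score_calls(pipeline_a, gold))
print(concordance(pipeline_a, pipeline_b))
```

Low concordance between pipelines, as the researchers reported, would show up here as a Jaccard value well below 1.0 even when both pipelines process the same reads.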

“The findings of our study have far-reaching implications for cancer genome analysis,” said Ivo Gut, of Centro Nacional de Análisis Genómico in Barcelona, Spain.

“We have found many inconsistencies in both the sequencing of cancer genomes and the data analysis at different sites. We are making our findings available to the scientific and diagnostic community so that they can improve their systems and generate more standardized and consistent results.” ![]()

Photo courtesy of NIGMS

Researchers say they have identified substantial differences in the procedures and quality of cancer genome sequencing between sequencing centers.

And this led to dramatic discrepancies in the number and types of somatic mutations detected when using the same cancer genome sequences for analysis.

The group’s study involved 83 researchers from 78 institutions participating in the International Cancer Genomics Consortium (ICGC).

The ICGC is an international effort to establish a comprehensive description of genomic, transcriptomic, and epigenomic changes in 50 different tumor types and/or subtypes that are thought to be of clinical and societal importance across the globe.

The consortium is characterizing more than 25,000 cancer genomes and carrying out 78 projects supported by different national and international funding agencies.

For the current project, which was published in Nature Communications, researchers studied a patient with chronic lymphocytic leukemia and a patient with medulloblastoma.

The team analyzed the entire tumor genome of each patient and compared it to the normal genome of the same patient to decipher the molecular causes for these cancers.

The researchers said they saw “widely varying mutation call rates and low concordance among analysis pipelines.”

So they established a reference mutation dataset to assess analytical procedures. They said this “gold-set” reference database has helped the ICGC community improve procedures for identifying more true somatic mutations in cancer genomes and making fewer false-positive calls.

“The findings of our study have far-reaching implications for cancer genome analysis,” said Ivo Gut, of Centro Nacional de Analisis Genómico in Barcelona, Spain.

“We have found many inconsistencies in both the sequencing of cancer genomes and the data analysis at different sites. We are making our findings available to the scientific and diagnostic community so that they can improve their systems and generate more standardized and consistent results.” ![]()

Photo courtesy of NIGMS

Researchers say they have identified substantial differences in the procedures and quality of cancer genome sequencing between sequencing centers.

And this led to dramatic discrepancies in the number and types of somatic mutations detected when using the same cancer genome sequences for analysis.

The group’s study involved 83 researchers from 78 institutions participating in the International Cancer Genomics Consortium (ICGC).

The ICGC is an international effort to establish a comprehensive description of genomic, transcriptomic, and epigenomic changes in 50 different tumor types and/or subtypes that are thought to be of clinical and societal importance across the globe.

The consortium is characterizing more than 25,000 cancer genomes and carrying out 78 projects supported by different national and international funding agencies.

For the current project, which was published in Nature Communications, researchers studied a patient with chronic lymphocytic leukemia and a patient with medulloblastoma.

The team analyzed the entire tumor genome of each patient and compared it to the normal genome of the same patient to decipher the molecular causes for these cancers.

The researchers said they saw “widely varying mutation call rates and low concordance among analysis pipelines.”

So they established a reference mutation dataset to assess analytical procedures. They said this “gold-set” reference database has helped the ICGC community improve procedures for identifying more true somatic mutations in cancer genomes and making fewer false-positive calls.

“The findings of our study have far-reaching implications for cancer genome analysis,” said Ivo Gut, of Centro Nacional de Analisis Genómico in Barcelona, Spain.

“We have found many inconsistencies in both the sequencing of cancer genomes and the data analysis at different sites. We are making our findings available to the scientific and diagnostic community so that they can improve their systems and generate more standardized and consistent results.” ![]()

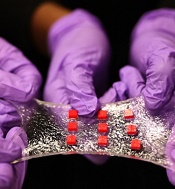

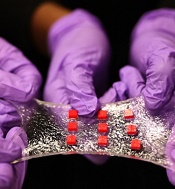

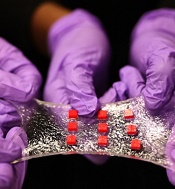

Engineers create ‘smart wound dressing’

A matrix of polymer islands (red) that can encapsulate electronic components

Photo by Melanie Gonick/MIT

Engineers say they have designed a “smart wound dressing,” a sticky, stretchy, gel-like material that can incorporate temperature sensors, LED lights, and other electronics, as well as tiny, drug-delivering reservoirs and channels.

The dressing releases medicine in response to changes in skin temperature and can be designed to light up if, say, medicine is running low.

When the dressing is applied to a highly flexible area, such as the elbow or knee, it stretches with the body, keeping the embedded electronics functional and intact.

The key to the design is a hydrogel matrix developed by Xuanhe Zhao, PhD, of the Massachusetts Institute of Technology in Cambridge.

The hydrogel, which was described in Nature Materials last month, is a rubbery material, mostly composed of water, designed to bond strongly to surfaces such as gold, titanium, aluminum, silicon, glass, and ceramic.

In a paper published in Advanced Materials, Dr Zhao and his colleagues described embedding various electronics within the hydrogel, such as conductive wires, semiconductor chips, LED lights, and temperature sensors.

Dr Zhao said electronics coated in hydrogel may be used not just on the surface of the skin but also inside the body, for example as implanted, biocompatible glucose sensors or even soft, compliant neural probes.

“Electronics are usually hard and dry, but the human body is soft and wet,” Dr Zhao said. “These two systems have drastically different properties. If you want to put electronics in close contact with the human body for applications such as healthcare monitoring and drug delivery, it is highly desirable to make the electronic devices soft and stretchable to fit the environment of the human body. That’s the motivation for stretchable hydrogel electronics.”

A strong and stretchy bond

Typical synthetic hydrogels are brittle, barely stretchable, and adhere weakly to other surfaces.

“They’re often used as degradable biomaterials at the current stage,” Dr Zhao said. “If you want to make an electronic device out of hydrogels, you need to think of long-term stability of the hydrogels and interfaces.”

To get around these challenges, his team came up with a design strategy for robust hydrogels, mixing water with a small amount of selected biopolymers to create soft, stretchy materials with a stiffness of 10 to 100 kilopascals—about the range of human soft tissues. The researchers also devised a method to strongly bond the hydrogel to various nonporous surfaces.

In the new study, the researchers applied their techniques to demonstrate several uses for the hydrogel, including encapsulating a titanium wire to form a transparent, stretchable conductor. In experiments, they stretched the encapsulated wire multiple times and found it maintained constant electrical conductivity.

Dr Zhao also created an array of LED lights embedded in a sheet of hydrogel. When attached to different regions of the body, the array continued working, even when stretched across highly deformable areas such as the knee and elbow.

A versatile matrix

Finally, the group embedded various electronic components within a sheet of hydrogel to create a “smart wound dressing,” comprising regularly spaced temperature sensors and tiny drug reservoirs.

The researchers also created pathways for drugs to flow through the hydrogel, by either inserting patterned tubes or drilling tiny holes through the matrix. They placed the dressing over various regions of the body and found that, even when highly stretched, the dressing continued to monitor skin temperature and release drugs according to the sensor readings.

An immediate application of the technology may be as a stretchable, on-demand treatment for burns or other skin conditions, said Hyunwoo Yuk, a graduate student at MIT.

“It’s a very versatile matrix,” Yuk said. “The unique capability here is, when a sensor senses something different, like an abnormal increase in temperature, the device can, on demand, release drugs to that specific location and select a specific drug from one of the reservoirs, which can diffuse in the hydrogel matrix for sustained release over time.”
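The on-demand behavior Yuk describes resembles a simple control loop: read each temperature sensor, release drug from the associated reservoir when a reading crosses a threshold, and light an indicator when a reservoir runs low. The sketch below models that logic in software. The threshold, dose size, low-level cutoff, and the one-reservoir-per-sensor pairing are all hypothetical; the article does not describe how the actual dressing implements its control.

```python
from dataclasses import dataclass
from typing import List

# Hypothetical parameters, not taken from the published device.
TRIGGER_TEMP_C = 38.0   # skin temperature above which drug is released
DOSE_UL = 0.5           # volume released per trigger
LOW_LEVEL_UL = 1.0      # below this volume, the reservoir's indicator LED turns on

@dataclass
class Reservoir:
    drug: str
    volume_ul: float
    led_on: bool = False

def control_step(temps_c: List[float], reservoirs: List[Reservoir]) -> List[int]:
    """Run one control cycle, pairing sensor i with reservoir i (an assumed layout).

    Returns the indices of the reservoirs that released drug during this cycle.
    """
    released: List[int] = []
    for i, (temp, res) in enumerate(zip(temps_c, reservoirs)):
        # Release only if the reading is abnormal and a full dose remains.
        if temp > TRIGGER_TEMP_C and res.volume_ul >= DOSE_UL:
            res.volume_ul -= DOSE_UL
            released.append(i)
        # Light the indicator when the remaining drug drops below the cutoff.
        res.led_on = res.volume_ul < LOW_LEVEL_UL
    return released

reservoirs = [Reservoir("drug A", 1.0), Reservoir("drug B", 5.0)]
print(control_step([38.6, 36.5], reservoirs))  # [0]
```

In the sketch, reservoir 0 releases one dose because its sensor reads above the threshold, and its indicator turns on because only 0.5 µL remains.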

Delving deeper, Dr Zhao envisions hydrogel as an ideal, biocompatible vehicle for delivering electronics inside the body. He is currently exploring hydrogel’s potential as a carrier for glucose sensors as well as neural probes.

a matrix of polymer islands

(red) that can encapsulate

electronic components

Photo by Melanie Gonick/MIT

Engineers say they have designed “smart wound dressing,” a sticky, stretchy, gel-like material that can incorporate temperature sensors, LED lights, and other electronics, as well as tiny, drug-delivering reservoirs and channels.

The dressing releases medicine in response to changes in skin temperature and can be designed to light up if, say, medicine is running low.

When the dressing is applied to a highly flexible area, such as the elbow or knee, it stretches with the body, keeping the embedded electronics functional and intact.

The key to the design is a hydrogel matrix designed by Xuanhe Zhao, PhD, of the Massachusetts Institute of Technology in Cambridge.

The hydrogel, which was describe in Nature Materials last month, is a rubbery material, mostly composed of water, designed to bond strongly to surfaces such as gold, titanium, aluminum, silicon, glass, and ceramic.

In a paper published in Advanced Materials, Dr Zhao and his colleagues described embedding various electronics within the hydrogel, such as conductive wires, semiconductor chips, LED lights, and temperature sensors.

Dr Zhao said electronics coated in hydrogel may be used not just on the surface of the skin but also inside the body; for example, as implanted, biocompatible glucose sensors, or even soft, compliant neural probes.

“Electronics are usually hard and dry, but the human body is soft and wet,” Dr Zhao said. “These two systems have drastically different properties. If you want to put electronics in close contact with the human body for applications such as healthcare monitoring and drug delivery, it is highly desirable to make the electronic devices soft and stretchable to fit the environment of the human body. That’s the motivation for stretchable hydrogel electronics.”

A strong and stretchy bond

Typical synthetic hydrogels are brittle, barely stretchable, and adhere weakly to other surfaces.

“They’re often used as degradable biomaterials at the current stage,” Dr Zhao said. “If you want to make an electronic device out of hydrogels, you need to think of long-term stability of the hydrogels and interfaces.”

To get around these challenges, his team came up with a design strategy for robust hydrogels, mixing water with a small amount of selected biopolymers to create soft, stretchy materials with a stiffness of 10 to 100 kilopascals—about the range of human soft tissues. The researchers also devised a method to strongly bond the hydrogel to various nonporous surfaces.

In the new study, the researchers applied their techniques to demonstrate several uses for the hydrogel, including encapsulating a titanium wire to form a transparent, stretchable conductor. In experiments, they stretched the encapsulated wire multiple times and found it maintained constant electrical conductivity.

Dr Zhao also created an array of LED lights embedded in a sheet of hydrogel. When attached to different regions of the body, the array continued working, even when stretched across highly deformable areas such as the knee and elbow.

A versatile matrix

Finally, the group embedded various electronic components within a sheet of hydrogel to create a “smart wound dressing,” comprising regularly spaced temperature sensors and tiny drug reservoirs.

The researchers also created pathways for drugs to flow through the hydrogel, by either inserting patterned tubes or drilling tiny holes through the matrix. They placed the dressing over various regions of the body and found that, even when highly stretched, the dressing continued to monitor skin temperature and release drugs according to the sensor readings.

An immediate application of the technology may be as a stretchable, on-demand treatment for burns or other skin conditions, said Hyunwoo Yuk, a graduate student at MIT.

“It’s a very versatile matrix,” Yuk said. “The unique capability here is, when a sensor senses something different, like an abnormal increase in temperature, the device can, on demand, release drugs to that specific location and select a specific drug from one of the reservoirs, which can diffuse in the hydrogel matrix for sustained release over time.”

Delving deeper, Dr Zhao envisions hydrogel to be an ideal, biocompatible vehicle for delivering electronics inside the body. He is currently exploring hydrogel’s potential as a carrier for glucose sensors as well as neural probes. ![]()

a matrix of polymer islands

(red) that can encapsulate

electronic components

Photo by Melanie Gonick/MIT

Engineers say they have designed “smart wound dressing,” a sticky, stretchy, gel-like material that can incorporate temperature sensors, LED lights, and other electronics, as well as tiny, drug-delivering reservoirs and channels.

The dressing releases medicine in response to changes in skin temperature and can be designed to light up if, say, medicine is running low.

When the dressing is applied to a highly flexible area, such as the elbow or knee, it stretches with the body, keeping the embedded electronics functional and intact.

The key to the design is a hydrogel matrix designed by Xuanhe Zhao, PhD, of the Massachusetts Institute of Technology in Cambridge.

The hydrogel, which was describe in Nature Materials last month, is a rubbery material, mostly composed of water, designed to bond strongly to surfaces such as gold, titanium, aluminum, silicon, glass, and ceramic.

In a paper published in Advanced Materials, Dr Zhao and his colleagues described embedding various electronics within the hydrogel, such as conductive wires, semiconductor chips, LED lights, and temperature sensors.

Dr Zhao said electronics coated in hydrogel may be used not just on the surface of the skin but also inside the body; for example, as implanted, biocompatible glucose sensors, or even soft, compliant neural probes.

“Electronics are usually hard and dry, but the human body is soft and wet,” Dr Zhao said. “These two systems have drastically different properties. If you want to put electronics in close contact with the human body for applications such as healthcare monitoring and drug delivery, it is highly desirable to make the electronic devices soft and stretchable to fit the environment of the human body. That’s the motivation for stretchable hydrogel electronics.”

A strong and stretchy bond

Typical synthetic hydrogels are brittle, barely stretchable, and adhere weakly to other surfaces.

“They’re often used as degradable biomaterials at the current stage,” Dr Zhao said. “If you want to make an electronic device out of hydrogels, you need to think of long-term stability of the hydrogels and interfaces.”

To get around these challenges, his team came up with a design strategy for robust hydrogels, mixing water with a small amount of selected biopolymers to create soft, stretchy materials with a stiffness of 10 to 100 kilopascals—about the range of human soft tissues. The researchers also devised a method to strongly bond the hydrogel to various nonporous surfaces.

In the new study, the researchers applied their techniques to demonstrate several uses for the hydrogel, including encapsulating a titanium wire to form a transparent, stretchable conductor. In experiments, they stretched the encapsulated wire multiple times and found it maintained constant electrical conductivity.

Dr Zhao also created an array of LED lights embedded in a sheet of hydrogel. When attached to different regions of the body, the array continued working, even when stretched across highly deformable areas such as the knee and elbow.

A versatile matrix

Finally, the group embedded various electronic components within a sheet of hydrogel to create a “smart wound dressing,” comprising regularly spaced temperature sensors and tiny drug reservoirs.

The researchers also created pathways for drugs to flow through the hydrogel, by either inserting patterned tubes or drilling tiny holes through the matrix. They placed the dressing over various regions of the body and found that, even when highly stretched, the dressing continued to monitor skin temperature and release drugs according to the sensor readings.

An immediate application of the technology may be as a stretchable, on-demand treatment for burns or other skin conditions, said Hyunwoo Yuk, a graduate student at MIT.

“It’s a very versatile matrix,” Yuk said. “The unique capability here is, when a sensor senses something different, like an abnormal increase in temperature, the device can, on demand, release drugs to that specific location and select a specific drug from one of the reservoirs, which can diffuse in the hydrogel matrix for sustained release over time.”
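The sensor-triggered behavior Yuk describes can be sketched as a simple control rule. The Python below is a hypothetical illustration, not the MIT team's design: the 38°C trigger, the dose size, the "running low" level, and the names `Reservoir` and `respond_to_reading` are all invented for this example.

```python
from dataclasses import dataclass


@dataclass
class Reservoir:
    drug: str
    remaining_ul: float  # hypothetical volume left, in microliters


def respond_to_reading(temp_c, reservoirs, drug,
                       threshold_c=38.0, dose_ul=1.0, low_ul=2.0):
    """Hypothetical rule: if a skin-temperature reading exceeds threshold_c,
    release one dose of `drug` from the reservoir that holds it.

    Returns (volume released, whether a low-medicine LED should light).
    All thresholds and doses are placeholders, not values from the study.
    """
    # Select the specific reservoir holding the requested drug
    reservoir = next((r for r in reservoirs if r.drug == drug), None)
    if reservoir is None:
        raise ValueError(f"no reservoir holds {drug!r}")

    released = 0.0
    if temp_c > threshold_c and reservoir.remaining_ul > 0:
        # Release on demand, never more than what is left
        released = min(dose_ul, reservoir.remaining_ul)
        reservoir.remaining_ul -= released

    # Light the LED once the reservoir drops to the "low" level
    led_on = reservoir.remaining_ul <= low_ul
    return released, led_on
```

Keeping one reservoir per drug mirrors the idea of selecting "a specific drug from one of the reservoirs"; in a real dressing, diffusion through the hydrogel would shape how the released dose spreads over time.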

Looking further ahead, Dr Zhao envisions hydrogel as an ideal, biocompatible vehicle for delivering electronics inside the body. He is currently exploring hydrogel’s potential as a carrier for glucose sensors as well as neural probes.

Cancer drug prices vary widely from country to country

Photo by Bill Branson

The price of cancer drugs varies widely between European countries, Australia, and New Zealand, according to a study published in The Lancet Oncology.

The study indicates that, overall, the UK and Mediterranean countries such as Greece, Spain, and Portugal pay the lowest average unit manufacturer prices for a group of 31 originator cancer drugs (new drugs under patent).

And Sweden, Switzerland, and Germany pay the highest prices.

The greatest differences in price were noted for gemcitabine, which costs €209 per vial in New Zealand and €43 in Australia, and zoledronic acid, which costs €330 per vial in New Zealand but €128 in Greece.*

“Public payers in Germany are paying 223% more in terms of official prices for interferon alfa 2b for melanoma and leukemia treatment than those in Greece,” noted study author Sabine Vogler, PhD, of the WHO Collaborating Centre for Pharmaceutical Pricing and Reimbursement Policies in Vienna, Austria.

“For gefitinib to treat non-small cell lung cancer, the price in Germany is 172% higher than in New Zealand.”
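Percentage comparisons like these depend on which price is used as the base. A minimal Python sketch, assuming "X% higher" is measured relative to the lower price, applied to the gemcitabine and zoledronic acid vial prices given above:

```python
def percent_higher(high, low):
    """How much higher `high` is than `low`, as a percentage of the lower price."""
    return (high - low) / low * 100


def percent_lower(high, low):
    """How much lower `low` is than `high`, as a percentage of the higher price."""
    return (high - low) / high * 100


# Originator prices per vial quoted in the article (euros)
print(round(percent_higher(209, 43)))   # gemcitabine, NZ vs Australia -> 386
print(round(percent_higher(330, 128)))  # zoledronic acid, NZ vs Greece -> 158
print(round(percent_lower(209, 43)))    # same gemcitabine gap, NZ as base -> 79
```

The same €166 gemcitabine gap reads as "386% higher" or "79% lower" depending on the base, so a cross-country comparison is only interpretable when the base is stated.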

To uncover these price differences, Dr Vogler and her colleagues reviewed official drug price data from the Pharma Price Information (PPI) service of the Austrian Public Health Institute for 16 European countries**, and from the pharmaceutical schedules in Australia and New Zealand.

The researchers compared what manufacturers charged for a unit (ie, price per tablet or vial) of 31 originator cancer drugs in June 2013.

None of these drugs had a unit price lower than €10. Four drugs (13%) had an average unit manufacturer price between €250 and €500, and 2 drugs (6%) had an average unit price between €500 and €1000.

Seven drugs (23%) had an average unit price higher than €1000. For example, plerixafor cost over €5000 per injection.

The price differences between the highest- and lowest-priced countries ranged from 28% to 50% for a third of the drugs sampled, between 50% and 100% for half of the drugs, and between 100% and 200% for 3 drugs (10%).

The researchers noted that information on real drug prices is scarce. The cancer drug prices they surveyed did not include confidential discounts such as those agreed upon in managed-entry arrangements that are increasingly used in countries such as Australia, Italy, the UK, and the Netherlands.

“Some high-income countries have managed to barter the manufacturers down to lower prices, but these agreements, including the agreed prices, are confidential,” Dr Vogler explained.

“Although these agreements ensure patient access to new drugs, other countries risk overpaying when setting drug prices through the common practice of external price referencing, or international price comparison, because they can only use the official undiscounted prices as a benchmark. There needs to be far more transparency.”

“We hope that our findings will provide concrete evidence for policymakers to take action to address high prices and ensure more transparency in cancer drug pricing so that costs and access to new drugs does not depend on where a patient lives.”

*Gemcitabine and zoledronic acid have generic versions in several countries, and originator prices were decreased in some countries following patent expiry but not in others.

**Austria, Belgium, Denmark, Germany, Greece, Finland, France, Italy, Ireland, the Netherlands, Norway, Portugal, Spain, Sweden, Switzerland, and the UK.

Tools may provide better genome analysis

Photo by Darren Baker

Scientists say they have developed 2 data analysis tools that could help genomics researchers identify genetic drivers of disease with greater efficiency and accuracy.

Details on these tools were published in PLOS Computational Biology and Scientific Reports.

The first tool, MEGENA (for Multiscale Embedded Gene Co-expression Network Analysis), projects gene expression data onto a 3-dimensional sphere.

This allows scientists to study hierarchical organizational patterns in complex networks that are characteristic of diseases such as cancer, obesity, and Alzheimer’s disease.

When tested on data from The Cancer Genome Atlas (TCGA), MEGENA identified novel regulatory targets in breast and lung cancers, outperforming other co-expression analysis methods.

The second tool, SuperExactTest, establishes the first theoretical framework for assessing the statistical significance of multi-set intersections and enables users to compare large sets of data, such as gene sets produced from genome-wide association studies (GWAS) and differential expression analysis.
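The statistical question behind a multi-set intersection test can be made concrete with a small sketch. Assuming each gene set is an independent, uniformly random draw of fixed size from a shared background of N genes, the size of the running intersection after each new set follows a hypergeometric distribution conditioned on the previous intersection size, so the exact null distribution can be built by chaining those steps. This is a hypothetical illustration, not the SuperExactTest package's algorithm or API; `intersection_distribution` and `intersection_pvalue` are names invented here.

```python
from math import comb


def intersection_distribution(universe_size, set_sizes):
    """Exact null distribution of |S_1 ∩ ... ∩ S_k| when each S_i is an
    independent uniform random subset of size set_sizes[i] drawn from a
    background of universe_size items. Returns {size: probability}."""
    n = universe_size
    # The "intersection" of the first set alone has its full size
    dist = {set_sizes[0]: 1.0}
    for m in set_sizes[1:]:
        total = comb(n, m)
        new_dist = {}
        for x, px in dist.items():
            # y of the m new items land inside the current intersection (size x);
            # the remaining m - y must fit among the n - x items outside it
            for y in range(max(0, m - (n - x)), min(x, m) + 1):
                p = comb(x, y) * comb(n - x, m - y) / total  # hypergeometric
                new_dist[y] = new_dist.get(y, 0.0) + px * p
        dist = new_dist
    return dist


def intersection_pvalue(universe_size, set_sizes, observed):
    """Upper-tail probability P(intersection size >= observed) under the null."""
    dist = intersection_distribution(universe_size, set_sizes)
    return sum(p for size, p in dist.items() if size >= observed)
```

For example, `intersection_pvalue(20000, [300, 400, 250], 5)` asks how surprising it would be for 3 random gene sets of those sizes to share at least 5 genes. Exact big-integer binomial coefficients keep the sketch simple but make it slow at genome scale; a practical implementation would work in log space.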

Scientists ran SuperExactTest on existing TCGA and GWAS data, identifying a core set of cancer genes and detecting related patterns among complex diseases.

Both tools come from the Multiscale Network Modeling Laboratory, led by Bin Zhang, PhD, an associate professor at Icahn School of Medicine at Mount Sinai in New York, New York.

“These tools fill important and unmet needs in genomics,” Dr Zhang said. “MEGENA will help scientists flesh out novel pathways and key targets in complex diseases, while SuperExactTest will provide a clearer understanding of the genome by comparing a large number of gene signatures.”

MEGENA and SuperExactTest are available as R packages on Dr Zhang’s website and CRAN (the Comprehensive R Archive Network), a repository of open-source software.

Climate change may alter malaria risk in Africa

Photo by James Gathany

A larger portion of Africa is at high risk for malaria transmission than previously predicted, according to a mapping study published in Vector-Borne and Zoonotic Diseases.

The research also suggests that, under future climate regimes, the area where the disease can be transmitted most easily will shrink, but the total malaria transmission zone in Africa will expand and move into new territory.

Researchers estimate that, by 2080, the year-round, highest-risk transmission zone will move from coastal West Africa, east to the Albertine Rift, between the Democratic Republic of Congo and Uganda.

The area suitable for seasonal, lower-risk transmission will shift north into coastal sub-Saharan Africa.

In addition, some parts of Africa will become too hot for malaria.

The overall expansion of malaria-vulnerable areas will challenge management of the deadly disease, said study author Sadie Ryan, PhD, of the University of Florida in Gainesville.

She noted that malaria will arrive in new areas, posing a risk to new populations, and the shift of endemic and epidemic areas will require public health management changes.

“Mapping a mathematical predictive model of a climate-driven infectious disease like malaria allows us to develop tools to understand both spatial and seasonal dynamics, and to anticipate the future changes to those dynamics,” Dr Ryan said.

She and her colleagues used a model that takes into account how mosquitoes and the malaria parasite respond to temperature. This model puts the optimal transmission temperature for malaria at 25 degrees Celsius, 6 degrees lower than previous predictive models did.
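The study's model is not reproduced here. As a rough illustration of the general idea, where overall transmission suitability is built from temperature-dependent traits that each rise and fall between a minimum and a maximum temperature, the Python sketch below multiplies a few such curves and searches for the temperature where the product peaks. The Briere (asymmetric) and quadratic (symmetric) curve shapes are commonly used in thermal-response modeling; every parameter value and trait label below is a hypothetical placeholder, so the peak this sketch finds says nothing about the 25°C result.

```python
import math


def briere(T, c, T0, Tm):
    """Asymmetric thermal response: zero outside (T0, Tm), drops steeply near Tm."""
    if T <= T0 or T >= Tm:
        return 0.0
    return c * T * (T - T0) * math.sqrt(Tm - T)


def quadratic(T, c, T0, Tm):
    """Symmetric thermal response: zero outside (T0, Tm), peaks at their midpoint."""
    if T <= T0 or T >= Tm:
        return 0.0
    return -c * (T - T0) * (T - Tm)


# Hypothetical trait curves: placeholder parameters, NOT fitted values
TRAITS = [
    lambda T: briere(T, 2e-4, 10.0, 40.0),    # e.g. mosquito biting rate
    lambda T: quadratic(T, 1e-3, 5.0, 35.0),  # e.g. adult mosquito survival
    lambda T: briere(T, 1e-4, 15.0, 38.0),    # e.g. parasite development rate
]


def suitability(T):
    """Relative transmission suitability: product of all trait responses."""
    return math.prod(trait(T) for trait in TRAITS)


def optimal_temperature(lo=0.0, hi=45.0, step=0.1):
    """Grid search for the temperature (deg C) that maximizes suitability."""
    n_points = int(round((hi - lo) / step)) + 1
    grid = [lo + i * step for i in range(n_points)]
    return max(grid, key=suitability)
```

Because the traits are multiplied, transmission is possible only where every trait is nonzero, and the optimum sits below the upper limit of the most heat-sensitive trait. This is one intuition for why some regions could become too hot for malaria.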

Dr Ryan said this work will play an important role in helping public health officials and non-governmental organizations plan for the efficient deployment of resources and interventions to control future outbreaks of malaria and their associated societal costs.

This study expands upon the team’s prior work at the National Center for Ecological Analysis and Synthesis at the University of California, Santa Barbara.

How a genetic locus protects HSCs in the bone marrow

The Dlk1-Gtl2 locus plays a critical role in protecting hematopoietic stem cells (HSCs), according to preclinical research.

The study suggests the mammalian imprinted gene Gtl2, located on mouse chromosome 12qF1, protects adult HSCs by restricting metabolic activity in the cells’ mitochondria.

This work indicates that Gtl2 may be useful as a biomarker to determine if cells are normal or potentially cancerous.

Linheng Li, PhD, of the Stowers Institute for Medical Research in Kansas City, Missouri, and his colleagues described this research in Cell Stem Cell.

The researchers knew that the Dlk1-Gtl2 locus produces multiple non-coding RNAs from the maternally inherited allele, including the largest microRNA cluster in the mammalian genome.

“Most of the non-coding RNAs at the Gtl2 locus have been documented to function as tumor suppressors to maintain normal cell function,” said study author Pengxu Qian, PhD, also from the Stowers Institute for Medical Research.

However, the role of this locus in HSCs was unclear, so the team studied HSCs in mice, using transcriptome profiling to analyze 17 hematopoietic cell types.

The analyses revealed that non-coding RNAs expressed from the Gtl2 locus are predominantly enriched in fetal liver HSCs and adult long-term HSCs, and these non-coding RNAs sustain long-term HSC functionality.

Gtl2’s megacluster of microRNA suppresses the mTOR signaling pathway and downstream mitochondrial biogenesis and metabolism, thereby limiting the reactive oxygen species (ROS) that can damage adult stem cells.

When the researchers deleted the Dlk1-Gtl2 locus from the maternally inherited allele in HSCs, they observed increases in mitochondrial biogenesis, metabolic activity, and ROS levels, which led to cell death.

Dr Li said these findings suggest Gtl2 could be used as a biomarker because it could help label dormant (or reserve) stem cells in normal or potentially cancerous stem cell populations.

The addition of a fluorescent tag to the Gtl2 locus could allow researchers to mark other adult stem cells in the gut, hair follicle, muscle, and neural systems.

New insight into blood vessel formation

Image by Louis Heiser and Robert Ackland

Research published in Cell Reports has provided new insight into cellular movement during angiogenesis.

Blood vessel growth was previously thought to occur in the direction of the vessel tip, stretching in a manner that left the lead cells behind.

However, recent studies have suggested that both tip cells and trailing cells move at different speeds and in different directions, changing positions to extend the blood vessel into the surrounding matrix.

With the current study, researchers wanted to determine how to gain control of the complex cellular motion involved in angiogenesis. They approached the problem using a combination of biology, mathematical models, and computer simulations.

“We watched the movement of the vascular endothelial cells in real time, created a mathematical model of the movement, and then performed simulations on a computer,” said Koichi Nishiyama, MD, PhD, of Kumamoto University in Japan.

“We found that we could reproduce blood vessel growth and the motion of the entire cellular structure by using only very simple cell-autonomous mechanisms. The mechanisms, such as speed and direction of movement, of every single cell change stochastically. It’s really interesting.”

Dr Nishiyama and his colleagues attempted to increase the accuracy of their simulation by adding a new rule to the mathematical model. This rule reduced the movement of cells at the tip of the blood vessel as the distance between tip cells and subsequent cells increased.

The researchers also conducted an experiment with living cells to test whether the cell movement predicted by the simulation actually occurs biologically.

Using a laser, they widened the distance between the tip cells and the cells following them. As the simulations predicted, the forward movement of the tip cells stopped.
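To make the modeling rule described above a little more concrete, here is a toy sketch in Python. It is not the Kumamoto group's model, whose equations are not given in this article. It assumes a one-dimensional chain of cells, uniformly random speeds, a forward-direction bias of 0.7, and a linear damping rule, all of which are illustrative choices. The names `tip_damping`, `step`, and `simulate` are hypothetical. Each cell moves stochastically and independently, the chain is re-sorted after every step so a trailing cell can take over the tip, and the tip cell's step shrinks as the gap to the next cell grows. A crude stand-in for the laser experiment pushes the trailing cells back to open a gap.

```python
import random


def tip_damping(gap, max_gap):
    """Hypothetical rule: scale the tip cell's step down linearly as the gap
    to the next cell grows, reaching zero once gap >= max_gap."""
    return max(0.0, 1.0 - gap / max_gap)


def step(positions, rng, max_gap, mean_speed=1.0, forward_bias=0.7):
    """Move every cell once along a 1D vessel axis.

    positions is sorted front to back, so positions[0] is the current tip.
    Each cell independently draws a random speed and direction
    (cell-autonomous, stochastic motion); only the tip is damped by the gap.
    """
    moved = []
    for i, x in enumerate(positions):
        speed = rng.uniform(0.0, 2.0 * mean_speed)
        direction = 1.0 if rng.random() < forward_bias else -1.0
        dx = speed * direction
        if i == 0 and len(positions) > 1:
            dx *= tip_damping(positions[0] - positions[1], max_gap)
        moved.append(x + dx)
    # Re-sort so a trailing cell that overtakes the tip becomes the new tip.
    return sorted(moved, reverse=True)


def simulate(n_cells=5, n_steps=200, seed=0, max_gap=5.0,
             ablate_at=None, ablation_gap=10.0):
    """Return the tip position after each step.

    If ablate_at is set, every trailing cell is pushed back by ablation_gap
    at that step: a crude, hypothetical stand-in for widening the gap
    between tip and trailing cells with a laser.
    """
    rng = random.Random(seed)
    positions = [float(-i) for i in range(n_cells)]  # tip at 0, unit spacing
    tip_track = []
    for t in range(n_steps):
        if ablate_at is not None and t == ablate_at:
            positions = [positions[0]] + [x - ablation_gap for x in positions[1:]]
        positions = step(positions, rng, max_gap)
        tip_track.append(positions[0])
    return tip_track


if __name__ == "__main__":
    control = simulate(seed=3)
    ablated = simulate(seed=3, ablate_at=50)
    print("tip advance, control:", round(control[-1] - control[0], 2))
    print("tip around ablation:", [round(x, 2) for x in ablated[48:54]])
```

In this sketch, once the artificial gap exceeds `max_gap`, the tip's step is multiplied by zero, so the printed tip positions stay flat for several steps after step 50 until the trailing cells close the gap. The exact numbers depend on the seed and the assumed parameters.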

“We found that complex cell motility, such as that seen during blood vessel growth, is a process in which coexisting cells successfully control themselves spontaneously and move in a coordinated manner through the influence of adjacent cells,” Dr Nishiyama said.

“The ability to directly control this phenomenon was made apparent in our study. These results will add to the understanding of the formation of not only blood vessels but also various tissues and the fundamental mechanisms of the origins of the organism.”

Clinician computer use linked to patient satisfaction

Photo courtesy of NIH

In a small study, patients treated for chronic conditions at safety-net clinics reported lower satisfaction with their care when it involved “high computer use” by clinicians.

Patients were significantly less likely to rate their care as “excellent” if clinicians spent a great deal of time on the computer during visits—silently reviewing data or typing and failing to make consistent eye contact.

Neda Ratanawongsa, MD, of the University of California, San Francisco, and her colleagues conducted this research and described the results in a letter to JAMA Internal Medicine.

The researchers noted that safety-net clinics serve populations with limited health literacy and limited proficiency in English who may experience communication barriers that can contribute to disparities in care and health.

So the team wanted to assess clinician computer use and communication with patients treated for chronic disease in safety-net clinics. The study was conducted over 2 years at an academically affiliated public hospital with a basic electronic health record.

The study included 47 English- or Spanish-speaking patients receiving primary and subspecialty care. The researchers recorded 71 encounters between these patients and 39 clinicians.

Clinician computer use was quantified by the amount of computer data reviewed, the amount of typing and mouse clicking, eye contact with patients, and noninteractive pauses.

Patients whose encounters involved high computer use were less likely than those with low computer use to rate their care as excellent (48% vs 83%, P=0.04).

And clinicians in encounters with high computer use engaged in significantly more negative rapport building (P<0.01).

The researchers said this study revealed “observable communication differences” according to clinicians’ computer use. They noted that social rapport building can increase patient satisfaction, but concurrent computer use may inhibit authentic engagement.
