A Concise Tool for Measuring Care Coordination from the Provider’s Perspective in the Hospital Setting

Care Coordination has been defined as “…the deliberate organization of patient care activities between two or more participants (including the patient) involved in a patient’s care to facilitate the appropriate delivery of healthcare services.”1 The Institute of Medicine identified care coordination as a key strategy to improve the American healthcare system,2 and evidence has been building that well-coordinated care improves patient outcomes and reduces healthcare costs associated with chronic conditions.3-5 In 2012, Johns Hopkins Medicine was awarded a Healthcare Innovation Award by the Centers for Medicare & Medicaid Services to improve coordination of care across the continuum of care for adult patients admitted to Johns Hopkins Hospital (JHH) and Johns Hopkins Bayview Medical Center (JHBMC), and for high-risk low-income Medicare and Medicaid beneficiaries receiving ambulatory care in targeted zip codes. The purpose of this project, known as the Johns Hopkins Community Health Partnership (J-CHiP), was to improve health and healthcare and to reduce healthcare costs. The acute care component of the program consisted of a bundle of interventions focused on improving coordination of care for all patients, including a “bridge to home” discharge process, as they transitioned back to the community from inpatient admission. 
The bundle included the following: early screening for discharge planning to predict needed postdischarge services; discussion in daily multidisciplinary rounds about goals and priorities of the hospitalization and potential postdischarge needs; patient and family education and self-care management; enhanced medication management, including the option of “medications in hand” at the time of discharge; postdischarge telephone follow-up by nurses; and, for patients identified as high-risk, a “transition guide” (a nurse who works with the patient via home visits and by phone to optimize compliance with care for 30 days postdischarge).6 While the primary endpoints of the J-CHiP program were to improve clinical outcomes and reduce healthcare costs, we were also interested in the impact of the program on care coordination processes in the acute care setting. This created the need for an instrument to measure healthcare professionals’ views of care coordination in their immediate work environments.

We began our search for existing measures by reviewing the Care Coordination Measures Atlas published in 2014.7 Although this report evaluates over 80 different measures of care coordination, most of them focus on the perspective of the patient and/or family members, on specific conditions, and on primary care or outpatient settings.7,8 We were unable to identify an existing measure from the provider perspective, designed for the inpatient setting, that was both brief and comprehensive enough to cover a range of care coordination domains.8

Consequently, our first aim was to develop a brief, comprehensive tool to measure care coordination from the perspective of hospital inpatient staff that could be used to compare different units or types of providers, or to conduct longitudinal assessment. The second aim was to conduct a preliminary evaluation of the tool in our healthcare setting. This evaluation included assessing its psychometric properties, describing provider perceptions of care coordination after the implementation of J-CHiP, and exploring potential differences among departments, among types of professionals, and between the 2 hospitals.

METHODS

Development of the Care Coordination Questionnaire

The survey was developed in collaboration with leaders of the J-CHiP Acute Care Team. We met at the outset and on multiple subsequent occasions to align survey domains with the main components of the J-CHiP acute care intervention and to ensure that the survey would be relevant and understandable to professionals from a variety of disciplines, including physicians, nurses, social workers, physical therapists, and other health professionals. Care was taken to avoid redundancy with existing evaluation efforts and to minimize respondent burden. This process helped to ensure the content validity of the items, the usefulness of the results, and the future usability of the tool.

We modeled the Care Coordination Questionnaire (CCQ) after the Safety Attitudes Questionnaire (SAQ),9 a widely used survey that is deployed approximately annually at JHH and JHBMC. While the SAQ focuses on healthcare provider attitudes about issues relevant to patient safety (often referred to as safety climate or safety culture), this new tool was designed to focus on healthcare professionals’ attitudes about care coordination. Similar to the way that the SAQ “elicits a snapshot of the safety climate through surveys of frontline worker perceptions,” we sought to elicit a picture of our care coordination climate through a survey of frontline hospital staff.

The CCQ was built upon the domains and approaches to care coordination described in the Agency for Healthcare Research and Quality Care Coordination Measures Atlas.7 This report identifies 9 mechanisms for achieving care coordination: Establish Accountability or Negotiate Responsibility; Communicate; Facilitate Transitions; Assess Needs and Goals; Create a Proactive Plan of Care; Monitor, Follow Up, and Respond to Change; Support Self-Management Goals; Link to Community Resources; and Align Resources with Patient and Population Needs. It also identifies 5 broad approaches commonly used to improve the delivery of healthcare: Teamwork Focused on Coordination, Healthcare Home, Care Management, Medication Management, and Health IT-Enabled Coordination.7 We generated at least 1 item to represent each of 8 of the 9 mechanisms, as well as the broad approach described as Teamwork Focused on Coordination. After developing an initial set of items, we sought input from 3 senior leaders of the J-CHiP Acute Care Team to determine whether the items covered the care coordination domains of interest, and to provide feedback on content validity. To test the interpretability of survey items and consistency across professional groups, we sent an initial version of the survey questions to at least 1 person from each of the following professional groups: hospitalist, social worker, case manager, clinical pharmacist, and nurse. We asked them to review all of our survey questions and to provide feedback on all aspects of the questions, such as whether they believed the questions were relevant and understandable to the members of their professional discipline and whether the wording was appropriate. Modifications were made to the content and wording of the questions based on the feedback received.
The final draft of the questionnaire was reviewed by the leadership team of the J-CHiP Acute Care Team to ensure its usefulness in providing actionable information.

The resulting 12-item questionnaire used a 5-point Likert response scale ranging from 1 = “disagree strongly” to 5 = “agree strongly,” and an additional option of “not applicable (N/A).” To help assess construct validity, a global question was added at the end of the questionnaire asking, “Overall, how would you rate the care coordination at the hospital of your primary work setting?” The response was measured on a 10-point Likert-type scale ranging from 1 = “totally uncoordinated care” to 10 = “perfectly coordinated care” (see Appendix). In addition, the questionnaire requested information about the respondents’ gender, position, and their primary unit, department, and hospital affiliation.

Data Collection Procedures

An invitation to complete an anonymous questionnaire was sent to the following inpatient care professionals: all nursing staff working on care coordination units in the departments of medicine, surgery, and neurology/neurosurgery, as well as physicians, pharmacists, acute care therapists (eg, occupational and physical therapists), and other frontline staff. All healthcare staff fitting these criteria were sent an e-mail with a request to fill out the survey online using Qualtrics™ (Qualtrics Labs Inc., Provo, UT), followed by multiple reminders. The participants worked either at the JHH (a 1194-bed tertiary academic medical center in Baltimore, MD) or the JHBMC (a 440-bed academic community hospital located nearby). Data were collected from October 2015 through January 2016.

Analysis

Means and standard deviations were calculated by treating the responses as continuous variables. We tried 3 different methods to handle missing data: (1) without imputation, (2) imputing the mean value of each item, and (3) substituting a neutral score. Because all 3 methods produced very similar results, we treated the N/A responses as missing values without imputation for simplicity of analysis. We used STATA 13.1 (Stata Corporation, College Station, Texas) to analyze the data.
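The three approaches to N/A responses can be illustrated with a minimal Python sketch. It is not the Stata code used in the study: the function name, the example responses, and the choice of 3 (the midpoint of the 5-point scale) as the "neutral score" are assumptions made for illustration only.

```python
from statistics import mean

NEUTRAL = 3  # assumption: midpoint of the 1-5 agreement scale

def item_mean(responses, method="none"):
    """Mean of one item's responses; None marks an N/A answer."""
    observed = [r for r in responses if r is not None]
    if method == "none":
        # (1) treat N/A as missing, no imputation
        values = observed
    elif method == "item_mean":
        # (2) replace each N/A with the mean of the observed answers
        fill = mean(observed)
        values = [fill if r is None else r for r in responses]
    elif method == "neutral":
        # (3) replace each N/A with the neutral score
        values = [NEUTRAL if r is None else r for r in responses]
    else:
        raise ValueError(f"unknown method: {method}")
    return mean(values)

# Made-up responses to a single item, for illustration only.
item = [5, 4, None, 4, 3, 5, None, 4]
results = {m: item_mean(item, m) for m in ("none", "item_mean", "neutral")}
for name, value in results.items():
    print(f"{name:>10}: {value:.3f}")
```

Imputing the item mean leaves the item mean unchanged by construction, so for item-level means methods (1) and (2) can differ only in spread and in any scores computed across items.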

To identify subscales, we performed exploratory factor analysis on responses to the 12 specific items. Promax rotation was selected based on the simple structure. Subscale scores for each respondent were generated by computing the mean of responses to the items in the subscale. Internal consistency reliability of the subscales was estimated using Cronbach’s alpha. We calculated Pearson correlation coefficients for the items in each subscale, and examined Cronbach’s alpha deleting each item in turn. For each of the subscales identified and the global scale, we calculated the mean, standard deviation, median, and interquartile range. Although the distributions of scores tended to be non-normal, we report means and standard deviations to increase interpretability. We also calculated the percent scoring at the ceiling (highest possible score).
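The subscale computations described above can be sketched in a few lines of Python. This is an illustrative sketch rather than the study's Stata code: the function names and the example responses are hypothetical, and the alpha calculation assumes complete cases (no N/A responses).

```python
from statistics import mean, variance

def cronbach_alpha(rows):
    """Cronbach's alpha for complete cases.

    rows: one list of k item scores per respondent.
    alpha = k/(k-1) * (1 - sum of item variances / variance of total score)
    """
    k = len(rows[0])
    item_vars = [variance([row[i] for row in rows]) for i in range(k)]
    total_var = variance([sum(row) for row in rows])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)

def alpha_if_item_deleted(rows):
    """Alpha recomputed with each item left out in turn."""
    k = len(rows[0])
    return [
        cronbach_alpha([[x for j, x in enumerate(row) if j != i] for row in rows])
        for i in range(k)
    ]

def subscale_score(row):
    """Mean of the answered items; None marks N/A."""
    answered = [x for x in row if x is not None]
    return mean(answered) if answered else None

def percent_at_ceiling(scores, ceiling=5):
    """Percent of non-missing scores equal to the highest possible score."""
    valid = [s for s in scores if s is not None]
    return 100 * sum(s == ceiling for s in valid) / len(valid)

# Made-up responses from 5 respondents to a 3-item subscale.
rows = [[4, 5, 4], [3, 3, 4], [5, 5, 5], [2, 3, 2], [4, 4, 5]]
alpha = cronbach_alpha(rows)
scores = [subscale_score(row) for row in rows]
print(round(alpha, 3), alpha_if_item_deleted(rows), percent_at_ceiling(scores))
```

If alpha rises noticeably when an item is deleted, that item is a candidate for review, which is the purpose of the delete-one check.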

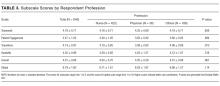

We analyzed the data with 3 research questions in mind: Was there a difference in perceptions of care coordination between (1) staff affiliated with the 2 different hospitals, (2) staff affiliated with different clinical departments, or (3) staff with different professional roles? For comparisons by hospital, department, and type of professional, nonparametric tests (Wilcoxon rank-sum and Kruskal-Wallis tests) were used with the level of statistical significance set at 0.05. The comparisons between hospitals and between departments were made only among nurses to minimize the confounding effect of differing distributions of professionals. For these comparisons, we tested the distribution of “years in specialty” between hospitals and departments using Pearson’s χ² test. The difference was not statistically significant (P = 0.167 for hospitals, and P = 0.518 for departments), so we assumed that the potential confounding effect of this variable was negligible in this analysis. The comparison of scores within each professional group used the Friedman test. Pearson’s χ² test was used to compare the baseline characteristics between the 2 hospitals.
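For readers unfamiliar with the two-group comparison, the following Python sketch shows a Wilcoxon rank-sum test using the normal approximation with a tie-corrected variance. It is one common formulation and is not taken from the study's Stata output; the function names and the example scores are hypothetical.

```python
from math import sqrt
from statistics import NormalDist

def midranks(values):
    """Ranks starting at 1; tied values share their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # average of positions i..j, converted to 1-based
        for t in range(i, j + 1):
            ranks[order[t]] = avg
        i = j + 1
    return ranks

def rank_sum_test(a, b):
    """Two-sided Wilcoxon rank-sum test (normal approximation, tie-corrected)."""
    n1, n2 = len(a), len(b)
    n = n1 + n2
    ranks = midranks(list(a) + list(b))
    w = sum(ranks[:n1])              # rank sum of the first group
    expected = n1 * (n + 1) / 2      # its expectation under the null hypothesis
    mean_rank = (n + 1) / 2
    # Variance of the rank sum; reduces to n1*n2*(n+1)/12 when there are no ties.
    var = n1 * n2 / (n * (n - 1)) * sum((r - mean_rank) ** 2 for r in ranks)
    z = (w - expected) / sqrt(var)
    p = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p

# Made-up subscale scores for nurses at two hospitals, for illustration only.
hospital_a = [4.0, 4.5, 3.5, 5.0, 4.0, 3.0]
hospital_b = [3.5, 4.0, 4.5, 3.0, 4.0, 3.5]
z, p = rank_sum_test(hospital_a, hospital_b)
print(f"z = {z:.3f}, P = {p:.3f}")
```

Likert-type scores produce many ties, which is why the variance is computed from the midranks rather than from the no-ties formula.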

RESULTS

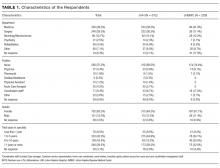

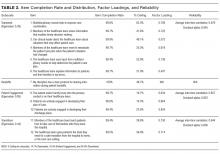

Among the 1486 acute care professionals asked to participate in the survey, 841 completed the questionnaire (response rate 56.6%). Table 1 shows the characteristics of the participants from each hospital. Table 2 summarizes the item response rates, proportion scoring at the ceiling, and weighting from the factor analysis. All items had completion rates of 99.2% or higher, with N/A responses ranging from 0% (item 2) to 3.1% (item 7). The percent scoring at the ceiling was 1.7% for the global item and ranged from 18.3% up to 63.3% for other individual items.

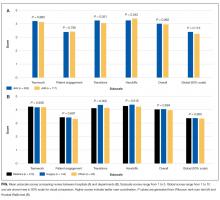

We also examined differences in perceptions of care coordination among nursing units to illustrate the tool’s ability to detect variation in Patient Engagement subscale scores for JHH nurses (see Appendix).

DISCUSSION

This study resulted in one of the first measurement tools to succinctly measure multiple aspects of care coordination in the hospital from the perspective of healthcare professionals. Given the hectic work environment of healthcare professionals, and the increasing emphasis on collecting data for evaluation and improvement, it is important to minimize respondent burden. This effort was catalyzed by a multifaceted initiative to redesign acute care delivery and promote seamless transitions of care, supported by the Center for Medicare & Medicaid Innovation. In initial testing, this questionnaire showed evidence of reliability and validity. It was encouraging to find that the preliminary psychometric performance of the measure was very similar in 2 different settings: a tertiary academic hospital and a community hospital.

Our analysis of the survey data explored potential differences between the 2 hospitals, among different types of healthcare professionals and across different departments. Although we expected differences, we had no specific hypotheses about what those differences might be, and, in fact, did not observe any substantial differences. This could be taken to indicate that the intervention was uniformly and successfully implemented in both hospitals, and engaged various professionals in different departments. The ability to detect differences in care coordination at the nursing unit level could also prove to be beneficial for more precisely targeting where process improvement is needed. Further data collection and analyses should be conducted to more systematically compare units and to help identify those where practice is most advanced and those where improvements may be needed. It would also be informative to link differences in care coordination scores with patient outcomes. In addition, differences identified on specific domains between professional groups could be helpful to identify where greater efforts are needed to improve interdisciplinary practice. Sampling strategies stratified by provider type would need to be targeted to make this kind of analysis informative.

The consistently lower scores observed for patient engagement, from the perspective of care professionals in all groups, suggest that this is an area where improvement is needed. These findings are consistent with published reports on the common failure by hospitals to include patients as members of their own care team. In addition to measuring care processes from the perspective of frontline healthcare workers, future evaluations within the healthcare system would also benefit from including data collected from the perspective of the patient and family.

This study had some limitations. First, there may be more than 4 domains of care coordination that are important and can be measured in the acute care setting from the provider perspective. However, the addition of more domains should be balanced against practicality and respondent burden. It may be possible to further clarify priority domains in hospital settings as opposed to the primary care setting. Future research should be directed to find these areas and to develop a more comprehensive, yet still concise, measurement instrument. Second, the tool was developed to measure the impact of a large-scale intervention, and to fit into the specific context of 2 hospitals. Therefore, it should be tested in different settings of hospital care to see how it performs. However, virtually all hospitals in the United States today are adapting to changes in both financing and healthcare delivery. A tool such as the one described in this paper could be helpful to many organizations. Third, the scoring system for the overall scale score is not weighted and therefore reflects teamwork more than other components of care coordination, which are represented by fewer items. In general, we believe that use of the subscale scores may be more informative. Alternative scoring systems might also be proposed, including item weighting based on factor scores.

For the purposes of evaluation in this specific instance, we only collected data at a single point in time, after the intervention had been deployed. Thus, we were not able to evaluate the effectiveness of the J-CHiP intervention. We also did not intend to focus too much on the differences between units, given the limited number of respondents from individual units. It would be useful to collect more data at future time points, both to test the responsiveness of the scales and to evaluate the impact of future interventions at both the hospital and unit level.

The preliminary data from this study have generated insights about gaps in current practice, such as in engaging patients in the inpatient care process. They have also increased awareness among hospital leaders of the need to achieve high reliability in the adoption of new procedures and interdisciplinary practice. This tool might be used to find areas in need of improvement, to evaluate the effect of initiatives to improve care coordination, to monitor the change over time in the perception of care coordination among healthcare professionals, and to develop better intervention strategies for coordination activities in acute care settings. Additional research is needed to provide further evidence for the reliability and validity of this measure in diverse settings.

Disclosure

The project described was supported by Grant Number 1C1CMS331053-01-00 from the US Department of Health and Human Services, Centers for Medicare & Medicaid Services. The contents of this publication are solely the responsibility of the authors and do not necessarily represent the official views of the US Department of Health and Human Services or any of its agencies. The research presented was conducted by the awardee. Results may or may not be consistent with or confirmed by the findings of the independent evaluation contractor.

The authors have no other disclosures.

1. McDonald KM, Sundaram V, Bravata DM, et al. Closing the Quality Gap: A Critical Analysis of Quality Improvement Strategies (Vol. 7: Care Coordination). Technical Reviews, No. 9.7. Rockville (MD): Agency for Healthcare Research and Quality (US); 2007.

2. Adams K, Corrigan J. Priority areas for national action: transforming health care quality. Washington, DC: National Academies Press; 2003.

3. Renders CM, Valk GD, Griffin S, Wagner EH, Eijk JT, Assendelft WJ. Interventions to improve the management of diabetes mellitus in primary care, outpatient and community settings. Cochrane Database Syst Rev. 2001(1):CD001481.

4. McAlister FA, Lawson FM, Teo KK, Armstrong PW. A systematic review of randomized trials of disease management programs in heart failure. Am J Med. 2001;110(5):378-384.

5. Bruce ML, Raue PJ, Reilly CF, et al. Clinical effectiveness of integrating depression care management into medicare home health: the Depression CAREPATH Randomized trial. JAMA Intern Med. 2015;175(1):55-64.

6. Berkowitz SA, Brown P, Brotman DJ, et al. Case Study: Johns Hopkins Community Health Partnership: A model for transformation. Healthc (Amst). 2016;4(4):264-270.

7. McDonald KM, Schultz E, Albin L, et al. Care Coordination Measures Atlas Version 4. Rockville, MD: Agency for Healthcare Research and Quality; 2014.

8. Schultz EM, Pineda N, Lonhart J, Davies SM, McDonald KM. A systematic review of the care coordination measurement landscape. BMC Health Serv Res. 2013;13:119.

9. Sexton JB, Helmreich RL, Neilands TB, et al. The Safety Attitudes Questionnaire: psychometric properties, benchmarking data, and emerging research. BMC Health Serv Res. 2006;6:44.

Care Coordination has been defined as “…the deliberate organization of patient care activities between two or more participants (including the patient) involved in a patient’s care to facilitate the appropriate delivery of healthcare services.”1 The Institute of Medicine identified care coordination as a key strategy to improve the American healthcare system,2 and evidence has been building that well-coordinated care improves patient outcomes and reduces healthcare costs associated with chronic conditions.3-5 In 2012, Johns Hopkins Medicine was awarded a Healthcare Innovation Award by the Centers for Medicare & Medicaid Services to improve coordination of care across the continuum of care for adult patients admitted to Johns Hopkins Hospital (JHH) and Johns Hopkins Bayview Medical Center (JHBMC), and for high-risk low-income Medicare and Medicaid beneficiaries receiving ambulatory care in targeted zip codes. The purpose of this project, known as the Johns Hopkins Community Health Partnership (J-CHiP), was to improve health and healthcare and to reduce healthcare costs. The acute care component of the program consisted of a bundle of interventions focused on improving coordination of care for all patients, including a “bridge to home” discharge process, as they transitioned back to the community from inpatient admission. 
The bundle included the following: early screening for discharge planning to predict needed postdischarge services; discussion in daily multidisciplinary rounds about goals and priorities of the hospitalization and potential postdischarge needs; patient and family self-care management; education enhanced medication management, including the option of “medications in hand” at the time of discharge; postdischarge telephone follow-up by nurses; and, for patients identified as high-risk, a “transition guide” (a nurse who works with the patient via home visits and by phone to optimize compliance with care for 30 days postdischarge).6 While the primary endpoints of the J-CHiP program were to improve clinical outcomes and reduce healthcare costs, we were also interested in the impact of the program on care coordination processes in the acute care setting. This created the need for an instrument to measure healthcare professionals’ views of care coordination in their immediate work environments.

We began our search for existing measures by reviewing the Coordination Measures Atlas published in 2014.7 Although this report evaluates over 80 different measures of care coordination, most of them focus on the perspective of the patient and/or family members, on specific conditions, and on primary care or outpatient settings.7,8 We were unable to identify an existing measure from the provider perspective, designed for the inpatient setting, that was both brief but comprehensive enough to cover a range of care coordination domains.8

Consequently, our first aim was to develop a brief, comprehensive tool to measure care coordination from the perspective of hospital inpatient staff that could be used to compare different units or types of providers, or to conduct longitudinal assessment. The second aim was to conduct a preliminary evaluation of the tool in our healthcare setting, including to assess its psychometric properties, to describe provider perceptions of care coordination after the implementation of J-CHiP, and to explore potential differences among departments, types of professionals, and between the 2 hospitals.

METHODS

Development of the Care Coordination Questionnaire

The survey was developed in collaboration with leaders of the J-CHiP Acute Care Team. We met at the outset and on multiple subsequent occasions to align survey domains with the main components of the J-CHiP acute care intervention and to assure that the survey would be relevant and understandable to a variety of multidisciplinary professionals, including physicians, nurses, social workers, physical therapists, and other health professionals. Care was taken to avoid redundancy with existing evaluation efforts and to minimize respondent burden. This process helped to ensure the content validity of the items, the usefulness of the results, and the future usability of the tool.

We modeled the Care Coordination Questionnaire (CCQ) after the Safety Attitudes Questionnaire (SAQ),9 a widely used survey that is deployed approximately annually at JHH and JHBMC. While the SAQ focuses on healthcare provider attitudes about issues relevant to patient safety (often referred to as safety climate or safety culture), this new tool was designed to focus on healthcare professionals’ attitudes about care coordination. Similar to the way that the SAQ “elicits a snapshot of the safety climate through surveys of frontline worker perceptions,” we sought to elicit a picture of our care coordination climate through a survey of frontline hospital staff.

The CCQ was built upon the domains and approaches to care coordination described in the Agency for Healthcare Research and Quality Care Coordination Atlas.3 This report identifies 9 mechanisms for achieving care coordination, including the following: Establish Accountability or Negotiate Responsibility; Communicate; Facilitate Transitions; Assess Needs and Goals; Create a Proactive Plan of Care; Monitor, Follow Up, and Respond to Change; Support Self-Management Goals; Link to Community Resources; and Align Resources with Patient and Population Needs; as well as 5 broad approaches commonly used to improve the delivery of healthcare, including Teamwork Focused on Coordination, Healthcare Home, Care Management, Medication Management, and Health IT-Enabled Coordination.7 We generated at least 1 item to represent 8 of the 9 domains, as well as the broad approach described as Teamwork Focused on Coordination. After developing an initial set of items, we sought input from 3 senior leaders of the J-CHiP Acute Care Team to determine if the items covered the care coordination domains of interest, and to provide feedback on content validity. To test the interpretability of survey items and consistency across professional groups, we sent an initial version of the survey questions to at least 1 person from each of the following professional groups: hospitalist, social worker, case manager, clinical pharmacist, and nurse. We asked them to review all of our survey questions and to provide us with feedback on all aspects of the questions, such as whether they believed the questions were relevant and understandable to the members of their professional discipline, the appropriateness of the wording of the questions, and other comments. Modifications were made to the content and wording of the questions based on the feedback received. 
The final draft of the questionnaire was reviewed by the leadership team of the J-CHiP Acute Care Team to ensure its usefulness in providing actionable information.

The resulting 12-item questionnaire used a 5-point Likert response scale ranging from 1 = “disagree strongly” to 5 = “agree strongly,” and an additional option of “not applicable (N/A).” To help assess construct validity, a global question was added at the end of the questionnaire asking, “Overall, how would you rate the care coordination at the hospital of your primary work setting?” The response was measured on a 10-point Likert-type scale ranging from 1 = “totally uncoordinated care” to 10 = “perfectly coordinated care” (see Appendix). In addition, the questionnaire requested information about the respondents’ gender, position, and their primary unit, department, and hospital affiliation.

Data Collection Procedures

An invitation to complete an anonymous questionnaire was sent to the following inpatient care professionals: all nursing staff working on care coordination units in the departments of medicine, surgery, and neurology/neurosurgery, as well as physicians, pharmacists, acute care therapists (eg, occupational and physical therapists), and other frontline staff. All healthcare staff fitting these criteria was sent an e-mail with a request to fill out the survey online using QualtricsTM (Qualtrics Labs Inc., Provo, UT), as well as multiple follow-up reminders. The participants worked either at the JHH (a 1194-bed tertiary academic medical center in Baltimore, MD) or the JHBMC (a 440-bed academic community hospital located nearby). Data were collected from October 2015 through January 2016.

Analysis

Means and standard deviations were calculated by treating the responses as continuous variables. We tried 3 different methods to handle missing data: (1) without imputation, (2) imputing the mean value of each item, and (3) substituting a neutral score. Because all 3 methods produced very similar results, we treated the N/A responses as missing values without imputation for simplicity of analysis. We used STATA 13.1 (Stata Corporation, College Station, Texas) to analyze the data.

To identify subscales, we performed exploratory factor analysis on responses to the 12 specific items. Promax rotation was selected based on the simple structure. Subscale scores for each respondent were generated by computing the mean of responses to the items in the subscale. Internal consistency reliability of the subscales was estimated using Cronbach’s alpha. We calculated Pearson correlation coefficients for the items in each subscale, and examined Cronbach’s alpha deleting each item in turn. For each of the subscales identified and the global scale, we calculated the mean, standard deviation, median and interquartile range. Although distributions of scores tended to be non-normal, this was done to increase interpretability. We also calculated percent scoring at the ceiling (highest possible score).

We analyzed the data with 3 research questions in mind: Was there a difference in perceptions of care coordination between (1) staff affiliated with the 2 different hospitals, (2) staff affiliated with different clinical departments, or (3) staff with different professional roles? For comparisons based on hospital and department, and type of professional, nonparametric tests (Wilcoxon rank-sum and Kruskal-Wallis test) were used with a level of statistical significance set at 0.05. The comparison between hospitals and departments was made only among nurses to minimize the confounding effect of different distribution of professionals. We tested the distribution of “years in specialty” between hospitals and departments for this comparison using Pearson’s χ2 test. The difference was not statistically significant (P = 0.167 for hospitals, and P = 0.518 for departments), so we assumed that the potential confounding effect of this variable was negligible in this analysis. The comparison of scores within each professional group used the Friedman test. Pearson’s χ2 test was used to compare the baseline characteristics between 2 hospitals.

RESULTS

Among the 1486 acute care professionals asked to participate in the survey, 841 completed the questionnaire (response rate 56.6%). Table 1 shows the characteristics of the participants from each hospital. Table 2 summarizes the item response rates, proportion scoring at the ceiling, and weighting from the factor analysis. All items had completion rates of 99.2% or higher, with N/A responses ranging from 0% (item 2) to 3.1% (item 7). The percent scoring at the ceiling was 1.7% for the global item and ranged from 18.3% up to 63.3% for other individual items.

We also examined differences in perceptions of care coordination among nursing units to illustrate the tool’s ability to detect variation in Patient Engagement subscale scores for JHH nurses (see Appendix).

DISCUSSION

This study resulted in one of the first measurement tools to succinctly measure multiple aspects of care coordination in the hospital from the perspective of healthcare professionals. Given the hectic work environment of healthcare professionals, and the increasing emphasis on collecting data for evaluation and improvement, it is important to minimize respondent burden. This effort was catalyzed by a multifaceted initiative to redesign acute care delivery and promote seamless transitions of care, supported by the Center for Medicare & Medicaid Innovation. In initial testing, this questionnaire has evidence for reliability and validity. It was encouraging to find that the preliminary psychometric performance of the measure was very similar in 2 different settings of a tertiary academic hospital and a community hospital.

Our analysis of the survey data explored potential differences between the 2 hospitals, among different types of healthcare professionals and across different departments. Although we expected differences, we had no specific hypotheses about what those differences might be, and, in fact, did not observe any substantial differences. This could be taken to indicate that the intervention was uniformly and successfully implemented in both hospitals, and engaged various professionals in different departments. The ability to detect differences in care coordination at the nursing unit level could also prove to be beneficial for more precisely targeting where process improvement is needed. Further data collection and analyses should be conducted to more systematically compare units and to help identify those where practice is most advanced and those where improvements may be needed. It would also be informative to link differences in care coordination scores with patient outcomes. In addition, differences identified on specific domains between professional groups could be helpful to identify where greater efforts are needed to improve interdisciplinary practice. Sampling strategies stratified by provider type would need to be targeted to make this kind of analysis informative.

The consistently lower scores observed for patient engagement, from the perspective of care professionals in all groups, suggest that this is an area where improvement is needed. These findings are consistent with published reports on the common failure by hospitals to include patients as a member of their own care team. In addition to measuring care processes from the perspective of frontline healthcare workers, future evaluations within the healthcare system would also benefit from including data collected from the perspective of the patient and family.

This study had some limitations. First, there may be more than 4 domains of care coordination that are important and can be measured in the acute care setting from the provider perspective. However, the addition of more domains should be balanced against practicality and respondent burden. It may be possible to further clarify priority domains in hospital settings as opposed to the primary care setting. Future research should be directed to find these areas and to develop a more comprehensive, yet still concise, measurement instrument. Second, the tool was developed to measure the impact of a large-scale intervention, and to fit into the specific context of 2 hospitals. Therefore, it should be tested in different settings of hospital care to see how it performs. However, virtually all hospitals in the United States today are adapting to changes in both financing and healthcare delivery. A tool such as the one described in this paper could be helpful to many organizations. Third, the scoring system for the overall scale score is not weighted and therefore reflects teamwork more than other components of care coordination, which are represented by fewer items. In general, we believe that use of the subscale scores may be more informative. Alternative scoring systems might also be proposed, including item weighting based on factor scores.

For the purposes of evaluation in this specific instance, we only collected data at a single point in time, after the intervention had been deployed. Thus, we were not able to evaluate the effectiveness of the J-CHiP intervention. We also did not intend to focus too much on the differences between units, given the limited number of respondents from individual units. It would be useful to collect more data at future time points, both to test the responsiveness of the scales and to evaluate the impact of future interventions at both the hospital and unit level.

The preliminary data from this study have generated insights about gaps in current practice, such as in engaging patients in the inpatient care process. They have also increased awareness among hospital leaders about the need to achieve high reliability in the adoption of new procedures and interdisciplinary practice. This tool might be used to find areas in need of improvement, to evaluate the effect of initiatives to improve care coordination, to monitor the change over time in the perception of care coordination among healthcare professionals, and to develop better intervention strategies for coordination activities in acute care settings. Additional research is needed to provide further evidence for the reliability and validity of this measure in diverse settings.

Disclosure

The project described was supported by Grant Number 1C1CMS331053-01-00 from the US Department of Health and Human Services, Centers for Medicare & Medicaid Services. The contents of this publication are solely the responsibility of the authors and do not necessarily represent the official views of the US Department of Health and Human Services or any of its agencies. The research presented was conducted by the awardee. Results may or may not be consistent with or confirmed by the findings of the independent evaluation contractor.

The authors have no other disclosures.

1. McDonald KM, Sundaram V, Bravata DM, et al. Closing the Quality Gap: A Critical Analysis of Quality Improvement Strategies (Vol. 7: Care Coordination). Technical Reviews, No. 9.7. Rockville (MD): Agency for Healthcare Research and Quality (US); 2007.

2. Adams K, Corrigan J. Priority areas for national action: transforming health care quality. Washington, DC: National Academies Press; 2003.

3. Renders CM, Valk GD, Griffin S, Wagner EH, Eijk JT, Assendelft WJ. Interventions to improve the management of diabetes mellitus in primary care, outpatient and community settings. Cochrane Database Syst Rev. 2001(1):CD001481.

4. McAlister FA, Lawson FM, Teo KK, Armstrong PW. A systematic review of randomized trials of disease management programs in heart failure. Am J Med. 2001;110(5):378-384.

5. Bruce ML, Raue PJ, Reilly CF, et al. Clinical effectiveness of integrating depression care management into Medicare home health: the Depression CAREPATH Randomized trial. JAMA Intern Med. 2015;175(1):55-64.

6. Berkowitz SA, Brown P, Brotman DJ, et al. Case Study: Johns Hopkins Community Health Partnership: A model for transformation. Healthc (Amst). 2016;4(4):264-270.

7. McDonald KM, Schultz E, Albin L, et al. Care Coordination Measures Atlas Version 4. Rockville, MD: Agency for Healthcare Research and Quality; 2014.

8. Schultz EM, Pineda N, Lonhart J, Davies SM, McDonald KM. A systematic review of the care coordination measurement landscape. BMC Health Serv Res. 2013;13:119.

9. Sexton JB, Helmreich RL, Neilands TB, et al. The Safety Attitudes Questionnaire: psychometric properties, benchmarking data, and emerging research. BMC Health Serv Res. 2006;6:44.

© 2017 Society of Hospital Medicine

Hospital Renovation Patient Satisfaction

Hospitals are expensive and complex facilities to build and renovate. An estimated $200 billion is being spent in the United States during this decade on hospital construction and renovation, and further expenditures in this area are expected.[1] Aging hospital infrastructure, competition, and health system expansion have motivated institutions to invest in renovation and new hospital building construction.[2, 3, 4, 5, 6, 7] There is a trend toward patient‐centered design in new hospital construction. Features of this trend include same‐handed design (ie, rooms on a unit have all beds oriented in the same direction and do not share headwalls); use of sound‐absorbent materials to reduce ambient noise[7, 8, 9]; rooms with improved view and increased natural lighting to reduce anxiety, decrease delirium, and increase sense of wellbeing[10, 11, 12]; incorporation of natural elements like gardens, water features, and art[12, 13, 14, 15, 16, 17, 18]; single‐patient rooms to reduce transmission of infection and enhance privacy and visitor comfort[7, 19, 20]; presence of comfortable waiting rooms and visitor accommodations to enhance comfort and family participation[21, 22, 23]; and hotel‐like amenities such as on‐demand entertainment and room service menus.[24, 25]

There is a belief among some hospital leaders that patients are generally unable to distinguish their positive experience with a pleasing healthcare environment from their positive experience with care, and thus improving facilities will lead to improved satisfaction across the board.[26, 27] In a controlled study of hospitalized patients, appealing rooms were associated with increased satisfaction with services including housekeeping and food service staff, meals, as well as physicians and overall satisfaction.[26] A 2012 survey of hospital leadership found that expanding and renovating facilities was considered a top priority in improving patient satisfaction, with 82% of the respondents stating that this was important.[27]

Despite these attitudes, the impact of patient‐centered design on patient satisfaction is not well understood. Studies have shown that renovations and hospital construction that incorporate noise reduction strategies, positive distraction, patient and caregiver control, attractive waiting rooms, improved patient room appearance, private rooms, and large windows result in improved satisfaction with nursing, noise level, unit environment and cleanliness, perceived wait time, discharge preparedness, and overall care.[7, 19, 20, 23, 28] However, these studies were limited by small sample size, inclusion of a narrow group of patients (eg, ambulatory, obstetric, geriatric rehabilitation, intensive care unit), and concurrent use of interventions other than design improvement (eg, nurse and patient education). Many of these studies did not use the ubiquitous Hospital Consumer Assessment of Healthcare Providers and Systems (HCAHPS) and Press Ganey patient satisfaction surveys.

We sought to determine the changes in patient satisfaction that occurred during a natural experiment, in which clinical units (comprising stable nursing, physician, and unit teams) were relocated from an historic clinical building to a new clinical building that featured patient‐centered design, using HCAHPS and Press Ganey surveys and a large study population. We hypothesized that new building features would positively impact facility‐related (eg, noise level), nonfacility‐related (eg, physician and housekeeping service related), and overall satisfaction.

METHODS

This was a retrospective analysis of prospectively collected Press Ganey and HCAHPS patient satisfaction survey data for a single academic tertiary care hospital.[29] The research project was reviewed and approved by the institutional review board.

Participants

All patients discharged from 12 clinical units that relocated to the new clinical building and returned patient satisfaction surveys served as study patients. The moved units included the coronary care unit, cardiac step down unit, medical intensive care unit, neuro critical care unit, surgical intensive care unit, orthopedic unit, neurology unit, neurosurgery unit, obstetrics units, gynecology unit, urology unit, cardiothoracic surgery unit, and the transplant surgery and renal transplant unit. Patients on clinical units that did not move served as concurrent controls.

Exposure

Patients admitted to the new clinical building experienced several patient‐centered design features. These features included easy access to healing gardens with a water feature, soaring lobbies, a collection of more than 500 works of art, well‐decorated and light‐filled patient rooms with sleeping accommodations for family members, sound‐absorbing features in patient care corridors ranging from acoustical ceiling tiles to a quiet nurse‐call system, and an interactive television network with Internet, movies, and games. All patients during the baseline period and control patients during the study period were located in typical patient rooms with standard hospital amenities. No other major patient satisfaction interventions were initiated during the pre‐ or postperiod in either arm of the study; ongoing patient satisfaction efforts (such as unit‐based customer care representatives) were deployed broadly and not restricted to the new clinical building. Clinical teams comprised of physicians, nurses, and ancillary staff did not change significantly after the move.

Time Periods

The move to the new clinical building occurred on May 1, 2012. After allowing for a 15‐day washout period, the postmove period included Press Ganey and HCAHPS surveys returned for discharges that occurred during a 7.5‐month period between May 15, 2012 and December 31, 2012. Baseline data included Press Ganey and HCAHPS surveys returned for discharges in the preceding 12 months (May 1, 2011 to April 30, 2012). Sensitivity analysis using only 7.5 months of baseline data did not reveal any significant difference when compared with 12‐month baseline data, and we report only data from the 12‐month baseline period.

Instruments

Press Ganey and HCAHPS patient satisfaction surveys were sent via mail in the same envelope. Fifty percent of the discharged patients were randomized to receive the surveys. The Press Ganey survey contained 33 items covering several subdomains, including room, meal, nursing, physician, ancillary staff, visitor, discharge, and overall satisfaction. The HCAHPS survey contained 29 Centers for Medicare and Medicaid Services (CMS)‐mandated items, of which 21 are related to patient satisfaction. The development and testing and methods for administration and reporting of the HCAHPS survey have been previously described.[30, 31] Press Ganey patient satisfaction survey results have been reported in the literature.[32, 33]

Outcome Variables

Press Ganey and HCAHPS patient satisfaction survey responses were the primary outcome variables of the study. The survey items were categorized as facility related (eg, noise level), nonfacility related (eg, physician and nursing staff satisfaction), and overall satisfaction related.

Covariates

Age, sex, length of stay (LOS), insurance type, and all‐payer refined diagnosis‐related group‐associated illness complexity were included as covariates.

Statistical Analysis

Percent top‐box scores were calculated for each survey item as the percent of patients who responded “very good” for a given item on Press Ganey survey items and “always,” “definitely yes,” or “9” or “10” on HCAHPS survey items. CMS utilizes percent top‐box scores to calculate payments under the Value Based Purchasing (VBP) program and to report the results publicly. Numerous studies have also reported percent top‐box scores for HCAHPS survey results.[31, 32, 33, 34]
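The top‐box definition above amounts to a simple proportion. The sketch below is illustrative only: the function name `percent_top_box`, the lower‐casing of responses, and the exclusion of missing answers from the denominator are assumptions, not details reported by the study.

```python
from typing import Iterable, Optional, Set

# Responses counted as "top box" for each instrument, per the definition above.
PRESS_GANEY_TOP: Set[str] = {"very good"}
HCAHPS_TOP: Set[str] = {"always", "definitely yes", "9", "10"}


def percent_top_box(responses: Iterable[Optional[object]], top_values: Set[str]) -> float:
    """Percent of answered responses that fall in the top box.

    Assumption: missing responses (None) are excluded from the denominator.
    """
    answered = [str(r).strip().lower() for r in responses if r is not None]
    if not answered:
        raise ValueError("no non-missing responses for this item")
    hits = sum(1 for r in answered if r in top_values)
    return 100.0 * hits / len(answered)


# Hypothetical responses to a single Press Ganey item (not study data).
example = ["Very good", "Good", "Very good", "Fair", None]
print(percent_top_box(example, PRESS_GANEY_TOP))  # 2 of 4 answered -> 50.0
```

The same function could be applied to an HCAHPS item by passing `HCAHPS_TOP`, with numeric ratings such as 9 or 10 converted to strings before comparison.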

Odds ratios of premove versus postmove percentage of top‐box scores, adjusted for age, sex, LOS, complexity of illness, and insurance type, were determined using logistic regression for the units that moved. Similar scores were calculated for unmoved units to detect secular trends. To determine whether the differences between the moved and unmoved units were significant, we introduced the interaction term (moved vs unmoved unit status) × (pre‐ vs postmove time period) into the logistic regression models and examined the adjusted P value for this term. All statistical analysis was performed using SAS Institute Inc.'s (Cary, NC) JMP Pro 10.0.0.
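To make the role of the interaction term concrete: in a logistic model containing only the unit‐status indicator, the time‐period indicator, and their product, the exponentiated interaction coefficient equals the ratio of the postmove‐versus‐premove odds ratio in the moved units to the same odds ratio in the unmoved units. The sketch below computes these quantities directly from percent top‐box scores. It is unadjusted (the covariates used in the study are omitted), it does not compute a P value, and the function names are hypothetical; it will therefore not reproduce the adjusted odds ratios reported in this study.

```python
def odds(pct_top_box: float) -> float:
    """Convert a percent top-box score into odds."""
    p = pct_top_box / 100.0
    if not 0.0 < p < 1.0:
        raise ValueError("percent top box must be strictly between 0 and 100")
    return p / (1.0 - p)


def odds_ratio(pre_pct: float, post_pct: float) -> float:
    """Unadjusted odds ratio of a top-box response, postmove vs premove."""
    return odds(post_pct) / odds(pre_pct)


def ratio_of_odds_ratios(moved_pre: float, moved_post: float,
                         unmoved_pre: float, unmoved_post: float) -> float:
    """Unadjusted analogue of the exponentiated interaction coefficient."""
    return odds_ratio(moved_pre, moved_post) / odds_ratio(unmoved_pre, unmoved_post)


# Percent top-box values for the HCAHPS "quietness of the room" item,
# as reported in the Results tables of this article.
or_moved = odds_ratio(51.3, 65.4)
or_unmoved = odds_ratio(58.6, 60.3)
interaction = ratio_of_odds_ratios(51.3, 65.4, 58.6, 60.3)
print(round(or_moved, 2), round(or_unmoved, 2), round(interaction, 2))
```

A ratio well above 1 indicates that the improvement in the moved units exceeded the secular trend in the unmoved units; whether that difference is statistically significant is what the P value for the interaction term in the adjusted model assesses.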

RESULTS

The study included 1648 respondents in the moved units in the baseline period (ie, units designated to move to a new clinical building) and 1373 respondents in the postmove period. There were 1593 respondents in the control group during the baseline period and 1049 respondents in the postmove period. For the units that moved, survey response rates were 28.5% prior to the move and 28.3% after the move. For the units that did not move, survey response rates were 20.9% prior to the move and 22.7% after the move. A majority of survey respondents on the nursing units that moved were white, male, and had private insurance (Table 1). There were no significant differences between respondents across these characteristics between the pre‐ and postmove periods. Mean age and LOS were also similar. For these units, there were 70.5% private rooms prior to the move and 100% after the move. For the unmoved units, 58.9% of the rooms were private in the baseline period and 72.7% were private in the study period. Similar to the units that moved, characteristics of the respondents on the unmoved units also did not differ significantly in the postmove period.

| Patient Demographics | Moved Units (N=3,021): Pre | Moved Units: Post | Moved Units: P Value | Unmoved Units (N=2,642): Pre | Unmoved Units: Post | Unmoved Units: P Value |
|---|---|---|---|---|---|---|
| White | 75.3% | 78.2% | 0.07 | 66.7% | 68.5% | 0.31 |
| Mean age, y | 57.3 | 57.4 | 0.84 | 57.3 | 57.1 | 0.81 |
| Male | 54.3% | 53.0% | 0.48 | 40.5% | 42.3% | 0.23 |
| Self‐reported health | | | | | | |
| Excellent or very good | 54.7% | 51.2% | 0.04 | 38.7% | 39.5% | 0.11 |
| Good | 27.8% | 32.0% | | 29.3% | 32.2% | |
| Fair or poor | 17.5% | 16.9% | | 32.0% | 28.3% | |
| Self‐reported language | | | | | | |
| English | 96.0% | 97.2% | 0.06 | 96.8% | 97.1% | 0.63 |
| Other | 4.0% | 2.8% | | 3.2% | 2.9% | |
| Self‐reported education | | | | | | |
| Less than high school | 5.8% | 5.0% | 0.24 | 10.8% | 10.4% | 0.24 |
| High school grad | 46.4% | 44.2% | | 48.6% | 45.5% | |
| College grad or more | 47.7% | 50.7% | | 40.7% | 44.7% | |
| Insurance type | | | | | | |
| Medicaid | 6.7% | 5.5% | 0.11 | 10.8% | 9.0% | 0.32 |
| Medicare | 32.0% | 35.5% | | 36.0% | 36.1% | |
| Private insurance | 55.6% | 52.8% | | 48.0% | 50.3% | |
| Mean APRDRG complexity* | 2.1 | 2.1 | 0.09 | 2.3 | 2.3 | 0.14 |
| Mean LOS | 4.7 | 5.0 | 0.12 | 4.9 | 5.0 | 0.77 |
| Service | | | | | | |
| Medicine | 15.4% | 16.2% | 0.51 | 40.0% | 34.5% | 0.10 |
| Surgery | 50.7% | 45.7% | | 40.1% | 44.1% | |
| Neurosciences | 20.3% | 24.1% | | 6.0% | 6.0% | |
| Obstetrics/gynecology | 7.5% | 8.2% | | 5.7% | 5.6% | |

The move was associated with significant improvements in facility‐related satisfaction (Tables 2 and 3). The most prominent increases in satisfaction were with pleasantness of décor (33.6% vs 66.2%), noise level (39.9% vs 59.3%), and visitor accommodation and comfort (50.0% vs 70.3%). There was improvement in satisfaction related to cleanliness of the room (49.0% vs 68.6%), but no significant increase in satisfaction with courtesy of the person cleaning the room (59.8% vs 67.7%) when compared with units that did not move.

| Satisfaction Domain | Moved Units: % Top Box, Pre | Moved Units: % Top Box, Post | Moved Units: Adjusted Odds Ratio* (95% CI) | Unmoved Units: % Top Box, Pre | Unmoved Units: % Top Box, Post | Unmoved Units: Adjusted Odds Ratio* (95% CI) | P Value of the Difference in Odds Ratio Between Moved and Unmoved Units |
|---|---|---|---|---|---|---|---|
| FACILITY RELATED | | | | | | | |
| Hospital environment | | | | | | | |
| Cleanliness of the room and bathroom | 61.0 | 70.8 | 1.62 (1.40‐1.90) | 64.0 | 69.2 | 1.24 (1.03‐1.48) | 0.03 |
| Quietness of the room | 51.3 | 65.4 | 1.89 (1.63‐2.19) | 58.6 | 60.3 | 1.08 (0.90‐1.28) | <0.0001 |
| NONFACILITY RELATED | | | | | | | |
| Nursing communication | | | | | | | |
| Nurses treated with courtesy/respect | 84.0 | 86.7 | 1.28 (1.05‐1.57) | 83.6 | 87.1 | 1.29 (1.02‐1.64) | 0.92 |
| Nurses listened | 73.1 | 76.4 | 1.21 (1.03‐1.43) | 74.2 | 75.5 | 1.05 (0.86‐1.27) | 0.26 |
| Nurses explained | 75.0 | 76.6 | 1.10 (0.94‐1.30) | 76.0 | 76.2 | 1.00 (0.82‐1.21) | 0.43 |
| Physician communication | | | | | | | |
| Doctors treated with courtesy/respect | 89.5 | 90.5 | 1.13 (0.89‐1.42) | 84.9 | 87.3 | 1.20 (0.94‐1.53) | 0.77 |
| Doctors listened | 81.4 | 81.0 | 0.93 (0.83‐1.19) | 77.7 | 77.1 | 0.94 (0.77‐1.15) | 0.68 |
| Doctors explained | 79.2 | 79.0 | 1.00 (0.84‐1.19) | 75.7 | 74.4 | 0.92 (0.76‐1.12) | 0.49 |
| Other | | | | | | | |
| Help toileting as soon as you wanted | 61.8 | 63.7 | 1.08 (0.89‐1.32) | 62.3 | 60.6 | 0.92 (0.71‐1.18) | 0.31 |
| Pain well controlled | 63.2 | 63.8 | 1.06 (0.90‐1.25) | 62.0 | 62.6 | 0.99 (0.81‐1.20) | 0.60 |
| Staff do everything to help with pain | 77.7 | 80.1 | 1.19 (0.99‐1.44) | 76.8 | 75.7 | 0.90 (0.75‐1.13) | 0.07 |
| Staff describe medicine side effects | 47.0 | 47.6 | 1.05 (0.89‐1.24) | 49.2 | 47.1 | 0.91 (0.74‐1.11) | 0.32 |
| Tell you what new medicine was for | 76.4 | 76.4 | 1.02 (0.84‐1.25) | 77.1 | 78.8 | 1.09 (0.85‐1.39) | 0.65 |
| Overall | | | | | | | |
| Rate hospital (0‐10) | 75.0 | 83.3 | 1.71 (1.44‐2.05) | 75.7 | 77.6 | 1.06 (0.87‐1.29) | 0.006 |
| Recommend hospital | 82.5 | 87.1 | 1.43 (1.18‐1.76) | 81.4 | 82.0 | 0.98 (0.79‐1.22) | 0.03 |

| Satisfaction Domain | Moved Units: % Top Box, Pre | Moved Units: % Top Box, Post | Moved Units: Adjusted Odds Ratio* (95% CI) | Unmoved Units: % Top Box, Pre | Unmoved Units: % Top Box, Post | Unmoved Units: Adjusted Odds Ratio* (95% CI) | P Value of the Difference in Odds Ratio Between Moved and Unmoved Units |
|---|---|---|---|---|---|---|---|
| FACILITY RELATED | | | | | | | |
| Room | | | | | | | |
| Pleasantness of room décor | 33.6 | 64.8 | 3.77 (3.24‐4.38) | 41.6 | 47.0 | 1.21 (1.02‐1.44) | <0.0001 |
| Room cleanliness | 49.0 | 68.6 | 2.35 (2.02‐2.73) | 51.6 | 59.1 | 1.32 (1.12‐1.58) | <0.0001 |
| Room temperature | 43.1 | 54.9 | 1.64 (1.43‐1.90) | 45.0 | 48.8 | 1.14 (0.96‐1.36) | 0.002 |
| Noise level in and around the room | 40.2 | 59.2 | 2.23 (1.92‐2.58) | 45.5 | 47.6 | 1.07 (0.90‐1.22) | <0.0001 |
| Visitor related | | | | | | | |
| Accommodations and comfort of visitors | 50.0 | 70.3 | 2.44 (2.10‐2.83) | 55.3 | 59.1 | 1.14 (0.96‐1.35) | <0.0001 |
| NONFACILITY RELATED | | | | | | | |
| Food | | | | | | | |
| Temperature of the food | 31.1 | 33.6 | 1.15 (0.99‐1.34) | 34.0 | 38.9 | 1.23 (1.02‐1.47) | 0.51 |
| Quality of the food | 25.8 | 27.1 | 1.10 (0.93‐1.30) | 30.2 | 36.2 | 1.32 (1.10‐1.59) | 0.12 |
| Courtesy of the person who served food | 63.9 | 62.3 | 0.93 (0.80‐1.10) | 66.0 | 61.4 | 0.82 (0.69‐0.98) | 0.26 |
| Nursing | | | | | | | |
| Friendliness/courtesy of the nurses | 76.3 | 82.8 | 1.49 (1.26‐1.79) | 77.7 | 80.1 | 1.10 (0.90‐1.37) | 0.04 |
| Promptness of response to call | 60.1 | 62.6 | 1.14 (0.98‐1.33) | 59.2 | 62.0 | 1.10 (0.91‐1.31) | 0.80 |
| Nurses' attitude toward requests | 71.0 | 75.8 | 1.30 (1.11‐1.54) | 70.5 | 72.4 | 1.06 (0.88‐1.28) | 0.13 |
| Attention to special/personal needs | 66.7 | 72.2 | 1.32 (1.13‐1.54) | 67.8 | 70.3 | 1.09 (0.91‐1.31) | 0.16 |
| Nurses kept you informed | 64.3 | 72.2 | 1.46 (1.25‐1.70) | 65.8 | 69.8 | 1.17 (0.98‐1.41) | 0.88 |
| Skill of the nurses | 75.3 | 79.5 | 1.28 (1.08‐1.52) | 74.3 | 78.6 | 1.23 (1.01‐1.51) | 0.89 |
| Ancillary staff | | | | | | | |
| Courtesy of the person cleaning the room | 59.8 | 67.7 | 1.41 (1.21‐1.65) | 61.2 | 66.5 | 1.24 (1.03‐1.49) | 0.28 |
| Courtesy of the person who took blood | 66.5 | 68.1 | 1.10 (0.94‐1.28) | 63.2 | 63.1 | 0.96 (0.76‐1.08) | 0.34 |
| Courtesy of the person who started the IV | 70.0 | 71.7 | 1.09 (0.93‐1.28) | 66.6 | 69.3 | 1.11 (0.92‐1.33) | 0.88 |
| Visitor related | | | | | | | |
| Staff attitude toward visitors | 68.1 | 79.4 | 1.84 (1.56‐2.18) | 70.3 | 72.2 | 1.06 (0.87‐1.28) | <0.0001 |
| Physician | | | | | | | |
| Time physician spent with you | 55.0 | 58.9 | 1.20 (1.04‐1.39) | 53.2 | 55.9 | 1.10 (0.92‐1.30) | 0.46 |
| Physician concern questions/worries | 67.2 | 70.7 | 1.20 (1.03‐1.40) | 64.3 | 66.1 | 1.05 (0.88‐1.26) | 0.31 |
| Physician kept you informed | 65.3 | 67.5 | 1.12 (0.96‐1.30) | 61.6 | 63.2 | 1.05 (0.88‐1.25) | 0.58 |
| Friendliness/courtesy of physician | 76.3 | 78.1 | 1.11 (0.93‐1.31) | 71.0 | 73.3 | 1.08 (0.90‐1.31) | 0.89 |
| Skill of physician | 85.4 | 88.5 | 1.35 (1.09‐1.68) | 78.0 | 81.0 | 1.15 (0.93‐1.43) | 0.34 |
| Discharge | | | | | | | |
| Extent felt ready for discharge | 62.0 | 66.7 | 1.23 (1.07‐1.44) | 59.2 | 62.3 | 1.10 (0.92‐1.30) | 0.35 |
| Speed of discharge process | 50.7 | 54.2 | 1.16 (1.01‐1.33) | 47.8 | 50.0 | 1.07 (0.90‐1.27) | 0.49 |
| Instructions for care at home | 66.4 | 71.1 | 1.25 (1.06‐1.46) | 64.0 | 67.7 | 1.16 (0.97‐1.39) | 0.54 |
| Staff concern for your privacy | 65.3 | 71.8 | 1.37 (1.17‐0.85) | 63.6 | 66.2 | 1.10 (0.91‐1.31) | 0.07 |
| Miscellaneous | | | | | | | |
| How well your pain was controlled | 64.2 | 66.5 | 1.14 (0.97‐1.32) | 60.2 | 62.6 | 1.07 (0.89‐1.28) | 0.66 |
| Staff addressed emotional needs | 60.0 | 63.4 | 1.19 (1.02‐1.38) | 55.1 | 60.2 | 1.20 (1.01‐1.42) | 0.90 |
| Response to concerns/complaints | 61.1 | 64.5 | 1.19 (1.02‐1.38) | 57.2 | 60.1 | 1.10 (0.92‐1.31) | 0.57 |
| Overall | | | | | | | |
| Staff worked together to care for you | 72.6 | 77.2 | 1.29 (1.10‐1.52) | 70.3 | 73.2 | 1.13 (0.93‐1.37) | 0.30 |
| Likelihood of recommending hospital | 79.1 | 84.3 | 1.44 (1.20‐1.74) | 76.3 | 79.2 | 1.14 (0.93‐1.39) | 0.10 |
| Overall rating of care given | 76.8 | 83.0 | 1.50 (1.25‐1.80) | 74.7 | 77.2 | 1.10 (0.90‐1.34) | 0.03 |

With regard to nonfacility‐related satisfaction, there were statistically significantly higher scores in several nursing, physician, and discharge‐related satisfaction domains after the move. However, these changes were not associated with the move to the new clinical building, as they were not significantly different from improvements on the unmoved units. Among nonfacility‐related items, only staff attitude toward visitors showed significant improvement (68.1% vs 79.4%). There was a significant improvement in hospital rating (75.0% vs 83.3% in the moved units and 75.7% vs 77.6% in the unmoved units). However, the other 3 measures of overall satisfaction did not show significant improvement associated with the move to the new clinical building when compared to the concurrent controls.

DISCUSSION

Contrary to our hypothesis and a belief held by many, we found that patients appeared able to distinguish their experience with hospital environment from their experience with providers and other services. Improvement in hospital facilities with incorporation of patient‐centered features was associated with improvements that were largely limited to increases in satisfaction with quietness, cleanliness, temperature, and décor of the room along with visitor‐related satisfaction. Notably, there was no significant improvement in satisfaction related to physicians, nurses, housekeeping, and other service staff. There was improvement in satisfaction with staff attitude toward visitors, but this can be attributed to availability of visitor‐friendly facilities. There was a significant improvement in 1 of the 4 measures of overall satisfaction. Our findings also support the construct validity of HCAHPS and Press Ganey patient satisfaction surveys.