The Influence of Hospitalist Continuity on the Likelihood of Patient Discharge in General Medicine Patients

In addition to treating patients, physicians frequently have other time commitments, including administrative, teaching, research, and family duties. Inpatient medicine is particularly unforgiving of these nonclinical duties because patients must be assessed every day. As a result, responsibility for inpatient care is commonly handed over between physicians to make time for nonclinical duties and personal health.

Compared with the ambulatory setting, the influence of physician continuity of care on inpatient outcomes has rarely been studied. Studies of inpatient continuity have focused primarily on patient discharge (likely because it is an objective outcome) over weekends (likely because weekend cross-coverage is common) and have reported conflicting results.1-3 However, discontinuity of care is not confined to weekends, because hospitalist switches can occur at any time. In addition, expressing hospitalist continuity as a dichotomous variable (Was there weekend cross-coverage?) may not fully capture continuity, since discharge likelihood might change with the number of consecutive days that a hospitalist has been on service. This study measured the influence of hospitalist continuity throughout the patient’s hospitalization (rather than just over the weekend) on daily patient discharge.

METHODS

Study Setting and Databases Used for Analysis

The study was conducted at The Ottawa Hospital, Ontario, Canada, a 1000-bed teaching hospital with 2 campuses that is the primary referral center in our region. The division of general internal medicine has 6 patient services (or “teams”) across the 2 campuses, each consisting of a staff hospitalist (exclusively general internists), a senior medical resident (second year of training), and varying numbers of interns and medical students. Staff hospitalists do not cover more than one patient service, even on weekends.

Patients are admitted to each service daily, almost exclusively from the emergency department. Assignment of patients to services is essentially random because all services have the same clinical expertise. At each campus, the number of patients assigned daily to each service is usually equivalent across teams. Patients almost never switch between teams but may be transferred to another specialty. The study was approved by our local research ethics board.

For each patient, the Patient Registry Database records the date and time of admission (defined as the moment that the patient’s admission request is entered into the database); death or discharge from hospital (defined as the time when the discharge is entered into the database); and transfer to another specialty. It also records emergency visits, patient demographics, and location during admission. The Laboratory Database records all laboratory tests and their results.

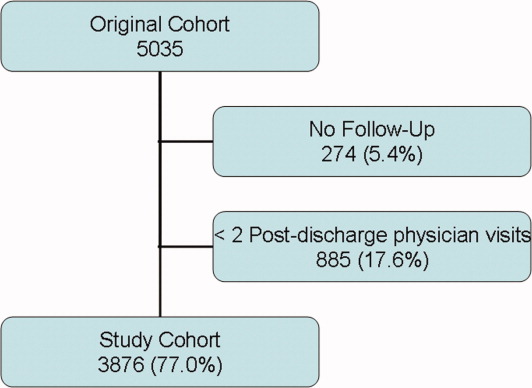

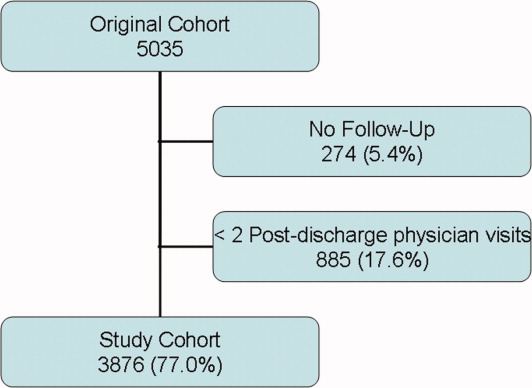

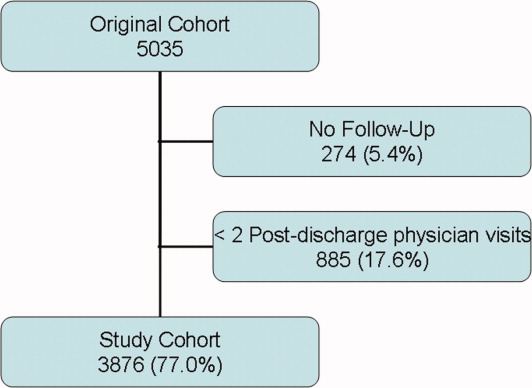

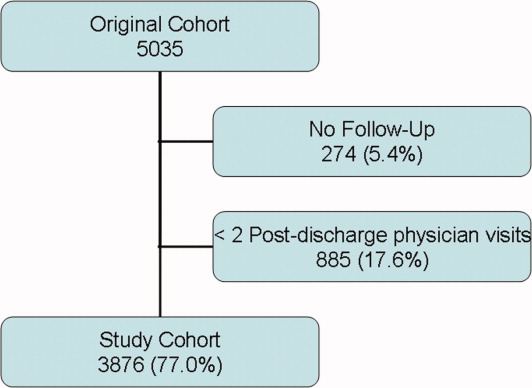

Study Cohort

The Patient Registry Database was used to identify all individuals who were admitted to the general medicine services between January 1 and December 31, 2015. This time frame was selected to ensure that data were complete and current. General medicine services were analyzed because they are collectively the largest inpatient specialty in the hospital.

Study Outcome

The primary outcome was discharge from hospital as determined from the Patient Registry Database. Patients who died or were transferred to another service were not counted as outcomes.

Covariables

The primary exposure variable was the number of consecutive days (including weekends) that a particular hospitalist had rounded on patients on a particular general medicine service, determined from call schedules. Other covariates included the daily discharge probability from the Tomorrow’s Expected Number of Discharges (TEND) model and the components of that model. The TEND model4 used patient factors (age and the Laboratory-based Acute Physiology Score [LAPS]5 calculated at admission) and hospitalization factors (hospital campus and service, admission urgency, day of the week, and ICU status) to predict the daily discharge probability. In a validation population, these daily discharge probabilities, when summed for a particular day, strongly predicted the daily number of discharges (adjusted R² 89.2% [P < .001]; median relative difference between observed and expected numbers of discharges only 1.4% [interquartile range (IQR), −5.5% to 7.1%]). The expected annual death risk was determined using the HOMR-now! model.6 This model used routinely collected data available at admission regarding the patient (sex, life-table-estimated 1-year death risk, Charlson score, current living location, previous cancer clinic status, and number of emergency department visits in the previous year) and the hospitalization (urgency, service, and LAPS). The model explained more than half of the total variability in the likelihood of death (Nagelkerke’s R² 0.53),7 was highly discriminative (C-statistic 0.92), and accurately predicted death risk (calibration slope 0.98).
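
The primary exposure can be derived from a service's call schedule. The sketch below is a minimal illustration, assuming the schedule is available as one (date, hospitalist) pair per calendar day for a single service; the function name `continuity_by_date` and this data layout are hypothetical, not the study's actual SAS code. The counter restarts at 1 whenever a different hospitalist takes over (including when a hospitalist returns after a break); treating a missing calendar day as a break is also an assumption.

```python
from datetime import date, timedelta


def continuity_by_date(schedule):
    """Return {date: consecutive days on service} for one service.

    schedule: iterable of (date, hospitalist_id) pairs, one per calendar day.
    The counter restarts at 1 when the hospitalist changes or a day is missing.
    """
    result = {}
    prev_doc, prev_day, run = None, None, 0
    for day, doc in sorted(schedule):
        same_stint = (
            doc == prev_doc
            and prev_day is not None
            and day - prev_day == timedelta(days=1)
        )
        run = run + 1 if same_stint else 1
        result[day] = run
        prev_doc, prev_day = doc, day
    return result


# Hypothetical one-week schedule: A covers 3 days, B covers 2, then A returns.
start = date(2015, 1, 1)
docs = ["A", "A", "A", "B", "B", "A", "A"]
schedule = [(start + timedelta(days=i), d) for i, d in enumerate(docs)]
cont = continuity_by_date(schedule)
print([cont[start + timedelta(days=i)] for i in range(7)])  # [1, 2, 3, 1, 2, 1, 2]
```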

Analysis

Logistic generalized estimating equation (GEE) methods were used to model the adjusted daily discharge probability.8 Data in the analytical dataset were expressed in a patient-day format (each dataset row represented one day for a particular patient). This permitted the inclusion of time-dependent covariates and allowed the GEE model to cluster hospitalization days within patients.
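
The patient-day layout can be sketched as follows. This is a hypothetical illustration, assuming one row per calendar date from admission to the last hospital day inclusive, and a `continuity` lookup (date to consecutive hospitalist days) for the patient's service, which is reasonable here because patients almost never switch teams; `Hospitalization` and `to_patient_days` are illustrative names. Consistent with the study outcome definition, the discharge flag is set only on the final day of a hospitalization ending in discharge, so deaths and transfers contribute days without ever counting as outcomes.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class Hospitalization:
    patient_id: int
    admit: date
    last_day: date  # date of discharge, death, or transfer
    outcome: str    # "discharge", "death", or "transfer"


def to_patient_days(hosp, continuity):
    """Expand one hospitalization into one row per hospital day.

    continuity maps each date to the hospitalist-continuity value for the
    patient's service, so the covariate is looked up afresh for every row.
    """
    n_days = (hosp.last_day - hosp.admit).days + 1
    rows = []
    for i in range(n_days):
        day = hosp.admit + timedelta(days=i)
        is_last = i == n_days - 1
        rows.append({
            "patient_id": hosp.patient_id,  # clustering variable for the GEE
            "date": day,
            "continuity": continuity[day],
            # Only a discharge on the final day counts as an outcome.
            "discharged": int(is_last and hosp.outcome == "discharge"),
        })
    return rows


# Hypothetical 3-day stay during which the hospitalist changes on day 3.
cont = {date(2015, 3, 1): 3, date(2015, 3, 2): 4, date(2015, 3, 3): 1}
stay = Hospitalization(1, date(2015, 3, 1), date(2015, 3, 3), "discharge")
for row in to_patient_days(stay, cont):
    print(row["date"], row["continuity"], row["discharged"])
```

Rows built this way would then be passed to a logistic GEE with `patient_id` as the clustering variable.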

Model construction started with the TEND daily discharge probability and the HOMR-now! expected annual death risk (both expressed as log-odds). Hospitalist continuity was then entered as a time-dependent covariate (ie, its value changed every day). Linear, square root, and natural logarithm forms of hospitalist continuity were compared, and the best-fitting form was chosen using the QIC statistic.9 Finally, the individual components of the TEND model were offered to the model, and those that significantly improved fit were retained. The GEE model used an independent correlation structure because this minimized the QIC statistic in the base model. All covariates in the final daily discharge probability model were also used in the model of death in hospital. Analyses were conducted using SAS 9.4 (SAS Institute Inc, Cary, NC).
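
The covariate preparation described above, converting probabilities to log-odds and generating the three candidate forms of continuity, might look like the sketch below. It covers only data preparation: the GEE fitting and QIC comparison were performed in SAS and are not reproduced, and the names `logit`, `CONTINUITY_FORMS`, and `design_row` are hypothetical. In practice, one model would be fit per candidate form and the form with the lowest QIC retained.

```python
import math


def logit(p):
    """Log-odds of a probability strictly between 0 and 1."""
    if not 0.0 < p < 1.0:
        raise ValueError("probability must be strictly between 0 and 1")
    return math.log(p / (1.0 - p))


# Candidate functional forms for continuity (consecutive days, always >= 1,
# so the natural logarithm is always defined).
CONTINUITY_FORMS = {
    "linear": float,
    "sqrt": math.sqrt,
    "log": math.log,
}


def design_row(tend_prob, homr_risk, continuity_days, form):
    """Base covariates plus one candidate form of continuity for a patient-day."""
    return {
        "logit_tend": logit(tend_prob),
        "logit_homr": logit(homr_risk),
        "continuity": CONTINUITY_FORMS[form](continuity_days),
    }


# Hypothetical patient-day: TEND probability 0.2, HOMR-now! risk 0.1, day 4 of a stint.
for form in CONTINUITY_FORMS:
    print(form, design_row(0.2, 0.1, 4, form))
```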

RESULTS

There were 6405 general medicine admissions involving 5208 patients and 38,967 patient-days between January 1 and December 31, 2015 (Appendix A). Patients were elderly and evenly divided by gender, and 85% were admitted from the community. Comorbidities were common (median coded Charlson score, 2), and 6.0% of patients were known to our cancer clinic. The median length of stay was 4 days (IQR, 2–7); 378 admissions (5.9%) ended in death and 121 admissions (1.9%) ended in transfer to another service.

Forty-one different staff hospitalists had at least 1 day on service. The median total time on service per physician was 9 weeks (IQR, 1.8–10.9 weeks). Changes in hospitalist coverage were common: hospitalizations had a median of 1 (IQR, 1–2) physician switch and a median of 1 (IQR, 1–2) different physicians. However, patients spent a median of 100% (IQR, 66.7%–100%) of their hospitalization with their primary hospitalist. The median duration of individual physician “stints” on service was 5 days (IQR, 2–7; range, 1–42).
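
The per-hospitalization continuity measures reported above can be computed from the sequence of hospitalists responsible on each hospital day. This minimal sketch assumes that the "primary" hospitalist is the one covering the most days of the stay, a definition the text does not state; `summarize_hospitalization` is a hypothetical name. Stint durations on service would instead be computed from the service call schedule, because stints seen within a single hospitalization are truncated by admission and discharge.

```python
from collections import Counter
from itertools import groupby


def summarize_hospitalization(daily_docs):
    """Continuity summaries for one hospitalization.

    daily_docs: hospitalist responsible on each hospital day, in order.
    The primary hospitalist is assumed to be the one covering the most days.
    """
    if not daily_docs:
        raise ValueError("hospitalization must have at least one day")
    # Each run of identical consecutive entries is one uninterrupted period of care.
    runs = [len(list(group)) for _, group in groupby(daily_docs)]
    counts = Counter(daily_docs)
    primary_days = counts.most_common(1)[0][1]
    return {
        "switches": len(runs) - 1,
        "distinct_physicians": len(counts),
        "pct_with_primary": 100.0 * primary_days / len(daily_docs),
    }


# Hypothetical 6-day stay covered by A, then B, then C.
print(summarize_hospitalization(["A", "A", "B", "B", "B", "C"]))
# {'switches': 2, 'distinct_physicians': 3, 'pct_with_primary': 50.0}
```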

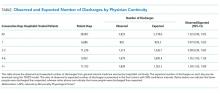

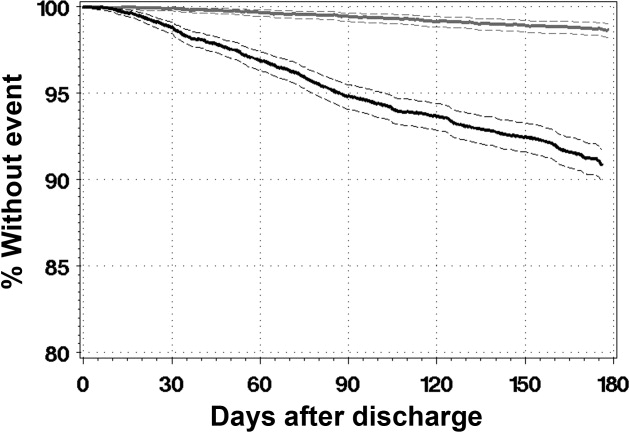

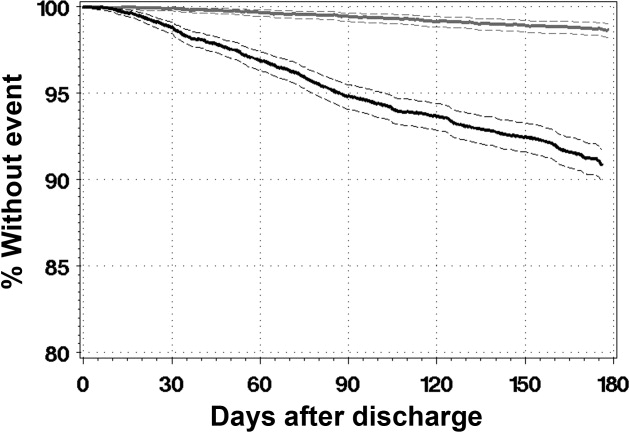

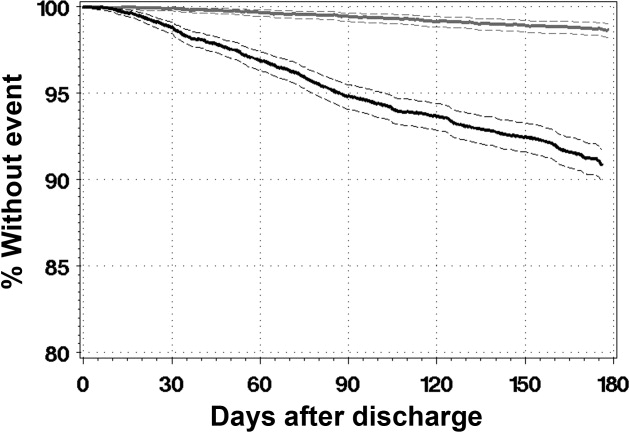

The TEND model accurately estimated the daily discharge probability for the entire cohort, with 5833 observed and 5718.6 expected discharges during 38,967 patient-days (O/E 1.02; 95% CI, 0.99–1.05). Discharge probability increased as hospitalist continuity increased, but this increase was statistically significant only when hospitalist continuity exceeded 4 days. Other covariables also significantly influenced discharge probability (Appendix B).
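
The observed-to-expected (O/E) ratio compares the observed number of discharges with the sum of the TEND daily discharge probabilities over all patient-days. The sketch below reproduces the point estimate from the totals reported above; the method used for the 95% CI is not described, so no interval is computed. The function name `observed_to_expected` is hypothetical.

```python
def observed_to_expected(observed, daily_probabilities):
    """O/E ratio: observed discharges divided by the summed daily discharge probabilities."""
    expected = sum(daily_probabilities)
    if expected <= 0:
        raise ValueError("expected count must be positive")
    return observed / expected


# Toy example: 3 discharges observed over patient-days whose probabilities sum to 2.5.
print(observed_to_expected(3, [0.5, 0.75, 0.25, 0.5, 0.5]))  # 1.2

# Cohort totals reported above (expected discharges already summed).
print(round(5833 / 5718.6, 2))  # 1.02
```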

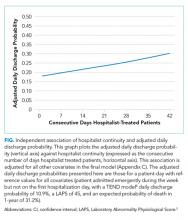

After adjustment for important covariables (Appendix C), hospitalist continuity was significantly associated with daily discharge probability (Figure). The log-odds of discharge increased linearly with the number of consecutive days that hospitalists had treated patients: each additional consecutive day with the same hospitalist increased the adjusted daily odds of discharge by 2% (adjusted odds ratio [OR] 1.02; 95% CI, 1.01–1.02; Appendix C). When the number of consecutive days that hospitalists had been on service increased from 1 to 28, the adjusted discharge probability for the average patient increased from 18.1% to 25.7%. Discharge was also significantly influenced by other factors (Appendix C). Continuity was not associated with the risk of death in hospital (Appendix D).
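
Because an OR acts multiplicatively on the odds, the reported probabilities can be related to the per-day OR with simple logistic arithmetic. This sketch assumes a constant per-day OR applied to the average patient's baseline probability, which may not be exactly how the adjusted probabilities were computed; `shift_probability` and `implied_daily_or` are hypothetical names. Back-solving from 18.1% to 25.7% over 27 additional days gives a per-day OR of about 1.017, consistent with the reported (rounded) 1.02; applying exactly 1.02 would instead give about 27.4%.

```python
def shift_probability(p0, odds_ratio_per_day, extra_days):
    """Probability after applying a constant per-day odds ratio for extra_days days."""
    odds = p0 / (1.0 - p0) * odds_ratio_per_day ** extra_days
    return odds / (1.0 + odds)


def implied_daily_or(p0, p1, extra_days):
    """Constant per-day odds ratio that moves probability p0 to p1 over extra_days."""
    odds0 = p0 / (1.0 - p0)
    odds1 = p1 / (1.0 - p1)
    return (odds1 / odds0) ** (1.0 / extra_days)


# Day 1 -> day 28 is 27 additional consecutive days.
print(round(implied_daily_or(0.181, 0.257, 27), 3))  # 1.017
print(round(shift_probability(0.181, 1.02, 27), 3))  # 0.274
```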

DISCUSSION

In the general medicine services of a large teaching hospital, this study found that greater hospitalist continuity was associated with a significantly higher adjusted daily discharge probability, which increased (in the average patient) from 18.1% to 25.7% as the number of consecutive hospitalist days on service increased from 1 to 28.

The study raises several points. First, it suggests that shifting patient care between physicians can significantly influence patient outcomes. This could reflect incomplete transfer of knowledge between physicians, which should be expected given the extensive information, both explicit and implicit, that physicians collect about individual patients during their hospitalization. Second, continuity of care could increase physicians’ and patients’ confidence in clinical decision-making. Perhaps physicians are subconsciously more trusting of their instincts (and the decisions based on those instincts) after they have been on service for a while. It is also possible that patients more readily trust the recommendations of a physician who has cared for them throughout their stay. Finally, those wishing to decrease length of stay might consider minimizing how often hospitalists sign over patient care to colleagues.

Several issues should be considered when interpreting these results. First, the study examined only discharge and death, which are by no means the only, or the most important, outcomes that might be influenced by hospitalist continuity. Second, the study was limited to a single service at a single center. Third, the analysis did not account for house-staff continuity. However, because hospitalists and house staff at the study hospital invariably switched at different times, it is unlikely that hospitalist continuity was a surrogate for house-staff continuity.

Disclosures

This study was supported by the Department of Medicine, University of Ottawa, Ottawa, Ontario, Canada. The author has nothing to disclose.

1. Ali NA, Hammersley J, Hoffmann SP, et al. Continuity of care in intensive care units: a cluster-randomized trial of intensivist staffing. Am J Respir Crit Care Med. 2011;184(7):803-808.

2. Epstein K, Juarez E, Epstein A, Loya K, Singer A. The impact of fragmentation of hospitalist care on length of stay. J Hosp Med. 2010;5(6):335-338.

3. Blecker S, Shine D, Park N, et al. Association of weekend continuity of care with hospital length of stay. Int J Qual Health Care. 2014;26(5):530-537.

4. van Walraven C, Forster AJ. The TEND (Tomorrow’s Expected Number of Discharges) model accurately predicted the number of patients who were discharged from the hospital in the next day. J Hosp Med. In press.

5. Escobar GJ, Greene JD, Scheirer P, Gardner MN, Draper D, Kipnis P. Risk-adjusting hospital inpatient mortality using automated inpatient, outpatient, and laboratory databases. Med Care. 2008;46(3):232-239.

6. van Walraven C, Forster AJ. HOMR-now! A modification of the HOMR score that predicts 1-year death risk for hospitalized patients using data immediately available at patient admission. Am J Med. In press.

7. Nagelkerke NJ. A note on a general definition of the coefficient of determination. Biometrika. 1991;78(3):691-692.

8. Stokes ME, Davis CS, Koch GG. Generalized estimating equations. In: Categorical Data Analysis Using the SAS System. 2nd ed. Cary, NC: SAS Institute Inc; 2000:469-549.

9. Pan W. Akaike’s information criterion in generalized estimating equations. Biometrics. 2001;57(1):120-125.

In addition to treating patients, physicians frequently have other time commitments that could include administrative, teaching, research, and family duties. Inpatient medicine is particularly unforgiving to these nonclinical duties since patients have to be assessed on a daily basis. Because of this characteristic, it is not uncommon for inpatient care responsibility to be switched between physicians to create time for nonclinical duties and personal health.

In contrast to the ambulatory setting, the influence of physician continuity of care on inpatient outcomes has not been studied frequently. Studies of inpatient continuity have primarily focused on patient discharge (likely because of its objective nature) over the weekends (likely because weekend cross-coverage is common) and have reported conflicting results.1-3 However, discontinuity of care is not isolated to the weekend since hospitalist-switches can occur at any time. In addition, expressing hospitalist continuity of care as a dichotomous variable (Was there weekend cross-coverage?) could incompletely express continuity since discharge likelihood might change with the consecutive number of days that a hospitalist is on service. This study measured the influence of hospitalist continuity throughout the patient’s hospitalization (rather than just the weekend) on daily patient discharge.

METHODS

Study Setting and Databases Used for Analysis

The study was conducted at The Ottawa Hospital, Ontario, Canada, a 1000-bed teaching hospital with 2 campuses and the primary referral center in our region. The division of general internal medicine has 6 patient services (or “teams”) at two campuses led by a staff hospitalist (exclusively general internists), a senior medical resident (2nd year of training), and various numbers of interns and medical students. Staff hospitalists do not treat more than one patient service even on the weekends.

Patients are admitted to each service on a daily basis and almost exclusively from the emergency room. Assignment of patients is essentially random since all services have the same clinical expertise. At a particular campus, the number of patients assigned daily to each service is usually equivalent between teams. Patients almost never switch between teams but may be transferred to another specialty. The study was approved by our local research ethics board.

The Patient Registry Database records for each patient the date and time of admissions (defined as the moment that a patient’s admission request is entered into the database), death or discharge from hospital (defined as the time when the patient’s discharge from hospital was entered into the database), or transfer to another specialty. It also records emergency visits, patient demographics, and location during admission. The Laboratory Database records all laboratory tests and their results.

Study Cohort

The Patient Registry Database was used to identify all individuals who were admitted to the general medicine services between January 1 and December 31, 2015. This time frame was selected to ensure that data were complete and current. General medicine services were analyzed because they are collectively the largest inpatient specialty in the hospital.

Study Outcome

The primary outcome was discharge from hospital as determined from the Patient Registry Database. Patients who died or were transferred to another service were not counted as outcomes.

Covariables

The primary exposure variable was the consecutive number of days (including weekends) that a particular hospitalist rounded on patients on a particular general medicine service. This was measured using call schedules. Other covariates included tomorrow’s expected number of discharges (TEND) daily discharge probability and its components. The TEND model4 used patient factors (age, Laboratory Abnormality Physiological Score [LAPS]5 calculated at admission) and hospitalization factors (hospital campus and service, admission urgency, day of the week, ICU status) to predict the daily discharge probability. In a validation population, these daily discharge probabilities (when summed over a particular day) strongly predicted the daily number of discharges (adjusted R2 of 89.2% [P < .001], median relative difference between observed and expected number of discharges of only 1.4% [Interquartile range,IQR: −5.5% to 7.1%]). The expected annual death risk was determined using the HOMR-now! model.6 This model used routinely collected data available at patient admission regarding the patient (sex, life-table-estimated 1-year death risk, Charlson score, current living location, previous cancer clinic status, and number of emergency department visits in the previous year) and the hospitalization (urgency, service, and LAPS score). The model explained more than half of the total variability in death likelihood of death (Nagelkirke’s R2 value of 0.53),7 was highly discriminative (C-statistic 0.92), and accurately predicted death risk (calibration slope 0.98).

Analysis

Logistic generalized estimating equation (GEE) methods were used to model the adjusted daily discharge probability.8 Data in the analytical dataset were expressed in a patient-day format (each dataset row represented one day for a particular patient). This permitted the inclusion of time-dependent covariates and allowed the GEE model to cluster hospitalization days within patients.

Model construction started with the TEND daily discharge probability and the HOMR-now! expected annual death risk (both expressed as log-odds). Then, hospitalist continuity was entered as a time-dependent covariate (ie, its value changed every day). Linear, square root, and natural logarithm forms of physician continuity were examined to determine the best fit (determined using the QIC statistic9). Finally, individual components of the TEND model were also offered to the model with those which significantly improving fit kept in the model. The GEE model used an independent correlation structure since this minimized the QIC statistic in the base model. All covariates in the final daily discharge probability model were used in the hospital death model. Analyses were conducted using SAS 9.4 (Cary, NC).

RESULTS

There were 6,405 general medicine admissions involving 5208 patients and 38,967 patient-days between January 1 and December 31, 2015 (Appendix A). Patients were elderly and were evenly divided in terms of gender, with 85% of them being admitted from the community. Comorbidities were common (median coded Charlson score was 2), with 6.0% of patients known to our cancer clinic. The median length of stay was 4 days (IQR, 2–7), with 378 admissions (5.9%) ending in death and 121 admissions (1.9%) ending in a transfer to another service.

There were 41 different staff people having at least 1 day on service. The median total service by physicians was 9 weeks (IQR 1.8–10.9 weeks). Changes in hospitalist coverage were common; hospitalizations had a median of 1 (IQR 1–2) physician switches and a median of 1 (IQR 1–2) different physicians. However, patients spent a median of 100% (IQR 66.7%–100%] of their total hospitalization with their primary hospitalist. The median duration of individual physician “stints” on service was 5 days (IQR 2–7, range 1–42).

The TEND model accurately estimated daily discharge probability for the entire cohort with 5833 and 5718.6 observed and expected discharges, respectively, during 38,967 patient-days (O/E 1.02, 95% CI 0.99–1.05). Discharge probability increased as hospitalist continuity increased, but this was statistically significant only when hospitalist continuity exceeded 4 days. Other covariables also significantly influenced discharge probability (Appendix B).

After adjusting for important covariables (Appendix C), hospitalist continuity was significantly associated with daily discharge probability (Figure). Discharge probability increased linearly with increasing consecutive days that hospitalists treated patients. For each additional consecutive day with the same hospitalist, the adjusted daily odds increased by 2% (Adj-odds ratio [OR] 1.02, 95% CI 1.01–1.02, Appendix C). When the consecutive number of days that hospitalists remained on service increased from 1 to 28 days, the adjusted discharge probability for the average patient increased from 18.1% to 25.7%, respectively. Discharge was significantly influenced by other factors (Appendix C). Continuity did not influence the risk of death in hospital (Appendix D).

DISCUSSION

In a general medicine service at a large teaching hospital, this study found that greater hospitalist continuity was associated with a significantly increased adjusted daily discharge probability, increasing (in the average patient) from 18.1% to 25.7% when the consecutive number of hospitalist days on service increased from 1 to 28 days, respectively.

The study demonstrated some interesting findings. First, it shows that shifting patient care between physicians can significantly influence patient outcomes. This could be a function of incomplete transfer of knowledge between physicians, a phenomenon that should be expected given the extensive amount of information–both explicit and implicit–that physicians collect about particular patients during their hospitalization. Second, continuity of care could increase a physician’s and a patient’s confidence in clinical decision-making. Perhaps physicians are subconsciously more trusting of their instincts (and the decisions based on those instincts) when they have been on service for a while. It is also possible that patients more readily trust recommendations of a physician they have had throughout their stay. Finally, people wishing to decrease patient length of stay might consider minimizing the extent that hospitalists sign over patient care to colleagues.

Several issues should be noted when interpreting the results of the study. First, the study examined only patient discharge and death. These are by no means the only or the most important outcomes that might be influenced by hospitalist continuity. Second, this study was limited to a single service at a single center. Third, the analysis did not account for house-staff continuity. Since hospitalist and house-staff at the study hospital invariably switched at different times, it is unlikely that hospitalist continuity was a surrogate for house-staff continuity.

Disclosures

This study was supported by the Department of Medicine, University of Ottawa, Ottawa, Ontario, Canada. The author has nothing to disclose.

In addition to treating patients, physicians frequently have other time commitments that could include administrative, teaching, research, and family duties. Inpatient medicine is particularly unforgiving to these nonclinical duties since patients have to be assessed on a daily basis. Because of this characteristic, it is not uncommon for inpatient care responsibility to be switched between physicians to create time for nonclinical duties and personal health.

In contrast to the ambulatory setting, the influence of physician continuity of care on inpatient outcomes has not been studied frequently. Studies of inpatient continuity have primarily focused on patient discharge (likely because of its objective nature) over the weekends (likely because weekend cross-coverage is common) and have reported conflicting results.1-3 However, discontinuity of care is not isolated to the weekend since hospitalist-switches can occur at any time. In addition, expressing hospitalist continuity of care as a dichotomous variable (Was there weekend cross-coverage?) could incompletely express continuity since discharge likelihood might change with the consecutive number of days that a hospitalist is on service. This study measured the influence of hospitalist continuity throughout the patient’s hospitalization (rather than just the weekend) on daily patient discharge.

METHODS

Study Setting and Databases Used for Analysis

The study was conducted at The Ottawa Hospital, Ontario, Canada, a 1000-bed teaching hospital with 2 campuses and the primary referral center in our region. The division of general internal medicine has 6 patient services (or “teams”) at two campuses led by a staff hospitalist (exclusively general internists), a senior medical resident (2nd year of training), and various numbers of interns and medical students. Staff hospitalists do not treat more than one patient service even on the weekends.

Patients are admitted to each service on a daily basis and almost exclusively from the emergency room. Assignment of patients is essentially random since all services have the same clinical expertise. At a particular campus, the number of patients assigned daily to each service is usually equivalent between teams. Patients almost never switch between teams but may be transferred to another specialty. The study was approved by our local research ethics board.

The Patient Registry Database records for each patient the date and time of admissions (defined as the moment that a patient’s admission request is entered into the database), death or discharge from hospital (defined as the time when the patient’s discharge from hospital was entered into the database), or transfer to another specialty. It also records emergency visits, patient demographics, and location during admission. The Laboratory Database records all laboratory tests and their results.

Study Cohort

The Patient Registry Database was used to identify all individuals who were admitted to the general medicine services between January 1 and December 31, 2015. This time frame was selected to ensure that data were complete and current. General medicine services were analyzed because they are collectively the largest inpatient specialty in the hospital.

Study Outcome

The primary outcome was discharge from hospital as determined from the Patient Registry Database. Patients who died or were transferred to another service were not counted as outcomes.

Covariables

The primary exposure variable was the consecutive number of days (including weekends) that a particular hospitalist rounded on patients on a particular general medicine service. This was measured using call schedules. Other covariates included tomorrow’s expected number of discharges (TEND) daily discharge probability and its components. The TEND model4 used patient factors (age, Laboratory Abnormality Physiological Score [LAPS]5 calculated at admission) and hospitalization factors (hospital campus and service, admission urgency, day of the week, ICU status) to predict the daily discharge probability. In a validation population, these daily discharge probabilities (when summed over a particular day) strongly predicted the daily number of discharges (adjusted R2 of 89.2% [P < .001], median relative difference between observed and expected number of discharges of only 1.4% [Interquartile range,IQR: −5.5% to 7.1%]). The expected annual death risk was determined using the HOMR-now! model.6 This model used routinely collected data available at patient admission regarding the patient (sex, life-table-estimated 1-year death risk, Charlson score, current living location, previous cancer clinic status, and number of emergency department visits in the previous year) and the hospitalization (urgency, service, and LAPS score). The model explained more than half of the total variability in death likelihood of death (Nagelkirke’s R2 value of 0.53),7 was highly discriminative (C-statistic 0.92), and accurately predicted death risk (calibration slope 0.98).

Analysis

Logistic generalized estimating equation (GEE) methods were used to model the adjusted daily discharge probability.8 Data in the analytical dataset were expressed in a patient-day format (each dataset row represented one day for a particular patient). This permitted the inclusion of time-dependent covariates and allowed the GEE model to cluster hospitalization days within patients.

Model construction started with the TEND daily discharge probability and the HOMR-now! expected annual death risk (both expressed as log-odds). Then, hospitalist continuity was entered as a time-dependent covariate (ie, its value changed every day). Linear, square root, and natural logarithm forms of physician continuity were examined to determine the best fit (determined using the QIC statistic9). Finally, individual components of the TEND model were also offered to the model with those which significantly improving fit kept in the model. The GEE model used an independent correlation structure since this minimized the QIC statistic in the base model. All covariates in the final daily discharge probability model were used in the hospital death model. Analyses were conducted using SAS 9.4 (Cary, NC).

RESULTS

There were 6,405 general medicine admissions involving 5208 patients and 38,967 patient-days between January 1 and December 31, 2015 (Appendix A). Patients were elderly and were evenly divided in terms of gender, with 85% of them being admitted from the community. Comorbidities were common (median coded Charlson score was 2), with 6.0% of patients known to our cancer clinic. The median length of stay was 4 days (IQR, 2–7), with 378 admissions (5.9%) ending in death and 121 admissions (1.9%) ending in a transfer to another service.

There were 41 different staff people having at least 1 day on service. The median total service by physicians was 9 weeks (IQR 1.8–10.9 weeks). Changes in hospitalist coverage were common; hospitalizations had a median of 1 (IQR 1–2) physician switches and a median of 1 (IQR 1–2) different physicians. However, patients spent a median of 100% (IQR 66.7%–100%] of their total hospitalization with their primary hospitalist. The median duration of individual physician “stints” on service was 5 days (IQR 2–7, range 1–42).

The TEND model accurately estimated daily discharge probability for the entire cohort with 5833 and 5718.6 observed and expected discharges, respectively, during 38,967 patient-days (O/E 1.02, 95% CI 0.99–1.05). Discharge probability increased as hospitalist continuity increased, but this was statistically significant only when hospitalist continuity exceeded 4 days. Other covariables also significantly influenced discharge probability (Appendix B).

After adjusting for important covariables (Appendix C), hospitalist continuity was significantly associated with daily discharge probability (Figure). Discharge probability increased linearly with increasing consecutive days that hospitalists treated patients. For each additional consecutive day with the same hospitalist, the adjusted daily odds increased by 2% (Adj-odds ratio [OR] 1.02, 95% CI 1.01–1.02, Appendix C). When the consecutive number of days that hospitalists remained on service increased from 1 to 28 days, the adjusted discharge probability for the average patient increased from 18.1% to 25.7%, respectively. Discharge was significantly influenced by other factors (Appendix C). Continuity did not influence the risk of death in hospital (Appendix D).

DISCUSSION

In a general medicine service at a large teaching hospital, this study found that greater hospitalist continuity was associated with a significantly increased adjusted daily discharge probability, increasing (in the average patient) from 18.1% to 25.7% when the consecutive number of hospitalist days on service increased from 1 to 28 days, respectively.

The study has several interesting findings. First, it shows that shifting patient care between physicians can significantly influence patient outcomes. This could be a function of incomplete transfer of knowledge between physicians, a phenomenon that should be expected given the extensive amount of information (both explicit and implicit) that physicians collect about particular patients during their hospitalization. Second, continuity of care could increase a physician’s and a patient’s confidence in clinical decision-making. Perhaps physicians are subconsciously more trusting of their instincts (and the decisions based on those instincts) when they have been on service for a while. It is also possible that patients more readily trust the recommendations of a physician who has cared for them throughout their stay. Finally, those wishing to decrease patient length of stay might consider minimizing the extent to which hospitalists sign over patient care to colleagues.

Several issues should be noted when interpreting the results of the study. First, the study examined only patient discharge and death. These are by no means the only, or the most important, outcomes that might be influenced by hospitalist continuity. Second, this study was limited to a single service at a single center. Third, the analysis did not account for house-staff continuity. Since hospitalists and house staff at the study hospital invariably switched at different times, it is unlikely that hospitalist continuity was a surrogate for house-staff continuity.

Disclosures

This study was supported by the Department of Medicine, University of Ottawa, Ottawa, Ontario, Canada. The author has nothing to disclose.

1. Ali NA, Hammersley J, Hoffmann SP, et al. Continuity of care in intensive care units: a cluster-randomized trial of intensivist staffing. Am J Respir Crit Care Med. 2011;184(7):803-808.

2. Epstein K, Juarez E, Epstein A, Loya K, Singer A. The impact of fragmentation of hospitalist care on length of stay. J Hosp Med. 2010;5(6):335-338.

3. Blecker S, Shine D, Park N, et al. Association of weekend continuity of care with hospital length of stay. Int J Qual Health Care. 2014;26(5):530-537.

4. van Walraven C, Forster AJ. The TEND (Tomorrow’s Expected Number of Discharges) model accurately predicted the number of patients who were discharged from the hospital in the next day. J Hosp Med. In press.

5. Escobar GJ, Greene JD, Scheirer P, Gardner MN, Draper D, Kipnis P. Risk-adjusting hospital inpatient mortality using automated inpatient, outpatient, and laboratory databases. Med Care. 2008;46(3):232-239.

6. van Walraven C, Forster AJ. HOMR-now! A modification of the HOMR score that predicts 1-year death risk for hospitalized patients using data immediately available at patient admission. Am J Med. In press.

7. Nagelkerke NJ. A note on a general definition of the coefficient of determination. Biometrika. 1991;78(3):691-692.

8. Stokes ME, Davis CS, Koch GG. Generalized estimating equations. In: Categorical Data Analysis Using the SAS System. 2nd ed. Cary, NC: SAS Institute Inc; 2000:469-549.

9. Pan W. Akaike’s information criterion in generalized estimating equations. Biometrics. 2001;57(1):120-125.


© 2018 Society of Hospital Medicine

The TEND (Tomorrow’s Expected Number of Discharges) Model Accurately Predicted the Number of Patients Who Were Discharged from the Hospital the Next Day

Hospitals typically allocate beds based on historical patient volumes. If funding decreases, hospitals will usually try to maximize resource utilization by allocating beds to attain occupancies close to 100% for significant periods of time. This inevitably leads to days on which hospital occupancy exceeds capacity, at which time critical entry points (such as the emergency department and operating room) become blocked. This raises significant concerns about the quality of patient care.

Hospital administrators have very few options when hospital occupancy exceeds 100%. They could postpone admissions for “planned” cases, bring in additional staff to increase capacity, or implement additional measures to increase hospital discharges, such as expanding care resources in the community. All of these options are costly, burdensome, or cannot be implemented immediately. The need for them could be minimized if hospital administrators knew the likely number of discharges in the next 24 hours, enabling more informed decisions about hospital bed management.

Predicting the number of people who will be discharged in the next day can be approached in several ways. One approach would be to calculate each patient’s expected length of stay and then use the variation around that estimate to calculate each day’s discharge probability. Several studies have attempted to model hospital length of stay using a broad assortment of methodologies, but a mechanism to accurately predict this outcome has been elusive1,2 (with Verburg et al.3 concluding in their study’s abstract that “…it is difficult to predict length of stay…”). A second approach would be to use survival analysis methods to generate each patient’s hazard of discharge over time, which could be directly converted to an expected daily risk of discharge. However, this approach is complicated by the concurrent need to include time-dependent covariates and to account for the competing risk of death in hospital.4,5 A third approach would be to implement a longitudinal analysis using marginal models to predict the daily probability of discharge,6 but this method quickly overwhelms computing resources when datasets are large.

In this study, we used nonparametric models to predict the daily number of hospital discharges. We first identified patient groups with distinct discharge patterns. We then calculated the conditional daily discharge probability of patients in each of these groups. Finally, we summed these conditional daily discharge probabilities across all patients in hospital on each day to generate the expected number of discharges in the next 24 hours. This paper details the methods we used to create our model and the accuracy of its predictions.
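The final step described above (summing patient-level probabilities) can be written in a few lines. In this minimal Python sketch, each patient in hospital contributes a discharge probability for the next 24 hours, and the expected count for the date is their sum. The variance is an addition not described in the article: it assumes patients’ discharges are independent, in which case the daily count follows a Poisson-binomial distribution. The function name is hypothetical.

```python
from typing import Sequence, Tuple


def expected_discharges(probabilities: Sequence[float]) -> Tuple[float, float]:
    """Expected number of discharges for one date, plus its variance.

    Each in-hospital patient is a Bernoulli trial with their own discharge
    probability. The expectation is exact; the variance additionally
    assumes independence between patients (an illustrative assumption).
    """
    for p in probabilities:
        if not 0.0 <= p <= 1.0:
            raise ValueError(f"probability out of range: {p}")
    expected = sum(probabilities)
    variance = sum(p * (1.0 - p) for p in probabilities)
    return expected, variance


# Hypothetical probabilities for four patients in hospital at midnight.
expected, variance = expected_discharges([0.5, 0.5, 0.25, 0.25])
print(expected, variance)
```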

METHODS

Study Setting and Databases Used for Analysis

The study took place at The Ottawa Hospital, a 1000-bed teaching hospital with 3 campuses that is the primary referral center in our region. The study was approved by our local research ethics board.

The Patient Registry Database records the date and time of admission for each patient (defined as the moment that the patient’s admission request is registered in the patient registration system) and of discharge (defined as the time when the patient’s discharge from hospital is entered into the patient registration system) for hospital encounters. Emergency department encounters were also identified in the Patient Registry Database, along with admission service, patient age and sex, and patient location throughout the admission. The Laboratory Database records all laboratory studies and results for all patients at the hospital.

Study Cohort

We used the Patient Registry Database to identify all people aged 1 year or more who were admitted to the hospital between January 1, 2013, and December 31, 2015. This time frame was selected to ensure that (i) data were complete and (ii) complete calendar years of data were available for both the derivation (patient-days in 2013-2014) and validation (2015) cohorts. Patients who were observed in the emergency department without admission to hospital were not included.

Study Outcome

The study outcome was the number of patients discharged from the hospital each day. For the analysis, the reference point for each day was 1 second past midnight; therefore, values for time-dependent covariates up to and including midnight were used to predict the number of discharges in the next 24 hours.
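The daily reference point can be made concrete with a small census function. This is a sketch under assumptions not spelled out in the article: a patient counts toward a date if they were admitted before 00:00:01 and had not yet been discharged at that moment, and counts as a discharge for that date if discharged before 00:00:01 on the following day. The `Stay` record and `daily_snapshot` function are hypothetical names.

```python
from dataclasses import dataclass
from datetime import date, datetime, timedelta
from typing import Iterable, Optional, Tuple


@dataclass
class Stay:
    admit: datetime
    discharge: Optional[datetime]  # None while the patient is still in hospital


def daily_snapshot(stays: Iterable[Stay], day: date) -> Tuple[int, int]:
    """Return (patients in hospital at 00:00:01 on `day`, of whom discharged in the next 24 h)."""
    ref = datetime.combine(day, datetime.min.time()) + timedelta(seconds=1)
    next_ref = ref + timedelta(days=1)
    census = [
        s for s in stays
        if s.admit < ref and (s.discharge is None or s.discharge >= ref)
    ]
    discharged = sum(
        1 for s in census if s.discharge is not None and s.discharge < next_ref
    )
    return len(census), discharged


stays = [
    Stay(datetime(2015, 12, 17, 10, 0), datetime(2015, 12, 19, 14, 0)),  # in census, discharged
    Stay(datetime(2015, 12, 18, 8, 0), datetime(2015, 12, 21, 9, 0)),    # in census, stays on
    Stay(datetime(2015, 12, 19, 9, 0), datetime(2015, 12, 19, 18, 0)),   # admitted after reference point
    Stay(datetime(2015, 12, 10, 7, 0), datetime(2015, 12, 18, 20, 0)),   # discharged before reference point
    Stay(datetime(2015, 12, 1, 12, 0), None),                            # still in hospital
]
print(daily_snapshot(stays, date(2015, 12, 19)))
```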

Study Covariates

Baseline (ie, time-independent) covariates included patient age and sex, admission service, hospital campus, whether or not the patient was admitted from the emergency department (all determined from the Patient Registry Database), and the Laboratory-based Acute Physiological Score (LAPS). The LAPS was derived by Escobar and colleagues7 to measure severity of illness and was subsequently validated in our hospital.8 We calculated it with the Laboratory Database using results for 14 tests (arterial pH, PaCO2, PaO2, anion gap, hematocrit, total white blood cell count, serum albumin, total bilirubin, creatinine, urea nitrogen, glucose, sodium, bicarbonate, and troponin I) measured in the 24-hour time frame preceding hospitalization. The number of points assigned to each laboratory value reflects the independent association of that laboratory perturbation with risk of death in hospital, and the total LAPS is the sum of these points. Time-dependent covariates included the day of the week of each hospital day and whether or not the patient was in the intensive care unit.

Analysis

We used 3 stages to create a model to predict the daily expected number of discharges. In the first stage, we used data from patients in the derivation cohort to identify discharge risk strata containing patients with similar discharge patterns. In the second stage, we generated the preliminary probability of discharge by determining the daily discharge probability in each discharge risk stratum. In the third stage, we modified the probability from the second stage based on the weekday and admission service and summed these probabilities to create the expected number of discharges on a particular date.

The first stage identified discharge risk strata based on the covariates listed above, using a survival tree approach9 with proportional hazards regression models to generate the “splits.” These models were offered all covariates listed in the Study Covariates section. Admission service was clustered within 4 departments (obstetrics/gynecology, psychiatry, surgery, and medicine), and day of week was “binarized” into weekday/weekend-holiday, because categorical variables with many levels can “stunt” regression trees by leaving few patients (and, therefore, little statistical power) in each subgroup. The proportional hazards model identified the covariate having the strongest association with time to discharge (based on the Wald χ2 value divided by its degrees of freedom). This variable was then used to split the cohort into subgroups (with continuous covariates categorized into quartiles). The proportional hazards model was then repeated in each subgroup, with the previous splitting variable(s) excluded from the model. This process continued until no variable was associated with time to discharge with a P value less than .0001. The resulting survival tree was then used to cluster all patients into distinct discharge risk strata.
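The splitting loop above can be sketched in plain Python. This is an illustration, not the authors’ implementation: to stay dependency-free it swaps the Cox proportional hazards Wald χ2 for a two-group log-rank χ2 (also 1 degree of freedom for a binary split), and it assumes every covariate has already been reduced to 0/1 (the quartile categorization of continuous covariates is not shown). The .0001 stopping threshold and the rule that a splitting variable is dropped within its subgroups follow the text; all function names and the demo data are hypothetical.

```python
import math
from typing import Dict, List, Tuple

# One record per patient: (days to discharge, 1 if discharged else 0, binary covariates)
Record = Tuple[float, int, Dict[str, int]]


def logrank_chi2(records: List[Record], var: str) -> float:
    """Two-group log-rank chi-square (1 df) comparing var == 1 with var == 0."""
    event_times = sorted({t for t, event, _ in records if event})
    o_minus_e, variance = 0.0, 0.0
    for t in event_times:
        at_risk = [r for r in records if r[0] >= t]
        n = len(at_risk)
        n1 = sum(1 for r in at_risk if r[2][var] == 1)
        d = sum(1 for r in at_risk if r[0] == t and r[1])
        d1 = sum(1 for r in at_risk if r[0] == t and r[1] and r[2][var] == 1)
        o_minus_e += d1 - d * n1 / n
        if n > 1:
            variance += d * (n1 / n) * (1 - n1 / n) * (n - d) / (n - 1)
    return o_minus_e ** 2 / variance if variance > 0 else 0.0


def chi2_1df_pvalue(x: float) -> float:
    """Upper-tail p-value of a chi-square statistic with 1 degree of freedom."""
    return math.erfc(math.sqrt(x / 2.0))


def build_tree(records: List[Record], variables: List[str], alpha: float = 1e-4) -> dict:
    """Recursively split on the covariate with the largest chi-square per df."""
    best_var, best_score = None, 0.0
    for var in variables:
        if len({r[2][var] for r in records}) < 2:
            continue  # covariate is constant in this subgroup
        df = 1  # a binary split always has one degree of freedom
        score = logrank_chi2(records, var) / df
        if score > best_score:
            best_var, best_score = var, score
    if best_var is None or chi2_1df_pvalue(best_score) >= alpha:
        return {"leaf": True, "n": len(records)}
    remaining = [v for v in variables if v != best_var]  # drop the used splitter
    children = {
        level: build_tree([r for r in records if r[2][best_var] == level], remaining, alpha)
        for level in (0, 1)
    }
    return {"leaf": False, "split": best_var, "chi2": best_score, "children": children}


def assign_stratum(tree: dict, covariates: Dict[str, int], path: str = "root") -> str:
    """Follow the splits down to a leaf; the path string serves as the stratum label."""
    if tree["leaf"]:
        return path
    level = covariates[tree["split"]]
    return assign_stratum(tree["children"][level], covariates, f"{path}/{tree['split']}={level}")


# Synthetic demo: "obgyn" patients leave on day 1, others on day 5;
# "weekend" alternates and carries no signal.
demo = [(1.0 if i < 30 else 5.0, 1, {"obgyn": int(i < 30), "weekend": i % 2}) for i in range(60)]
demo_tree = build_tree(demo, ["obgyn", "weekend"])
print(demo_tree["split"], assign_stratum(demo_tree, {"obgyn": 1, "weekend": 0}))
```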

In the second stage, we generated the preliminary probability of discharge for a specific date by assigning all patients in hospital to their discharge risk strata (Appendix A). We then measured the probability of discharge on each hospitalization day in all discharge risk strata using data from the previous 180 days only, to account for temporal changes in hospital discharge patterns. For example, consider a 75-year-old patient on her third hospital day under obstetrics/gynecology on December 19, 2015 (a Saturday). This patient would be assigned to risk stratum #133 (Appendix A). We then measured the probability of discharge on each hospital day for all patients in this discharge risk stratum hospitalized in the previous 6 months (ie, between June 22, 2015, and December 18, 2015). For risk stratum #133, the probability of discharge on hospital day 3 was 0.1111; therefore, our sample patient’s preliminary expected discharge probability was 0.1111.
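The 180-day look-back window in the example can be checked with the standard library. This minimal sketch assumes the window ends the day before the analysis date and spans exactly 180 calendar days, which reproduces the June 22 to December 18, 2015 range and the Saturday given above; `lookback_window` is a hypothetical helper.

```python
from datetime import date, timedelta
from typing import Tuple


def lookback_window(analysis_date: date, days: int = 180) -> Tuple[date, date]:
    """First and last calendar dates (inclusive) of the window preceding analysis_date."""
    start = analysis_date - timedelta(days=days)
    end = analysis_date - timedelta(days=1)
    return start, end


analysis_date = date(2015, 12, 19)
start, end = lookback_window(analysis_date)
print(start, end, analysis_date.strftime("%A"))
```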

To attain stable daily discharge probability estimates, we required a minimum of 50 patients per discharge risk stratum-hospitalization day combination. If there were fewer than 50 patients for a particular hospitalization day in a particular discharge risk stratum, we grouped hospitalization days in that risk stratum together until the minimum of 50 patients was reached.
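The pooling rule can be sketched as follows. The article does not say exactly how sparse days were grouped, so this Python sketch assumes one plausible rule: walk through hospital days in order, accumulate consecutive days until at least 50 patients are collected, give every day in that group the pooled probability, and fold any undersized remainder at the end into the previous group. The input format, function name, and counts are hypothetical.

```python
from typing import Dict, List, Tuple


def pooled_daily_probabilities(
    counts: Dict[int, Tuple[int, int]], min_patients: int = 50
) -> Dict[int, float]:
    """Daily discharge probability per hospital day, pooling sparse days.

    counts maps hospital day -> (patients in hospital that day, patients
    discharged that day) for ONE discharge risk stratum over the look-back
    window.
    """
    groups: List[List[int]] = []
    current: List[int] = []
    current_n = 0
    for day in sorted(counts):
        current.append(day)
        current_n += counts[day][0]
        if current_n >= min_patients:
            groups.append(current)
            current, current_n = [], 0
    if current:
        if groups:
            groups[-1].extend(current)  # undersized tail joins the previous group
        else:
            groups.append(current)  # whole stratum is below the minimum
    probabilities: Dict[int, float] = {}
    for group in groups:
        n = sum(counts[d][0] for d in group)
        discharged = sum(counts[d][1] for d in group)
        p = discharged / n if n else 0.0
        for d in group:
            probabilities[d] = p
    return probabilities


# Hypothetical counts for one risk stratum: day -> (patients in hospital, discharged)
counts = {1: (120, 10), 2: (110, 20), 3: (90, 10), 4: (30, 6), 5: (15, 3), 6: (8, 2)}
probs = pooled_daily_probabilities(counts)
print({day: round(p, 4) for day, p in probs.items()})
```

Here days 4 to 6 (30 + 15 + 8 = 53 patients) share one pooled probability, while days 1 to 3 each have enough patients on their own.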

The third (and final) stage accounted for the lack of granularity in the discharge risk strata created in the first stage. As mentioned above, admission service was clustered into 4 departments and day of week was clustered into weekday/weekend. However, important variations in discharge probabilities could still exist within departments and between particular days of the week.10 Therefore, we created a correction factor to adjust the preliminary expected number of discharges based on the admission division and day of week. The correction factor was calculated from the 180 days preceding the analysis date, for which the expected daily number of discharges was computed using the methods above. The correction factor was the relative difference between the observed and expected number of discharges within each division-day of week grouping.

For example, to calculate the correction factor for our sample patient presented above (75-year-old patient on hospital day 3 under gynecology on Saturday, December 19, 2015), we measured the observed number of discharges from gynecology on Saturdays between June 22, 2015, and December 18, 2015 (n = 206) and the expected number of discharges (n = 195.255), resulting in a correction factor of (observed − expected)/expected = (206 − 195.255)/195.255 = 0.05503. Therefore, the final expected discharge probability for our sample patient was 0.1111 + 0.1111 × 0.05503 = 0.1172. The expected number of discharges on a particular date was the preliminary expected number of discharges on that date (generated in the second stage) multiplied by 1 plus the correction factor for the corresponding division-day of week group.
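The correction-factor arithmetic in the worked example can be reproduced directly. In this sketch (hypothetical function names), the factor is (observed − expected)/expected for a division-day of week group, and it scales a probability, or a group’s preliminary expected count for a date, by (1 + factor). Because the adjustment is multiplicative, correcting each patient and then summing gives the same total as correcting the summed count.

```python
from typing import Sequence


def correction_factor(observed: float, expected: float) -> float:
    """Relative difference between observed and expected discharges."""
    return (observed - expected) / expected


def apply_correction(value: float, factor: float) -> float:
    """Scale a preliminary probability or expected count by (1 + factor)."""
    return value * (1.0 + factor)


def corrected_expected_count(preliminary: Sequence[float], factor: float) -> float:
    """Corrected expected discharges for one division-day of week group on a date."""
    return apply_correction(sum(preliminary), factor)


# Gynecology, Saturdays, June 22 to December 18, 2015 (values from the example).
factor = correction_factor(observed=206, expected=195.255)
final_p = apply_correction(0.1111, factor)
print(f"correction factor {factor:.5f}, final probability {final_p:.4f}")
```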

RESULTS

There were 192,859 admissions involving patients more than 1 year of age who spent at least part of their hospitalization between January 1, 2013, and December 31, 2015 (Table). Patients were middle-aged and slightly predominantly female, and about half were admitted from the emergency department. Approximately 80% of admissions were to surgical or medical services. More than 95% of admissions ended with a discharge from the hospital, with the remainder ending in death. Almost 30% of hospitalization days occurred on weekends or holidays. Hospitalizations in the derivation (2013-2014) and validation (2015) groups were essentially the same, except for a slight drop in hospital length of stay (from a median of 4 days to 3 days) between the 2 periods.

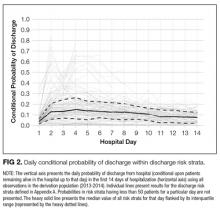

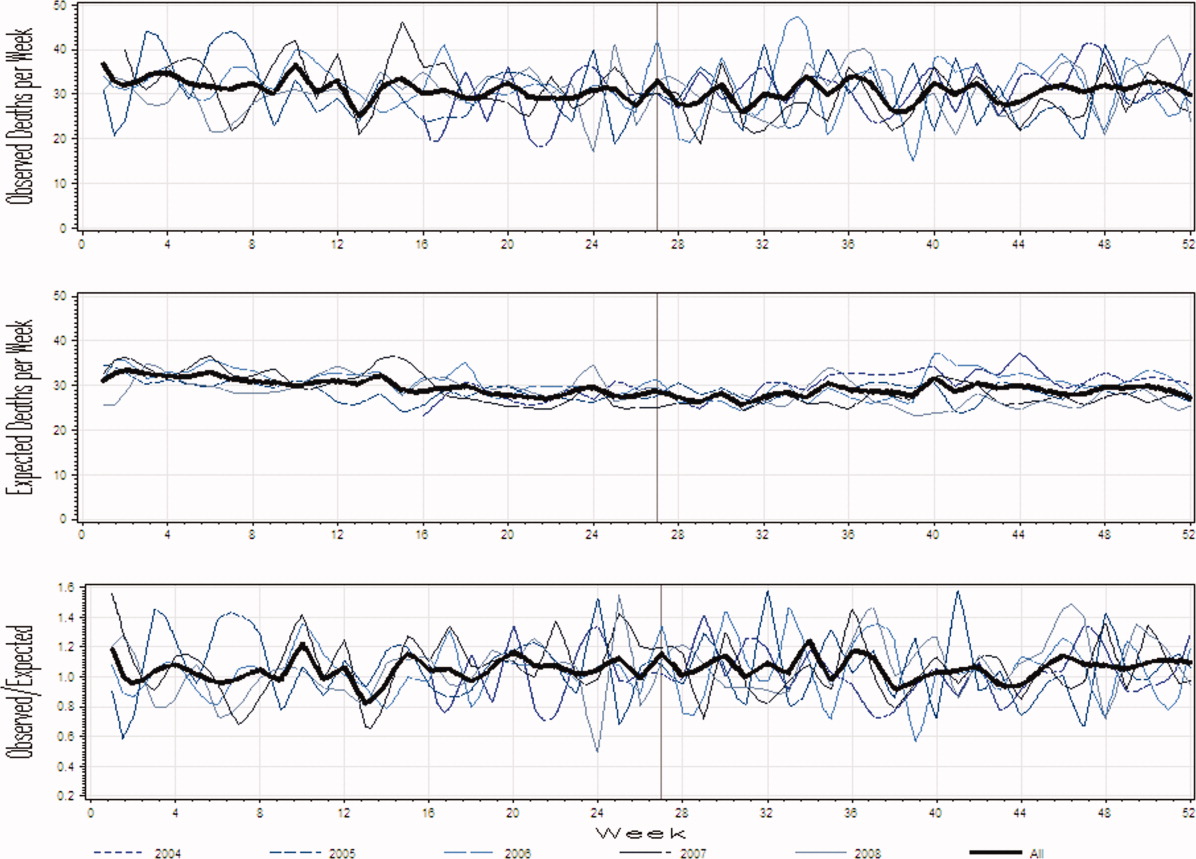

Patient and hospital covariates substantially influenced the daily conditional probability of discharge (Figure 1). Patients admitted to the obstetrics/gynecology department were notably more likely to be discharged from hospital, with no influence from the day of week. In contrast, the probability of discharge decreased notably on weekends in the other departments. Patients on the ward were much more likely to be discharged than those in the intensive care unit, with increasing age associated with a decreased discharge likelihood in the former but not the latter patients. Finally, discharge probabilities varied only slightly between campuses at our hospital, and discharge risk decreased as severity of illness (as measured by LAPS) increased.

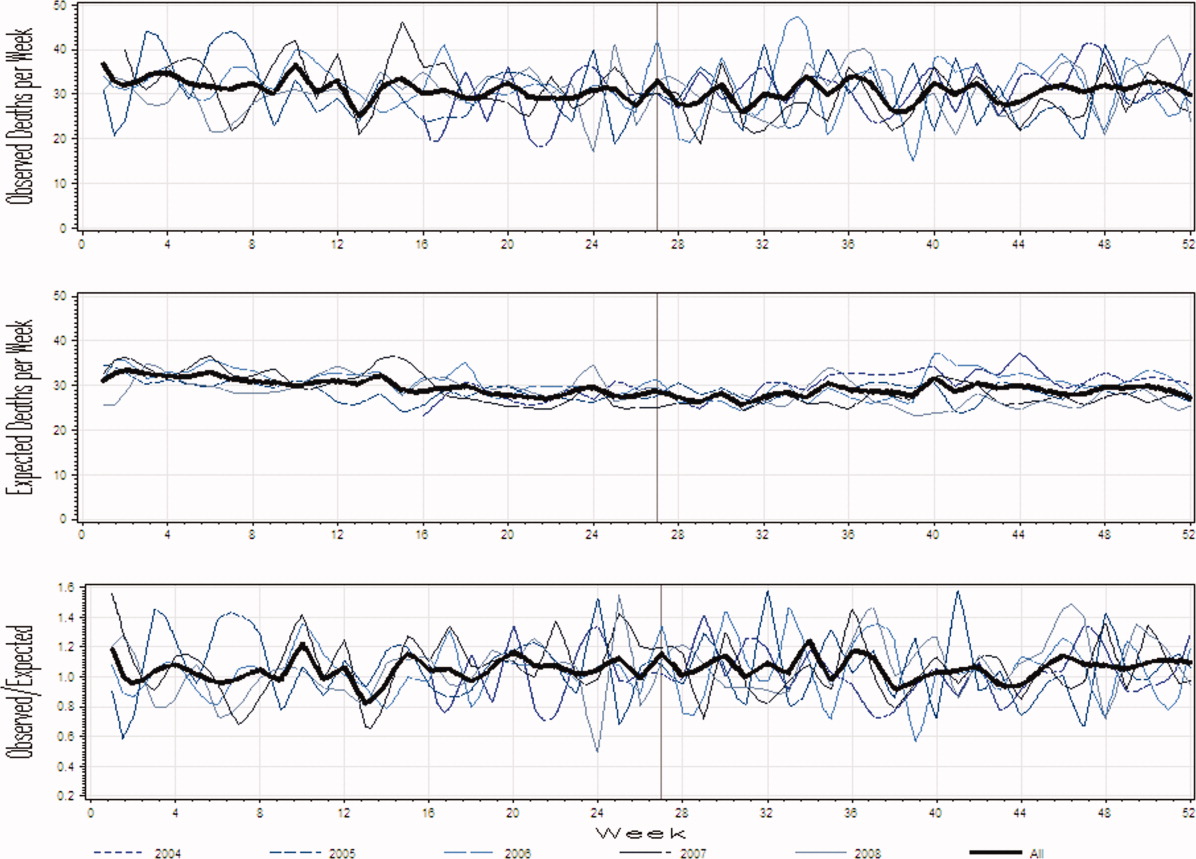

The TEND model contained 142 discharge risk strata (Appendix A). Weekend-holiday status had the strongest association with discharge probability (ie, it was the first splitting variable). The most complex discharge risk strata were defined by 6 covariates. The daily conditional probability of discharge during the first 2 weeks of hospitalization varied extensively between discharge risk strata (Figure 2). Overall, the conditional discharge probability increased from the first to the second day, remained relatively stable for several days, and then slowly decreased over time. However, this pattern and the day-to-day variability differed extensively between risk strata.

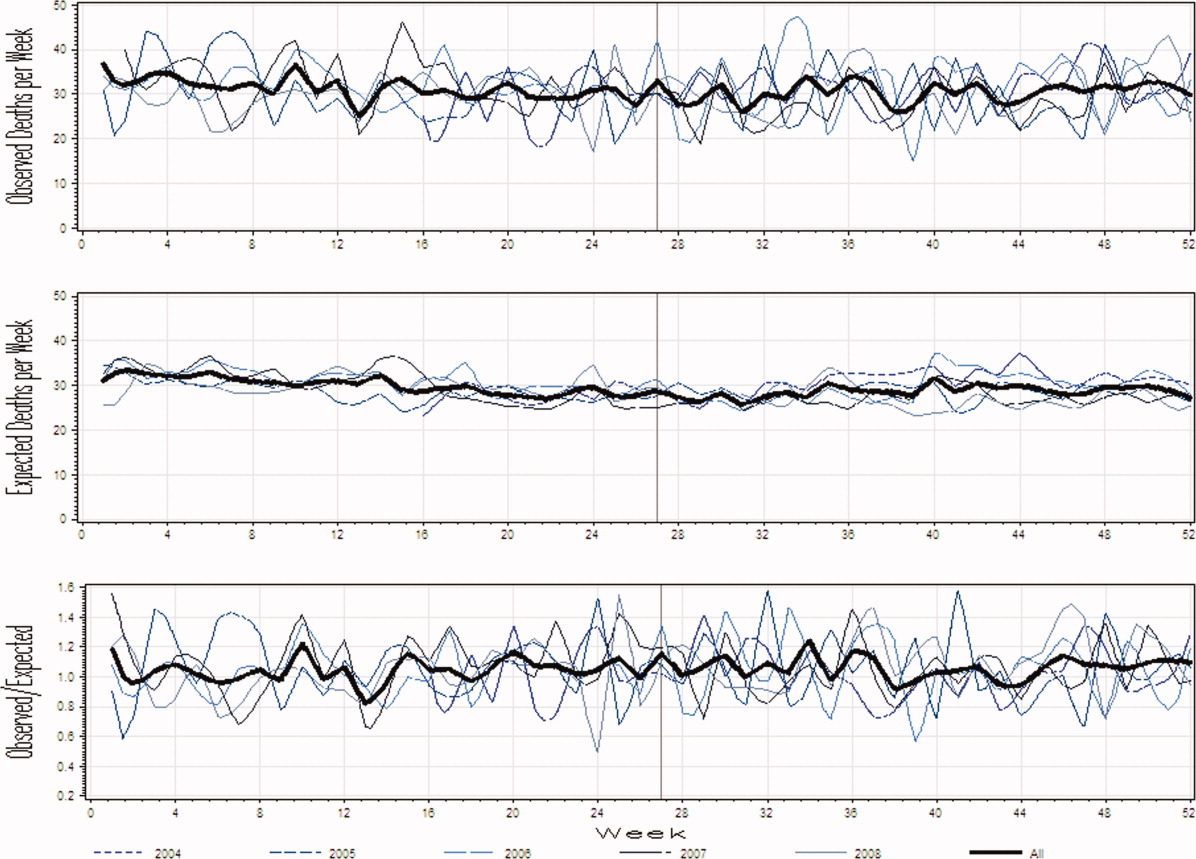

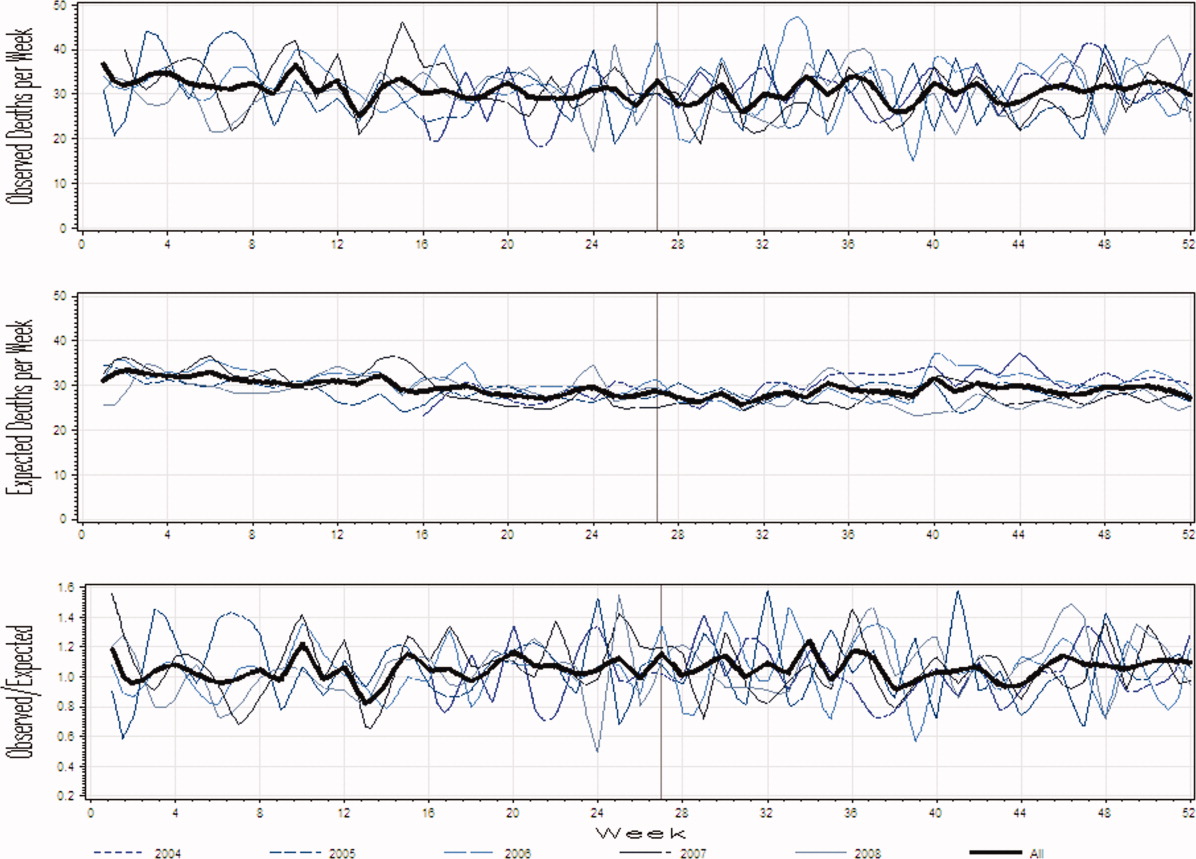

The observed daily number of discharges in the validation cohort varied extensively (median 139; interquartile range [IQR] 95-160; range 39-214). The TEND model accurately predicted the daily number of discharges, with the expected daily number being strongly associated with the observed number (adjusted R² = 89.2%; P < 0.0001; Figure 3). Calibration decreased but remained significant when we limited the analyses by hospital campus (General: R² = 46.3%; P < 0.0001; Civic: R² = 47.9%; P < 0.0001; Heart Institute: R² = 18.1%; P < 0.0001). The expected number of daily discharges was an unbiased estimator of the observed number of discharges (its parameter estimate in a linear regression model with the observed number of discharges as the outcome variable was 1.0005; 95% confidence interval, 0.9647-1.0363). The absolute difference between the observed and expected daily number of discharges was small (median 1.6; IQR −6.8 to 9.4; range −37 to 63.4), as was the relative difference (median 1.4%; IQR −5.5% to 7.1%; range −40.9% to 43.4%). The expected number of discharges was within 20% of the observed number of discharges on 95.1% of days in 2015.
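The agreement statistics reported above can be computed from paired daily counts. This sketch uses hypothetical data and makes two assumptions the article does not spell out: differences are taken as observed minus expected, and the unbiasedness check is a least-squares regression of observed on expected through the origin (a slope near 1 indicating no systematic over- or under-prediction). The "within 20%" criterion is measured relative to the observed count, as in the text. The function name is hypothetical.

```python
import statistics
from typing import Dict, Sequence


def agreement_summary(observed: Sequence[float], expected: Sequence[float]) -> Dict[str, float]:
    """Summarize agreement between observed and expected daily discharge counts."""
    if len(observed) != len(expected) or not observed:
        raise ValueError("need equal-length, non-empty sequences")
    diffs = [o - e for o, e in zip(observed, expected)]
    within_20 = sum(1 for o, e in zip(observed, expected) if abs(e - o) <= 0.2 * o)
    # Least-squares slope of observed on expected with no intercept (assumption).
    slope = sum(o * e for o, e in zip(observed, expected)) / sum(e * e for e in expected)
    return {
        "median_difference": statistics.median(diffs),
        "share_within_20pct": within_20 / len(observed),
        "slope_through_origin": slope,
    }


# Hypothetical observed and expected counts for four days.
summary = agreement_summary([100, 120, 80, 150], [98, 125, 100, 150])
print(summary)
```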

DISCUSSION

Knowing how many patients will soon be discharged from the hospital should greatly facilitate hospital planning. This study showed that the TEND model used simple patient and hospitalization covariates to accurately predict the number of patients who will be discharged from hospital in the next day.

We believe that this study has several notable findings. First, we think that using a nonparametric approach to predicting the daily number of discharges meaningfully increased accuracy. This approach allowed us to generate expected likelihoods based on actual discharge probabilities at our hospital during the most recent 6 months of hospitalization-days among patients whose discharge patterns were very similar to those of the patient in question (ie, discharge risk strata, Appendix A). This ensured that trends in hospitalization habits were accounted for without the need for a period variable in our model. In addition, the lack of parameters in the model should make it easier to transplant it to other hospitals. Second, we think that the accuracy of the predictions was remarkable given the relative “crudeness” of our predictors. By using relatively simple factors, the TEND model was able to output accurate predictions for the number of daily discharges (Figure 3).

This study joins several others that have attempted to accomplish the difficult task of predicting the number of hospital discharges by using digitized data. Barnes et al.11 created a model using regression random forest methods in a single medical service within a hospital to predict the daily number of discharges with impressive accuracy (mean daily number of discharges observed 8.29, expected 8.51). Interestingly, their model was more accurate than physicians at predicting discharge likelihood. Levin et al.12 derived a model using discrete time logistic regression to predict the likelihood of discharge from a pediatric intensive care unit, finding that physician orders (captured via electronic order entry) could be categorized and used to significantly increase the accuracy of discharge likelihood predictions. That study demonstrates the potential opportunities within health-related data from hospital data warehouses to improve prediction. We believe that continued work in this field will result in increased use of digital data to help hospital administrators manage patient beds more efficiently and effectively than the resource-intensive manual methods currently used.13,14

Several issues should be kept in mind when interpreting our findings. First, our analysis is limited to a single institution in Canada. It will be important to determine whether the TEND model methodology generalizes to other hospitals in different jurisdictions; such an external validation, especially in multiple hospitals, will be needed to show that the methodology works in other facilities. Hospitals could implement the TEND model if they are able to record daily values for each of the variables required to assign patients to a discharge risk stratum (Appendix A) and to calculate the daily probability of discharge within each stratum. Hospitals could also derive their own discharge risk strata to account for covariates that we did not include in our study but that could be influential, such as insurance status. These discharge risk estimates could also be incorporated into the electronic medical record or hospital dashboards (as long as the data required to generate the estimates are available). These interventions would permit the expected number of hospital discharges (and even the patient-level probability of discharge) to be calculated on a daily basis. Second, 2 potential biases could have influenced the identification of our discharge risk strata (Appendix A). In this process, we used survival tree methods to separate patient-days into clusters having progressively more homogeneous discharge patterns. Each split was determined by using a proportional hazards model that ignored the competing risk of death in hospital. In addition, the model expressed age and LAPS as continuous variables, whereas these covariates had to be categorized to create our risk strata groupings. The strength of a covariate’s association with an outcome will decrease when a continuous variable is categorized.15 Both of these issues might have biased our final risk strata categorization (Appendix A).
Third, we limited our model to include simple covariates whose values could be determined relatively easily within most hospital administrative data systems. While this increases the generalizability to other hospital information systems, we believe that the introduction of other covariates to the model—such as daily vital signs, laboratory results, medications, or time from operations—could increase prediction accuracy. Finally, it is uncertain whether or not knowing the predicted number of discharges will improve the efficiency of bed management within the hospital. It seems logical that an accurate prediction of the number of beds that will be made available in the next day should improve decisions regarding the number of patients who could be admitted electively to the hospital. It remains to be seen, however, whether this truly happens.

In summary, we found that the TEND model used a handful of patient and hospitalization factors to accurately predict the expected number of discharges from hospital in the next day. Further work is required to implement this model into our institution’s data warehouse and then determine whether this prediction will improve the efficiency of bed management at our hospital.

Disclosure: CvW is supported by a University of Ottawa Department of Medicine Clinician Scientist Chair. The authors have no conflicts of interest.

1. Austin PC, Rothwell DM, Tu JV. A comparison of statistical modeling strategies for analyzing length of stay after CABG surgery. Health Serv Outcomes Res Methodol. 2002;3:107-133.

2. Moran JL, Solomon PJ. A review of statistical estimators for risk-adjusted length of stay: analysis of the Australian and New Zealand intensive care adult patient data-base, 2008-2009. BMC Med Res Methodol. 2012;12:68.

3. Verburg IWM, de Keizer NF, de Jonge E, Peek N. Comparison of regression methods for modeling intensive care length of stay. PLoS One. 2014;9:e109684.

4. Beyersmann J, Schumacher M. Time-dependent covariates in the proportional subdistribution hazards model for competing risks. Biostatistics. 2008;9:765-776.

5. Latouche A, Porcher R, Chevret S. A note on including time-dependent covariate in regression model for competing risks data. Biom J. 2005;47:807-814.

6. Fitzmaurice GM, Laird NM, Ware JH. Marginal models: generalized estimating equations. In: Applied Longitudinal Analysis. 2nd ed. John Wiley & Sons; 2011:353-394.

7. Escobar GJ, Greene JD, Scheirer P, Gardner MN, Draper D, Kipnis P. Risk-adjusting hospital inpatient mortality using automated inpatient, outpatient, and laboratory databases. Med Care. 2008;46:232-239.

8. van Walraven C, Escobar GJ, Greene JD, Forster AJ. The Kaiser Permanente inpatient risk adjustment methodology was valid in an external patient population. J Clin Epidemiol. 2010;63:798-803.

9. Bou-Hamad I, Larocque D, Ben-Ameur H. A review of survival trees. Statist Surv. 2011;44-71.

10. van Walraven C, Bell CM. Risk of death or readmission among people discharged from hospital on Fridays. CMAJ. 2002;166:1672-1673.

11. Barnes S, Hamrock E, Toerper M, Siddiqui S, Levin S. Real-time prediction of inpatient length of stay for discharge prioritization. J Am Med Inform Assoc. 2016;23:e2-e10.

12. Levin SRP, Harley ETB, Fackler JCM, et al. Real-time forecasting of pediatric intensive care unit length of stay using computerized provider orders. Crit Care Med. 2012;40:3058-3064.

13. Resar R, Nolan K, Kaczynski D, Jensen K. Using real-time demand capacity management to improve hospitalwide patient flow. Jt Comm J Qual Patient Saf. 2011;37:217-227.

14. de Grood A, Blades K, Pendharkar SR. A review of discharge prediction processes in acute care hospitals. Healthc Policy. 2016;12:105-115.

15. van Walraven C, Hart RG. Leave ’em alone - why continuous variables should be analyzed as such. Neuroepidemiology. 2008;30:138-139.

Hospitals typically allocate beds based on historical patient volumes. If funding decreases, hospitals will usually try to maximize resource utilization by allocating beds to attain occupancies close to 100% for significant periods of time. This will invariably cause days in which hospital occupancy exceeds capacity, at which time critical entry points (such as the emergency department and operating room) will become blocked. This creates significant concerns over the patient quality of care.

Hospital administrators have very few options when hospital occupancy exceeds 100%. They could postpone admissions for “planned” cases, bring in additional staff to increase capacity, or instigate additional methods to increase hospital discharges such as expanding care resources in the community. All options are costly, bothersome, or cannot be actioned immediately. The need for these options could be minimized by enabling hospital administrators to make more informed decisions regarding hospital bed management by knowing the likely number of discharges in the next 24 hours.

Predicting the number of people who will be discharged in the next day can be approached in several ways. One approach would be to calculate each patient’s expected length of stay and then use the variation around that estimate to calculate each day’s discharge probability. Several studies have attempted to model hospital length of stay using a broad assortment of methodologies, but a mechanism to accurately predict this outcome has been elusive1,2 (with Verburg et al.3 concluding in their study’s abstract that “…it is difficult to predict length of stay…”). A second approach would be to use survival analysis methods to generate each patient’s hazard of discharge over time, which could be directly converted to an expected daily risk of discharge. However, this approach is complicated by the concurrent need to include time-dependent covariates and consider the competing risk of death in hospital, which can complicate survival modeling.4,5 A third approach would be the implementation of a longitudinal analysis using marginal models to predict the daily probability of discharge,6 but this method quickly overwhelms computer resources when large datasets are present.

In this study, we decided to use nonparametric models to predict the daily number of hospital discharges. We first identified patient groups with distinct discharge patterns. We then calculated the conditional daily discharge probability of patients in each of these groups. Finally, these conditional daily discharge probabilities were then summed for each hospital day to generate the expected number of discharges in the next 24 hours. This paper details the methods we used to create our model and the accuracy of its predictions.

METHODS

Study Setting and Databases Used for Analysis

The study took place at The Ottawa Hospital, a 1000-bed teaching hospital with 3 campuses that is the primary referral center in our region. The study was approved by our local research ethics board.

The Patient Registry Database records the date and time of admission for each patient (defined as the moment that a patient’s admission request is registered in the patient registration) and discharge (defined as the time when the patient’s discharge from hospital was entered into the patient registration) for hospital encounters. Emergency department encounters were also identified in the Patient Registry Database along with admission service, patient age and sex, and patient location throughout the admission. The Laboratory Database records all laboratory studies and results on all patients at the hospital.

Study Cohort

We used the Patient Registry Database to identify all people aged 1 year or more who were admitted to the hospital between January 1, 2013, and December 31, 2015. This time frame was selected to (i) ensure that data were complete; and (ii) complete calendar years of data were available for both derivation (patient-days in 2013-2014) and validation (2015) cohorts. Patients who were observed in the emergency room without admission to hospital were not included.

Study Outcome

The study outcome was the number of patients discharged from the hospital each day. For the analysis, the reference point for each day was 1 second past midnight; therefore, values for time-dependent covariates up to and including midnight were used to predict the number of discharges in the next 24 hours.

Study Covariates

Baseline (ie, time-independent) covariates included patient age and sex, admission service, hospital campus, whether or not the patient was admitted from the emergency department (all determined from the Patient Registry Database), and the Laboratory-based Acute Physiological Score (LAPS). The latter, which was calculated with the Laboratory Database using results for 14 tests (arterial pH, PaCO2, PaO2, anion gap, hematocrit, total white blood cell count, serum albumin, total bilirubin, creatinine, urea nitrogen, glucose, sodium, bicarbonate, and troponin I) measured in the 24-hour time frame preceding hospitalization, was derived by Escobar and colleagues7 to measure severity of illness and was subsequently validated in our hospital.8 The independent association of each laboratory perturbation with risk of death in hospital is reflected by the number of points assigned to each lab value with the total LAPS being the sum of these values. Time-dependent covariates included weekday in hospital and whether or not patients were in the intensive care unit.

Analysis

We used 3 stages to create a model to predict the expected daily number of discharges. In the first stage, we used data from patients in the derivation cohort to identify discharge risk strata containing patients with similar discharge patterns. In the second stage, we generated the preliminary probability of discharge by determining the daily discharge probability in each discharge risk stratum. In the third stage, we modified the probability from the second stage based on the weekday and admission service and summed these probabilities to create the expected number of discharges on a particular date.

The first stage identified discharge risk strata based on the covariates listed above, using a survival tree approach9 with proportional hazards regression models to generate the “splits.” These models were offered all covariates listed in the Study Covariates section. Admission service was clustered into 4 departments (obstetrics/gynecology, psychiatry, surgery, and medicine), and day of week was “binarized” into weekday/weekend-holiday, because categorical variables with many groups can “stunt” regression trees by leaving few patients (and, therefore, little statistical power) in each subgroup. The proportional hazards model identified the covariate having the strongest association with time to discharge (based on the Wald χ² value divided by its degrees of freedom). This variable was then used to split the cohort into subgroups (with continuous covariates categorized into quartiles). The proportional hazards model was then repeated in each subgroup, with the previous splitting variable(s) excluded from the model. This process continued until no variable was associated with time to discharge with a P value less than .0001. The resulting survival tree was then used to cluster all patients into distinct discharge risk strata.
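The splitting procedure can be sketched as a recursive function. This is a simplified illustration, not the authors' code: fitting the proportional hazards model is delegated to a hypothetical `score` callable that returns, for each candidate covariate, its Wald χ² per degree of freedom and P value. Quartile cut points are assumed to be recomputed within each subgroup. The toy scorer in the usage lines returns fixed values only to exercise the recursion.

```python
from bisect import bisect_left
from statistics import quantiles
from typing import Callable, Dict, List, Sequence, Tuple

Row = Dict[str, object]
# Hypothetical interface: given the rows in a node and the candidate covariates,
# return {covariate: (wald_chi2_per_df, p_value)} from a proportional hazards fit.
Scorer = Callable[[List[Row], Sequence[str]], Dict[str, Tuple[float, float]]]


def quartile_labels(values: List[float]) -> List[int]:
    """Categorize continuous values into quartiles 1-4 within the current node."""
    if len(values) < 2:
        return [1] * len(values)
    cuts = quantiles(values, n=4)  # three cut points
    return [bisect_left(cuts, v) + 1 for v in values]


def grow_tree(rows: List[Row], covariates: Sequence[str], continuous: set,
              score: Scorer, alpha: float = 1e-4, path: tuple = ()) -> list:
    """Recursively split rows; each returned (path, rows) leaf is one risk stratum."""
    results = score(rows, covariates) if covariates else {}
    candidates = [(stat, cov) for cov, (stat, p) in results.items() if p < alpha]
    if not candidates:  # stopping rule: no covariate with P < alpha
        return [(path, rows)]
    _, best = max(candidates)  # strongest association (Wald chi2 / df)
    if best in continuous:
        keys = quartile_labels([float(r[best]) for r in rows])
    else:
        keys = [r[best] for r in rows]
    groups: Dict[object, List[Row]] = {}
    for row, key in zip(rows, keys):
        groups.setdefault(key, []).append(row)
    remaining = [c for c in covariates if c != best]  # drop the splitting variable
    leaves = []
    for key in sorted(groups):
        leaves.extend(grow_tree(groups[key], remaining, continuous, score,
                                alpha, path + ((best, key),)))
    return leaves


rows = [{"weekend": i % 2 == 1, "age": 20 + 10 * i} for i in range(8)]


def toy_score(rows, covariates):
    # Toy stand-in for a Cox model: fixed statistics, all significant.
    table = {"weekend": (10.0, 0.0), "age": (5.0, 0.0)}
    return {c: table[c] for c in covariates}


strata = grow_tree(rows, ["weekend", "age"], {"age"}, toy_score)
print(len(strata))   # 8
print(strata[0][0])  # (('weekend', False), ('age', 1))
```

Each leaf's `path` records the sequence of splits that defines a stratum, which is the kind of information summarized in Appendix A.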

In the second stage, we generated the preliminary probability of discharge for a specific date by assigning all patients in hospital on that date to their discharge risk strata (Appendix A). We then measured the probability of discharge on each hospitalization day in all discharge risk strata using data from the previous 180 days; we restricted the data to this window to account for temporal changes in hospital discharge patterns. For example, consider a 75-year-old patient on her third hospital day under obstetrics/gynecology on December 19, 2015 (a Saturday). This patient would be assigned to risk stratum #133 (Appendix A). We then measured the probability of discharge on each hospital day for all patients in this discharge risk stratum hospitalized in the previous 6 months (ie, between June 22, 2015, and December 18, 2015). For risk stratum #133, the probability of discharge on hospital day 3 was 0.1111; therefore, our sample patient’s preliminary expected discharge probability was 0.1111.
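A sketch of the second-stage lookup, assuming patient-days are stored as records carrying a calendar date, risk stratum, hospital day, and discharge flag (the `PatientDay` type and the function name are hypothetical). A 180-day window ending the day before the target date reproduces the June 22 to December 18, 2015, window in the example; the history below is toy data chosen to give 1 discharge among 9 patient-days.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Iterable, Optional


@dataclass(frozen=True)
class PatientDay:
    day: date           # calendar date of this hospitalization day
    stratum: int        # discharge risk stratum assigned by the survival tree
    hospital_day: int   # 1 = day of admission
    discharged: bool    # True if the patient was discharged on this day


def preliminary_probability(history: Iterable[PatientDay], stratum: int,
                            hospital_day: int, target: date,
                            window_days: int = 180) -> Optional[float]:
    """Share of matching patient-days in the window that ended in discharge."""
    start = target - timedelta(days=window_days)  # window is [start, target)
    matches = [pday for pday in history
               if pday.stratum == stratum and pday.hospital_day == hospital_day
               and start <= pday.day < target]
    if not matches:
        return None
    return sum(pday.discharged for pday in matches) / len(matches)


target = date(2015, 12, 19)
print(target - timedelta(days=180))  # 2015-06-22
history = [PatientDay(date(2015, 9, 1 + i), 133, 3, i == 0) for i in range(9)]
history.append(PatientDay(date(2015, 6, 21), 133, 3, True))  # outside the window
print(round(preliminary_probability(history, 133, 3, target), 4))  # 0.1111
```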

To attain stable daily discharge probability estimates, a minimum of 50 patients per discharge risk stratum-hospitalization day combination was required. If there were fewer than 50 patients for a particular hospitalization day in a particular discharge risk stratum, we grouped hospitalization days in that risk stratum together until the minimum of 50 patients was reached.
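The pooling rule can be sketched as follows. The text does not say exactly how days are grouped or what happens to trailing days that never reach 50 patients; this sketch assumes consecutive hospital days are pooled in order and that any leftover tail is merged into the preceding group. The function name is hypothetical and the counts in the usage line are illustrative only; with them, days 1 and 2 each stand alone and days 3 to 5 share a pooled probability of 9/65.

```python
from typing import List, Tuple


def pooled_probabilities(counts: List[Tuple[int, int]], minimum: int = 50) -> List[float]:
    """counts[i] = (patients at risk, discharges) on hospital day i + 1 for one stratum.

    Consecutive hospital days are pooled until each group holds >= `minimum`
    patients; every day in a group receives the group's discharge probability.
    """
    groups: List[List[int]] = []
    current: List[int] = []
    for i in range(len(counts)):
        current.append(i)
        if sum(counts[j][0] for j in current) >= minimum:
            groups.append(current)
            current = []
    if current:  # trailing days that never reached the minimum
        if groups:
            groups[-1].extend(current)  # assumption: merge them into the last group
        else:
            groups.append(current)
    probs = [0.0] * len(counts)
    for group in groups:
        at_risk = sum(counts[j][0] for j in group)
        discharged = sum(counts[j][1] for j in group)
        p = discharged / at_risk if at_risk else 0.0
        for j in group:
            probs[j] = p
    return probs


print(pooled_probabilities([(120, 12), (60, 9), (30, 3), (25, 5), (10, 1)]))
```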

The third (and final) stage accounted for the lack of granularity in the discharge risk strata created in the first stage. As mentioned above, admission service was clustered into 4 departments and day of week was collapsed into weekday/weekend-holiday. However, important variations in discharge probabilities could still exist within departments and between particular days of the week.10 Therefore, we created a correction factor to adjust the preliminary expected number of discharges based on admission division and day of week. The correction factor was derived from the 180 days preceding the analysis date, over which the expected daily number of discharges was calculated using the methods above. It was the relative difference between the observed and expected numbers of discharges within each division-day of week grouping.

For example, to calculate the correction factor for our sample patient presented above (75-year-old patient on hospital day 3 under gynecology on Saturday, December 19, 2015), we measured the observed number of discharges from gynecology on Saturdays between June 22, 2015, and December 18, 2015 (n = 206) and the expected number of discharges (n = 195.255), resulting in a correction factor of (observed − expected)/expected = (206 − 195.255)/195.255 = 0.05503. Therefore, the final expected discharge probability for our sample patient was 0.1111 + 0.1111 × 0.05503 = 0.1172. The expected number of discharges on a particular date was the preliminary expected number of discharges on that date (generated in the second stage) adjusted by the correction factor for the corresponding division-day of week group (ie, multiplied by 1 plus the correction factor).
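The correction-factor arithmetic in the worked example can be checked with a few lines of Python. The function names are hypothetical, and the sketch assumes, as the example implies, that each patient's corrected probability is p × (1 + correction factor) and that the expected count for a date is the sum of the corrected probabilities.

```python
from typing import Dict, Iterable, Tuple


def correction_factor(observed: float, expected: float) -> float:
    """Relative difference (observed - expected) / expected."""
    return (observed - expected) / expected


def corrected_probability(p: float, cf: float) -> float:
    """p + p * cf, i.e., p * (1 + cf)."""
    return p * (1 + cf)


def expected_discharges(patients: Iterable[Tuple[float, str, str]],
                        factors: Dict[Tuple[str, str], float]) -> float:
    """Sum corrected probabilities; each patient is (preliminary p, division, day of week)."""
    return sum(corrected_probability(p, factors[(division, weekday)])
               for p, division, weekday in patients)


cf = correction_factor(206, 195.255)
print(round(cf, 5))                                 # 0.05503
print(round(corrected_probability(0.1111, cf), 4))  # 0.1172
```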

RESULTS

There were 192,859 admissions involving patients more than 1 year of age who spent at least part of their hospitalization between January 1, 2013, and December 31, 2015 (Table). Patients were middle-aged, with a slight female predominance, and about half were admitted from the emergency department. Approximately 80% of admissions were to surgical or medical services. More than 95% of admissions ended with discharge from the hospital; the remainder ended in death. Almost 30% of hospitalization days occurred on weekends or holidays. Hospitalizations in the derivation (2013-2014) and validation (2015) groups were essentially the same, except for a slight drop in median hospital length of stay (from 4 days to 3 days) between the 2 periods.

Patient and hospital covariates strongly influenced the daily conditional probability of discharge (Figure 1). Patients admitted to the obstetrics/gynecology department were notably more likely to be discharged from hospital, regardless of the day of week. In contrast, the probability of discharge decreased notably on weekends in the other departments. Patients on the ward were much more likely to be discharged than those in the intensive care unit, and increasing age was associated with a decreased discharge likelihood in ward patients but not in intensive care unit patients. Finally, discharge probabilities varied only slightly between campuses at our hospital, and discharge probability decreased as severity of illness (as measured by LAPS) increased.

The TEND model contained 142 discharge risk strata (Appendix A). Weekend-holiday status had the strongest association with discharge probability (ie, it was the first splitting variable). The most complex discharge risk strata contained 6 covariates. The daily conditional probability of discharge during the first 2 weeks of hospitalization varied extensively between discharge risk strata (Figure 2). Overall, the conditional discharge probability increased from the first to the second day, remained relatively stable for several days, and then slowly decreased over time. However, this pattern and day-to-day variability differed extensively between risk strata.

The observed daily number of discharges in the validation cohort varied extensively (median 139; interquartile range [IQR] 95-160; range 39-214). The TEND model accurately predicted the daily number of discharges, with the expected daily number being strongly associated with the observed number (adjusted R² = 89.2%; P < 0.0001; Figure 3). Calibration decreased but remained significant when we stratified the analyses by hospital campus (General: R² = 46.3%; P < 0.0001; Civic: R² = 47.9%; P < 0.0001; Heart Institute: R² = 18.1%; P < 0.0001). The expected number of daily discharges was an unbiased estimator of the observed number of discharges (its parameter estimate in a linear regression model with the observed number of discharges as the outcome variable was 1.0005; 95% confidence interval, 0.9647-1.0363). The difference between the observed and expected daily number of discharges was small (median 1.6; IQR −6.8 to 9.4; range −37 to 63.4), as was the relative difference (median 1.4%; IQR −5.5% to 7.1%; range −40.9% to 43.4%). The expected number of discharges was within 20% of the observed number of discharges on 95.1% of days in 2015.

DISCUSSION

Knowing how many patients will soon be discharged from the hospital should greatly facilitate hospital planning. This study showed that the TEND model used simple patient and hospitalization covariates to accurately predict the number of patients who will be discharged from hospital in the next day.

We believe that this study has several notable findings. First, we think that using a nonparametric approach to predicting the daily number of discharges substantially increased accuracy. This approach allowed us to generate expected likelihoods from the actual discharge probabilities observed at our hospital over the most recent 6 months of hospitalization-days, among patients whose discharge patterns were very similar to those of the patient in question (ie, discharge risk strata, Appendix A). This ensured that trends in hospitalization habits were accounted for without the need for a period variable in our model. In addition, the lack of parameters in the model should make it easier to transplant to other hospitals. Second, we think that the accuracy of the predictions was remarkable given the relative “crudeness” of our predictors. By using relatively simple factors, the TEND model was able to output accurate predictions for the number of daily discharges (Figure 3).

This study joins several others that have attempted to accomplish the difficult task of predicting the number of hospital discharges by using digitized data. Barnes et al.11 used regression random forest methods in a single medical service within a hospital to predict the daily number of discharges with impressive accuracy (mean daily number of discharges observed 8.29, expected 8.51). Interestingly, their model was more accurate than physicians at predicting discharge likelihood. Levin et al.12 derived a model using discrete time logistic regression to predict the likelihood of discharge from a pediatric intensive care unit, finding that physician orders (captured via electronic order entry) could be categorized and used to significantly increase the accuracy of discharge predictions. These studies demonstrate the potential of health-related data from hospital data warehouses to improve prediction. We believe that continued work in this field will result in increased use of digital data to help hospital administrators manage patient beds more efficiently and effectively than with the resource-intensive manual methods currently used.13,14

Several issues should be kept in mind when interpreting our findings. First, our analysis is limited to a single institution in Canada. External validation, especially in multiple hospitals in different jurisdictions, will be important to show whether the TEND model methodology generalizes to other facilities. Hospitals could implement the TEND model if they are able to record daily values for each of the variables required to assign patients to a discharge risk stratum (Appendix A) and to calculate the daily probability of discharge within each stratum. Hospitals could also derive their own discharge risk strata to account for potentially influential covariates that we did not include in our study, such as insurance status. These discharge risk estimates could be incorporated into the electronic medical record or hospital dashboards (as long as the data required to generate the estimates are available). These interventions would permit the expected number of hospital discharges (and even the patient-level probability of discharge) to be calculated daily. Second, 2 potential biases could have influenced the identification of our discharge risk strata (Appendix A). In this process, we used survival tree methods to separate patient-days into clusters having progressively more homogeneous discharge patterns. Each split was determined by using a proportional hazards model that ignored the competing risk of death in hospital. In addition, the model expressed age and LAPS as continuous variables, whereas these covariates had to be categorized to create our risk strata groupings. The strength of a covariate’s association with an outcome decreases when a continuous variable is categorized.15 Both of these issues might have biased our final risk strata categorization (Appendix A).
Third, we limited our model to simple covariates whose values could be determined relatively easily within most hospital administrative data systems. While this increases the generalizability to other hospital information systems, we believe that introducing other covariates to the model, such as daily vital signs, laboratory results, medications, or time since surgery, could increase prediction accuracy. Finally, it is uncertain whether knowing the predicted number of discharges will improve the efficiency of bed management within the hospital. It seems logical that an accurate prediction of the number of beds that will become available in the next day should improve decisions regarding the number of patients who could be admitted electively to the hospital. It remains to be seen, however, whether this truly happens.

In summary, we found that the TEND model used a handful of patient and hospitalization factors to accurately predict the expected number of discharges from hospital in the next day. Further work is required to implement this model in our institution’s data warehouse and then determine whether this prediction will improve the efficiency of bed management at our hospital.

Disclosure: CvW is supported by a University of Ottawa Department of Medicine Clinician Scientist Chair. The authors have no conflicts of interest.