Simulation-Based Training in Medical Education: Immediate Growth or Cautious Optimism?

For years, professional athletes have used simulation-based training (SBT), a combination of virtual and experiential learning that aims to optimize technical skills, teamwork, and communication.1 In SBT, critical plays and skills are first watched on video or reviewed on a chalkboard, and then run in the presence of a coach who offers immediate feedback to the player. The hope is that the individual will then be able to perfectly execute that play or scenario when it is game time. While SBT is a developing tool in medical education—allowing learners to practice important clinical skills prior to practicing in the higher-stakes clinical environment—an important question remains: what training can go virtual and what needs to stay in person?

In this issue, Carter et al2 present a single-site telesimulation curriculum that addresses consult request and handoff communication using SBT. Due to the COVID-19 pandemic, the authors converted an in-person intern bootcamp into a virtual, Zoom®-based workshop and compared assessments and evaluations to the previous year’s (2019) in-person bootcamp. Compared to the in-person class, the telesimulation-based cohort was equally or better trained in the consult request portion of the workshop. However, participants were significantly less likely to perform the assessed handoff skills optimally, with only a quarter (26%) appropriately prioritizing patients and less than half (49%) providing an appropriate amount of information in the patient summary. Additionally, postworkshop surveys found that telesimulation participants were more satisfied with their performance in both the consult request and handoff scenarios and felt more prepared (99% vs 91%) to perform handoffs in clinical practice compared to the previous year’s in-person cohort.

We focus on this work as it explores the role that SBT or virtual training could have in hospital communication and patient safety training. While previous work has highlighted that technical and procedural skills often lend themselves to in-person adaptation (eg, point-of-care ultrasound), this work suggests that nontechnical skills training could be adapted to the virtual environment. Hospitalists and internal medicine trainees perform a myriad of nontechnical activities, such as end-of-life discussions, obtaining informed consent, providing peer-to-peer feedback, and leading multidisciplinary teams. Activities like these, which require no hands-on interactions, may be well-suited for simulation or virtual-based training.3

However, we make this suggestion with some caution. In Carter et al’s study,2 although we assumed that telesimulation would work for the handoff portion of the workshop, the telesimulation-based cohort performed worse than the interns who participated in the previous year’s in-person training while paradoxically reporting that they felt more prepared. The authors offer several possible explanations, including alterations in the assessment checklist and a shift in the facilitators from peer observers to faculty hospitalists. We suspect that differences in the participants’ experiences prior to the bootcamp may also be at play. Given the onset of the pandemic during their final year of undergraduate training, many in this intern cohort were likely removed from their fourth-year clinical clerkships,4 depriving them of pivotal opportunities to hone and refine this skill set prior to starting their graduate medical education.

As telesimulation and other virtual care educational opportunities continue to evolve, we must ensure that such training does not sacrifice quality for ease and satisfaction. As the authors’ findings show, simply replicating an in-person curriculum in a virtual environment does not ensure equivalence for all skill sets. We remain cautiously optimistic that as we adjust to a postpandemic world, more SBT and virtual-based educational interventions will allow medical trainees to be ready to perform come game time.

1. McCaskill S. Sports tech comes of age with VR training, coaching apps and smart gear. Forbes. March 31, 2020. https://www.forbes.com/sites/stevemccaskill/2020/03/31/sports-tech-comes-of-age-with-vr-training-coaching-apps-and-smart-gear/?sh=309a8fa219c9

2. Carter K, Podczerwinski J, Love L, et al. Utilizing telesimulation for advanced skills training in consultation and handoff communication: a post-COVID-19 GME bootcamp experience. J Hosp Med. 2021;16(12):730-734. https://doi.org/10.12788/jhm.3733

3. Paige JT, Sonesh SC, Garbee DD, Bonanno LS. Comprehensive Healthcare Simulation: Interprofessional Team Training and Simulation. 1st ed. Springer International Publishing; 2020. https://doi.org/10.1007/978-3-030-28845-7

4. Goldenberg MN, Hersh DC, Wilkins KM, Schwartz ML. Suspending medical student clerkships due to COVID-19. Med Sci Educ. 2020;30(3):1-4. https://doi.org/10.1007/s40670-020-00994-1

How Organizations Can Build a Successful and Sustainable Social Media Presence

Horwitz and Detsky1 provide readers with a personal, experientially based primer on how healthcare professionals can more effectively engage on Twitter. As experienced physicians, researchers, and active social media users, the authors outline pragmatic and specific recommendations on how to engage misinformation and add value to social media discourse. We applaud the authors for offering best-practice approaches that are valuable to newcomers as well as seasoned social media users. In highlighting that social media is merely a modern tool for engagement and discussion, the authors underscore the time-honored idea that only when a tool is used effectively will it yield the desired outcome. As a medical journal that regularly uses social media as a tool for outreach and dissemination, we could not agree more with the authors’ assertion.

Since 2015, the Journal of Hospital Medicine (JHM) has used social media to engage its readership and extend the impact of the work published in its pages. Like Horwitz and Detsky, JHM has developed insights and experience in how medical journals, organizations, institutions, and other academic programs can use social media effectively. Because of our experience in this area, we are often asked how to build a successful and sustainable social media presence. Here, we share five primary lessons on how to use social media as a tool to disseminate, connect, and engage.

ESTABLISH YOUR GOALS

As the flagship journal for the field of hospital medicine, we seek to disseminate the ideas and research that will inform health policy, optimize healthcare delivery, and improve patient outcomes while also building and sustaining an online community for professional engagement and growth. Our social media goals provide direction on how to interact, allow us to focus attention on what is important, and motivate our growth in this area. Simply put, we believe that using social media without defined goals would be like sailing a ship without a rudder.

KNOW YOUR AUDIENCE

As your organization establishes its goals, it is important to consider with whom you want to connect. Knowing your audience will allow you to better tailor the content you deliver through social media. For instance, we understand that as a journal focused on hospital medicine, our audience consists of busy clinicians, researchers, and medical educators who are trying to efficiently gather the most up-to-date information in our field. Recognizing this, we produce (and make available for download) Visual Abstracts and publish them on Twitter to help our followers assimilate information from new studies quickly and easily.2 Moreover, we recognize that our followers are interested in how to use social media in their professional lives and have published several articles in this topic area.3-5

BUILD YOUR TEAM

We have found that having multiple individuals on our social media team has led to greater creativity and thoughtfulness on how we engage our readership. Our teams span generations, clinical experience, institutions, and cultural backgrounds. This intentional approach has allowed for diversity in thoughts and opinions and has helped shape the JHM social media message. Additionally, we have not only formalized editorial roles through the creation of Digital Media Editor positions, but we have also created the JHM Digital Media Fellowship, a training program and development pipeline for those interested in cultivating organization-based social media experiences and skill sets.6

ENGAGE CONSISTENTLY

Many organizations believe that successful social media outreach means creating an account and posting content when convenient. Experience has taught us that daily postings and regular engagement will build your brand as a regular and reliable source of information for your followers. Additionally, while many academic journals and organizations only occasionally post material and rarely interact with their followers, we have found that engaging and facilitating conversations through our monthly Twitter discussion (#JHMChat) has established a community, created opportunities for professional networking, and further disseminated the work published in JHM.7 As an academic journal or organization entering this field, recognize the product for which people follow you and deliver that product on a consistent basis.

OWN YOUR MISTAKES

It will only be a matter of time before your organization makes a misstep on social media. Instead of hiding, we recommend stepping into that tension and owning the mistake. For example, we recently published an article that contained a culturally offensive term. As a journal, we reflected on our error and took concrete steps to correct it. Further, we shared our thoughts with our followers to ensure transparency.8 Moving forward, we have inserted specific stopgaps in our editorial review process to avoid such missteps in the future.

Although every organization will have different goals and reasons for engaging on social media, we believe these central tenets will help optimize the use of this platform. While we have established specific objectives for our own engagement on social media, we believe Horwitz and Detsky1 put it best when they note that, at the end of the day, our ultimate goal lies in “…promoting knowledge and science in a way that helps us all live healthier and happier lives.”

1. Horwitz LI, Detsky AS. Tweeting into the void: effective use of social media for healthcare professionals. J Hosp Med. 2021;16(10):581-582. https://doi.org/10.12788/jhm.3684

2. 2021 Visual Abstracts. Accessed September 8, 2021. https://www.journalofhospitalmedicine.com/jhospmed/page/2021-visual-abstracts

3. Kumar A, Chen N, Singh A. #ConsentObtained - patient privacy in the age of social media. J Hosp Med. 2020;15(11):702-704. https://doi.org/10.12788/jhm.3416

4. Minter DJ, Patel A, Ganeshan S, Nematollahi S. Medical communities go virtual. J Hosp Med. 2021;16(6):378-380. https://doi.org/10.12788/jhm.3532

5. Marcelin JR, Cawcutt KA, Shapiro M, Varghese T, O’Glasser A. Moment vs movement: mission-based tweeting for physician advocacy. J Hosp Med. 2021;16(8):507-509. https://doi.org/10.12788/jhm.3636

6. Editorial Fellowships (Digital Media and Editorial). Accessed September 8, 2021. https://www.journalofhospitalmedicine.com/content/editorial-fellowships-digital-media-and-editorial

7. Wray CM, Auerbach AD, Arora VM. The adoption of an online journal club to improve research dissemination and social media engagement among hospitalists. J Hosp Med. 2018;13(11):764-769. https://doi.org/10.12788/jhm.2987

8. Shah SS, Manning KD, Wray CM, Castellanos A, Jerardi KE. Microaggressions, accountability, and our commitment to doing better [editorial]. J Hosp Med. 2021;16(6):325. https://doi.org/10.12788/jhm.3646

Horwitz and Detsky1 provide readers with a personal, experientially based primer on how healthcare professionals can more effectively engage on Twitter. As experienced physicians, researchers, and active social media users, the authors outline pragmatic and specific recommendations on how to engage misinformation and add value to social media discourse. We applaud the authors for offering best-practice approaches that are valuable to newcomers as well as seasoned social media users. In highlighting that social media is merely a modern tool for engagement and discussion, the authors underscore the time-held idea that only when a tool is used effectively will it yield the desired outcome. As a medical journal that regularly uses social media as a tool for outreach and dissemination, we could not agree more with the authors’ assertion.

Since 2015, the Journal of Hospital Medicine (JHM) has used social media to engage its readership and extend the impact of the work published in its pages. Like Horwitz and Detsky, JHM has developed insights and experience in how medical journals, organizations, institutions, and other academic programs can use social media effectively. Because of our experience in this area, we are often asked how to build a successful and sustainable social media presence. Here, we share five primary lessons on how to use social media as a tool to disseminate, connect, and engage.

ESTABLISH YOUR GOALS

As the flagship journal for the field of hospital medicine, we seek to disseminate the ideas and research that will inform health policy, optimize healthcare delivery, and improve patient outcomes while also building and sustaining an online community for professional engagement and growth. Our social media goals provide direction on how to interact, allow us to focus attention on what is important, and motivate our growth in this area. Simply put, we believe that using social media without defined goals would be like sailing a ship without a rudder.

KNOW YOUR AUDIENCE

As your organization establishes its goals, it is important to consider with whom you want to connect. Knowing your audience will allow you to better tailor the content you deliver through social media. For instance, we understand that as a journal focused on hospital medicine, our audience consists of busy clinicians, researchers, and medical educators who are trying to efficiently gather the most up-to-date information in our field. Recognizing this, we produce (and make available for download) Visual Abstracts and publish them on Twitter to help our followers assimilate information from new studies quickly and easily.2 Moreover, we recognize that our followers are interested in how to use social media in their professional lives and have published several articles in this topic area.3-5

BUILD YOUR TEAM

We have found that having multiple individuals on our social media team has led to greater creativity and thoughtfulness on how we engage our readership. Our teams span generations, clinical experience, institutions, and cultural backgrounds. This intentional approach has allowed for diversity in thoughts and opinions and has helped shape the JHM social media message. Additionally, we have not only formalized editorial roles through the creation of Digital Media Editor positions, but we have also created the JHM Digital Media Fellowship, a training program and development pipeline for those interested in cultivating organization-based social media experiences and skill sets.6

ENGAGE CONSISTENTLY

Many organizations believe that successful social media outreach means creating an account and posting content when convenient. Experience has taught us that daily postings and regular engagement will build your brand as a regular and reliable source of information for your followers. Additionally, while many academic journals and organizations only occasionally post material and rarely interact with their followers, we have found that engaging and facilitating conversations through our monthly Twitter discussion (#JHMChat) has established a community, created opportunities for professional networking, and further disseminated the work published in JHM.7 As an academic journal or organization entering this field, recognize the product for which people follow you and deliver that product on a consistent basis.

OWN YOUR MISTAKES

It will only be a matter of time before your organization makes a misstep on social media. Instead of hiding, we recommend stepping into that tension and owning the mistake. For example, we recently published an article that contained a culturally offensive term. As a journal, we reflected on our error and took concrete steps to correct it. Further, we shared our thoughts with our followers to ensure transparency.8 Moving forward, we have inserted specific stopgaps in our editorial review process to avoid such missteps in the future.

Although every organization will have different goals and reasons for engaging on social media, we believe these central tenets will help optimize the use of this platform. Although we have established specific objectives for our engagement on social media, we believe Horwitz and Detsky1 put it best when they note that, at the end of the day, our ultimate goal is in “…promoting knowledge and science in a way that helps us all live healthier and happier lives."

Horwitz and Detsky1 provide readers with a personal, experientially based primer on how healthcare professionals can more effectively engage on Twitter. As experienced physicians, researchers, and active social media users, the authors outline pragmatic and specific recommendations on how to engage misinformation and add value to social media discourse. We applaud the authors for offering best-practice approaches that are valuable to newcomers as well as seasoned social media users. In highlighting that social media is merely a modern tool for engagement and discussion, the authors underscore the time-held idea that only when a tool is used effectively will it yield the desired outcome. As a medical journal that regularly uses social media as a tool for outreach and dissemination, we could not agree more with the authors’ assertion.

Since 2015, the Journal of Hospital Medicine (JHM) has used social media to engage its readership and extend the impact of the work published in its pages. Like Horwitz and Detsky, JHM has developed insights and experience in how medical journals, organizations, institutions, and other academic programs can use social media effectively. Because of our experience in this area, we are often asked how to build a successful and sustainable social media presence. Here, we share five primary lessons on how to use social media as a tool to disseminate, connect, and engage.

ESTABLISH YOUR GOALS

As the flagship journal for the field of hospital medicine, we seek to disseminate the ideas and research that will inform health policy, optimize healthcare delivery, and improve patient outcomes while also building and sustaining an online community for professional engagement and growth. Our social media goals provide direction on how to interact, allow us to focus attention on what is important, and motivate our growth in this area. Simply put, we believe that using social media without defined goals would be like sailing a ship without a rudder.

KNOW YOUR AUDIENCE

As your organization establishes its goals, it is important to consider with whom you want to connect. Knowing your audience will allow you to better tailor the content you deliver through social media. For instance, we understand that as a journal focused on hospital medicine, our audience consists of busy clinicians, researchers, and medical educators who are trying to efficiently gather the most up-to-date information in our field. Recognizing this, we produce (and make available for download) Visual Abstracts and publish them on Twitter to help our followers assimilate information from new studies quickly and easily.2 Moreover, we recognize that our followers are interested in how to use social media in their professional lives and have published several articles in this topic area.3-5

BUILD YOUR TEAM

We have found that having multiple individuals on our social media team has led to greater creativity and thoughtfulness on how we engage our readership. Our teams span generations, clinical experience, institutions, and cultural backgrounds. This intentional approach has allowed for diversity in thoughts and opinions and has helped shape the JHM social media message. Additionally, we have not only formalized editorial roles through the creation of Digital Media Editor positions, but we have also created the JHM Digital Media Fellowship, a training program and development pipeline for those interested in cultivating organization-based social media experiences and skill sets.6

ENGAGE CONSISTENTLY

Many organizations believe that successful social media outreach means creating an account and posting content when convenient. Experience has taught us that daily postings and regular engagement will build your brand as a regular and reliable source of information for your followers. Additionally, while many academic journals and organizations only occasionally post material and rarely interact with their followers, we have found that engaging and facilitating conversations through our monthly Twitter discussion (#JHMChat) has established a community, created opportunities for professional networking, and further disseminated the work published in JHM.7 As an academic journal or organization entering this field, recognize the product for which people follow you and deliver that product on a consistent basis.

OWN YOUR MISTAKES

It will only be a matter of time before your organization makes a misstep on social media. Instead of hiding, we recommend stepping into that tension and owning the mistake. For example, we recently published an article that contained a culturally offensive term. As a journal, we reflected on our error and took concrete steps to correct it. Further, we shared our thoughts with our followers to ensure transparency.8 Moving forward, we have inserted specific stopgaps in our editorial review process to avoid such missteps in the future.

Although every organization will have different goals and reasons for engaging on social media, we believe these central tenets will help optimize the use of this platform. While we have established specific objectives for our own engagement, we believe Horwitz and Detsky1 put it best when they note that, at the end of the day, our ultimate goal lies in “…promoting knowledge and science in a way that helps us all live healthier and happier lives.”

1. Horwitz LI, Detsky AS. Tweeting into the void: effective use of social media for healthcare professionals. J Hosp Med. 2021;16(10):581-582. https://doi.org/10.12788/jhm.3684

2. 2021 Visual Abstracts. Accessed September 8, 2021. https://www.journalofhospitalmedicine.com/jhospmed/page/2021-visual-abstracts

3. Kumar A, Chen N, Singh A. #ConsentObtained - patient privacy in the age of social media. J Hosp Med. 2020;15(11):702-704. https://doi.org/10.12788/jhm.3416

4. Minter DJ, Patel A, Ganeshan S, Nematollahi S. Medical communities go virtual. J Hosp Med. 2021;16(6):378-380. https://doi.org/10.12788/jhm.3532

5. Marcelin JR, Cawcutt KA, Shapiro M, Varghese T, O’Glasser A. Moment vs movement: mission-based tweeting for physician advocacy. J Hosp Med. 2021;16(8):507-509. https://doi.org/10.12788/jhm.3636

6. Editorial Fellowships (Digital Media and Editorial). Accessed September 8, 2021. https://www.journalofhospitalmedicine.com/content/editorial-fellowships-digital-media-and-editorial

7. Wray CM, Auerbach AD, Arora VM. The adoption of an online journal club to improve research dissemination and social media engagement among hospitalists. J Hosp Med. 2018;13(11):764-769. https://doi.org/10.12788/jhm.2987

8. Shah SS, Manning KD, Wray CM, Castellanos A, Jerardi KE. Microaggressions, accountability, and our commitment to doing better [editorial]. J Hosp Med. 2021;16(6):325. https://doi.org/10.12788/jhm.3646

© 2021 Society of Hospital Medicine

Leveraging the Care Team to Optimize Disposition Planning

Is this patient a good candidate? In medicine, we subconsciously answer this question for every clinical decision we make. Occasionally, though, a clinical scenario is so complex that it cannot or should not be answered by a single individual. One example is the decision on whether a patient should receive an organ transplant. In this situation, a multidisciplinary committee weighs the complex ethical, clinical, and financial implications of the decision before coming to a verdict. Together, team members discuss the risks and benefits of each patient’s candidacy and, in a united fashion, decide the best course of care. For hospitalists, a far more common question occurs every day and is similarly fraught with multifaceted implications: Is my patient a good candidate for a skilled nursing facility (SNF)? We often rely on a single individual to make the final call, but should we instead be leveraging the expertise of other care team members to assist with this decision?

In this issue, Boyle et al1 describe the implementation of a multidisciplinary team consisting of physicians, case managers, social workers, physical and occupational therapists, and home-health representatives that reviewed all patients with an expected discharge to a SNF. Case managers or social workers began the process by referring eligible patients to the committee for review. If deemed appropriate, the committee discussed each case and reached a consensus recommendation as to whether a SNF was an appropriate discharge destination. The investigators used a matched, preintervention sample as a comparison group, with a primary outcome of total discharges to SNFs, and secondary outcomes consisting of readmissions, time to readmission, and median length of stay. The authors observed a 49.7% relative reduction in total SNF discharges (25.5% of preintervention patients discharged to a SNF vs 12.8% postintervention), as well as a 66.9% relative reduction in new SNF discharges. Despite the significant reduction in SNF utilization, no differences were noted in readmissions, time to readmission, or readmission length of stay.

While this study was performed during the COVID-19 pandemic, several characteristics make its findings applicable beyond this period. First, the structure and workflow of the team are extensively detailed and make the intervention easily generalizable to most hospitals. Second, while not specifically examined, the outcome of SNF reduction likely corresponds to an increase in the patient’s time at home—an important patient-centered target for most posthospitalization plans.2 Finally, the intervention used existing infrastructure and individuals, and did not require new resources to improve patient care, which increases the feasibility of implementation at other institutions.

These findings also reveal potential overutilization of SNFs in the discharge process. On average, a typical SNF stay costs the health system more than $11,000.3 A simple intervention could lead to substantial savings for individuals and the healthcare system. With a nearly 50% reduction in SNF use, understanding why patients who were eligible to go home were ultimately discharged to a SNF will be a crucial question to answer. Are there barriers to patient or family education? Is there a perceived safety difference between a SNF and home for nonskilled nursing needs? Additionally, care should be taken to ensure that decreases in SNF utilization do not disproportionately affect certain populations. Further work should assess the performance of similar models in a non-COVID era and among multiple institutions to verify potential scalability and generalizability.

Like organ transplant committees, Boyle et al’s multidisciplinary approach to reduce SNF discharges had to include thoughtful and intentional decisions. Perhaps it is time we use this same model to transplant patients back into their homes as safely and efficiently as possible.

1. Boyle CA, Ravichandran U, Hankamp V, et al. Safe transitions and congregate living in the age of COVID-19: a retrospective cohort study. J Hosp Med. 2021;16(9):524-530. https://doi.org/10.12788/jhm.3657

2. Barnett ML, Grabowski DC, Mehrotra A. Home-to-home time—measuring what matters to patients and payers. N Engl J Med. 2017;377(1):4-6. https://doi.org/10.1056/NEJMp1703423

3. Werner RM, Coe NB, Qi M, Konetzka RT. Patient outcomes after hospital discharge to home with home health care vs to a skilled nursing facility. JAMA Intern Med. 2019;179(5):617-623. https://doi.org/10.1001/jamainternmed.2018.7998

Microaggressions, Accountability, and Our Commitment to Doing Better

We recently published an article in our Leadership & Professional Development series titled “Tribalism: The Good, the Bad, and the Future.” Despite pre- and post-acceptance manuscript review and discussion by a diverse and thoughtful team of editors, we did not appreciate how particular language in this article would be hurtful to some communities. We also promoted the article using the hashtag “tribalism” in a journal tweet. Shortly after we posted the tweet, several readers on social media reached out with constructive feedback on the prejudicial nature of this terminology. Within hours of receiving this feedback, our editorial team met to better understand our error, and we made the decision to immediately retract the manuscript. We also deleted the tweet and issued an apology referencing a screenshot of the original tweet.1,2 We have republished the original article with appropriate language.3 Tweets promoting the new article will incorporate this new language.

From this experience, we learned that the words “tribe” and “tribalism” have no consistent meaning, are associated with negative historical and cultural assumptions, and can promote misleading stereotypes.4 The term “tribe” became popular as a colonial construct to describe forms of social organization considered “uncivilized” or “primitive.”5 In using the term “tribe” to describe members of medical communities, we ignored the complex and dynamic identities of Native American, African, and other Indigenous Peoples and the history of their oppression.

The intent of the original article was to highlight how being part of a distinct medical discipline, such as hospital medicine or emergency medicine, conferred benefits, such as shared identity and social support structure, and caution how this group identity could also lead to nonconstructive partisan behaviors that might not best serve our patients. We recognize that other words more accurately convey our intent and do not cause harm. We used “tribe” when we meant “group,” “discipline,” or “specialty.” We used “tribalism” when we meant “siloed” or “factional.”

This misstep underscores how, even with the best intentions and diverse teams, microaggressions can happen. We accept responsibility for this mistake, and we will continue to do the work of respecting and advocating for all members of our community. To minimize the likelihood of future errors, we are developing a systematic process to identify language within manuscripts accepted for publication that may be racist, sexist, ableist, homophobic, or otherwise harmful. As we embrace a growth mindset, we vow to remain transparent, responsive, and welcoming of feedback. We are grateful to our readers for helping us learn.

1. Shah SS [@SamirShahMD]. We are still learning. Despite review by a diverse group of team members, we did not appreciate how language in…. April 30, 2021. Accessed May 5, 2021. https://twitter.com/SamirShahMD/status/1388228974573244431

2. Journal of Hospital Medicine [@JHospMedicine]. We want to apologize. We used insensitive language that may be hurtful to Indigenous Americans & others. We are learning…. April 30, 2021. Accessed May 5, 2021. https://twitter.com/JHospMedicine/status/1388227448962052097

3. Kanjee Z, Bilello L. Specialty silos in medicine: the good, the bad, and the future. J Hosp Med. Published online May 21, 2021. https://doi.org/10.12788/jhm.3647

4. Lowe C. The trouble with tribe: How a common word masks complex African realities. Learning for Justice. Spring 2001. Accessed May 5, 2021. https://www.learningforjustice.org/magazine/spring-2001/the-trouble-with-tribe

5. Mungai C. Pundits who decry ‘tribalism’ know nothing about real tribes. Washington Post. January 30, 2019. Accessed May 6, 2021. https://www.washingtonpost.com/outlook/pundits-who-decry-tribalism-know-nothing-about-real-tribes/2019/01/29/8d14eb44-232f-11e9-90cd-dedb0c92dc17_story.html

Leveling the Playing Field: Accounting for Academic Productivity During the COVID-19 Pandemic

Professional upheavals caused by the coronavirus disease 2019 (COVID-19) pandemic have affected the academic productivity of many physicians. This is due in part to rapid changes in clinical care and medical education: physician-researchers have been redeployed to frontline clinical care; clinician-educators have been forced to rapidly transition in-person curricula to virtual platforms; and primary care physicians and subspecialists have been forced to transition to telehealth-based practices. In addition to these changes in clinical and educational responsibilities, the COVID-19 pandemic has substantially altered the personal lives of physicians. During the height of the pandemic, clinicians simultaneously wrestled with a lack of available childcare, unexpected home-schooling responsibilities, decreased income, and many other COVID-19-related stresses.1 Additionally, the ever-present “second pandemic” of structural racism, persistent health disparities, and racial inequity has further increased the personal and professional demands facing academic faculty.2

In particular, the pandemic has placed personal and professional pressure on female and minority faculty members. In spite of these pressures, however, the academic promotions process still requires rigid accounting of scholarly productivity. As the focus of academic practices has shifted to support clinical care during the pandemic, scholarly productivity has suffered for clinicians on the frontline. As a result, academic clinical faculty have expressed significant stress and concerns about failing to meet benchmarks for promotion (eg, publications, curricula development, national presentations). To counter these shifts (and the inherent inequity that they create for female clinicians and for men and women who are Black, Indigenous, and/or of color), academic institutions should not only recognize the effects the COVID-19 pandemic has had on faculty, but also adopt immediate solutions to more equitably account for such disruptions to academic portfolios. In this paper, we explore populations whose career trajectories are most at-risk and propose a framework to capture novel and nontraditional contributions while also acknowledging the rapid changes the COVID-19 pandemic has brought to academic medicine.

POPULATIONS AT RISK FOR CAREER DISRUPTION

Even before the COVID-19 pandemic, physician mothers, underrepresented racial/ethnic minority groups, and junior faculty were most at risk for career disruptions. The closure of daycare facilities and schools and the shift to online learning resulting from the pandemic, along with the common challenges of parenting, have taken a significant toll on the lives of working parents. Because women tend to carry a disproportionate share of childcare and household responsibilities, these changes have been inequitably levied as a “mommy tax” on working women.3,4

As faculty underrepresented in medicine (particularly Black, Hispanic, Latino, and Native American clinicians) comprise only 8% of the academic medical workforce, they currently face a variety of personal and professional challenges.5 This is especially true for Black and Latinx physicians who have been experiencing an increased COVID-19 burden in their communities, while concurrently fighting entrenched structural racism and police violence. In academia, these challenges have worsened because of the “minority tax”: the toll of often uncompensated extra responsibilities (time or money) placed on minority faculty in the name of achieving diversity. The unintended consequences of these responsibilities include having fewer mentors,6 caring for underserved populations,7 and performing more clinical care8 than faculty who are not underrepresented minorities. Because minority faculty are unlikely to be in leadership positions, it is reasonable to conclude they have been shouldering heavier clinical obligations and facing greater career disruption of scholarly work due to the COVID-19 pandemic.

Junior faculty (eg, instructors and assistant professors) also remain professionally vulnerable during the COVID-19 pandemic. Because junior faculty are often more clinically focused and less likely to hold leadership positions than senior faculty, they are more likely to have assumed frontline clinical positions, which come at the expense of academic work. Junior faculty are also at a critical building phase in their academic career—a time when they benefit from the opportunity to share their scholarly work and network at conferences. Unfortunately, many conferences have been canceled or moved to a virtual platform. Given that some institutions may be freezing academic funding for conferences due to budgetary shortfalls from the pandemic, junior faculty may be particularly at risk if they are not able to present their work. In addition, junior faculty often face disproportionate struggles at home, trying to balance demands of work and caring for young children. Considering the unique needs of each of these groups, it is especially important to consider intersectionality, or the compounded issues for individuals who exist in multiple disproportionately affected groups (eg, a Black female junior faculty member who is also a mother).

THE COVID-19-CURRICULUM VITAE MATRIX

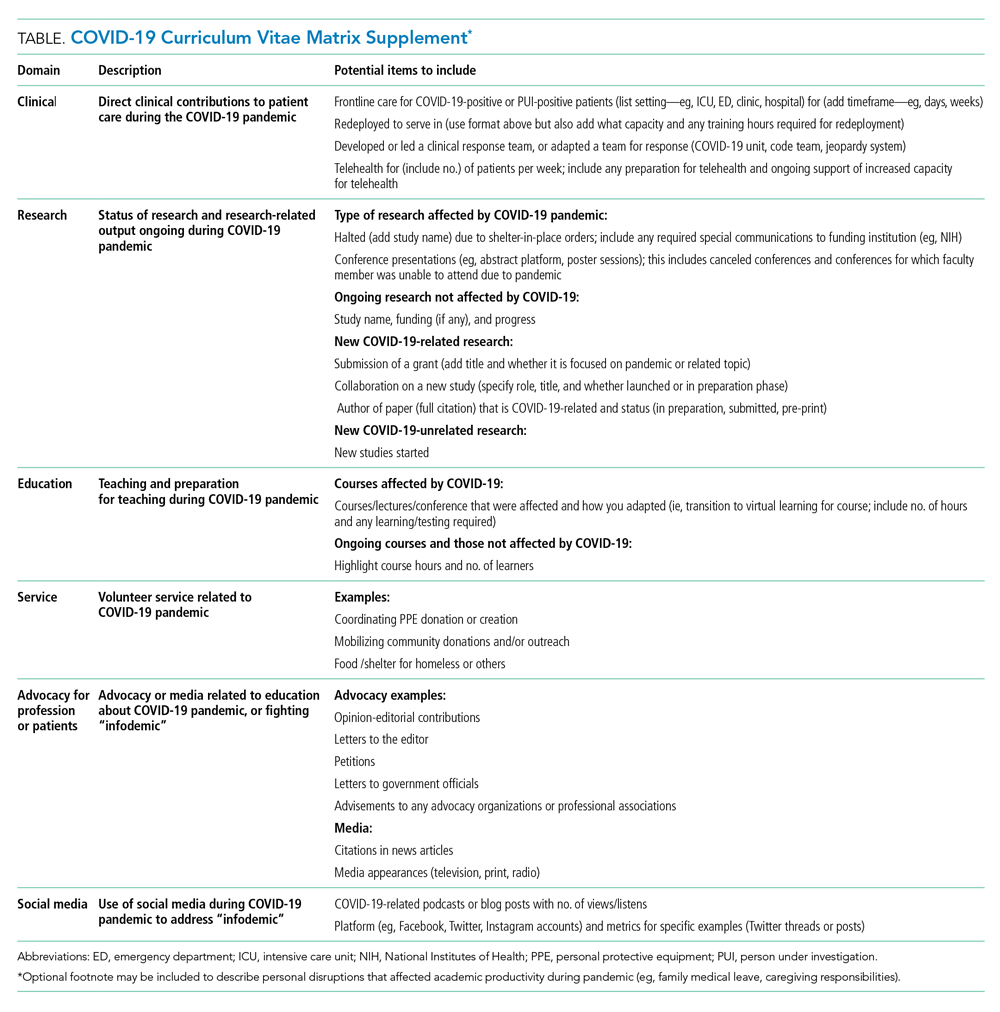

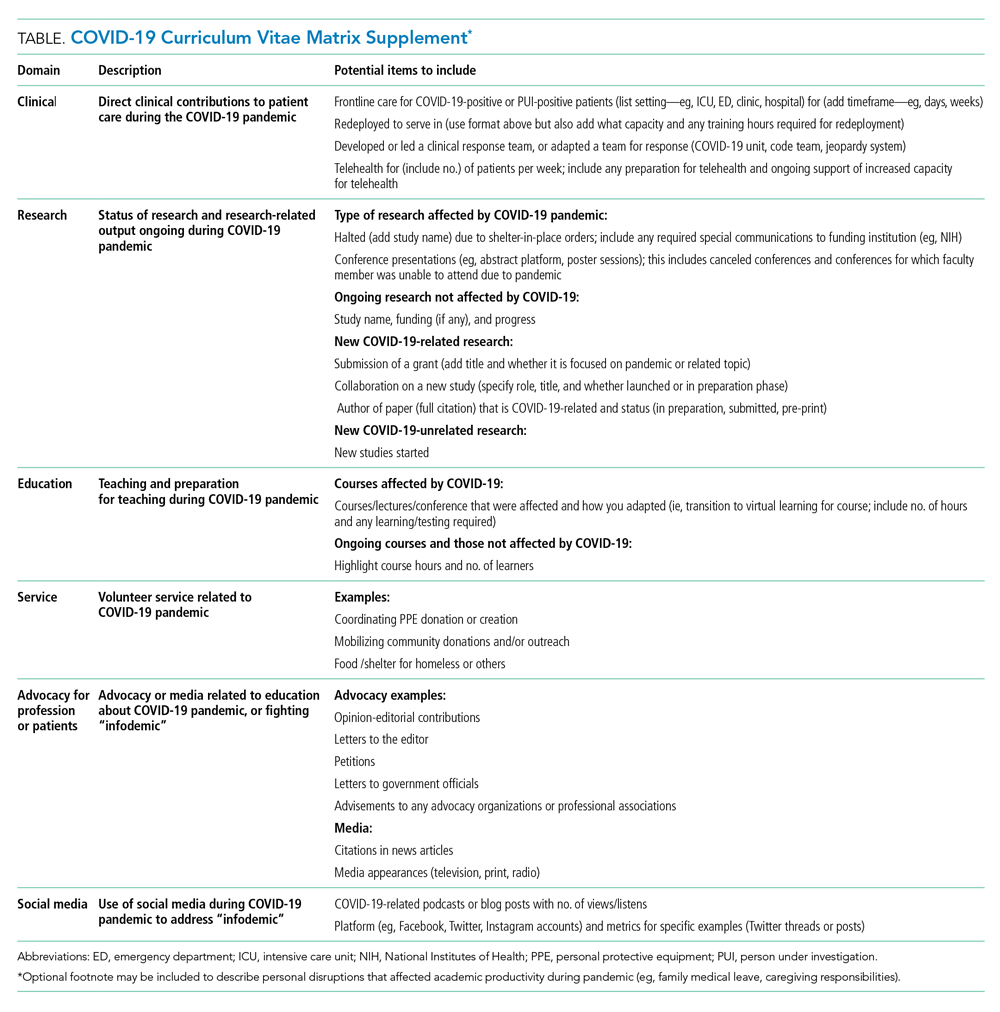

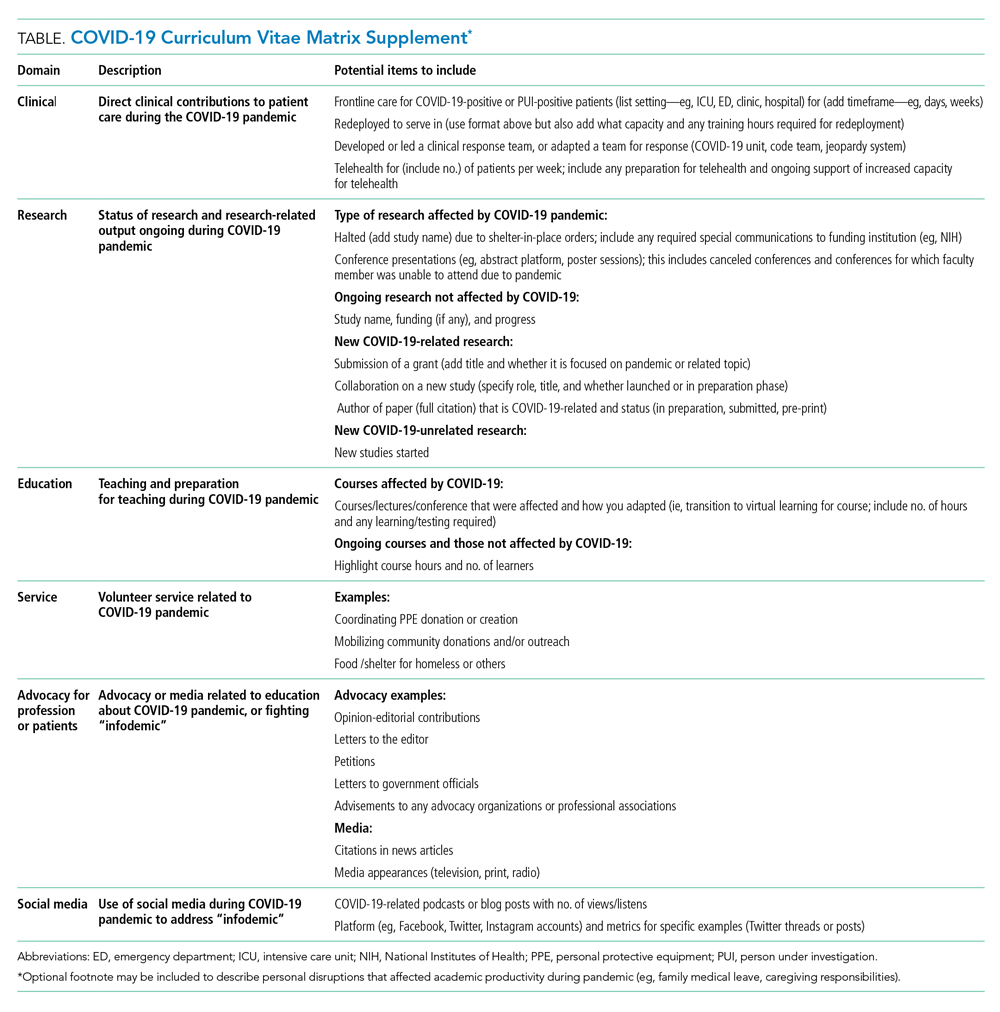

The typical format of a professional curriculum vitae (CV) at most academic institutions does not allow one to document potential disruptions or novel contributions, including those that occurred during the COVID-19 pandemic. As a group of academic clinicians, educators, and researchers whose careers have been affected by the pandemic, we created a COVID-19 CV matrix, a potential framework to serve as a supplement for faculty. In this matrix, faculty members may document their contributions, disruptions that affected their work, and caregiving responsibilities during this time period, while also providing a rubric for promotions and tenure committees to equitably evaluate the pandemic period on an academic CV. Our COVID-19 CV matrix consists of six domains: (1) clinical care, (2) research, (3) education, (4) service, (5) advocacy/media, and (6) social media. These domains encompass traditional and nontraditional contributions made by healthcare professionals during the pandemic (Table). This matrix broadens the ability of both faculty and institutions to determine the actual impact of individuals during the pandemic.

ACCOUNT FOR YOUR (NEW) IMPACT

Throughout the COVID-19 pandemic, academic faculty have been innovative, contributing in novel ways not routinely captured by promotions committees—eg, the digital health researcher who now directs the telemedicine response for their institution and the health disparities researcher who now leads daily webinar sessions on structural racism to medical students. Other novel contributions include advancing COVID-19 innovations and engaging in media and community advocacy (eg, organizing large-scale donations of equipment and funds to support organizations in need). While such nontraditional contributions may not have been readily captured or thought “CV worthy” in the past, faculty should now account for them. More importantly, promotions committees need to recognize that these pivots or alterations in career paths are not signals of professional failure, but rather evidence of a shifting landscape and the respective response of the individual. Furthermore, because these pivots often help fulfill an institutional mission, they are impactful.

ACKNOWLEDGE THE DISRUPTION

It is important for promotions and tenure committees to recognize the impact and disruption COVID-19 has had on traditional academic work, acknowledging the time and energy required for a faculty member to make needed work adjustments. This enables a leader to better assess how a faculty member’s academic portfolio has been affected. For example, researchers have had to halt studies, medical educators have had to redevelop and transition curricula to virtual platforms, and physicians have had to discontinue clinician quality improvement initiatives due to competing hospital priorities. Faculty members who document such unintentional alterations in their academic career path can explain to their institution how they have continued to positively influence their field and the community during the pandemic. This approach is analogous to the current model of accounting for clinical time when judging faculty members’ contributions in scholarly achievement.

The COVID-19 CV matrix has the potential to be annotated to explain the burden of one’s personal situation, which is often “invisible” in the professional environment. For example, many physicians have had to assume additional childcare responsibilities and tend to sick family members, friends, and even themselves. It is also possible that a faculty member has a partner who is also an essential worker, one who had to self-isolate due to COVID-19 exposure or illness, or who has been working overtime due to high patient volumes.

INSTITUTIONAL RESPONSE

How can institutions respond to the altered academic landscape caused by the COVID-19 pandemic? Promotions committees typically have two main tools at their disposal: adjusting the tenure clock or the benchmarks. Extending the period of time available to qualify for tenure is commonplace in the “publish-or-perish” academic tracks of university research professors. Clock adjustments are typically granted to faculty following the birth of a child or for other specific family- or health-related hardships, in accordance with the Family and Medical Leave Act. Unfortunately, tenure-clock extensions for female faculty members can exacerbate gender inequity: Data on tenure-clock extensions show a higher rate of tenure granted to male faculty compared to female faculty.9 For this reason, it is also important to explore adjustments or modifications to benchmark criteria. This could be accomplished by broadening the criteria for promotion, recognizing that impact occurs in many forms, so that more kinds of contributions count toward meeting a benchmark. It can also occur by examining the trajectory of an individual within a promotion pathway before it was disrupted to determine impact. To avoid exacerbating social and gender inequities within academia, institutions should use these professional levers and create new ones to provide parity and equality across the promotional playing field. While the CV matrix openly acknowledges the disruptions and tangents the COVID-19 pandemic has introduced into academic careers, it remains important for academic institutions to recognize these disruptions and innovate the manner in which they acknowledge scholarly contributions.

CONCLUSION

While academic rigidity and known social taxes (minority and mommy taxes) are particularly problematic in the current climate, these issues have always been at play in evaluating academic success. Improved documentation of novel contributions, disruptions, caregiving, and other challenges can enable more holistic and timely professional advancement for all faculty, regardless of their sex, race, ethnicity, or social background. Ultimately, we hope this framework initiates further conversations among academic institutions on how to define productivity in an age where journal impact factor or number of publications is not the fullest measure of one’s impact in their field.

1. Jones Y, Durand V, Morton K, et al; ADVANCE PHM Steering Committee. Collateral damage: how covid-19 is adversely impacting women physicians. J Hosp Med. 2020;15(8):507-509. https://doi.org/10.12788/jhm.3470

2. Manning KD. When grief and crises intersect: perspectives of a black physician in the time of two pandemics. J Hosp Med. 2020;15(9):566-567. https://doi.org/10.12788/jhm.3481

3. Cohen P, Hsu T. Pandemic could scar a generation of working mothers. New York Times. Published June 3, 2020. Updated June 30, 2020. Accessed November 11, 2020. https://www.nytimes.com/2020/06/03/business/economy/coronavirus-working-women.html

4. Cain Miller C. Nearly half of men say they do most of the home schooling. 3 percent of women agree. New York Times. Published May 6, 2020. Updated May 8, 2020. Accessed November 11, 2020. https://www.nytimes.com/2020/05/06/upshot/pandemic-chores-homeschooling-gender.html

5. Rodríguez JE, Campbell KM, Pololi LH. Addressing disparities in academic medicine: what of the minority tax? BMC Med Educ. 2015;15:6. https://doi.org/10.1186/s12909-015-0290-9

6. Lewellen-Williams C, Johnson VA, Deloney LA, Thomas BR, Goyol A, Henry-Tillman R. The POD: a new model for mentoring underrepresented minority faculty. Acad Med. 2006;81(3):275-279. https://doi.org/10.1097/00001888-200603000-00020

7. Pololi LH, Evans AT, Gibbs BK, Krupat E, Brennan RT, Civian JT. The experience of minority faculty who are underrepresented in medicine, at 26 representative U.S. medical schools. Acad Med. 2013;88(9):1308-1314. https://doi.org/10.1097/acm.0b013e31829eefff

8. Richert A, Campbell K, Rodríguez J, Borowsky IW, Parikh R, Colwell A. ACU workforce column: expanding and supporting the health care workforce. J Health Care Poor Underserved. 2013;24(4):1423-1431. https://doi.org/10.1353/hpu.2013.0162

9. Woitowich NC, Jain S, Arora VM, Joffe H. COVID-19 threatens progress toward gender equity within academic medicine. Acad Med. 2020;29:10.1097/ACM.0000000000003782. https://doi.org/10.1097/acm.0000000000003782
