Regional Variation in Standardized Costs of Care at Children’s Hospitals

With some areas of the country spending close to 3 times more on healthcare than others, regional variation in healthcare spending has been the focus of national attention.1-7 Since 1973, the Dartmouth Institute has studied regional variation in healthcare utilization and spending and concluded that variation is “unwarranted” because it is driven by providers’ practice patterns rather than differences in medical need, patient preferences, or evidence-based medicine.8-11 However, critics of the Dartmouth Institute’s findings argue that their approach does not adequately adjust for community-level income, and that higher costs in some areas reflect greater patient needs that are not reflected in illness acuity alone.12-14

While Medicare data have made it possible to study variations in spending for the senior population, fragmentation of insurance coverage and nonstandardized data structures make studying the pediatric population more difficult. However, the Children’s Hospital Association’s (CHA) Pediatric Health Information System (PHIS) has made large-scale comparisons more feasible. To overcome challenges associated with using charges and nonuniform cost data, PHIS-derived standardized costs provide new opportunities for comparisons.15,16 Initial analyses using PHIS data showed significant interhospital variations in costs of care,15 but they did not adjust for differences in populations or assess the drivers of variation. A more recent study that controlled for payer status, comorbidities, and illness severity found that intensive care unit (ICU) utilization varied significantly for children hospitalized for asthma, suggesting that hospital practice patterns drive differences in cost.17

This study uses PHIS data to analyze regional variations in standardized costs of care for 3 conditions for which children are hospitalized. To assess potential drivers of variation, the study investigates the effects of patient-level demographic and illness-severity variables as well as encounter-level variables on costs of care. It also estimates cost savings from reducing variation.

METHODS

Data Source

This retrospective cohort study uses the PHIS database (CHA, Overland Park, KS), which includes 48 freestanding children’s hospitals located in noncompeting markets across the United States and accounts for approximately 20% of pediatric hospitalizations. PHIS includes patient demographics, International Classification of Diseases, 9th Revision (ICD-9) diagnosis and procedure codes, as well as hospital charges. In addition to total charges, PHIS reports imaging, laboratory, pharmacy, and “other” charges. The “other” category aggregates clinical, supply, room, and nursing charges (including facility fees and ancillary staff services).

Inclusion Criteria

Inpatient- and observation-status hospitalizations for asthma, diabetic ketoacidosis (DKA), and acute gastroenteritis (AGE) at 46 PHIS hospitals from October 2014 to September 2015 were included. Two hospitals were excluded because of missing data. Hospitalizations for patients >18 years were excluded.

Hospitalizations were categorized by using All Patient Refined-Diagnosis Related Groups (APR-DRGs) version 24 (3M Health Information Systems, St. Paul, MN)18 based on the ICD-9 diagnosis and procedure codes assigned during the episode of care. Analyses included APR-DRG 141 (asthma), primary diagnosis ICD-9 codes 250.11 and 250.13 (DKA), and APR-DRG 249 (AGE). ICD-9 codes were used for DKA for increased specificity.19 These conditions were chosen to represent 3 clinical scenarios: (1) a diagnosis for which hospitals differ on whether certain aspects of care are provided in the ICU (asthma), (2) a diagnosis that frequently includes care in an ICU (DKA), and (3) a diagnosis that typically does not include ICU care (AGE).19

Study Design

To focus the analysis on variation in resource utilization across hospitals rather than variations in hospital item charges, each billed resource was assigned a standardized cost.15,16 For each clinical transaction code (CTC), the median unit cost was calculated for each hospital. The median of the hospital medians was defined as the standardized unit cost for that CTC.
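As a rough illustration of the median-of-medians step described above, the following Python sketch computes one standardized unit cost per CTC. The record layout (hospital identifier, CTC, unit cost) and the function name are assumptions made for this example; they are not taken from PHIS.

```python
from collections import defaultdict
from statistics import median


def standardized_unit_costs(records):
    """Return {ctc: standardized unit cost}.

    `records` is an iterable of (hospital_id, ctc, unit_cost) tuples.
    This layout is hypothetical and only serves the illustration.
    """
    # Group unit costs by CTC, then by hospital.
    by_ctc = defaultdict(lambda: defaultdict(list))
    for hospital_id, ctc, unit_cost in records:
        by_ctc[ctc][hospital_id].append(unit_cost)

    # Step 1: median unit cost within each hospital.
    # Step 2: median of those hospital medians is the standardized cost.
    return {
        ctc: median(median(costs) for costs in hospitals.values())
        for ctc, hospitals in by_ctc.items()
    }


# Illustrative values only (not study data).
example = [
    ("A", "CTC-1", 10.0), ("A", "CTC-1", 20.0), ("A", "CTC-1", 30.0),
    ("B", "CTC-1", 40.0),
    ("C", "CTC-1", 100.0),
]
costs = standardized_unit_costs(example)
```

Taking the median within each hospital first means that a hospital billing a given item very often does not dominate the standardized value; each hospital contributes one number per CTC.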

The primary outcome variable was the total standardized cost for the hospitalization adjusted for patient-level demographic and illness-severity variables. Patient demographic and illness-severity covariates included age, race, gender, ZIP code-based median annual household income (HHI), rural-urban location, distance from home ZIP code to the hospital, chronic condition indicator (CCI), and severity-of-illness (SOI). When assessing drivers of variation, encounter-level covariates were added, including length of stay (LOS) in hours, ICU utilization, and 7-day readmission (an imprecise measure to account for quality of care during the index visit). The contribution of imaging, laboratory, pharmacy, and “other” costs was also considered.

Median annual HHI for patients’ home ZIP code was obtained from 2010 US Census data. Community-level HHI, a proxy for socioeconomic status (SES),20,21 was classified into categories based on the 2015 US federal poverty level (FPL) for a family of 4,22: HHI-1 = ≤ 1.5 × FPL; HHI-2 = 1.5 to 2 × FPL; HHI-3 = 2 to 3 × FPL; HHI-4 = ≥ 3 × FPL. Rural-urban commuting area (RUCA) codes were used to determine the rural-urban classification of the patient’s home.23 The distance from home ZIP code to the hospital was included as an additional control for illness severity because patients traveling longer distances are often sicker and require more resources.24
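The HHI categories above can be expressed as a small classification function. This is a minimal sketch: the function name is hypothetical, the FPL is passed in rather than hard-coded, and the handling of values that fall exactly on the 2 × FPL boundary is an assumption, since the category definitions do not say which side it belongs to.

```python
def classify_hhi(median_hhi, fpl):
    """Map a ZIP code's median household income to an HHI category.

    `fpl` is the federal poverty level for a family of 4 in the
    reference year. Assumption: a ratio of exactly 2 is placed in HHI-2.
    """
    ratio = median_hhi / fpl
    if ratio <= 1.5:
        return "HHI-1"
    if ratio <= 2:
        return "HHI-2"
    if ratio < 3:
        return "HHI-3"
    return "HHI-4"


# Illustrative FPL value chosen for round numbers, not the actual 2015 figure.
ILLUSTRATIVE_FPL = 20_000
labels = [classify_hhi(x, ILLUSTRATIVE_FPL) for x in (30_000, 35_000, 50_000, 60_000)]
```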

The Agency for Healthcare Research and Quality CCI classification system was used to identify the presence of a chronic condition.25 For asthma, CCI was flagged if the patient had a chronic condition other than asthma; for DKA, CCI was flagged if the patient had a chronic condition other than DKA; and for AGE, CCI was flagged if the patient had any chronic condition.

The APR-DRG system provides a 4-level SOI score with each APR-DRG category. Patient factors, such as comorbid diagnoses, are considered in severity scores generated through 3M’s proprietary algorithms.18

For the first analysis, the 46 hospitals were categorized into 7 geographic regions based on 2010 US Census Divisions.26 To overcome small hospital sample sizes, Mountain and Pacific were combined into West, and Middle Atlantic and New England were combined into North East. Because PHIS hospitals are located in noncompeting geographic regions, for the second analysis, we examined hospital-level variation (considering each hospital as its own region).
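The regional grouping described above amounts to a lookup from the 9 US Census Divisions to 7 analysis regions. The sketch below shows that mapping; the dictionary and function names are illustrative, and only the two merges stated in the text are applied.

```python
# Map each US Census Division to the analysis region used in the study.
# Only Mountain/Pacific and Middle Atlantic/New England are merged.
DIVISION_TO_REGION = {
    "New England": "North East",
    "Middle Atlantic": "North East",
    "East North Central": "East North Central",
    "West North Central": "West North Central",
    "South Atlantic": "South Atlantic",
    "East South Central": "East South Central",
    "West South Central": "West South Central",
    "Mountain": "West",
    "Pacific": "West",
}


def region_for(division):
    """Return the analysis region for a Census Division name."""
    return DIVISION_TO_REGION[division]


regions = sorted(set(DIVISION_TO_REGION.values()))
```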

Data Analysis

To focus the analysis on “typical” patients and produce more robust estimates of central tendencies, the top and bottom 5% of hospitalizations with the most extreme standardized costs by condition were trimmed.27 Standardized costs were log-transformed because of their nonnormal distribution and analyzed by using linear mixed models. Covariates were added stepwise to assess the proportion of the variance explained by each predictor. Post-hoc tests with conservative single-step stepwise mutation model corrections for multiple testing were used to compare adjusted costs. Statistical analyses were performed using SAS version 9.3 (SAS Institute, Cary, NC). P values < 0.05 were considered significant. The Children’s Hospital of Philadelphia Institutional Review Board did not classify this study as human subjects research.
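The trimming and log transformation described above might look like the following sketch for a single condition. How the 5% cutoffs were computed is not stated, so dropping a whole number of records from each tail (rounding down) is an assumption, as are the function and parameter names. The mixed-model step is not shown.

```python
import math


def trim_and_log(costs, percent=5):
    """Drop the lowest and highest `percent` of costs, then take natural logs.

    Assumption: the number of records removed from each tail is
    len(costs) * percent // 100, i.e. rounded down.
    """
    ordered = sorted(costs)
    k = len(ordered) * percent // 100  # records to drop from each tail
    kept = ordered[k:len(ordered) - k]
    return [math.log(c) for c in kept]


# Illustrative data: 100 hospitalizations costing $1 to $100.
logged = trim_and_log(range(1, 101))
```

With 100 records and 5% trimming, 5 records are removed from each tail and 90 remain.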

RESULTS

During the study period, there were 26,430 hospitalizations for asthma, 5056 for DKA, and 16,274 for AGE (Table 1).

Variation Across Census Regions

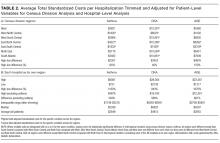

After adjusting for patient-level demographic and illness-severity variables, differences in adjusted total standardized costs remained between regions (P < 0.001). Although no region was an outlier compared to the overall mean for any of the conditions, regions were statistically different in pairwise comparison. The East North Central, South Atlantic, and West South Central regions had the highest adjusted total standardized costs for each of the conditions. The East South Central and West North Central regions had the lowest costs for each of the conditions. Adjusted total standardized costs were 120% higher for asthma ($1920 vs $4227), 46% higher for DKA ($7429 vs $10,881), and 150% higher for AGE ($3316 vs $8292) in the highest-cost region compared with the lowest-cost region (Table 2A).
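The percentage differences reported above follow from the usual relative-difference formula, (high − low) / low × 100. The short check below reproduces them from the dollar figures in the text; the function name is illustrative.

```python
def percent_higher(low, high):
    """Percentage by which `high` exceeds `low`."""
    return (high - low) / low * 100


# Lowest- vs highest-cost region, as reported in the text.
asthma = round(percent_higher(1920, 4227))
dka = round(percent_higher(7429, 10881))
age = round(percent_higher(3316, 8292))
```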

Variation Within Census Regions

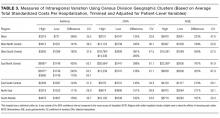

After controlling for patient-level demographic and illness-severity variables, standardized costs were different across hospitals in the same region (P < 0.001; panel A in Figure). This was true for all conditions in each region. Differences between the lowest- and highest-cost hospitals within the same region ranged from 111% to 420% for asthma, 101% to 398% for DKA, and 166% to 787% for AGE (Table 3).

Variation Across Hospitals (Each Hospital as Its Own Region)

One hospital had the highest adjusted standardized costs for all 3 conditions ($9087 for asthma, $28,564 for DKA, and $23,387 for AGE) and was outside of the 95% confidence interval compared with the overall means. The second highest-cost hospitals for asthma ($5977) and AGE ($18,780) were also outside of the 95% confidence interval. After removing these outliers, the difference between the highest- and lowest-cost hospitals was 549% for asthma ($721 vs $4678), 491% for DKA ($2738 vs $16,192), and 681% for AGE ($1317 vs $10,281; Table 2B).

Drivers of Variation Across Census Regions

Patient-level demographic and illness-severity variables explained very little of the variation in standardized costs across regions. For each of the conditions, age, race, gender, community-level HHI, RUCA, and distance from home to the hospital each accounted for <1.5% of variation, while SOI and CCI each accounted for <5%. Overall, patient-level variables explained 5.5%, 3.7%, and 6.7% of variation for asthma, DKA, and AGE.

Encounter-level variables explained a much larger percentage of the variation in costs. LOS accounted for 17.8% of the variation for asthma, 9.8% for DKA, and 8.7% for AGE. ICU utilization explained 6.9% of the variation for asthma and 12.5% for DKA; ICU use was not a major driver for AGE. Seven-day readmissions accounted for <0.5% for each of the conditions. The combination of patient-level and encounter-level variables explained 27%, 24%, and 15% of the variation for asthma, DKA, and AGE.

Drivers of Variation Across Hospitals

For each of the conditions, patient-level demographic variables each accounted for <2% of variation in costs between hospitals. SOI accounted for 4.5% of the variation for asthma and CCI accounted for 5.2% for AGE. Overall, patient-level variables explained 6.9%, 5.3%, and 7.3% of variation for asthma, DKA, and AGE.

Encounter-level variables accounted for a much larger percentage of the variation in cost. LOS explained 25.4% for asthma, 13.3% for DKA, and 14.2% for AGE. ICU utilization accounted for 13.4% for asthma and 21.9% for DKA; ICU use was not a major driver for AGE. Seven-day readmissions accounted for <0.5% for each of the conditions. Together, patient-level and encounter-level variables explained 40%, 36%, and 22% of variation for asthma, DKA, and AGE.

Imaging, Laboratory, Pharmacy, and “Other” Costs

The largest contributor to total costs adjusted for patient-level factors for all conditions was “other,” which aggregates room, nursing, clinical, and supply charges (panel B in Figure). When considering drivers of variation, this category explained >50% for each of the conditions. The next largest contributor to total costs was laboratory charges, which accounted for 15% of the variation across regions for asthma and 11% for DKA. Differences in imaging accounted for 18% of the variation for DKA and 15% for AGE. Differences in pharmacy charges accounted for <4% of the variation for each of the conditions. Adding the 4 cost components to the other patient- and encounter-level covariates, the model explained 81%, 78%, and 72% of the variation across census regions for asthma, DKA, and AGE.

For the hospital-level analysis, differences in “other” remained the largest driver of cost variation. For asthma, “other” explained 61% of variation, while pharmacy, laboratory, and imaging each accounted for <8%. For DKA, differences in imaging accounted for 18% of the variation and laboratory charges accounted for 12%. For AGE, imaging accounted for 15% of the variation. Adding the 4 cost components to the other patient- and encounter-level covariates, the model explained 81%, 72%, and 67% of the variation for asthma, DKA, and AGE.

Cost Savings

If all hospitals in this cohort with adjusted standardized costs above the national PHIS average achieved costs equal to the national PHIS average, estimated annual savings in adjusted standardized costs for these 3 conditions would be $69.1 million. If each hospital with adjusted costs above the average within its census region achieved costs equal to its regional average, estimated annual savings in adjusted standardized costs for these conditions would be $25.2 million.
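The exact computation behind these savings estimates is not spelled out here. One plausible reading, shown as a sketch under that assumption, multiplies each above-benchmark hospital's excess adjusted cost per hospitalization by its number of hospitalizations and sums the result. The function name, tuple layout, and numbers are hypothetical.

```python
def estimated_savings(hospitals, benchmark):
    """Sum the excess cost over `benchmark` for hospitals above it.

    `hospitals` is an iterable of (hospital_id, adjusted_cost, n_hospitalizations).
    Hospitals at or below the benchmark contribute nothing.
    This is an assumed method, not the authors' documented one.
    """
    return sum(
        (adjusted_cost - benchmark) * n
        for _hospital_id, adjusted_cost, n in hospitals
        if adjusted_cost > benchmark
    )


# Hypothetical values, not study data.
example_hospitals = [
    ("A", 5000.0, 100),  # $1000 above benchmark for 100 stays
    ("B", 3000.0, 200),  # below benchmark, no savings counted
    ("C", 4000.0, 50),   # at benchmark, no savings counted
]
savings = estimated_savings(example_hospitals, benchmark=4000.0)
```

Using a regional average as the benchmark for each hospital, rather than one national value, corresponds to the second scenario in the paragraph above.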

DISCUSSION

This study reported on the regional variation in costs of care for 3 conditions treated at 46 children’s hospitals across 7 geographic regions, and it demonstrated that variations in costs of care exist in pediatrics. This study used standardized costs to compare utilization patterns across hospitals and adjusted for several patient-level demographic and illness-severity factors, and it found that differences in costs of care for children hospitalized with asthma, DKA, and AGE remained both between and within regions.

These variations are noteworthy, as hospitals strive to improve the value of healthcare. If the higher-cost hospitals in this cohort could achieve costs equal to the national PHIS averages, estimated annual savings in adjusted standardized costs for these conditions alone would equal $69.1 million. If higher-cost hospitals relative to the average in their own region reduced costs to their regional averages, annual standardized cost savings could equal $25.2 million for these conditions.

The differences observed are also significant in that they provide a foundation for exploring whether lower-cost regions or lower-cost hospitals achieve comparable quality outcomes.28 If so, studying what those hospitals do to achieve outcomes more efficiently can serve as the basis for the establishment of best practices.29 Standardizing best practices through protocols, pathways, and care-model redesign can reduce potentially unnecessary spending.30

Our findings showed that patient-level demographic and illness-severity covariates, including community-level HHI and SOI, did not consistently explain cost differences. Instead, LOS and ICU utilization were associated with higher costs.17,19 When considering the effect of the 4 cost components on the variation in total standardized costs between regions and between hospitals, the fact that the “other” category accounted for the largest percent of the variation is not surprising, because the cost of room occupancy and nursing services increases with longer LOS and more time in the ICU. Other individual cost components that were major drivers of variation were laboratory utilization for asthma and imaging for DKA and AGE31 (though they accounted for a much smaller proportion of total adjusted costs).19

To determine if these factors are modifiable, more information is needed to explain why practices differ. Many factors may contribute to varying utilization patterns, including differences in capabilities and resources (in the hospital and in the community) and patient volumes. For example, some hospitals provide continuous albuterol for status asthmaticus only in ICUs, while others provide it on regular units.32 But if certain hospitals do not have adequate resources or volumes to effectively care for certain populations outside of the ICU, their higher-value approach (considering quality and cost) may be to utilize ICU beds, even if some other hospitals care for those patients on non-ICU floors. Another possibility is that family preferences about care delivery (such as how long children stay in the hospital) may vary across regions.33

Other evidence suggests that physician practice and spending patterns are strongly influenced by the practices of the region where they trained.34 Because physicians often practice close to where they trained,35,36 this may partially explain how regional patterns are reinforced.

Even considering all mentioned covariates, our model did not fully explain variation in standardized costs. After adding the cost components as covariates, between one-fifth and one-third of the variation remained unexplained (the models explained 67% to 81%). It is possible that this unexplained variation stemmed from unmeasured patient-level factors.

In addition, while proxies for SES, including community-level HHI, did not significantly predict differences in costs across regions, it is possible that SES affected LOS differently in different regions. Previous studies have suggested that lower SES is associated with longer LOS.37 If this effect is more pronounced in certain regions (potentially because of differences in social service infrastructures), SES may be contributing to variations in cost through LOS.

Our findings were subject to limitations. First, this study only examined 3 diagnoses and did not include surgical or less common conditions. Second, while PHIS includes tertiary care, academic, and freestanding children’s hospitals, it does not include general hospitals, which is where most pediatric patients receive care.38 Third, we used ZIP code-based median annual HHI to account for SES, and we used ZIP codes to determine the distance to the hospital and rural-urban location of patients’ homes. These approximations lack precision because SES and distances vary within ZIP codes.39 Fourth, while adjusted standardized costs allow for comparisons between hospitals, they do not represent actual costs to patients or individual hospitals. Additionally, when determining whether variation remained after controlling for patient-level variables, we included SOI as a reflection of illness-severity at presentation. However, in practice, SOI scores may be assigned partially based on factors determined during the hospitalization.18 Finally, the use of other regional boundaries or the selection of different hospitals may yield different results.

CONCLUSION

This study reveals regional variations in costs of care for 3 inpatient pediatric conditions. Future studies should explore whether lower-cost regions or lower-cost hospitals achieve comparable quality outcomes. To the extent that variation is driven by modifiable factors and lower spending does not compromise outcomes, these data may prompt reviews of care models to reduce unwarranted variation and improve the value of care delivery at local, regional, and national levels.

Disclosure

Internal funds from the CHA and The Children’s Hospital of Philadelphia supported the conduct of this work. The authors have no financial interests, relationships, or affiliations relevant to the subject matter or materials discussed in the manuscript to disclose. The authors have no potential conflicts of interest relevant to the subject matter or materials discussed in the manuscript to disclose.

1. Fisher E, Skinner J. Making Sense of Geographic Variations in Health Care: The New IOM Report. 2013; http://healthaffairs.org/blog/2013/07/24/making-sense-of-geographic-variations-in-health-care-the-new-iom-report/. Accessed on April 11, 2014.

With some areas of the country spending close to 3 times more on healthcare than others, regional variation in healthcare spending has been the focus of national attention.1-7 Since 1973, the Dartmouth Institute has studied regional variation in healthcare utilization and spending and concluded that variation is “unwarranted” because it is driven by providers’ practice patterns rather than differences in medical need, patient preferences, or evidence-based medicine.8-11 However, critics of the Dartmouth Institute’s findings argue that their approach does not adequately adjust for community-level income, and that higher costs in some areas reflect greater patient needs that are not reflected in illness acuity alone.12-14

While Medicare data have made it possible to study variations in spending for the senior population, fragmentation of insurance coverage and nonstandardized data structures make studying the pediatric population more difficult. However, the Children’s Hospital Association’s (CHA) Pediatric Health Information System (PHIS) has made large-scale comparisons more feasible. To overcome challenges associated with using charges and nonuniform cost data, PHIS-derived standardized costs provide new opportunities for comparisons.15,16 Initial analyses using PHIS data showed significant interhospital variations in costs of care,15 but they did not adjust for differences in populations and assess the drivers of variation. A more recent study that controlled for payer status, comorbidities, and illness severity found that intensive care unit (ICU) utilization varied significantly for children hospitalized for asthma, suggesting that hospital practice patterns drive differences in cost.17

This study uses PHIS data to analyze regional variations in standardized costs of care for 3 conditions for which children are hospitalized. To assess potential drivers of variation, the study investigates the effects of patient-level demographic and illness-severity variables as well as encounter-level variables on costs of care. It also estimates cost savings from reducing variation.

METHODS

Data Source

This retrospective cohort study uses the PHIS database (CHA, Overland Park, KS), which includes 48 freestanding children’s hospitals located in noncompeting markets across the United States and accounts for approximately 20% of pediatric hospitalizations. PHIS includes patient demographics, International Classification of Diseases, 9th Revision (ICD-9) diagnosis and procedure codes, as well as hospital charges. In addition to total charges, PHIS reports imaging, laboratory, pharmacy, and “other” charges. The “other” category aggregates clinical, supply, room, and nursing charges (including facility fees and ancillary staff services).

Inclusion Criteria

Inpatient- and observation-status hospitalizations for asthma, diabetic ketoacidosis (DKA), and acute gastroenteritis (AGE) at 46 PHIS hospitals from October 2014 to September 2015 were included. Two hospitals were excluded because of missing data. Hospitalizations for patients >18 years were excluded.

Hospitalizations were categorized by using All Patient Refined-Diagnosis Related Groups (APR-DRGs) version 24 (3M Health Information Systems, St. Paul, MN)18 based on the ICD-9 diagnosis and procedure codes assigned during the episode of care. Analyses included APR-DRG 141 (asthma), primary diagnosis ICD-9 codes 250.11 and 250.13 (DKA), and APR-DRG 249 (AGE). ICD-9 codes were used for DKA for increased specificity.19 These conditions were chosen to represent 3 clinical scenarios: (1) a diagnosis for which hospitals differ on whether certain aspects of care are provided in the ICU (asthma), (2) a diagnosis that frequently includes care in an ICU (DKA), and (3) a diagnosis that typically does not include ICU care (AGE).19

Study Design

To focus the analysis on variation in resource utilization across hospitals rather than variations in hospital item charges, each billed resource was assigned a standardized cost.15,16 For each clinical transaction code (CTC), the median unit cost was calculated for each hospital. The median of the hospital medians was defined as the standardized unit cost for that CTC.

The primary outcome variable was the total standardized cost for the hospitalization adjusted for patient-level demographic and illness-severity variables. Patient demographic and illness-severity covariates included age, race, gender, ZIP code-based median annual household income (HHI), rural-urban location, distance from home ZIP code to the hospital, chronic condition indicator (CCI), and severity-of-illness (SOI). When assessing drivers of variation, encounter-level covariates were added, including length of stay (LOS) in hours, ICU utilization, and 7-day readmission (an imprecise measure to account for quality of care during the index visit). The contribution of imaging, laboratory, pharmacy, and “other” costs was also considered.

Median annual HHI for patients’ home ZIP code was obtained from 2010 US Census data. Community-level HHI, a proxy for socioeconomic status (SES),20,21 was classified into categories based on the 2015 US federal poverty level (FPL) for a family of 422: HHI-1 = ≤ 1.5 × FPL; HHI-2 = 1.5 to 2 × FPL; HHI-3 = 2 to 3 × FPL; HHI-4 = ≥ 3 × FPL. Rural-urban commuting area (RUCA) codes were used to determine the rural-urban classification of the patient’s home.23 The distance from home ZIP code to the hospital was included as an additional control for illness severity because patients traveling longer distances are often more sick and require more resources.24

The Agency for Healthcare Research and Quality CCI classification system was used to identify the presence of a chronic condition.25 For asthma, CCI was flagged if the patient had a chronic condition other than asthma; for DKA, CCI was flagged if the patient had a chronic condition other than DKA; and for AGE, CCI was flagged if the patient had any chronic condition.

The APR-DRG system provides a 4-level SOI score with each APR-DRG category. Patient factors, such as comorbid diagnoses, are considered in severity scores generated through 3M’s proprietary algorithms.18

For the first analysis, the 46 hospitals were categorized into 7 geographic regions based on 2010 US Census Divisions.26 To overcome small hospital sample sizes, Mountain and Pacific were combined into West, and Middle Atlantic and New England were combined into North East. Because PHIS hospitals are located in noncompeting geographic regions, for the second analysis, we examined hospital-level variation (considering each hospital as its own region).

Data Analysis

To focus the analysis on “typical” patients and produce more robust estimates of central tendencies, the top and bottom 5% of hospitalizations with the most extreme standardized costs by condition were trimmed.27 Standardized costs were log-transformed because of their nonnormal distribution and analyzed by using linear mixed models. Covariates were added stepwise to assess the proportion of the variance explained by each predictor. Post-hoc tests with conservative single-step stepwise mutation model corrections for multiple testing were used to compare adjusted costs. Statistical analyses were performed using SAS version 9.3 (SAS Institute, Cary, NC). P values < 0.05 were considered significant. The Children’s Hospital of Philadelphia Institutional Review Board did not classify this study as human subjects research.

RESULTS

During the study period, there were 26,430 hospitalizations for asthma, 5056 for DKA, and 16,274 for AGE (Table 1).

Variation Across Census Regions

After adjusting for patient-level demographic and illness-severity variables, differences in adjusted total standardized costs remained between regions (P < 0.001). Although no region was an outlier compared to the overall mean for any of the conditions, regions were statistically different in pairwise comparison. The East North Central, South Atlantic, and West South Central regions had the highest adjusted total standardized costs for each of the conditions. The East South Central and West North Central regions had the lowest costs for each of the conditions. Adjusted total standardized costs were 120% higher for asthma ($1920 vs $4227), 46% higher for DKA ($7429 vs $10,881), and 150% higher for AGE ($3316 vs $8292) in the highest-cost region compared with the lowest-cost region (Table 2A).

Variation Within Census Regions

After controlling for patient-level demographic and illness-severity variables, standardized costs were different across hospitals in the same region (P < 0.001; panel A in Figure). This was true for all conditions in each region. Differences between the lowest- and highest-cost hospitals within the same region ranged from 111% to 420% for asthma, 101% to 398% for DKA, and 166% to 787% for AGE (Table 3).

Variation Across Hospitals (Each Hospital as Its Own Region)

One hospital had the highest adjusted standardized costs for all 3 conditions ($9087 for asthma, $28,564 for DKA, and $23,387 for AGE) and was outside of the 95% confidence interval compared with the overall means. The second highest-cost hospitals for asthma ($5977) and AGE ($18,780) were also outside of the 95% confidence interval. After removing these outliers, the difference between the highest- and lowest-cost hospitals was 549% for asthma ($721 vs $4678), 491% for DKA ($2738 vs $16,192), and 681% for AGE ($1317 vs $10,281; Table 2B).

Drivers of Variation Across Census Regions

Patient-level demographic and illness-severity variables explained very little of the variation in standardized costs across regions. For each of the conditions, age, race, gender, community-level HHI, RUCA, and distance from home to the hospital each accounted for <1.5% of variation, while SOI and CCI each accounted for <5%. Overall, patient-level variables explained 5.5%, 3.7%, and 6.7% of variation for asthma, DKA, and AGE.

Encounter-level variables explained a much larger percentage of the variation in costs. LOS accounted for 17.8% of the variation for asthma, 9.8% for DKA, and 8.7% for AGE. ICU utilization explained 6.9% of the variation for asthma and 12.5% for DKA; ICU use was not a major driver for AGE. Seven-day readmissions accounted for <0.5% for each of the conditions. The combination of patient-level and encounter-level variables explained 27%, 24%, and 15% of the variation for asthma, DKA, and AGE.

Drivers of Variation Across Hospitals

For each of the conditions, patient-level demographic variables each accounted for <2% of variation in costs between hospitals. SOI accounted for 4.5% of the variation for asthma and CCI accounted for 5.2% for AGE. Overall, patient-level variables explained 6.9%, 5.3%, and 7.3% of variation for asthma, DKA, and AGE.

Encounter-level variables accounted for a much larger percentage of the variation in cost. LOS explained 25.4% for asthma, 13.3% for DKA, and 14.2% for AGE. ICU utilization accounted for 13.4% for asthma and 21.9% for DKA; ICU use was not a major driver for AGE. Seven-day readmissions accounted for <0.5% for each of the conditions. Together, patient-level and encounter-level variables explained 40%, 36%, and 22% of variation for asthma, DKA, and AGE.

Imaging, Laboratory, Pharmacy, and “Other” Costs

The largest contributor to total costs adjusted for patient-level factors for all conditions was “other,” which aggregates room, nursing, clinical, and supply charges (panel B in Figure). When considering drivers of variation, this category explained >50% for each of the conditions. The next largest contributor to total costs was laboratory charges, which accounted for 15% of the variation across regions for asthma and 11% for DKA. Differences in imaging accounted for 18% of the variation for DKA and 15% for AGE. Differences in pharmacy charges accounted for <4% of the variation for each of the conditions. Adding the 4 cost components to the other patient- and encounter-level covariates, the model explained 81%, 78%, and 72% of the variation across census regions for asthma, DKA, and AGE.

For the hospital-level analysis, differences in “other” remained the largest driver of cost variation. For asthma, “other” explained 61% of variation, while pharmacy, laboratory, and imaging each accounted for <8%. For DKA, differences in imaging accounted for 18% of the variation and laboratory charges accounted for 12%. For AGE, imaging accounted for 15% of the variation. Adding the 4 cost components to the other patient- and encounter-level covariates, the model explained 81%, 72%, and 67% of the variation for asthma, DKA, and AGE.

Cost Savings

If all hospitals in this cohort with adjusted standardized costs above the national PHIS average achieved costs equal to the national PHIS average, estimated annual savings in adjusted standardized costs for these 3 conditions would be $69.1 million. If each hospital with adjusted costs above the average within its census region achieved costs equal to its regional average, estimated annual savings in adjusted standardized costs for these conditions would be $25.2 million.
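The savings estimates above can be illustrated with a small sketch. It is not the authors' code: the article does not spell out the exact computation, so the sketch assumes that savings equal the excess of each above-benchmark hospital's mean adjusted cost over the benchmark, multiplied by that hospital's number of hospitalizations, and that the benchmark is a volume-weighted mean. The `HospitalCost` record, the function names, and the example figures are all hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class HospitalCost:
    hospital_id: str           # hypothetical identifier
    region: str                # census region label
    mean_adjusted_cost: float  # adjusted standardized cost per hospitalization (USD)
    n_hospitalizations: int    # annual hospitalizations for the condition


def weighted_mean(rows: List[HospitalCost]) -> float:
    """Volume-weighted mean cost per hospitalization (assumed benchmark)."""
    total_cost = sum(r.mean_adjusted_cost * r.n_hospitalizations for r in rows)
    total_n = sum(r.n_hospitalizations for r in rows)
    return total_cost / total_n


def savings_to_benchmark(rows: List[HospitalCost], benchmark: float) -> float:
    """Savings if every hospital above the benchmark dropped to the benchmark."""
    return sum(
        (r.mean_adjusted_cost - benchmark) * r.n_hospitalizations
        for r in rows
        if r.mean_adjusted_cost > benchmark
    )


def national_savings(rows: List[HospitalCost]) -> float:
    """Benchmark every hospital against the cohort-wide average."""
    return savings_to_benchmark(rows, weighted_mean(rows))


def regional_savings(rows: List[HospitalCost]) -> float:
    """Benchmark each hospital against the average of its own region."""
    by_region: Dict[str, List[HospitalCost]] = {}
    for r in rows:
        by_region.setdefault(r.region, []).append(r)
    return sum(
        savings_to_benchmark(group, weighted_mean(group))
        for group in by_region.values()
    )


# Invented figures for illustration only; they are not PHIS data.
example = [
    HospitalCost("A", "X", 1000.0, 100),
    HospitalCost("B", "X", 2000.0, 100),
    HospitalCost("C", "Y", 5000.0, 100),
    HospitalCost("D", "Y", 6000.0, 100),
]
print(national_savings(example))  # 400000.0
print(regional_savings(example))  # 100000.0
```

In this toy cohort the regional benchmark yields smaller savings than the national one because part of the cost difference lies between regions rather than within them, which mirrors the direction of the $69.1 million and $25.2 million estimates.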

DISCUSSION

This study reported on the regional variation in costs of care for 3 conditions treated at 46 children’s hospitals across 7 geographic regions, and it demonstrated that variations in costs of care exist in pediatrics. This study used standardized costs to compare utilization patterns across hospitals and adjusted for several patient-level demographic and illness-severity factors, and it found that differences in costs of care for children hospitalized with asthma, DKA, and AGE remained both between and within regions.

These variations are noteworthy, as hospitals strive to improve the value of healthcare. If the higher-cost hospitals in this cohort could achieve costs equal to the national PHIS averages, estimated annual savings in adjusted standardized costs for these conditions alone would equal $69.1 million. If higher-cost hospitals relative to the average in their own region reduced costs to their regional averages, annual standardized cost savings could equal $25.2 million for these conditions.

The differences observed are also significant in that they provide a foundation for exploring whether lower-cost regions or lower-cost hospitals achieve comparable quality outcomes.28 If so, studying what those hospitals do to achieve outcomes more efficiently can serve as the basis for the establishment of best practices.29 Standardizing best practices through protocols, pathways, and care-model redesign can reduce potentially unnecessary spending.30

Our findings showed that patient-level demographic and illness-severity covariates, including community-level HHI and SOI, did not consistently explain cost differences. Instead, LOS and ICU utilization were associated with higher costs.17,19 When considering the effect of the 4 cost components on the variation in total standardized costs between regions and between hospitals, the fact that the “other” category accounted for the largest percent of the variation is not surprising, because the cost of room occupancy and nursing services increases with longer LOS and more time in the ICU. Other individual cost components that were major drivers of variation were laboratory utilization for asthma and imaging for DKA and AGE31 (though they accounted for a much smaller proportion of total adjusted costs).19

To determine if these factors are modifiable, more information is needed to explain why practices differ. Many factors may contribute to varying utilization patterns, including differences in capabilities and resources (in the hospital and in the community) and patient volumes. For example, some hospitals provide continuous albuterol for status asthmaticus only in ICUs, while others provide it on regular units.32 But if certain hospitals do not have adequate resources or volumes to effectively care for certain populations outside of the ICU, their higher-value approach (considering quality and cost) may be to utilize ICU beds, even if some other hospitals care for those patients on non-ICU floors. Another possibility is that family preferences about care delivery (such as how long children stay in the hospital) may vary across regions.33

Other evidence suggests that physician practice and spending patterns are strongly influenced by the practices of the region where they trained.34 Because physicians often practice close to where they trained,35,36 this may partially explain how regional patterns are reinforced.

Even considering all mentioned covariates, our model did not fully explain variation in standardized costs. After adding the cost components as covariates, between roughly one-fifth and one-third of the variation (19% to 33%, depending on the condition and the level of analysis) remained unexplained. It is possible that this unexplained variation stemmed from unmeasured patient-level factors.

In addition, while proxies for SES, including community-level HHI, did not significantly predict differences in costs across regions, it is possible that SES affected LOS differently in different regions. Previous studies have suggested that lower SES is associated with longer LOS.37 If this effect is more pronounced in certain regions (potentially because of differences in social service infrastructures), SES may be contributing to variations in cost through LOS.

Our findings were subject to limitations. First, this study examined only 3 diagnoses and did not include surgical or less common conditions. Second, while PHIS includes tertiary care, academic, and freestanding children’s hospitals, it does not include general hospitals, which is where most pediatric patients receive care.38 Third, we used ZIP code-based median annual HHI to account for SES, and we used ZIP codes to determine the distance to the hospital and rural-urban location of patients’ homes. These approximations lack precision because SES and distances vary within ZIP codes.39 Fourth, while adjusted standardized costs allow for comparisons between hospitals, they do not represent actual costs to patients or individual hospitals. Fifth, when determining whether variation remained after controlling for patient-level variables, we included SOI as a reflection of illness severity at presentation. However, in practice, SOI scores may be assigned partially based on factors determined during the hospitalization.18 Finally, the use of other regional boundaries or the selection of different hospitals may yield different results.

CONCLUSION

This study reveals regional variations in costs of care for 3 inpatient pediatric conditions. Future studies should explore whether lower-cost regions or lower-cost hospitals achieve comparable quality outcomes. To the extent that variation is driven by modifiable factors and lower spending does not compromise outcomes, these data may prompt reviews of care models to reduce unwarranted variation and improve the value of care delivery at local, regional, and national levels.

Disclosure

Internal funds from the CHA and The Children’s Hospital of Philadelphia supported the conduct of this work. The authors have no financial interests, relationships, or affiliations relevant to the subject matter or materials discussed in the manuscript to disclose. The authors have no potential conflicts of interest relevant to the subject matter or materials discussed in the manuscript to disclose.

1. Fisher E, Skinner J. Making Sense of Geographic Variations in Health Care: The New IOM Report. 2013; http://healthaffairs.org/blog/2013/07/24/making-sense-of-geographic-variations-in-health-care-the-new-iom-report/. Accessed on April 11, 2014.

© 2017 Society of Hospital Medicine

Readmission Analysis Using Fault Tree

As physicians strive to increase the value of healthcare delivery, there has been increased focus on improving the quality of care that patients receive while lowering per capita costs. A provision of the Affordable Care Act implemented in 2012 identified all‐cause 30‐day readmission rates as a measure of hospital quality, and as part of the Act's Hospital Readmissions Reduction Program, Medicare now penalizes hospitals with higher than expected all‐cause readmission rates for adult patients with certain conditions by lowering reimbursements.[1] Although readmissions are not yet commonly used to determine reimbursements for pediatric hospitals, several states are penalizing higher than expected readmission rates for Medicaid enrollees,[2, 3] using an imprecise algorithm to determine which readmissions resulted from low‐quality care during the index admission.[4, 5, 6]

There is growing concern, however, that readmission rates are not an accurate gauge of the quality of care patients receive while in the hospital or during the discharge process to prepare them for their transition home.[7, 8, 9, 10] This is especially true in pediatric settings, where overall readmission rates are much lower than in adult settings, many readmissions are expected as part of a patient's planned course of care, and variation in readmission rates between hospitals is correlated with the percentage of patients with certain complex chronic conditions.[1, 7, 11] Thus, there is increasing agreement that hospitals and external evaluators need to shift the focus from all‐cause readmissions to a reliable, consistent, and fair measure of potentially preventable readmissions.[12, 13] In addition to being a more useful quality metric, analyzing preventable readmissions will help hospitals focus resources on patients with potentially modifiable risk factors and develop meaningful quality‐improvement initiatives to improve inpatient care as well as the discharge process to prepare families for their transition to home.[14]

Although previous studies have attempted to distinguish preventable from nonpreventable readmissions, many reported significant challenges in completing reviews efficiently, achieving consistency in how readmissions were classified, and attaining consensus on final determinations.[12, 13, 14] Studies have also demonstrated that the algorithms some states are using to streamline preventability reviews and determine reimbursements overestimate the rate of potentially preventable readmissions.[4, 5, 6]

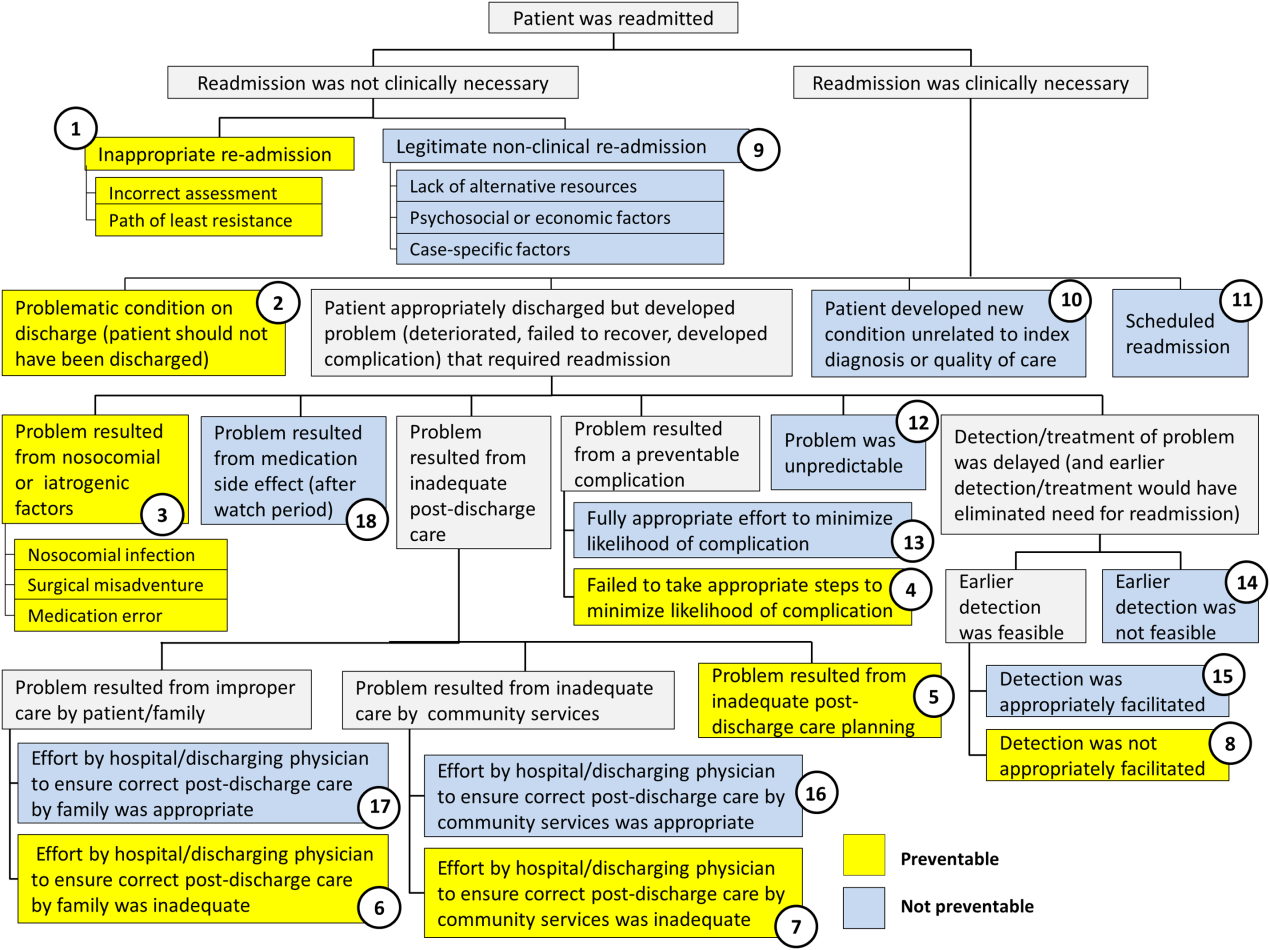

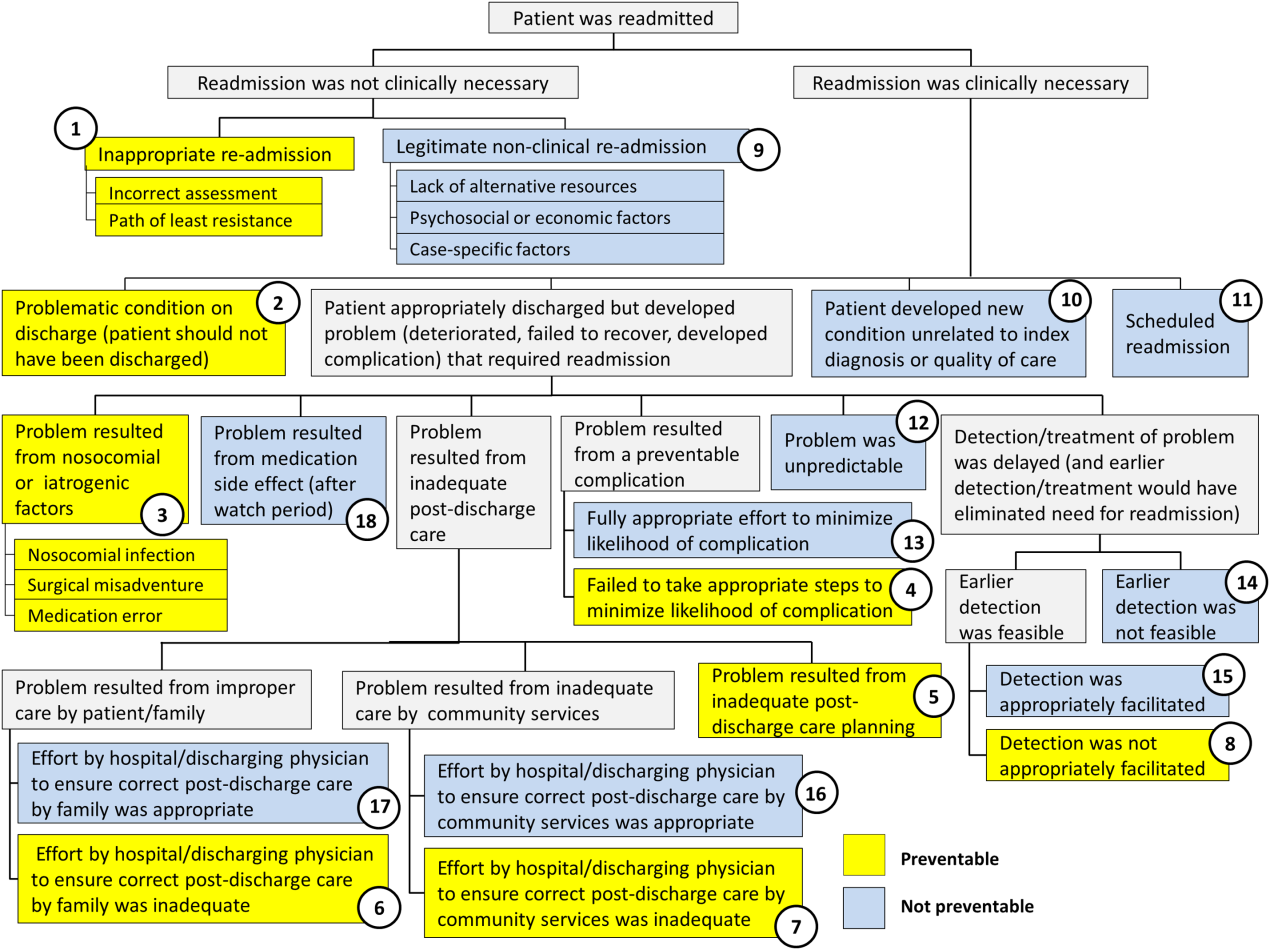

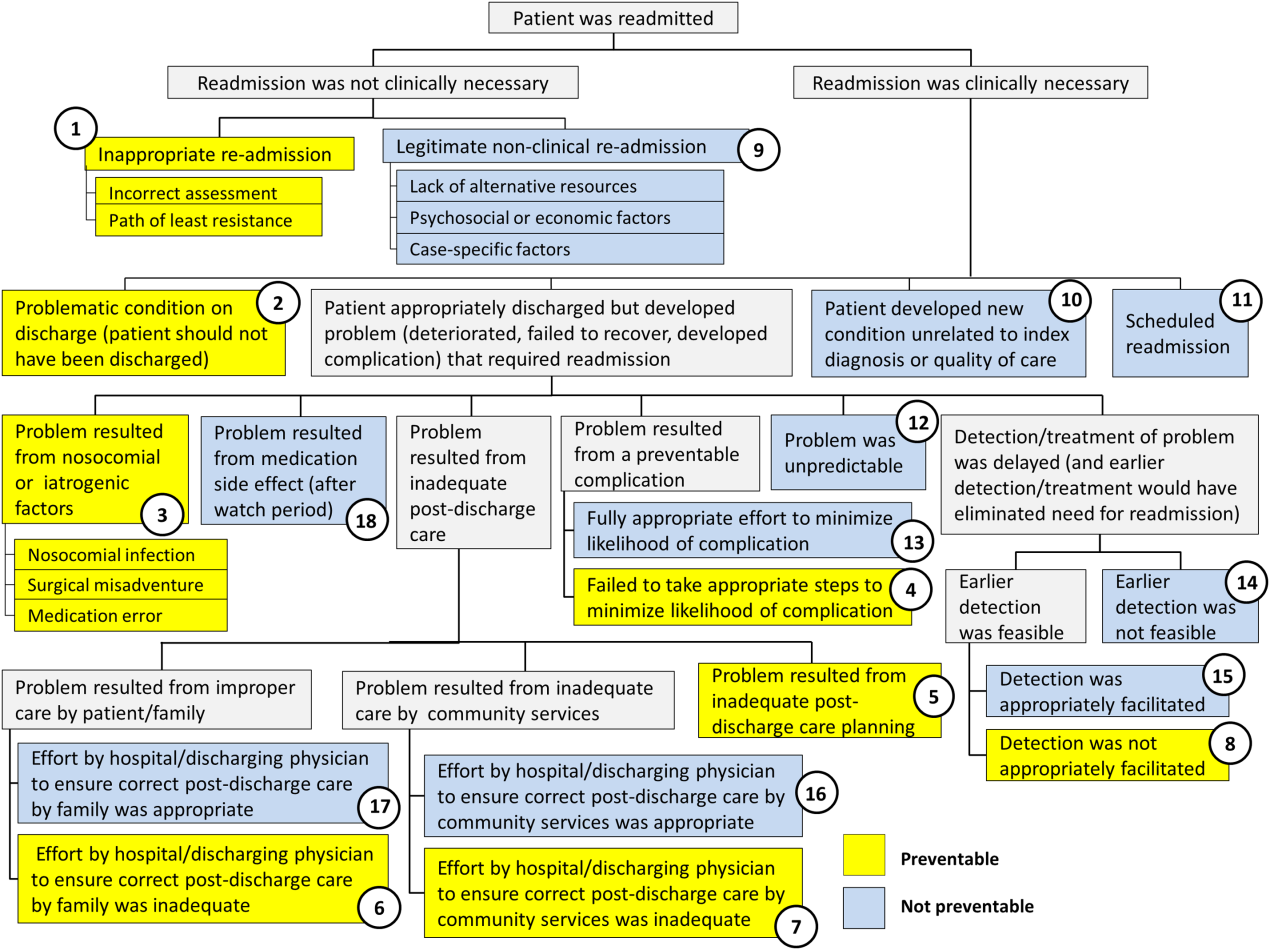

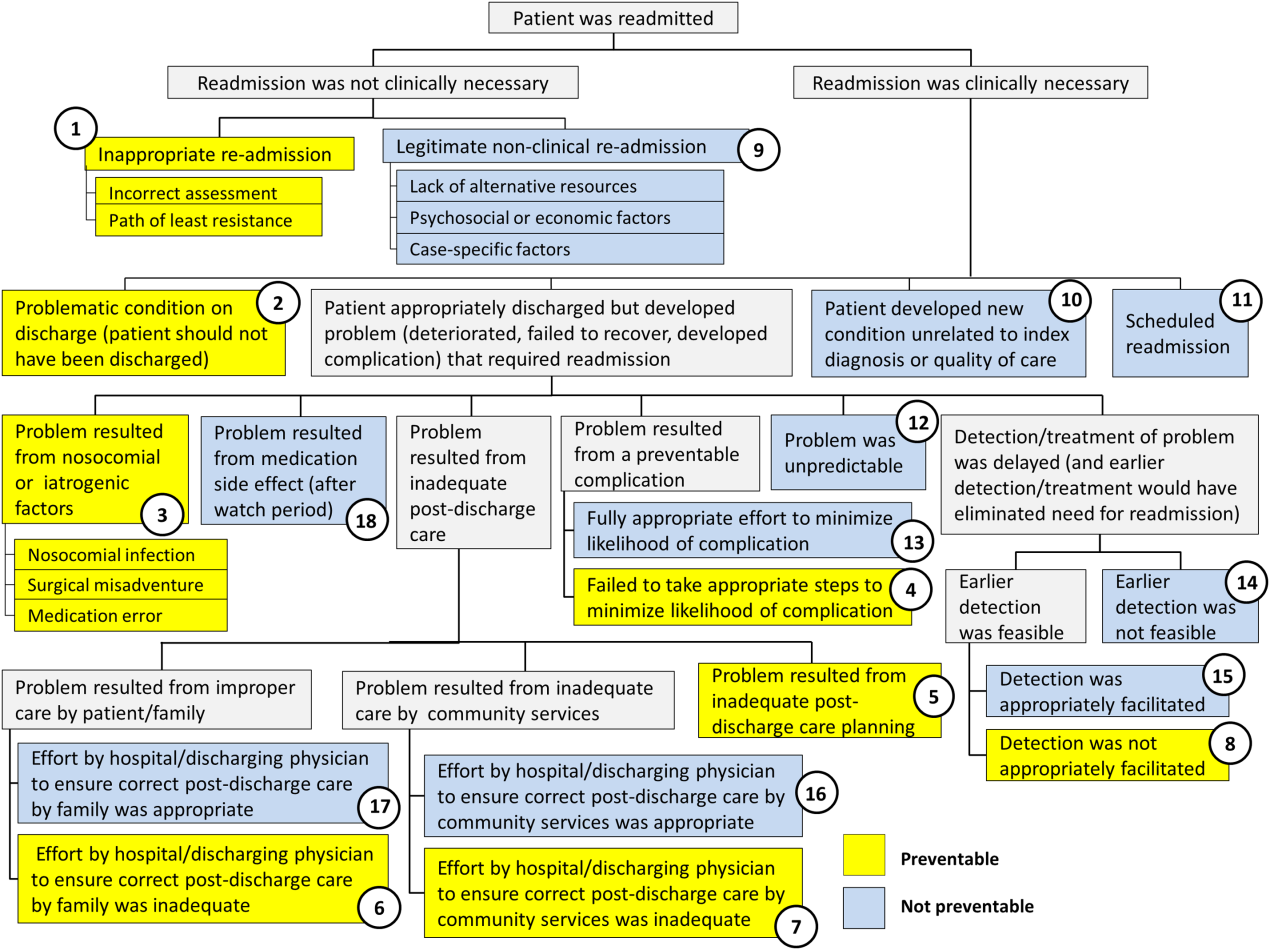

To increase the efficiency of preventability reviews and reduce the subjectivity involved in reaching final determinations, while still accounting for the nuances necessary to conduct a fair review, a quality‐improvement team from the Division of General Pediatrics at The Children's Hospital of Philadelphia (CHOP) implemented a fault tree analysis tool based on a framework developed by Howard Parker at Intermountain Primary Children's Hospital. The CHOP team coded this framework into a secure Web‐based data‐collection tool in the form of a decision tree to guide reviewers through a logical progression of questions that result in 1 of 18 root causes of readmissions, 8 of which are considered potentially preventable. We hypothesized that this method would help reviewers efficiently reach consensus on the root causes of hospital readmissions, and thus help the division and the hospital focus efforts on developing relevant quality‐improvement initiatives.

METHODS

Inclusion Criteria and Study Design

This study was conducted at CHOP, a 535‐bed urban, tertiary‐care, freestanding children's hospital with approximately 29,000 annual discharges. Of those discharges, 7000 to 8000 are from the general pediatrics service, meaning that the attending of record was a general pediatrician. Patients were included in the study if (1) they were discharged from the general pediatrics service between January 2014 and December 2014, and (2) they were readmitted to the hospital, for any reason, within 15 days of discharge. Because this analysis was done as part of a quality‐improvement initiative, it focuses on 15‐day, early readmissions to target cases with a higher probability of being potentially preventable from the perspective of the hospital care team.[10, 12, 13] Patients under observation status during the index admission or the readmission were included. However, patients who returned to the emergency department but were not admitted to an inpatient unit were excluded. Objective details about each case, including the patient's name, demographics, chart number, and diagnosis code, were pre‐loaded from EPIC (Epic Systems Corp., Verona, WI) into REDCap (Research Electronic Data Capture;

A panel of 10 general pediatricians divided up the cases to perform retrospective chart reviews. For each case, REDCap guided reviewers through the fault tree analysis. Reviewers met monthly to discuss difficult cases and reach consensus on any identified ambiguities in the process. After all cases were reviewed once, 3 panel members independently reviewed a random selection of cases to measure inter‐rater reliability and confirm reproducibility of final determinations. The inter‐rater reliability statistic was calculated using Stata 12.1 (StataCorp LP, College Station, TX). During chart reviews, panel members were not blinded to the identity of physicians and other staff members caring for the patients under review. CHOP's institutional review board determined this study to be exempt from ongoing review.

Fault Tree Analysis

Using the decision tree framework for analyzing readmissions that was developed at Intermountain Primary Children's Hospital, the REDCap tool prompted reviewers with a series of sequential questions, each with mutually exclusive options. Using embedded branching logic to select follow‐up questions, the tool guided reviewers to 1 of 18 terminal nodes, each representing a potential root cause of the readmission. Of those 18 potential causes, 8 were considered potentially preventable. A diagram of the fault tree framework, color coded to indicate which nodes were considered potentially preventable, is shown in Figure 1.
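As a rough illustration of how branching logic can route a reviewer to a terminal node, the following Python sketch encodes one small fragment of such a tree. The three terminal nodes (8, 14, and 15) and their preventability labels are taken from Table 1. The question wording, the class and function names, and the order of the questions are assumptions made for illustration; they do not reproduce the REDCap instrument or Figure 1.

```python
from dataclasses import dataclass
from typing import Iterable, Union


@dataclass(frozen=True)
class Terminal:
    """A terminal node of the fault tree, i.e. a root cause of readmission."""
    node: int
    cause: str

    @property
    def potentially_preventable(self) -> bool:
        # Table 1 labels terminal nodes 1-8 as potentially preventable
        # and nodes 9-18 as not preventable.
        return 1 <= self.node <= 8


@dataclass(frozen=True)
class Question:
    """A yes/no question; the prompt wording here is hypothetical."""
    prompt: str
    if_yes: "Node"
    if_no: "Node"


Node = Union[Terminal, Question]

# Hypothetical fragment for readmissions in which detection or treatment
# of the problem was delayed (terminal nodes 8, 14, and 15 in Table 1).
DELAYED_BRANCH = Question(
    prompt="Was earlier detection of the problem feasible?",
    if_yes=Question(
        prompt="Was detection appropriately facilitated?",
        if_yes=Terminal(15, "Delayed; earlier detection feasible; appropriately facilitated"),
        if_no=Terminal(8, "Delayed and not appropriately facilitated"),
    ),
    if_no=Terminal(14, "Delayed; earlier detection not feasible"),
)


def walk(node: Node, answers: Iterable[bool]) -> Terminal:
    """Follow yes/no answers from a question down to a terminal node."""
    remaining = iter(answers)
    while isinstance(node, Question):
        node = node.if_yes if next(remaining) else node.if_no
    return node


result = walk(DELAYED_BRANCH, [True, False])
print(result.node, result.potentially_preventable)
```

Because each question offers mutually exclusive options, every sequence of answers reaches exactly one terminal node, which is what allows different reviewers to arrive at comparable determinations.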

RESULTS

In 2014, 7252 patients were discharged from the general pediatrics service at CHOP. Of those patients, 248 were readmitted within 15 days for an overall general pediatrics 15‐day readmission rate of 3.4%.

Preventability Analysis

Of the 248 readmissions, 233 (94.0%) were considered not preventable. The most common cause for readmission, which accounted for 145 cases (58.5%), was a patient developing an unpredictable problem related to the index diagnosis or a natural progression of the disease that required readmission. The second most common cause, which accounted for 53 cases (21.4%), was a patient developing a new condition unrelated to the index diagnosis or a readmission unrelated to the quality of care received during the index stay. The third most frequent cause, which accounted for 11 cases (4.4%), was a legitimate nonclinical readmission due to lack of alternative resources, psychosocial or economic factors, or case‐specific factors. Other nonpreventable causes of readmission, including scheduled readmissions, each accounted for 7 or fewer cases and <3% of total readmissions.

The 15 readmissions considered potentially preventable accounted for 6.0% of total readmissions and 0.2% of total discharges from the general pediatrics service in 2014. The most common cause of preventable readmissions, which accounted for 6 cases, was premature discharge. The second most common cause, which accounted for 4 cases, was a problem resulting from nosocomial or iatrogenic factors. Other potentially preventable causes included delayed detection of problem (3 cases), inappropriate readmission (1 case), and inadequate postdischarge care planning (1 case).
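The headline percentages follow directly from the counts reported above. The short Python check below recomputes them; the variable names and the `pct` helper are illustrative only and are not part of the study's analysis.

```python
# Counts reported in the Results section.
discharges = 7252              # general pediatrics discharges in 2014
readmissions = 248             # readmitted within 15 days
potentially_preventable = 15   # readmissions judged potentially preventable
not_preventable = readmissions - potentially_preventable


def pct(part: int, whole: int, digits: int = 1) -> float:
    """Return part/whole as a percentage rounded to `digits` decimals."""
    return round(100 * part / whole, digits)


readmission_rate = pct(readmissions, discharges)                   # 3.4
preventable_share = pct(potentially_preventable, readmissions)     # 6.0
not_preventable_share = pct(not_preventable, readmissions)         # 94.0
preventable_per_discharge = pct(potentially_preventable, discharges)  # 0.2

print(readmission_rate, preventable_share,
      not_preventable_share, preventable_per_discharge)
```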

A breakdown of fault tree results, including examples of cases associated with each terminal node, is shown in Table 1. Information about general pediatrics patients and readmitted patients is included in Tables 2 and 3. A breakdown of determinations for each reviewer is included in Supporting Table 1 in the online version of this article.

| Fault Tree Terminal Node | Root Cause of Readmission | No. of Cases | % of Total Readmissions | % Within Preventability Category | % of Total Discharges |
|---|---|---|---|---|---|
| 2 (Potentially Preventable) | Problematic condition on discharge. Example:* Index admission: Infant with history of prematurity admitted with RSV and rhinovirus bronchiolitis. Had some waxing and waning symptoms. Just prior to discharge, noted to have increased work of breathing related to feeds. Readmission: 12 hours later with tachypnea, retractions, and hypoxia. | 6 | 2.4% | 40.0% | 0.08% |
| 3 (Potentially Preventable) | Nosocomial/iatrogenic factors. Example:* Index admission: Toddler admitted with fever and neutropenia. Treated with antibiotics 24 hours. Diagnosed with viral illness and discharged home. Readmission: Symptomatic Clostridium difficile infection. | 4 | 1.6% | 26.7% | 0.06% |
| 8 (Potentially Preventable) | Detection/treatment of problem was delayed and not appropriately facilitated. Example:* Index admission: Preteen admitted with abdominal pain, concern for appendicitis. Ultrasound and abdominal MRI negative for appendicitis. Symptoms improved. Tolerated PO. Readmission: 3 days later with similar abdominal pain. Diagnosed with constipation with significant improvement following clean‐out. | 3 | 1.2% | 20.0% | 0.04% |
| 1 (Potentially Preventable) | Inappropriate readmission. Example:* Index admission: Infant with laryngomalacia admitted with bronchiolitis. Readmission: Continued mild bronchiolitis symptoms but did not require oxygen or suctioning, normal CXR. | 1 | 0.4% | 6.7% | 0.01% |
| 5 (Potentially Preventable) | Resulted from inadequate postdischarge care planning. Example:* Index diagnosis: Infant with vomiting, prior admissions, and extensive evaluation, diagnosed with milk protein allergy and GERD. PPI increased. Readmission: Persistent symptoms, required NGT feeds supplementation. | 1 | 0.4% | 6.7% | 0.01% |
| 4 (Potentially Preventable) | Resulted from a preventable complication and hospital/physician did not take the appropriate steps to minimize likelihood of complication. | | | | |
| 6 (Potentially Preventable) | Resulted from improper care by patient/family and effort by hospital/physician to ensure correct postdischarge care was inadequate. | | | | |
| 7 (Potentially Preventable) | Resulted from inadequate care by community services and effort by hospital/physician to ensure correct postdischarge care was inadequate. | | | | |
| Total (Potentially Preventable) | | 15 | 6.0% | 100% | 0.2% |
| 12 (Not Preventable) | Problem was unpredictable. Example:* Index admission: Infant admitted with gastroenteritis and dehydration with an anion gap metabolic acidosis. Vomiting and diarrhea improved, rehydrated, acidosis improved. Readmission: 1 day later, presented with emesis and fussiness. Readmitted for metabolic acidosis. | 145 | 58.5% | 62.2% | 2.00% |
| 10 (Not Preventable) | Patient developed new condition unrelated to index diagnosis or quality of care. Example:* Index admission: Toddler admitted with cellulitis. Readmission: Bronchiolitis (did not meet CDC guidelines for nosocomial infection). | 53 | 21.4% | 22.7% | 0.73% |
| 9 (Not Preventable) | Legitimate nonclinical readmission. Example:* Index admission: Infant admitted with second episode of bronchiolitis. Readmission: 4 days later with mild diarrhea. Tolerated PO challenge in emergency department. Admitted due to parental anxiety. | 11 | 4.4% | 4.7% | 0.15% |
| 17 (Not Preventable) | Problem resulted from improper care by patient/family but effort by hospital/physician to ensure correct postdischarge care was appropriate. Example:* Index admission: Infant admitted with diarrhea, diagnosed with milk protein allergy. Discharged on soy formula. Readmission: Developed vomiting and diarrhea with cow milk formula. | 7 | 2.8% | 3.0% | 0.10% |
| 11 (Not Preventable) | Scheduled readmission. Example:* Index admission: Infant with conjunctivitis and preseptal cellulitis with nasolacrimal duct obstruction. Readmission: Postoperatively following scheduled nasolacrimal duct repair. | 7 | 2.8% | 3.0% | 0.10% |
| 14 (Not Preventable) | Detection/treatment of problem was delayed, but earlier detection was not feasible. Example:* Index admission: Preteen admitted with fever, abdominal pain, and elevated inflammatory markers. Fever resolved and symptoms improved. Diagnosed with unspecified viral infection. Readmission: 4 days later with lower extremity pyomyositis and possible osteomyelitis. | 4 | 1.6% | 1.7% | 0.06% |
| 15 (Not Preventable) | Detection/treatment of problem was delayed, earlier detection was feasible, but detection was appropriately facilitated. Example:* Index admission: Infant with history of laryngomalacia and GER admitted with an ALTE. No events during hospitalization. Appropriate workup and cleared by consultants for discharge. Zantac increased. Readmission: Infant had similar ALTE events within a week after discharge. Ultimately underwent supraglottoplasty. | 2 | 0.8% | 0.9% | 0.03% |
| 13 (Not Preventable) | Resulted from preventable complication but efforts to minimize likelihood were appropriate. Example:* Index admission: Patient on GJ feeds admitted for dislodged GJ. Extensive conversations between primary team and multiple consulting services regarding best type of tube. Determined that no other tube options were appropriate. Temporizing measures were initiated. Readmission: GJ tube dislodged again. | 2 | 0.8% | 0.9% | 0.03% |
| 18 (Not Preventable) | Resulted from medication side effect (after watch period). Example:* Index admission: Preteen with MSSA bacteremia spread to other organs. Sent home on appropriate IV antibiotics. Readmission: Fever, rash, increased LFTs. Blood cultures negative. Presumed drug reaction. Fevers resolved with alternate medication. | 2 | 0.8% | 0.9% | 0.03% |
| 16 (Not Preventable) | Resulted from inadequate care by community services, but effort by hospital/physician to ensure correct postdischarge care was appropriate. | | | | |
| Total (Not Preventable) | | 233 | 94.0% | 100% | 3.2% |

| Fault Tree Terminal Node | Root Cause of Potentially Preventable Readmission with Case Descriptions* |
|---|---|
| 2 (Potentially Preventable) | Problematic condition on discharge |
| | Case 1: Index admission: Infant with history of prematurity admitted with RSV and rhinovirus bronchiolitis. Had some waxing and waning symptoms. Just prior to discharge, noted to have increased work of breathing related to feeds. Readmission: 12 hours later with tachypnea, retractions, and hypoxia. |
| | Case 2: Index admission: Toddler admitted with febrile seizure in setting of gastroenteritis. Poor PO intake during hospitalization. Readmission: 1 day later with dehydration. |
| | Case 3: Index admission: Infant admitted with a prolonged complex febrile seizure. Workup included an unremarkable lumbar puncture. No additional seizures. No inpatient imaging obtained. Readmission: Abnormal outpatient MRI requiring intervention. |
| | Case 4: Index admission: Teenager with wheezing and history of chronic daily symptoms. Discharged <24 hours later on albuterol every 4 hours and prednisone. Readmission: 1 day later, seen by primary care physician with persistent asthma flare. |
| | Case 5: Index admission: Ex‐full‐term infant admitted with bronchiolitis, early in course. At time of discharge, had been off oxygen for 24 hours, but last recorded respiratory rate was >70. Readmission: 1 day later due to continued tachypnea and increased work of breathing. No hypoxia. CXR normal. |
| | Case 6: Index admission: Ex‐full‐term infant admitted with bilious emesis, diarrhea, and dehydration. Ultrasound of pylorus, UGI, and BMP all normal. Tolerated oral intake but had emesis and loose stools prior to discharge. Readmission: <48 hours later with severe metabolic acidosis. |
| 3 (Potentially Preventable) | Nosocomial/iatrogenic factors |
| | Case 1: Index admission: Toddler admitted with fever and neutropenia. Treated with antibiotics 24 hours. Diagnosed with viral illness and discharged home. Readmission: Symptomatic Clostridium difficile infection. |
| | Case 2: Index admission: Patient with autism admitted with viral gastroenteritis. Readmission: Presumed nosocomial upper respiratory infection. |
| | Case 3: Index admission: Infant admitted with bronchiolitis. Recovered from initial infection. Readmission: New upper respiratory infection and presumed nosocomial infection. |
| | Case 4: Index admission: <28‐day‐old full‐term neonate presenting with neonatal fever and rash. Full septic workup performed and all cultures negative at 24 hours. Readmission: CSF culture positive at 36 hours and readmitted while awaiting speciation. Discharged once culture grew out a contaminant. |
| 8 (Potentially Preventable) | Detection/treatment of problem was delayed and/or not appropriately facilitated |
| | Case 1: Index admission: Preteen admitted with abdominal pain, concern for appendicitis. Ultrasound and MRI abdomen negative for appendicitis. Symptoms improved. Tolerated PO. Readmission: 3 days later with similar abdominal pain. Diagnosed with constipation with significant improvement following clean‐out. |
| | Case 2: Index admission: Infant with history of macrocephaly presented with fever and full fontanelle. Head CT showed mild prominence of the extra‐axial space, and lumbar puncture was normal. Readmission: Patient developed torticollis. MRI demonstrated a malignant lesion. |
| | Case 3: Index admission: School‐age child with RLQ abdominal pain, fever, leukocytosis, and indeterminate RLQ abdominal ultrasound. Twelve‐hour observation with no further fevers. Pain and appetite improved. Readmission: 1 day later with fever, anorexia, and abdominal pain. RLQ ultrasound unchanged. Appendectomy performed with inflamed appendix. |
| 1 (Potentially Preventable) | Inappropriate readmission |
| | Case 1: Index admission: Infant with laryngomalacia admitted with bronchiolitis. Readmission: Continued mild bronchiolitis symptoms but did not require oxygen or suctioning. Normal CXR. |
| 5 (Potentially Preventable) | Resulted from inadequate postdischarge care planning |
| | Case 1: Index diagnosis: Infant with vomiting, prior admissions, and extensive evaluation, diagnosed with milk protein allergy and GERD. PPI increased. Readmission: Persistent symptoms, required NGT feeds supplementation. |

| All General Pediatrics Patients in 2014 | | | General Pediatrics Readmitted Patients in 2014 | | |

|---|---|---|---|---|---|

| Major Diagnosis Category at Index Admission | No. | % | Major Diagnosis Category at Index Admission | No. | % |

| Respiratory | 2,723 | 37.5% | Respiratory | 79 | 31.9% |

| Digestive | 748 | 10.3% | Digestive | 41 | 16.5% |

| Ear, nose, mouth, throat | 675 | 9.3% | Ear, nose, mouth, throat | 24 | 9.7% |

| Skin, subcutaneous tissue | 480 | 6.6% | Musculoskeletal and connective tissue | 14 | 5.6% |

| Infectious, parasitic, systemic | 455 | 6.3% | Nervous | 13 | 5.2% |

| Factors influencing health status | 359 | 5.0% | Endocrine, nutritional, metabolic | 13 | 5.2% |

| Endocrine, nutritional, metabolic | 339 | 4.7% | Infectious, parasitic, systemic | 12 | 4.8% |

| Nervous | 239 | 3.3% | Newborn, neonate, perinatal period | 11 | 4.4% |