
Methodologic Progress Note: A Clinician’s Guide to Logistic Regression

The ability to read and correctly interpret research is an essential skill, but most hospitalists, and physicians in general, do not receive formal training in biostatistics during their medical education.1-3 In addition to straightforward statistical tests that compare a single exposure and outcome, researchers commonly use statistical models to identify and quantify complex relationships between many exposures (eg, demographics, clinical characteristics, interventions, or other variables) and an outcome. Understanding statistical models can be challenging. Still, it is important to recognize the advantages and limitations of statistical models, how to interpret their results, and the potential implications of their findings for current clinical practice.

In the article “Rates and Characteristics of Medical Malpractice Claims Against Hospitalists” published in the July 2021 issue of the Journal of Hospital Medicine, Schaffer et al4 used the Comparative Benchmarking System database, which is maintained by a malpractice insurer, to characterize malpractice claims against hospitalists. The authors used multivariable logistic regression models to understand the relationships between clinical factors and indemnity payments. In this Progress Note, we describe situations in which logistic regression is the proper statistical method to analyze a data set, explain results from logistic regression analyses, and equip readers with skills to critically appraise conclusions drawn from these models.

Choosing an Appropriate Statistical Model

Statistical models often are used to describe the relationship between one or more exposure variables (ie, independent variables) and an outcome (ie, dependent variable). These models allow researchers to evaluate the effects of multiple exposure variables simultaneously, which in turn allows them to “isolate” the effect of each variable; in other words, models facilitate an understanding of the relationship between each exposure variable and the outcome, adjusted for (ie, independent of) the other exposure variables in the model.

Several statistical models can be used to quantify relationships within the data, but each type of model has certain assumptions that must be satisfied. Two important assumptions concern the characteristics of the outcome (eg, its type and distribution) and the nature of the relationships between the outcome and the independent variables (eg, linear vs nonlinear). Simple linear regression, one of the most basic statistical models used in research,5 assumes that (a) the outcome is continuous (ie, any numeric value is possible) and its deviations from the fitted line, called residuals, are normally distributed (ie, their histogram is a bell-shaped curve) and (b) the relationship between the independent variable and the outcome is linear (ie, follows a straight line). If an investigator wanted to understand how weight is related to height, for example, a simple linear regression could be used to develop a mathematical equation that describes how the outcome (weight) generally increases as the independent variable (height) increases.

Often, the outcome in a study is not a continuous variable but a simple success/failure variable (ie, a dichotomous variable that can take only one of two values). Schaffer et al4 examined the binary outcome of whether a malpractice claim ended in an indemnity payment or no payment. Linear regression models are not designed for dichotomous outcomes. Instead, we need a different statistical model: logistic regression, which uses the independent variables to estimate the probability (p) that a defined outcome event occurs.

The Logistic Model

A probability (p) is a measure of how likely an event (eg, that a malpractice claim ends in an indemnity payment) is to occur. It always lies between 0 (ie, the event will definitely not occur) and 1 (ie, the event will definitely occur). A p of 0.5 means there is a 50/50 chance that the event will occur (ie, equivalent to a coin flip). Because p is a probability, any model for it must keep it between 0 and 1. If we were to model p with a linear regression, however, the fitted line could produce predicted values of p below 0 or above 1. What can we do?
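To make the problem concrete, here is a minimal sketch that fits an ordinary least-squares line to a handful of hypothetical 0/1 outcomes. The data values and the names `fit_ols` and `predict` are illustrative assumptions, not taken from any study. At the edges of the x range, the fitted line returns values below 0 and above 1, which cannot be probabilities.

```python
# Hypothetical toy data (illustration only): x is an exposure, y is a 0/1 outcome.
xs = [0, 1, 2, 3, 4, 5]
ys = [0, 0, 0, 1, 1, 1]


def fit_ols(xs, ys):
    """Return (intercept, slope) of the ordinary least-squares line through the points."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


b0, b1 = fit_ols(xs, ys)


def predict(x):
    """Straight-line 'probability' predicted by the linear model."""
    return b0 + b1 * x


for x in (0, 2.5, 8):
    print(f"x = {x}: predicted value = {predict(x):.3f}")
# x = 0: predicted value = -0.143
# x = 2.5: predicted value = 0.500
# x = 8: predicted value = 1.914
```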

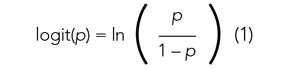

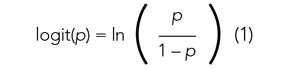

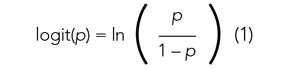

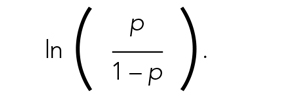

Applying a transformation is a commonly used tool in statistics to make data work better within statistical models.6 In this case, we transform the variable p. In logistic regression, we model the probability of experiencing the outcome through a transformation called the logit. The logit is the natural logarithm (ln) of the ratio of the probability of experiencing the outcome (p) to the probability of not experiencing it (1 – p); this ratio is the odds of the event occurring.

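This transformation works well for dichotomous outcomes because p is confined to values between 0 and 1, whereas the logit can take any value from negative to positive infinity; any value produced by a straight-line model on the logit scale therefore corresponds to a valid probability. The logit is also nearly a straight-line function of p in the middle of the probability range and bends sharply only as p approaches 0 or 1.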
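A minimal Python sketch of the logit and its inverse follows. The function names `logit` and `inv_logit` are our own and are not taken from a particular statistics package. The sketch shows that probabilities between 0 and 1 spread out over the whole number line on the logit scale, and that any logit value maps back to a valid probability.

```python
import math


def logit(p):
    """ln[p / (1 - p)]: the log odds; defined only for 0 < p < 1."""
    if not 0 < p < 1:
        raise ValueError("p must be strictly between 0 and 1")
    return math.log(p / (1 - p))


def inv_logit(z):
    """Map any real number back to a probability in (0, 1).

    Written in two branches so math.exp never receives a large positive argument.
    """
    if z >= 0:
        return 1 / (1 + math.exp(-z))
    e = math.exp(z)
    return e / (1 + e)


for p in (0.05, 0.2, 0.5, 0.8, 0.95):
    print(f"p = {p:.2f} -> logit = {logit(p):+.3f}")

# However extreme a value on the logit scale, it maps back to a valid probability.
for z in (-20, -2, 0, 2, 20):
    print(f"logit = {z:+d} -> p = {inv_logit(z):.6f}")
```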

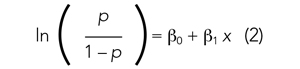

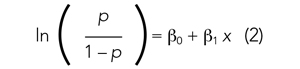

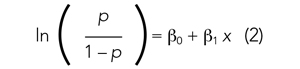

If we are performing a logistic regression with only one independent variable (x) and want to understand the relationship between this variable and the probability of an outcome event (p), then our model, on the logit scale, is the equation of a line. The equation for the base model of logistic regression with one independent variable (x) is

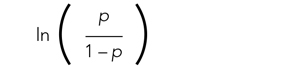

where β0 is the y-intercept and β1 is the slope of the line. Equation (2) has the same form as the algebraic equation for a line, y = mx + b, just rearranged slightly: m is the slope (the same as β1), b is the y-intercept (the same as β0), and y is the logit, ln[p/(1 – p)]. We will see that β0 and β1 are estimated (ie, assigned numeric values) from the collected data to help us understand how x and ln[p/(1 – p)] are related, and that they are the basis for estimating odds ratios.
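As an illustration of equation (2), the sketch below assumes hypothetical coefficients (β0 = -2.0 and β1 = 0.5; these are not estimates from Schaffer et al4) and converts the straight-line log odds back into probabilities. Equal steps in x give equal steps in the log odds but unequal steps in p, which is why the model is linear only on the logit scale.

```python
import math

# Hypothetical coefficients for illustration only (not from Schaffer et al).
BETA0 = -2.0  # intercept: log odds of the outcome when x = 0
BETA1 = 0.5   # slope: change in log odds per 1-unit increase in x


def log_odds(x):
    """Right side of equation (2): beta0 + beta1 * x."""
    return BETA0 + BETA1 * x


def probability(x):
    """Solve ln[p / (1 - p)] = beta0 + beta1 * x for p."""
    return 1 / (1 + math.exp(-log_odds(x)))


for x in range(0, 9, 2):
    print(f"x = {x}: log odds = {log_odds(x):+.1f}, p = {probability(x):.3f}")
# x = 0: log odds = -2.0, p = 0.119
# x = 2: log odds = -1.0, p = 0.269
# x = 4: log odds = +0.0, p = 0.500
# x = 6: log odds = +1.0, p = 0.731
# x = 8: log odds = +2.0, p = 0.881
```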

We can build more complex models using multivariable logistic regression by adding more independent variables to the right side of equation (2). Essentially, this is what Schaffer et al4 did when they modeled indemnity payments as a function of several clinical factors.

There are two notable techniques used frequently with multivariable logistic regression models. The first involves choosing which independent variables to include in the model. One way to select variables for multivariable models is to define them a priori, that is, to decide which variables are clinically or conceptually associated with the outcome before looking at the data. With this approach, we can test specific hypotheses about the relationships between the independent variables and the outcome. Another common approach is to look at the data and identify the variables that differ significantly between the two outcome groups. Schaffer et al4 used an a priori approach to define variables in their multivariable model (ie, “variables for inclusion into the multivariable model were determined a priori”).

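A second technique is the evaluation of collinearity, which helps us understand whether the independent variables are highly correlated with one another. When they are, the model has difficulty separating their individual effects on the outcome.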
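One simple way to screen two candidate independent variables for collinearity is their Pearson correlation (r), which for a model with exactly two predictors also gives the variance inflation factor, VIF = 1/(1 - r²). The sketch below is a minimal illustration under that two-predictor assumption; the variable names and values are hypothetical. With more predictors, each variable's VIF is computed from the R² of regressing it on all the others, and cutoffs such as 5 or 10 are rules of thumb rather than fixed standards.

```python
import math


def pearson_r(a, b):
    """Pearson correlation coefficient between two equal-length lists."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return cov / math.sqrt(var_a * var_b)


def vif_two_predictors(a, b):
    """Variance inflation factor when a model has exactly two predictors."""
    r = pearson_r(a, b)
    return 1 / (1 - r ** 2)


# Hypothetical predictors that carry nearly the same information.
physician_age = [35, 42, 50, 58, 63]
years_in_practice = [5, 12, 19, 28, 33]

r = pearson_r(physician_age, years_in_practice)
print(f"r = {r:.3f}, VIF = {vif_two_predictors(physician_age, years_in_practice):.0f}")
```

A VIF near 1 means the two predictors are essentially unrelated; a very large VIF, as in this contrived example, signals that the model will struggle to separate their effects.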

Understanding the Results of the Logistic Model

Fitting the model is the process by which statistical software (eg, SAS, Stata, R, SPSS) estimates the relationships between the independent variables in the model and the outcome within a specific dataset. For equation (2), this means that the software evaluates the data and provides the best estimates for β0 (the y-intercept) and β1 (the slope) that describe the relationship between the variable x and ln[p/(1 – p)].
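Standard logistic regression software typically reports maximum likelihood estimates, ie, the values of β0 and β1 that make the observed outcomes most probable. The sketch below finds them with Newton-Raphson iteration for a single predictor. It is a teaching sketch on hypothetical data (`xs`, `ys`, and `fit_logistic` are our own names); real packages add safeguards and may use different algorithms and convergence rules. At the solution, the two score equations, the sum of (y - p) and the sum of (y - p)·x, equal zero.

```python
import math

# Hypothetical toy data: one predictor x and a 0/1 outcome y (not separable by x).
xs = [0, 1, 2, 3, 4, 5, 6, 7]
ys = [0, 0, 1, 0, 1, 0, 1, 1]


def fit_logistic(xs, ys, max_iter=25, tol=1e-10):
    """Maximum likelihood (beta0, beta1) via Newton-Raphson for one predictor."""
    b0, b1 = 0.0, 0.0
    for _ in range(max_iter):
        # Current predicted probabilities from equation (2).
        ps = [1 / (1 + math.exp(-(b0 + b1 * x))) for x in xs]
        # Score: gradient of the log-likelihood with respect to (b0, b1).
        g0 = sum(y - p for y, p in zip(ys, ps))
        g1 = sum((y - p) * x for x, y, p in zip(xs, ys, ps))
        # Information matrix entries, weighted by p * (1 - p).
        ws = [p * (1 - p) for p in ps]
        i00 = sum(ws)
        i01 = sum(w * x for w, x in zip(ws, xs))
        i11 = sum(w * x * x for w, x in zip(ws, xs))
        det = i00 * i11 - i01 ** 2
        # Newton-Raphson step: (information matrix)^-1 times the score.
        step0 = (i11 * g0 - i01 * g1) / det
        step1 = (i00 * g1 - i01 * g0) / det
        b0, b1 = b0 + step0, b1 + step1
        if abs(step0) < tol and abs(step1) < tol:
            break
    return b0, b1


b0_hat, b1_hat = fit_logistic(xs, ys)
print(f"beta0 = {b0_hat:.3f}, beta1 = {b1_hat:.3f}, OR per 1-unit x = {math.exp(b1_hat):.3f}")
```

If x perfectly separated the two outcome groups, no finite maximum would exist and the estimates would drift without bound; statistical packages usually warn about this situation.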

Modeling can be iterative, and part of the process may include removing variables that are not significantly associated with the outcome to create a simpler model, a process known as model reduction. The results from models describe the independent association between a specific characteristic and the outcome, meaning that the relationship has been adjusted for all the other characteristics in the model.

The relationships between the independent variables and the outcome are most often represented as an odds ratio (OR), which quantifies the strength of the association between two variables and is calculated directly from the β values in the model (for a given variable, OR = e^β). As the name suggests, an OR is a ratio of odds. But what are odds? Simply, the odds of an outcome (such as mortality) are the probability of experiencing the event divided by the probability of not experiencing that event; in other words, they are the ratio:

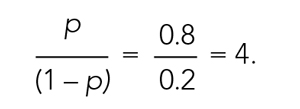

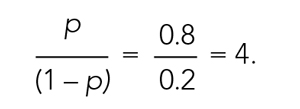

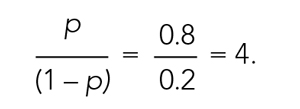

The concept of odds is often unfamiliar, so it can be helpful to consider the definition in the context of games of chance. For example, in horse race betting, suppose the outcome of interest is that a horse will lose a race. Imagine that the probability of the horse losing is 0.8 and the probability of winning is 0.2. The odds of losing are 0.8/0.2 = 4.

These odds usually are written as 4-to-1, meaning that out of 5 races (ie, 4 + 1) the horse is expected to lose 4 times and win once. When odds are written this way, the associated probability is easy to recover, because the total number of expected races is the sum of the two numbers: the probability of losing is 4 races out of 5, or 0.80, and the probability of winning is 1 race out of 5, or 0.20.
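The conversions in the horse race example can be checked exactly with Python's standard `fractions` module, which avoids floating-point rounding. The helper function names below are our own.

```python
from fractions import Fraction


def odds_from_probability(p):
    """Odds = p / (1 - p)."""
    return p / (1 - p)


def probability_from_odds(odds):
    """Invert the definition: p = odds / (1 + odds)."""
    return odds / (1 + odds)


def probability_from_a_to_b(a, b):
    """'a-to-b' odds describe a + b trials in which the event happens a times."""
    return Fraction(a, a + b)


p_lose = Fraction(4, 5)  # 0.8, from the horse race example
odds_lose = odds_from_probability(p_lose)
print(odds_lose)                          # 4
print(probability_from_odds(odds_lose))   # 4/5
print(probability_from_a_to_b(4, 1))      # 4/5
print(odds_from_probability(1 - p_lose))  # 1/4 (odds of winning)
```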

In medical research, the OR typically compares the odds of experiencing an outcome in one group of patients (A) with the odds in another group (B). If the odds of the outcome are the same for group A and group B, then OR = 1.0, meaning that the probability of the outcome is also the same in the two groups. If patients in group A have greater odds of experiencing the outcome than group B patients (and therefore a greater probability of the outcome), then the OR will be >1. If the opposite is true, then the OR will be <1.
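A minimal sketch computing an OR for two hypothetical groups, both from their probabilities and from the counts of a 2 × 2 table; the counts are invented for illustration. The OR here (27/7, about 3.86) is larger than the ratio of the two probabilities (0.30/0.10 = 3.0), so an OR should not be read as a ratio of probabilities.

```python
from fractions import Fraction


def odds(p):
    """Odds of the outcome: p / (1 - p)."""
    return p / (1 - p)


def odds_ratio(p_a, p_b):
    """Odds of the outcome in group A divided by the odds in reference group B."""
    return odds(p_a) / odds(p_b)


def odds_ratio_from_counts(events_a, nonevents_a, events_b, nonevents_b):
    """Same quantity from a 2 x 2 table: (a * d) / (b * c)."""
    return Fraction(events_a * nonevents_b, nonevents_a * events_b)


# Hypothetical groups: 30 of 100 patients in A and 10 of 100 in B have the outcome.
or_probs = odds_ratio(Fraction(30, 100), Fraction(10, 100))
or_counts = odds_ratio_from_counts(30, 70, 10, 90)
print(or_probs, float(or_probs))  # prints 27/7 and its decimal value (about 3.857)
print(or_counts == or_probs)      # True
print(odds_ratio(Fraction(1, 10), Fraction(3, 10)))  # 7/27: OR < 1 when the roles swap
```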

Schaffer et al4 estimated that the OR of an indemnity payment in malpractice cases involving errors in clinical judgment as a contributing factor was 5.01 (95% CI, 3.37-7.45). This means that malpractice cases involving errors in clinical judgment had 5.01 times the odds of an indemnity payment compared with cases without these errors, after adjusting for all other variables in the model (eg, age, severity). Note that the 95% CI does not include 1.0. This indicates that the OR is statistically significantly >1, and we can conclude that there is a significant association between errors in clinical judgment and payment that is unlikely to be attributable to chance alone.
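Because OR = e^β, the CI for an OR is usually computed on the log scale as β ± 1.96 × SE and then exponentiated. Assuming that Schaffer et al4 used this common Wald-type interval (the method is not stated here), the reported numbers can be checked: the log of 5.01 sits at the midpoint of the logged limits, and an approximate SE can be back-calculated. A CI that excludes 1.0 on the OR scale is the same as one that excludes 0 on the log scale. Rounding in the published values makes the reconstruction approximate.

```python
import math

# Values reported by Schaffer et al for errors in clinical judgment.
OR_REPORTED = 5.01
CI_LOWER, CI_UPPER = 3.37, 7.45
Z_95 = 1.96  # standard normal quantile for a two-sided 95% interval

# Assumption: a Wald-type interval, exp(beta +/- 1.96 * SE).
beta = math.log(OR_REPORTED)
log_midpoint = (math.log(CI_LOWER) + math.log(CI_UPPER)) / 2
se_approx = (math.log(CI_UPPER) - math.log(CI_LOWER)) / (2 * Z_95)

print(f"beta = ln(OR) = {beta:.3f}; midpoint of log CI = {log_midpoint:.3f}")
print(f"approximate SE of beta = {se_approx:.3f}")

# Rebuild the interval from beta and the back-calculated SE.
lower = math.exp(beta - Z_95 * se_approx)
upper = math.exp(beta + Z_95 * se_approx)
print(f"reconstructed 95% CI: {lower:.2f}-{upper:.2f}")

# Excluding 1.0 on the OR scale is the same as excluding 0 on the log scale.
print("CI excludes 1.0:", CI_LOWER > 1.0, "| log CI excludes 0:", math.log(CI_LOWER) > 0)
```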

In logistic regression, each category of a categorical independent variable is compared with a reference category of that variable, and the reference category serves as the denominator of the OR. The authors4 did not include continuous independent variables in their multivariable logistic regression model. However, if they had examined length of hospitalization as a contributing factor in indemnity payments, for example, the OR would represent the factor by which the odds of payment change with each 1-unit increase in this variable (eg, a 1-day increase in length of stay).
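For a continuous variable, the OR from the model applies per 1-unit increase and is the same at every starting value; the OR for a k-unit increase is e^(kβ), ie, the 1-unit OR raised to the kth power. The sketch assumes hypothetical coefficients for length of stay (β0 = -1.5 and β = 0.05 per day), which are not from Schaffer et al4.

```python
import math

# Hypothetical coefficients for illustration only (not from Schaffer et al).
BETA0 = -1.5     # intercept on the log-odds scale
BETA_LOS = 0.05  # change in log odds of payment per additional hospital day


def odds_of_payment(los_days):
    """Model-implied odds: exp(beta0 + beta_los * length of stay)."""
    return math.exp(BETA0 + BETA_LOS * los_days)


def odds_ratio_for_increase(extra_days):
    """OR comparing length of stay (x + extra_days) with x; does not depend on x."""
    return math.exp(BETA_LOS * extra_days)


# The 1-day OR is the same whether we start at 3 days or 10 days.
print(odds_of_payment(4) / odds_of_payment(3))
print(odds_of_payment(11) / odds_of_payment(10))
print(odds_ratio_for_increase(1))  # exp(0.05), about 1.051
print(odds_ratio_for_increase(7))  # exp(0.35), about 1.419, the 1-day OR to the 7th power
```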

Conclusion

Logistic regression describes the relationships in data and is an important statistical model across many types of research. This Progress Note emphasizes the importance of weighing the advantages and limitations of logistic regression, provides a common approach to data transformation, and guides the correct interpretation of logistic regression model results.

1. Windish DM, Huot SJ, Green ML. Medicine residents’ understanding of the biostatistics and results in the medical literature. JAMA. 2007;298(9):1010. https://doi.org/10.1001/jama.298.9.1010

2. MacDougall M, Cameron HS, Maxwell SRJ. Medical graduate views on statistical learning needs for clinical practice: a comprehensive survey. BMC Med Educ. 2019;20(1):1. https://doi.org/10.1186/s12909-019-1842-1

3. Montori VM. Progress in evidence-based medicine. JAMA. 2008;300(15):1814-1816. https://doi.org/10.1001/jama.300.15.1814

4. Schaffer AC, Yu-Moe CW, Babayan A, Wachter RM, Einbinder JS. Rates and characteristics of medical malpractice claims against hospitalists. J Hosp Med. 2021;16(7):390-396. https://doi.org/10.12788/jhm.3557

5. Lane DM, Scott D, Hebl M, Guerra R, Osherson D, Zimmer H. Introduction to Statistics. Accessed April 13, 2021. https://onlinestatbook.com/Online_Statistics_Education.pdf

6. Marill KA. Advanced statistics: linear regression, part II: multiple linear regression. Acad Emerg Med. 2004;11(1):94-102. https://doi.org/10.1197/j.aem.2003.09.006

The ability to read and correctly interpret research is an essential skill, but most hospitalists—and physicians in general—do not receive formal training in biostatistics during their medical education.1-3 In addition to straightforward statistical tests that compare a single exposure and outcome, researchers commonly use statistical models to identify and quantify complex relationships among many exposures (eg, demographics, clinical characteristics, interventions, or other variables) and an outcome. Understanding statistical models can be challenging. Still, it is important to recognize the advantages and limitations of statistical models, how to interpret their results, and the potential implications of findings on current clinical practice.

In the article “Rates and Characteristics of Medical Malpractice Claims Against Hospitalists” published in the July 2021 issue of the Journal of Hospital Medicine, Schaffer et al4 used the Comparative Benchmarking System database, which is maintained by a malpractice insurer, to characterize malpractice claims against hospitalists. The authors used multiple logistic regression models to understand the relationship among clinical factors and indemnity payments. In this Progress Note, we describe situations in which logistic regression is the proper statistical method to analyze a data set, explain results from logistic regression analyses, and equip readers with skills to critically appraise conclusions drawn from these models.

Choosing an Appropriate Statistical Model

Statistical models often are used to describe the relationship among one or more exposure variables (ie, independent variables) and an outcome (ie, dependent variable). These models allow researchers to evaluate the effects of multiple exposure variables simultaneously, which in turn allows them to “isolate” the effect of each variable; in other words, models facilitate an understanding of the relationship between each exposure variable and the outcome, adjusted for (ie, independent of) the other exposure variables in the model.

Several statistical models can be used to quantify relationships within the data, but each type of model has certain assumptions that must be satisfied. Two important assumptions include characteristics of the outcome (eg, the type and distribution) and the nature of the relationships among the outcome and independent variables (eg, linear vs nonlinear). Simple linear regression, one of the most basic statistical models used in research,5 assumes that (a) the outcome is continuous (ie, any numeric value is possible) and normally distributed (ie, its histogram is a bell-shaped curve) and (b) the relationship between the independent variable and the outcome is linear (ie, follows a straight line). If an investigator wanted to understand how weight is related to height, a simple linear regression could be used to develop a mathematical equation that tells us how the outcome (weight) generally increases as the independent variable (height) increases.

Often, the outcome in a study is not a continuous variable but a simple success/failure variable (ie, dichotomous variable that can be one of two possible values). Schaffer et al4 examined the binary outcome of whether a malpractice claim case would end in an indemnity payment or no payment. Linear regression models are not equipped to handle dichotomous outcomes. Instead, we need to use a different statistical model: logistic regression. In logistic regression, the probability (p) of a defined outcome event is estimated by creating a regression model.

The Logistic Model

A probability (p) is a measure of how likely an event (eg, a malpractice claim ends in an indemnity payment or not) is to occur. It is always between 0 (ie, the event will definitely not occur) and 1 (ie, the event will definitely occur). A p of 0.5 means there is a 50/50 chance that the event will occur (ie, equivalent to a coin flip). Because p is a probability, we need to make sure it is always between 0 and 1. If we were to try to model p with a linear regression, the model would assume that p could extend beyond 0 and 1. What can we do?

Applying a transformation is a commonly used tool in statistics to make data work better within statistical models.6 In this case, we will transform the variable p. In logistic regression, we model the probability of experiencing the outcome through a transformation called a logit. The logit represents the natural logarithm (ln) of the ratio of the probability of experiencing the outcome (p) vs the probability of not experiencing the outcome (1 – p), with the ratio being the odds of the event occurring.

This transformation works well for dichotomous outcomes because the logit transformation approximates a straight line as long as p is not too large or too small (between 0.05 and 0.95).

If we are performing a logistic regression with only one independent variable (x) and want to understand the relationship between this variable (x) and the probability of an outcome event (p), then our model is the equation of a line. The equation for the base model of logistic regression with one independent variable (x) is

where β0 is the y-intercept and β1 is the slope of the line. Equation (2) is identical to the algebraic equation y = mx + b for a line, just rearranged slightly. In this algebraic equation, m is the slope (the same as β1) and b is the y-intercept (the same as β0). We will see that β0 and β1 are estimated (ie, assigned numeric values) from the data collected to help us understand how x and

are related and are the basis for estimating odds ratios.

We can build more complex models using multivariable logistic regression by adding more independent variables to the right side of equation (2). Essentially, this is what S

There are two notable techniques used frequently with multivariable logistic regression models. The first involves choosing which independent variables to include in the model. One way to select variables for multivariable models is defining them a priori, that is deciding which variables are clinically or conceptually associated with the outcome before looking at the data. With this approach, we can test specific hypotheses about the relationships between the independent variables and the outcome. Another common approach is to look at the data and identify the variables that vary significantly between the two outcome groups. Schaffer et al4 used an a priori approach to define variables in their multivariable model (ie, “variables for inclusion into the multivariable model were determined a priori”).

A second technique is the evaluation of collinearity, which helps us understand whether the i

Understanding the Results of the Logistic Model

Fitting the model is the process by which statistical software (eg, SAS, Stata, R, SPSS) estimates the relationships among independent variables in the model and the outcome within a specific dataset. In equation (2), this essentially means that the software will evaluate the data and provide us with the best estimates for β0 (the y-intercept) and β1 (the slope) that describe the relationship between the variable x and

Modeling can be iterative, and part of the process may include removing variables from the model that are not significantly associated with the outcome to create a simpler solution, a process known as model reduction. The results from models describe the independent association between a specific characteristic and the outcome, meaning that the relationship has been adjusted for all the other characteristics in the model.

The relationships among the independent variables and outcome are most often represented as an odds ratio (OR), which quantifies the strength of the association between two variables and is directly calculated from the β values in the model. As the name suggests, an OR is a ratio of odds. But what are odds? Simply, the odds of an outcome (such as mortality) is the probability of experiencing the event divided by the probability of not experiencing that event; in other words, it is the ratio:

The concept of odds is often unfamiliar, so it can be helpful to consider the definition in the context of games of chance. For example, in horse race betting, the outcome of interest is that a horse will lose a race. Imagine that the probability of a horse losing a race is 0.8 and the probability of winning is 0.2. The odds of losing are

These odds usually are listed as 4-to-1, meaning that out of 5 races (ie, 4 + 1) the horse is expected to lose 4 times and win once. When odds are listed this way, we can easily calculate the associated probability by recognizing that the total number of expected races is the sum of two numbers (probability of losing: 4 races out of 5, or 0.80 vs probability of winning: 1 race out of 5, or 0.20).

In medical research, the OR typically represents the odds for one group of patients (A) compared with the odds for another group of patients (B) experiencing an outcome. If the odds of the outcome are the same for group A and group B, then OR = 1.0, meaning that the probability of the outcome is the same between the two groups. If the patients in group A have greater odds of experiencing the outcome compared with group B patients (and a greater probability of the outcome), then the OR will be >1. If the opposite is true, then the OR will be <1.

Schaffer et al4 estimated that the OR of an indemnity payment in malpractice cases involving errors in clinical judgment as a contributing factor was 5.01 (95% CI, 3.37-7.45). This means that malpractice cases involving errors in clinical judgement had a 5.01 times greater odds of indemnity payment compared with those without these errors after adjusting for all other variables in the model (eg, age, severity). Note that the 95% CI does not include 1.0. This indicates that the OR is statistically >1, and we can conclude that there is a significant relationship between errors in clinical judgment and payment that is unlikely to be attributed to chance alone.

In logistic regression for categorical independent variables, all categories are compared with a reference group within that variable, with the reference group serving as the denominator of the OR. The authors4 did not incorporate continuous independent variables in their multivariable logistic regression model. However, if the authors examined length of hospitalization as a contributing factor in indemnity payments, for example, the OR would represent a 1-unit increase in this variable (eg, 1-day increase in length of stay).

Conclusion

Logistic regression describes the relationships in data and is an important statistical model across many types of research. This Progress Note emphasizes the importance of weighing the advantages and limitations of logistic regression, provides a common approach to data transformation, and guides the correct interpretation of logistic regression model results.

The ability to read and correctly interpret research is an essential skill, but most hospitalists—and physicians in general—do not receive formal training in biostatistics during their medical education.1-3 In addition to straightforward statistical tests that compare a single exposure and outcome, researchers commonly use statistical models to identify and quantify complex relationships among many exposures (eg, demographics, clinical characteristics, interventions, or other variables) and an outcome. Understanding statistical models can be challenging. Still, it is important to recognize the advantages and limitations of statistical models, how to interpret their results, and the potential implications of findings on current clinical practice.

In the article “Rates and Characteristics of Medical Malpractice Claims Against Hospitalists” published in the July 2021 issue of the Journal of Hospital Medicine, Schaffer et al4 used the Comparative Benchmarking System database, which is maintained by a malpractice insurer, to characterize malpractice claims against hospitalists. The authors used multiple logistic regression models to understand the relationship among clinical factors and indemnity payments. In this Progress Note, we describe situations in which logistic regression is the proper statistical method to analyze a data set, explain results from logistic regression analyses, and equip readers with skills to critically appraise conclusions drawn from these models.

Choosing an Appropriate Statistical Model

Statistical models often are used to describe the relationship among one or more exposure variables (ie, independent variables) and an outcome (ie, dependent variable). These models allow researchers to evaluate the effects of multiple exposure variables simultaneously, which in turn allows them to “isolate” the effect of each variable; in other words, models facilitate an understanding of the relationship between each exposure variable and the outcome, adjusted for (ie, independent of) the other exposure variables in the model.

Several statistical models can be used to quantify relationships within the data, but each type of model has certain assumptions that must be satisfied. Two important assumptions include characteristics of the outcome (eg, the type and distribution) and the nature of the relationships among the outcome and independent variables (eg, linear vs nonlinear). Simple linear regression, one of the most basic statistical models used in research,5 assumes that (a) the outcome is continuous (ie, any numeric value is possible) and normally distributed (ie, its histogram is a bell-shaped curve) and (b) the relationship between the independent variable and the outcome is linear (ie, follows a straight line). If an investigator wanted to understand how weight is related to height, a simple linear regression could be used to develop a mathematical equation that tells us how the outcome (weight) generally increases as the independent variable (height) increases.

Often, the outcome in a study is not a continuous variable but a simple success/failure variable (ie, dichotomous variable that can be one of two possible values). Schaffer et al4 examined the binary outcome of whether a malpractice claim case would end in an indemnity payment or no payment. Linear regression models are not equipped to handle dichotomous outcomes. Instead, we need to use a different statistical model: logistic regression. In logistic regression, the probability (p) of a defined outcome event is estimated by creating a regression model.

The Logistic Model

A probability (p) is a measure of how likely an event (eg, a malpractice claim ends in an indemnity payment or not) is to occur. It is always between 0 (ie, the event will definitely not occur) and 1 (ie, the event will definitely occur). A p of 0.5 means there is a 50/50 chance that the event will occur (ie, equivalent to a coin flip). Because p is a probability, we need to make sure it is always between 0 and 1. If we were to try to model p with a linear regression, the model would assume that p could extend beyond 0 and 1. What can we do?

Applying a transformation is a commonly used tool in statistics to make data work better within statistical models.6 In this case, we will transform the variable p. In logistic regression, we model the probability of experiencing the outcome through a transformation called a logit. The logit represents the natural logarithm (ln) of the ratio of the probability of experiencing the outcome (p) vs the probability of not experiencing the outcome (1 – p), with the ratio being the odds of the event occurring.

This transformation works well for dichotomous outcomes because the logit transformation approximates a straight line as long as p is not too large or too small (between 0.05 and 0.95).

If we are performing a logistic regression with only one independent variable (x) and want to understand the relationship between this variable (x) and the probability of an outcome event (p), then our model is the equation of a line. The equation for the base model of logistic regression with one independent variable (x) is

where β0 is the y-intercept and β1 is the slope of the line. Equation (2) is identical to the algebraic equation y = mx + b for a line, just rearranged slightly. In this algebraic equation, m is the slope (the same as β1) and b is the y-intercept (the same as β0). We will see that β0 and β1 are estimated (ie, assigned numeric values) from the data collected to help us understand how x and

are related and are the basis for estimating odds ratios.

We can build more complex models using multivariable logistic regression by adding more independent variables to the right side of equation (2). Essentially, this is what S

There are two notable techniques used frequently with multivariable logistic regression models. The first involves choosing which independent variables to include in the model. One way to select variables for multivariable models is defining them a priori, that is deciding which variables are clinically or conceptually associated with the outcome before looking at the data. With this approach, we can test specific hypotheses about the relationships between the independent variables and the outcome. Another common approach is to look at the data and identify the variables that vary significantly between the two outcome groups. Schaffer et al4 used an a priori approach to define variables in their multivariable model (ie, “variables for inclusion into the multivariable model were determined a priori”).

A second technique is the evaluation of collinearity, which helps us understand whether the i

Understanding the Results of the Logistic Model

Fitting the model is the process by which statistical software (eg, SAS, Stata, R, SPSS) estimates the relationships among independent variables in the model and the outcome within a specific dataset. In equation (2), this essentially means that the software will evaluate the data and provide us with the best estimates for β0 (the y-intercept) and β1 (the slope) that describe the relationship between the variable x and

Modeling can be iterative, and part of the process may include removing variables from the model that are not significantly associated with the outcome to create a simpler solution, a process known as model reduction. The results from models describe the independent association between a specific characteristic and the outcome, meaning that the relationship has been adjusted for all the other characteristics in the model.

The relationships among the independent variables and outcome are most often represented as an odds ratio (OR), which quantifies the strength of the association between two variables and is directly calculated from the β values in the model. As the name suggests, an OR is a ratio of odds. But what are odds? Simply, the odds of an outcome (such as mortality) is the probability of experiencing the event divided by the probability of not experiencing that event; in other words, it is the ratio:

The concept of odds is often unfamiliar, so it can be helpful to consider the definition in the context of games of chance. For example, in horse race betting, the outcome of interest is that a horse will lose a race. Imagine that the probability of a horse losing a race is 0.8 and the probability of winning is 0.2. The odds of losing are

These odds usually are listed as 4-to-1, meaning that out of 5 races (ie, 4 + 1) the horse is expected to lose 4 times and win once. When odds are listed this way, we can easily calculate the associated probability by recognizing that the total number of expected races is the sum of two numbers (probability of losing: 4 races out of 5, or 0.80 vs probability of winning: 1 race out of 5, or 0.20).

In medical research, the OR typically compares the odds of experiencing an outcome in one group of patients (A) with the odds in another group of patients (B). If the odds of the outcome are the same for group A and group B, then OR = 1.0, meaning that the probability of the outcome is the same in the two groups. If patients in group A have greater odds of experiencing the outcome than group B patients (and therefore a greater probability of the outcome), then the OR will be >1. If the opposite is true, the OR will be <1.
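A minimal sketch of an unadjusted OR computed from event counts, with group B as the reference (denominator). The counts are hypothetical; an adjusted OR such as those reported by Schaffer et al comes from a fitted multivariable model, not from a single 2 × 2 table.

```python
def odds(events, total):
    """Odds of the outcome in one group: events / non-events."""
    return events / (total - events)


def odds_ratio(events_a, total_a, events_b, total_b):
    """OR comparing group A with reference group B (B is the denominator)."""
    return odds(events_a, total_a) / odds(events_b, total_b)


# Hypothetical counts, for illustration only.
print(odds_ratio(30, 100, 20, 100))  # A has higher odds, so OR > 1
print(odds_ratio(20, 100, 20, 100))  # identical odds, so OR = 1.0
print(odds_ratio(20, 100, 30, 100))  # A has lower odds, so OR < 1
```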

Schaffer et al4 estimated that the OR of an indemnity payment in malpractice cases involving errors in clinical judgment as a contributing factor was 5.01 (95% CI, 3.37-7.45). This means that malpractice cases involving errors in clinical judgment had 5.01 times the odds of indemnity payment compared with cases without these errors, after adjusting for all other variables in the model (eg, age, severity). Note that the 95% CI does not include 1.0. This indicates that the OR is statistically significantly >1, and we can conclude that there is a significant relationship between errors in clinical judgment and payment that is unlikely to be attributable to chance alone.
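Because OR = e^β, an OR and its 95% CI map back to the model’s coefficient and standard error (SE). Logistic regression output commonly reports a Wald interval, e^(β ± 1.96 × SE); assuming that is the form used, the sketch below back-calculates an approximate β and SE from the published figures and checks whether the interval excludes 1.0. The SE is our reconstruction, not a value reported by the authors.

```python
import math

Z_95 = 1.96  # standard normal quantile for a two-sided 95% interval


def or_with_ci(beta, se, z=Z_95):
    """Exponentiate a log-odds coefficient and its (assumed Wald) interval."""
    return math.exp(beta), math.exp(beta - z * se), math.exp(beta + z * se)


def se_from_ci(lower, upper, z=Z_95):
    """Back-calculate the SE of beta from a reported OR interval."""
    return (math.log(upper) - math.log(lower)) / (2 * z)


# Reported by Schaffer et al: OR 5.01 (95% CI, 3.37-7.45).
beta = math.log(5.01)        # log-odds coefficient implied by the OR
se = se_from_ci(3.37, 7.45)  # approximate; assumes a Wald interval
odds_ratio, lower, upper = or_with_ci(beta, se)
excludes_null = lower > 1.0 or upper < 1.0
print(f"beta = {beta:.3f}, SE = {se:.3f}, "
      f"OR = {odds_ratio:.2f} (95% CI, {lower:.2f}-{upper:.2f})")
print("CI excludes 1.0:", excludes_null)
```

The recomputed interval matches the published one to two decimal places, which is consistent with (but does not prove) a Wald-type interval.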

In logistic regression, each category of a categorical independent variable is compared with a reference category of that variable, and the reference category serves as the denominator of the OR. The authors4 did not incorporate continuous independent variables in their multivariable logistic regression model. However, if the authors had examined length of hospitalization as a contributing factor in indemnity payments, for example, the OR would represent the change in odds associated with a 1-unit increase in this variable (eg, a 1-day increase in length of stay).
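For a continuous variable, the model is linear on the log-odds scale, so the OR for a k-unit increase is e^(kβ), and the OR for a 1-unit increase is the same whatever the starting value. The sketch below uses a hypothetical coefficient for length of stay (`BETA_LOS`) and a hypothetical intercept; neither comes from Schaffer et al.

```python
import math

BETA_LOS = 0.05  # hypothetical: change in log-odds of payment per extra day


def odds_ratio_per_increase(beta, units=1.0):
    """OR for a `units`-unit increase in a continuous predictor: exp(beta * units)."""
    return math.exp(beta * units)


def log_odds(los_days, beta0=-2.0, beta1=BETA_LOS):
    """Hypothetical model: log-odds of payment = beta0 + beta1 * length of stay."""
    return beta0 + beta1 * los_days


or_1_day = odds_ratio_per_increase(BETA_LOS)      # per 1-day increase
or_5_days = odds_ratio_per_increase(BETA_LOS, 5)  # equals or_1_day ** 5
# The 1-day OR does not depend on the starting length of stay.
or_day2_vs_day1 = math.exp(log_odds(2) - log_odds(1))
or_day9_vs_day8 = math.exp(log_odds(9) - log_odds(8))
print(f"OR per day = {or_1_day:.3f}; OR per 5 days = {or_5_days:.3f}")
```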

Conclusion

Logistic regression describes the relationships between exposure variables and a binary outcome and is an important statistical model across many types of research. This Progress Note emphasizes the importance of weighing the advantages and limitations of logistic regression, provides a common approach to data transformation, and guides the correct interpretation of logistic regression model results.

1. Windish DM, Huot SJ, Green ML. Medicine residents’ understanding of the biostatistics and results in the medical literature. JAMA. 2007;298(9):1010. https://doi.org/10.1001/jama.298.9.1010

2. MacDougall M, Cameron HS, Maxwell SRJ. Medical graduate views on statistical learning needs for clinical practice: a comprehensive survey. BMC Med Educ. 2019;20(1):1. https://doi.org/10.1186/s12909-019-1842-1

3. Montori VM. Progress in evidence-based medicine. JAMA. 2008;300(15):1814-1816. https://doi.org/10.1001/jama.300.15.1814

4. Schaffer AC, Yu-Moe CW, Babayan A, Wachter RM, Einbinder JS. Rates and characteristics of medical malpractice claims against hospitalists. J Hosp Med. 2021;16(7):390-396. https://doi.org/10.12788/jhm.3557

5. Lane DM, Scott D, Hebl M, Guerra R, Osherson D, Zimmer H. Introduction to Statistics. Accessed April 13, 2021. https://onlinestatbook.com/Online_Statistics_Education.pdf

6. Marill KA. Advanced statistics: linear regression, part II: multiple linear regression. Acad Emerg Med. 2004;11(1):94-102. https://doi.org/10.1197/j.aem.2003.09.006

© 2021 Society of Hospital Medicine

Methodological Progress Note: Interrupted Time Series

Hospital medicine research often asks whether an intervention, such as a policy or guideline, has improved quality of care and/or had any unintended consequences. Alternatively, investigators may be interested in understanding the impact of an event, such as a natural disaster or a pandemic, on hospital care. The study design that provides the best estimate of the causal effect of an intervention is the randomized controlled trial (RCT). The goal of randomization, which can be implemented at the patient or cluster level (eg, hospitals), is to balance known and unknown confounders between study groups.

However, an RCT may not be feasible for several reasons: complexity, insufficient setup time or funding, ethical barriers to randomization, unwillingness of funders or payers to withhold the intervention from patients (ie, the control group), or anticipated contamination of the intervention into the control group (eg, provider practice change interventions). In addition, it may be impossible to conduct an RCT because the investigator does not have control over the design of an intervention or because they are studying an event, such as a pandemic.

In the June 2020 issue of the Journal of Hospital Medicine, Coon et al1 use a type of quasi-experimental design (QED)—specifically, the interrupted time series (ITS)—to examine the impact of the adoption of ward-based high-flow nasal cannula protocols on intensive care unit (ICU) admission for bronchiolitis at children’s hospitals. In this methodologic progress note, we discuss QEDs for evaluating the impact of healthcare interventions or events and focus on ITS, one of the strongest QEDs.

WHAT IS A QUASI-EXPERIMENTAL DESIGN?

Quasi-experimental design refers to a broad range of nonrandomized or partially randomized pre- vs postintervention studies.2 To test a causal hypothesis without randomization, QEDs define a comparison group or time period in which an intervention has not been implemented, as well as at least one group or time period in which it has been implemented. In a QED, the control may differ from the intervention group or time period because of differences in the patients, sites, or time period (sometimes referred to as a “nonequivalent control group”). Several design and analytic approaches are available to strengthen the study’s ability to draw conclusions about the causal impact of the intervention.2,3 Because randomization is not necessary, QEDs can include a broader population than is feasible in RCTs, which increases the applicability and generalizability of the results. Therefore, they are powerful research designs for testing the effectiveness of interventions in real-world settings.

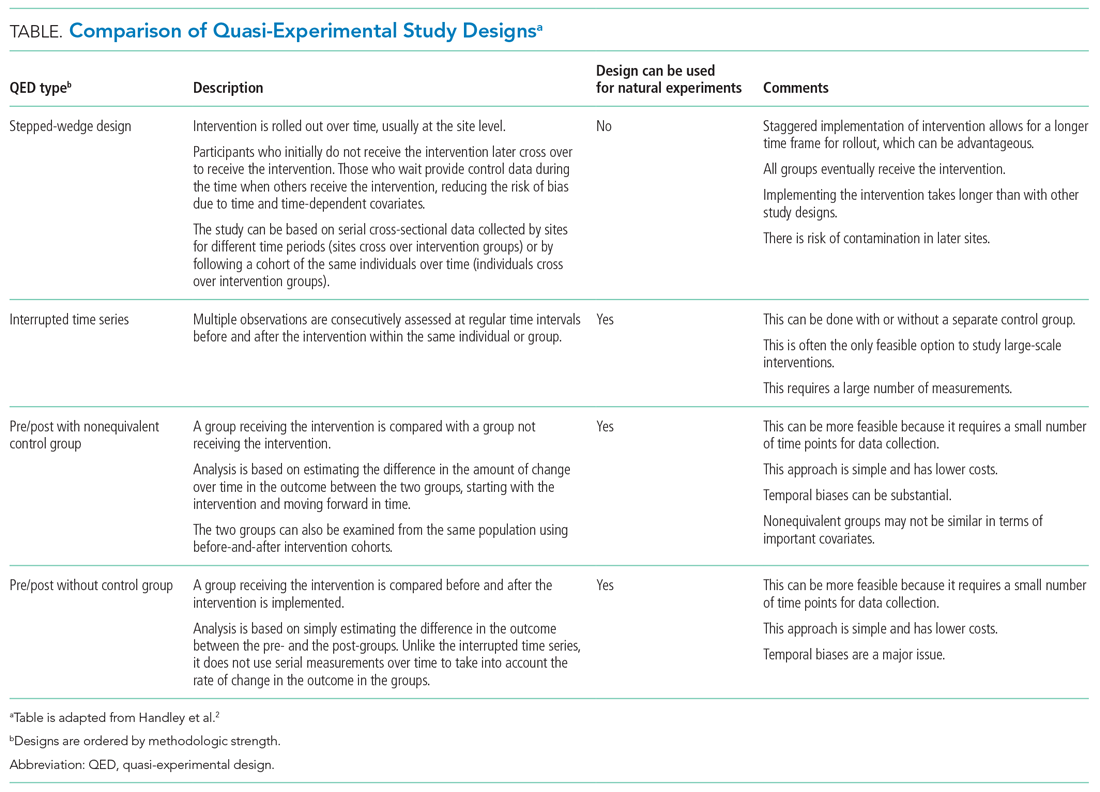

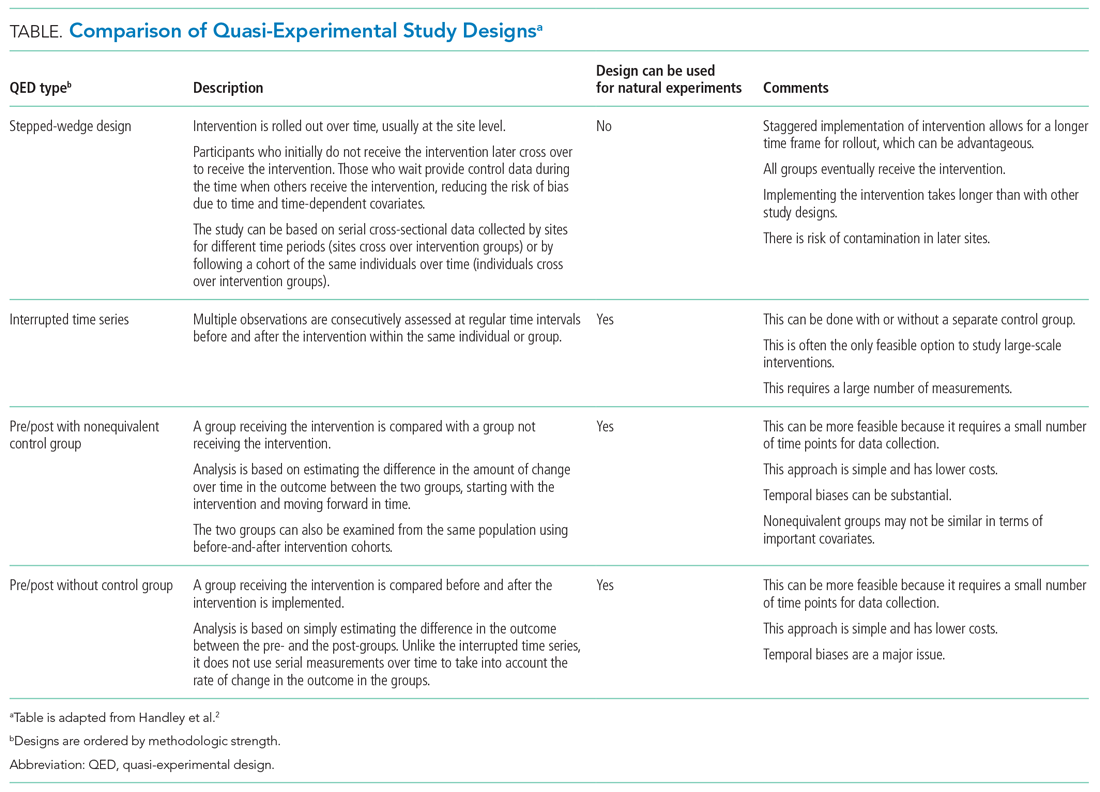

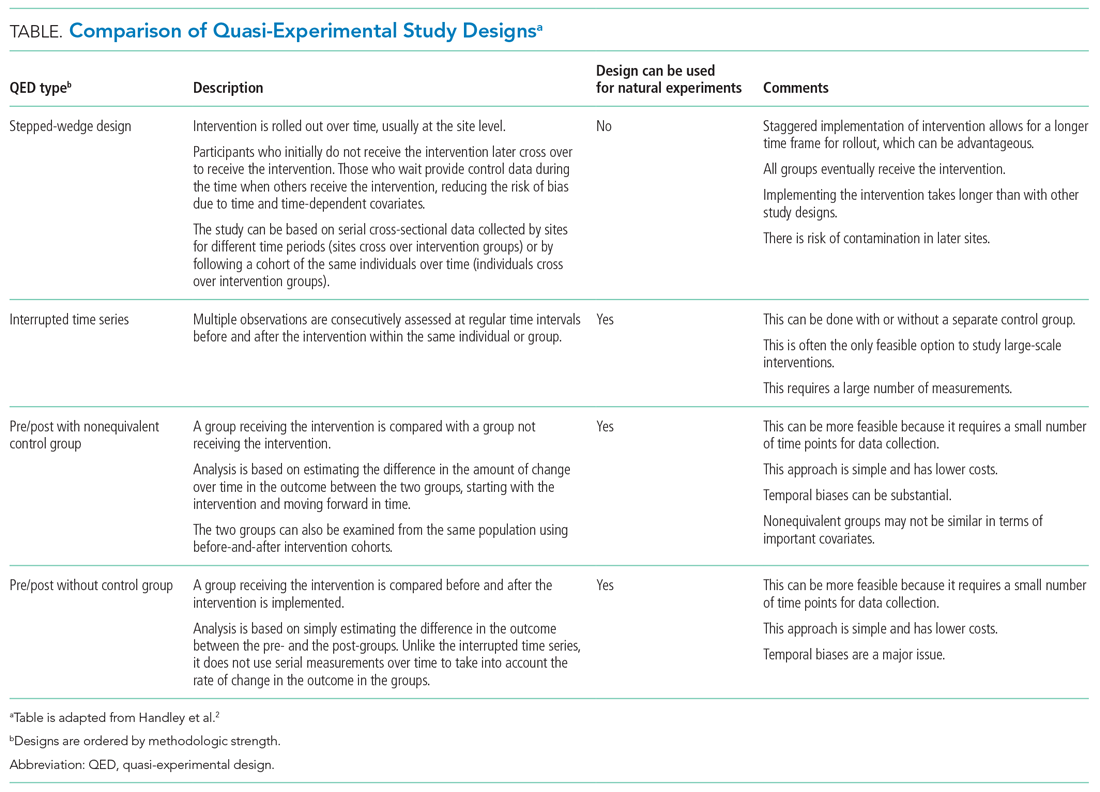

The choice of QED depends on whether the investigators are conducting a prospective evaluation and have control over the study design (ie, the ordering of the intervention, selection of sites or individuals, and/or timing and frequency of data collection) or whether they do not have control over the intervention, a situation known as a “natural experiment.”4,5 Some studies may also incorporate two QEDs in tandem.6 The Table provides a brief summary of different QEDs, ordered by methodologic strength, and distinguishes those that can be used to study natural experiments. In the study by Coon et al,1 an ITS is used rather than a methodologically stronger QED, such as the stepped-wedge design, because the investigators did not have control over the rollout of heated high-flow nasal cannula protocols across hospitals.

WHAT IS AN INTERRUPTED TIME SERIES?

Interrupted time series designs use repeated observations of an outcome over time. This method divides, or “interrupts,” the series of data into two time periods: before the intervention or event and after. Using data from the preintervention period, an underlying trend in the outcome is estimated and assumed to continue into the postintervention period, providing an estimate of what would have occurred without the intervention. Any significant change in the outcome at the beginning of the postintervention period, or in its trend during the postintervention period, is then attributed to the intervention.
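The projection step can be sketched in a few lines of Python: fit a straight line to the preintervention observations, extend it into the postintervention period, and compare observed with expected values. This is a simplified illustration on a noise-free hypothetical series; it provides no CIs and does not replace a full segmented regression analysis.

```python
def fit_line(times, values):
    """Ordinary least-squares intercept and slope for a single series."""
    n = len(times)
    mean_t = sum(times) / n
    mean_v = sum(values) / n
    sxy = sum((t - mean_t) * (v - mean_v) for t, v in zip(times, values))
    sxx = sum((t - mean_t) ** 2 for t in times)
    slope = sxy / sxx
    return mean_v - slope * mean_t, slope


def gap_from_projected_trend(times, values, start):
    """Observed minus expected for each postintervention point.

    The expected value projects the preintervention trend forward: the
    estimate of what would have occurred without the intervention.
    """
    pre = [(t, v) for t, v in zip(times, values) if t < start]
    intercept, slope = fit_line([t for t, _ in pre], [v for _, v in pre])
    return [v - (intercept + slope * t) for t, v in zip(times, values) if t >= start]


# Hypothetical monthly series: rising 0.5 per month, dropping by 5 at month 12.
months = list(range(24))
rates = [50 + 0.5 * m - (5 if m >= 12 else 0) for m in months]
gaps = gap_from_projected_trend(months, rates, start=12)
print([round(g, 2) for g in gaps])
```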

There are several important methodologic considerations when designing an ITS study, as detailed in other review papers.2,3,7,8 An ITS design can be retrospective or prospective. It can involve a single center or multiple sites, as in Coon et al. It can be conducted with or without a control. The inclusion of a control, when appropriately chosen, improves the strength of the study design because it can account for seasonal trends and potential confounders that vary over time. The control can be a different group of hospitals or participants that are similar but did not receive the intervention, or it can be a different outcome, one not expected to be affected by the intervention, in the same group of hospitals or participants. The ITS design may also be set up to estimate the individual effects of the components of a multicomponent intervention. If the different components are phased in sequentially over time, then it may be possible to interrupt the time series at these points and estimate the impact of each component.

Other examples of ITS studies in hospital medicine include those that evaluated the impact of a readmission-reduction program,9 of state sepsis regulations on in-hospital mortality,10 of resident duty-hour reform on mortality among hospitalized patients,11 of a quality-improvement initiative on early discharge,12 and of national guidelines on pediatric pneumonia antibiotic selection.13 There are several types of ITS analysis, and in this article, we focus on segmented regression without a control group.7,8

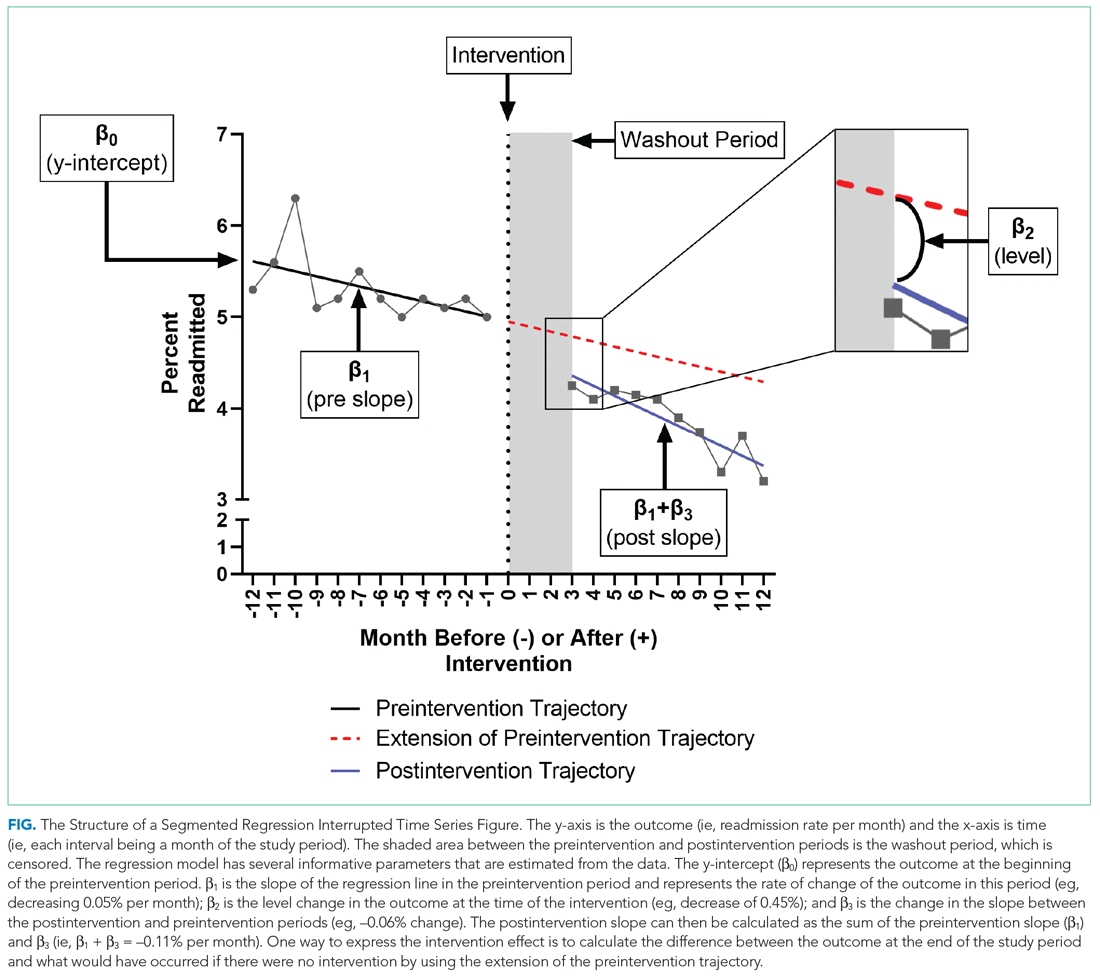

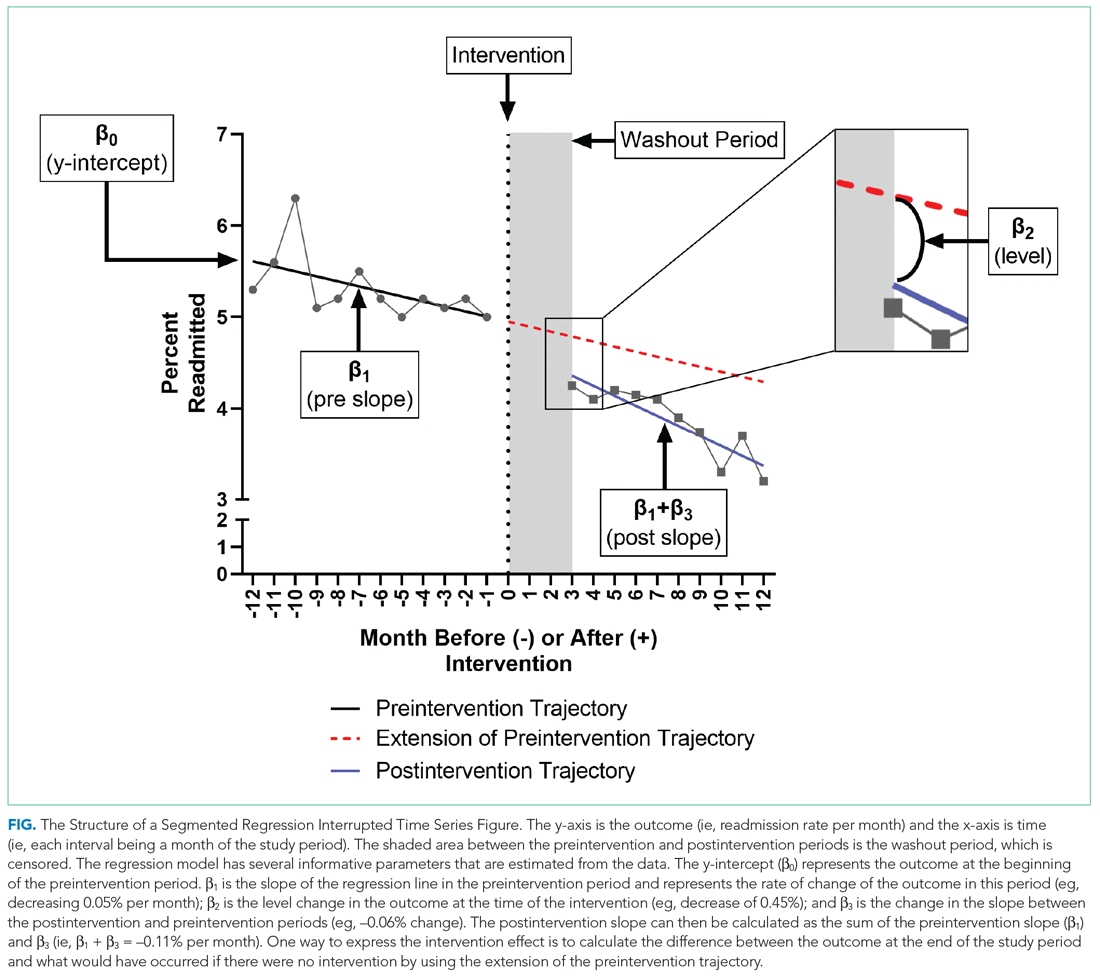

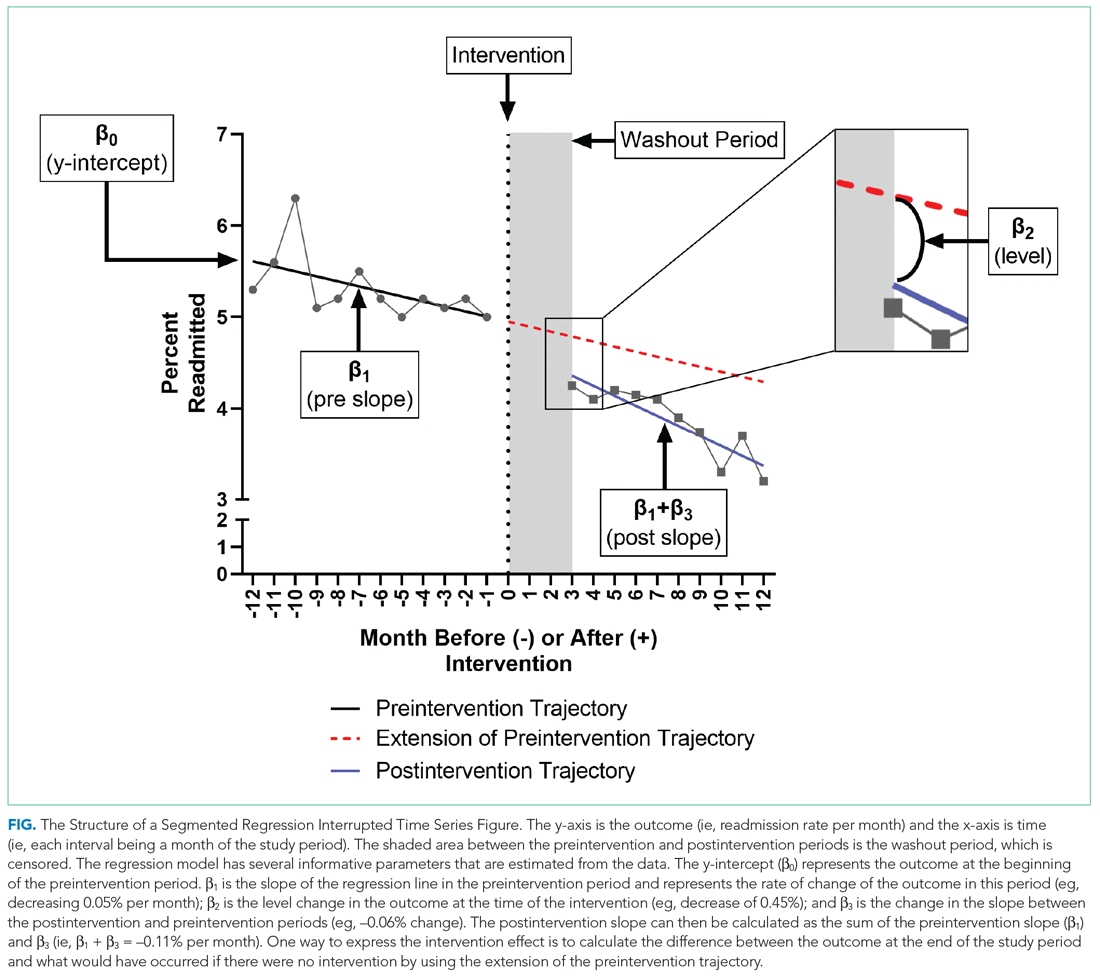

WHAT IS A SEGMENTED REGRESSION ITS?

Segmented regression is the statistical model used to measure (a) the immediate change in the outcome (level) at the start of the intervention and (b) the change in the trend of the outcome (slope) in the postintervention period vs that in the preintervention period. Therefore, the intervention effect size is expressed in terms of the level change and the slope change. To function properly, the models require several repeated (eg, monthly) measurements of the outcome before and after the intervention. Some experts suggest a minimum of 4 to 12 observations, depending on a number of factors including the stability of the outcome and seasonal variations.7,8 If changes before and after more than one intervention are being examined, at least this minimum number of observations should separate the interventions. Unlike typical regression models, time-series models can correct for autocorrelation if it is present in the data. Autocorrelation is the correlation that arises when data are collected over time, with observations closest in time being more strongly correlated (there are also other types of autocorrelation, such as seasonal patterns). Using available statistical software, autocorrelation can be detected and, if present, controlled for in the segmented regression models.
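A minimal sketch of segmented regression fitted by ordinary least squares, using a common parameterization: Y_t = β0 + β1·time + β2·post + β3·time_after + ε_t, where β2 is the level change and β3 the slope change (so the postintervention slope is β1 + β3). The helper names and the noise-free hypothetical series are assumptions for illustration. Plain least squares does not correct for autocorrelation; `lag1_autocorrelation` is only a rough diagnostic, and correcting for autocorrelation requires dedicated time-series methods in statistical software.

```python
def segmented_design(n_pre, n_post):
    """Rows of [intercept, time, post, time_after] for a single series.

    post is 0 before the intervention and 1 after; time_after is 0 up to and
    including the first postintervention observation, then counts upward.
    """
    rows = []
    for t in range(n_pre + n_post):
        post = 1.0 if t >= n_pre else 0.0
        rows.append([1.0, float(t), post, float(max(t - n_pre, 0))])
    return rows


def solve(a, b):
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        x[i] = (m[i][n] - sum(m[i][j] * x[j] for j in range(i + 1, n))) / m[i][i]
    return x


def ols(rows, y):
    """Least-squares coefficients via the normal equations (X'X) b = X'y."""
    k = len(rows[0])
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * yi for r, yi in zip(rows, y)) for i in range(k)]
    return solve(xtx, xty)


def lag1_autocorrelation(residuals):
    """Rough diagnostic: correlation between consecutive residuals."""
    mean = sum(residuals) / len(residuals)
    d = [r - mean for r in residuals]
    denom = sum(v * v for v in d)
    if denom == 0:
        return 0.0
    return sum(d[t] * d[t - 1] for t in range(1, len(d))) / denom


# Hypothetical monthly outcome: 12 months before and 12 after an intervention.
rows = segmented_design(12, 12)
true_coefs = [50.0, 0.5, -5.0, -0.3]  # b0, pre-slope, level change, slope change
y = [sum(c * x for c, x in zip(true_coefs, r)) for r in rows]
b0, b1, b2, b3 = ols(rows, y)
print(f"level change = {b2:.2f}; pre-slope = {b1:.2f}; post-slope = {b1 + b3:.2f}")
print(lag1_autocorrelation([1.0, -1.0, 1.0, -1.0]))  # alternating residuals: -0.75
```

In a real analysis, `lag1_autocorrelation` would be applied to the residuals of a fit to observed (noisy) data.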

HOW ARE SEGMENTED REGRESSION RESULTS PRESENTED?

Coon et al present the results of their ITS analysis in a panel of figures detailing each study outcome: ICU admission, ICU length of stay, total length of stay, and rates of mechanical ventilation. Each panel shows the rate of change in the outcome per season across hospitals before and after adoption of heated high-flow nasal cannula protocols, as well as the level change at the time of adoption.

To further explain how segmented regression results are presented, the Figure details the structure of a segmented regression figure evaluating the impact of an intervention without a control group. In addition to the regression figure, authors typically provide 95% CIs around the rates (slopes), the level change, and the difference between the postintervention and preintervention slopes, along with P values indicating whether the rates, the level change, and the difference between period slopes differ significantly from zero.

WHAT ARE THE UNDERLYING ASSUMPTIONS OF THE SEGMENTED REGRESSION ITS?

Segmented regression models assume a linear trend in the outcome. If the outcome follows a nonlinear pattern (eg, exponential spread of a disease during a pandemic), then different distributions in the modeling or transformations of the data may be necessary. The validity of the comparison between the pre- and postintervention periods relies on the similarity between the populations in the two periods. When there is imbalance, investigators can consider matching based on important characteristics or applying risk adjustment as necessary. Another important assumption is that, in the absence of the intervention, the preintervention trend in the outcome would have continued unchanged. Finally, the analysis assumes that the intervention is fully implemented at the time the postintervention period begins. Often, there is a washout period during which the old approach is stopped and the new approach (the intervention) is being implemented; this transition period can be taken into account in the analysis.
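To illustrate the transformation point, the sketch below uses a hypothetical series that grows 30% per week. A straight line fitted to the raw counts leaves a systematic pattern in the residuals (positive at both ends, negative in the middle), whereas a line fitted to the logarithm of the counts fits exactly, and e^slope recovers the weekly growth factor. Whether a log transformation suits real data is a modeling judgment this sketch does not address.

```python
import math


def fit_line(times, values):
    """Ordinary least-squares intercept and slope."""
    n = len(times)
    mean_t = sum(times) / n
    mean_v = sum(values) / n
    sxy = sum((t - mean_t) * (v - mean_v) for t, v in zip(times, values))
    sxx = sum((t - mean_t) ** 2 for t in times)
    slope = sxy / sxx
    return mean_v - slope * mean_t, slope


# Hypothetical weekly case counts growing 30% per week.
weeks = list(range(10))
cases = [10 * 1.3 ** w for w in weeks]

# Linear fit on the raw scale: residuals show curvature, not random scatter.
raw_intercept, raw_slope = fit_line(weeks, cases)
raw_residuals = [c - (raw_intercept + raw_slope * w) for w, c in zip(weeks, cases)]

# Linear fit on the log scale: the exponential trend becomes a straight line.
log_intercept, log_slope = fit_line(weeks, [math.log(c) for c in cases])
print("raw-scale residuals:", [round(r, 1) for r in raw_residuals])
print(f"weekly growth factor = {math.exp(log_slope):.3f}")
```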

WHAT ARE THE STRENGTHS OF THE SEGMENTED REGRESSION ITS?

There are several strengths of the ITS analysis and segmented regression.7,8 First, this approach accounts for a possible secular trend in the outcome measure that may have been present prior to the intervention. For example, using a simple pre/post study design, investigators might conclude that a readmissions program was effective if they found that the mean readmission percentage in the period after the intervention was significantly lower than in the period before. However, what if the readmission rate was already going down prior to the intervention? Using an ITS approach, they might find that the rate of readmissions simply continued to decrease after the intervention at the same rate as before and, therefore, conclude that the intervention was not effective. Second, because the ITS approach evaluates changes in rates of an outcome at a population level, confounding by individual-level variables will not introduce serious bias unless those variables changed at the same time as the intervention. Third, ITS can be used to measure the unintended consequences of interventions or events, and investigators can construct separate time-series analyses for different outcomes. Fourth, ITS can be used to evaluate the impact of the intervention on subpopulations (eg, those grouped by age, sex, race) by conducting stratified analyses. Fifth, ITS provides simple and clear graphical results that can be easily understood by various audiences.
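The readmissions example can be reproduced with a hypothetical series in which the readmission percentage falls 0.2 points per month and the intervention has no effect. A simple pre/post comparison of means suggests a 2.4-point reduction, whereas comparing postintervention observations with the projected preintervention trend shows no difference. This is a noise-free sketch for illustration only.

```python
def mean(xs):
    return sum(xs) / len(xs)


def fit_line(times, values):
    """Ordinary least-squares intercept and slope."""
    n = len(times)
    mean_t = sum(times) / n
    mean_v = sum(values) / n
    sxy = sum((t - mean_t) * (v - mean_v) for t, v in zip(times, values))
    sxx = sum((t - mean_t) ** 2 for t in times)
    slope = sxy / sxx
    return mean_v - slope * mean_t, slope


months = list(range(24))
start = 12
# Hypothetical readmission percentage already falling 0.2 points per month,
# with no intervention effect at all.
readmit = [20 - 0.2 * m for m in months]
pre = readmit[:start]
post = readmit[start:]

# Simple pre/post design: compare period means.
naive_difference = mean(post) - mean(pre)

# Trend-aware comparison: observed minus the projected preintervention trend.
intercept, slope = fit_line(months[:start], pre)
trend_adjusted = mean([v - (intercept + slope * m) for m, v in zip(months[start:], post)])
print(f"naive pre/post difference: {naive_difference:.2f} points")
print(f"difference from projected trend: {trend_adjusted:.2f} points")
```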

WHAT ARE THE IMPORTANT LIMITATIONS OF AN ITS?

By accounting for preintervention trends, ITS studies permit stronger causal inference than do cross-sectional or simple pre/post QEDs, but they may be prone to confounding by cointerventions or by changes in the population composition. Causal inference based on an ITS analysis is valid only to the extent that the intervention was the only thing that changed at the point in time between the preintervention and postintervention periods. It is important for investigators to consider this in the design and to discuss any coincident interventions. If there are multiple interventions over time, it is possible to account for them in the study design by creating multiple points of interruption, provided there are sufficient measurements of the outcome between interventions. If the composition of the population changes at the same time as the intervention, this introduces bias. Changes in the ability to measure the outcome or changes to its definition also threaten the validity of the study’s inferences. Finally, it is important to remember that when the outcome is a population-level measurement, inferences about individual-level outcomes are inappropriate because of the ecological fallacy (ie, deducing inferences about individuals from inferences about the group to which those individuals belong). For example, Coon et al found that infants with bronchiolitis in the ward-based high-flow nasal cannula protocol group had greater ICU admission rates. It would be inappropriate to conclude from this that an individual infant in a hospital with a ward-based protocol is more likely to be admitted to the ICU.

CONCLUSION

Studies evaluating interventions and events are important for informing healthcare practice, policy, and public health. While an RCT is the preferred method for such evaluations, investigators must often consider alternative study designs when an RCT is not feasible or when evaluation of real-world outcomes is desired. Quasi-experimental designs are used to study the impact of interventions in real-world settings without randomization, and an interrupted time series is a strong QED for evaluating both interventions and natural experiments.

1. Coon ER, Stoddard G, Brady PW. Intensive care unit utilization after adoption of a ward-based high flow nasal cannula protocol. J Hosp Med. 2020;15(6):325-330. https://doi.org/10.12788/jhm.3417

2. Handley MA, Lyles CR, McCulloch C, Cattamanchi A. Selecting and improving quasi-experimental designs in effectiveness and implementation research. Annu Rev Public Health. 2018;39:5-25. https://doi.org/10.1146/annurev-publhealth-040617-014128

3. Craig P, Katikireddi SV, Leyland A, Popham F. Natural experiments: an overview of methods, approaches, and contributions to public health intervention research. Annu Rev Public Health. 2017;38:39-56. https://doi.org/10.1146/annurev-publhealth-031816-044327

4. Craig P, Cooper C, Gunnell D, et al. Using natural experiments to evaluate population health interventions: new Medical Research Council guidance. J Epidemiol Community Health. 2012;66(12):1182-1186. https://doi.org/10.1136/jech-2011-200375

5. Coly A, Parry G. Evaluating Complex Health Interventions: A Guide to Rigorous Research Designs. AcademyHealth; 2017.

6. Orenstein EW, Rasooly IR, Mai MV, et al. Influence of simulation on electronic health record use patterns among pediatric residents. J Am Med Inform Assoc. 2018;25(11):1501-1506. https://doi.org/10.1093/jamia/ocy105

7. Penfold RB, Zhang F. Use of interrupted time series analysis in evaluating health care quality improvements. Acad Pediatr. 2013;13(6 Suppl):S38-S44. https://doi.org/10.1016/j.acap.2013.08.002

8. Wagner AK, Soumerai SB, Zhang F, Ross‐Degnan D. Segmented regression analysis of interrupted time series studies in medication use research. J Clin Pharm Ther. 2002;27(4):299-309. https://doi.org/10.1046/j.1365-2710.2002.00430.x

9. Desai NR, Ross JS, Kwon JY, et al. Association between hospital penalty status under the hospital readmission reduction program and readmission rates for target and nontarget conditions. JAMA. 2016;316(24):2647-2656. https://doi.org/10.1001/jama.2016.18533

10. Kahn JM, Davis BS, Yabes JG, et al. Association between state-mandated protocolized sepsis care and in-hospital mortality among adults with sepsis. JAMA. 2019;322(3):240-250. https://doi.org/10.1001/jama.2019.9021

11. Volpp KG, Rosen AK, Rosenbaum PR, et al. Mortality among hospitalized Medicare beneficiaries in the first 2 years following ACGME resident duty hour reform. JAMA. 2007;298(9):975-983. https://doi.org/10.1001/jama.298.9.975

12. Destino L, Bennett D, Wood M, et al. Improving patient flow: analysis of an initiative to improve early discharge. J Hosp Med. 2019;14(1):22-27. https://doi.org/10.12788/jhm.3133

13. Williams DJ, Hall M, Gerber JS, et al; Pediatric Research in Inpatient Settings Network. Impact of a national guideline on antibiotic selection for hospitalized pneumonia. Pediatrics. 2017;139(4):e20163231. https://doi.org/10.1542/peds.2016-3231

Hospital medicine research often asks the question whether an intervention, such as a policy or guideline, has improved quality of care and/or whether there were any unintended consequences. Alternatively, investigators may be interested in understanding the impact of an event, such as a natural disaster or a pandemic, on hospital care. The study design that provides the best estimate of the causal effect of the intervention is the randomized controlled trial (RCT). The goal of randomization, which can be implemented at the patient or cluster level (eg, hospitals), is attaining a balance of the known and unknown confounders between study groups.

However, an RCT may not be feasible for several reasons: complexity, insufficient setup time or funding, ethical barriers to randomization, unwillingness of funders or payers to withhold the intervention from patients (ie, the control group), or anticipated contamination of the intervention into the control group (eg, provider practice change interventions). In addition, it may be impossible to conduct an RCT because the investigator does not have control over the design of an intervention or because they are studying an event, such as a pandemic.

In the June 2020 issue of the Journal of Hospital Medicine, Coon et al1 use a type of quasi-experimental design (QED)—specifically, the interrupted time series (ITS)—to examine the impact of the adoption of ward-based high-flow nasal cannula protocols on intensive care unit (ICU) admission for bronchiolitis at children’s hospitals. In this methodologic progress note, we discuss QEDs for evaluating the impact of healthcare interventions or events and focus on ITS, one of the strongest QEDs.

WHAT IS A QUASI-EXPERIMENTAL DESIGN?

Quasi-experimental design refers to a broad range of nonrandomized or partially randomized pre- vs postintervention studies.2 In order to test a causal hypothesis without randomization, QEDs define a comparison group or a time period in which an intervention has not been implemented, as well as at least one group or time period in which an intervention has been implemented. In a QED, the control may lack similarity with the intervention group or time period because of differences in the patients, sites, or time period (sometimes referred to as having a “nonequivalent control group”). Several design and analytic approaches are available to enhance the extent to which the study is able to make conclusions about the causal impact of the intervention.2,3 Because randomization is not necessary, QEDs allow for inclusion of a broader population than that which is feasible by RCTs, which increases the applicability and generalizability of the results. Therefore, they are a powerful research design to test the effectiveness of interventions in real-world settings.

The choice of which QED depends on whether the investigators are conducting a prospective evaluation and have control over the study design (ie, the ordering of the intervention, selection of sites or individuals, and/or timing and frequency of the data collection) or whether the investigators do not have control over the intervention, which is also known as a “natural experiment.”4,5 Some studies may also incorporate two QEDs in tandem.6 The Table provides a brief summary of different QEDs, ordered by methodologic strength, and distinguishes those that can be used to study natural experiments. In the study by Coon et al,1 an ITS is used as opposed to a methodologically stronger QED, such as the stepped-wedge design, because the investigators did not have control over the rollout of heated high-flow nasal canula protocols across hospitals.

WHAT IS AN INTERRUPTED TIME SERIES?

Interrupted time series designs use repeated observations of an outcome over time. This method then divides, or “interrupts,” the series of data into two time periods: before the intervention or event and after. Using data from the preintervention period, an underlying trend in the outcome is estimated and assumed to continue forward into the postintervention period to estimate what would have occurred without the intervention. Any significant change in the outcome at the beginning of the postintervention period or change in the trend in the postintervention is then attributed to the intervention.

There are several important methodologic considerations when designing an ITS study, as detailed in other review papers.2,3,7,8 An ITS design can be retrospective or prospective. It can be of a single center or include multiple sites, as in Coon et al. It can be conducted with or without a control. The inclusion of a control, when appropriately chosen, improves the strength of the study design because it can account for seasonal trends and potential confounders that vary over time. The control can be a different group of hospitals or participants that are similar but did not receive the intervention, or it can be a different outcome in the same group of hospitals or participants that are not expected to be affected by the intervention. The ITS design may also be set up to estimate the individual effects of multicomponent interventions. If the different components are phased in sequentially over time, then it may be possible to interrupt the time series at these points and estimate the impact of each intervention component.

Other examples of ITS studies in hospital medicine include those that evaluated the impact of a readmission-reduction program,9 of state sepsis regulations on in-hospital mortality,10 of resident duty-hour reform on mortality among hospitalized patients,11 of a quality-improvement initiative on early discharge,12 and of national guidelines on pediatric pneumonia antibiotic selection.13 There are several types of ITS analysis, and in this article, we focus on segmented regression without a control group.7,8

WHAT IS A SEGMENTED REGRESSION ITS?

Segmented regression is the statistical model used to measure (a) the immediate change in the outcome (level) at the start of the intervention and (b) the change in the trend of the outcome (slope) in the postintervention period vs that in the preintervention period. Therefore, the intervention effect size is expressed in terms of the level change and the slope change. To function properly, the models require several repeated (eg, monthly) measurements of the outcome before and after the intervention. Some experts suggest a minimum of 4 to 12 observations, depending on a number of factors including the stability of the outcome and seasonal variations.7,8 If changes before and after more than one intervention are being examined, there should be the minimum number of observations separating them. Unlike typical regression models, time-series models can correct for autocorrelation if it is present in the data. Autocorrelation is the type of correlation that arises when data are collected over time, with those closest in time being more strongly correlated (there are also other types of autocorrelation, such as seasonal patterns). Using available statistical software, autocorrelation can be detected and, if present, it can be controlled for in the segmented regression models.

HOW ARE SEGMENTED REGRESSION RESULTS PRESENTED?

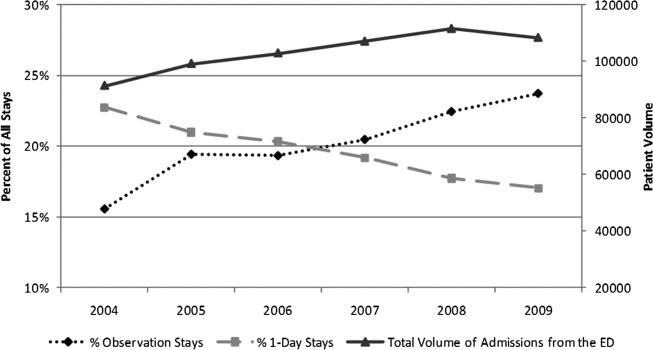

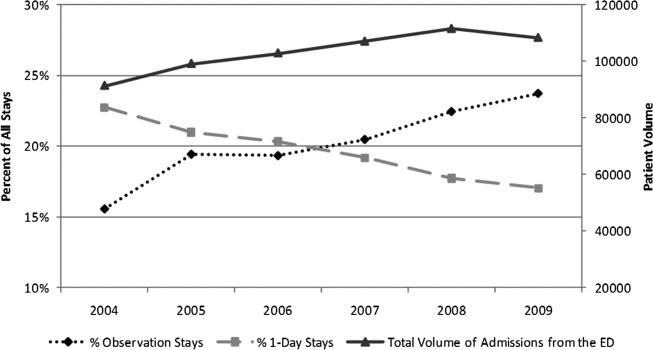

Coon et al present results of their ITS analysis in a panel of figures detailing each study outcome, ICU admission, ICU length of stay, total length of stay, and rates of mechanical ventilation. Each panel shows the rate of change in the outcome per season across hospitals, before and after adoption of heated high-flow nasal cannula protocols, and the level change at the time of adoption.

To further explain how segmented regression results are presented, in the Figure we detail the structure of a segmented regression figure evaluating the impact of an intervention without a control group. In addition to the regression figure, authors typically provide 95% CIs around the rates, level change, and the difference between the postintervention and preintervention periods, along with P values demonstrating whether the rates, level change, and the differences between period slopes differ significantly from zero.

WHAT ARE THE UNDERLYING ASSUMPTIONS OF THE SEGMENTED REGRESSION ITS?

Segmented regression models assume a linear trend in the outcome. If the outcome follows a nonlinear pattern (eg, exponential spread of a disease during a pandemic), then using different distributions in the modeling or transformations of the data may be necessary. The validity of the comparison between the pre- and postintervention groups relies on the similarity between the populations. When there is imbalance, investigators can consider matching based on important characteristics or applying risk adjustment as necessary. Another important assumption is that the outcome of interest is unchanged in the absence of the intervention. Finally, the analysis assumes that the intervention is fully implemented at the time the postintervention period begins. Often, there is a washout period during which the old approach is stopped and the new approach (the intervention) is being implemented and can easily be taken into account.

WHAT ARE THE STRENGTHS OF THE SEGMENTED REGRESSION ITS?

Hospital medicine research often asks whether an intervention, such as a policy or guideline, has improved quality of care and whether it had any unintended consequences. Alternatively, investigators may want to understand the impact of an event, such as a natural disaster or a pandemic, on hospital care. The study design that provides the best estimate of the causal effect of an intervention is the randomized controlled trial (RCT). The goal of randomization, which can be implemented at the patient or cluster level (eg, hospitals), is to balance known and unknown confounders between study groups.

However, an RCT may not be feasible for several reasons: complexity, insufficient setup time or funding, ethical barriers to randomization, unwillingness of funders or payers to withhold the intervention from patients (ie, the control group), or anticipated contamination of the intervention into the control group (eg, provider practice change interventions). In addition, it may be impossible to conduct an RCT because the investigator does not have control over the design of an intervention or because they are studying an event, such as a pandemic.

In the June 2020 issue of the Journal of Hospital Medicine, Coon et al1 use a type of quasi-experimental design (QED)—specifically, the interrupted time series (ITS)—to examine the impact of the adoption of ward-based high-flow nasal cannula protocols on intensive care unit (ICU) admission for bronchiolitis at children’s hospitals. In this methodologic progress note, we discuss QEDs for evaluating the impact of healthcare interventions or events and focus on ITS, one of the strongest QEDs.

WHAT IS A QUASI-EXPERIMENTAL DESIGN?

Quasi-experimental design refers to a broad range of nonrandomized or partially randomized pre- vs postintervention studies.2 To test a causal hypothesis without randomization, QEDs define a comparison group or time period in which the intervention has not been implemented, as well as at least one group or time period in which it has. In a QED, the control may differ from the intervention group or time period in its patients, sites, or time period (sometimes described as a “nonequivalent control group”). Several design and analytic approaches can strengthen the conclusions a study is able to draw about the causal impact of the intervention.2,3 Because randomization is not required, QEDs can include broader populations than are feasible in RCTs, which increases the applicability and generalizability of the results. They are therefore a powerful design for testing the effectiveness of interventions in real-world settings.

The choice of QED depends on whether the investigators are conducting a prospective evaluation and have control over the study design (ie, the ordering of the intervention, selection of sites or individuals, and/or timing and frequency of data collection) or whether they have no control over the intervention, a situation known as a “natural experiment.”4,5 Some studies also combine two QEDs.6 The Table provides a brief summary of different QEDs, ordered by methodologic strength, and identifies those that can be used to study natural experiments. In the study by Coon et al,1 an ITS was used instead of a methodologically stronger QED, such as the stepped-wedge design, because the investigators did not control the rollout of heated high-flow nasal cannula protocols across hospitals.

WHAT IS AN INTERRUPTED TIME SERIES?

Interrupted time series designs use repeated observations of an outcome over time. The series is then divided, or “interrupted,” into two periods: before and after the intervention or event. The preintervention data are used to estimate an underlying trend in the outcome. That trend is assumed to continue into the postintervention period, which provides an estimate of what would have occurred without the intervention. Any significant change in the level of the outcome at the beginning of the postintervention period, or in its trend during the postintervention period, is then attributed to the intervention.
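The counterfactual logic described above can be illustrated with a minimal Python sketch. It assumes equally spaced measurements indexed 0, 1, 2, and so on. It fits an ordinary least-squares line to the preintervention observations only, carries that line forward, and reports the gap between observed and projected values in each postintervention period. The function names and the example numbers are hypothetical; they are not taken from Coon et al.

```python
def fit_line(xs, ys):
    """Ordinary least-squares intercept and slope for a single predictor."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


def counterfactual_gap(series, start):
    """Observed minus projected outcome for each postintervention period.

    series: outcome measured at equally spaced times 0, 1, 2, ...
    start:  index of the first postintervention observation
    """
    # Estimate the underlying trend from preintervention data only.
    intercept, slope = fit_line(list(range(start)), series[:start])
    gaps = []
    for t in range(start, len(series)):
        projected = intercept + slope * t  # preintervention trend carried forward
        gaps.append(series[t] - projected)
    return gaps
```

For a hypothetical series [20, 19.5, 19, 18.5, 16, 15.5, 15] with the intervention at index 4, the preintervention line falls by 0.5 per period, and each postintervention value sits 2 units below its projection. This sketch shows only the idea of a projected counterfactual. Formal inference about level and slope changes requires fitting a segmented regression model to the whole series.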

There are several important methodologic considerations when designing an ITS study, as detailed in other review papers.2,3,7,8 An ITS design can be retrospective or prospective. It can involve a single center or multiple sites, as in Coon et al. It can be conducted with or without a control. An appropriately chosen control strengthens the design because it can account for seasonal trends and potential confounders that vary over time. The control can be a different group of similar hospitals or participants that did not receive the intervention. Alternatively, it can be a different outcome, in the same hospitals or participants, that is not expected to be affected by the intervention. An ITS design can also be set up to estimate the individual effects of a multicomponent intervention: if the components are phased in sequentially, it may be possible to interrupt the time series at each of these points and estimate the impact of each component.

Other examples of ITS studies in hospital medicine include those that evaluated the impact of a readmission-reduction program,9 of state sepsis regulations on in-hospital mortality,10 of resident duty-hour reform on mortality among hospitalized patients,11 of a quality-improvement initiative on early discharge,12 and of national guidelines on pediatric pneumonia antibiotic selection.13 There are several types of ITS analysis, and in this article, we focus on segmented regression without a control group.7,8

WHAT IS A SEGMENTED REGRESSION ITS?

Segmented regression is the statistical model used to measure (a) the immediate change in the outcome (level) at the start of the intervention and (b) the change in the trend of the outcome (slope) in the postintervention period vs the preintervention period. The intervention effect is therefore expressed in terms of the level change and the slope change. To function properly, these models require several repeated (eg, monthly) measurements of the outcome before and after the intervention. Some experts suggest a minimum of 4 to 12 observations, depending on factors that include the stability of the outcome and seasonal variation.7,8 If changes before and after more than one intervention are examined, at least this minimum number of observations should separate the interventions. Typical regression models assume that observations are independent; time-series models, in contrast, can correct for autocorrelation if it is present in the data. Autocorrelation is the correlation that arises when data are collected over time: observations closer in time are more strongly correlated. (Other forms of autocorrelation also exist, such as seasonal patterns.) Available statistical software can detect autocorrelation and, if it is present, control for it in the segmented regression model.
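The segmented regression model described above is commonly written as y_t = b0 + b1*t + b2*post_t + b3*(t - start)*post_t + e_t. In this model, post_t is 1 from the first postintervention observation onward and 0 before it. The coefficient b2 is the level change and b3 is the slope change. Below is a minimal standard-library Python sketch, assuming equally spaced observations and ordinary least squares. It also includes a Durbin-Watson statistic as one common way to screen residuals for lag-1 autocorrelation. Correcting for autocorrelation (for example, with autoregressive error models) is left to dedicated statistical software. The function names are hypothetical. The time-after term here is coded as 0 at the first postintervention observation; some authors start that count at 1 instead.

```python
def solve(a, b):
    """Solve the square system a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):  # back substitution
        x[i] = (m[i][n] - sum(m[i][j] * x[j] for j in range(i + 1, n))) / m[i][i]
    return x


def segmented_regression(y, start):
    """OLS fit of y_t = b0 + b1*t + b2*post_t + b3*(t - start)*post_t."""
    rows = []
    for t in range(len(y)):
        post = 1.0 if t >= start else 0.0
        rows.append([1.0, float(t), post, (t - start) * post])
    k = len(rows[0])
    # Normal equations: (X'X) b = X'y
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * yt for r, yt in zip(rows, y)) for i in range(k)]
    b = solve(xtx, xty)
    fitted = [sum(bi * xi for bi, xi in zip(b, r)) for r in rows]
    return {
        "intercept": b[0],
        "pre_slope": b[1],
        "level_change": b[2],
        "slope_change": b[3],
        "residuals": [yt - ft for yt, ft in zip(y, fitted)],
    }


def durbin_watson(residuals):
    """Durbin-Watson statistic: near 2 suggests little lag-1 autocorrelation,
    well below 2 suggests positive autocorrelation."""
    num = sum((residuals[i] - residuals[i - 1]) ** 2 for i in range(1, len(residuals)))
    den = sum(e ** 2 for e in residuals)
    return num / den
```

Writing the solver out keeps the example dependency-free. In practice, a regression package would also supply standard errors and autocorrelation-robust options.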

HOW ARE SEGMENTED REGRESSION RESULTS PRESENTED?

Coon et al present the results of their ITS analysis in a set of figure panels, one for each study outcome: ICU admission, ICU length of stay, total length of stay, and rate of mechanical ventilation. Each panel shows the rate of change in the outcome per season across hospitals before and after adoption of heated high-flow nasal cannula protocols, as well as the level change at the time of adoption.

To further explain how segmented regression results are presented, the Figure details the structure of a segmented regression figure evaluating the impact of an intervention without a control group. In addition to the figure, authors typically report 95% CIs for the preintervention and postintervention rates (slopes), the level change, and the difference between the postintervention and preintervention slopes. They also report P values indicating whether each of these quantities differs significantly from zero.
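The quantities usually reported can be computed from a single model fit, as in the Python sketch below. It is a minimal sketch under stated assumptions. It uses ordinary least squares with independent, constant-variance errors, so it ignores autocorrelation. It also uses Wald-type intervals with a normal critical value of 1.96 by default; for short series, a t quantile with n - 4 degrees of freedom (for example, from scipy.stats.t.ppf) is more appropriate. The postintervention slope is b1 + b3, so its variance needs the covariance term: Var(b1) + Var(b3) + 2Cov(b1, b3). The function names are hypothetical.

```python
import math


def invert(a):
    """Invert a square matrix by Gauss-Jordan elimination with partial pivoting."""
    n = len(a)
    m = [row[:] + [1.0 if i == j else 0.0 for j in range(n)] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        p = m[col][col]
        m[col] = [v / p for v in m[col]]
        for r in range(n):
            if r != col:
                f = m[r][col]
                m[r] = [v - f * w for v, w in zip(m[r], m[col])]
    return [row[n:] for row in m]


def segmented_cis(y, start, crit=1.96):
    """Estimates and Wald-type CIs for pre slope, post slope, level and slope change."""
    rows = [[1.0, float(t), float(t >= start), float(max(t - start, 0))]
            for t in range(len(y))]
    k = 4
    xtx_inv = invert([[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)])
    xty = [sum(r[i] * yt for r, yt in zip(rows, y)) for i in range(k)]
    b = [sum(xtx_inv[i][j] * xty[j] for j in range(k)) for i in range(k)]
    resid = [yt - sum(bi * xi for bi, xi in zip(b, r)) for r, yt in zip(rows, y)]
    sigma2 = sum(e * e for e in resid) / (len(y) - k)      # residual variance
    cov = [[sigma2 * v for v in row] for row in xtx_inv]    # covariance of estimates

    def ci(est, var):
        half = crit * math.sqrt(var)
        return (est - half, est + half)

    post_slope = b[1] + b[3]
    post_var = cov[1][1] + cov[3][3] + 2 * cov[1][3]
    return {
        "pre_slope": (b[1], ci(b[1], cov[1][1])),
        "post_slope": (post_slope, ci(post_slope, post_var)),
        "level_change": (b[2], ci(b[2], cov[2][2])),
        "slope_change": (b[3], ci(b[3], cov[3][3])),
    }
```

The "slope_change" entry is the difference between the postintervention and preintervention slopes. Its interval excluding zero corresponds to a two-sided test at roughly the 5% level under the same assumptions.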

WHAT ARE THE UNDERLYING ASSUMPTIONS OF THE SEGMENTED REGRESSION ITS?

Segmented regression models assume a linear trend in the outcome. If the outcome follows a nonlinear pattern (eg, exponential spread of a disease during a pandemic), different distributions in the model or transformations of the data may be necessary. The validity of the comparison between the preintervention and postintervention periods also relies on the similarity of their populations; when there is imbalance, investigators can consider matching on important characteristics or applying risk adjustment as necessary. Another important assumption is that, in the absence of the intervention, the preintervention trend in the outcome would have continued unchanged. Finally, the analysis assumes that the intervention is fully implemented when the postintervention period begins. Often there is a washout period, during which the old approach is stopped and the new approach (the intervention) is being implemented. This period can be accounted for in the analysis, for example by excluding it.
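Two of these assumptions are often handled when the data are prepared, before any model is fit. The Python sketch below assumes equally spaced observations. It shows one possible convention: observations in a washout window are dropped, and the postintervention segment is taken to begin after that window. It also shows how an optional log transform turns steady exponential growth or decline into a linear trend. Both choices, and the function name, are illustrative assumptions rather than a prescribed method.

```python
import math


def prepare_its_data(y, start, washout=0, log_transform=False):
    """Build (time, post, time_after, outcome) records for a segmented regression.

    y:             outcome at equally spaced times 0, 1, 2, ...
    start:         index where implementation of the intervention begins
    washout:       number of phase-in periods to exclude after `start`
    log_transform: model log(y) instead of y (requires every y > 0)
    """
    post_start = start + washout
    records = []
    for t, value in enumerate(y):
        if start <= t < post_start:
            continue  # phase-in period: intervention only partially implemented
        post = 1 if t >= post_start else 0
        time_after = t - post_start if post else 0
        outcome = math.log(value) if log_transform else value
        records.append((t, post, time_after, outcome))
    return records
```

For example, with six monthly values, start=2, and washout=2, months 2 and 3 are dropped and month 4 becomes the first postintervention observation. With a series that doubles every period, the log-transformed outcome rises by the same amount (log 2) each period, so a linear trend fits it exactly.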

WHAT ARE THE STRENGTHS OF THE SEGMENTED REGRESSION ITS?

There are several strengths of ITS analysis and segmented regression.7,8 First, this approach accounts for a possible secular trend in the outcome that may have been present before the intervention. For example, in a simple pre/post study, investigators might conclude that a readmissions program was effective because the mean readmission percentage was significantly lower after the intervention than before. But what if the readmission rate was already declining before the intervention? With an ITS approach, they might instead find that readmissions simply continued to decrease after the intervention at the same rate as before, and they would therefore conclude that the intervention was not effective. Second, because ITS evaluates changes in rates of an outcome at the population level, confounding by individual-level variables will not introduce serious bias unless the confounding occurred at the same time as the intervention. Third, ITS can be used to measure the unintended consequences of interventions or events, because investigators can construct separate time-series analyses for different outcomes. Fourth, ITS can be used to evaluate the impact of an intervention on subpopulations (eg, those grouped by age, sex, or race) through stratified analyses. Fifth, ITS provides simple, clear graphical results that are easily understood by varied audiences.
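The readmissions example can be made concrete with hypothetical numbers. Suppose a readmission percentage starts at 18% and falls by 0.2 percentage points every month for 24 months, and the program introduced at month 12 has no effect. The Python sketch below compares the two designs on such a noise-free series. It relies on the fact that the four-parameter segmented model, fitted by ordinary least squares without autocorrelation adjustment, is equivalent to fitting separate lines to the two periods. The names and numbers are illustrative only.

```python
def fit_line(ts, ys):
    """Ordinary least-squares intercept and slope for one segment."""
    n = len(ts)
    mt, my = sum(ts) / n, sum(ys) / n
    slope = (sum((t - mt) * (y - my) for t, y in zip(ts, ys))
             / sum((t - mt) ** 2 for t in ts))
    return my - slope * mt, slope


def compare_designs(y, start):
    """Return (pre/post mean difference, ITS level change, ITS slope change)."""
    pre_t, post_t = list(range(start)), list(range(start, len(y)))
    pre_y, post_y = y[:start], y[start:]
    # Simple pre/post design: difference in means, ignoring any trend.
    mean_diff = sum(post_y) / len(post_y) - sum(pre_y) / len(pre_y)
    # Segmented regression, expressed as separate line fits per period.
    a0, b0 = fit_line(pre_t, pre_y)
    a1, b1 = fit_line(post_t, post_y)
    level_change = (a1 + b1 * start) - (a0 + b0 * start)
    slope_change = b1 - b0
    return mean_diff, level_change, slope_change


readmission_pct = [18 - 0.2 * t for t in range(24)]  # hypothetical steady decline
```

Calling compare_designs(readmission_pct, start=12) gives a pre/post mean difference of -2.4 percentage points. The level and slope changes, however, are both zero, up to floating-point rounding: the apparent improvement is entirely the preexisting trend.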

WHAT ARE THE IMPORTANT LIMITATIONS OF AN ITS?

By accounting for preintervention trends, ITS studies permit stronger causal inference than cross-sectional or simple pre/post QEDs do, but they may be prone to confounding by cointerventions or by changes in population composition. Causal inference based on an ITS analysis is valid only to the extent that the intervention was the only thing that changed at the point dividing the preintervention and postintervention periods. Investigators should consider this in the design and discuss any coincident interventions. If there are multiple interventions over time, the study design can account for them by creating multiple points of interruption, provided there are sufficient measurements of the outcome between interventions. If the composition of the population changes at the same time as the intervention, bias is introduced. Changes in the ability to measure the outcome, or in its definition, also threaten the validity of the study’s inferences. Finally, when the outcome is a population-level measurement, inferences about individual-level outcomes are inappropriate because of the ecological fallacy (ie, deducing conclusions about individuals from findings about the group to which they belong). For example, Coon et al found that infants with bronchiolitis in the ward-based high-flow nasal cannula protocol group had greater ICU admission rates. It would be inappropriate to conclude from this that an individual infant in a hospital with a ward-based protocol is more likely to be admitted to the ICU.
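Multiple points of interruption are usually implemented by adding one pair of terms per interruption to the design matrix: an indicator for the level change and a time-since-interruption term for the slope change. The Python sketch below builds such rows and rejects segments with too few observations. The threshold min_obs is the analyst's choice (suggestions of 4 to 12 observations have been made), and the function name is hypothetical. The resulting rows can be passed to any ordinary least-squares routine.

```python
def multi_interruption_rows(n_periods, breakpoints, min_obs):
    """Design-matrix rows for a segmented regression with several interruptions.

    Columns: intercept, time, then for each breakpoint k an indicator for
    t >= k (level change at k) and the time elapsed since k (slope change
    after k). Raises ValueError if any segment has fewer than min_obs points.
    """
    if list(breakpoints) != sorted(breakpoints):
        raise ValueError("breakpoints must be in increasing order")
    edges = [0, *breakpoints, n_periods]
    for lo, hi in zip(edges, edges[1:]):
        if hi - lo < min_obs:
            raise ValueError(f"segment [{lo}, {hi}) has fewer than {min_obs} observations")
    rows = []
    for t in range(n_periods):
        row = [1.0, float(t)]
        for k in breakpoints:
            after = t >= k
            row.append(1.0 if after else 0.0)            # level change at k
            row.append(float(t - k) if after else 0.0)   # slope change after k
        rows.append(row)
    return rows
```

With two interruptions, the model has six coefficients. Each pair estimates the level and slope change at its own interruption, relative to the trend in the segment immediately before it.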

CONCLUSION

Studies evaluating interventions and events are important for informing healthcare practice, policy, and public health. While an RCT is the preferred method for such evaluations, investigators must often consider alternative study designs when an RCT is not feasible or when evaluation of real-world outcomes is desired. Quasi-experimental designs study the impact of interventions in real-world settings without randomization, and an interrupted time series is a strong QED for evaluating interventions and natural experiments.

1. Coon ER, Stoddard G, Brady PW. Intensive care unit utilization after adoption of a ward-based high flow nasal cannula protocol. J Hosp Med. 2020;15(6):325-330. https://doi.org/10.12788/jhm.3417

2. Handley MA, Lyles CR, McCulloch C, Cattamanchi A. Selecting and improving quasi-experimental designs in effectiveness and implementation research. Annu Rev Public Health. 2018;39:5-25. https://doi.org/10.1146/annurev-publhealth-040617-014128

3. Craig P, Katikireddi SV, Leyland A, Popham F. Natural experiments: an overview of methods, approaches, and contributions to public health intervention research. Annu Rev Public Health. 2017;38:39-56. https://doi.org/10.1146/annurev-publhealth-031816-044327