In the Literature: Research You Need to Know

Clinical question: Which clinical decision rule—Wells rule, simplified Wells rule, revised Geneva score, or simplified revised Geneva score—is the best for evaluating a patient with a possible acute pulmonary embolism?

Background: The use of standardized clinical decision rules to determine the probability of an acute pulmonary embolism (PE) has significantly improved the diagnostic evaluation of patients with suspected PE. Several clinical decision rules are available and widely used, but they have not previously been compared directly.

Study design: Prospective cohort.

Setting: Seven hospitals in the Netherlands.

Synopsis: A total of 807 patients with a suspected first episode of acute PE had a sequential workup with clinical probability assessment and D-dimer testing. When PE was considered unlikely according to all four clinical decision rules and the D-dimer result was normal, PE was excluded. In the remaining patients, a CT scan was used to confirm or exclude the diagnosis.

The prevalence of PE was 23%. Combined with a normal D-dimer, the decision rules excluded PE in 22% to 24% of patients. Thirty percent of patients had discordant decision rule outcomes, but PE was not detected by CT in any of these patients when combined with a normal D-dimer.

This study has practical limitations because management was based on a combination of four decision rules and D-dimer testing rather than only one rule and D-dimer testing, which is the more realistic clinical approach.

Bottom line: When used correctly and in conjunction with a D-dimer result, the Wells rule, simplified Wells rule, revised Geneva score, and simplified revised Geneva score all perform similarly in the exclusion of acute PE.

Citation: Douma RA, Mos IC, Erkens PM, et al. Performance of 4 clinical decision rules in the diagnostic management of acute pulmonary embolism: a prospective cohort study. Ann Intern Med. 2011;154:709-718.

For more physician reviews of HM-related literature, check out this month's "In the Literature."

When Should a Patient with Ascites Receive Spontaneous Bacterial Peritonitis (SBP) Prophylaxis?

Case

A 54-year-old man with end-stage liver disease (ESLD) and no prior history of spontaneous bacterial peritonitis (SBP) presents with increasing shortness of breath and abdominal distention. He is admitted for worsening volume overload. The patient reveals that he has not been compliant with his diuretics. On the day of admission, a large-volume paracentesis is performed. Results are significant for a white blood cell count of 150 cells/mm3 and a total protein of 0.9 g/dL. The patient is started on furosemide and spironolactone, and his symptoms significantly improve throughout his hospitalization. His medications are reconciled on the day of discharge. He is not on any antibiotics for SBP prophylaxis; should he be? In general, which patients with ascites should receive SBP prophylaxis?

Overview

Spontaneous bacterial peritonitis is an infection of ascitic fluid that occurs in the absence of an identified intra-abdominal source of infection or inflammation, such as perforation or abscess.1 It is diagnosed when the polymorphonuclear cell (PMN) count in the ascitic fluid is equal to or greater than 250 cells/mm3, with or without positive cultures.

SBP is a significant cause of morbidity and mortality in patients with cirrhosis, with a mortality rate of 20% to 40%.2 A bacterial infection is present on admission, or develops during hospitalization, in 32% to 34% of cirrhotic patients, and 25% of these infections are SBP.1 Changes in gut motility, mucosal defense, and microflora allow for translocation of bacteria into enteric lymph nodes and the bloodstream, resulting in seeding of the peritoneal fluid and SBP.1 Alterations in systemic and localized immune defenses, both of which are reduced in patients with liver disease, also play a role in SBP pathogenesis (see Table 1, p. 41).

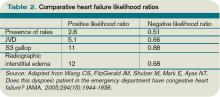

Current evidence supports the use of a third-generation cephalosporin or amoxicillin/clavulanate for initial treatment of SBP, as most infections are caused by gram-negative bacilli, in particular E. coli (see Table 2 on p. 41 and Table 3 on p. 42).1 Alternatively, an oral or intravenous fluoroquinolone could be used if the prevalence of fluoroquinolone-resistant organisms is low.1

Due to the frequency and morbidity associated with SBP, there is great interest in preventing it. However, the use of prophylactic antibiotics needs to be restricted to patients who are at highest risk of developing SBP. According to numerous studies, patients at high risk for SBP include:

- Patients with a prior SBP history;

- Patients admitted with a gastrointestinal bleed; and

- Patients with low total protein content in their ascitic fluid (defined as <1.5 g/dL).1
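The three risk factors listed above can be read as a simple checklist. The following Python sketch is illustrative only: the function name, parameter names, and returned labels are assumptions introduced here, and the only clinical value it encodes is the 1.5 g/dL cutoff for low ascitic fluid protein cited in the list.

```python
from typing import List, Optional

# Cutoff for "low" ascitic fluid total protein, taken from the list above.
LOW_PROTEIN_CUTOFF_G_DL = 1.5


def sbp_risk_factors(prior_sbp: bool,
                     gi_bleed_on_admission: bool,
                     ascitic_protein_g_dl: Optional[float]) -> List[str]:
    """Return which of the three high-risk criteria a patient meets.

    Hypothetical helper for illustration; the names are not from any guideline.
    """
    factors = []
    if prior_sbp:
        factors.append("prior SBP")
    if gi_bleed_on_admission:
        factors.append("gastrointestinal bleed")
    # The protein value may be unknown if no paracentesis was performed.
    if (ascitic_protein_g_dl is not None
            and ascitic_protein_g_dl < LOW_PROTEIN_CUTOFF_G_DL):
        factors.append("low ascitic fluid protein")
    return factors


# The patient in the case: no prior SBP, no GI bleed, ascitic protein of 0.9.
print(sbp_risk_factors(False, False, 0.9))  # ['low ascitic fluid protein']
```

A non-empty result only flags a risk factor. Whether low ascitic fluid protein alone warrants long-term prophylaxis is the debated question taken up in the sections that follow.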

SBP History

Spontaneous bacterial peritonitis portends bad outcomes. The one-year mortality rate after an episode of SBP is 30% to 50%.1 Furthermore, patients who have recovered from a previous episode of SBP have a 70% chance of developing another episode of SBP in that year.1,2 In one study, norfloxacin was shown to decrease the one-year risk of SBP to 20% from 68% in patients with a history of SBP.3 Additionally, the likelihood of developing SBP from gram-negative bacilli was reduced to 3% from 60%. In order to be efficacious, norfloxacin must be given daily. When fluoroquinolones are prescribed less than once daily, there is a higher rate of fluoroquinolone-resistant organisms in the stool.1

Though once-daily dosing of norfloxacin is recommended to decrease the promotion of resistant organisms in prophylaxis against SBP, ciprofloxacin once weekly is acceptable. In a group of patients with low ascitic protein content, with or without a history of SBP, weekly ciprofloxacin has been shown to decrease SBP incidence to 4% from 22% at six months.4 In regard to length of treatment, recommendations are to continue prophylactic antibiotics until the ascites resolves, the patient receives a transplant, or the patient dies.1

Saab et al studied the impact of oral antibiotic prophylaxis in patients with advanced liver disease on morbidity and mortality.5 The authors examined prospective, randomized, controlled trials that compared high-risk cirrhotic patients receiving oral antibiotic prophylaxis for SBP with groups receiving placebo or no intervention. Eight studies totaling 647 patients were included in the analysis.

The overall mortality rate for patients treated with SBP prophylaxis was 16%, compared with 25% for the control group. Groups treated with prophylactic antibiotics also had a lower incidence of all infections (6.2% vs. 22.2% in the control groups). Additionally, a survival benefit was seen at three months in the group that received prophylactic antibiotics.

The absolute risk reduction with prophylactic antibiotics for primary prevention of SBP was 8% with a number needed to treat of 13. The incidence of gastrointestinal (GI) bleeding, renal failure, and hepatic failure did not significantly differ between treatment and control groups. Thus, survival benefit is thought to be related to the reduced incidence of infections in the group receiving prophylactic antibiotics.5
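The number needed to treat follows directly from the absolute risk reduction (ARR): NNT = 1/ARR, conventionally rounded up to the next whole patient. A minimal Python sketch of that arithmetic, using the 8% figure quoted above (the function name is an assumption introduced for illustration):

```python
import math


def number_needed_to_treat(absolute_risk_reduction: float) -> int:
    """NNT = 1 / ARR, rounded up to a whole patient."""
    if absolute_risk_reduction <= 0:
        raise ValueError("ARR must be positive for an NNT to be defined")
    return math.ceil(1 / absolute_risk_reduction)


# ARR of 8% for primary prevention of SBP, as reported above.
print(number_needed_to_treat(0.08))  # 13 (1 / 0.08 = 12.5, rounded up)
```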

History of GI Bleeding

The incidence of developing SBP in cirrhotics with an active GI bleed is anywhere from 20% to 45%.1,2 For those with ascites of any etiology and a GI bleed, the incidence can be as high as 60%.5 In general, bacterial infections are frequently diagnosed in patients with cirrhosis and GI bleeding, and have been documented in 22% of these patients within the first 48 hours after admission. According to several studies, that percentage can reach as high as 35% to 66% within seven to 14 days of admission.6 A seven-day course of antibiotics, or antibiotics until discharge, is generally acceptable for SBP prophylaxis in the setting of ascites and GI bleeding (see Table 2, right).1

Bernard et al performed a meta-analysis of five trials to assess the efficacy of antibiotic prophylaxis in the prevention of infections and its effect on survival in patients with cirrhosis and GI bleeding. Out of 534 patients, 264 were treated with antibiotics for four to 10 days, and 270 did not receive any antibiotics.

The endpoints of the study were infection; bacteremia and/or SBP; incidence of SBP; and death. Antibiotic prophylaxis not only increased the mean survival rate by 9.1%, but also increased the mean percentage of patients free of infection (32% improvement), bacteremia and/or SBP (19% improvement), and SBP (7% improvement).7

Low Ascitic Fluid Protein

Of the three major risk factors for SBP, ascitic fluid protein content is the most debated. Guarner et al studied the risk of first community-acquired SBP in cirrhotics with low ascitic fluid protein.2 Patients were seen immediately after discharge from the hospital and at two- to three-month intervals. Of the 109 hospitalized patients, 23 (21%) developed SBP, nine of whom developed it during their hospitalization. The one-year cumulative probability of SBP in these patients with low ascitic fluid protein levels was 35%.

During this study, the authors also looked at 20 different patient variables on admission and found two parameters, high bilirubin (>3.2 mg/dL) and low platelet count (<98,000 cells/µL), that were associated with an increased risk of SBP. This is consistent with studies showing that patients with higher Model for End-Stage Liver Disease (MELD) or Child-Pugh scores, indicating more severe liver disease, are at increased risk for SBP. This likely is the reason SBP prophylaxis is recommended for patients with an elevated bilirubin, and higher Child-Pugh scores, by the American Association for the Study of Liver Diseases (see Table 2, p. 41).

Runyon et al showed that 15% of patients with low ascitic fluid protein developed SBP during their hospitalization, as compared with 2% of patients with ascitic fluid protein levels greater than 1 g/dL.8 A randomized, non-placebo-controlled trial by Navasa et al evaluating 70 cirrhotic patients with low ascitic protein levels showed a lower probability of developing SBP in the group placed on SBP prophylaxis with norfloxacin (5% vs. 31%).9 The six-month mortality rate was also lower (19% vs. 36%).

In contrast to the previous studies, Grothe et al found that the presence of SBP was not related to ascitic protein content.10 Given these conflicting studies, controversy remains over whether patients with low ascitic protein should receive long-term prophylactic antibiotics.

Antibiotic Drawbacks

The consensus in the literature is that patients with ascites who are admitted with a GI bleed, or those with a history of SBP, should be placed on SBP prophylaxis. However, patients placed on long-term antibiotics are at risk for developing bacterial resistance. Bacterial resistance in cultures taken from cirrhotic patients with SBP has increased over the last decade, particularly in gram-negative bacteria.5 Patients who receive antibiotics in the pre-transplant setting also are at risk for post-transplant fungal infections.

Additionally, the antibiotic of choice for SBP prophylaxis is typically a fluoroquinolone, which can be expensive. However, numerous studies have shown that the cost of initiating prophylactic therapy for SBP in patients with a prior episode of SBP can be cheaper than treating SBP after diagnosis.2

Back to the Case

Our patient’s paracentesis was negative for SBP. Additionally, he does not have a history of SBP, nor does he have an active GI bleed. His only possible indication for SBP prophylaxis is low ascitic protein concentration. His electrolytes were all within normal limits. Additionally, total bilirubin was only slightly elevated at 2.3 mg/dL.

Based on the American Association for the Study of Liver Diseases guidelines, the patient was not started on SBP prophylaxis. Additionally, given his history of medication noncompliance, there is concern that he might not take the antibiotics as prescribed, thus leading to the development of bacterial resistance and more serious infections in the future.

Bottom Line

Patients with ascites and a prior episode of SBP, and those admitted to the hospital for GI bleeding, should be placed on SBP prophylaxis. SBP prophylaxis for low-protein ascitic fluid remains controversial but is recommended by the American Association for the Study of Liver Diseases.

Dr. del Pino Jones is a hospitalist at the University of Colorado Denver.

References

1. Ghassemi S, Garcia-Tsao G. Prevention and treatment of infections in patients with cirrhosis. Best Pract Res Clin Gastroenterol. 2007;21(1):77-93.

2. Guarner C, Solà R, Soriano G, et al. Risk of a first community-acquired spontaneous bacterial peritonitis in cirrhotics with low ascitic fluid protein levels. Gastroenterology. 1999;117(2):414-419.

3. Ginés P, Rimola A, Planas R, et al. Norfloxacin prevents spontaneous bacterial peritonitis recurrence in cirrhosis: results of a double-blind, placebo-controlled trial. Hepatology. 1990;12(4 Pt 1):716-724.

4. Rolachon A, Cordier L, Bacq Y, et al. Ciprofloxacin and long-term prevention of spontaneous bacterial peritonitis: results of a prospective controlled trial. Hepatology. 1995;22(4 Pt 1):1171-1174.

5. Saab S, Hernandez J, Chi AC, Tong MJ. Oral antibiotic prophylaxis reduces spontaneous bacterial peritonitis occurrence and improves short-term survival in cirrhosis: a meta-analysis. Am J Gastroenterol. 2009;104(4):993-1001.

6. Deschênes M, Villeneuve J. Risk factors for the development of bacterial infections in hospitalized patients with cirrhosis. Am J Gastroenterol. 1999;94(8):2193-2197.

7. Bernard B, Grangé J, Khac EN, Amiot X, Opolon P, Poynard T. Antibiotic prophylaxis for the prevention of bacterial infections in cirrhotic patients with gastrointestinal bleeding: a meta-analysis. Hepatology. 1999;29(6):1655-1661.

8. Runyon B. Low-protein-concentration ascitic fluid is predisposed to spontaneous bacterial peritonitis. Gastroenterology. 1986;91(6):1343-1346.

9. Navasa M, Fernandez J, Montoliu S, et al. Randomized, double-blind, placebo-controlled trial evaluating norfloxacin in the primary prophylaxis of spontaneous bacterial peritonitis in cirrhotics with renal impairment, hyponatremia or severe liver failure. J Hepatol. 2006;44(Suppl 2):S51.

10. Grothe W, Lotterer E, Fleig W. Factors predictive for spontaneous bacterial peritonitis (SBP) under routine inpatient conditions in patients with cirrhosis: a prospective multicenter trial. J Hepatol. 1990;34(4):547.

Case

A 54-year-old man with end-stage liver disease (ESLD) and no prior history of spontaneous bacterial peritonitis (SBP) presents with increasing shortness of breath and abdominal distention. He is admitted for worsening volume overload. The patient reveals that he has not been compliant with his diuretics. On the day of admission, a large-volume paracentesis is performed. Results are significant for a white blood cell count of 150 cells/mm3 and a total protein of 0.9 g/ul. The patient is started on furosemide and spironolactone, and his symptoms significantly improve throughout his hospitalization. His medications are reconciled on the day of discharge. He is not on any antibiotics for SBP prophylaxis; should he be? In general, which patients with ascites should receive SBP prophylaxis?

Overview

Spontaneous bacterial peritonitis is an infection of ascitic fluid that occurs in the absence of an indentified intra-abdominal source of infection or inflammation, i.e., perforation or abscess.1 It is diagnosed when the polymorphonuclear cell (PMN) count in the ascitic fluid is equal to or greater than 250 cells/mm3, with or without positive cultures.

SBP is a significant cause of morbidity and mortality in patients with cirrhosis, with the mortality rate approaching 20% to 40%.2 Of the 32% to 34% of cirrhotic patients who present with, or develop, a bacterial infection during their hospitalization, 25% are due to SBP.1 Changes in gut motility, mucosal defense, and microflora allow for translocation of bacteria into enteric lymph nodes and the bloodstream, resulting in seeding of the peritoneal fluid and SBP.1 Alterations in both systemic and localized immune defenses, both of which are reduced in patients with liver disease, also play a role in SBP pathogenesis (see Table 1, p. 41).

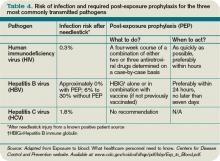

Current evidence supports the use of a third-generation cephalosporin or amoxicillin/clavulanate for initial treatment of SBP, as most infections are caused by gram-negative bacilli, in particular E. coli (see Table 2 on p. 41 and Table 3 on p. 42).1 Alternatively, an oral or intravenous fluoroquinolone could be used if the prevalence of fluoroquinolone-resistant organisms is low.1

Due to the frequency and morbidity associated with SBP, there is great interest in preventing it. However, the use of prophylactic antibiotics needs to be restricted to patients who are at highest risk of developing SBP. According to numerous studies, patients at high risk for SBP include:

- Patients with a prior SBP history;

- Patients admitted with a gastrointestinal bleed; and

- Patients with low total protein content in their ascitic fluid (defined as <1.5 g/ul).1

SBP History

Spontaneous bacterial peritonitis portends bad outcomes. The one-year mortality rate after an episode of SBP is 30% to 50%.1 Furthermore, patients who have recovered from a previous episode of SBP have a 70% chance of developing another episode of SBP in that year.1,2 In one study, norfloxacin was shown to decrease the one-year risk of SBP to 20% from 68% in patients with a history of SBP.3 Additionally, the likelihood of developing SBP from gram-negative bacilli was reduced to 3% from 60%. In order to be efficacious, norfloxacin must be given daily. When fluoroquinolones are prescribed less than once daily, there is a higher rate of fluoroquinolone resistant organisms in the stool.1

Though once-daily dosing of norfloxacin is recommended to decrease the promotion of resistant organisms in prophylaxis against SBP, ciprofloxacin once weekly is acceptable. In a group of patients with low ascitic protein content, with or without a history of SBP, weekly ciprofloxacin has been shown to decrease SBP incidence to 4% from 22% at six months.4 In regard to length of treatment, recommendations are to continue prophylactic antibiotics until resolution of ascites, the patient receives a transplant, or the patient passes away.1

Saab et al studied the impact of oral antibiotic prophylaxis in patients with advanced liver disease on morbidity and mortality.5 The authors examined prospective, randomized, controlled trials that compared high-risk cirrhotic patients receiving oral antibiotic prophylaxis for SBP with groups receiving placebo or no intervention. Eight studies totaling 647 patients were included in the analysis.

The overall mortality rate for patients treated with SBP prophylaxis was 16%, compared with 25% for the control group. Groups treated with prophylactic antibiotics also had a lower incidence of all infections (6.2% vs. 22.2% in the control groups). Additionally, a survival benefit was seen at three months in the group that received prophylactic antibiotics.

The absolute risk reduction with prophylactic antibiotics for primary prevention of SBP was 8% with a number needed to treat of 13. The incidence of gastrointestinal (GI) bleeding, renal failure, and hepatic failure did not significantly differ between treatment and control groups. Thus, survival benefit is thought to be related to the reduced incidence of infections in the group receiving prophylactic antibiotics.5

History of GI Bleeding

The incidence of developing SBP in cirrhotics with an active GI bleed is anywhere from 20% to 45%.1,2 For those with ascites of any etiology and a GI bleed, the incidence can be as high as 60%.5 In general, bacterial infections are frequently diagnosed in patients with cirrhosis and GI bleeding, and have been documented in 22% of these patients within the first 48 hours after admission. According to several studies, that percentage can reach as high as 35% to 66% within seven to 14 days of admission.6 A seven-day course of antibiotics, or antibiotics until discharge, is generally acceptable for SBP prophylaxis in the setting of ascites and GI bleeding (see Table 2, right).1

Bernard et al performed a meta-analysis of five trials to assess the efficacy of antibiotic prophylaxis in the prevention of infections and effect on survival in patients with cirrhosis and GI bleeding. Out of 534 patients, 264 were treated with antibiotics between four and 10 days, and 270 did not receive any antibiotics.

The endpoints of the study were infection, bacteremia and/or SBP; incidence of SBP; and death. Antibiotic prophylaxis not only increased the mean survival rate by 9.1%, but also increased the mean percentage of patients free of infection (32% improvement); bacteremia and/or SBP (19% improvement); and SBP (7% improvement).7

Low Ascitic Fluid Protein

Of the three major risk factors for SBP, ascitic fluid protein content is the most debated. Guarner et al studied the risk of first community-acquired SBP in cirrhotics with low ascitic fluid protein.2 Patients were seen immediately after discharge from the hospital and at two- to three-month intervals. Of the 109 hospitalized patients, 23 (21%) developed SBP, nine of which developed SBP during their hospitalization. The one-year cumulative probability of SBP in these patients with low ascitic fluid protein levels was 35%.

During this study, the authors also looked at 20 different patient variables on admission and found that two parameters—high bilirubin (>3.2mg/dL) and low platelet count (<98,000 cells/ul)—were associated with an increased risk of SBP. This is consistent with studies showing that patients with higher Model for End-Stage Liver Disease (MELD) or Child-Pugh scores, indicating more severe liver disease, are at increased risk for SBP. This likely is the reason SBP prophylaxis is recommended for patients with an elevated bilirubin, and higher Child-Pugh scores, by the American Association for the Study of Liver Disease (see Table 2, p. 41).

Runyon et al showed that 15% of patients with low ascitic fluid protein developed SBP during their hospitalization, as compared with 2% of patients with ascitic fluid levels greater than 1 g/dl.8 A randomized, non-placebo-controlled trial by Navasa et al evaluating 70 cirrhotic patients with low ascitic ascitic protein levels showed a lower probability of developing SBP in the group placed on SBP prophylaxis with norfloxacin (5% vs. 31%).9 Six-month mortality rate was also lower (19% vs. 36%).

In contrast to the previous studies, Grothe et al found that the presence of SBP was not related to ascitic protein content.10 Given conflicting studies, controversy still remains on whether patients with low ascitic protein should receive long-term prophylactic antibiotics.

Antibiotic Drawbacks

The consensus in the literature is that patients with ascites who are admitted with a GI bleed, or those with a history of SBP, should be placed on SBP prophylaxis. However, patients placed on long-term antibiotics are at risk for developing bacterial resistance. Bacterial resistance in cultures taken from cirrhotic patients with SBP has increased over the last decade, particularly in gram-negative bacteria.5 Patients who receive antibiotics in the pre-transplant setting also are at risk for post-transplant fungal infections.

Additionally, the antibiotic of choice for SBP prophylaxis is typically a fluoroquinolone, which can be expensive. However, numerous studies have shown that the cost of initiating prophylactic therapy for SBP in patients with a prior episode of SBP can be cheaper than treating SBP after diagnosis.2

Back to the Case

Our patient’s paracentesis was negative for SBP. Additionally, he does not have a history of SBP, nor does he have an active GI bleed. His only possible indication for SBP prophylaxis is low ascitic protein concentration. His electrolytes were all within normal limits. Additionally, total bilirubin was only slightly elevated at 2.3 mg/dL.

Based on the American Association for the Study of Liver Diseases guidelines, the patient was not started on SBP prophylaxis. Additionally, given his history of medication noncompliance, there is concern that he might not take the antibiotics as prescribed, thus leading to the development of bacterial resistance and more serious infections in the future.

Bottom Line

Patients with ascites and a prior episode of SBP, and those admitted to the hospital for GI bleeding, should be placed on SBP prophylaxis. SBP prophylaxis for low protein ascitic fluid remains controversial but is recommended by the American Association for the Study of Liver Diseases. TH

Dr. del Pino Jones is a hospitalist at the University of Colorado Denver.

References

- Ghassemi S, Garcia-Tsao G. Prevention and treatment of infections in patients with cirrhosis. Best Pract Res Clin Gastroenterol. 2007;21(1):77-93.

- Guarner C, Solà R, Soriono G, et al. Risk of a first community-acquired spontaneous bacterial peritonitis in cirrhotics with low ascitic fluid protein levels. Gastroenterology. 1999;117(2):414-419.

- Ginés P, Rimola A, Planas R, et al. Norfloxacin prevents spontaneous bacterial peritonitis recurrence in cirrhosis: results of a double-blind, placebo-controlled trial. Hepatology. 1990;12(4 Pt 1):716-724.

- Rolachon A, Cordier L, Bacq Y, et al. Ciprofloxacin and long-term prevention of spontaneous bacterial peritonitis: results of a prospective controlled trial. Hepatology. 1995;22(4 Pt 1):1171-1174.

- Saab S, Hernandez J, Chi AC, Tong MJ. Oral antibiotic prophylaxis reduces spontaneous bacterial peritonitis occurrence and improves short-term survival in cirrhosis: a meta-analysis. Am J Gastroenterol. 2009;104(4):993-1001.

- Deschênes M, Villeneuve J. Risk factors for the development of bacterial infections in hospitalized patients with cirrhosis. Am J Gastroenterol. 1999;94(8):2193-2197.

- Bernard B, Grangé J, Khac EN, Amiot X, Opolon P, Poynard T. Antibiotic prophylaxis for the prevention of bacterial infections in cirrhotic patients with gastrointestinal bleeding: a meta-analysis. Hepatology. 1999;29(6):1655-1661.

- Runyon B. Low-protein-concentration ascitic fluid is predisposed to spontaneous bacterial peritonitis. Gastroenterology. 1986;91(6):1343-1346.

- Navasa M, Fernandez J, Montoliu S, et al. Randomized, double-blind, placebo-controlled trial evaluating norfloxacin in the primary prophylaxis of spontaneous bacterial peritonitis in cirrhotics with renal impairment, hyponatremia or severe liver failure. J Hepatol. 2006;44(Supp2):S51.

- Grothe W, Lottere E, Fleig W. Factors predictive for spontaneous bacterial peritonitis (SBP) under routine inpatient conditions in patients with cirrhosis: a prospective multicenter trial. J Hepatol. 1990;34(4):547.

Case

A 54-year-old man with end-stage liver disease (ESLD) and no prior history of spontaneous bacterial peritonitis (SBP) presents with increasing shortness of breath and abdominal distention. He is admitted for worsening volume overload. The patient reveals that he has not been compliant with his diuretics. On the day of admission, a large-volume paracentesis is performed. Results are significant for a white blood cell count of 150 cells/mm3 and a total protein of 0.9 g/ul. The patient is started on furosemide and spironolactone, and his symptoms significantly improve throughout his hospitalization. His medications are reconciled on the day of discharge. He is not on any antibiotics for SBP prophylaxis; should he be? In general, which patients with ascites should receive SBP prophylaxis?

Overview

Spontaneous bacterial peritonitis is an infection of ascitic fluid that occurs in the absence of an identified intra-abdominal source of infection or inflammation, such as a perforation or abscess.1 It is diagnosed when the polymorphonuclear cell (PMN) count in the ascitic fluid is equal to or greater than 250 cells/mm3, with or without positive cultures.

SBP is a significant cause of morbidity and mortality in patients with cirrhosis, with the mortality rate approaching 20% to 40%.2 Of the 32% to 34% of cirrhotic patients who present with, or develop, a bacterial infection during their hospitalization, 25% have SBP.1 Changes in gut motility, mucosal defense, and microflora allow for translocation of bacteria into enteric lymph nodes and the bloodstream, resulting in seeding of the peritoneal fluid and SBP.1 Alterations in both systemic and localized immune defenses, both of which are reduced in patients with liver disease, also play a role in SBP pathogenesis (see Table 1, p. 41).

Current evidence supports the use of a third-generation cephalosporin or amoxicillin/clavulanate for initial treatment of SBP, as most infections are caused by gram-negative bacilli, in particular E. coli (see Table 2 on p. 41 and Table 3 on p. 42).1 Alternatively, an oral or intravenous fluoroquinolone could be used if the prevalence of fluoroquinolone-resistant organisms is low.1

Due to the frequency and morbidity associated with SBP, there is great interest in preventing it. However, the use of prophylactic antibiotics needs to be restricted to patients who are at highest risk of developing SBP. According to numerous studies, patients at high risk for SBP include:

- Patients with a prior SBP history;

- Patients admitted with a gastrointestinal bleed; and

- Patients with low total protein content in their ascitic fluid (defined as <1.5 g/dL).1
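
The three risk categories above can be written as a small check. This is a minimal sketch for illustration only, not a clinical tool: the function name `sbp_risk_factors`, its parameters, and the returned labels are hypothetical, and the 1.5 threshold for ascitic total protein is the one quoted in the list.

```python
from typing import List, Optional


def sbp_risk_factors(prior_sbp: bool,
                     gi_bleed: bool,
                     ascitic_protein_g_dl: Optional[float] = None,
                     threshold_g_dl: float = 1.5) -> List[str]:
    """Return the high-risk categories from the list above that apply.

    All names here are hypothetical; the threshold is the one quoted
    in the article and is exposed as a parameter so it can be changed.
    """
    factors = []
    if prior_sbp:
        factors.append("prior SBP")
    if gi_bleed:
        factors.append("GI bleed")
    # Low ascitic protein is only assessed when a value is available.
    if ascitic_protein_g_dl is not None and ascitic_protein_g_dl < threshold_g_dl:
        factors.append("low ascitic fluid protein")
    return factors


# The patient in the case: no prior SBP, no GI bleed, total protein 0.9.
print(sbp_risk_factors(False, False, 0.9))
```

Returning the list of matching categories, rather than a single yes/no, keeps the sketch neutral on the question the article goes on to discuss: whether low ascitic protein alone justifies prophylaxis.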

SBP History

Spontaneous bacterial peritonitis portends bad outcomes. The one-year mortality rate after an episode of SBP is 30% to 50%.1 Furthermore, patients who have recovered from a previous episode of SBP have a 70% chance of developing another episode of SBP in that year.1,2 In one study, norfloxacin was shown to decrease the one-year risk of SBP to 20% from 68% in patients with a history of SBP.3 Additionally, the likelihood of developing SBP from gram-negative bacilli was reduced to 3% from 60%. In order to be efficacious, norfloxacin must be given daily. When fluoroquinolones are prescribed less than once daily, there is a higher rate of fluoroquinolone-resistant organisms in the stool.1

Though once-daily dosing of norfloxacin is recommended to decrease the promotion of resistant organisms in prophylaxis against SBP, ciprofloxacin once weekly is acceptable. In a group of patients with low ascitic protein content, with or without a history of SBP, weekly ciprofloxacin has been shown to decrease SBP incidence to 4% from 22% at six months.4 In regard to length of treatment, recommendations are to continue prophylactic antibiotics until the ascites resolves, the patient receives a transplant, or the patient dies.1

Saab et al studied the impact of oral antibiotic prophylaxis in patients with advanced liver disease on morbidity and mortality.5 The authors examined prospective, randomized, controlled trials that compared high-risk cirrhotic patients receiving oral antibiotic prophylaxis for SBP with groups receiving placebo or no intervention. Eight studies totaling 647 patients were included in the analysis.

The overall mortality rate for patients treated with SBP prophylaxis was 16%, compared with 25% for the control group. Groups treated with prophylactic antibiotics also had a lower incidence of all infections (6.2% vs. 22.2% in the control groups). Additionally, a survival benefit was seen at three months in the group that received prophylactic antibiotics.

The absolute risk reduction with prophylactic antibiotics for primary prevention of SBP was 8% with a number needed to treat of 13. The incidence of gastrointestinal (GI) bleeding, renal failure, and hepatic failure did not significantly differ between treatment and control groups. Thus, survival benefit is thought to be related to the reduced incidence of infections in the group receiving prophylactic antibiotics.5
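
The number needed to treat (NNT) follows directly from the absolute risk reduction (ARR). As a quick check of the figures quoted above, and assuming the usual convention of rounding up to the next whole patient:

```latex
\mathrm{NNT} = \frac{1}{\mathrm{ARR}} = \frac{1}{0.08} = 12.5 \;\Rightarrow\; 13 \text{ patients}
```

In other words, about 13 high-risk patients would need to receive prophylaxis to prevent one death.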

History of GI Bleeding

The incidence of developing SBP in cirrhotics with an active GI bleed is anywhere from 20% to 45%.1,2 For those with ascites of any etiology and a GI bleed, the incidence can be as high as 60%.5 In general, bacterial infections are frequently diagnosed in patients with cirrhosis and GI bleeding, and have been documented in 22% of these patients within the first 48 hours after admission. According to several studies, that percentage can reach as high as 35% to 66% within seven to 14 days of admission.6 A seven-day course of antibiotics, or antibiotics until discharge, is generally acceptable for SBP prophylaxis in the setting of ascites and GI bleeding (see Table 2, right).1

Bernard et al performed a meta-analysis of five trials to assess the efficacy of antibiotic prophylaxis in the prevention of infections and effect on survival in patients with cirrhosis and GI bleeding. Out of 534 patients, 264 were treated with antibiotics between four and 10 days, and 270 did not receive any antibiotics.

The endpoints of the study were infection, bacteremia and/or SBP; incidence of SBP; and death. Antibiotic prophylaxis not only increased the mean survival rate by 9.1%, but also increased the mean percentage of patients free of infection (32% improvement); bacteremia and/or SBP (19% improvement); and SBP (7% improvement).7

Low Ascitic Fluid Protein

Of the three major risk factors for SBP, ascitic fluid protein content is the most debated. Guarner et al studied the risk of first community-acquired SBP in cirrhotics with low ascitic fluid protein.2 Patients were seen immediately after discharge from the hospital and at two- to three-month intervals. Of the 109 hospitalized patients, 23 (21%) developed SBP, nine of whom developed SBP during their hospitalization. The one-year cumulative probability of SBP in these patients with low ascitic fluid protein levels was 35%.

During this study, the authors also looked at 20 different patient variables on admission and found that two parameters—high bilirubin (>3.2 mg/dL) and low platelet count (<98,000 cells/µL)—were associated with an increased risk of SBP. This is consistent with studies showing that patients with higher Model for End-Stage Liver Disease (MELD) or Child-Pugh scores, indicating more severe liver disease, are at increased risk for SBP. This likely is the reason SBP prophylaxis is recommended for patients with an elevated bilirubin, and higher Child-Pugh scores, by the American Association for the Study of Liver Diseases (see Table 2, p. 41).

Runyon et al showed that 15% of patients with low ascitic fluid protein developed SBP during their hospitalization, as compared with 2% of patients with ascitic fluid protein levels greater than 1 g/dL.8 A randomized, non-placebo-controlled trial by Navasa et al evaluating 70 cirrhotic patients with low ascitic protein levels showed a lower probability of developing SBP in the group placed on SBP prophylaxis with norfloxacin (5% vs. 31%).9 Six-month mortality rate was also lower (19% vs. 36%).

In contrast to the previous studies, Grothe et al found that the presence of SBP was not related to ascitic protein content.10 Given conflicting studies, controversy still remains on whether patients with low ascitic protein should receive long-term prophylactic antibiotics.

Antibiotic Drawbacks

The consensus in the literature is that patients with ascites who are admitted with a GI bleed, or those with a history of SBP, should be placed on SBP prophylaxis. However, patients placed on long-term antibiotics are at risk for developing bacterial resistance. Bacterial resistance in cultures taken from cirrhotic patients with SBP has increased over the last decade, particularly in gram-negative bacteria.5 Patients who receive antibiotics in the pre-transplant setting also are at risk for post-transplant fungal infections.

Additionally, the antibiotic of choice for SBP prophylaxis is typically a fluoroquinolone, which can be expensive. However, numerous studies have shown that the cost of initiating prophylactic therapy for SBP in patients with a prior episode of SBP can be cheaper than treating SBP after diagnosis.2

Back to the Case

Our patient’s paracentesis was negative for SBP. Additionally, he does not have a history of SBP, nor does he have an active GI bleed. His only possible indication for SBP prophylaxis is low ascitic protein concentration. His electrolytes were all within normal limits. Additionally, total bilirubin was only slightly elevated at 2.3 mg/dL.

Based on the American Association for the Study of Liver Diseases guidelines, the patient was not started on SBP prophylaxis. Additionally, given his history of medication noncompliance, there is concern that he might not take the antibiotics as prescribed, thus leading to the development of bacterial resistance and more serious infections in the future.

Bottom Line

Patients with ascites and a prior episode of SBP, and those admitted to the hospital for GI bleeding, should be placed on SBP prophylaxis. SBP prophylaxis for low protein ascitic fluid remains controversial but is recommended by the American Association for the Study of Liver Diseases. TH

Dr. del Pino Jones is a hospitalist at the University of Colorado Denver.

References

- Ghassemi S, Garcia-Tsao G. Prevention and treatment of infections in patients with cirrhosis. Best Pract Res Clin Gastroenterol. 2007;21(1):77-93.

- Guarner C, Solà R, Soriono G, et al. Risk of a first community-acquired spontaneous bacterial peritonitis in cirrhotics with low ascitic fluid protein levels. Gastroenterology. 1999;117(2):414-419.

- Ginés P, Rimola A, Planas R, et al. Norfloxacin prevents spontaneous bacterial peritonitis recurrence in cirrhosis: results of a double-blind, placebo-controlled trial. Hepatology. 1990;12(4 Pt 1):716-724.

- Rolachon A, Cordier L, Bacq Y, et al. Ciprofloxacin and long-term prevention of spontaneous bacterial peritonitis: results of a prospective controlled trial. Hepatology. 1995;22(4 Pt 1):1171-1174.

- Saab S, Hernandez J, Chi AC, Tong MJ. Oral antibiotic prophylaxis reduces spontaneous bacterial peritonitis occurrence and improves short-term survival in cirrhosis: a meta-analysis. Am J Gastroenterol. 2009;104(4):993-1001.

- Deschênes M, Villeneuve J. Risk factors for the development of bacterial infections in hospitalized patients with cirrhosis. Am J Gastroenterol. 1999;94(8):2193-2197.

- Bernard B, Grangé J, Khac EN, Amiot X, Opolon P, Poynard T. Antibiotic prophylaxis for the prevention of bacterial infections in cirrhotic patients with gastrointestinal bleeding: a meta-analysis. Hepatology. 1999;29(6):1655-1661.

- Runyon B. Low-protein-concentration ascitic fluid is predisposed to spontaneous bacterial peritonitis. Gastroenterology. 1986;91(6):1343-1346.

- Navasa M, Fernandez J, Montoliu S, et al. Randomized, double-blind, placebo-controlled trial evaluating norfloxacin in the primary prophylaxis of spontaneous bacterial peritonitis in cirrhotics with renal impairment, hyponatremia or severe liver failure. J Hepatol. 2006;44(Supp2):S51.

- Grothe W, Lottere E, Fleig W. Factors predictive for spontaneous bacterial peritonitis (SBP) under routine inpatient conditions in patients with cirrhosis: a prospective multicenter trial. J Hepatol. 1990;34(4):547.

What Is the Role of BNP in Diagnosis and Management of Acutely Decompensated Heart Failure?

Case

A 76-year-old woman with a history of chronic obstructive pulmonary disease (COPD), congestive heart failure (CHF), and atrial fibrillation presents with shortness of breath. She is tachypneic, her pulse is 105 beats per minute, and her blood pressure is 105/60 mm Hg. She is obese and has an immeasurable venous pressure with decreased breath sounds in both lung bases, and irregular and distant heart sounds. What is the role of brain (or B-type) natriuretic peptide (BNP) in the diagnosis and management of this patient?

Overview

Each year, more than 1 million patients are admitted to hospitals with acutely decompensated heart failure (ADHF). Although many of these patients carry a pre-admission diagnosis of CHF, their common presenting symptoms are not specific for ADHF, which leads to delays in diagnosis and therapy initiation, increased diagnostic costs, and potentially worse outcomes. Clinical risk scores from NHANES and the Framingham heart study have limited sensitivity, missing nearly 20% of patients.1,2 Moreover, these scores are underused by clinicians who depend heavily on clinical gestalt.3

Once ADHF is diagnosed, ongoing bedside assessment of volume status is a difficult and inexact science. The physiologic goal is achievement of normal left ventricular end diastolic volume; however, surrogate measures of this status, including weight change, venous pressure, and pulmonary and cardiac auscultatory findings, have significant limitations. After discharge, patients have high and heterogeneous risks of readmission, death, and other adverse events. Identifying patients with the highest risk might allow for intensive strategies to improve outcomes.

BNP is a neurohormone released from the ventricular cells in response to increased cardiac filling pressures. Plasma measurements of BNP have been shown to reflect volume status, to predict risk at admission and discharge, and to serve as a treatment guide in a variety of clinical settings.4 This simple laboratory test increasingly has been used to diagnose and manage ADHF; its utility and limitations deserve critical review.

Review of the Data

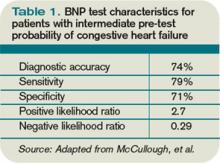

CHF diagnosis. Since introduction of the rapid BNP assay, several trials have evaluated its clinical utility in determining whether ADHF is the cause of a patient’s dyspnea. The largest of these trials, the Breathing Not Properly Multinational Study, conducted by McCullough et al, enrolled nearly 1,600 patients who presented with the primary complaint of dyspnea.5 After reviewing conventional clinical information, ED physicians were asked to determine the likelihood that ADHF was the etiology of a patient’s dyspnea. These likelihoods were classified as low (<20%), intermediate (20%-80%), or high (>80%). The admission BNP was recorded but was not available for the ED physician decisions.

The “gold standard” was the opinion of two adjudicating cardiologists who reviewed the cases retrospectively and determined whether the dyspnea resulted from ADHF. They were blinded to both the ED physician’s opinion and the BNP results. The accuracy of the ED physician’s initial assessment and the impact of the BNP results were compared with this gold standard.

For the entire cohort, the use of BNP (with a cutoff point of 100 pg/mL) would have improved the ED physician’s assessment from 74% diagnostic accuracy to 81%, which is statistically significant. Most important, in those patients initially given an intermediate likelihood of CHF, BNP results correctly classified 75% of these patients and rarely missed ADHF cases (<10%).

Atrial fibrillation. Since the original trials that established a BNP cutoff of 100 pg/mL for determining the presence of ADHF, several adjustments have been suggested. The presence of atrial fibrillation has been shown to increase BNP values independent of cardiac filling pressures. Breidthardt et al examined patients with atrial fibrillation presenting with dyspnea.4 In their analysis, using a cutoff of 100 pg/mL remained robust in identifying patients without ADHF. However, in the 100 pg/mL-500 pg/mL range, the test was not able to discriminate between atrial fibrillation and ADHF. Values greater than 500 pg/mL proved accurate in supporting the diagnosis of ADHF.

Renal failure. Renal dysfunction also elevates BNP levels independent of filling pressures. McCullough et al re-examined data from their Breathing Not Properly Multinational Study and found that the glomerular filtration rate (GFR) was inversely related to BNP levels.5 They recommend using a cutoff point of 200 pg/mL when the GFR is below 60 mL/min per 1.73 m2. Other authors recommend not using BNP levels to diagnose ADHF when the GFR is less than 60 mL/min per 1.73 m2 due to the lack of data supporting this approach. Until clarified, clinicians should be cautious of interpreting BNP elevations in the setting of kidney disease.

Obesity. Obesity has a negative effect on BNP levels, decreasing the sensitivity of the test in these patients.6 Although no study defines how to adjust for body mass index (BMI), clinicians should be cautious about using a low BNP to rule out ADHF in a dyspneic obese patient.
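
The cutoffs discussed in this section can be collected into one rough reading of a BNP value. The sketch below is illustrative only: the function name `interpret_bnp`, its flags, and the returned labels are hypothetical, and the way the atrial fibrillation and renal adjustments are combined is an assumption made here, not something the cited studies define.

```python
def interpret_bnp(bnp_pg_ml: float,
                  atrial_fibrillation: bool = False,
                  gfr_below_60: bool = False,
                  obese: bool = False) -> str:
    """Map a BNP value (pg/mL) to a coarse reading using the quoted cutoffs.

    Hypothetical helper for illustration; not a validated decision rule.
    """
    # Rule-out cutoff: 100 pg/mL, raised to 200 pg/mL when GFR is below 60
    # (the McCullough et al recommendation quoted in the text).
    rule_out_cutoff = 200.0 if gfr_below_60 else 100.0
    if bnp_pg_ml < rule_out_cutoff:
        # Obesity lowers BNP, so a low value is less reassuring.
        if obese:
            return "ADHF unlikely (interpret with caution)"
        return "ADHF unlikely"
    # With atrial fibrillation, values up to 500 pg/mL did not discriminate
    # between atrial fibrillation and ADHF.
    if atrial_fibrillation and bnp_pg_ml <= 500.0:
        return "indeterminate"
    return "supports ADHF"


# The patient in the case: BNP 950 pg/mL, atrial fibrillation, obese.
print(interpret_bnp(950, atrial_fibrillation=True, obese=True))
```

For the case patient this prints `supports ADHF`, since 950 pg/mL is above the 500 pg/mL upper bound of the indeterminate range for atrial fibrillation.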

Historical BNP values. If historical BNP values are available, studies of biological variation have shown that an increase of 66% to 123% from baseline is representative of a clinically meaningful increase in cardiac filling pressures. Smaller changes could merely represent biological variation and should be cautiously interpreted.7
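
Comparing a new BNP with a historical value is simple arithmetic. In the sketch below, the helper names are hypothetical, and using 66% (the lower figure quoted above) as the default threshold is an assumption that can be changed.

```python
def percent_change(baseline: float, current: float) -> float:
    """Percent change of the current value relative to baseline."""
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    return (current - baseline) * 100.0 / baseline


def exceeds_biological_variation(baseline: float, current: float,
                                 threshold_pct: float = 66.0) -> bool:
    """True if the rise from baseline meets the chosen threshold.

    threshold_pct defaults to the lower figure quoted in the text;
    this default is an assumption, not a validated cutoff.
    """
    return percent_change(baseline, current) >= threshold_pct


# Example: a baseline of 250 and a current value of 950 (same units).
print(percent_change(250.0, 950.0))                # 280.0
print(exceeds_biological_variation(250.0, 950.0))  # True
```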

Cost effectiveness. The cost effectiveness of using BNP measurements in dyspneic ED patients has been examined as well. Mueller et al found in a Swiss hospital that BNP testing was associated with a 25% decrease in treatment cost, length of stay (LOS), and ICU usage.8 However, LOS is significantly longer in Switzerland compared with the U.S., and given that much of the cost savings was attributed to reducing LOS, it is not possible to extrapolate these data to the U.S. health system. More evidence is needed to truly evaluate the cost effectiveness of BNP testing.

Serial BNP testing. Once a patient has been diagnosed with ADHF and admitted to the hospital, diuretics are indicated with the goal of achieving euvolemia. The bedside assessment of volume status remains a difficult and inexact science, and failure to appropriately remove fluid is associated with readmissions. Conversely, overdiuresis with a concomitant rise in creatinine has been associated with increased morbidity and mortality.

Several studies have shown that the reduction of volume associated with diuretic administration is coupled with a rapid decrease in BNP levels. Therefore, serial BNP measurement has been evaluated as a tool to guide the daily assessment of volume status in patients admitted with ADHF. Unfortunately, frequent measurements of BNP reveal that a great deal of variance, or “noise,” is present in these repeat measurements. Data do not clearly show how to incorporate serial BNP measurements into daily diuretic management.9

Mortality prediction. Nearly 3.5% of admitted heart failure patients will die during their hospitalization. For perspective, the rate of hospital mortality with acute myocardial infarction is 7%. BNP serves as a powerful and independent predictor of inpatient mortality. The ADHERE (Acute Decompensated Heart Failure National Registry) study showed that when divided into BNP quartiles of <430 pg/mL, 430 pg/mL to 839 pg/mL, 840 pg/mL to 1,729 pg/mL, and ≥1,730 pg/mL, patients’ risk of inpatient death was accurately predicted as 1.9%, 2.8%, 3.8%, and 6.0%, respectively.10 Even when adjusted for other risk factors, BNP remained a powerful predictor; the mortality rate more than doubled from the lowest to highest quartile.
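
The quartile figures can be read as a simple lookup table. The sketch below is only an illustration of that lookup: the names are hypothetical, and a value of exactly 1,730 pg/mL is assumed here to fall in the top quartile.

```python
from bisect import bisect_right

# Lower bounds (pg/mL) of quartiles 2 to 4, and the in-hospital mortality (%)
# reported for quartiles 1 to 4, as quoted in the text above.
QUARTILE_LOWER_BOUNDS = [430, 840, 1730]
INPATIENT_MORTALITY_PCT = [1.9, 2.8, 3.8, 6.0]


def adhere_quartile_mortality(bnp_pg_ml: float) -> float:
    """Return the reported inpatient mortality (%) for the BNP quartile."""
    # bisect_right counts how many lower bounds are <= the value,
    # which is the zero-based index of the quartile.
    quartile_index = bisect_right(QUARTILE_LOWER_BOUNDS, bnp_pg_ml)
    return INPATIENT_MORTALITY_PCT[quartile_index]


print(adhere_quartile_mortality(950))  # 3.8
```

These are unadjusted registry rates for a group, so the lookup describes the quartile a patient falls in rather than an individual prediction.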

Different strategies have been proposed to improve the outcomes in these highest-risk patients; however, to date, no evidence-based strategy offers a meaningful way to reduce inpatient mortality beyond the current standard of care.

Readmission and 30-day mortality. The 30-day readmission rate after discharge for ADHF is more than 25%. A study of Medicare patients showed that more than $17 billion (more than 15% of all Medicare payments to hospitals) was associated with unplanned rehospitalizations.11 As bundling payment trends develop, hospitals have an enormous incentive to identify CHF patients with the highest risk of readmission and attempt to mitigate that risk.

From a patient-centered view, upon hospital discharge a patient with ADHF also realizes a 1 in 10 chance of dying within the first 30 days.

At discharge, BNP serves as a powerful and independent marker of increased risk of readmission, morbidity, and mortality. O’Connor et al developed a discharge risk model in patients with severe left ventricular dysfunction; the ESCAPE risk model and discharge score showed elevated BNP was the single most powerful predictor of six-month mortality.12 For every doubling of the BNP, the odds of death at six months increased by 1.4 times.
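
To see what "1.4 times per doubling" implies for other BNP ratios, the relationship can be extended as a power law. This is a sketch under a stated assumption: that the effect is log-linear in BNP, so each further doubling multiplies the odds by the same factor. The function name and parameters are hypothetical.

```python
import math


def odds_multiplier(bnp_ratio: float, per_doubling: float = 1.4) -> float:
    """Multiplier on the odds of six-month death for a given BNP ratio.

    Assumes a log-linear effect: multiplier = per_doubling ** log2(ratio).
    The 1.4 default is the per-doubling figure quoted in the text.
    """
    if bnp_ratio <= 0:
        raise ValueError("bnp_ratio must be positive")
    return per_doubling ** math.log2(bnp_ratio)


# A fourfold higher BNP is two doublings: 1.4 * 1.4, or about 1.96.
print(round(odds_multiplier(4.0), 2))
```

Note that this scales odds, not probabilities, so it should not be read as a direct multiplier on a patient's percentage risk.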

After combining discharge BNP with other factors, the ESCAPE discharge score was fairly successful at discriminating between patients who would and would not survive to six months. By identifying these outpatients, intensive management strategies could be focused on individuals with the highest risk. The data support the idea that readmission reductions are significant when outpatients obtain early follow-up. Many healthcare centers struggle to schedule early follow-up for all heart failure patients.

As such, the ability to target individuals with the highest discharge scores for intensive follow-up might improve outcomes. These patients could undergo early evaluation for such advanced therapies as resynchronization, left ventricular assist device implantation, or listing for transplantation. Currently, this strategy is not proven. It also is possible that these high-risk patients might have such advanced diseases that their risk cannot be modified by our current medications and advanced therapies.

Back to the Case

This patient has symptoms and signs that could be caused by ADHF or COPD. Her presentation is consistent with an intermediate probability of ADHF. A rapid BNP reveals a level of 950 pg/mL.

Even considering the higher cutoff required because of her coexistent atrial fibrillation, her BNP is consistent with ADHF. Additionally, her obesity likely has decreased the true value of her BNP. A previous BNP drawn when the patient was not in ADHF was 250 pg/mL, meaning that at least a 70% increase is present.

She was admitted and treated with intravenous diuretics with improvement in her congestion and relief of her symptoms. Daily BNPs were not drawn and her diuretics were titrated based on bedside clinical assessments. Her admission BNP elevation would predict a moderately high risk of short- and intermediate-term morbidity and mortality.

At discharge, a repeat BNP also could add to her risk stratification, though it would not be clear what to do with this prognostic information beyond the standard of care.

Bottom Line

BNP measurement in specific situations can complement conventional clinical information in determining the presence of ADHF and also can enhance clinicians’ ability to risk-stratify patients during and after hospitalization. TH

Dr. Wolfe is a hospitalist and assistant professor of medicine at the University of Colorado Denver.

References

- Schocken DD, Arrieta MI, Leaverton PE, Ross EA. Prevalence and mortality of congestive heart failure in the United States. J Am Coll Cardiol. 1992;20(2):301-306.

- McKee PA, Castelli WP, McNamara PM, Kannel WB. The natural history of congestive heart failure: the Framingham study. N Eng J Med. 1971;285(26):1441-1446.

- Wang CS, FitzGerald JM, Schulzer M, Mak E, Ayas NT. Does this dyspneic patient in the emergency department have congestive heart failure? JAMA. 2005;294(15):1944-1956.

- Breidthardt T, Noveanu M, Cayir S, et al. The use of B-type natriuretic peptide in the management of patients with atrial fibrillation and dyspnea. Int J Cardiol. 2009;136(2):193-199.

- McCullough PA, Duc P, Omland T, et al. B-type natriuretic peptide and renal function in the diagnosis of heart failure: an analysis from the Breathing Not Properly Multinational Study. Am J Kidney Dis. 2003;41(3):571-579.

- Iwanaga Y, Hihara Y, Nizuma S, et al. BNP in overweight and obese patients with heart failure: an analysis based on the BNP-LV diastolic wall stress relationship. J Card Fail. 2007;13(8):663-667.

- O’Hanlon R, O’Shea P, Ledwidge M. The biologic variability of B-type natriuretic peptide and N-terminal pro-B-type natriuretic peptide in stable heart failure patients. J Card Fail. 2007;13(1):50-55.

- Mueller C, Laule-Kilian K, Schindler C, et al. Cost-effectiveness of B-type natriuretic peptide testing in patients with acute dyspnea. Arch Intern Med. 2006;166(1):1081-1087.

- Wu AH. Serial testing of B-type natriuretic peptide and NTpro-BNP for monitoring therapy of heart failure: the role of biologic variation in the interpretation of results. Am Heart J. 2006;152(5):828-834.

- Fonarow GC, Peacock WF, Phillips CO, et al. ADHERE Scientific Advisory Committee and Investigators. Admission B-type natriuretic peptide levels and in-hospital mortality in acute decompensated heart failure. J Am Coll Cardiol. 2007;48(19):1943-1950.

- Jencks SF, Williams MC, Coleman EA. Rehospitalizations among patients in the Medicare fee-for-service program. N Engl J Med. 2009;360(14):1418-1428.

- O’Connor CM, Hasselblad V, Mehta RH, et al. Triage after hospitalization with advanced heart failure: the ESCAPE (Evaluation Study of Congestive Heart Failure and Pulmonary Artery Catheterization Effectiveness) risk model and discharge score. J Am Coll Cardiol. 2010;55(9):872-878.

Case

A 76-year-old woman with a history of chronic obstructive pulmonary disease (COPD), congestive heart failure (CHF), and atrial fibrillation presents with shortness of breath. She is tachypneic, her pulse is 105 beats per minute, and her blood pressure is 105/60 mm/Hg. She is obese and has an immeasurable venous pressure with decreased breath sounds in both lung bases, and irregular and distant heart sounds. What is the role of brain (or B-type) natriuretic peptide (BNP) in the diagnosis and management of this patient?

Overview

Each year, more than 1 million patients are admitted to hospitals with acutely decompensated heart failure (ADHF). Although many of these patients carry a pre-admission diagnosis of CHF, their common presenting symptoms are not specific for ADHF, which leads to delays in diagnosis and therapy initiation, and increased diagnostic costs and potentially worse outcomes. Clinical risk scores from NHANES and the Framingham heart study have limited sensitivity, missing nearly 20% of patients.1,2 Moreover, these scores are underused by clinicians who depend heavily on clinical gestalt.3

Once ADHF is diagnosed, ongoing bedside assessment of volume status is a difficult and inexact science. The physiologic goal is achievement of normal left ventricular end diastolic volume; however, surrogate measures of this status, including weight change, venous pressure, and pulmonary and cardiac auscultatory findings, have significant limitations. After discharge, patients have high and heterogeneous risks of readmission, death, and other adverse events. Identifying patients with the highest risk might allow for intensive strategies to improve outcomes.

BNP is a neurohormone released from the ventricular cells in response to increased cardiac filling pressures. Plasma measurements of BNP have been shown to reflect volume status, to predict risk at admission and discharge, and to serve as a treatment guide in a variety of clinical settings.4 This simple laboratory test increasingly has been used to diagnose and manage ADHF; its utility and limitations deserve critical review.

Review of the Data

CHF diagnosis. Since introduction of the rapid BNP assay, several trials have evaluated its clinical utility in determining whether ADHF is the cause of a patient’s dyspnea. The largest of these trials, the Breathing Not Properly Multinational Study, conducted by McCullough et al, enrolled nearly 1,600 patients who presented with the primary complaint of dyspnea.5 After reviewing conventional clinical information, ED physicians were asked to determine the likelihood that ADHF was the etiology of a patient’s dyspnea. These likelihoods were classified as low (<20%), intermediate (20%-80%), or high (>80%). The admission BNP was recorded but was not available for the ED physician decisions.

The “gold standard” was the opinion of two adjudicating cardiologists who reviewed the cases retrospectively and determined whether the dyspnea resulted from ADHF. They were blinded to both the ED physician’s opinion and the BNP results. The accuracy of the ED physician’s initial assessment and the impact of the BNP results were compared with this gold standard.

For the entire cohort, the use of BNP (with a cutoff point of 100 pg/mL) would have improved the ED physician’s assessment from 74% diagnostic accuracy to 81%, which is statistically significant. Most important, in those patients initially given an intermediate likelihood of CHF, BNP results correctly classified 75% of these patients and rarely missed ADHF cases (<10%).

Atrial fibrillation. Since the original trials that established a BNP cutoff of 100 pg/mL for determining the presence of ADHF, several adjustments have been suggested. The presence of atrial fibrillation has been shown to increase BNP values independent of cardiac filling pressures. Breidthardt et al examined patients with atrial fibrillation presenting with dyspnea.4 In their analysis, using a cutoff of 100 pg/mL remained robust in identifying patients without ADHF. However, in the 100 pg/mL-500 pg/mL range, the test was not able to discriminate between atrial fibrillation and ADHF. Values greater than 500 pg/mL proved accurate in supporting the diagnosis of ADHF.

Renal failure. Renal dysfunction also elevates BNP levels independent of filling pressures. McCullough et al re-examined data from their Breathing Not Properly Multinational Study and found that the glomerular filtration rate (GFR) was inversely related to BNP levels.5 They recommend using a cutoff point of 200 pg/mL when the GFR is below 60 mg/dL. Other authors recommend not using BNP levels to diagnose ADHF when the GFR is less than 60 mg/dL due to the lack of data supporting this approach. Until clarified, clinicians should be cautious of interpreting BNP elevations in the setting of kidney disease.

Obesity. Obesity has a negative effect on BNP levels, decreasing the sensitivity of the test in these patients.6 Although no study defines how to adjust for body mass index (BMI), clinicians should be cautious about using a low BNP to rule out ADHF in a dyspneic obese patient.

Historical BNP values. If historical BNP values are available, studies of biological variation have shown that an increase to 123% from 66% from baseline is representative of a clinically meaningful increase in cardiac filling pressures. Less significant changes could merely represent biological variation and should be cautiously interpreted.7

Cost effectiveness. The cost effectiveness of using BNP measurements in dyspneic ED patients has been examined as well. Mueller et al found in a Swiss hospital that BNP testing was associated with a 25% decrease in treatment cost, length of stay (LOS), and ICU usage.8 However, LOS is significantly longer in Switzerland compared with the U.S., and given that much of the cost savings was attributed to reducing LOS, it is not possible to extrapolate these data to the U.S. health system. More evidence is needed to truly evaluate the cost effectiveness of BNP testing.

Serial BNP testing. Once a patient has been diagnosed with ADHF and admitted to the hospital, diuretics are indicated with the goal of achieving euvolemia. The bedside assessment of volume status remains a difficult and inexact science, and failure to appropriately remove fluid is associated with readmissions. Conversely, overdiuresis with a concomitant rise in creatinine has been associated with increased morbidity and mortality.

Several studies have shown that the reduction of volume associated with diuretic administration is coupled with a rapid decrease in BNP levels. Therefore, serial BNP measurement has been evaluated as a tool to guide the daily assessment of volume status in patients admitted with ADHF. Unfortunately, frequent measurements of BNP reveal that a great deal of variance, or “noise,” is present in these repeat measurements. Data do not clearly show how to incorporate serial BNP measurements into daily diuretic management.9

Mortality prediction. Nearly 3.5% of admitted heart failure patients will die during their hospitalization. For perspective, the rate of hospital mortality with acute myocardial infarction is 7%. BNP serves as a powerful and independent predictor of inpatient mortality. The ADHERE (Acute Decompensated Heart Failure National Registry) study showed that when patients were divided into BNP quartiles of <430 pg/mL, 430 pg/mL to 839 pg/mL, 840 pg/mL to 1,729 pg/mL, and ≥1,730 pg/mL, the risk of inpatient death was 1.9%, 2.8%, 3.8%, and 6.0%, respectively.10 Even when adjusted for other risk factors, BNP remained a powerful predictor; the mortality rate more than doubled from the lowest to highest quartile.
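The quartile figures above amount to a small lookup table. The Python sketch below encodes them for illustration only; the function name is hypothetical, and assigning a value of exactly 1,730 pg/mL to the top quartile is an assumption about the boundary. The percentages are the unadjusted figures quoted in the text.

```python
# In-hospital mortality by admission BNP quartile, as quoted in the text.
# Each entry is (exclusive upper bound in pg/mL, mortality in percent);
# None marks the open-ended top quartile.
ADHERE_QUARTILES = [
    (430.0, 1.9),    # below 430 pg/mL
    (840.0, 2.8),    # 430 to 839 pg/mL
    (1730.0, 3.8),   # 840 to 1,729 pg/mL
    (None, 6.0),     # 1,730 pg/mL and above (boundary handling assumed)
]


def adhere_quartile_mortality(bnp_pg_ml: float) -> float:
    """Return the quoted in-hospital mortality (%) for an admission BNP."""
    if bnp_pg_ml < 0:
        raise ValueError("BNP cannot be negative")
    for upper_bound, mortality_pct in ADHERE_QUARTILES:
        if upper_bound is None or bnp_pg_ml < upper_bound:
            return mortality_pct
    raise AssertionError("unreachable: the last quartile is open-ended")
```

For example, an admission BNP of 950 pg/mL falls in the third quartile, for which the quoted inpatient mortality is 3.8%.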

Different strategies have been proposed to improve the outcomes in these highest-risk patients; however, to date, no evidence-based strategy offers a meaningful way to reduce inpatient mortality beyond the current standard of care.

Readmission and 30-day mortality. The 30-day readmission rate after discharge for ADHF is more than 25%. A study of Medicare patients showed that more than $17 billion (more than 15% of all Medicare payments to hospitals) was associated with unplanned rehospitalizations.11 As bundled payment trends develop, hospitals have an enormous incentive to identify CHF patients with the highest risk of readmission and attempt to mitigate that risk.

From a patient-centered view, a patient with ADHF also faces a 1 in 10 chance of dying within the first 30 days after hospital discharge.

At discharge, BNP serves as a powerful and independent marker of increased risk of readmission, morbidity, and mortality. O’Connor et al developed a discharge risk model in patients with severe left ventricular dysfunction; the ESCAPE risk model and discharge score showed elevated BNP was the single most powerful predictor of six-month mortality.12 For every doubling of the BNP, the odds of death at six months increased by 1.4 times.
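The "per doubling" statement can be read as a multiplicative relationship on the odds scale. The Python sketch below shows that arithmetic under the assumption that each doubling of BNP multiplies the odds of six-month death by 1.4 across the whole range; the function name and parameters are hypothetical, and the result is a ratio of odds, not a probability.

```python
import math


def odds_multiplier(reference_bnp_pg_ml: float,
                    bnp_pg_ml: float,
                    per_doubling: float = 1.4) -> float:
    """Factor by which the odds change relative to a reference BNP.

    Assumes a log-linear relationship: every doubling of BNP multiplies
    the odds by per_doubling (1.4, the figure quoted in the text).
    """
    if reference_bnp_pg_ml <= 0 or bnp_pg_ml <= 0:
        raise ValueError("BNP values must be positive")
    doublings = math.log2(bnp_pg_ml / reference_bnp_pg_ml)
    return per_doubling ** doublings
```

Under this assumption, a discharge BNP of 1,000 pg/mL carries about 1.4 times the odds of a BNP of 500 pg/mL, and about 1.96 times (1.4 squared) the odds of a BNP of 250 pg/mL.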

After combining discharge BNP with other factors, the ESCAPE discharge score was fairly successful at discriminating between patients who would and would not survive to six months. By identifying these outpatients, intensive management strategies could be focused on individuals with the highest risk. The data support the idea that readmission reductions are significant when outpatients obtain early follow-up. Many healthcare centers struggle to schedule early follow-up for all heart failure patients.

As such, the ability to target individuals with the highest discharge scores for intensive follow-up might improve outcomes. These patients could undergo early evaluation for such advanced therapies as resynchronization, left ventricular assist device implantation, or listing for transplantation. Currently, this strategy is not proven. It also is possible that these high-risk patients have such advanced disease that their risk cannot be modified by our current medications and advanced therapies.

Back to the Case

This patient has symptoms and signs that could be caused by ADHF or COPD. Her presentation is consistent with an intermediate probability of ADHF. A rapid BNP reveals a level of 950 pg/mL.

Even considering the higher cutoff required because of her coexistent atrial fibrillation, her BNP is consistent with ADHF. Additionally, her obesity likely has decreased the measured value of her BNP. A previous BNP drawn when the patient was not in ADHF was 250 pg/mL, meaning that at least a 70% increase is present.

She was admitted and treated with intravenous diuretics, with improvement in her congestion and relief of her symptoms. Daily BNPs were not drawn, and her diuretics were titrated based on bedside clinical assessments. Her admission BNP elevation would predict a moderately high risk of short- and intermediate-term morbidity and mortality.

At discharge, a repeat BNP also could add to her risk stratification, though it would not be clear what to do with this prognostic information beyond the standard of care.

Bottom Line

BNP measurement in specific situations can complement conventional clinical information in determining the presence of ADHF and also can enhance clinicians’ ability to risk-stratify patients during and after hospitalization.

Dr. Wolfe is a hospitalist and assistant professor of medicine at the University of Colorado Denver.

References

1. Schocken DD, Arrieta MI, Leaverton PE, Ross EA. Prevalence and mortality of congestive heart failure in the United States. J Am Coll Cardiol. 1992;20(2):301-306.

2. McKee PA, Castelli WP, McNamara PM, Kannel WB. The natural history of congestive heart failure: the Framingham study. N Engl J Med. 1971;285(26):1441-1446.

3. Wang CS, FitzGerald JM, Schulzer M, Mak E, Ayas NT. Does this dyspneic patient in the emergency department have congestive heart failure? JAMA. 2005;294(15):1944-1956.

4. Breidthardt T, Noveanu M, Cayir S, et al. The use of B-type natriuretic peptide in the management of patients with atrial fibrillation and dyspnea. Int J Cardiol. 2009;136(2):193-199.

5. McCullough PA, Duc P, Omland T, et al. B-type natriuretic peptide and renal function in the diagnosis of heart failure: an analysis from the Breathing Not Properly Multinational Study. Am J Kidney Dis. 2003;41(3):571-579.

6. Iwanaga Y, Hihara Y, Nizuma S, et al. BNP in overweight and obese patients with heart failure: an analysis based on the BNP-LV diastolic wall stress relationship. J Card Fail. 2007;13(8):663-667.

7. O’Hanlon R, O’Shea P, Ledwidge M. The biologic variability of B-type natriuretic peptide and N-terminal pro-B-type natriuretic peptide in stable heart failure patients. J Card Fail. 2007;13(1):50-55.

8. Mueller C, Laule-Kilian K, Schindler C, et al. Cost-effectiveness of B-type natriuretic peptide testing in patients with acute dyspnea. Arch Intern Med. 2006;166(10):1081-1087.

9. Wu AH. Serial testing of B-type natriuretic peptide and NTpro-BNP for monitoring therapy of heart failure: the role of biologic variation in the interpretation of results. Am Heart J. 2006;152(5):828-834.

10. Fonarow GC, Peacock WF, Phillips CO, et al. ADHERE Scientific Advisory Committee and Investigators. Admission B-type natriuretic peptide levels and in-hospital mortality in acute decompensated heart failure. J Am Coll Cardiol. 2007;49(19):1943-1950.

11. Jencks SF, Williams MC, Coleman EA. Rehospitalizations among patients in the Medicare fee-for-service program. N Engl J Med. 2009;360(14):1418-1428.

12. O’Connor CM, Hasselblad V, Mehta RH, et al. Triage after hospitalization with advanced heart failure: the ESCAPE (Evaluation Study of Congestive Heart Failure and Pulmonary Artery Catheterization Effectiveness) risk model and discharge score. J Am Coll Cardiol. 2010;55(9):872-878.

Case

A 76-year-old woman with a history of chronic obstructive pulmonary disease (COPD), congestive heart failure (CHF), and atrial fibrillation presents with shortness of breath. She is tachypneic, her pulse is 105 beats per minute, and her blood pressure is 105/60 mm Hg. She is obese and has an immeasurable venous pressure, decreased breath sounds at both lung bases, and irregular, distant heart sounds. What is the role of brain (or B-type) natriuretic peptide (BNP) in the diagnosis and management of this patient?

Overview

Each year, more than 1 million patients are admitted to hospitals with acutely decompensated heart failure (ADHF). Although many of these patients carry a pre-admission diagnosis of CHF, their common presenting symptoms are not specific for ADHF, which leads to delays in diagnosis and initiation of therapy, increased diagnostic costs, and potentially worse outcomes. Clinical risk scores from NHANES and the Framingham Heart Study have limited sensitivity, missing nearly 20% of patients.1,2 Moreover, these scores are underused by clinicians, who depend heavily on clinical gestalt.3

Once ADHF is diagnosed, ongoing bedside assessment of volume status is a difficult and inexact science. The physiologic goal is achievement of normal left ventricular end diastolic volume; however, surrogate measures of this status, including weight change, venous pressure, and pulmonary and cardiac auscultatory findings, have significant limitations. After discharge, patients have high and heterogeneous risks of readmission, death, and other adverse events. Identifying patients with the highest risk might allow for intensive strategies to improve outcomes.

BNP is a neurohormone released from the ventricular cells in response to increased cardiac filling pressures. Plasma measurements of BNP have been shown to reflect volume status, to predict risk at admission and discharge, and to serve as a treatment guide in a variety of clinical settings.4 This simple laboratory test increasingly has been used to diagnose and manage ADHF; its utility and limitations deserve critical review.

Review of the Data

CHF diagnosis. Since introduction of the rapid BNP assay, several trials have evaluated its clinical utility in determining whether ADHF is the cause of a patient’s dyspnea. The largest of these trials, the Breathing Not Properly Multinational Study, conducted by McCullough et al, enrolled nearly 1,600 patients who presented with the primary complaint of dyspnea.5 After reviewing conventional clinical information, ED physicians were asked to determine the likelihood that ADHF was the etiology of a patient’s dyspnea. These likelihoods were classified as low (<20%), intermediate (20%-80%), or high (>80%). The admission BNP was recorded but was not available for the ED physician decisions.

The “gold standard” was the opinion of two adjudicating cardiologists who reviewed the cases retrospectively and determined whether the dyspnea resulted from ADHF. They were blinded to both the ED physician’s opinion and the BNP results. The accuracy of the ED physician’s initial assessment and the impact of the BNP results were compared with this gold standard.

For the entire cohort, the use of BNP (with a cutoff point of 100 pg/mL) would have improved the diagnostic accuracy of the ED physician’s assessment from 74% to 81%, a statistically significant difference. Most important, among patients initially given an intermediate likelihood of ADHF, BNP results correctly classified 75% and rarely missed ADHF cases (<10%).

Atrial fibrillation. Since the original trials that established a BNP cutoff of 100 pg/mL for determining the presence of ADHF, several adjustments have been suggested. The presence of atrial fibrillation has been shown to increase BNP values independent of cardiac filling pressures. Breidthardt et al examined patients with atrial fibrillation presenting with dyspnea.4 In their analysis, a cutoff of 100 pg/mL remained robust in identifying patients without ADHF. However, in the range of 100 pg/mL to 500 pg/mL, the test was not able to discriminate between atrial fibrillation and ADHF. Values greater than 500 pg/mL proved accurate in supporting the diagnosis of ADHF.
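The three BNP ranges described for dyspneic patients with atrial fibrillation can be summarized as a simple classification. The Python sketch below is illustrative only; the function name and return labels are hypothetical, and treating values of exactly 100 pg/mL and 500 pg/mL as part of the indeterminate range is an assumption about the boundaries.

```python
def interpret_bnp_with_af(bnp_pg_ml: float) -> str:
    """Map a BNP value to the three ranges described for patients with AF."""
    if bnp_pg_ml < 0:
        raise ValueError("BNP cannot be negative")
    if bnp_pg_ml < 100:
        return "ADHF unlikely"      # below the 100 pg/mL cutoff
    if bnp_pg_ml <= 500:
        return "indeterminate"      # cannot separate AF from ADHF
    return "supports ADHF"          # greater than 500 pg/mL
```

For example, a value of 300 pg/mL would be labeled indeterminate, while a value of 950 pg/mL would be labeled as supporting ADHF.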
