
Long Peripheral Catheters: A Retrospective Review of Major Complications

Introduced in the 1950s, midline catheters have become a popular option for intravenous (IV) access.1,2 Ranging from 8 to 25 cm in length, they are inserted into veins of the upper arm. Unlike the tips of peripherally inserted central catheters (PICCs), the tips of midline catheters terminate at or below the level of the axillary vein; midlines are therefore peripheral rather than central venous access devices.1-3 One popular, though nebulously defined, variation of the midline catheter is the long peripheral catheter (LPC), a device ranging from 6 to 15 cm in length.4,5

Concerns about the inappropriate use of PICCs and their complications, such as thrombosis and central line-associated bloodstream infection (CLABSI), have spurred growth in the use of LPCs.6 However, data on complication rates with these devices are limited, and whether LPCs are a safe and viable option for IV access is unclear. We conducted a retrospective study to examine indications, patterns of use, and complications following LPC insertion in hospitalized patients.

METHODS

Device Selection

Our institution is a 470-bed tertiary care safety-net hospital in Chicago, Illinois. Our vascular access team (VAT) assesses each patient and selects IV devices based on published standards for device appropriateness.7 We retrospectively collated electronic requests for LPC insertion in adult inpatients between October 2015 and June 2017. Requests were excluded from this analysis if (1) the order was a duplicate, (2) the patient refused, or (3) a peripheral intravenous catheter of any length or (4) a PICC was placed instead.
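The eligibility rules above can be expressed as a simple filter. The sketch below is illustrative only. The record fields (`request_date`, `is_duplicate`, `device_placed`, and so on) are hypothetical. The exact window boundaries (October 1, 2015, to June 30, 2017) and the age-18 adult cutoff are assumptions. It is not the study team's actual extraction logic.

```python
from dataclasses import dataclass
from datetime import date

# Hypothetical request record; field names are illustrative, not from the study.
@dataclass
class LpcRequest:
    request_date: date
    age_years: int
    is_duplicate: bool
    patient_refused: bool
    device_placed: str  # "LPC", "PIV" (peripheral IV of any length), "PICC", or ""

# Assumed window boundaries; the text gives only "October 2015 and June 2017".
STUDY_START = date(2015, 10, 1)
STUDY_END = date(2017, 6, 30)
ADULT_AGE = 18  # assumed adult cutoff


def is_eligible(req: LpcRequest) -> bool:
    """Return True if an LPC request survives the stated inclusion/exclusion rules."""
    if not (STUDY_START <= req.request_date <= STUDY_END):
        return False
    if req.age_years < ADULT_AGE:
        return False
    # Exclusions (1) duplicate orders and (2) patient refusal.
    if req.is_duplicate or req.patient_refused:
        return False
    # Exclusions (3) and (4): a peripheral IV of any length or a PICC was placed instead.
    if req.device_placed in {"PIV", "PICC"}:
        return False
    return True


requests = [
    LpcRequest(date(2016, 1, 5), 60, False, False, "LPC"),
    LpcRequest(date(2016, 1, 5), 60, True, False, "LPC"),    # duplicate order
    LpcRequest(date(2016, 3, 1), 70, False, False, "PICC"),  # PICC placed instead
    LpcRequest(date(2017, 7, 1), 50, False, False, "LPC"),   # outside the window
]
cohort = [r for r in requests if is_eligible(r)]
print(len(cohort))  # 1
```

Keeping each exclusion as a separate branch makes it straightforward to count how many requests each criterion removes, which is useful when reporting a flow of excluded cases.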

VAT and Device Characteristics

We used the Bard PowerGlide® (Bard Access Systems, Inc., Salt Lake City, Utah), an 18-gauge, 8- to 10-cm, power-injectable polyurethane LPC. Bundled kits (eg, device, gown, dressing) were used, and VAT providers underwent two weeks of training before the study period. All LPCs were inserted in the upper extremities using sterile technique and ultrasound guidance (accelerated Seldinger technique). Placement was confirmed by aspiration, flush, and ultrasound visualization of the catheter tip within the vein. An antimicrobial dressing was applied to the insertion site, and bedside nurses maintained the device with daily saline flushes and weekly dressing changes. LPC placement was available on all nonholiday weekdays from 8

Data Collection

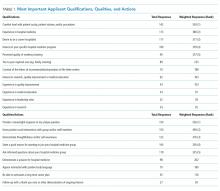

For each LPC recipient, demographic and comorbidity data were collected to calculate the Charlson Comorbidity Index (Table 1). Each recipient's history of deep vein thrombosis (DVT) and catheter-related infection (CRI) was recorded. Procedural information (eg, inserter, vein, and number of attempts) was obtained from insertion notes. All data were extracted from the electronic medical record via chart review, and two reviewers verified outcomes to ensure concordance with the stated definitions (ie, DVT, CRI). Device parameters, including dwell time, indication, and time to complication(s), were also collected.
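The following sketch shows roughly how a Charlson Comorbidity Index can be tallied from abstracted chart data. It uses the weights from the original Charlson et al. (1987) index and the commonly used age adjustment (one point per decade from age 50, capped at four points). The condition labels are hypothetical. The text does not say whether the study used the age-adjusted version, so the age term is optional here.

```python
from __future__ import annotations

# Charlson Comorbidity Index weights (Charlson et al., 1987).
# Condition keys are hypothetical labels for abstracted chart data.
WEIGHTS = {
    "myocardial_infarction": 1,
    "congestive_heart_failure": 1,
    "peripheral_vascular_disease": 1,
    "cerebrovascular_disease": 1,
    "dementia": 1,
    "chronic_pulmonary_disease": 1,
    "connective_tissue_disease": 1,
    "peptic_ulcer_disease": 1,
    "mild_liver_disease": 1,
    "diabetes_uncomplicated": 1,
    "hemiplegia": 2,
    "moderate_severe_renal_disease": 2,
    "diabetes_end_organ_damage": 2,
    "solid_tumor": 2,
    "leukemia": 2,
    "lymphoma": 2,
    "moderate_severe_liver_disease": 3,
    "metastatic_solid_tumor": 6,
    "aids": 6,
}

# Mutually exclusive pairs: when both are recorded, only the more severe one counts.
HIERARCHY = [
    ("mild_liver_disease", "moderate_severe_liver_disease"),
    ("diabetes_uncomplicated", "diabetes_end_organ_damage"),
    ("solid_tumor", "metastatic_solid_tumor"),
]


def age_points(age: int) -> int:
    """Age adjustment: 0 below 50, then 1 point per decade, capped at 4 (age 80+)."""
    if age < 50:
        return 0
    return min((age - 40) // 10, 4)


def charlson_index(conditions: set[str], age: int | None = None) -> int:
    """Sum Charlson weights, counting only the more severe item of each exclusive pair."""
    unknown = conditions - set(WEIGHTS)
    if unknown:
        raise ValueError(f"unrecognized conditions: {sorted(unknown)}")
    counted = set(conditions)
    for milder, severe in HIERARCHY:
        if severe in counted:
            counted.discard(milder)
    score = sum(WEIGHTS[c] for c in counted)
    if age is not None:
        score += age_points(age)
    return score


# Heart failure (1) + diabetes with end-organ damage (2) + age 65 (2) = 5
print(charlson_index({"congestive_heart_failure", "diabetes_end_organ_damage"}, age=65))
```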

Primary Outcomes

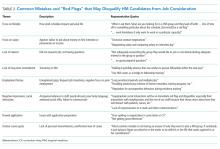

The primary outcome was the incidence of DVT and CRI (Table 2). DVT was defined as radiographically confirmed (eg, ultrasound, computed tomography) thrombosis in the presence of patient signs or symptoms. CRI was defined in accordance with Timsit et al.8 and comprised catheter-related clinical sepsis without bloodstream infection and catheter-related bloodstream infection (CRBSI). Catheter-related clinical sepsis without bloodstream infection was defined as the combination of (1) fever (body temperature >38.5°C) or hypothermia (body temperature <36.5°C), (2) a catheter-tip culture yielding ≥10³ CFU/mL, (3) pus at the insertion site or resolution of clinical sepsis after catheter removal, and (4) the absence of any other infectious focus or of CRBSI. CRBSI was defined as the combination of (1) one or more positive peripheral blood cultures sampled immediately before or within 48 hours after catheter removal, (2) a quantitative catheter-tip culture positive for the same microorganism (same species and susceptibility pattern) or a differential time to positivity of blood cultures of ≥2 hours, and (3) no other infectious focus explaining the positive blood culture result.
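The two CRI definitions are rule-based and can be encoded directly. The sketch below assumes hypothetical field names. It also assumes that the 48-hour blood-culture window has already been checked upstream. It mirrors the criteria as written above rather than any validated surveillance tool.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class CatheterEpisode:
    # Hypothetical fields abstracted from the chart.
    max_temp_c: float
    min_temp_c: float
    tip_culture_cfu_per_ml: float | None  # None if the tip was not cultured
    pus_at_site: bool
    sepsis_resolved_after_removal: bool
    other_infectious_focus: bool
    # Assumed pre-filtered: drawn immediately before or within 48 h after removal.
    positive_peripheral_blood_culture: bool
    tip_culture_matches_blood_isolate: bool  # same species and susceptibility pattern
    differential_time_to_positivity_h: float | None


def is_crbsi(e: CatheterEpisode) -> bool:
    """CRBSI: positive blood culture + (matching tip culture or DTP >= 2 h) + no other focus."""
    dtp_positive = (
        e.differential_time_to_positivity_h is not None
        and e.differential_time_to_positivity_h >= 2
    )
    linked = e.tip_culture_matches_blood_isolate or dtp_positive
    return e.positive_peripheral_blood_culture and linked and not e.other_infectious_focus


def is_clinical_sepsis_without_bsi(e: CatheterEpisode) -> bool:
    """All four criteria: temperature, tip culture >= 10^3 CFU/mL, local sign, no other focus/CRBSI."""
    abnormal_temp = e.max_temp_c > 38.5 or e.min_temp_c < 36.5
    tip_positive = e.tip_culture_cfu_per_ml is not None and e.tip_culture_cfu_per_ml >= 1e3
    local_or_resolved = e.pus_at_site or e.sepsis_resolved_after_removal
    return (
        abnormal_temp
        and tip_positive
        and local_or_resolved
        and not e.other_infectious_focus
        and not is_crbsi(e)
    )


def classify_cri(e: CatheterEpisode) -> str:
    if is_crbsi(e):
        return "CRBSI"
    if is_clinical_sepsis_without_bsi(e):
        return "clinical sepsis without BSI"
    return "no CRI"


febrile_with_pus = CatheterEpisode(39.0, 37.0, 5e3, True, False, False, False, False, None)
print(classify_cri(febrile_with_pus))  # clinical sepsis without BSI
```

Checking CRBSI first means an episode that meets both sets of criteria is counted once, as a bloodstream infection, consistent with criterion (4) of the clinical sepsis definition.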

Secondary Outcomes

Secondary outcomes, defined as minor complications, included infiltration, thrombophlebitis, and catheter occlusion. Infiltration was defined as localized swelling due to infusate or site leakage. Thrombophlebitis was defined as one or more of the following: localized erythema, palpable cord, tenderness, or streaking. Occlusion was defined as catheter nonpatency, indicated by the inability to flush or aspirate. These definitions are consistent with those used in prior studies.9
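A comparable sketch for the secondary-outcome definitions follows. The field names are hypothetical. Treating a failure to either flush or aspirate as occlusion is one reading of the definition, not a confirmed study rule. A single assessment can meet more than one definition.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class SiteAssessment:
    # Hypothetical bedside findings for one device.
    infusate_swelling: bool
    site_leakage: bool
    erythema: bool
    palpable_cord: bool
    tenderness: bool
    streaking: bool
    can_flush: bool
    can_aspirate: bool


def minor_complications(a: SiteAssessment) -> set[str]:
    """Return every minor complication whose stated definition is met."""
    found: set[str] = set()
    # Infiltration: localized swelling due to infusate, or site leakage.
    if a.infusate_swelling or a.site_leakage:
        found.add("infiltration")
    # Thrombophlebitis: one or more of erythema, palpable cord, tenderness, streaking.
    if any((a.erythema, a.palpable_cord, a.tenderness, a.streaking)):
        found.add("thrombophlebitis")
    # Occlusion (assumed reading): inability to flush OR inability to aspirate.
    if not (a.can_flush and a.can_aspirate):
        found.add("occlusion")
    return found


print(sorted(minor_complications(SiteAssessment(False, True, False, False, True, False, True, True))))
# ['infiltration', 'thrombophlebitis']
```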

Statistical Analysis

Patient and LPC characteristics were analyzed using descriptive statistics. Results were reported as percentages, means, medians (interquartile range [IQR]), and rates per 1,000 catheter days. All analyses were conducted in Stata v.15 (StataCorp, College Station, Texas).
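Two summary measures are used throughout the Results. The first is a percentage of devices. The second is an incidence rate per 1,000 catheter days, calculated as events divided by total catheter days, multiplied by 1,000. The analysis itself was run in Stata. The Python sketch below only reproduces the arithmetic, using the CRI and DVT counts reported in the Results.

```python
def percent(events: int, n: int, ndigits: int = 1) -> float:
    """Share of devices with the event, as a rounded percentage."""
    return round(100 * events / n, ndigits)


def rate_per_1000_catheter_days(events: int, catheter_days: float, ndigits: int = 2) -> float:
    """Incidence density: events per 1,000 catheter days of exposure."""
    return round(1000 * events / catheter_days, ndigits)


# Counts taken from the Results: 539 LPCs, 5,543 catheter days.
print(percent(3, 539))                       # CRI: 0.6
print(rate_per_1000_catheter_days(3, 5543))  # CRI: 0.54
print(percent(9, 539))                       # DVT: 1.7
```

The per-1,000-catheter-day rate accounts for differences in dwell time. The simple percentage does not, which is why the two can rank devices differently when dwell times differ, as with the midline and peripheral IV figures cited in the Discussion.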

RESULTS

Within the 20-month study period, 539 LPCs representing 5,543 catheter days were available for analysis. The mean patient age was 53 years. Ninety patients (16.7%) had a history of DVT, and 6 (1.1%) had a history of CRI. The median Charlson Comorbidity Index was 4 (IQR, 2-7), corresponding to an estimated 10-year survival of 53% (Table 1).
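The 53% figure matches the widely used Charlson-based estimate of 10-year survival, 0.983^(e^(0.9 × CCI)). The sketch below evaluates that formula at the reported median and IQR bounds. It assumes this is the estimate the authors applied, which the text does not state explicitly.

```python
import math


def charlson_10yr_survival(cci: int) -> float:
    """Assumed estimate: Charlson 10-year survival = 0.983 ** exp(0.9 * CCI)."""
    return 0.983 ** math.exp(0.9 * cci)


# Reported median (4) and IQR bounds (2 and 7).
for score in (2, 4, 7):
    print(score, f"{charlson_10yr_survival(score):.0%}")
```

At the median score of 4 this gives roughly 53%. Survival falls steeply across the IQR, from about 90% at a score of 2 to near 0% at a score of 7.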

Nearly all LPCs (99.6% [537/539]) were single-lumen catheters, and no patient had more than one concurrent LPC. The first-attempt cannulation success rate was 94.1% (507/539). The brachial or basilic veins were the primary targets (98.7% [532/539]). The most common indications were difficult intravenous access (48.8% [263/539]) and postdischarge parenteral antibiotics (47.9% [258/539]). The median catheter dwell time was eight days (IQR, 4-14 days).

Nine DVTs (1.7% [9/539]) occurred in patients with LPCs. DVT incidence was higher among patients with a history of DVT (5.6% [5/90]). The median time from insertion to DVT was 11 days (IQR, 5-14 days). DVTs were managed with LPC removal and systemic anticoagulation in accordance with guidelines for catheter-related DVT. The CRI rate was 0.6% (3/539), or 0.54 per 1,000 catheter days. Two CRIs were associated with positive blood cultures and one with negative cultures. Infections occurred after a median catheter dwell of 12 days (IQR, 8-15 days). Each was treated with LPC removal and IV antibiotics; two patients received two weeks and one received six weeks of antibiotic therapy (Table 2).

With respect to secondary outcomes, the incidence of infiltration was 0.4% (2/539), of thrombophlebitis 0.7% (4/539), and of catheter occlusion 0.9% (5/539), with times to event of 8.5, 3.75, and 5.4 days, respectively. Collectively, 2.0% of devices experienced a minor complication.

DISCUSSION

In our single-center study, LPCs were primarily inserted for difficult venous access or postdischarge parenteral antibiotics. Despite a clinically complex population with many comorbidities, rates of major and minor complications associated with LPCs were low. These data suggest that LPCs may be a safe alternative to PICCs and other central venous access devices for short-term use.

Our CRI incidence of 0.6% (0.54 per 1,000 catheter days) is similar to or lower than that reported in other studies.2,10,11 Two recent publications on midline catheters observed an incidence of 0%-1.5%, with rates varying widely across individual studies and hospital sites.12,13 A systematic review of intravascular devices reported CRI rates of 0.4% (0.2 per 1,000 catheter days) for midlines and 0.1% (0.5 per 1,000 catheter days) for peripheral IVs, compared with 3.1% (1.1 per 1,000 catheter days) for PICCs.14 However, the studies in that review used catheters of varying lengths and diameters, which may have contributed to heterogeneous outcomes. Consistent with existing data, CRI incidence in our study increased with catheter dwell time.10

The 1.7% DVT rate observed in our study is at the lower end of the range reported in existing data (1.4%-5.9%).12-15 Compared with PICCs (2%-15%), midlines and LPCs appear to have a lower incidence of venous thrombosis, supporting their use as an alternative device for IV access.7,9,12,14 However, because DVT rates were higher among patients with a history of DVT (5/90), caution is warranted when using these devices in this population. The overall rate of minor complications was low, similar to previously published results.10

Our experience with LPCs suggests financial and patient benefits. LPCs cost less than central access devices.4 Given the low CRI rate, appropriate LPC use may reduce costs related to PICC-associated CLABSIs. LPCs may also allow routine blood draws, which could improve the patient experience, albeit with their own risks. Current recommendations support the use of PICCs or LPCs somewhat interchangeably for patients with appropriate indications who need IV therapy for more than five to six days.2,7 Nonetheless, LPCs now account for 57% of vascular access procedures in our center and have reduced our reliance on PICCs and their attendant complications.

Our study has several limitations. First, the terms LPC and midline are often used interchangeably in the literature.4,5 Reported complication rates may therefore not reflect those of LPCs alone, which limits comparisons. Second, ours was a single-center study in which experts assessed device appropriateness and performed ultrasound-guided insertions; our findings may not be generalizable to dissimilar settings. Third, we did not track LPC complications such as nonpatency and leakage. Because prior studies have reported high rates of such events, our findings should be interpreted with caution.15 Finally, we extracted data retrospectively from medical records; limitations in documentation may have influenced our findings.

CONCLUSION

In patients requiring short-term IV therapy, these data suggest LPCs have low complication rates and may be safely used as an alternative option for venous access.

Acknowledgments

The authors thank Drs. Laura Hernandez, Andres Mendez Hernandez, and Victor Prado for their assistance in data collection. The authors also thank Mr. Onofre Donceras and Dr. Sharon Welbel from the John H. Stroger, Jr. Hospital of Cook County Department of Infection Control & Epidemiology for their assistance in reviewing local line infection data.

Drs. Patel and Chopra developed the study design. Drs. Patel, Araujo, Parra Rodriguez, Ramirez Sanchez, and Chopra contributed to manuscript writing. Ms. Snyder provided statistical analysis. All authors have seen and approved the final manuscript for submission.

Disclosures

The authors have nothing to disclose.

1. Anderson NR. Midline catheters: the middle ground of intravenous therapy administration. J Infus Nurs. 2004;27(5):313-321.

2. Adams DZ, Little A, Vinsant C, et al. The midline catheter: a clinical review. J Emerg Med. 2016;51(3):252-258. https://doi.org/10.1016/j.jemermed.2016.05.029.

3. Scoppettuolo G, Pittiruti M, Pitoni S, et al. Ultrasound-guided “short” midline catheters for difficult venous access in the emergency department: a retrospective analysis. Int J Emerg Med. 2016;9(1):3. https://doi.org/10.1186/s12245-016-0100-0.

4. Qin KR, Nataraja RM, Pacilli M. Long peripheral catheters: is it time to address the confusion? J Vasc Access. 2018;20(5). https://doi.org/10.1177/1129729818819730.

5. Pittiruti M, Scoppettuolo G. The GAVeCeLT Manual of PICC and Midlines. Milano: EDRA; 2016.

6. Dawson RB, Moureau NL. Midline catheters: an essential tool in CLABSI reduction. Infection Control Today. https://www.infectioncontroltoday.com/clabsi/midline-catheters-essential-tool-clabsi-reduction. Accessed February 19, 2018.

7. Chopra V, Flanders SA, Saint S, et al. The Michigan Appropriateness Guide for Intravenous Catheters (MAGIC): results from a multispecialty panel using the RAND/UCLA appropriateness method. Ann Intern Med. 2015;163(6):S1-S40. https://doi.org/10.7326/M15-0744.

8. Timsit JF, Schwebel C, Bouadma L, et al. Chlorhexidine-impregnated sponges and less frequent dressing changes for prevention of catheter-related infections in critically ill adults: a randomized controlled trial. JAMA. 2009;301(12):1231-1241. https://doi.org/10.1001/jama.2009.376.

9. Bahl A, Karabon P, Chu D. Comparison of venous thrombosis complications in midlines versus peripherally inserted central catheters: are midlines the safer option? Clin Appl Thromb Hemost. 2019;25. https://doi.org/10.1177/1076029619839150.

10. Goetz AM, Miller J, Wagener MM, et al. Complications related to intravenous midline catheter usage. A 2-year study. J Intraven Nurs. 1998;21(2):76-80.

11. Xu T, Kingsley L, DiNucci S, et al. Safety and utilization of peripherally inserted central catheters versus midline catheters at a large academic medical center. Am J Infect Control. 2016;44(12):1458-1461. https://doi.org/10.1016/j.ajic.2016.09.010.

12. Chopra V, Kaatz S, Swaminathan L, et al. Variation in use and outcomes related to midline catheters: results from a multicentre pilot study. BMJ Qual Saf. 2019;28(9):714-720. https://doi.org/10.1136/bmjqs-2018-008554.

13. Badger J. Long peripheral catheters for deep arm vein venous access: A systematic review of complications. Heart Lung. 2019;48(3):222-225. https://doi.org/10.1016/j.hrtlng.2019.01.002.

14. Maki DG, Kluger DM, Crnich CJ. The risk of bloodstream infection in adults with different intravascular devices: a systematic review of 200 published prospective studies. Mayo Clin Proc. 2006;81(9):1159-1171. https://doi.org/10.4065/81.9.1159.

15. Zerla PA, Caravella G, De Luca G, et al. Open- vs closed-tip valved peripherally inserted central catheters and midlines: Findings from a vascular access database. J Assoc Vasc Access. 2015;20(3):169-176. https://doi.org/10.1016/j.java.2015.06.001.

Introduced in the 1950s, midline catheters have become a popular option for intravenous (IV) access.1,2 Ranging from 8 to 25 cm in length, they are inserted in the veins of the upper arm. Unlike peripherally inserted central catheters (PICCs), the tip of midline catheters terminates proximal to the axillary vein; thus, midlines are peripheral, not central venous access devices.1-3 One popular variation of a midline catheter, though nebulously defined, is the long peripheral catheter (LPC), a device ranging from 6 to 15 cm in length.4,5

Concerns regarding inappropriate use and complications such as thrombosis and central line-associated bloodstream infection (CLABSI) have spurred growth in the use of LPCs.6 However, data regarding complication rates with these devices are limited. Whether LPCs are a safe and viable option for IV access is unclear. We conducted a retrospective study to examine indications, patterns of use, and complications following LPC insertion in hospitalized patients.

METHODS

Device Selection

Our institution is a 470-bed tertiary care, safety-net hospital in Chicago, Illinois. Our vascular access team (VAT) performs a patient assessment and selects IV devices based upon published standards for device appropriateness. 7 We retrospectively collated electronic requests for LPC insertion on adult inpatients between October 2015 and June 2017. Cases where (1) duplicate orders, (2) patient refusal, (3) peripheral intravenous catheter of any length, or (4) PICCs were placed were excluded from this analysis.

VAT and Device Characteristics

We used Bard PowerGlide® (Bard Access Systems, Inc., Salt Lake City, Utah), an 18-gauge, 8-10 cm long, power-injectable, polyurethane LPC. Bundled kits (ie, device, gown, dressing, etc.) were utilized, and VAT providers underwent two weeks of training prior to the study period. All LPCs were inserted in the upper extremities under sterile technique using ultrasound guidance (accelerated Seldinger technique). Placement confirmation was verified by aspiration, flush, and ultrasound visualization of the catheter tip within the vein. An antimicrobial dressing was applied to the catheter insertion site, and daily saline flushes and weekly dressing changes by bedside nurses were used for device maintenance. LPC placement was available on all nonholiday weekdays from 8

Data Selection

For each LPC recipient, demographic and comorbidity data were collected to calculate the Charlson Comorbidity Index (Table 1). Every LPC recipient’s history of deep vein thrombosis (DVT) and catheter-related infection (CRI) was recorded. Procedural information (eg, inserter, vein, and number of attempts) was obtained from insertion notes. All data were extracted from the electronic medical record via chart review. Two reviewers verified outcomes to ensure concordance with stated definitions (ie, DVT, CRI). Device parameters, including dwell time, indication, and time to complication(s) were also collected.

Primary Outcomes

The primary outcome was the incidence of DVT and CRI (Table 2). DVT was defined as radiographically confirmed (eg, ultrasound, computed tomography) thrombosis in the presence of patient signs or symptoms. CRI was defined in accordance with Timsit et al.8 as follows: catheter-related clinical sepsis without bloodstream infection defined as (1) combination of fever (body temperature >38.5°C) or hypothermia (body temperature <36.5°C), (2) catheter-tip culture yielding ≥103 CFUs/mL, (3) pus at the insertion site or resolution of clinical sepsis after catheter removal, and (4) absence of any other infectious focus or catheter-related bloodstream infection (CRBSI). CRBSI was defined as a combination of (1) one or more positive peripheral blood cultures sampled immediately before or within 48 hours after catheter removal, (2) a quantitative catheter-tip culture testing positive for the same microorganisms (same species and susceptibility pattern) or a differential time to positivity of blood cultures ≥2 hours, and (3) no other infectious focus explaining the positive blood culture result.

Secondary Outcomes

Secondary outcomes, defined as minor complications, included infiltration, thrombophlebitis, and catheter occlusion. Infiltration was defined as localized swelling due to infusate or site leakage. Thrombophlebitis was defined as one or more of the following: localized erythema, palpable cord, tenderness, or streaking. Occlusion was defined as nonpatency of the catheter due to the inability to flush or aspirate. Definitions for secondary outcomes are consistent with those used in prior studies.9

Statistical Analysis

Patient and LPC characteristics were analyzed using descriptive statistics. Results were reported as percentages, means, medians (interquartile range [IQR]), and rates per 1,000 catheter days. All analyses were conducted in Stata v.15 (StataCorp, College Station, Texas).

RESULTS

Within the 20-month study period, a total of 539 LPCs representing 5,543 catheter days were available for analysis. The mean patient age was 53 years. A total of 90 patients (16.7%) had a history of DVT, while 6 (1.1%) had a history of CRI. We calculated a median Charlson index of 4 (interquartile range [IQR], 2-7), suggesting an estimated one-year postdischarge survival of 53% (Table 1).

The majority of LPCs (99.6% [537/539]) were single lumen catheters. No patient had more than one concurrent LPC. The cannulation success rate on the first attempt was 93.9% (507/539). The brachial or basilic veins were primarily targeted (98.7%, [532/539]). Difficult intravenous access represented 48.8% (263/539) of indications, and postdischarge parenteral antibiotics constituted 47.9% (258/539). The median catheter dwell time was eight days (IQR, 4-14 days).

Nine DVTs (1.7% [9/539]) occurred in patients with LPCs. The incidence of DVT was higher in patients with a history of DVT (5.7%, 5/90). The median time from insertion to DVT was 11 (IQR, 5-14) days. DVTs were managed with LPC removal and systemic anticoagulation in accordance with catheter-related DVT guidelines. The rate of CRI was 0.6% (3/539), or 0.54 per 1,000 catheter days. Two CRIs had positive blood cultures, while one had negative cultures. Infections occurred after a median of 12 (IQR, 8-15) days of catheter dwell. Each was treated with LPC removal and IV antibiotics, with two patients receiving two weeks and one receiving six weeks of antibiotic therapy (Table 2).

With respect to secondary outcomes, the incidence of infiltration was 0.4% (2/539), thrombophlebitis 0.7% (4/539), and catheter occlusion 0.9% (5/539). The time to event was 8.5, 3.75, and 5.4 days, respectively. Collectively, 2.0% of devices experienced a minor complication.

DISCUSSION

In our single-center study, LPCs were primarily inserted for difficult venous access or parenteral antibiotics. Despite a clinically complex population with a high number of comorbidities, rates of major and minor complications associated with LPCs were low. These data suggest that LPCs are a safe alternative to PICCs and other central access devices for short-term use.

Our incidence of CRI of 0.6% (0.54 per 1,000 catheter days) is similar to or lower than other studies.2,10,11 An incidence of 0%-1.5% was observed in two recent publications about midline catheters, with rates across individual studies and hospital sites varying widely.12,13 A systematic review of intravascular devices reported CRI rates of 0.4% (0.2 per 1,000 catheter days) for midlines and 0.1% (0.5 per 1,000 catheter days for peripheral IVs), in contrast to PICCs at 3.1% (1.1 per 1,000 catheter days).14 However, catheters of varying lengths and diameters were used in studies within the review, potentially leading to heterogeneous outcomes. In accordance with existing data, CRI incidence in our study increased with catheter dwell time.10

The 1.7% rate of DVT observed in our study is on the lower end of existing data (1.4%-5.9%).12-15 Compared with PICCs (2%-15%), the incidence of venous thrombosis appears to be lower with midlines/LPCs—justifying their use as an alternative device for IV access.7,9,12,14 There was an overall low rate of minor complications, similar to recently published results.10 As rates were greater in patients with a history of DVT (5.7%), caution is warranted when using these devices in this population.

Our experience with LPCs suggests financial and patient benefits. The cost of LPCs is lower than central access devices.4 As rates of CRI were low, costs related to CLABSIs from PICC use may be reduced by appropriate LPC use. LPCs may allow the ability to draw blood routinely, which could improve the patient experience—albeit with its own risks. Current recommendations support the use of PICCs or LPCs, somewhat interchangeably, for patients with appropriate indications needing IV therapy for more than five to six days.2,7 However, LPCs now account for 57% of vascular access procedures in our center and have led to a decrease in reliance on PICCs and attendant complications.

Our study has several limitations. First, LPCs and midlines are often used interchangeably in the literature.4,5 Therefore, reported complication rates may not reflect those of LPCs alone and may limit comparisons. Second, ours was a single-center study with experts assessing device appropriateness and performing ultrasound-guided insertions; our findings may not be generalizable to dissimilar settings. Third, we did not track LPC complications such as nonpatency and leakage. As prior studies reported high rates of complications such as these events, caution is advised when interpreting our findings.15 Finally, we retrospectively extracted data from our medical records; limitations in documentation may influence our findings.

CONCLUSION

In patients requiring short-term IV therapy, these data suggest LPCs have low complication rates and may be safely used as an alternative option for venous access.

Acknowledgments

The authors thank Drs. Laura Hernandez, Andres Mendez Hernandez, and Victor Prado for their assistance in data collection. The authors also thank Mr. Onofre Donceras and Dr. Sharon Welbel from the John H. Stroger, Jr. Hospital of Cook County Department of Infection Control & Epidemiology for their assistance in reviewing local line infection data.

Drs. Patel and Chopra developed the study design. Drs. Patel, Araujo, Parra Rodriguez, Ramirez Sanchez, and Chopra contributed to manuscript writing. Ms. Snyder provided statistical analysis. All authors have seen and approved the final manuscript for submission.

Disclosures

The authors have nothing to disclose.

Introduced in the 1950s, midline catheters have become a popular option for intravenous (IV) access.1,2 Ranging from 8 to 25 cm in length, they are inserted in the veins of the upper arm. Unlike peripherally inserted central catheters (PICCs), the tip of midline catheters terminates proximal to the axillary vein; thus, midlines are peripheral, not central venous access devices.1-3 One popular variation of a midline catheter, though nebulously defined, is the long peripheral catheter (LPC), a device ranging from 6 to 15 cm in length.4,5

Concerns regarding inappropriate use and complications such as thrombosis and central line-associated bloodstream infection (CLABSI) have spurred growth in the use of LPCs.6 However, data regarding complication rates with these devices are limited. Whether LPCs are a safe and viable option for IV access is unclear. We conducted a retrospective study to examine indications, patterns of use, and complications following LPC insertion in hospitalized patients.

METHODS

Device Selection

Our institution is a 470-bed tertiary care, safety-net hospital in Chicago, Illinois. Our vascular access team (VAT) performs a patient assessment and selects IV devices based upon published standards for device appropriateness. 7 We retrospectively collated electronic requests for LPC insertion on adult inpatients between October 2015 and June 2017. Cases where (1) duplicate orders, (2) patient refusal, (3) peripheral intravenous catheter of any length, or (4) PICCs were placed were excluded from this analysis.

VAT and Device Characteristics

We used Bard PowerGlide® (Bard Access Systems, Inc., Salt Lake City, Utah), an 18-gauge, 8-10 cm long, power-injectable, polyurethane LPC. Bundled kits (ie, device, gown, dressing, etc.) were utilized, and VAT providers underwent two weeks of training prior to the study period. All LPCs were inserted in the upper extremities under sterile technique using ultrasound guidance (accelerated Seldinger technique). Placement confirmation was verified by aspiration, flush, and ultrasound visualization of the catheter tip within the vein. An antimicrobial dressing was applied to the catheter insertion site, and daily saline flushes and weekly dressing changes by bedside nurses were used for device maintenance. LPC placement was available on all nonholiday weekdays from 8

Data Selection

For each LPC recipient, demographic and comorbidity data were collected to calculate the Charlson Comorbidity Index (Table 1). Every LPC recipient’s history of deep vein thrombosis (DVT) and catheter-related infection (CRI) was recorded. Procedural information (eg, inserter, vein, and number of attempts) was obtained from insertion notes. All data were extracted from the electronic medical record via chart review. Two reviewers verified outcomes to ensure concordance with stated definitions (ie, DVT, CRI). Device parameters, including dwell time, indication, and time to complication(s) were also collected.

Primary Outcomes

The primary outcome was the incidence of DVT and CRI (Table 2). DVT was defined as radiographically confirmed (eg, ultrasound, computed tomography) thrombosis in the presence of patient signs or symptoms. CRI was defined in accordance with Timsit et al.8 as follows: catheter-related clinical sepsis without bloodstream infection defined as (1) combination of fever (body temperature >38.5°C) or hypothermia (body temperature <36.5°C), (2) catheter-tip culture yielding ≥103 CFUs/mL, (3) pus at the insertion site or resolution of clinical sepsis after catheter removal, and (4) absence of any other infectious focus or catheter-related bloodstream infection (CRBSI). CRBSI was defined as a combination of (1) one or more positive peripheral blood cultures sampled immediately before or within 48 hours after catheter removal, (2) a quantitative catheter-tip culture testing positive for the same microorganisms (same species and susceptibility pattern) or a differential time to positivity of blood cultures ≥2 hours, and (3) no other infectious focus explaining the positive blood culture result.

Secondary Outcomes

Secondary outcomes, defined as minor complications, included infiltration, thrombophlebitis, and catheter occlusion. Infiltration was defined as localized swelling due to infusate or site leakage. Thrombophlebitis was defined as one or more of the following: localized erythema, palpable cord, tenderness, or streaking. Occlusion was defined as nonpatency of the catheter due to the inability to flush or aspirate. Definitions for secondary outcomes are consistent with those used in prior studies.9

Statistical Analysis

Patient and LPC characteristics were analyzed using descriptive statistics. Results were reported as percentages, means, medians (interquartile range [IQR]), and rates per 1,000 catheter days. All analyses were conducted in Stata v.15 (StataCorp, College Station, Texas).

RESULTS

Within the 20-month study period, a total of 539 LPCs representing 5,543 catheter days were available for analysis. The mean patient age was 53 years. A total of 90 patients (16.7%) had a history of DVT, while 6 (1.1%) had a history of CRI. We calculated a median Charlson index of 4 (interquartile range [IQR], 2-7), suggesting an estimated one-year postdischarge survival of 53% (Table 1).

The majority of LPCs (99.6% [537/539]) were single lumen catheters. No patient had more than one concurrent LPC. The cannulation success rate on the first attempt was 93.9% (507/539). The brachial or basilic veins were primarily targeted (98.7%, [532/539]). Difficult intravenous access represented 48.8% (263/539) of indications, and postdischarge parenteral antibiotics constituted 47.9% (258/539). The median catheter dwell time was eight days (IQR, 4-14 days).

Nine DVTs (1.7% [9/539]) occurred in patients with LPCs. The incidence of DVT was higher in patients with a history of DVT (5.7%, 5/90). The median time from insertion to DVT was 11 (IQR, 5-14) days. DVTs were managed with LPC removal and systemic anticoagulation in accordance with catheter-related DVT guidelines. The rate of CRI was 0.6% (3/539), or 0.54 per 1,000 catheter days. Two CRIs had positive blood cultures, while one had negative cultures. Infections occurred after a median of 12 (IQR, 8-15) days of catheter dwell. Each was treated with LPC removal and IV antibiotics, with two patients receiving two weeks and one receiving six weeks of antibiotic therapy (Table 2).

With respect to secondary outcomes, the incidence of infiltration was 0.4% (2/539), thrombophlebitis 0.7% (4/539), and catheter occlusion 0.9% (5/539). The time to event was 8.5, 3.75, and 5.4 days, respectively. Collectively, 2.0% of devices experienced a minor complication.

DISCUSSION

In our single-center study, LPCs were primarily inserted for difficult venous access or parenteral antibiotics. Despite a clinically complex population with a high number of comorbidities, rates of major and minor complications associated with LPCs were low. These data suggest that LPCs are a safe alternative to PICCs and other central access devices for short-term use.

Our incidence of CRI of 0.6% (0.54 per 1,000 catheter days) is similar to or lower than other studies.2,10,11 An incidence of 0%-1.5% was observed in two recent publications about midline catheters, with rates across individual studies and hospital sites varying widely.12,13 A systematic review of intravascular devices reported CRI rates of 0.4% (0.2 per 1,000 catheter days) for midlines and 0.1% (0.5 per 1,000 catheter days for peripheral IVs), in contrast to PICCs at 3.1% (1.1 per 1,000 catheter days).14 However, catheters of varying lengths and diameters were used in studies within the review, potentially leading to heterogeneous outcomes. In accordance with existing data, CRI incidence in our study increased with catheter dwell time.10

The 1.7% rate of DVT observed in our study is at the lower end of previously reported rates (1.4%-5.9%).12-15 The incidence of venous thrombosis appears to be lower with midlines/LPCs than with PICCs (2%-15%), which supports their use as an alternative device for IV access.7,9,12,14 However, the DVT rate was higher in patients with a history of DVT (5.7%), so caution is warranted when using these devices in this population. The overall rate of minor complications was low, similar to previously published results.10

Our experience with LPCs suggests potential financial and patient benefits. LPCs cost less than central access devices.4 Because CRI rates were low, appropriate LPC use may reduce costs related to CLABSIs from PICC use. LPCs may also allow routine blood draws, which could improve the patient experience, albeit with its own risks. Current recommendations support the use of PICCs or LPCs, somewhat interchangeably, for patients with appropriate indications who need IV therapy for more than five to six days.2,7 At our center, LPCs now account for 57% of vascular access procedures and have reduced our reliance on PICCs and their attendant complications.

Our study has several limitations. First, LPCs and midlines are often used interchangeably in the literature.4,5 Reported complication rates may therefore not reflect those of LPCs alone, which may limit comparisons. Second, ours was a single-center study in which experts assessed device appropriateness and performed ultrasound-guided insertions, so our findings may not be generalizable to dissimilar settings. Third, we did not track LPC complications such as nonpatency and leakage. Because prior studies have reported high rates of these events, caution is advised when interpreting our findings.15 Finally, we extracted data retrospectively from medical records, and limitations in documentation may have influenced our findings.

CONCLUSION

In patients requiring short-term IV therapy, these data suggest LPCs have low complication rates and may be safely used as an alternative option for venous access.

Acknowledgments

The authors thank Drs. Laura Hernandez, Andres Mendez Hernandez, and Victor Prado for their assistance in data collection. The authors also thank Mr. Onofre Donceras and Dr. Sharon Welbel from the John H. Stroger, Jr. Hospital of Cook County Department of Infection Control & Epidemiology for their assistance in reviewing local line infection data.

Drs. Patel and Chopra developed the study design. Drs. Patel, Araujo, Parra Rodriguez, Ramirez Sanchez, and Chopra contributed to manuscript writing. Ms. Snyder provided statistical analysis. All authors have seen and approved the final manuscript for submission.

Disclosures

The authors have nothing to disclose.

1. Anderson NR. Midline catheters: the middle ground of intravenous therapy administration. J Infus Nurs. 2004;27(5):313-321.

2. Adams DZ, Little A, Vinsant C, et al. The midline catheter: a clinical review. J Emerg Med. 2016;51(3):252-258. https://doi.org/10.1016/j.jemermed.2016.05.029.

3. Scoppettuolo G, Pittiruti M, Pitoni S, et al. Ultrasound-guided “short” midline catheters for difficult venous access in the emergency department: a retrospective analysis. Int J Emerg Med. 2016;9(1):3. https://doi.org/10.1186/s12245-016-0100-0.

4. Qin KR, Nataraja RM, Pacilli M. Long peripheral catheters: is it time to address the confusion? J Vasc Access. 2018;20(5). https://doi.org/10.1177/1129729818819730.

5. Pittiruti M, Scoppettuolo G. The GAVeCeLT Manual of PICC and Midlines. Milano: EDRA; 2016.

6. Dawson RB, Moureau NL. Midline catheters: an essential tool in CLABSI reduction. Infection Control Today. https://www.infectioncontroltoday.com/clabsi/midline-catheters-essential-tool-clabsi-reduction. Accessed February 19, 2018.

7. Chopra V, Flanders SA, Saint S, et al. The Michigan Appropriateness Guide for Intravenous Catheters (MAGIC): results from a multispecialty panel using the RAND/UCLA appropriateness method. Ann Intern Med. 2015;163(6):S1-S40. https://doi.org/10.7326/M15-0744.

8. Timsit JF, Schwebel C, Bouadma L, et al. Chlorhexidine-impregnated sponges and less frequent dressing changes for prevention of catheter-related infections in critically ill adults: a randomized controlled trial. JAMA. 2009;301(12):1231-1241. https://doi.org/10.1001/jama.2009.376.

9. Bahl A, Karabon P, Chu D. Comparison of venous thrombosis complications in midlines versus peripherally inserted central catheters: are midlines the safer option? Clin Appl Thromb Hemost. 2019;25. https://doi.org/10.1177/1076029619839150.

10. Goetz AM, Miller J, Wagener MM, et al. Complications related to intravenous midline catheter usage. A 2-year study. J Intraven Nurs. 1998;21(2):76-80.

11. Xu T, Kingsley L, DiNucci S, et al. Safety and utilization of peripherally inserted central catheters versus midline catheters at a large academic medical center. Am J Infect Control. 2016;44(12):1458-1461. https://doi.org/10.1016/j.ajic.2016.09.010.

12. Chopra V, Kaatz S, Swaminathan L, et al. Variation in use and outcomes related to midline catheters: results from a multicentre pilot study. BMJ Qual Saf. 2019;28(9):714-720. https://doi.org/10.1136/bmjqs-2018-008554.

13. Badger J. Long peripheral catheters for deep arm vein venous access: A systematic review of complications. Heart Lung. 2019;48(3):222-225. https://doi.org/10.1016/j.hrtlng.2019.01.002.

14. Maki DG, Kluger DM, Crnich CJ. The risk of bloodstream infection in adults with different intravascular devices: a systematic review of 200 published prospective studies. Mayo Clin Proc. 2006;81(9):1159-1171. https://doi.org/10.4065/81.9.1159.

15. Zerla PA, Caravella G, De Luca G, et al. Open- vs closed-tip valved peripherally inserted central catheters and midlines: Findings from a vascular access database. J Assoc Vasc Access. 2015;20(3):169-176. https://doi.org/10.1016/j.java.2015.06.001.

© 2019 Society of Hospital Medicine

Hospital Medicine Has a Specialty Code. Is the Memo Still in the Mail?

In recognizing the importance of Hospital Medicine (HM) and its practitioners, the Centers for Medicare and Medicaid Services (CMS) awarded the field a specialty designation in 2016. The code is self-selected by hospitalists and used by the CMS for programmatic and claims processing purposes. The provider or a designee submits the HM code (“C6”) to the CMS through the Provider Enrollment, Chain, and Ownership System (PECOS), which in turn links it to the provider's National Provider Identifier (NPI) data.

The Society of Hospital Medicine® sought the designation because of the growing number of hospitalists practicing nationally, their impact on the practice of medicine in the inpatient setting,1 and their secondary effects on global care.2 Early CMS efforts to move physician payment toward value-based payment used specialty designations to set benchmarks for cost metrics. This made it more important for hospitalists to be able to assess their own performance. Action was also needed to identify shifts in resource utilization and workforce mix in the broader context of health reform. Essentially, to understand the “why” of hospital medicine, the field required an accounting of the “who” and the “where.”

The CMS granted the C6 designation in 2016, and it went live in April 2017. The code has existed for only about two years, but calls for its creation began long before that. An early look at its uptake can therefore help steer the role of HM in future CMS and managed care organization (MCO) quality, payment, and practice improvement activities.

METHODS

We analyzed publicly available 2017 Medicare Part B utilization data3 to explore the rates of Evaluation & Management (E&M) codes used across specialties, using the C6 designation to identify hospitalists.

To estimate the percentage of hospitalists likely billing under the C6 designation, we compared rates of C6 billing with expected rates of hospitalist E&M billing. The expected rates were based on an analysis of hospitalist prevalence in the 2012 Medicare physician payment data. Before the C6 designation was implemented, prior work identified hospitalists by applying thresholds to the share of a provider's E&M services billed with inpatient codes.4,5 We used our previously published approach, a threshold of 60% of E&M services billed as inpatient hospital services, to distinguish hospitalists from their parent specialties.6 We also calculated expected rates of E&M billing for other select specialties by applying 2012 E&M coding patterns to the 2017 data.
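
The threshold rule can be read as a simple classification. A provider from an eligible parent specialty is labeled a hospitalist when inpatient hospital E&M services make up at least 60% of that provider's E&M claims. The sketch below assumes this reading of the rule. The `Provider` record, its fields, the placeholder NPIs, the specialties, and the counts are all hypothetical. The sketch does not reproduce the published algorithm's exact code lists or eligibility criteria.

```python
from dataclasses import dataclass

# Assumed cutoff from the text: >= 60% of E&M services are inpatient hospital services.
HOSPITALIST_THRESHOLD = 0.60


@dataclass
class Provider:
    """Hypothetical per-provider summary of E&M claim counts."""
    npi: str
    parent_specialty: str
    inpatient_em: int  # inpatient hospital E&M services billed
    total_em: int      # all E&M services billed


def is_hospitalist(p: Provider, threshold: float = HOSPITALIST_THRESHOLD) -> bool:
    """Classify a provider as a hospitalist by share of inpatient E&M services."""
    if p.total_em == 0:
        return False  # no E&M activity, so no basis for classification
    return p.inpatient_em / p.total_em >= threshold


# Hypothetical providers with placeholder NPIs (not real identifiers).
providers = [
    Provider("0000000001", "Internal Medicine", 1800, 2000),  # 90% inpatient
    Provider("0000000002", "Internal Medicine", 300, 2500),   # 12% inpatient
    Provider("0000000003", "Family Practice", 600, 1000),     # exactly 60%
]

for p in providers:
    print(p.npi, p.parent_specialty, is_hospitalist(p))
```

A provider exactly at the cutoff is counted as a hospitalist here. Whether the published rule uses "at least" or "more than" 60% is an assumption worth checking against the original method.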

RESULTS

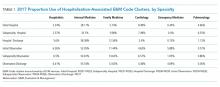

Table 1 shows the distribution of inpatient E&M codes billed by hospitalists identified by the C6 designation, as well as the use of those codes by other specialists. C6-identified hospitalists billed only 2%-5% of inpatient codes and 6% of observation codes. For example, discharge CPT codes 99238 and 99239 were billed 7,872,323 times in 2017, but C6-identified hospitalists accounted for only 441,420 of these services.
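
The discharge-code example can be restated as a share. Using only the two counts given in the text, C6-identified hospitalists billed roughly 5.6% of 2017 discharge services (CPT 99238 and 99239). The function name below is illustrative.

```python
def share_percent(part: int, whole: int) -> float:
    """Percentage of all billed services accounted for by one group."""
    if whole <= 0:
        raise ValueError("whole must be positive")
    return 100.0 * part / whole


# 2017 discharge services (CPT 99238 + 99239), counts from the text.
ALL_DISCHARGES = 7_872_323
C6_DISCHARGES = 441_420

print(f"C6 share of discharge codes: {share_percent(C6_DISCHARGES, ALL_DISCHARGES):.1f}%")
```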

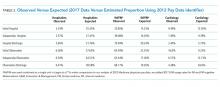

Table 2 compares observed billing rates by specialty, using the C6 designation to identify hospitalists, with the rates expected when the 2012 threshold-based specialty designation is applied to the 2017 data. By this comparison, hospitalist billing under the C6 modifier is approximately one-tenth of their expected volume of E&M services.
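
The comparison in Table 2 amounts to a ratio of observed to expected hospitalist billing. As we read the Methods, expected volume is the 2012 threshold-based hospitalist share of a code applied to that code's 2017 total. The sketch below illustrates the calculation with purely hypothetical numbers chosen to give a ratio of one-tenth. These numbers are not values from Table 2, and the function names are illustrative.

```python
def expected_volume(share_2012: float, total_2017: int) -> float:
    """Expected hospitalist volume: 2012 hospitalist share applied to 2017 totals."""
    return share_2012 * total_2017


def adoption_ratio(observed_c6: int, expected: float) -> float:
    """Observed C6-billed volume as a fraction of expected hospitalist volume."""
    if expected <= 0:
        raise ValueError("expected volume must be positive")
    return observed_c6 / expected


# Hypothetical inputs, for illustration only (not study data).
share_2012 = 0.40       # assumed hospitalist share of a code in 2012
total_2017 = 1_000_000  # assumed 2017 volume of that code
observed_c6 = 40_000    # assumed 2017 volume billed under C6

expected_c6 = expected_volume(share_2012, total_2017)
print(f"expected: {expected_c6:,.0f}; ratio: {adoption_ratio(observed_c6, expected_c6):.2f}")
```

A ratio near 0.10 is consistent with roughly one in ten hospitalists billing under C6. This interpretation holds only if hospitalists who use the code bill at volumes similar to those who do not.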

DISCUSSION

We examined patterns of hospitalist billing under the C6 hospital medicine specialty modifier and compared them with the hospitalist activity we would expect under a threshold-based approach. The difference between the C6-based and threshold-based estimates suggests that as few as 10% of hospitalists have adopted the C6 code.

Why is adoption of the C6 modifier so low? Administrative data do not allow us to identify why providers have not used the C6 designation, but we can speculate on causes. To date, using the code carries few direct risks, but it also offers few recognized benefits. We hypothesize that several factors may be impeding providers' use of the modifier. The first may be knowledge-related; ie, hospitalists might not be familiar with the specialty code or might be unaware of the importance of accurately capturing hospitalist practice patterns. They may also wrongly assume that their practices know about the change or have submitted the appropriate paperwork. Similarly, practice personnel may not know about the code or understand why it matters. The second factor may be logistical; ie, administrative barriers, such as difficulty accessing PECOS and out-of-date paper registration forms, may impede rapid uptake. The final factor may involve clinicians who expect to work as hospitalists only briefly and who hesitate to carry the identifier into a later non-HM position. Providers may mistakenly believe that using the C6 code could limit their future scope of practice. In fact, they may change their Medicare specialty designation at any time.

Changes in reimbursement models, including Bundled Payments for Care Improvement Advanced (BPCI-A) and other value-based initiatives, heighten the need to identify hospitalists more accurately. Valid performance comparisons also depend on classifying individual providers and groups correctly. The CMS continues to advance cost and efficiency measures on its publicly accessible Physician Compare website (physiciancompare.gov).7 Both the CMS and commercial payers need a better way to identify services provided by hospitalists. Without it, the potential benefits hospitalists deliver in care quality, safety, or efficiency could go unnoticed by payers and policymakers. Moreover, the CMS may use C6 in other ways across its payment systems and programmatic efforts that rely on specialty to distinguish among Medicare providers.8 Finally, C6 is an identifier for the Medicare fee-for-service system, and state programs and MCOs may not identify hospitalists in the same manner, or at all. This gap may make it harder for those payers and for HM researchers to study trends in care delivery. The specialty needs to work with these other payers to help revise their information systems so that they better account for how hospitalists care for their insured populations.

We would expect C6 adoption to increase naturally over time, but meeting stakeholders’ data needs requires faster uptake. Our analysis is limited by the assumption that specialty patterns of code use remained similar from 2012 to 2017. Even so, the large gap between the C6-based and threshold-based estimates strongly suggests underuse of the C6 designation. The CMS and MCOs increasingly need valid and representative data, and C6 can be used to assess “HM-adjusted” resource utilization, relative value units (RVUs), and performance evaluations. As these uses grow, hospitalists may have more incentive to use the C6 specialty code in the same way that other recognized subspecialties use their codes.

Disclaimer

The research reported here was supported by the Department of Veterans Affairs, Veterans Health Administration, and the Health Services Research and Development Service. The views expressed in this article are those of the authors and do not necessarily reflect the position or policy of the Department of Veterans Affairs.

1. Wachter RM, Goldman L. Zero to 50,000—The 20th Anniversary of the Hospitalist. N Engl J Med. 2016;375(11):1009-1011. https://doi.org/10.1056/NEJMp1607958.

2. Quinn R. HM 2016: A year in review. The Hospitalist. 2016;12. https://www.the-hospitalist.org/hospitalist/article/121419/everything-you-need-know-about-bundled-payments-care-improvement.

3. Centers for Medicare and Medicaid Services. Medicare Utilization for Part B. https://www.cms.gov/research-statistics-data-and-systems/statistics-trends-and-reports/medicarefeeforsvcpartsab/medicareutilizationforpartb.html. Accessed June 14, 2019.

4. Saint S, Christakis DA, Baldwin L-M, Rosenblatt R. Is hospitalism new? An analysis of Medicare data from Washington State in 1994. Eff Clin Pract. 2000;3(1):35-39.

5. Welch WP, Stearns SC, Cuellar AE, Bindman AB. Use of hospitalists by Medicare beneficiaries: a national picture. Medicare Medicaid Res Rev. 2014;4(2). https://doi.org/10.5600/mmrr2014-004-02-b01.

6. Lapps J, Flansbaum B, Leykum L, Boswell J, Haines L. Updating threshold-based identification of hospitalists in 2012 Medicare pay data. J Hosp Med. 2016;11(1):45-47. https://doi.org/10.1002/jhm.2480.

7. Centers for Medicare & Medicaid Services. Physician Compare Initiative. https://www.cms.gov/Medicare/Quality-Initiatives-Patient-Assessment-Instruments/physician-compare-initiative/index.html. Accessed June 14, 2019.

8. Centers for Medicare & Medicaid Services. Revisions to Payment Policies under the Medicare Physician Fee Schedule, Quality Payment Program and Other Revisions to Part B for CY 2020 (CMS-1715-P). Accessed prior to publishing in the Federal Register through www.regulations.gov.

In recognizing the importance of Hospital Medicine (HM) and its practitioners, the Centers for Medicare and Medicaid Services (CMS) awarded the field a specialty designation in 2016. The code is self-selected by hospitalists and used by the CMS for programmatic and claims processing purposes. The HM code (“C6”), submitted to the CMS by the provider or their designee through the Provider Enrollment Chain and Ownership System (PECOS), in turn links to the National Provider Identification provider data.

The Society of Hospital Medicine® sought the designation given the growth of hospitalists practicing nationally, their impact on the practice of medicine in the inpatient setting,1 and their secondary effects on global care.2 In fact, early efforts by the CMS to transition physician payments to the value-based payment used specialty designations to create benchmarks in cost metrics, heightening the importance for hospitalists to be able to assess their performance. The need to identify any shifts in resource utilization and workforce mix in the broader context of health reforms necessitated action. Essentially, to understand the “why’s” of hospital medicine, the field required an accounting of the “who’s” and “where’s.”

The CMS granted the C6 designation in 2016, and it went live in April 2017. Despite the code’s brief two-year tenure, calls for its creation long predated its existence. As such, the new modifier requires an initial look to help steer the role of HM in any future CMS and managed care organization (MCO) quality, payment, or practice improvement activities.

METHODS

We analyzed publicly available 2017 Medicare Part B utilization data3 to explore the rates of Evaluation & Management (E&M) codes used across specialties, using the C6 designation to identify hospitalists.

To try to estimate the percentage of hospitalists who were likely billing under the C6 designation, we then compared the rates of C6 billing to expected rates of hospitalist E&M billing based on an analysis of hospitalist prevalence in the 2012 Medicare physician payment data. Prior work to identify hospitalists before the implementation of the C6 designation relied on thresholds of inpatient codes for various inpatient E&M services.4,5 We used our previously published approach of a threshold of 60% of inpatient E&M hospital services to differentiate hospitalists from their parent specialties.6 We also calculated the expected rates of E&M billing for other select specialty services by applying the 2012 E&M coding trends to the 2017 data.

RESULTS

Table 1 shows the distribution of inpatient E&M codes billed by hospitalists using the C6 identification, as well as the use of those codes by other specialists. Hospitalists identified by the C6 designation billed only 2%-5% of inpatient and 6% of observation codes. As an example, in 2017, discharge CPT codes 99238 and 99239 were used 7,872,323 times. However, C6-identified hospitalists accounted for only 441,420 of these codes.

Table 2 compares the observed billing rates by specialty using the C6 designation to identify hospitalists with what would be the expected rates with the 2012 threshold-based specialty billing designation applied to the 2017 data. This comparison demonstrates that hospitalist billing based on the C6 modifier use is approximately one-tenth of what would have been their expected volume of E&M services.

DISCUSSION

We examined the patterns of hospitalist billing using the C6 hospital medicine specialty modifier, comparing billing patterns with what we would expect hospitalist activity to be if we had used a threshold-based approach. The difference between the C6 and the threshold-based approaches to assessing hospitalist activity suggests that as few as 10% of hospitalists have adopted the C6 code.

Why is the adoption of the C6 modifier so low? Although administrative data do not allow us to identify the reasons why providers chose to disregard the C6 designation, we can speculate on causes. There are, to date, low direct risks and recognized benefits with using the code. We hypothesize that several factors could be impeding whether providers use the modifier to bring about potential gains. The first may be knowledge-related; ie, hospitalists might not be familiar with the specialty code or unaware of the importance of accurately capturing hospitalist practice patterns. They may also wrongly assume that their practices are aware of the revision or have submitted the appropriate paperwork. Similarly, practice personnel may lack knowledge regarding the code or the importance of its use. The second factor may be logistical; ie, administrative barriers such as difficulty accessing the Provider Enrollment, Chain and Ownership System (PECOS) and out-of-date paper registration forms impede fast uptake. The final reason might be related to professionals whose tenures as hospitalists will be brief, and their unease of carrying an identifier into their next non-HM position prompts hesitation. Providers may have a misperception that using the C6 code may somehow impact or limit their future scope of practice, when, in fact, they may change their Medicare specialty designation at any time.

Changes in reimbursement models, including the Bundled Payments for Care Improvement Advanced (BPCI-A) and other value-based initiatives, heighten the need for a more accurate identification of the specialty. Classifying individual providers and groups to make valid performance comparisons is relevant for the same reasons. The CMS continues to advance cost and efficiency measures in its publicly accessible physiciancompare.gov website.7 Without an improved ability to identify services provided by hospitalists—by both CMS and commercial entities—the potential benefits delivered by hospitalists in terms of improved care quality, safety, or efficiency could go undetected by payers and policymakers. Moreover, C6 may be used in other ways by the CMS throughout its payment systems and programmatic efforts that use specialty to differentiate between Medicare providers.8 Finally, the C6 is an identifier for the Medicare fee-for-service system; state programs and MCOs may not identify hospitalists in the same manner, or at all. Therefore, it may make it more difficult for those groups and HM researchers to study the trends in care delivery changes. The specialty needs to engage with these other payers to assist in revising their information systems to better account for how hospitalists care for their insured populations.

Although we would expect a natural increase in C6 adoption over time, optimally meeting stakeholders’ data needs requires more rapid uptake. Our analysis is limited by our assumption that specialty patterns of code use remain similar from 2012 to 2017. Regardless, the magnitude of the difference between the estimate of hospitalists using the C6 versus billing thresholds strongly suggests underuse of the C6 designation. The CMS and MCOs have an increasing need for valid and representative data, and C6 can be used to assess “HM-adjusted” resource utilization, relative value units (RVUs), and performance evaluations. Therefore, hospitalists may see more incentives to use the C6 specialty code in a manner consistent with other recognized subspecialties.

Disclaimer

The research reported here was supported by the Department of Veterans Affairs, Veterans Health Administration, and the Health Services Research and Development Service. The views expressed in this article are those of the authors and do not necessarily reflect the position or policy of the Department of Veterans Affairs.

In recognizing the importance of Hospital Medicine (HM) and its practitioners, the Centers for Medicare and Medicaid Services (CMS) awarded the field a specialty designation in 2016. The code is self-selected by hospitalists and used by the CMS for programmatic and claims processing purposes. The HM code (“C6”), submitted to the CMS by the provider or their designee through the Provider Enrollment Chain and Ownership System (PECOS), in turn links to the National Provider Identification provider data.

The Society of Hospital Medicine® sought the designation given the growth of hospitalists practicing nationally, their impact on the practice of medicine in the inpatient setting,1 and their secondary effects on global care.2 In fact, early efforts by the CMS to transition physician payments to the value-based payment used specialty designations to create benchmarks in cost metrics, heightening the importance for hospitalists to be able to assess their performance. The need to identify any shifts in resource utilization and workforce mix in the broader context of health reforms necessitated action. Essentially, to understand the “why’s” of hospital medicine, the field required an accounting of the “who’s” and “where’s.”

The CMS granted the C6 designation in 2016, and it went live in April 2017. Despite the code’s brief two-year tenure, calls for its creation long predated its existence. As such, the new modifier requires an initial look to help steer the role of HM in any future CMS and managed care organization (MCO) quality, payment, or practice improvement activities.

METHODS

We analyzed publicly available 2017 Medicare Part B utilization data3 to explore the rates of Evaluation & Management (E&M) codes used across specialties, using the C6 designation to identify hospitalists.

To try to estimate the percentage of hospitalists who were likely billing under the C6 designation, we then compared the rates of C6 billing to expected rates of hospitalist E&M billing based on an analysis of hospitalist prevalence in the 2012 Medicare physician payment data. Prior work to identify hospitalists before the implementation of the C6 designation relied on thresholds of inpatient codes for various inpatient E&M services.4,5 We used our previously published approach of a threshold of 60% of inpatient E&M hospital services to differentiate hospitalists from their parent specialties.6 We also calculated the expected rates of E&M billing for other select specialty services by applying the 2012 E&M coding trends to the 2017 data.

RESULTS

Table 1 shows the distribution of inpatient E&M codes billed by hospitalists using the C6 identification, as well as the use of those codes by other specialists. Hospitalists identified by the C6 designation billed only 2%-5% of inpatient and 6% of observation codes. As an example, in 2017, discharge CPT codes 99238 and 99239 were used 7,872,323 times. However, C6-identified hospitalists accounted for only 441,420 of these codes.

Table 2 compares the observed billing rates by specialty using the C6 designation to identify hospitalists with what would be the expected rates with the 2012 threshold-based specialty billing designation applied to the 2017 data. This comparison demonstrates that hospitalist billing based on the C6 modifier use is approximately one-tenth of what would have been their expected volume of E&M services.

DISCUSSION

We examined the patterns of hospitalist billing using the C6 hospital medicine specialty modifier, comparing billing patterns with what we would expect hospitalist activity to be if we had used a threshold-based approach. The difference between the C6 and the threshold-based approaches to assessing hospitalist activity suggests that as few as 10% of hospitalists have adopted the C6 code.

Why is the adoption of the C6 modifier so low? Although administrative data do not allow us to identify the reasons why providers chose to disregard the C6 designation, we can speculate on causes. There are, to date, low direct risks and recognized benefits with using the code. We hypothesize that several factors could be impeding whether providers use the modifier to bring about potential gains. The first may be knowledge-related; ie, hospitalists might not be familiar with the specialty code or unaware of the importance of accurately capturing hospitalist practice patterns. They may also wrongly assume that their practices are aware of the revision or have submitted the appropriate paperwork. Similarly, practice personnel may lack knowledge regarding the code or the importance of its use. The second factor may be logistical; ie, administrative barriers such as difficulty accessing the Provider Enrollment, Chain and Ownership System (PECOS) and out-of-date paper registration forms impede fast uptake. The final reason might be related to professionals whose tenures as hospitalists will be brief, and their unease of carrying an identifier into their next non-HM position prompts hesitation. Providers may have a misperception that using the C6 code may somehow impact or limit their future scope of practice, when, in fact, they may change their Medicare specialty designation at any time.

Changes in reimbursement models, including the Bundled Payments for Care Improvement Advanced (BPCI-A) and other value-based initiatives, heighten the need for a more accurate identification of the specialty. Classifying individual providers and groups to make valid performance comparisons is relevant for the same reasons. The CMS continues to advance cost and efficiency measures in its publicly accessible physiciancompare.gov website.7 Without an improved ability to identify services provided by hospitalists—by both CMS and commercial entities—the potential benefits delivered by hospitalists in terms of improved care quality, safety, or efficiency could go undetected by payers and policymakers. Moreover, C6 may be used in other ways by the CMS throughout its payment systems and programmatic efforts that use specialty to differentiate between Medicare providers.8 Finally, the C6 is an identifier for the Medicare fee-for-service system; state programs and MCOs may not identify hospitalists in the same manner, or at all. Therefore, it may make it more difficult for those groups and HM researchers to study the trends in care delivery changes. The specialty needs to engage with these other payers to assist in revising their information systems to better account for how hospitalists care for their insured populations.

Although we would expect C6 adoption to increase naturally over time, optimally meeting stakeholders’ data needs requires more rapid uptake. Our analysis is limited by our assumption that specialty patterns of code use remained similar from 2012 to 2017. Regardless, the magnitude of the difference between the number of hospitalists using the C6 code and the number estimated from billing thresholds strongly suggests underuse of the C6 designation. The CMS and MCOs have an increasing need for valid and representative data, and C6 can be used to assess “HM-adjusted” resource utilization, relative value units (RVUs), and performance evaluations. Therefore, hospitalists may see more incentives to use the C6 specialty code in a manner consistent with other recognized subspecialties.

Disclaimer

The research reported here was supported by the Department of Veterans Affairs, Veterans Health Administration, and the Health Services Research and Development Service. The views expressed in this article are those of the authors and do not necessarily reflect the position or policy of the Department of Veterans Affairs.

1. Wachter RM, Goldman L. Zero to 50,000—The 20th Anniversary of the Hospitalist. N Engl J Med. 2016;375(11):1009-1011. https://doi.org/10.1056/NEJMp1607958.

2. Quinn R. HM 2016: A year in review. The Hospitalist. 2016;12. https://www.the-hospitalist.org/hospitalist/article/121419/everything-you-need-know-about-bundled-payments-care-improvement.

3. Centers for Medicare & Medicaid Services. Medicare Utilization for Part B. https://www.cms.gov/research-statistics-data-and-systems/statistics-trends-and-reports/medicarefeeforsvcpartsab/medicareutilizationforpartb.html. Accessed June 14, 2019.

4. Saint S, Christakis DA, Baldwin L-M, Rosenblatt R. Is hospitalism new? An analysis of Medicare data from Washington State in 1994. Eff Clin Pract. 2000;3(1):35-39.

5. Welch WP, Stearns SC, Cuellar AE, Bindman AB. Use of hospitalists by Medicare beneficiaries: a national picture. Medicare Medicaid Res Rev. 2014;4(2). https://doi.org/10.5600/mmrr2014-004-02-b01.

6. Lapps J, Flansbaum B, Leykum L, Boswell J, Haines L. Updating threshold-based identification of hospitalists in 2012 Medicare pay data. J Hosp Med. 2016;11(1):45-47. https://doi.org/10.1002/jhm.2480.

7. Centers for Medicare & Medicaid Services. Physician Compare Initiative. https://www.cms.gov/Medicare/Quality-Initiatives-Patient-Assessment-Instruments/physician-compare-initiative/index.html. Accessed June 14, 2019.

8. Centers for Medicare & Medicaid Services. Revisions to Payment Policies under the Medicare Physician Fee Schedule, Quality Payment Program and Other Revisions to Part B for CY 2020 (CMS-1715-P). Accessed prior to publishing in the Federal Register through www.regulations.gov.

© 2019 Society of Hospital Medicine

Community Pediatric Hospitalist Workload: Results from a National Survey

As a newly recognized specialty, pediatric hospital medicine (PHM) continues to expand and diversify.1 Pediatric hospitalists care for children in hospitals ranging from small, rural community hospitals to large, free-standing quaternary children’s hospitals.2-4 In addition, more than 10% of graduating pediatric residents are seeking future careers within PHM.5

In 2018, Fromme et al. described clinical workload for pediatric hospitalists in university-based settings.6 They characterized the diversity of work models and programmatic sustainability, but their study was limited to university-based programs. With over half of children receiving care within community hospitals,7 workforce patterns for community-based pediatric hospitalists should also be characterized to maximize sustainability and minimize attrition across the field.

In this study, we describe programmatic variability in the clinical work expectations of 70 community-based PHM programs. We aimed to describe existing work models and the expectations of community-based program leaders as they relate to their unique clinical settings.

METHODS

We conducted a cross-sectional survey of community-based PHM site directors through structured interviews. Community hospital programs were self-defined by the study participants but were typically general hospitals that admit pediatric patients and are neither free-standing children’s hospitals nor children’s hospitals within a general hospital. Survey respondents were asked to answer questions reflecting only the expectations at their community hospital.

Survey Design and Content

Building on a tool used by Fromme et al.,6 we created a 12-question structured interview questionnaire focused on three areas: (1) full-time equivalent (FTE) metrics, including definitions of a 1.0 FTE, “typical” shifts, and weekend responsibilities; (2) work volume, including census parameters, service-line coverage expectations, back-up systems, and overnight call responsibilities; and (3) programmatic model, including sense of sustainability (eg, minimizing burnout and attrition), support for activities such as administrative or research time, and employer model (Appendix).

We modified the survey through research team consensus. After pilot testing by research team members at their own sites, the survey was refined for item clarity, structural design, and length. We chose to administer the survey through phone interviews rather than by traditional distribution because we anticipated variability in work models. The research team discussed how each question should be asked, and responses were clarified during the interviews to maintain consistency.

Survey Administration

Given the absence of a national registry or database for community-based PHM programs, study participation was solicited through an invitation posted on the American Academy of Pediatrics Section on Hospital Medicine (AAP SOHM) Listserv and the AAP SOHM Community Hospitalist Listserv in May 2018. Invitations were posted twice, two weeks apart. Each research team member completed 6-19 interviews. Responses to survey questions were recorded in REDCap, a secure, web-based data capture instrument.8

Participation in the study was considered implied consent. Participants did not receive a monetary incentive, although respondents were offered deidentified survey data in exchange for participating. The study was deemed exempt by the University of Chicago Institutional Review Board.

Data Analysis

Employers were dichotomized as community hospital employers (including primary community hospital employment/private organizations) or noncommunity hospital employers (including children’s/university hospital employment or schools of medicine). Descriptive statistics were used to compare the demographics of the two employer groups. P values were calculated using two-sample t-tests for continuous variables and chi-square or Fisher exact tests for categorical variables. The Mann–Whitney U test was used for continuous variables that were not normally distributed. Analyses were performed using the R Statistical Programming Language (R Foundation for Statistical Computing, Vienna, Austria), version 3.4.3.
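The choice between the chi-square and Fisher exact tests for a categorical comparison of the two employer groups can be sketched with Python’s standard library. This is an illustration, not the authors’ R code. It assumes the common rule of thumb that the Fisher exact test replaces the chi-square test when any expected cell count is below 5, computes an uncorrected Pearson chi-square, and assumes every row and column total is nonzero. The function names and example counts are hypothetical.

```python
import math


def _hypergeom_pmf(a: int, row1: int, col1: int, n: int) -> float:
    """P(top-left cell == a) for a 2x2 table with fixed row and column totals."""
    return math.comb(col1, a) * math.comb(n - col1, row1 - a) / math.comb(n, row1)


def fisher_exact_2x2(table) -> float:
    """Two-sided Fisher exact p value: sum over tables no more likely than the observed one."""
    (a, b), (c, d) = table
    row1, col1, n = a + b, a + c, a + b + c + d
    p_obs = _hypergeom_pmf(a, row1, col1, n)
    lo, hi = max(0, row1 + col1 - n), min(row1, col1)
    probs = (_hypergeom_pmf(x, row1, col1, n) for x in range(lo, hi + 1))
    # Small relative tolerance so tables tied with the observed one are not lost to rounding
    return min(1.0, sum(p for p in probs if p <= p_obs * (1 + 1e-7)))


def chi_square_2x2(table) -> float:
    """Pearson chi-square p value with 1 degree of freedom (no continuity correction)."""
    (a, b), (c, d) = table
    n = a + b + c + d
    rows, cols = (a + b, c + d), (a + c, b + d)
    stat = sum(
        (table[i][j] - rows[i] * cols[j] / n) ** 2 / (rows[i] * cols[j] / n)
        for i in range(2)
        for j in range(2)
    )
    # Survival function of chi-square with 1 df: P(X > stat) = erfc(sqrt(stat / 2))
    return math.erfc(math.sqrt(stat / 2))


def compare_groups_2x2(table) -> tuple[str, float]:
    """Use Fisher exact if any expected count is < 5 (rule-of-thumb assumption), else chi-square."""
    (a, b), (c, d) = table
    n = a + b + c + d
    rows, cols = (a + b, c + d), (a + c, b + d)
    min_expected = min(r * k / n for r in rows for k in cols)
    if min_expected < 5:
        return "fisher", fisher_exact_2x2(table)
    return "chi-square", chi_square_2x2(table)


if __name__ == "__main__":
    # Hypothetical counts: rows = employer group, columns = characteristic present / absent
    label, p = compare_groups_2x2([[3, 1], [1, 3]])
    print(label, round(p, 4))  # fisher 0.4857
```

Note that R’s `chisq.test` applies Yates’ continuity correction to 2 × 2 tables by default, so its p values would be somewhat larger than the uncorrected values computed here.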

RESULTS

Participation and Program Characteristics

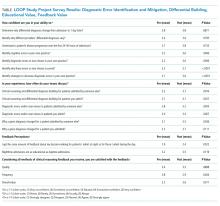

We interviewed 70 community-based PHM site directors representing programs across 29 states (Table 1) and five geographic regions: Midwest (34.3%), Northeast (11.4%), Southeast (15.7%), Southwest (4.3%), and West (34.3%). Employer models varied, with more noncommunity hospital employers (57%) than community hospital employers (43%). The three services most commonly covered by pediatric hospitalists were pediatric inpatient or observation bed admissions (97%), emergency department consults (89%), and general newborns (67%). PHM programs also provided coverage for other services, including newborn deliveries (43%), Special Care Nursery/Level II Neonatal Intensive Care Unit (41%), step-down unit (20%), and mental health units (13%). About 59% of programs taught family medicine residents, 36% taught pediatric residents, and 70% worked with advanced practice providers. Most programs (70%) provided in-house overnight coverage.

Clinical Work Expectations and Employer Model

Clinical work expectations varied broadly across programs (Table 2). The median expected hours for a 1.0 FTE was 1,882 hours per year (interquartile range [IQR] 1,805-2,016), and the median expected weekend coverage per year (defined as covering two days or two nights of the weekend) was 21 (IQR 14-24). Most programs (73%) did not expand staff coverage based on seasonality, and fewer than 20% of programs operated with a census cap. Median support for nondirect patient care activities was 4% (IQR 0%-10%) of a program’s total FTE (ie, a 5.0 FTE program would have 0.20 FTE of support). Programs with community hospital employers had an 8% higher expectation of hours/year for a 1.0 FTE (P = .01) and viewed an appropriate pediatric morning census as 20% higher (P = .01; Table 2).
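The FTE arithmetic in the example above can be made explicit with a short sketch. It assumes that support FTE is simply the program’s total FTE multiplied by the support fraction, and that a part-time position’s expected hours scale linearly from the median 1,882 hours per 1.0 FTE. Both function names and the 0.8 FTE case are hypothetical illustrations.

```python
def support_fte(program_fte: float, support_fraction: float) -> float:
    """FTE available for nondirect patient care, as a fraction of total program FTE."""
    return program_fte * support_fraction


def expected_hours(fte: float, hours_per_full_fte: float = 1882.0) -> float:
    """Prorated annual hours, assuming linear scaling from a 1.0 FTE (hypothetical default = median)."""
    return fte * hours_per_full_fte


# Median support (4%) for a 5.0 FTE program, matching the example in the text
print(round(support_fte(5.0, 0.04), 2))  # 0.2
# Upper quartile of support (10%) for the same program
print(round(support_fte(5.0, 0.10), 2))  # 0.5
# Hypothetical 0.8 FTE hospitalist at the median expectation
print(round(expected_hours(0.8), 1))  # 1505.6
```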

Program Sustainability

DISCUSSION

To our knowledge, this study is the first to describe clinical work models exclusively for pediatric community hospitalist programs. We found that expectations for clinical FTE hours, weekend coverage, appropriate morning census, support for nondirect patient care activities, and perception of sustainability varied broadly across programs. Employer model was the only variable associated with some of these differences: programs with a community hospital employer had higher expectations for hours/year and for an appropriate morning pediatric census than programs with a noncommunity hospital employer.

With a growing emphasis on physician burnout and career satisfaction,9-11 understanding the characteristics of community hospital work settings is critical for identifying and building sustainable employment models. Previous studies have identified the balance of clinical and nonclinical responsibilities and the setting of community versus university-based programs as major contributors to burnout and career satisfaction.9,11 Interestingly, although community hospital-based programs have limited FTE for nondirect patient care activities, a higher percentage of their site directors perceived their program models as sustainable than did site directors of university-based programs in prior research (63% versus 50%).6 Why community hospital PHM programs are perceived as more sustainable is a question for future research. Potential reasons may include fewer academic requirements for promotion or a stronger connection to the local community.

We also found that employer model was significantly associated with expected FTE hours per year but not with perception of sustainability. Programs employed by community hospitals were expected to work 8% more hours per year than those employed by noncommunity hospital employers and accepted a higher morning pediatric census. This variation in hours and in what census level is considered appropriate is likely multifactorial; it may stem from higher nonclinical expectations for promotion (eg, academic or scholarly production) in programs employed by schools of medicine or children’s hospitals, versus limited reimbursement for administrative responsibilities within community hospital employment models.

Our findings suggest several potential next steps. Because our data are, to our knowledge, the first attempt to describe current practice and expectations exclusively within community hospital programs, this study can serve as a starting point for developing workload expectation standards. Increasing national transparency about individual community programs could promote discussions around burnout and attrition. Having objective data to compare program models may help in advocating with local hospital leadership for restructuring that better aligns with national norms.