Official Newspaper of the American College of Surgeons

Screening Mammograms Overdiagnosed More Than 1 Million Women

Over the past 3 decades, screening mammography may have overdiagnosed more than a million clinically insignificant breast tumors, while having virtually no impact on the incidence of metastatic disease.

Compared with the premammography era, routine screening now picks up 122 additional cases of early cancer per 100,000 women – just eight of which would likely have progressed to advanced disease, Dr. Archie Bleyer and Dr. Gilbert Welch wrote in the Nov. 22 issue of the New England Journal of Medicine.

Looking at the results in light of a corresponding 28% decrease in breast cancer mortality puts screening mammography in perspective, the authors said: "Our data show that the true contribution of mammography to decreasing mortality must be at the low end of this range. They suggest that mammography has largely not met the first prerequisite for screening to reduce cancer-specific mortality – a reduction in the number of women who present with late-stage cancer."

Population cancer screening is a double-edged sword, wrote Dr. Bleyer of Oregon Health and Science University in Portland, and Dr. Welch of Geisel School of Medicine at Dartmouth in Hanover, N.H. While it’s impossible to say which screen-detected cancers would have caused serious disease or death, "there is certainty about what happens to [these women]. They undergo surgery, radiation therapy, hormonal therapy for 5 years or more, chemotherapy, or (usually) a combination of these treatments for abnormalities that otherwise would not have caused illness."

The authors used the Surveillance, Epidemiology, and End Results (SEER) database to examine screening mammography and breast cancer incidence data from 1976 to 2008. They took the number of cancers reported from 1976 to 1978 as the incidence baseline and compared it with incidence in 2006-2008. All of the models in the study controlled for an upswing in breast cancer from 1990 to 2005, which was associated with hormone-replacement therapy.

The share of women aged 40 or older who underwent screening mammography rose from about 30% in the mid-1980s to almost 70% by 2008. This was mirrored by an increase in diagnoses of early-stage breast cancer, from 112/100,000 to 234/100,000 women per year – representing an absolute increase of 122/100,000 (N. Engl. J. Med. 2012;367:1998-2005 [doi:10.1056/NEJMoa1206809]).

"[This] reflects both the detection of more cases of localized disease and the advent of the detection of [ductal carcinoma in situ] (which was virtually not detected before mammography was available)," the authors said.

There was a much smaller concomitant decrease in late-stage cancers, which fell from 102/100,000 to 94/100,000 women. This was almost entirely driven by a drop in regional disease, from about 85/100,000 in 1976 to 78/100,000 in 2008. The incidence of distant disease was almost entirely unchanged, hovering around 17/100,000 for the entire study period.

"If a constant underlying disease burden is assumed, only 8 of the 122 additional early diagnoses were destined to progress to advanced disease, implying a detection of 114 excess cases per 100,000 women" – a total of more than 1.5 million over the study period.
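The arithmetic behind the quoted figure can be retraced from the rates reported above. The short Python sketch below is only an illustration using the numbers in this article; the function and variable names are hypothetical and are not taken from the study.

```python
# Rates are cases per 100,000 women per year, copied from the article.
EARLY_1976_78 = 112  # early-stage incidence at baseline
EARLY_2006_08 = 234  # early-stage incidence in the screening era
LATE_1976_78 = 102   # late-stage incidence at baseline
LATE_2006_08 = 94    # late-stage incidence in the screening era


def excess_detection(early_base, early_now, late_base, late_now):
    """Return (extra early cases, fewer late cases, excess cases).

    Assumes a constant underlying disease burden, so each late-stage case
    avoided corresponds to one early diagnosis that would have progressed.
    """
    extra_early = early_now - early_base
    fewer_late = late_base - late_now
    return extra_early, fewer_late, extra_early - fewer_late


extra_early, fewer_late, excess = excess_detection(
    EARLY_1976_78, EARLY_2006_08, LATE_1976_78, LATE_2006_08
)
print(f"Additional early-stage cases: {extra_early} per 100,000")
print(f"Fewer late-stage cases:       {fewer_late} per 100,000")
print(f"Excess cases detected:        {excess} per 100,000")
```

The sketch reproduces only the per-100,000 figures. The population totals cited by the authors depend on year-by-year rates and population counts that are not given here, so they are not recomputed.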

The incidence of overdiagnosis held when the authors used other models designed to favor mammography’s impact.

In a "best-guess estimate," they assumed that breast cancer incidence increased by 0.25% per year over the study period. "This approach suggests that the excess detection attributable to mammography ... involved more than 1.3 million women in the past 30 years."

The "extreme" model assumed that incidence increased by 0.5% each year, an estimate that minimized surplus diagnoses of early-stage disease and maximized the deficit of late-stage cancer. This model found that screening mammography detected an excess of 1.2 million cancers over the study period.

The "very extreme assumption" model assumed that incidence increased by 0.5% each year, and that the baseline incidence of late-stage disease was the highest ever observed (113 cases per 100,000 women, in 1985). Even with this model – the most favorable toward mammography – the authors estimated overdiagnosis of slightly more than 1 million women.

"Our analysis suggests that whatever the mortality benefit, breast-cancer screening involved substantial harm of excess detection of additional early-stage cancers that was not matched by a reduction in late-stage cancers. This imbalance indicates a considerable amount of overdiagnosis involving more than 1 million women in the past 3 decades – and, according to our best-guess estimate, more than 70,000 women in 2008."

SEER breast cancer mortality data help put the findings into perspective, the authors said. There has been a substantial reduction in mortality, which fell from 71/100,000 to 51/100,000 over the study period. "This reduction in mortality is probably due to some combination of the effects of screening mammography and better treatment," they said. But because the absolute reduction in deaths (20/100,000) was larger than the absolute reduction in late-stage cancer (8/100,000), screening mammography can’t be the main driver of change.
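The comparison in the paragraph above comes down to two subtractions and a percentage. The following Python sketch, again using only the rates quoted in this article and a hypothetical helper name, shows that the 71-to-51 drop in mortality matches the 28% decrease cited earlier and is larger in absolute terms than the drop in late-stage cancer.

```python
def absolute_and_relative_drop(before, after):
    """Return the absolute drop and the drop as a percentage of `before`."""
    absolute = before - after
    relative_pct = 100 * absolute / before
    return absolute, relative_pct


# Rates per 100,000 women, as quoted in the article.
mortality_abs, mortality_pct = absolute_and_relative_drop(71, 51)
late_abs, late_pct = absolute_and_relative_drop(102, 94)

print(f"Mortality:  down {mortality_abs} per 100,000 ({mortality_pct:.0f}%)")
print(f"Late-stage: down {late_abs} per 100,000 ({late_pct:.0f}%)")
print("Mortality fell more than late-stage incidence:", mortality_abs > late_abs)
```

This is a restatement of the article's own numbers, not the authors' statistical method; it does not model how much of the mortality change is attributable to treatment versus screening.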

"Furthermore ... the small reduction in cases of late-stage cancer that has occurred has been confined to regional (largely node-positive) disease – a stage that can now often be treated successfully, with an expected 5-year survival rate of 85% among women 40 years of age or older. Unfortunately, however, the number of women in the United States who present with distant disease, only 25% of whom survive for 5 years, appears not to have been affected by screening."

Better therapy may even have altered the impact of screening, they added.

"Ironically, improvements in treatment tend to deteriorate the benefit of screening. As treatment of [disease detected by methods other than screening] improves, the benefit of screening diminishes. For example, since pneumonia can be treated successfully, no one would suggest that we screen for pneumonia."

The findings show that there are no absolutes for women considering whether to get a mammogram.

"Proponents of screening should provide women with data from a randomized screening trial that reflects improvements in current therapy and includes strategies to mitigate overdiagnosis in the intervention group. Women should recognize that our study does not answer the question, ‘Should I be screened for breast cancer?’ However, they can rest assured that the question has more than one right answer."

Dr. Bleyer disclosed that he is a consultant and speaker for Sigma-Tau Pharmaceuticals. Dr. Welch disclosed that he speaks on the topic of overdiagnosis with universities and medical schools, as well as to the Pharmacy Benefit Management Institute; all honoraria were donated to charitable organizations.

This paper is going to create discussion and concern, and get people thinking again about mammography. But from my point of view it isn’t the final answer.

Dr. J. Leonard Lichtenfeld

There’s been a substantial amount of research and commentary on the role of mammography – discussion that has split people into two political camps: one saying it’s the major reason for the reduced mortality and one saying it has no value whatsoever.

I think the truth lies somewhere in between.

Something has clearly affected breast cancer mortality in the past few decades. Before the 1990s, the rate of breast cancer death in this country was a flat line that had not changed for decades. Then suddenly it began to drop, and it has continued to do so. We are clearly doing something right. The question is: What is it? Probably a combination of mammography, improvements in adjuvant chemotherapy, and a general increase in breast awareness.

In the 1970s, when I was beginning practice – and before that, as a family member – breast cancer was not something anyone spoke about. It was never mentioned publicly. Now we are much more aware. Women are tuned in to the topic and aware of the need to do self-exams. It’s a national discussion.

Both our surgeries and our chemotherapy are much improved. But even now, if a woman presents with a palpable breast lesion, the odds are that it’s going to be fairly sizable and have lymph node involvement. Can we assume that we are able to effectively treat every woman in this group? I’m not sure we can.

This leads us to mammography. There is no question that it identifies subclinical lesions. But we have to recognize the problem of overdiagnosis and overtreatment.

Mammography and other new imaging modalities are driving the point of detection to much earlier in the history of a woman’s breast cancer. We have recognized from autopsy studies that certain cancers can exist in the body for long periods of time without ever causing any problems. We know that most women with breast cancers don’t have any readily identifiable risk factors. And we don’t have a test that allows us to tell most women whether or not their particular cancer is likely to be aggressive.

The current study doesn’t really help us with these questions. There are severe limitations on these data. How often did the women actually have a mammogram? What we consider "regional disease" today is not the same as it was in 1975. Advanced disease today isn’t the same as it was in 1975. There are cultural and insurance barriers that affect access to care and, thus, affect mortality. All of these issues must be weighed into the equation.

Right now, we at the American Cancer Society are still confident in our recommendation for women older than 40 to have an annual screening mammogram and clinical breast exam. There are other recommendations from other groups, which may be a better fit for other women. The important thing is for a woman and her physician to pick a program and stay with it.

Everyone wants clear-cut answers in a world that is not clear-cut and is unlikely to become so. So women and doctors are left in the difficult position of weighing what is best for them.

I think we would all be reluctant to completely give this up. A breast cancer diagnosis in the 40s can kill a woman in her 50s. I’m concerned that we will begin missing breast cancers now that will kill in 10-15 years, and that we will lose the gains we’ve seen in mortality.

Dr. J. Leonard Lichtenfeld is the deputy chief medical officer for the national office of the American Cancer Society.

FROM THE NEW ENGLAND JOURNAL OF MEDICINE

Major Finding: Compared with the premammography era, routine screening now picks up 122 additional cases of early cancer per 100,000 women – just eight of which would likely have progressed to advanced disease.

Data Source: The authors extracted data from the Surveillance, Epidemiology, and End Results (SEER) database.

Disclosures: Dr. Bleyer disclosed that he is a consultant and speaker for Sigma-Tau Pharmaceuticals. Dr. Welch disclosed that he speaks on the topic of overdiagnosis with universities and medical schools, as well as to the Pharmacy Benefit Management Institute; all honoraria were donated to charitable organizations.

Long-Term Mortality Similar After Endovascular vs. Open Repair of AAA

For patients who undergo elective repair of abdominal aortic aneurysm, long-term mortality is not significantly different between those who have endovascular surgery and those who have open surgery, according to a report published online Nov. 22 in the New England Journal of Medicine.

Perioperative survival was superior with the endovascular approach, and that advantage lasted for up to 3 years. But from that point on, survival was similar between patients who had undergone endovascular repair and those who had undergone open repair, said Dr. Frank A. Lederle of the Veterans Affairs Medical Center, Minneapolis, Minn., and his associates.

Three large, randomized clinical trials compared the two surgical approaches: the United Kingdom Endovascular Repair 1 (EVAR 1) trial, the Dutch Randomized Endovascular Aneurysm Management (DREAM) trial, and the Open versus Endovascular Repair (OVER) Veterans Affairs Cooperative Study in the United States. All three studies initially showed a survival advantage with the endovascular procedure in the perioperative period. But longer follow-up in the EVAR 1 and DREAM studies suggested that this advantage was lost at approximately 2 years, due to an excess in late deaths among patients who had undergone endovascular repair.

Dr. Lederle and his colleagues now report the long-term findings of the OVER trial, in which the survival curves of the two study groups likewise converged, at approximately 3 years.

The OVER trial was conducted at 42 VA medical centers across the country and involved 881 patients. The mean patient age was 70 years, and, as is typical in VA cohorts, 99% of the subjects were male.

All patients had abdominal aortic aneurysms with a maximal external diameter of at least 5 cm, an associated iliac-artery aneurysm with a maximum diameter of at least 3 cm, or a maximal diameter of at least 4.5 cm plus either rapid enlargement or a saccular appearance on radiography and CT examination.

A total of 444 study subjects were randomly assigned to endovascular repair and 437 to open repair. They were followed for up to 9 years (mean follow-up, 5.2 years). During that time, there were 146 deaths in each group.

All-cause mortality was significantly lower in the endovascular group at 2 years, but that difference was only of borderline significance at 3 years and disappeared completely thereafter. Similarly, the restricted mean survival was no different between the two groups at 5 years and at 9 years (N. Engl. J. Med. 2012;367:1988-97 [doi:10.1056/NEJMoa1207481]).

The time to a second therapeutic procedure or death was similar between the two groups, as were the number of hospitalizations after the initial repair, the number of secondary therapeutic procedures needed, and postoperative quality of life.

The most likely explanation for the convergence of the survival curves over time is that the frailest patients in the open-repair group died soon after that rigorous procedure, while the frailest patients in the endovascular-repair group survived that less invasive surgery but succumbed within a year or two, Dr. Lederle and his associates said.

When the data were analyzed according to patient age, an interesting result emerged: Patients younger than age 70 had better survival with endovascular than with open repair, while patients older than age 70 had better survival with open than with endovascular repair. This was surprising, given that "much of the early enthusiasm for endovascular repair focused on the expected advantage among old or infirm patients who were not good candidates for open repair," they noted.

Even though the rate of late ruptures was higher for the endovascular approach, it was still a very low rate, "with only six ruptures during 4,576 patient-years of follow-up." Moreover, four of these six late ruptures occurred in elderly patients, three of whom didn’t adhere to the recommended follow-up.

"We therefore consider endovascular repair to be a reasonable option in patients younger than 70 years of age who are likely to adhere to medical advice," Dr. Lederle and his colleagues said.

Nevertheless, endovascular repair "does not yet offer a long-term advantage over open repair, particularly among older patients, for whom such an advantage was originally expected," they noted.

This study was supported by the Department of Veterans Affairs Office of Research and Development. Dr. Lederle reported no financial conflicts of interest; one of his associates reported ties to Abbott, Cook, Covidien, Gore, and Endologix.

Now that all three large randomized clinical trials confirm that long-term outcomes are similar between endovascular and open repair of abdominal aortic aneurysms, patient preferences can become a larger part of the decision as to which surgery to pursue, said Dr. Joshua A. Beckman.

Now "patients can weigh the value of open repair, a major operation with greater up-front morbidity and mortality, against that of endovascular repair, with its lower early-event rate but the need for indefinite radiologic surveillance," he said.

"The results of the OVER study confirm that the patient population that should undergo AAA repair remains the same as it has been for the past 15 years. Thus, endovascular repair has neither expanded AAA repair to new populations nor reduced long-term mortality when compared with open repair," he added.

"The dream of improving long-term survival and expanding the population that will benefit from AAA repair [using EVAR] is seemingly over, but the reality of better procedural recovery for patients today is certainly a step forward," Dr. Beckman concluded.

Joshua A. Beckman, M.D., is with the cardiovascular division at Brigham and Women’s Hospital, Boston. He reported ties to Novartis, Ferring Pharmaceuticals, Boston Scientific, BMS, and Lupin. These remarks were taken from his editorial accompanying Dr. Lederle’s report (N. Engl. J. Med. 2012 Nov. 22 [doi:10.1056/NEJMe1211163]).

FROM THE NEW ENGLAND JOURNAL OF MEDICINE

Major Finding: All-cause mortality was significantly lower with endovascular than with open surgical repair for 2-3 years, but the two survival curves converged at that point and remained the same thereafter.

Data Source: A multicenter randomized controlled clinical trial involving 881 patients with abdominal aortic aneurysms who underwent either endovascular or open surgical repair and were followed for a mean of 5 years.

Disclosures: This study was supported by the Department of Veterans Affairs Office of Research and Development. Dr. Lederle reported no financial conflicts of interest; one of his associates reported ties to Abbott, Cook, Covidien, Gore, and Endologix.

Advanced Pleuroscopy Technique Is Biopsy Option for Unknown DPLDs

ATLANTA – Medical thoracoscopy is safe and feasible for performing lung biopsy in patients with diffuse parenchymal lung disease of unknown etiology on high-resolution computed tomography. And the approach could serve as an alternative to surgical biopsy in some patients, findings from a prospective study suggest.

In 10 patients who underwent medical thoracoscopic lung biopsies as part of the study, good biopsy specimens, with an average size of 0.5 x 0.4 cm, were obtained, Dr. Mohamed Elnady said at the annual meeting of the American College of Chest Physicians.

Complications with this advanced technique included persistent air leak for 5-7 days in two patients, pneumothorax after removal of the intercostal tube in two patients, pain in six patients, and minor bleeding in one patient. The air leaks resolved spontaneously, and the pneumothoraces resolved with administration of high-flow oxygen, said Dr. Elnady of Cairo (Egypt) University Hospitals.

The mean duration of intercostal tube placement was 3.1 days, with a range of 1-7 days; no infection, respiratory failure requiring intensive care unit admission, or mortality occurred within 30 days after the procedure, he noted.

Patients in the study included four women and six men with a mean age of 42 years. The lung biopsies obtained via medical thoracoscopy were sent for histopathologic examination, and patients underwent follow-up by chest x-ray for confirmation of lung expansion, as well as observation of the intercostal tube to detect complications. Among the ultimate diagnoses were metastatic adenocarcinoma, interstitial lung disease, and lymphangioleiomyomatosis.

"Thoracoscopic lung biopsy by medical thoracoscopy is useful in the diagnosis of patients with diffuse pulmonary infiltrates of unknown etiology when lung biopsy is needed for an accurate diagnosis," Dr. Elnady concluded, noting that while the procedure does carry a risk of certain non–life-threatening complications, these can be minimized with good patient selection.

Commenting on the findings, Dr. Muthiah P. Muthiah, who moderated the session, said this novel approach to obtaining a lung biopsy is of interest, but also "something we still have to get comfortable with."

"I’m not ready to do this yet, but this is something to consider ... you will want to certainly do this with a surgeon’s back-up in your institution," said Dr. Muthiah of the University of Tennessee Health Science Center, Memphis.

Neither Dr. Muthiah nor Dr. Elnady had disclosures to report.

Dr. Lary Robinson, FCCP, comments: Medical thoracoscopy, commonly termed pleuroscopy, has been practiced for decades in some centers by pulmonary medicine specialists primarily to evaluate and treat pleural diseases, usually performed under conscious sedation.

Dr. Elnady from Cairo University Hospitals describes his experience in 10 patients in whom a lung biopsy was performed. The complication rate was significant (20% persistent air leak, 10% bleeding, 60% significant pain, etc.) for this awake procedure compared with the usual, minimal morbidity from VATS surgical thoracoscopy for lung biopsy. And the 5-mm x 4-mm tissue specimen obtained would be considered marginal at best for a definitive pathological diagnosis.

A VATS lung biopsy is a safe, quick 20- to 30-minute procedure under general anesthesia, with chest tube removal the following day, followed by discharge home of a very comfortable patient. Finally, most patients requiring this procedure have significantly compromised lung function (the reason for the biopsy), so an awake, spontaneously breathing patient can easily get into significant respiratory distress with the higher-risk medical thoracoscopic lung biopsy.

Lary Robinson, M.D., is a thoracic surgeon at the Moffitt Cancer Center in Tampa, Fla.

AT THE ANNUAL MEETING OF THE AMERICAN COLLEGE OF CHEST PHYSICIANS

Major Finding: Good biopsy specimens (average size of 0.5 x 0.4 cm) were obtained and no life-threatening complications occurred in patients who underwent medical thoracoscopic lung biopsies.

Data Source: A prospective study in 10 patients was conducted.

Disclosures: Neither Dr. Muthiah nor Dr. Elnady had disclosures to report.

FDA Approves Chest-Implanted LVAD

The HeartWare Ventricular Assist System, a small left ventricular assist device that is implanted in the chest instead of the abdomen, has been approved by the Food and Drug Administration as a bridge to heart transplant for end-stage heart failure patients.

The HeartWare Ventricular Assist System, manufactured by HeartWare, includes an implantable pump with an external driver and power source. The device is another treatment option for advanced heart failure patients, especially those who are smaller in size or can’t have an abdominal implant.

The approval of the continuous-flow pump device comes less than 3 years after the agency approved Thoratec’s HeartMate II left ventricular assist device (LVAD) for destination therapy. That device quickly replaced the previous generation of pulsatile-flow devices.

This is the first time that the FDA has approved a ventricular assist device using comparator data from a registry as a control, according to the agency.

The approval was based on data from the ADVANCE trial, which compared the outcomes of 137 patients implanted with the HeartWare System and of patients registered by the Interagency Registry for Mechanically Assisted Circulatory Support (INTERMACS). The registry has been in place since 2005, collecting information on patients who received an approved mechanical circulatory support device implant.

A total of 140 patients received the investigational pump, and 499 patients received a commercially available pump implanted contemporaneously. At 180 days, 90.7% of the investigational pump patients and 90.1% of the controls had survived, establishing the noninferiority of the investigational pump (P less than .001; 15% noninferiority margin). Infection, right heart failure, device replacement, stroke, kidney dysfunction, hemolysis, and arrhythmia rates for the HVAD were similar to those reported previously for the HeartMate II, according to Dr. Keith D. Aaronson and his colleagues in the study (Circulation. 2012;125:3191-3200).
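The noninferiority comparison reported above can be illustrated with a simplified calculation. The sketch below assumes a normal-approximation (Wald) one-sided 95% bound on the difference between two proportions; this is an illustrative assumption, not the trial's actual statistical method, and the function name `noninferiority_check` is hypothetical. It uses only the figures quoted in the article (90.7% of 140 patients, 90.1% of 499 controls, 15% margin).

```python
import math


def noninferiority_check(p_new, n_new, p_ctrl, n_ctrl, margin, z=1.645):
    """Simplified noninferiority check on two survival proportions.

    Assumption: a Wald (normal-approximation) one-sided bound, which may
    differ from the analysis the ADVANCE investigators actually used.
    Returns (difference, lower_bound, is_noninferior).
    """
    # Observed difference: investigational pump minus control
    diff = p_new - p_ctrl
    # Standard error of the difference between two independent proportions
    se = math.sqrt(p_new * (1 - p_new) / n_new + p_ctrl * (1 - p_ctrl) / n_ctrl)
    # Lower limit of the one-sided confidence bound (z = 1.645 for 95%)
    lower = diff - z * se
    # Noninferior if the lower bound stays above the negative margin
    return diff, lower, lower > -margin


# Figures quoted in the article: 90.7% of 140 vs. 90.1% of 499, 15% margin
diff, lower, ok = noninferiority_check(0.907, 140, 0.901, 499, 0.15)
print(f"difference = {diff:.3f}, lower bound = {lower:.3f}, noninferior = {ok}")
```

Under these assumptions, the lower bound on the survival difference is about -0.04, well inside the -0.15 margin, which is consistent with the reported finding of noninferiority.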

Results of the ADVANCE trial showed that at 6 months, median 6-minute walk distance improved by 128.5 m, and "functional capacity and quality of life improved markedly, and the adverse event profile was favorable," according to the authors.

In a news release, FDA officials noted that "although rates of most key adverse events were comparable, the risk of stroke associated with the HeartWare LVAD necessitates patients and clinicians to discuss all treatment options before deciding to use the device."

"Well-designed registries in targeted product areas can enhance the public health and provide a cost-effective approach to clinical research for industry innovators," Christy Foreman, director of the Office of Device Evaluation in the FDA’s Center for Devices and Radiological Health, said in a statement. For HeartWare, registry data directly facilitated the development and availability of this new device. The registry is a joint effort involving the FDA; National Heart, Lung, and Blood Institute; Centers for Medicare and Medicaid Services (CMS); and clinicians, scientists, and industry.

The FDA approval of the bridge-to-transplant pump comes on the heels of a Medicare Evidence Development and Coverage Advisory Committee (MEDCAC) meeting, where heart societies and prominent heart failure experts discussed the state of VAD research. The panel stressed the importance of multidisciplinary heart teams, and said that there are not enough data to show that the indications for VADs can be expanded to include lower-risk patients.

Medicare does not currently have an open National Coverage Determination (NCD) for VADs. It reimburses VADs, under certain criteria, as a bridge to transplant and as destination therapy.

Dr. Sean Pinney, who spoke at the MEDCAC meeting on behalf of the Heart Failure Society of America, said that the society supported the NCD and did "not endorse any change in the current patient selection criteria which derive from prospective randomized trials." He added that there’s a need for more well-controlled clinical trials, including those that would examine "less sick" patients.

"We do not endorse expansion of destination therapy into this population in the absence of randomized clinical trials," said Dr. Pinney, associate professor of medicine at Mount Sinai Medical Center in New York.

The 140 U.S. centers that place LVADs are expected to implant nearly 3,000 devices this year. Nearly 4,600 patients have received an LVAD since 2010.

"This is a rapidly evolving field," said Dr. Mariell Jessup, president-elect of the American Heart Association and professor of medicine at the University of Pennsylvania Heart & Vascular Center in Philadelphia. "It’s only to be expected that CMS would open coverage determination. It’s a costly technology, and outcomes are a lot better than several years ago," she said.

Physicians quoted in this story reported no financial conflicts.

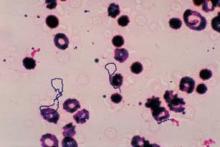

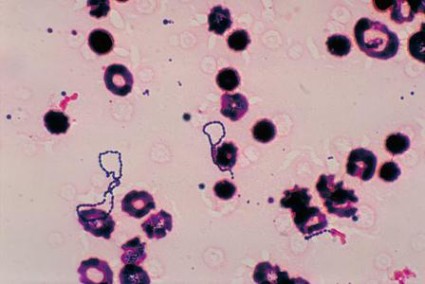

Triple Therapy Boosts HCV Response After Transplant

BOSTON – Liver transplant recipients with hepatitis C virus infection who underwent triple-drug therapy achieved a high extended rapid virologic response rate but often contended with treatment complications in a retrospective multicenter cohort study.

The extended rapid virologic response (eRVR) rate of 57% was "encouraging, given a very difficult-to-cure population," Dr. James R. Burton Jr. said at the annual meeting of the American Association for the Study of Liver Diseases. He noted, however, that it's not clear whether the encouraging eRVR rate will predict sustained virologic response (SVR) as it does in patients who have not had a liver transplant.