Jeff Evans has been editor of Rheumatology News/MDedge Rheumatology and the EULAR Congress News since 2013. He started at Frontline Medical Communications in 2001 and was a reporter for 8 years before serving as editor of Clinical Neurology News and World Neurology, and briefly as editor of GI & Hepatology News. He graduated cum laude from Cornell University (New York) with a BA in biological sciences, concentrating in neurobiology and behavior.

Potential of transcranial direct current stimulation shown in fibromyalgia

Transcranial direct current stimulation delivered focally to the left primary motor cortex of patients with fibromyalgia significantly reduced perceived pain compared with sham stimulation in a proof-of-principle pilot trial.

The reduction in pain levels persisted for at least 30 minutes after a single, 20-minute session of transcranial direct current stimulation (tDCS) of the left primary motor cortex (M1 area), supporting "the theory that tDCS-induced modulatory effects on pain-related neural circuitry are dependent on modulation of M1 activity," noted the trial investigators, led by Dr. Mauricio F. Villamar of Spaulding Rehabilitation Hospital and Massachusetts General Hospital, both in Boston.

The ability of tDCS and repetitive transcranial magnetic stimulation (rTMS) to modify the excitability of cortical neural circuits, particularly the primary motor cortex (M1), gives them the potential to target one of the pathophysiological mechanisms of fibromyalgia, "where pain can be characterized by a lack of inhibitory control over somatosensory processing," said Dr. Villamar and his associates.

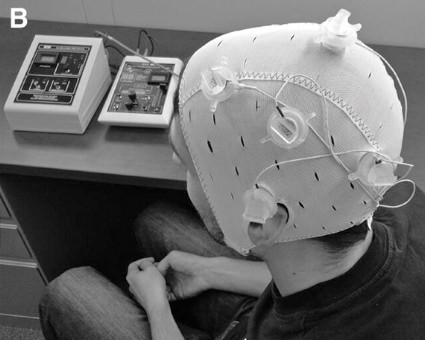

The researchers noted that although tDCS may have advantages over rTMS in portability, ease of use, and low cost, the relatively large electrode pads used in earlier tDCS studies of chronic pain disorders stimulate diffuse areas of the brain. More focal stimulation could potentially produce larger effect sizes and reduce the likelihood of side effects by reducing stimulation of adjacent regions. To that end, the investigators created a method for delivering tDCS with increased focality, called high-definition tDCS (HD-tDCS), in which four small electrode pads are arranged in a ring around a central electrode. The central electrode could be switched between anode and cathode states.

All 18 trial participants (15 were women) and the physiatrist who performed the nociception assessments were blind to the type of stimulation. The participants all had fibromyalgia diagnoses that met the 2010 American College of Rheumatology Preliminary Diagnostic Criteria. They had a mean age of 50 years and had been diagnosed with fibromyalgia for a mean of nearly 11 years before enrollment (J. Pain 2013 Feb. 18 [doi: 10.1016/j.jpain.2012.12.007]).

The trial used a crossover design: on three separate clinic visits, each of the 18 patients received anodal stimulation, cathodal stimulation (with the central electrode functioning as the anode or the cathode, respectively), and sham stimulation, in a counterbalanced order randomly assigned for each individual. There were also baseline and final visits that did not involve stimulation.

At each session, the direct current was ramped up over 30 seconds to 2 mA. In active sessions, stimulation continued for 20 minutes; in sham sessions, the current was switched off after the initial 30 seconds. The authors noted that this 30-second sham procedure "has been reported to be a reliable method for blinding participants in conventional tDCS trials, which induces no effects on cortical excitability."

Pain scores on a 0-10 visual numerical scale (in 0.5-point increments) declined from baseline to immediately after stimulation, and again by 30 minutes after stimulation, under all three conditions (sham, anodal, and cathodal). However, only cathodal HD-tDCS significantly improved pain relative to sham immediately after stimulation. Both cathodal and anodal HD-tDCS produced significant pain reduction 30 minutes after the end of stimulation. In the sham condition, mean pain scores fell from 5.09 at baseline to 4.59 immediately after stimulation and 4.41 at 30 minutes. With anodal stimulation, the corresponding means were 5.47, 4.79, and 4.07; with cathodal stimulation, they were 5.03, 3.89, and 3.65.

In every condition, the standard deviations of the mean pain scores ranged from slightly less than 2 points to slightly more than 2 points. "Although the changes in overall perceived pain showed a relatively large variability, as evidenced by the standard deviation values, this goes in accordance with the large variability in baseline pain levels among participants in our trial," the investigators wrote.

Compared with sham, only anodal stimulation significantly increased the secondary outcome of mechanical detection threshold, measured with Semmes-Weinstein monofilaments, on both sides of the body.

As expected, the other secondary measures did not change significantly under any of the three conditions. These included a visual numerical score for anxiety, quality of life, Beck Depression Inventory-II score, pain thresholds measured with Semmes-Weinstein monofilaments, pain pressure thresholds, and diffuse noxious inhibitory control testing.

No patients reported any unexpected adverse events.

Soterix Medical, the manufacturer of the HD-tDCS device, is now recruiting participants with fibromyalgia for another multicenter trial that will test the magnitude and durability of effects with repeated HD-tDCS sessions.

The trial was funded by a Translational Research Award from the Wallace H. Coulter Foundation. Two of the seven authors have equity in Soterix Medical. Their institution, the City College of New York of the City University of New York (CUNY), holds the intellectual property rights to their inventions involving noninvasive brain stimulation. The other five authors declared no conflicts of interest related to the study.

FROM THE JOURNAL OF PAIN

Major finding: Compared with sham stimulation, cathodal high-definition tDCS significantly decreased visual numerical pain scores (on a scale of 0-10), from a mean of 5.03 at baseline to 3.89 immediately after stimulation and 3.65 at 30 minutes after stimulation.

Data source: A pilot, double-blind, randomized, sham-controlled trial of 18 patients with fibromyalgia.

Disclosures: The trial was funded by a Translational Research Award from the Wallace H. Coulter Foundation. Two of the seven authors have equity in Soterix Medical. Their institution, the City College of New York of the City University of New York (CUNY), holds the intellectual property rights to their inventions involving noninvasive brain stimulation. The other five authors declared no conflicts of interest related to the study.

Charting the course for rheumatology this year

What’s on tap for rheumatology in 2013? We asked some of our editorial advisory board members to weigh in with their expectations for this year. They highlighted trends in the practice and insurance environment, women’s health issues, patient-reported outcomes, the development of biosimilar drugs and small-molecule drugs for rheumatoid arthritis, and advances in lupus research as important subjects to follow.

Women’s Health Issues

Rheumatologists Dimitrios T. Boumpas of the University of Crete in Heraklion, Greece, and Daniel E. Furst of the University of California, Los Angeles, both pointed to 2013 as a year in which women’s health issues will gain more attention in rheumatology. They foresee more emphasis on rheumatologists taking an individualized approach to counseling women on reproductive issues, based on each patient’s personal risk factors and history, as well as on the symptoms and signs that may differ between women and men in some rheumatic diseases.

Patient-Reported Outcomes

Dr. Boumpas and Dr. Furst also predicted that patient-reported outcomes will make a further push into rheumatology clinical research and day-to-day clinical practice. The availability of patient-reported outcome tools from the National Institutes of Health’s PROMIS (Patient-Reported Outcomes Measurement Information System) toolbox should help speed this shift for important constructs or traits such as physical function, global health assessment, and fatigue. While in the waiting room, patients can complete customized item banks for a variety of PROMIS measures on a tablet, laptop, personal computer, or possibly a smartphone.

Changing Practice and Insurance Environment

Dr. Norman Gaylis, a rheumatologist in private practice in Aventura, Fla., cited numerous challenges to rheumatology practices in the coming year. He noted that "overhead creep in both private practice and academic settings" could increase staffing needs, time demands, the prior authorizations needed to perform treatment procedures, and the cost of health insurance for staff.

He foresees more rheumatology community practices being acquired by hospital corporations and institutions as the economic environment continues to make it difficult for small and solo practices to survive. The disconnect between the value of imaging, such as MRI, in rheumatology and its reimbursement level is a related issue that may get more attention in the coming year, Dr. Gaylis said.

Payers also might begin to replace coverage of infusion therapy with subcutaneous treatments and then ultimately oral biologics. We also might see rebates to payers begin to drive trends in rheumatoid arthritis treatment in a crowded therapeutic marketplace, he said.

Biosimilar Drugs

With the issuance of the first draft guidance on biosimilar product development last February, Dr. Gaylis said to expect that further announcements will be made to set the stage for Food and Drug Administration approval of biosimilar drugs.

As part of the Affordable Care Act, Congress created an abbreviated licensure pathway for biologic products that can be shown to be "highly similar" to an already-approved biologic drug. The Biologics Price Competition and Innovation Act, which lawmakers had been working on for years, was passed in 2010 as part of the ACA. It will make it possible for biosimilar manufacturers to bring their products to market with less clinical testing than is required of originator biopharmaceuticals and therefore at a significantly lower cost to consumers.

Under the new pathway, biosimilars must be shown to be highly similar to an already-approved product. The biosimilar may have minor differences in clinically inactive components, but there must be no clinically meaningful differences between the original and the biosimilar product in terms of safety, purity, and potency, according to the FDA.

New Drugs in the Pipeline

With the approval of tofacitinib (Xeljanz) for rheumatoid arthritis last November, Dr. Boumpas wondered whether other small molecules in the regulatory pathway will be approved. It will be interesting to see whether further trials bear out the differing clinical effects reported so far in phase II trials of three Janus kinase (JAK) inhibitors in development, he said. While tofacitinib blocks all four JAKs, its effects seem strongest on JAK3; other JAK inhibitors in clinical development have more specific targets, such as Vertex Pharmaceuticals’ VX-509 (JAK3), Eli Lilly and Incyte’s baricitinib (JAK1 and JAK2), and Abbott and Galapagos’s GLPG0634 (JAK1).

Advances in Lupus Research

Lupus researcher and rheumatologist Joan T. Merrill of the Oklahoma Medical Research Foundation suggested that 2013 may see greater evolution of lupus clinical trials that involve integrating basic science into patient selection and dosing studies. "This is just now starting to happen more seriously, such as with Genentech’s phase II anti-interferon-alpha study and Amgen’s entire development program for lupus. These developments derive in part from the phase II analysis of the belimumab studies where autoantibodies became a probable surrogate for high B-lymphocyte stimulator levels. This will revolutionize medical care and bring diagnostic testing out of the initial diagnosis and vague prognostic category and more cleanly into the daily practice of optimizing treatments."

Dr. Merrill also said that certain patient advocacy groups will continue to play a growing role in setting the research and practice agendas. The Lupus Foundation of America (LFA), for example, has mobilized companies to share data from placebo groups to improve clinical trial designs, created an online training site for clinical trialists worldwide, formed a Congressional Lupus Caucus, initiated a national ad campaign to educate the public about lupus, funded an international project to develop a definition of lupus flare, and funded the Systemic Lupus International Collaborating Clinics project to revise the classification criteria for lupus. The list goes on, and the foundation has had a major impact on lupus. The LFA and other organizations, such as the Lupus Research Institute and Lupus Awareness and Research, also fund basic and clinical research in lupus.

"The LFA has done so many specific, innovative things to ‘bring down the barriers’ to treatment development in a complex and heterogeneous disease where clinical trial design has been a tough nut to crack," said Dr. Merrill, noting that the foundation’s work "serves as a template for what can be done in other underserved rheumatic diseases."

Dr. Furst is the Carl M. Pearson Professor of Rheumatology at the University of California, Los Angeles. Dr. Boumpas is professor of medicine and director of internal medicine/rheumatology at the University of Crete, Heraklion, Greece, and is acting clinical director of the National Institute of Arthritis, Musculoskeletal, and Skin Diseases. Dr. Gaylis is in private practice in Aventura, Fla. Dr. Merrill is program chair of the clinical pharmacology research program at the Oklahoma Medical Research Foundation and is professor of medicine at the University of Oklahoma Health Sciences Center in Oklahoma City. All have financial relationships with numerous pharmaceutical companies that have products that are relevant to rheumatology.

What’s on tap for rheumatology in 2013? We asked some of our editorial advisory board members to weigh in with their expectations for this year. They highlighted trends in the practice and insurance environment, women’s health issues, patient-reported outcomes, the development of biosimilar drugs and small-molecule drugs for rheumatoid arthritis, and advances in lupus research as important subjects to follow.

Women’s Health Issues

Rheumatologists Dimitrios T. Boumpas of the University of Crete in Heraklion, Greece, and Daniel E. Furst of the University of California, Los Angeles, both pointed to 2013 as a year in which women’s health issues will gain more attention in rheumatology. They foresee more emphasis on rheumatologists taking an individualized approach to counseling women on reproductive issues based on their personal risk factors and history, as well as unique symptoms and signs they might have in some rheumatic diseases compared with men.

Patient-Reported Outcomes

Dr. Boumpas and Dr. Furst also predicted that patient-reported outcomes will make a further push into rheumatology clinical research and day-to-day clinical practice. The availability of patient-reported outcome tools from the National Institutes of Health’s PROMIS (Patient-Reported Outcomes Measurement Information System) toolbox should help to speed this for important constructs or traits such as physical function, global health assessment, and fatigue. Patients can fill out customized item banks for a variety of PROMIS measures on a tablet, laptop, personal computer, or possibly a smartphone while they are in a waiting room.

Changing Practice and Insurance Environment

Dr. Norman Gaylis, a rheumatologist in private practice in Aventura, Fla, cited numerous challenges to rheumatology practices in the coming year. He noted that "overhead creep in both private practice and academic settings" could result in an increase in staff, time, prior authorizations needed to perform treatment procedures, and the cost of health insurance for staff.

He foresees more rheumatology community practices being acquired by hospital corporations and institutions as the economic environment continues to make it difficult for small and solo practices to survive. The disconnect between the value of imaging, such as MRI, in rheumatology and its reimbursement level is a related issue that may get more attention in the coming year, Dr. Gaylis said.

Payers also might begin to replace coverage of infusion therapy with subcutaneous treatments and then ultimately oral biologics. We also might see rebates to payers begin to drive trends in rheumatoid arthritis treatment in a crowded therapeutic marketplace, he said.

Biosimilar Drugs

With the issuance of the first draft guidance on biosimilar product development last February, Dr. Gaylis said to expect that further announcements will be made to set the stage for Food and Drug Administration approval of biosimilar drugs.

As part of the Affordable Care Act, Congress created an abbreviated licensure pathway for biologic products that can be shown to be "highly similar" to an already-approved biologic drug. The Biologics Price Competition and Innovation Act, which lawmakers had been working on for years, was passed in 2010 as part of the ACA. It will make it possible for biosimilar manufacturers to bring their products to market with less clinical testing than is required of originator biopharmaceuticals and therefore at a significantly lower cost to consumers.

Under the new pathway, biosimilars must be shown to be highly similar to an already-approved product. The biosimilar may have minor differences in clinically inactive components, but there must be no clinically meaningful differences between the original and the biosimilar product in terms of safety, purity, and potency, according to the FDA.

New Drugs in the Pipeline

With the approval of tofacitinib (Xeljanz) for rheumatoid arthritis last November, Dr. Boumpas wondered whether other small molecules in the regulatory pathway will be approved. It will be interesting to see if further trials bear out the different clinical effects that three different Janus kinase (JAK) inhibitors in development have reported so far in phase II trials, he said. While tofacitinib blocks all four JAKs, its effects seem strongest on JAK3, and other JAK inhibitors in clinical development have specific JAK targets, such as Vertex Pharmaceuticals’ VX-509 (JAK3), Eli Lilly and Incyte’s baricitinib (JAK1 and JAK2), and Abbott and Galapagos’s GLPG0634 (JAK1).

Advances in Lupus Research

What’s on tap for rheumatology in 2013? We asked some of our editorial advisory board members to weigh in with their expectations for this year. They highlighted trends in the practice and insurance environment, women’s health issues, patient-reported outcomes, the development of biosimilar drugs and small-molecule drugs for rheumatoid arthritis, and advances in lupus research as important subjects to follow.

Women’s Health Issues

Rheumatologists Dimitrios T. Boumpas of the University of Crete in Heraklion, Greece, and Daniel E. Furst of the University of California, Los Angeles, both pointed to 2013 as a year in which women’s health issues will gain more attention in rheumatology. They foresee more emphasis on rheumatologists taking an individualized approach to counseling women on reproductive issues, based on their personal risk factors and history. They also expect more attention to the symptoms and signs that, in some rheumatic diseases, may be unique to women compared with men.

Patient-Reported Outcomes

Dr. Boumpas and Dr. Furst also predicted that patient-reported outcomes will make a further push into rheumatology clinical research and day-to-day clinical practice. The availability of patient-reported outcome tools from the National Institutes of Health’s PROMIS (Patient-Reported Outcomes Measurement Information System) toolbox should help speed this shift for important constructs or traits such as physical function, global health assessment, and fatigue. While in a waiting room, patients can fill out customized item banks for a variety of PROMIS measures on a tablet, laptop, personal computer, or possibly a smartphone.

Changing Practice and Insurance Environment

Dr. Norman Gaylis, a rheumatologist in private practice in Aventura, Fla., cited numerous challenges to rheumatology practices in the coming year. He noted that "overhead creep in both private practice and academic settings" could bring several increases:

• in staffing;
• in the time and prior authorizations needed to perform treatment procedures;
• in the cost of health insurance for staff.

He foresees more rheumatology community practices being acquired by hospital corporations and institutions as the economic environment continues to make it difficult for small and solo practices to survive. The disconnect between the value of imaging, such as MRI, in rheumatology and its reimbursement level is a related issue that may get more attention in the coming year, Dr. Gaylis said.

Payers also might begin to replace coverage of infusion therapy with subcutaneous treatments and then ultimately oral biologics. In addition, rebates to payers might begin to drive trends in rheumatoid arthritis treatment in a crowded therapeutic marketplace, he said.

Biosimilar Drugs

The Food and Drug Administration issued its first draft guidance on biosimilar product development last February. Dr. Gaylis said he expects further announcements that will set the stage for FDA approval of biosimilar drugs.

As part of the Affordable Care Act (ACA), Congress created an abbreviated licensure pathway for biologic products that can be shown to be "highly similar" to an already-approved biologic drug. The Biologics Price Competition and Innovation Act, which lawmakers had worked on for years, was passed in 2010 as part of the ACA. It is intended to let biosimilar manufacturers bring their products to market with less clinical testing than is required of originator biopharmaceuticals, and therefore at a significantly lower cost to consumers.

Under the new pathway, biosimilars must be shown to be highly similar to an already-approved product. The biosimilar may have minor differences in clinically inactive components, but there must be no clinically meaningful differences between the original and the biosimilar product in terms of safety, purity, and potency, according to the FDA.

New Drugs in the Pipeline

With the approval of tofacitinib (Xeljanz) for rheumatoid arthritis last November, Dr. Boumpas wondered whether other small molecules in the regulatory pathway would be approved. It will be interesting to see whether further trials bear out the different clinical effects reported so far in phase II trials of three Janus kinase (JAK) inhibitors in development, he said. Tofacitinib blocks all four JAKs, although its effects seem strongest on JAK3. Other JAK inhibitors in clinical development have more specific targets:

• Vertex Pharmaceuticals’ VX-509 (JAK3);
• Eli Lilly and Incyte’s baricitinib (JAK1 and JAK2);
• Abbott and Galapagos’s GLPG0634 (JAK1).

Advances in Lupus Research

Lupus researcher and rheumatologist Joan T. Merrill of the Oklahoma Medical Research Foundation suggested that 2013 may see greater evolution of lupus clinical trials that involve integrating basic science into patient selection and dosing studies. "This is just now starting to happen more seriously, such as with Genentech’s phase II anti-interferon-alpha study and Amgen’s entire development program for lupus. These developments derive in part from the phase II analysis of the belimumab studies where autoantibodies became a probable surrogate for high B-lymphocyte stimulator levels. This will revolutionize medical care and bring diagnostic testing out of the initial diagnosis and vague prognostic category and more cleanly into the daily practice of optimizing treatments."

Dr. Merrill also said that patient advocacy groups will continue to play a growing role in setting the research and practice agendas. The Lupus Foundation of America (LFA), for example, has done the following:

• mobilized companies to share data from placebo groups to improve clinical trial designs;
• created an online training site for clinical trialists worldwide;
• formed a Congressional Lupus Caucus;
• initiated a national ad campaign to educate about lupus;
• funded an international project to develop a definition of lupus flare;
• funded the Systemic Lupus International Collaborating Clinics project to revise the classification criteria for lupus.

The list goes on, and these efforts have had a major impact on lupus. The LFA and other organizations, such as the Lupus Research Institute and Lupus Awareness and Research, also fund basic and clinical research in lupus.

"The LFA has done so many specific, innovative things to ‘bring down the barriers’ to treatment development in a complex and heterogenous disease where clinical trial design has been a tough nut to crack," said Dr. Merrill, noting that the foundation’s work "serves as a template for what can be done in other underserved rheumatic diseases."

Dr. Furst is the Carl M. Pearson Professor of Rheumatology at the University of California, Los Angeles. Dr. Boumpas is professor of medicine and director of internal medicine/rheumatology at the University of Crete, Heraklion, Greece, and is acting clinical director of the National Institute of Arthritis, Musculoskeletal, and Skin Diseases. Dr. Gaylis is in private practice in Aventura, Fla. Dr. Merrill is program chair of the clinical pharmacology research program at the Oklahoma Medical Research Foundation and is professor of medicine at the University of Oklahoma Health Sciences Center in Oklahoma City. All have financial relationships with numerous pharmaceutical companies that have products that are relevant to rheumatology.

Alzheimer's assessment scale may not be accurate enough

Portions of one of the most commonly used tests to measure cognitive performance in Alzheimer’s disease trials may be too easy and may not accurately assess the range of patients’ cognitive abilities or detect their change over time, according to two complementary studies.

Analyses of Alzheimer’s Disease Assessment Scale – Cognitive Behavior Section (ADAS-Cog) scores measured in 193 patients with mild disease who participated in ADNI (the Alzheimer’s Disease Neuroimaging Initiative) over the course of 2 years detected limitations of the scale that could be improved, reported Dr. Jeremy Hobart of the Clinical Neurology Research Group at Plymouth (England) University Peninsula Schools of Medicine and Dentistry, and his colleagues.

The investigators used observational data from ADNI covering 675 measurements made at 0, 6, 12, and 24 months. ADAS-Cog total scores spanned the entire range of the scale. They showed no floor or ceiling effects that would reduce the scale’s ability to measure changes and differences in lower-functioning or higher-functioning patients, respectively. However, 8 of the scale’s 11 components (all except word recall, word recognition, and orientation) had statistically significant ceiling effects, with a skewed distribution of scores (Alzheimers Dement. 2012 [doi:10.1016/j.jalz.2012.08.005]). The mean age of patients was 74 years, 47% were female, and participants had a mean Mini-Mental State Examination score of 23 across all time points.

These results replicate those that the investigators obtained in a previous psychometric evaluation of the ADAS-Cog in patients who participated in a randomized, controlled clinical trial (J. Neurol. Neurosurg. Psychiatry 2010;81:1363-8). The investigators noted that the results indicate that "these components may underestimate cognitive performance differences in those with mild to moderate AD-type dementia. This may lead to problems in detecting clinical change."

On the majority of ADAS-Cog components, more than three-fourths of the participants with mild Alzheimer’s disease in the ADNI study often scored either 0 or 1. Dr. Hobart and his associates remarked that such scores would mean that few or no cognitive problems were detected. "However, as there is almost certainly greater variance in patient ability, this finding points to a limitation in the ADAS-Cog score function – namely that the ADAS-Cog, in its current form, is not subtle enough to record and monitor variance in the mildest stages of AD-type dementia."

In a second study, the investigators analyzed how accurately the ADAS-Cog measured cognitive performance in the same sample of patients. To avoid the limitations of classical methods for assessing the reliability and validity of scales, they used Rasch Measurement Theory. This mathematical model tests the extent to which rating scale data meet the conditions necessary for a scale to record accurate measurements of cognitive performance. It provides diagnostic information that can guide revision of a scale by exposing anomalies that can be corrected and then retested, Dr. Hobart and his colleagues explained.

The range of cognitive performance measured by the 11 ADAS-Cog components was suboptimally targeted to the range of cognitive performance observed in patients in the sample. In six of the components, the integer-based scoring did not reflect a continuum of performance as intended. Instead, some component scores were much more likely to occur than others. As a result, a higher score on one of these components did not necessarily indicate more cognitive impairment.

These gaps in some components’ ability to measure cognitive performance mean that their precision is limited. They also mean that the relationship between raw ADAS-Cog scores and cognitive performance, assumed to be linear, is actually S-shaped. The amount of change in cognitive performance represented by a 1-point change in ADAS-Cog score varies across the range of the scale, the investigators said. It is greatest at the extremes of the range and smallest at its center (Alzheimers Dement. 2012 [doi:10.1016/j.jalz.2012.08.006]).

Both studies were supported in part by grants from an anonymous foundation, the U.K. National Institute for Health Research, and the U.S. National Institutes of Health.

FROM ALZHEIMER'S & DEMENTIA

Major Finding: Of the 11 components of the ADAS-Cog scale, 8 had significant ceiling effects, and 6 had integer-based scoring that did not reflect a continuum of cognitive performance.

Data Source: Analyses of ADAS-Cog scores during a 2-year period in 193 participants of the Alzheimer’s Disease Neuroimaging Initiative.

Disclosures: Both studies were supported in part by grants from an anonymous foundation, the U.K. National Institute for Health Research, and the U.S. National Institutes of Health.

First Americans undergo Alzheimer's deep brain stimulation

Investigational use of deep brain stimulation for Alzheimer’s disease has arrived in the United States.

Johns Hopkins University neurosurgeons recently implanted the first device in a patient with mild Alzheimer’s disease. The implant is part of the Advance Study, a new multicenter trial funded by the National Institute on Aging and Functional Neuromodulation. Via two implanted ultrathin wires, the device delivers 4-8 V electrical impulses directly to the fornix on both sides of the brain. The fornix is one of the first brain regions to be destroyed by Alzheimer’s. It is involved in memory formation and relays signals between the hippocampus and hypothalamus.

A second patient is scheduled for the same procedure this month, according to Johns Hopkins, in Baltimore. About 40 patients are expected to receive the deep brain stimulation implant over the next year or so at Johns Hopkins; the University of Toronto; the University of Pennsylvania, Philadelphia; the University of Florida, Gainesville; and Banner Health System in Phoenix.

Half of the patients in the study will have the devices activated 2 weeks after surgery, while the others will have the device turned on after 1 year. All study participants will be regularly assessed for at least 18 months to measure their rate of Alzheimer’s progression.

As part of a preliminary safety study in 2010, the devices were implanted in six Alzheimer’s disease patients at the University of Toronto. Researchers found that patients with mild forms of the disorder showed sustained increases in glucose metabolism, an indicator of neuronal activity, over a 13-month period (Ann. Neurol. 2010;68:521-34). Most Alzheimer’s disease patients show decreases in glucose metabolism over the same period.

Hot Topics at the American Epilepsy Society Meeting

The 2012 American Epilepsy Society annual meeting brings a wealth of original research and important reports that set the stage for the future of care for people with epilepsy. In the coming days, look for coverage of the meeting from Clinical Neurology News.

Here are some highlights of presentations we will report on:

• The Institute of Medicine’s 2012 report. The Presidential Symposium’s focus on the IOM report, "Epilepsy Across the Spectrum: Promoting Health and Understanding," should help to give neurologists a better understanding of where the public health emphasis for epilepsy needs to be. Dr. Joseph Drazkowski, a Clinical Neurology News editorial advisory board member and an epilepsy specialist at the Mayo Clinic in Phoenix, says the symposium should help neurologists to understand the implications of the report.

"In the new world of the Affordable Care Act, there has been much speculation and uncertainty on how our patients’ lives will be affected and what the corresponding impact will be to the practice of caring for the person with epilepsy. Included in the program will be discussion from an expert with a perspective from the leadership of the Department of Health and Human Services. Additionally, there will be a presentation from an IOM panelist, hopefully providing insight about why epilepsy was chosen as a focus, how the report was crafted, and what implications it has for the future. How this mix of patients, providers, advocacy groups, insurers and government regulators plays out over the next several years has potentially far-reaching implications for epilepsy care, neurology, and medicine in general."

Another editorial advisory board member, Dr. Jeffrey Buchhalter of Alberta Children's Hospital, Calgary, commented that "the impact of the Institute of Medicine report on epilepsy is yet to be fully realized. It could put epilepsy on center stage and have profound implications for future research resources."

• Merritt-Putnam Symposium. The discussion at this year's symposium will focus on advances in the understanding of the genetics of epilepsy. "Epilepsy genetics continues to be a very hot topic due to the expansion in testing available. The symposium will explore a number of the key areas including the meaning of a particular type of mutation, how these are discovered, and the functional implications," Dr. Buchhalter said.

• Transitioning from adolescent to adult epilepsy care. In the Professionals in Epilepsy Care Symposium, experts discuss transition models and their personal experience in handling the handoff. They also address the challenges of the transition for adolescents with intellectual disabilities and epilepsy.

• Barriers to optimal care. Several poster abstracts focus on the factors associated with patients not having access to specialized epilepsy care that they need, such as video EEG monitoring, surgery, or psychiatric, psychological, neuropsychological, or speech and language evaluations. The most important factors predicting access to such care are age, race, insurance status, and proximity to a comprehensive epilepsy center.

• Predicting epilepsy after childhood status epilepticus. The Status Epilepticus Outcomes Study (STEPSOUT) is the first prospective, population-based pediatric study of childhood status epilepticus. Its preliminary results indicate that children with prolonged febrile seizures have good neurologic and cognitive outcomes within 10 years of the status epilepticus episode. Some children with previously known neurologic problems, however, do not.

• Long-term results of deep brain stimulation. The SANTE (Stimulation of the Anterior Nucleus of the Thalamus for Epilepsy) trial tested bilateral deep brain stimulation of the anterior nuclei of the thalamus for drug-refractory epilepsy. Its 5-year results indicate sustained efficacy and continued improvement, with a median reduction in seizure frequency of 69%. The intervention is still considered investigational in the United States.

• Discussing sudden, unexplained death. A new survey indicates that few neurologists always bring up the issue of sudden, unexplained death in epilepsy with patients and their parents, contrary to what many parents say they want.

• Impact of antidepressants on epilepsy outcomes. Selective serotonin and serotonin-norepinephrine reuptake inhibitors do not worsen seizure frequency and may have a slight antiepileptic effect in patients with frequent seizures.

Triple Therapy Boosts HCV Response After Transplant

BOSTON – Liver transplant recipients with hepatitis C virus infection who underwent triple-drug therapy achieved a high extended rapid virologic response rate but often contended with treatment complications in a retrospective multicenter cohort study.

The extended rapid virologic response (eRVR) rate seen in 57% of patients was "encouraging, given a very difficult-to-cure population," Dr. James R. Burton, Jr., said at the annual meeting of the American Association for the Study of Liver Diseases. He noted, however, that it is not clear whether the encouraging eRVR rate will predict sustained virologic response (SVR) as it does in patients who have not had a liver transplant.

Peginterferon plus ribavirin achieves an SVR rate of only 30% in liver transplant recipients with hepatitis C virus infection. While triple therapy with peginterferon, ribavirin, and a protease inhibitor (boceprevir or telaprevir) has significantly improved SVR rates in patients infected with genotype 1 hepatitis C virus, its safety and efficacy in liver transplant recipients are unknown, said Dr. Burton, medical director of liver transplantation at the University of Colorado Hospital, Aurora.

With 101 patients, the five-center study is the largest involving triple therapy in liver transplant recipients with hepatitis C virus infection.

Telaprevir was the protease inhibitor used in most patients (90%); the other 10% received boceprevir. Nearly all patients (96%) had a lead-in treatment phase with peginterferon plus ribavirin. A minority of patients (14%) had an extended lead-in phase of at least 90 days (median, 189 days) and were excluded from the efficacy analyses, but not from the safety analyses. The other patients had a lead-in lasting a median of 29 days. Patients with a long lead-in phase had a median of 398 total treatment days, compared with a median of 154 days for those with a shorter lead-in.

The efficacy study population comprised genotype 1–infected patients (54% genotype 1a, 39% 1b, and 7% mixed) from five medical centers. Most patients were men (76%); their median age was 58 years, and the median time from liver transplant to starting a protease inhibitor was 54 months. An unfavorable IL28B genotype was found in 69% of the 45 patients tested. Of the 60% of patients who had undergone previous antiviral therapy, 29% had a partial response. On liver biopsy, 47% of patients had either bridging fibrosis or cirrhosis.

The immunosuppressive agents used by the patients included cyclosporine (66%) and tacrolimus (23%). A small percentage did not receive a calcineurin inhibitor or rapamycin. In addition, 27% were taking corticosteroids, and 72% were taking mycophenolate mofetil or mycophenolic acid.

On treatment, the percentage of patients with an HCV RNA level below the limit of detection increased from 55% at 4 weeks to 63% at 8 weeks and 71% at 12 weeks. An eRVR, defined as negative HCV RNA tests at both 4 and 12 weeks, occurred in 57%. An eRVR occurred significantly more often among patients who had at least a 1-log drop in HCV RNA levels during the lead-in phase than among those with less than a 1-log drop (76% vs. 35%).

Overall, 12% of patients experienced virologic breakthrough and stopped treatment. Breakthrough occurred more often among those with a long lead-in than among those with a short lead-in (21% vs. 10%). Another 14% discontinued treatment early because of an adverse event; such discontinuations were also more frequent among patients with a long lead-in (40% vs. 11%).

Protease inhibitors are known to inhibit the metabolism of calcineurin inhibitors, which was reflected in the study by the need to reduce the median daily doses of cyclosporine (from 200 mg to 50 mg) and tacrolimus (from 1.0 mg to 0.06 mg) after protease inhibitor therapy began.

Many patients (49%) required blood transfusions during triple therapy; during the first 16 weeks of therapy, these patients received a median of 2.5 units. Most patients (86%) used growth factors, including granulocyte colony-stimulating factor (44%) and erythropoietin (79%). Ribavirin was the medication most often dose-reduced (in 78% of patients). A total of 7% were hospitalized for anemia, Dr. Burton said.

Renal insufficiency, defined as an increase in creatinine of more than 0.5 mg/dL from baseline, developed in 32%. Of the two rejection episodes in the study, one occurred in a patient coming off a protease inhibitor.

Dr. Burton suggested that future studies should focus on identifying predictors of nonresponse, to avoid unnecessary treatment and its associated toxicities, such as complications of anemia and adverse events related to significant protease inhibitor–calcineurin inhibitor interactions (for example, worsening renal function, and graft rejection when transitioning off a protease inhibitor).

Dr. Burton disclosed that he is an investigator in a clinical trial sponsored by Vertex Pharmaceuticals, which makes telaprevir.
