End-stage renal disease risk in lupus nephritis remains unchanged of late

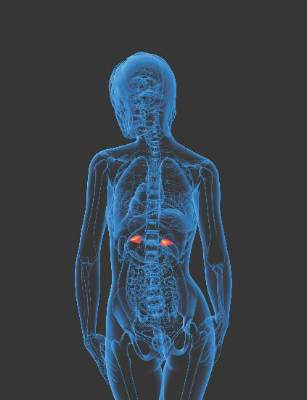

The world health community has lost ground in its fight to reduce end-stage renal disease in patients with lupus nephritis, a systematic review and meta-analysis concluded.

The risk of end-stage renal disease (ESRD) at 5 years of lupus nephritis decreased substantially from the 1970s, when it was 16%, to the mid-1990s, when it plateaued at 11%.

ESRD risks at 10 years and 15 years declined more sharply in the 1970s and 1980s but also plateaued in the mid-1990s at 17% and 22%, respectively.

This plateau was followed by a notable increase in risk in the late 2000s, particularly in the 10-year and 15-year estimates, Dr. Maria Tektonidou of the University of Athens and her coauthors reported (Arthritis Rheumatol. 2016 Jan 27. doi: 10.1002/art.39594).

“Despite extensive use of immunosuppressive medications through the 2000s, we did not find continued improvement in ESRD risks, but instead a slight increase in risks in the late 2000s,” they wrote.

The increase did not appear to be related to greater representation in recent studies of ethnic minorities, who may be more likely to develop ESRD. In the main analysis involving 148 of the 187 studies, “trends suggest this increase may have been temporary, but further follow-up will be needed to determine if this is sustained,” the investigators added.

Notably, patients with class-IV lupus nephritis had the greatest risk of ESRD during the 2000s, with a 15-year risk of 44%.

The 15-year risk of ESRD also was higher by 10 percentage points in developing countries than in developed countries during the 2000s.

The trends are worrisome because ESRD is a costly complication of lupus nephritis, which affects more than half of all patients with systemic lupus erythematosus (SLE). Patients with lupus nephritis have a 26-fold increased risk of death and estimated annual health care costs between $43,000 and $107,000 per patient, the authors noted.

The systematic review and Bayesian meta-analysis included 187 studies reporting on 18,309 adults with lupus nephritis from 1971 to 2015. The main analysis of ESRD risk included 102 studies from developed countries and 46 studies from developing countries.

Across all studies, 86% of patients were women, 32% had elevated serum creatinine levels at study entry, and proteinuria averaged 3.6 g daily. The average age was 31.2 years and mean duration of lupus nephritis was 2.7 years.

The proportion of patients treated with glucocorticoids alone in the studies declined from 54% in 1966 to 9% in 2010, while use of immunosuppressive therapies increased.

The decrease in ESRD risks early on coincided with increased use of immunosuppressives, particularly cyclophosphamide, and better control of hypertension and proteinuria. As for why those gains have stalled, Dr. Tektonidou and her colleagues said it’s possible that the limits of effectiveness of current treatments have been reached and better outcomes will require new therapies. “It is also possible that the plateau primarily reflects lack of progress in the way currently available and effective treatments are deployed,” they added. “This includes health system factors that result in delays in treatment initiation, and patient and health system factors that result in treatment interruptions and reduced adherence.”

In an accompanying editorial, Dr. Candace Feldman and Dr. Karen Costenbader, both of Brigham and Women’s Hospital in Boston, wrote, “While we have made advances over the past 50 years in our understanding of immunosuppressive medications, there have been few meaningful improvements in other domains that contribute to ESRD and to the persistent and disproportionate burden among vulnerable populations” (Arthritis Rheumatol. 2016 Jan 27. doi: 10.1002/art.39593).

Despite the clear importance of medication adherence to SLE care, a recent systematic review of adherence interventions in rheumatic diseases (Ann Rheum Dis. 2015 Feb 9. doi: 10.1136/annrheumdis-2014-206593) found few SLE-specific interventions overall and none that significantly improved adherence outcomes, Dr. Feldman and Dr. Costenbader pointed out.

Dr. Tektonidou and her associates acknowledged that the new systematic review and meta-analysis were limited by the inability to estimate risks beyond 15 years and by the fact that the findings were similar only when observational studies were considered. Factors associated with ESRD, such as renal flares and uncontrolled hypertension, were not examined, and few studies were judged to be of high quality.

Still, the results can be used to counsel patients on risks of ESRD and also will provide benchmarks to judge the effectiveness of future treatments, the authors concluded.

Dr. Feldman and Dr. Costenbader disagreed with this conclusion, citing various study limitations and the many nuanced factors that play into a patient’s risk of developing ESRD.

“This study should rather be used to provide a broad overview of our understanding of changes in SLE ESRD over time, rather than data to counsel an individual patient on his/her risks,” they wrote.

The study was supported by the intramural research program of the National Institute of Arthritis and Musculoskeletal and Skin Diseases. The authors reported having no conflicts of interest.

FROM ARTHRITIS & RHEUMATOLOGY

Key clinical point: The risk of end-stage renal disease in lupus nephritis decreased from the 1970s to the mid-1990s but has since remained largely unchanged.

Major finding: Patients with class-IV lupus nephritis had the greatest risk of ESRD during the 2000s, with a 15-year risk of 44%.

Data source: Systematic review and Bayesian meta-analysis of 18,309 adults with lupus nephritis.

Disclosures: The study was supported by the intramural research program of the National Institute of Arthritis and Musculoskeletal and Skin Diseases. The authors reported having no conflicts of interest.

Wearable device offers home-based knee OA pain relief

A wearable pulsed electromagnetic fields device reduced pain intensity and improved physical functioning in patients with painful knee osteoarthritis (OA) in a double-blind, randomized trial.

The commercially available device (ActiPatch, Bioelectronics Corp.) did not improve patients’ mental health, but significantly reduced patients’ intake of NSAIDs and analgesics, compared with placebo.

“Although NSAIDs remain the gold standard for the treatment of pain in OA, there is increasing need to find conservative and alternative approaches, in order to avoid the toxicity associated with the chronic use of the analgesics, mostly in the elderly population,” wrote Dr. Gian Luca Bagnato of the University of Messina (Italy) and his colleagues (Rheumatology [Oxford]. 2015 Dec 24. doi: 10.1093/rheumatology/kev426).

Pulsed electromagnetic fields (PEMF) therapy has been shown to reduce chondrocyte apoptosis and MMP-13 expression in knee cartilage and to favorably affect cartilage homeostasis in animal models, but data regarding OA pain and function in humans are mixed.

A recent systematic review found no effect when all 14 trials were analyzed together, but when only high-quality randomized clinical trials were included, PEMF provided significantly better pain relief at 4 and 8 weeks and better function at 8 weeks than did placebo (Rheumatology [Oxford]. 2013;52[5]:815-24).

Not only has the quality of trials varied, so have the PEMF pulse frequency and duration used in trials, “further limiting the possibility of comparing efficacy and safety,” Dr. Bagnato and associates observed.

The current study evenly randomized 60 patients with radiologic evidence of knee OA and persistent pain to wear the PEMF or a placebo device for a minimum of 12 hours, mainly at night, with the device kept in place with a wrap. The active device emits a form of non-ionizing electromagnetic radiation at a frequency of 27.12 MHz, a pulse rate of 1,000 Hz, and a burst width of 100 microseconds.

Persistent pain was defined as a minimum mean score of 40 mm for global pain on the VAS (visual analog scale) and daily pain during the month prior to enrollment despite maximal tolerated doses of conventional medical therapy, including acetaminophen and/or an NSAID. The patients’ mean age was 67.7 years, and the mean OA duration was 12 years.

The primary efficacy endpoint was reduction in pain intensity at 1 month on the VAS and WOMAC (Western Ontario and McMaster Universities Arthritis Index). The mean WOMAC total score at baseline was 132.9.

At 1 month, VAS pain scores were reduced 25.5% with the PEMF device and 3.6% with the placebo device. The standardized treatment effect size induced by PEMF therapy was –0.73 (95% confidence interval, –1.24 to –0.19), the investigators reported.

WOMAC pain subscale and total scores fell 23.4% and 18.4% with the PEMF device versus a 2.3% reduction for both scores with the placebo device. The standardized effect size was –0.61 for WOMAC pain (95% CI, –1.12 to –0.09) and –0.34 for WOMAC total score (95% CI, –0.85 to 0.17).
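As a reading aid for the effect sizes above, here is a minimal Python sketch that checks which of the reported 95% confidence intervals exclude zero. It is not part of the investigators' analysis. The function names are hypothetical, and the standard-error back-calculation assumes a symmetric, normal-based interval, which the report does not state.

```python
# Standardized effect sizes and 95% CIs exactly as reported above:
# (estimate, lower bound, upper bound)
reported = {
    "VAS pain": (-0.73, -1.24, -0.19),
    "WOMAC pain": (-0.61, -1.12, -0.09),
    "WOMAC total": (-0.34, -0.85, 0.17),
}


def excludes_zero(lower, upper):
    """Return True when the whole interval lies on one side of zero."""
    return lower > 0 or upper < 0


def approx_standard_error(lower, upper, z=1.96):
    """Back out an approximate SE (assumption: symmetric normal-based 95% CI)."""
    return (upper - lower) / (2 * z)


for name, (estimate, lower, upper) in reported.items():
    se = approx_standard_error(lower, upper)
    print(
        f"{name}: effect size {estimate:+.2f}, approx. SE {se:.2f}, "
        f"CI excludes zero: {excludes_zero(lower, upper)}"
    )
```

Under these assumptions, the intervals for VAS pain and WOMAC pain exclude zero, while the interval for the WOMAC total score does not.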

At 1 month, the mean Short Form-36 physical health score was significantly better in the PEMF group than in the placebo group (55.8 vs. 53.1; P = .024), while SF-36 mental health scores were nearly identical (43.8 vs. 43.6; P = .6).

Patients were allowed per protocol to take prescribed analgesic therapy as needed, but eight patients from the PEMF group stopped these medications, while one patient from the placebo group stopped medication and three started a new therapy for chronic pain. No adverse events were reported during the study.

“Given that our data are limited to a low number of participants and the long-term efficacy of the wearable device is unknown, the generalizability of the results needs to be confirmed in a larger clinical trial with a longer duration of treatment,” Dr. Bagnato and his coauthors concluded. “However, the use of a wearable PEMF therapy in knee OA can be considered as an alternative safe and effective therapy in knee OA, providing the possibility for home-based management of pain, compared with previous studies.”

Nonpharmacologic therapies and pharmacologic agents are helpful for a large segment of the population with knee osteoarthritis (OA). In contrast to rheumatoid arthritis, for which there are now many truly effective agents, knee OA leaves physicians and patients frustrated by borderline-effective therapies for the proportion of patients who respond poorly to present-day treatment. Joint replacement continues to be the most effective treatment for hip and knee OA, but many patients have postoperative joint pain. In addition, the population of patients with pain from knee OA is growing with the aging population and already exceeds the number that can be accommodated by our present physicians, without even considering the financial burden.

Until something is of proven benefit, researchers continue to fine-tune existing programs to maximize their benefit. One of the nonpharmacologic therapies is a wearable device that delivers pulsed electromagnetic fields (PEMF). Clinical trials supporting pulsed electrical stimulation for knee OA have been available for more than 20 years (J Rheumatol. 1993 Mar;20:456-60 and J Rheumatol. 1995 Sep;22:1757-61). Indeed, the devices have been commercially available for more than 10 years.

A 2013 Cochrane review concluded that “... electromagnetic field treatment may provide moderate benefit for osteoarthritis sufferers in terms of pain relief,” with more data needed for physical function and quality of life (Cochrane Database Syst Rev. 2013 Dec 14;12:CD003523). The studies tended to be small: the review covered nine studies including 636 patients. One of the problems in performing a systematic review is that a variety of devices that vary in their functions have been tested. Examples of devices that have been tested in knee OA are the ActiPatch (used in the study by Dr. Bagnato and his colleagues), BioniCare, EarthPulse, MAGCELL ARTHRO, and Magnetofield devices. They vary in structure, size, frequency (Hz) per area, magnetic flux density, time intervals of each frequency (burst milliseconds), voltage, decibel level, duty cycle, contact time and intervals, wearing time (minutes or hours), etc. Blinding patients and investigators to whether the device is on or off has also been complicated.

Dr. Bagnato and his associates add to the limited literature with a well-designed and well-conducted but relatively small trial. However, until there are more data, it will be difficult to use these devices on a regular basis, as they tend to be quite expensive and require a strong commitment of time and energy by the patient, who often thinks of the device as a form of alternative medicine.

Dr. Roy D. Altman is professor emeritus of medicine in the division of rheumatology and immunology at the University of California, Los Angeles. He has no relevant disclosures.

A wearable pulsed electromagnetic fields device reduced pain intensity and improved physical functioning in patients with painful knee osteoarthritis (OA) in a double-blind, randomized trial.

The commercially available device (ActiPatch, Bioelectronics Corp.) did not improve patients’ mental health, but significantly reduced patients’ intake of NSAIDs and analgesics, compared with placebo.

“Although NSAIDs remain the gold standard for the treatment of pain in OA, there is increasing need to find conservative and alternative approaches, in order to avoid the toxicity associated with the chronic use of the analgesics, mostly in the elderly population,” wrote Dr. Gian Luca Bagnato of the University of Messina (Italy) and his colleagues (Rheumatology [Oxford]. 2015 Dec 24. doi: 10.1093/rheumatology/kev426).

Pulsed electromagnetic fields (PEMF) therapy has been shown to reduce chondrocyte apoptosis and MMP-13 expression of knee cartilage and favorably affect cartilage homeostasis in animal models, but data regarding osteoarthritis (OA) pain and function in humans are mixed.

A recent systematic review found no effect in all 14 trials analyzed, but when only high-quality randomized clinical trials were included, PEMF provided significantly better pain relief at 4 and 8 weeks and better function at 8 weeks than did placebo (Rheumatology [Oxford]. 2013;52[5]:815-24).

Not only has the quality of trials varied, so has the PEMF pulse frequency and duration used in trials, “further limiting the possibility of comparing efficacy and safety,” Dr. Bagnato and associates observed.

The current study evenly randomized 60 patients with radiologic evidence of knee OA and persistent pain to wear the PEMF or a placebo device for a minimum of 12 hours, mainly at night, with the device kept in place with a wrap. The active device emits a form of non-ionizing electromagnetic radiation at a frequency of 27.12 MHz, a pulse rate of 1,000 Hz, and a burst width of 100 microsec.

Persistent pain was defined as a minimal mean score of 40 mm for global pain on the VAS (visual analog scale) and daily pain during the month prior to enrollment despite maximal tolerated doses of conventional medical therapy, including acetaminophen and/or an NSAID. The patients’ mean age was 67.7 years and mean OA duration 12 years.

The primary efficacy endpoint was reduction in pain intensity at 1 month on the VAS and WOMAC (Western Ontario and McMaster Universities Arthritis Index). The mean WOMAC total score at baseline was 132.9.

At 1 month, VAS pain scores were reduced 25.5% with the PEMF device and 3.6% with the placebo device. The standardized treatment effect size induced by PEMF therapy was –0.73 (95% confidence interval, –1.24 to –0.19), the investigators reported.

WOMAC pain subscale and total scores fell 23.4% and 18.4% with the PEMF device versus a 2.3% reduction for both scores with the placebo device. The standardized effect size was –0.61 for WOMAC pain (95% CI, –1.12 to –0.09) and –0.34 for WOMAC total score (95% CI, –0.85 to 0.17).

At 1 month, the mean Short Form-36 physical health score was significantly better in the PEMF group than in the placebo group (55.8 vs. 53.1; P = .024), while SF-36 mental health scores were nearly identical (43.8 vs. 43.6; P = .6).

Patients were allowed per protocol to take prescribed analgesic therapy as needed, but eight patients from the PEMF group stopped these medications, while one patient from the placebo group stopped medication and three started a new therapy for chronic pain. No adverse events were reported during the study.

“Given that our data are limited to a low number of participants and the long-term efficacy of the wearable device is unknown, the generalizability of the results needs to be confirmed in a larger clinical trial with a longer duration of treatment,” Dr. Bagnato and his coauthors concluded. “However, the use of a wearable PEMF therapy in knee OA can be considered as an alternative safe and effective therapy in knee OA, providing the possibility for home-based management of pain, compared with previous studies.”

FROM RHEUMATOLOGY

Key clinical point: Pulsed electromagnetic fields therapy is safe and effective in improving knee osteoarthritis symptoms.

Major finding: The mean treatment effect size was –0.73 in the VAS score and –0.34 in the WOMAC score.

Data source: Double-blind, randomized trial in 60 patients with knee osteoarthritis and persistent pain.

Disclosures: Bioelectronics provided the pulsed electromagnetic fields and placebo devices. The authors reported having no conflicts of interest.

Metformin trims mother’s weight, but not baby’s

In obese pregnant women without diabetes, daily metformin reduces maternal weight gain, but not infant birth weight, results of the MOP trial show.

The incidence of preeclampsia was also lower with metformin than with placebo (3.0% vs. 11.3%; odds ratio, 0.24; P = .001).
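For readers who want to see how the two incidence figures relate to the reported odds ratio, the following is a minimal sketch of an unadjusted calculation. It assumes the odds ratio can be approximated directly from the two rounded percentages; the function name `odds_ratio` is illustrative, and the trial's own analysis may have used patient-level counts or adjustment.

```python
def odds_ratio(p_treated: float, p_control: float) -> float:
    """Unadjusted odds ratio from two event proportions.

    Both proportions must lie strictly between 0 and 1.
    """
    odds_treated = p_treated / (1.0 - p_treated)
    odds_control = p_control / (1.0 - p_control)
    return odds_treated / odds_control


# Preeclampsia incidence as reported: 3.0% with metformin, 11.3% with placebo.
or_preeclampsia = odds_ratio(0.030, 0.113)
print(round(or_preeclampsia, 2))  # 0.24
```

The result agrees with the reported odds ratio of 0.24, which suggests the published figure is close to a simple comparison of the two groups' odds.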

There was no significant difference between the two groups in the incidence of other pregnancy complications or of adverse fetal or neonatal outcomes, Argyro Syngelaki, Ph.D., of King’s College Hospital in London, and her colleagues reported (N Engl J Med. 2016 Feb 3;374:434-43).

Attempts at reducing the incidence of pregnancy complications associated with obesity have focused on dietary and lifestyle interventions but have generally been unsuccessful, she noted.

Additionally, the recent randomized EMPOWaR (Effect of Metformin on Maternal and Fetal Outcomes) trial failed to show that metformin use was associated with less gestational weight gain and a lower incidence of preeclampsia, compared with placebo.

EMPOWaR, however, used a body mass index (BMI) cutoff of 30 kg/m2 and a 2.5-g dose of metformin, compared with a BMI cutoff of 35 and the 3.0-g dose used in the MOP (Metformin in Obese Non-diabetic Pregnant Women) trial, Dr. Syngelaki observed.

Adherence to metformin was also higher in the MOP trial. Nearly 66% of women took a minimum metformin dose of 2.5 g on at least 50% of the days between randomization and delivery, whereas in EMPOWaR, 2.5 g of metformin was used on only 38% of the days in the same period. The proportion of EMPOWaR patients who were in this dose subgroup was not specified.

MOP investigators randomly assigned 400 women with a singleton pregnancy to metformin or placebo from 12-18 weeks of gestation until delivery. All women underwent a 75-g oral glucose tolerance test at 28 weeks’ gestation, with metformin and placebo stopped for 1 week before the test. Women with abnormal oral glucose tolerance test results continued the assigned study regimen and began home glucose monitoring. Insulin was added to their regimen if target blood-glucose values were not achieved.

The metformin and placebo groups were well matched at baseline with respect to median maternal age (32.9 years vs. 30.8 years), median BMI (38.6 kg/m2 vs. 38.4 kg/m2), and spontaneous conception (97.5% vs. 98.0%).

Metformin did not reduce the primary outcome of median neonatal birth-weight z score, compared with placebo (0.05 vs. 0.17; P = .66), or the incidence of large-for-gestational-age neonates (16.8% vs. 15.4%; P = .79), the MOP investigators reported.

There were no significant differences between the metformin and placebo groups in rates of miscarriage (zero vs. three cases), stillbirths (one case vs. two cases), or neonatal death (zero vs. one case).

The median maternal gestational weight gain, however, was lower with metformin than with placebo (4.6 kg vs. 6.3 kg; P less than .001), as was the incidence of preeclampsia.

The authors acknowledged that a limitation of the study was that it was not adequately powered for the secondary outcomes, but said the preeclampsia finding is compatible with several previous studies reporting that the prevalence of this potentially deadly complication increased with increasing prepregnancy BMI and gestational weight gain.

Side effects such as nausea, vomiting, and headache were as expected during gestation, although the incidence was significantly higher in the metformin group. Still, there were no significant between-group differences with regard to the decision to continue with the full dose, reduce the dose, or stop the study regimen.

“The rate of adherence was considerably higher among women taking the full dose of 3.0 g per day than among those taking less than 2.0 g per day, which suggests that adherence was not driven by the presence or absence of side effects, but by the motivation of the patients to adhere to the demands of the study,” the investigators wrote.

The Fetal Medicine Foundation funded the study. The investigators reported having no financial disclosures.

FROM NEW ENGLAND JOURNAL OF MEDICINE

Key clinical point: Antenatal metformin use reduces maternal weight gain, but not neonatal birth weight.

Major finding: The median maternal gestational weight gain was 4.6 kg with metformin and 6.3 kg with placebo (P less than .001).

Data source: Double-blind, randomized trial in 400 obese pregnant women without diabetes.

Disclosures: The Fetal Medicine Foundation funded the study. The investigators reported having no financial disclosures.

Office visits still common before colonoscopy

Nearly 30% of outpatient colonoscopies for colon cancer screening or polyp surveillance were preceded by a gastroenterology office visit, with that number reaching a staggering 50.5% in the South, according to an analysis of 842,849 middle-aged adults.

Patients with a higher Charlson Comorbidity Index (CCI) also were more likely to have an office visit. Still, 66.4% of patients with an office visit had a CCI of 0.

“Although the precolonoscopy office visits added a modest $36 per colonoscopy in this population, there are an estimated 7 million screening colonoscopies performed in the United States annually, so the cumulative costs are significant,” Dr. Kevin Riggs of Johns Hopkins University, Baltimore, and associates reported (JAMA. 2016 Feb 2. doi:10.1001/jama.2015.15278).
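The scale the authors allude to can be illustrated with simple arithmetic. This is only a rough sketch: it assumes the $36 average observed in the study population would apply uniformly to all of the estimated 7 million annual screening colonoscopies, which the study itself does not claim, and the function name `cumulative_cost` is illustrative.

```python
def cumulative_cost(added_cost_per_procedure: float, procedures_per_year: int) -> float:
    """Total added cost if every procedure carried the same average added cost."""
    return added_cost_per_procedure * procedures_per_year


# Figures quoted in the article: $36 per colonoscopy, about 7 million per year.
total = cumulative_cost(36, 7_000_000)
print(f"${total:,.0f}")  # $252,000,000
```

Under that assumption, the added cost would be on the order of $250 million per year.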

The cost of precolonoscopy office visits has received little attention despite scrutiny of the high cost of colonoscopy in the United States and the availability of open-access colonoscopy since the 1990s, the authors noted.

The primary limitation of the study was the inability to determine the exact circumstances of the office visits. Patients with a diagnosis of colon cancer or inflammatory bowel disease in the prior 12 months were excluded from the analysis.

The study was funded by the National Institutes of Health and the National Cancer Institute. Dr. Riggs’ salary is supported by an NIH grant. Coauthor and colleague Dr. Craig Pollack reported stock ownership in the Advisory Board Company and that his salary is supported by a grant from the NCI.

FROM JAMA

Minimal residual disease a powerful prognostic factor in AML

The presence of minimal residual disease predicts relapse in patients with NPM1-mutated acute myeloid leukemia and is superior to currently used molecular genetic markers in determining whether these patients should be considered for stem cell transplantation, a new study has found.

At 3 years, patients with minimal residual disease (MRD) had a significantly greater risk of relapse than those with no MRD (82% vs. 30%; univariate hazard ratio, 4.80; P less than .001) and a lower rate of survival (24% vs. 75%; univariate HR, 4.38; P less than .001), Adam Ivey of King’s College London reported (N Engl J Med. 2016; doi:10.1056/NEJMoa1507471).

In an editorial that accompanied the study, Dr. Michael J. Burke from the Children’s Hospital of Wisconsin in Milwaukee wrote, “Time will tell, but this moment may prove to be a pivotal one in the assessment of minimal residual disease to assign treatment in patients with AML” (N Engl J Med. 2016; doi:10.1056/NEJMe1515525).

In adult AML, assessment of MRD has taken a back seat to analyses of cytogenetic and molecular lesions in determining a patient’s risk and treatment strategy. Typically, allogeneic stem cell transplantation is used for patients with high-risk features such as chromosome 3, 5, or 7 abnormalities or the FLT3-internal tandem duplication (ITD) mutation, while chemotherapy alone is used for low-risk disease.

The role of transplantation is unclear, however, for cytogenetically standard-risk patients, which includes those with a mutation in the gene encoding nucleophosmin (NPM1).

To address this issue, the investigators used a reverse-transcriptase quantitative polymerase chain reaction assay to evaluate 2,569 bone marrow and peripheral-blood samples from 346 patients with NPM1 mutations who had completed two cycles of induction chemotherapy in the U.K. National Cancer Research Institute AML17 trial.

MRD, defined as persistence of NPM1-mutated transcripts in peripheral blood, was present in 15% of patients after the second chemotherapy cycle.

Patients with MRD were significantly more likely than those without MRD to have a high U.K. Medical Research Council clinical risk score and to carry the FLT3-ITD mutation.

On univariate analysis, the risk of relapse was significantly higher with the presence of MRD in peripheral blood, an increased white cell count, and with the DNMT3A and FLT3-ITD mutations.

Only the presence of MRD and an elevated white cell count significantly predicted survival, Mr. Ivey reported.

“We could find no specific molecular subgroup consisting of 10 patients or more that had a rate of survival less than 52%; in contrast, the rate in the group with the presence of minimal residual disease was 24%,” he observed.

In multivariate analysis, the presence of MRD was the only significant prognostic factor for relapse (HR, 5.09; P less than .001) or death (HR, 4.84; P less than .001).

The results were validated in an independent cohort of 91 AML17 study patients. The validation confirmed that MRD in peripheral blood predicts worse outcome at 2 years than the absence of MRD, with a cumulative incidence of relapse of 70% vs. 31% (P = .001) and overall survival rates of 40% vs. 87% (P = .001), reported the investigators, including senior author Professor David Grimwade, also from King’s College London.

The clinical implications of these results “are substantive” because NPM1-mutated AML is the most common subtype of AML and because of the uncertainty over the best treatment strategy for patients typically classified as standard risk, editorialist Dr. Burke observed.

“Now with the ability to reclassify standard-risk or low-risk patients as high-risk on the basis of the persistent expression of mutant NPM1 transcripts, it may be possible that stem-cell transplantation is a better approach in patients who otherwise would be treated with chemotherapy alone and that transplantation may be avoidable in high-risk patients who have no evidence of minimal residual disease,” he wrote. “Such predictions will need to be tested prospectively.”

The presence of MRD is also known to be an important independent prognostic factor in acute lymphoblastic leukemia, but since AML has a greater molecular heterogeneity, routine MRD assessment has not been as quickly adopted in AML, Dr. Burke noted.

The Children’s Oncology Group, however, recently adopted MRD assessment by flow cytometry to further stratify children with newly diagnosed AML after first induction therapy into low-risk or high-risk groups.

The study was supported by grants from Bloodwise and the National Institute for Health Research. Mr. Ivey and Dr. Burke reported having no disclosures.

The presence of minimal residual disease predicts relapse in patients with NMP1-mutated acute myeloid leukemia and is superior to currently used molecular genetic markers in determining whether these patients should be considered for stem cell transplantation, a new study has found.

At 3 years, patients with minimal residual disease (MRD) had a significantly greater risk of relapse than those with no MRD (82% vs. 30%; univariate hazard ratio, 4.80; P less than .001) and a lower rate of survival (24% vs. 75%; univariate HR, 4.38; P less than .001), Adam Ivey of King’s College London reported (N Engl J Med. 2016; doi:10.1056/NEJMoa1507471).

In an editorial that accompanied the study Dr. Michael J. Burke from the Children’s Hospital of Wisconsin in Milwaukee wrote, “Time will tell, but this moment may prove to be a pivotal one in the assessment of minimal residual disease to assign treatment in patients with AML” (N Engl J Med. 2016; doi:10.1056/NEJMe1515525).

In adult AML, assessment of MRD has taken a back seat to analyses of cytogenetic and molecular lesions in determining a patient’s risk and treatment strategy. Typically, allogeneic stem cell transplantation is used for patients with high-risk features such as chromosome 3, 5, or 7 abnormalities or the FLT3-internal tandem duplication (ITD) mutation, while chemotherapy alone is used for low-risk disease.

The role of transplantation is unclear, however, for cytogenetically standard-risk patients, which includes those with a mutation in the gene encoding nucleophosmin (NPM1).

To address this issue, the investigators used a reverse-transcriptase quantitative polymerase chain reaction assay to evaluate 2,569 bone marrow and peripheral-blood samples from 346 patients with NPM1 mutations who had completed two cycles of induction chemotherapy in the U.K. National Cancer Research Institute AML17 trial.

MRD, defined as persistence of NPM1-mutated transcripts in peripheral blood, was present in 15% of patients after the second chemotherapy cycle.

Patients with MRD were significantly more likely than those without MRD to have a high U.K. Medical Research Council clinical risk score and to carry the FLT3-ITD mutation.

On univariate analysis, the risk of relapse was significantly higher with the presence of MRD in peripheral blood, an increased white cell count, and with the DNMT3A and FLT3-ITD mutations.

Only the presence of MRD and an elevated white cell count significantly predicted survival, Mr. Ivey reported.

“We could find no specific molecular subgroup consisting of 10 patients or more that had a rate of survival less than 52%; in contrast, the rate in the group with the presence of minimal residual disease was 24%,” he observed.

In multivariate analysis, the presence of MRD was the only significant prognostic factor for relapse (HR, 5.09; P less than .001) or death (HR, 4.84; P less than .001).

The results were validated in an independent cohort of 91 AML17 study patients. It confirmed that MRD in peripheral blood predicts worse outcome at 2 years than the absence of MRD, with a cumulative incidence of relapse of 70% vs. 31% (P = .001) and overall survival rates of 40% vs. 87% (P = .001), reported the investigators, including senior author Professor David Grimwade, also from King’s College London.

The clinical implications of these results “are substantive” because NPM-1 mutated AML is the most common subtype of AML and because of the uncertainty over the best treatment strategy for patients typically classified as standard risk, editorialist Dr. Burke observed.

“Now with the ability to reclassify standard-risk or low-risk patients as high-risk on the basis of the persistent expression of mutant NPM1 transcripts, it may be possible that stem-cell transplantation is a better approach in patients who otherwise would be treated with chemotherapy alone and that transplantation may be avoidable in high-risk patients who have no evidence of minimal residual disease,” he wrote. “Such predictions will need to be tested prospectively.”

The presence of MRD is also known to be an important independent prognostic factor in acute lymphoblastic leukemia, but since AML has a greater molecular heterogeneity, routine MRD assessment has not been as quickly adopted in AML, Dr. Burke noted.

The Children’s Oncology Group, however, recently adopted MRD assessment by flow cytometry to further stratify children with newly diagnosed AML after first induction therapy into low-risk or high-risk groups.

The study was supported by grants from Bloodwise and the National Institute for Health Research. Mr. Ivey and Dr. Burke reported having no disclosures.

FROM THE NEW ENGLAND JOURNAL OF MEDICINE

Key clinical point: The presence of minimal residual disease provides powerful prognostic information independent of other risk factors in patients with NPM1-mutated AML.

Major finding: Minimal residual disease was associated with a significantly greater risk of relapse than absence of MRD (82% vs. 30%; hazard ratio, 4.80; P less than .001) and a lower rate of survival (24% vs. 75%; HR, 4.38, P less than .001).

Data source: Analysis of 346 patients with NPM1-mutated AML.

Disclosures: The study was supported by grants from Bloodwise and the National Institute for Health Research. Mr. Ivey and Dr. Burke reported having no disclosures.

Research refining radiomic features for lung cancer screening

SAN DIEGO – A series of radiomics-derived imaging features may improve the diagnostic accuracy of low-dose CT lung cancer screening and help predict which nodules are at risk of becoming cancers.

“We are providing pretty compelling evidence that there is some utility in this science,” Matthew Schabath, Ph.D., said at a conference on lung cancer translational science sponsored by the American Association for Cancer Research and the International Association for the Study of Lung Cancer.

Radiomics is an emerging field that uses high-throughput extraction to identify hundreds of quantitative features from standard computed tomography (CT) images and mines that data to develop diagnostic, predictive, or prognostic models.

Radiologists first identify a region of interest (ROI) on the CT scan containing either the whole tumor or spatially explicit regions of the tumor called “habitats.” These ROIs are then segmented via computer software before being rendered in three dimensions. Quantitative features are extracted from the rendered volumes and entered into the models, along with other clinical and patient data.

“Right now our tool box is about 219, but by the end of the year we are hoping to have close to 1,000 radiomic features we can extract from a 3-D rendered nodule or tumor,” said Dr. Schabath, of the Moffitt Cancer Center in Tampa, Fla.

Although not without its own challenges, radiomics is a far cry from the current practice that relies on a single CT feature, nodule size, and clinical guidelines to evaluate and follow up pulmonary nodules, none of which provides clinicians tools to accurately predict the risk or probability of lung cancer development.

CT images are typically thought of as pictures, but in radiomics, “the images are data. That’s really the underlying principle,” he said.

Led by Dr. Robert Gillies, often referred to as the father of radiomics, the researchers extracted and analyzed the 219 radiomic features from nodules in 196 lung cancer cases and in 392 controls who had a positive but benign nodule at the baseline scan and were matched for age, sex, smoking status, and race.

The post hoc, nested case-control study used images and data from the pivotal National Lung Screening Trial, which identified a 20% reduction in lung cancer mortality for low-dose CT (LDCT) screening compared with chest x-rays but also a 96% false-positive rate, highlighting the challenges of LDCT as a screening tool.

Two classes of features were extracted from the images: semantic features, which are commonly used in radiology to describe ROIs, and agnostic features, which are mathematically extracted quantitative descriptors that capture lesion heterogeneity.

Univariable analyses were used to identify statistically significant features (threshold P value less than .05), and a backward elimination process (threshold P less than .1) was then performed to generate the final set of features, Dr. Schabath said.
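The two-stage selection described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the investigators' code: the report does not say which regression model or software was used, so the p-value routine is passed in as a callable. The function names, the two texture feature names, and every p-value in the toy example are hypothetical and are not study results.

```python
from typing import Callable, Dict, List

# Assumed interface: given the features in a model, return a p-value per feature.
PValueFn = Callable[[List[str]], Dict[str, float]]


def univariable_screen(features: List[str], fit_pvalues: PValueFn,
                       alpha: float = 0.05) -> List[str]:
    """Keep each feature whose single-feature model gives p < alpha."""
    return [name for name in features if fit_pvalues([name])[name] < alpha]


def backward_eliminate(features: List[str], fit_pvalues: PValueFn,
                       alpha: float = 0.10) -> List[str]:
    """Refit repeatedly, dropping the least significant feature until all have p < alpha."""
    current = list(features)
    while current:
        pvals = fit_pvalues(current)
        worst = max(current, key=lambda name: pvals[name])
        if pvals[worst] < alpha:
            break  # every remaining feature meets the threshold
        current.remove(worst)
    return current


def toy_pvalues(names: List[str]) -> Dict[str, float]:
    """Stand-in for a real model fit; all numbers are invented for illustration."""
    base = {"volume": 0.001, "circularity": 0.02,
            "texture_a": 0.04, "texture_b": 0.30}
    out = {name: base[name] for name in names}
    # Pretend texture_a loses significance once volume is in the same model.
    if "volume" in names and "texture_a" in names:
        out["texture_a"] = 0.25
    return out


candidates = ["volume", "circularity", "texture_a", "texture_b"]
screened = univariable_screen(candidates, toy_pvalues)
final = backward_eliminate(screened, toy_pvalues)
print(screened, final)
```

In the toy run, one feature fails the univariable screen and a second is dropped during backward elimination because it is no longer significant once the other features are in the model.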

Separate analyses were performed for predictive and diagnostic features.

In the risk prediction model, eight “highly informative features” were identified, Dr. Schabath said. Five were agnostic and three were semantic: circularity of the nodule, volume, and distance from or attachment to the pleura.

The receiver operating characteristic (ROC) area under the curve for the model was 0.92, with 75% sensitivity and 89% specificity. When the model included only patient demographics, it was no better than flipping a coin for predicting nodules at risk of becoming cancerous (ROC 0.58), he said.
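For readers less familiar with these metrics, the sketch below shows one common way sensitivity, specificity, and the area under the ROC curve are computed from a model's risk scores. It is an illustration only: the labels, scores, and the 0.5 cutoff are made up, and the report does not describe how the investigators computed their figures.

```python
def sensitivity_specificity(labels, scores, threshold):
    """Call a nodule positive when its score is at or above the threshold."""
    pairs = list(zip(labels, scores))
    tp = sum(1 for y, s in pairs if y == 1 and s >= threshold)
    fn = sum(1 for y, s in pairs if y == 1 and s < threshold)
    tn = sum(1 for y, s in pairs if y == 0 and s < threshold)
    fp = sum(1 for y, s in pairs if y == 0 and s >= threshold)
    return tp / (tp + fn), tn / (tn + fp)


def roc_auc(labels, scores):
    """AUC as the chance a random case outscores a random control (ties count half)."""
    cases = [s for y, s in zip(labels, scores) if y == 1]
    controls = [s for y, s in zip(labels, scores) if y == 0]
    wins = sum(1.0 if c > k else 0.5 if c == k else 0.0
               for c in cases for k in controls)
    return wins / (len(cases) * len(controls))


# Invented example: 1 = nodule that became cancer, 0 = benign control.
labels = [1, 1, 1, 1, 0, 0, 0, 0]
scores = [0.9, 0.8, 0.7, 0.3, 0.6, 0.4, 0.2, 0.1]

sens, spec = sensitivity_specificity(labels, scores, threshold=0.5)
auc = roc_auc(labels, scores)
print(sens, spec, auc)
```

An AUC of 0.5 corresponds to chance, which is why a value of 0.58 is described as little better than a coin flip.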

Six highly informative features were identified in the diagnostic model, which extracted features from the nodules found at the first and second follow-up interval, Dr. Schabath said. Three were agnostic and three semantic: longest diameter, volume, and distance from or attachment to the pleura.

The ROC for the diagnostic model was 0.89, with 74% sensitivity and 89% specificity.

When an additional analysis was performed using a nodule threshold of less than 15 mm to account for nodule growth over time and smaller nodule size at baseline in controls, the ROC and specificity held steady, but sensitivity dropped off to 59%, he said.

“I think we’re showing a rigorous [statistical] approach by identifying really unique, highly informative features,” Dr. Schabath concluded.

The overlap of volume and distance from or attachment to the pleura in both the diagnostic and predictive models suggests “there might be something very important about these two features,” he added.

Dr. Schabath stressed that the findings are preliminary and said additional analyses will be run before the results are ready for prime time. Long-term goals are to implement radiomic-based decision support tools and models into radiology reading rooms.

“In the future, we envision that all medical images will be converted to mineable data with the process of radiomics as part of standard of care,” Dr. Gillies said in an interview. “Such data have already shown promise to increase the precision and accuracy of diagnostic images, and hence, will increasingly be used in therapy decision support.”

Among the many challenges that first need to be resolved is that images are often captured with settings and filters that can differ even within a single institution. The inconsistency adds noise to the data that are extracted by computers.

“Hence, the most robust data we have today are generated by radiologists themselves, although this has its own challenges of being time-consuming with inter-reader variability,” Dr. Gillies noted.

Another major challenge is sharing of the image data. Right now, radiomics is practiced at only a few research hospitals, and thus building large cohort studies requires that the images be moved across sites. In the future, the researchers anticipate that software can be deployed across sites to enable radiomic feature extraction, which would mean that only the extracted data will have to be shared, he said.
