Study supports expanded definition of serrated polyposis syndrome

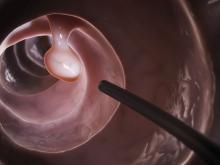

Patients with more than 10 colonic polyps, of which at least half were serrated, and their first-degree relatives had a risk of colorectal cancer similar to that of patients who met formal diagnostic criteria for serrated polyposis syndrome (SPS), according to a retrospective multicenter study published in the July issue of Gastroenterology (doi: 10.1053/j.gastro.2017.04.003).

Such patients “should be treated with the same follow-up procedures as those proposed for patients with SPS, and possibly the definition of SPS should be broadened to include this phenotype,” wrote Cecilia M. Egoavil, MD, Miriam Juárez, and their associates.

SPS increases the risk of colorectal cancer (CRC) and is considered a heritable disease, which mandates “strict surveillance” of first-degree relatives, the researchers noted. The World Health Organization defines SPS as having at least five histologically diagnosed serrated lesions proximal to the sigmoid colon, of which two are at least 10 mm in diameter, or serrated polyps proximal to the sigmoid colon and a first-degree relative with SPS, or more than 20 serrated polyps throughout the colon. This “arbitrary” definition is “somewhat restrictive, and possibly leads to underdiagnosis of this disease,” the researchers wrote. Patients with multiple serrated polyps who do not meet WHO SPS criteria might have a “phenotypically attenuated form of serrated polyposis.”

For the study, the researchers compared 53 patients meeting WHO SPS criteria with 145 patients who did not meet these criteria but had more than 10 polyps throughout the colon, of which at least 50% were serrated. For both groups, number of polyps was obtained by adding polyp counts from subsequent colonoscopies. The data source was EPIPOLIP, a multicenter study of patients recruited from 24 hospitals in Spain in 2008 and 2009. At baseline, all patients had more than 10 adenomatous or serrated colonic polyps but did not have familial adenomatous polyposis, Lynch syndrome, hamartomatous polyposis, inflammatory bowel disease, or only hyperplastic rectosigmoid polyps.
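The grouping rules described above can be written out as a short check. The following is only an illustrative sketch: the field names and the `study_group` helper are hypothetical, the second WHO criterion is read as "at least one" proximal serrated polyp plus an affected first-degree relative, and the study's exclusion criteria (familial adenomatous polyposis, Lynch syndrome, and so on) are not modeled.

```python
from dataclasses import dataclass


@dataclass
class PolypSummary:
    # All field names are hypothetical; counts are cumulative across colonoscopies.
    total_polyps: int            # adenomatous plus serrated polyps in the whole colon
    serrated_total: int          # serrated polyps anywhere in the colon
    serrated_proximal: int       # serrated lesions proximal to the sigmoid colon
    serrated_proximal_10mm: int  # of those, lesions at least 10 mm in diameter
    relative_with_sps: bool      # first-degree relative with SPS


def meets_who_sps(p: PolypSummary) -> bool:
    """WHO definition as summarized in the article (any one criterion suffices)."""
    at_least_five_proximal = p.serrated_proximal >= 5 and p.serrated_proximal_10mm >= 2
    # Assumption: "serrated polyps" is read as one or more.
    proximal_with_family_history = p.serrated_proximal >= 1 and p.relative_with_sps
    more_than_twenty = p.serrated_total > 20
    return at_least_five_proximal or proximal_with_family_history or more_than_twenty


def study_group(p: PolypSummary) -> str:
    """Assign a patient to one of the two comparison groups, or to neither."""
    if meets_who_sps(p):
        return "SPS"
    # More than 10 polyps overall, of which at least 50% are serrated.
    if p.total_polyps > 10 and 2 * p.serrated_total >= p.total_polyps:
        return "multiple serrated polyps"
    return "neither"
```

For example, a patient with 12 polyps, 7 of them serrated, who meets none of the WHO criteria would fall into the "multiple serrated polyps" group under this reading.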

The prevalence of CRC was statistically similar between groups (P = .4). There were 12 (22.6%) cases among SPS patients (mean age at diagnosis, 50 years), and 41 (28.3%) cases (mean age, 59 years) among patients with multiple serrated polyps who did not meet SPS criteria. During a mean follow-up of 4.2 years, one (1.9%) SPS patient developed incident CRC, as did four (2.8%) patients with multiple serrated polyps without SPS. Thus, standardized incidence ratios were 0.51 (95% confidence interval, 0.01-2.82) and 0.74 (95% CI, 0.20-1.90), respectively (P = .7). Standardized incidence ratios for CRC also did not significantly differ between first-degree relatives of patients with SPS (3.28, 95% CI, 2.16-4.77) and those with multiple serrated polyps (2.79, 95% CI, 2.10-3.63; P = .5).
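A standardized incidence ratio is the number of observed cases divided by the number expected from reference population rates. The article does not report the expected counts or the interval method, so the sketch below rests on two assumptions: an exact Poisson confidence interval for the observed count (a common choice for small counts), and an expected count back-calculated from the reported ratio purely for illustration.

```python
import math


def poisson_cdf(k: int, mu: float) -> float:
    """P(X <= k) for X ~ Poisson(mu)."""
    term = math.exp(-mu)
    total = term
    for i in range(1, k + 1):
        term *= mu / i
        total += term
    return total


def _root_of_decreasing(f, lo: float, hi: float, tol: float = 1e-10) -> float:
    """Bisection for the root of a function that decreases from positive to negative."""
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if f(mid) > 0:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


def exact_poisson_ci(observed: int, alpha: float = 0.05):
    """Exact two-sided confidence limits for a Poisson mean given an observed count."""
    search_max = 10 * observed + 20  # generous upper bound for the search
    if observed == 0:
        lower = 0.0
    else:
        # Lower limit: the mean at which P(X >= observed) equals alpha / 2.
        lower = _root_of_decreasing(
            lambda mu: poisson_cdf(observed - 1, mu) - (1 - alpha / 2), 0.0, search_max
        )
    # Upper limit: the mean at which P(X <= observed) equals alpha / 2.
    upper = _root_of_decreasing(
        lambda mu: poisson_cdf(observed, mu) - alpha / 2, 0.0, search_max
    )
    return lower, upper


def standardized_incidence_ratio(observed: int, expected: float):
    """Return (SIR, lower limit, upper limit)."""
    lower, upper = exact_poisson_ci(observed)
    return observed / expected, lower / expected, upper / expected


# Four incident cases; the expected count is back-calculated from the
# reported SIR of 0.74 and is an assumption, not a figure from the study.
sir, low, high = standardized_incidence_ratio(4, 4 / 0.74)
```

Under these assumptions the limits land close to the reported interval for the group with multiple serrated polyps. The width of the interval comes almost entirely from the small number of observed cases.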

A Kaplan-Meier analysis confirmed that there were no differences in the incidence of CRC between groups during follow-up. The findings “confirm that a special surveillance strategy is needed for patients with multiple serrated polyps and their relatives, probably similar to the strategy currently recommended for SPS patients,” the researchers concluded. They arbitrarily defined the group with multiple serrated polyps, so they were not able to link CRC to a cutoff number or percentage of serrated polyps, they noted.

Funders included Instituto de Salud Carlos III, Fundación de Investigación Biomédica de la Comunidad Valenciana-Instituto de Investigación Sanitaria y Biomédica de Alicante, Asociación Española Contra el Cáncer, and Conselleria d’Educació de la Generalitat Valenciana. The investigators had no conflicts of interest.

FROM GASTROENTEROLOGY

Key clinical point: Risk of colorectal cancer was similar among patients with serrated polyposis syndrome and those who did not meet formal diagnostic criteria but had more than 10 colonic polyps, of which more than 50% were serrated, and their first-degree relatives.

Major finding: Standardized incidence ratios were 0.51 (95% confidence interval, 0.01-2.82) in patients who met criteria for serrated polyposis syndrome and 0.74 (95% CI, 0.20-1.90) in patients with multiple serrated polyps who did not meet the criteria (P = .7).

Data source: A multicenter retrospective study of 53 patients who met criteria for serrated polyposis and 145 patients who did not meet these criteria, but had more than 10 polyps throughout the colon, of which more than 50% were serrated.

Disclosures: Funders included Instituto de Salud Carlos III, Fundación de Investigación Biomédica de la Comunidad Valenciana–Instituto de Investigación Sanitaria y Biomédica de Alicante, Asociación Española Contra el Cáncer, and Conselleria d’Educació de la Generalitat Valenciana. The investigators had no conflicts of interest.

Steatosis linked to persistent ALT increase in hepatitis B

About one in five patients with chronic hepatitis B virus (HBV) infection had persistently elevated alanine aminotransferase (ALT) levels despite long-term treatment with tenofovir disoproxil fumarate, according to data from two phase III trials reported in the July issue of Clinical Gastroenterology and Hepatology (2017. doi: 10.1016/j.cgh.2017.01.032).

“Both host and viral factors, particularly hepatic steatosis and hepatitis B e antigen [HBeAg] seropositivity, are important contributors to this phenomenon,” Ira M. Jacobson, MD, of Mount Sinai Beth Israel Medical Center, New York, wrote with his associates. “Although serum ALT may indicate significant liver injury, this association is inconsistent, suggesting that relying on serum ALT alone is not sufficient to gauge either the extent of liver injury or the impact of antiviral therapy.”

Long-term treatment with newer antivirals such as tenofovir disoproxil fumarate (TDF) achieves complete viral suppression and improves liver histology in most cases of HBV infection. Transaminase levels are used to track long-term clinical response but sometimes remain elevated in the face of complete virologic response and regression of fibrosis. To explore predictors of this outcome, the researchers analyzed data from 471 chronic HBV patients receiving TDF 300 mg once daily for 5 years as part of two ongoing phase III trials (NCT00117676 and NCT00116805). At baseline, about 25% of patients were cirrhotic (Ishak fibrosis score greater than or equal to 5) and none had decompensated cirrhosis. A central laboratory analyzed ALT levels, which were up to 10 times the upper limit of normal in both HBeAg-positive and -negative patients and were at least twice the upper limit of normal in all HBeAg-positive patients.

After 5 years of TDF, ALT levels remained elevated in 87 patients (18%). Patients with at least 5% (grade 1) steatosis at baseline were significantly more likely to have persistent ALT elevation than were those with less or no steatosis (odds ratio, 2.2; 95% confidence interval, 1.03-4.9; P = .04). At least grade 1 steatosis at year 5 also was associated with persistent ALT elevation (OR, 3.4; 95% CI, 1.6-7.4; P = .002). Other significant correlates included HBeAg seropositivity (OR, 3.3; 95% CI, 1.7-6.6; P less than .001) and age 40 years or younger (OR, 2.1; 95% CI, 1.01-4.3; P = .046). Strikingly, half of HBeAg-positive patients with steatosis at baseline had elevated ALT at year 5, said the investigators.

Because many patients whose ALT values fall within commercial laboratory reference ranges have chronic active necroinflammation or fibrogenesis, the researchers performed a sensitivity analysis of patients who achieved a stricter definition of ALT normalization of no more than 30 U/L for men and 19 U/L for women that has been previously recommended (Ann Intern Med. 2002;137:1-10). In this analysis, 47% of patients had persistently elevated ALT despite effective virologic suppression, and the only significant predictor of persistent ALT elevation was grade 1 or more steatosis at year 5 (OR, 6.2; 95% CI, 2.3-16.4; P less than .001). Younger age and HBeAg positivity plus age were no longer significant.

Hepatic steatosis is common overall and in chronic HBV infection and often leads to increased serum transaminases, the researchers noted. Although past work has linked a PNPLA3 single nucleotide polymorphism to obesity, metabolic syndrome, and hepatic steatosis, the presence of this single nucleotide polymorphism was not significant in their study, possibly because many patients lacked genotype data, they added. “Larger longitudinal studies are warranted to further explore this factor and its potential effect on the biochemical response to antiviral treatment in [chronic HBV] patients,” they concluded.

Gilead Sciences sponsored the study. Dr. Jacobson disclosed consultancy, honoraria, and research ties to Gilead and several other pharmaceutical companies.

Antiviral therapy for chronic hepatitis B virus in most treated patients suppresses rather than eradicates infection. Despite this, long-term treatment results in substantial histologic improvement – including regression of fibrosis and reduction in complications.

However, as Jacobson et al. report in a histologic follow-up of 471 HBV patients treated long-term, aminotransferase elevation persisted in 18%. Factors implicated on multivariate analysis in unresolved biochemical dysfunction included HBeAg seropositivity, age less than 40 years, and steatosis at entry, in addition to steatosis at 5-year follow-up. When the modified normal ranges for aminotransferases proposed by Prati were applied, namely 30 U/L for men and 19 U/L for women, steatosis was the only association with hepatic dysfunction that persisted. This suggests that metabolic rather than viral factors are implicated in persistent biochemical dysfunction in patients with chronic HBV infection. Steatosis is also a frequent finding on liver biopsy in patients with chronic HCV infection.

Importantly, HCV-specific mechanisms have been implicated in the accumulation of steatosis in infected patients, as the virus may interfere with host lipid metabolism. HCV genotype 3 has a marked propensity to cause fat accumulation in hepatocytes, which appears to regress with successful antiviral therapy. In the interferon era, hepatic steatosis had been identified as a predictor of nonresponse to therapy for HCV. In patients with chronic viral hepatitis, attention needs to be paid to cofactors in liver disease – notably the metabolic syndrome – particularly because successfully treated patients are now discharged from the care of specialists.

Paul S. Martin, MD, is chief, division of hepatology, professor of medicine, University of Miami Health System, Fla. He has been a consultant and investigator for Gilead, BMS, and Merck.

FROM CLINICAL GASTROENTEROLOGY AND HEPATOLOGY

Key clinical point: In patients with chronic hepatitis B virus infection, steatosis was significantly associated with persistently elevated alanine aminotransferase (ALT) levels despite successful treatment with tenofovir disoproxil fumarate.

Major finding: At baseline and after 5 years of treatment, steatosis of grade 1 (5%) or more predicted persistent ALT elevation with odds ratios of 2.2 (P = .04) and 3.4 (P = .002), respectively.

Data source: Two phase III trials of tenofovir disoproxil fumarate in 471 patients with chronic hepatitis B virus infection.

Disclosures: Gilead Sciences sponsored the study. Dr. Jacobson disclosed consultancy, honoraria, and research ties to Gilead and several other pharmaceutical companies.

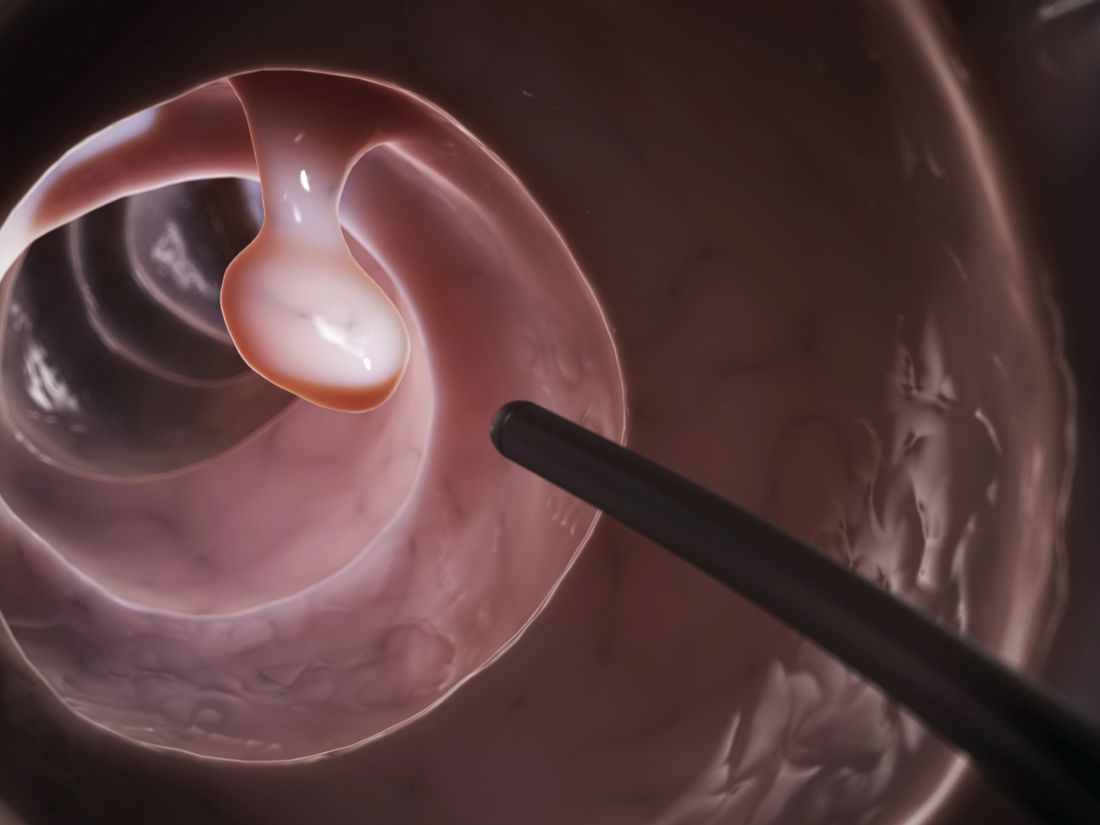

Improved adenoma detection rate found protective against interval cancers, death

An improved annual adenoma detection rate was associated with a significantly decreased risk of interval colorectal cancer (ICRC) and subsequent death in a national prospective cohort study published in the July issue of Gastroenterology (doi: 10.1053/j.gastro.2017.04.006).

Source: American Gastroenterological Association

This is the first study to show a significant inverse relationship between an improved annual adenoma detection rate (ADR) and ICRC or subsequent death, Michal F. Kaminski, MD, PhD, of the Institute of Oncology, Warsaw, wrote with his associates.

The rates of these outcomes were lowest when endoscopists achieved and maintained ADRs above 24.6%, which supports the currently recommended performance target of 25% for a mixed male-female population, they reported (Am J Gastroenterol. 2015;110:72-90).

This study included 294 endoscopists and 146,860 individuals who underwent screening colonoscopy as part of a national cancer prevention program in Poland between 2004 and 2008. Endoscopists received annual feedback based on quality benchmarks to spur improvements in colonoscopy performance, and all participated for at least 2 years. For each endoscopist, investigators categorized annual ADRs based on quintiles for the entire data set. “Improved ADR” was defined as keeping annual ADR within the highest quintile (above 24.6%) or as increasing annual ADR by at least one quintile, compared with baseline.
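The "improved ADR" rule above can be sketched as a comparison of quintiles. In this sketch only the top cutoff (24.6%) comes from the article; the three lower cutoffs are made-up placeholders, and comparing the baseline year with a single later year is a simplifying assumption, since the article does not spell out how multiple follow-up years were combined.

```python
from bisect import bisect_left

# Upper bounds (in %) of quintiles 1-4. Only 24.6 is taken from the article;
# the first three values are hypothetical placeholders.
QUINTILE_CUTOFFS = [11.0, 15.0, 19.0, 24.6]


def adr_quintile(adr_percent: float, cutoffs=QUINTILE_CUTOFFS) -> int:
    """Return the quintile (1-5); values strictly above the last cutoff fall in quintile 5."""
    return bisect_left(cutoffs, adr_percent) + 1


def improved_adr(baseline: float, later: float, cutoffs=QUINTILE_CUTOFFS) -> bool:
    """True if ADR stayed in the highest quintile or rose by at least one quintile."""
    q_base = adr_quintile(baseline, cutoffs)
    q_later = adr_quintile(later, cutoffs)
    stayed_in_top = q_base == 5 and q_later == 5
    moved_up = q_later > q_base
    return stayed_in_top or moved_up
```

With these placeholder cutoffs, an endoscopist moving from an ADR of 12% to 16% counts as improved, while one moving from 20% to 22% does not, because both values sit in the same quintile.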

Based on this definition, 219 endoscopists (75%) improved their ADR during a median of 5.8 years of follow-up (interquartile range, 5-7.2 years). In all, 168 interval CRCs were diagnosed, of which 44 cases led to death. After age, sex, and family history of colorectal cancer were controlled for, patients whose endoscopists improved their ADRs were significantly less likely to develop (adjusted hazard ratio, 0.6; 95% confidence interval, 0.5-0.9; P = .006) or to die of interval CRC (adjusted HR, 0.50; 95% CI, 0.3-0.95; P = .04) than were patients whose endoscopists did not improve their ADRs.

Maintaining ADR in the highest quintile (above 24.6%) throughout follow-up led to an even lower risk of interval CRC (HR, 0.3; 95% CI, 0.1-0.6; P = .003) and death (HR, 0.2; 95% CI, 0.1-0.6; P = .003), the researchers reported. In absolute numbers, that translated to a decrease from 25.3 interval CRCs per 100,000 person-years of follow-up to 7.1 cases when endoscopists eventually reached the highest ADR quintile or to 4.5 cases when they were in the highest quintile throughout follow-up. Rates of colonic perforation remained stable even though most endoscopists upped their ADRs.
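To put the absolute rates in proportion, the crude relative reduction can be worked out directly from the three figures quoted above. This is an illustrative calculation only: it uses the unadjusted rates per 100,000 person-years, so it will not match the adjusted hazard ratios, and the helper name `relative_reduction` is hypothetical.

```python
def relative_reduction(reference_rate: float, observed_rate: float) -> float:
    """Fractional drop of observed_rate relative to reference_rate."""
    return (reference_rate - observed_rate) / reference_rate


# Interval CRCs per 100,000 person-years, as reported in the article.
reference = 25.3    # rate the article describes the decrease as starting from
reached_top = 7.1   # endoscopists who eventually reached the highest quintile
always_top = 4.5    # endoscopists in the highest quintile throughout follow-up

print(f"Reached top quintile: {relative_reduction(reference, reached_top):.0%} lower")
print(f"Always in top quintile: {relative_reduction(reference, always_top):.0%} lower")
```

On these crude figures the rate is roughly 72% lower for endoscopists who reached the top quintile and roughly 82% lower for those who stayed in it throughout.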

Together, these findings “prove the causal relationship between endoscopists’ ADRs and the likelihood of being diagnosed with, or dying from, interval CRC,” the investigators concluded. The national cancer registry in Poland is thought to miss about 10% of cases, but the rate of missing cases was not thought to change over time, they noted. However, they also lacked data on colonoscope withdrawal times, and had no control group to definitively show that feedback based on benchmarking was responsible for improved ADRs.

Funders included the Foundation of Polish Science, the Innovative Economy Operational Programme, the Polish Foundation of Gastroenterology, the Polish Ministry of Health, and the Polish Ministry of Science and Higher Education. The investigators reported having no relevant conflicts of interest.

The U.S. Multi-Society Task Force on Colorectal Cancer proposed the adenoma detection rate (ADR) as a colonoscopy quality measure in 2002. The rationale for a new measure was emerging evidence of highly variable adenoma detection and cancer prevention among colonoscopists. Highly variable performance, consistently verified in subsequent studies, casts a pall of severe operator dependence over colonoscopy. In landmark studies from Kaminski et al. and Corley et al. in 2010 and 2014, respectively, it was shown that doctors with higher ADRs provide patients with much greater protection against interval colorectal cancer (CRC).

Now Kaminski and colleagues from Poland have delivered a second landmark study, demonstrating for the first time that improving ADR prevents CRCs. We now have strong evidence that ADR predicts the level of cancer prevention, that ADR improvement is achievable, and that improving ADR further prevents CRCs and CRC deaths. Thanks to this study, ADR has come full circle. Measurement of and improvement in detection is now a fully validated concept that is essential to modern colonoscopy. In 2017, ADR measurement is mandatory for all practicing colonoscopists who are serious about CRC prevention. The tools to improve ADR that are widely accepted include ADR measurement and reporting, split or same-day preparations, lesion recognition and optimal technique, high-definition imaging, double examination (particularly for the right colon), patient rotation during withdrawal, chromoendoscopy, mucosal exposure devices (caps, cuffs, balloons, etc.), and water exchange. Tools for ADR improvement that are emerging or under study include brighter forms of electronic chromoendoscopy and videorecording.

Douglas K. Rex, MD, is professor of medicine, division of gastroenterology/hepatology, at Indiana University, Indianapolis.* He has no relevant conflicts of interest.

Correction, 6/20/17: An earlier version of this article misstated Dr. Rex's affiliation.

FROM GASTROENTEROLOGY

Key clinical point: An improved adenoma detection rate was associated with a significantly reduced risk of interval colorectal cancer and subsequent death.

Major finding: Adjusted hazard ratios were 0.6 for developing ICRC (95% CI, 0.5-0.9; P = .006) and 0.50 for dying of ICRC (95% CI, 0.3-0.95; P = .04).

Data source: A prospective registry study of 294 endoscopists and 146,860 individuals who underwent screening colonoscopy as part of a national screening program between 2004 and 2008.

Disclosures: Funders included the Foundation of Polish Science, the Innovative Economy Operational Programme, the Polish Foundation of Gastroenterology, the Polish Ministry of Health, and the Polish Ministry of Science and Higher Education. The investigators reported having no relevant conflicts of interest.

VIDEO: Start probiotics within 2 days of antibiotics to prevent CDI

Starting probiotics within 2 days of the first antibiotic dose could cut the risk of Clostridium difficile infection among hospitalized adults by more than 50%, according to the results of a systematic review and metaregression analysis.

The protective effect waned when patients delayed starting probiotics, reported Nicole T. Shen, MD, of Cornell University, New York, and her associates. The study appears in Gastroenterology (doi: 10.1053/j.gastro.2017.02.003). “Given the magnitude of benefit and the low cost of probiotics, the decision is likely to be highly cost effective,” they added.

Systematic reviews support the use of probiotics for preventing Clostridium difficile infection (CDI), but guidelines do not reflect these findings. To help guide clinical practice, the reviewers searched MEDLINE, EMBASE, the International Journal of Probiotics and Prebiotics, and the Cochrane Library databases for randomized controlled trials of probiotics and CDI among hospitalized adults taking antibiotics. This search yielded 19 published studies of 6,261 patients. Two reviewers separately extracted data from these studies and examined quality of evidence and risk of bias.

SOURCE: AMERICAN GASTROENTEROLOGICAL ASSOCIATION

A total of 54 patients in the probiotic cohort (1.6%) developed CDI, compared with 115 controls (3.9%), a statistically significant difference (P less than .001). In regression analysis, the probiotic group was about 58% less likely to develop CDI than controls (hazard ratio, 0.42; 95% confidence interval, 0.30-0.57; P less than .001). Importantly, probiotics were significantly effective against CDI only when started within 2 days of antibiotic initiation (relative risk, 0.32; 95% CI, 0.22-0.48), not when started within 3-7 days (RR, 0.70; 95% CI, 0.40-1.23). The difference between these estimated risk ratios was statistically significant (P = .02).
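The pooled CDI percentages above also allow a rough absolute comparison. The sketch below is a back-of-the-envelope calculation, not the reviewers' method: it treats the 19 trials as one pooled sample, ignores between-trial differences, and uses the rounded percentages from the article. The variable names are illustrative.

```python
import math

# Pooled CDI incidence as reported in the article (rounded percentages).
risk_probiotic = 0.016  # 1.6% of the probiotic cohort
risk_control = 0.039    # 3.9% of controls

# Absolute risk reduction: difference in incidence between the two groups.
arr = risk_control - risk_probiotic

# Number needed to treat: patients given probiotics per CDI case avoided,
# rounded up to a whole patient.
nnt = math.ceil(1 / arr)

# Crude risk ratio from the pooled percentages, for comparison with the
# regression estimate of 0.42 quoted above.
crude_rr = risk_probiotic / risk_control

print(f"ARR: {arr:.1%}, NNT: {nnt}, crude risk ratio: {crude_rr:.2f}")
```

On these figures, the absolute risk reduction is about 2.3 percentage points, or roughly 44 hospitalized adults treated per case of CDI avoided, and the crude ratio of about 0.41 sits close to the regression estimate.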

In 18 of the 19 studies, patients received probiotics within 3 days of starting antibiotics, while patients in the remaining study could start probiotics any time within 7 days of antibiotic initiation. “Not only was [this] study unusual with respect to probiotic timing, it was also much larger than all other studies, and its results were statistically insignificant,” the reviewers wrote. Metaregression analyses of all studies and of all but the outlier study linked delaying probiotics with a decrease in efficacy against CDI, with P values of .04 and .09, respectively. Those findings “suggest that the decrement in efficacy with delay in starting probiotics is not sensitive to inclusion of a single large ‘outlier’ study,” the reviewers emphasized. “In fact, inclusion only dampens the magnitude of the decrement in efficacy, although it is still clinically important and statistically significant.”

The trials included 12 probiotic formulas containing Lactobacillus, Saccharomyces, Bifidobacterium, and Streptococcus, either alone or in combination. Probiotics were not associated with adverse effects in the trials. Quality of evidence was generally high, but seven trials had missing data on the primary outcome. Furthermore, two studies lacked a placebo group, and lead authors of two studies disclosed ties to the probiotic manufacturers that provided funding.

One reviewer received fellowship support from the Louis and Rachel Rudin Foundation. None had conflicts of interest.

FROM GASTROENTEROLOGY

Key clinical point: Starting probiotics within 2 days of antibiotics was associated with a significantly reduced risk of Clostridium difficile infection among hospitalized patients.

Major finding: Probiotics were significantly effective against CDI only when started within 2 days of antibiotic initiation (relative risk, 0.32; 95% CI, 0.22-0.48), not when started within 3-7 days (RR, 0.70; 95% CI, 0.40-1.23).

Data source: A systematic review and metaregression analysis of 19 studies of 6,261 patients.

Disclosures: One reviewer received fellowship support from the Louis and Rachel Rudin Foundation. None had conflicts of interest.

Distance from transplant center predicted mortality in chronic liver disease

Living more than 150 miles from a liver transplant center was associated with a higher risk of mortality among patients with chronic liver failure, regardless of etiology, transplantation status, or whether patients had decompensated cirrhosis or hepatocellular carcinoma, according to a first-in-kind, population-based study reported in the June issue of Clinical Gastroenterology and Hepatology (doi: 10.1016/j.cgh.2017.02.023).

The findings underscore the need for accessible, specialized liver care irrespective of whether patients with chronic liver failure (CLF) are destined for transplantation, David S. Goldberg, MD, of the University of Pennsylvania, Philadelphia, wrote with his associates. The associations “do not provide cause and effect,” but underscore the need to consider “the broader impact of transplant-related policies that could decrease transplant volumes and threaten closures of smaller liver transplant centers that serve geographically isolated populations in the Southeast and Midwest,” they added.

A total of 879 (5.2%) patients lived more than 150 miles from the nearest liver transplant center, the analysis showed. Even after controlling for etiology of liver disease, this subgroup was at significantly greater risk of mortality (hazard ratio, 1.2; 95% confidence interval, 1.1-1.3; P less than .001) and of dying without undergoing transplantation (HR, 1.2; 95% CI, 1.1-1.3; P = .003) than were patients who were less geographically isolated. Distance from a transplant center also predicted overall and transplant-free mortality when modeled as a continuous variable, with hazard ratios of 1.02 (P = .02) and 1.03 (P = .04), respectively. “Although patients living more than 150 miles from a liver transplant center had fewer outpatient gastroenterologist visits, this covariate did not affect the final models,” the investigators reported. Rural locality did not predict mortality after controlling for distance from a transplant center, and neither did living in a low-income zip code, they added.

Data from the Centers for Disease Control and Prevention indicate that age-adjusted rates of death from liver disease are lowest in New York, where the entire population lives within 150 miles of a liver transplant center, the researchers noted. “By contrast, New Mexico and Wyoming have the highest age-adjusted death rates, and more than 95% of those states’ populations live more than 150 miles from a [transplant] center,” they emphasized. “The management of most patients with CLF is not centered on transplantation, but rather the spectrum of care for decompensated cirrhosis and hepatocellular carcinoma. Thus, maintaining access to specialized liver care is important for patients with CLF.”

Dr. Goldberg received support from the National Institutes of Health. The investigators had no conflicts.

Living more than 150 miles from a liver transplant center was associated with a higher risk of mortality among patients with chronic liver failure, regardless of etiology, transplantation status, or whether patients had decompensated cirrhosis or hepatocellular carcinoma, according to a first-in-kind, population-based study reported in the June issue of Clinical Gastroenterology and Hepatology (doi: 10.1016/j.cgh.2017.02.023).

The findings underscore the need for accessible, specialized liver care irrespective of whether patients with chronic liver failure (CLF) are destined for transplantation, David S. Goldberg, MD, of the University of Pennsylvania, Philadelphia, wrote with his associates. The associations “do not provide cause and effect,” but underscore the need to consider “the broader impact of transplant-related policies that could decrease transplant volumes and threaten closures of smaller liver transplant centers that serve geographically isolated populations in the Southeast and Midwest,” they added.

A total of 879 (5.2%) patients lived more than 150 miles from the nearest liver transplant center, the analysis showed. Even after controlling for etiology of liver disease, this subgroup was at significantly greater risk of mortality (hazard ratio, 1.2; 95% confidence interval, 1.1-1.3; P less than .001) and of dying without undergoing transplantation (HR, 1.2; 95% CI, 1.1-1.3; P = .003) than were patients who were less geographically isolated. Distance from a transplant center also predicted overall and transplant-free mortality when modeled as a continuous variable, with hazard ratios of 1.02 (P = .02) and 1.03 (P = .04), respectively. “Although patients living more than 150 miles from a liver transplant center had fewer outpatient gastroenterologist visits, this covariate did not affect the final models,” the investigators reported. Rural locality did not predict mortality after controlling for distance from a transplant center, and neither did living in a low-income zip code, they added.

Data from the Centers for Disease Control and Prevention indicate that age-adjusted rates of death from liver disease are lowest in New York, where the entire population lives within 150 miles of a liver transplant center, the researchers noted. “By contrast, New Mexico and Wyoming have the highest age-adjusted death rates, and more than 95% of those states’ populations live more than 150 miles from a [transplant] center,” they emphasized. “The management of most patients with CLF is not centered on transplantation, but rather the spectrum of care for decompensated cirrhosis and hepatocellular carcinoma. Thus, maintaining access to specialized liver care is important for patients with CLF.”

Dr. Goldberg received support from the National Institutes of Health. The investigators had no conflicts.

Living more than 150 miles from a liver transplant center was associated with a higher risk of mortality among patients with chronic liver failure, regardless of etiology, transplantation status, or whether patients had decompensated cirrhosis or hepatocellular carcinoma, according to a first-in-kind, population-based study reported in the June issue of Clinical Gastroenterology and Hepatology (doi: 10.1016/j.cgh.2017.02.023).

FROM CLINICAL GASTROENTEROLOGY AND HEPATOLOGY

Key clinical point: Geographic isolation from a liver transplant center independently predicted mortality among patients with chronic liver failure.

Major finding: In adjusted analyses, patients who lived more than 150 miles from a liver transplant center were at significantly greater risk of mortality (HR, 1.2; 95% CI, 1.1-1.3; P less than .001) and of dying without undergoing transplantation (HR, 1.2; 95% CI, 1.1-1.3; P = .003) than were patients who were less geographically isolated.

Data source: A retrospective cohort study of 16,824 patients with chronic liver failure who were included in the Healthcare Integrated Research Database between 2006 and 2014.

Disclosures: Dr. Goldberg received support from the National Institutes of Health. The investigators had no conflicts.

Persistently nondysplastic Barrett’s esophagus did not protect against progression

Patients with at least five biopsies showing nondysplastic Barrett’s esophagus were statistically as likely to progress to high-grade dysplasia or esophageal adenocarcinoma as patients with a single such biopsy, according to a multicenter prospective registry study reported in the June issue of Clinical Gastroenterology and Hepatology (doi: 10.1016/j.cgh.2017.02.019).

The findings, which contradict those from another recent multicenter cohort study (Gastroenterology. 2013;145[3]:548-53), highlight the need for more studies before lengthening the time between surveillance biopsies in patients with nondysplastic Barrett’s esophagus, Rajesh Krishnamoorthi, MD, of Mayo Clinic in Rochester, Minn., wrote with his associates.

Barrett’s esophagus is the strongest predictor of esophageal adenocarcinoma, but studies have reported mixed results as to whether the risk of this cancer increases over time or wanes with consecutive biopsies that indicate nondysplasia, the researchers noted. Therefore, they studied the prospective, multicenter Mayo Clinic Esophageal Adenocarcinoma and Barrett’s Esophagus registry, excluding patients who progressed to adenocarcinoma within 12 months, had missing data, or had no follow-up biopsies. This approach left 480 subjects for analysis. Patients averaged 63 years of age, 78% were male, the mean length of Barrett’s esophagus was 5.7 cm, and the average time between biopsies was 1.8 years, with a standard deviation of 1.3 years.

A total of 16 patients progressed to high-grade dysplasia or esophageal adenocarcinoma over 1,832 patient-years of follow-up, for an overall annual risk of progression of 0.87%. Two patients progressed to esophageal adenocarcinoma (annual risk, 0.11%; 95% confidence interval, 0.03% to 0.44%), while 14 patients progressed to high-grade dysplasia (annual risk, 0.76%; 95% CI, 0.45% to 1.29%). Eight patients progressed to one of these two outcomes after a single nondysplastic biopsy, three progressed after two such biopsies, three progressed after three such biopsies, none progressed after four such biopsies, and two progressed after five such biopsies. Statistically, patients with at least five consecutive nondysplastic biopsies were no less likely to progress than were patients with only one nondysplastic biopsy (hazard ratio, 0.48; 95% CI, 0.07 to 1.92; P = .32). Hazard ratios for the other groups ranged between 0.0 and 0.85, with no significant difference in estimated risk between groups (P = .68) after controlling for age, sex, and length of Barrett’s esophagus.
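The crude annual risks quoted above follow directly from the event counts and the patient-years of follow-up. The short Python sketch below reproduces that arithmetic as an illustration only: the function and variable names are hypothetical, the counts are copied from the paragraph above, and the confidence intervals and hazard ratios come from the investigators' models and are not recomputed here.

```python
# Crude annual risk = events / patient-years of follow-up, as a percentage.
# All counts come from the article text; names are illustrative, not the authors'.

def annual_risk_percent(events: int, patient_years: float) -> float:
    """Return the crude annual risk as a percentage."""
    if patient_years <= 0:
        raise ValueError("patient_years must be positive")
    return 100.0 * events / patient_years

PATIENT_YEARS = 1832

# Number of nondysplastic biopsies before progression -> number of patients.
progressors_by_biopsy_count = {1: 8, 2: 3, 3: 3, 4: 0, 5: 2}

total_progressors = sum(progressors_by_biopsy_count.values())
overall = annual_risk_percent(total_progressors, PATIENT_YEARS)
eac = annual_risk_percent(2, PATIENT_YEARS)   # esophageal adenocarcinoma
hgd = annual_risk_percent(14, PATIENT_YEARS)  # high-grade dysplasia

print(f"progressors: {total_progressors}")
print(f"overall: {overall:.2f}%  EAC: {eac:.2f}%  HGD: {hgd:.2f}%")
```

The per-group counts sum to the 16 progressors reported, and the rounded percentages match the 0.87%, 0.11%, and 0.76% given in the text.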

The previous multicenter cohort study linked persistently nondysplastic Barrett’s esophagus with a lower rate of progression to esophageal adenocarcinoma, and, based on those findings, the authors suggested lengthening intervals between biopsy surveillance or even stopping surveillance, Dr. Krishnamoorthi and his associates noted. However, that study did not have mutually exclusive groups. “Additional data are required before increasing the interval between surveillance endoscopies based on persistence of nondysplastic Barrett’s esophagus,” they concluded.

Misclassification bias was unlikely because patients had long-segment Barrett’s esophagus and specialized gastrointestinal pathologists interpreted all histology specimens, the researchers noted. “The small number of progressors is a potential limitation, reducing power to assess associations,” they added.

The investigators did not report funding sources. They reported having no conflicts of interest.

Current practice guidelines recommend endoscopic surveillance in Barrett’s esophagus (BE) patients to detect esophageal adenocarcinoma (EAC) at an early and potentially curable stage.

As currently practiced, endoscopic surveillance of BE has numerous limitations, which provide the impetus for improving risk stratification and, ultimately, the effectiveness of current surveillance strategies. Persistence of nondysplastic BE (NDBE) has previously been shown to be an indicator of lower risk of progression to high-grade dysplasia (HGD)/EAC. However, outcomes studies on this topic have reported conflicting results.

Where do we stand with regard to persistence of NDBE and its impact on surveillance intervals? Future large cohort studies are required that address all potential confounders and include a large number of patients with progression to HGD/EAC (a challenge given the rarity of this outcome). At the present time, based on the available data, surveillance intervals cannot be lengthened in patients with persistent NDBE. Future studies also need to focus on the development and validation of prediction models that incorporate clinical, endoscopic, and histologic factors in risk stratification. Until then, meticulous examination techniques, cognitive knowledge and training, use of standardized grading systems, and use of high-definition white light endoscopy are critical in improving effectiveness of surveillance programs in BE patients.

Sachin Wani, MD, is associate professor of medicine and medical codirector of the Esophageal and Gastric Center of Excellence, division of gastroenterology and hepatology, University of Colorado at Denver, Aurora. He is supported by the University of Colorado Department of Medicine Outstanding Early Scholars Program and is a consultant for Medtronic and Boston Scientific.
