Kidney transplant: New opportunities and challenges

Much has improved in renal transplantation over the past 20 years. The focus has shifted to using stronger immunotherapy rather than trying to minimize it. There has been increasing recognition of infection and ways to prevent and treat it. Induction therapy now has greater emphasis so that maintenance therapy can be eased, with the aim of reducing long-term toxicity. Perhaps the biggest change is the practice of screening for donor-specific antibodies at the time of transplant so that predictable problems can be prevented or better handled if they occur. Such advances have helped patients directly and by extending the life of their transplanted organs.

LONGER SURVIVAL

As early as the 1990s, it was recognized that kidney transplant offers a survival advantage for patients with end-stage renal disease over maintenance on dialysis.1 Although the risk of death is higher immediately after transplant, within a few months it becomes much lower than for patients on dialysis. Survival varies according to the health of the patient and the quality of the transplanted organ.

In general, patients who obtain the greatest benefit from transplants in terms of years of life gained are those with diabetes, especially those who are younger. Those ages 20 to 39 live about 8 years on dialysis vs 25 years after transplant.

CONTRAINDICATIONS TO TRANSPLANT

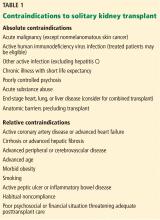

There are multiple contraindications to a solitary kidney transplant (Table 1), including smoking. Most transplant centers require that smokers quit before transplant. Long-standing smokers almost double their risk of a cardiac event after transplant and double their rate of malignancy. Active smoking at the time of transplant is associated with twice the risk of death by 10 years after transplant compared with that of nonsmokers.2 Cotinine testing can detect whether a patient is an active smoker.

WAITING-LIST CONSIDERATIONS

Organs are scarce

The number of patients on the kidney waiting list has increased rapidly in the last few decades, while the number of transplants performed each year has remained about the same. In 2016, about 100,000 patients were on the list, but only about 19,000 transplants were performed.3 Wait times, especially for deceased-donor organs, have increased to about 6 years, varying by blood type and geographic region.

Waiting-list placement

Placement on the waiting list for a deceased-donor kidney transplant occurs when a patient has an estimated glomerular filtration rate (GFR) of 20 mL/min/1.73 m² or less, although referral to the list can be made earlier. Early listing remains advantageous because time accrued on the list before dialysis starts counts toward the total wait. “Preemptive transplant” means the patient had no dialysis before transplant; this applies to about 10% of transplant recipients. These patients tend to fare the best and are usually recipients of a living-donor organ.

Most patients do not receive a transplant until the GFR is less than 15 mL/min/1.73 m².

Since 2014, wait time has been measured from the beginning of dialysis rather than the date of waiting-list placement in patients who are listed after starting dialysis therapy. This approach is fairer but sometimes introduces problems. A patient who did not previously know about the list may suddenly jump to the head of the line after 10 years of dialysis, by which time comorbidities associated with long-term dialysis make the patient less likely to benefit from a transplant than people lower on the list. Time on dialysis, or “dialysis vintage,” predicts patient and kidney survival after transplant, with reduced survival associated with increasing time on dialysis.4
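The wait-time rule described above can be sketched in a few lines of Python. This is a minimal illustration rather than the allocation system's actual logic: the function names are hypothetical, and it assumes that credit simply starts at the earlier of the listing date and the dialysis start date.

```python
from datetime import date
from typing import Optional


def wait_time_start(listing_date: Optional[date],
                    dialysis_start: Optional[date]) -> date:
    """Return the date from which waiting time is counted.

    Assumption (for illustration): credit begins at whichever comes
    first, waiting-list placement or the start of dialysis.
    """
    candidates = [d for d in (listing_date, dialysis_start) if d is not None]
    if not candidates:
        raise ValueError("need a listing date or a dialysis start date")
    return min(candidates)


def wait_years(listing_date: Optional[date],
               dialysis_start: Optional[date],
               today: date) -> float:
    """Approximate accrued waiting time in years."""
    start = wait_time_start(listing_date, dialysis_start)
    return (today - start).days / 365.25


# A patient listed only after 10 years of dialysis is credited from
# the dialysis start date, not from the listing date.
late_listing = wait_time_start(date(2016, 1, 1), date(2006, 1, 1))

# A patient listed before starting dialysis keeps the pre-dialysis time.
early_listing = wait_time_start(date(2015, 1, 1), date(2017, 1, 1))
```

The first case is the "jump to the head of the line" scenario from the text: the patient arrives on the list with roughly a decade of credit already accrued.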

Shorter wait for a suboptimal kidney

The aging population has increased the number of older patients being listed for transplant, presenting multiple challenges. Patients age 65 or older have a 50% chance of dying before they receive a transplant during a 5-year wait.

A patient may shorten the wait by joining the list for a suboptimal organ. All deceased-donor organs are given a Kidney Donor Profile Index score, which predicts the longevity of an organ after transplant. The score is determined by donor age, kidney function based on the serum creatinine at the time of death, and other donor factors.

A kidney with a score higher than 85% is likely to function longer than only 15% of available kidneys. Patients who receive a kidney with that score have a longer period of risk of death soon after transplant and a slightly higher risk of death in the long term than patients who receive a healthier kidney, although on average they still do better than patients on dialysis.5
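Because a higher score means shorter expected graft longevity, the score is easy to misread. The sketch below shows only the interpretation given above; it does not compute the index from donor factors, and the function names and the constant are illustrative assumptions.

```python
# Threshold above which the text treats a kidney as suboptimal (percent).
SUBOPTIMAL_KDPI_THRESHOLD = 85


def percent_of_kidneys_outlasted(kdpi_percent: float) -> float:
    """Share of available kidneys this organ is expected to outlast.

    A score of 85% corresponds to outlasting about 15% of kidneys.
    """
    if not 0 <= kdpi_percent <= 100:
        raise ValueError("KDPI must be between 0 and 100")
    return 100 - kdpi_percent


def is_suboptimal(kdpi_percent: float) -> bool:
    """True when the score is higher than the 85% threshold."""
    return kdpi_percent > SUBOPTIMAL_KDPI_THRESHOLD
```

For example, `percent_of_kidneys_outlasted(85)` returns 15, matching the figure quoted in the text.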

Older patients should be encouraged to sign up for both the regular waiting list and the suboptimal kidney waiting list to reduce the risk of dying before they get a kidney.

LIVING-DONOR ORGAN TRANSPLANT

Many advantages

Living-donor organ transplant is associated with a better survival rate than deceased-donor organ transplant, and the advantage becomes greater over time. At 1 year, patient survival is more than 90% in both groups, but by 5 years about 80% of patients with a living-donor organ are still alive vs only about 65% of patients with a deceased-donor organ.

The waiting time for a living-donor transplant may be only weeks to months, rather than years. Because increasing time on dialysis predicts worse patient and graft survival after transplant, the shorter wait time is a big advantage. In addition, because the donor and recipient are typically in adjacent operating rooms, the organ sustains less ischemic damage. In general, the kidney quality is better from healthy donors, resulting in superior function early on and longer graft survival by an average of 4 years. If the living donor is related to the recipient, human leukocyte antigen matching also tends to be better and predicts better outcomes.

Special challenges

Opting for a living-donor organ also entails special challenges. In addition to the ethical issues surrounding living-donor organ donation, an appropriate donor must be found. Donors must be highly motivated and pass physical, laboratory, and psychological evaluations.

For older patients, if the donor is a spouse or close friend, he or she is also likely to be older, making the organ less viable than one from a younger person. Even an adult child may not be an ideal donor if there is a family propensity to kidney disease, such as diabetic nephropathy. No test is available to determine the risk for future diabetes, but it is known to run in families.

POTENT IMMUNOSUPPRESSION

Induction therapy

Induction therapy with antithymocyte globulin or basiliximab provides intense immunosuppression to prevent acute rejection during the early posttransplant period.

Antithymocyte globulin is a potent agent that contains antibodies directed at T cells, B cells, neutrophils, platelets, adhesion molecules, and complement. It binds T cells and removes them from circulation by opsonization in splenic and lymphoid tissue. The immunosuppressive effect is sustained for at least 2 to 3 months after a series of injections (dosage 1.5 mg/kg/day, usually for 4 to 10 doses). Antithymocyte globulin is also used to treat acute rejection, especially high-grade rejection for which steroid therapy is likely to be insufficient.

Basiliximab consists of antibodies to the interleukin 2 (IL-2) receptor of T cells. Binding to T cells prevents their activation rather than removing them from circulation. The drug prevents rejection, with 30% relative reduction in early studies compared with placebo. However, it is ineffective in reversing established rejection. Dosage is 20 mg at day 0 and day 4, which provides receptor saturation for 30 to 45 days.

Basiliximab is also sometimes used off-label for patients who need to discontinue a calcineurin inhibitor (ie, tacrolimus or cyclosporine). In such cases, normal therapy is put on hold while basiliximab is given for 1 or 2 doses. Case series have been reported for this use, particularly for patients with a heart or liver transplant who develop acute kidney injury while hospitalized.6,7

Antithymocyte globulin is more effective but also riskier. Brennan et al8 randomized 278 transplant recipients to either antithymocyte globulin or basiliximab. Patients in the antithymocyte globulin group had a 16% rejection rate vs 26% in the basiliximab group.
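The two rejection rates can be turned into the usual effect-size measures with simple arithmetic. The sketch below uses only the percentages quoted above; the derived figures are back-of-the-envelope calculations, not results reported by the trial, and the function name is hypothetical.

```python
def compare_rejection_rates(comparator_pct: float, treatment_pct: float) -> dict:
    """Derive simple effect measures from two event rates given in percent."""
    arr_points = comparator_pct - treatment_pct  # absolute risk reduction
    if arr_points <= 0:
        raise ValueError("treatment rate must be lower than comparator rate")
    return {
        "absolute_reduction_points": arr_points,
        "relative_reduction": arr_points / comparator_pct,
        "number_needed_to_treat": 100 / arr_points,
    }


# Basiliximab (26%) as comparator, antithymocyte globulin (16%) as treatment.
effect = compare_rejection_rates(comparator_pct=26, treatment_pct=16)
```

With these inputs the absolute reduction is 10 percentage points, which corresponds to treating about 10 patients with antithymocyte globulin instead of basiliximab to prevent one rejection episode.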

Antithymocyte globulin therapy is associated with multiple adverse effects, including fever and chills, pulmonary edema, and long-standing immunosuppressive effects such as increased risk of lymphoma and cytomegalovirus (CMV) infection. Basiliximab side-effect profiles are similar to those of placebo.

Maintenance therapy

The calcineurin inhibitors cyclosporine and tacrolimus remain the standard of care in kidney transplant despite multiple drug interactions and side effects that include renal toxicity and fibrosis. Cyclosporine and tacrolimus both bind intracellular immunophilins and thereby prevent transcription of IL-2 and production of T cells. The drugs work similarly but have different binding sites. Tacrolimus has largely replaced cyclosporine because its more reliable dosing and higher potency are associated with lower rejection rates.

Tacrolimus is typically given twice daily (1–6 mg/dose). Twelve-hour trough levels are followed (target: 8–12 ng/mL early on, then 5–8 ng/mL after 3 months posttransplant). Side effects include hypertension and hypercholesterolemia, but less so than with cyclosporine. On the other hand, hyperglycemia tends to be worse with tacrolimus than with cyclosporine, and combining tacrolimus with steroids frequently leads to diabetes. Tacrolimus can also cause acute and chronic renal failure, especially at high drug levels, as well as neurotoxicity, tremors, and hair loss.
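The trough targets quoted above amount to a time-dependent range check. The following sketch encodes only those two ranges; treating 3 months as a hard cutoff is an assumption made for illustration, and the function names are hypothetical.

```python
from typing import Tuple


def tacrolimus_trough_target(months_posttransplant: float) -> Tuple[float, float]:
    """Return the (low, high) 12-hour trough target in ng/mL.

    Assumption: the early range applies through month 3 and the
    lower range applies afterward.
    """
    if months_posttransplant < 0:
        raise ValueError("months_posttransplant must be non-negative")
    if months_posttransplant <= 3:
        return (8.0, 12.0)
    return (5.0, 8.0)


def classify_trough(level_ng_ml: float, months_posttransplant: float) -> str:
    """Label a measured trough as 'below', 'within', or 'above' target."""
    low, high = tacrolimus_trough_target(months_posttransplant)
    if level_ng_ml < low:
        return "below"
    if level_ng_ml > high:
        return "above"
    return "within"
```

A trough of 10 ng/mL is therefore within target at 1 month but above target at 6 months, which is why the same level can prompt different responses over time.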

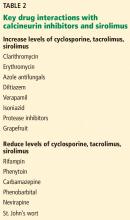

Cyclosporine, tacrolimus, and sirolimus (not a calcineurin inhibitor) are metabolized through the same cytochrome P450 pathway (CYP3A4), so they have common drug interactions (Table 2).

Mycophenolate mofetil is typically used as an adjunct therapy (500–1,000 mg twice daily). It is also used for other kidney diseases before transplant, including lupus nephritis. Transplanted kidney rejection rates with mycophenolate mofetil with steroids are about 40%, so the drug is not potent enough to be used without a calcineurin inhibitor.

Side effects include gastrointestinal toxicity in up to 20% of patients, and leukopenia, which is associated with viral infections.

CORONARY ARTERY DISEASE IS COMMON WITH DIALYSIS

Coronary artery disease is highly associated with end-stage kidney disease and occurs in as many as 85% of older patients with diabetes on dialysis. Although patients with end-stage kidney disease tend to have more numerous and severe atherosclerotic lesions compared with the general population, justifying aggressive management, cardiac care tends to be conservative in patients on dialysis.9

Death from acute myocardial infarction occurs in about 20% to 30% of patients on dialysis vs about 2% of patients with normal renal function. Five years after myocardial infarction, survival is only about 30% in patients on dialysis.9

There are many explanations for excess coronary artery disease in patients on dialysis. In addition to the traditional cardiovascular risk factors of diabetes, hypertension, and preexisting coronary artery disease, patients are in a proinflammatory uremic state and have high levels of phosphorus and fibroblast growth factor 23 that contribute to vascular calcification. Almost all patients have high homocysteine levels and hemodynamic instability, particularly if they are on hemodialysis.

Pretransplant evaluation for heart disease

Patients on the kidney transplant waiting list are screened aggressively for heart disease. A history of myocardial infarction usually results in removal from the list. All patients have an initial electrocardiogram and echocardiogram. Thallium or echocardiographic stress testing is used for patients who are age 50 and older, have diabetes, or have had dialysis for many years. Patients with evidence of ischemia undergo catheterization.

Patients are also screened with computed tomography before transplant. Because the kidney is typically anastomosed to the iliac artery and vein, heavy calcification of the iliac artery can make the procedure too difficult to perform.

Reduced long-term risk of myocardial infarction after transplant

Kasiske et al10 analyzed data from more than 50,000 patients from the US Renal Data System and found that, for about the first year after transplant, patients who underwent kidney transplant were more likely to have a myocardial infarction than those on dialysis. After that, they fared better than patients who remained on dialysis. Those with a living-donor transplant were less likely at all times to have a myocardial infarction than those with a deceased-donor transplant. By 3 years after transplant, the relative risk of having a myocardial infarction was 0.89 for deceased-donor organ recipients and 0.69 for living-donor recipients compared with patients on the waiting list.10

INFECTIOUS COMPLICATIONS IN KIDNEY RECIPIENTS

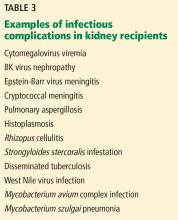

Kidney recipients are prone to many common and uncommon infections (Table 3). All potential recipients are tested pretransplant for hepatitis B, hepatitis C, human immunodeficiency virus, syphilis, and tuberculosis. A positive result does not necessarily rule out transplant.

The following viral serology tests are also done before transplant:

- Epstein-Barr virus (antibodies are positive in about 90% of adults)

- CMV (about 70% of adults are seropositive)

- Varicella zoster (seronegative patients should be given live-attenuated varicella vaccine).

Risk of transmission of these viruses relates to the serostatus of the donor and recipient before transplant. If a donor is positive for viral antibodies but the recipient is not (a so-called “mismatch”), risk is higher after transplant.

Hepatitis C

Patients with hepatitis C fare better if they get a transplant than if they remain on dialysis, although their posttransplant course is worse than that of transplant patients who do not have hepatitis. Some patients develop accelerated liver disease after kidney transplant. Hepatitis C-related kidney disease (membranoproliferative glomerulonephritis) also occurs, as do comorbidities such as diabetes.

Careful evaluation is warranted before transplant, including liver imaging, alpha-fetoprotein testing, and liver biopsy to evaluate for hepatocellular carcinoma. A patient with advanced fibrosis or cirrhosis may not be a candidate for kidney transplant alone but could possibly receive a combined kidney and liver transplant.

There is a need to determine the best time to treat hepatitis C infection. Patients with advanced liver disease or hepatitis C-related kidney disease would likely benefit from early treatment. However, delaying treatment could shorten the wait time for a deceased-donor organ positive for hepatitis C. Transplant candidates with active hepatitis C are uniquely considered to accept hepatitis C-positive kidneys, which are often discarded, and may only wait weeks for such a transplant. The shortened kidney survival associated with a hepatitis C-positive kidney may no longer be true with the new antiviral hepatitis C therapy, which has been shown to be effective post-transplant.

Hepatitis B

No cure is available for hepatitis B infection, but it can be well controlled with antiviral therapy. Patients with hepatitis B infection may be candidates for transplant, but they should be stable on antiviral therapy (lamivudine, entecavir, or tenofovir) to eliminate the viral load before transplant, and therapy should be continued afterward. Liver imaging, alpha-fetoprotein levels, and biopsy are recommended for evaluation. All hepatitis B-negative patients should be vaccinated before transplant.

Organs from living or deceased donors that test positive for hepatitis B core antibody, indicating prior exposure, can be considered for transplant in a patient who tests positive for hepatitis B surface antibody, indicating successful vaccination or prior exposure in the recipient. But donors must have negative surface antigen and polymerase chain reaction (PCR) tests that indicate no active hepatitis B infection.

Cytomegalovirus

CMV typically does not appear until prophylactic therapy is stopped. Classic symptoms are fever, leukopenia, and diarrhea. Infection can involve any organ, and patients may present with hepatitis, pancreatitis or, less commonly, pneumonitis.

Patients who are negative for CMV before transplant and receive a donor-positive organ are at the highest risk. Patients who are CMV IgG-positive are considered to be at intermediate risk, regardless of the donor status. Patients who are negative for CMV and receive a donor-negative organ are at the lowest risk and do not need prophylaxis with valganciclovir.
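The serostatus-based risk categories above form a small decision table, sketched here in Python. The function names are hypothetical, and the second helper encodes only what the text states directly: that the lowest-risk pairing does not need valganciclovir prophylaxis.

```python
def cmv_risk(donor_positive: bool, recipient_positive: bool) -> str:
    """Classify posttransplant CMV risk from pretransplant serostatus."""
    if recipient_positive:
        # Seropositive recipients are intermediate risk regardless of donor.
        return "intermediate"
    # Seronegative recipient: risk depends on the donor organ.
    return "high" if donor_positive else "low"


def prophylaxis_not_needed(donor_positive: bool, recipient_positive: bool) -> bool:
    """True only for the donor-negative, recipient-negative pairing."""
    return cmv_risk(donor_positive, recipient_positive) == "low"
```

The "mismatch" case, `cmv_risk(donor_positive=True, recipient_positive=False)`, is the only pairing that returns "high".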

CMV infection is diagnosed by PCR testing of the blood or immunostaining in tissue biopsy. Occasionally, blood testing is negative in the face of tissue-based disease.

BK virus

BK virus is a polyomavirus commonly associated with kidney transplant. Viremia is seen in about 18% of patients, whereas actual kidney disease associated with a higher level of virus is seen in fewer than 10% of patients. Most people are exposed to BK virus, often in childhood, and it can remain indolent in the bladder and uroepithelium.

Patients can develop BK nephropathy after exposure to transplant immunosuppression.11 Posttransplant monitoring protocols typically include PCR testing for BK virus at 1, 3, 6, and 12 months. No agent has been identified to specifically treat BK virus. The general strategy is to minimize immunosuppressive therapy by reducing or eliminating mycophenolate mofetil. Fortunately, BK virus does not tend to recur, and patients can have a low-level viremia (< 10,000 copies/mL) persisting over months or even years but often without clinical consequences.

The appearance of BK virus on biopsy can mimic acute rejection. Before BK viral nephropathy was a recognized entity, patients would have been diagnosed with acute rejection and may have been put on high-dose steroids, which would have worsened the BK infection.

Posttransplant lymphoproliferative disorder

Posttransplant lymphoproliferative disorder is most often associated with Epstein-Barr virus and usually involves a diffuse large B-cell lymphoma. Burkitt lymphoma and plasma cell neoplasms occur less commonly.

The condition is about 30 times more common in patients after transplant than in the general population, and it is the third most common malignancy in transplant patients after skin and cervical cancers. About 80% of the cases occur early after transplant, within the first year.

Patients typically have a marked elevation in viral load of Epstein-Barr virus, although a negative viral load does not rule it out. A patient who is serologically negative for Epstein-Barr virus receiving a donor-positive kidney is at highest risk; this situation is most often seen in the pediatric population. Potent induction therapies (eg, antilymphocyte antibody therapy) are also associated with posttransplant lymphoproliferative disorder.

Patients typically present with fever of unknown origin with no localizing signs or symptoms. Mass lesions can be challenging to find; positron emission tomography may be helpful. The culprit is usually a focal mass, ulcer (especially in the gastrointestinal tract), or infiltrate (commonly localized to the allograft). Multifocal or disseminated disease can also occur, including lymphoma or involvement of the central nervous system, gastrointestinal tract, or lungs.

Biopsy of the affected site is required for histopathology and Epstein-Barr virus markers. PCR blood testing is often positive for Epstein-Barr virus.

Typical antiviral therapy does not eliminate Epstein-Barr virus. In early polyclonal viral proliferation, the first goal is to reduce immunosuppressive therapy. Rituximab alone may also help in polymorphic cases. With disease that is clearly monomorphic and has transformed to a true malignancy, cytotoxic chemotherapy is also required. “R-CHOP,” a combination therapy consisting of rituximab with cyclophosphamide, doxorubicin, vincristine, and prednisone, is usually used. Radiation therapy may help in some cases.

Cryptococcal infection

Previously seen in patients with acquired immune deficiency syndrome, cryptococcal infection is now most commonly encountered in patients with solid-organ transplants. Vilchez et al12 found a 1% incidence in a series of more than 5,000 patients who had received an organ transplant.

Immunosuppression likely conveys risk, but because cryptococcal infection is acquired, environmental exposure also plays a role. It tends to appear more than 6 months after transplant, indicating that its cause is a primary infection by spore inhalation rather than by reactivation or transmission from the donor organ.13 Bird exposure is a risk factor for cryptococcal infection. One case identified the same strain of Cryptococcus in a kidney transplant recipient and the family’s pet cockatoo.14

Cryptococcal infection typically starts as pneumonia, which may be subclinical. The infection can then disseminate, with meningitis presenting with headache and mental status changes being the most concerning complication. The death rate is about 50% in most series of patients with meningitis. Skin and soft-tissue manifestations may also occur in 10% to 15% of cases and can be nodular, ulcerative, or cellulitic.

More than 75% of fungal infections requiring hospitalization in US patients who have undergone transplant are attributed to either Candida, Aspergillus, or Cryptococcus species.15 Risk of fungal infection is increased with diabetes, duration of pretransplant dialysis, tacrolimus therapy, or rejection treatment.

1. Wolfe RA, Ashby VB, Milford EL, et al. Comparison of mortality in all patients on dialysis, patients on dialysis awaiting transplantation, and recipients of a first cadaveric transplant. N Engl J Med 1999; 341:1725–1730.

2. Kasiske BL, Klinger D. Cigarette smoking in renal transplant recipients. J Am Soc Nephrol 2000; 11:753–759.

3. United Network for Organ Sharing. Transplant trends. https://transplantpro.org/technology/transplant-trends/#waitlists_by_organ. Accessed December 13, 2017.

4. Meier-Kriesche HU, Kaplan B. Waiting time on dialysis as the strongest modifiable risk factor for renal transplant outcomes: a paired donor kidney analysis. Transplantation 2002; 74:1377–1381.

5. Ojo AO, Hanson JA, Meier-Kriesche H, et al. Survival in recipients of marginal cadaveric donor kidneys compared with other recipients and wait-listed transplant candidates. J Am Soc Nephrol 2001; 12:589–597.

6. Alonso P, Sanchez-Lazaro I, Almenar L, et al. Use of a “CNI holidays” strategy in acute renal dysfunction late after heart transplant. Report of two cases. Heart Int 2014; 9:74–77.

7. Cantarovich M, Metrakos P, Giannetti N, Cecere R, Barkun J, Tchervenkov J. Anti-CD25 monoclonal antibody coverage allows for calcineurin inhibitor “holiday” in solid organ transplant patients with acute renal dysfunction. Transplantation 2002; 73:1169–1172.

8. Brennan DC, Daller JA, Lake KD, Cibrik D, Del Castillo D; Thymoglobulin Induction Study Group. Rabbit antithymocyte globulin versus basiliximab in renal transplantation. N Engl J Med 2006; 355:1967–1977.

9. McCullough PA. Evaluation and treatment of coronary artery disease in patients with end-stage renal disease. Kidney Int 2005; 67:S51–S58.

10. Kasiske BL, Maclean JR, Snyder JJ. Acute myocardial infarction and kidney transplantation. J Am Soc Nephrol 2006; 17:900–907.

11. Bohl DL, Storch GA, Ryschkewitsch C, et al. Donor origin of BK virus in renal transplantation and role of HLA C7 in susceptibility to sustained BK viremia. Am J Transplant 2005; 5:2213–2221.

12. Vilchez RA, Fung J, Kusne S. Cryptococcosis in organ transplant recipients: an overview. Am J Transplant 2002; 2:575–580.

13. Vilchez R, Shapiro R, McCurry K, et al. Longitudinal study of cryptococcosis in adult solid-organ transplant recipients. Transpl Int 2003; 16:336–340.

14. Nosanchuk JD, Shoham S, Fries BC, Shapiro DS, Levitz SM, Casadevall A. Evidence of zoonotic transmission of Cryptococcus neoformans from a pet cockatoo to an immunocompromised patient. Ann Intern Med 2000; 132:205–208.

15. Abbott KC, Hypolite I, Poropatich RK, et al. Hospitalizations for fungal infections after renal transplantation in the United States. Transpl Infect Dis 2001; 3:203–211.

Much has improved in renal transplantation over the past 20 years. The focus has shifted to using stronger immunotherapy rather than trying to minimize it. There has been increasing recognition of infection and ways to prevent and treat it. Induction therapy now has greater emphasis so that maintenance therapy can be eased, with the aim of reducing long-term toxicity. Perhaps the biggest change is the practice of screening for donor-specific antibodies at the time of transplant so that predictable problems can be prevented or better handled if they occur. Such advances have helped patients directly and by extending the life of their transplanted organs.

LONGER SURVIVAL

As early as the 1990s, it was recognized that kidney transplant offers a survival advantage for patients with end-stage renal disease over maintenance on dialysis.1 Although the risk of death is higher immediately after transplant, within a few months it becomes much lower than for patients on dialysis. Survival varies according to the health of the patient and the quality of the transplanted organ.

In general, patients who obtain the greatest benefit from transplants in terms of years of life gained are those with diabetes, especially those who are younger. Those ages 20 to 39 live about 8 years on dialysis vs 25 years after transplant.

CONTRAINDICATIONS TO TRANSPLANT

There are multiple contraindications to a solitary kidney transplant (Table 1), including smoking. Most transplant centers require that smokers quit before transplant. Long-standing smokers almost double their risk of a cardiac event after transplant and double their rate of malignancy. Active smoking at the time of transplant is associated with twice the risk of death by 10 years after transplant compared with that of nonsmokers.2 Cotinine testing can detect whether a patient is an active smoker.

WAITING-LIST CONSIDERATIONS

Organs are scarce

The number of patients on the kidney waiting list has increased rapidly in the last few decades, while the number of transplants performed each year has remained about the same. In 2016, about 100,000 patients were on the list, but only about 19,000 transplants were performed.3 Wait times, especially for deceased-donor organs, have increased to about 6 years, varying by blood type and geographic region.

Waiting-list placement

Placement on the waiting list for a deceased-donor kidney transplant occurs when a patient has an estimated glomerular filtration rate (GFR) of 20 mL/min/1.73 m2 or less, although referral to the list can be made earlier. Early listing remains advantageous, as total time on the list will be counted before starting dialysis. “Preemptive transplant” means the patient had no dialysis before transplant; this applies to about 10% of transplant recipients. These patients tend to fare the best and are usually recipients of a living-donor organ.

Most patients do not receive a transplant until the GFR is less than 15 mL/min/1.73 m2.

Since 2014, wait time has been measured from the beginning of dialysis rather than the date of waiting-list placement in patients who are listed after starting dialysis therapy. This approach is more fair but sometimes introduces problems. A patient who did not previously know about the list may suddenly jump to the head of the line after 10 years of dialysis, by which time comorbidities associated with long-term dialysis make the patient less likely to gain as much benefit from a transplant as people lower on the list. Time on dialysis, or “dialysis vintage,” predicts patient and kidney survival after transplant, with reduced survival associated with increasing time on dialysis.4

Shorter wait for a suboptimal kidney

The aging population has increased the number of older patients being listed for transplant, presenting multiple challenges. Patients age 65 or older have a 50% chance of dying before they receive a transplant during a 5-year wait.

A patient may shorten the wait by joining the list for a suboptimal organ. All deceased-donor organs are given a Kidney Donor Profile Index score, which predicts the longevity of an organ after transplant. The score is determined by donor age, kidney function based on the serum creatinine at the time of death, and other donor factors.

A kidney with a score higher than 85% is likely to function longer than only 15% of available kidneys. Patients who receive a kidney with that score have a longer period of risk of death soon after transplant and a slightly higher risk of death in the long term than patients who receive a healthier kidney, although on average they still do better than patients on dialysis.5

Older patients should be encouraged to sign up for both the regular waiting list and the suboptimal kidney waiting list to reduce the risk of dying before they get a kidney.

LIVING-DONOR ORGAN TRANSPLANT

Many advantages

Living-donor organ transplant is associated with a better survival rate than deceased-donor organ transplant, and the advantage becomes greater over time. At 1 year, patient survival is more than 90% in both groups, but by 5 years about 80% of patients with a living-donor organ are still alive vs only about 65% of patients with a deceased-donor organ.

The waiting time for a living-donor transplant may be only weeks to months, rather than years. Because increasing time on dialysis predicts worse patient and graft survival after transplant, the shorter wait time is a big advantage. In addition, because the donor and recipient are typically in adjacent operating rooms, the organ sustains less ischemic damage. In general, the kidney quality is better from healthy donors, resulting in superior function early on and longer graft survival by an average of 4 years. If the living donor is related to the recipient, human leukocyte antigen matching also tends to be better and predicts better outcomes.

Special challenges

Opting for a living-donor organ also entails special challenges. In addition to the ethical issues surrounding living-donor organ donation, an appropriate donor must be found. Donors must be highly motivated and pass physical, laboratory, and psychological evaluations.

For older patients, if the donor is a spouse or close friend, he or she is also likely to be older, making the organ less viable than one from a younger person. Even an adult child may not be an ideal donor if there is a family propensity to kidney disease, such as diabetic nephropathy. No test is available to determine the risk for future diabetes, but it is known to run in families.

POTENT IMMUNOSUPPRESSION

Induction therapy

Induction therapy with antithymocyte globulin or basiliximab provides intense immunosuppression to prevent acute rejection during the early posttransplant period.

Antithymocyte globulin is a potent agent that contains antibodies directed at T cells, B cells, neutrophils, platelets, adhesion molecules, and complement. It binds T cells and removes them from circulation by opsonization in splenic and lymphoid tissue. The immunosuppressive effect is sustained for at least 2 to 3 months after a series of injections (dosage 1.5 mg/kg/day, usually for 4 to 10 doses). Antithymocyte globulin is also used to treat acute rejection, especially high-grade rejection for which steroid therapy is likely to be insufficient.

Basiliximab consists of antibodies to the interleukin 2 (IL-2) receptor of T cells. Binding to T cells prevents their activation rather than removing them from circulation. The drug prevents rejection, with a 30% relative reduction in rejection compared with placebo in early studies. However, it is ineffective in reversing established rejection. Dosage is 20 mg at day 0 and day 4, which provides receptor saturation for 30 to 45 days.

Basiliximab is also sometimes used off-label for patients who need to discontinue a calcineurin inhibitor (ie, tacrolimus or cyclosporine). In such cases, normal therapy is put on hold while basiliximab is given for 1 or 2 doses. Case series have been reported for this use, particularly for patients with a heart and liver transplant who develop acute kidney injury while hospitalized.6,7

Antithymocyte globulin is more effective but also riskier. Brennan et al8 randomized 278 transplant recipients to either antithymocyte globulin or basiliximab. Patients in the antithymocyte globulin group had a 16% rejection rate vs 26% in the basiliximab group.
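The arithmetic behind these figures can be made explicit. The sketch below takes the two rejection rates quoted above and derives the absolute risk reduction, the relative risk reduction, and the number needed to treat. The function name `risk_reduction` and its parameters are hypothetical, chosen for illustration, and the calculation assumes the two quoted percentages are directly comparable event rates over the same follow-up period.

```python
import math


def risk_reduction(control_rate: float, treatment_rate: float) -> dict:
    """Return absolute and relative risk reduction plus number needed to treat.

    Hypothetical helper for illustration; rates are fractions (0.26 means 26%).
    """
    arr = control_rate - treatment_rate  # absolute risk reduction
    if arr <= 0:
        raise ValueError("treatment_rate must be lower than control_rate")
    rrr = arr / control_rate  # relative risk reduction
    # Round before taking the ceiling so floating-point noise does not
    # turn an exact 10 into 11.
    nnt = math.ceil(round(1 / arr, 6))
    return {"arr": arr, "rrr": rrr, "nnt": nnt}


# Rejection rates quoted in the text: basiliximab 26% (comparator),
# antithymocyte globulin 16%.
result = risk_reduction(control_rate=0.26, treatment_rate=0.16)
print(f"ARR = {result['arr']:.0%}, RRR = {result['rrr']:.0%}, NNT = {result['nnt']}")
```

On these numbers, the absolute difference is about 10 percentage points, which corresponds to a relative reduction of roughly 38% and to treating about 10 patients with antithymocyte globulin instead of basiliximab to avoid one rejection episode.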

Antithymocyte globulin therapy is associated with multiple adverse effects, including fever and chills, pulmonary edema, and long-standing immunosuppressive effects such as increased risk of lymphoma and cytomegalovirus (CMV) infection. Basiliximab side-effect profiles are similar to those of placebo.

Maintenance therapy

The calcineurin inhibitors cyclosporine and tacrolimus remain the standard of care in kidney transplant despite multiple drug interactions and side effects that include renal toxicity and fibrosis. Cyclosporine and tacrolimus both bind intracellular immunophilins and thereby prevent transcription of IL-2 and production of T cells. The drugs work similarly but have different binding sites. Cyclosporine has largely been replaced by tacrolimus, whose more reliable dosing and higher potency are associated with lower rejection rates.

Tacrolimus is typically given twice daily (1–6 mg/dose). Twelve-hour trough levels are followed (target: 8–12 ng/mL early on, then 5–8 ng/mL after 3 months posttransplant). Side effects include hypertension and hypercholesterolemia, but less so than with cyclosporine. On the other hand, hyperglycemia tends to be worse with tacrolimus than with cyclosporine, and combining tacrolimus with steroids frequently leads to diabetes. Tacrolimus can also cause acute and chronic renal failure, especially at high drug levels, as well as neurotoxicity, tremors, and hair loss.
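The trough targets above amount to a simple time-dependent window. As a minimal sketch, assuming that "early on" means the first 3 months inclusive (the text does not define the boundary precisely), a measured level could be compared with the quoted range as follows. The function names are hypothetical, and the thresholds are only those stated in this article.

```python
from typing import Tuple


def tacrolimus_target_range(months_post_transplant: float) -> Tuple[float, float]:
    """Return the (low, high) 12-hour trough target in ng/mL quoted in the text."""
    # Assumption: the early window covers the first 3 months, inclusive.
    if months_post_transplant <= 3:
        return (8.0, 12.0)
    return (5.0, 8.0)


def classify_trough(level_ng_ml: float, months_post_transplant: float) -> str:
    """Label a trough level relative to the target window for that time point."""
    low, high = tacrolimus_target_range(months_post_transplant)
    if level_ng_ml < low:
        return "below target"
    if level_ng_ml > high:
        return "above target"
    return "within target"


# The same level reads differently at different times after transplant.
print(classify_trough(10.0, months_post_transplant=1))
print(classify_trough(10.0, months_post_transplant=6))
```

The example shows why the date of transplant has to accompany any trough value: a level of 10 ng/mL sits inside the early window but above the later one.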

Cyclosporine, tacrolimus, and sirolimus (not a calcineurin inhibitor) are metabolized through the same cytochrome P450 pathway (CYP3A4), so they have common drug interactions (Table 2).

Mycophenolate mofetil is typically used as an adjunct therapy (500–1,000 mg twice daily). It is also used for other kidney diseases before transplant, including lupus nephritis. Transplanted kidney rejection rates with mycophenolate mofetil plus steroids alone are about 40%, so the drug is not potent enough to be used without a calcineurin inhibitor.

Side effects of mycophenolate mofetil include gastrointestinal toxicity in up to 20% of patients and leukopenia, which is associated with viral infections.

CORONARY ARTERY DISEASE IS COMMON WITH DIALYSIS

Coronary artery disease is highly associated with end-stage kidney disease and occurs in as many as 85% of older patients with diabetes on dialysis. Although patients with end-stage kidney disease tend to have more numerous and severe atherosclerotic lesions compared with the general population, justifying aggressive management, cardiac care tends to be conservative in patients on dialysis.9

Death from acute myocardial infarction occurs in about 20% to 30% of patients on dialysis vs about 2% of patients with normal renal function. Five years after myocardial infarction, survival is only about 30% in patients on dialysis.9

There are many explanations for excess coronary artery disease in patients on dialysis. In addition to the traditional cardiovascular risk factors of diabetes, hypertension, and preexisting coronary artery disease, patients are in a proinflammatory uremic state and have high levels of phosphorus and fibroblast growth factor 23 that contribute to vascular calcification. Almost all patients have high homocysteine levels and hemodynamic instability, particularly if they are on hemodialysis.

Pretransplant evaluation for heart disease

Patients on the kidney transplant waiting list are screened aggressively for heart disease. A history of myocardial infarction usually results in removal from the list. All patients have an initial electrocardiogram and echocardiogram. Thallium or echocardiographic stress testing is used for patients who are age 50 and older, have diabetes, or have had dialysis for many years. Patients with evidence of ischemia undergo catheterization.

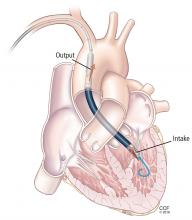

Patients are also screened with computed tomography before transplant. Because the kidney is typically anastomosed to the iliac artery and vein, heavy calcification of the iliac artery can make the procedure too difficult to perform.

Reduced long-term risk of myocardial infarction after transplant

Kasiske et al10 analyzed data from more than 50,000 patients from the US Renal Data System and found that, for about the first year after transplant, patients who underwent kidney transplant were more likely to have a myocardial infarction than those on dialysis. After that, they fared better than patients who remained on dialysis. Those with a living-donor transplant were less likely at all times to have a myocardial infarction than those with a deceased-donor transplant. By 3 years after transplant, the relative risk of having a myocardial infarction was 0.89 for deceased-donor organ recipients and 0.69 for living-donor recipients compared with patients on the waiting list.10

INFECTIOUS COMPLICATIONS IN KIDNEY RECIPIENTS

Kidney recipients are prone to many common and uncommon infections (Table 3). All potential recipients are tested pretransplant for hepatitis B, hepatitis C, human immunodeficiency virus, syphilis, and tuberculosis. A positive result does not necessarily rule out transplant.

The following viral serology tests are also done before transplant:

- Epstein-Barr virus (antibodies are positive in about 90% of adults)
- CMV (about 70% of adults are seropositive)
- Varicella zoster (seronegative patients should be given live-attenuated varicella vaccine).

Risk of transmission of these viruses relates to the serostatus of the donor and recipient before transplant. If a donor is positive for viral antibodies but the recipient is not (a so-called “mismatch”), risk is higher after transplant.

Hepatitis C

Patients with hepatitis C fare better if they get a transplant than if they remain on dialysis, although their posttransplant course is worse than that of transplant patients who do not have hepatitis. Some patients develop accelerated liver disease after kidney transplant. Hepatitis C-related kidney disease (membranoproliferative glomerulonephritis) also occurs, as do comorbidities such as diabetes.

Careful evaluation is warranted before transplant, including liver imaging, alpha-fetoprotein testing, and liver biopsy to evaluate for hepatocellular carcinoma. A patient with advanced fibrosis or cirrhosis may not be a candidate for kidney transplant alone but could possibly receive a combined kidney and liver transplant.

The best time to treat hepatitis C infection has yet to be determined. Patients with advanced liver disease or hepatitis C-related kidney disease would likely benefit from early treatment. However, delaying treatment could shorten the wait time for a deceased-donor organ positive for hepatitis C. Transplant candidates with active hepatitis C are uniquely eligible to accept hepatitis C-positive kidneys, which are often discarded, and may wait only weeks for such a transplant. The shortened kidney survival associated with a hepatitis C-positive kidney may no longer apply with the new antiviral hepatitis C therapy, which has been shown to be effective posttransplant.

Hepatitis B

No cure is available for hepatitis B infection, but it can be well controlled with antiviral therapy. Patients with hepatitis B infection may be candidates for transplant, but they should be stable on antiviral therapy (lamivudine, entecavir, or tenofovir) to eliminate the viral load before transplant, and therapy should be continued afterward. Liver imaging, alpha-fetoprotein levels, and biopsy are recommended for evaluation. All hepatitis B-negative patients should be vaccinated before transplant.

Organs from living or deceased donors that test positive for hepatitis B core antibody, indicating prior exposure, can be considered for transplant in a patient who tests positive for hepatitis B surface antibody, indicating successful vaccination or prior exposure in the recipient. But donors must have negative surface antigen and polymerase chain reaction (PCR) tests that indicate no active hepatitis B infection.

Cytomegalovirus

CMV typically does not appear until prophylactic therapy is stopped. Classic symptoms are fever, leukopenia, and diarrhea. Infection can involve any organ, and patients may present with hepatitis, pancreatitis or, less commonly, pneumonitis.

Patients who are negative for CMV before transplant and receive a donor-positive organ are at the highest risk. Patients who are CMV IgG-positive are considered to be at intermediate risk, regardless of the donor status. Patients who are negative for CMV and receive a donor-negative organ are at the lowest risk and do not need prophylaxis with valganciclovir.
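The risk tiers above depend only on the pretransplant serostatus of the donor and recipient, so they can be written as a small decision rule. The sketch below is illustrative: the function names are hypothetical, and the rule that every tier other than the lowest receives prophylaxis is an assumption inferred from the statement that the lowest-risk group does not need it.

```python
def cmv_risk(donor_positive: bool, recipient_positive: bool) -> str:
    """Map donor and recipient CMV serostatus to the risk tier described in the text."""
    if recipient_positive:
        # Recipient IgG-positive: intermediate risk regardless of donor status.
        return "intermediate"
    if donor_positive:
        # Seronegative recipient of a seropositive organ (a mismatch).
        return "highest"
    # Donor and recipient both seronegative.
    return "lowest"


def needs_valganciclovir_prophylaxis(donor_positive: bool, recipient_positive: bool) -> bool:
    """Assumption: every tier except the lowest receives prophylaxis."""
    return cmv_risk(donor_positive, recipient_positive) != "lowest"


# Enumerate all four donor/recipient combinations.
for donor in (True, False):
    for recipient in (True, False):
        label = f"D{'+' if donor else '-'}/R{'+' if recipient else '-'}"
        print(label, cmv_risk(donor, recipient),
              needs_valganciclovir_prophylaxis(donor, recipient))
```

Checking the recipient's status first keeps the rule short, because a seropositive recipient falls into the intermediate tier whatever the donor's status.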

CMV infection is diagnosed by PCR testing of the blood or immunostaining in tissue biopsy. Occasionally, blood testing is negative in the face of tissue-based disease.

BK virus

BK virus is a polyomavirus commonly associated with kidney transplant. Viremia is seen in about 18% of patients, whereas actual kidney disease associated with a higher level of virus is seen in fewer than 10% of patients. Most people are exposed to BK virus, often in childhood, and it can remain indolent in the bladder and uroepithelium.

Patients can develop BK nephropathy after exposure to transplant immunosuppression.11 Posttransplant monitoring protocols typically include PCR testing for BK virus at 1, 3, 6, and 12 months. No agent has been identified to specifically treat BK virus. The general strategy is to minimize immunosuppressive therapy by reducing or eliminating mycophenolate mofetil. Fortunately, BK virus does not tend to recur, and patients can have a low-level viremia (< 10,000 copies/mL) persisting over months or even years but often without clinical consequences.

The appearance of BK virus on biopsy can mimic acute rejection. Before BK viral nephropathy was a recognized entity, patients would have been diagnosed with acute rejection and may have been put on high-dose steroids, which would have worsened the BK infection.

Posttransplant lymphoproliferative disorder

Posttransplant lymphoproliferative disorder is most often associated with Epstein-Barr virus and usually involves a diffuse large B-cell lymphoma. Burkitt lymphoma and plasma cell neoplasms occur less commonly.

The condition is about 30 times more common in patients after transplant than in the general population, and it is the third most common malignancy in transplant patients after skin and cervical cancers. About 80% of the cases occur early after transplant, within the first year.

Patients typically have a marked elevation in viral load of Epstein-Barr virus, although a negative viral load does not rule it out. A patient who is serologically negative for Epstein-Barr virus receiving a donor-positive kidney is at highest risk; this situation is most often seen in the pediatric population. Potent induction therapies (eg, antilymphocyte antibody therapy) are also associated with posttransplant lymphoproliferative disorder.

Patients typically present with fever of unknown origin with no localizing signs or symptoms. Mass lesions can be challenging to find; positron emission tomography may be helpful. The culprit is usually a focal mass, ulcer (especially in the gastrointestinal tract), or infiltrate (commonly localized to the allograft). Multifocal or disseminated disease, including lymphoma, can also occur and may involve the central nervous system, gastrointestinal tract, or lungs.

Biopsy of the affected site is required for histopathology and Epstein-Barr virus markers. PCR blood testing is often positive for Epstein-Barr virus.

Typical antiviral therapy does not eliminate Epstein-Barr virus. In early polyclonal viral proliferation, the first goal is to reduce immunosuppressive therapy. Rituximab alone may also help in polymorphic cases. With disease that is clearly monomorphic and has transformed to a true malignancy, cytotoxic chemotherapy is also required. “R-CHOP,” a combination therapy consisting of rituximab with cyclophosphamide, doxorubicin, vincristine, and prednisone, is usually used. Radiation therapy may help in some cases.

Cryptococcal infection

Previously seen in patients with acquired immunodeficiency syndrome, cryptococcal infection is now most commonly encountered in patients with solid-organ transplants. Vilchez et al12 found a 1% incidence in a series of more than 5,000 patients who had received an organ transplant.

Immunosuppression likely confers risk, but because cryptococcal infection is acquired, environmental exposure also plays a role. It tends to appear more than 6 months after transplant, indicating that its cause is a primary infection by spore inhalation rather than reactivation or transmission from the donor organ.13 Bird exposure is a risk factor for cryptococcal infection. One case report identified the same strain of Cryptococcus in a kidney transplant recipient and the family’s pet cockatoo.14

Cryptococcal infection typically starts as pneumonia, which may be subclinical. The infection can then disseminate; the most concerning complication is meningitis, which presents with headache and mental status changes. The death rate is about 50% in most series of patients with meningitis. Skin and soft-tissue manifestations may also occur in 10% to 15% of cases and can be nodular, ulcerative, or cellulitic.

More than 75% of fungal infections requiring hospitalization in US patients who have undergone transplant are attributed to Candida, Aspergillus, or Cryptococcus species.15 Risk of fungal infection is increased with diabetes, duration of pretransplant dialysis, tacrolimus therapy, or rejection treatment.

Much has improved in renal transplantation over the past 20 years. The focus has shifted to using stronger immunotherapy rather than trying to minimize it. There has been increasing recognition of infection and ways to prevent and treat it. Induction therapy now has greater emphasis so that maintenance therapy can be eased, with the aim of reducing long-term toxicity. Perhaps the biggest change is the practice of screening for donor-specific antibodies at the time of transplant so that predictable problems can be prevented or better handled if they occur. Such advances have helped patients directly and by extending the life of their transplanted organs.

LONGER SURVIVAL

As early as the 1990s, it was recognized that kidney transplant offers a survival advantage for patients with end-stage renal disease over maintenance on dialysis.1 Although the risk of death is higher immediately after transplant, within a few months it becomes much lower than for patients on dialysis. Survival varies according to the health of the patient and the quality of the transplanted organ.

In general, patients who obtain the greatest benefit from transplants in terms of years of life gained are those with diabetes, especially those who are younger. Those ages 20 to 39 live about 8 years on dialysis vs 25 years after transplant.

CONTRAINDICATIONS TO TRANSPLANT

There are multiple contraindications to a solitary kidney transplant (Table 1), including smoking. Most transplant centers require that smokers quit before transplant. Long-standing smokers almost double their risk of a cardiac event after transplant and double their rate of malignancy. Active smoking at the time of transplant is associated with twice the risk of death by 10 years after transplant compared with that of nonsmokers.2 Cotinine testing can detect whether a patient is an active smoker.

WAITING-LIST CONSIDERATIONS

Organs are scarce

The number of patients on the kidney waiting list has increased rapidly in the last few decades, while the number of transplants performed each year has remained about the same. In 2016, about 100,000 patients were on the list, but only about 19,000 transplants were performed.3 Wait times, especially for deceased-donor organs, have increased to about 6 years, varying by blood type and geographic region.

Waiting-list placement

Placement on the waiting list for a deceased-donor kidney transplant occurs when a patient has an estimated glomerular filtration rate (GFR) of 20 mL/min/1.73 m2 or less, although referral to the list can be made earlier. Early listing remains advantageous, as total time on the list will be counted before starting dialysis. “Preemptive transplant” means the patient had no dialysis before transplant; this applies to about 10% of transplant recipients. These patients tend to fare the best and are usually recipients of a living-donor organ.

Most patients do not receive a transplant until the GFR is less than 15 mL/min/1.73 m2.

Since 2014, wait time has been measured from the beginning of dialysis rather than the date of waiting-list placement in patients who are listed after starting dialysis therapy. This approach is more fair but sometimes introduces problems. A patient who did not previously know about the list may suddenly jump to the head of the line after 10 years of dialysis, by which time comorbidities associated with long-term dialysis make the patient less likely to gain as much benefit from a transplant as people lower on the list. Time on dialysis, or “dialysis vintage,” predicts patient and kidney survival after transplant, with reduced survival associated with increasing time on dialysis.4

Shorter wait for a suboptimal kidney

The aging population has increased the number of older patients being listed for transplant, presenting multiple challenges. Patients age 65 or older have a 50% chance of dying before they receive a transplant during a 5-year wait.

A patient may shorten the wait by joining the list for a suboptimal organ. All deceased-donor organs are given a Kidney Donor Profile Index score, which predicts the longevity of an organ after transplant. The score is determined by donor age, kidney function based on the serum creatinine at the time of death, and other donor factors.

A kidney with a score higher than 85% is likely to function longer than only 15% of available kidneys. Patients who receive a kidney with that score have a longer period of risk of death soon after transplant and a slightly higher risk of death in the long term than patients who receive a healthier kidney, although on average they still do better than patients on dialysis.5

Older patients should be encouraged to sign up for both the regular waiting list and the suboptimal kidney waiting list to reduce the risk of dying before they get a kidney.

LIVING-DONOR ORGAN TRANSPLANT

Many advantages

Living-donor organ transplant is associated with a better survival rate than deceased-donor organ transplant, and the advantage becomes greater over time. At 1 year, patient survival is more than 90% in both groups, but by 5 years about 80% of patients with a living-donor organ are still alive vs only about 65% of patients with a deceased-donor organ.

The waiting time for a living-donor transplant may be only weeks to months, rather than years. Because increasing time on dialysis predicts worse patient and graft survival after transplant, the shorter wait time is a big advantage. In addition, because the donor and recipient are typically in adjacent operating rooms, the organ sustains less ischemic damage. In general, the kidney quality is better from healthy donors, resulting in superior function early on and longer graft survival by an average of 4 years. If the living donor is related to the recipient, human leukocyte antigen matching also tends to be better and predicts better outcomes.

Special challenges

Opting for a living-donor organ also entails special challenges. In addition to the ethical issues surrounding living-donor organ donation, an appropriate donor must be found. Donors must be highly motivated and pass physical, laboratory, and psychological evaluations.

For older patients, if the donor is a spouse or close friend, he or she is also likely to be older, making the organ less viable than one from a younger person. Even an adult child may not be an ideal donor if there is a family propensity to kidney disease, such as diabetic nephropathy. No test is available to determine the risk for future diabetes, but it is known to run in families.

POTENT IMMUNOSUPPRESSION

Induction therapy

Induction therapy with antithymocyte globulin or basiliximab provides intense immunosuppression to prevent acute rejection during the early posttransplant period.

Antithymocyte globulin is a potent agent that contains antibodies directed at T cells, B cells, neutrophils, platelets, adhesion molecules, and complement. It binds T cells and removes them from circulation by opsonization in splenic and lymphoid tissue. The immunosuppressive effect is sustained for at least 2 to 3 months after a series of injections (dosage 1.5 mg/kg/day, usually for 4 to 10 doses). Antithymocyte globulin is also used to treat acute rejection, especially high-grade rejection for which steroid therapy is likely to be insufficient.

Basiliximab consists of antibodies to the interleukin 2 (IL-2) receptor of T cells. Binding to T cells prevents their activation rather than removing them from circulation. The drug prevents rejection, with 30% relative reduction in early studies compared with placebo. However, it is ineffective in reversing established rejection. Dosage is 20 mg at day 0 and day 4, which provides receptor saturation for 30 to 45 days.

Basiliximab is also sometimes used off-label for patients who need to discontinue a calcineurin inhibitor (ie, tacrolimus or cyclosporine). In such cases, normal therapy is put on hold while basiliximab is given for 1 or 2 doses. Case series have been reported for this use, particularly for patients with a heart and liver transplant who develop acute kidney injury while hospitalized.6,7

Antithymocyte globulin is more effective but also more risky. Brennan et al8 randomized 278 transplant recipients to either antithymocyte globulin or basiliximab. Patients in the antithymocyte globulin group had a 16% rejection rate vs 26% in the basiliximab group.

Antithymocyte globulin therapy is associated with multiple adverse effects, including fever and chills, pulmonary edema, and long-standing immunosuppressive effects such as increased risk of lymphoma and cytomegalovirus (CMV) infection. Basiliximab side-effect profiles are similar to those of placebo.

Maintenance therapy

The calcineurin inhibitors cyclosporine and tacrolimus remain the standard of care in kidney transplant despite multiple drug interactions and side effects that include renal toxicity and fibrosis. Cyclosporine and tacrolimus both bind intracellular immunophilins and thereby prevent transcription of IL-2 and production of T cells. The drugs work similarly but have different binding sites. Cyclosporine has largely been replaced by tacrolimus because its reliability of dosing and higher potency are associated with lower rejection rates.

Tacrolimus is typically given twice daily (1–6 mg/dose). Twelve-hour trough levels are followed (target: 8–12 ng/mL early on, then 5–8 ng/mL after 3 months posttransplant). Side effects include hypertension and hypercholesterolemia, but less so than with cyclosporine. On the other hand, hyperglycemia tends to be worse with tacrolimus than with cyclosporine, and combining tacrolimus with steroids frequently leads to diabetes. Tacrolimus can also cause acute and chronic renal failure, especially at high drug levels, as well as neurotoxicity, tremors, and hair loss.

Cyclosporine, tacrolimus, and sirolimus (not a calcineurin inhibitor) are metabolized through the same cytochrome P450 pathway (CYP3A4), so they have common drug interactions (Table 2).

Mycophenolate mofetil is typically used as an adjunct therapy (500–1,000 mg twice daily). It is also used for other kidney diseases before transplant, including lupus nephritis. Transplanted kidney rejection rates with mycophenolate mofetil with steroids are about 40%, so the drug is not potent enough to be used without a calcineurin inhibitor.

Side effects include gastrointestinal toxicity in up to 20% of patients, and leukopenia, which is associated with viral infections.

CORONARY ARTERY DISEASE IS COMMON WITH DIALYSIS

Coronary artery disease is highly associated with end-stage kidney disease and occurs in as many as 85% of older patients with diabetes on dialysis. Although patients with end-stage kidney disease tend to have more numerous and severe atherosclerotic lesions compared with the general population, justifying aggressive management, cardiac care tends to be conservative in patients on dialysis.9

Death from acute myocardial infarction occurs in about 20% to 30% of patients on dialysis vs about 2% of patients with normal renal function. Five years after myocardial infarction, survival is only about 30% in patients on dialysis.9

There are many explanations for excess coronary artery disease in patients on dialysis. In addition to the traditional cardiovascular risk factors of diabetes, hypertension, and preexisting coronary artery disease, patients are in a proinflammatory uremic state and have high levels of phosphorus and fibroblast growth factor 23 that contribute to vascular calcification. Almost all patients have high homocysteine levels and hemodynamic instability, particularly if they are on hemodialysis.

Pretransplant evaluation for heart disease

Patients on the kidney transplant waiting list are screened aggressively for heart disease. A history of myocardial infarction usually results in removal from the list. All patients have an initial electrocardiogram and echocardiogram. Thallium or echocardiographic stress testing is used for patients who are age 50 and older, have diabetes, or have had dialysis for many years. Patients with evidence of ischemia undergo catheterization.

Patients are also screened with computed tomography before transplant. Because the kidney is typically anastomosed to the iliac artery and vein, heavy calcification of the iliac artery can make the procedure too difficult to perform.

Reduced long-term risk of myocardial infarction after transplant

Kasiske et al10 analyzed data from more than 50,000 patients from the US Renal Data System and found that, for about the first year after transplant, patients who underwent kidney transplant were more likely to have a myocardial infarction than those on dialysis. After that, they fared better than patients who remained on dialysis. Those with a living-donor transplant were less likely at all times to have a myocardial infarction than those with a deceased-donor transplant. By 3 years after transplant, the relative risk of having a myocardial infarction was 0.89 for deceased-donor organ recipients and 0.69 for living-donor recipients compared with patients on the waiting list.10

INFECTIOUS COMPLICATIONS IN KIDNEY RECIPIENTS

Kidney recipients are prone to many common and uncommon infections (Table 3). All potential recipients are tested pretransplant for hepatitis B, hepatitis C, human immunodeficiency virus, syphilis, and tuberculosis. A positive result does not necessarily rule out transplant.

The following viral serology tests are also done before transplant:

Epstein-Barr virus (antibodies are positive in about 90% of adults)

CMV (about 70% of adults are seropositive)

Varicella zoster (seronegative patients should be given live-attenuated varicella vaccine).

Risk of transmission of these viruses relates to the serostatus of the donor and recipient before transplant. If a donor is positive for viral antibodies but the recipient is not (a so-called “mismatch”), risk is higher after transplant.

Hepatitis C

Patients with hepatitis C fare better if they get a transplant than if they remain on dialysis, although their posttransplant course is worse compared with transplant patients who do not have hepatitis. Some patients develop accelerated liver disease after kidney transplant. Hepatitis C-related kidney disease—membranous proliferative glomerulonephritis—also occurs, as do comorbidities such as diabetes.

Careful evaluation is warranted before transplant, including liver imaging, alpha-fetoprotein testing, and liver biopsy to evaluate for hepatocellular carcinoma. A patient with advanced fibrosis or cirrhosis may not be a candidate for kidney transplant alone but could possibly receive a combined kidney and liver transplant.

There is a need to determine the best time to treat hepatitis C infection. Patients with advanced liver disease or hepatitis C-related kidney disease would likely benefit from early treatment. However, delaying treatment could shorten the wait time for a deceased-donor organ positive for hepatitis C. Transplant candidates with active hepatitis C are uniquely considered to accept hepatitis C-positive kidneys, which are often discarded, and may only wait weeks for such a transplant. The shortened kidney survival associated with a hepatitis C-positive kidney may no longer be true with the new antiviral hepatitis C therapy, which has been shown to be effective post-transplant.

Hepatitis B

No cure is available for hepatitis B infection, but it can be well controlled with antiviral therapy. Patients with hepatitis B infection may be candidates for transplant, but they should be stable on antiviral therapy (lamivudine, entecavir, or tenofovir) to eliminate the viral load before transplant, and therapy should be continued afterward. Liver imaging, alpha-fetoprotein levels, and biopsy are recommended for evaluation. All hepatitis B- negative patients should be vaccinated before transplant.

Organs from living or deceased donors that test positive for hepatitis B core antibody, indicating prior exposure, can be considered for transplant in a patient who tests positive for hepatitis B surface antibody, indicating successful vaccination or prior exposure in the recipient. But donors must have negative surface antigen and polymerase chain reaction (PCR) tests that indicate no active hepatitis B infection.

Cytomegalovirus

CMV typically does not appear until prophylactic therapy is stopped. Classic symptoms are fever, leukopenia, and diarrhea. Infection can involve any organ, and patients may present with hepatitis, pancreatitis or, less commonly, pneumonitis.

Patients who are negative for CMV before transplant and receive a donor-positive organ are at the highest risk. Patients who are CMV IgG-positive are considered to be at intermediate risk, regardless of the donor status. Patients who are negative for CMV and receive a donor-negative organ are at the lowest risk and do not need prophylaxis with valganciclovir.

CMV infection is diagnosed by PCR testing of the blood or immunostaining in tissue biopsy. Occasionally, blood testing is negative in the face of tissue-based disease.

BK virus

BK is a polyoma virus and a common virus associated with kidney transplant. Viremia is seen in about 18% of patients, whereas actual kidney disease associated with a higher level of virus is seen in fewer than 10% of patients. Most people are exposed to BK virus, often in childhood, and it can remain indolent in the bladder and uroepithelium.

Patients can develop BK nephropathy after exposure to transplant immunosuppression.11 Posttransplant monitoring protocols typically include PCR testing for BK virus at 1, 3, 6, and 12 months. No agent has been identified to specifically treat BK virus. The general strategy is to minimize immunosuppressive therapy by reducing or eliminating mycophenolate mofetil. Fortunately, BK virus does not tend to recur, and patients can have a low-level viremia (< 10,000 copies/mL) persisting over months or even years but often without clinical consequences.

The appearance of BK virus on biopsy can mimic acute rejection. Before BK viral nephropathy was a recognized entity, patients would have been diagnosed with acute rejection and may have been put on high-dose steroids, which would have worsened the BK infection.

Posttransplant lymphoproliferative disorder

Posttransplant lymphoproliferative disorder is most often associated with Epstein-Barr virus and usually involves a diffuse large B-cell lymphoma. Burkitt lymphoma and plasma cell neoplasms occur less commonly.

The condition is about 30 times more common in patients after transplant than in the general population, and it is the third most common malignancy in transplant patients after skin and cervical cancers. About 80% of the cases occur early after transplant, within the first year.

Patients typically have a marked elevation in viral load of Epstein-Barr virus, although a negative viral load does not rule it out. A patient who is serologically negative for Epstein-Barr virus receiving a donor-positive kidney is at highest risk; this situation is most often seen in the pediatric population. Potent induction therapies (eg, antilymphocyte antibody therapy) are also associated with posttransplant lymphoproliferative disorder.

Patients typically present with fever of unknown origin with no localizing signs or symptoms. Mass lesions can be challenging to find; positron emission tomography may be helpful. The culprit is usually a focal mass, ulcer (especially in the gastrointestinal tract), or infiltrate (commonly localized to the allograft). Multifocal or disseminated disease can also occur, including lymphoma or disease with central nervous system, gastrointestinal, or pulmonary involvement.

Biopsy of the affected site is required for histopathology and Epstein-Barr virus markers. PCR blood testing is often positive for Epstein-Barr virus.

Typical antiviral therapy does not eliminate Epstein-Barr virus. In early polyclonal viral proliferation, the first goal is to reduce immunosuppressive therapy. Rituximab alone may also help in polymorphic cases. With disease that is clearly monomorphic and has transformed to a true malignancy, cytotoxic chemotherapy is also required. “R-CHOP,” a combination therapy consisting of rituximab with cyclophosphamide, doxorubicin, vincristine, and prednisone, is usually used. Radiation therapy may help in some cases.

Cryptococcal infection

Previously seen in patients with acquired immune deficiency syndrome, cryptococcal infection is now most commonly encountered in patients with solid-organ transplants. Vilchez et al12 found a 1% incidence in a series of more than 5,000 patients who had received an organ transplant.

Immunosuppression likely conveys risk, but because cryptococcal infection is acquired, environmental exposure also plays a role. It tends to appear more than 6 months after transplant, indicating that its cause is a primary infection by spore inhalation rather than by reactivation or transmission from the donor organ.13 Bird exposure is a risk factor for cryptococcal infection. One case identified the same strain of Cryptococcus in a kidney transplant recipient and the family’s pet cockatoo.14

Cryptococcal infection typically starts as pneumonia, which may be subclinical. The infection can then disseminate; the most concerning complication is meningitis, which presents with headache and mental status changes. The death rate is about 50% in most series of patients with meningitis. Skin and soft-tissue manifestations may also occur in 10% to 15% of cases and can be nodular, ulcerative, or cellulitic.

More than 75% of fungal infections requiring hospitalization in US patients who have undergone transplant are attributed to Candida, Aspergillus, or Cryptococcus species.15 Risk of fungal infection is increased with diabetes, duration of pretransplant dialysis, tacrolimus therapy, or rejection treatment.

- Wolfe RA, Ashby VB, Milford EL, et al. Comparison of mortality in all patients on dialysis, patients on dialysis awaiting transplantation, and recipients of a first cadaveric transplant. N Engl J Med 1999; 341:1725–1730.

- Kasiske BL, Klinger D. Cigarette smoking in renal transplant recipients. J Am Soc Nephrol 2000; 11:753–759.

- United Network for Organ Sharing. Transplant trends. https://transplantpro.org/technology/transplant-trends/#waitlists_by_organ. Accessed December 13, 2017.

- Meier-Kriesche HU, Kaplan B. Waiting time on dialysis as the strongest modifiable risk factor for renal transplant outcomes: a paired donor kidney analysis. Transplantation 2002; 74:1377–1381.

- Ojo AO, Hanson JA, Meier-Kriesche H, et al. Survival in recipients of marginal cadaveric donor kidneys compared with other recipients and wait-listed transplant candidates. J Am Soc Nephrol 2001; 12:589–597.

- Alonso P, Sanchez-Lazaro I, Almenar L, et al. Use of a “CNI holidays” strategy in acute renal dysfunction late after heart transplant. Report of two cases. Heart Int 2014; 9:74–77.

- Cantarovich M, Metrakos P, Giannetti N, Cecere R, Barkun J, Tchervenkov J. Anti-CD25 monoclonal antibody coverage allows for calcineurin inhibitor “holiday” in solid organ transplant patients with acute renal dysfunction. Transplantation 2002; 73:1169–1172.

- Brennan DC, Daller JA, Lake KD, Cibrik D, Del Castillo D; Thymoglobulin Induction Study Group. Rabbit antithymocyte globulin versus basiliximab in renal transplantation. N Engl J Med 2006; 355:1967–1977.

- McCullough PA. Evaluation and treatment of coronary artery disease in patients with end-stage renal disease. Kidney Int 2005; 67:S51–S58.

- Kasiske BL, Maclean JR, Snyder JJ. Acute myocardial infarction and kidney transplantation. J Am Soc Nephrol 2006; 17:900–907.

- Bohl DL, Storch GA, Ryschkewitsch C, et al. Donor origin of BK virus in renal transplantation and role of HLA C7 in susceptibility to sustained BK viremia. Am J Transplant 2005; 5:2213–2221.

- Vilchez RA, Fung J, Kusne S. Cryptococcosis in organ transplant recipients: an overview. Am J Transplant 2002; 2:575–580.

- Vilchez R, Shapiro R, McCurry K, et al. Longitudinal study of cryptococcosis in adult solid-organ transplant recipients. Transpl Int 2003; 16:336–340.

- Nosanchuk JD, Shoham S, Fries BC, Shapiro DS, Levitz SM, Casadevall A. Evidence of zoonotic transmission of Cryptococcus neoformans from a pet cockatoo to an immunocompromised patient. Ann Intern Med 2000; 132:205–208.

- Abbott KC, Hypolite I, Poropatich RK, et al. Hospitalizations for fungal infections after renal transplantation in the United States. Transpl Infect Dis 2001; 3:203–211.

KEY POINTS

- Kidney transplant improves survival and long-term outcomes in patients with renal failure.

- Before transplant, patients should be carefully evaluated for cardiovascular and infectious disease risk.

- Potent immunosuppression is required to maintain a successful kidney transplant.

- After transplant, patients must be monitored for recurrent disease, side effects of immunosuppression, and opportunistic infections.

PCI for stable angina: A missed opportunity for shared decision-making

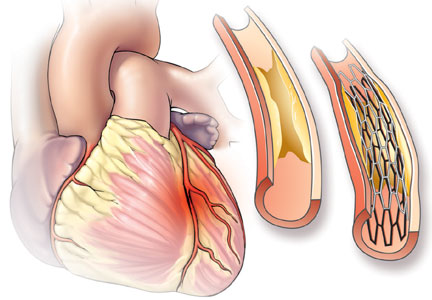

Multiple randomized controlled trials have compared percutaneous coronary intervention (PCI) vs optimal medical therapy for patients with chronic stable angina. All have consistently shown that PCI does not reduce the risk of death or even myocardial infarction (MI) but that it may relieve angina temporarily. Nevertheless, PCI is still commonly performed for patients with stable coronary disease, often in the absence of angina, and patients mistakenly believe the procedure is life-saving. Cardiologists may not be aware of patients’ misperceptions, or worse, may encourage them. In either case, if patients do not understand the benefits of the procedure, they cannot give informed consent.

This article reviews the pathophysiology of coronary artery disease, evidence from clinical trials of the value of PCI for chronic stable angina, patient and physician perceptions of PCI, and ways to promote patient-centered, shared decision-making.

CLINICAL CASE: EXERTIONAL ANGINA

While climbing 4 flights of stairs, a 55-year-old man noticed tightness in his chest, which lasted for 5 minutes and resolved spontaneously. Several weeks later, when visiting his primary care physician, he mentioned the episode. He had had no symptoms in the interim, but the physician ordered an exercise stress test.

Six minutes into a standard Bruce protocol, the patient experienced the same chest tightness, accompanied by 1-mm ST-segment depressions in leads II, III, and aVF. He was then referred to a cardiologist, who recommended catheterization.

Catheterization demonstrated a 95% stenosis of the right coronary artery with nonsignificant stenoses of the left anterior descending and circumflex arteries. A drug-eluting stent was placed in the right coronary artery, with no residual stenosis.

Did this intervention likely prevent an MI and perhaps save the man’s life?

HOW MYOCARDIAL INFARCTION HAPPENS

Understanding the pathogenesis of MI is critical to having realistic expectations of the benefits of stent placement.

Doctors often describe coronary atherosclerosis as a plumbing problem, where deposits of cholesterol and fat build up in arterial walls, clogging the pipes and eventually causing a heart attack. This analogy, which has been around since the 1950s, is easy for patients to grasp and has been popularized in the press and internalized by the public. As one patient with a 95% stenosis put it, “I was 95% dead.” In that model, angioplasty and stenting can resolve the blockage and “fix” the problem, much as a plumber can clear your pipes with a Roto-Rooter.

Despite the visual appeal of this model,1 it doesn’t accurately convey what we know about the pathophysiology of coronary artery disease. Instead of a gradual buildup of fatty deposits, low-density lipoprotein cholesterol particles infiltrate arterial walls and trigger an inflammatory reaction as they are engulfed by macrophages, leading to a cascade of cytokines and recruitment of more inflammatory cells.2 This immune response can eventually cause the rupture of the plaque’s fibrous cap, triggering thrombosis and infarction, often at a site of insignificant stenosis.

In this new model, coronary artery disease is primarily a problem of inflammation distributed throughout the vasculature, rather than a mechanical problem localized to the site of a significant stenosis.

Significant stenosis does not equal unstable plaque

Not all plaques are equally likely to rupture. Stable plaques tend to be long-standing and calcified, with a thick fibrous cap. A stable plaque causing a 95% stenosis may cause symptoms with exertion, but it is unlikely to cause infarction.3 Conversely, rupture-prone plaques may cause little stenosis, but a large and dangerous plaque may be lurking beneath the thin fibrous cap.