Video-Based Coaching for Dermatology Resident Surgical Education

To the Editor:

Video-based coaching (VBC) involves a surgeon recording a surgery and then reviewing the video with a surgical coach; it is a form of education that is gaining popularity among surgical specialties.1 Video-based education is underutilized in dermatology residency training.2 We conducted a pilot study at our dermatology residency program to evaluate the efficacy and feasibility of VBC.

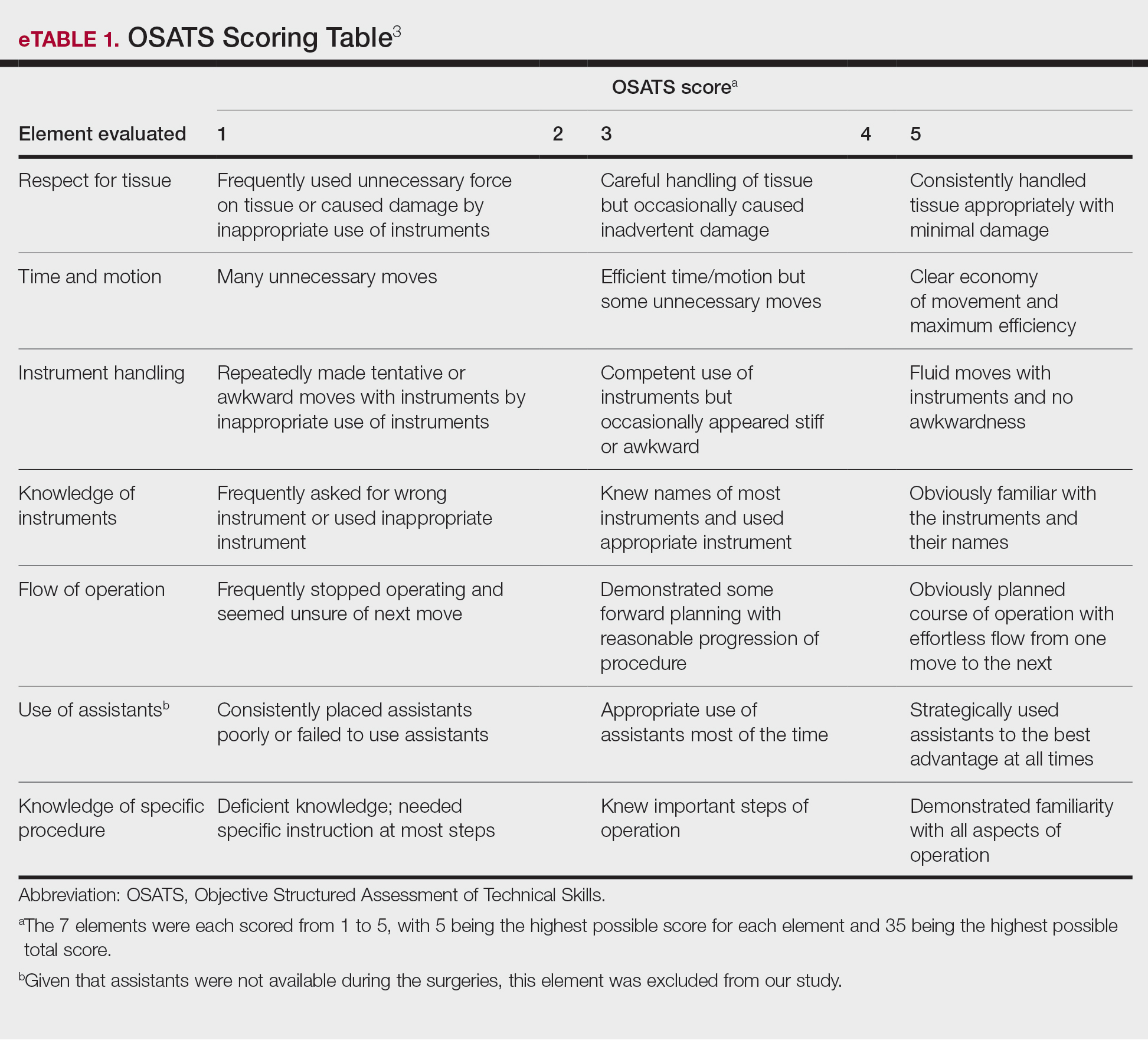

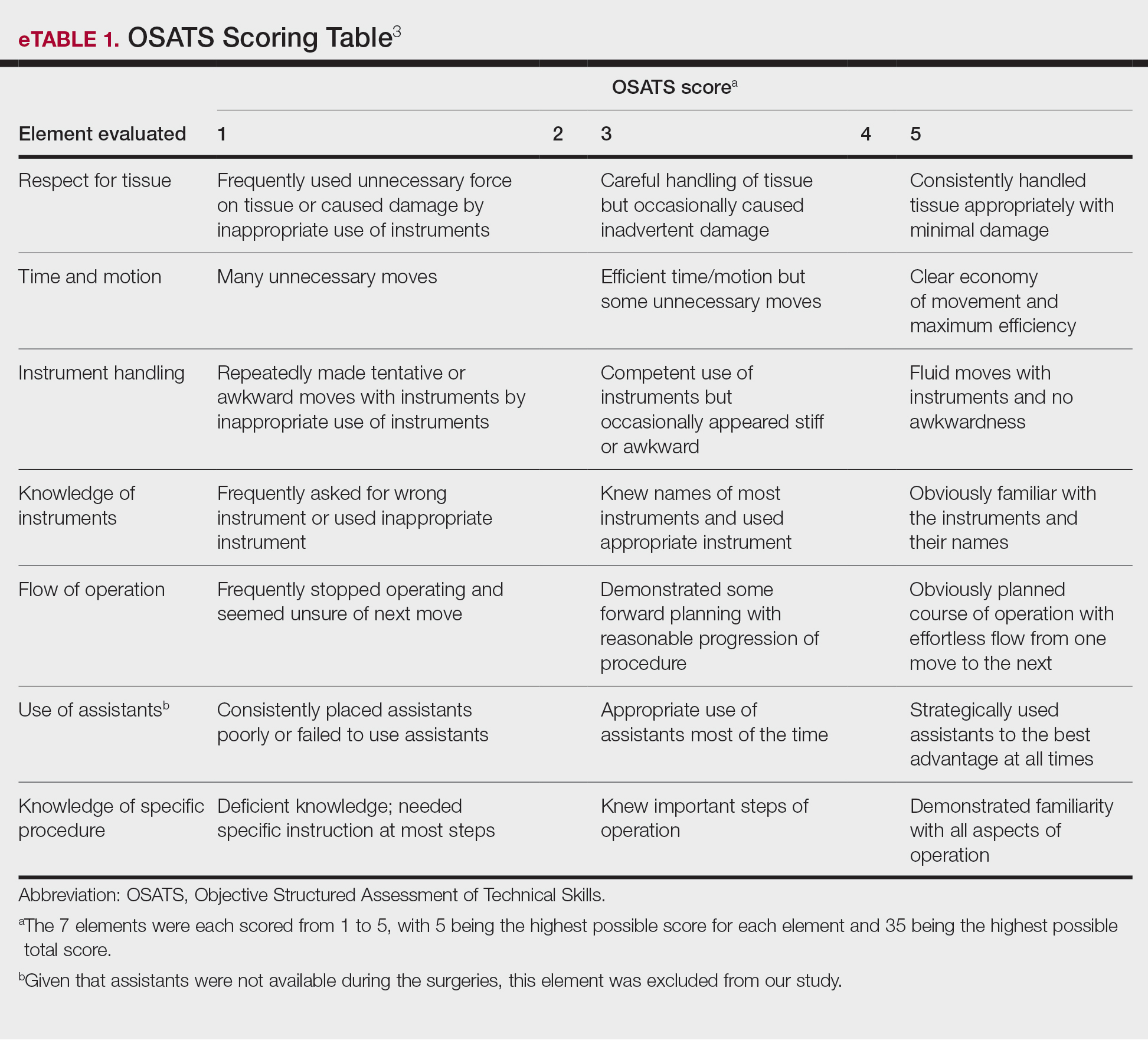

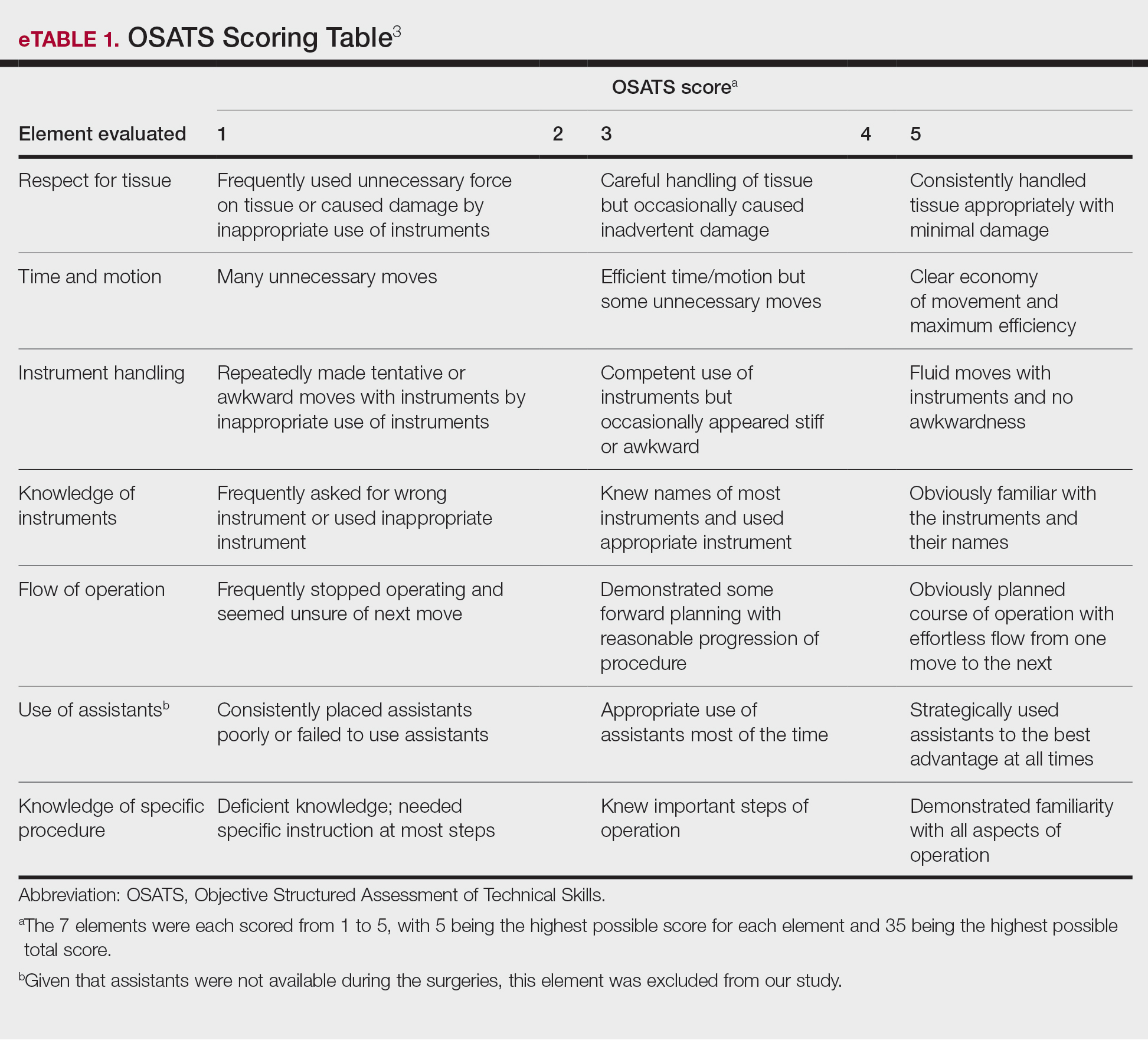

The University of Texas at Austin Dell Medical School institutional review board approved this study. All 4 first-year dermatology residents were recruited to participate. Participants completed a prestudy survey assessing their surgical experience, confidence in performing surgery, and attitudes toward VBC. Each participant used a head-mounted point-of-view camera to record themselves performing a wide local excision on the trunk or extremities of a live patient. Participants then reviewed the recording on their own and scored themselves using the Objective Structured Assessment of Technical Skills (OSATS), a validated tool for assessing surgical skills (eTable 1).3 Each OSATS element is scored from 1 to 5, with 5 being the highest possible score. Because no assistants participated in the surgeries, the element assessing use of assistants was excluded, leaving 6 scored elements with a maximum possible total of 30 and a minimum of 6. After scoring themselves, participants had a 1-on-1 coaching session with a fellowship-trained dermatologic surgeon (M.F. or T.H.) via online teleconferencing.
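The scoring arithmetic above can be sketched as follows. This is a minimal illustration, not the authors' instrument: the element names are assumed from the commonly published OSATS global rating scale, the exact wording in eTable 1 may differ, and the helper `osats_total` is hypothetical.

```python
# Hypothetical sketch of the modified OSATS total described above.
# Element names are assumed from the commonly published OSATS global
# rating scale; the wording in eTable 1 may differ.
OSATS_ELEMENTS = (
    "respect for tissue",
    "time and motion",
    "instrument handling",
    "knowledge of instruments",
    "use of assistants",
    "flow of operation and forward planning",
    "knowledge of specific procedure",
)

# No assistants took part in the excisions, so this element is dropped.
EXCLUDED = {"use of assistants"}
SCORED = tuple(e for e in OSATS_ELEMENTS if e not in EXCLUDED)

MIN_TOTAL = 1 * len(SCORED)  # every scored element rated 1
MAX_TOTAL = 5 * len(SCORED)  # every scored element rated 5


def osats_total(ratings):
    """Sum the 1-5 ratings for the scored elements.

    `ratings` maps element name -> integer rating. A missing scored
    element raises KeyError; a rating outside 1-5 raises ValueError.
    Ratings for excluded elements, if present, are ignored.
    """
    total = 0
    for element in SCORED:
        score = ratings[element]
        if not 1 <= score <= 5:
            raise ValueError(f"{element}: rating {score} is outside 1-5")
        total += score
    return total
```

Rating every scored element 1 gives the minimum of 6, and rating every element 5 gives the maximum of 30; a rating supplied for the excluded element is simply ignored.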

During the coaching session, participants and coaches reviewed the video together. The surgical coaches also scored the residents using the OSATS, and residents and coaches then discussed how the resident could improve, using the OSATS scores as a guide. Afterward, residents completed a poststudy survey assessing their surgical experience, confidence in performing surgery, and attitudes toward VBC. Descriptive statistics were reported.

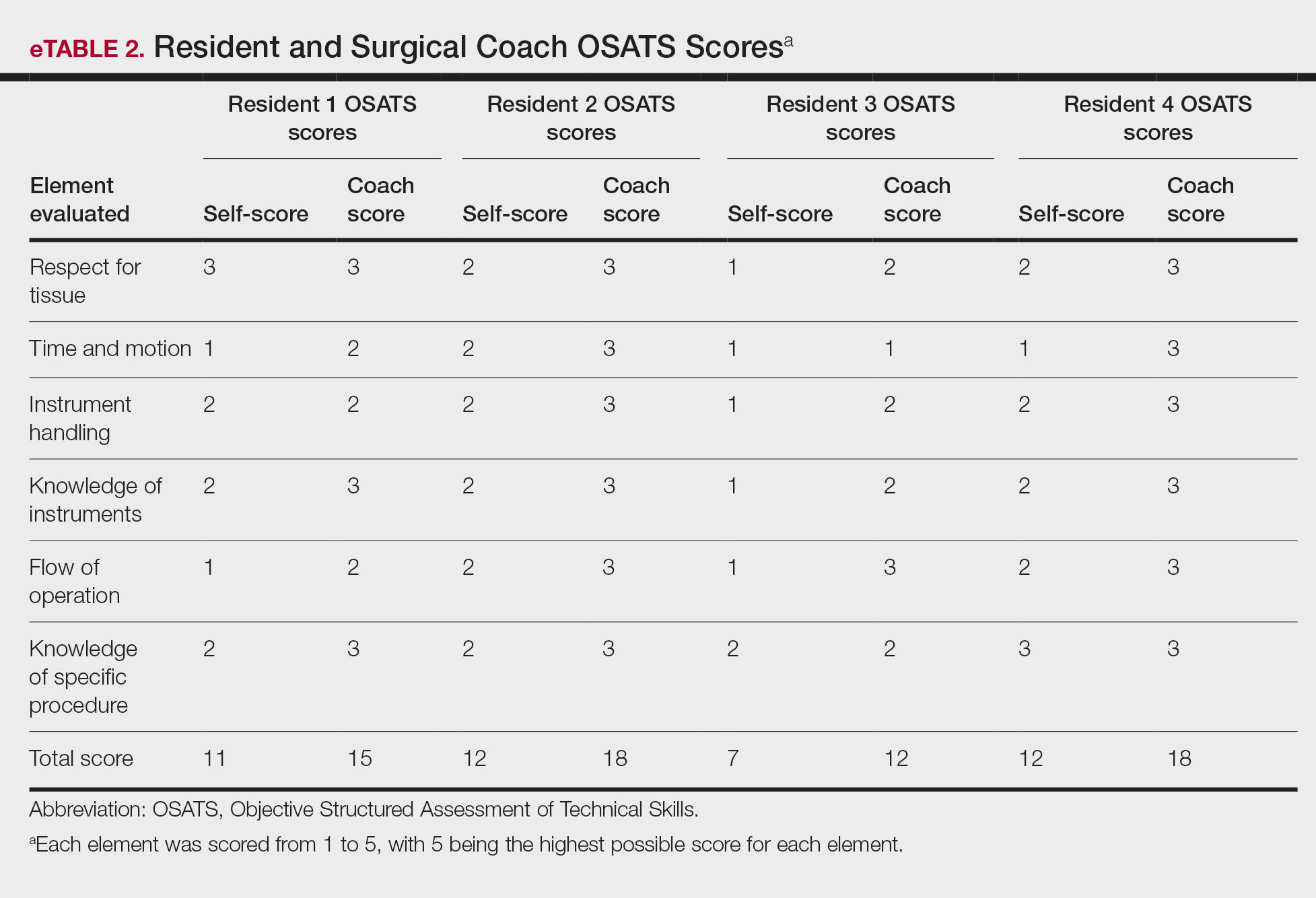

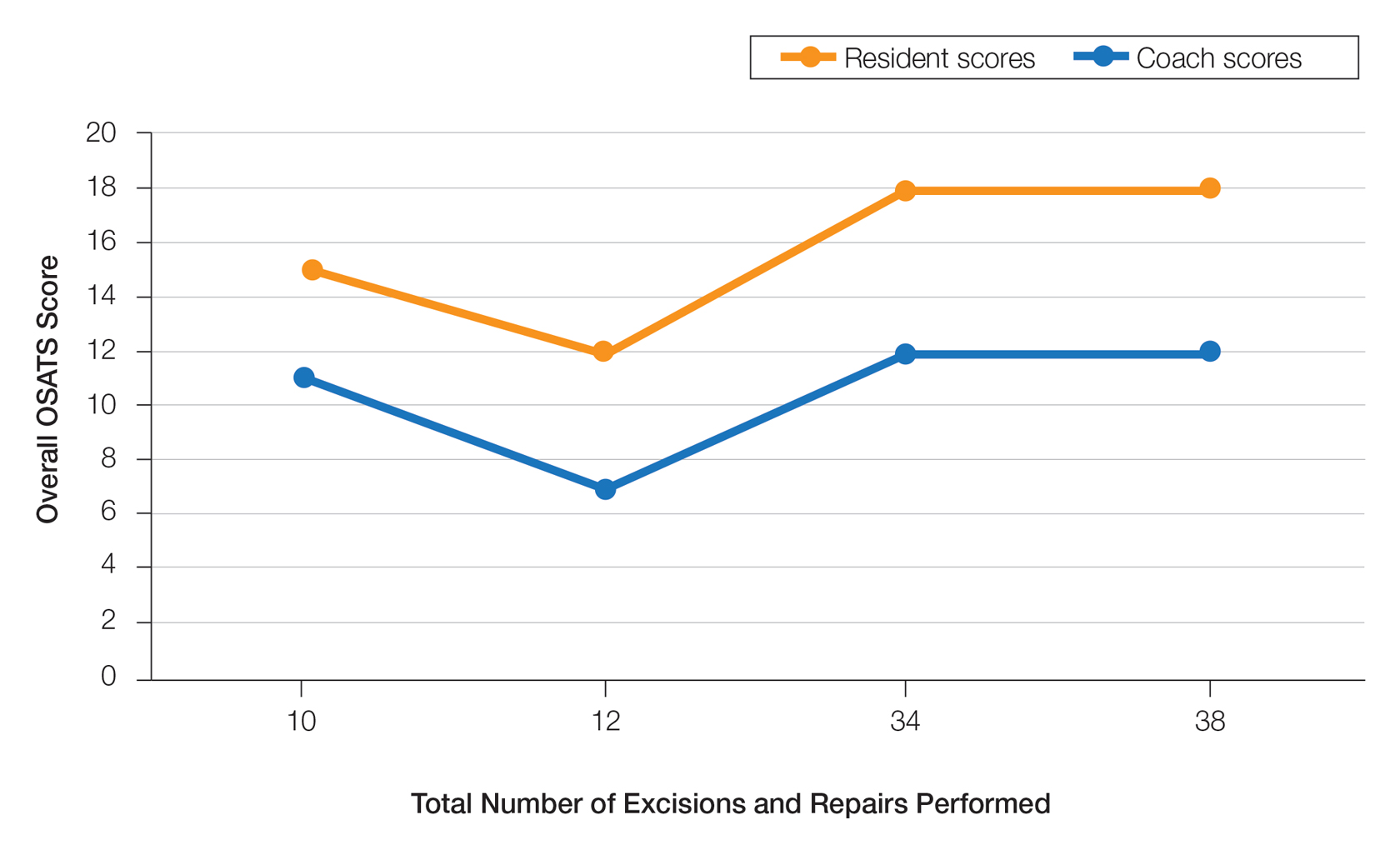

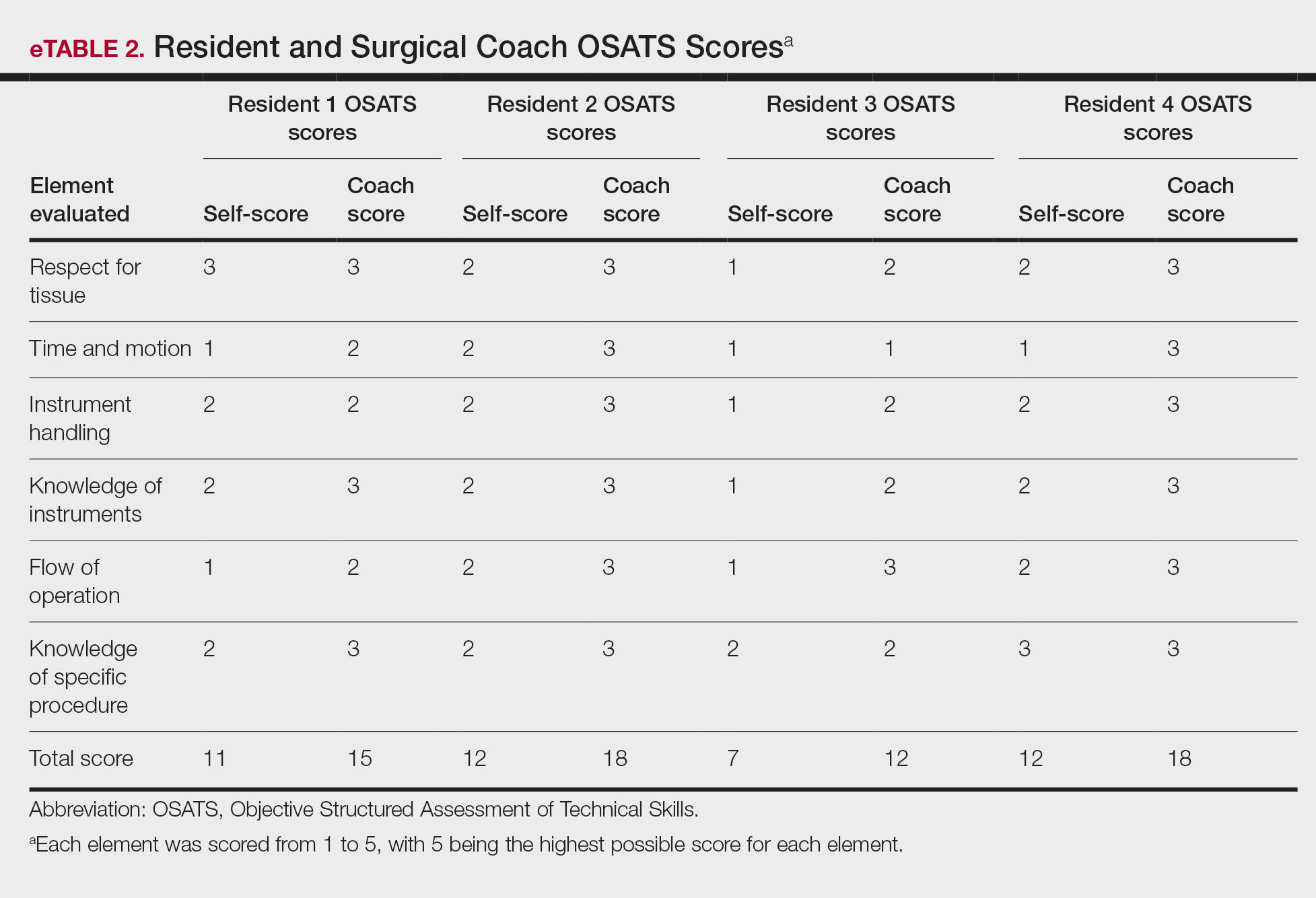

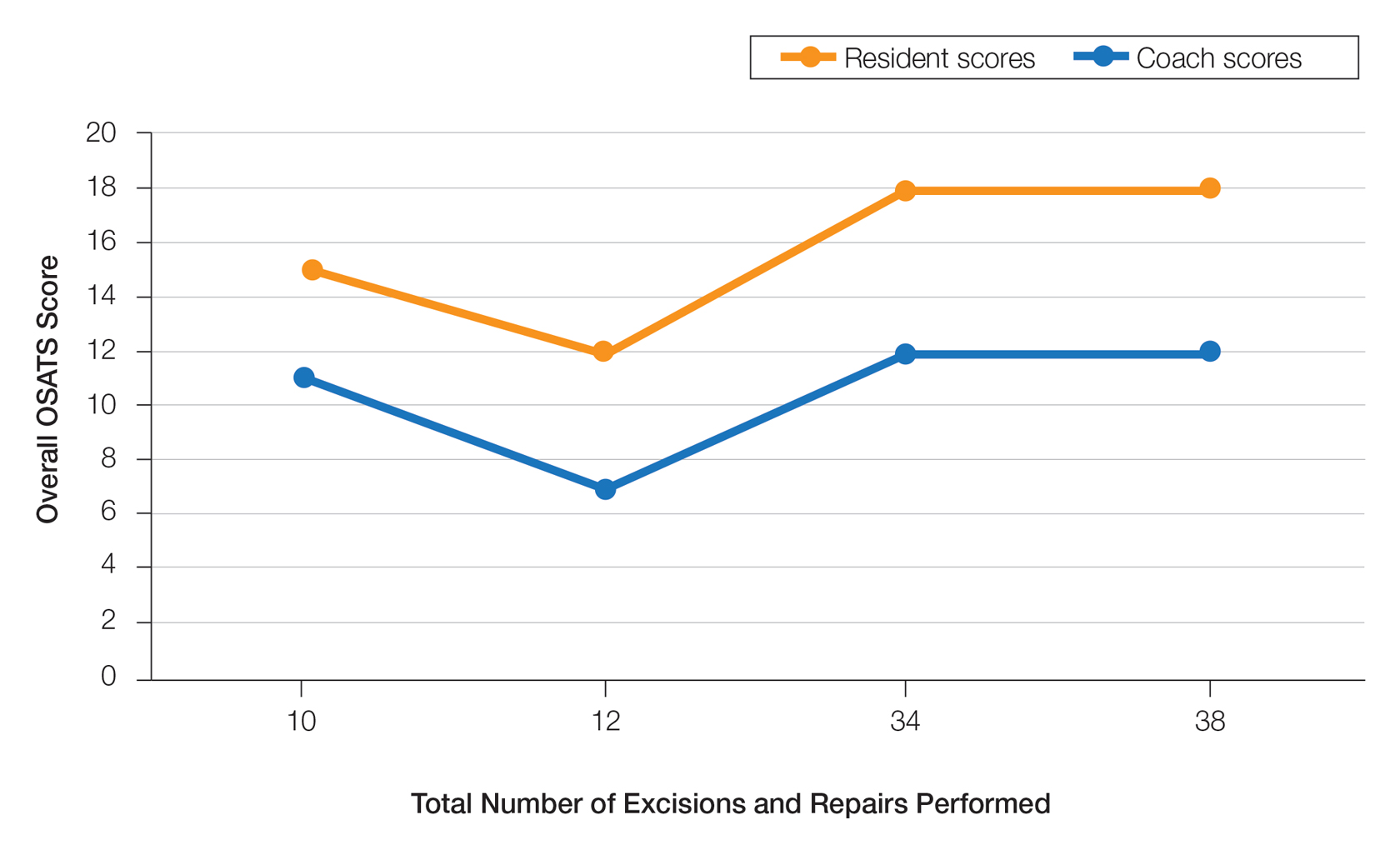

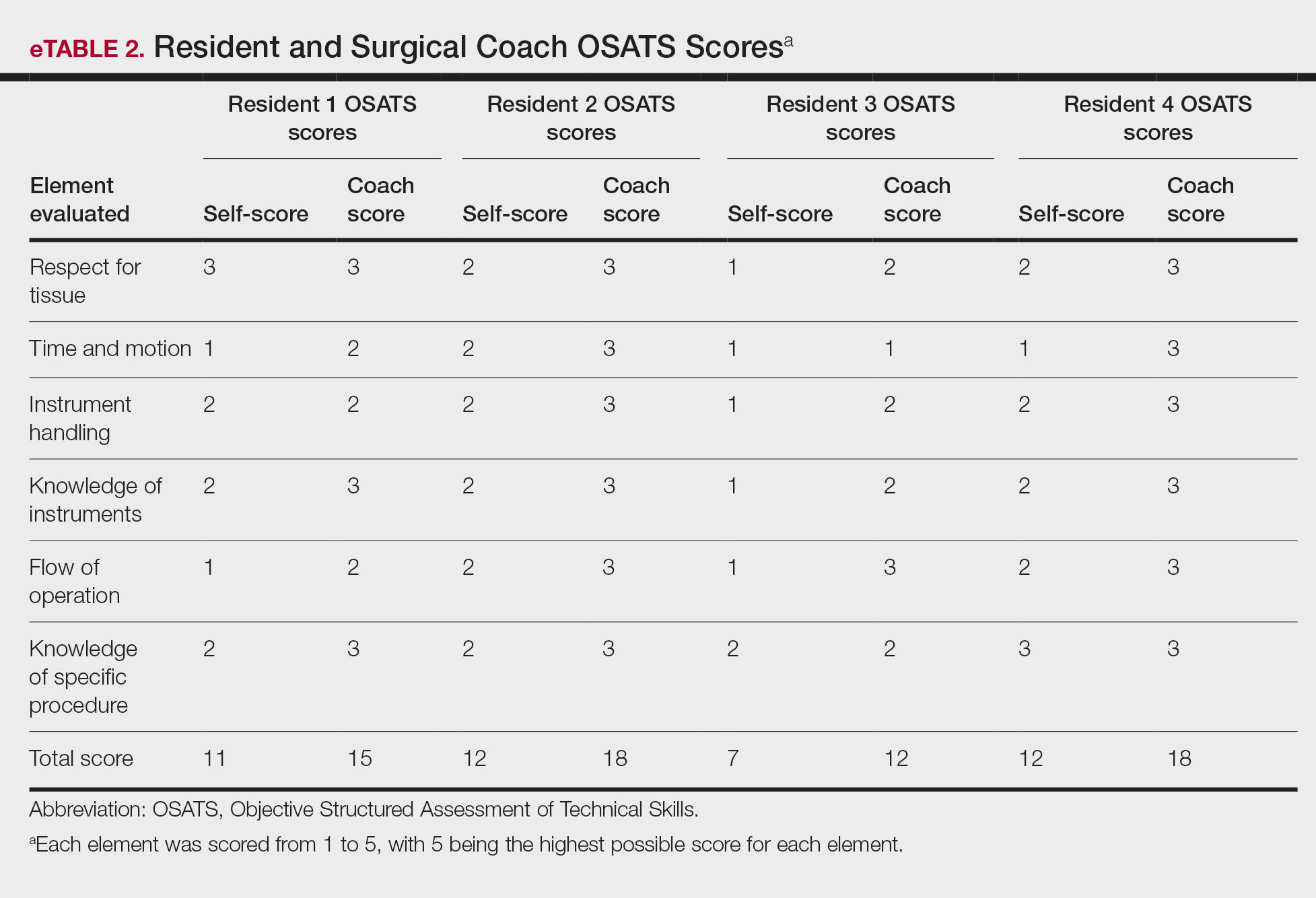

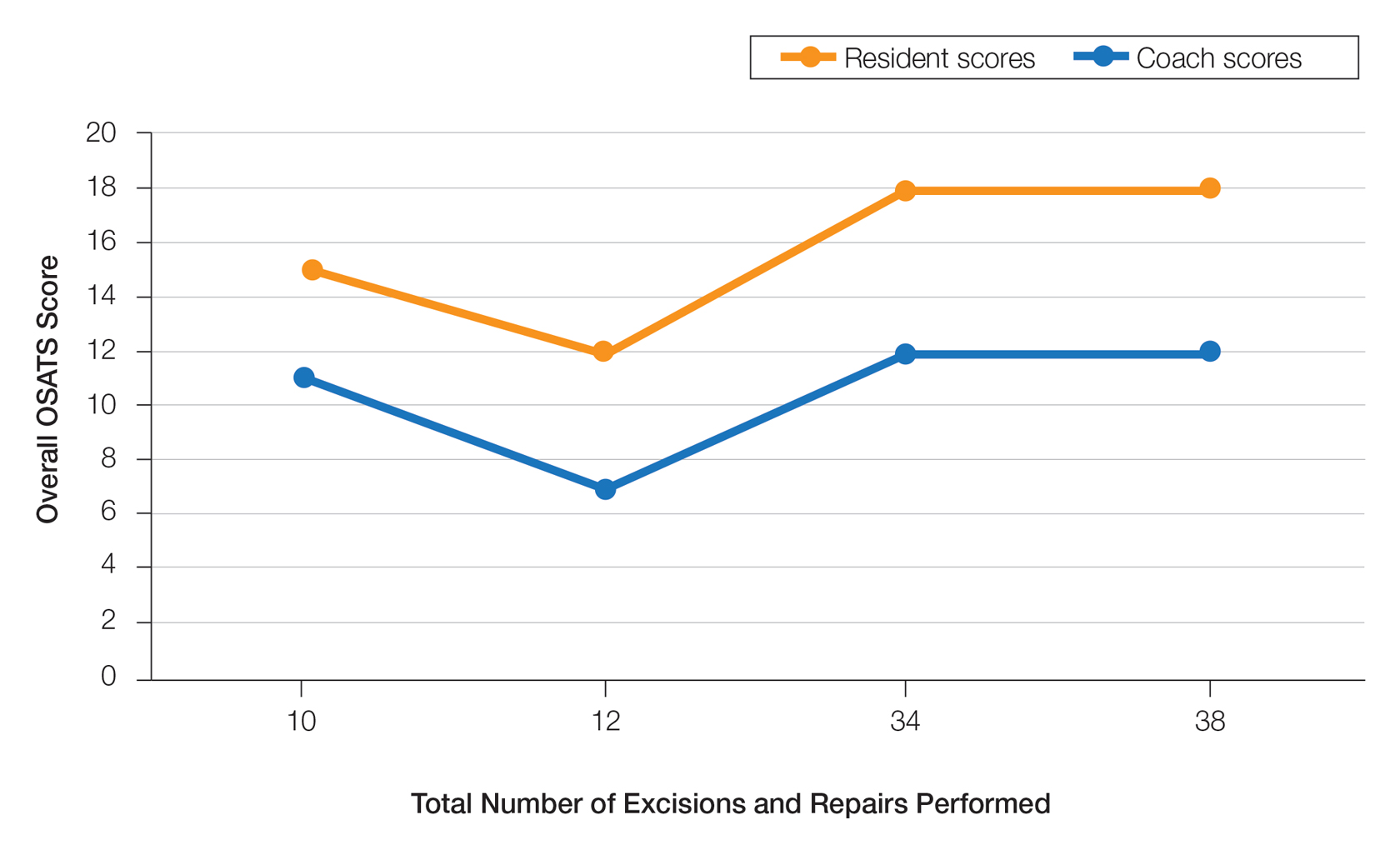

On average, residents spent 31.3 minutes reviewing their own surgeries and scoring themselves. Coaching sessions, including the time coaches spent scoring, averaged 13.8 minutes. Residents scored themselves an average of 5.25 points lower than the surgical coaches did (eTable 2): the mean self-assigned total score was 10.5, compared with a mean coach-assigned score of 15.75. Residents with greater surgical experience tended to have higher OSATS scores (Figure). After the coaching session, 3 of 4 residents reported feeling more confident in their surgical skills. All 4 residents felt more confident in assessing their surgical skills, felt that VBC was an effective teaching method, and agreed that VBC should continue as part of their residency training.
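Because averaging is linear, the mean per-resident discrepancy equals the difference between the two mean scores, so the reported figures can be checked against each other without the individual scores in eTable 2. Here $c_i$ and $s_i$ (notation introduced only for this check) denote the coach-assigned and self-assigned totals for resident $i$, with $n=4$:

$$\bar d=\frac{1}{n}\sum_{i=1}^{n}(c_i-s_i)=\bar c-\bar s=15.75-10.5=5.25$$

Equivalently, the 4 coach-assigned totals sum to $4\times15.75=63$ and the self-assigned totals to $4\times10.5=42$, a combined difference of 21 points, or 5.25 per resident.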

Video-based coaching has the potential to provide several benefits for dermatology trainees. Intraoperative feedback often can be distracting and incomplete; video review instead allows the surgeon to focus on performing the surgery and later focus on learning while reviewing the video.1,4 Feedback also can be more comprehensive and can be delivered without time constraints, without disrupting clinic flow, and without concern that the patient will overhear comments.3 Although independent video review without coaching can improve surgical skills, adding coaching offers even greater potential educational benefit.4 During the COVID-19 pandemic, VBC also allowed coaches to provide feedback without additional in-person exposure. We used dermatologic surgery faculty as coaches, but this training format also could be used by general dermatology faculty.

Another goal of VBC is to enhance a trainee's ability to perform self-directed learning, which requires accurate self-assessment.4 Accurately assessing one's own strengths empowers a trainee to act with appropriate confidence, while understanding one's own weaknesses allows a trainee to balance confidence and caution in daily practice.5 Interestingly, all residents in our study scored themselves lower than their coaches did, yet after a single coaching session, 3 of 4 residents reported greater confidence in their surgical skills.

Time constraints can be a barrier to surgical coaching.4 In our study, VBC required little time: residents spent an average of 31.3 minutes reviewing and scoring their own surgeries, and coaching sessions averaged 13.8 minutes. Increasing the video playback speed allowed coaches to evaluate resident surgeries efficiently without compromising their ability to provide comprehensive feedback. Conducting the feedback sessions virtually also made scheduling easier for trainees and coaches.

Our pilot study showed that VBC is relatively easy to implement in a dermatology residency setting, relies on relatively low-cost technology, and offers a learning format that residents found effective. Video-based coaching requires little time from trainees or coaches and has the potential to enhance surgical confidence. This study is limited by its small sample size of 4 residents. Future studies should include follow-up recordings to assess whether VBC improves surgical skills.

- Greenberg CC, Dombrowski J, Dimick JB. Video-based surgical coaching: an emerging approach to performance improvement. JAMA Surg. 2016;151:282-283.

- Dai J, Bordeaux JS, Miller CJ, et al. Assessing surgical training and deliberate practice methods in dermatology residency: a survey of dermatology program directors. Dermatol Surg. 2016;42:977-984.

- Chitgopeker P, Sidey K, Aronson A, et al. Surgical skills video-based assessment tool for dermatology residents: a prospective pilot study. J Am Acad Dermatol. 2020;83:614-616.

- Bull NB, Silverman CD, Bonrath EM. Targeted surgical coaching can improve operative self-assessment ability: a single-blinded nonrandomized trial. Surgery. 2020;167:308-313.

- Eva KW, Regehr G. Self-assessment in the health professions: a reformulation and research agenda. Acad Med. 2005;80(10 suppl):S46-S54.

PRACTICE POINTS

- Video-based coaching (VBC) for surgical procedures is an emerging form of medical education in which a coach provides thoughtful, in-depth feedback while reviewing a recording with the surgeon in a private setting. This format may be useful for teaching dermatology residents, with dermatology faculty members serving as coaches.

- Our pilot study showed that VBC can be implemented easily with minimal time investment from both the surgeon and the coach. Dermatology residents felt that VBC was an effective teaching method and that it should become a formal part of their education.

Improving Diagnostic Accuracy in Skin of Color Using an Educational Module

Dermatologic disparities disproportionately affect patients with skin of color (SOC). Two studies of medical students found that, early in training, diagnostic accuracy for common skin conditions was lower when the conditions were shown in darker skin than in lighter skin.1,2 This knowledge gap may be attributable to the underrepresentation of SOC in dermatologic textbooks, journals, and educational curricula.3-6 Dermatologists, as well as physicians in other specialties and ancillary health care workers who treat or triage dermatologic diseases, need to recognize common skin conditions presenting in SOC. We sought to evaluate the effectiveness of a focused educational module for improving diagnostic accuracy and confidence in treating patients with SOC among interprofessional health care providers.

Methods

Interprofessional health care providers (medical students, residents/fellows, attending physicians, advanced practice providers [APPs], and nurses) practicing across various medical specialties at The University of Texas at Austin Dell Medical School and Ascension Medical Group (both in Austin, Texas) were invited to participate in an institutional review board–exempt study of a virtual SOC educational module from February through May 2021. The 1-hour module consisted of a pretest, a 15-minute lecture, and an immediate posttest; a follow-up posttest was administered 3 months later. All tests consisted of the same 40 multiple-choice questions covering 20 dermatologic conditions, each portrayed once in lighter skin and once in darker skin using images from VisualDx.com, and participants were asked to identify the condition in each photograph. Questions appeared one at a time in randomized order, and answers could not be changed once submitted.

For analysis, the dermatologic conditions were grouped into 4 categories: cancerous, infectious, inflammatory, and SOC-associated conditions. Cancerous conditions included basal cell carcinoma, squamous cell carcinoma, and melanoma. Infectious conditions included herpes zoster, tinea corporis, tinea versicolor, staphylococcal scalded skin syndrome, and verruca vulgaris. Inflammatory conditions included acne, atopic dermatitis, pityriasis rosea, psoriasis, seborrheic dermatitis, contact dermatitis, lichen planus, and urticaria. Skin of color–associated conditions included hidradenitis suppurativa, acanthosis nigricans, keloid, and melasma. Two additional questions using a 5-point Likert scale assessed confidence in diagnosing conditions in lighter and darker skin.
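The scoring scheme described above (20 conditions, each shown in lighter and darker skin, grouped into 4 categories) can be sketched as follows. The category lists are copied from the text; the input format (a mapping from each condition and skin type to whether the answer was correct) and the function `score_test` are hypothetical illustrations, not the study's scoring software. Per-skin-type totals fall on a 0-to-20 scale, which appears consistent with the mean scores reported in the Results (for example, 13.6 vs 11.3).

```python
# Hypothetical scoring sketch; category membership is copied from the Methods.
CATEGORIES = {
    "cancerous": ["basal cell carcinoma", "squamous cell carcinoma", "melanoma"],
    "infectious": [
        "herpes zoster", "tinea corporis", "tinea versicolor",
        "staphylococcal scalded skin syndrome", "verruca vulgaris",
    ],
    "inflammatory": [
        "acne", "atopic dermatitis", "pityriasis rosea", "psoriasis",
        "seborrheic dermatitis", "contact dermatitis", "lichen planus", "urticaria",
    ],
    "SOC-associated": [
        "hidradenitis suppurativa", "acanthosis nigricans", "keloid", "melasma",
    ],
}
CONDITION_TO_CATEGORY = {c: cat for cat, conds in CATEGORIES.items() for c in conds}
SKIN_TYPES = ("lighter", "darker")


def score_test(correct):
    """Score one participant's test.

    `correct` maps (condition, skin_type) -> True/False for all 40 items.
    Returns the number correct per skin type (0-20) and the percent correct
    for each (category, skin_type) pair.
    """
    expected = {(c, s) for c in CONDITION_TO_CATEGORY for s in SKIN_TYPES}
    if set(correct) != expected:
        raise ValueError("expected exactly one answer per condition and skin type")

    totals = {s: 0 for s in SKIN_TYPES}
    by_category = {(cat, s): 0 for cat in CATEGORIES for s in SKIN_TYPES}
    for (condition, skin), is_correct in correct.items():
        if is_correct:
            totals[skin] += 1
            by_category[(CONDITION_TO_CATEGORY[condition], skin)] += 1

    # Categories have different sizes (3, 5, 8, 4), so report percentages.
    percents = {
        (cat, s): 100 * n / len(CATEGORIES[cat])
        for (cat, s), n in by_category.items()
    }
    return {"totals": totals, "percent_by_category": percents}
```

Reporting category results as percentages rather than raw counts keeps the 3-item cancerous category comparable with the 8-item inflammatory category.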

The prerecorded 15-minute video lecture was delivered by 2 dermatology residents (P.L.K. and C.P.). Its learning objectives covered morphologic differences between lighter and darker skin, comparisons of common dermatologic diseases in lighter and darker skin, diseases that more commonly affect patients with SOC, and treatment considerations for skin and hair conditions in patients with SOC. Photographs from the diagnostic accuracy assessment were not reused in the lecture. Detailed explanations of the morphology, diagnostic pearls, and treatment options for all tested conditions were provided to participants after they completed the 3-month posttest.

Statistical Analysis—Test scores were compared between conditions shown in lighter and darker skin types and from the pretest to the immediate posttest and 3-month posttest. Multiple linear regression was used to assess for intervention effects on lighter and darker skin scores controlling for provider type and specialty. All tests were 2-sided with significance at P<.05. Analyses were conducted using Stata 17.
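The adjustment described above can be illustrated with an ordinary least-squares sketch in Python using NumPy. This is not the authors' Stata 17 model, whose exact specification is not reported: the dummy coding, the choice of reference levels (alphabetically first), and the function names are assumptions, and a fuller analysis of pretest and posttest scores from the same participants would also need to account for repeated measures. Because the text describes effects on lighter and darker skin scores, a model like this would be fit once for each.

```python
import numpy as np


def dummy_columns(values, prefix):
    """Indicator columns for every level except the first (reference) level."""
    levels = sorted(set(values))
    names = [f"{prefix}={lvl}" for lvl in levels[1:]]
    cols = [[1.0 if v == lvl else 0.0 for v in values] for lvl in levels[1:]]
    return names, cols


def adjusted_ols(score, post, provider_type, specialty):
    """Fit score ~ 1 + post + provider_type + specialty by least squares.

    `post` is 0 for pretest and 1 for posttest observations. Returns a dict
    mapping coefficient name -> estimate. Hypothetical helper for illustration.
    """
    n = len(score)
    if not (len(post) == len(provider_type) == len(specialty) == n):
        raise ValueError("all inputs must have the same length")

    names = ["intercept", "post"]
    cols = [[1.0] * n, [float(p) for p in post]]
    for values, prefix in ((provider_type, "provider"), (specialty, "specialty")):
        dummy_names, dummy_cols = dummy_columns(values, prefix)
        names += dummy_names
        cols += dummy_cols

    X = np.array(cols).T  # design matrix, shape (n, number of coefficients)
    y = np.asarray(score, dtype=float)
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    return {name: float(c) for name, c in zip(names, coef)}
```

Under this additive model, the `post` coefficient is the average pretest-to-posttest change among participants of the same provider type and specialty, which is the sense in which the intervention effect is estimated "controlling for" those covariates.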

Results

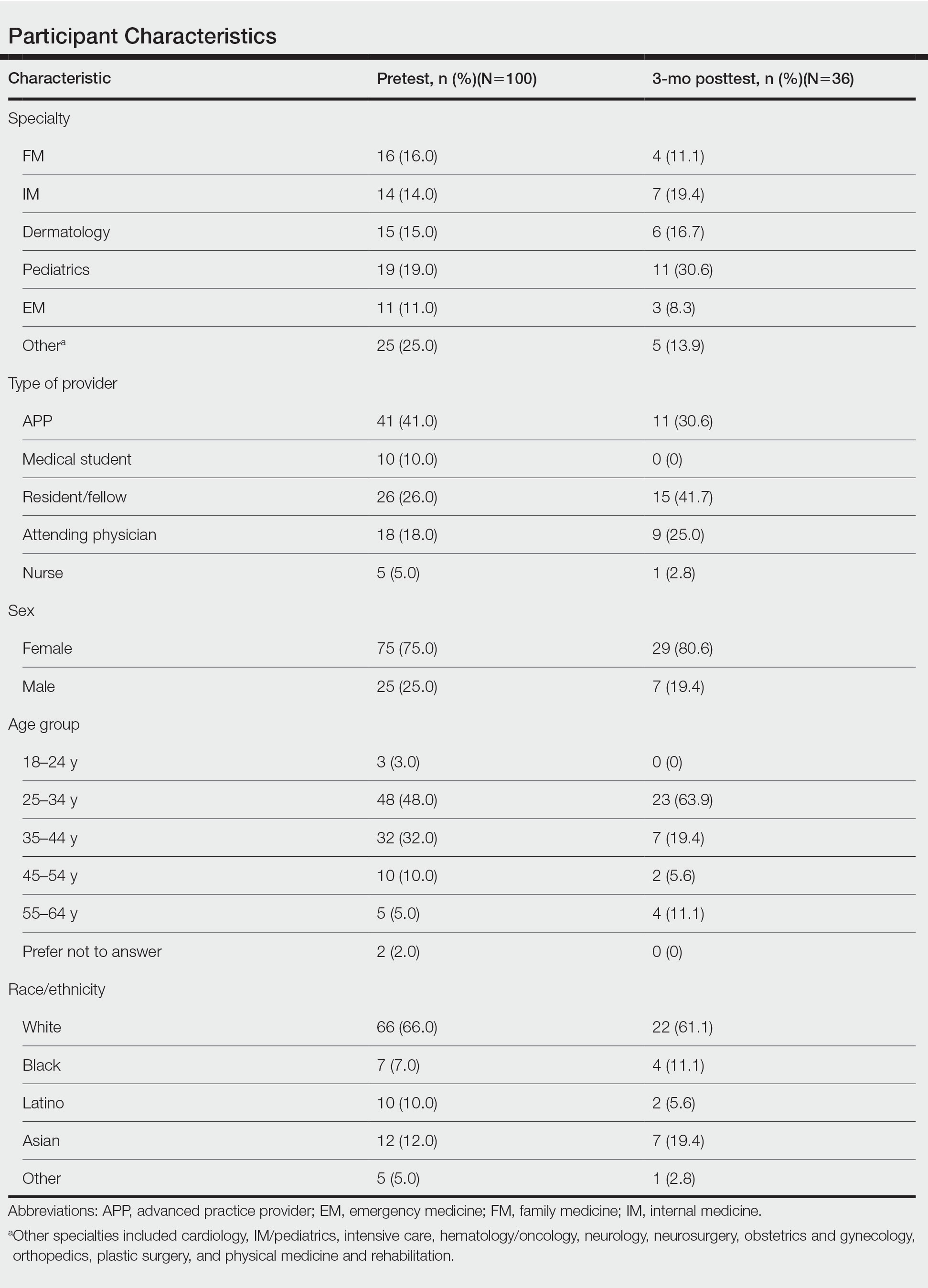

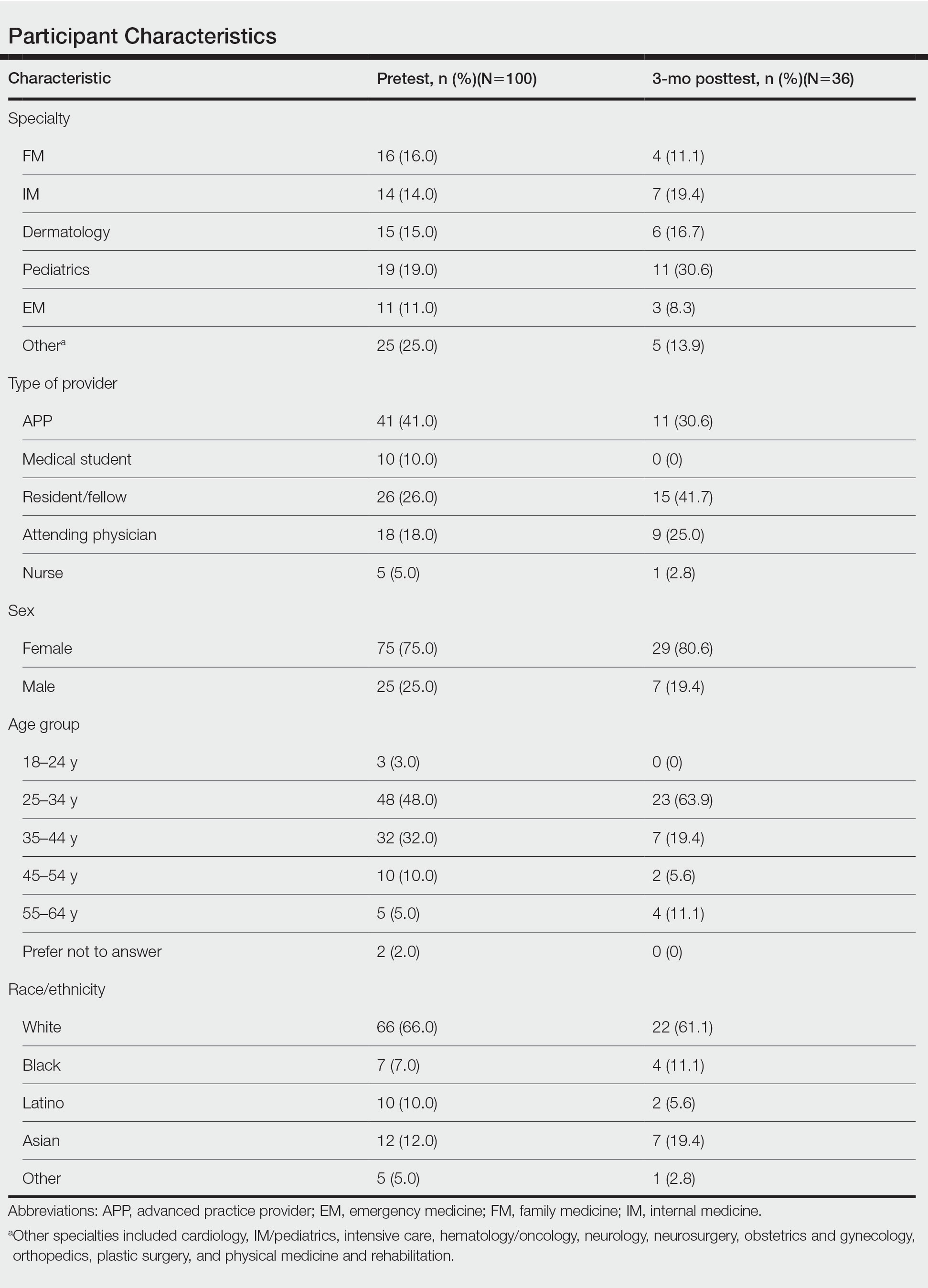

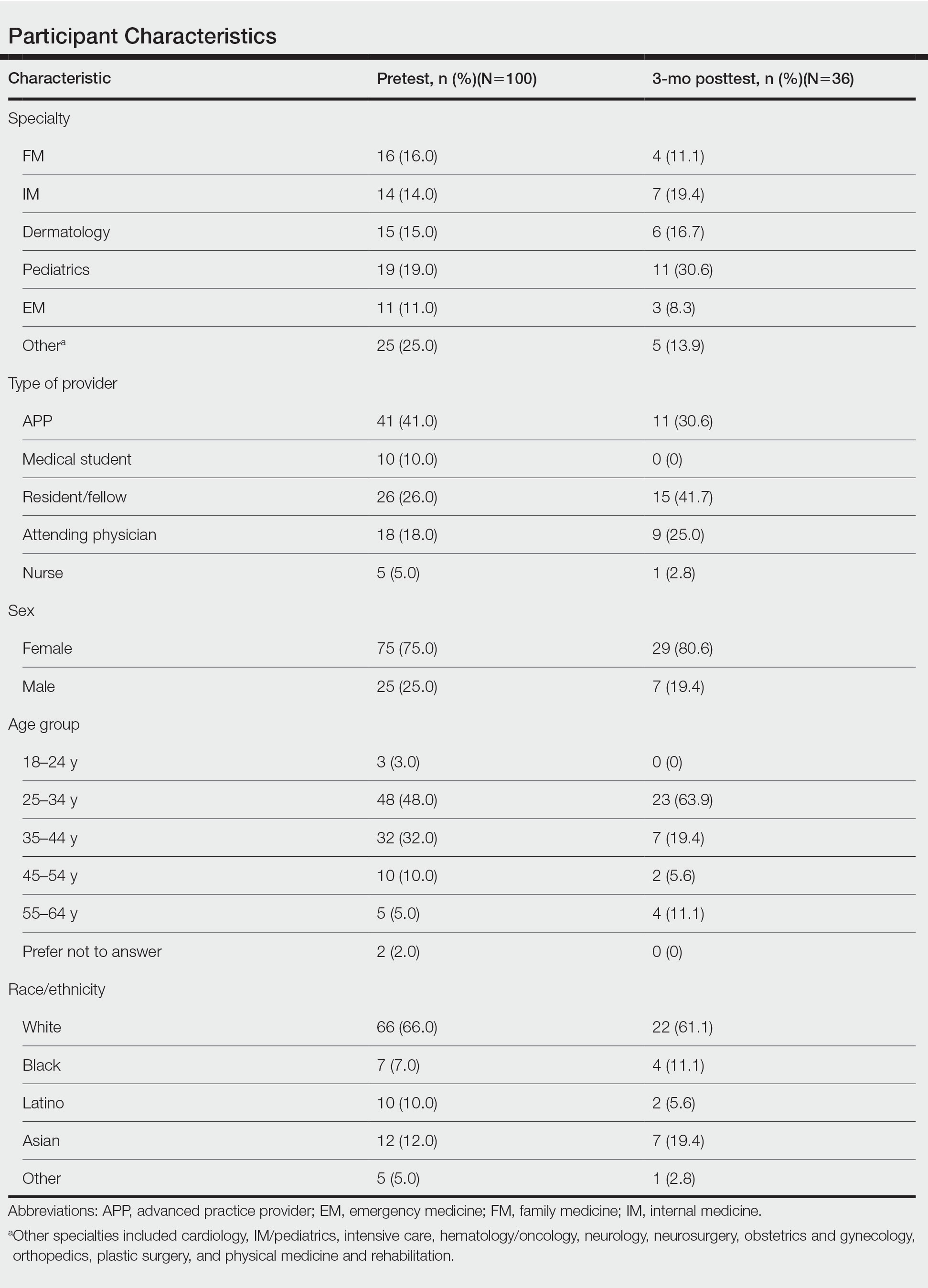

One hundred participants completed the pretest and immediate posttest, 36 of whom also completed the 3-month posttest (Table). There was no significant difference in baseline characteristics between the pretest and 3-month posttest groups.

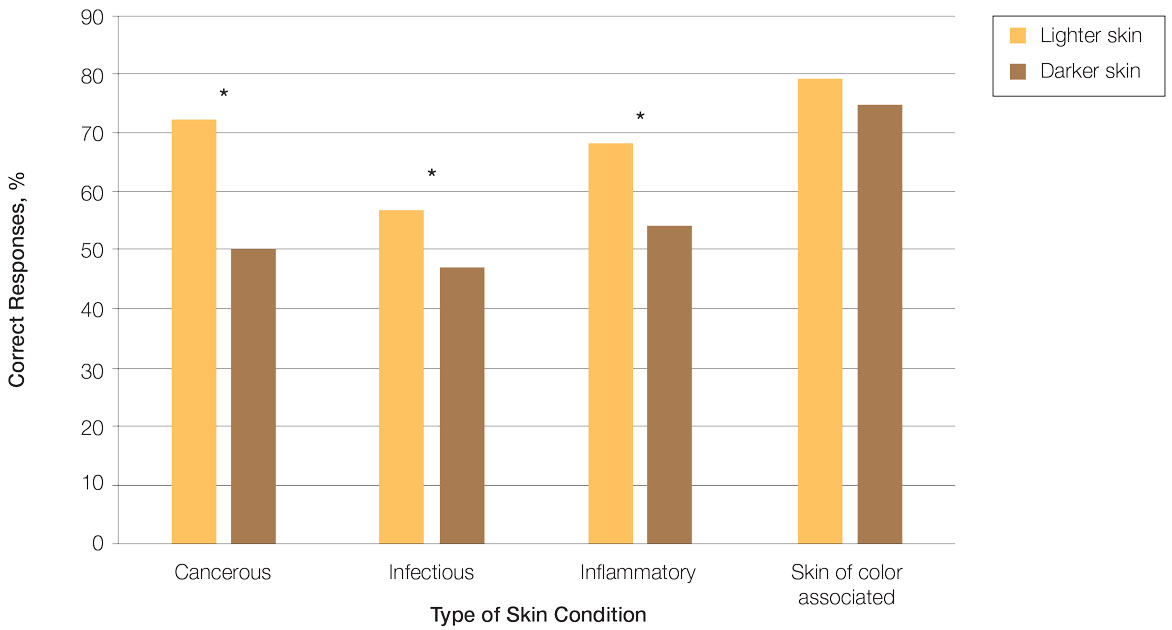

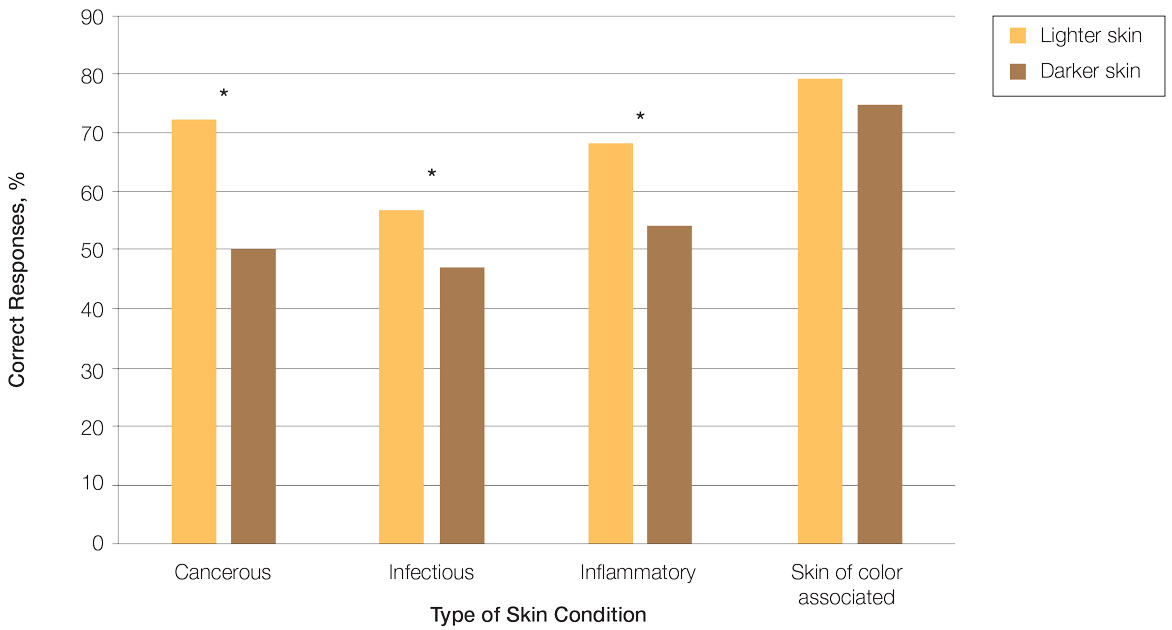

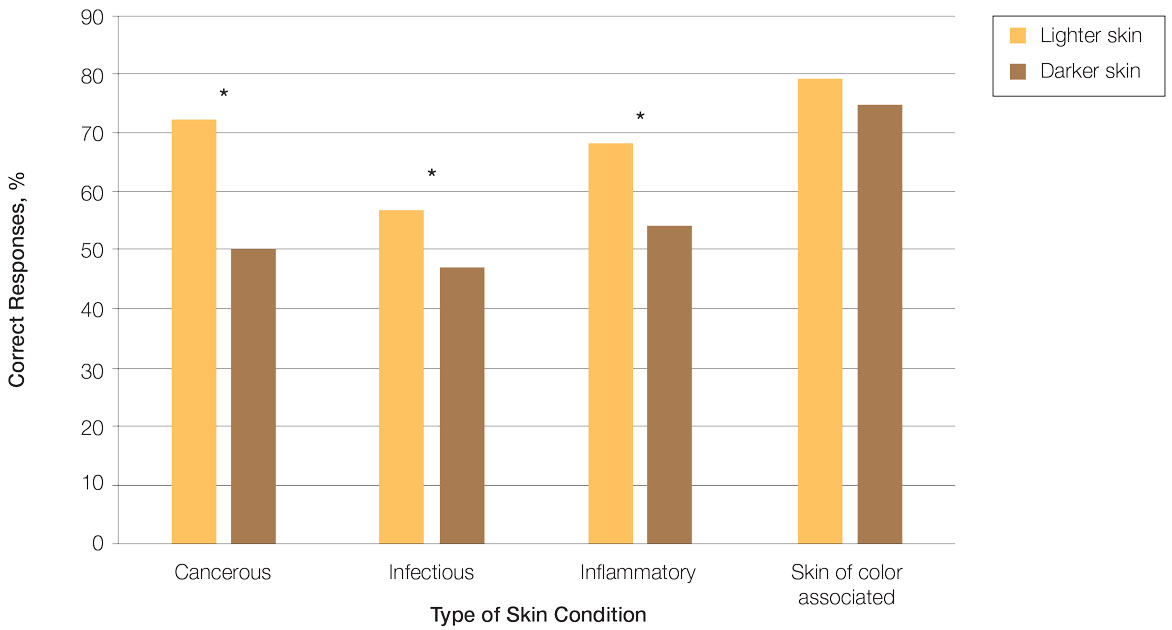

Test scores were associated with provider type and specialty but not with age, sex, or race/ethnicity. Specializing in dermatology and being a resident or attending physician were independently associated with higher test scores. At pretest, mean diagnostic accuracy and confidence scores were higher for conditions shown in lighter skin than for those shown in darker skin (13.6 vs 11.3 and 2.7 vs 1.9, respectively; both P<.001). Pretest diagnostic accuracy was significantly higher in lighter skin than in darker skin for cancerous, inflammatory, and infectious conditions (72% vs 50%, 68% vs 55%, and 57% vs 47%, respectively; P<.001 for all)(Figure 1). For SOC-associated conditions, scores did not differ significantly between lighter and darker skin (79% vs 75%; P=.059).

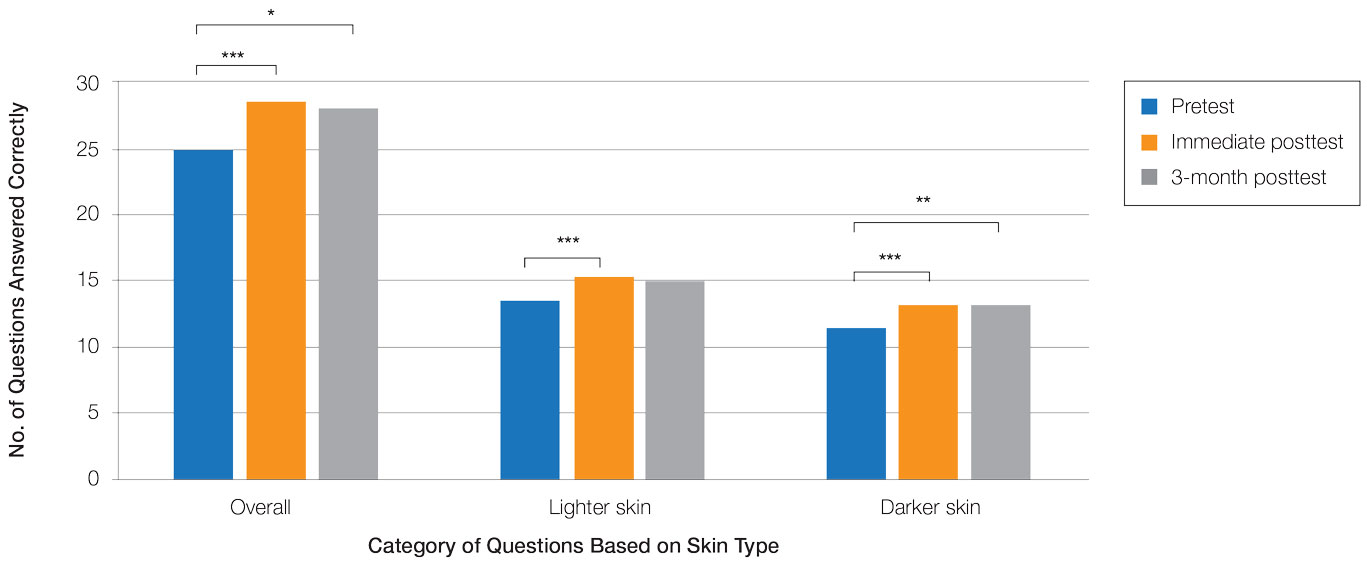

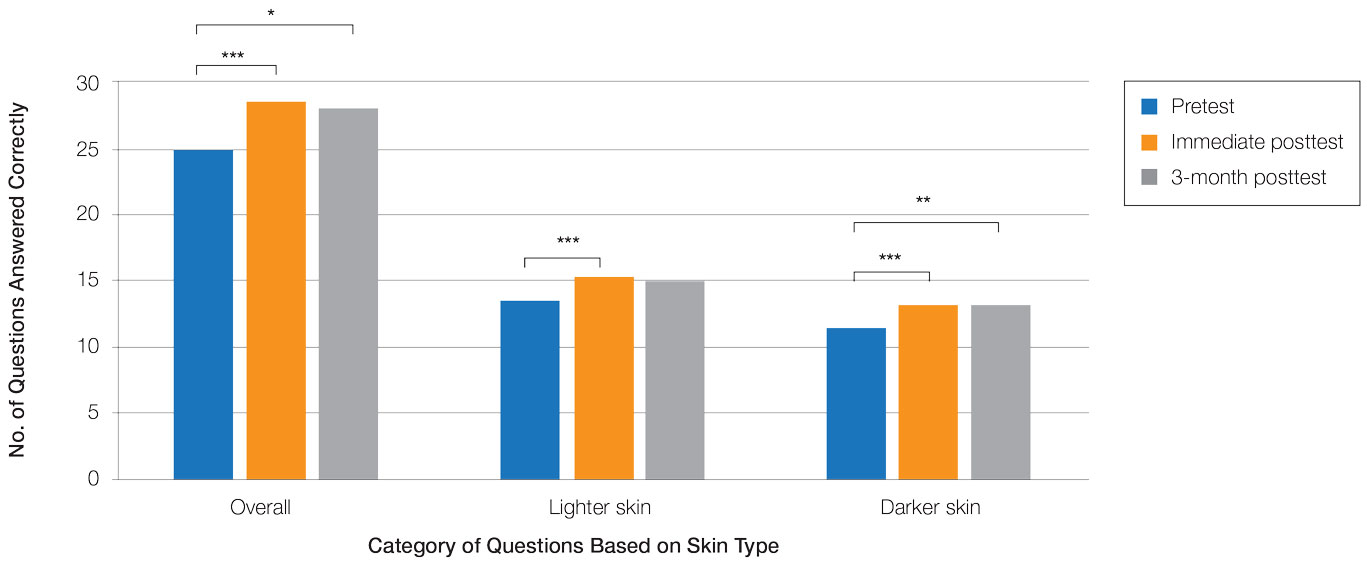

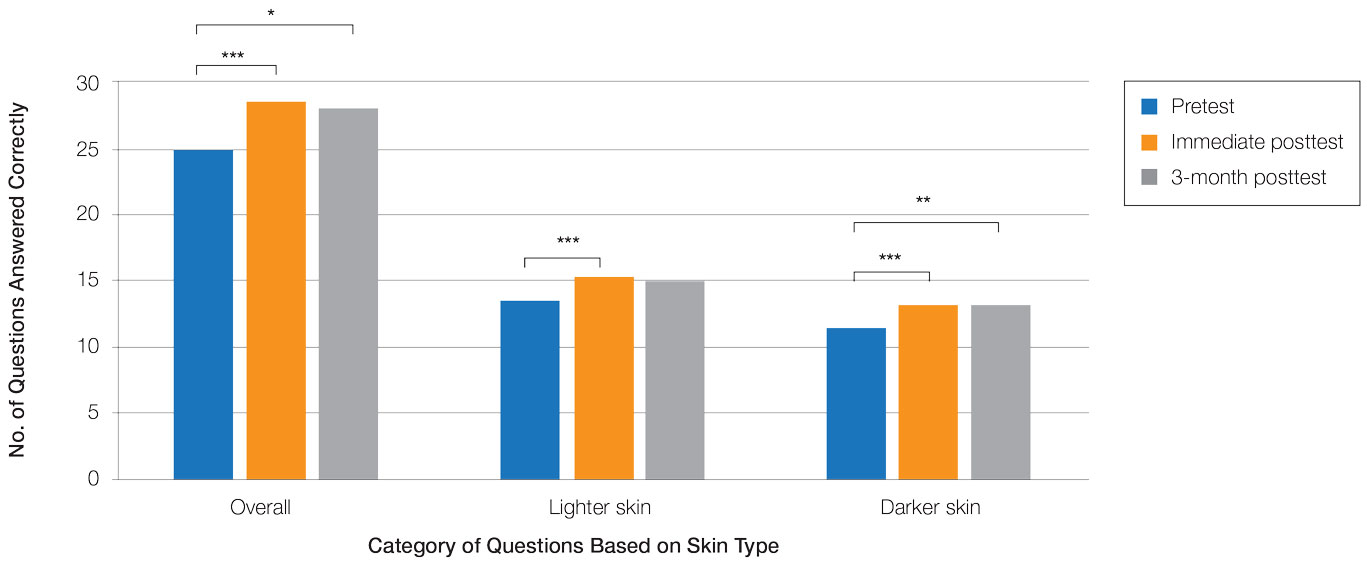

After controlling for provider type and specialty, diagnostic accuracy improved significantly from the pretest to the immediate posttest for conditions shown in both lighter and darker skin (lighter: 15.2 vs 13.6; darker: 13.3 vs 11.3; both P<.001)(Figure 2). On the immediate posttest, mean diagnostic accuracy and confidence scores remained higher for conditions shown in lighter skin than in darker skin (diagnostic accuracy: 15.2 vs 13.3; confidence: 3.0 vs 2.6; both P<.001), but the gap was smaller than on the pretest.

At the 3-month posttest, diagnostic accuracy remained higher than at pretest for both lighter and darker skin, but the difference was significant only for conditions shown in darker skin (mean scores, 11.3 vs 13.3; P<.01). Similarly, confidence in diagnosing conditions in both lighter and darker skin improved on the immediate posttest (mean scores, 2.7 vs 3.0 and 1.9 vs 2.6; both P<.001), and at 3 months this improvement remained significant only for darker skin (mean scores, 1.9 vs 2.3; P<.001). Despite these improvements, diagnostic accuracy and confidence at 3 months remained higher for conditions shown in lighter skin than in darker skin (diagnostic accuracy: 14.7 vs 13.3; P<.01; confidence: 2.8 vs 2.3; P<.001), although the gap again was smaller than on the pretest.
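The narrowing disparity described above can be read directly from the reported means as the lighter-minus-darker difference at each time point (pretest, immediate posttest, 3-month posttest). These differences are descriptive only: the 3-month values come from the 36 participants who completed that test, whereas the earlier values include all 100 participants.

$$
\begin{aligned}
\text{accuracy gap:}&\quad 13.6-11.3=2.3 \;\rightarrow\; 15.2-13.3=1.9 \;\rightarrow\; 14.7-13.3=1.4\\
\text{confidence gap:}&\quad 2.7-1.9=0.8 \;\rightarrow\; 3.0-2.6=0.4 \;\rightarrow\; 2.8-2.3=0.5
\end{aligned}
$$

The accuracy gap shrinks at each time point, while the confidence gap widens slightly between the immediate and 3-month posttests but stays below its pretest value.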

Comment

Our study found diagnostic disparities between conditions shown in lighter and darker skin among interprofessional health care providers. Education on SOC therefore should extend to interprofessional health care providers and other medical specialties involved in treating or triaging dermatologic diseases. A focused educational module may provide long-term improvements in diagnostic accuracy and confidence for conditions presenting in SOC; in our study, gains for darker skin remained significant at 3 months.

Diagnostic accuracy differed between lighter and darker skin for infectious, cancerous, and inflammatory conditions but not for conditions more frequently seen in patients with SOC. Learning resources for SOC-associated conditions are more likely to include images depicting darker skin types.7 In our study, pretest scores for conditions shown in darker skin were lowest for infectious and cancerous conditions. For infections, morphologic clues such as erythema are important for diagnosis but may be more subtle or difficult to discern in darker skin. Providers also may be less likely to suspect skin cancer in patients with SOC, given that the morphologic presentation and/or anatomic site of skin cancers in SOC differs from that in lighter skin. Future educational interventions targeting disparities in diagnostic accuracy therefore should focus on conditions that are not specifically associated with SOC.

Limitations of our study include the small number of participants, particularly at the 3-month posttest; a study population drawn from a single institution; and possible selection bias toward providers interested in dermatology.

Conclusion

Among interprofessional health care providers, disparities exist in diagnosing conditions presenting in darker skin compared with lighter skin. An educational module for health care providers may provide long-term improvements in diagnostic accuracy and confidence for conditions presenting in patients with SOC.

- Fenton A, Elliott E, Shahbandi A, et al. Medical students’ ability to diagnose common dermatologic conditions in skin of color. J Am Acad Dermatol. 2020;83:957-958. doi:10.1016/j.jaad.2019.12.078

- Mamo A, Szeto MD, Rietcheck H, et al. Evaluating medical student assessment of common dermatologic conditions across Fitzpatrick phototypes and skin of color. J Am Acad Dermatol. 2022;87:167-169. doi:10.1016/j.jaad.2021.06.868

- Guda VA, Paek SY. Skin of color representation in commonly utilized medical student dermatology resources. J Drugs Dermatol. 2021;20:799. doi:10.36849/JDD.5726

- Wilson BN, Sun M, Ashbaugh AG, et al. Assessment of skin of color and diversity and inclusion content of dermatologic published literature: an analysis and call to action. Int J Womens Dermatol. 2021;7:391-397. doi:10.1016/j.ijwd.2021.04.001

- Ibraheim MK, Gupta R, Dao H, et al. Evaluating skin of color education in dermatology residency programs: data from a national survey. Clin Dermatol. 2022;40:228-233. doi:10.1016/j.clindermatol.2021.11.015

- Gupta R, Ibraheim MK, Dao H Jr, et al. Assessing dermatology resident confidence in caring for patients with skin of color. Clin Dermatol. 2021;39:873-878. doi:10.1016/j.clindermatol.2021.08.019

- Chang MJ, Lipner SR. Analysis of skin color on the American Academy of Dermatology public education website. J Drugs Dermatol. 2020;19:1236-1237. doi:10.36849/JDD.2020.5545


Following the 3-month posttest, improvement in diagnostic accuracy was noted among both lighter and darker skin types compared with the pretest, but the difference remained significant only for conditions shown in darker skin (mean scores, 11.3 vs 13.3; P<.01). Similarly, confidence in diagnosing conditions in both lighter and darker skin improved following the immediate posttest (mean scores, 2.7 vs 3.0 and 1.9 vs 2.6; both P<.001), and this improvement remained significant for only darker skin following the 3-month posttest (mean scores, 1.9 vs 2.3; P<.001). Despite these improvements, diagnostic accuracy and confidence remained higher for skin conditions shown in lighter skin compared with darker skin (diagnostic accuracy: 14.7 vs 13.3; P<.01; confidence: 2.8 vs 2.3; P<.001), though the disparity between scores was again less than in the pretest.

Comment

Our study showed that there are diagnostic disparities between lighter and darker skin types among interprofessional health care providers. Education on SOC should extend to interprofessional health care providers and other medical specialties involved in treating or triaging dermatologic diseases. A focused educational module may provide long-term improvements in diagnostic accuracy and confidence for conditions presenting in SOC. Differences in diagnostic accuracy between conditions shown in lighter and darker skin types were noted for the disease categories of infectious, cancerous, and inflammatory conditions, with the exception of conditions more frequently seen in patients with SOC. Learning resources for SOC-associated conditions are more likely to have greater representation of images depicting darker skin types.7 Future educational interventions may need to focus on dermatologic conditions that are not preferentially seen in patients with SOC. In our study, the pretest scores for conditions shown in darker skin were lowest among infectious and cancerous conditions. For infections, certain morphologic clues such as erythema are important for diagnosis but may be more subtle or difficult to discern in darker skin. It also is possible that providers may be less likely to suspect skin cancer in patients with SOC given that the morphologic presentation and/or anatomic site of involvement for skin cancers in SOC differs from those in lighter skin. Future educational interventions targeting disparities in diagnostic accuracy should focus on conditions that are not specifically associated with SOC.

Limitations of our study included the small number of participants, the study population came from a single institution, and a possible selection bias for providers interested in dermatology.

Conclusion

Disparities exist among interprofessional health care providers when treating conditions in patients with lighter skin compared to darker skin. An educational module for health care providers may provide long-term improvements in diagnostic accuracy and confidence for conditions presenting in patients with SOC.

Dermatologic disparities disproportionately affect patients with skin of color (SOC). Two studies assessing the diagnostic accuracy of medical students have shown disparities in diagnosing common skin conditions presenting in darker skin compared with lighter skin at early stages of training.1,2 This knowledge gap could be attributed to the underrepresentation of SOC in dermatologic textbooks, journals, and educational curricula.3-6 It is important for dermatologists, as well as physicians in other specialties and ancillary health care workers involved in treating or triaging dermatologic diseases, to recognize common skin conditions presenting in SOC. We sought to evaluate the effectiveness of a focused educational module for improving diagnostic accuracy and confidence in diagnosing skin conditions in patients with SOC among interprofessional health care providers.

Methods

Interprofessional health care providers (medical students, residents/fellows, attending physicians, advanced practice providers [APPs], and nurses practicing across various medical specialties) at The University of Texas at Austin Dell Medical School and Ascension Medical Group (both in Austin, Texas) were invited to participate in an institutional review board–exempt study involving a virtual SOC educational module from February through May 2021. The 1-hour module consisted of a pretest, a 15-minute lecture, and an immediate posttest, and a 3-month posttest followed. All tests included the same 40 multiple-choice questions depicting 20 dermatologic conditions, each portrayed in lighter and darker skin types using images from VisualDx.com, and participants were asked to identify the condition in each photograph. Questions appeared one at a time in a randomized order, and answers could not be changed once submitted.

For analysis, the dermatologic conditions were categorized into 4 groups: cancerous, infectious, inflammatory, and SOC-associated conditions. Cancerous conditions included basal cell carcinoma, squamous cell carcinoma, and melanoma. Infectious conditions included herpes zoster, tinea corporis, tinea versicolor, staphylococcal scalded skin syndrome, and verruca vulgaris. Inflammatory conditions included acne, atopic dermatitis, pityriasis rosea, psoriasis, seborrheic dermatitis, contact dermatitis, lichen planus, and urticaria. Skin of color–associated conditions included hidradenitis suppurativa, acanthosis nigricans, keloid, and melasma. Two 5-point Likert scale questions assessing confidence in diagnosing conditions in lighter and darker skin also were included.
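To make the scoring scheme concrete, the following Python sketch tallies per-skin-type totals and per-category proportions correct. It assumes that each of the 20 conditions appears once in lighter skin and once in darker skin and that each correct answer earns 1 point, so each skin-type subscore ranges from 0 to 20 (consistent with the reported means, but not stated explicitly in the letter). The `score` function and the tuple format of `responses` are hypothetical illustrations, not the authors' analysis code.

```python
from collections import defaultdict

# Condition-to-category mapping, as listed in the Methods.
CATEGORIES = {
    "cancerous": ["basal cell carcinoma", "squamous cell carcinoma", "melanoma"],
    "infectious": ["herpes zoster", "tinea corporis", "tinea versicolor",
                   "staphylococcal scalded skin syndrome", "verruca vulgaris"],
    "inflammatory": ["acne", "atopic dermatitis", "pityriasis rosea", "psoriasis",
                     "seborrheic dermatitis", "contact dermatitis",
                     "lichen planus", "urticaria"],
    "SOC-associated": ["hidradenitis suppurativa", "acanthosis nigricans",
                       "keloid", "melasma"],
}
# Reverse lookup: condition name -> category.
CATEGORY_OF = {cond: cat for cat, conds in CATEGORIES.items() for cond in conds}


def score(responses):
    """Return (totals, proportions) for one participant's test.

    `responses` is a hypothetical list of (condition, skin_type, is_correct)
    tuples, where skin_type is "lighter" or "darker".
    totals: number correct per skin type (0-20 each under the assumptions above).
    proportions: fraction correct per (category, skin_type) pair.
    """
    totals = {"lighter": 0, "darker": 0}
    correct = defaultdict(int)
    asked = defaultdict(int)
    for condition, skin_type, is_correct in responses:
        key = (CATEGORY_OF[condition], skin_type)
        asked[key] += 1
        if is_correct:
            totals[skin_type] += 1
            correct[key] += 1
    proportions = {key: correct[key] / asked[key] for key in asked}
    return totals, proportions
```

As a quick consistency check, `sum(len(v) for v in CATEGORIES.values())` equals 20, matching the 20 conditions (40 images) described in the Methods.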

The prerecorded 15-minute video lecture was given by 2 dermatology residents (P.L.K. and C.P.), and its learning objectives covered morphologic differences in lighter skin and darker skin, comparisons of common dermatologic diseases in lighter skin and darker skin, diseases more commonly affecting patients with SOC, and treatment considerations for conditions affecting skin and hair in patients with SOC. Photographs from the diagnostic accuracy assessment were not reused in the lecture. Detailed explanations of morphology, diagnostic pearls, and treatment options for all conditions tested were provided to participants upon completion of the 3-month posttest.

Statistical Analysis—Test scores were compared between conditions shown in lighter and darker skin types and across the pretest, immediate posttest, and 3-month posttest. Multiple linear regression was used to assess intervention effects on lighter and darker skin scores, controlling for provider type and specialty. All tests were 2-sided with significance at P<.05. Analyses were conducted using Stata 17.
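One plausible way to write the regression described above is shown below, as a sketch under stated assumptions. The letter does not report the exact model specification, so the indicator coding, the fitting of a separate model for each skin type, and all symbols here are assumptions for illustration rather than the authors' model; this simple form also ignores the within-participant correlation across repeated tests.

$$
Y_{ij}^{(s)} = \beta_0 + \beta_1\,\mathrm{Imm}_{ij} + \beta_2\,\mathrm{M3}_{ij} + \boldsymbol{\gamma}^{\top}\mathbf{P}_i + \boldsymbol{\delta}^{\top}\mathbf{S}_i + \varepsilon_{ij}
$$

Here $Y_{ij}^{(s)}$ is participant $i$'s diagnostic accuracy score (0 to 20) for skin type $s \in \{\text{lighter}, \text{darker}\}$ on test $j$; $\mathrm{Imm}_{ij}$ and $\mathrm{M3}_{ij}$ are indicators for the immediate and 3-month posttests (pretest as reference); and $\mathbf{P}_i$ and $\mathbf{S}_i$ are dummy-coded vectors for provider type and specialty. Under this form, $\beta_1$ and $\beta_2$ estimate the change from the pretest adjusted for provider type and specialty.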

Results

One hundred participants completed the pretest and immediate posttest, 36 of whom also completed the 3-month posttest (Table). There was no significant difference in baseline characteristics between the pretest and 3-month posttest groups.

Test scores were associated with provider type and specialty but not with age, sex, or race/ethnicity. Specializing in dermatology and being a resident or attending physician were independently associated with higher test scores. Mean pretest diagnostic accuracy and confidence scores were higher for skin conditions shown in lighter skin compared with those shown in darker skin (13.6 vs 11.3 and 2.7 vs 1.9, respectively; both P<.001). By category, pretest diagnostic accuracy was significantly higher for conditions shown in lighter skin compared with darker skin for cancerous, inflammatory, and infectious conditions (72% vs 50%, 68% vs 55%, and 57% vs 47%, respectively; P<.001 for all)(Figure 1). Scores for SOC-associated conditions did not differ significantly between lighter and darker skin (79% vs 75%; P=.059).
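The category comparison can be restated as lighter-minus-darker gaps in percentage points. The short Python sketch below does only that arithmetic on the reported pretest percentages; it is not a reanalysis, and the `PRETEST` dictionary and `gaps` helper are illustrative names, not part of the study.

```python
# Reported pretest diagnostic accuracy (%) by category: (lighter, darker).
PRETEST = {
    "cancerous": (72, 50),
    "inflammatory": (68, 55),
    "infectious": (57, 47),
    "SOC-associated": (79, 75),
}


def gaps(table):
    """Return (category, lighter-minus-darker gap) pairs, largest gap first."""
    diffs = {cat: light - dark for cat, (light, dark) in table.items()}
    return sorted(diffs.items(), key=lambda kv: kv[1], reverse=True)


for category, gap in gaps(PRETEST):
    print(f"{category}: {gap} percentage points")
# cancerous: 22 percentage points
# inflammatory: 13 percentage points
# infectious: 10 percentage points
# SOC-associated: 4 percentage points
```

The ordering shows the largest gap for cancerous conditions and the smallest for SOC-associated conditions, the only category without a significant difference.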

Controlling for provider type and specialty, immediate posttest scores were significantly higher than pretest scores for conditions shown in both lighter and darker skin types (lighter: 15.2 vs 13.6; darker: 13.3 vs 11.3; both P<.001)(Figure 2). At the immediate posttest, mean diagnostic accuracy and confidence scores remained higher for skin conditions shown in lighter skin compared with darker skin (diagnostic accuracy: 15.2 vs 13.3; confidence: 3.0 vs 2.6; both P<.001), but the disparity between scores was smaller than in the pretest.

At the 3-month posttest, diagnostic accuracy remained improved compared with the pretest for conditions shown in both lighter and darker skin types, but the difference was significant only for conditions shown in darker skin (mean scores, 11.3 vs 13.3; P<.01). Similarly, confidence in diagnosing conditions in both lighter and darker skin improved at the immediate posttest compared with the pretest (mean scores, 2.7 vs 3.0 and 1.9 vs 2.6, respectively; both P<.001), and this improvement remained significant only for darker skin at the 3-month posttest (mean scores, 1.9 vs 2.3; P<.001). Despite these improvements, diagnostic accuracy and confidence at the 3-month posttest remained higher for skin conditions shown in lighter skin compared with darker skin (diagnostic accuracy: 14.7 vs 13.3; P<.01; confidence: 2.8 vs 2.3; P<.001), though the disparity between scores again was smaller than in the pretest.
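The claim that the lighter-vs-darker disparity narrowed after the module can be checked by subtracting the reported means at each time point. The Python sketch below does this arithmetic only; the dictionaries and the `disparity` helper are illustrative names, and the 3-month values come from the 36 participants who completed that test, whereas the pretest values come from all 100, so the gaps are descriptive rather than a paired comparison.

```python
# Reported mean scores as (lighter, darker) at each time point.
# Accuracy subscores range from 0 to 20; confidence uses a 5-point Likert scale.
ACCURACY = {"pretest": (13.6, 11.3), "immediate": (15.2, 13.3), "3-month": (14.7, 13.3)}
CONFIDENCE = {"pretest": (2.7, 1.9), "immediate": (3.0, 2.6), "3-month": (2.8, 2.3)}


def disparity(table):
    """Lighter-minus-darker gap at each time point, rounded to 1 decimal place
    to remove floating-point noise from the subtraction."""
    return {time: round(light - dark, 1) for time, (light, dark) in table.items()}


print(disparity(ACCURACY))    # {'pretest': 2.3, 'immediate': 1.9, '3-month': 1.4}
print(disparity(CONFIDENCE))  # {'pretest': 0.8, 'immediate': 0.4, '3-month': 0.5}
```

Both gaps are smaller at each posttest than at the pretest; the confidence gap at 3 months (0.5) is slightly larger than at the immediate posttest (0.4) but still below the pretest gap (0.8).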

Comment

Our study showed diagnostic disparities between conditions shown in lighter and darker skin types among interprofessional health care providers. Education on SOC should extend to interprofessional health care providers and to other medical specialties involved in treating or triaging dermatologic diseases. A focused educational module may provide long-term improvements in diagnostic accuracy and confidence for conditions presenting in SOC.

Differences in diagnostic accuracy between lighter and darker skin types were noted for infectious, cancerous, and inflammatory conditions but not for conditions more frequently seen in patients with SOC. This may be because learning resources for SOC-associated conditions are more likely to include images depicting darker skin types.7 In our study, pretest scores for conditions shown in darker skin were lowest for infectious and cancerous conditions. For infections, certain morphologic clues such as erythema are important for diagnosis but may be more subtle or difficult to discern in darker skin. Providers also may be less likely to suspect skin cancer in patients with SOC because the morphologic presentation and/or anatomic site of involvement of skin cancers in SOC differ from those in lighter skin. Future educational interventions targeting disparities in diagnostic accuracy therefore should focus on conditions that are not specifically associated with SOC.

Limitations of our study included the small number of participants, a study population drawn from a single institution, and possible selection bias toward providers interested in dermatology.

Conclusion

Disparities exist among interprofessional health care providers when diagnosing conditions in patients with lighter skin compared with darker skin. An educational module for health care providers may provide long-term improvements in diagnostic accuracy and confidence for conditions presenting in patients with SOC.

- Fenton A, Elliott E, Shahbandi A, et al. Medical students’ ability to diagnose common dermatologic conditions in skin of color. J Am Acad Dermatol. 2020;83:957-958. doi:10.1016/j.jaad.2019.12.078

- Mamo A, Szeto MD, Rietcheck H, et al. Evaluating medical student assessment of common dermatologic conditions across Fitzpatrick phototypes and skin of color. J Am Acad Dermatol. 2022;87:167-169. doi:10.1016/j.jaad.2021.06.868

- Guda VA, Paek SY. Skin of color representation in commonly utilized medical student dermatology resources. J Drugs Dermatol. 2021;20:799. doi:10.36849/JDD.5726

- Wilson BN, Sun M, Ashbaugh AG, et al. Assessment of skin of color and diversity and inclusion content of dermatologic published literature: an analysis and call to action. Int J Womens Dermatol. 2021;7:391-397. doi:10.1016/j.ijwd.2021.04.001

- Ibraheim MK, Gupta R, Dao H, et al. Evaluating skin of color education in dermatology residency programs: data from a national survey. Clin Dermatol. 2022;40:228-233. doi:10.1016/j.clindermatol.2021.11.015

- Gupta R, Ibraheim MK, Dao H Jr, et al. Assessing dermatology resident confidence in caring for patients with skin of color. Clin Dermatol. 2021;39:873-878. doi:10.1016/j.clindermatol.2021.08.019

- Chang MJ, Lipner SR. Analysis of skin color on the American Academy of Dermatology public education website. J Drugs Dermatol. 2020;19:1236-1237. doi:10.36849/JDD.2020.5545

Practice Points

- Disparities exist among interprofessional health care providers when diagnosing conditions in patients with lighter vs darker skin, particularly for infectious, cancerous, and inflammatory conditions rather than for conditions that are preferentially seen in patients with skin of color (SOC).

- A focused educational module for health care providers may provide long-term improvements in diagnostic accuracy and confidence for conditions presenting in patients with SOC.