Comprehensive diabetic retinopathy screening challenging

NEW ORLEANS – Fewer than one-third of patients with diabetes being cared for by a public hospital system underwent screening for retinopathy within the past year, judging from an analysis of administrative data.

“Diabetic retinopathy is a major cause of vision loss in the United States,” researchers led by Dr. David C. Ziemer wrote in an abstract presented during a poster session at the annual scientific sessions of the American Diabetes Association.

“In 2011, the age-adjusted percentage of adults with diagnosed diabetes reporting visual impairment was 17.6%. This is a pressing issue as the number of Americans with diabetic retinopathy is expected to double from 7.7 million in 2010 to 15.6 million in 2050.”

To help plan better diabetic retinopathy screening, Dr. Ziemer and his associates analyzed 2014 administrative data from 19,361 patients with diabetes who attended one of several clinics operated by the Atlanta-based Grady Health System. Diabetic retinopathy screening was considered complete if the patient attended an ophthalmology clinic, optometry, or retinal photography visit. The researchers also surveyed a convenience sample of 80 patients about their diabetic retinopathy screening in the past year.

The patients' mean age was 57 years, their mean hemoglobin A1c level was 7.8%, 59% were female, and 83% were African-American. Of the 19,361 patients, 5,595 (29%) underwent diabetic retinopathy screening in the past year and 13,766 (71%) did not. Unscreened patients had a mean of 1 clinic visit for diabetes care, compared with a mean of 3.1 for those who were screened (P less than .0005). Screening rates varied by care site from 5% to 66%, and 5,000 patients had no diabetes continuity care visit, reported Dr. Ziemer, of the division of endocrinology at Emory University, Atlanta.
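As a quick arithmetic check, the screened and unscreened counts can be confirmed to cover the whole cohort and to reproduce the rounded percentages. This is an illustrative sketch only; the helper name `percent` is hypothetical and not part of the study.

```python
# Illustrative check of the reported screening proportions (counts from the abstract).
TOTAL = 19_361
SCREENED = 5_595
UNSCREENED = 13_766


def percent(part: int, whole: int) -> float:
    """Return part/whole as a percentage, rounded to one decimal place."""
    return round(100 * part / whole, 1)


# The two groups should account for every patient in the cohort.
assert SCREENED + UNSCREENED == TOTAL

screened_pct = percent(SCREENED, TOTAL)      # 28.9, reported as 29%
unscreened_pct = percent(UNSCREENED, TOTAL)  # 71.1, reported as 71%
print(f"screened: {screened_pct}%, unscreened: {unscreened_pct}%")
```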

In a multivariable analysis, factors associated with increased diabetic retinopathy screening were treatment in a diabetes clinic (odds ratio, 2.8), treatment in a primary care clinic (OR, 2.1), and older age (OR, 1.03 per year; P less than .001 for all associations). Factors associated with decreased screening were Hispanic ethnicity (OR, 0.7) and having a mental health diagnosis (OR, 0.8; P less than .001 for both associations). The researchers also found that having in-clinic eye screening roughly doubled the proportion of patients screened (48% vs. 22%) and reduced the proportion of screenings performed at an outside clinic (45% vs. 95%).
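The age association is reported per year of age. Assuming age entered the multivariable (logistic) model as a single linear term, which the abstract does not state, per-year odds ratios compound multiplicatively, so the odds ratio for a 10-year age difference is about 1.03 raised to the 10th power. Because 1.03 is itself rounded, the result is only approximate. The function name below is hypothetical.

```python
import math


def odds_ratio_over_span(or_per_unit: float, units: float) -> float:
    """Odds ratio for a difference of `units`, assuming a log-linear term:
    log(OR_total) = units * log(or_per_unit)."""
    return math.exp(units * math.log(or_per_unit))


# Assumption: age modeled linearly on the log-odds scale.
ten_year_or = odds_ratio_over_span(1.03, 10)
print(round(ten_year_or, 2))  # 1.34
```

Note that an odds ratio describes odds, not probability, so an OR of 0.7 is not the same as a 30% lower screening rate.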

Of the 80 patients who completed the survey, 68% reported having undergone diabetic retinopathy screening within the past year, in contrast to the 29% indicated by the administrative data. In addition, 50% of survey respondents who had not been screened reported that they had received a referral, yet more than 40% failed to keep eye appointments. “The first barrier to address is people who don’t keep appointments,” Dr. Ziemer said in an interview. “Getting people in care is one issue. Having the capacity is another. That’s a real problem.”

The study was supported by the American Diabetes Association. Dr. Ziemer reported having no financial disclosures.

AT THE ADA ANNUAL SCIENTIFIC SESSIONS

Key clinical point: Some 71% of patients with diabetes in a public hospital system did not undergo screening for diabetic retinopathy in the past year.

Major finding: Only 29% of patients underwent diabetic retinopathy screening in the past year, with variation by care site that ranged from 5% to 66%.

Data source: An analysis of administrative data from 19,361 patients with diabetes who attended one of several clinics operated by the Atlanta-based Grady Health System in 2014.

Disclosures: The study was supported by the American Diabetes Association. Dr. Ziemer reported having no financial disclosures.

Nonbenzodiazepines reduce time to extubation, compared with benzodiazepines

The nonbenzodiazepines propofol and dexmedetomidine reduce the time to extubation, compared with benzodiazepines, suggest results of an observational study published in Chest.

“This study found that sedatives vary in their associations with [ventilator-associated events] and time to extubation but not in their associations with time to hospital discharge or mortality. Both propofol and dexmedetomidine were associated with less time to extubation, compared with benzodiazepines,” wrote Dr. Michael Klompas of the department of population medicine at Harvard Medical School and Harvard Pilgrim Health Care Institute, both in Boston, and colleagues (Chest. 2016 Jun;149[6]:1373-9).

Current sedation guidelines for mechanically ventilated patients recommend light sedation with nonbenzodiazepines whenever possible.

Compared with benzodiazepines, propofol and dexmedetomidine were associated with shorter time to extubation, with hazard ratios of 1.4 (P less than .0001) and 2.3 (P less than .0001), respectively. Although relatively few episodes involved dexmedetomidine, it was also associated with shorter time to extubation than propofol (HR, 1.7; P less than .0001).

Benzodiazepine and propofol use were each associated with an increased risk of ventilator-associated events (VAEs), compared with regimens not including them (HR, 1.4; P = .002 for benzodiazepines, and HR, 1.3; P = .003 for propofol). Dexmedetomidine use, in contrast, was not associated with increased VAE risk (P = .92).

The hazards of hospital discharge and hospital death were similar for each sedative or sedative class studied.

The observational study involved 9,603 retrospectively identified episodes of mechanical ventilation, comprising all consecutive episodes of invasive mechanical ventilation lasting 3 days or longer at Boston’s Brigham and Women’s Hospital between July 1, 2006, and December 31, 2013. The researchers evaluated the associations of daily propofol, dexmedetomidine, and benzodiazepine use with VAEs, time to extubation, time to hospital discharge, and death.

This study’s findings were similar to those of prior randomized controlled trials, especially concerning the time to extubation, the researchers said. “The large number of episodes of mechanical ventilation in our trial dataset, however, allowed us to extend conceivable but underpowered signals from randomized controlled trials.”

A limitation of this study is that it was a single-center retrospective analysis, so some of its findings may be attributable to “residual confounding and/or idiosyncratic local practice patterns.” Other limitations include the lack of data on patients’ total doses or doses adjusted per kilogram of body weight, possible overtraining of the model used to adjust for severity of illness, and the relatively low number of patients treated with dexmedetomidine, most of whom underwent cardiac surgery.

Funding was provided by the Centers for Disease Control and Prevention. Dr. Klompas and the other researchers had no disclosures.

While sedatives are the most widely used pharmacologic compounds in the critical care of patients, data on the real-world patterns of sedative use are lacking.

Klompas et al. are to be commended for conducting an observational study that addressed the real-world patterns of sedative use. “Their data speak to what many clinicians believe to be their clinical sedative administration experience.”

This is an important study that begins to address a basic pharmacologic issue. The researchers’ observations of the effects of the two nonbenzodiazepines (dexmedetomidine and propofol) and benzodiazepines on the patients studied will help to clarify whether such effects can be attributed to the specific drug used or the sedative effect that a drug had on a patient.

The study was limited by the relatively low number of patients who received dexmedetomidine. This limitation, which raises the possibility of selection bias, makes the conclusions less robust.

Dr. Yoanna Skrobik is from the faculty of medicine, department of medicine at the McGill University Health Centre, Montreal. She reported having no relevant financial disclosures. These remarks are adapted from an accompanying editorial (Chest. 2016 Jun;149[6]:1355-6).

FROM CHEST

Key clinical point: Dexmedetomidine and propofol are associated with shorter time to extubation than benzodiazepines.

Major finding: Compared with benzodiazepines, propofol and dexmedetomidine were associated with shorter time to extubation (hazard ratios, 1.4 and 2.3, respectively).

Data source: An observational study of 9,603 consecutive episodes of mechanical ventilation lasting 3 days or longer at a large medical center.

Disclosures: Funding for the study came from the Centers for Disease Control and Prevention. Dr. Klompas and his coauthors had no disclosures.

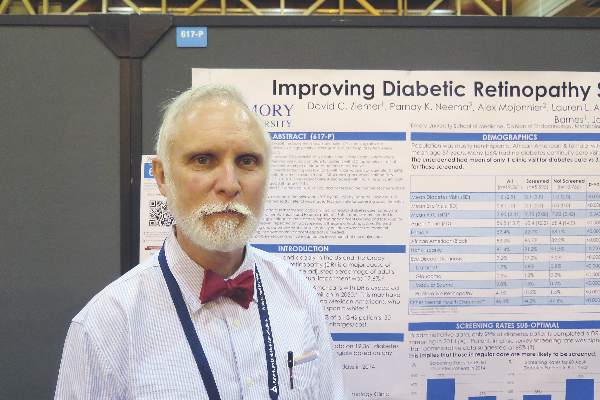

Septicemia cost U.S. hospitals $23.7 billion in 2013

Septicemia is the most expensive condition treated in U.S. hospitals, accounting for $23.7 billion in aggregate hospital costs in 2013, according to the Agency for Healthcare Research and Quality.

Spending on septicemia represented 6.2% of the total cost for all hospitalizations in 2013. Rounding out the five most expensive conditions were osteoarthritis ($16.5 billion); liveborn infants ($13.3 billion); complications of devices, implants, or grafts ($12.4 billion); and acute MI ($12.1 billion). These five conditions accounted for 20.5% of all U.S. hospital costs and 20.6% of all admissions, the AHRQ report noted.

Total hospital costs for all 35.6 million stays in 2013 were more than $381 billion. That figure represents hospitals’ cost to produce the services, not the amount covered by payers, and it does not include physician fees associated with hospitalizations, the agency said.
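The cost shares quoted above follow from dividing each condition's cost by the total. A minimal sketch of that arithmetic, using only the figures in the AHRQ summary; because the total is given as "more than $381 billion," dividing by exactly 381 gives slight upper bounds. The helper name is hypothetical.

```python
# Aggregate 2013 hospital costs in billions of U.S. dollars, as reported by AHRQ.
TOTAL_COST = 381.0  # reported as "more than $381 billion"; shares below are upper bounds

TOP_FIVE = {
    "septicemia": 23.7,
    "osteoarthritis": 16.5,
    "liveborn infants": 13.3,
    "complications of devices, implants, or grafts": 12.4,
    "acute MI": 12.1,
}


def share_pct(cost: float, total: float = TOTAL_COST) -> float:
    """Cost as a percentage of the total, rounded to one decimal place."""
    return round(100 * cost / total, 1)


septicemia_share = share_pct(TOP_FIVE["septicemia"])   # 6.2
top_five_share = share_pct(sum(TOP_FIVE.values()))    # 20.5
print(septicemia_share, top_five_share)
```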

Midodrine cuts ICU days in septic shock patients

Septic shock patients who received midodrine needed intravenous vasopressors for significantly fewer days during recovery and had shorter ICU stays, based on data from a retrospective study of 275 adults at a single tertiary care center.

In many institutions, policy dictates that patients must remain in the ICU as long as they need intravenous vasopressors, wrote Dr. Micah R. Whitson of North Shore-LIJ Health System in New Hyde Park, N.Y., and colleagues. “One solution to this problem may be replacement of IV vasopressors with an oral agent.”

“Midodrine facilitated earlier patient transfer from the ICU and more efficient allocation of ICU resources,” the researchers wrote (Chest. 2016;149[6]:1380-83).

The researchers compared data on 135 patients treated with midodrine in addition to an intravenous vasopressor and 140 patients treated with an intravenous vasopressor alone.

Overall, patients given midodrine received intravenous vasopressors for 2.9 days, compared with 3.8 days in the other group, a significant 24% difference. Hospital length of stay was not significantly different, averaging 22 days in the midodrine group and 24 days in the intravenous vasopressor–only group. However, ICU length of stay averaged 7.5 days in the midodrine group and 9.4 days in the vasopressor-only group, a significant 20% reduction. Further, the midodrine group was significantly less likely than the intravenous vasopressor–only group to require reinstitution of intravenous vasopressors (5.2% vs. 15%, respectively). ICU and hospital mortality rates were not significantly different between the two groups, Dr. Whitson and associates reported.
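The 24% and 20% figures are relative reductions, computed as the difference between groups divided by the vasopressor-only value. A small sketch reproducing them from the reported means; since those means are themselves rounded, the results are approximate, and the helper name is hypothetical.

```python
def relative_reduction_pct(control: float, treated: float) -> float:
    """Percentage reduction of `treated` relative to `control`, one decimal place."""
    return round(100 * (control - treated) / control, 1)


vasopressor_days = relative_reduction_pct(3.8, 2.9)  # 23.7, reported as 24%
icu_days = relative_reduction_pct(9.4, 7.5)          # 20.2, reported as 20%
hospital_days = relative_reduction_pct(24, 22)       # 8.3, not statistically significant
print(vasopressor_days, icu_days, hospital_days)
```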

Patients in the midodrine group received a starting dose of 10 mg every 8 hours, which was increased incrementally until they no longer needed intravenous vasopressors. The maximum midodrine dose in the study was 18.7 mg every 8 hours, and the average duration of use was 6 days.

The patients’ average age was 65 years in the intravenous vasopressor group and 69 years in the midodrine group. Other demographic factors did not significantly differ between the two groups.

One patient stopped midodrine because of bradycardia before intravenous vasopressors were discontinued; the bradycardia resolved without additional treatment.

The findings were limited by the observational nature of the study and the use of data from a single center, the investigators noted. The results, however, support the safety of midodrine and the study “lays the groundwork for a prospective, randomized controlled trial that will examine efficacy, starting dose, escalation, tapering and appropriate patient selection for midodrine use during recovery from septic shock,” they said.

The researchers had no financial conflicts to disclose.


FROM CHEST

Key clinical point: Midodrine may reduce ICU length of stay by reducing the need for intravenous vasopressors in patients recovering from septic shock.

Major finding: The mean intravenous vasopressor duration was 2.9 days for patients who received midodrine vs. 3.8 days for controls who received intravenous vasopressors alone.

Data source: A retrospective study involving 275 patients that was conducted in a single medical intensive care unit.

Disclosures: The researchers had no financial conflicts to disclose.
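The size of the major finding can be checked with simple arithmetic. The sketch below is a minimal Python illustration. The helper name `relative_reduction` is hypothetical and does not come from the study. It computes only the crude difference in mean IV vasopressor days and does not reproduce the investigators' statistical testing.

```python
def relative_reduction(control: float, treated: float) -> float:
    """Percentage reduction of `treated` relative to `control`."""
    return 100.0 * (control - treated) / control


# Mean days on IV vasopressors, as reported in the major finding
iv_days_iv_only = 3.8      # IV vasopressor alone
iv_days_midodrine = 2.9    # midodrine plus IV vasopressor

print(f"Absolute difference: {iv_days_iv_only - iv_days_midodrine:.1f} days")
print(f"Relative reduction: {relative_reduction(iv_days_iv_only, iv_days_midodrine):.1f}%")
```

The 0.9-day gap works out to a relative reduction of about 23.7%, which rounds to roughly 24%.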

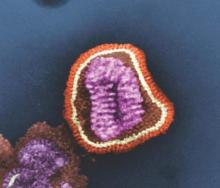

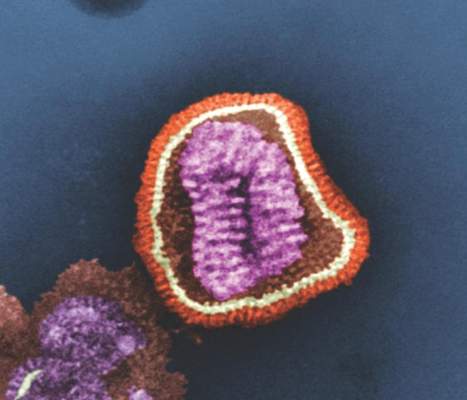

HIV rapid tests miss 1 in 7 infections

Rapid HIV tests in high-income countries miss about one in seven infections and should be used in combination with fourth-generation enzyme immunoassays (EIA) or nucleic acid amplification tests (NAAT) in clinical settings, according to a study in the journal AIDS.

“These infections are likely to be particularly transmissible due to the high HIV viral load in early infection ... in high-income countries, rapid tests should be used in combination with fourth-generation EIA or NAAT, except in special circumstances,” the Australian researchers said.

They performed a systematic review and meta-analysis of 18 studies involving 110,122 HIV rapid test results. The primary outcome was the test’s sensitivity for detecting acute or established HIV infections. Sensitivity was calculated by dividing the number of confirmed positive rapid tests by the number of confirmed positive comparator tests. Specificity was calculated by dividing the number of confirmed negative rapid tests by the number of negative comparator tests.
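The two ratios described above can be written as a pair of small functions. This minimal Python sketch uses hypothetical counts, not data from the meta-analysis. The study's reported figures are pooled across 18 studies, so they will not equal a simple ratio computed on any single set of counts.

```python
def sensitivity(rapid_confirmed_pos: int, comparator_confirmed_pos: int) -> float:
    """Confirmed-positive rapid tests divided by confirmed-positive comparator tests."""
    if comparator_confirmed_pos == 0:
        raise ValueError("no confirmed-positive comparator tests")
    return rapid_confirmed_pos / comparator_confirmed_pos


def specificity(rapid_confirmed_neg: int, comparator_neg: int) -> float:
    """Confirmed-negative rapid tests divided by negative comparator tests."""
    if comparator_neg == 0:
        raise ValueError("no negative comparator tests")
    return rapid_confirmed_neg / comparator_neg


# Hypothetical counts for a single study, for illustration only
print(f"Sensitivity: {sensitivity(90, 100):.1%}")    # Sensitivity: 90.0%
print(f"Specificity: {specificity(990, 1000):.1%}")  # Specificity: 99.0%
```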

Compared with EIA, the estimated sensitivity of rapid tests was 94.5% (95% confidence interval, 87.4-97.7). Compared with NAAT, the sensitivity of rapid tests was 93.7% (95% CI, 88.7-96.5). Compared with either EIA or NAAT, the sensitivity of rapid tests was 85.7% (95% CI, 81.9-88.9) in high-income countries and 97.7% (95% CI, 95.2-98.9) in low-income countries (P less than .01 for the difference between settings). The proportions of antibody-negative acute infections were 13.6% (95% CI, 10.1-18.0) and 4.7% (95% CI, 2.8-7.7) in studies from high- and low-income countries, respectively (P less than .01).
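The "one in seven" headline follows from the high-income sensitivity. A test that detects 85.7% of confirmed infections misses the remaining 14.3%, or roughly 1 in 7. The short Python sketch below makes that conversion for both settings. The `miss_rate` helper is illustrative only and is not something used in the study.

```python
def miss_rate(sensitivity_pct: float) -> float:
    """Percentage of true infections that a test fails to detect."""
    return 100.0 - sensitivity_pct


# Pooled sensitivities reported above, by setting
for setting, sens in [("High-income", 85.7), ("Low-income", 97.7)]:
    missed = miss_rate(sens)
    print(f"{setting}: {missed:.1f}% missed, about 1 in {round(100 / missed)}")
```

This prints about 1 in 7 missed for high-income settings and about 1 in 43 for low-income settings.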

Rapid tests were less sensitive when used in clinical settings in high-income countries, regardless of whether they were compared with a NAAT or fourth-generation EIA. However, the researchers noted that the discrepancy between high- and low-income countries could be attributed to the higher proportion of acute HIV infections (antibody-negative, NAAT-positive) in populations tested in high-income countries, which might reflect higher background testing rates or a higher incidence of HIV in men who have sex with men.

Read the full study in AIDS (doi: 10.1097/QAD.0000000000001134).

On Twitter @richpizzi


FROM AIDS

Far fewer adults would meet SPRINT than guideline-recommended BP goals

Applying the more stringent SPRINT criteria to a general population of persons with hypertension would yield a significant reduction in the number of people meeting their treatment goals, although those who do would achieve a significant reduction in their risk of cardiovascular disease, a study published June 13 in the Journal of the American College of Cardiology has found.

Min Jung Ko, Ph.D., of the National Evidence-Based Healthcare Collaborating Agency in Seoul, Korea, and coauthors compared the relative impacts of the SPRINT systolic blood pressure target of less than 120 mm Hg and the target of less than 140 mm Hg in the 2014 hypertension recommendations of the Eighth Joint National Committee. They used data from 13,346 individuals in the 2008-2013 Korean National Health and Nutrition Examination Survey and 67,965 individuals in the 2007 Korean National Health Insurance Service health examinee cohort.

The investigators found that 11.9% of adults with hypertension would meet the treatment goals of the SPRINT criteria, compared with 70.8% who would meet the 2014 recommendations.

However, the analysis showed that those who met the more aggressive SPRINT treatment goal of systolic BP below 120 mm Hg also had the lowest 10-year risk of a major cardiovascular event (6.2%), compared with 7.7% in those who met the 2014 targets but not the SPRINT targets, and 9.4% in those who failed to meet the 2014 treatment targets (J Am Coll Cardiol. 2016 Jun 13. doi 10.1016/j.jacc.2016.03.572).
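For context, the crude ratios of the 10-year risks reported above can be computed directly. This Python sketch divides each group's absolute 10-year risk by that of the SPRINT-target group. The helper `crude_ratio` is illustrative only. The unadjusted ratios it gives, about 1.24 and 1.52, are not the study's adjusted estimates, which account for covariates such as age and diabetes.

```python
def crude_ratio(risk_pct: float, reference_pct: float) -> float:
    """Ratio of two absolute risks; no covariate adjustment."""
    return risk_pct / reference_pct


# 10-year risk of a major cardiovascular event (%), as reported above
risk_10yr = {
    "Met SPRINT target": 6.2,
    "Met 2014 target only": 7.7,
    "Met neither target": 9.4,
}
reference = risk_10yr["Met SPRINT target"]
for group, risk in risk_10yr.items():
    print(f"{group}: {crude_ratio(risk, reference):.2f}x")
```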

After adjustment for factors such as age, diabetes, chronic kidney disease, hyperlipidemia, body mass index, and smoking, the least-controlled group showed a 62% increase in the risk of cardiovascular events, compared with the SPRINT criteria group. Those who met the 2014 criteria had a 17% greater risk than those who met the SPRINT criteria.

“Despite greater cardiovascular protection with intensive BP lowering, achieving SPRINT-defined BP goals might not be easy or practical because the target BP was not met in more than one-half of the participants in the intensive-treatment group,” the authors wrote.

Individuals who were older or female, or who had diabetes, chronic kidney disease, or prevalent cardiovascular disease, were more likely to meet the stricter goals of SPRINT (Systolic Blood Pressure Intervention Trial). In that trial, combined cardiovascular events occurred in 5.2% of patients treated to a target systolic blood pressure of less than 120 mm Hg and in 6.8% of patients treated to a target of less than 140 mm Hg (N Engl J Med. 2015;373:2103-16).
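The SPRINT event rates quoted above can also be expressed as an absolute difference and a relative difference. The Python sketch below computes a crude absolute risk reduction, relative risk reduction, and number needed to treat from the two cumulative percentages. These are simple illustrative calculations over the trial's follow-up period. They are not the hazard-ratio analysis reported by the SPRINT investigators, and the helper `risk_summary` is hypothetical.

```python
import math


def risk_summary(control_pct: float, intensive_pct: float) -> tuple[float, float, int]:
    """Crude ARR (percentage points), RRR (fraction), and NNT from cumulative event rates."""
    arr = control_pct - intensive_pct   # absolute risk reduction
    rrr = arr / control_pct             # relative risk reduction
    nnt = math.ceil(100 / arr)          # number needed to treat, rounded up
    return arr, rrr, nnt


# SPRINT primary-outcome rates: <140 mm Hg target vs. <120 mm Hg target
arr, rrr, nnt = risk_summary(6.8, 5.2)
print(f"ARR {arr:.1f} points, RRR {rrr:.1%}, NNT {nnt}")  # ARR 1.6 points, RRR 23.5%, NNT 63
```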

Researchers also noted a significant linear association between poorer blood pressure control and increased risk of myocardial infarction and stroke, although there was no significant trend for cardiovascular or all-cause mortality. The authors noted that this was the opposite of what was observed in the original SPRINT trial, which showed a reduction in cardiovascular mortality and heart failure but only a modest, nonsignificant effect on MI or stroke.

“Although the exact reasons remain unclear, this discrepancy might be explained in part by differences in study design, population characteristics, clinical practice pattern, or race or ethnic groups,” they suggested. “The generalizability of the SPRINT experience to multiple groups of various ethnic backgrounds warrants further investigations and is likely to be of considerable interest.”

Unlike the SPRINT trial, the Korean analysis did not examine the potential adverse effects of more aggressive blood pressure lowering. However, the authors noted that in SPRINT, intensive treatment was associated with a higher incidence of serious adverse events, including hypotension, syncope, and acute kidney injury.

“Therefore, beyond the BP target per se, several important factors should be considered for optimal BP management in the contemporary medical setting; for example, an integrated and systematic assessment of combined risk factors and baseline cardiovascular risk, concomitant preventive medical therapies, cost-effectiveness, clinician-patient discussions of the potential benefits and harms, or the clinical judgment of the treating physician.”

The National Evidence-Based Healthcare Collaborating Agency, Seoul, South Korea, funded the study. No conflicts of interest were declared.

How broadly SPRINT findings should be generalized is an important and challenging consideration for clinicians, guideline committees, and policy decision makers.

Changing the target for hypertension treatment to systolic BP below 120 mm Hg for all Korean adults would require considerable effort and would almost certainly result in a substantial reduction in hypertension control rates, but these data suggest that more intensive reduction in systolic BP may also result in substantial reduction in cardiovascular disease risk.

However, the findings of Ko et al. must be interpreted with caution. This study provides CVD event rate estimates based on experience in all Korean adults with hypertension, whereas the SPRINT experience was derived from a much more restricted sample of older U.S. adults with hypertension and a high risk of CVD.

Guideline committees and the practice community must use caution when generalizing SPRINT results to adults with a profile that differs from the participants in the parent study.

Dr. Paul K. Whelton is in the department of epidemiology, Tulane University School of Public Health and Tropical Medicine, New Orleans, and Paul Muntner, Ph.D., is in the department of epidemiology at the University of Alabama at Birmingham. These comments were part of an editorial (J Am Coll Cardiol. 2016 Jun 21. doi 10.1016/j.jacc.2016.04.010). Dr. Whelton serves as chair of the SPRINT steering committee. Dr. Muntner has received grant support from Amgen unrelated to the topic of the current paper.


FROM THE JOURNAL OF THE AMERICAN COLLEGE OF CARDIOLOGY

Key clinical point: Meeting the more aggressive SPRINT blood pressure targets is associated with fewer major cardiovascular events, but significantly fewer people meet them than meet the 2014 recommendations.

Major finding: Only 11.9% of hypertensive adults would meet the treatment goals of the SPRINT criteria, compared with 70.8% who would meet the 2014 recommendations.

Data source: Database study in two population-based Korean cohorts comprising 81,311 adults.

Disclosures: The National Evidence-Based Healthcare Collaborating Agency, Seoul, South Korea, funded the study. No conflicts of interest were declared.

Tissue flap reconstruction associated with higher costs, postop complication risk

LOS ANGELES – In patients undergoing abdominoperineal resection, the use of locoregional tissue flaps was associated with higher rates of perioperative complications, longer hospital stays, and higher total hospital charges than in patients who did not undergo tissue flap reconstruction, an analysis of national data showed.

The findings come at a time when closure of perineal wounds with tissue flaps is an increasingly common approach, especially in academic institutions, Dr. Nicole Lopez said at the annual meeting of the American Society of Colon and Rectal Surgeons. “The role of selection bias in this [study] is difficult to determine, but I think it’s important that we clarify the utility of this technique before more widespread adoption of the approach,” she said.

According to Dr. Lopez of the department of surgery at the University of North Carolina, Chapel Hill, perineal wound complications can occur in 16%-49% of patients undergoing abdominoperineal resection. Contributing factors include noncollapsible dead space, bacterial contamination, wound characteristics, and patient comorbidities.

In an effort to identify national trends in the use of tissue flaps in patients undergoing abdominoperineal resection for rectal or anal cancer, as well as the effect of this approach on perioperative complications, length of stay, and total hospital charges, Dr. Lopez and her associates used the National Inpatient Sample to identify patients aged 18-80 years who were treated between 2000 and 2013. They excluded patients undergoing nonelective procedures or additional pelvic organ resections. Patients who received a tissue flap were compared with those who did not.

Dr. Lopez reported results from 298 patients who received a tissue flap graft and 12,107 who did not. Variables significantly associated with receiving a tissue flap, compared with not receiving one, were being male (73% vs. 66%, respectively; P = .01), having anal cancer (32% vs. 11%; P less than .0001), being a smoker (34% vs. 23%; P less than .0001), undergoing the procedure in a large hospital (75% vs. 67%; P = .003), and undergoing the procedure in an urban teaching hospital (89% vs. 53%; P less than .0001).

The researchers also found that the proportion of abdominoperineal resections performed with a concurrent tissue flap rose significantly during the study period, from 0.4% in 2000 to 6% in 2013 (P less than .0001). "This was most noted in teaching institutions, compared with nonteaching institutions," Dr. Lopez said.

Bivariate analysis revealed that, compared with patients who did not receive tissue flaps, those who did had higher rates of postoperative complications (43% vs. 33%, respectively; P less than .0001), a longer hospital stay (mean of 9 vs. 7 days; P less than .001), and higher total hospital charges (mean of $67,200 vs. $42,300; P less than .001). These trends persisted on multivariate analysis. Specifically, patients who received tissue flaps were 4.14 times more likely to have wound complications, had a length of stay that averaged an additional 2.78 days, and had $28,000 more in total hospital charges.
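The difference between the raw and the adjusted figures can be made concrete with simple arithmetic. The minimal Python sketch below uses only the numbers reported above (the variable and function names are illustrative, not from the study) to compute the unadjusted gaps: 10 percentage points in complications, 2 days in length of stay, and $24,900 in charges. The multivariate estimates (2.78 days, $28,000) presumably reflect adjustment for other patient and hospital factors that the article does not list, so they need not match these raw gaps. The sketch also checks that the two groups together total 12,405 patients.

```python
# Values as reported in the article; the flap group is listed first in each pair.
FLAP_PATIENTS = 298
NO_FLAP_PATIENTS = 12_107

reported = {
    "postoperative complications (percentage points)": (43, 33),
    "mean length of stay (days)": (9, 7),
    "mean total hospital charges ($)": (67_200, 42_300),
}


def unadjusted_difference(flap: float, no_flap: float) -> float:
    """Raw difference between groups (flap minus no flap), with no covariate adjustment."""
    return flap - no_flap


print(f"cohort size: {FLAP_PATIENTS + NO_FLAP_PATIENTS:,}")
for outcome, (flap, no_flap) in reported.items():
    print(f"{outcome}: {unadjusted_difference(flap, no_flap):,}")
```

Because these are simple subtractions of group means or rates, they say nothing about confounding; only the study's multivariate model, whose covariates are not described here, addresses that.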

“The extended duration of the study enables evaluation of trends over time, and this is the first study that analyzes the costs associated with these procedures,” Dr. Lopez said. She acknowledged certain limitations of the study, including its retrospective, nonrandomized design and the potential for selection bias. In addition, the National Inpatient Sample “is susceptible to coding errors, a lack of patient-specific oncologic history, and the inability to assess postdischarge occurrences, since this only looks at inpatient stays.”

Dr. Lopez reported having no financial disclosures.

AT THE ASCRS ANNUAL MEETING

Key clinical point: Complications occurred more often in patients who underwent concurrent tissue flap reconstruction during abdominoperineal resection, compared with those who did not.

Major finding: Compared with patients who did not receive tissue flaps, those who did were 4.14 times more likely to have wound complications, had a length of stay that averaged an additional 2.78 days, and had $28,000 more in total hospital charges.

Data source: A study of 12,405 patients aged 18-80 years from the National Inpatient Sample who underwent abdominoperineal resection for rectal or anal cancer between 2000 and 2013.

Disclosures: Dr. Lopez reported having no financial disclosures.

VIDEO: Updated axial SpA recommendations include IL-17 inhibitors

LONDON – Treatment of axial spondyloarthritis with an interleukin-17 inhibitor has become an officially recommended option for the first time, in a new update to management recommendations for this disease released by a task force assembled jointly by the Assessment of SpondyloArthritis International Society and the European League Against Rheumatism.

The update replaces the recommendations for managing patients with ankylosing spondylitis that the two groups last released in 2010 (Ann Rheum Dis. 2011 June;70[6]:896-904), as well as the two organizations’ prior recommendations for using tumor necrosis factor (TNF) inhibitors in these patients (Ann Rheum Dis. 2011 June;70[6]:905-8). The new update also broadens the disease spectrum from ankylosing spondylitis to axial spondyloarthritis (SpA).

The latest recommendations continue to place nonsteroidal anti-inflammatory drugs (NSAIDs) as first-line pharmacotherapy for patients with axial SpA to control pain and stiffness, and continue to place treatment with a biological disease-modifying antirheumatic drug (DMARD), which in current practice is typically a TNF inhibitor, as second-line treatment after NSAIDs. The recommendations specify that a biological DMARD should be started in patients who have both failed treatment with at least two different NSAIDs over at least 4 weeks of treatment and have active disease documented by either of two standard measures of disease activity in axial SpA: a score of at least 2.1 on the ankylosing spondylitis disease activity score (ASDAS) or a score of at least 4 on the Bath ankylosing spondylitis disease activity index (BASDAI).
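The two-part condition for starting a biological DMARD described above (documented NSAID failure and active disease on either scale) is a small decision rule. The Python sketch below encodes only the criteria named in this article, with hypothetical type, function, and constant names; it is an illustration of the logic, not a clinical tool, and the published recommendations may contain further conditions that the article does not describe.

```python
from dataclasses import dataclass
from typing import Optional

# Thresholds as reported in the article (constant names are hypothetical).
MIN_NSAIDS_FAILED = 2    # at least two different NSAIDs
MIN_NSAID_WEEKS = 4      # over at least 4 weeks of treatment
ASDAS_THRESHOLD = 2.1    # ASDAS score of at least 2.1
BASDAI_THRESHOLD = 4.0   # BASDAI score of at least 4


@dataclass
class AxialSpAPatient:
    """Hypothetical record holding only the fields the article mentions."""
    nsaids_failed: int              # number of different NSAIDs that failed
    nsaid_weeks: float              # total weeks of NSAID treatment
    asdas: Optional[float] = None   # None if ASDAS was not measured
    basdai: Optional[float] = None  # None if BASDAI was not measured


def nsaid_failure_documented(p: AxialSpAPatient) -> bool:
    return p.nsaids_failed >= MIN_NSAIDS_FAILED and p.nsaid_weeks >= MIN_NSAID_WEEKS


def active_disease_documented(p: AxialSpAPatient) -> bool:
    # Either measure is sufficient on its own; an unmeasured score counts as not met.
    asdas_active = p.asdas is not None and p.asdas >= ASDAS_THRESHOLD
    basdai_active = p.basdai is not None and p.basdai >= BASDAI_THRESHOLD
    return asdas_active or basdai_active


def meets_reported_bdmard_criteria(p: AxialSpAPatient) -> bool:
    """True only when both conditions described in the article hold."""
    return nsaid_failure_documented(p) and active_disease_documented(p)


# Two NSAIDs failed over 4 weeks, ASDAS 2.5: both conditions met.
print(meets_reported_bdmard_criteria(AxialSpAPatient(nsaids_failed=2, nsaid_weeks=4, asdas=2.5)))
# Only one NSAID failed, despite BASDAI 5: NSAID condition not met.
print(meets_reported_bdmard_criteria(AxialSpAPatient(nsaids_failed=1, nsaid_weeks=6, basdai=5.0)))
```

Splitting the rule into two helpers mirrors the article's "both ... and" wording, so each half can be checked separately.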

Incorporation of the ASDAS as a potential alternative to the BASDAI for assessing disease activity in these patients is another new feature of these recommendations, noted Dr. Désirée van der Heijde, convenor of the update task force, who presented the new recommendations at the European Congress of Rheumatology.

The new recommendations place an interleukin (IL)-17 inhibitor as a third-line management option for patients who fail to respond adequately to a first TNF inhibitor, and they also say that an alternative to starting an IL-17 inhibitor at this stage is to try a second TNF inhibitor instead. The IL-17 inhibitor class includes secukinumab (Cosentyx), which the Food and Drug Administration approved for treating active ankylosing spondylitis in January 2016 and which also has approval for the same indication from the European Medicines Agency.

The updated recommendations retain from the prior version the advice to use biological DMARDs only after other treatments have failed, as well as the advocacy of nondrug therapy with regular exercise, smoking cessation, and physical therapy when appropriate as the very first therapeutic step, before even starting an NSAID regimen. For patients with axial SpA who have peripheral arthritis, the recommendations say that clinicians can consider a local glucocorticoid injection and a course of sulfasalazine. The recommendations do not endorse treatment with a conventional synthetic DMARD for patients with purely axial disease, and they recommend against long-term treatment with a systemic corticosteroid. The update says that analgesics may be considered for residual pain after previously recommended treatments have failed, are contraindicated, or are poorly tolerated.

Another new feature of the updated recommendations is the endorsement of treating axial SpA patients to a predefined target, although the recommendations leave that target undefined, as something for the treating clinician to discuss with and tailor to each patient, said Dr. van der Heijde, professor of rheumatology at Leiden University Medical Center in the Netherlands. The update also introduces, for the first time, the recommendation to consider tapering a biological DMARD in patients who achieve remission.

Dr. van der Heijde said that she has been a consultant to 17 drug companies.

On Twitter @mitchelzoler

It’s important to have updated treatment recommendations as we accrue new evidence and treatment options. These recommendations can now address interleukin (IL)-17 inhibitors, which were not available for U.S. use to treat ankylosing spondylitis when the American College of Rheumatology and its collaborating organizations released updated recommendations for treating ankylosing spondylitis and nonradiographic axial spondyloarthritis in September 2015 (Arthritis Rheumatol. 2016 Feb;68[2]:282-98). Having IL-17 inhibitors now available, joining tumor necrosis factor (TNF) inhibitors as a second class of biological drugs to treat these patients, is a big step forward.