Glycemic Control eQUIPS yields success at Dignity Health Sequoia Hospital

Glucometrics database aids tracking, trending

In honor of Diabetes Awareness Month, The Hospitalist spoke recently with Stephanie Dizon, PharmD, BCPS, director of pharmacy at Dignity Health Sequoia Hospital in Redwood City, Calif. Dr. Dizon was the project lead for Dignity Health Sequoia’s participation in the Society of Hospital Medicine’s Glycemic Control eQUIPS program. The Northern California hospital was recognized as a top performer in the program.

SHM’s eQUIPS offers a virtual library of resources, including a step-by-step implementation guide, that addresses issues ranging from subcutaneous insulin protocols to care coordination and good hypoglycemia management. In addition, the program offers access to a data center for performance tracking and benchmarking.

Dr. Dizon shared her experience as a participant in the program, and explained its impact on glycemic control at Dignity Health Sequoia Hospital.

Could you tell us about your personal involvement with SHM?

I started as the quality lead for glycemic control for Sequoia Hospital in 2017 while serving as the clinical pharmacy manager. Currently, I am the director of pharmacy.

What inspired your institution to enroll in the GC eQUIPS program? What were the challenges it helped you address?

Sequoia Hospital started on this journey to improve overall glycemic control in a collaborative with eight other Dignity Health hospitals in 2011. At Sequoia Hospital, this effort was led by Karen Harrison, RN, MSN, CCRN. At the time, Dignity Health saw variations in insulin management and adverse events, and this inspired the group to review their practices and try to find a better way to standardize them. The hope was that sharing information and making efforts to standardize practices would lead to better glycemic control.

Enrollment in the GC eQUIPS program helped Sequoia Hospital efficiently analyze data that would otherwise be too large to manage. In addition, tracking and trending these large data sets helped us not only to see where the hospital’s greatest challenges in glycemic control lie but also to observe the impact of the changes we make. We were part of a nine-site study that proved the effectiveness of GC eQUIPS and highlighted the collective success across the health system.

What did you find most useful in the suite of resources included in eQUIPS?

The benchmarking webinars and informational webinars that have been provided by Greg Maynard, MD, over the years have been especially helpful. They have broadened my understanding of glycemic control. The glucometrics database is especially helpful for tracking and trending – we share these reports on a monthly basis with nursing and provider leadership. In addition, being able to benchmark ourselves with other hospitals pushes us to improve and keep an eye on glycemic control.

Are there any other highlights from your participation – and your institution’s – in the program that you feel would be beneficial to others who may be considering enrollment?

Having access to the tools available in the GC eQUIPS program is very powerful for data analysis and benchmarking. As a result, it allows the people at an institution to focus on the day-to-day tasks, clinical initiatives, and building a culture that can make a program successful instead of focusing on data collection.

For more information on SHM’s Glycemic Control resources or to enroll in eQUIPS, visit hospitalmedicine.org/gc.

Better time data from in-hospital resuscitations

Benefits of an undocumented defibrillator feature

Research and quality improvement (QI) related to in-hospital cardiopulmonary resuscitation attempts (“codes” from here forward) are hampered significantly by the poor quality of data on time intervals from arrest onset to clinical interventions.1

In 2000, the American Heart Association’s (AHA) Emergency Cardiac Care Guidelines said that current data were inaccurate and that greater accuracy was “the key to future high-quality research.”2 Since then, the general situation has not improved. Time intervals reported by the national AHA-supported registry Get With the Guidelines–Resuscitation (GWTG-R, 200+ hospitals enrolled) include a figure from all hospitals for times to first defibrillation of 1 minute median and 0 minutes first interquartile.3 Such numbers are typical, when they are tracked at all, but they strain credulity. Prima facie evidence against them is available at most clinical simulation centers simply by timing simulated defibrillation attempts under realistic conditions, as in “mock codes.”4,5

Taking artificially short time-interval data from GWTG-R or other sources at face value can hide serious delays in response to in-hospital arrests. It can also lead to flawed studies and highly questionable conclusions.6

The key to accuracy of critical time intervals – the intervals from arrest to key interventions – is an accurate time of arrest.7 Codes are typically recorded in handwritten form, though they may later be transcribed or scanned into electronic records. The “start” of the code for unmonitored arrests and most monitored arrests is typically taken to be the time that a human bedside recorder, arriving at an unknown interval after the arrest, writes down the first intervention. Researchers acknowledged the problem of artificially short time intervals in 2005, but they did not propose a remedy.1 Since then, the problem of in-hospital resuscitation delays has received little to no attention in the professional literature.

Description of feature

To get better time data from unmonitored resuscitation attempts, it is necessary to use a “surrogate marker” – a stand-in or substitute event – for the time of arrest. This event should occur reliably for each code, and as near as possible to the actual time of arrest. The main early events in a code are starting basic CPR, paging the code, and moving the defibrillator (usually on a code cart) to the scene. Ideally these events occur almost simultaneously, but that is not consistently achieved.

There are significant problems with use of the first two events as surrogate markers: the time of starting CPR cannot be determined accurately, and paging the code is dependent on several intermediate steps that lead to inaccuracy. Furthermore, the times of both markers are recorded using clocks that are typically not synchronized with the clock used for recording the code (defibrillator clock or the human recorder’s timepiece). Reconciliation of these times with the code record, while not particularly difficult,8 is rarely if ever done.

Defibrillator Power On is recorded on the defibrillator timeline and thus does not need to be reconciled with the defibrillator clock, but it is not suitable as a surrogate marker because this time is highly variable: It often does not occur until the time that monitoring pads are placed. Moving the code cart to the scene, which must occur early in the code, is a much more valid surrogate marker, with the added benefit that it can be marked on the defibrillator timeline.

The undocumented feature described here provides that marker. This feature has been a part of the LIFEPAK 20/20e’s design since it was launched in 2002, but it has not been publicized until now and is not documented in the user manual.

Hospital defibrillators are connected to alternating-current (AC) power when not in use. When the defibrillator is moved to the scene of the code, it is obviously necessary to disconnect the defibrillator from the wall outlet, at which time “AC Power Loss” is recorded on the event record generated by the LIFEPAK 20/20e defibrillators. The defibrillator may be powered on up to 10 minutes later while retaining the AC Power Loss marker in the event record. This surrogate marker for the start time will be on the same timeline as other events recorded by the defibrillator, including times of first monitoring and shocks.

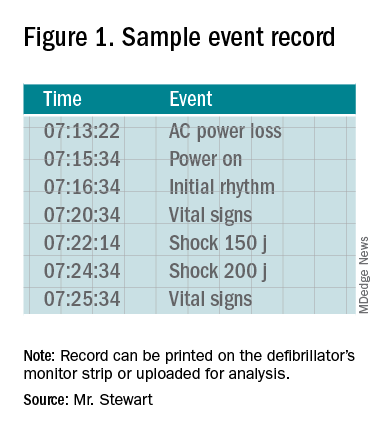

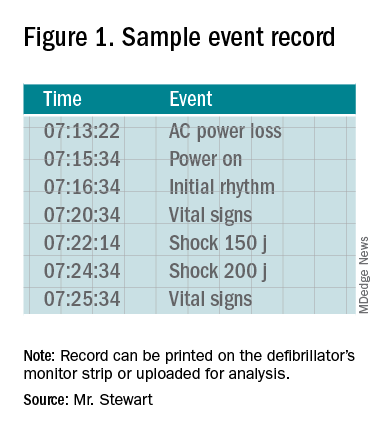

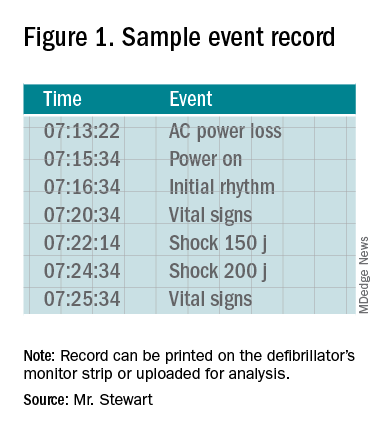

Once the event record is acquired, determining time intervals is accomplished by subtracting clock times (see example, Figure 1).

In the example, using AC Power Loss as the start time, time intervals from arrest to first monitoring (Initial Rhythm on the Event Record) and first shock were 3:12 (07:16:34 minus 07:13:22) and 8:52 (07:22:14 minus 07:13:22). Note that if Power On were used as the surrogate time of arrest in the example, the calculated intervals would be artificially shorter, by 2 min 12 sec.
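The subtraction is simple enough to script. The following is a minimal sketch in Python that uses the clock times quoted in the example. The function and variable names are illustrative only and are not part of any defibrillator software, and the sketch assumes both readings fall on the same calendar day.

```python
from datetime import datetime, timedelta

CLOCK_FORMAT = "%H:%M:%S"


def interval(start: str, end: str) -> timedelta:
    """Elapsed time between two same-day HH:MM:SS clock readings."""
    return datetime.strptime(end, CLOCK_FORMAT) - datetime.strptime(start, CLOCK_FORMAT)


def mmss(delta: timedelta) -> str:
    """Format a non-negative interval as minutes:seconds."""
    total_seconds = int(delta.total_seconds())
    return f"{total_seconds // 60}:{total_seconds % 60:02d}"


# Clock times as quoted from the example event record.
ac_power_loss = "07:13:22"   # surrogate marker for time of arrest
initial_rhythm = "07:16:34"  # first monitoring
first_shock = "07:22:14"     # first defibrillation

to_monitoring = interval(ac_power_loss, initial_rhythm)
to_shock = interval(ac_power_loss, first_shock)

print("Arrest to first monitoring:", mmss(to_monitoring))
print("Arrest to first shock:", mmss(to_shock))
```

For a code that spans midnight, the later reading would need a day added before subtracting.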

Using this undocumented feature, any facility using LIFEPAK 20/20e defibrillators can easily measure critical time intervals during resuscitation attempts with much greater accuracy, including times to first monitoring and first defibrillation. Each defibrillator stores code summaries sufficient for dozens of events, and accessing past data is simple. Analysis of the data can provide a much-improved measure of the facility’s speed of response as a baseline for QI.

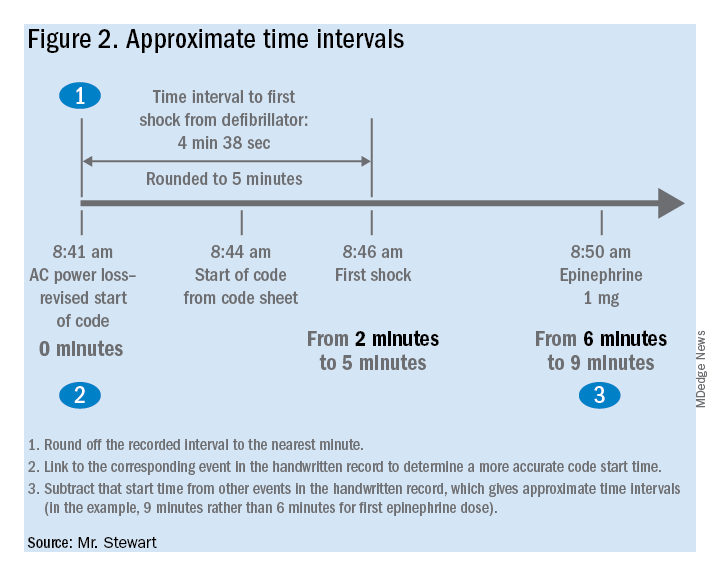

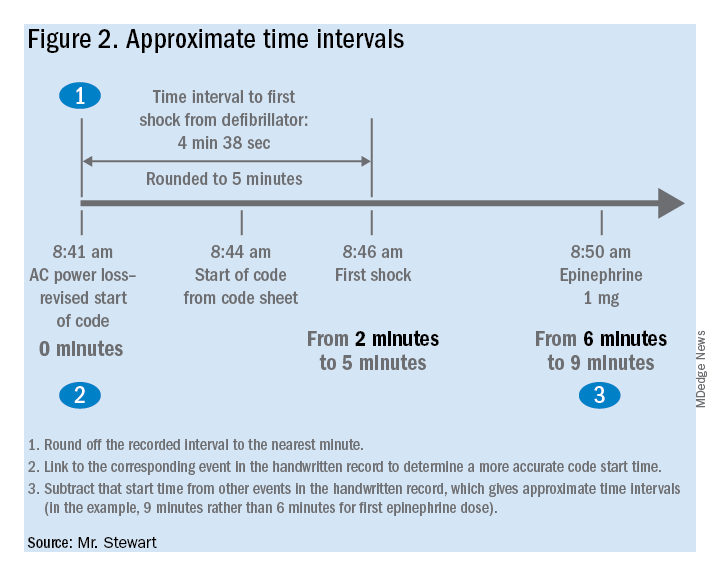

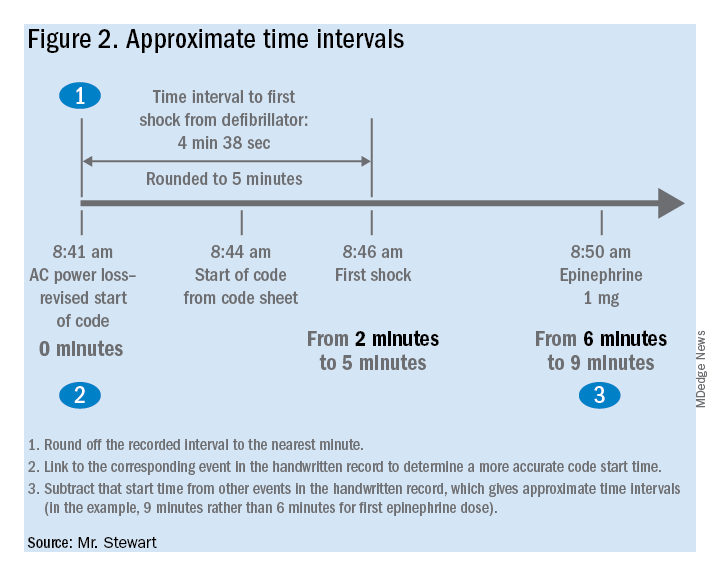

If desired, the time-interval data thus obtained can also be integrated with the handwritten record. The usual handwritten code sheet records times only in whole minutes, but with one of the more accurate intervals from the defibrillator – to first monitoring or first defibrillation – an adjusted time of arrest can be added to any code record to get other intervals that better approximate real-world response times.9
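One way to read this adjustment is sketched below in Python. The idea is to take an event that appears on both records (here, first shock), subtract the defibrillator's arrest-to-shock interval from the handwritten time of that event, and treat the result as the adjusted time of arrest on the handwritten timeline. All times, names, and the choice of anchor event are hypothetical, chosen only for illustration.

```python
from datetime import datetime, timedelta

SHEET_FORMAT = "%H:%M"  # handwritten code sheets record whole minutes only


def adjusted_arrest_time(sheet_anchor: str, defib_interval: timedelta) -> datetime:
    """Back-calculate the arrest time on the handwritten record's clock.

    sheet_anchor   -- handwritten time of an event also on the defibrillator record
    defib_interval -- defibrillator interval from AC Power Loss to that same event
    """
    return datetime.strptime(sheet_anchor, SHEET_FORMAT) - defib_interval


def interval_from_arrest(sheet_time: str, arrest: datetime) -> timedelta:
    """Interval from the adjusted arrest time to another handwritten event."""
    return datetime.strptime(sheet_time, SHEET_FORMAT) - arrest


# Hypothetical values, not taken from any real code record.
sheet_first_shock = "07:25"                               # as written on the code sheet
defib_arrest_to_shock = timedelta(minutes=8, seconds=30)  # from the event record
sheet_other_event = "07:28"                               # any later handwritten event

arrest = adjusted_arrest_time(sheet_first_shock, defib_arrest_to_shock)
to_other_event = interval_from_arrest(sheet_other_event, arrest)

print("Adjusted time of arrest:", arrest.strftime("%H:%M:%S"))
print("Arrest to other event:", to_other_event)
```

Because the handwritten times are rounded to whole minutes, intervals derived this way remain approximate to within about a minute.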

Research prospects

The feature opens multiple avenues for future research. Acquiring data by this method should be simple for any facility using LIFEPAK 20/20e defibrillators as its standard devices. Matching the existing handwritten code records with the time intervals obtained using this surrogate time marker will show how inaccurate the commonly reported data are. This can be done with a retrospective study comparing the time intervals from the archived event records with those from the handwritten records, to provide an example of the inaccuracy of data reported in the medical literature. The more accurate picture of time intervals can provide a much-needed yardstick for future research aimed at shortening response times.

The feature can facilitate aggregation of data across multiple facilities that use the LIFEPAK 20/20e as their standard defibrillator. Also, it is possible that other defibrillator manufacturers will duplicate this feature with their devices – it should produce valid data with any defibrillator – although there may be legal and technical obstacles to adopting it.

Combining data from multiple sites might lead to an important contribution to resuscitation research: a reasonably accurate overall survival curve for in-hospital tachyarrhythmic arrests. A commonly cited but crude guideline is that survival from tachyarrhythmic arrests decreases by 10%-15% per minute as defibrillation is delayed,10 but it seems unlikely that the relationship would be linear: Experience and the literature suggest that survival drops very quickly in the first few minutes, flattening out as elapsed time after arrest increases. Aggregating the much more accurate time-interval data from multiple facilities should produce a survival curve for in-hospital tachyarrhythmic arrests that comes much closer to reality.
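The difference between a linear and a flattening decline can be illustrated with a short Python sketch. It applies the quoted 10% per minute figure in two ways: as a fixed subtraction each minute (linear) and as a proportional loss each minute (compounding). The baseline survival of 70% is a hypothetical placeholder, and neither function is a fitted or validated model; the sketch only shows how the shapes differ.

```python
def linear_survival(baseline: float, drop_per_min: float, minutes: float) -> float:
    """Survival falls by a fixed amount each minute, floored at zero."""
    return max(0.0, baseline - drop_per_min * minutes)


def compounding_survival(baseline: float, drop_per_min: float, minutes: float) -> float:
    """Survival falls by a fixed fraction of the remaining value each minute."""
    return baseline * (1.0 - drop_per_min) ** minutes


BASELINE = 0.70  # hypothetical survival with immediate defibrillation
DROP = 0.10      # the lower end of the quoted 10%-15% per minute range

print("min  linear  compounding")
for minute in range(0, 11, 2):
    lin = linear_survival(BASELINE, DROP, minute)
    comp = compounding_survival(BASELINE, DROP, minute)
    print(f"{minute:>3}  {lin:>6.2f}  {comp:>11.2f}")
```

Under these assumptions the linear reading reaches zero within 7 minutes, while the compounding reading loses most in the early minutes and then flattens. Only aggregated, accurately timed data could show which shape, if either, resembles the real curve.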

Conclusion

It is unknown whether this feature will be used to improve the accuracy of reported code response times. It greatly facilitates acquiring more accurate times, but the task has never been especially difficult – particularly when weighed against the importance of better time data for QI and research.8 One possible impediment may be institutional obstacles to publishing studies with accurate response times due to concerns about public relations or legal exposure: The more accurate times will almost certainly be longer than those generally reported.

As was stated almost 2 decades ago and remains true today, acquiring accurate time-interval data is “the key to future high-quality research.”2 It is also key to improving any hospital’s quality of code response. As described in this article, better time data can easily be acquired. It is time for this important problem to be recognized and remedied.

Mr. Stewart has worked as a hospital nurse in Seattle for many years, and has numerous publications to his credit related to resuscitation issues. You can contact him at [email protected].

References

1. Kaye W et al. When minutes count – the fallacy of accurate time documentation during in-hospital resuscitation. Resuscitation. 2005;65(3):285-90.

2. The American Heart Association in collaboration with the International Liaison Committee on Resuscitation. Guidelines 2000 for Cardiopulmonary Resuscitation and Emergency Cardiovascular Care, Part 4: the automated external defibrillator: key link in the chain of survival. Circulation. 2000;102(8 Suppl):I-60-76.

3. Chan PS et al. American Heart Association National Registry of Cardiopulmonary Resuscitation Investigators. Delayed time to defibrillation after in-hospital cardiac arrest. N Engl J Med. 2008 Jan 3;358(1):9-17. doi: 10.1056/NEJMoa0706467.

4. Hunt EA et al. Simulation of in-hospital pediatric medical emergencies and cardiopulmonary arrests: Highlighting the importance of the first 5 minutes. Pediatrics. 2008;121(1):e34-e43. doi: 10.1542/peds.2007-0029.

5. Reeson M et al. Defibrillator design and usability may be impeding timely defibrillation. Jt Comm J Qual Patient Saf. 2018 Sep;44(9):536-544. doi: 10.1016/j.jcjq.2018.01.005.

6. Hunt EA et al. American Heart Association’s Get With The Guidelines – Resuscitation Investigators. Association between time to defibrillation and survival in pediatric in-hospital cardiac arrest with a first documented shockable rhythm. JAMA Netw Open. 2018;1(5):e182643. doi: 10.1001/jamanetworkopen.2018.2643.

7. Cummins RO et al. Recommended guidelines for reviewing, reporting, and conducting research on in-hospital resuscitation: the in-hospital “Utstein” style. Circulation. 1997;95:2213-39.

8. Stewart JA. Determining accurate call-to-shock times is easy. Resuscitation. 2005 Oct;67(1):150-1.

9. In infrequent cases, the code cart and defibrillator may be moved to a deteriorating patient before a full arrest. Such occurrences should be analyzed separately or excluded from analysis.

10. Valenzuela TD et al. Estimating effectiveness of cardiac arrest interventions: a logistic regression survival model. Circulation. 1997;96(10):3308-13. doi: 10.1161/01.cir.96.10.3308.

Benefits of an undocumented defibrillator feature

Benefits of an undocumented defibrillator feature

Research and quality improvement (QI) related to in-hospital cardiopulmonary resuscitation attempts (“codes” from here forward) are hampered significantly by the poor quality of data on time intervals from arrest onset to clinical interventions.1

In 2000, the American Heart Association’s (AHA) Emergency Cardiac Care Guidelines said that current data were inaccurate and that greater accuracy was “the key to future high-quality research”2 – but since then, the general situation has not improved: Time intervals reported by the national AHA-supported registry Get With the Guidelines–Resuscitation (GWTG-R, 200+ hospitals enrolled) include a figure from all hospitals for times to first defibrillation of 1 minute median and 0 minutes first interquartile.3 Such numbers are typical – when they are tracked at all – but they strain credulity, and prima facie evidence is available at most clinical simulation centers simply by timing simulated defibrillation attempts under realistic conditions, as in “mock codes.”4,5

Taking artificially short time-interval data from GWTG-R or other sources at face value can hide serious delays in response to in-hospital arrests. It can also lead to flawed studies and highly questionable conclusions.6

The key to accuracy of critical time intervals – the intervals from arrest to key interventions – is an accurate time of arrest.7 Codes are typically recorded in handwritten form, though they may later be transcribed or scanned into electronic records. The “start” of the code for unmonitored arrests and most monitored arrests is typically taken to be the time that a human bedside recorder, arriving at an unknown interval after the arrest, writes down the first intervention. Researchers acknowledged the problem of artificially short time intervals in 2005, but they did not propose a remedy.1 Since then, the problem of in-hospital resuscitation delays has received little to no attention in the professional literature.

Description of feature

To get better time data from unmonitored resuscitation attempts, it is necessary to use a “surrogate marker” – a stand-in or substitute event – for the time of arrest. This event should occur reliably for each code, and as near as possible to the actual time of arrest. The main early events in a code are starting basic CPR, paging the code, and moving the defibrillator (usually on a code cart) to the scene. Ideally these events occur almost simultaneously, but that is not consistently achieved.

There are significant problems with use of the first two events as surrogate markers: the time of starting CPR cannot be determined accurately, and paging the code is dependent on several intermediate steps that lead to inaccuracy. Furthermore, the times of both markers are recorded using clocks that are typically not synchronized with the clock used for recording the code (defibrillator clock or the human recorder’s timepiece). Reconciliation of these times with the code record, while not particularly difficult,8 is rarely if ever done.

Defibrillator Power On is recorded on the defibrillator timeline and thus does not need to be reconciled with the defibrillator clock, but it is not suitable as a surrogate marker because this time is highly variable: It often does not occur until the time that monitoring pads are placed. Moving the code cart to the scene, which must occur early in the code, is a much more valid surrogate marker, with the added benefit that it can be marked on the defibrillator timeline.

The undocumented feature described here provides that marker. This feature has been a part of the LIFEPAK 20/20e’s design since it was launched in 2002, but it has not been publicized until now and is not documented in the user manual.

Hospital defibrillators are connected to alternating-current (AC) power when not in use. When the defibrillator is moved to the scene of the code, it is obviously necessary to disconnect the defibrillator from the wall outlet, at which time “AC Power Loss” is recorded on the event record generated by the LIFEPAK 20/20e defibrillators. The defibrillator may be powered on up to 10 minutes later while retaining the AC Power Loss marker in the event record. This surrogate marker for the start time will be on the same timeline as other events recorded by the defibrillator, including times of first monitoring and shocks.

Once the event record is acquired, determining time intervals is accomplished by subtracting clock times (see example, Figure 1).

In the example, using AC Power Loss as the start time, time intervals from arrest to first monitoring (Initial Rhythm on the Event Record) and first shock were 3:12 (07:16:34 minus 07:13:22) and 8:42 (07:22:14 minus 07:13:22). Note that if Power On were used as the surrogate time of arrest in the example, the calculated intervals would be artificially shorter, by 2 min 12 sec.

Using this undocumented feature, any facility using LIFEPAK 20/20e defibrillators can easily measure critical time intervals during resuscitation attempts with much greater accuracy, including times to first monitoring and first defibrillation. Each defibrillator stores code summaries sufficient for dozens of events and accessing past data is simple. Analysis of the data can provide a much-improved measure of the facility’s speed of response as a baseline for QI.

If desired, the time-interval data thus obtained can also be integrated with the handwritten record. The usual handwritten code sheet records times only in whole minutes, but with one of the more accurate intervals from the defibrillator – to first monitoring or first defibrillation – an adjusted time of arrest can be added to any code record to get other intervals that better approximate real-world response times.9

Research prospects

The feature opens multiple avenues for future research. Acquiring data by this method should be simple for any facility using LIFEPAK 20/20e defibrillators as its standard devices. Matching the existing handwritten code records with the time intervals obtained using this surrogate time marker will show how inaccurate the commonly reported data are. This can be done with a retrospective study comparing the time intervals from the archived event records with those from the handwritten records, to provide an example of the inaccuracy of data reported in the medical literature. The more accurate picture of time intervals can provide a much-needed yardstick for future research aimed at shortening response times.

The feature can facilitate aggregation of data across multiple facilities that use the LIFEPAK 20/20e as their standard defibrillator. Also, it is possible that other defibrillator manufacturers will duplicate this feature with their devices – it should produce valid data with any defibrillator – although there may be legal and technical obstacles to adopting it.

Combining data from multiple sites might lead to an important contribution to resuscitation research: a reasonably accurate overall survival curve for in-hospital tachyarrhythmic arrests. A commonly cited but crude guideline is that survival from tachyarrhythmic arrests decreases by 10%-15% per minute as defibrillation is delayed,10 but it seems unlikely that the relationship would be linear: Experience and the literature suggest that survival drops very quickly in the first few minutes, flattening out as elapsed time after arrest increases. Aggregating the much more accurate time-interval data from multiple facilities should produce a survival curve for in-hospital tachyarrhythmic arrests that comes much closer to reality.

Conclusion

It is unknown whether this feature will be used to improve the accuracy of reported code response times. It greatly facilitates acquiring more accurate times, but the task has never been especially difficult – particularly when balanced with the importance of better time data for QI and research.8 One possible impediment may be institutional obstacles to publishing studies with accurate response times due to concerns about public relations or legal exposure: The more accurate times will almost certainly be longer than those generally reported.

Research and quality improvement (QI) related to in-hospital cardiopulmonary resuscitation attempts (“codes” from here forward) are hampered significantly by the poor quality of data on time intervals from arrest onset to clinical interventions.1

In 2000, the American Heart Association’s (AHA) Emergency Cardiac Care Guidelines said that current data were inaccurate and that greater accuracy was “the key to future high-quality research.”2 Since then, the general situation has not improved: Time intervals reported by the national AHA-supported registry Get With the Guidelines–Resuscitation (GWTG-R, 200+ hospitals enrolled) include a figure from all hospitals for times to first defibrillation of 1 minute median and 0 minutes first interquartile.3 Such numbers are typical, when they are tracked at all, but they strain credulity. Prima facie evidence against them is available at most clinical simulation centers, simply by timing simulated defibrillation attempts under realistic conditions, as in “mock codes.”4,5

Taking artificially short time-interval data from GWTG-R or other sources at face value can hide serious delays in response to in-hospital arrests. It can also lead to flawed studies and highly questionable conclusions.6

The key to accuracy of critical time intervals – the intervals from arrest to key interventions – is an accurate time of arrest.7 Codes are typically recorded in handwritten form, though they may later be transcribed or scanned into electronic records. The “start” of the code for unmonitored arrests and most monitored arrests is typically taken to be the time that a human bedside recorder, arriving at an unknown interval after the arrest, writes down the first intervention. Researchers acknowledged the problem of artificially short time intervals in 2005, but they did not propose a remedy.1 Since then, the problem of in-hospital resuscitation delays has received little to no attention in the professional literature.

Description of feature

To get better time data from unmonitored resuscitation attempts, it is necessary to use a “surrogate marker” – a stand-in or substitute event – for the time of arrest. This event should occur reliably for each code, and as near as possible to the actual time of arrest. The main early events in a code are starting basic CPR, paging the code, and moving the defibrillator (usually on a code cart) to the scene. Ideally these events occur almost simultaneously, but that is not consistently achieved.

There are significant problems with use of the first two events as surrogate markers: the time of starting CPR cannot be determined accurately, and paging the code is dependent on several intermediate steps that lead to inaccuracy. Furthermore, the times of both markers are recorded using clocks that are typically not synchronized with the clock used for recording the code (defibrillator clock or the human recorder’s timepiece). Reconciliation of these times with the code record, while not particularly difficult,8 is rarely if ever done.

Defibrillator Power On is recorded on the defibrillator timeline and thus does not need to be reconciled with the defibrillator clock, but it is not suitable as a surrogate marker because this time is highly variable: It often does not occur until the time that monitoring pads are placed. Moving the code cart to the scene, which must occur early in the code, is a much more valid surrogate marker, with the added benefit that it can be marked on the defibrillator timeline.

The undocumented feature described here provides that marker. This feature has been a part of the LIFEPAK 20/20e’s design since it was launched in 2002, but it has not been publicized until now and is not documented in the user manual.

Hospital defibrillators are connected to alternating-current (AC) power when not in use. When the defibrillator is moved to the scene of the code, it is obviously necessary to disconnect the defibrillator from the wall outlet, at which time “AC Power Loss” is recorded on the event record generated by the LIFEPAK 20/20e defibrillators. The defibrillator may be powered on up to 10 minutes later while retaining the AC Power Loss marker in the event record. This surrogate marker for the start time will be on the same timeline as other events recorded by the defibrillator, including times of first monitoring and shocks.

Once the event record is acquired, determining time intervals is accomplished by subtracting clock times (see example, Figure 1).

In the example, using AC Power Loss as the start time, time intervals from arrest to first monitoring (Initial Rhythm on the Event Record) and first shock were 3:12 (07:16:34 minus 07:13:22) and 8:52 (07:22:14 minus 07:13:22). Note that if Power On were used as the surrogate time of arrest in the example, the calculated intervals would be artificially shorter, by 2 min 12 sec.
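For facilities that want to tabulate many event records, the clock-time subtraction can be scripted. The following is a minimal Python sketch, not a vendor tool: it assumes the event times have already been transcribed by hand from the event record as same-day HH:MM:SS strings. The dictionary layout, the function names, and the "Shock 1" label are illustrative assumptions; only the AC Power Loss and Initial Rhythm labels and the three clock times come from the example above.

```python
from datetime import datetime, timedelta

TIME_FORMAT = "%H:%M:%S"


def interval(start: str, end: str) -> timedelta:
    """Elapsed time between two same-day HH:MM:SS clock readings.

    Assumes both readings come from the same defibrillator timeline and
    that the code does not span midnight.
    """
    return datetime.strptime(end, TIME_FORMAT) - datetime.strptime(start, TIME_FORMAT)


def as_min_sec(delta: timedelta) -> str:
    """Format an interval as M:SS, the style used in the text."""
    minutes, seconds = divmod(int(delta.total_seconds()), 60)
    return f"{minutes}:{seconds:02d}"


# Times transcribed from the example event record.
# "Shock 1" is a hypothetical label for the first shock.
event_record = {
    "AC Power Loss": "07:13:22",   # surrogate marker for time of arrest
    "Initial Rhythm": "07:16:34",  # first monitoring
    "Shock 1": "07:22:14",         # first defibrillation
}

start = event_record["AC Power Loss"]
time_to_monitoring = interval(start, event_record["Initial Rhythm"])
time_to_first_shock = interval(start, event_record["Shock 1"])

print("Arrest to first monitoring:", as_min_sec(time_to_monitoring))  # 3:12
print("Arrest to first shock:", as_min_sec(time_to_first_shock))
```

Because every time comes from the same defibrillator clock, no reconciliation between clocks is needed before subtracting.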

Using this undocumented feature, any facility using LIFEPAK 20/20e defibrillators can easily measure critical time intervals during resuscitation attempts with much greater accuracy, including times to first monitoring and first defibrillation. Each defibrillator stores code summaries sufficient for dozens of events and accessing past data is simple. Analysis of the data can provide a much-improved measure of the facility’s speed of response as a baseline for QI.

If desired, the time-interval data thus obtained can also be integrated with the handwritten record. The usual handwritten code sheet records times only in whole minutes, but with one of the more accurate intervals from the defibrillator – to first monitoring or first defibrillation – an adjusted time of arrest can be added to any code record to get other intervals that better approximate real-world response times.9
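The adjustment described above can be sketched in a few lines of Python. This is an illustration under stated assumptions, not an established procedure: the handwritten times and event names are hypothetical, and the only value taken from the defibrillator is the 3:12 interval to first monitoring from the earlier example. The idea is to anchor on one event that appears in both records, back-calculate the time of arrest on the handwritten clock, and then measure the other handwritten events from that adjusted time.

```python
from datetime import datetime, timedelta


def parse_hhmm(clock: str) -> datetime:
    """Parse a whole-minute handwritten time such as '07:20'."""
    return datetime.strptime(clock, "%H:%M")


def adjusted_arrest_time(handwritten_anchor: str, defib_interval: timedelta) -> datetime:
    """Back-calculate time of arrest on the handwritten record's clock.

    handwritten_anchor: handwritten time of an event that is also on the
        defibrillator timeline (here, first monitoring).
    defib_interval: interval from AC Power Loss to that same event,
        taken from the defibrillator event record.
    """
    return parse_hhmm(handwritten_anchor) - defib_interval


# Hypothetical handwritten code sheet (whole minutes only).
handwritten = {"first monitoring": "07:20", "epinephrine": "07:24"}

# Interval from the defibrillator event record (3:12 in the earlier example).
to_first_monitoring = timedelta(minutes=3, seconds=12)

arrest = adjusted_arrest_time(handwritten["first monitoring"], to_first_monitoring)
print("Adjusted time of arrest:", arrest.strftime("%H:%M:%S"))  # 07:16:48

# Any other handwritten event can now be measured from the adjusted arrest time.
arrest_to_epinephrine = parse_hhmm(handwritten["epinephrine"]) - arrest
print("Arrest to epinephrine:", arrest_to_epinephrine)  # 0:07:12
```

Intervals derived this way are still limited by the whole-minute resolution of the handwritten entries, so they approximate response times rather than measure them exactly.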

Research prospects

The feature opens multiple avenues for future research. Acquiring data by this method should be simple for any facility using LIFEPAK 20/20e defibrillators as its standard devices. Matching the existing handwritten code records with the time intervals obtained using this surrogate time marker will show how inaccurate the commonly reported data are. This can be done with a retrospective study comparing the time intervals from the archived event records with those from the handwritten records, to provide an example of the inaccuracy of data reported in the medical literature. The more accurate picture of time intervals can provide a much-needed yardstick for future research aimed at shortening response times.

The feature can facilitate aggregation of data across multiple facilities that use the LIFEPAK 20/20e as their standard defibrillator. Also, it is possible that other defibrillator manufacturers will duplicate this feature with their devices – it should produce valid data with any defibrillator – although there may be legal and technical obstacles to adopting it.

Combining data from multiple sites might lead to an important contribution to resuscitation research: a reasonably accurate overall survival curve for in-hospital tachyarrhythmic arrests. A commonly cited but crude guideline is that survival from tachyarrhythmic arrests decreases by 10%-15% per minute as defibrillation is delayed,10 but it seems unlikely that the relationship would be linear: Experience and the literature suggest that survival drops very quickly in the first few minutes, flattening out as elapsed time after arrest increases. Aggregating the much more accurate time-interval data from multiple facilities should produce a survival curve for in-hospital tachyarrhythmic arrests that comes much closer to reality.

Conclusion

It is unknown whether this feature will be used to improve the accuracy of reported code response times. It greatly facilitates acquiring more accurate times, but the task has never been especially difficult – particularly when balanced with the importance of better time data for QI and research.8 One possible impediment may be institutional obstacles to publishing studies with accurate response times due to concerns about public relations or legal exposure: The more accurate times will almost certainly be longer than those generally reported.

As was stated almost 2 decades ago and remains true today, acquiring accurate time-interval data is “the key to future high-quality research.”2 It is also key to improving any hospital’s quality of code response. As described in this article, better time data can easily be acquired. It is time for this important problem to be recognized and remedied.

Mr. Stewart has worked as a hospital nurse in Seattle for many years, and has numerous publications to his credit related to resuscitation issues. You can contact him at [email protected].

References

1. Kaye W et al. When minutes count – the fallacy of accurate time documentation during in-hospital resuscitation. Resuscitation. 2005;65(3):285-90.

2. The American Heart Association in collaboration with the International Liaison Committee on Resuscitation. Guidelines 2000 for Cardiopulmonary Resuscitation and Emergency Cardiovascular Care, Part 4: the automated external defibrillator: key link in the chain of survival. Circulation. 2000;102(8 Suppl):I-60-76.

3. Chan PS et al. American Heart Association National Registry of Cardiopulmonary Resuscitation Investigators. Delayed time to defibrillation after in-hospital cardiac arrest. N Engl J Med. 2008 Jan 3;358(1):9-17. doi: 10.1056/NEJMoa0706467.

4. Hunt EA et al. Simulation of in-hospital pediatric medical emergencies and cardiopulmonary arrests: Highlighting the importance of the first 5 minutes. Pediatrics. 2008;121(1):e34-e43. doi: 10.1542/peds.2007-0029.

5. Reeson M et al. Defibrillator design and usability may be impeding timely defibrillation. Jt Comm J Qual Patient Saf. 2018 Sep;44(9):536-544. doi: 10.1016/j.jcjq.2018.01.005.

6. Hunt EA et al. American Heart Association’s Get With The Guidelines – Resuscitation Investigators. Association between time to defibrillation and survival in pediatric in-hospital cardiac arrest with a first documented shockable rhythm. JAMA Netw Open. 2018;1(5):e182643. doi: 10.1001/jamanetworkopen.2018.2643.

7. Cummins RO et al. Recommended guidelines for reviewing, reporting, and conducting research on in-hospital resuscitation: the in-hospital “Utstein” style. Circulation. 1997;95:2213-39.

8. Stewart JA. Determining accurate call-to-shock times is easy. Resuscitation. 2005 Oct;67(1):150-1.

9. In infrequent cases, the code cart and defibrillator may be moved to a deteriorating patient before a full arrest. Such occurrences should be analyzed separately or excluded from analysis.

10. Valenzuela TD et al. Estimating effectiveness of cardiac arrest interventions: a logistic regression survival model. Circulation. 1997;96(10):3308-13. doi: 10.1161/01.cir.96.10.3308.

Was the success of hospital medicine inevitable?

Early on, SHM defined the specialty

When I started at the Society of Hospital Medicine – known then as the National Association of Inpatient Physicians (NAIP) – in January 2000, Bill Clinton was still president. There were probably 500 hospitalists in the United States, and SHM had about 200-250 members.

It was so long ago that the iPhone hadn’t been invented, Twitter wasn’t even an idea, and Amazon was an online book store. SHM’s national offices were a cubicle at the American College of Physicians headquarters in Philadelphia, and our entire staff was me and a part-time assistant.

We have certainly come a long way in my 20 years as CEO of SHM.

When I first became involved with NAIP, it was to help the board with their strategic planning in 1998. At that time, the national thought leaders for the hospitalist movement (the term hospital medicine had not been invented yet) predicted that hospitalists would eventually do the inpatient work for about 25% of family doctors and for 15% of internists. Hospitalists were considered to be a form of “general medicine” without an office-based practice.

One of the first things we set about doing was to define the new specialty of hospital medicine before anyone else (e.g., American Medical Association, ACP, American Academy of Family Physicians, American Academy of Pediatrics, the government) defined us.

Most specialties were defined by a body organ (e.g., cardiology, renal), a population (e.g., pediatrics, geriatrics), or a disease (e.g., oncology), and there were a few other site-specific specialties (e.g., ED medicine, critical care). We felt that, to be a specialty, we needed certain key elements:

- Separate group consciousness

- Professional society

- Distinct residency and fellowship programs

- Separate CME

- Distinct educational materials (e.g., textbooks)

- Definable and distinct competencies

- Separate credentials – certification and/or hospital insurance driven

Early on, SHM defined the Core Competencies for Hospital Medicine for adults in patient care and, eventually, for pediatric patients. We rebranded our specialty as hospital medicine to be more than just inpatient physicians, and to broadly encompass the growing “big tent” of SHM that included those trained in internal medicine, family medicine, pediatrics, med-peds, as well as nurse practitioners, physician assistants, pharmacists, and others.

We were the first and only specialty society to set the standard for hospitalist compensation (how much you are paid) and productivity (what you are expected to do) with our unique State of Hospital Medicine (SOHM) Report. Other specialties left this work to the Medical Group Management Association, the AMA, or commercial companies.

Our specialty was soon being asked to do things that no other group of clinicians was ever asked to do.

Hospitalists were expected to Save Money by reducing length of stay and the use of resources on the sickest patients. Hospitalists were asked to Improve Measurable Quality at a time when most other physicians or even hospitals weren’t even being measured.

We were expected to form and Lead Teams of other clinicians when health care was still seen as a solo enterprise. Hospitalists were expected to Improve Efficiency and to create a Seamless Continuity, both during the hospital stay and in the transitions out of the hospital.

Hospitalists were asked to do things no one else wanted to do, such as taking on the uncompensated patients and extra hospital committee work and just about any new project their hospital wanted to be involved in. Along the way, we were expected to Make Other Physicians’ Lives Better by taking on their inpatients, inpatient calls, comanagement with specialists, and unloading the ED.

And both at medical schools and in the community, hospitalists became the Major Educators of medical students, residents, nurses, and other hospital staff.

At the same time, SHM was focusing on becoming a unique medical professional society.

SHM built on the energy of our young and innovative hospitalists to forge a different path. We had no reputation to protect. We were not bound like most other specialty societies to over 100 years of “the way it’s always been done.”

While other professional societies thought their role in quality improvement was to pontificate and publish clinical guidelines that often were little used, SHM embarked on an aggressive, hands-on, frontline approach by starting SHM’s Center for Quality Improvement. Over the last 15 years, the center has raised millions of dollars to deliver real change and improvement at hundreds of hospitals nationwide, many times bringing work plans and mentors to support and train local clinicians in quality improvement skills and data collection. This approach was recognized by the National Quality Forum and the Joint Commission with their prestigious John Eisenberg Award for Quality Improvement.

When we went to Washington to help shape the future of health care, we did not ask for more money for hospitalists. We did not ask for more power or to use regulations to protect our new specialty. Instead, we went with ideas of how to make acute medical care more effective and efficient. We could show the politicians and the regulators how we could reduce incidence of deep vein thrombosis and pulmonary emboli, how we could make the hospital discharge process work better, how we could help chart a smoother medication reconciliation process, and so many other ways the system could be improved.

And even the way SHM generated our new ideas differed from that of other specialties. Way back in 2000, long before Twitter and other social media made it possible to crowdsource and percolate new ideas over the Internet, SHM relied on our members’ conversations on the SHM electronic mail discussion list to see what hospitalists were worried about and what everyone was being asked to do, and SHM provided the resources and initiatives to support our nation’s hospitalists.

From these early conversations, SHM heard that hospitalists were being asked to Lead Change without much of an idea of the skills they would need. And so, the SHM leadership academies were born, which have now educated more than 2,700 hospitalist leaders.

Early on, we learned that hospitalists and even their bosses had no idea of how to start or run a successful hospital medicine group. SHM started our practice management courses and webinars and we developed the groundbreaking document, Key Characteristics of Effective Hospital Medicine Groups. In a typical SHM manner, we challenged most of our members to improve and get better rather than trying to defend the status quo. At SHM, we have constantly felt that hospital medicine was a “work in progress.” We may not be perfect today, but we will be better in 90 days and even better in a year.

I have more to say about how we got this far and even more to say about where we might go. So, stay tuned and keep contributing to the future and success of SHM and hospital medicine.

Dr. Wellikson is the CEO of SHM. He has announced his plan to retire from SHM in late 2020. This article is the first in a series celebrating Dr. Wellikson’s tenure as CEO.

Early on, SHM defined the specialty

Early on, SHM defined the specialty

When I started at the Society of Hospital Medicine – known then as the National Association of Inpatient Physicians (NAIP) – in January 2000, Bill Clinton was still president. There were probably 500 hospitalists in the United States, and SHM had about 200-250 members.

It was so long ago that the iPhone hadn’t been invented, Twitter wasn’t even an idea, and Amazon was an online book store. SHM’s national offices were a cubicle at the American College of Physicians headquarters in Philadelphia, and our entire staff was me and a part-time assistant.

We have certainly come a long way in my 20 years as CEO of SHM.

When I first became involved with NAIP, it was to help the board with their strategic planning in 1998. At that time, the national thought leaders for the hospitalist movement (the term hospital medicine had not been invented yet) predicted that hospitalists would eventually do the inpatient work for about 25% of family doctors and for 15% of internists. Hospitalists were considered to be a form of “general medicine” without an office-based practice.

One of the first things we set about doing was to define the new specialty of hospital medicine before anyone else (e.g., American Medical Association, ACP, American Academy of Family Physicians, American Academy of Pediatrics, the government) defined us.

Most specialties were defined by a body organ (e.g., cardiology, renal), a population (e.g., pediatrics, geriatrics), or a disease (e.g., oncology), and there were a few other site-specific specialties (e.g., ED medicine, critical care). We felt that, to be a specialty, we needed certain key elements:

- Separate group consciousness

- Professional society

- Distinct residency and fellowship programs

- Separate CME

- Distinct educational materials (e.g., textbooks)

- Definable and distinct competencies

- Separate credentials – certification and/or hospital insurance driven

Early on, SHM defined the Core Competencies for Hospital Medicine for adults in patient care and, eventually, for pediatric patients. We rebranded our specialty as hospital medicine to be more than just inpatient physicians, and to broadly encompass the growing “big tent” of SHM that included those trained in internal medicine, family medicine, pediatrics, med-peds, as well as nurse practitioners, physician assistants, pharmacists, and others.

We were the first and only specialty society to set the standard for hospitalist compensation (how much you are paid) and productivity (what you are expected to do) with our unique State of Hospital Medicine (SOHM) Report. Other specialties left this work to the Medical Group Management Association, the AMA, or commercial companies.

Our specialty was soon being asked to do things that no other group of clinicians was ever asked to do.

Hospitalists were expected to Save Money by reducing length of stay and the use of resources on the sickest patients. Hospitalists were asked to Improve Measurable Quality at a time when most other physicians or even hospitals weren’t even being measured.

We were expected to form and Lead Teams of other clinicians when health care was still seen as a solo enterprise. Hospitalists were expected to Improve Efficiency and to create a Seamless Continuity, both during the hospital stay and in the transitions out of the hospital.

Hospitalists were asked to do things no one else wanted to do, such as taking on the uncompensated patients and extra hospital committee work and just about any new project their hospital wanted to be involved in. Along the way, we were expected to Make Other Physicians’ Lives Better by taking on their inpatients, inpatient calls, comanagement with specialists, and unloading the ED.

And both at medical schools and in the community, hospitalists became the Major Educators of medical students, residents, nurses, and other hospital staff.

At the same time, SHM was focusing on becoming a very unique medical professional society.

SHM built on the energy of our young and innovative hospitalists to forge a different path. We had no reputation to protect. We were not bound like most other specialty societies to over 100 years of “the way it’s always been done.”

While other professional societies thought their role in quality improvement was to pontificate and publish clinical guidelines that often were little used, SHM embarked on an aggressive, hands-on, frontline approach by starting SHM’s Center for Quality Improvement. Over the last 15 years, the center has raised millions of dollars to deliver real change and improvement at hundreds of hospitals nationwide, many times bringing work plans and mentors to support and train local clinicians in quality improvement skills and data collection. This approach was recognized by the National Quality Forum and the Joint Commission with their prestigious John Eisenberg Award for Quality Improvement.

When we went to Washington to help shape the future of health care, we did not ask for more money for hospitalists. We did not ask for more power or to use regulations to protect our new specialty. Instead, we went with ideas of how to make acute medical care more effective and efficient. We could show the politicians and the regulators how we could reduce incidence of deep vein thrombosis and pulmonary emboli, how we could make the hospital discharge process work better, how we could help chart a smoother medication reconciliation process, and so many other ways the system could be improved.

And even the way SHM generated our new ideas was uniquely different than other specialties. Way back in 2000 – long before Twitter and other social media were able to crowdsource and use the Internet to percolate new ideas – SHM relied on our members’ conversations on the SHM electronic mail discussion list to see what hospitalists were worried about, and what everyone was being asked to do, and SHM provided the resources and initiatives to support our nation’s hospitalists.

From these early conversations, SHM heard that hospitalists were being asked to Lead Change without much of an idea of the skills they would need. And so, the SHM leadership academies were born, which have now educated more than 2,700 hospitalist leaders.

Early on, we learned that hospitalists and even their bosses had no idea of how to start or run a successful hospital medicine group. SHM started our practice management courses and webinars and we developed the groundbreaking document, Key Characteristics of Effective Hospital Medicine Groups. In a typical SHM manner, we challenged most of our members to improve and get better rather trying to defend the status quo. At SHM, we have constantly felt that hospital medicine was a “work in progress.” We may not be perfect today, but we will be better in 90 days and even better in a year.

I have more to say about how we got this far and even more to say about where we might go. So, stay tuned and keep contributing to the future and success of SHM and hospital medicine.

Dr. Wellikson is the CEO of SHM. He has announced his plan to retire from SHM in late 2020. This article is the first in a series celebrating Dr. Wellikson’s tenure as CEO.

When I started at the Society of Hospital Medicine – known then as the National Association of Inpatient Physicians (NAIP) – in January 2000, Bill Clinton was still president. There were probably 500 hospitalists in the United States, and SHM had about 200-250 members.

It was so long ago that the iPhone hadn’t been invented, Twitter wasn’t even an idea, and Amazon was an online book store. SHM’s national offices were a cubicle at the American College of Physicians headquarters in Philadelphia, and our entire staff was me and a part-time assistant.

We have certainly come a long way in my 20 years as CEO of SHM.

When I first became involved with NAIP, it was to help the board with their strategic planning in 1998. At that time, the national thought leaders for the hospitalist movement (the term hospital medicine had not been invented yet) predicted that hospitalists would eventually do the inpatient work for about 25% of family doctors and for 15% of internists. Hospitalists were considered to be a form of “general medicine” without an office-based practice.

One of the first things we set about doing was to define the new specialty of hospital medicine before anyone else (e.g., American Medical Association, ACP, American Academy of Family Physicians, American Academy of Pediatrics, the government) defined us.

Most specialties were defined by a body organ (e.g., cardiology, renal), a population (e.g., pediatrics, geriatrics), or a disease (e.g., oncology), and there were a few other site-specific specialties (e.g., ED medicine, critical care). We felt that, to be a specialty, we needed certain key elements:

- Separate group consciousness

- Professional society

- Distinct residency and fellowship programs

- Separate CME

- Distinct educational materials (e.g., textbooks)

- Definable and distinct competencies

- Separate credentials – certification and/or hospital insurance driven

Early on, SHM defined the Core Competencies for Hospital Medicine for adults in patient care and, eventually, for pediatric patients. We rebranded our specialty as hospital medicine to be more than just inpatient physicians, and to broadly encompass the growing “big tent” of SHM that included those trained in internal medicine, family medicine, pediatrics, med-peds, as well as nurse practitioners, physician assistants, pharmacists, and others.

We were the first and only specialty society to set the standard for hospitalist compensation (how much you are paid) and productivity (what you are expected to do) with our unique State of Hospital Medicine (SOHM) Report. Other specialties left this work to the Medical Group Management Association, the AMA, or commercial companies.

Our specialty was soon being asked to do things that no other group of clinicians was ever asked to do.

Hospitalists were expected to Save Money by reducing length of stay and the use of resources on the sickest patients. Hospitalists were asked to Improve Measurable Quality at a time when most other physicians or even hospitals weren’t even being measured.

We were expected to form and Lead Teams of other clinicians when health care was still seen as a solo enterprise. Hospitalists were expected to Improve Efficiency and to create a Seamless Continuity, both during the hospital stay and in the transitions out of the hospital.

Hospitalists were asked to do things no one else wanted to do, such as taking on the uncompensated patients and extra hospital committee work and just about any new project their hospital wanted to be involved in. Along the way, we were expected to Make Other Physicians’ Lives Better by taking on their inpatients, inpatient calls, comanagement with specialists, and unloading the ED.

And both at medical schools and in the community, hospitalists became the Major Educators of medical students, residents, nurses, and other hospital staff.

At the same time, SHM was focusing on becoming a very unique medical professional society.

SHM built on the energy of our young and innovative hospitalists to forge a different path. We had no reputation to protect. We were not bound like most other specialty societies to over 100 years of “the way it’s always been done.”

While other professional societies thought their role in quality improvement was to pontificate and publish clinical guidelines that often were little used, SHM embarked on an aggressive, hands-on, frontline approach by starting SHM’s Center for Quality Improvement. Over the last 15 years, the center has raised millions of dollars to deliver real change and improvement at hundreds of hospitals nationwide, many times bringing work plans and mentors to support and train local clinicians in quality improvement skills and data collection. This approach was recognized by the National Quality Forum and the Joint Commission with their prestigious John Eisenberg Award for Quality Improvement.

When we went to Washington to help shape the future of health care, we did not ask for more money for hospitalists. We did not ask for more power or to use regulations to protect our new specialty. Instead, we went with ideas of how to make acute medical care more effective and efficient. We could show the politicians and the regulators how we could reduce incidence of deep vein thrombosis and pulmonary emboli, how we could make the hospital discharge process work better, how we could help chart a smoother medication reconciliation process, and so many other ways the system could be improved.

And even the way SHM generated our new ideas was uniquely different than other specialties. Way back in 2000 – long before Twitter and other social media were able to crowdsource and use the Internet to percolate new ideas – SHM relied on our members’ conversations on the SHM electronic mail discussion list to see what hospitalists were worried about, and what everyone was being asked to do, and SHM provided the resources and initiatives to support our nation’s hospitalists.

From these early conversations, SHM heard that hospitalists were being asked to Lead Change without much of an idea of the skills they would need. And so, the SHM leadership academies were born, which have now educated more than 2,700 hospitalist leaders.

Early on, we learned that hospitalists and even their bosses had no idea of how to start or run a successful hospital medicine group. SHM started our practice management courses and webinars, and we developed the groundbreaking document, Key Characteristics of Effective Hospital Medicine Groups. In a typical SHM manner, we challenged most of our members to improve and get better rather than trying to defend the status quo. At SHM, we have constantly felt that hospital medicine was a “work in progress.” We may not be perfect today, but we will be better in 90 days and even better in a year.

I have more to say about how we got this far and even more to say about where we might go. So, stay tuned and keep contributing to the future and success of SHM and hospital medicine.

Dr. Wellikson is the CEO of SHM. He has announced his plan to retire from SHM in late 2020. This article is the first in a series celebrating Dr. Wellikson’s tenure as CEO.

Aspirin for primary prevention reduces risk of CV events, increases bleeding

Background: Aspirin is beneficial in secondary prevention of stroke and MI. There is no consensus on its role in primary prevention of the same.

Study design: Systematic review and meta-analysis.

Setting: PubMed and Embase search on Cochrane from the earliest publication available through Nov. 1, 2018.

Synopsis: This meta-analysis included randomized, controlled trials that compared aspirin use versus no aspirin use in more than 1,000 participants without known cardiovascular (CV) disease. The primary CV outcome was a composite of CV mortality, nonfatal MI, and nonfatal stroke. The primary bleeding outcome was major bleeding (defined by individual studies). Thirteen studies enrolling 164,225 participants and including 1,050,511 participant-years were included. Compared with no aspirin use, aspirin use showed a reduction in composite CV outcomes (hazard ratio, 0.89; 95% confidence interval, 0.84-0.95; number needed to treat, 265) and an increased risk of major bleeding (HR, 1.43; 95% CI, 1.30-1.56; number needed to harm, 210). Limitations of the study include variations in data quality, outcome definitions, and aspirin doses among trials. The study authors advocate for including the lower risk of CV events and increased risk of major bleeding as part of discussions with patients about the use of aspirin for primary prevention.
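The number needed to treat and number needed to harm quoted above can be read back as absolute risk differences, which may make the trade-off easier to discuss with patients. The following is a minimal sketch, assuming the standard relationship that NNT (or NNH) is the reciprocal of the absolute risk difference; the function name is hypothetical, and the results apply to the pooled trial follow-up, not to a single year.

```python
def implied_risk_difference(number_needed: float) -> float:
    """Absolute risk difference (as a proportion) implied by an NNT or NNH.

    Assumes the standard definition NNT = 1 / absolute risk difference.
    """
    if number_needed <= 0:
        raise ValueError("number needed must be positive")
    return 1.0 / number_needed


# Values reported in the synopsis above.
NNT_CV_EVENTS = 265       # number needed to treat, composite CV outcome
NNH_MAJOR_BLEEDING = 210  # number needed to harm, major bleeding

risk_reduction = implied_risk_difference(NNT_CV_EVENTS)
risk_increase = implied_risk_difference(NNH_MAJOR_BLEEDING)

print(f"Implied absolute reduction in CV events: {risk_reduction:.2%}")
print(f"Implied absolute increase in major bleeding: {risk_increase:.2%}")

# Expressed per 10,000 people treated over the trial follow-up.
per_10k_cv_avoided = round(10_000 * risk_reduction)
per_10k_bleeds_caused = round(10_000 * risk_increase)
print(f"Per 10,000 treated: about {per_10k_cv_avoided} CV events avoided, "
      f"about {per_10k_bleeds_caused} major bleeds caused")
```

Under these assumptions, the two absolute differences are of similar size (roughly 0.4% and 0.5%), which is why the authors frame the decision as a balance of benefit and harm.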

Bottom line: Aspirin for primary prevention lowers risk of CV events and increases risk of major bleeding. Health care providers should include this as part of informed decision-making discussions with patients about the use of aspirin for primary prevention.

Citation: Zheng S et al. Association of aspirin use for primary prevention with cardiovascular events and bleeding events: A systematic review and meta-analysis. JAMA. 2019 Jan 22;321(3):277-87.

Dr. Radhakrishnan is a hospitalist at Beth Israel Deaconess Medical Center.

Spanish risk score predicts 30-day mortality in acute HF in ED patients

Background: The MEESSI-AHF (Multiple Estimation of Risk based on the Emergency Department Spanish Score In patients with Acute Heart Failure) score is a risk-stratification tool that includes systolic blood pressure, age, NT-proBNP, potassium, cardiac troponin T, New York Heart Association class 4 disease, respiratory rate, low-output symptoms, oxygen saturation, episode associated with acute coronary syndrome, signs of left ventricular hypertrophy on EKG, creatinine, and Barthel Index Score. Prior research has shown that it accurately risk-stratified ED patients with AHF in Spain. It has not been studied in other populations.

Study design: Prospective multicenter cohort study.

Setting: Adult ED patients with acute dyspnea in four hospitals in Switzerland.

Synopsis: The study included 1,247 nonhemodialysis patients who presented to the ED with acute dyspnea, had all the variables needed to calculate the MEESSI-AHF score, and were adjudicated to have acute heart failure. The authors calculated a modified MEESSI-AHF score that excluded the Barthel Index for all patients. They found that a six-group modified MEESSI-AHF risk-stratification model could predict 30-day mortality with excellent discrimination (C-statistic, 0.80). Limitations of the study include the exclusion of all hemodynamically unstable patients and those on hemodialysis.

Bottom line: The MEESSI-AHF score effectively predicts 30-day mortality in AHF in Swiss and Spanish ED patients.

Citation: Wussler D et al. External validation of the MEESSI acute heart failure risk score: A cohort study. Ann Intern Med. 2019;170:248-56.

Dr. Radhakrishnan is a hospitalist at Beth Israel Deaconess Medical Center.

Oral antibiotics as effective as IV for stable endocarditis patients

Background: Patients with left-sided infective endocarditis often are treated with prolonged courses of intravenous (IV) antibiotics. The safety of switching from IV to oral antibiotics is unknown.

Study design: Randomized, multicenter, noninferiority study.

Setting: Cardiac centers in Denmark during July 2011–August 2017.

Synopsis: The study enrolled 400 patients with left-sided infective endocarditis and positive blood cultures from Streptococcus, Enterococcus, Staphylococcus aureus, or coagulase-negative staph (non–methicillin-resistant Staphylococcus aureus), without evidence of valvular abscess. Following at least 7 days (for those who required surgical intervention) or 10 days (for those who did not require surgical intervention) of IV antibiotics, patients with ongoing fever, leukocytosis, elevated C-reactive protein, or concurrent infections were excluded from the study. Patients were randomized to receive continued IV antibiotic treatment or switch to oral antibiotic treatment. The IV treatment group received a median of 19 additional days of therapy, compared with 17 days in the oral group. The primary composite outcome of death, unplanned cardiac surgery, embolic event, and relapse of bacteremia occurred in 12.1% of the IV therapy group and 9% of the oral therapy group (difference of 3.1 percentage points; 95% confidence interval, –3.4 to 9.6; P = .40), meeting the study’s prespecified noninferiority criteria. Poor representation of women, obese patients, and patients who use IV drugs may limit the study’s generalizability. An accompanying editorial advocated for additional research before widespread changes to current treatment recommendations are made.

Bottom line: For patients with left-sided infective endocarditis who have been stabilized on IV antibiotic treatment, transitioning to an oral antibiotic regimen may be a noninferior approach.

Citation: Iversen K et al. Partial oral versus intravenous antibiotic treatment of endocarditis. N Engl J Med. 2019 Jan 31;380(5):415-24.

Dr. Phillips is a hospitalist at Beth Israel Deaconess Medical Center and instructor in medicine at Harvard Medical School.
