
Mad Cow disease: Dealing sensibly with a new concern

After a period out of the spotlight, Mad Cow disease is again causing a stir. Following the first documented case in this country on December 23, 2003,1 the US government is instituting new preventive measures, and patients may be asking for assurances of safety (see “What to advise patients”).

Mad Cow’s connection to humans: vCJD

Mad Cow disease is the bovine form of transmissible spongiform encephalopathy (TSE), a disease that can also affect sheep, deer, goats, and humans (Table 1). The causative agent is thought to be an infective protein called a prion, a concept introduced in 1982 and recognized with a Nobel Prize in 1997.

Bovine spongiform encephalopathy (BSE) was first identified in the United Kingdom in 1986 and caused a large outbreak in cattle, which peaked in 1993. Subsequently, it was discovered that BSE could rarely spread to humans, causing a variant of Creutzfeldt-Jakob disease (vCJD) that is universally fatal. As of December 2003, 153 cases of vCJD had been reported worldwide, most in the UK. Confirmation of either the classic or variant form requires pathology examination of brain tissue collected by biopsy or, if a patient has died, at autopsy.2

TABLE 1

Transmissible spongiform encephalopathies

| Species affected | Prion disease | Transmissible to humans? |
|---|---|---|
| Mink | Transmissible mink encephalopathy | No |
| Sheep and goats | Scrapie | Historically no; questionable in newly discovered atypical cases |
| Deer and elk | Chronic wasting disease | Possible (under investigation) |
| Cattle and bison | Bovine spongiform encephalopathy | Yes (variant CJD) |
| Humans | Creutzfeldt-Jakob disease, variant CJD, Gerstmann-Sträussler-Scheinker disease, kuru, fatal familial insomnia | Through contaminated medical products, instruments, possibly blood |

Uniqueness of vCJD

In medical school, family physicians learned about classic CJD, which is endemic throughout the world and, in the US, causes an average of 1 death per million people per year. The epidemiology of vCJD differs markedly from that of classic CJD (Table 2). Because vCJD is a new disease, its incubation period is unknown, but it is likely to be years or decades. In the UK, the interval between exposure to BSE-contaminated food in 1984–86 and the onset of vCJD cases in 1994–96 is consistent with such a long incubation period.

Since 1986, BSE has been identified in 20 European countries, Japan, Israel, Canada, and now the US. The main method of its spread through herds is believed to be the former practice of feeding cattle the meat and bone meal products that, at some point, were contaminated with BSE. In 1997, the US and Canada prohibited the feeding of ruminant meat and bone meal to other ruminants. It is thought that most cases of vCJD are transmitted to people when they eat beef products containing brain or spinal cord material contaminated with BSE.

Neuropathology. Variant CJD deposits plaques, vacuoles, and prion protein in the brain. To date, all persons with vCJD have had methionine homozygosity at the polymorphic codon 129 of the prion protein gene, suggesting that persons not carrying this genotype (who make up about 60% of the population) have increased resistance to the disease. In addition, vCJD and BSE are both dose-dependent infections, so both genetics and exposure may explain why so few human cases have occurred despite the widespread outbreak of BSE in the UK.

TABLE 2

Characteristics distinguishing vCJD from CJD

| Characteristic | UK vCJD | US classic CJD |
|---|---|---|
| Median age at death (y) | 28 (range, 14–74) | 68 (range, 23–97)* |
| Median illness duration (mo) | 13–14 | 4–5 |
| Clinical presentation | Prominent psychiatric/behavioral symptoms; delayed neurologic signs | Dementia; early neurologic signs |
| Periodic sharp waves on EEG | Absent | Often present |
| “Pulvinar sign” on MRI† | Present in >75% of cases | Not reported |
| Presence of “florid plaques” on neuropathology | Present in great numbers | Rare or absent |
| Immunohistochemical analysis of brain tissue | Marked accumulation of PrPres | Variable accumulation |
| Presence of agent in lymphoid tissue | Readily detected | Not readily detected |
| Increased glycoform ratio on immunoblot analysis of PrPres | Present | Not present |
| Genotype at codon 129 of prion protein | Methionine/Methionine | Polymorphic |

*Surveillance data 1997–2001.

†High signal in the posterior thalamus.

CJD, Creutzfeldt-Jakob disease; vCJD, variant CJD; EEG, electroencephalogram; MRI, magnetic resonance imaging; PrPres, protease-resistant prion protein.

Source: Centers for Disease Control and Prevention, MMWR Morb Mortal Wkly Rep 2004; 52:1280–1285.1

Prevention measures have been updated

Before December 30, 2003, the following measures were in place to prevent BSE in this country:

- Import restrictions on bovine-derived consumer products from high-risk BSE countries (initiated in 1989).

- Prohibition of the use of ruminant-derived meat and bone meal in cattle feed (initiated in 1997).

- A surveillance system for BSE that involved annual testing of between 5000 and 20,000 cattle slaughtered for human consumption (out of about 35 million cattle slaughtered per year).

Since December 30, 2003, the US Department of Agriculture (USDA) and Food and Drug Administration (FDA) have added or proposed a number of additional provisions to prevent BSE:

- Defining high-risk materials banned for human consumption, including the entire vertebral column.

- Banning the use of advanced meat recovery systems on vertebral columns. These systems use brushes and air to blast soft tissue off bone and have resulted in contamination of up to 30% of sampled hamburger with central nervous system tissue.

- Proposing an expanded annual surveillance to include about 200,000 high-risk cattle (sick, suspect, dead) and a random sample of 20,000 normal cattle over 30 months old.

But are these measures enough?

Concerns about these new measures center on the surveillance program. First, how long will it take the USDA to expand its testing? Second, will even this expanded testing be sufficient? Some scientists and consumer advocates propose adopting the policy of the European Union, which is to test all cattle over 30 months of age, since this age group can harbor BSE without being ill.

Other congressional proposals include banning all high-risk meat products from all animal feeds and cosmetics, and creating a prion disease task force to coordinate surveillance and research for all prion diseases. Unfortunately, because we have been testing so few cattle for BSE, we don’t really know if there are more infected cattle in our food system. Interestingly, in Japan, where all cattle are tested for BSE after slaughter, 10 more infected animals were discovered, most of which lacked the characteristics that would put them at high risk.3

To date, the beef industry has supported the changes already put into effect, but not the additional ones noted above. Ironically, a number of small, upscale slaughterhouses have proposed testing all cattle they slaughter (mostly under 30 months old) so they may resume sales to Japan. The USDA has turned down their requests for the chemical reagents to run the BSE tests (the agency controls the sale of these kits), citing its concern that testing all cattle would give the impression it is necessary for the entire US herd—a proposition the USDA and many scientists believe is unnecessary. Thus, the controversy over BSE surveillance has now become an economic, political, and scientific issue.

What to advise patients

- The risk of contracting vCJD from eating contaminated beef is extremely small.4

- No case of BSE has yet been found in native-born US cattle.

- There is no association between BSE and milk or milk products.

- If traveling to countries where BSE is endemic (ie, the UK and Portugal), patients may avoid beef altogether or limit consumption to whole cuts, not ground beef or sausage.5

- Avoid bovine-derived nutritional supplements, especially those containing bovine pituitary, thyroid, adrenal, thymus, or other organ tissue.

- Avoid products containing bovine meat or bone meal, such as some types of garden fertilizers.

- Deer and elk can develop chronic wasting disease (CWD), another form of TSE. States that have recorded CWD cases include Colorado, Illinois, Wisconsin, and Wyoming. CWD is not known to cause disease in humans, but the risk to hunters and those who eat the meat is unknown. Physicians may want to advise hunters to have deer and elk taken in CWD areas tested and to have only CWD-negative animals processed for meat. Guidelines for field dressing deer and elk to prevent possible contamination of meat are available from state departments of natural resources.

Investigating suspected disease

Physicians who suspect a patient may have vCJD or CJD, or that a patient has died of such disease, should advocate for brain biopsy or autopsy. The National Prion Disease Pathology Surveillance Center at Case Western Reserve University (funded by the Centers for Disease Control and Prevention) provides diagnostic services free of charge to physicians and health departments (available at www.cjdsurveillance.com).

Federal agencies, Congress, and the public became more aware of BSE on December 23, 2003, when the US Department of Agriculture (USDA) diagnosed the disease in a dairy cow in Washington state.1 The cow, traced to a herd originating in Canada, was 6.5 years old and had been slaughtered on December 9. Whether the cow was a “downer” (nonambulatory) is still under investigation. Downer cows are automatically tested; however, it is possible this cow was tested as part of a routine surveillance system rather than because it was at high risk of disease. Regardless, the carcass was released for use as food while tissues considered more risky for BSE transmission (brain, spinal cord, and small intestine) were kept from the human food supply.

After the case was diagnosed, the USDA recalled all meat from cattle slaughtered at that plant the same day. Unfortunately, about 30,000 pounds of potentially contaminated meat was never recovered and ended up on consumers’ plates.

Corresponding author

Eric Henley, MD, MPH, Co-Editor, Practice Alert, 1601 Parkview Avenue, Rockford, IL 61107.

1. Centers for Disease Control and Prevention (CDC). Bovine spongiform encephalopathy in a dairy cow—Washington State, 2003. MMWR Morb Mortal Wkly Rep 2004;52:1280–1285. Available at: www.cdc.gov/mmwr/preview/mmwrhtml/mm5253a2.htm. Accessed on July 15, 2004.

2. CDC. BSE and CJD information and resources. Available at: www.cdc.gov/ncidod/diseases/cjd/cjd.html. Accessed on July 15, 2004.

3. Kaufman M. They’re not allowed to test for Mad Cow. Washington Post National Weekly, May 3–9, 2004;21.

4. US Food and Drug Administration, Center for Food Safety and Applied Nutrition. Commonly asked questions about BSE in products regulated by FDA’s Center for Food Safety and Applied Nutrition (CFSAN). Available at: www.cfsan.fda.gov/~comm/bsefaq.html. Accessed on July 15, 2004.

5. CDC. Bovine spongiform encephalopathy and variant Creutzfeldt-Jakob disease. Available at: www.cdc.gov/travel/diseases/madcow.htm. Accessed on July 15, 2004.


After a period out of the spotlight, Mad Cow disease is again causing a stir. Following the first documented case in this country on December 23, 2003,1 the US government is instituting new preventive measures, and patients may be asking for assurances of safe-ty (see “What to advise patients,” page 565).

Mad Cow’s connection to humans: vCJD

Mad Cow disease is the bovine form of transmissible spongiform encephalopathy (TSE), a disease that can also affect sheep, deer, goats, and humans (Table 1). The causative agent is thought to be an infective protein called a prion, discovered in 1997.

Bovine spongiform encephalopathy (BSE) was first identified in the United Kingdom in 1986 and caused a large outbreak in cattle, which peaked in 1993. Subsequently, it was discovered that BSE could rarely spread to humans, causing a variant of Creutzfeldt-Jakob disease (vCJD) that is universally fatal. As of December 2003, 153 cases of vCJD had been reported worldwide, most in the UK. Confirmation of either the classic or variant form requires pathology examination of brain tissue collected by biopsy or, if a patient has died, at autopsy.2

TABLE 1

Transmissible spongiform encephalopathies

| Species affected | Prion disease | Transmissible to humans? |

|---|---|---|

| Mink | Transmissible mink encephalopathy | No |

| Sheep and goats | Scrapie | Historically no; questionable in newly discovered atypical cases |

| Deer and elk | Chronic wasting disease | Possible (under investigation) |

| Cattle and bison | Bovine spongiform encephalopathy | Yes (variant CJD) |

| Humans | Creutzfeldt-jakob disease; variant CJD, Gerstmann-Straussler-Scheinker disease, Kuru, fatal familial insomnia | Through contaminated medical products, instruments, possibly blood |

Uniqueness of vCJD

In medical school, family physicians learned about classic CJD, which is endemic throughout the world and, in the US, causes an average of 1 death per million people per year. The epidemiology of vCJD and CJD are quite different (Table 2). Because vCJD is a new disease, its incubation period is unknown, but it is likely to be years or decades. In the UK it is thought that exposure to BSE-contaminated food from 1984–86 and the onset of vCJD cases in 1994–96 is consistent with such a long incubation period.

Since 1986, BSE has been identified in 20 European countries, Japan, Israel, Canada, and now the US. The main method of its spread through herds is believed to be the former practice of feeding cattle the meat and bone meal products that, at some point, were contaminated with BSE. In 1997, the US and Canada prohibited the feeding of ruminant meat and bone meal to other ruminants. It is thought that most cases of vCJD are transmitted to people when they eat beef products containing brain or spinal cord material contaminated with BSE.

Neuropathology. Variant CJD deposits plaques, vacuoles, and prion protein in the brain. To date, all persons with vCJD have had methio-nine homozygosity at the polymorphic codon 129 of the prion protein gene, suggesting that persons not carrying this genotype (who make 60% of the population) have increased resistance to the disease. In addition, vCJD and BSE are both dose-dependent infections, so both genetics and exposure may explain why so few human cases have occurred despite the widespread outbreak of BSE in the UK.

TABLE 2

Characteristics distinguishing vCJD from CJD

| Characteristic | UK vCJD | US classic CJD |

|---|---|---|

| Median age at death | 28 (range, 14–74) | 68 (range, 23–97)* |

| Median illness duration (mo) | 13–14 | 4–5 |

| Clinical presentation | Prominent psychiatric/behavioral symptoms; delayed neurologic signs | Dementia; early neurologic signs |

| Periodic sharp waves on EEG | Absent | Often present |

| “Pulvinar sign” on MRI† | Present in >75% of cases | Not reported |

| Presense of “florid plaques” on neuropathology | Present in great numbers | Rare or absent |

| Immunohistochemical analysis of brain tissue | Marked accumulation of PrPres | Variable accumulation |

| Presense of agent in lymphoid tissue | Readily detected | Not readily detected |

| Increased glycoform ratio on immunoblot analysis of PrPres | Present | Not present |

| Genotype at codon 129 of prion protein | Methionine/Methionine | Polymorphic |

| *Surveillance data 1997–2001. | ||

| † High signal in the posterior thalmus. | ||

| CJD; Creutzfeldt-Jakob disease; vCJD, variant CJD; EEG, electoencephalogram; MRI, magnetic resonance imaging; | ||

| PrPres, protease-resistant prion protein. | ||

| Source: Centers for Disease Control and Prevention, MMWR Morb Mortal Wkly Rep 2004; 52:1280–1285.1 | ||

Prevention measures have been updated

Before December 30, 2003, prevention measures in place to prevent BSE in this country were the following:

- Import restrictions on bovine-derived consumer products from high-risk BSE countries (initiated in 1989).

- Prohibition of the use of ruminant derived meat and bone meal in cattle feed (initiated in 1997).

- A surveillance system for BSE that involved annual testing of between 5000 and 20,000 cattle slaughtered for human consumption (out of about 35 million cattle slaughtered per year).

Since December 30, 2003, the US Department of Agriculture (USDA) and Food and Drug Administration (FDA) have added or proposed a number of additional provisions to prevent BSE:

- Defining high-risk materials banned for human consumption, including the entire verte-bral column.

- Banning the use of advanced meat recovery systems on vertebral columns. These systems use brushes and air to blast soft tissue off of bone and led to up to 30% of hamburger sampled to be contaminated with central nervous system tissue.

- Proposing an expanded annual surveillance to include about 200,000 high-risk cattle (sick, suspect, dead) and a random sample of 20,000 normal cattle over 30 months old.

But are these measures enough?

Concerns about these new measures center on the surveillance program. First, how long will it take the USDA to expand its testing? Second, will even this expanded testing be sufficient? Some scientists and consumer advocates propose adopting the policy of the European Union, which is to test all cattle over 30 months of age, since this age group can harbor BSE without being ill.

Other congressional proposals include ban-ning all high-risk meat products from all animal feeds and cosmetics, and creating a prion disease task force to coordinate surveillance and research for all prion diseases. Unfortunately, because we have been testing so few cattle for BSE, we don’t really know if there are more infected cattle in our food system. Interestingly, in Japan, where all cattle are tested for BSE after slaughter, 10 more infected animals were discovered, most of which lacked the characteristics that would put them at high risk.3

To date, the beef industry has supported the changes already put into effect, but not the additional ones noted above. Ironically, a number of small, upscale slaughterhouses have proposed testing all cattle they slaughter (mostly under 30 months old) so they may resume sales to Japan. The USDA has turned down their requests for the chemical reagents to run the BSE tests (the agency controls the sale of these kits), citing its concern that testing all cattle would give the impression it is necessary for the entire US herd—a proposition the USDA and many scientists believe is unnecessary. Thus, the controversy over BSE surveillance has now become an economic, political, and scientific issue.

What to advise patients

The risk of contracting vCJD from eating contaminated beef is extremely small.4

There has yet to be a case of BSE found in any native-born US cattle.

There is no association between BSE and milk or milk products.

If traveling to countries where BSE is endem-ic—ie, UK and Portugal—patients may avoid beef altogether or limit consumption to whole cuts, not ground beef or sausage.5

Avoid bovine-derived nutritional supplements, especially those containing bovine pitu-itary, thyroid, adrenal, thymus, or other organ tissue.

Avoid products containing bovine meat or bone meal, such as some types of garden fertilizers.

Deer and elk can develop chronic wasting disease (CWD), another form of TSE. States that have recorded CWD cases include Colorado, Illinois, Wisconsin, and Wyoming. CWD is not known to cause disease in humans, but the risk to hunters and those who eat the meat is unknown. Physicians may want to advise hunters to have deer and elk hunted in CWD areas tested and only CWD negative animals processed for meat. Guidelines for field dressing deer and elk to prevent possible contamination of meat are available at state Departments of Natural Resources.

Investigating suspected disease

Physicians who suspect a patient may have vCJD or CJD, or that a patient has died of such disease, should advocate for brain biopsy or autopsy. The National Prion Disease Pathology Surveillance Center at Case Western University (funded by the Centers for Disease Control and Prevention) provides diagnostic services free of charge to physicians and health departments (available at www.cjdsurveillance.com).

Federal agencies, Congress, and the public became more aware of BSE on December 23, 2003, when the US Department of Agriculture (USDA) diagnosed the disease in a dairy cow in Washington state.1 The cow, traced to a herd originating in Canada, was 6.5 years old and had been slaughtered on December 9. Whether the cow was a “downer” (nonambulatory) is still under investigation. Downer cows are automatically tested; however, it is possible this cow was tested as part of a routine surveillance system rather than because it was at high risk of disease. Regardless, the carcass was released for use as food while tissues considered more risky for BSE transmission (brain, spinal cord, and small intestine) were kept from the human food supply.

After the case was diagnosed, the USDA recalled all meat from cattle slaughtered at that plant the same day. Unfortunately about 30,000 pounds of potentially contaminated meat was never recovered and ended up on consumers’ plates.

Corresponding author

Eric Henley, MD, MPH, Co-Editor, Practice Alert, 1601 Parkview Avenue, Rockford, IL 61107. E-mail: [email protected].

1. Centers for Disease Control and Prevention (CDC). Bovine spongiform encephalopathy in a dairy cow—Washington State, 2003. MMWR Morb Mortal Wkly Rep 2004;52:1280-1285. Available at: www.cdc.gov/mmwr/preview/mmwrhtml/mm5253a2.htm. Accessed on July 15, 2004.

2. CDC. BSE and CJD information and resources. Available at: www.cdc.gov/ncidod/diseases/cjd/cjd.html. Accessed on July 15, 2004.

3. Kaufman M. They’re not allowed to test for Mad Cow. Washington Post National Weekly, May 3–9, 2004;21.

4. US Food and Drug Administration, Center for Food Safety and Applied Nutrition. Commonly asked questions about BSE in products regulated by FDA’s Center for Food Safety and Applied Nutrition (CFSAN). Available at: www.cfsan.fda.gov/~comm/bsefaq.html. Accessed on July 15, 2004.

5. CDC. Bovine spongiform encephalopathy and variant Creutzfeldt-Jakob disease. Available at: www.cdc.gov/travel/diseases/madcow.htm. Accessed on July 15, 2004.

What the new Medicare prescription drug bill may mean for providers and patients

In November 2003, President Bush signed the Medicare prescription-drug bill, which will usher in the largest change in the Medicare program in terms of money and number of people affected since the program’s creation in 1965. The final version of the bill was controversial, passing by a small margin in both the House and Senate.

Conservatives criticized the bill for not giving a large enough role to the private sector as an alternative to the traditional Medicare program, for spending too much money, and for risking even larger budget deficits than already predicted.

Liberals criticized it for providing an inadequate drug benefit, for allowing the prescription program to be run by private industry, and for creating an experimental private-sector program that will compete with traditional Medicare.

In the end, passage was ensured with support from the American Association of Retired Persons (AARP), drug companies, private health insurers, and national medical groups—and with the usual political maneuvering.

Public support among seniors and other groups remains unclear. For example, the American Academy of Family Physicians supported the bill, but negative reaction by members led President Michael Fleming to write a letter explaining the reasons for the decision (www.aafp.org/medicareletter.xml). In addition, Republican concerns about the overall cost of the legislation seem borne out by the administration’s recent announcement projecting costs of $530 billion over 10 years, about one third more than the price tag used to convince Congress to pass the legislation about 2 months before.

This article reviews the bill and some of its health policy implications.

Not all details clear; more than drug benefits affected

Several generalizations about Federal legislation hold true with this bill.

First, while the bill establishes the intent of Congress, a number of details will not be made clear until it is implemented by the executive branch: the administration and the responsible agencies, such as the Centers for Medicare and Medicaid Services. These implementation details matter most for the prescription drug benefit section of the bill.

Second, the bill changes or adds programs in a number of health areas besides prescription drugs (see “Supplementary changes with the Medicare prescription drug bill”). These additions partly reflect the need of proponents to satisfy diverse special interests (private insurers, hospitals and physicians, rural areas) and thereby gain their support for other parts of the bill that were more controversial, principally the drug benefit and private competition for Medicare. Thus, there is funding to increase Medicare payments to physicians and rural hospitals and to hospitals serving large numbers of low-income patients.

- Medicare payments to rural hospitals and doctors increase by $25 billion over 10 years.

- Payments to hospitals serving large numbers of low-income patients would increase.

- Hospitals can avoid some future cuts in Medicare payments by submitting quality of care data to the government.

- Doctors would receive increases of 1.5% per year in Medicare payments for 2004 and 2005 rather than the cuts currently planned.

- Medicare would cover an initial physical for new beneficiaries and screening for diabetes and cardiovascular disease.

- Support for development of health savings accounts that allow people with high-deductible health insurance to shelter income from taxes and obtain tax deductions if the money is used for health expenses.

- Home health agencies would see cuts in payments, but patient co-pays would not be required.

- Medicare Part B premiums (for physician and outpatient services) would be greater for those with incomes over $80,000.

Third, the changes also reflect genuine goals of improving health by expanding Medicare coverage of preventive services and requiring participating hospitals to submit quality-of-care data.

Prescription drug coverage under the new bill

Although many seniors have drug coverage through retirement health plans or Medigap policies purchased privately, about one quarter of beneficiaries (some 10 million people) do not have such coverage. Even those with drug coverage may have difficulty affording recommended medications since the median income for a senior is little more than $23,000. Many physicians have seen the ill effects of seniors not filling their prescriptions or skipping doses of prescribed medications.

Until the benefit takes effect. The actual prescription drug benefit will not begin until 2006. Until then, Medicare recipients will be given the option of purchasing a drug-discount card for $30 per year starting this spring. It is estimated these cards may save 10% to 15% of prescription costs. In addition, low-income seniors will receive $600 per year toward drug purchases.
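As a rough illustration of what the discount card means for an individual patient, the sketch below computes the yearly drug spending at which the $30 fee is recovered, using only the 10% to 15% savings estimate quoted above. The function and constant names are hypothetical, and actual savings will depend on the card and the drugs purchased.

```python
# Illustrative only: break-even annual drug spending for the discount card.
# The $30 fee and the 10%-15% savings range come from the text above;
# the names below are hypothetical.
CARD_FEE = 30  # dollars per year


def break_even_spending(savings_percent):
    """Annual drug spending at which card savings equal the card fee."""
    if savings_percent <= 0:
        raise ValueError("savings_percent must be positive")
    return CARD_FEE * 100 / savings_percent


for pct in (10, 15):
    print(f"At {pct}% savings, the card pays for itself at "
          f"${break_even_spending(pct):.0f} per year in drug costs")
```

Under these assumptions, a patient who spends more than about $200 to $300 per year on prescriptions would recover the fee.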

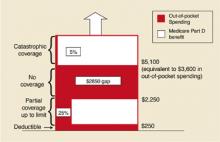

After it takes effect. The drug benefit starting in 2006 will be funded through a complex arrangement of patient and government payments (Figure).

- Premium: A premium estimated to begin at $35 per month.

- Deductible: An annual deductible starting at $250 and indexed to increase to $445 in 2013.

- Co-pay: After paying the deductible, enrollees will pay 25% of additional drug costs up to $2250, at which point a $2850 gap in coverage (the so-called “doughnut hole”) leaves the onus of payment with the patient until $5100 is reached.

- Catastrophic coverage: After $5100, patients will pay 5% of any additional annual drug costs.

In 2006, catastrophic coverage will begin after $3600 in out-of-pocket costs ($250 deductible + $500 in co-pays to $2250 + the $2850 doughnut gap), not counting the premium. Indexing provisions are projected to raise this out-of-pocket cost requirement to $6400 in 2013. These indexing features have received less attention in the media, but may become increasingly important to seniors. For lower-income individuals, as determined by specific yearly income and total assets guidelines, a small per-prescription fee will replace the premium, deductible, and doughnut hole gap payments.
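The arithmetic above can be sketched in a few lines of code. This is a minimal illustration built only from the 2006 figures quoted in this article ($250 deductible, 25% co-pay to $2250, full patient payment to $5100, 5% thereafter); the function and constant names are hypothetical, the premium is excluded, and the low-income provisions are not modeled. It is not an official benefit calculator.

```python
# Illustrative sketch of the 2006 standard drug benefit as described above.
DEDUCTIBLE = 250            # patient pays all costs up to this total
INITIAL_LIMIT = 2250        # patient pays 25% between deductible and this total
CATASTROPHIC_START = 5100   # patient pays all costs in the gap up to this total
COPAY_RATE = 0.25
CATASTROPHIC_RATE = 0.05


def patient_cost(total_drug_cost):
    """Patient's yearly out-of-pocket drug cost, not counting the premium."""
    if total_drug_cost < 0:
        raise ValueError("total_drug_cost must be non-negative")
    # Deductible: the first $250 is paid entirely by the patient.
    cost = min(total_drug_cost, DEDUCTIBLE)
    # Co-pay band: 25% of costs between $250 and $2250.
    if total_drug_cost > DEDUCTIBLE:
        cost += COPAY_RATE * (min(total_drug_cost, INITIAL_LIMIT) - DEDUCTIBLE)
    # Doughnut hole: 100% of costs between $2250 and $5100.
    if total_drug_cost > INITIAL_LIMIT:
        cost += min(total_drug_cost, CATASTROPHIC_START) - INITIAL_LIMIT
    # Catastrophic coverage: 5% of costs above $5100.
    if total_drug_cost > CATASTROPHIC_START:
        cost += CATASTROPHIC_RATE * (total_drug_cost - CATASTROPHIC_START)
    return cost


def medicare_cost(total_drug_cost):
    """Portion of the yearly drug cost paid by Medicare."""
    return total_drug_cost - patient_cost(total_drug_cost)


for total in (1000, 2250, 5100, 10000):
    print(f"Total ${total}: patient ${patient_cost(total):.2f}, "
          f"Medicare ${medicare_cost(total):.2f}")
```

For a patient with $5100 in yearly drug costs, the sketch reproduces the $3600 out-of-pocket figure given above ($250 + $500 + $2850).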

Coverage will vary. As the yearly cost of drugs changes, so will the relative contributions made by the patient and the government (Table). The new bill provides substantial benefit to those with catastrophic drug costs and to very low-income seniors. The idea of linking payments to income (for the drug benefit and the Part B premium) is a change in the Medicare program, as it has traditionally provided the same benefit to all beneficiaries, regardless of income.

Expected effects of privatization. The manner in which the drug benefit will be administered was controversial in Congress. The legislation, written primarily by Republicans, provides that beneficiaries can obtain coverage by participating with an HMO or PPO or by purchasing standalone coverage through a private prescription drug insurance program. Patient enrollment is voluntary. Managed care plans would be encouraged to participate in the prescription benefit program through eligibility for government subsidies. In turn, more beneficiaries would be encouraged to choose managed care plans, thus decreasing the number of patients covered by traditional Medicare. Furthermore, current private Medigap supplemental plans will be barred from offering drug benefits.

With the HMO/PPO and stand-alone programs, it is likely that any reduction in drug costs will result from private pharmacy benefit managers negotiating discounts from drug companies as they do now for many employer-sponsored plans. Presumably, formularies will vary from plan to plan, and it may be difficult for patients to know ahead of time whether the plan they join will cover their current medications. The legislation prohibits the government from using its vast purchasing power to negotiate substantial discounts from drug companies as it does now for the Medicaid program. There are provisions to increase availability of generic drugs, but importation of drugs from Canada is prohibited unless FDA approval is given (so far, the FDA has opposed this). Many Democrats who opposed the bill argued that it allowed too large a role for the private sector and constrained the ability of the government to control drug costs.

A final controversial measure in the bill provided for conducting an experiment in 6 cities beginning in 2010, in which at least 1 private insurance plan would be funded to compete directly with the traditional Medicare program. Many Republicans believe this type of competition is necessary to decrease the rate of cost increases in the Medicare program, while many Democrats believe the private market is a big reason for increasing problems with the quality and cost of the entire health care system.

FIGURE

Out-of-pocket spending under new legislation

Out-of-pocket drug spending in 2006 for Medicare beneficiaries under new Medicare legislation. Note: Benefit levels are indexed to growth in per capita expenditures for covered Part D drugs. As a result, the Part D deductible is projected to increase from $250 in 2006 to $445 in 2013; the catastrophic threshold is projected to increase from $5100 in 2006 to $9066 in 2013. From the Kaiser Family Foundation website (www.kff.org/medicare/medicarebenefitataglance.ctm).

Looming questions

The new Medicare legislation is vast in scope, cost, and controversy. In the coming months, a number of organizations—AARP, the Department of Health and Human Services, and various foundations—will attempt to explain its provisions to the public, most likely in different ways.

TABLE

Deciphering the 2006 drug benefit

The chart above shows what portion of yearly drug costs would be paid by the Medicare recipient and what portion would be paid by Medicare beginning in 2006. It does not include the $420 yearly premium.

Family physicians may be asked by patients to explain provisions of the program and to offer advice in making decisions about their participation.

In addition, preoccupation with explaining and implementing the Medicare bill may keep Congress and the President from addressing other pressing health issues such as the growing number of uninsured.

Corresponding author

Eric Henley, MD, MPH, Co-Editor, Practice Alert, 1601 Parkview Avenue, Rockford, IL 61107. E-mail: [email protected].

1. Pear R. Bush’s aides put higher price tag on Medicare law. New York Times, January 30, 2004.

2. Altman D. The new Medicare prescription-drug legislation. N Engl J Med 2004;350:9-10.

3. American Academy of Family Physicians. Medicare Prescription Drug, Improvement and Modernization Act. Available at: www.aafp.org/x25558.xml. Accessed on April 2, 2004.

4. National Association of Chain Drug Stores. Medicare Prescription Drug Benefit and Discount Card Program Q & A. Available at: www.nacds.org/user-assets/PDF_files/MedicareRx_Q&A.pdf. Accessed on April 2, 2004.

5. New Medicare law/key provisions. Christian Science Monitor, December 4, 2003.

In November 2003, President Bush signed the Medicare prescription-drug bill, which will usher in the largest change in the Medicare program in terms of money and number of people affected since the program’s creation in 1965. The final version of the bill was controversial, passing by a small margin in both the House and Senate.

Conservatives criticized the bill for not giving a large enough role to the private sector as an alternative to the traditional Medicare program, for spending too much money, and for risking even larger budget deficits than already predicted.

Liberals criticized it for providing an inadequate drug benefit, for allowing the prescription program to be run by private industry, and for creating an experimental private-sector program that will compete with traditional Medicare.

In the end, passage was ensured with support from the American Association of Retired Persons (AARP), drug companies, private health insurers, and national medical groups—and with the usual political maneuvering.

Public support among seniors and other groups remains unclear. For example, the American Academy of Family Physicians supported the bill, but negative reaction by members led President Michael Fleming to write a letter explaining the reasons for the decision (www.aafp.org/medicareletter.xml). In addition, Republican concerns about the overall cost of the legislation seem borne out by the administration’s recent announcement projecting costs of $530 billion over 10 years, about one third more than the price tag used to convince Congress to pass the legislation about 2 months before.

This article reviews the bill and some of its health policy implications.

Not all details clear; more than drug benefits affected

Several generalizations about Federal legislation hold true with this bill.

First, while the bill establishes the intent of Congress, a number of details will not be made clear until it is implemented by the executive branch—the administration and the responsible cabinet departments such as the Center for Medicare and Medicaid Services. The importance of these implementation details is most relevant to the prescription drug benefit section of the bill.

Second, the bill changes or adds programs in a number of health areas besides prescription drugs (see Supplementary changes with the Medicare prescription drug bill). These additions partly reflected the need of proponents to satisfy diverse special interests (private insurers, hospitals and physicians, rural areas) and thereby gain their support for other parts of the bill that were more controversial, principally the drug benefit and private competition for Medicare. Thus, there is funding to increase Medicare payments to physicians and rural hospitals and to hospitals serving large numbers of low-income patients.

- Medicare payments to rural hospitals and doctors increase by $25 billion over 10 years.

- Payments to hospitals serving large numbers of low-income patients would increase.

- Hospitals can avoid some future cuts in Medicare payments by submitting quality of care data to the government.

- Doctors would receive increases of 1.5% per year in Medicare payments for 2004 and 2005 rather than the cuts currently planned.

- Medicare would cover an initial physical for new beneficiaries and screening for diabetes and cardiovascular disease.

- Support for development of health savings accounts that allow people with high-deductible health insurance to shelter income from taxes and obtain tax deductions if the money is used for health expenses.

- Home health agencies would see cuts in payments, but patient co-pays would not be required.

- Medicare Part B premiums (for physician and outpatient services) would be greater for those with incomes over $80,000.

Third, the changes also reflect genuine goals of improving health by expanding Medicare coverage of preventive services and requiring participating hospitals to submit quality-of-care data.

Prescription drug coverage under the new bill

Although many seniors have drug coverage through retirement health plans or Medigap policies purchased privately, about one quarter of beneficiaries (some 10 million people) do not have such coverage. Even those with drug coverage may have difficulty affording recommended medications since the median income for a senior is little more than $23,000. Many physicians have seen the ill effects of seniors not filling their prescriptions or skipping doses of prescribed medications.

Until the benefit takes effect. The actual prescription drug benefit will not begin until 2006. Until then, Medicare recipients will be given the option of purchasing a drug-discount card for $30 per year starting this spring. It is estimated these cards may save 10% to 15% of prescription costs. In addition, low-income seniors will receive $600 per year toward drug purchases.

After it takes effect. The drug benefit starting in 2006 will be funded through a complex arrangement of patient and government payments (Figure).

- Premium: A premium estimated to begin at $35 per month.

- Deductible: An annual deductible starting at $250 and indexed to increase to $445 in 2013.

- Co-pay: After paying the deductible, enrollees will pay 25% of additional drug costs up to $2250, at which point a $2850 gap in cover-age—the so-called “doughut hole”—leaves the onus of payment with the patient until $5100 is reached.

- Catastrophic coverage: After $5100, patients will pay 5% of any additional annual drug costs.

In 2006, catastrophic coverage will begin after $3600 in out-of-pocket costs ($250 deductible + $500 in co-pays to $2250 + the $2850 doughnut gap), not counting the premium. Indexing provisions are projected to raise this out-of-pocket cost requirement to $6400 in 2013. These indexing features have received less attention in the media, but may become increasingly important to seniors. For lower-income individuals, as determined by specific yearly income and total assets guidelines, a small per-prescription fee will replace the premium, deductible, and doughnut hole gap payments.

Coverage will vary. As the yearly cost of drugs changes, so will the relative contributions made by the patient and the government (Table). The new bill provides substantial benefit to those with catastrophic drug costs and to very low-income seniors. The idea of linking payments to income (for the drug benefit and the Part B premium) is a change in the Medicare program, as it has traditionally provided the same benefit to all beneficiaries, regardless of income.

Expected effects of privatization. The manner in which the drug benefit will be administered was controversial in Congress. The legislation, written primarily by Republicans, provides that beneficiaries can obtain coverage by participating with an HMO or PPO or by purchasing standalone coverage through a private prescription drug insurance program. Patient enrollment is voluntary. Managed care plans would be encouraged to participate in the prescription benefit program through eligibility for government subsidies. In turn, more beneficiaries would be encouraged to choose managed care plans, thus decreasing the number of patients covered by traditional Medicare. Furthermore, current private Medigap supplemental plans will be barred from offering drug benefits.

With the HMO/PPO and stand-alone programs, it is likely that any reduction in drug costs will result from private pharmacy benefit managers negotiating discounts from drug companies as they do now for many employer-sponsored plans. Presumably, formularies will vary from plan to plan, and it may be difficult for patients to know ahead of time whether the plan they join will cover their current medications. The legislation prohibits the government from using its vast purchasing power to negotiate substantial discounts from drug companies as it does now for the Medicaid program. There are provisions to increase availability of generic drugs, but importation of drugs from Canada is prohibited unless FDA approval is given (so far, the FDA has opposed this). Many Democrats who opposed the bill argued that it allowed too large a role for the private sector and constrained the ability of the government to control drug costs.

A final controversial measure in the bill provided for conducting an experiment in 6 cities beginning in 2010, in which at least 1 private insurance plan would be funded to compete directly with the traditional Medicare program. Many Republicans believe this type of competition is necessary to decrease the rate of cost increases in the Medicare program, while many Democrats believe the private market is a big reason for increasing problems with the quality and cost of the entire health care system.

FIGURE

Out-of-pocket spending under new legislation

Out-of-pocket drug spending in 2006 for Medicare beneficiaries under new Medicare legislation. Note: Benefit levels are indexed to growth in per capita expenditures for covered Part D drugs. As a result, the Part D deductible in projected to increase from $250 in 2006 to $445 in 2013; the catastrophic threshold is projected to increase from $5100 in 2006 to $9066 in 2013. From the Kaiser Family Foundation website(www.kff.org/medicare/medicarebenefitataglance.ctm).

Looming questions

The new Medicare legislation is vast in scope, cost, and controversy. In the coming months, a number of organizations—AARP, the Department of Health and Human Services, and various foundations—will attempt to explain its provisions to the public, most likely in different ways.

TABLE

Deciphering the 2006 drug benefit

The chart above shows what portion of yearly drug costs would be paid by the Medicare recipient and what portion would be paid by Medicare beginning in 2006. It does not include the $420 yearly premium.Family physicians may be asked by patients to explain provisions of the program and to offer advice in making decisions about their participation.

In addition, preoccupation with explaining and implementing the Medicare bill may keep Congress and the President from addressing other pressing health issues such as the growing number of uninsured.

Corresponding author

Eric Henley, MD, MPH, Co-Editor, Practice Alert, 1601 Parkview Avenue, Rockford, IL 61107. E-mail: [email protected].

In November 2003, President Bush signed the Medicare prescription-drug bill, which will usher in the largest change in the Medicare program in terms of money and number of people affected since the program’s creation in 1965. The final version of the bill was controversial, passing by a small margin in both the House and Senate.

Conservatives criticized the bill for not giving a large enough role to the private sector as an alternative to the traditional Medicare program, for spending too much money, and for risking even larger budget deficits than already predicted.

Liberals criticized it for providing an inadequate drug benefit, for allowing the prescription program to be run by private industry, and for creating an experimental private-sector program that will compete with traditional Medicare.

In the end, passage was ensured with support from the American Association of Retired Persons (AARP), drug companies, private health insurers, and national medical groups—and with the usual political maneuvering.

Public support among seniors and other groups remains unclear. For example, the American Academy of Family Physicians supported the bill, but negative reaction by members led President Michael Fleming to write a letter explaining the reasons for the decision (www.aafp.org/medicareletter.xml). In addition, Republican concerns about the overall cost of the legislation seem borne out by the administration’s recent announcement projecting costs of $530 billion over 10 years, about one third more than the price tag used to convince Congress to pass the legislation about 2 months before.

This article reviews the bill and some of its health policy implications.

Not all details clear; more than drug benefits affected

Several generalizations about Federal legislation hold true with this bill.

First, while the bill establishes the intent of Congress, a number of details will not be made clear until it is implemented by the executive branch—the administration and the responsible cabinet departments such as the Center for Medicare and Medicaid Services. The importance of these implementation details is most relevant to the prescription drug benefit section of the bill.

Second, the bill changes or adds programs in a number of health areas besides prescription drugs (see Supplementary changes with the Medicare prescription drug bill). These additions partly reflected the need of proponents to satisfy diverse special interests (private insurers, hospitals and physicians, rural areas) and thereby gain their support for other parts of the bill that were more controversial, principally the drug benefit and private competition for Medicare. Thus, there is funding to increase Medicare payments to physicians and rural hospitals and to hospitals serving large numbers of low-income patients.

- Medicare payments to rural hospitals and doctors increase by $25 billion over 10 years.

- Payments to hospitals serving large numbers of low-income patients would increase.

- Hospitals can avoid some future cuts in Medicare payments by submitting quality of care data to the government.

- Doctors would receive increases of 1.5% per year in Medicare payments for 2004 and 2005 rather than the cuts currently planned.

- Medicare would cover an initial physical for new beneficiaries and screening for diabetes and cardiovascular disease.

- Support for development of health savings accounts that allow people with high-deductible health insurance to shelter income from taxes and obtain tax deductions if the money is used for health expenses.

- Home health agencies would see cuts in payments, but patient co-pays would not be required.

- Medicare Part B premiums (for physician and outpatient services) would be greater for those with incomes over $80,000.

Third, the changes also reflect genuine goals of improving health by expanding Medicare coverage of preventive services and requiring participating hospitals to submit quality-of-care data.

Prescription drug coverage under the new bill

Although many seniors have drug coverage through retirement health plans or Medigap policies purchased privately, about one quarter of beneficiaries (some 10 million people) do not have such coverage. Even those with drug coverage may have difficulty affording recommended medications since the median income for a senior is little more than $23,000. Many physicians have seen the ill effects of seniors not filling their prescriptions or skipping doses of prescribed medications.

Until the benefit takes effect. The actual prescription drug benefit will not begin until 2006. Until then, Medicare recipients will be given the option of purchasing a drug-discount card for $30 per year starting this spring. It is estimated these cards may save 10% to 15% of prescription costs. In addition, low-income seniors will receive $600 per year toward drug purchases.

After it takes effect. The drug benefit starting in 2006 will be funded through a complex arrangement of patient and government payments (Figure).

- Premium: A premium estimated to begin at $35 per month.

- Deductible: An annual deductible starting at $250 and indexed to increase to $445 in 2013.

- Co-pay: After paying the deductible, enrollees will pay 25% of additional drug costs up to $2250, at which point a $2850 gap in cover-age—the so-called “doughut hole”—leaves the onus of payment with the patient until $5100 is reached.

- Catastrophic coverage: After $5100, patients will pay 5% of any additional annual drug costs.

In 2006, catastrophic coverage will begin after $3600 in out-of-pocket costs ($250 deductible + $500 in co-pays to $2250 + the $2850 doughnut gap), not counting the premium. Indexing provisions are projected to raise this out-of-pocket cost requirement to $6400 in 2013. These indexing features have received less attention in the media, but may become increasingly important to seniors. For lower-income individuals, as determined by specific yearly income and total assets guidelines, a small per-prescription fee will replace the premium, deductible, and doughnut hole gap payments.

Coverage will vary. As the yearly cost of drugs changes, so will the relative contributions made by the patient and the government (Table). The new bill provides substantial benefit to those with catastrophic drug costs and to very low-income seniors. The idea of linking payments to income (for the drug benefit and the Part B premium) is a change in the Medicare program, as it has traditionally provided the same benefit to all beneficiaries, regardless of income.

Expected effects of privatization. The manner in which the drug benefit will be administered was controversial in Congress. The legislation, written primarily by Republicans, provides that beneficiaries can obtain coverage by participating with an HMO or PPO or by purchasing standalone coverage through a private prescription drug insurance program. Patient enrollment is voluntary. Managed care plans would be encouraged to participate in the prescription benefit program through eligibility for government subsidies. In turn, more beneficiaries would be encouraged to choose managed care plans, thus decreasing the number of patients covered by traditional Medicare. Furthermore, current private Medigap supplemental plans will be barred from offering drug benefits.

With the HMO/PPO and stand-alone programs, it is likely that any reduction in drug costs will result from private pharmacy benefit managers negotiating discounts from drug companies as they do now for many employer-sponsored plans. Presumably, formularies will vary from plan to plan, and it may be difficult for patients to know ahead of time whether the plan they join will cover their current medications. The legislation prohibits the government from using its vast purchasing power to negotiate substantial discounts from drug companies as it does now for the Medicaid program. There are provisions to increase availability of generic drugs, but importation of drugs from Canada is prohibited unless FDA approval is given (so far, the FDA has opposed this). Many Democrats who opposed the bill argued that it allowed too large a role for the private sector and constrained the ability of the government to control drug costs.

A final controversial measure in the bill provided for conducting an experiment in 6 cities beginning in 2010, in which at least 1 private insurance plan would be funded to compete directly with the traditional Medicare program. Many Republicans believe this type of competition is necessary to decrease the rate of cost increases in the Medicare program, while many Democrats believe the private market is a big reason for increasing problems with the quality and cost of the entire health care system.

FIGURE

Out-of-pocket spending under new legislation

Out-of-pocket drug spending in 2006 for Medicare beneficiaries under new Medicare legislation. Note: Benefit levels are indexed to growth in per capita expenditures for covered Part D drugs. As a result, the Part D deductible is projected to increase from $250 in 2006 to $445 in 2013; the catastrophic threshold is projected to increase from $5100 in 2006 to $9066 in 2013. From the Kaiser Family Foundation website (www.kff.org/medicare/medicarebenefitataglance.ctm).
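As a rough illustration of the arithmetic behind the figure, the sketch below estimates a beneficiary's 2006 out-of-pocket drug spending for a given level of total yearly drug costs. The $250 deductible and $5100 catastrophic threshold come from the figure note. The 25% coinsurance up to a $2250 initial coverage limit, the full-cost coverage gap, and the 5% coinsurance above the threshold are assumptions based on the standard benefit as widely reported, and the function name is hypothetical. The yearly premium is not included.

```python
# Illustrative sketch only: the coinsurance rates and the $2250 limit are
# assumptions (standard benefit as widely reported), not taken from the figure.
DEDUCTIBLE = 250.0               # from the figure note
INITIAL_COVERAGE_LIMIT = 2250.0  # assumption
CATASTROPHIC_THRESHOLD = 5100.0  # from the figure note (total drug costs)
COINSURANCE_INITIAL = 0.25       # assumption: beneficiary share below the limit
COINSURANCE_CATASTROPHIC = 0.05  # assumption: beneficiary share above the threshold


def out_of_pocket_2006(total_drug_cost: float) -> float:
    """Estimate yearly out-of-pocket drug spending, excluding the premium."""
    if total_drug_cost < 0:
        raise ValueError("total_drug_cost must be non-negative")

    # Beneficiary pays everything up to the deductible.
    spending = min(total_drug_cost, DEDUCTIBLE)

    # Beneficiary pays 25% between the deductible and the initial limit.
    if total_drug_cost > DEDUCTIBLE:
        covered = min(total_drug_cost, INITIAL_COVERAGE_LIMIT) - DEDUCTIBLE
        spending += COINSURANCE_INITIAL * covered

    # Coverage gap: beneficiary pays 100% up to the catastrophic threshold.
    if total_drug_cost > INITIAL_COVERAGE_LIMIT:
        spending += min(total_drug_cost, CATASTROPHIC_THRESHOLD) - INITIAL_COVERAGE_LIMIT

    # Catastrophic coverage: beneficiary pays 5% of costs above the threshold.
    if total_drug_cost > CATASTROPHIC_THRESHOLD:
        spending += COINSURANCE_CATASTROPHIC * (total_drug_cost - CATASTROPHIC_THRESHOLD)

    return spending


if __name__ == "__main__":
    for cost in (250, 1000, 2250, 5100, 10000):
        print(f"total ${cost}: out of pocket ${out_of_pocket_2006(cost):.2f}")
```

Under these assumptions, a patient with $1000 in yearly drug costs would pay $437.50, and a patient reaching the $5100 threshold would have paid $3600, before the premium is counted.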

Looming questions

The new Medicare legislation is vast in scope, cost, and controversy. In the coming months, a number of organizations—AARP, the Department of Health and Human Services, and various foundations—will attempt to explain its provisions to the public, most likely in different ways.

TABLE

Deciphering the 2006 drug benefit

The chart above shows what portion of yearly drug costs would be paid by the Medicare recipient and what portion would be paid by Medicare beginning in 2006. It does not include the $420 yearly premium.

Family physicians may be asked by patients to explain provisions of the program and to offer advice in making decisions about their participation.

In addition, preoccupation with explaining and implementing the Medicare bill may keep Congress and the President from addressing other pressing health issues such as the growing number of uninsured.

Corresponding author

Eric Henley, MD, MPH, Co-Editor, Practice Alert, 1601 Parkview Avenue, Rockford, IL 61107. E-mail: [email protected].

1. Pear R. Bush’s aides put higher price tag on Medicare law. New York Times, January 30, 2004.

2. Altman D. The new Medicare prescription-drug legislation. N Engl J Med 2004;350:9-10.

3. American Academy of Family Physicians. Medicare Prescription Drug, Improvement and Modernization Act. Available at: www.aafp.org/x25558.xml. Accessed on April 2, 2004.

4. National Association of Chain Drug Stores. Medicare Prescription Drug Benefit and Discount Card Program Q & A. Available at: www.nacds.org/user-assets/PDF_files/MedicareRx_Q&A.pdf. Accessed on April 2, 2004.

5. New Medicare law/key provisions. Christian Science Monitor, December 4, 2003.

10 steps for avoiding health disparities in your practice

We hope the answer to the question above is no. However, the evidence regarding differences in the care of patients based on race, ethnicity, gender, and socioeconomic status suggests that if this patient is a woman or African American or from a lower socioeconomic class, resultant morbidity or mortality will be higher.

Differences are seen in the provision of cardiovascular care, cancer diagnosis and treatment, and HIV care. African Americans, Latino Americans, Asian Americans, and Native Americans have higher morbidity and mortality than Caucasian Americans for multiple problems including cancer, chemical dependency, diabetes, heart disease, infant mortality, and unintentional and intentional injuries.1

This article explores possible explanations for health care disparities and offers 10 practical strategies for tackling this challenging issue.

Examples of health disparities

The United States has dramatically improved the health status of its citizens—increasing longevity, reducing infant mortality and teenage pregnancies, and increasing the number of children being immunized. Despite these improvements, though, there remain persistent and disproportionate burdens of disease and illness borne by subgroups of the population (Table 1).2,3

The Institute of Medicine in its recent report, “Unequal Treatment,” approaches the issue from another perspective: they define these disparities as “racial or ethnic differences in the quality of healthcare that are not due to access-related factors or clinical needs, preferences and appropriateness of intervention.”4

TABLE 1

Examples of health disparities that could be changed

| Type of disparity | Example | Finding |
|---|---|---|
| Mortality | Infant mortality | Infant mortality is higher for infants of African American, Native Hawaiian, and Native American mothers (13.8, 10.0, and 9.3 deaths per 1000 live births, respectively) than for infants of other race groups. Infant mortality decreases as the mother’s level of education increases. |
| Morbidity | Cancer (males) | The incidence of cancer among black males exceeds that of white males for prostate cancer (60%), lung and bronchial cancer (58%), and colon and rectum cancers (14%). |
| Health behaviors | Cigarette smoking | Smoking among persons aged 25 years and over ranges from 11% among college graduates to 32% for those without a high school diploma; 19% of adolescents in the most rural counties smoke compared to 11% in central counties. |
| Preventive health care | Mammography | Poor women are 27% less likely to have had a recent mammogram than are women with family incomes above the poverty level. |
| Access to care | Health insurance coverage | 13% of children aged <18 years have no health insurance coverage; 28% of children with family incomes of 1 to 1.5 times the poverty level are without coverage, compared with 5% of those with family incomes at least twice the poverty level. |

Source: Adapted from Health, United States, 2001. Hyattsville, Md: National Center for Health Statistics; 2001.

Correcting health disparity begins with understanding its causes

A number of factors account for disparities in health and health care.

Population-influenced factors

Leading candidates are some population groups’ lower socioeconomic status (eg, income, occupation, education) and increased exposure to unhealthy environments. Individuals may also exhibit preferences for or against treatment (when appropriate treatment recommendations are offered) that mirror group preferences.

For example, African American patients’ distrust of the healthcare system may be based in part on their experience of discrimination as research subjects in the Tuskegee syphilis study and Los Angeles measles immunization study. Research has shown that while these issues are relevant, they do not fully account for observed disparities.

System factors

Problems with access to care are common: inadequate insurance, transportation difficulties, geographic barriers to needed services (rural/urban), and language barriers. Again, research has shown that access to care matters, but not necessarily more than other factors.

Individual factors

At the individual level, a clinical encounter may be adversely affected by physician-patient racial/ethnic discordance, patient health literacy, and physician cultural competence. A high prevalence of risky behaviors such as smoking also contributes.

Finally, provider-specific issues may be operative: bias (prejudice) against certain groups of patients, clinical uncertainty when dealing with patients, and stereotypes held by providers about the behavior or health of different groups of patients according to race, ethnicity, or culture.

Addressing disparities in practice

Clearly, improving socioeconomic status and access to care for all people are among the most important ways to eliminate health disparities. Physicians can influence these areas through individual participation in political activities, in nonprofit organizations, and in their professional organizations.

Steps can also be taken in your own practice (Table 2).

TABLE 2

Ten practical measures for avoiding health disparity in your practice

| Use evidence-based clinical guidelines as much as possible. |

| Consider the health literacy level of your patients when planning care and treatment, when explaining medical recommendations, and when handing out written material. |

| Ensure that front desk staff are sensitive to patient backgrounds and cultures. |

| Provide culturally sensitive patient education materials (eg, brochures in Spanish). |

| Keep a “black book” with the names and numbers of community health resources. |

| Volunteer with a nonprofit community-based agency in your area. |

| Ask your local health department or managed care plans if they have a community health improvement plan. Get involved in creating or implementing the plan. |

| Create a special program for one or more of the populations you care for (eg, a school-based program to help reduce teenage pregnancy). |

| Develop a plan for translation services. |

| Browse through the Institute of Medicine report, “Unequal Treatment” (available at www.iom.edu/report.asp?id=4475). |

Use evidence-based guidelines

To minimize the effect of possible bias and stereotyping in caring for patients of different races, ethnicities, and cultures, an important foundation is to standardize care for all patients by using evidence-based practice guidelines when appropriate. Clinical guidelines such as those published by the US Preventive Services Task Force and those available on the Internet through the National Guideline Clearinghouse (www.ngc.gov) provide well-researched and substantiated recommendations.

Using guidelines is consistent with national recommendations to incorporate more evidence-based practices in clinical care.

Make your office patient-friendly

Create an office environment that is sensitive to the needs of all patients. Addressing language issues, having front desk staff who are sensitive and unbiased, and providing culturally relevant patient education material (eg, posters, magazines) are important components of a supportive office environment.1

Advocate patient education

Strategies to improve patient health literacy and physician cultural competence may be of benefit. The literacy issue can be helped considerably by enabling patients to increase their understanding of health terminology, and there are national efforts to address patient health literacy. Physicians can also help by explaining options and care plans simply, carefully, and without medical jargon. The American Medical Association has a national campaign in support of health literacy (www.ama-assn.org/ama/pub/category/8115.html).

Increase cross-cultural communication skills

The Institute of Medicine and academicians have increasingly recommended training healthcare professionals to be more culturally competent. Experts have agreed that the “essence of cultural competence is not the mastery of ‘facts’ about different ethnic groups, but rather a patient-centered approach that incorporates fundamental skills and attitudes that may be applicable across ethnic boundaries.”6

A recent national survey supported this idea by showing that racial differences in patient satisfaction disappeared after adjustment for the quality of physician behaviors (eg, showing respect for patients, spending adequate time with patients). The fact that these positive physician behaviors were reported more frequently by white than non-white patients points to the need for continued effort at improving physicians’ interpersonal skills.

Eliminating health disparities is one of the top 2 goals of Healthy People 2010, the document that guides the nation’s health promotion and disease prevention agenda. Healthy People 2010 (www.health.gov/healthypeople) is a compilation of important prevention objectives for the Nation identified by the US Public Health Service that helps to focus health care system and community efforts. The vision for Healthy People 2010 is “Healthy People in Healthy Communities,” a theme emphasizing that the health of the individual is closely linked with the health of the community.

The Leading Health Indicators are a subset of the Healthy People 2010 objectives and were chosen for emphasis because they account for more than 50% of the leading preventable causes of morbidity and premature mortality in the US.5 Data on these 10 objectives also point to disparities in health status and health outcomes among population groups in the US. Most states and many local communities have used the Healthy People 2010/Leading Health Indicators to develop and implement state and local “Healthy People” plans.

Physicians have an important role in efforts to meet these goals because many of them can be met only by using multicomponent intervention strategies that include actions at the clinic, health care system, and community levels.

Corresponding author

Eric Henley, MD, MPH, Co-Editor, Practice Alert, 1601 Parkview Avenue, Rockford, IL 61107. E-mail: [email protected].

1. Tucker C, Herman K, Pedersen T, Higley B, Montrichard M, Ivery P. Cultural sensitivity in physician-patient relationships: perspectives of an ethnically diverse sample of low-income primary care patients. Med Care 2003;41:859-870.

2. Fiscella K, Franks P, Gold MR, Clancy CM. Inequality in quality: addressing socioeconomic, racial and ethnic disparities in health care. JAMA 2000;283:2579-2584.

3. Navarro V. Race or class versus race and class: mortality differentials in the United States. Lancet 1990;336:1238-1240.