
News and Views that Matter to Physicians

ASCO: Patients with advanced cancer should receive palliative care within 8 weeks of diagnosis

Patients with advanced cancer should receive dedicated palliative care services early in the disease course, concurrently with active treatment, according to the American Society of Clinical Oncology’s new guidelines on the integration of palliative care into standard oncology care.

Ideally, patients should be referred to interdisciplinary palliative care teams within 8 weeks of cancer diagnosis, and palliative care should be available in both inpatient and outpatient settings, ASCO recommended.

The guidelines, which updated and expanded the 2012 ASCO provisional clinical opinion, were developed by a multidisciplinary expert panel that systematically reviewed phase III randomized controlled trials, secondary analyses of those trials, and meta-analyses that were published between March 2010 and January 2016.

According to the panel, essential components of palliative care include:

• Rapport and relationship building with patient and family caregivers.

• Symptom, distress, and functional status management.

• Exploration of understanding and education about illness and prognosis.

• Clarification of treatment goals.

• Assessment and support of coping needs.

• Assistance with medical decision making.

• Provision of referrals to other care providers as indicated.

The panel argues that palliative care not only improves care for patients and families but also likely reduces the total cost of care, often substantially. However, “race, poverty and low socioeconomic and/or immigration status are determinants of barriers to palliative care,” wrote the expert panel, which was cochaired by Betty Ferrell, PhD, of the City of Hope Medical Center, Duarte, Calif., and Thomas Smith, MD, of the Sidney Kimmel Comprehensive Cancer Center in Baltimore.



On Twitter @jessnicolecraig

FROM THE JOURNAL OF CLINICAL ONCOLOGY

Results puzzling for embolic protection during TAVR

The largest randomized clinical trial to assess the safety and efficacy of cerebral embolic protection systems during transcatheter aortic valve replacement yielded puzzling and somewhat contradictory results, according to a report presented at the Transcatheter Cardiovascular Therapeutics annual meeting and published simultaneously in the Journal of the American College of Cardiology.

Virtually every device in this industry-sponsored study involving 363 elderly patients (mean age, 83.4 years) with severe aortic stenosis trapped particulate debris as intended, the mean volume of new lesions in the protected areas of the brain was reduced by 42%, and the number and volume of new lesions correlated with neurocognitive outcomes at 30 days.

However, neither the reduction in lesion volume nor the improvement in neurocognitive function reached statistical significance.

In addition, “the sample size was clearly too low to assess clinical outcomes, and in retrospect, was also too low to evaluate follow-up MRI findings or neurocognitive outcomes.” Nevertheless, the trial “provides reassuring evidence of device safety,” said Samir R. Kapadia, MD, of the Cleveland Clinic (J Am Coll Cardiol. 2016 Nov 1. doi: 10.1016/j.jacc.2016.10.023).

In this prospective study, the investigators assessed patients at 17 medical centers in the United States and 2 in Germany. In addition to being elderly, the study patients were at high risk because of frequent comorbidities, including atrial fibrillation (31.7%) and prior stroke (5.8%).

Of the 363 patients, 123 underwent TAVR but not MRI in a safety arm of the trial.

The protection devices were placed “without safety concerns” in most patients. The rate of major adverse events with the device was 7.3%, markedly less than the 18.3% prespecified performance goal for this outcome. Total procedure time was lengthened by only 13 minutes when the device was used, and total fluoroscopy time was increased by only 3 minutes. These findings demonstrate the overall safety of using the device, Dr. Kapadia said.

Debris including thrombus with tissue elements, artery wall particles, calcifications, valve tissue, and foreign materials was retrieved from the filters in 99% of patients.

The mean volume of new cerebral lesions in areas of the brain protected by the device was reduced by 42%, compared with that in patients who underwent TAVR without the protection device. However, this reduction was not statistically significant, so the primary efficacy endpoint of the study was not met.

Similarly, neurocognitive testing at 30 days showed that the volume of new lesions correlated with poorer outcomes. However, the difference in neurocognitive function between the intervention group and the control group did not reach statistical significance.

Several limitations likely contributed to this lack of statistical significance, Dr. Kapadia said.

First, the 5-day “window” for MRI assessment was too long. Both the number and the volume of new lesions changed rapidly over time, so MRI findings varied markedly depending on when the images were taken.

In addition, only one TAVR device was available at the time the trial was designed, so the study wasn’t stratified by type of valve device. But several new devices became available during the study, and the study investigators were permitted to use any of them. Both pre- and postimplantation techniques differ among these TAVR devices, but these differences could not be accounted for, given the study design.

Also, certain risk factors for stroke, especially certain findings on baseline MRI, were not understood when the trial was designed, and those factors also were not accounted for, Dr. Kapadia said.

Claret Medical funded the study. Dr. Kapadia reported having no relevant financial disclosures; his associates reported numerous ties to industry sources. The meeting was sponsored by the Cardiovascular Research Foundation.

From a logical standpoint, a device that collects cerebral embolic material in 99% of cases should prevent ischemic brain injury, yet the findings from this randomized trial don’t appear to support the routine use of such devices. But it would be inappropriate and unfair to close the book on cerebral protection after this chapter.

The authors acknowledge that an MRI “window” of 5 days creates too much heterogeneity in the data, that multiple TAVR devices requiring different implantation techniques further muddy the picture, and that in retrospect the sample size was inadequate and the study was underpowered. In addition, rigorous neurocognitive assessment can be challenging in elderly, recovering patients, and results can depend on the time of day and the patient’s alertness.

Despite the negative findings regarding both primary and secondary endpoints, the data do show the overall safety of embolic protection devices. We are dealing with a potential benefit that cannot be ignored as TAVR shifts to younger and lower-risk patients.

Azeem Latib, MD, is in the interventional cardiology unit at San Raffaele Scientific Institute in Milan. Matteo Pagnesi, MD, is in the interventional cardiology unit at EMO-GVM Centro Cuore Columbus in Milan. San Raffaele Scientific Institute has been involved in clinical studies of embolic protection devices made by Claret Medical, Innovative Cardiovascular Solutions, and Keystone Heart. Dr. Latib and Dr. Pagnesi reported having no other relevant financial disclosures. They made these remarks in an editorial accompanying Dr. Kapadia’s report (J Am Coll Cardiol. 2016 Nov 1. doi: 10.1016/j.jacc.2016.10.036).

Key clinical point: The largest randomized clinical trial to assess the safety and efficacy of cerebral embolic protection systems during TAVR yielded puzzling and contradictory results.

Major finding: Debris including thrombus with tissue elements, artery wall particles, calcifications, valve tissue, and foreign materials was retrieved from the cerebral protection filters in 99% of patients.

Data source: A prospective, international, randomized trial involving 363 elderly patients undergoing TAVR for severe aortic stenosis.

Disclosures: Claret Medical funded the study. Dr. Kapadia reported having no relevant financial disclosures; his associates reported numerous ties to industry sources.

Hospitalizations for opioid poisoning tripled in preschool children

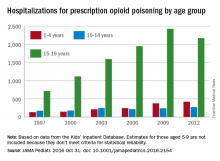

From 1997 to 2012, the annual number of hospitalizations for opioid poisoning rose 178% among children aged 1-19 years, according to data from over 13,000 discharge records.

In 2012, there were 2,918 hospitalizations for opioid poisoning among children aged 1-19, compared with 1,049 in 1997, reported Julie R. Gaither, PhD, MPH, RN, and her associates at Yale University in New Haven, Conn. (JAMA Pediatr. 2016 Oct 31. doi: 10.1001/jamapediatrics.2016.2154).

The greatest change occurred among the youngest children: annual hospitalizations among those aged 1-4 years rose from 133 in 1997 to 421 in 2012, an increase of 217%. Among those aged 15-19 years, the annual number of hospitalizations went from 715 to 2,171 (an increase of 204%) over that period, which included a slight drop from 2009 to 2012, according to the investigators, who used data from 13,052 discharges in the Agency for Healthcare Research and Quality’s Kids’ Inpatient Database.

The increase in hospitalizations for prescription opioid poisoning in children aged 10-14 years was 58% from 1997 to 2012 (rising from 171 to 272), while estimates for 5- to 9-year-olds did not meet the criteria for statistical reliability and were not included in the analysis, Dr. Gaither and her associates said.
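Because the article reports the raw annual counts alongside the percentages, the percent changes can be recomputed as a quick check. The minimal sketch below uses only the rounded counts quoted above; the group labels and the `percent_change` helper are illustrative names, not part of the study. Recomputed from these rounded figures, the 10- to 14-year-old increase comes to about 59% rather than the reported 58%, a small gap that may reflect rounding of the underlying weighted national estimates.

```python
# Annual hospitalizations for opioid poisoning (1997, 2012), as quoted in the article.
# The 5- to 9-year-old group was not reported, so it is omitted here.
counts = {
    "1-19 (all)": (1049, 2918),
    "1-4": (133, 421),
    "10-14": (171, 272),
    "15-19": (715, 2171),
}


def percent_change(before: float, after: float) -> float:
    """Relative change from `before` to `after`, expressed in percent."""
    return (after - before) / before * 100


for group, (y1997, y2012) in counts.items():
    pct = percent_change(y1997, y2012)
    fold = y2012 / y1997  # e.g., about 3.2x for ages 1-4 ("tripled")
    print(f"{group:>10}: {y1997:>5} -> {y2012:>5}  {pct:6.1f}%  ({fold:.2f}x)")
```

With these inputs, the overall, 1-4, and 15-19 figures round to the reported 178%, 217%, and 204%.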

The study was supported by grants from the National Institute on Drug Abuse. The investigators did not report any conflicts of interest.

FROM JAMA PEDIATRICS

ECMO patients need less sedation, pain meds than previously reported

Patients on extracorporeal membrane oxygenation (ECMO) received relatively low doses of sedatives and analgesics and were maintained at a light level of sedation, according to a single-center prospective study of 32 patients.

In addition, patients rarely required neuromuscular blockade, investigators reported online in the Journal of Critical Care.

These findings contrast with current guidelines on the management of pain, agitation, and delirium in patients on ECMO, which are based on previous research indicating the need for significant increases in sedative and analgesic doses, as well as for neuromuscular blockade, wrote Jeremy R. DeGrado, PharmD, of the department of pharmacy at Brigham and Women’s Hospital, Boston, and his colleagues (J Crit Care. 2016 Aug 10;37:1-6. doi: 10.1016/j.jcrc.2016.07.020).

“Patients required significantly lower doses of opioids and sedatives than previously reported in the literature and did not demonstrate a need for increasing doses throughout the study period,” the investigators said. “Continuous infusions of opioids were utilized on most ECMO days, but continuous infusions of benzodiazepines were used on less than half of all ECMO days.”

Their 2-year, prospective, observational study assessed 32 adult intensive care unit patients on ECMO support for more than 48 hours. A total of 15 patients received VA (venoarterial) ECMO and 17 received VV (venovenous) ECMO. Patients received a median daily dose of benzodiazepines (midazolam equivalents) of 24 mg and a median daily dose of opioids (fentanyl equivalents) of 3,875 mcg.

The primary indication for VA ECMO was cardiogenic shock, while VV ECMO was mainly used as a bridge to lung transplant or in patients with severe acute respiratory distress syndrome. The researchers evaluated a total of 475 ECMO days: 110 VA ECMO and 365 VV ECMO.

On average, patients were sedated to Richmond Agitation-Sedation Scale scores between 0 and −1. Across all 475 ECMO days, patients were treated with continuous infusions of opioids (on 85% of ECMO days), benzodiazepines (42%), propofol (20%), dexmedetomidine (7%), and neuromuscular blocking agents (13%).

Overall, patients on VV ECMO received a higher median dose of opioids and trended toward a lower dose of benzodiazepines than did those on VA ECMO, Dr. DeGrado and his associates reported.

Patients on VA ECMO, compared with those on VV ECMO, more frequently received a continuous opioid infusion (96% vs. 82% of days) and a continuous benzodiazepine infusion (58% vs. 37% of days). These differences were statistically significant.

Adjunctive therapies, including antipsychotics and clonidine, were administered frequently, according to the report.
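The per-mode percentages reported above can be checked against the pooled rates across all 475 ECMO days: weighting each mode's share by its number of ECMO days (110 VA, 365 VV) should approximately reproduce the overall figures. This is a minimal sketch, assuming the per-mode percentages are shares of ECMO days within each mode, as the text describes; the names `days`, `share`, and `pooled_share` are illustrative.

```python
# ECMO days by mode, as reported: 110 VA and 365 VV (475 total).
days = {"VA": 110, "VV": 365}

# Share of ECMO days with a continuous infusion, by mode (from the article).
share = {
    "opioid": {"VA": 0.96, "VV": 0.82},
    "benzodiazepine": {"VA": 0.58, "VV": 0.37},
}


def pooled_share(by_mode: dict[str, float], days: dict[str, int]) -> float:
    """Day-weighted average of per-mode shares, i.e., the share over all ECMO days."""
    total_days = sum(days.values())
    return sum(by_mode[mode] * n for mode, n in days.items()) / total_days


for drug, by_mode in share.items():
    print(f"{drug}: {pooled_share(by_mode, days):.1%} of all ECMO days")
```

With the reported inputs this gives about 85.2% for opioids and 41.9% for benzodiazepines, consistent with the overall 85% and 42%; small differences would be expected because the per-mode percentages are themselves rounded.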

“We did not observe an increase in dose requirement over time during ECMO support, possibly due to a multi-modal pharmacologic approach. Overall, patients were not deeply sedated and rarely required neuromuscular blockade. The hypothesis that patients on ECMO require high doses of sedatives and analgesics should be further investigated,” the researchers concluded.

The authors reported that they had no disclosures.

FROM JOURNAL OF CRITICAL CARE

Key clinical point:

Major finding: Patients required lower doses of opioids and sedatives than previously reported and did not need increasing doses.

Data source: A single-institution, prospective study of 32 patients on extracorporeal membrane oxygenation.

Disclosures: Dr. DeGrado reported having no financial disclosures.

Selected liver-transplant patients thrive off immunosuppression

MONTREAL – Three-fifths of pediatric liver-transplant recipients who were doing well enough to attempt weaning from their immunosuppression regimen succeeded in getting off immunosuppression and staying off for more than a year. In the process, they also significantly improved their health-related quality of life.

“Health-related quality of life domains associated with social interactions, worry, and medications improved” in pediatric liver recipients who had undergone immunosuppression withdrawal, Saeed Mohammad, MD, said at the World Congress of Pediatric Gastroenterology, Hepatology and Nutrition.

Patients who succeeded in staying off immunosuppressant drugs for at least 2 years after they first began ratcheting down their regimen showed better quality of life scores compared with their scores at baseline, and also compared with the scores of other pediatric liver transplant patients who unsuccessfully tried coming off immunosuppression.

Not every pediatric liver transplant patient should attempt withdrawing immunosuppression, cautioned Dr. Mohammad, a pediatric gastroenterologist at Northwestern University in Chicago. “To be successful, withdrawal of immunosuppression needs to be in selected patients; not every patient is a good candidate.”

The Immunosuppression Withdrawal for Stable Pediatric Liver Transplant Recipients (iWITH) study ran at 11 U.S. centers and one center in Toronto from October 2012 through June 2014. Pediatric liver transplant recipients were eligible to start a 9- to 10-month graduated withdrawal from their immunosuppression regimen if they met several stability criteria, including no rejection episode for at least the prior 12 months, normal laboratory test results, no autoimmune disease, and no problems detected on liver biopsy. The prospective study enrolled 88 patients with an average age of 10 years. Patients underwent comprehensive examinations and laboratory testing at baseline and several more times during the subsequent 2 years, including assessment of several quality of life measures.

During follow-up, 35 of the 88 patients (40%) developed symptoms of rejection and had to go back on immunosuppression. Most of these patients developed rejection symptoms early during weaning, but a few failed later, including one patient who failed 22 months after starting immunosuppression withdrawal, Dr. Mohammad said. Researchers from the iWITH study first reported these results at the American Transplant Congress in June 2016.

The quality of life findings reported by Dr. Mohammad came from assessments at baseline, after 12 months, and after 24 months, and included 30 of the patients who resumed immunosuppression and 48 patients who remained off immunosuppression for 2 years. All of these 78 patients had relatively robust quality of life profiles at baseline. Their physical and social subscale scores, as well as their total scores, were significantly better than the average scores of a large number of primarily U.S. pediatric liver transplant patients in the SPLIT database. Dr. Mohammad described the patients who attempted immunosuppression discontinuation as the “creme de la creme” of pediatric liver transplant patients in terms of their clinical status.

Compared with baseline, scores after 2 years showed statistically significant improvements among patients who stayed off immunosuppression in the domains of social function, treatment attitudes and compliance, communication, and worry. When changes in quality of life scores from baseline to 2 years were compared, patients who stayed off immunosuppression improved on several scores, while patients who went back on immunosuppression had, on average, a small deterioration in their scores.

Dr. Mohammad had no disclosures.

On Twitter @mitchelzoler

AT WCPGHAN 2016

Key clinical point: Selected pediatric liver-transplant patients who successfully weaned off immunosuppression responded with significantly improved quality of life scores.

Major finding: Patient and parent treatment satisfaction improved by 6-7 points when patients stopped immunosuppression and fell by 2-3 points when they did not.

Data source: iWITH, a multicenter study with 88 enrolled patients.

Disclosures: Dr. Mohammad had no disclosures.

Resorbable scaffold appears safe, effective in diabetes patients

An everolimus-eluting resorbable scaffold appeared to be safe and effective for percutaneous coronary intervention (PCI) in patients with diabetes and noncomplex coronary lesions, according to a study presented at the Transcatheter Cardiovascular Therapeutics annual meeting and published simultaneously in the Journal of the American College of Cardiology: Cardiovascular Interventions.

Patients with diabetes constitute an important and increasingly prevalent subgroup of PCI patients who are at high risk of adverse clinical and angiographic outcomes such as MI, stent thrombosis, restenosis, and death. This is thought to be due to the greater vascular inflammation, tendency toward a prothrombotic state, and more complex angiographic features seen in diabetic patients, said Dean J. Kereiakes, MD, of the Christ Hospital Heart and Vascular Center, Lindner Research Center, Cincinnati.

All participants in the substudy, a prespecified analysis of 754 patients with diabetes who took part in three clinical trials and one device registry, received at least one resorbable scaffold in at least one target lesion. Overall, 27.3% were insulin dependent, and nearly 60% had HbA1c levels exceeding 7.0%. Notably, 18% of all treated lesions in this analysis were less than 2.25 mm in diameter as assessed by quantitative coronary angiography, and approximately 60% had moderately to severely complex morphology.

The primary endpoint – the rate of target-lesion failure at 1-year follow-up – was 8.3%, which was well below the prespecified performance goal of 12.7%. This rate ranged from 4.4% to 10.9% across the different trials. A sensitivity analysis confirmed that the 1-year rate of target-lesion failure was significantly lower than the prespecified performance goal.

The rates of target-lesion failure, target-vessel MI, ischemia-driven target-lesion revascularization, and scaffold thrombosis were significantly higher in diabetic patients who required insulin than in those who did not. Older patient age, insulin dependency, and small target-vessel diameter all were independent predictors of target-lesion failure at 1 year.

The overall 1-year rate of scaffold thrombosis in this study was 2.3%, which is not surprising given the study population’s risk factors. For diabetic patients with appropriately sized vessels greater than 2.25 mm in diameter, the scaffold thrombosis rate was lower (1.3%).

In addition to being underpowered to assess rare adverse events, this study was limited in that it reported outcomes at 1 year, before resorption of the device was complete. It also reflects the first-time clinical experience with a resorbable scaffold for most of the participating investigators, “and one would expect that as with all new medical procedures, results will improve over time with increased operator experience,” the coauthors wrote.

Dr. Kereiakes reported being a consultant to Abbott Vascular, and his associates also reported ties to the company and to other industry sources.

Key clinical point:

Major finding: The primary endpoint – the rate of target-lesion failure at 1-year follow-up – was 8.3%, which was well below the prespecified performance goal of 12.7%.

Data source: A prespecified formal substudy of 754 patients with diabetes who participated in three clinical trials and one device registry, assessing 1-year outcomes after PCI.

Disclosures: This pooled analysis, as well as all the contributing trials and the device registry, was funded by Abbott Vascular, maker of the resorbable scaffold. Dr. Kereiakes reported being a consultant to Abbott Vascular, and his associates also reported ties to the company and to other industry sources.

Causes of recurrent pediatric pancreatitis start to emerge

MONTREAL – Once children have a first bout of acute pancreatitis, a second, separate episode of acute pancreatitis most often occurs in patients with genetically triggered pancreatitis, those who are taller or weigh more than average, and patients with pancreatic necrosis, based on multicenter, prospective data collected from 83 patients.

This is the first reported study to prospectively follow pediatric cases of acute pancreatitis, and additional studies with more patients are needed to better identify the factors predisposing patients to recurrent episodes of acute pancreatitis and to quantify the amount of risk these factors pose, Katherine F. Sweeny, MD, said at the annual meeting of the Federation of the International Societies of Pediatric Gastroenterology, Hepatology, and Nutrition.

The analysis focused on the 83 patients with at least 3 months of follow-up. During observation, 17 (20%) of the patients developed a second episode of acute pancreatitis that was distinguished from the initial episode by either at least 1 pain-free month or complete normalization of amylase and lipase levels between the two episodes. Thirteen of the 17 recurrences occurred within 5 months of the first episode, with 11 of these occurring within the first 3 months after the first attack, a subgroup Dr. Sweeny called the “rapid progressors.”

Comparison of the 11 rapid progressors with the other 72 patients showed that the rapid progressors were significantly taller and weighed more. In addition, two of the 11 rapid progressors had pancreatic necrosis, while none of the other patients had this complication.

The pancreatitis etiologies of the 11 rapid progressors also highlighted the strong influence a genetic mutation can have on recurrent acute pancreatitis. Four of the 11 rapid progressors had a genetic mutation linked to pancreatitis susceptibility, and five of the six patients with a genetic cause for their index episode of pancreatitis developed a second acute episode during follow-up, said Dr. Sweeny, a pediatrician at Cincinnati Children’s Hospital Medical Center. In contrast, the etiology with the next-highest recurrence rate was a toxin or drug, with about a 25% incidence of a second episode. All other pancreatitis etiologies had recurrence rates of 10% or less.

Collecting better information on the causes of recurrent pancreatitis and chronic pancreatitis is especially important because of the rising incidence of acute pediatric pancreatitis, currently about one case in every 10,000 children and adolescents. Prior to formation of the INSPPIRE consortium, studies of pediatric pancreatitis had largely been limited to single-center retrospective reviews. The limitations of these data have made it hard to predict which patients with a first episode of acute pancreatitis will progress to a second episode or beyond, Dr. Sweeny said.

Dr. Sweeny had no disclosures.

On Twitter @mitchelzoler

AT WCPGHAN 2016

Key clinical point:

Major finding: Overall, 17 of 83 patients (20%) had recurrent acute pancreatitis, but among six patients with a genetic cause, five had recurrences.

Data source: Eighty-three patients enrolled in INSPPIRE, an international consortium formed to prospectively study pediatric pancreatitis.

Disclosures: Dr. Sweeny had no disclosures.

VIDEO: Bioresorbable Absorb unexpectedly humbled by metallic DES

WASHINGTON – The bioabsorbable vascular scaffold bubble suddenly burst with the first 3-year follow-up data from a randomized trial that unexpectedly showed that the Absorb device significantly underperformed compared with Xience, a widely used, second-generation metallic drug-eluting stent.

“As a pioneer of BVS [bioresorbable vascular scaffold] I’m disappointed,” Patrick W. Serruys, MD, said at the Transcatheter Cardiovascular Therapeutics annual meeting. “The performance of the comparator stent was spectacular.”

Xience surpassed Absorb in several other secondary endpoints. For example, the in-device binary restenosis rate was 7.0% with Absorb and 0.7% with Xience; the in-segment binary restenosis rate was 8% with Absorb and 3% with Xience. Target-vessel MIs occurred in 7% of the Absorb patients and 1% of the Xience patients, while clinically indicated target-lesion revascularization occurred in 6% of the Absorb patients and 1% of the Xience patients.

Another notable finding was that definite or probable in-device thrombosis occurred in nine Absorb patients and in none of the Xience patients, a statistically significant difference. Six of the Absorb thrombotic events occurred more than 1 year after the device was placed, and several occurred more than 900 days after placement, when the BVS had largely resorbed.

“These thromboses are occurring at the late stages of BVS degradation,” Dr. Serruys noted. “The Absorb polymer is basically gone after 3 years, but it’s replaced by a proteoglycan, and some proteoglycans are quite thrombogenic,” a possible explanation for the “mysterious” very late thromboses, he said.

These “disappointing” results may be linked to inadequate lesion preparation, inappropriate sizing of the BVS for the lesion, and inconsistent postdilatation of the BVS; careful attention to these three steps became the guiding mantra for BVS use starting a couple of years ago, said Giulio G. Stefanini, MD, an interventional cardiologist at Humanitas Research Hospital in Milan and a discussant for the report at the meeting, which was sponsored by the Cardiovascular Research Foundation.

Dr. Stefanini said that even though the Absorb stent became available for routine use in Europe in 2012, the device has gained little traction since then, both in his own practice and throughout Italy. Currently it’s used for fewer than 5% of coronary interventions in Italy, he estimated. That’s largely because “we have failed to identify a population that benefits.” Other issues include the extra time needed to place a BVS and the longer course of dual antiplatelet therapy needed for patients who receive a BVS, compared with those who receive a modern metallic drug-eluting stent. The Absorb BVS received Food and Drug Administration approval for routine U.S. use in July 2016.

“It would be beautiful to have a fully bioresorbable stent. It’s a lovely concept, but we’re not there yet,” Dr. Stefanini observed.

ABSORB II was sponsored by Abbott Vascular, which markets the Absorb device. Dr. Serruys has received research support from Abbott Vascular and has been a consultant to several other device and drug companies. Dr. Stefanini has been a consultant to Boston Scientific, B. Braun, and Edwards.

On Twitter @mitchelzoler

We were all disappointed by the ABSORB II 3-year results. It was really surprising that even the vasomotion endpoint was, if anything, a little better with Xience, which performed amazingly well in this trial. Both arms of the study did well out to 3 years, but the Xience patients did better.

The Absorb bioresorbable vascular scaffold (BVS) is an early-stage device, and based on these results I wouldn’t give up on the BVS concept. But we need to be very careful with Absorb and with which patients we implant it in. We need to make sure we use thorough lesion preparation, correct sizing, and postdilatation in every patient, and we need to select the right patients carefully.

The main issue with the Absorb BVS in this trial was scaffold thrombosis, so we need to use a BVS only in patients with the lowest thrombosis risk, which means younger patients without renal failure or calcified vessels who have a larger-diameter target coronary artery. Younger patients have the most to gain from receiving a BVS. Younger patients who need a coronary intervention often collect several stents over the rest of their lives, and these are the patients in whom you’d prefer that the stents eventually disappear.

Paul S. Teirstein, MD, is chief of cardiology and director of interventional cardiology at the Scripps Clinic in La Jolla, Calif. He has received research support from and has been a consultant to Abbott Vascular, Boston Scientific, and Medtronic. He made these comments in an interview.