News and Views that Matter to Physicians

Absorb bioresorbable vascular scaffold wins FDA approval

The Food and Drug Administration approved the first fully absorbable vascular scaffold designed for use in coronary arteries, the Absorb GT1 bioresorbable vascular scaffold system, made by Abbott.

Concurrent with the FDA’s announcement on July 5, the company said that it plans to start immediate commercial rollout of the Absorb bioresorbable vascular scaffold (BVS). Initial availability will be limited to the roughly 100 most active sites that participated in the ABSORB III trial, according to a company spokesman. ABSORB III was the pivotal study that established the noninferiority of the BVS to a state-of-the-art metallic coronary stent during 1-year follow-up.

However, the ABSORB III results, reported in October 2015, showed no superiority of the BVS over a metallic stent. For now, the potential advantages of a BVS remain unproven. They rest on the hypothesized long-term benefits of a device for percutaneous coronary intervention that slowly degrades, eliminating the residual metallic structure left in a patient’s coronary arteries and the long-term risk that such a structure could pose for thrombosis or for interference with subsequent coronary procedures.

“All the potential advantages are hypothetical at this point,” said Hiram G. Bezerra, MD, an investigator in the ABSORB III trial and director of the cardiac catheterization laboratory at University Hospitals Case Medical Center in Cleveland. However, “if you have a metallic stent it lasts a lifetime, creating a metallic cage” that could interfere with a possible later coronary procedure or be the site for thrombus formation. Disappearance of the BVS also creates the possibility for eventual restoration of more normal vasomotion in the coronary wall, said Dr. Bezerra, a self-professed “enthusiast” for the BVS alternative.

A major limiting factor for BVS use today is vessel diameter, because the Absorb BVS is bulkier than metallic stents. The ABSORB III trial limited use of the BVS to coronary vessels with a visually assessed reference-vessel diameter of 2.5-3.75 mm. Coronary calcification and tortuosity can also be limiting, although Dr. Bezerra said that an operator committed to placing a BVS can usually overcome these obstacles with a more time-consuming procedure.
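The vessel-size window used in ABSORB III amounts to a simple range check. The minimal Python sketch below encodes only that reported diameter criterion; the constant and function names are hypothetical, the inclusive bounds are an assumption drawn from the wording "at least 2.5 mm" with "an upper limit of 3.75 mm," and calcification, tortuosity, and every other trial criterion are ignored.

```python
# Hypothetical constants: the reported ABSORB III reference-vessel
# diameter window (visual assessment), treated here as inclusive.
ABSORB_III_MIN_MM = 2.5
ABSORB_III_MAX_MM = 3.75


def within_absorb_iii_size_window(reference_diameter_mm: float) -> bool:
    """Return True if the diameter lies inside the trial's size window.

    Only vessel diameter is checked; no other eligibility factor is modeled.
    """
    return ABSORB_III_MIN_MM <= reference_diameter_mm <= ABSORB_III_MAX_MM


if __name__ == "__main__":
    for d in (2.25, 2.5, 3.0, 3.75, 4.0):
        print(d, within_absorb_iii_size_window(d))
```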

Another variable will be the cost of the BVS. According to the Abbott spokesman, the device “will be priced so that it will be broadly accessible to hospitals.” The spokesman also said that the Absorb BVS will receive payer reimbursement comparable to that for a drug-eluting stent, using existing reimbursement codes. Abbott will require inexperienced operators to take a training course on proper placement technique.

Dr. Bezerra admitted that he is probably an outlier in his plan to quickly make the BVS a mainstay of his practice. “I think adoption will be slow in the beginning” for most U.S. operators, he predicted. One of his Cleveland colleagues, speaking last October about the near-term prospects for BVS use when the ABSORB III results came out, predicted that immediate use might occur in about 10%-15% of patients undergoing percutaneous coronary interventions, similar to the usage level in Europe, where this BVS has been available for several years.

Dr. Bezerra has been a consultant to Abbott and St. Jude. He was an investigator on the ABSORB III trial.

On Twitter @mitchelzoler

Multisite NIH-sponsored research can now use single IRB

In an effort to streamline multisite clinical research, the National Institutes of Health announced a new policy related to the use of institutional review boards (IRBs).

The new policy sets “the expectation that multisite studies conducting the same protocol use a single IRB to carry out the ethical review of the proposed research,” NIH Director Francis S. Collins, MD, said in a June 21 statement. The policy goes into effect May 25, 2017, and applies only to domestic research.

Currently, for most multisite studies, the IRB at each site conducts an independent review of protocol and consent documents, which Dr. Collins said “adds time, but generally does not meaningfully enhance protections for the participants. This new NIH policy seeks to end duplicative reviews that slow down the start of the research.”

Michael Pichichero, MD, director of the research institute, Rochester (N.Y.) General Hospital, called the change “a good policy. Allowing a single IRB to review and not go through multiple reviews will help get clinical trials going faster,” he said in an interview. “The policies and principles of IRB review are the same for all U.S. Food and Drug Administration–approved IRBs, so the concern that inappropriate approval might be given is highly unlikely.”

Permanent pacemaker in TAVR: Earlier implantation costs much less

PARIS – When a patient undergoing transcatheter aortic valve replacement needs a permanent pacemaker, the additional hospital costs are significantly lower if the device is implanted within 24 hours after TAVR rather than later, Seth Clancy reported at the annual congress of the European Association of Percutaneous Cardiovascular Interventions.

“Not only the need for permanent pacemaker implantation but also the timing of the procedure as well as the management and monitoring of conduction disturbances have important resource use implications for TAVR,” observed Mr. Clancy of Edwards Lifesciences of Irvine, Calif.

He presented an economic analysis of all 12,148 TAVR hospitalizations included in the Medicare database for 2014. A key finding: The mean cost of TAVR hospitalizations with no permanent pacemaker implantation was $63,136, while for the 12% of TAVRs that did include permanent pacemaker implantation, the mean cost shot up to $80,441, for a difference of $17,305.

The additional cost of putting in a permanent pacemaker included nearly $8,000 for supplies, more than $2,600 for additional time in the operating room and/or catheterization laboratory, and in excess of $2,100 worth of extra ICU or cardiac care unit time.
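The cost figures reported above can be reconciled with simple arithmetic. In this Python sketch the component amounts are rounded stand-ins for the reported "nearly," "more than," and "in excess of" figures, so the itemized total and the remainder are rough approximations; the variable names are illustrative.

```python
# Reported mean costs of 2014 Medicare TAVR hospitalizations (USD).
mean_cost_no_ppm = 63_136
mean_cost_with_ppm = 80_441

# Unadjusted difference attributable to hospitalizations with a pacemaker.
difference = mean_cost_with_ppm - mean_cost_no_ppm

# Components were reported only approximately, so this sum is rough.
approx_components = {
    "supplies": 8_000,
    "OR/cath lab time": 2_600,
    "ICU/CCU time": 2_100,
}
itemized = sum(approx_components.values())

print(f"Unadjusted difference: ${difference:,}")
print(f"Approximate itemized total: ${itemized:,}")
print(f"Not itemized in the report: about ${difference - itemized:,}")
```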

Patients who received a permanent pacemaker during their TAVR hospitalization spent an average of 2.3 days longer in the hospital than the mean 6.6 days for patients who didn’t get a permanent pacemaker.

Drilling down further into the data, Mr. Clancy found that 41% of permanent pacemakers implanted during hospitalization for TAVR went in within 24 hours of the procedure. In a multivariate regression analysis adjusted for differences in patient demographics, comorbid conditions, and complications, patients who received a pacemaker within 24 hours generated an average of $9,843 more in hospital costs than patients who didn’t get a permanent pacemaker during their TAVR hospitalization. By contrast, patients who received a permanent pacemaker more than 24 hours after TAVR cost an average of $17,681 more and had a 2.72-day longer stay than patients who didn’t get one.
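A short calculation makes the early-versus-late comparison explicit: the adjusted figures imply that late implantation carried nearly twice the incremental cost of early implantation. The inputs are the reported adjusted means; the variable names are illustrative.

```python
# Reported adjusted incremental costs vs. no pacemaker (USD).
early_increment = 9_843   # pacemaker implanted within 24 h of TAVR
late_increment = 17_681   # pacemaker implanted more than 24 h after TAVR

# How many times larger the late increment is, and the absolute gap.
ratio = late_increment / early_increment
gap = late_increment - early_increment

print(f"Late/early ratio: {ratio:.2f}")
print(f"Extra cost associated with late implantation: ${gap:,}")
```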

The need for a permanent pacemaker is a common complication following TAVR. This has been a sticking point for many cardiothoracic surgeons, who note that rates of permanent pacemaker implantation following surgical aortic valve replacement are far lower. Still, rates in TAVR patients have come down over time with advances in valve technology. Currently, permanent pacemaker implantation rates in TAVR patients are 5%-25%, depending upon the valve system, according to Mr. Clancy.

Advances in device design and techniques aimed at reducing the permanent pacemaker implantation rate substantially below the 12% figure seen in 2014 have the potential to generate substantial cost savings, he observed.
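For a rough sense of scale, the back-of-the-envelope Python sketch below assumes, purely for illustration, that each avoided pacemaker removes the full unadjusted mean difference of $17,305. That assumption ignores confounding, implantation timing, and long-term outcomes, and the function name and the 8% target rate are hypothetical, not figures from the presentation.

```python
def crude_savings(hospitalizations: int, baseline_pct: float,
                  target_pct: float, cost_per_ppm: float = 17_305) -> float:
    """Crude hospital-cost savings from lowering the pacemaker rate.

    Assumes every avoided pacemaker saves the unadjusted mean cost
    difference; this is an illustrative simplification only.
    """
    avoided = hospitalizations * (baseline_pct - target_pct) / 100
    return avoided * cost_per_ppm


if __name__ == "__main__":
    # 12,148 Medicare TAVRs in 2014 at a 12% rate vs. a hypothetical 8%.
    print(f"${crude_savings(12_148, 12, 8):,.0f}")
```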

Session chair Mohammad Abdelghani, MD, of the Academic Medical Center at Amsterdam, questioned whether the study results are relevant to European practice, given the large differences in health care costs between the United States and Europe.

Discussant Sonia Petronio, MD, expressed a more fundamental reservation.

“This is a very important subject – and a very dangerous one,” said Dr. Petronio of the University of Pisa (Italy). “It’s easier and less costly for a hospital to encourage increasing early permanent pacemaker implantation because the patient can go home earlier.”

“We don’t want to put in a pacemaker earlier to save money,” agreed Dr. Abdelghani. “This is not a cost-effectiveness analysis, it’s purely a cost analysis. Cost-effectiveness would take into account the long-term clinical outcomes and welfare of the patients. We would like to see that from you next year.”

Mr. Clancy is an employee of Edwards Lifesciences, which funded the study.

AT EUROPCR 2016

Key clinical point: The incremental cost of permanent pacemaker implantation more than 24 hours after transcatheter aortic valve replacement is almost twice as great as if the pacemaker goes in within 24 hours.

Major finding: The mean cost of hospitalization for transcatheter aortic valve replacement without permanent pacemaker implantation in Medicare patients in 2014 was $63,136, compared with $80,441 if they needed a pacemaker.

Data source: This was a retrospective study of the health care costs and lengths of stay for all 12,148 hospitalizations for transcatheter aortic valve replacement in the Medicare inpatient database for 2014.

Disclosures: The presenter is an employee of Edwards Lifesciences, which funded the study.

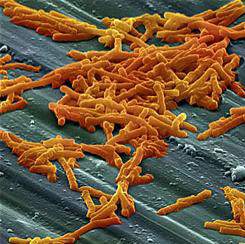

Seasonal variation not seen in C. difficile rates

BOSTON – No winter spike in Clostridium difficile infection (CDI) rates was seen among hospitalized patients after testing methodologies and frequency were accounted for, according to a large multinational study.

The 180 participating hospitals in five European countries varied widely in CDI testing methods and testing density. However, among hospitals that used a currently recommended toxin-detecting testing algorithm, there was no significant seasonal variation in case rates, expressed as mean cases per 10,000 patient bed-days per hospital per month (C/PBDs/H/M). These hospitals had a summer rate of 9.6 C/PBDs/H/M, compared with 8.0 in winter (P = .27).
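The normalized rate used throughout the study counts events per 10,000 patient bed-days. The exact aggregation the investigators used was not described, so this Python sketch assumes one plausible reading: compute a rate for each hospital-month, then average those rates. The same calculation applies to tests (T/PBDs/H/M). The function names and sample data are hypothetical.

```python
from statistics import mean


def rate_per_10k_bed_days(count: int, bed_days: int) -> float:
    """Events (cases or tests) per 10,000 patient bed-days."""
    if bed_days <= 0:
        raise ValueError("bed_days must be positive")
    return 10_000 * count / bed_days


def mean_monthly_rate(hospital_months: list[tuple[int, int]]) -> float:
    """Average of per-hospital, per-month rates (one reading of C/PBDs/H/M).

    Each item is a hypothetical (event_count, patient_bed_days) pair.
    """
    return mean(rate_per_10k_bed_days(c, b) for c, b in hospital_months)


if __name__ == "__main__":
    # Hypothetical data for three hospital-months.
    example = [(12, 15_000), (9, 10_000), (4, 8_000)]
    print(round(mean_monthly_rate(example), 2))
```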

These results, presented at the annual meeting of the American Society for Microbiology by Kerrie Davies, clinical scientist at the University of Leeds (England), stand in contrast to some other studies that have shown a wintertime peak in CDI incidence. The data presented help in “understanding the context in which published reported rate data have been generated,” said Ms. Davies, enabling a better understanding of both how samples are tested and who gets tested.

The study enrolled 180 hospitals – 38 each in France and Italy, 37 each in Germany and the United Kingdom, and 30 in Spain. Institutions reported patient demographics, as well as CDI testing data and patient bed-days for CDI cases, for 1 year.

Current European and U.K. CDI testing algorithms, said Ms. Davies, begin either with testing for glutamate dehydrogenase (GDH) or with nucleic acid amplification testing (NAAT), and then proceed to enzyme-linked immunosorbent assay (ELISA) testing for C. difficile toxins A and B.

Other algorithms, for example those that begin with toxin testing, are not recommended, said Ms. Davies. Some institutions may diagnose CDI only by toxin detection, GDH testing, or NAAT testing.

For the data analysis, Ms. Davies and her collaborators compared CDI case rates and testing density during June, July, and August with those in December, January, and February. Testing methods were dichotomized into toxin-detecting CDI testing algorithms (TCTA, using GDH/toxin or NAAT/toxin) and non-TCTA methods, which included all other algorithms and stand-alone testing methods.
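The dichotomization of testing methods can be expressed as a small classifier. In this Python sketch, a method is represented as a hypothetical tuple of step labels in the order performed; only the two recommended sequences (GDH then toxin, or NAAT then toxin) count as TCTA, and everything else, including toxin-first algorithms and stand-alone tests, is non-TCTA.

```python
# Hypothetical step labels; only these two ordered sequences are TCTA.
RECOMMENDED_TCTA = {("GDH", "toxin"), ("NAAT", "toxin")}


def classify_method(steps: tuple[str, ...]) -> str:
    """Return 'TCTA' for GDH/toxin or NAAT/toxin, otherwise 'non-TCTA'."""
    return "TCTA" if tuple(steps) in RECOMMENDED_TCTA else "non-TCTA"


if __name__ == "__main__":
    methods = [("GDH", "toxin"), ("NAAT", "toxin"), ("toxin",),
               ("GDH",), ("NAAT",), ("toxin", "GDH")]
    for method in methods:
        print(method, classify_method(method))
```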

Testing methodologies varied widely between countries. The United Kingdom had the highest rate of TCTA use, at 89%, while Germany had the lowest, at 8%; 30 of the 37 participating German hospitals (81%) used non–toxin detection methods.

In addition, both testing density and case incidence rates varied between countries. Standardizing test density to mean number of tests per 10,000 PBDs per hospital per month (T/PBDs/H/M), the United Kingdom had the highest density, at 96.0 T/PBDs/H/M, while France had the lowest, at 34.4 T/PBDs/H/M. Overall per-nation case rates ranged from 2.55 C/PBDs/H/M in the United Kingdom to 6.9 C/PBDs/H/M in Spain.

Ms. Davies and her collaborators also pooled data from hospitals across all five countries and analyzed them by testing method. That analysis found no significant seasonal variation in testing rates for TCTA-using hospitals (mean T/PBDs/H/M of 119.2 in summer versus 102.4 in winter; P = .11) and no significant seasonal variation in CDI incidence. However, “the largest variation in CDI rates was seen in those hospitals using toxin-only diagnostic methods,” said Ms. Davies.

By contrast, among hospitals using non-TCTA methods, testing rates did not change significantly, but incidence was significantly higher in winter, at a mean of 13.5 C/PBDs/H/M in winter versus 10.0 in summer (P = .049).

One country, Italy, stood out for having both higher wintertime testing (mean 57.2 T/PBDs/H/M in summer versus 78.8 in winter; P = .041) and higher wintertime incidence (mean 6.6 C/PBDs/H/M in summer versus 10.1 in winter; P = .017).
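The reported seasonal means can be compared as relative changes from summer to winter. This Python sketch uses only the means quoted above; percentage change describes the size of a shift, while statistical significance comes from the reported P values, which this calculation does not reproduce. The function name and labels are illustrative.

```python
def pct_change(summer: float, winter: float) -> float:
    """Percentage change from the summer mean to the winter mean."""
    return 100 * (winter - summer) / summer


# Reported (summer, winter) means from the study.
reported = {
    "TCTA hospitals, case rate": (9.6, 8.0),
    "non-TCTA hospitals, case rate": (10.0, 13.5),
    "TCTA hospitals, test density": (119.2, 102.4),
    "Italy, test density": (57.2, 78.8),
    "Italy, case rate": (6.6, 10.1),
}

if __name__ == "__main__":
    for label, (summer, winter) in reported.items():
        print(f"{label}: {pct_change(summer, winter):+.1f}%")
```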

“Reported CDI rates only increase in winter if testing rates increase concurrently, or if hospitals use nonrecommended testing methods for diagnosis, especially non–toxin detection methods,” said Ms. Davies.

The study investigators reported receiving financial support from Sanofi Pasteur.

On Twitter @karioakes

AT ASM MICROBE 2016

Key clinical point: After researchers accounted for testing frequency and methods, Clostridium difficile infection (CDI) rates were not higher in the winter months.

Major finding: In five European countries, hospitals that used direct toxin-detecting algorithms to test for CDI had no significant seasonal variation in CDI incidence (mean cases per 10,000 patient bed-days per hospital per month: summer, 9.6; winter, 8.0; P = .27).

Data source: Demographic and testing data collected from 180 hospitals in five European countries to ascertain CDI testing methods, testing rates, cases, and patient bed-days per month.

Disclosures: The study investigators reported financial support from Sanofi Pasteur.

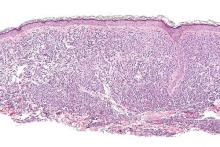

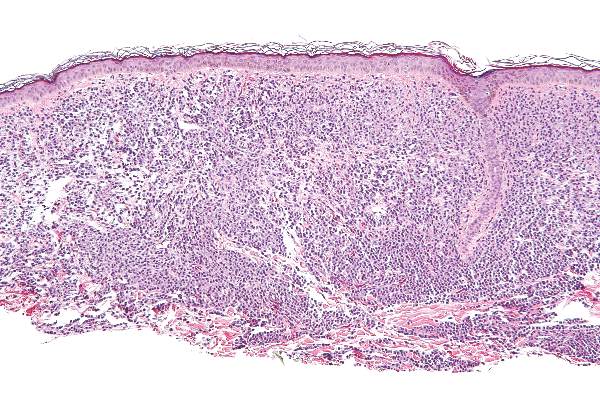

Midostaurin cut organ damage in systemic mastocytosis

Midostaurin completely resolved at least one type of organ damage for 45% of patients with advanced systemic mastocytosis, based on a multicenter, open-label, phase II, industry-sponsored trial.

“Response rates were similar regardless of the subtype of advanced systemic mastocytosis, KIT mutation status, or exposure to previous therapy,” reported Jason R. Gotlib, MD, of Stanford (Calif.) University, and his associates. Adverse effects led to dose reductions for 41% of patients, however, and caused 22% of patients to stop treatment, the researchers wrote online June 29 in the New England Journal of Medicine.

Systemic mastocytosis is associated with the KIT D816V mutation, which constitutively activates the KIT receptor tyrosine kinase. As neoplastic mast cells infiltrate and damage organs, patients develop cytopenias, hypoalbuminemia, osteolytic bone lesions, abnormal liver function, ascites, and weight loss. Mastocytosis lacks effective treatments, and patients with aggressive disease tend to live about 3.5 years, the researchers noted (N Engl J Med. 2016 Jun 29;374:2530-40).

Of 116 patients with advanced systemic mastocytosis, 27 lacked measurable signs of disease or had unrelated signs and symptoms. The remaining 89 patients included 16 with aggressive systemic mastocytosis, 57 with systemic mastocytosis and an associated hematologic neoplasm, and 16 with mast cell leukemia. Patients received 100 mg oral midostaurin twice daily in continuous 4-week cycles for a median of 11.4 months, with a median follow-up of 26 months.

In all, 53 patients (60%) had at least 50% improvement in one type of organ damage or improvement in more than one type of organ damage, said the researchers. These responders included 12 patients with aggressive systemic mastocytosis, 33 patients with systemic mastocytosis and a hematologic neoplasm, and 8 patients with mast cell leukemia. No one achieved complete remission, but after six treatment cycles, 45% of patients had complete resolution of at least one type of organ damage.

The median best reduction in both bone marrow mast cell burden and serum tryptase level was nearly 60%. The median duration of response was 24 months, median overall survival was 28.7 months, and median progression-free survival was 14.1 months. Mast cell leukemia and a history of treatment for mastocytosis were tied to shorter survival, while a reduction of at least 50% in mast cell burden was associated with significantly longer survival (hazard ratio, 0.33; P = .01).

Grade 3 or 4 hematologic abnormalities included neutropenia (24% of patients), anemia (41%), and thrombocytopenia (29%). Marked myelosuppression was associated with baseline cytopenia and may have reflected either treatment-related effects or disease progression, the researchers said. The most common grade 3 or 4 nonhematologic adverse effects were fatigue (9% of patients) and diarrhea (8%).

Novartis Pharmaceuticals sponsored the study and, together with the authors, designed it and collected the data. Dr. Gotlib disclosed travel reimbursement from Novartis. Ten coinvestigators disclosed financial ties to Novartis and to several other pharmaceutical companies. Two coinvestigators disclosed direct research support from Novartis. The remaining four coinvestigators had no disclosures.

FROM THE NEW ENGLAND JOURNAL OF MEDICINE

Key clinical point: Midostaurin helped to resolve organ damage related to mastocytosis.

Major finding: In all, 45% of patients had complete resolution of at least one type of organ damage within six 4-week treatment cycles.

Data source: An international, open-label, phase II study of 116 patients given 100 mg oral midostaurin twice daily.

Disclosures: Novartis Pharmaceuticals sponsored the study, designed the study, and collected the data together with the authors. Dr. Gotlib disclosed travel reimbursement from Novartis. Ten coinvestigators disclosed financial ties to Novartis and to several other pharmaceutical companies. Two coinvestigators disclosed direct research support from Novartis. Four coinvestigators had no disclosures.

Staffing, work environment drive VAP risk in the ICU

SAN FRANCISCO – The work environment for nurses and the physician staffing model in the intensive care unit influence patients’ likelihood of acquiring ventilator-associated pneumonia (VAP), based on a cohort study of 25 ICUs.

Overall, each 1-point increase in the score for the nurse work environment, indicating that nurses had a greater sense of playing an important role in patient care, was unexpectedly associated with a roughly sixfold higher rate of VAP among the ICU’s patients, according to data reported in a session and press briefing at an international conference of the American Thoracic Society. However, additional analyses showed that this association depended on the physician staffing model. The rate of VAP rose with the score in closed units, where a board-certified critical care physician (intensivist) manages and leads care, but fell with the score in open units, where care is shared.

“We think that the organization of the ICU is actually influencing nursing practice, which is a really novel finding,” commented first author Deena Kelly Costa, PhD, RN, of the University of Michigan School of Nursing in Ann Arbor. “In closed ICUs, when you have a board-certified physician and an ICU team managing and leading care, even if the work environment is better, nurses may not feel as empowered to standardize their care or practice.”

“ICU nurses are the ones who are primarily responsible for VAP preventive practices: they keep the head of the bed higher than 45 degrees, they conduct oral care, they conduct (patient) surveillance. ICU physicians are involved with writing the orders and ventilator setting management. So how these providers work together could theoretically influence the risk for patients developing VAP,” Dr. Costa said.

“We need to be thinking a little bit more critically about not only the care that’s happening at the bedside... but also at an organizational level. How are these providers organized, and can we work together to improve patient outcomes?”

“I’m not suggesting that we get rid of all closed ICUs because I don’t think that’s the solution,” Dr. Costa maintained. “I think from an administrative perspective, we need to be considering what’s the organization of these clinicians and this unit, and [in a context-specific manner], how can we improve it for better patient outcomes? That may be both working on improving the work environment and making the nurses feel more empowered, or it could be potentially considering other staffing models.”

Some data have already linked a more favorable nurse work environment and the presence of a board-certified critical care physician independently with better patient outcomes in the ICU. But studies of their joint impact are lacking.

The investigators performed a secondary, unit-level analysis of nurse survey data collected during 2005 and 2006 in ICUs in southern Michigan.

In all, 462 nurses working in 25 ICUs completed the Practice Environment Scale of the Nursing Work Index, on which averaged summary scores range between 1 (unfavorable) and 4 (favorable). The scale captures environmental factors such as the adequacy of resources for nurses, support from their managers, and their level of involvement in hospital policy decisions.

The rate of VAP during the same period was assessed using data from more than 1,000 patients from each ICU.

The summary nurse work environment score averaged 2.69 points in the 21 ICUs that had a closed physician staffing model and 2.62 points in the 4 ICUs that had an open physician staffing model. The respective rates of VAP were 7.5% and 2.5%.

In adjusted analysis among all 25 ICUs, each 1-point increase in an ICU’s Practice Environment Scale score was associated with a sharply higher rate of VAP on the unit (adjusted incidence rate ratio, 5.76; P = .02).

However, there was a strong interaction between the score and physician staffing model (P less than .001). In open ICUs, as the score rose, the rate of VAP fell (from about 16% to 5%), whereas in closed ICUs, as the score rose, so did the rate of VAP (from about 3% to 14%).

Dr. Costa disclosed that she had no relevant conflicts of interest. The parent survey was funded by the Blue Cross Blue Shield Foundation of Michigan.

AT ATS 2016

Key clinical point: The impact of nurse work environment on risk of VAP in the ICU depends on the unit’s physician staffing model.

Major finding: A better nurse work environment was associated with a higher rate of VAP overall (incidence rate ratio, 5.76). However, the direction of the association depended on staffing: it was positive in closed units and negative in open units.

Data source: A cohort study of 25 ICUs, 462 nurses, and more than 25,000 patients in southern Michigan between 2005 and 2006.

Disclosures: Dr. Costa disclosed that she had no relevant conflicts of interest. The parent study was funded by the Blue Cross Blue Shield Foundation of Michigan.

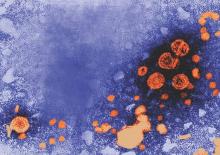

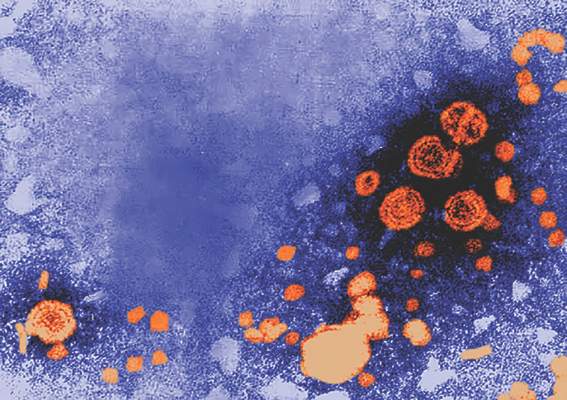

HBV/HIV coinfection a significant risk factor for inpatient mortality

Patients coinfected with hepatitis B virus (HBV) and HIV are at greater risk for in-hospital mortality than are patients with HBV monoinfection, particularly in liver-related admissions, according to a study in the Journal of Viral Hepatitis.

Researchers at Massachusetts General Hospital, Boston, used ICD-9-CM codes to identify patients in the 2011 U.S. Nationwide Inpatient Sample who had been hospitalized with HBV or HIV monoinfection or with HBV/HIV coinfection. A total of 72,584 discharges with HBV monoinfection, 133,880 discharges with HIV monoinfection, and 8,156 discharges with HBV/HIV coinfection were included. The researchers then compared liver-related hospital admissions among the three groups and performed multivariable logistic regression to identify independent predictors of in-hospital mortality, length of stay, and total charges.

According to Raymond T. Chung, MD, director of hepatology at Massachusetts General Hospital, and his coauthors, this study is the first to examine outcomes of HBV/HIV coinfection among hospitalized patients, a “group that represents those with advanced disease and vulnerable to poor outcomes and high health care utilization.” (J Viral Hepat. 2016 Jun 13. doi: 10.1111/jvh.12555)

Patients in the study with HBV monoinfection tended to be older than those with HIV monoinfection or HBV/HIV coinfection. The proportion of patients aged 51-65 years was 42% in the HBV monoinfection group, 34% in the HIV monoinfection group, and 31% in the HBV/HIV coinfection group (P less than .001). Males were overrepresented in the HBV/HIV coinfection group (77%), compared with the HBV monoinfection (57%) and HIV monoinfection (67%) groups. Additionally, the investigators found that patients with HBV monoinfection were more likely to be white (42%) than were those with HIV monoinfection (26%) or HBV/HIV coinfection (27%).

Dr. Chung and his colleagues found that HBV/HIV coinfection was associated with significantly higher adjusted in-hospital mortality, compared with patients with HBV monoinfection (adjusted odds ratio, 1.67; 95% confidence interval, 1.30-2.15), but not compared with HIV monoinfection (aOR, 1.22; 95% CI, 0.96-1.54).

“Interestingly, [the] increase in risk of mortality was primarily observed in liver-related admissions … and not infectious-related hospitalizations,” Dr. Chung and his coauthors said.

The overall adjusted hospital length of stay (LOS; +1.53 days; 95% CI, 0.93-2.13; P less than .001) and total hospitalization charges (+$17,595; 95% CI, 11,120-24,069; P less than .0001) were higher in the coinfected group than in the HBV monoinfection group, even after adjustment for comorbidity- and disease-related complications, the authors wrote.

While HBV/HIV coinfection by itself was not associated with higher in-hospital mortality than HIV monoinfection, the presence of HBV along with cirrhosis or complications of portal hypertension was associated with three times greater in-hospital mortality in patients with HIV, compared with those without such complications (odds ratio, 3.00; 95% CI, 1.80-5.02). Researchers also found that LOS (+0.62 days; 95% CI, 0.05-1.20; P = .034) and total hospitalization charges (+$8,840; 95% CI, 2,604-15,077; P = .005) were higher in patients with HBV/HIV coinfection than in those with HIV monoinfection.

“Overall health care utilization from HBV/HIV coinfection is … higher than for either infection alone and higher than the national average for all hospitalizations, emphasizing the health care burden from these illnesses,” the authors concluded.

Three study coauthors were supported in part by grants from the National Institutes of Health, while one coauthor was supported by a career development award from the American Gastroenterological Association and by the National Institute of Diabetes and Digestive and Kidney Diseases. Dr. Chung reported no financial conflicts of interest. One coauthor reported participation on scientific advisory boards of AbbVie and Cubist Pharmaceuticals.

On Twitter @richpizzi

FROM THE JOURNAL OF VIRAL HEPATITIS

Key clinical point: Patients coinfected with HBV and HIV are at greater risk for in-hospital mortality, particularly in liver-related admissions, compared with HBV monoinfection.

Major finding: HBV/HIV coinfection was associated with significantly higher adjusted in-hospital mortality, compared with patients with HBV monoinfection, but not when compared with HIV monoinfection.

Data source: Comparison study of patient discharges from the 2011 U.S. Nationwide Inpatient Sample: 72,584 discharges with HBV monoinfection, 133,880 discharges with HIV monoinfection, and 8,156 discharges with HBV/HIV coinfection.

Disclosures: Three study coauthors were supported in part by grants from the National Institutes of Health, while one coauthor was supported by a career development award from the American Gastroenterological Association and by the National Institute of Diabetes and Digestive and Kidney Diseases. Dr. Chung reported no financial conflicts of interest. One coauthor reported participation on scientific advisory boards of AbbVie and Cubist Pharmaceuticals.

Tool kit improves communication after an adverse event

The Communication and Optimal Resolution tool kit, offered by the Agency for Healthcare Research and Quality, helps hospitals, health systems, and clinicians respond to patients who are harmed by the care they receive, Andy Bindman, MD, AHRQ director, said in a blog post.

The tool kit is a new addition to AHRQ’s suite of patient safety tools and training materials.

Poor communication can lead to life-and-death mistakes, which can then become legal issues, said Dr. Bindman. The Communication and Optimal Resolution (CANDOR) tool kit treats timing as a key factor, encouraging clinicians to disclose harm to patients and families as soon as it happens.

“It has been estimated that medical errors are the third-leading cause of death in the United States and that the majority of clinicians have experience with a medical error that resulted in harm to a patient. Often these ‘mistakes’ are not the result of poorly trained individuals but the result of the faulty systems we sometimes work in,” Dr. Bindman noted.

“It is also important for us to engage with colleagues to reflect on the mistake and explore the root causes of how it happened. This helps us to learn from the situation and take steps to minimize the chances of a similar mistake happening again to another patient,” he said.

Funding for CANDOR was provided by the AHRQ’s $23 million Patient Safety and Medical Liability grant initiative, launched in 2009. The initiative is the largest federal investment in research linking improved patient safety to reduced medical liability, according to Dr. Bindman.