ACC 21 looks to repeat success despite pandemic headwinds

The American College of Cardiology pulled off an impressive all-virtual meeting in March 2020, less than 3 weeks after canceling its in-person event and just 2 weeks after COVID-19 was declared a national emergency.

Optimistic plans for the annual scientific sessions of the American College of Cardiology (ACC 2021) to be a March hybrid affair in Atlanta pivoted not once, but twice, as the pandemic evolved, with the date pushed back 2 full months, to May 15-17, and the format revised to fully virtual.

“While this meeting is being delivered virtually, I think you’ll see there have been benefits in the time to plan and also the lessons that ACC has learned in virtual education over the past year. This has come together to really create a robust educational and scientific agenda,” ACC 2021 chair Pamela B. Morris, MD, said in a press conference focused on the upcoming meeting.

Over the 3 days, there will be more than 200 education sessions, 10 guideline-specific sessions, and 11 learning pathways that include core areas, but also special topics, such as COVID-19 and the emerging cardio-obstetrics subspecialty.

The meeting will be delivered through a new virtual education program built to optimize real-time interaction between faculty members and attendees, she said. A dedicated portal on the platform will allow attendees to interact virtually, for example, with presenters of the nearly 3,000 ePosters and 420 moderated posters.

For those suffering from Zoom fatigue, the increasingly popular Heart2Heart stage talks have also been converted to podcasts, which cover topics like gender equity in cardiology, the evolving role of advanced practice professionals, and “one of my favorites: art as a tool for healing,” said Dr. Morris, from the Medical University of South Carolina, Charleston. “Those sessions are really not to be missed.”

Reconnecting is an underlying theme of the meeting but the great divider will not be ignored. COVID-19 will be the focus of two 90-minute Intensive Sessions on Saturday, May 15, the first kicking off at 10:30 a.m. ET, with the Bishop Keynote lecture on bringing health equity to the frontline of cardiovascular care, followed by lessons learned during the pandemic, how to conduct clinical trials, and vaccine development.

The second session, set for 12:15 p.m., continues the “silver linings” theme, with case presentations on advances in telehealth, myocardial involvement, and thrombosis in COVID. For those wanting more, 18 abstracts are on tap in a 2-hour Spotlight on Special Topics session beginning at 2:30 p.m.

Asked about the pandemic’s effect on bringing science to fruition this past year, Dr. Morris said there’s no question it’s slowed some of the progress the cardiology community had made but, like clinical practice, “we’ve also surmounted many of those obstacles.”

“I think research has rebounded,” she said. “Just in terms of the number of abstracts and the quality of abstracts that were submitted this year, I don’t think there’s any question that we are right on par with previous years.”

Indeed, 5,258 abstracts from 76 countries were submitted, with more than 3,400 chosen for oral and poster presentation, including 25 late-breaking clinical trials to be presented in five sessions.

The late-breaking presentations and discussions will be prerecorded, but speakers and panelists have been invited to be present during the streaming to answer any questions from the chat box live, ACC 2021 vice chair Douglas Drachman, MD, Massachusetts General Hospital, Boston, said in an interview.

Late-breaking clinical trials

The Joint ACC/JACC Late-Breaking Clinical Trials I (Saturday, May 15, 9:00 a.m.-10:00 a.m.) kicks off with PARADISE-MI, the first head-to-head comparison of an angiotensin receptor neprilysin inhibitor (ARNI) and an ACE inhibitor in patients with reduced ejection fractions (EFs) after MI but no history of heart failure (HF), studying 200 mg sacubitril/valsartan (Entresto) versus 5 mg of ramipril, both twice daily, in 5,669 patients.

Sacubitril/valsartan was initially approved for HF with reduced EF and added a new indication to treat some HF patients with preserved EF. Novartis, however, recently told investors that although numerical trends consistently favored the ARNI over the ACE inhibitor ramipril, the phase 3 study failed to meet the primary endpoint for efficacy superiority of reducing the risk for cardiovascular (CV) death and HF events after an acute MI.

Second up is ADAPTABLE, which looks to close a surprising evidence gap over whether 81 mg or 325 mg daily is the optimal dose of the ubiquitously prescribed aspirin for secondary prevention in high-risk patients with established atherosclerotic CV disease.

The open-label, randomized study will look at efficacy and major bleeding over roughly 4 years in 15,000 patients within PCORnet, the National Patient-centered Clinical Research Network, a partnership of clinical research, health plan research, and patient-powered networks created to streamline patient-reported outcomes research.

“This study will not only give important clinical information for us, practically speaking, whether we should prescribe lower- or higher-dose aspirin, but it may also serve as a template for future pragmatic clinical trial design in the real world,” Dr. Drachman said during the press conference.

Up next is the 4,812-patient Canadian LAAOS III, the largest trial to examine the efficacy of left atrial appendage occlusion for stroke prevention in patients with atrial fibrillation (AFib) already undergoing cardiac surgery. The primary outcome is the first occurrence of stroke or systemic arterial embolism over an average follow-up of 4 years.

Percutaneous closure of the left atrial appendage (LAA) has been shown to reduce stroke in AFib patients at high risk of bleeding on systemic anticoagulation. But these devices can be expensive and studies haven’t included patients who also have valvular heart disease, a group that actually comprises more than half of patients undergoing cardiac surgery who also have AFib, he noted.

At the same time, surgical LAA closure studies have been small and have had very mixed results. “There isn’t a large-scale rigorous assessment out there for these patients undergoing surgery, so I think this is going to be fascinating to see,” Dr. Drachman said.

The session closes with ATLANTIS, which looks to shed some light on the role of anticoagulation therapy in patients after transcatheter aortic valve replacement (TAVR or TAVI). POPular TAVI, presented at ACC 2020, showed aspirin alone was the preferred antithrombotic therapy over aspirin plus clopidogrel (Plavix) in patients not on oral anticoagulants, but the optimal anticoagulation regimen remains unsettled.

The French open-label, 1,510-patient ATLANTIS trial examined whether the novel oral anticoagulant apixaban (Eliquis) is superior in preventing CV events after TAVR, compared with antiplatelet therapy in patients without an indication for anticoagulation and compared with vitamin K antagonists in those receiving anticoagulants.

An ATLANTIS 4D CT substudy of valve thrombosis is also slated for Saturday’s Featured Clinical Research 1 session, from 12:15 p.m. to 1:45 p.m.

Sunday LBCTs

Dr. Drachman highlighted a series of other late-breaking studies, including the global DARE-19 trial testing the diabetes and HF drug dapagliflozin (Farxiga) given with local standard-of-care therapy for 30 days in hospitalized COVID-19 patients with CV, metabolic, or renal risk factors.

Although sodium-glucose cotransporter-2 inhibitors have been white-hot of late, top-line results reported last month show dapagliflozin failed to achieve statistical significance for the primary endpoints of reducing organ dysfunction and all-cause mortality and for improving recovery. Details will be presented in the Joint ACC/JAMA Late-Breaking Clinical Trials II (Sunday, May 16, 8:00 a.m.-9:30 a.m.).

Two trials, FLOWER-MI and RADIANCE-HTN TRIO, were singled out in the Joint ACC/New England Journal of Medicine Late-Breaking Clinical Trials III (Sunday, May 16, 10:45 a.m.-12:00 p.m.). FLOWER-MI examines whether fractional flow reserve (FFR) is better than angiography to guide complete multivessel revascularization in ST-elevation MI patients with at least 50% stenosis in at least one nonculprit lesion requiring percutaneous coronary intervention (PCI). Recent studies have shown the superiority of FFR-guided PCI for nonculprit lesions, compared with culprit lesion treatment-only, but this is the first time FFR- and angiography-guided PCI have been compared in STEMI patients.

RADIANCE-HTN TRIO already tipped its hand, with top-line results reported in late 2020 showing that the trial met its primary efficacy endpoint of greater reduction in daytime blood pressure over 2 months with the Paradise endovascular ultrasound renal denervation system, compared with a sham procedure, in 136 patients with resistant hypertension who, importantly, had been given a single pill containing a calcium channel blocker, angiotensin II receptor blocker, and diuretic.

Renal denervation for hypertension has been making something of a comeback, with the 2018 RADIANCE-HTN SOLO reporting better ambulatory blood pressure control with the Paradise system than with a sham procedure in the absence of antihypertensive agents. The device has been granted breakthrough device designation from the Food and Drug Administration for the treatment of hypertensive patients who are unable to sufficiently respond to or are intolerant of antihypertensive therapy.

Monday LBCTs

In the Late-Breaking Clinical Trials IV session (Monday, May 17, 8 a.m.–9:30 a.m.), Dr. Drachman called out a secondary analysis from GALACTIC-HF looking at the impact of EF on the therapeutic effect of omecamtiv mecarbil. In last year’s primary analysis, the selective cardiac myosin activator produced a modest but significant reduction in HF events or CV death in 8,232 patients with HF and an EF of 35% or less.

Rounding out the list is the Canadian CAPITAL CHILL study of moderate versus mild therapeutic hypothermia in out-of-hospital cardiac arrest, to be presented in the final Late-Breaking Clinical Trials V session (Monday, May 17, 10:45 a.m.–12:00 p.m.).

The double-blind trial sought to determine whether neurologic outcomes at 6 months are improved by targeting a core temperature of 31 °C versus 34 °C after the return of spontaneous circulation in comatose survivors of out-of-hospital cardiac arrest.

“For me, I think this could really change practice and has personal relevance from experience with cardiac arrest survivors that I’ve known and care for very deeply,” Dr. Drachman said in an interview. “I think that there’s a lot of opportunity here as well.”

Asked what other trials have the potential to change practice, Dr. Drachman said FLOWER-MI holds particular interest because it looks at how to manage patients with STEMI with multiple lesions at the point of care.

“We’ve gained a lot of clarity from several other prior clinical trials, but this will help to answer the question in a slightly different way of saying: can you eyeball it, can you look at the angiogram and say whether or not that other, nonculprit lesion ought to be treated in the same hospitalization or should you really be using a pressure wire,” he said. “For me as an interventionalist, this is really important because when you finish up doing an intervention on a patient it might be the middle of the night and the patient may be more or less stable, but you’ve already exposed them to the risk of a procedure, should you then move on and do another aspect of the procedure to interrogate with a pressure wire a remaining narrowing? I think that’s very important; that’ll help me make decisions on a day-to-day basis.”

Dr. Drachman also cited RADIANCE-HTN TRIO because it employs an endovascular technique to control blood pressure in patients with hypertension, specifically those resistant to multiple drugs.

During the press conference, Dr. Morris, a preventive cardiologist, put her money on the ADAPTABLE study of aspirin dosing, reiterating that the unique trial design could inform future research, and on Sunday’s 8:45 a.m. late-breaking post hoc analysis from the STRENGTH trial that looks to pick up where the controversy over omega-3 fatty acid preparations left off at last year’s American Heart Association meeting.

A lack of benefit on CV event rates reported with Epanova, a high-dose combination of eicosapentaenoic acid (EPA) and docosahexaenoic acid, led to a contentious debate over how to reconcile STRENGTH with the findings from REDUCE-IT, which showed a 25% relative risk reduction in major CV events with the EPA product icosapent ethyl (Vascepa).

STRENGTH investigator Steven Nissen, MD, Cleveland Clinic, and REDUCE-IT investigator and session panelist Deepak Bhatt, MD, Brigham and Women’s Hospital, Boston, will share the virtual stage at ACC 2021, but Dr. Morris said the “good news” is both researchers know one another very well and “will really be focusing on no political issues, just the omega-3 fatty levels in the bloodstream and what does that mean in either trial.

“This is not designed to be a debate, point counterpoint,” she added.

For that, as all cardiologists and journalists know, there will be the wild and woolly #CardioTwitter sphere.

A version of this article first appeared on Medscape.com.

The American College of Cardiology pulled off an impressive all-virtual meeting in March 2020, less than 3 weeks after canceling its in-person event and just 2 weeks after COVID-19 was declared a national emergency.

Optimistic plans for the annual scientific sessions of the American College of Cardiology (ACC 2021) to be a March hybrid affair in Atlanta pivoted not once, but twice, as the pandemic evolved, with the date pushed back 2 full months, to May 15-17, and the format revised to fully virtual.

“While this meeting is being delivered virtually, I think you’ll see there have been benefits in the time to plan and also the lessons that ACC has learned in virtual education over the past year. This has come together to really create a robust educational and scientific agenda,” ACC 2021 chair Pamela B. Morris, MD, said in a press conference focused on the upcoming meeting.

Over the 3 days, there will be more than 200 education sessions, 10 guideline-specific sessions, and 11 learning pathways that include core areas, but also special topics, such as COVID-19 and the emerging cardio-obstetrics subspecialty.

The meeting will be delivered through a new virtual education program built to optimize real-time interaction between faculty members and attendees, she said. A dedicated portal on the platform will allow attendees to interact virtually, for example, with presenters of the nearly 3,000 ePosters and 420 moderated posters.

For those suffering from Zoom fatigue, the increasingly popular Heart2Heart stage talks have also been converted to podcasts, which cover topics like gender equity in cardiology, the evolving role of advanced practice professionals, and “one of my favorites: art as a tool for healing,” said Dr. Morris, from the Medical University of South Carolina, Charleston. “Those sessions are really not to be missed.”

Reconnecting is an underlying theme of the meeting but the great divider will not be ignored. COVID-19 will be the focus of two 90-minute Intensive Sessions on Saturday, May 15, the first kicking off at 10:30 a.m. ET, with the Bishop Keynote lecture on bringing health equity to the frontline of cardiovascular care, followed by lessons learned during the pandemic, how to conduct clinical trials, and vaccine development.

The second session, set for 12:15 p.m., continues the “silver linings” theme, with case presentations on advances in telehealth, myocardial involvement, and thrombosis in COVID. For those wanting more, 18 abstracts are on tap in a 2-hour Spotlight on Special Topics session beginning at 2:30 p.m.

Asked about the pandemic’s effect on bringing science to fruition this past year, Dr. Morris said there’s no question it’s slowed some of the progress the cardiology community had made but, like clinical practice, “we’ve also surmounted many of those obstacles.”

“I think research has rebounded,” she said. “Just in terms of the number of abstracts and the quality of abstracts that were submitted this year, I don’t think there’s any question that we are right on par with previous years.”

Indeed, 5,258 abstracts from 76 countries were submitted, with more than 3,400 chosen for oral and poster presentation, including 25 late-breaking clinical trials to be presented in five sessions.

The late-breaking presentations and discussions will be prerecorded but speakers and panelists have been invited to be present during the streaming to answer live any questions that may arise in the chat box, ACC 2021 vice chair Douglas Drachman, MD, Massachusetts General Hospital, Boston, said in an interview.

Late-breaking clinical trials

The Joint ACC/JACC Late-Breaking Clinical Trials I (Saturday, May 15, 9:00 a.m.–-10:00 a.m.) kicks off with PARADISE-MI, the first head-to-head comparison of an angiotensin receptor neprilysin inhibitor (ARNI) and an ACE inhibitor in patients with reduced ejection fractions (EFs) after MI but no history of heart failure (HF), studying 200 mg sacubitril/valsartan (Entresto) versus 5 mg of ramipril, both twice daily, in 5,669 patients.

Sacubitril/valsartan was initially approved for HF with reduced EF and added a new indication to treat some HF patients with preserved EF. Novartis, however, recently told investors that although numerical trends consistently favored the ARNI over the ACE inhibitor ramipril, the phase 3 study failed to meet the primary endpoint for efficacy superiority of reducing the risk for cardiovascular (CV) death and HF events after an acute MI.

Second up is ADAPTABLE, which looks to close a surprising evidence gap over whether 81 mg or 325 mg daily is the optimal dose of the ubiquitously prescribed aspirin for secondary prevention in high-risk patients with established atherosclerotic CV disease.

The open-label, randomized study will look at efficacy and major bleeding over roughly 4 years in 15,000 patients within PCORnet, the National Patient-centered Clinical Research Network, a partnership of clinical research, health plan research, and patient-powered networks created to streamline patient-reported outcomes research.

“This study will not only give important clinical information for us, practically speaking, whether we should prescribe lower- or higher-dose aspirin, but it may also serve as a template for future pragmatic clinical trial design in the real world,” Dr. Drachman said during the press conference.

Up next is the 4,812-patient Canadian LAAOS III, the largest trial to examine the efficacy of left atrial appendage occlusion for stroke prevention in patients with atrial fibrillation (AFib) already undergoing cardiac surgery. The primary outcome is the first occurrence of stroke or systemic arterial embolism over an average follow-up of 4 years.

Percutaneous closure of the left atrial appendage (LAA) has been shown to reduce stroke in AFib patients at high-risk of bleeding on systemic anticoagulation. But these devices can be expensive and studies haven’t included patients who also have valvular heart disease, a group that actually comprises more than half of patients undergoing cardiac surgery who also have AFib, he noted.

At the same time, surgical LAA closure studies have been small and have had very mixed results. “There isn’t a large-scale rigorous assessment out there for these patients undergoing surgery, so I think this is going to be fascinating to see,” Dr. Drachman said.

The session closes with ATLANTIS, which looks to shed some light on the role of anticoagulation therapy in patients after transcatheter aortic valve replacement (TAVR or TAVI). POPular TAVI, presented at ACC 2020, showed aspirin alone was the preferred antithrombotic therapy over aspirin plus clopidogrel (Plavix) in patients not on oral anticoagulants, but the optimal anticoagulation regimen remains unsettled.

The French open-label, 1,510-patient ATLANTIS trial examined whether the novel oral anticoagulant apixaban (Eliquis) is superior in preventing CV events after TAVR, compared with antiplatelet therapy in patients without an indication for anticoagulation and compared with vitamin K antagonists in those receiving anticoagulants.

An ATLANTIS 4D CT substudy of valve thrombosis is also slated for Saturday’s Featured Clinical Research 1 session at 12:15 p.m. to 1:45 p.m..

Sunday LBCTs

Dr. Drachman highlighted a series of other late-breaking studies, including the global DARE-19 trial testing the diabetes and HF drug dapagliflozin (Farxiga) given with local standard-of-care therapy for 30 days in hospitalized COVID-19 patients with CV, metabolic, or renal risk factors.

Although sodium-glucose cotransporter-2 inhibitors have been white-hot of late, top-line results reported last month show dapagliflozin failed to achieve statistical significance for the primary endpoints of reducing organ dysfunction and all-cause mortality and for improving recovery. Details will be presented in the Joint ACC/JAMA Late-Breaking Clinical Trials II (Sunday, May 16, 8:00 a.m.-9:30 a.m.).

Two trials, FLOWER-MI and RADIANCE-HTN TRIO, were singled out in the Joint ACC/New England Journal of Medicine Late-Breaking Clinical Trials III (Sunday, May 16, 10:45 a.m.-12:00 p.m.). FLOWER-MI examines whether fractional flow reserve (FFR) is better than angiography to guide complete multivessel revascularization in ST-elevation MI patients with at least 50% stenosis in at least one nonculprit lesion requiring percutaneous coronary intervention (PCI). Recent studies have shown the superiority of FFR-guided PCI for nonculprit lesions, compared with culprit lesion treatment-only, but this is the first time FFR- and angiography-guided PCI have been compared in STEMI patients.

RADIANCE-HTN TRIO already tipped its hand, with top-line results reported in late 2020 showing that the trial met its primary efficacy endpoint of greater reduction in daytime blood pressure over 2 months with the Paradise endovascular ultrasound renal denervation system, compared with a sham procedure, in 136 patients with resistant hypertension, importantly, after being given a single pill containing a calcium channel blocker, angiotensin II receptor blocker, and diuretic.

Renal denervation for hypertension has been making something of a comeback, with the 2018 RADIANCE-HTN SOLO reporting better ambulatory blood pressure control with the Paradise system than with a sham procedure in the absence of antihypertensive agents. The device has been granted breakthrough device designation from the Food and Drug Administration for the treatment of hypertensive patients who are unable to sufficiently respond to or are intolerant of antihypertensive therapy.

Monday LBCTs

In the Late-Breaking Clinical Trials IV session (Monday, May 17, 8 a.m.–9:30 a.m.), Drachman called out a secondary analysis from GALATIC-HF looking at the impact of EF on the therapeutic effect of omecamtiv mecarbil. In last year’s primary analysis, the selective cardiac myosin activator produced a modest but significant reduction in HF events or CV death in 8,232 patients with HF and an EF of 35% or less.

Rounding out the list is the Canadian CAPITAL CHILL study of moderate versus mild therapeutic hypothermia in out-of-hospital cardiac arrest, to be presented in the final Late-Breaking Clinical Trials V session (Monday, May 17, 10:45 a.m.–12:00 p.m.).

The double-blind trial sought to determine whether neurologic outcomes at 6 months are improved by targeting a core temperature of 31 ˚C versus 34 ˚C after the return of spontaneous circulation in comatose survivors of out-of-hospital cardiac arrest.

“For me, I think this could really change practice and has personal relevance from experience with cardiac arrest survivors that I’ve known and care for very deeply,” Dr. Drachman said in an interview. “I think that there’s a lot of opportunity here as well.”

Asked what other trials have the potential to change practice, Dr. Drachman said FLOWER-MI holds particular interest because it looks at how to manage patients with STEMI with multiple lesions at the point of care.

“We’ve gained a lot of clarity from several other prior clinical trials, but this will help to answer the question in a slightly different way of saying: can you eyeball it, can you look at the angiogram and say whether or not that other, nonculprit lesion ought to be treated in the same hospitalization or should you really be using a pressure wire,” he said. “For me as an interventionalist, this is really important because when you finish up doing an intervention on a patient it might be the middle of the night and the patient may be more or less stable, but you’ve already exposed them to the risk of a procedure, should you then move on and do another aspect of the procedure to interrogate with a pressure wire a remaining narrowing? I think that’s very important; that’ll help me make decisions on a day-to-day basis.”

Dr. Drachman also cited RADIANCE-HTN TRIO because it employs an endovascular technique to control blood pressure in patients with hypertension, specifically those resistant to multiple drugs.

During the press conference, Dr. Morris, a preventive cardiologist, put her money on the ADAPTABLE study of aspirin dosing, reiterating that the unique trial design could inform future research, and on Sunday’s 8:45 a.m. late-breaking post hoc analysis from the STRENGTH trial that looks to pick up where the controversy over omega-3 fatty acid preparations left off at last year’s American Heart Association meeting.

A lack of benefit on CV event rates reported with Epanova, a high-dose combination of eicosapentaenoic acid (EPA) and docosahexaenoic acid, led to a contentious debate over how to reconcile STRENGTH with the findings from REDUCE-IT, which showed a 25% relative risk reduction in major CV events with the EPA product icosapent ethyl (Vascepa).

STRENGTH investigator Steven Nissen, MD, Cleveland Clinic, and REDUCE-IT investigator and session panelist Deepak Bhatt, MD, Brigham and Women’s Hospital, Boston, will share the virtual stage at ACC 2021, but Dr. Morris said the “good news” is both researchers know one another very well and “will really be focusing on no political issues, just the omega-3 fatty levels in the bloodstream and what does that mean in either trial.

“This is not designed to be a debate, point counterpoint,” she added.

For that, as all cardiologists and journalists know, there will be the wild and woolly #CardioTwitter sphere.

A version of this article first appeared on Medscape.com.

The American College of Cardiology pulled off an impressive all-virtual meeting in March 2020, less than 3 weeks after canceling its in-person event and just 2 weeks after COVID-19 was declared a national emergency.

Optimistic plans for the annual scientific sessions of the American College of Cardiology (ACC 2021) to be a March hybrid affair in Atlanta pivoted not once, but twice, as the pandemic evolved, with the date pushed back 2 full months, to May 15-17, and the format revised to fully virtual.

“While this meeting is being delivered virtually, I think you’ll see there have been benefits in the time to plan and also the lessons that ACC has learned in virtual education over the past year. This has come together to really create a robust educational and scientific agenda,” ACC 2021 chair Pamela B. Morris, MD, said in a press conference focused on the upcoming meeting.

Over the 3 days, there will be more than 200 education sessions, 10 guideline-specific sessions, and 11 learning pathways that include core areas, but also special topics, such as COVID-19 and the emerging cardio-obstetrics subspecialty.

The meeting will be delivered through a new virtual education program built to optimize real-time interaction between faculty members and attendees, she said. A dedicated portal on the platform will allow attendees to interact virtually, for example, with presenters of the nearly 3,000 ePosters and 420 moderated posters.

For those suffering from Zoom fatigue, the increasingly popular Heart2Heart stage talks have also been converted to podcasts, which cover topics like gender equity in cardiology, the evolving role of advanced practice professionals, and “one of my favorites: art as a tool for healing,” said Dr. Morris, from the Medical University of South Carolina, Charleston. “Those sessions are really not to be missed.”

Reconnecting is an underlying theme of the meeting but the great divider will not be ignored. COVID-19 will be the focus of two 90-minute Intensive Sessions on Saturday, May 15, the first kicking off at 10:30 a.m. ET, with the Bishop Keynote lecture on bringing health equity to the frontline of cardiovascular care, followed by lessons learned during the pandemic, how to conduct clinical trials, and vaccine development.

The second session, set for 12:15 p.m., continues the “silver linings” theme, with case presentations on advances in telehealth, myocardial involvement, and thrombosis in COVID. For those wanting more, 18 abstracts are on tap in a 2-hour Spotlight on Special Topics session beginning at 2:30 p.m.

Asked about the pandemic’s effect on bringing science to fruition this past year, Dr. Morris said there’s no question it’s slowed some of the progress the cardiology community had made but, like clinical practice, “we’ve also surmounted many of those obstacles.”

“I think research has rebounded,” she said. “Just in terms of the number of abstracts and the quality of abstracts that were submitted this year, I don’t think there’s any question that we are right on par with previous years.”

Indeed, 5,258 abstracts from 76 countries were submitted, with more than 3,400 chosen for oral and poster presentation, including 25 late-breaking clinical trials to be presented in five sessions.

The late-breaking presentations and discussions will be prerecorded but speakers and panelists have been invited to be present during the streaming to answer live any questions that may arise in the chat box, ACC 2021 vice chair Douglas Drachman, MD, Massachusetts General Hospital, Boston, said in an interview.

Late-breaking clinical trials

The Joint ACC/JACC Late-Breaking Clinical Trials I (Saturday, May 15, 9:00 a.m.–-10:00 a.m.) kicks off with PARADISE-MI, the first head-to-head comparison of an angiotensin receptor neprilysin inhibitor (ARNI) and an ACE inhibitor in patients with reduced ejection fractions (EFs) after MI but no history of heart failure (HF), studying 200 mg sacubitril/valsartan (Entresto) versus 5 mg of ramipril, both twice daily, in 5,669 patients.

Sacubitril/valsartan was initially approved for HF with reduced EF and added a new indication to treat some HF patients with preserved EF. Novartis, however, recently told investors that although numerical trends consistently favored the ARNI over the ACE inhibitor ramipril, the phase 3 study failed to meet the primary endpoint for efficacy superiority of reducing the risk for cardiovascular (CV) death and HF events after an acute MI.

Second up is ADAPTABLE, which looks to close a surprising evidence gap over whether 81 mg or 325 mg daily is the optimal dose of the ubiquitously prescribed aspirin for secondary prevention in high-risk patients with established atherosclerotic CV disease.

The open-label, randomized study will look at efficacy and major bleeding over roughly 4 years in 15,000 patients within PCORnet, the National Patient-centered Clinical Research Network, a partnership of clinical research, health plan research, and patient-powered networks created to streamline patient-reported outcomes research.

“This study will not only give important clinical information for us, practically speaking, whether we should prescribe lower- or higher-dose aspirin, but it may also serve as a template for future pragmatic clinical trial design in the real world,” Dr. Drachman said during the press conference.

Up next is the 4,812-patient Canadian LAAOS III, the largest trial to examine the efficacy of left atrial appendage occlusion for stroke prevention in patients with atrial fibrillation (AFib) already undergoing cardiac surgery. The primary outcome is the first occurrence of stroke or systemic arterial embolism over an average follow-up of 4 years.

Percutaneous closure of the left atrial appendage (LAA) has been shown to reduce stroke in AFib patients at high risk of bleeding on systemic anticoagulation. But these devices can be expensive and studies haven’t included patients who also have valvular heart disease, a group that actually comprises more than half of patients undergoing cardiac surgery who also have AFib, he noted.

At the same time, surgical LAA closure studies have been small and have had very mixed results. “There isn’t a large-scale rigorous assessment out there for these patients undergoing surgery, so I think this is going to be fascinating to see,” Dr. Drachman said.

The session closes with ATLANTIS, which looks to shed some light on the role of anticoagulation therapy in patients after transcatheter aortic valve replacement (TAVR or TAVI). POPular TAVI, presented at ACC 2020, showed aspirin alone was the preferred antithrombotic therapy over aspirin plus clopidogrel (Plavix) in patients not on oral anticoagulants, but the optimal anticoagulation regimen remains unsettled.

The French open-label, 1,510-patient ATLANTIS trial examined whether the novel oral anticoagulant apixaban (Eliquis) is superior in preventing CV events after TAVR, compared with antiplatelet therapy in patients without an indication for anticoagulation and compared with vitamin K antagonists in those receiving anticoagulants.

An ATLANTIS 4D CT substudy of valve thrombosis is also slated for Saturday’s Featured Clinical Research 1 session from 12:15 p.m. to 1:45 p.m.

Sunday LBCTs

Dr. Drachman highlighted a series of other late-breaking studies, including the global DARE-19 trial testing the diabetes and HF drug dapagliflozin (Farxiga) given with local standard-of-care therapy for 30 days in hospitalized COVID-19 patients with CV, metabolic, or renal risk factors.

Although sodium-glucose cotransporter-2 inhibitors have been white-hot of late, top-line results reported last month show dapagliflozin failed to achieve statistical significance for the primary endpoints of reducing organ dysfunction and all-cause mortality and for improving recovery. Details will be presented in the Joint ACC/JAMA Late-Breaking Clinical Trials II (Sunday, May 16, 8:00 a.m.-9:30 a.m.).

Two trials, FLOWER-MI and RADIANCE-HTN TRIO, were singled out in the Joint ACC/New England Journal of Medicine Late-Breaking Clinical Trials III (Sunday, May 16, 10:45 a.m.-12:00 p.m.). FLOWER-MI examines whether fractional flow reserve (FFR) is better than angiography to guide complete multivessel revascularization in ST-elevation MI patients with at least 50% stenosis in at least one nonculprit lesion requiring percutaneous coronary intervention (PCI). Recent studies have shown the superiority of FFR-guided PCI for nonculprit lesions, compared with culprit lesion treatment-only, but this is the first time FFR- and angiography-guided PCI have been compared in STEMI patients.

RADIANCE-HTN TRIO already tipped its hand, with top-line results reported in late 2020 showing that the trial met its primary efficacy endpoint of greater reduction in daytime blood pressure over 2 months with the Paradise endovascular ultrasound renal denervation system, compared with a sham procedure. The trial enrolled 136 patients whose hypertension remained resistant, importantly, after they were given a single pill containing a calcium channel blocker, angiotensin II receptor blocker, and diuretic.

Renal denervation for hypertension has been making something of a comeback, with the 2018 RADIANCE-HTN SOLO reporting better ambulatory blood pressure control with the Paradise system than with a sham procedure in the absence of antihypertensive agents. The device has been granted breakthrough device designation from the Food and Drug Administration for the treatment of hypertensive patients who are unable to sufficiently respond to or are intolerant of antihypertensive therapy.

Monday LBCTs

In the Late-Breaking Clinical Trials IV session (Monday, May 17, 8 a.m.–9:30 a.m.), Dr. Drachman called out a secondary analysis from GALACTIC-HF looking at the impact of EF on the therapeutic effect of omecamtiv mecarbil. In last year’s primary analysis, the selective cardiac myosin activator produced a modest but significant reduction in HF events or CV death in 8,232 patients with HF and an EF of 35% or less.

Rounding out the list is the Canadian CAPITAL CHILL study of moderate versus mild therapeutic hypothermia in out-of-hospital cardiac arrest, to be presented in the final Late-Breaking Clinical Trials V session (Monday, May 17, 10:45 a.m.–12:00 p.m.).

The double-blind trial sought to determine whether neurologic outcomes at 6 months are improved by targeting a core temperature of 31 ˚C versus 34 ˚C after the return of spontaneous circulation in comatose survivors of out-of-hospital cardiac arrest.

“For me, I think this could really change practice and has personal relevance from experience with cardiac arrest survivors that I’ve known and care for very deeply,” Dr. Drachman said in an interview. “I think that there’s a lot of opportunity here as well.”

Asked what other trials have the potential to change practice, Dr. Drachman said FLOWER-MI holds particular interest because it looks at how to manage patients with STEMI with multiple lesions at the point of care.

“We’ve gained a lot of clarity from several other prior clinical trials, but this will help to answer the question in a slightly different way of saying: can you eyeball it, can you look at the angiogram and say whether or not that other, nonculprit lesion ought to be treated in the same hospitalization or should you really be using a pressure wire,” he said. “For me as an interventionalist, this is really important because when you finish up doing an intervention on a patient it might be the middle of the night and the patient may be more or less stable, but you’ve already exposed them to the risk of a procedure, should you then move on and do another aspect of the procedure to interrogate with a pressure wire a remaining narrowing? I think that’s very important; that’ll help me make decisions on a day-to-day basis.”

Dr. Drachman also cited RADIANCE-HTN TRIO because it employs an endovascular technique to control blood pressure in patients with hypertension, specifically those resistant to multiple drugs.

During the press conference, Dr. Morris, a preventive cardiologist, put her money on the ADAPTABLE study of aspirin dosing, reiterating that the unique trial design could inform future research, and on Sunday’s 8:45 a.m. late-breaking post hoc analysis from the STRENGTH trial that looks to pick up where the controversy over omega-3 fatty acid preparations left off at last year’s American Heart Association meeting.

A lack of benefit on CV event rates reported with Epanova, a high-dose combination of eicosapentaenoic acid (EPA) and docosahexaenoic acid, led to a contentious debate over how to reconcile STRENGTH with the findings from REDUCE-IT, which showed a 25% relative risk reduction in major CV events with the EPA product icosapent ethyl (Vascepa).

STRENGTH investigator Steven Nissen, MD, Cleveland Clinic, and REDUCE-IT investigator and session panelist Deepak Bhatt, MD, Brigham and Women’s Hospital, Boston, will share the virtual stage at ACC 2021, but Dr. Morris said the “good news” is both researchers know one another very well and “will really be focusing on no political issues, just the omega-3 fatty levels in the bloodstream and what does that mean in either trial.

“This is not designed to be a debate, point counterpoint,” she added.

For that, as all cardiologists and journalists know, there will be the wild and woolly #CardioTwitter sphere.

A version of this article first appeared on Medscape.com.

Canned diabetes prevention and a haunted COVID castle

Lower blood sugar with sardines

If you’ve ever turned your nose up at someone eating sardines straight from the can, you could be the one missing out on a good way to boost your own health.

New research from Open University of Catalonia (Spain) has found that eating two cans of whole sardines a week can help prevent people from developing type 2 diabetes (T2D). Now you might be thinking: That’s a lot of fish, can’t I just take a supplement pill? Actually, no.

“Nutrients can play an essential role in the prevention and treatment of many different pathologies, but their effect is usually caused by the synergy that exists between them and the food that they are contained in,” study coauthor Diana Rizzolo, PhD, said in a written statement. See, we told you.

In a study of 152 patients with prediabetes, each participant was put on a specific diet to reduce their chances of developing T2D. Among the patients who were not given sardines each week, the proportion considered to be at the highest risk fell from 27% to 22% after 1 year, but for those who did get the sardines, the size of the high-risk group shrank from 37% to just 8%.

Suggesting sardines during checkups could make eating them more widely accepted, Dr. Rizzolo and associates said. Sardines are cheap, easy to find, and also have the benefits of other oily fish, like lowering insulin resistance and increasing good cholesterol.

So why not have a can with a couple of saltine crackers for lunch? Your blood sugar will thank you. Just please avoid indulging on a plane or in your office, where workers are slowly returning – no need to give them another excuse to avoid their cubicle.

Come for the torture, stay for the vaccine

Bran Castle. Home of Dracula and Vlad the Impaler (at least in pop culture’s eyes). A moody Gothic structure atop a hill. You can practically hear the ancient screams of thousands of tortured souls as you wander the grounds and its cursed halls. Naturally, it’s a major tourist destination.

Unfortunately for Romania, the pandemic has rather put a damper on tourism. The restrictions have done their damage, but here’s a quick LOTME theory: Perhaps people don’t want to be reminded of medieval tortures when we’ve got plenty of modern-day ones right now.

The management of Bran Castle has developed a new gimmick to drum up attendance – come to Bran Castle and get your COVID vaccine. Anyone can come and get jabbed with the Pfizer vaccine on all weekends in May, and when they do, they gain free admittance to the castle and the exhibit within, home to 52 medieval torture instruments. “The idea … was to show how people got jabbed 500-600 years ago in Europe,” the castle’s marketing director said.

While it may not be the kind of jabbing ole Vladdy got his name for – fully impaling people on hundreds of wooden stakes while you eat a nice dinner isn’t exactly smiled upon in today’s world – we’re sure he’d approve of this more limited but ultimately beneficial version. Jabbing people while helping them really is the dream.

Fuzzy little COVID detectors

Before we get started, we need a moment to get our deep, movie trailer announcer-type voice ready. Okay, here goes.

“In a world where an organism too tiny to see brings entire economies to a standstill and pits scientists against doofuses, who can humanity turn to for help?”

How about bees? That’s right, we said bees. But not just any bees. Specially trained bees. Specially trained Dutch bees. Bees trained to sniff out our greatest nemesis. No, we’re not talking about Ted Cruz anymore. Let it go, that was just a joke. We’re talking COVID.

We’ll let Wim van der Poel, professor of virology at Wageningen (the Netherlands) University, explain the process: “We collect normal honeybees from a beekeeper, and we put the bees in harnesses.” And you thought their tulips were pretty great – the Dutch are putting harnesses on bees! (Which is much better than our previous story of bees involving a Taiwanese patient.)

The researchers presented the bees with two types of samples: COVID infected and non–COVID infected. The infected samples came with a sugary water reward and the noninfected samples did not, so the bees quickly learned to tell the difference.

The bees, then, could cut the waiting time for test results down to seconds, and at a fraction of the cost, making them an option in countries without a lot of testing infrastructure, the research team suggested.

The plan is not without its flaws, of course, but we’re convinced. More than that, we are true bee-lievers.

A little slice of … well, not heaven

If you’ve been around for the last 2 decades, you’ve seen your share of Internet trends: Remember the ice bucket challenge? Tide pod eating? We know what you’re thinking: Sigh, what could they be doing now?

Well, people are eating old meat, and before you think about the expired ground beef you got on special from the grocery store yesterday, that’s not quite what we mean. We all know expiration dates are “suggestions,” like yield signs and yellow lights. People are eating rotten, decomposing, borderline moldy meat.

They claim that the meat tastes better. We’re not so sure, but don’t worry, because it gets weirder. Some folks, apparently, are getting high from eating this meat, experiencing a feeling of euphoria. Personally, we think that rotten fumes probably knocked these people out and made them hallucinate.

Singaporean dietitian Naras Lapsys says that eating rotten meat can possibly cause a person to go into another state of consciousness, but it’s not a good thing. We don’t think you have to be a dietitian to know that.

It has not been definitively proven that eating rotting meat makes you high, but it’s definitely proven that this is disgusting … and very dangerous.

Subcutaneous, Mucocutaneous, and Mucous Membrane Tumors

The Diagnosis: Granular Cell Tumor

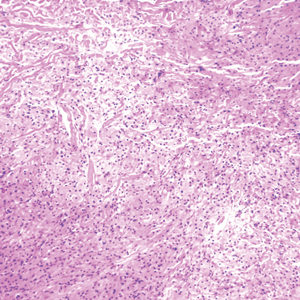

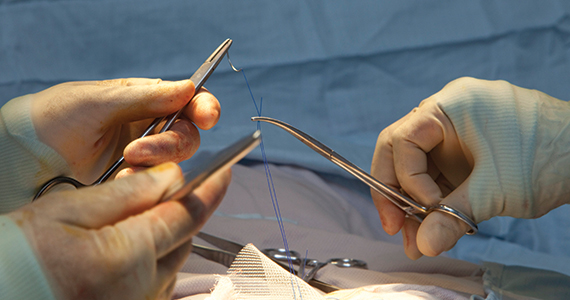

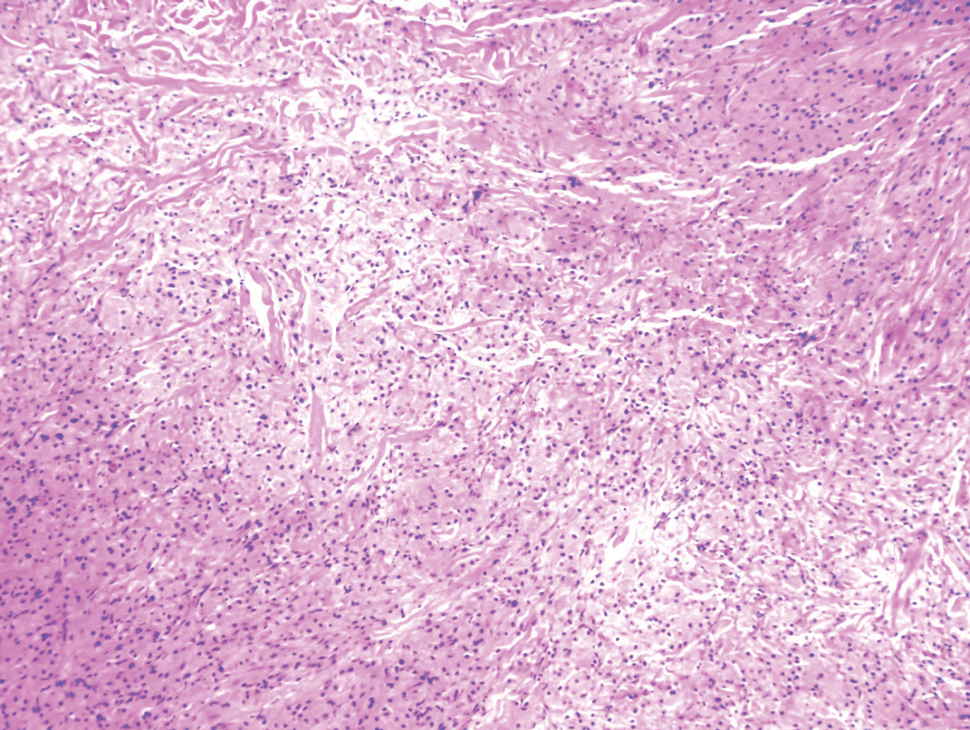

Histopathologic analysis from the axillary excision demonstrated cords and sheets of large polygonal cells in the dermis with uniform, oval, hyperchromatic nuclei and ample pink granular-staining cytoplasm (quiz images). An infiltrative growth pattern was noted; however, there was no evidence of conspicuous mitoses, nuclear pleomorphism, or necrosis. These results in conjunction with the immunohistochemistry findings were consistent with a benign granular cell tumor (GCT), a rare neoplasm considered to have neural/Schwann cell origin.1-3

Our case demonstrates the difficulty in clinically diagnosing cutaneous GCTs. The tumor often presents as a solitary, 0.5- to 3-cm, asymptomatic, firm nodule4,5; however, GCTs also can appear verrucous, eroded, or with other variable morphologies, which can create diagnostic challenges.5,6 Accordingly, a 1980 study of 110 patients with GCTs found that the preoperative clinical diagnosis was incorrect in all but 3 cases,7 emphasizing the need for histologic evaluation. Benign GCTs tend to exhibit sheets of polygonal tumor cells with eosinophilic granular cytoplasm and small central nuclei.3,5 The cytoplasmic granules are periodic acid-Schiff positive and diastase resistant.6 Many cases feature pseudoepitheliomatous hyperplasia, which can misleadingly resemble squamous cell carcinoma.3,5,6 Of note, invasive growth patterns on histology can occur with benign GCTs, as in our patient's case, and do not impact prognosis.3,4 On immunohistochemistry, benign, atypical, and malignant GCTs often stain positive for S-100 protein, vimentin, neuron-specific enolase, SOX10, and CD68.1,3

Although our patient's GCTs were benign, an estimated 1% to 2% are malignant.1,4 In 1998, Fanburg-Smith et al1 defined 6 histologic criteria that characterize malignant GCTs: necrosis, tumor cell spindling, vesicular nuclei with large nucleoli, high nuclear to cytoplasmic ratio, increased mitosis, and pleomorphism. Neoplasms with 3 or more of these features are classified as malignant, those with 1 or 2 are considered atypical, and those showing only pleomorphism, or none of the criteria, are diagnosed as benign.1
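The counting rule described above can be sketched in a few lines of Python. This is only an illustration of the thresholds as stated in the text, not a diagnostic tool; the feature labels and the function name `classify_gct` are hypothetical and chosen for this example.

```python
# Illustrative sketch of the histologic counting rule summarized above.
# The string labels and the function name are assumptions made for this example.
CRITERIA = frozenset({
    "necrosis",
    "spindling",
    "vesicular_nuclei_large_nucleoli",
    "high_nc_ratio",
    "increased_mitosis",
    "pleomorphism",
})


def classify_gct(features):
    """Return 'malignant', 'atypical', or 'benign' from the criteria observed."""
    found = set(features)
    unknown = found - CRITERIA
    if unknown:
        raise ValueError(f"unrecognized criteria: {sorted(unknown)}")
    if len(found) >= 3:
        return "malignant"
    # Pleomorphism alone, or no criteria at all, is read as benign.
    if not found or found == {"pleomorphism"}:
        return "benign"
    # Any other combination of 1 or 2 criteria is atypical.
    return "atypical"


print(classify_gct(["pleomorphism"]))
print(classify_gct(["necrosis", "pleomorphism"]))
print(classify_gct(["necrosis", "spindling", "increased_mitosis"]))
```

Under this reading, pleomorphism is the only single criterion that does not move a tumor into the atypical category, which is why it is handled as a special case before the general 1-or-2 rule.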

Multiple GCTs have been reported in 10% to 25% of cases and, as highlighted in our case, can occur in both a metachronous and synchronous manner.2-4,6 Our patient developed a solitary GCT on the inferior lip 3 years prior to the appearance of 2 additional GCTs within 6 months of each other. The presence of multiple GCTs has been associated with genetic syndromes, such as neurofibromatosis type 1 and Noonan syndrome with multiple lentigines3,8; however, as our case demonstrates, multiple GCTs can occur in nonsyndromic patients as well. When multiple GCTs develop at distant sites, they can resemble metastasis.3 To differentiate these clinical scenarios, Machado et al3 proposed utilizing histology and anatomic location. Multiple tumors with benign characteristics on histology likely represent multiple GCTs, whereas tumors arising at sites common to GCT metastasis, such as lymph node, bone, or viscera, are more concerning for metastatic disease. It has been suggested that patients with multiple GCTs should be monitored with physical examination and repeat magnetic resonance imaging or computed tomography every 6 to 12 months.2 Given our patient's presentation with new tumors arising within 6 months of one another, we recommended a 6-month follow-up interval rather than 1 year. Due to the rarity of GCTs, clinical trials to define treatment guidelines and recommendations have not been performed.3 However, the most commonly utilized treatment modality is wide local excision, as performed in our patient.2,4

Melanoma, atypical fibroxanthoma (AFX), xanthoma, and leiomyosarcoma may be difficult to distinguish from GCT.1,3,4 Melanoma incidence has increased dramatically over the last several decades, with rates in the United States rising from 6.8 cases per 100,000 individuals in the 1970s to 20.1 in the early 2000s. Risk factors for its development include UV radiation exposure and particularly severe sunburns during childhood, along with a number of host risk factors such as total number of melanocytic nevi, family history, and fair complexion.9 Histologically, it often demonstrates irregularly distributed, poorly defined melanocytes with pagetoid spread and dyscohesive nests (Figure 1).10 Melanoma metastasis occasionally can present as a soft-tissue mass and often stains positive for S-100 and vimentin, thus resembling GCT1,4; however, unlike melanoma, GCTs lack melanosomes and stain negative for more specific melanocyte markers, such as melanoma antigen recognized by T cells 1 (MART-1).1,3,4

Atypical fibroxanthoma is a cutaneous neoplasm with fibrohistiocytic mesenchymal origin.11 These tumors typically arise on the head and neck in elderly individuals, particularly men with sun-damaged skin. They often present as superficial, rapidly growing nodules with the potential to ulcerate and bleed.11,12 Histologic features include pleomorphic spindle and epithelioid cells, whose nuclei appear hyperchromatic with atypical mitoses (Figure 2).12 Granular cell changes occur infrequently with AFXs, but in such cases immunohistochemistry can readily distinguish AFX from GCT. Although both tend to stain positive for CD68 and vimentin, AFXs lack S-100 protein and SOX10 expression that frequently is observed in GCTs.3,12

Xanthomas are localized lipid deposits in the connective tissue of the skin that often arise in association with dyslipidemia.13 They typically present as soft to semisolid yellow papules, plaques, or nodules. Their clinical appearance can resemble GCTs; however, histologic analysis enables differentiation with ease, as xanthomas demonstrate characteristic foam cells, consisting of lipid-laden macrophages (Figure 3).13

Cutaneous leiomyosarcoma is a rare dermal neoplasm, accounting for 2% to 3% of all sarcomas.14 These tumors typically occur in White males during the fifth to seventh decades of life and often present as asymptomatic lesions on the lower extremities. They frequently arise from pilar smooth muscle. Unlike uterine and soft-tissue leiomyosarcoma, cutaneous leiomyosarcoma tends to follow an indolent course and rarely metastasizes.14 Histologically, these tumors display intersecting, well-defined, spindle-cell fascicles with abundant eosinophilic cytoplasm and cigar-shaped, blunt-ended nuclei (Figure 4).15 Occasionally, leiomyosarcomas can demonstrate cytoplasmic granularity due to lysosome accumulation4; nevertheless, the diagnosis usually can be elucidated by examining more typical histologic areas and utilizing immunohistochemistry, which often stains positive for α-smooth muscle actin, desmin, and h-caldesmon.4,15

- Fanburg-Smith JC, Meis-Kindblom JM, Fante R, et al. Malignant granular cell tumor of soft tissue: diagnostic criteria and clinicopathologic correlation. Am J Surg Pathol. 1998;22:779-794.

- Moten AS, Movva S, von Mehren M, et al. Granular cell tumor experience at a comprehensive cancer center. J Surg Res. 2018;226:1-7.

- Machado I, Cruz J, Lavernia J, et al. Solitary, multiple, benign, atypical, or malignant: the "granular cell tumor" puzzle. Virchows Arch. 2016;468:527-538.

- Ordóñez NG. Granular cell tumor: a review and update. Adv Anat Pathol. 1999;6:186-203.

- Vaughan V, Ferringer T. Granular cell tumor. Cutis. 2014;94:275, 279-280.

- Van L, Parker SR. Multiple morphologically distinct cutaneous granular cell tumors occurring in a single patient. Cutis. 2016;97:E26-E29.

- Lack EE, Worsham GF, Callihan MD, et al. Granular cell tumor: a clinicopathologic study of 110 patients. J Surg Oncol. 1980;13:301-316.

- Bamps S, Oyen T, Legius E, et al. Multiple granular cell tumors in a child with Noonan syndrome. Eur J Pediatr Surg. 2013;23:257-259.

- Rastrelli M, Tropea S, Rossi CR, et al. Melanoma: epidemiology, risk factors, pathogenesis, diagnosis and classification. In Vivo. 2014;28:1005-1011.

- Smoller BR. Histologic criteria for diagnosing primary cutaneous malignant melanoma. Mod Pathol. 2006;19(suppl 2):S34-S40.

- Soleymani T, Aasi SZ, Novoa R, et al. Atypical fibroxanthoma and pleomorphic dermal sarcoma: updates on classification and management. Dermatol Clin. 2019;37:253-259.

- Cardis MA, Ni J, Bhawan J. Granular cell differentiation: a review of the published work. J Dermatol. 2017;44:251-258.

- Zak A, Zeman M, Slaby A, et al. Xanthomas: clinical and pathophysiological relations [published online April 29, 2014]. Biomed Pap Med Fac Univ Palacky Olomouc Czech Repub. 2014;158:181-188.

- Sandhu N, Sauvageau AP, Groman A, et al. Cutaneous leiomyosarcoma: a SEER database analysis. Dermatol Surg. 2020;46:159-164.

- George S, Serrano C, Hensley ML, et al. Soft tissue and uterine leiomyosarcoma. J Clin Oncol. 2018;36:144-150.

The Diagnosis: Granular Cell Tumor

Histopathologic analysis from the axillary excision demonstrated cords and sheets of large polygonal cells in the dermis with uniform, oval, hyperchromatic nuclei and ample pink granular-staining cytoplasm (quiz images). An infiltrative growth pattern was noted; however, there was no evidence of conspicuous mitoses, nuclear pleomorphism, or necrosis. These results in conjunction with the immunohistochemistry findings were consistent with a benign granular cell tumor (GCT), a rare neoplasm considered to have neural/Schwann cell origin.1-3

Our case demonstrates the difficulty in clinically diagnosing cutaneous GCTs. The tumor often presents as a solitary, 0.5- to 3-cm, asymptomatic, firm nodule4,5; however, GCTs also can appear verrucous, eroded, or with other variable morphologies, which can create diagnostic challenges.5,6 Accordingly, a 1980 study of 110 patients with GCTs found that the preoperative clinical diagnosis was incorrect in all but 3 cases,7 emphasizing the need for histologic evaluation. Benign GCTs tend to exhibit sheets of polygonal tumor cells with eosinophilic granular cytoplasm and small central nuclei.3,5 The cytoplasmic granules are periodic acid-Schiff positive and diastase resistant.6 Many cases feature pseudoepitheliomatous hyperplasia, which can misleadingly resemble squamous cell carcinoma.3,5,6 Of note, invasive growth patterns on histology can occur with benign GCTs, as in our patient's case, and do not impact prognosis.3,4 On immunohistochemistry, benign, atypical, and malignant GCTs often stain positive for S-100 protein, vimentin, neuron-specific enolase, SOX10, and CD68.1,3

Although our patient's GCTs were benign, an estimated 1% to 2% of GCTs are malignant.1,4 In 1998, Fanburg-Smith et al1 defined 6 histologic criteria that characterize malignant GCTs: necrosis, tumor cell spindling, vesicular nuclei with large nucleoli, high nuclear-to-cytoplasmic ratio, increased mitotic activity, and pleomorphism. Neoplasms with 3 or more of these features are classified as malignant, those with 1 or 2 are considered atypical, and those showing pleomorphism alone or none of the criteria are diagnosed as benign.1
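The counting rule described above can be sketched in a few lines of Python. This is an illustrative sketch only, not a diagnostic tool: the function name `classify_gct` and the feature labels are hypothetical, the thresholds that define each individual criterion (for example, what counts as increased mitotic activity) are not modeled, and it assumes that pleomorphism as the sole finding is read as benign rather than atypical.

```python
# Hypothetical labels for the 6 histologic criteria listed in the text.
CRITERIA = frozenset({
    "necrosis",
    "spindling",
    "vesicular_nuclei_large_nucleoli",
    "high_nc_ratio",
    "increased_mitotic_activity",
    "pleomorphism",
})


def classify_gct(features):
    """Return 'benign', 'atypical', or 'malignant' from the criteria present.

    Assumption: pleomorphism alone (or no criteria at all) is benign;
    any other combination of 1 or 2 criteria is atypical; 3 or more
    criteria is malignant.
    """
    present = set(features)
    unknown = present - CRITERIA
    if unknown:
        raise ValueError(f"unrecognized criteria: {sorted(unknown)}")
    if len(present) >= 3:
        return "malignant"
    if present <= {"pleomorphism"}:
        # Covers both the empty set and pleomorphism as the only finding.
        return "benign"
    return "atypical"


if __name__ == "__main__":
    # A tumor like the one in this case: no mitoses, pleomorphism, or necrosis.
    print(classify_gct([]))
    print(classify_gct(["necrosis", "pleomorphism"]))
    print(classify_gct(["necrosis", "spindling", "high_nc_ratio"]))
```

Note that an infiltrative growth pattern is deliberately absent from the criteria set, consistent with the observation that invasive growth can occur in benign GCTs.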

Multiple GCTs have been reported in 10% to 25% of cases and, as highlighted in our case, can occur in both a metachronous and synchronous manner.2-4,6 Our patient developed a solitary GCT on the inferior lip 3 years prior to the appearance of 2 additional GCTs within 6 months of each other. The presence of multiple GCTs has been associated with genetic syndromes, such as neurofibromatosis type 1 and Noonan syndrome with multiple lentigines3,8; however, as our case demonstrates, multiple GCTs can occur in nonsyndromic patients as well. When multiple GCTs develop at distant sites, they can resemble metastasis.3 To differentiate these clinical scenarios, Machado et al3 proposed utilizing histology and anatomic location. Multiple tumors with benign characteristics on histology likely represent multiple GCTs, whereas tumors arising at sites common to GCT metastasis, such as lymph node, bone, or viscera, are more concerning for metastatic disease. It has been suggested that patients with multiple GCTs should be monitored with physical examination and repeat magnetic resonance imaging or computed tomography every 6 to 12 months.2 Given our patient's presentation with new tumors arising within 6 months of one another, we recommended a 6-month follow-up interval rather than 1 year. Due to the rarity of GCTs, clinical trials to define treatment guidelines and recommendations have not been performed.3 However, the most commonly utilized treatment modality is wide local excision, as performed in our patient.2,4

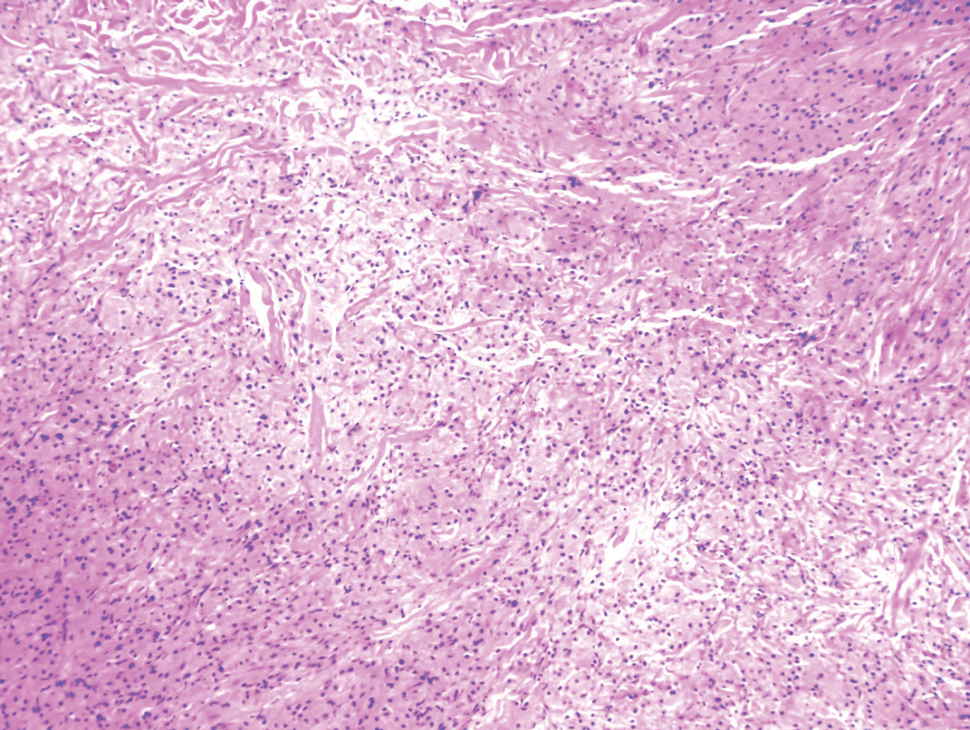

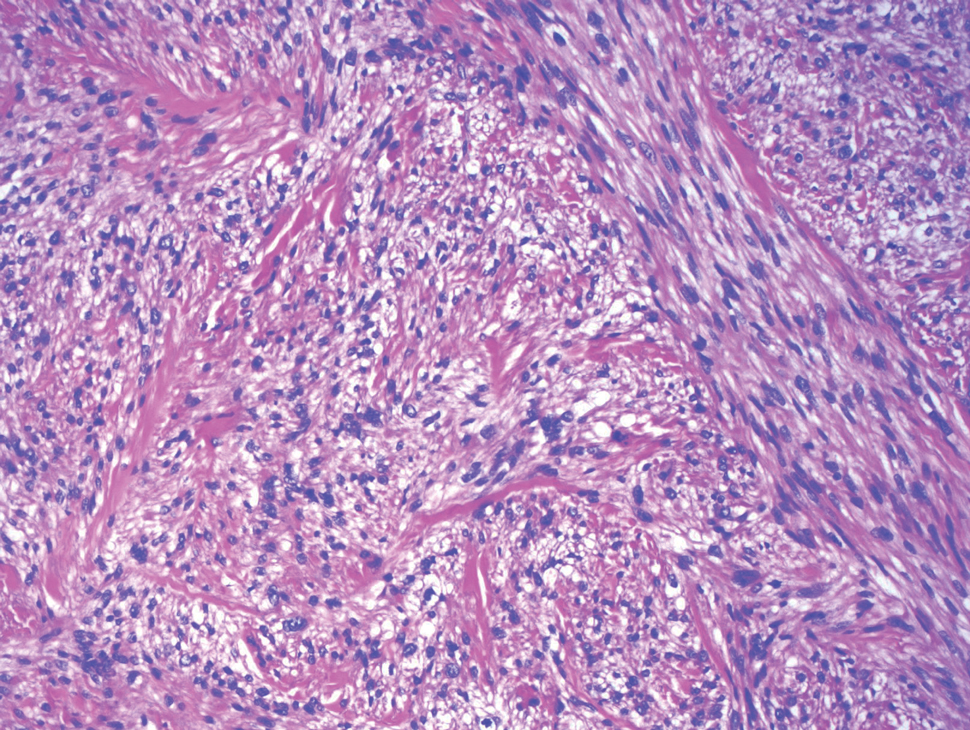

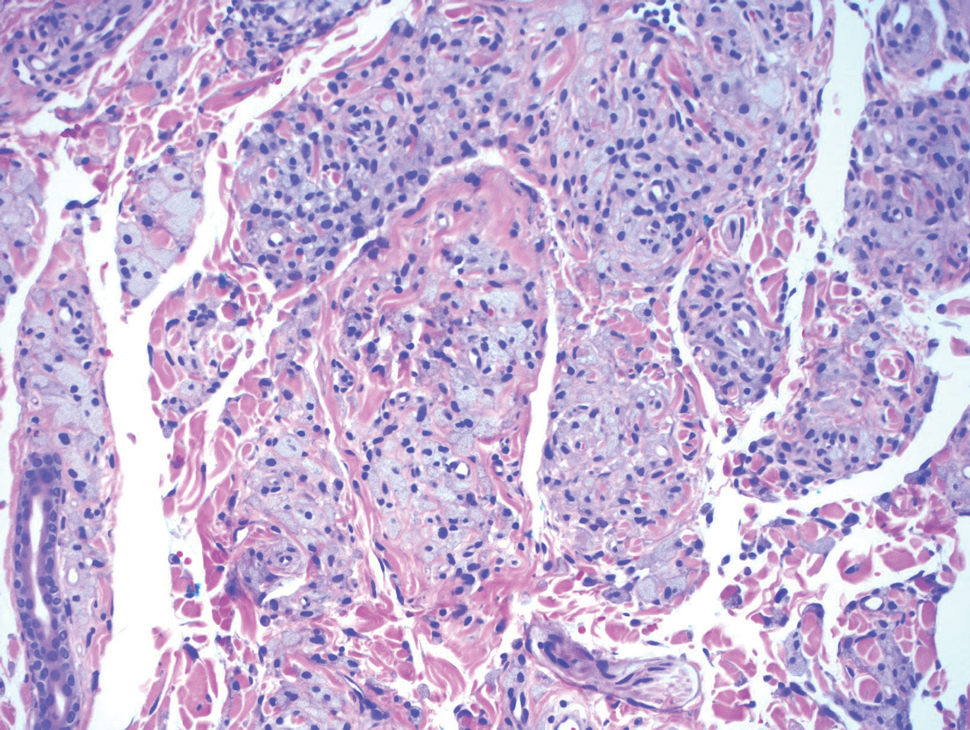

Melanoma, atypical fibroxanthoma (AFX), xanthoma, and leiomyosarcoma may be difficult to distinguish from GCT.1,3,4 Melanoma incidence has increased dramatically over the last several decades, with rates in the United States rising from 6.8 cases per 100,000 individuals in the 1970s to 20.1 per 100,000 in the early 2000s. Risk factors for its development include UV radiation exposure, particularly severe sunburns during childhood, along with host risk factors such as total number of melanocytic nevi, family history, and fair complexion.9 Histologically, melanoma often demonstrates irregularly distributed, poorly defined melanocytes with pagetoid spread and dyscohesive nests (Figure 1).10 Melanoma metastasis occasionally can present as a soft-tissue mass and often stains positive for S-100 and vimentin, thus resembling GCT1,4; however, unlike melanoma, GCTs lack melanosomes and stain negative for more specific melanocyte markers, such as melanoma antigen recognized by T cells 1 (MART-1).1,3,4

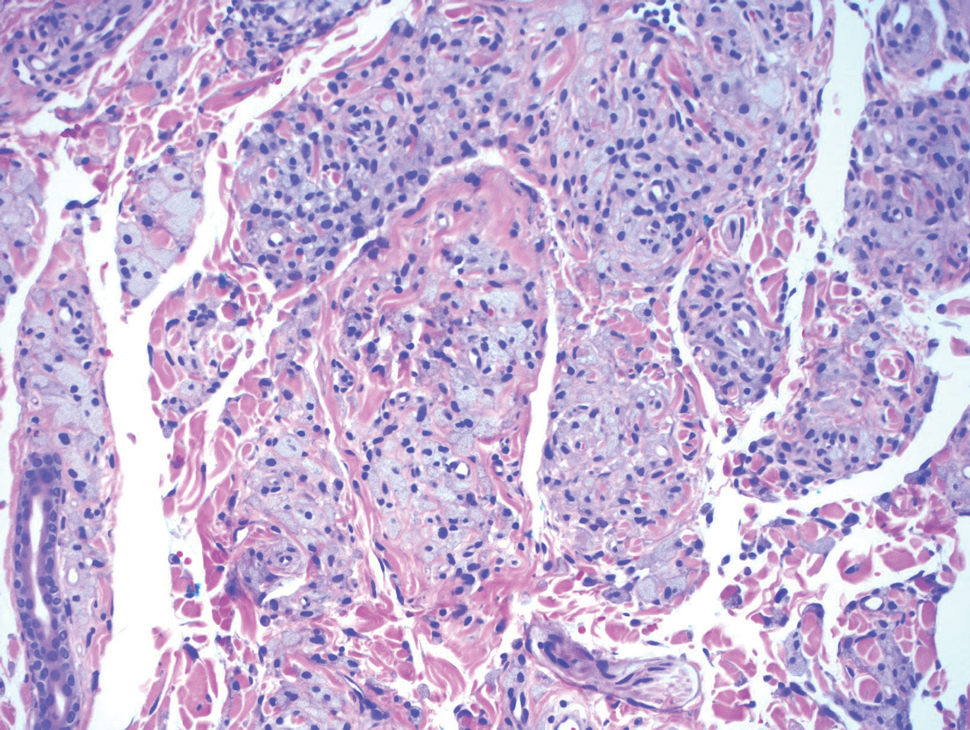

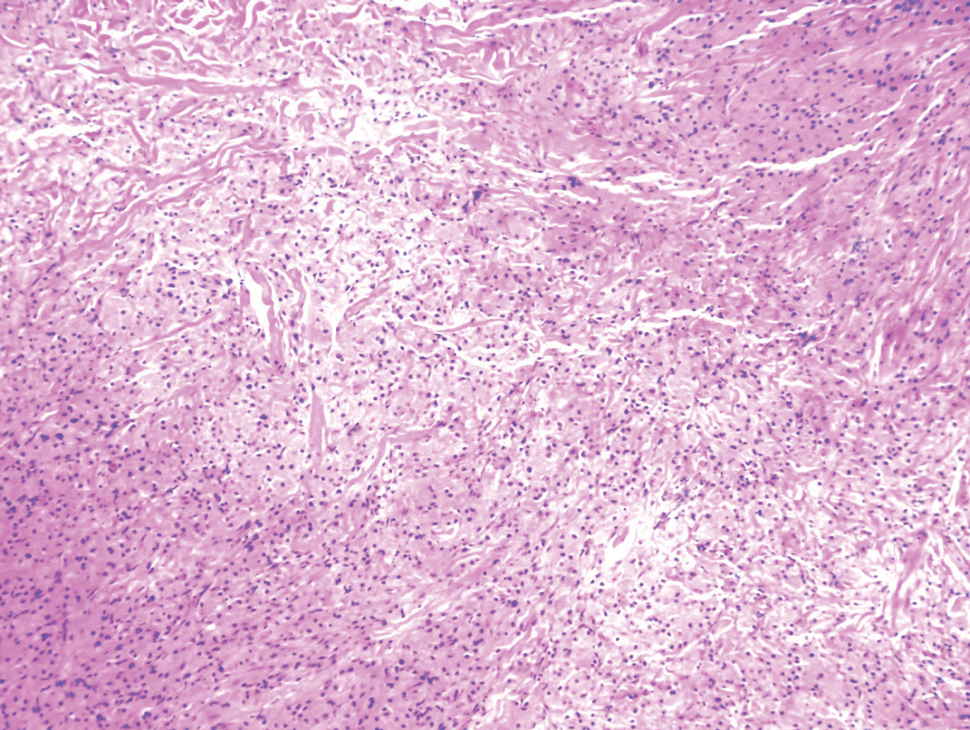

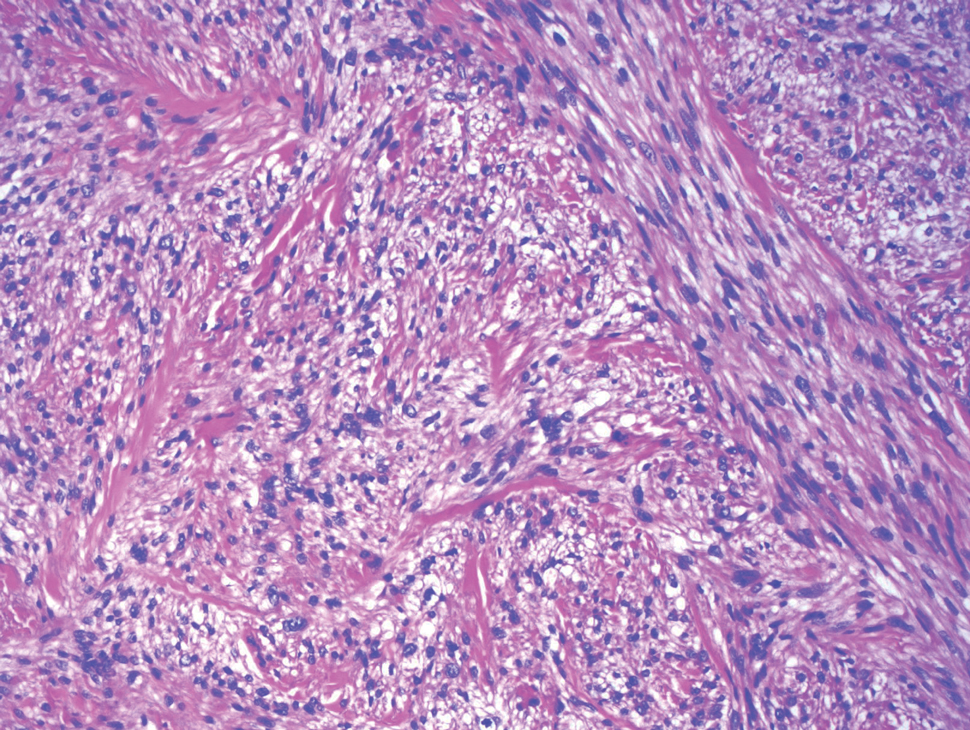

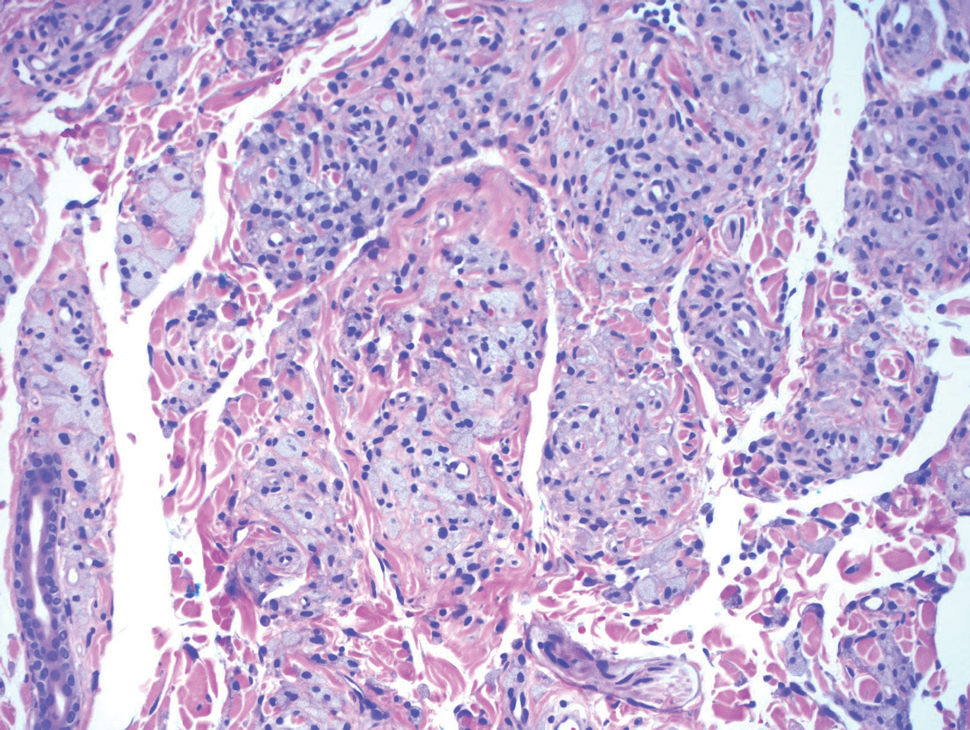

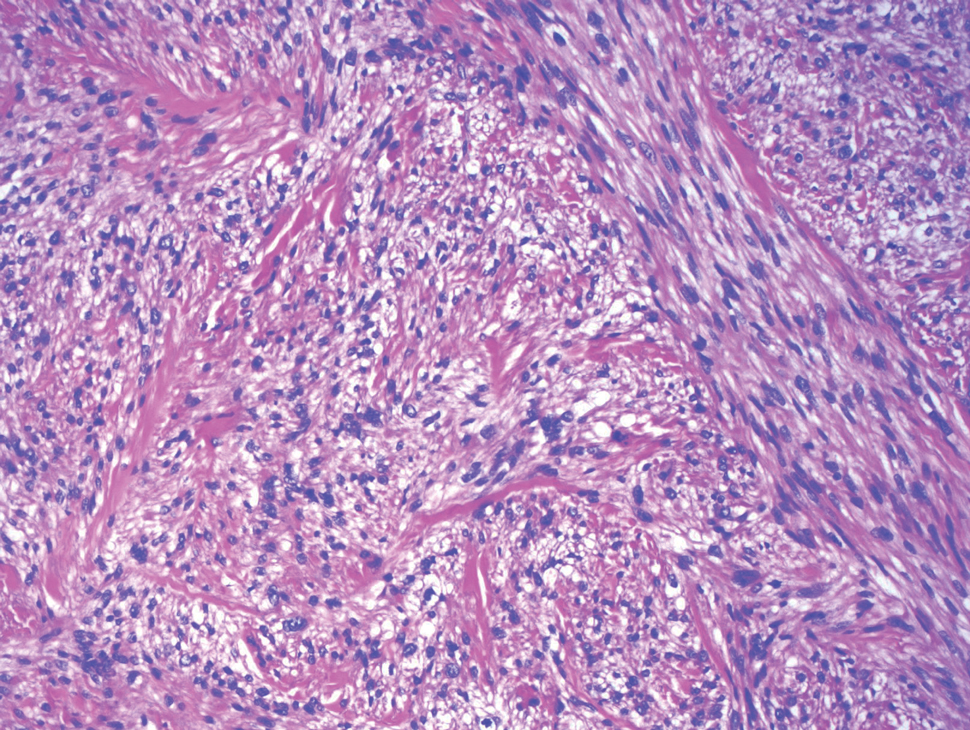

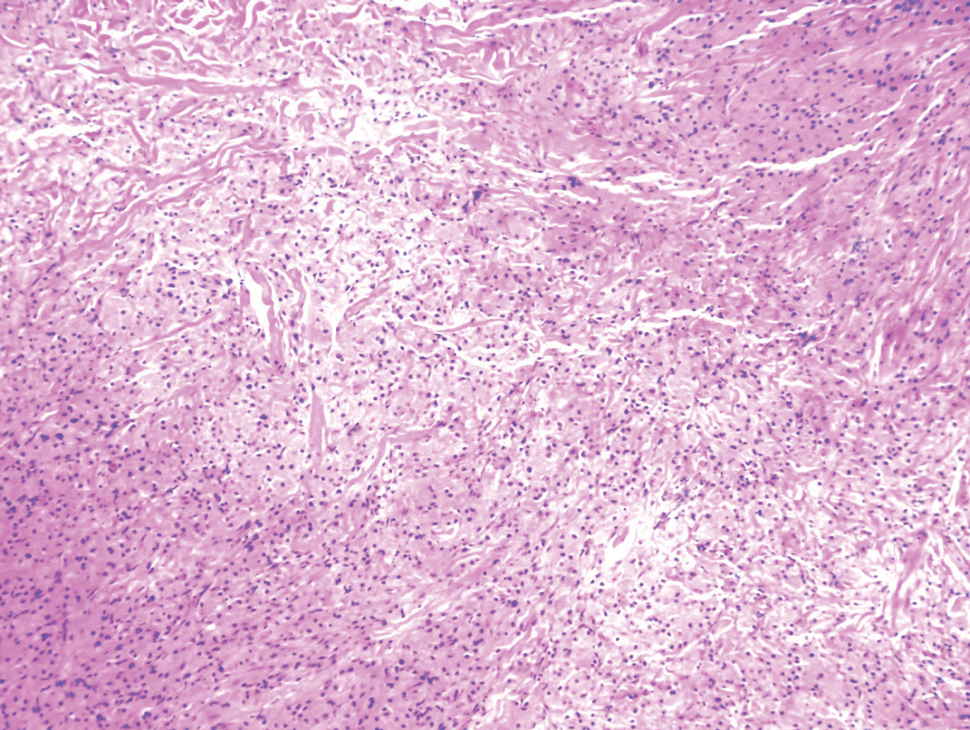
