What Can Be Done to Maintain Positive Patient Experience and Improve Residents’ Satisfaction? In Reference to: “Standardized Attending Rounds to Improve the Patient Experience: A Pragmatic Cluster Randomized Controlled Trial”

We read the article by Monash et al.1 published in the March 2017 issue with great interest. This randomized study showed a discrepancy between patients’ and residents’ satisfaction with standardized rounds; for example, residents reported less autonomy, efficiency, and teaching, as well as longer rounds.

We agree that letting residents lead the rounds with minimal participation of an attending (only when needed) may improve resident satisfaction. Other factors, such as quality of teaching, positive comments to learners during bedside rounds (whenever appropriate), and a positive attending attitude, might be helpful.2,3 We believe that adapting such a model with residents’ benefit in mind will lead to better satisfaction among trainees.

On the other hand, we note that the nature of the study might have exaggerated patient satisfaction when compared with real-world surveys.4 The survey appears to have focused only on attending rounds and not to have considered other factors such as hospitality and pain control. A low patient census and lack of double blinding are other potential factors.

In conclusion, we want to congratulate the authors for raising this important topic and showing positive patient satisfaction with standardized rounds on teaching services. Further research should focus on improving residents’ satisfaction without compromising patients’ experiences.

1. Monash B, Najafi N, Mourad M, et al. Standardized Attending Rounds to Improve the Patient Experience: A Pragmatic Cluster Randomized Controlled Trial. J Hosp Med. 2017;12(3):143-149. PubMed

2. Williams KN, Ramani S, Fraser B, Orlander JD. Improving bedside teaching: findings from a focus group study of learners. Acad Med. 2008;83(3):257-264. PubMed

3. Castiglioni A, Shewchuk RM, Willett LL, Heudebert GR, Centor RM. A pilot study using nominal group technique to assess residents’ perceptions of successful attending rounds. J Gen Intern Med. 2008;23(7):1060-1065. PubMed

4. Siddiqui ZK, Wu AW, Kurbanova N, Qayyum R. Comparison of Hospital Consumer Assessment of Healthcare Providers and Systems patient satisfaction scores for specialty hospitals and general medical hospitals: confounding effect of survey response rate. J Hosp Med. 2014;9(9):590-593. PubMed

The Authors Reply: “Cost and Utility of Thrombophilia Testing”

We thank Dr. Berse and colleagues for their correspondence about our paper.1,2 We are pleased they agreed with our conclusion: Thrombophilia testing has limited clinical utility in most inpatient settings.

Berse and colleagues critiqued details of our methodology in calculating payer cost, including how we estimated the number of Medicare claims for thrombophilia testing. We estimated that there were at least 280,000 Medicare claims in 2014 using CodeMap® (Wheaton Partners, LLC, Schaumburg, IL), a dataset of utilization data from the Physician Supplier Procedure Summary Master File from all Medicare Part B carriers.3 This estimate was similar to that reported in a previous publication.4

Thus, regardless of the precise estimates, even a conservative estimate of $33 million to $80 million of unnecessary spending is far too much. It is a perfect example of “Things We Do for No Reason.”

Disclosure

Nothing to report.

1. Petrilli CM, Mack M, Petrilli JJ, Hickner A, Saint S, Chopra V. Understanding the role of physician attire on patient perceptions: a systematic review of the literature--targeting attire to improve likelihood of rapport (TAILOR) investigators. BMJ Open. 2015;5(1):e006578. DOI:10.1136/bmjopen-2014-006578. PubMed

2. Berse B, Lynch JA, Bowen S, Grosse SD. In Reference to: “Cost and Utility of Thrombophilia Testing.” J Hosp Med. 2017;12(9):783.

3. CodeMap® https://www.codemap.com/. Accessed March 2, 2017.

4. Somma J, Sussman, II, Rand JH. An evaluation of thrombophilia screening in an urban tertiary care medical center: A “real world” experience. Am J Clin Pathol. 2006;126(1):120-7. DOI:10.1309/KV06-32LJ-8EDM-EWQT. PubMed

© 2017 Society of Hospital Medicine

In Reference to: “Cost and Utility of Thrombophilia Testing”

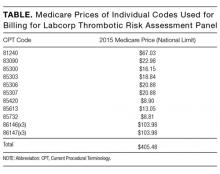

The article by Petrilli et al. points to the important but complicated issue of ordering laboratory testing for thrombophilia despite multiple guidelines that dispute the clinical utility of such testing for many indications.1 We question the basis of these authors’ assertion that Medicare spends $300 to $672 million for thrombophilia testing annually. They arrived at this figure by multiplying the price of a thrombophilia test panel (between $1100 and $2400) by the number of annual Medicare claims for thrombophilia analysis, which they estimated at 280,000. The price of the panel is derived from two papers: (1) a 2001 review2 that lists prices of various thrombophilia-related tests adding up to $1782, and (2) a 2006 evaluation by Somma et al.3 of thrombophilia screening at one hospital in New York in 2005. The latter paper refers to various thrombophilia panels from Quest Diagnostics with list prices ranging from $1311 to $2429. However, the repertoire of available test panels and their prices have changed over the last decade. The cost evaluation of thrombophilia testing should be based on actual current payments for tests, and not on list prices for laboratory offerings from over a decade ago. Several laboratories offer mutational analysis of 3 genes—F5, F2, and MTHFR—as a thrombophilia risk panel. Based on the Current Procedural Terminology (CPT) codes listed by the test suppliers (81240, 81241, and 81291), the average Medicare payment for the combination of these 3 markers in 2013 was $172.4 A broader panel of several biochemical, immunological, and genetic assays had a maximum Medicare payment in 2015 of $405 (Table).5

In conclusion, the cost evaluation of thrombophilia screening is more challenging than the calculation by Petrilli et al. suggests.1 Even if Medicare paid as much as $400 per individual tested and assuming up to 200,000 individuals underwent thrombophilia testing per year, the aggregate Medicare expenditure would have been no more than roughly $80 million. Thus, the estimated range in the article appears to have overstated actual Medicare expenditures by an order of magnitude. This does not take away from their overall conclusion that payers are burdened with significant expenditures for laboratory testing that may not present clinical value for many patients.6 We need research into the patterns of utilization as well as improvements in documentation of expenditures associated with these tests.
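The arithmetic behind the two competing estimates can be reproduced in a few lines. The sketch below is illustrative only: the function name `aggregate_cost` is hypothetical, and the inputs are simply the figures quoted in this letter (a panel price of $1100 to $2400 across 280,000 claims for the original estimate, and $400 per individual across 200,000 individuals for the upper bound proposed here). The lower product comes to $308 million, which the quoted range of $300 to $672 million apparently rounds down.

```python
def aggregate_cost(price_per_test: int, tests_per_year: int) -> int:
    """Aggregate annual spending in dollars: unit price times annual volume."""
    return price_per_test * tests_per_year


# Original estimate: list price of a panel multiplied by estimated annual claims.
original_low = aggregate_cost(1_100, 280_000)
original_high = aggregate_cost(2_400, 280_000)

# Upper bound proposed in this letter: payment per individual times individuals tested.
revised_ceiling = aggregate_cost(400, 200_000)

print(f"Original estimate: ${original_low:,} to ${original_high:,}")
print(f"Revised ceiling:   ${revised_ceiling:,}")
print(
    "Ratio of original to revised: "
    f"{original_low / revised_ceiling:.2f}x to {original_high / revised_ceiling:.2f}x"
)
```

Because the total is a simple product, any error in the assumed unit price carries through proportionally to the aggregate figure, which is why the choice between decade-old list prices and actual payments dominates the result.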

Disclosure

The findings and conclusions in this report are those of the authors and do not necessarily represent the official position of the Centers for Disease Control and Prevention, the Department of Veterans Affairs, or the United States government. The autho

1. Petrilli CM, Heidemann L, Mack M, Durance P, Chopra V. Inpatient inherited thrombophilia testing. J Hosp Med. 2016;11(11):801-804. PubMed

2. Abramson N, Abramson S. Hypercoagulability: clinical assessment and treatment. South Med J. 2001;94(10):1013-1020. PubMed

3. Somma J, Sussman, II, Rand JH. An evaluation of thrombophilia screening in an urban tertiary care medical center: A “real world” experience. Am J Clin Pathol. 2006;126(1):120-127. PubMed

4. Lynch JA, Berse B, Dotson WD, Khoury MJ, Coomer N, Kautter J. Utilization of genetic tests: Analysis of gene-specific billing in Medicare claims data [Published online ahead of print January 26, 2017]. Genet Med. 2017. doi: 10.1038/gim.2016.209. PubMed

5. Centers for Medicare and Medicaid Services. Clinical Laboratory Fee Schedule 2016. https://www.cms.gov/Medicare/Medicare-fee-for-service-Payment/clinicallabfeesched/index.html. Accessed on December 20, 2016.

6. Stevens SM, Woller SC, Bauer KA, et al. Guidance for the evaluation and treatment of hereditary and acquired thrombophilia. J Thromb Thrombolysis. 2016;41(1):154-164. PubMed

Reducing Routine Labs—Teaching Residents Restraint

Inappropriate resource utilization is a pervasive problem in healthcare, and it has received increasing emphasis over the last few years as financial strain on the healthcare system has grown. This waste has led to new models of care—bundled care payments, accountable care organizations, and merit-based payment systems. Professional organizations have also emphasized the provision of high-value care and avoiding unnecessary diagnostic testing and treatment. In April 2012, the American Board of Internal Medicine (ABIM) launched the Choosing Wisely initiative to assist professional societies in putting forth recommendations on clinical circumstances in which particular tests and procedures should be avoided.

Until recently, teaching cost-effective care was not widely considered an important part of internal medicine residency programs. In a 2010 study surveying residents about resource utilization feedback, only 37% of internal medicine residents reported receiving any feedback on resource utilization and 20% reported receiving regular feedback.1 These findings are especially significant in the broader context of national healthcare spending, as there is evidence that physicians who train in high-spending localities tend to have high-spending patterns later in their careers.2 Another study showed similar findings when looking at region of training relative to success at recognizing high-value care on ABIM test questions.3 The Accreditation Council for Graduate Medical Education has developed the Clinical Learning Environment Review program to help address this need. This program provides feedback to teaching hospitals about their success at teaching residents and fellows to provide high-value medical care.

Given the current zeitgeist of emphasizing cost-effective, high-value care, appropriate utilization of routine labs stands out as especially low-hanging fruit. The Society of Hospital Medicine, as part of the Choosing Wisely campaign, recommended minimizing routine lab draws in hospitalized patients with clinical and laboratory stability.4 Certainly, avoiding unnecessary routine lab draws is ideal because it saves patients the pain of superfluous phlebotomy, allows phlebotomy resources to be directed to blood draws with actual clinical utility, and saves money. There is also good evidence that hospital-acquired anemia, an effect of overuse of routine blood draws, has an adverse impact on morbidity and mortality in postmyocardial infarction patients5,6 and more generally in hospitalized patients.7

Several studies have examined lab utilization on teaching services. Not surprisingly, the vast majority of test utilization is attributable to the interns (45%) and residents (26%), rather than attendings.8 Another study showed that internal medicine residents at one center had a much stronger self-reported predilection for ordering daily recurring routine labs rather than one-time labs for the following morning when admitting patients and when picking up patients, as compared with hospitalist attendings.9 This self-reported tendency translated into ordering more complete blood counts and basic chemistry panels per patient per day. A qualitative study looking at why internal medicine and general surgery residents ordered unnecessary labs yielded a number of responses, including ingrained habit, lack of price transparency, clinical uncertainty, belief that the attending expected it, and absence of a culture emphasizing resource utilization.10

In this issue of the Journal of Hospital Medicine, Kurtzman and colleagues report on a mixed-methods study looking at internal medicine resident engagement at their center with an electronic medical record–associated dashboard providing feedback on lab utilization.11 Over a 6-month period, the residents randomized into the dashboard group received weekly e-mails while on service with a brief synopsis of their lab utilization relative to their peers and a link to a dashboard with a time-series display of their relative lab ordering. While the majority of residents (74%) opened the e-mail, only a minority (21%) actually accessed the dashboard. There was no statistically significant relationship between dashboard use and lab ordering, though there was a trend toward decreased lab ordering associated with opening the dashboard. The residents who participated in a focus group expressed both positive and negative opinions on the dashboard.

This is one example of social comparison feedback, which aims to improve performance by providing information to physicians on their performance relative to their peers. It has been shown to be effective in other areas of clinical medicine like limiting antibiotic overutilization in patients with upper respiratory infections.12 One study examining social comparison feedback and objective feedback found that social comparison feedback improved performance for a simulated work task more for high performers but less for low performers than standard objective feedback.13 The utility of this type of feedback has not been extensively studied in healthcare.

However, the audit and feedback strategy, of which social comparison feedback is a subtype, has been extensively studied in healthcare. A 2012 Cochrane Review found that audit and feedback leads to “small but potentially important improvements in professional practice.”14 The reviewers found wide variation in the effect of feedback among the 140 studies they analyzed. The factors strongly associated with a significant improvement after feedback were as follows: poor performance at baseline, a colleague or supervisor as the one providing the audit and feedback, repetitive feedback, feedback given both verbally and in writing, and clear advice or guidance on how to improve. Many of these components were missing from this study, which may be one reason the authors did not find a significant relationship between dashboard use and lab ordering.

A number of interventions, however, have been shown to decrease lab utilization, including unbundling of the components of the metabolic panel and disallowing daily recurring lab orders,15 fee displays,16 cost reminders,17 didactics and data feedback,18 and a multifaceted approach (didactics, monthly feedback, checklist, and financial incentives).19 A multipronged strategy that includes education, audit and feedback, hard-stop limits on redundant lab ordering, and fee information is likely to be the most successful approach to reducing lab overutilization by both residents and attending physicians. Resource overutilization is a multifactorial problem, and multifactorial problems call for multifaceted solutions. Moreover, it may be necessary to employ both “carrot” and “stick” elements in such an approach, rewarding physicians who practice appropriate stewardship but also penalizing practitioners who do not appropriately adjust their lab ordering tendencies after receiving feedback showing overuse.

Physician behavior is difficult to change, and there are many reasons why physicians order inappropriate tests and studies, including provider uncertainty, fear of malpractice litigation, and inadequate time to consider the utility of a test. Audit and feedback should be integrated into residency curriculums focusing on high-value care, in which hospitalists should play a central role. If supervising attendings are not integrated into such curriculums and continue to both overorder tests themselves and allow residents to do so, then the informal curriculum will trump the formal one.

Physicians respond to incentives, and appropriately designed incentives should be developed to help steer them to order only those tests and studies that are medically indicated. Such incentives must be provided alongside audit and feedback with appropriate goals that account for patient complexity. Ultimately, routine lab ordering is just one area of overutilization in hospital medicine, and the techniques that are successful at reducing overuse in this arena will need to be applied to other aspects of medicine like imaging and medication prescribing.

Disclosure

The authors declare no conflicts of interest.

1. Dine CJ, Miller J, Fuld A, Bellini LM, Iwashyna TJ. Educating Physicians-in-Training About Resource Utilization and Their Own Outcomes of Care in the Inpatient Setting. J Grad Med Educ. 2010;2(2):175-180.

2. Chen C, Petterson S, Phillips R, Bazemore A, Mullan F. Spending patterns in region of residency training and subsequent expenditures for care provided by practicing physicians for Medicare beneficiaries. JAMA. 2014;312(22):2385-2393.

3. Sirovich BE, Lipner RS, Johnston M, Holmboe ES. The association between residency training and internists’ ability to practice conservatively. JAMA Intern Med. 2014;174(10):1640-1648.

4. Bulger J, Nickel W, Messler J, et al. Choosing wisely in adult hospital medicine: Five opportunities for improved healthcare value. J Hosp Med. 2013;8(9):486-492.

5. Salisbury AC, Amin AP, Reid KJ, et al. Hospital-acquired anemia and in-hospital mortality in patients with acute myocardial infarction. Am Heart J. 2011;162(2):300-309.e3.

6. Meroño O, Cladellas M, Recasens L, et al. In-hospital acquired anemia in acute coronary syndrome. Predictors, in-hospital prognosis and one-year mortality. Rev Esp Cardiol (Engl Ed). 2012;65(8):742-748.

7. Koch CG, Li L, Sun Z, et al. Hospital-acquired anemia: Prevalence, outcomes, and healthcare implications. J Hosp Med. 2013;8(9):506-512.

8. Iwashyna TJ, Fuld A, Asch DA, Bellini LM. The impact of residents, interns, and attendings on inpatient laboratory ordering patterns: a report from one university’s hospitalist service. Acad Med. 2011;86(1):139-145.

9. Ellenbogen MI, Ma M, Christensen NP, Lee J, O’Leary KJ. Differences in Routine Laboratory Ordering Between a Teaching Service and a Hospitalist Service at a Single Academic Medical Center. South Med J. 2017;110(1):25-30.

10. Sedrak MS, Patel MS, Ziemba JB, et al. Residents’ self-report on why they order perceived unnecessary inpatient laboratory tests. J Hosp Med. 2016;11(12):869-872.

11. Kurtzman G, Dine J, Epstein A, et al. Internal Medicine Resident Engagement with a Laboratory Utilization Dashboard: Mixed Methods Study. J Hosp Med. 2017;12(9):743-746.

12. Meeker D, Linder JA, Fox CR, et al. Effect of Behavioral Interventions on Inappropriate Antibiotic Prescribing Among Primary Care Practices: A Randomized Clinical Trial. JAMA. 2016;315(6):562-570.

13. Moon K, Lee K, Lee K, Oah S. The Effects of Social Comparison and Objective Feedback on Work Performance Across Different Performance Levels. J Organ Behav Manage. 2017;37(1):63-74.

14. Ivers N, Jamtvedt G, Flottorp S, et al. Audit and feedback: effects on professional practice and healthcare outcomes (Review). Cochrane Database Syst Rev. 2012;(6):CD000259.

15. Neilson EG, Johnson KB, Rosenbloom ST, Dupont WD, Talbert D, Giuse DA. The Impact of Peer Management on Test-Ordering Behavior. Ann Intern Med. 2004;141:196-204.

16. Feldman LS, Shihab HM, Thiemann D, et al. Impact of providing fee data on laboratory test ordering: a controlled clinical trial. JAMA Intern Med. 2013;173(10):903-908.

17. Stuebing EA, Miner TJ. Surgical vampires and rising health care expenditure: reducing the cost of daily phlebotomy. Arch Surg. 2011;146:524-527.

18. Iams W, Heck J, Kapp M, et al. A Multidisciplinary Housestaff-Led Initiative to Safely Reduce Daily Laboratory Testing. Acad Med. 2016;91(6):813-820.

19. Yarbrough PM, Kukhareva PV, Horton D, Edholm K, Kawamoto K. Multifaceted intervention including education, rounding checklist implementation, cost feedback, and financial incentives reduces inpatient laboratory costs. J Hosp Med. 2016;11(5):348-354.

© 2017 Society of Hospital Medicine

Does the Week-End Justify the Means?

Let’s face it—rates of hospital admission are on the rise, but there are still just 7 days in a week. That means that patients are increasingly admitted on weekdays and on the weekend, requiring more nurses and doctors to look after them. Why then are there no lines for coffee on a Saturday? Does this reduced intensity of staffing translate into worse care for our patients?

Since one of its earliest descriptions in hospitalized patients, the “weekend effect” has been extensively studied in various patient populations and hospital settings.1-5 The results have been varied, depending on the place of care,6 reason for care, type of admission,5,7 or admitting diagnosis.1,8,9 Many researchers have posited the drivers behind the weekend effect, including understaffed wards, intensity of specialist care, delays in procedural treatments, or severity of illness, but the truth is that we still don’t know.

Pauls et al. performed a robust systematic review and meta-analysis examining the rates of in-hospital mortality in patients admitted on the weekend compared with those admitted on weekdays.10 They analyzed predetermined subgroups to identify system- and patient-level factors associated with a difference in weekend mortality.

A total of 97 studies, comprising an astounding 51 million patients, were included in the analysis. The authors found that individuals admitted on the weekend carried an almost 20% increase in the risk of death compared with those who landed in hospital on a weekday. The effect was present both for in-hospital deaths and for 30-day mortality. Translated into practice, these findings amount to an additional 14 deaths per 1000 admissions when patients are admitted on the weekend. Brain surgery can be less risky.11

Despite this concerning finding, no individual factor was identified that could account for the effect. There was a 16% and 11% increase in mortality in weekend patients associated with decreased hospital staffing and delays to procedural therapies, respectively. No differences were found when examining reduced rates of procedures or illness severity on weekends compared with weekdays. But one must always interpret subgroup analyses, even prespecified ones, with caution because they often lack the statistical power to support firm conclusions.

To this end, an important finding of the study by Pauls et al. is the variation in mortality risk as it relates to the weekend effect.10 Even for individuals with cancer, a disease with a relatively predictable rate of decline, there are weekend differences in mortality risk that depend upon the type of cancer.8,12 This heterogeneity persists when examining the possible factors that contribute to the effect, introducing a significant amount of noise into the analysis, and may explain why research to date has been unable to find the proverbial black cat in the coal cellar.

One thing Pauls et al. make clear is that the weekend effect appears to be a real phenomenon, despite significant heterogeneity in the literature.10 Only a high-quality systematic review has the capability to draw such conclusions. Prior work demonstrates that this effect is substantial in some individuals, and this study confirms that it persists beyond the immediate period following admission.1,9 The elements contributing to the weekend effect remain undefined and are likely as complex as our healthcare system itself.

Society and policy makers should resist the tantalizing urge to invoke interventions aimed at fixing this issue before fully understanding the drivers of a system problem. The government of the United Kingdom has decreed a manifesto to create a “7-day National Health Service,” in which weekend services and physician staffing will match those of the weekdays. Considering recent labor tensions involving junior doctors in the United Kingdom over pay and working hours, the stakes are at an all-time high.

But such drastic measures violate a primary directive of quality improvement science: study and understand the problem before reflexively jumping to solutions. This will require new research endeavors aimed at determining the underlying factor(s) responsible for the weekend effect. Only once we are confident in its cause can careful evaluation of targeted interventions aimed at the highest-risk admissions be instituted. As global hospital and healthcare budgets bend under increasing strain, a critical component of any proposed intervention must be an examination of its cost-effectiveness. Because the weekend effect is one of increased mortality, it will be hard to justify an acceptable price for an individual’s life. And it is not as straightforward as a randomized trial examining the efficacy of parachutes. Any formal evaluation must account for the unintended consequences and opportunity costs of implementing a potential fix aimed at minimizing the weekend effect.

The weekend effect has now been studied for over 15 years. Pauls et al. add to our knowledge of this phenomenon, confirming that the overall risk of mortality for patients admitted on the weekend is real, variable, and substantial.10 As more individuals are admitted to hospitals, resulting in increasing numbers of admissions on the weekend, a desperate search for the underlying cause must be carried out before we can fix the problem. Whatever the means to the end, our elation will continue to be tempered by a feeling of uneasiness every time our coworkers joyously exclaim, “TGIF!”

Disclosure

The authors have nothing to disclose.

1. Bell CM, Redelmeier DA. Mortality among patients admitted to hospitals on weekends as compared with weekdays. N Engl J Med. 2001;345(9):663-668. doi:10.1056/NEJMsa003376. PubMed

2. Bell CM, Redelmeier DA. Waiting for urgent procedures on the weekend among emergently hospitalized patients. Am J Med. 2004;117(3):175-181. doi:10.1016/j.amjmed.2004.02.047. PubMed

3. Kalaitzakis E, Helgeson J, Strömdahl M, Tóth E. Weekend admission in upper GI bleeding: does it have an impact on outcome? Gastrointest Endosc. 2015;81(5):1295-1296. doi:10.1016/j.gie.2014.12.003. PubMed

4. Nanchal R, Kumar G, Taneja A, et al. Pulmonary embolism: the weekend effect. Chest. 2012;142(3):690-696. doi:10.1378/chest.11-2663. PubMed

5. Ricciardi R, Roberts PL, Read TE, Baxter NN, Marcello PW, Schoetz DJ. Mortality rate after nonelective hospital admission. Arch Surg. 2011;146(5):545-551. PubMed

6. Wunsch H, Mapstone J, Brady T, Hanks R, Rowan K. Hospital mortality associated with day and time of admission to intensive care units. Intensive Care Med. 2004;30(5):895-901. doi:10.1007/s00134-004-2170-3. PubMed

7. Freemantle N, Richardson M, Wood J, et al. Weekend hospitalization and additional risk of death: an analysis of inpatient data. J R Soc Med. 2012;105(2):74-84. doi:10.1258/jrsm.2012.120009. PubMed

8. Lapointe-Shaw L, Bell CM. It’s not you, it’s me: time to narrow the gap in weekend care. BMJ Qual Saf. 2014;23(3):180-182. doi:10.1136/bmjqs-2013-002674. PubMed

9. Concha OP, Gallego B, Hillman K, Delaney GP, Coiera E. Do variations in hospital mortality patterns after weekend admission reflect reduced quality of care or different patient cohorts? A population-based study. BMJ Qual Saf. 2014;23(3):215-222. doi:10.1136/bmjqs-2013-002218. PubMed

10. Pauls LA, Johnson-Paben R, McGready J, Murphy JD, Pronovost PJ, Wu CL. The Weekend Effect in Hospitalized Patients: A Meta-analysis. J Hosp Med. 2017;12(9):760-766. PubMed

11. American College of Surgeons. NSQIP Risk Calculator. http://riskcalculator.facs.org/RiskCalculator/. Accessed on July 5, 2017.

12. Lapointe-Shaw L, Abushomar H, Chen XK, et al. Care and outcomes of patients with cancer admitted to the hospital on weekends and holidays: a retrospective cohort study. J Natl Compr Canc Netw. 2016;14(7):867-874. PubMed
