Modified Atkins diet beneficial in drug-resistant epilepsy

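Adding a modified Atkins diet to standard antiseizure therapy lowers seizure frequency in adults and adolescents with drug-resistant epilepsy, new research shows.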

In a randomized prospective study, the number of seizures per month dropped by more than half in one-quarter of patients following the high-fat, low-carb diet, and 5% of the group were free of all seizure activity after 6 months.

Both adults and adolescents reported benefits from the diet, which is a less strict version of a traditional ketogenic diet that many patients find difficult to follow. The modified Atkins diet includes foods such as leafy green vegetables and eggs, chicken, fish, bacon, and other animal proteins.

“The use of an exchange list and recipe booklet with local recipes and spices helped in the initiation of modified Atkins diet with the flexibility of meal choices and ease of administration,” said coinvestigator Manjari Tripathi, MD, DM, department of neurology, All India Institute of Medical Sciences, New Delhi.

“As items were everyday household ingredients in proportion to the requirement of the modified Atkins diet, this diet is possible in low-income countries also,” Dr. Tripathi added.

The findings were published online in the journal Neurology.

Low carbs, high benefit

The modified Atkins diet includes around 65% fat, 25% protein, and 10% carbohydrates. Unlike a traditional ketogenic diet, the modified Atkins diet includes no restrictions on protein, calories, or fluids.

Researchers have long known that ketogenic and Atkins diets are associated with reduced seizure activity in adolescents with epilepsy. But previous studies were small, and many were retrospective analyses.

The current investigators enrolled 160 patients (80 adults, 80 adolescents) aged 10-55 years whose epilepsy was not controlled despite using at least three antiseizure medications at maximum tolerated doses.

Participants in the intervention group received training in the modified Atkins diet and were given a food exchange list, sample menu, and recipe booklet. Their carbohydrate intake was restricted to 20 grams per day.

Participants took supplemental multivitamins and minerals, kept a food diary, logged seizure activity, and measured urine ketone levels three times a day. They also received weekly check-up phone calls to ensure diet adherence.

The control group received a normal diet with no carbohydrate restrictions. All participants continued their prescribed antiseizure therapy throughout the trial.

Primary outcome met

The primary study outcome was a reduction in seizures of more than 50%. At 6 months, 26.2% of the intervention group had reached that goal, compared with just 2.5% of the control group (P < .001).

In the intervention group, the median seizure frequency fell from 37.5 per month at baseline to 27.5 per month after 3 months on the modified Atkins diet and to 21.5 per month after 6 months.

Adding the modified Atkins diet had a larger effect on seizure activity in adults than in adolescents. At the end of 6 months, 36% of adolescents on the modified Atkins diet had more than a 50% reduction in seizures, while 57.1% of adults on the diet reached that level.

Quality-of-life scores were also higher in the intervention group.

By the end of the trial, 5% of patients on the modified Atkins diet had no seizure activity at all, versus none of the control group. By contrast, the median number of seizures in the control group increased over the course of the study.

Mean morning and evening urinary ketone levels in the intervention group were 58.3 ± 8.0 mg/dL and 62.2 ± 22.6 mg/dL, respectively, suggesting satisfactory diet adherence. There was no significant difference in weight loss between groups.

Dr. Tripathi noted that 33% of participants did not complete the study because of poor tolerance of the diet, lack of benefit, or the inability to follow up – in part due to COVID-19. However, she said tolerance of the modified Atkins diet was better than what has been reported with the ketogenic diet.

“Though the exact mechanism by which such a diet protects against seizures is unknown, there is evidence that it causes effects on intermediary metabolism that influences the dynamics of the major inhibitory and excitatory neurotransmitter systems in the brain,” Dr. Tripathi said.

Benefits outweigh cost

Commenting on the research findings, Mackenzie Cervenka, MD, professor of neurology and director of the Adult Epilepsy Diet Center at Johns Hopkins University, Baltimore, noted that the study is the first randomized controlled trial of this size to demonstrate a benefit from adding the modified Atkins diet to standard antiseizure therapy in treatment-resistant epilepsy.

“Importantly, the study also showed improvement in quality of life and behavior over standard-of-care therapies without significant adverse effects,” said Dr. Cervenka, who was not part of the research.

The investigators noted that the flexibility of the modified Atkins diet allows more variation in menu options and a greater intake of protein, making it easier to follow than a traditional ketogenic diet.

One area of debate, however, is whether these diets are manageable for people with low incomes. Poultry, meat, and fish, all staples of a modified Atkins diet, can be more expensive than high-carb staples such as pasta and rice.

“While some of the foods such as protein sources that patients purchase when they are on a ketogenic diet therapy can be more expensive, if you take into account the cost of antiseizure medications and other antiseizure treatments, hospital visits, and missed work related to seizures, et cetera, the overall financial benefits of seizure reduction with incorporating a ketogenic diet therapy may outweigh these costs,” Dr. Cervenka said.

“There are also low-cost foods that can be used since there is a great deal of flexibility with a modified Atkins diet,” she added.

The study was funded by the Centre of Excellence for Epilepsy, which is funded by the Department of Biotechnology, Government of India. Dr. Tripathi and Dr. Cervenka report no relevant financial relationships.

A version of this article first appeared on Medscape.com.

FROM NEUROLOGY

Screen all patients for cannabis use before surgery: Guideline

All patients who undergo procedures that require regional or general anesthesia should be asked whether they use cannabis, how often, and in what forms, according to recommendations from the American Society of Regional Anesthesia and Pain Medicine (ASRA).

One reason: Patients who regularly use cannabis may experience worse pain and nausea after surgery and may require more opioid analgesia, the group said.

The society’s recommendations – published in Regional Anesthesia and Pain Medicine – are the first guidelines in the United States to cover cannabis use as it relates to surgery, the group said.

Possible interactions

Use of cannabis has increased in recent years, and researchers have been concerned that the drug may interact with anesthesia and complicate pain management. Few studies have evaluated interactions between cannabis and anesthetic agents, however, according to the authors of the new guidelines.

“With the rising prevalence of both medical and recreational cannabis use in the general population, anesthesiologists, surgeons, and perioperative physicians must have an understanding of the effects of cannabis on physiology in order to provide safe perioperative care,” the guideline said.

“Before surgery, anesthesiologists should ask patients if they use cannabis – whether medicinally or recreationally – and be prepared to possibly change the anesthesia plan or delay the procedure in certain situations,” Samer Narouze, MD, PhD, ASRA president and senior author of the guidelines, said in a news release about the recommendations.

Although some patients may use cannabis to relieve pain, research shows that “regular users may have more pain and nausea after surgery, not less, and may need more medications, including opioids, to manage the discomfort,” said Dr. Narouze, chairman of the Center for Pain Medicine at Western Reserve Hospital in Cuyahoga Falls, Ohio.

Risks for vomiting, heart attack

The new recommendations were created by a committee of 13 experts, including anesthesiologists, chronic pain physicians, and a patient advocate. Shalini Shah, MD, vice chair of anesthesiology at the University of California, Irvine, was lead author of the document.

Four of 21 recommendations were classified as grade A, meaning that following them would be expected to provide substantial benefits. Those recommendations are to screen all patients before surgery; postpone elective surgery for patients who have altered mental status or impaired decision-making capacity at the time of surgery; counsel frequent, heavy users about the potential for cannabis use to impair postoperative pain control; and counsel pregnant patients about the risks of cannabis use to unborn children.

The authors cited studies to support their recommendations, including one showing that long-term cannabis use was associated with a 20% increase in the incidence of postoperative nausea and vomiting, a leading complaint of surgery patients. Other research has shown that cannabis use is linked to more pain and use of opioids after surgery.

Other recommendations include delaying elective surgery for at least 2 hours after a patient has smoked cannabis, owing to an increased risk for heart attack, and considering adjustment of ventilation settings during surgery for regular smokers of cannabis. Research has shown that smoking cannabis may be a rare trigger for myocardial infarction and is associated with airway inflammation and self-reported respiratory symptoms.

However, clinicians should not conduct universal toxicology screening, because evidence does not support this practice, the guideline stated.

The authors did not have enough information to make recommendations about reducing cannabis use before surgery or adjusting opioid prescriptions after surgery for patients who use cannabis, they said.

Kenneth Finn, MD, president of the American Board of Pain Medicine, welcomed the publication of the new guidelines. Dr. Finn, who practices at Springs Rehabilitation in Colorado Springs, has edited a textbook about cannabis in medicine and founded the International Academy on the Science and Impact of Cannabis.

“The vast majority of medical providers really have no idea about cannabis and what its impacts are on the human body,” Dr. Finn said.

For one, cannabis can interact with numerous other drugs, including warfarin.

Guideline coauthor Eugene R. Viscusi, MD, professor of anesthesiology at the Sidney Kimmel Medical College, Philadelphia, emphasized that, while cannabis may be perceived as “natural,” it should not be considered differently from manufactured drugs.

Cannabis and cannabinoids represent “a class of very potent and pharmacologically active compounds,” Dr. Viscusi said in an interview. While researchers continue to assess possible medically beneficial effects of cannabis compounds, clinicians also need to be aware of the risks.

“The literature continues to emerge, and while we are always hopeful for good news, as physicians, we need to be very well versed on potential risks, especially in a high-risk situation like surgery,” he said.

Dr. Shah has consulted for companies that develop medical devices and drugs. Dr. Finn is the editor of the textbook, “Cannabis in Medicine: An Evidence-Based Approach” (Springer: New York, 2020), for which he receives royalties.

A version of this article first appeared on Medscape.com.

All patients who undergo procedures that require regional or general anesthesia should be asked if, how often, and in what forms they use the drug, according to recommendations from the American Society of Regional Anesthesia and Pain Medicine.

One reason: Patients who regularly use cannabis may experience worse pain and nausea after surgery and may require more opioid analgesia, the group said.

The society’s recommendations – published in Regional Anesthesia and Pain Medicine – are the first guidelines in the United States to cover cannabis use as it relates to surgery, the group said.

Possible interactions

Use of cannabis has increased in recent years, and researchers have been concerned that the drug may interact with anesthesia and complicate pain management. Few studies have evaluated interactions between cannabis and anesthetic agents, however, according to the authors of the new guidelines.

“With the rising prevalence of both medical and recreational cannabis use in the general population, anesthesiologists, surgeons, and perioperative physicians must have an understanding of the effects of cannabis on physiology in order to provide safe perioperative care,” the guideline said.

“Before surgery, anesthesiologists should ask patients if they use cannabis – whether medicinally or recreationally – and be prepared to possibly change the anesthesia plan or delay the procedure in certain situations,” Samer Narouze, MD, PhD, ASRA president and senior author of the guidelines, said in a news release about the recommendations.

Although some patients may use cannabis to relieve pain, research shows that “regular users may have more pain and nausea after surgery, not less, and may need more medications, including opioids, to manage the discomfort,” said Dr. Narouze, chairman of the Center for Pain Medicine at Western Reserve Hospital in Cuyahoga Falls, Ohio.

Risks for vomiting, heart attack

The new recommendations were created by a committee of 13 experts, including anesthesiologists, chronic pain physicians, and a patient advocate. Shalini Shah, MD, vice chair of anesthesiology at the University of California, Irvine, was lead author of the document.

Four of 21 recommendations were classified as grade A, meaning that following them would be expected to provide substantial benefits. Those recommendations are to screen all patients before surgery; postpone elective surgery for patients who have altered mental status or impaired decision-making capacity at the time of surgery; counsel frequent, heavy users about the potential for cannabis use to impair postoperative pain control; and counsel pregnant patients about the risks of cannabis use to unborn children.

The authors cited studies to support their recommendations, including one showing that long-term cannabis use was associated with a 20% increase in the incidence of postoperative nausea and vomiting, a leading complaint of surgery patients. Other research has shown that cannabis use is linked to more pain and use of opioids after surgery.

Other recommendations include delaying elective surgery for at least 2 hours after a patient has smoked cannabis, owing to an increased risk for heart attack, and considering adjustment of ventilation settings during surgery for regular smokers of cannabis. Research has shown that smoking cannabis may be a rare trigger for myocardial infarction and is associated with airway inflammation and self-reported respiratory symptoms.

Nevertheless, doctors should not conduct universal toxicology screening, given a lack of evidence supporting this practice, the guideline stated.

The authors did not have enough information to make recommendations about reducing cannabis use before surgery or adjusting opioid prescriptions after surgery for patients who use cannabis, they said.

Kenneth Finn, MD, president of the American Board of Pain Medicine, welcomed the publication of the new guidelines. Dr. Finn, who practices at Springs Rehabilitation in Colorado Springs, has edited a textbook about cannabis in medicine and founded the International Academy on the Science and Impact of Cannabis.

“The vast majority of medical providers really have no idea about cannabis and what its impacts are on the human body,” Dr. Finn said.

For one, it can interact with numerous other drugs, including warfarin.

Guideline coauthor Eugene R. Viscusi, MD, professor of anesthesiology at the Sidney Kimmel Medical College, Philadelphia, emphasized that, while cannabis may be perceived as “natural,” it should not be considered differently from manufactured drugs.

Cannabis and cannabinoids represent “a class of very potent and pharmacologically active compounds,” Dr. Viscusi said in an interview. While researchers continue to assess possible medically beneficial effects of cannabis compounds, clinicians also need to be aware of the risks.

“The literature continues to emerge, and while we are always hopeful for good news, as physicians, we need to be very well versed on potential risks, especially in a high-risk situation like surgery,” he said.

Dr. Shah has consulted for companies that develop medical devices and drugs. Dr. Finn is the editor of the textbook, “Cannabis in Medicine: An Evidence-Based Approach” (Springer: New York, 2020), for which he receives royalties.

A version of this article first appeared on Medscape.com.

All patients who undergo procedures that require regional or general anesthesia should be asked if, how often, and in what forms they use the drug, according to recommendations from the American Society of Regional Anesthesia and Pain Medicine.

One reason: Patients who regularly use cannabis may experience worse pain and nausea after surgery and may require more opioid analgesia, the group said.

The society’s recommendations – published in Regional Anesthesia and Pain Medicine – are the first guidelines in the United States to cover cannabis use as it relates to surgery, the group said.

Possible interactions

Use of cannabis has increased in recent years, and researchers have been concerned that the drug may interact with anesthesia and complicate pain management. Few studies have evaluated interactions between cannabis and anesthetic agents, however, according to the authors of the new guidelines.

“With the rising prevalence of both medical and recreational cannabis use in the general population, anesthesiologists, surgeons, and perioperative physicians must have an understanding of the effects of cannabis on physiology in order to provide safe perioperative care,” the guideline said.

“Before surgery, anesthesiologists should ask patients if they use cannabis – whether medicinally or recreationally – and be prepared to possibly change the anesthesia plan or delay the procedure in certain situations,” Samer Narouze, MD, PhD, ASRA president and senior author of the guidelines, said in a news release about the recommendations.

Although some patients may use cannabis to relieve pain, research shows that “regular users may have more pain and nausea after surgery, not less, and may need more medications, including opioids, to manage the discomfort,” said Dr. Narouze, chairman of the Center for Pain Medicine at Western Reserve Hospital in Cuyahoga Falls, Ohio.

Risks for vomiting, heart attack

The new recommendations were created by a committee of 13 experts, including anesthesiologists, chronic pain physicians, and a patient advocate. Shalini Shah, MD, vice chair of anesthesiology at the University of California, Irvine, was lead author of the document.

Four of 21 recommendations were classified as grade A, meaning that following them would be expected to provide substantial benefits. Those recommendations are to screen all patients before surgery; postpone elective surgery for patients who have altered mental status or impaired decision-making capacity at the time of surgery; counsel frequent, heavy users about the potential for cannabis use to impair postoperative pain control; and counsel pregnant patients about the risks of cannabis use to unborn children.

The authors cited studies to support their recommendations, including one showing that long-term cannabis use was associated with a 20% increase in the incidence of postoperative nausea and vomiting, a leading complaint of surgery patients. Other research has shown that cannabis use is linked to more pain and use of opioids after surgery.

Other recommendations include delaying elective surgery for at least 2 hours after a patient has smoked cannabis, owing to an increased risk for heart attack, and considering adjustment of ventilation settings during surgery for regular smokers of cannabis. Research has shown that smoking cannabis may be a rare trigger for myocardial infarction and is associated with airway inflammation and self-reported respiratory symptoms.

Nevertheless, doctors should not conduct universal toxicology screening, given a lack of evidence supporting this practice, the guideline stated.

The authors did not have enough information to make recommendations about reducing cannabis use before surgery or adjusting opioid prescriptions after surgery for patients who use cannabis, they said.

Kenneth Finn, MD, president of the American Board of Pain Medicine, welcomed the publication of the new guidelines. Dr. Finn, who practices at Springs Rehabilitation in Colorado Springs, has edited a textbook about cannabis in medicine and founded the International Academy on the Science and Impact of Cannabis.

“The vast majority of medical providers really have no idea about cannabis and what its impacts are on the human body,” Dr. Finn said.

For one, cannabis can interact with numerous other drugs, including warfarin.

Guideline coauthor Eugene R. Viscusi, MD, professor of anesthesiology at the Sidney Kimmel Medical College, Philadelphia, emphasized that, while cannabis may be perceived as “natural,” it should not be considered differently from manufactured drugs.

Cannabis and cannabinoids represent “a class of very potent and pharmacologically active compounds,” Dr. Viscusi said in an interview. While researchers continue to assess possible medically beneficial effects of cannabis compounds, clinicians also need to be aware of the risks.

“The literature continues to emerge, and while we are always hopeful for good news, as physicians, we need to be very well versed on potential risks, especially in a high-risk situation like surgery,” he said.

Dr. Shah has consulted for companies that develop medical devices and drugs. Dr. Finn is the editor of the textbook, “Cannabis in Medicine: An Evidence-Based Approach” (Springer: New York, 2020), for which he receives royalties.

A version of this article first appeared on Medscape.com.

FROM REGIONAL ANESTHESIA & PAIN MEDICINE

Is thrombolysis safe for stroke patients on DOACs?

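Patients with acute ischemic stroke who have recently taken direct oral anticoagulants (DOACs) do not appear to have an increased risk of symptomatic intracranial hemorrhage (sICH) after thrombolysis, a new study has found.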

The study, the largest ever regarding the safety of thrombolysis in patients on DOACs, actually found a lower rate of sICH among patients taking DOACs than among those not taking anticoagulants.

“Thrombolysis is a backbone therapy in stroke, but the large population of patients who take DOACs are currently excluded from this treatment because DOAC use is a contraindication to treatment with thrombolysis. This is based on the presumption of an increased risk of sICH, but data to support or refute this presumption are lacking,” said senior author David J. Seiffge, MD, Bern University Hospital, Switzerland.

“Our results suggest that current guidelines need to be revised to remove the absolute contraindication of thrombolysis in patients on DOACs. The guidelines need to be more liberal on the use of thrombolysis in these patients,” he added.

“This study provides the basis for extending vital thrombolysis treatment to this substantial population of patients who take DOACs,” Dr. Seiffge said.

He estimates that 1 in every 6 stroke patients is taking a DOAC and that 1% to 2% of patients taking DOACs have a stroke each year. “As millions of patients are on DOACs, this is a large number of people who are not getting potentially life-saving thrombolysis therapy.”

Dr. Seiffge comments: “In our hospital we see at least one stroke patient on DOACs every day. It is a very frequent scenario. With this new data, we believe many of these patients could now benefit from thrombolysis without an increased bleeding risk.”

The study was published online in JAMA Neurology.

An international investigation

While thrombolysis is currently contraindicated for patients taking DOACs, some clinicians still administer thrombolysis to these patients. Different selection strategies are used, including the use of DOAC reversal agents prior to thrombolysis or the selection of patients with low anticoagulant activity, the authors noted.

The current study involved an international collaboration. The investigators compared the risk of sICH among patients who had recently taken DOACs and who underwent thrombolysis as treatment for acute ischemic stroke with the risk among control stroke patients who underwent thrombolysis but who had not been taking DOACs.

Potential contributing centers were identified through a systematic literature search for published studies on thrombolysis in patients who had recently taken DOACs and for prospective stroke registries that might include such patients.

The study included 832 patients from 64 centers worldwide who were confirmed to have taken a DOAC within 48 hours of receiving thrombolysis for acute ischemic stroke. The comparison group was made up of 32,375 patients who had experienced ischemic stroke that was treated with thrombolysis but who had received no prior anticoagulation therapy.

Compared with control patients, those who had recently taken DOACs were older, had a higher prevalence of hypertension and a higher degree of prestroke disability, were less likely to be smokers, had a longer time from symptom onset to treatment, had more severe strokes, and were more likely to have a large-vessel occlusion.

Of the patients taking DOACs, 30.3% received DOAC reversal prior to thrombolysis. For 27.0%, DOAC plasma levels were measured. The remainder were treated with thrombolysis without either of these selection methods.

Results showed that the unadjusted rate of sICH was 2.5% among patients taking DOACs, compared with 4.1% among control patients who were not taking anticoagulants.

After adjustment for stroke severity and other baseline sICH predictors, patients who had recently taken DOACs and who received thrombolysis had lower odds of developing sICH (adjusted odds ratio, 0.57; 95% confidence interval, 0.36-0.92; P = .02).

There was no difference between the selection strategies, and results were consistent in different sensitivity analyses.

The secondary outcome of any ICH occurred in 18.0% of patients taking DOACs, compared with 17.4% of control patients who used no anticoagulants. After adjustment, there was no difference in the odds for any ICH between the groups (aOR, 1.18; 95% CI, 0.95-1.45; P = .14).

The unadjusted rate of functional independence was 45% among patients taking DOACs, compared with 57% among control patients. After adjustment, patients who had recently taken DOACs and who underwent thrombolysis had numerically higher odds of being functionally independent than control patients, although this difference did not reach statistical significance (aOR, 1.13; 95% CI, 0.94-1.36; P = .20).

The association of DOAC therapy with lower odds of sICH remained when mechanical thrombectomy, large-vessel occlusion, or concomitant antiplatelet therapy was added to the model.

“This is by far the largest study to look at this issue of thrombolytic use in patients on DOACs, and we did not find any group on DOACs that had an excess ICH rate with thrombolysis,” Dr. Seiffge said.

He explained that receiving warfarin was at one time an absolute contraindication for thrombolysis, but after a 2014 study suggested that the risk was not increased for patients with an international normalized ratio below 1.7, this was downgraded to a relative contraindication.

“We think our study is comparable and should lead to a guideline change,” Dr. Seiffge commented.

“A relative contraindication allows clinicians the space to make a considered decision on an individual basis,” he added.

Dr. Seiffge said that at his hospital, local guidelines regarding this issue have already been changed on the basis of these data, and use of DOACs is now considered a relative contraindication.

“International guidelines can take years to update, so in the meantime, I think other centers will also go ahead with a more liberal approach. There are always some centers that are ahead of the guidelines,” he added.

Although the lower risk of sICH seen in patients who have recently used DOACs seems counterintuitive at first glance, there could be a pathophysiologic explanation for this finding, the authors suggest.

They point out that thrombin inhibition, either directly or via the coagulation cascade, might be protective against the occurrence of sICH.

“Anticoagulants may allow the clot to respond better to thrombolysis – the clot is not as solid and is easier to recanalize. This leads to smaller strokes and a lower bleeding risk. Thrombin generation is also a major driver for blood brain barrier breakdown. DOACs reduce thrombin generation, so reduce blood brain barrier breakdown and reduce bleeding,” Dr. Seiffge explained. “But these are hypotheses,” he added.

Study ‘meaningfully advances the field’

In an accompanying editorial, Eva A. Mistry, MBBS, University of Cincinnati, said the current study “meaningfully advances the field” and provides an estimation of safety of intravenous thrombolysis among patients who have taken DOACs within 48 hours of hospital admission.

She cites as strengths the inclusion of a large number of patients from several geographically diverse institutions with heterogeneous standard practices for thrombolysis after recent DOAC use, as well as the narrow confidence intervals around the observed rates of sICH.

“Further, the upper bound of this confidence interval for the DOAC group is below 4%, which is a welcome result and provides supportive data for clinicians who already practice thrombolysis for patients with recent DOAC ingestion,” Dr. Mistry adds.

However, she points out several study limitations, which she says limit immediate, widespread clinical applicability.

These include the use of a nonconcurrent control population that included patients from centers that did not contribute to the DOAC group, as well as the inclusion of Asian patients, who likely received a lower thrombolytic dose.

Dr. Seiffge noted that the researchers did adjust for Asian patients but not for the thrombolytic dosage. “I personally do not think this affects the results, as Asian patients have a lower dosage because they have a higher bleeding risk. The lower bleeding risk with DOACs was seen in all continents.”

Dr. Mistry also suggests that the DOAC group itself is prone to selection bias from preferential thrombolysis of patients receiving DOACs who are at lower risk of sICH.

But Dr. Seiffge argued: “I think, actually, the opposite is true. The DOAC patients were older, had more severe comorbidities, and an increased bleeding risk.”

Dr. Mistry concluded, “Despite the limitations of the study design and enrolled population, these data may be used by clinicians to make individualized decisions regarding thrombolysis among patients with recent DOAC use. Importantly, this study lays the foundation for prospective, well-powered studies that definitively determine the safety of thrombolysis in this population.”

The study was supported by a grant from the Bangerter-Rhyner Foundation. Dr. Seiffge received grants from the Bangerter-Rhyner Foundation during the conduct of the study and personal fees from Bayer, Alexion, and VarmX outside the submitted work. Dr. Mistry receives grant funding from the National Institute of Neurological Disorders and Stroke and serves as a consultant for RAPID AI.

A version of this article first appeared on Medscape.com.

From JAMA Neurology

Antiepileptic drugs tied to increased Parkinson’s disease risk

Use of antiepileptic drugs (AEDs) is associated with an increased risk of Parkinson’s disease, new research suggests.

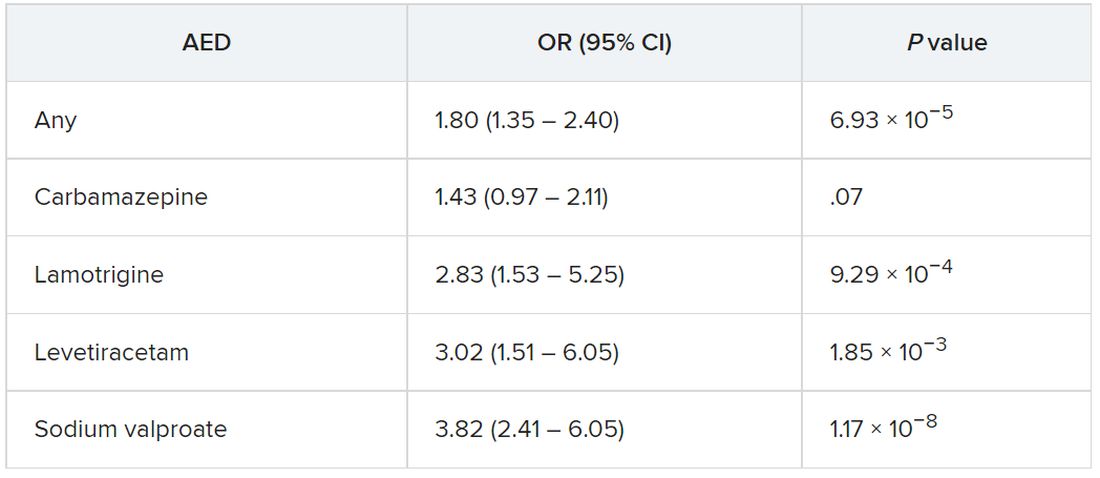

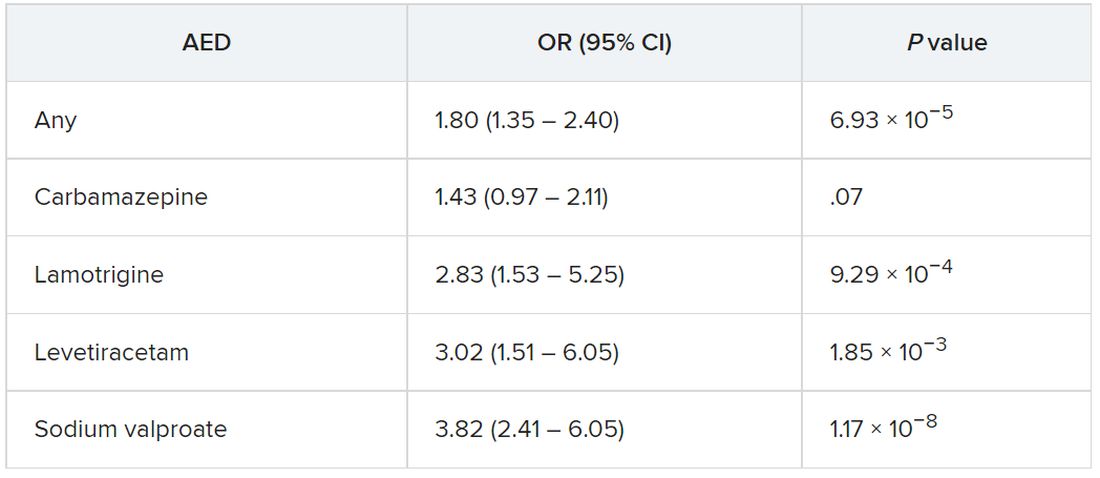

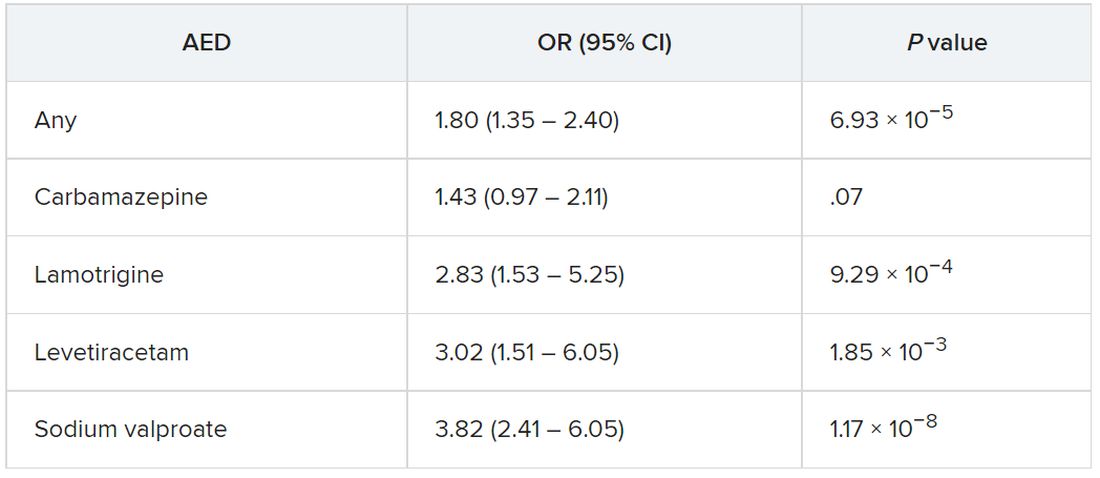

Drawing on data from the UK Biobank, investigators compared more than 1,400 individuals diagnosed with Parkinson’s disease with matched control persons and found a considerably higher risk of developing Parkinson’s disease among those who had taken AEDs than among those who had not. The risk also tended to rise with a greater number of AED prescriptions and with use of multiple AEDs.

“We observed an association between the most commonly prescribed antiepileptic drugs in the U.K. and Parkinson’s disease using data from UK Biobank,” said senior author Alastair Noyce, PhD, professor of neurology and neuroepidemiology and honorary consultant neurologist, Queen Mary University of London.

“This is the first time that a comprehensive study of the link between AEDs and Parkinson’s disease has been undertaken,” said Dr. Noyce.

He added that the findings have no immediate clinical implications, “but further research is definitely needed, [as] this is an interesting observation made in a research setting.”

The study was published online in JAMA Neurology.

Plausible, but unclear link

Recent observational studies have found a “temporal association” between epilepsy and incident Parkinson’s disease, but the mechanism underlying this association is “unclear,” the authors wrote.

It is “plausible” that AEDs “may account for some or all of the apparent association between epilepsy and Parkinson’s disease,” and movement disorders are potential side effects of AEDs. However, the association between AEDs and Parkinson’s disease has “not been well studied,” so it remains “unclear” whether AEDs play a role, the authors noted.

“We have previously reported an association between epilepsy and Parkinson’s disease in several different datasets. Here, we wanted to see if it could be explained by an association with the drugs used to treat epilepsy rather than epilepsy per se,” Dr. Noyce explained.

Are AEDs the culprit?

The researchers used data from the UK Biobank, a longitudinal cohort study with more than 500,000 participants, together with linked primary care medication data to conduct a nested case-control study of this potential association. Participants were aged 40 to 69 years when they were recruited between 2006 and 2010.

The researchers compared 1,433 individuals diagnosed with Parkinson’s disease with 8,598 control persons who were matched in a 6:1 ratio for age, sex, race, ethnicity, and socioeconomic status (median [interquartile range] age, 71 [65-75] years; 60.9% men; 97.5% White).

Of those with Parkinson’s disease, 4.3% had been prescribed an AED before their Parkinson’s disease diagnosis, compared with 2.5% of the control group; 4.4% had been diagnosed with epilepsy, compared with 1% of control persons.
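For readers less familiar with case-control designs, the comparison above can be turned into a rough odds ratio: the odds of prior AED exposure among people with Parkinson’s disease divided by the same odds among control persons. The minimal Python sketch below does this from the article’s rounded percentages. It is an illustration only, not the study’s estimate: the function names are hypothetical, and the published analysis was based on a 6:1 matched design, which this crude calculation ignores.

```python
def odds(p: float) -> float:
    """Convert a proportion (strictly between 0 and 1) into odds, p / (1 - p)."""
    if not 0.0 < p < 1.0:
        raise ValueError("proportion must be strictly between 0 and 1")
    return p / (1.0 - p)


def crude_odds_ratio(p_exposed_cases: float, p_exposed_controls: float) -> float:
    """Unadjusted odds ratio: odds of exposure among cases divided by the
    odds of exposure among controls. Ignores matching and all covariates."""
    return odds(p_exposed_cases) / odds(p_exposed_controls)


# Rounded proportions reported in the article: prior AED prescription in
# 4.3% of people with Parkinson's disease vs 2.5% of control persons.
aed_or = crude_odds_ratio(0.043, 0.025)
print(f"Crude odds ratio for prior AED prescription: {aed_or:.2f}")
```

With these inputs the sketch prints a crude odds ratio of about 1.75. A value above 1 only means that prior AED prescriptions were more common among cases than among controls; on its own it says nothing about causation.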

The strongest evidence of an association with Parkinson’s disease was for lamotrigine, levetiracetam, and sodium valproate. There was “weaker evidence” for carbamazepine, although all four AEDs were associated with a higher risk of Parkinson’s disease.

The odds of incident Parkinson’s disease were higher among those who were prescribed one or more AEDs and among individuals who were issued a higher number of prescriptions, the authors reported.

It is possible that epilepsy itself, rather than the drugs, is associated with the risk of Parkinson’s disease, and that “likely explains part of the association we are seeing,” said Dr. Noyce.

“The bottom line is that more research into the links between epilepsy – and drugs used to treat epilepsy – and Parkinson’s disease is needed,” he said.

Moreover, “only with time will we work out whether the findings hold any real clinical relevance,” he added.

Alternative explanations

Commenting on the research, Rebecca Gilbert, MD, PhD, chief scientific officer, American Parkinson Disease Association, said, “It has been established in prior research that there is an association between epilepsy and Parkinson’s disease.” The current study “shows that having had a prescription written for one of four antiepileptic medications was associated with subsequently receiving a diagnosis of Parkinson’s disease.”

Although one possible conclusion is that the AEDs themselves increase the risk of developing Parkinson’s disease, “there seem to be other alternative explanations as to why a person who had been prescribed AEDs has an increased risk of receiving a diagnosis of Parkinson’s disease,” said Dr. Gilbert, an associate professor of neurology at Bellevue Hospital Center, New York, who was not involved with the current study.

For example, premotor changes in the brains of persons with Parkinson’s disease “may increase the risk of requiring an AED by potentially increasing the risk of having a seizure,” and “changes in the brain caused by the seizures for which AEDs are prescribed may increase the risk of Parkinson’s disease.”

Moreover, psychiatric changes related to Parkinson’s disease may have led to AED prescriptions, because at least two of the AEDs are also prescribed for mood stabilization, Dr. Gilbert suggested.

“An unanswered question that the paper acknowledges is, what about people who receive AEDs for reasons other than seizures? Do they also have an increased risk of Parkinson’s disease? This would be an interesting population to focus on because it would remove the link between AEDs and seizure and focus on the association between AEDs and Parkinson’s disease,” Dr. Gilbert said.

She emphasized that people who take AEDs for seizures “should not jump to the conclusion that they must come off these medications so as not to increase their risk of developing Parkinson’s disease.” She noted that having seizures “can be dangerous – injuries can occur during a seizure, and if a seizure can’t be stopped or a number occur in rapid succession, brain injury may result.”

For these reasons, people with “a tendency to have seizures need to protect themselves with AEDs” and “should certainly reach out to their neurologists with any questions,” Dr. Gilbert said.

The Preventive Neurology Unit is funded by Barts Charity. The Apocrita High Performance Cluster facility, supported by Queen Mary University of London Research–IT Services, was used for this research. Dr. Noyce has received grants from Barts Charity, Parkinson’s UK, Cure Parkinson’s, the Michael J. Fox Foundation, Innovate UK, Solvemed, and Alchemab and personal fees from AstraZeneca, AbbVie, Zambon, BIAL, uMedeor, Alchemab, Britannia, and Charco Neurotech outside the submitted work. The other authors’ disclosures are listed on the original article. Dr. Gilbert reports no relevant financial relationships.

A version of this article first appeared on Medscape.com.
