Early consumption of peanuts can induce tolerance in high-risk children

HOUSTON – The introduction of peanuts at an early age to children who are more highly predisposed to having a peanut allergy can induce tolerance of peanuts and thereby significantly decrease the likelihood of developing a sustained allergy.

This finding from the Learning Early about Peanut Allergy (LEAP) study was presented at the annual meeting of the American Academy of Allergy, Asthma, and Immunology and simultaneously published by the New England Journal of Medicine. Investigators enrolled 640 infants aged 4-11 months between December 2006 and May 6, 2009, all of whom were given skin-prick tests at baseline to determine existing peanut allergies, and randomized them into cohorts that would either consume or avoid peanuts until they reached 60 months of age (N. Engl. J. Med. 2015 Feb. 23 [doi:10.1056/NEJMoa1414850]).

Of the 530 children who had negative skin-prick test results, the prevalence of peanut allergy after 60 months was 13.7% in the avoidance cohort and 1.9% in the consumption cohort (P < .001). Of 98 children who had positive skin-prick test results, 35% of the avoidance cohort had peanut allergy after 60 months, while only 11% of the consumption cohort had the allergy (P = .004). Several children who were initially enrolled and randomized either dropped out or were excluded because of inadequate adherence to treatment or missing data.

Outcomes were measured by placing children in a double-blind, placebo-controlled study that had them consume a cumulative total of 9.4 g of peanut protein to determine whether tolerance to peanuts had been effectively induced. All children consumed peanuts by eating peanut butter or a peanut-based snack called Bamba, which was provided for the study at a discounted rate. Children whose skin-prick tests showed wheals larger than 4 mm in diameter were excluded for presumed existing peanut allergy.

“If you enroll children after 11 months of age, you get about twice the rate of [already allergic] children, with a steady decline as [age] goes down,” corresponding author Dr. Gideon Lack, head of the department of pediatric allergy at King’s College, London, said in a press conference following presentation of the study. “But of children younger than 5 months of age, none of them were peanut allergic, so that would suggest that timing here is key: There is a narrow window of opportunity to intervene if you want to be effective.”

Investigators also noted that children who consumed peanuts had increased levels of IgG4 antibody, while those told to avoid peanuts had higher levels of IgE antibody. They also found that development of peanut allergy was generally associated with subjects who both displayed larger wheals on the skin-prick test and had a lower ratio of IgG4:IgE antibodies. Skin-prick tests that yielded wheals of 1-2 mm in diameter were considered indicative of early risk for peanut allergy.

There was no significant difference in the rates of adverse events or hospitalizations between the consumption and avoidance cohorts, but 99% of subjects in each group did experience at least one adverse event: 4,527 in the consumption cohort and 4,287 in the avoidance cohort (P = .02). Infants who consumed peanuts had more instances of upper respiratory tract infection, viral skin infection, gastroenteritis, urticaria, and conjunctivitis, but events mostly ranged from mild to moderate and their severity was not significantly different from similar incidents reported in the avoidance cohort.

Hospitalization rates also were low, with 52 children in the avoidance cohort and 50 children who consumed peanuts being admitted over the study interval, or 16.2% and 15.7%, respectively (P = .86). Rates of serious adverse effects also were not significantly different between the two cohorts, with 101 children who avoided peanuts and 89 who consumed peanuts experiencing such events (P = .41).

Adequate adherence to treatment for the children randomized into the peanut-avoidance group was defined as eating less than 0.2 g of peanut protein on any single occasion and no more than 0.5 g over the course of a single week in the first 24 months of life. For children in the consumption cohort, adequate adherence meant consuming at least 2 g of peanut protein on at least one occasion in both the first and second years of life, and at least 3 g of peanut protein weekly for at least half the number of weeks for which data was collected.

“Whether or not these benefits can be sustained, we will find out in 1 year,” Dr. Lack said, adding that “all the children who had been consuming peanut have stopped [for] 1 year, and we will see if peanut allergy persists after 1 year of cessation of consumption.” He predicted that the findings would most likely produce a mixed response, with some children relapsing while others maintain sustained tolerance.

The LEAP study was supported by grants from the National Institute of Allergy and Infectious Diseases, Food Allergy Research & Education (FARE), the Medical Research Council and Asthma UK, the UK Department of Health’s National Institute for Health Research, the National Peanut Board, and the UK Food Standards Agency. Dr. Lack disclosed financial affiliations with DBV Technologies, and coauthor Dr. Helen Brough disclosed receiving financial support from FARE and Action Medical Research, along with study materials from Stallergenes, Thermo Scientific, and Meridian Foods.

AT 2015 AAAAI ANNUAL MEETING

Key clinical point: Early introduction of peanuts into the diets of children at high risk for peanut allergy can significantly decrease the possibility of peanut allergy development.

Major finding: Of 530 infants who initially tested negative for peanut allergy, prevalence of peanut allergy after 60 months was 13.7% in the avoidance cohort and 1.9% in the consumption cohort (P < .001); of the 98 infants who initially tested positive, prevalence after 60 months was 35% in the avoidance cohort and 11% in the consumption cohort (P = .004).

Data source: Randomized cohort study of 640 children enrolled at ages 4-11 months between December 2006 and May 2009.

Disclosures: The LEAP study was funded by several health care agencies in the United States and United Kingdom. Corresponding author Dr. Gideon Lack disclosed financial affiliations with DBV Technologies, and coauthor Dr. Helen Brough disclosed receiving financial support from Food Allergy Research & Education and Action Medical Research, along with study materials from Stallergenes, Thermo Scientific, and Meridian Foods.

Look for adverse events in patients with chronic urticaria

HOUSTON – The risk of adverse events may be cumulative over the lifetime of patients taking oral corticosteroids for urticaria.

Dr. Dennis Ledford, professor of medicine at the University of South Florida, Tampa, and his colleagues examined records of 12,647 patients culled from a commercial claims database between January 2008 and December 2012 who had taken oral corticosteroids for chronic idiopathic or spontaneous urticaria during a 12-month period. More than half (55%) used oral corticosteroids (mean dosage of 367.5 mg) for an average of 16.2 days. At follow-up, patients displayed adverse events at a rate of 27 per 100 patient-years.

Adverse events mostly included skeletal conditions such as osteoporosis and bone fractures, but investigators also noted diabetes, hypertension, lipid disorders, depression, mania, and cataracts, Dr. Ledford said at the annual meeting of the American Academy of Allergy, Asthma, and Immunology.

More concerning, “there’s a cumulative risk,” Dr. Ledford said in an interview. “The more [prednisone equivalent] you take over your lifetime, the greater the chance is that you’re going to develop the side effects we’ve listed here.”

Using time-sensitive Cox regression models, Dr. Ledford and his colleagues determined that the risk for adverse events went up by 7% for each gram dose of prednisone equivalent to which patients were exposed after adjusting for age, sex, immunomodulator use, and Charlson Comorbidity Index. Only cataracts were not subject to the cumulative effects.

“The message of this fairly large analysis is that there are cumulative side effects to prednisone that may not be evident to the physician or clinician performing day-to-day care of patients,” Dr. Ledford said. “These effects are slow to develop and often present in areas of medicine that the physician treating urticaria would not take care of.”

Patients enrolled in this study had all been diagnosed with urticaria at either of two outpatient clinic visits at least 6 weeks apart in a single calendar year, or had received one diagnosis of urticaria and one of angioedema at two separate outpatient clinics at least 6 weeks apart. Patients were followed for at least 1 year after completion of the initial 12-month study period, until end of enrollment or end of study.

Dr. Ledford stressed the need to use noncorticosteroid therapies when treating chronic urticaria, such as calcineurin inhibitors – which also carry risks of hypertension and cancer – or omalizumab.

The study was funded by Genentech and Novartis Pharma AG, which market omalizumab as Xolair. Dr. Ledford disclosed that he is affiliated with Genentech, Novartis Pharma AG, and a number of other pharmaceutical companies.

AT 2015 AAAAI ANNUAL MEETING

Key clinical point: The cumulative adverse events of oral corticosteroids may not present to the physician treating the patient for urticaria.

Major finding: The risk for adverse events went up by 7% for each gram dose of prednisone equivalent.

Data source: Retrospective cohort study of 12,647 patients selected from a commercial claims database from 2008 through 2012.

Disclosures: Study funded by Genentech and Novartis Pharma AG; Dr. Ledford is affiliated with Genentech, Novartis Pharma AG, and a number of other pharmaceutical companies.

EXPECT: Omalizumab pregnancy safety data called ‘reassuring’

HOUSTON – Babies born to mothers who took omalizumab just before or during pregnancy had rates of birth defects in line with those of the general population, according to data from the EXPECT registry.

EXPECT (the Xolair Pregnancy Registry) is an ongoing, prospective observational study of pregnant women who received at least 1 dose of omalizumab within 2 months of conception or during their pregnancies.

Dr. Jennifer Namazy of the Scripps Clinic in La Jolla, Calif., presented data from women enrolled between September 2006 and November 2013 at the annual meeting of the American Academy of Allergy, Asthma, and Immunology. The registry aims to enroll 250 women.

Dr. Namazy reported on 186 of the 207 expectant mothers for whom outcomes are recorded. Asthma severity was available for 164. Most (105 or 64%) had severe asthma, while 55 (34%) had moderate asthma, and 4 (2.4%) had mild disease. Each received omalizumab during the first trimester of pregnancy.

A total of 178 births were recorded, 174 of which were live. Of the 170 singletons, 24 (14%) were born at less than 37 weeks’ gestation, and of those, 3 (13%) were considered small for gestational age (weight less than 10th percentile for gestational age).

Of 140 single-born infants who were carried to full term and also had relevant weight data, 4 (3%) had low birth weight (less than 2.5 kg) and 16 (11%) were considered small for gestational age. Overall, 27 (15%) infants had confirmed congenital anomalies and 11 (6.2%) had a major birth defect.

“This is all very reassuring data,” Dr. Namazy said in an interview. “In terms of the outcomes – major defects and conditional defects – these results are not too unusual, [compared with] the general population. But when we’re looking at infant outcomes, it’s not too different from the severe asthma population.”

She noted, however, that “it’s hard to make large-scale conclusions from this sample size. When you’re talking about congenital malformations, they’re so rare and uncommon that you need a very large population in order to reach any kind of conclusion. But looking at these [data], we’re reassured because there isn’t any consistent information that’s disconcerting; it’s all over the spectrum, and so there’s no significant increase in the rate of birth defects that we’re seeing.”

Omalizumab is marketed by Genentech as Xolair. The EXPECT study is funded by Genentech and Novartis Pharma AG. Dr. Namazy is affiliated with Genentech.

HOUSTON – Babies born to mothers who took omalizumab just before or during pregnancy exhibited birth defects that are in line with the general population, according to data from the EXPECT registry.

EXPECT (the Xolair Pregnancy Registry) is an ongoing, prospective observational study of pregnant women who received at least 1 dose of omalizumab within 2 months of conception or during their pregnancies.

Dr. Jennifer Namazy of the Scripps Clinic in La Jolla, Calif., presented data from women enrolled between September 2006 and November 2013 at the annual meeting of the American Academy of Allergy, Asthma, and Immunology. The registry aims to enroll 250 women.

Dr. Namazy reported on 186 of the 207 expectant mothers for whom outcomes are recorded. Asthma severity was available for 164. Most (105 or 64%) had severe asthma, while 55 (34%) had moderate asthma, and 4 (2.4%) had mild disease. Each received omalizumab during the first trimester of pregnancy.

A total of 178 births were recorded, 174 of which were live. Of the 170 singletons, 24 (14%) were born at less than 37 weeks’ gestation and of those 3 (13%) were considered small for gestational age (weight less than 10th percentile for gestational age).

Of 140 single-born infants who were carried to full term and also had relevant weight data, 4 (3%) had low birth weight (less than 2.5 kg) and 16 (11%) were considered small for gestational age. Overall, 27 (15%) infants had confirmed congenital anomalies and 11 (6.2%) had a major birth defect.

“This is all very reassuring data,” Dr. Namazy said in an interview. “In terms of the outcomes – major defects and conditional defects – these results are not too unusual, [compared with] the general population. But when we’re looking at infant outcomes, it’s not too different from the severe asthma population.”

She noted, however, that “it’s hard to make large-scale conclusions from this sample size. When you’re talking about congenital malformations, they’re so rare and uncommon that you need a very large population in order to reach any kind of conclusion. But looking at these [data], we’re reassured because there isn’t any consistent information that’s disconcerting; it’s all over the spectrum, and so there’s no significant increase in the rate of birth rate defects that we’re seeing.”

Omalizumab is marketed by Genentech as Xolair. The EXPECT study is funded by Genentech and Novartis Pharma AG. Dr. Namazy is affiliated with Genentech.

HOUSTON – Babies born to mothers who took omalizumab just before or during pregnancy exhibited birth defects that are in line with the general population, according to data from the EXPECT registry.

EXPECT (the Xolair Pregnancy Registry) is an ongoing, prospective observational study of pregnant women who received at least 1 dose of omalizumab within 2 months of conception or during their pregnancies.

Dr. Jennifer Namazy of the Scripps Clinic in La Jolla, Calif., presented data from women enrolled between September 2006 and November 2013 at the annual meeting of the American Academy of Allergy, Asthma, and Immunology. The registry aims to enroll 250 women.

Dr. Namazy reported on 186 of the 207 expectant mothers for whom outcomes are recorded. Asthma severity was available for 164. Most (105 or 64%) had severe asthma, while 55 (34%) had moderate asthma, and 4 (2.4%) had mild disease. Each received omalizumab during the first trimester of pregnancy.

A total of 178 births were recorded, 174 of which were live. Of the 170 singletons, 24 (14%) were born at less than 37 weeks’ gestation and of those 3 (13%) were considered small for gestational age (weight less than 10th percentile for gestational age).

Of 140 single-born infants who were carried to full term and also had relevant weight data, 4 (3%) had low birth weight (less than 2.5 kg) and 16 (11%) were considered small for gestational age. Overall, 27 (15%) infants had confirmed congenital anomalies and 11 (6.2%) had a major birth defect.
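The percentages above use different denominators, which is easy to miss on a quick read. The short Python sketch below recomputes each one from the reported counts. The labels and the helper name `pct` are illustrative, and the denominator of 178 for the congenital anomaly figures is an assumption: the article does not state it, but 178 total births reproduces the reported 15% and 6.2%.

```python
def pct(count, total):
    """Return count/total as a percentage rounded to one decimal place."""
    return round(100 * count / total, 1)

# (count, denominator) pairs taken from the figures reported in the article.
reported = {
    "preterm, among singletons": (24, 170),
    "small for gestational age, among preterm singletons": (3, 24),
    "low birth weight, among full-term singletons with weight data": (4, 140),
    "small for gestational age, among full-term singletons with weight data": (16, 140),
    # Assumption: the denominator 178 (all recorded births) is inferred, not stated.
    "confirmed congenital anomalies, among all births": (27, 178),
    "major birth defect, among all births": (11, 178),
}

for label, (count, total) in reported.items():
    print(f"{label}: {count}/{total} = {pct(count, total)}%")
```

Run as written, this gives 14.1%, 12.5%, 2.9%, 11.4%, 15.2%, and 6.2%, which agree with the rounded figures in the text.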

“This is all very reassuring data,” Dr. Namazy said in an interview. “In terms of the outcomes – major defects and conditional defects – these results are not too unusual, [compared with] the general population. But when we’re looking at infant outcomes, it’s not too different from the severe asthma population.”

She noted, however, that “it’s hard to make large-scale conclusions from this sample size. When you’re talking about congenital malformations, they’re so rare and uncommon that you need a very large population in order to reach any kind of conclusion. But looking at these [data], we’re reassured because there isn’t any consistent information that’s disconcerting; it’s all over the spectrum, and so there’s no significant increase in the rate of birth rate defects that we’re seeing.”

Omalizumab is marketed by Genentech as Xolair. The EXPECT study is funded by Genentech and Novartis Pharma AG. Dr. Namazy is affiliated with Genentech.

AT 2015 AAAAI ANNUAL MEETING

Key clinical point: Omalizumab does not appear to increase the risk of birth defects or adverse outcomes in pregnant women and their babies.

Major finding: Of 140 singleton infants carried to full term and for whom relevant weight data were available, 4 (2.9%) had low birth weight and 16 (11.4%) were considered small for gestational age. Overall, 27 infants (15.2%) had congenital anomalies and 11 (6.2%) had a major birth defect.

Data source: The EXPECT registry, an ongoing observational study of 207 prospectively enrolled women.

Disclosures: EXPECT is funded by Genentech and Novartis Pharma AG. Dr. Namazy is affiliated with Genentech.

ECMO alone before lung transplant linked to good survival rates

SAN DIEGO – Extracorporeal membrane oxygenation with spontaneous breathing is the optimal bridging strategy for patients who have rapidly advancing pulmonary disease and are awaiting lung transplantation, based on data from over 18,000 patients who received lung transplants.

In the study, patients on extracorporeal membrane oxygenation (ECMO) alone had outcomes that were comparable to those of patients requiring no invasive support prior to transplantation, Dr. Matthew Schechter of Duke University in Durham, N.C., reported at the annual meeting of the Society of Thoracic Surgeons.

Dr. Schechter and his colleagues analyzed the United Network for Organ Sharing database for all adult patients who underwent lung transplantations between January 2000 and September 2013.

The 18,392 patients selected for study inclusion were divided into cohorts based on the type of preoperative support they received: ECMO with mechanical ventilation; ECMO only; ventilation only; and no support of any kind. Nearly 95% of the patients received no invasive preoperative support. Over 4% received mechanical ventilation alone, less than 1% received ECMO with mechanical ventilation, and about 0.5% received ECMO only.

By using Kaplan-Meier survival analyses with log-rank testing, Dr. Schechter and his associates were able to compare survival rates for each type of preoperative support. Cox regression models were used to ascertain whether any particular type of preoperative support could definitively be associated with mortality.
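For readers unfamiliar with the method, the sketch below shows how a Kaplan-Meier survival estimate is computed. It is a minimal illustration in plain Python, not the investigators' analysis: the function name `kaplan_meier` and the five-patient data set are hypothetical, and the log-rank test and Cox regression are not shown.

```python
def kaplan_meier(times, events):
    """Return [(time, survival_probability)] at each distinct event time.

    times  -- follow-up time for each patient
    events -- 1 if the patient died at that time, 0 if censored
    """
    data = sorted(zip(times, events))
    at_risk = len(data)
    survival = 1.0
    curve = []
    i = 0
    while i < len(data):
        t = data[i][0]
        deaths = 0
        leaving = 0
        # Group all patients who share the same follow-up time.
        while i < len(data) and data[i][0] == t:
            deaths += data[i][1]
            leaving += 1
            i += 1
        if deaths:
            # Multiply by the fraction of at-risk patients who survive time t.
            survival *= 1 - deaths / at_risk
            curve.append((t, survival))
        # Deaths and censored patients both leave the risk set after time t.
        at_risk -= leaving
    return curve


# Hypothetical toy data: follow-up in years; 1 = died, 0 = censored.
toy_times = [1, 2, 2, 3, 4]
toy_events = [1, 1, 0, 1, 0]
for t, s in kaplan_meier(toy_times, toy_events):
    print(f"year {t}: estimated survival {s:.2f}")
```

On the toy data, the estimate steps down to 0.80, 0.60, and 0.30 at years 1, 2, and 3. Censored patients count as at risk up to their last follow-up time but never trigger a drop in the curve.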

At 3 years post transplantation, the survival rates of patients on ECMO alone and of those who received no preoperative support of any kind were comparable at 66% and 65%, respectively. Survival rates at 3 years after transplant were 38% in patients who received ECMO and mechanical ventilation and 52% in patients who received mechanical ventilation alone. Survival in the ECMO-only and no-support groups was significantly better than in the ECMO with mechanical ventilation and mechanical ventilation–alone cohorts (P < .0001).

The findings held up after a multivariate analysis; the hazard ratio was 1.96 (95% confidence interval, 1.36-2.84) for ECMO with mechanical ventilation and 1.52 (95% CI, 1.31-1.78) for mechanical ventilation only (P < .0001 for both).

ECMO alone was not associated with any significant change in survival rate (HR = 1.07; 95% CI, 0.57-2.01; P = .843).
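One common way to read these hazard ratios is to check whether the 95% confidence interval includes 1, the value that indicates no difference in risk. The Python sketch below applies that check to the three intervals reported above. The helper name `ci_excludes_one` is illustrative, and the check is a rule of thumb that broadly agrees with the reported P values rather than a replacement for them.

```python
def ci_excludes_one(lower, upper):
    """True if a confidence interval for a hazard ratio lies entirely above or below 1."""
    return lower > 1 or upper < 1

# (hazard ratio, lower 95% bound, upper 95% bound) as reported in the article.
reported_hrs = {
    "ECMO with mechanical ventilation": (1.96, 1.36, 2.84),
    "mechanical ventilation only": (1.52, 1.31, 1.78),
    "ECMO alone": (1.07, 0.57, 2.01),
}

for group, (hr, lower, upper) in reported_hrs.items():
    verdict = "excludes 1" if ci_excludes_one(lower, upper) else "includes 1"
    print(f"{group}: HR {hr} (95% CI, {lower}-{upper}) {verdict}")
```

The intervals for the two ventilation groups exclude 1, consistent with their reported significance, while the interval for ECMO alone spans 1, consistent with P = .843.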

Patients who received just ECMO had the shortest lengths of stay after lung transplant. They also had the lowest rate of acute rejection prior to discharge, although not to an extent that was statistically significant. The incidence of new-onset dialysis was highest in patients who received ECMO with mechanical ventilation.

“ECMO alone may provide a survival advantage over other bridging strategies,” Dr. Schechter concluded. “One advantage of using ECMO only is an avoidance of the risks that come with mechanical ventilation, [which] include generalized muscle atrophy, maladapted muscle fiber remodeling in the diaphragm – which leads to a decrease in the overall durability of this muscle – as well as the induction of the pulmonary and systemic inflammatory risk responses, [all of which] have been shown to affect outcomes following lung transplantation.”

Dr. Schechter explained that patients receiving ECMO without mechanical ventilation can actively rehabilitate themselves post transplantation since nonintubated ECMO patients can participate in physical therapy.

Further study is needed to find an optimal way of assessing patients and determining exactly which ones would be best suited for ECMO with spontaneous breathing support, he said.

Dr. Schechter had no relevant financial disclosures.

FROM THE STS ANNUAL MEETING

Key clinical point: ECMO with spontaneous breathing should be considered the preferred bridging strategy for patients who have rapidly advancing pulmonary disease and are awaiting lung transplantations.

Major finding: At 3 years post transplantation, the survival rates of patients on ECMO alone and of those who received no preoperative support of any kind were comparable at 66% and 65%, respectively.

Data source: Retrospective analysis of 18,392 adult patients in the United Network for Organ Sharing database.

Disclosures: Dr. Schechter had no relevant financial disclosures.

Human liver cells can induce antiviral reaction against hepatitis C

Immunity against the hepatitis C virus can be induced by cultured primary human liver sinusoidal endothelial cells, according to a study published in the February issue of Gastroenterology (doi:10.1053/j.gastro.2014.10.040).

“We found HLSECs [primary human LSECs] express many of the receptors implicated in HCV [hepatitis C virus] attachment and entry and HLSEC-to-hepatocyte contact was dispensable for uptake,” wrote lead author Dr. Silvia M. Giugliano of the University of Colorado, Denver, and her associates.

In the in vitro study, investigators exposed HLSECs and immortalized liver endothelial cells (TMNK-1) to several variants of HCV: full-length transmitted/founder virus, sucrose-purified Japanese fulminant hepatitis–1 (JFH-1), a virus encoding a luciferase reporter, and the HCV-specific pathogen-associated molecular pattern molecules (PAMP, substrate for RIG-I). Cells were analyzed by using polymerase chain reaction (PCR), immunofluorescence, and immunohistochemical assays.

Results showed that HLSECs, regardless of contact between cells, were able to internalize HCV when exposed to it and to replicate, though not translate, HCV RNA. The HCV RNA “induced consistent and broad transcription of multiple interferons (IFNs) [via] pattern recognition receptors (TLR7 and retinoic acid-inducible gene 1).” Furthermore, supernatants from primary HLSECs transfected with HCV-specific PAMP molecules produced larger inductions of IFNs and IFN-stimulated genes in HLSECs.

“Conditioned media from IFN-stimulated HLSECs induced expression of antiviral genes by uninfected primary human hepatocytes,” wrote Dr. Giugliano and her coauthors. “Exosomes, derived from HLSECs following stimulation with either type I or type III IFNs, controlled HCV replication in a dose-dependent manner.”

However, the investigators say that more study is required to understand why HCV is able to persist if the means to combat the virus are so apparent in the immune system.

“These results raise a number of intriguing questions, for example, how the use of exogenous IFN to treat viral hepatitis could be expected to induce additional, previously unrecognized antiviral mechanisms involving HLSECs,” says the study. “Further work is warranted to understand why despite these innate immune responses, HCV is able to establish persistence and fail eradication with IFN-based antiviral therapy.”

The study was supported by grants R21-AI103361 and U19 AI 1066328; Dr. Rosen received a Merit Review grant and Dr. Shaw received grant R21-AI 106000. The authors reported no financial conflicts of interest.

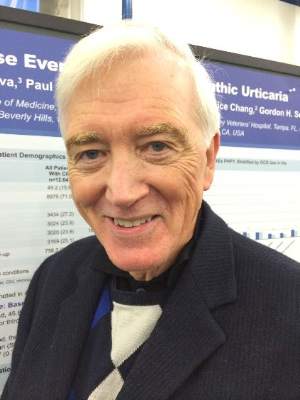

Secondhand smoke remains problematic in the United States

Exposure to and inhalation of secondhand smoke continue to be a significant problem throughout the United States, with cases of secondhand smoking–related illness and morbidity higher in juvenile and African American populations, among others, Dr. Thomas Frieden, director of the Centers for Disease Control and Prevention, said at a press briefing.

“Secondhand smoke is a Class A carcinogen [and] the Surgeon General has concluded that there is no risk-free level of exposure to secondhand smoke,” Dr. Frieden told reporters ahead of the release of this month’s CDC Vital Signs report (MMWR 2015 Feb.3;64:1-7). “People may not fully appreciate that secondhand smoke is not merely a nuisance – it causes disease and death.”

He explained that the Vital Signs report will highlight data from the National Health and Nutrition Examination Survey (NHANES), which was conducted between 1999 and 2012 to examine the health and nutrition of both children and adults across the United States. The study’s findings indicate that 40% of children, or two out of every five, are still exposed to secondhand smoke, and that even though cigarette smoking has decreased while smoke-free laws have increased in recent years, one in four nonsmokers and 58 million people overall are being exposed to secondhand smoke.

Furthermore, certain demographic groups are more likely to be exposed to secondhand smoke and, consequently, to face related health problems. Exposure rates were 40.6% among children aged 3-11 years, nearly 70% among African American children in the same age range, and 46.8% among African Americans overall. Rates among Mexican Americans and non-Hispanic whites were 23.9% and 21.8%, respectively.

Americans living below the poverty line have a 43% secondhand smoke exposure rate, and 37% of Americans in rental housing are exposed. In fact, the home is the most significant source of exposure to secondhand smoke for children, although Dr. Frieden credited Americans with doubling the country’s number of smoke-free households over the last 20 years.

“Comprehensive smoke-free laws that prohibit smoking in all indoor areas of bars, restaurants, and workplaces are an important start,” stated Dr. Frieden. “As of today, 26 states and the District of Columbia, plus about 700 communities across the country, have adopted smoke-free laws that cover these three locations [and] we’ve seen tremendous increases in the number of college and university campuses that have gone smoke free.”

According to the CDC, exposure to secondhand smoke has been proven to cause afflictions such as ear infections, respiratory infections, asthma attacks, and sudden infant death syndrome in infants and children, plus stroke, coronary heart disease, heart attack, and lung cancer in nonsmoking adults. Secondhand smoke exposure causes 400 deaths in infants each year and more than 41,000 fatalities among nonsmoking adults per year. CDC figures also state that secondhand smoke exposure costs the United States $5.6 billion annually in lost productivity.

FROM A CDC TELEBRIEFING

Human liver cells can induce antiviral reaction against hepatitis C

Immunity against the hepatitis C virus can be induced by cultured primary human liver sinusoidal endothelial cells, according to a study published in the February issue of Gastroenterology (doi:10.1053/j.gastro.2014.10.040).

“We found HLSECs [primary human LSECs] express many of the receptors implicated in HCV [hepatitis C virus] attachment and entry and HLSEC-to-hepatocyte contact was dispensable for uptake,” wrote lead author Dr. Silvia M. Giugliano of the University of Colorado, Denver, and her associates.

In a cohort study, investigators exposed HLSECs and immortalized liver endothelial cells (TMNK-1) to several variants of HCV: full-length transmitted/founder virus, sucrose-purified Japanese fulminant hepatitis–1 (JFH-1), a virus encoding a luciferase reporter, and the HCV-specific pathogen-associated molecular pattern molecules (PAMP, substrate for RIG-I). Cells were analyzed by using polymerase chain reaction (PCR), immunofluorescence, and immunohistochemical assays.

Results showed that HLSECs, regardless of contact between cells, were able to internalize HCV when exposed to it and replicate, though not translate, HCV RNA. The HCV RNA “induced consistent and broad transcription of multiple interferons (IFNs) [via] pattern recognition receptors (TLR7 and retinoic acid-inducible gene 1).” Furthermore, supernatants from primary HLSECs transfected with HCV-specific PAMP molecules produced larger inductions of IFNs and IFN-stimulated genes in HLSECs.

“Conditioned media from IFN-stimulated HLSECs induced expression of antiviral genes by uninfected primary human hepatocytes,” wrote Dr. Giugliano and her coauthors. “Exosomes, derived from HLSECs following stimulation with either type I or type III IFNs, controlled HCV replication in a dose-dependent manner.”

However, the investigators say that more study is required to understand why HCV is able to persist if the means to combat the virus are so apparent in the immune system.

“These results raise a number of intriguing questions, for example, how the use of exogenous IFN to treat viral hepatitis could be expected to induce additional, previously unrecognized antiviral mechanisms involving HLSECs,” says the study. “Further work is warranted to understand why despite these innate immune responses, HCV is able to establish persistence and fail eradication with IFN-based antiviral therapy.”

The study was supported by grants R21-AI103361 and U19 AI 1066328; Dr. Rosen received a Merit Review grant and Dr. Shaw received grant R21-AI 106000. The authors reported no financial conflicts of interest.

Liver sinusoidal endothelial cells (LSECs) are a large component of the liver immune system but are not very well characterized for their role in diseases like chronic hepatitis C virus (HCV) infection. Dr. Giugliano and her associates tested the antiviral activity of primary human LSECs and an immortalized LSEC cell line (TMNK-1), following exposure to several viral variants. These included naturally occurring founder genotype 1a virus, a JFH-1 infectious clone (cloned originally from a patient with fulminant hepatitis), a modified cell culture of adaptive JFH-1 virus expressing luciferase reporter, and HCV-specific pathogen-associated molecular pattern poly UC RNA that is a substrate for RIG-I. They found LSECs were able to acquire HCV in an LSEC-to-hepatocyte–contact independent manner but that these cells were not permissive for productive infection (replication). Importantly, viral RNA through HCV uptake induced robust transcription of type I and III interferon (IFN) by LSECs via TLR7 and RIG-I pathways. LSEC-derived IFN had antiviral effects on HCV-infected human hepatoma cell line, Huh7.5 cells in vitro, and this effect was mediated through the production of exosomes. The authors concluded that LSECs likely have an important antiviral role in HCV infection through bystander induction of an antiviral state. This study highlights the idea that these cells can function similarly to other innate/phagocytic cells like monocytes/macrophages and furthers the idea that innate activation of LSECs may have protective effects on the antiviral state of hepatocytes. Mechanisms of antiviral immunity by LSECs, whether it occurs through exosomes or IFN, will have a significant impact in the field as the answers will have relevance to numerous facets of liver immunology, including gene transfer, organ transplant, and response to other hepatotropic infections.

Dr. Arash Grakoui is an associate professor jointly appointed in the departments of medicine, division of infectious diseases and Yerkes National Primate Research Center, department of microbiology and immunology at Emory University, Atlanta. He has no conflicts of interest.

FROM GASTROENTEROLOGY

Key clinical point: Cultured primary human liver sinusoidal endothelial cells (HLSECs) induce self-amplifying interferon-mediated responses and release of exosomes with antiviral activity, thus allowing them to mediate immunity against the hepatitis C virus (HCV).

Major finding: HLSECs and HCV-specific PAMP molecules induced IFNs and replicated HCV RNA when exposed to various forms of HCV.

Data source: Cohort study