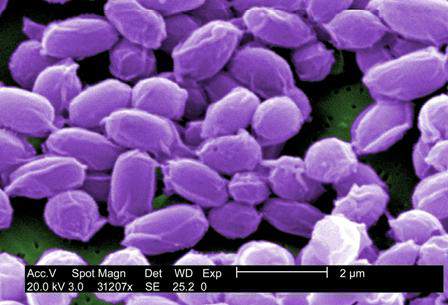

FDA approves Anthrasil to treat inhalational anthrax

The Food and Drug Administration has approved Anthrasil, Anthrax Immune Globulin Intravenous (Human), for treatment of inhalational anthrax when used with appropriate antibacterial drugs.

Inhalational anthrax is caused by breathing in Bacillus anthracis spores, which can occur after exposure to infected animals or contaminated animal products, or as a result of an intentional release of spores. In a statement, Dr. Karen Midthun – director of the FDA’s Center for Biologics Evaluation and Research – explained that Anthrasil “will be stored in U.S. Strategic National Stockpile to facilitate its availability in response to an anthrax emergency.”

Anthrasil was purchased by the U.S. Department of Health & Human Services’ Biomedical Advanced Research and Development Authority (BARDA) in 2011, but because it had not yet been approved, its use would have required an emergency use authorization from the FDA.

The efficacy of Anthrasil was studied in animals because it was not feasible or ethical to conduct adequately controlled efficacy studies in humans, the FDA said. Monkeys and rabbits were exposed to Bacillus anthracis spores, and subsequently given either Anthrasil or a placebo. The survival rate for monkeys given Anthrasil was between 36% and 70%, with a trend toward increased survival at higher doses of Anthrasil. None of the monkeys given placebo survived. Rabbits had a 26% survival rate when given the drug, compared to 2% of those given placebo. A separate study exposed rabbits to Bacillus anthracis and treated them with either antibiotics or a combination of antibiotics and Anthrasil; survival rates were 71% for those treated with the combination and 25% for those treated with antibiotics only.

Safety was tested in 74 healthy human volunteers; the most commonly reported side effects were headache, back pain, nausea, and pain and swelling at the infusion site.

Anthrasil is manufactured by Cangene Corporation, based in Winnipeg, Canada, which developed the drug in collaboration with BARDA.

Self-reported penicillin allergy may be undiagnosed chronic urticaria

HOUSTON – The higher prevalence of chronic urticaria in patients with self-reported penicillin allergy suggests that these patients may be confusing one condition with the other, according to a late-breaking study presented at the annual meeting of the American Academy of Allergy, Asthma, and Immunology.

A retrospective chart review of 1,419 patients with self-reported penicillin allergy revealed that 175 patients (12.3%) had a diagnosis of chronic urticaria, a significantly higher percentage than the typical prevalence range of 0.5%-5% that has been reported in the general population.
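The arithmetic behind that comparison can be checked with a short sketch. The function name and variable names below are illustrative only and do not come from the study; the counts and the 0.5%-5% range are the figures reported above.

```python
def prevalence_percent(cases: int, total: int) -> float:
    """Return cases as a percentage of total."""
    if total <= 0:
        raise ValueError("total must be positive")
    return 100.0 * cases / total


# Figures reported in the chart review.
chronic_urticaria_cases = 175
penicillin_allergy_patients = 1419

# General-population prevalence range cited in the article (percent).
general_low, general_high = 0.5, 5.0

observed = prevalence_percent(chronic_urticaria_cases, penicillin_allergy_patients)
print(f"Observed prevalence: {observed:.1f}%")
print(f"Ratio to upper bound of general range: {observed / general_high:.1f}x")
```

Under these figures, the observed prevalence works out to about 12.3%, roughly two and a half times the upper end of the general-population range.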

“Patients are potentially mistakenly attributing symptoms of chronic urticaria to a penicillin allergy,” explained study author Dr. Susanna G. Silverman of the University of Pennsylvania, Philadelphia.

The study included patients at the University of Pennsylvania’s allergy and immunology clinic who self-reported penicillin allergy from June 2007 to August 2014. Patients were identified as having penicillin allergy if penicillin, amoxicillin, amoxicillin-clavulanate, or piperacillin-tazobactam was present on the allergy list of their medical records.

Dr. Silverman then identified all patients from that group who had also received a diagnosis of urticaria, a total of 343 patients, and narrowed the list to those who were diagnosed with chronic urticaria or the presence of urticaria for at least 6 weeks.

Of the 175 patients who had chronic urticaria, all were between the ages of 20 years and 92 years; 84% were female, and 53% were white.

“We think it’s important for physicians to think about this and to ask patients about symptoms of chronic urticaria when they report penicillin allergy,” Dr. Silverman noted, “to better determine what is truly penicillin allergy versus simply chronic urticaria symptoms.”

Dr. Silverman did not report any financial disclosures.

AT 2015 AAAAI ANNUAL MEETING

Key clinical point: Patients self-reporting penicillin allergy may have undiagnosed chronic urticaria.

Major finding: 12.3% of patients with self-reported penicillin allergy had chronic urticaria diagnoses, a significantly higher rate than the 0.5%-5% range reported in the general population.

Data source: Retrospective chart review of 1,419 patients at the University of Pennsylvania’s allergy and immunology clinic.

Disclosures: Dr. Silverman did not report any financial disclosures.

CDC, AMA Launch Nationwide Prediabetes Awareness Initiative

The Centers for Disease Control and Prevention has joined the American Medical Association to create a new program aimed at reducing the number of Americans diagnosed with type 2 diabetes, one of the most common chronic medical conditions in the United States.

The initiative, entitled “Prevent Diabetes STAT: Screen, Test, Act – Today,” will focus on individuals who have prediabetes, which is characterized by having blood glucose levels that are higher than normal but not high enough to be considered diabetic. Unless they are able to lose weight through diet and exercise, 15%-30% of prediabetic individuals are diagnosed with type 2 diabetes within 5 years of becoming prediabetic.

“This isn’t just a concern: It’s a crisis,” said AMA president Dr. Robert M. Wah during a telebriefing on Thursday. “It’s not only taking a physical and emotional toll on people living with prediabetes, but it also takes an economic toll on our country. More than $245 billion in health care spending and reduced productivity is directly linked to diabetes.”

“The truth is, our health care system simply cannot sustain the growing number of people developing diabetes,” said Ann Albright, Ph.D., director of the CDC’s Division of Diabetes Translation, during the same telebriefing. “Research shows that screening, testing, and referring people who are at risk for type 2 diabetes is critical, [and] that when people know they have prediabetes, they are more likely to take action.”

To that end, the AMA and CDC have created an online “toolkit” that allows health care providers and patients to understand the risks and signs of prediabetes. The toolkit will offer resources on how to prevent high blood glucose levels from progressing to type 2 diabetes. Additionally, both organizations have created an online screening tool that allows visitors to determine their risk for prediabetes.

Health care providers are another key component of Prevent Diabetes STAT, said Dr. Wah and Dr. Albright, who urged physicians and health care teams to actively screen patients using either the CDC’s Prediabetes Screening Test or the American Diabetes Association’s Type 2 Diabetes Risk Test, test for prediabetes using one of three recommended blood tests, and refer prediabetic patients to a CDC-recognized prevention program.

Prevent Diabetes STAT is intended to be a multiyear program. It represents an expansion of previous efforts undertaken individually by the CDC and AMA to combat the growing diabetes epidemic. In 2012, the CDC established the National Diabetes Prevention Program, using data from the National Institutes of Health, to build a framework of more than 500 programs designed to help people with diabetes institute meaningful lifestyle changes. In 2013, the AMA launched a similar initiative known as Improving Health Outcomes, which included partnering with YMCAs around the country to refer at-risk youths to diabetes prevention programs recognized by the CDC.

“We have seen significant progress, but we’ve really got to be sure that we get this [diabetes initiative] to a larger number of people,” said Dr. Albright. “We need to allow this to be scaled nationally [by] taking the successes that we’ve had and the lessons that we’ve learned, [but] in order to do that, people have to receive a diagnosis of prediabetes so that they can get connected to these services.”

According to the AMA, there are currently more than 86 million individuals in the United States living with prediabetes, yet roughly 90% of those people don’t even know they have it. The CDC estimates that the number of individuals diagnosed with diabetes in the United States more than tripled from 1980 to 2011, going from 5.6 million to nearly 21 million in just over 30 years. In 2011, 19.6 million American adults were diagnosed with diabetes; right now, according to the AMA and CDC, more than 33% of American adults are living with prediabetes.

Low-volume centers using ECMO have poorest survival rates

SAN DIEGO – Lung transplantation centers that are considered low volume tend to have lower rates of survival than do those of their medium- and high-volume counterparts when patients are bridged via extracorporeal membrane oxygenation (ECMO), according to researchers.

Even so, there is a point at which survival outcomes begin to improve for low-volume centers, they added.

“Increasingly, [ECMO] is used as a bridge to lung transplantation; indeed, the use of ECMO has tripled over the past 15 years and survival has increased by the same magnitude,” Dr. Jeremiah A. Hayanga said at the annual meeting of the Society of Thoracic Surgeons.

“An entire body of literature has linked high-volume [centers] to improved outcomes in the context of complex surgical procedures. Lung transplantation [LTx] falls within the same domain, and has been considered subject to the same inverse volume-outcome paradigm,” said Dr. Hayanga of Michigan State University, Grand Rapids.

He and his coinvestigators conducted a retrospective analysis of 16,603 LTx recipients in the International Society for Heart and Lung Transplantation (ISHLT) registry who underwent transplantation between 2005 and 2010, comparing those bridged via ECMO with those who were not. Centers were stratified as low, medium, or high volume based on the number of LTx procedures performed over the study interval: fewer than 25 was defined as low volume, 25-50 as medium, and more than 50 as high.
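The volume cutoffs described above can be written out as a small sketch. The function name is hypothetical and is not taken from the researchers’ analysis; only the thresholds (fewer than 25, 25-50, more than 50) come from the study description.

```python
def volume_category(procedures: int) -> str:
    """Classify a center by LTx procedures performed over the study interval.

    Thresholds follow the article: fewer than 25 is low,
    25-50 is medium, and more than 50 is high.
    """
    if procedures < 0:
        raise ValueError("procedure count cannot be negative")
    if procedures < 25:
        return "low"
    if procedures <= 50:
        return "medium"
    return "high"


# Illustrative counts only, not data from the registry.
for count in (19, 24, 25, 50, 51):
    print(count, volume_category(count))
```

Note that a center with 19 procedures still falls in the low-volume category under these cutoffs.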

Overall, 85 of the 16,603 transplant recipients in the study population were bridged via ECMO: 20 (23.5%) of them in low-volume centers, 30 (35.3%) in medium-volume centers, and 35 (41.2%) in high-volume centers. The researchers used Cox proportional hazard modeling to identify predictors of both 1- and 5-year survival rates, which were found to be significantly lower in low-volume centers – 13.61% at 5 years post LTx.

Looking at just the high-volume and low-volume centers, the researchers noted “significant differences” in both 1-year and 5-year survival rates when ECMO was used for bridging. One-year survival probability was roughly 40% in low-volume centers and roughly 70% in high-volume centers, while 5-year survival probability was well under 25% for recipients from low-volume centers and around 50% for those from high-volume centers (P = .0006). No significant differences existed for non-ECMO patients, regardless of center volume.

“No differences existed in survival in medium- and high-volume centers,” said Dr. Hayanga. “Transplanting without ECMO as a bridge showed fewer survival differences for both 1-year and 5-year survival. However, when ECMO was used as a bridge, the low-volume center [survival rates] were dramatically lower at both 1 year and 5 years.”

When Dr. Hayanga and his colleagues examined procedural volume as a continuous variable, however, they identified a single inflection point, 19 procedures, above which survival outcomes steadily improved. Centers that performed at least 19 LTx procedures between 2005 and 2010 experienced an uptick in survival rates, even though centers that performed 19-25 procedures were still considered low volume, the researchers noted.

“The corresponding C-statistic, however, is just under 60%,” cautioned Dr. Hayanga. “The C-statistic is a measure of the explanatory power of a variable – in this case, [center] volume – in accounting for the variability in outcome, or survival in this case. To put that number into context, a C-statistic of 50% means ‘no explanatory power’ whatsoever.”
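To illustrate why 50% is the “no explanatory power” baseline, the following is a minimal sketch of a concordance calculation for a simple binary outcome. It is a simplified stand-in, not the researchers’ method: survival analyses typically use a censoring-aware version of the statistic, and the function name and data below are hypothetical.

```python
from itertools import product


def c_statistic(scores, outcomes):
    """Fraction of (event, non-event) pairs in which the event case
    has the higher score; tied scores count as half."""
    events = [s for s, y in zip(scores, outcomes) if y == 1]
    non_events = [s for s, y in zip(scores, outcomes) if y == 0]
    if not events or not non_events:
        raise ValueError("need at least one case of each outcome")
    concordant = 0.0
    for e, n in product(events, non_events):
        if e > n:
            concordant += 1.0
        elif e == n:
            concordant += 0.5
    return concordant / (len(events) * len(non_events))


# Hypothetical data: a score that is identical for every case
# cannot separate outcomes, so every pair is a tie.
uninformative = c_statistic([1, 1, 1, 1], [1, 0, 1, 0])

# Hypothetical data: a score that ranks every event above every non-event.
perfect = c_statistic([30, 40, 10, 5], [1, 1, 0, 0])

print(uninformative, perfect)
```

In this sketch a value of 0.5 corresponds to a predictor that does no better than chance at ranking pairs, and 1.0 to one that ranks every pair correctly, so a value just under 0.6 sits close to the chance end of that scale.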

Dr. Hayanga explained that he and his coauthors compared transplant recipient and donor characteristics using analysis of variance (ANOVA) and chi-square tests, estimated cumulative survival using Kaplan-Meier curves, and assessed significance using log-rank tests.

Dr. Hayanga reported no financial conflicts of interest.

SAN DIEGO – Lung transplantation centers that are considered low volume tend to have lower rates of survival than do those of their medium- and high-volume counterparts when patients are bridged via extracorporeal membrane oxygenation (ECMO), according to researchers.

Even so, there is a point at which survival outcomes begin to improve for low-volume centers, they added.

“Increasingly, [ECMO] is used as a bridge to lung transplantation; indeed, the use of ECMO has tripled over the past 15 years and survival has increased by the same magnitude,” Dr. Jeremiah A. Hayanga said at the annual meeting of the Society of Thoracic Surgeons.

“An entire body of literature has linked high-volume [centers] to improved outcomes in the context of complex surgical procedures. Lung transplantation [LTx] falls within the same domain, and has been considered subject to the same inverse volume-outcome paradigm,” said Dr. Hayanga of Michigan State University, Grand Rapids.

He and his coinvestigators conducted a retrospective analysis of 16,603 LTx recipients in the International Registry for Heart and Lung Transplantation (ISHLT) who underwent ECMO as their bridging strategy between 2005 and 2010. Centers were stratified into categories of low, medium, and high based on the volume of LTx procedures performed over the study interval: Low was defined as fewer than 25, medium as 25-50, and more than 50 as high volume.

Overall, 85 of the 16,603 transplant recipients in the study population were bridged via ECMO: 20 (23.5%) of them in low-volume centers, 30 (35.3%) in medium-volume centers, and 35 (41.2%) in high-volume centers. The researchers used Cox proportional hazard modeling to identify predictors of both 1- and 5-year survival rates, which were found to be significantly lower in low-volume centers – 13.61% at 5 years post LTx.

Looking at just the high-volume and low-volume centers, the researchers noted “significant differences” in both 1-year and 5-year survival rates when ECMO was used for bridging. One-year survival probability was roughly 40% in low-volume centers and roughly 70% in high-volume centers, while 5-year survival probability was well under 25% for recipients from low-volume centers and around 50% for those from high-volume centers (P = .0006). No significant differences existed for non-ECMO patients, regardless of center volume.

“No differences existed in survival in medium- and high-volume centers,” said Dr. Hayanga. “Transplanting without ECMO as a bridge showed fewer survival differences for both 1-year and 5-year survival. However, when ECMO was used as a bridge, the low-volume center [survival rates] were dramatically lower at both 1 year and 5 years.”

When Dr. Hayanga and his colleagues examined procedural volume as a continuous variable, however, a single inflection point was determined as the point at which survival outcomes steadily improve – 19 procedures. Centers that performed at least 19 LTx procedures between 2005 and 2010 experienced an uptick in survival rates, even though centers that saw 19-25 procedures were still considered low volume, the researchers noted.

“The corresponding c-statistic, however, is just under 60%,” cautioned Dr. Hayanga. “The C-statistic is a measure of the explanatory power of a variable – in this case, [center] volume – in accounting for the variability in outcome, or survival in this case. To put that number into context, a C-statistic of 50% means ‘no explanatory power’ whatsoever.”

Dr. Hayanga explained that he and his coauthors compared transplant recipient and donor characteristics using analysis of variance (ANOVA) and chi-square tests to compare variables, cumulative survival using Kaplan-Meier curves, and significance using log-rank tests.

Dr. Hayanga reported no financial conflicts of interest.

AT THE STS ANNUAL MEETING

Key clinical point: Low-volume lung transplantation centers in the United States typically have poorer survival rates than higher-volume centers when ECMO is used as a bridge.

Major finding: Of 85 LTx subjects bridged via ECMO, 20 (23.5%) were bridged in low-volume, 30 (35.3%) in medium-volume, and 35 (41.2%) in high-volume centers; in the ECMO cohort, the lowest 5-year survival rate (13.61%) was observed at low-volume centers.

Data source: Retrospective analysis of 16,603 adult LTx recipients in the International Society for Heart and Lung Transplantation (ISHLT) registry during 2005-2010.

Disclosures: Dr. Hayanga reported no financial conflicts of interest.

CDC, AMA launch nationwide prediabetes awareness initiative

The Centers for Disease Control and Prevention has joined the American Medical Association to create a new program aimed at reducing the number of Americans diagnosed with type 2 diabetes, one of the most common chronic medical conditions in the United States.

The initiative, entitled “Prevent Diabetes STAT: Screen, Test, Act – Today,” will focus on individuals who have prediabetes, which is characterized by having blood glucose levels that are higher than normal but not high enough to be considered diabetic. Unless they are able to lose weight through diet and exercise, 15%-30% of prediabetic individuals are diagnosed with type 2 diabetes within 5 years of becoming prediabetic.

“This isn’t just a concern: It’s a crisis,” said AMA president Dr. Robert M. Wah during a telebriefing on Thursday. “It’s not only taking a physical and emotional toll on people living with prediabetes, but it also takes an economic toll on our country. More than $245 billion in health care spending and reduced productivity is directly linked to diabetes.”

“The truth is, our health care system simply cannot sustain the growing number of people developing diabetes,” said Ann Albright, Ph.D., director of the CDC’s Division of Diabetes Translation, during the same telebriefing. “Research shows that screening, testing, and referring people who are at risk for type 2 diabetes is critical, [and] that when people know they have prediabetes, they are more likely to take action.”

To that end, the AMA and CDC have created an online “toolkit” that allows health care providers and patients to understand the risks and signs of prediabetes. The toolkit will offer resources on how to prevent high blood glucose levels from progressing to type 2 diabetes. Additionally, both organizations have created an online screening tool that allows visitors to determine their risk for prediabetes.

Health care providers are another key component of Prevent Diabetes STAT, said Dr. Wah and Dr. Albright, who urged physicians and health care teams to actively screen patients using either the CDC’s Prediabetes Screening Test or the American Diabetes Association’s Type 2 Diabetes Risk Test, test for prediabetes using one of three recommended blood tests, and refer prediabetic patients to a CDC-recognized prevention program.

Prevent Diabetes STAT is intended to be a multiyear program. It represents an expansion of previous efforts undertaken individually by the CDC and AMA to combat the growing diabetes epidemic. In 2012, the CDC created the National Diabetes Prevention Program, using data from the National Institutes of Health, to create a framework of more than 500 programs designed to help people with diabetes institute meaningful lifestyle changes. The AMA launched a similar initiative in 2013 known as Improving Health Outcomes, which included partnering with YMCAs around the country to refer at-risk youths to diabetes prevention programs recognized by the CDC.

“We have seen significant progress, but we’ve really got to be sure that we get this [diabetes initiative] to a larger number of people,” said Dr. Albright. “We need to allow this to be scaled nationally [by] taking the successes that we’ve had and the lessons that we’ve learned, [but] in order to do that, people have to receive a diagnosis of prediabetes so that they can get connected to these services.”

According to the AMA, there are currently more than 86 million individuals in the United States living with prediabetes, yet roughly 90% of those people don’t even know they have it. The CDC estimates that the number of individuals diagnosed with diabetes in the United States more than tripled from 1980 to 2011, going from 5.6 million to nearly 21 million in just over 30 years. In 2011, 19.6 million American adults were diagnosed with diabetes; right now, according to the AMA and CDC, more than 33% of American adults are living with prediabetes.

FROM A CDC/AMA TELEBRIEFING

New mortality index aids comparison of outcome rates across institutions

SAN DIEGO – Uniquely derived mortality scores, based exclusively on adult congenital data, can be used to create risk models that more accurately and reliably compare mortality outcomes across institutions with differing case mixes than do existing empirically based tools, according to researchers.

“Several established tools exist – including the STAT [Society of Thoracic Surgeons–European Association for Cardiothoracic Surgery Congenital Heart Surgery Mortality] score, and the Aristotle Basic Complexity [ABC] score; however, none of these are age-specific,” Dr. Stephanie M. Fuller reported at the annual meeting of the Society of Thoracic Surgeons.

“Prior analyses have demonstrated varying degrees of discrimination using existing tools as applied to adults; however, no empirically based system of assessing risk specifically in adults exists,” said Dr. Fuller of the University of Pennsylvania, Philadelphia.

Dr. Fuller and her colleagues conducted an exploratory analysis with a twofold purpose:

1. To compare the procedure-specific risk of in-hospital mortality for adults with that of an aggregate of all age groups and with that of pediatric patients, using the Society of Thoracic Surgeons Congenital Heart Surgery Database (STS-CHSD).

2. To develop an empirically based mortality score unique to adults undergoing congenital heart surgery that can be easily applied to assess case-mix across hospitals as well as procedural risk.

Dr. Fuller and her coinvestigators estimated mortality risks based on more than 200,000 indexed operations from 120 centers found in the STS-CHSD between January 2000 and June 2013, eventually whittling the cases down to 52 distinct types and groups of operative procedures and 12,842 operations on adults aged 18 years and older for inclusion in the study.

All 52 procedural groups were ranked in ascending order according to their unadjusted mortality rates. Because some of these groups contained relatively small numbers of operations, Bayesian modeling was then used to determine the best estimates of procedural mortality risk. Subsequently, each procedure or group of procedures was assigned a numerical score from 0.1 to 3.0 based on its estimated risk of mortality; this score was called the STS Adult Congenital Heart Surgery Mortality Score.
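
The article does not describe the Bayesian model itself, so the following Python sketch only illustrates the general idea of adjusting for small numbers, under the assumption of a simple Beta-Binomial model. It is not the researchers' method. The function name `shrunken_rate`, the `prior_strength` value, and the death and operation counts are all hypothetical; only the 1.8% figure comes from the article, which reports it as the overall unadjusted mortality.

```python
def shrunken_rate(deaths, operations, prior_rate, prior_strength):
    """Posterior mean mortality under a Beta-Binomial model (an assumed,
    illustrative model). The raw rate deaths/operations is pulled toward
    prior_rate, and the pull is stronger when `operations` is small."""
    alpha = prior_rate * prior_strength          # prior "pseudo-deaths"
    beta = (1.0 - prior_rate) * prior_strength   # prior "pseudo-survivors"
    return (deaths + alpha) / (operations + alpha + beta)


overall = 0.018  # overall unadjusted mortality reported in the article

# Hypothetical procedure groups with the same raw mortality (20%)
# but very different numbers of operations.
small_group = shrunken_rate(deaths=2, operations=10,
                            prior_rate=overall, prior_strength=100)
large_group = shrunken_rate(deaths=200, operations=1000,
                            prior_rate=overall, prior_strength=100)

print(f"small group: {small_group:.3f}, large group: {large_group:.3f}")
```

In this sketch the small group's estimate moves much closer to the overall rate than the large group's does, which is the behavior one would want when a raw rate rests on only a handful of operations.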

The researchers validated the score “by looking at those performed during the most recent year, July 2013 through June 2014, and all cases from this year were separated into the same 52 procedural groups,” explained Dr. Fuller. “These were analyzed comparing the adult congenital heart surgery score to both the STAT score and the ABC complexity level; [however] not all procedural groups were common to both STAT and ABC level analysis, so validation was performed only for those [that] were common in both groups.”

Dr. Fuller and her colleagues found that the number of adult congenital heart procedures per year increased drastically from 2000 to 2012, from 85 to 1,961, and that the overall mortality rate over this period was 1.8% before adjustment with the Bayesian model. After adjustment, model-based estimates of mortality for the main procedure types were 7.3% for Fontan revisions, 7.2% for heart transplantations, 6.6% for lung transplantations, 6.0% for Fontan procedures, 5.5% for coronary artery intervention, 0.4% for partial anomalous pulmonary venous return, and 0.2% for atrial septal defect repair.

“Future directions [for this study] include, primarily, augmenting the data sample, which can be done by incorporating data from either the European Association for Cardiothoracic Surgery database, or by incorporating data from the STS Adult Cardiac Surgery database,” said Dr. Fuller, adding that she and her colleagues would also have liked to include clinical characteristics and risk factors of patients, such as presence of genetic syndromes and medical comorbidities in the adult population.

Dr. Fuller did not report any financial disclosures.

AT THE STS ANNUAL MEETING

Key clinical point: Mortality scores derived exclusively from adult congenital data can be used to compare mortality outcomes across institutions with differing case mixes more accurately and reliably than existing empirically based tools.

Major finding: Overall unadjusted mortality across all procedures analyzed in the STS-CHSD between 2000 and 2012 was 1.8%, but after adjustment, individual procedure rates ranged from 0.2% to 7.3%. Compared with established pediatric/congenital mortality risk stratification, some procedures common to both cohorts have widely disparate mortality risk.

Data source: Retrospective analysis of 12,842 operations of 52 different types in the STS-CHSD on adults aged 18 years and older between January 2000 and June 2013.

Disclosures: Dr. Fuller reported no conflicts of interest.

Scoring system identifies mortality risks in esophagectomy patients

SAN DIEGO – Predictive factors such as Zubrod score, previous cardiothoracic surgery, history of smoking, and hypertension can give health care providers a powerful tool for predicting the likelihood of patient morbidity resulting from esophagectomy, thus allowing accurate stratification of such patients and thereby mitigating morbidity rates, a study showed.

Patients who had previously undergone cardiothoracic surgery, had Zubrod scores of at least 2, had diabetes mellitus requiring insulin therapy, were currently smoking, had hypertension, were female, and had a forced expiratory volume in 1 second (FEV1) score of less than 60% were the most highly predisposed to mortality after undergoing esophagectomy, said Dr. J. Matthew Reinersman of the Mayo Clinic in Rochester, Minn., who presented the findings at the annual meeting of the Society of Thoracic Surgeons.

Dr. Reinersman led a team of investigators in a retrospective analysis of 343 consecutive patients in the STS General Thoracic Surgery Database who underwent esophagectomies for malignancies between August 2009 and December 2012. “Our primary outcome variables were operative mortality, both in-hospital and 30-day mortality, as well as major morbidity, which we defined as anastomotic leak, myocardial infarction, pulmonary embolism, pneumonia, reintubation for respiratory failure, empyema, chylothorax, and any reoperation,” he explained.

Univariate and multivariate analyses, using a chi-square test or Fisher’s exact test, were performed to look for predictors within the data set, and the results were used to craft the risk-based model that assigned each patient a score for how likely he or she would be to experience morbidity or mortality after undergoing an esophagectomy. Each patient was then assigned to one of four groups based on this score – group A (86 subjects), group B (138 subjects), group C (81 subjects), or group D (38 subjects) – in ascending order of score.

Dr. Reinersman and his coauthors found that 17 subjects (19.8%) in group A had either morbidity or mortality, as did 45 subjects (32.6%) in group B, 61 subjects (75.3%) in group C, and 36 (94.7%) in group D, indicating that the model and scoring system developed by the investigators were successful in predicting likely morbidity and mortality outcomes based on patients’ medical histories.
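
The group-level percentages above follow directly from the reported counts. As a quick arithmetic check, here is a short Python sketch; the variable and function names are illustrative only, while the counts are the ones given in the article.

```python
# Counts reported in the article:
# group -> (patients in group, patients with morbidity or mortality)
groups = {"A": (86, 17), "B": (138, 45), "C": (81, 61), "D": (38, 36)}


def event_rates(groups):
    """Percentage of patients in each risk group with morbidity or mortality."""
    return {
        name: round(100 * events / total, 1)
        for name, (total, events) in groups.items()
    }


rates = event_rates(groups)
total_patients = sum(total for total, _ in groups.values())

for name, rate in rates.items():
    print(f"group {name}: {rate}%")
print(f"total patients: {total_patients}")
```

The four group sizes sum to 343, matching the number of consecutive patients analyzed, and the computed rates rise from group A to group D in step with the risk score.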

The mean patient age was 63.2 years, and the majority of subjects were male. Smokers were prevalent in the study population: Roughly 56% were former smokers, and 12% were current smokers. Adenocarcinoma was the predominant tissue type observed by the authors, and the most common tumor location was the gastroesophageal junction or lower third of the esophagus. Approximately 75% of patients received neoadjuvant therapy as well.

“This risk-assessment tool, which uses only seven factors and an easy-to-remember scoring system dividing patients into four risk categories, can be easily integrated into everyday clinical practice,” said Dr. Reinersman. “It can help inform patient selection and education, [and] also identifies smoking as an important but modifiable preoperative risk factor.”

Dr. Reinersman reported no relevant financial conflicts.

AT THE STS ANNUAL MEETING

Key clinical point: Certain predictive indicators can help stratify esophagectomy patients based on preoperative variables and risk factors so that morbidity rates are mitigated.

Major finding: Of 343 patients studied, morbidity occurred in 159 (45.8%), and combined 30-day and in-hospital mortality occurred in 12 (3.5%); the most reliable predictors were prior cardiothoracic surgery, Zubrod score ≥ 2, diabetes mellitus requiring insulin therapy, current smoking, hypertension, female gender, and an FEV1 of less than 60% of predicted.

Data source: A retrospective analysis of 343 patients with esophageal cancer who underwent esophagectomy from August 2009 to December 2012.

Disclosures: Dr. Reinersman reported no relevant financial conflicts.

Environmental factors could increase U.S. anthrax cases

WASHINGTON – Recent isolated cases of anthrax in Minnesota and elsewhere, along with the disease’s relative ease of transmission from animals or plants to humans, should heighten U.S. physicians’ awareness of anthrax’s symptoms and treatments, warned Dr. Jason K. Blackburn.

“[Anthrax] is something that our international partners deal with on an annual basis [as] we can see the disease reemerging, or at least increasing, in annual reports on humans in a number of countries,” explained Dr. Blackburn of the University of Florida in Gainesville, at a meeting on biodefense and emerging diseases sponsored by the American Society for Microbiology. “Here in the United States, we’re seeing it shift from a livestock disease [to] a wildlife disease, where we have these populations that we can’t reach with vaccines, and where surveillance is very logistically challenging.”

Environmental factors can drive a higher incidence of anthrax. Temperature, precipitation, and vegetation indices are key variables that facilitate transmission and spread of the disease. Geographically, lowland areas also have a higher prevalence of the disease.

For example, Dr. Blackburn and his colleagues used predictive models to quantify the theory that anthrax case rates increase during years that have wet springs followed by hot, dry summers in the region of western Texas.

Using these data would allow scientists and health care providers to predict which years would have an increased risk for anthrax cases in humans, Dr. Blackburn said, and could help hospitals and clinics effectively prepare to treat a higher influx of these cases and prevent possible outbreaks.

Although relatively large numbers of human anthrax cases persist in parts of the world – particularly in Africa and central Asia – cases in the United States have been primarily confined to livestock.

However, during the last decade, there has been a noticeable shift in cases from livestock to wildlife, particularly in western Texas and Montana, where local populations of elk, bison, and white-tailed deer have been affected. The newfound prevalence in wildlife species, along with the continued presence in domestic animals such as cattle and sheep, means that transmission to humans could become even easier.

“Human cases are usually driven by direct human interaction with mammalian hosts,” said Dr. Blackburn, citing farms and meat factories as prime examples of where the Bacillus anthracis organism would easily spread. In addition, Dr. Blackburn specifically noted a scenario in which flies can transmit the disease from sheep to humans, and have also been found to carry anthrax from carcasses to wildlife and vegetation.

From 2010 to 2012, anthrax cases in Europe, particularly in Georgia and Turkey, increased compared with numbers over a similar time frame between 2000 and 2009. While this increase can be partly attributed to case reporting, Dr. Blackburn indicated that it was most likely evidence of an associative trend between livestock and human anthrax cases.

Dr. Blackburn did not report any disclosures.
