Bispecific CAR T-cells yield high response rate in relapsed/refractory myeloma

ORLANDO – A dual-targeted chimeric antigen receptor (CAR) T-cell therapy has demonstrated a high overall response rate, a long response duration, and manageable safety in patients with relapsed or refractory multiple myeloma, according to an investigator in a phase 1 study.

The overall response rate exceeded 90%, and about three-quarters of patients remained progression-free at 9 months after treatment with the CAR T-cell therapy, which targets both B-cell maturation antigen (BCMA) and CD38, the study investigator reported.

Grade 3 or greater cytokine release syndrome (CRS) occurred in about one-quarter of the patients, and no neurotoxicity was observed, according to investigator Yu Hu, MD, of Tongji Medical College in Hubei, China.

“,” Dr. Hu said in a press conference.

Short-term relapse has been a “major challenge” with the CAR T-cell therapies currently under investigation for myeloma, most of which target BCMA, according to Dr. Hu.

He said the bispecific CAR T-cell therapy under investigation, known as BM38, was designed to target antigen loss and increase persistence of effector cells. According to the investigator, this was the first study to focus on an anti-BCMA and CD38 dual-targeted CAR T-cell therapy for patients with relapsed or refractory multiple myeloma.

Gary J. Schiller, MD, of UCLA Health, who moderated the press conference, said that while dual-targeting is a potentially “attractive” approach in these hard-to-treat patients, further follow-up is needed to see duration of response and to see if antigen escape re-emerges.

“Cellular therapy is costly, in terms of toxicity as well as financial costs, so you would like to see what the durability of responses is before engaging in that as a late-stage therapy, not to mention moving it up front,” Dr. Schiller said in an interview.

The median progression-free survival (PFS) duration had not been reached at the time of this report, though the 9-month PFS rate was 78.87%, according to the data presented by Dr. Hu.

In the phase 1 study, 22 patients received BM38 CAR T-cell infusions following a fludarabine and cyclophosphamide preconditioning regimen. The median patient age was 59 years, and 50% were male. Nearly three-quarters (72%) had a cytogenetic abnormality, and the median number of prior therapies approached four (range, two to nine prior therapies).

Twenty of the patients (90.9%) had a response: 12 who achieved stringent complete remission, 2 with very good partial response, 5 with partial responses, and 1 with a minimal response.

Of 9 patients with extramedullary disease, 8 achieved partial or complete elimination of tumors, Dr. Hu said in his presentation.

Cytokine release syndrome occurred in 20 patients (90.91%), 5 of whom experienced severe cases (22.73%), according to the reported data. There was no observed neurotoxicity, according to the report, while almost all had hematologic toxicities. Three experienced hepatotoxicity and one had nephrotoxicity, according to Dr. Hu.

The phase 1 study was supported by the National Natural Science Foundation of China, the Major Technological Innovation Special Project Fund of Hubei Province of China, and Cellyan Therapeutics. The senior author of the study was affiliated with Cellyan Therapeutics. Dr. Hu and coauthors reported that they had no relevant conflicts of interest to declare.

SOURCE: Li C et al. ASH 2019. Abstract 930.

REPORTING FROM ASH 2019

High complete response rate seen with novel CAR-T for myeloma

ORLANDO – A novel chimeric antigen receptor T (CAR T) cell construct is associated with deep clinical responses in patients with multiple myeloma for whom prior lines of therapy – some numbering in the double digits – have failed.

Among 29 patients with multiple myeloma enrolled in a phase 1b/2 trial of JNJ-4528, the overall response rate (ORR) at a median follow-up of 6 months was 100%, including 69% complete responses, with 27 patients remaining free of disease progression, reported Deepu Madduri, MD, of Icahn School of Medicine at Mount Sinai, New York.

“These are very heavily pretreated patients, and so getting early and deep responses is quite amazing,” she said at a briefing prior to presentation of the data at the annual meeting of the American Society of Hematology.

JNJ-4528 is a second-generation CAR T containing two single-domain antibodies targeted against B-cell maturation antigen (BCMA). As previously reported, an identical CAR T-cell construct showed a high overall response with manageable toxicities in 74 patients with relapsed/refractory multiple myeloma. JNJ-4528 was granted a breakthrough therapy designation for relapsed/refractory multiple myeloma by the Food and Drug Administration on Dec. 6, 2019, and a priority medicines (PRIME) designation by the European Medicines Agency in April 2019.

BCMA was first described in myeloma in 2004 as a mechanism for the growth and survival of malignant plasma cells. Several research groups are currently investigating CAR T cells or monoclonal antibodies targeted to BCMA. The product closest to receiving FDA approval is likely BB2121.

At ASH 2019, Dr. Madduri presented results from the phase 1b portion of the CARTITUDE-1 trial. The investigators enrolled patients with multiple myeloma with measurable disease, as assessed by M-protein or serum free light chain levels, who had experienced disease progression on at least three prior lines of therapy, or whose disease was refractory to at least two lines of therapy with a proteasome inhibitor, immunomodulatory drug (IMiD), and an anti-CD38 antibody.

Patients underwent apheresis for T-cell collection, with bridging therapy allowed until the expanded T cells could be delivered.

Following T-cell depletion with cyclophosphamide 300 mg/m² and fludarabine 30 mg/m² over 3 days, patients received a single weight-based infusion (compared with fixed-dose infusions used with other CAR T-cell constructs).

The dose was targeted at 0.75 × 10⁶ CAR-positive cells/kg, with a target range of 0.5–1.0 × 10⁶, administered 5-7 days after the start of the conditioning regimen.

A total of 29 patients, median age 60, were evaluable for the safety and efficacy endpoints. One-fourth of the patients had a high-risk cytogenetic profile. The patients had received a median of 5 prior lines of therapy, with one patient receiving 18 prior lines. Of the 29 patients, 25 (86%) had previously undergone autologous transplantation.

As noted before, the ORR after a median follow-up of 6 months was 100%, with 69% complete responses, 17% very good partial responses, and 14% partial responses. The median time to complete response was 1 month (range, 1 to 9 months). All but two patients remained free of disease progression at the median 6-month follow-up.

Nearly all patients (27) developed cytokine release syndrome (CRS), and one patient with prolonged grade 4 CRS died from related complications 99 days after infusion.

The median time to onset of CRS was 7 days, with more than 90% of cases occurring between days 5 and 9.

Neurotoxicities, specifically immune effector cell–associated neurotoxicity syndrome (ICANS), were infrequent and, when they did occur, were generally low grade, with only one grade 3 ICANS event.

Asked in an interview whether the impressive response rates seen with JNJ-4528 might persist over time, Dr. Madduri acknowledged that follow-up is still relatively short.

“This product is unique in that [it] has a CD8 central memory phenotype preferentially, and we’re hoping that this would play a central role in the durability of response because they’re memory cells, but I think at this time we don’t know,” she said.

The CARTITUDE-1 trial is funded by Janssen Research & Development. Dr. Madduri disclosed serving as a consultant to Janssen and to Takeda, Foundation Medicine, AbbVie, and Celgene.

SOURCE: Madduri D et al. ASH 2019. Abstract 577.

REPORTING FROM ASH 2019

Women with epilepsy less likely than controls to breastfeed

BALTIMORE – Women with epilepsy are less likely to breastfeed than healthy controls, according to data presented at the annual meeting of the American Epilepsy Society. Seizure control, education by the treating neurologist, and postpartum lactation consultative support are associated with adherence to breastfeeding, said the researchers.

“We need to understand and address the challenges that women with epilepsy face beyond seizure control and medication management when they are being seen by various health care providers to ensure the best quality of life for them and their babies,” Abrar Al-Faraj, MD, instructor of neurology at Boston University, said in a press release. “The strong efforts to advocate for breastfeeding in the general population should include women with chronic diseases such as epilepsy.”

A retrospective study of women who underwent pregnancy

Data have established the benefits of breastfeeding in the general population. Recent studies have confirmed that for women with epilepsy and their children, breastfeeding is safe and may provide neurodevelopmental benefits. Data also indicate, however, that rates of breastfeeding are significantly lower in women with epilepsy than in the general population. Dr. Al-Faraj and colleagues sought to compare the rates of initiation of and adherence to breastfeeding in women with epilepsy with those in healthy controls. They also intended to identify the factors that affect breastfeeding in women with epilepsy and assess the influence of support systems (e.g., lactation consult services) on breastfeeding.

The investigators retrospectively studied 102 women with epilepsy who were treated at the Beth Israel Deaconess Medical Center (BIDMC) Epilepsy Clinic and underwent pregnancies between 2009 and 2018. They compared these women to 113 healthy controls without epilepsy who were treated at the obstetrical service at BIDMC during the same period. Dr. Al-Faraj and colleagues reviewed patients’ medical records for demographic information, epilepsy type, degree of seizure control during pregnancy and post partum, number of antiepileptic medications (AEDs), breastfeeding education by providers (i.e., neurologists and epilepsy nurses), lactation consult, and rate of initiation of and adherence to breastfeeding at 6 weeks and 3 and 6 months. The investigators excluded from their analysis patients with other chronic medical conditions, those taking medications other than AEDs that may affect breastfeeding, and those with limited follow-up during pregnancy and post partum.

Education and support were correlated with breastfeeding

Participants’ ages ranged from 20 years to 40 years. The rate of breastfeeding initiation was significantly lower in women with epilepsy (51%) than in controls (87%). The rate declined significantly to 38.2% at 6 weeks in women with epilepsy, compared to 76% in controls. The rate of adherence at 3 months was 36.2% in women with epilepsy, and adherence at 6 months was 18.6%.

The reasons for not breastfeeding were known for 17.6% of women with epilepsy. These reasons included fear of AED exposure through breast milk, recommendations by providers (e.g., pediatricians and obstetricians) not to breastfeed, failed breastfeeding attempts because of technical difficulties (e.g., the baby’s inability to latch), and lack of milk supply. Treating neurologists discussed breastfeeding with 52.9% of women with epilepsy, and epilepsy nurses discussed it with 91% of women with epilepsy. Among the 66% of patients who received obstetrical care at BIDMC, 13% of women with epilepsy had lactation consultation post partum, compared with 58% of controls. Breastfeeding education by the treating neurologist was significantly and positively correlated with decision to breastfeed and initiation of breastfeeding. Postpartum lactation consult support was also associated with a significantly higher rate of breastfeeding initiation, adherence at 6 weeks, adherence at 3 months, and adherence at 6 months. Women with well-controlled seizures were more likely to continue breastfeeding at 6 weeks, compared with women with uncontrolled seizures. The researchers found no statistically significant difference in the breastfeeding initiation rate, however, between women with controlled seizures and those with uncontrolled seizures.

“Women with poor seizure control are a particularly vulnerable group and have the greatest need for intervention to improve breastfeeding rates,” said Dr. Al-Faraj and colleagues. Focused physician education and support measures such as lactation consultation may be potential interventions to improve the treatment of women with epilepsy, they added. “Further prospective investigations are needed to identify other factors that prevent the decision to initiate or adhere to breastfeeding in women with epilepsy and evaluate interventions that may be implemented as a public health measure to support this vulnerable population.”

The study did not have external funding, and the investigators reported no disclosures.

SOURCE: Al-Faraj AO et al. AES 2019, Abstract 1.246.

BALTIMORE – , according to data presented at the annual meeting of the American Epilepsy Society. Seizure control, education by the treating neurologist, and postpartum lactation consultative support are associated with adherence to breastfeeding, said the researchers.

“We need to understand and address the challenges that women with epilepsy face beyond seizure control and medication management when they are being seen by various health care providers to ensure the best quality of life for them and their babies,” Abrar Al-Faraj, MD, instructor of neurology at Boston University, said in a press release. “The strong efforts to advocate for breastfeeding in the general population should include women with chronic diseases such as epilepsy.”

A retrospective study of women who underwent pregnancy

Data have established the benefits of breastfeeding in the general population. Recent studies have confirmed that for women with epilepsy and their children, breastfeeding is safe and may provide neurodevelopmental benefits. Data also indicate, however, that rates of breastfeeding are significantly lower in women with epilepsy than in the general population. Dr. Al-Faraj and colleagues sought to compare the rates of initiation of and adherence to breastfeeding in women with epilepsy with those in healthy controls. They also intended to identify the factors that affect breastfeeding in women with epilepsy and assess the influence of support systems (e.g., lactation consult services) on breastfeeding.

The investigators retrospectively studied 102 women with epilepsy who were treated at the Beth Israel Deaconess Medical Center (BIDMC) Epilepsy Clinic and underwent pregnancies between 2009 and 2018. They compared these women to 113 healthy controls without epilepsy who were treated at the obstetrical service at BIDMC during the same period. Dr. Al-Faraj and colleagues reviewed patients’ medical records for demographic information, epilepsy type, degree of seizure control during pregnancy and post partum, number of antiepileptic medications (AEDs), breastfeeding education by providers (i.e., neurologists and epilepsy nurses), lactation consult, and rate of initiation of and adherence to breastfeeding at 6 weeks and 3 and 6 months. The investigators excluded from their analysis patients with other chronic medical conditions, those taking medications other than AEDs that may affect breastfeeding, and those with limited follow-up during pregnancy and post partum.

Education and support were correlated with breastfeeding

Participants’ ages ranged from 20 years to 40 years. The rate of breastfeeding initiation was significantly lower in women with epilepsy (51%) than in controls (87%). The rate declined significantly to 38.2% at 6 weeks in women with epilepsy, compared to 76% in controls. The rate of adherence at 3 months was 36.2% in women with epilepsy, and adherence at 6 months was 18.6%.

The reasons for not breastfeeding were known for 17.6% of women with epilepsy. These reasons included fear of AED exposure through breast milk, recommendations by providers (e.g., pediatricians and obstetricians) not to breastfeed, failed breastfeeding attempts because of technical difficulties (e.g., the baby’s inability to latch), and lack of milk supply. Treating neurologists discussed breastfeeding with 52.9% of women with epilepsy, and epilepsy nurses discussed it with 91% of women with epilepsy. Among the 66% of patients who received obstetrical care at BIDMC, 13% of women with epilepsy had lactation consultation post partum, compared with 58% of controls. Breastfeeding education by the treating neurologist was significantly and positively correlated with decision to breastfeed and initiation of breastfeeding. Postpartum lactation consult support was also associated with a significantly higher rate of breastfeeding initiation, adherence at 6 weeks, adherence at 3 months, and adherence at 6 months. Women with well-controlled seizures were more likely to continue breastfeeding at 6 weeks, compared with women with uncontrolled seizures. The researchers found no statistically significant difference in the breastfeeding initiation rate, however, between women with controlled seizures and those with uncontrolled seizures.

“Women with poor seizure control are a particularly vulnerable group and have the greatest need for intervention to improve breastfeeding rates,” said Dr. Al-Faraj and colleagues. Focused physician education and support measures such as lactation consultation may be potential interventions to improve the treatment of women with epilepsy, they added. “Further prospective investigations are needed to identify other factors that prevent the decision to initiate or adhere to breastfeeding in women with epilepsy and evaluate interventions that may be implemented as a public health measure to support this vulnerable population.”

The study did not have external funding, and the investigators reported no disclosures.

SOURCE: Al-Faraj AO et al. AES 2019, Abstract 1.246.

BALTIMORE – Women with epilepsy initiate breastfeeding at a significantly lower rate than women without epilepsy, according to data presented at the annual meeting of the American Epilepsy Society. Seizure control, education by the treating neurologist, and postpartum lactation consultative support are associated with adherence to breastfeeding, said the researchers.

“We need to understand and address the challenges that women with epilepsy face beyond seizure control and medication management when they are being seen by various health care providers to ensure the best quality of life for them and their babies,” Abrar Al-Faraj, MD, instructor of neurology at Boston University, said in a press release. “The strong efforts to advocate for breastfeeding in the general population should include women with chronic diseases such as epilepsy.”

A retrospective study of women who underwent pregnancy

Data have established the benefits of breastfeeding in the general population. Recent studies have confirmed that for women with epilepsy and their children, breastfeeding is safe and may provide neurodevelopmental benefits. Data also indicate, however, that rates of breastfeeding are significantly lower in women with epilepsy than in the general population. Dr. Al-Faraj and colleagues sought to compare the rates of initiation of and adherence to breastfeeding in women with epilepsy with those in healthy controls. They also intended to identify the factors that affect breastfeeding in women with epilepsy and assess the influence of support systems (e.g., lactation consult services) on breastfeeding.

REPORTING FROM AES 2019

Is strict glycemic control meaningless for older adults?

AT THE IDF CONGRESS 2019

BUSAN, SOUTH KOREA – The question of whether or not strict glycemic control is appropriate for older adults was the subject of a debate between two experts at the 2019 congress of the International Diabetes Federation.

Current guidelines from the Endocrine Society addressing diabetes management in older adults call for shared decision making and individualized approaches, taking into account comorbidities, complications, and special situations.

Medha Munshi, MD, and Ryo Suzuki, MD, PhD, took differing approaches to the risk-versus-benefit equation for older patients.

The case against ...

Dr. Munshi, director of the Joslin geriatric diabetes program at Beth Israel Deaconess Medical Center, Boston, started the debate by stating, “Yes, strict glycemic control in the elderly is meaningless.”

She based this on two main points: The benefits of strict glycemic control in older adults are not clear, and the risks are “catastrophic and well documented.”

The first problem, said Dr. Munshi, is that there is a dearth of data in older adults. In a 2013 review of 2,484 diabetes-focused studies registered on clinicaltrials.gov, just 0.6% included participants who were older than 65 years, whereas 30.8% specifically excluded that age group, and 54.9% excluded people older than 70 years.

Another analysis of 440 studies that investigated treatments for type 2 diabetes showed that, of trials that did include older adults, more than three-quarters (76.8%) excluded those with comorbidities, nearly a third (29.5%) excluded people with polypharmacy or specific drugs, and 18.4% excluded those with cognitive impairment.

“So, the trials are not targeted toward older adults, and those that are, exclude people with multiple comorbidities, so the [participants] who are left in the trials are not [representative of the patients] we see in the clinic,” Dr. Munshi emphasized.

Among the major trials that evaluated intensive treatment versus usual care in type 2 diabetes – including the UK Prospective Diabetes Study (UKPDS), the Veterans Administration Diabetes Trial (VADT), and the Action to Control Cardiovascular Risk in Diabetes (ACCORD) trial – no macrovascular benefits were found except in UKPDS, and evidence of harm was found in ACCORD.

What those trials suggested, said Dr. Munshi, is that the patients who do better with intensive glycemic control are younger, have a shorter duration of disease, fewer complications and comorbidities at baseline, better overall health, and longer life expectancy.

In contrast, those at greater risk from the hypoglycemia associated with intensive glycemic control are people who are older and frail, have longer duration of diabetes, have macro- and microvascular complications and comorbidities, are unable to safely follow complex regimens, and have shorter life expectancy.

She also pointed to a 2010 retrospective cohort study that identified a U-shaped curve relationship between hemoglobin A1c and all-cause mortality and cardiac events, suggesting that “there is a threshold beyond which, if the control is tighter, then the risk of mortality increases.”

Medications used by older adults with diabetes also pose risks, as shown in a 2011 study of 99,628 emergency hospitalizations for adverse drug events that occurred during 2007-2009 among U.S. adults aged 65 years and older.

In that study, warfarin topped the list, but insulin was the second most common, and oral hypoglycemic agents were also in the top 5.

And those episodes of emergency hospitalization, another study found, were associated with a 3.4-fold increased risk for 5-year mortality.

“[Hypoglycemia] increases the risk of cognitive decline, depression, frailty, falls and fractures, functional decline, anxiety, and fear of hypoglycemia; and it lowers quality of life,” Dr. Munshi explained.

Other unintended consequences of strict glycemic control in older adults include difficulty coping with complex regimens, increased caregiver burden, loss of independence, and increased financial burden, she added.

Control in healthy adults

A valid question, Dr. Munshi said, is whether strict glycemic control might be appropriate for older adults who are still healthy.

She responded to that by explaining that there is a phenomenon of aging called homeostenosis, a physical limit beyond which homeostasis cannot be restored in the presence of stressors, such as hypoglycemia leading to a fall, hospitalization, delirium, and poor outcome.

Another reasonable question, she added, was whether strict glycemic control in older adults could be achieved more safely and with greater benefit by using newer agents with lower risks for hypoglycemia that have been found to have cardiovascular and renal benefits.

To that, she noted that it’s not clear whether those benefits are a result of glycemic control, that the duration of the trials has been short (2-3 years), and that drug interactions and side effects in populations with multiple morbidities have not been studied. Moreover, “cost and availability need consideration,” she said.

And so, she concluded, “Is strict glycemic control in the elderly really worth the risk? My answer would be no.”

The case for ...

Dr. Suzuki, a professor in the division of diabetes, metabolism, endocrinology, rheumatology, and collagen diseases at Tokyo Medical University, argued that strict glycemic control in the elderly is not “meaningless.”

He began by pointing out that his country, Japan, is “one of the most highly aging societies in the world.”

His arguments were based on three points: The elderly population is “full of diversity;” HbA1c is “not a perfect marker of glycemic control;” and new glucose-lowering drug classes may have benefits beyond reduction of blood glucose levels.

He also noted that there is no consensus on the definition of “elderly.”

Most developed countries use age 65 years and older as the cut-off, but the United Nations defines being elderly as 60 years and older, whereas the International Diabetes Federation’s guideline for managing older people with type 2 diabetes uses 70 years and older. These differences, he asserted, emphasize “the difficulty to generalize the gap between calendar age and biological age.”

Dr. Suzuki also pointed out that the American Diabetes Association’s Standards of Medical Care in Diabetes 2019 does not mention age as a consideration in individualizing glycemic targets.

Instead, factors such as risk for hypoglycemia, disease duration, life expectancy, comorbidities, established vascular complications, patient preference, and resources/support systems are listed. “We need to evaluate and assess these factors individually for every patient,” he asserted.

“Older age is very heterogeneous. Some people are very robust and active, while others are sick and frail ... We need to be careful about the active, healthy people because sometimes they need more intensified treatment to prevent complications of diabetes.”

Dr. Suzuki also pointed out that people hold important positions that require good health well into their 60s and 70s. “In many countries, many older individuals with or without diabetes have responsibilities and play important roles in their societies. Diabetes can be a big barrier for them ... Sometimes it requires hospitalizations, and they need to stop business.”

He cited an observational study from a Swedish national database showing a significant difference in hospitalizations for heart failure for older adults with diabetes and HbA1c of between 6% and 7%, compared with 7%-8%, among both men and women aged 71-75 and 61-65 years. In that study, investigators found that poor glycemic control (HbA1c of more than 7%) was associated with an increased risk of hospitalization for heart failure in patients with type 2 diabetes.

“This is, of course, an observational study, so we cannot draw a conclusion, but still, it strongly suggests that lower than 7% may prevent hospitalization for heart failure in elderly people.”

Glycemic variability

Another point is that HbA1c does not reflect glycemic variability, so it’s impossible to tell just from that measure the extent to which an individual is experiencing hypoglycemia – that is, two people can have the same A1c level, yet one experiences frequent hypoglycemia whereas the other never does.

“So, determining treatment based solely on A1c may be risky,” Dr. Suzuki noted.

And recently, the availability of continuous glucose monitoring is shifting the definition of “strict” glycemic control from “average” glucose to “time in range,” which also allows for a determination of the key metric “time below range.”

Recent international guidelines advise that, for older adults, fewer than 1% of readings should be below 70 mg/dL (3.9 mmol/L), compared with fewer than 4% for most other individuals with diabetes.

Thus, “in terms of avoiding hypoglycemia, older adults have a ‘stricter’ range. In other words, less stringency for high-risk people does not always mean broader allowance range in any glycemic profiles,” Dr. Suzuki noted.

However, newer drugs that don’t increase the risk for hypoglycemia are available for patients with type 2 diabetes.

Dr. Suzuki pointed to his own 2018 study demonstrating that the dipeptidyl peptidase‐4 (DPP-4) inhibitor sitagliptin had a greater ability to reduce daily glucose fluctuations in drug-naive Japanese patients with type 2 diabetes, compared with the sulfonylurea glibenclamide.

Similarly, in the Trial Evaluating Cardiovascular Outcomes with Sitagliptin (TECOS), the DPP-4 inhibitor did not increase severe hypoglycemia in the subgroup of participants aged 75 years and older.

And in several of the recent cardiovascular outcomes trials demonstrating cardiovascular benefit for type 2 diabetes agents, those benefits have been just as robust among older participants, he stressed.

These include the Researching Cardiovascular Events With a Weekly Incretin in Diabetes (REWIND) trial, in which those aged above and below 66 years experienced similar results with dulaglutide, a GLP-1 agonist.

They also include the landmark Empagliflozin Cardiovascular Outcome Event Trial in Type 2 Diabetes Mellitus Patients (EMPA-REG OUTCOME), which actually showed even greater protection against cardiovascular events among subjects aged 65 and older (hazard ratio, 0.86).

Also in the Dapagliflozin-Heart Failure (Dapa-HF) study, the SGLT-2 inhibitor reduced worsening of heart failure in patients with heart failure with reduced ejection fraction, regardless of age or presence of diabetes.

“I argue that older patients have rights to receive appropriate and effective treatment to prevent diabetes complications,” Dr. Suzuki concluded.

Dr. Munshi is a consultant for Sanofi and Lilly. Dr. Suzuki has received honoraria from MSD, Novo Nordisk, Novartis Pharma, Takeda, Mitsubishi Tanabe, and Eli Lilly Japan.

A version of this story originally appeared on Medscape.com.

AT THE IDF CONGRESS 2019

BUSAN, SOUTH KOREA – The question of whether or not strict glycemic control is appropriate for older adults was the subject of a debate between two experts at the 2019 congress of the International Diabetes Federation.

Current guidelines from the Endocrine Society addressing diabetes management in older adults call for shared decision making and individualized approaches, taking into account comorbidities, complications, and special situations.

Medha Munshi, MD, and Ryo Suzuki, MD, PhD, took differing approaches to the risk-versus-benefit equation for older patients.

The case against ...

Dr. Munshi, director of the Joslin geriatric diabetes program at Beth Israel Deaconess Medical Center, Boston, started the debate by stating, “Yes, strict glycemic control in the elderly is meaningless.”

She based this on two main points: The benefits of strict glycemic control in older adults are not clear, and the risks are “catastrophic and well documented.”

The first problem, said Dr. Munshi, is that there is a dearth of data in older adults. In a 2013 review of 2,484 diabetes-focused studies registered on clinicaltrials.gov, just 0.6% included participants who were older than 65 years, whereas 30.8% specifically excluded that age group, and 54.9% excluded people older than 70 years.

Another analysis of 440 studies that investigated treatments for type 2 diabetes showed that, of trials that did include older adults, more than three-quarters (76.8%) excluded those with comorbidities, nearly a third (29.5%) excluded people with polypharmacy or specific drugs, and 18.4% excluded those with cognitive impairment.

“So, the trials are not targeted toward older adults, and those that are, exclude people with multiple comorbidities, so the [participants] who are left in the trials are not [representative of the patients] we see in the clinic,” Dr. Munshi emphasized.

Among the major trials that evaluated intensive treatment versus usual care in type 2 diabetes – including the UK Prospective Diabetes Study (UKPDS), the Veterans Administration Diabetes Trial (VADT), and the Action to Control Cardiovascular Risk in Diabetes (ACCORD) trial – no macrovascular benefits were found except in UKPDS, and evidence of harm was found in ACCORD.

What those trials suggested, said Dr. Munshi, is that the patients who do better with intensive glycemic control are younger, have a shorter duration of disease, fewer complications and comorbidities at baseline, better overall health, and longer life expectancy.

In contrast, those at greater risk from the hypoglycemia associated with intensive glycemic control are people who are older and frail, have longer duration of diabetes, have macro- and microvascular complications and comorbidities, are unable to safely follow complex regimens, and have shorter life expectancy.

She also pointed to a 2010 retrospective cohort study that identified a U-shaped curve relationship between hemoglobin A1c and all-cause mortality and cardiac events, suggesting that “there is a threshold beyond which, if the control is tighter, then the risk of mortality increases.”

Medications used by older adults with diabetes also pose risks, as shown in a study published in 2011 of 99,628 emergency hospitalizations for adverse drug events among U.S. adults aged 65 years and older conducted during 2007-2009.

In that study, warfarin topped the list, but insulin was the second most common, and oral hypoglycemic agents were also in the top 5.

And those episodes of emergency hospitalization, another study found, were associated with a 3.4-fold increased risk for 5-year mortality.

“ It increases the risk of cognitive decline, depression, frailty, falls and fractures, functional decline, anxiety, and fear of hypoglycemia; and it lowers quality of life,” Dr. Munshi explained.

Other unintended consequences of strict glycemic control in older adults include difficulty coping with complex regimens, increased caregiver burden, loss of independence, and increased financial burden, she added.

Control in healthy adults

A valid question, Dr. Munshi said, is whether strict glycemic control might be appropriate for older adults who are still healthy.

She responded to that by explaining that there is a phenomenon of aging called homeostenosis, a physical limit beyond which homeostasis cannot be restored in the presence of stressors, such as hypoglycemia leading to a fall, hospitalization, delirium, and poor outcome.

Another reasonable question, she added, was whether strict glycemic control in older adults could be achieved more safely and with greater benefit by using newer agents with lower risks for hypoglycemia that have been found to have cardiovascular and renal benefits.

To that, she noted that it’s not clear whether those benefits are a result of glycemic control, that the duration of the trials has been short (2-3 years), and drug interactions and side effects in populations with multiple morbidities have not been studied. Moreover, “cost and availability need consideration,” she said.

And so, she concluded, “Is strict glycemic control in the elderly really worth the risk? My answer would be no.”

The case for ...

Dr. Suzuki, a professor in the division of diabetes, metabolism, endocrinology, rheumatology, and collagen diseases at Tokyo Medical University, argued that strict glycemic control in the elderly is not “meaningless.”

He began by pointing out that his country, Japan, is “one of the most highly aging societies in the world.”

His arguments were based on three points: The elderly population is “full of diversity;” HbA1c is “not a perfect marker of glycemic control;” and new glucose-lowering drug classes may have benefits beyond reduction of blood glucose levels.

He also noted that there is no consensus on the definition of “elderly.”

Most developed countries use age 65 years and older as the cut-off, but the United Nations defines being elderly as 60 years and older, whereas the International Diabetes Federation’s guideline for managing older people with type 2 diabetes, uses 70 and older. These differences, he asserted, emphasize “the difficulty to generalize the gap between calendar age and biological age.”

Dr. Suzuki also pointed out that the American Diabetes Association’s Standards of Medical Care in Diabetes 2019 does not mention age as a consideration in individualizing glycemic targets.

Instead, factors such as risk for hypoglycemia, disease duration, life expectancy, comorbidities, established vascular complications, patient preference, and resources/support systems are listed. “We need to evaluate and assess these factors individually for every patient,” he asserted.

“Older age is very heterogeneous. Some people are very robust and active, while others are sick and frail ... We need to be careful about the active, healthy people because sometimes they need more intensified treatment to prevent complications of diabetes.”

Dr. Suzuki also pointed out that people hold important positions that require good health well into their 60s and 70s. “In many countries, many older individuals with or without diabetes have responsibilities and play important roles in their societies. Diabetes can be a big barrier for them ... Sometimes it requires hospitalizations, and they need to stop business.”

He cited an observational study from a Swedish national database showing a significant difference in hospitalizations for heart failure for older adults with diabetes and HbA1c of between 6% and 7%, compared with 7%-8%, among both men and women aged 71-75 and 61-65 years. In that study, investigators found that poor glycemic control (HbA1c of more than 7%) was associated with an increased risk of hospitalization for heart failure in patients with type 2 diabetes.

“This is, of course, an observational study, so we cannot draw a conclusion, but still, it strongly suggests that lower than 7% may prevent hospitalization for heart failure in elderly people.”

Glycemic variability

Another point is that HbA1c does not reflect glycemic variability, so it’s impossible to tell just from that measure the extent to which an individual is experiencing hypoglycemia – that is, two people can have the same A1c level, yet one experiences frequent hypoglycemia whereas the other never does.

“So, determining treatment based solely on A1c may be risky,” Dr. Suzuki noted.

And recently, the availability of continuous glucose monitoring is shifting the definition of “strict” glycemic control from “average” glucose to “time in range,” which also allows for a determination of the key metric “time below range.”

Recent international guidelines advise that, for older adults, fewer than 1% of readings should be below 70 mg/dL (3.9 mmol/L), compared with fewer than 4% for most other individuals with diabetes.

Thus, “in terms of avoiding hypoglycemia, older adults have a ‘stricter’ range. In other words, less stringency for high-risk people does not always mean broader allowance range in any glycemic profiles,” Dr. Suzuki noted.

However, newer drugs that don’t increase the risk for hypoglycemia are available for patients with type 2 diabetes.

Dr. Suzuki pointed to his own 2018 study demonstrating that the dipeptidyl peptidase‐4 (DPP-4) inhibitor sitagliptin had a greater ability to reduce daily glucose fluctuations in drug-naive Japanese patients with type 2 diabetes, compared with the sulfonylurea glibenclamide.

Similarly, in the Trial Evaluating Cardiovascular Outcomes with Sitagliptin (TECOS), the DPP-4 inhibitor did not increase severe hypoglycemia in the subgroup of participants aged 75 years and older.

And in several of the recent cardiovascular outcomes trials demonstrating cardiovascular benefit for type 2 diabetes agents, those benefits have been just as robust among older participants, he stressed.

These include the Researching Cardiovascular Events With a Weekly Incretin in Diabetes (REWIND) trial, in which those aged above and below 66 years experienced similar results with dulaglutide, a GLP-1 agonist.

And the landmark Empagliflozin Cardiovascular Outcome Event Trial in Type 2 Diabetes Mellitus Patients (EMPA-REG OUTCOME), which actually showed even greater protection against cardiovascular events among subjects aged 65 and older (hazard ratio, 0.86).

Also in the Dapagliflozin-Heart Failure (Dapa-HF) study, the SGLT-2 inhibitor reduced worsening of heart failure in patients with heart failure with reduced ejection fraction, regardless of age or presence of diabetes.

“I argue that older patients have rights to receive appropriate and effective treatment to prevent diabetes complications,” Dr. Suzuki concluded.

Dr. Munshi is a consultant for Sanofi and Lilly. Dr. Suzuki has received honoraria from MSD, Novo Nordisk, Novartis Pharma, Takeda, Mitsubishi Tanabe, and Eli Lilly Japan.

A version of this story originally appeared on Medscape.com.

AT THE IDF CONGRESS 2019

BUSAN, SOUTH KOREA – The question of whether or not strict glycemic control is appropriate for older adults was the subject of a debate between two experts at the 2019 congress of the International Diabetes Federation.

Current guidelines from the Endocrine Society addressing diabetes management in older adults call for shared decision making and individualized approaches, taking into account comorbidities, complications, and special situations.

Medha Munshi, MD, and Ryo Suzuki, MD, PhD, took differing approaches to the risk-versus-benefit equation for older patients.

The case against ...

Dr. Munshi, director of the Joslin geriatric diabetes program at Beth Israel Deaconess Medical Center, Boston, started the debate by stating, “Yes, strict glycemic control in the elderly is meaningless.”

She based this on two main points: The benefits of strict glycemic control in older adults are not clear, and the risks are “catastrophic and well documented.”

The first problem, said Dr. Munshi, is that there is a dearth of data in older adults. In a 2013 review of 2,484 diabetes-focused studies registered on clinicaltrials.gov, just 0.6% included participants who were older than 65 years, whereas 30.8% specifically excluded that age group, and 54.9% excluded people older than 70 years.

Another analysis of 440 studies that investigated treatments for type 2 diabetes showed that, of trials that did include older adults, more than three-quarters (76.8%) excluded those with comorbidities, nearly a third (29.5%) excluded people with polypharmacy or specific drugs, and 18.4% excluded those with cognitive impairment.

“So, the trials are not targeted toward older adults, and those that are, exclude people with multiple comorbidities, so the [participants] who are left in the trials are not [representative of the patients] we see in the clinic,” Dr. Munshi emphasized.

Among the major trials that evaluated intensive treatment versus usual care in type 2 diabetes – including the UK Prospective Diabetes Study (UKPDS), the Veterans Administration Diabetes Trial (VADT), and the Action to Control Cardiovascular Risk in Diabetes (ACCORD) trial – no macrovascular benefits were found except in UKPDS, and evidence of harm was found in ACCORD.

What those trials suggested, said Dr. Munshi, is that the patients who do better with intensive glycemic control are younger, have a shorter duration of disease, fewer complications and comorbidities at baseline, better overall health, and longer life expectancy.

In contrast, those at greater risk from the hypoglycemia associated with intensive glycemic control are people who are older and frail, have longer duration of diabetes, have macro- and microvascular complications and comorbidities, are unable to safely follow complex regimens, and have shorter life expectancy.

She also pointed to a 2010 retrospective cohort study that identified a U-shaped curve relationship between hemoglobin A1c and all-cause mortality and cardiac events, suggesting that “there is a threshold beyond which, if the control is tighter, then the risk of mortality increases.”

Medications used by older adults with diabetes also pose risks, as shown in a study published in 2011 of 99,628 emergency hospitalizations for adverse drug events among U.S. adults aged 65 years and older conducted during 2007-2009.

In that study, warfarin topped the list, but insulin was the second most common, and oral hypoglycemic agents were also in the top 5.

And those episodes of emergency hospitalization, another study found, were associated with a 3.4-fold increased risk for 5-year mortality.

“ It increases the risk of cognitive decline, depression, frailty, falls and fractures, functional decline, anxiety, and fear of hypoglycemia; and it lowers quality of life,” Dr. Munshi explained.

Other unintended consequences of strict glycemic control in older adults include difficulty coping with complex regimens, increased caregiver burden, loss of independence, and increased financial burden, she added.

Control in healthy adults

A valid question, Dr. Munshi said, is whether strict glycemic control might be appropriate for older adults who are still healthy.

She responded to that by explaining that there is a phenomenon of aging called homeostenosis, a physical limit beyond which homeostasis cannot be restored in the presence of stressors, such as hypoglycemia leading to a fall, hospitalization, delirium, and poor outcome.

Another reasonable question, she added, was whether strict glycemic control in older adults could be achieved more safely and with greater benefit by using newer agents with lower risks for hypoglycemia that have been found to have cardiovascular and renal benefits.

To that, she noted that it’s not clear whether those benefits are a result of glycemic control, that the duration of the trials has been short (2-3 years), and that drug interactions and side effects in populations with multiple morbidities have not been studied. Moreover, “cost and availability need consideration,” she said.

And so, she concluded, “Is strict glycemic control in the elderly really worth the risk? My answer would be no.”

The case for ...

Dr. Suzuki, a professor in the division of diabetes, metabolism, endocrinology, rheumatology, and collagen diseases at Tokyo Medical University, argued that strict glycemic control in the elderly is not “meaningless.”

He began by pointing out that his country, Japan, is “one of the most highly aging societies in the world.”

His arguments were based on three points: The elderly population is “full of diversity;” HbA1c is “not a perfect marker of glycemic control;” and new glucose-lowering drug classes may have benefits beyond reduction of blood glucose levels.

He also noted that there is no consensus on the definition of “elderly.”

Most developed countries use age 65 years and older as the cut-off, but the United Nations defines being elderly as 60 years and older, whereas the International Diabetes Federation’s guideline for managing older people with type 2 diabetes uses 70 years and older. These differences, he asserted, emphasize “the difficulty to generalize the gap between calendar age and biological age.”

Dr. Suzuki also pointed out that the American Diabetes Association’s Standards of Medical Care in Diabetes 2019 does not mention age as a consideration in individualizing glycemic targets.

Instead, factors such as risk for hypoglycemia, disease duration, life expectancy, comorbidities, established vascular complications, patient preference, and resources/support systems are listed. “We need to evaluate and assess these factors individually for every patient,” he asserted.

“Older age is very heterogeneous. Some people are very robust and active, while others are sick and frail ... We need to be careful about the active, healthy people because sometimes they need more intensified treatment to prevent complications of diabetes.”

Dr. Suzuki also pointed out that people hold important positions that require good health well into their 60s and 70s. “In many countries, many older individuals with or without diabetes have responsibilities and play important roles in their societies. Diabetes can be a big barrier for them ... Sometimes it requires hospitalizations, and they need to stop business.”

He cited an observational study from a Swedish national database showing a significant difference in hospitalizations for heart failure for older adults with diabetes and HbA1c of between 6% and 7%, compared with 7%-8%, among both men and women aged 71-75 and 61-65 years. In that study, investigators found that poor glycemic control (HbA1c of more than 7%) was associated with an increased risk of hospitalization for heart failure in patients with type 2 diabetes.

“This is, of course, an observational study, so we cannot draw a conclusion, but still, it strongly suggests that lower than 7% may prevent hospitalization for heart failure in elderly people.”

Glycemic variability

Another point is that HbA1c does not reflect glycemic variability, so it’s impossible to tell just from that measure the extent to which an individual is experiencing hypoglycemia – that is, two people can have the same A1c level, yet one experiences frequent hypoglycemia whereas the other never does.

“So, determining treatment based solely on A1c may be risky,” Dr. Suzuki noted.

And recently, the availability of continuous glucose monitoring is shifting the definition of “strict” glycemic control from “average” glucose to “time in range,” which also allows for a determination of the key metric “time below range.”

Recent international guidelines advise that, for older adults, fewer than 1% of readings should be below 70 mg/dL (3.9 mmol/L), compared with fewer than 4% for most other individuals with diabetes.

Thus, “in terms of avoiding hypoglycemia, older adults have a ‘stricter’ range. In other words, less stringency for high-risk people does not always mean broader allowance range in any glycemic profiles,” Dr. Suzuki noted.

However, newer drugs that don’t increase the risk for hypoglycemia are available for patients with type 2 diabetes.

Dr. Suzuki pointed to his own 2018 study demonstrating that the dipeptidyl peptidase‐4 (DPP-4) inhibitor sitagliptin had a greater ability to reduce daily glucose fluctuations in drug-naive Japanese patients with type 2 diabetes, compared with the sulfonylurea glibenclamide.

Similarly, in the Trial Evaluating Cardiovascular Outcomes with Sitagliptin (TECOS), the DPP-4 inhibitor did not increase severe hypoglycemia in the subgroup of participants aged 75 years and older.

And in several of the recent cardiovascular outcomes trials demonstrating cardiovascular benefit for type 2 diabetes agents, those benefits have been just as robust among older participants, he stressed.

These include the Researching Cardiovascular Events With a Weekly Incretin in Diabetes (REWIND) trial, in which those aged above and below 66 years experienced similar results with dulaglutide, a GLP-1 agonist.

They also include the landmark Empagliflozin Cardiovascular Outcome Event Trial in Type 2 Diabetes Mellitus Patients (EMPA-REG OUTCOME), which actually showed even greater protection against cardiovascular events among subjects aged 65 and older (hazard ratio, 0.86).

Also in the Dapagliflozin-Heart Failure (Dapa-HF) study, the SGLT-2 inhibitor reduced worsening of heart failure in patients with heart failure with reduced ejection fraction, regardless of age or presence of diabetes.

“I argue that older patients have rights to receive appropriate and effective treatment to prevent diabetes complications,” Dr. Suzuki concluded.

Dr. Munshi is a consultant for Sanofi and Lilly. Dr. Suzuki has received honoraria from MSD, Novo Nordisk, Novartis Pharma, Takeda, Mitsubishi Tanabe, and Eli Lilly Japan.

A version of this story originally appeared on Medscape.com.

Social anxiety more likely with inattentive ADHD, psychiatric comorbidities

Social anxiety is more likely in adolescents aged 12-18 years with predominantly inattentive ADHD and psychiatric comorbidities, according to María Jesús Mardomingo-Sanz, MD, PhD, and associates.

A total of 234 ADHD patients with a mean age of 14.9 years were recruited for the cross-sectional, observational study, and social anxiety was assessed using the Social Anxiety Scale for Adolescents (SAS-A). Just under 70% were male; 37.2% had predominantly inattentive disease, 9% had predominantly hyperactive-impulsive disease, and 51.7% had combined-type disease, reported Dr. Mardomingo-Sanz, of the child psychiatry and psychology section at the Hospital General Universitario Gregorio Marañón in Madrid, and associates. The study was published in Anales de Pediatría.

The investigators found that 50.4% of patients had a psychiatric comorbidity. Learning and communication disorders, and anxiety disorders were the most common, occurring in 20.1% and 19.2% of all patients, respectively. Patients within the cohort scored significantly higher on the SAS-A, compared with reference values in a healthy population.

Patients with predominantly inattentive disease had significantly higher scores in the SAS-A, compared with those with predominantly hyperactive-impulsive disease (P = .015). Comorbid anxiety disorder was associated with the worst SAS-A scores (P less than .001).

“Social anxiety greatly influences the way in which children and adolescents interact with the surrounding environment and react to it, and therefore can contribute to the development of psychiatric comorbidities. Social anxiety detected by the SAS-A questionnaire is not diagnostic of an anxiety disorder, but detecting it is important, as it can contribute to the secondary prevention of future comorbidities that could lead to less favorable outcomes of this stage of development in patients with ADHD,” the investigators concluded.

Laboratorios Farmacéuticos funded the study, and the investigators reported receiving fees from and being employed by Laboratorios Farmacéuticos.

SOURCE: Mardomingo-Sanz MJ et al. An Pediatr (Barc). 2019;90(6):349-61.

FROM ANALES DE PEDIATRÍA

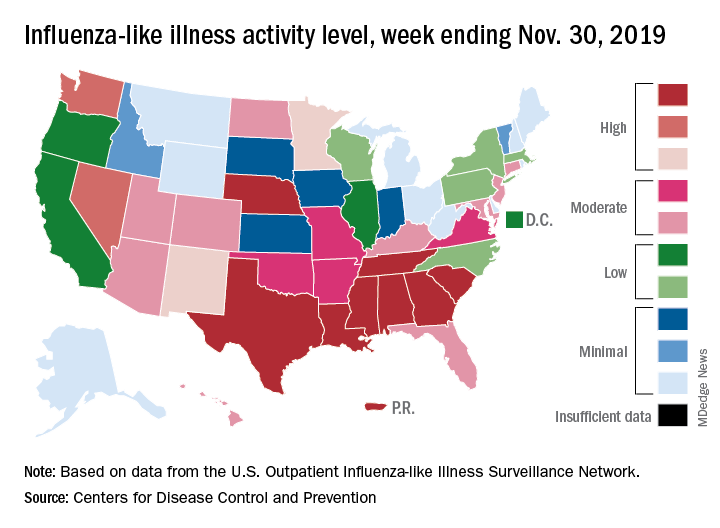

Influenza already in midseason form