
Routine clinical data may predict psychiatric adverse effects from levetiracetam

Among patients with epilepsy, a simple model that incorporates factors such as a patient’s sex and history of depression, anxiety, and recreational drug use may help predict the risk of a psychiatric adverse effect from levetiracetam, according to a study published in JAMA Neurology.

“This study derived 2 simple models that predict the risk of a psychiatric adverse effect from levetiracetam” and can “guide prescription in clinical practice,” said Colin B. Josephson, MD, of the department of clinical neurosciences at the University of Calgary (Canada) and his research colleagues.

Levetiracetam is a commonly used first-line treatment for epilepsy because of its ease of use, broad spectrum of action, and safety profile, the researchers said. Still, psychiatric adverse reactions occur in as many as 16% of patients and frequently require treatment discontinuation.

To evaluate whether routine clinical data can predict which patients with epilepsy will experience a psychiatric adverse event from levetiracetam, the investigators analyzed data from The Health Improvement Network (THIN) database, which includes anonymized patient records from general practices in the United Kingdom. They assessed 21 candidate variables, identified through a literature search and input from an expert panel, for possible inclusion in the prediction models.

Their analysis included data from Jan. 1, 2000–May 31, 2012. Among the more than 11 million patients in THIN, the researchers identified 7,300 incident cases of epilepsy. The researchers examined when patients received a first prescription for levetiracetam and whether patients experienced a psychiatric symptom or disorder within 2 years of the prescription.

Among 1,173 patients with epilepsy receiving levetiracetam, the median age was 39 years; about half were women. In all, 14.1% experienced a psychiatric symptom or disorder within 2 years of prescription. Women were more likely to report a psychiatric symptom (odds ratio, 1.41), as were patients with a history of social deprivation (OR, 1.15), anxiety (OR, 1.74), recreational drug use (OR, 2.02), or depression (OR, 2.20).

The final model included female sex, history of depression, history of anxiety, and history of recreational drug use. Low socioeconomic status was not included because “it would be challenging to assign this score in clinic,” the authors said.

“There was a gradient in risk probabilities increasing from 8% for 0 risk factors to 11%-17% for 1, 17% to 31% for 2, 30%-42% for 3, and 49% when all risk factors were present,” Dr. Josephson and his colleagues indicated. “The discovered incremental probability of reporting a psychiatric sign can help generate an index of suspicion to counsel patients.”

For example, for a woman with depression, the model “suggests she would be at risk,” with a 22% chance of a psychiatric adverse event in the 2 years after receiving a levetiracetam prescription.
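The reported gradient can be sketched as a lookup from the number of final-model risk factors present (female sex, depression, anxiety, recreational drug use) to the probability range the authors reported. This is a minimal Python sketch, not the published model: the `Patient` record and the function names are hypothetical, and because the published estimates depend on which factors are present, most counts map only to a range.

```python
from dataclasses import dataclass

# Reported 2-year probability ranges (%) of a psychiatric adverse event,
# keyed by the number of final-model risk factors present.
# Single reported values are stored as (low, high) with low == high.
REPORTED_RISK_RANGES = {
    0: (8, 8),
    1: (11, 17),
    2: (17, 31),
    3: (30, 42),
    4: (49, 49),
}


@dataclass
class Patient:
    # Hypothetical record holding the 4 factors in the final model.
    female: bool
    depression: bool
    anxiety: bool
    recreational_drug_use: bool


def count_risk_factors(p: Patient) -> int:
    """Count how many of the 4 final-model risk factors are present."""
    return sum([p.female, p.depression, p.anxiety, p.recreational_drug_use])


def reported_risk_range(p: Patient) -> tuple[int, int]:
    """Return the reported probability range (%) for this patient's count.

    This is NOT the published model: the exact estimate depends on which
    factors are present, which is why most counts map to a range.
    """
    return REPORTED_RISK_RANGES[count_risk_factors(p)]


# The article's example: a woman with a history of depression.
example = Patient(female=True, depression=True, anxiety=False,
                  recreational_drug_use=False)
low, high = reported_risk_range(example)
print(f"{low}%-{high}%")  # prints: 17%-31%
```

For the woman with depression, the count is 2 and the lookup returns 17% to 31%, a range that brackets the model's specific 22% estimate.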

The researchers created a second prediction algorithm based on data from patients without documentation of a mental health sign, symptom, or disorder prior to their levetiracetam prescription. This model incorporated age, sex, recreational drug use, and levetiracetam daily dose; it performed comparably well and might be used to help determine whether levetiracetam can be prescribed safely, according to Dr. Josephson and his colleagues.

The authors noted that the study was limited by an inability to evaluate medication adherence and seizure type and frequency. One advantage of the study’s design is that it may have avoided expectation bias, because general practitioners were not primed to anticipate psychiatric adverse events or to have a lower threshold for diagnosing them.

The authors disclosed research fellowships and support from foundations and federal agencies.

SOURCE: Josephson CB et al. JAMA Neurol. 2019 Jan 28. doi: 10.1001/jamaneurol.2018.4561.

FROM JAMA NEUROLOGY

Key clinical point: Among patients with epilepsy, a simple model may help predict the risk of a psychiatric adverse effect from levetiracetam.

Major finding: The likelihood of a psychiatric adverse event increases from 8% for patients with no risk factors to 49% with all risk factors present.

Study details: A retrospective open cohort study of 1,173 patients with epilepsy receiving levetiracetam in the United Kingdom.

Disclosures: The authors disclosed research fellowships and support from foundations and federal agencies.

Source: Josephson CB et al. JAMA Neurol. 2019 Jan 28. doi: 10.1001/jamaneurol.2018.4561

To refer—or not?

When I was training to become a family physician, my mentor often told me that a competent family physician should be able to manage about 80% of patients’ office visits without consultation. I am not sure where that figure came from, but my 40 years of experience in family medicine supports it. Of course, the flip side of that coin is having the wisdom to refer patients who really need a specialist’s diagnostic or treatment skills. The “rub” is that when I do need a specialist’s help, the wait for an appointment is often unacceptably long, both for me and for my patients.

One way to help alleviate the logjam of referrals is to manage more medical problems ourselves. Now I don’t mean holding on to patients who definitely need a referral. But I do think we should avoid being too quick to hand off a patient. Let me explain.

When I was Chair of Family Medicine at Cleveland Clinic, I asked my specialty colleagues what percentage of the referred patients they saw in their offices could be managed competently by a well-trained family physician. The usual answer—from a variety of specialists—was “about 30%.” If we took care of that 30% of patients ourselves, it would go a long way toward freeing up specialists’ schedules to see the patients who truly require their expertise.

Some public health systems, such as the University of California San Francisco Medical Center,1 have implemented successful triage systems to alleviate the referral backlog. Patients are triaged by a specialist and assigned to 1 of 3 categories: 1) urgent—the patient will be seen right away, 2) non-urgent—the patient will be seen as soon as possible (usually within 2 weeks), or 3) phone/email consultation—the specialist provides diagnostic and management advice electronically, or by phone, but does not see the patient.

The issue of referral comes to mind this month in light of our cover story on migraine headache management. Migraine is one of those conditions that is often referred for specialist care, but can, in many cases, be competently managed by family physicians. The diagnosis of migraine is made almost entirely by history and physical exam, and there are many treatments for acute attacks and prevention that are effective and can be prescribed by family physicians and other primary health care professionals.

Yes, patients with more severe migraine may need a specialist consultation. But let’s remain cognizant of the fact that a good percentage of our patients will be best served staying right where they are—in the office of their family physician.

1. Chen AH, Murphy EJ, Yee HF. eReferral—a new model for integrated care. N Engl J Med. 2013;368:2450-2453.

Alcohol use disorder: How best to screen and intervene

THE CASE

Ms. E, a 42-year-old woman, visited her new physician for a physical exam. When asked about alcohol intake, she reported that she drank 3 to 4 beers after work and sometimes 5 to 8 beers a day on the weekends. Occasionally, she exceeded those amounts, but she didn’t feel guilty about her drinking. She was often late to work and said her relationship with her boyfriend was strained. A review of systems was positive for fatigue, poor concentration, abdominal pain, and weight gain. Her body mass index was 41, pulse 100 beats/min, blood pressure 125/75 mm Hg, and she was afebrile. Her physical exam was otherwise within normal limits.

How would you proceed with this patient?

Alcohol use disorder (AUD) is a common and often untreated condition that is increasingly prevalent in the United States.1 The Diagnostic and Statistical Manual of Mental Disorders, 5th Edition (DSM-5) characterizes AUD as a combination of signs and symptoms typifying alcohol abuse and dependence (discussed below).2

Data from the 2015 National Survey on Drug Use and Health (NSDUH) showed that 15.7 million Americans had AUD, including 6.2% of adults ages 18 years or older and 2.5% of adolescents ages 12 to 17 years.3

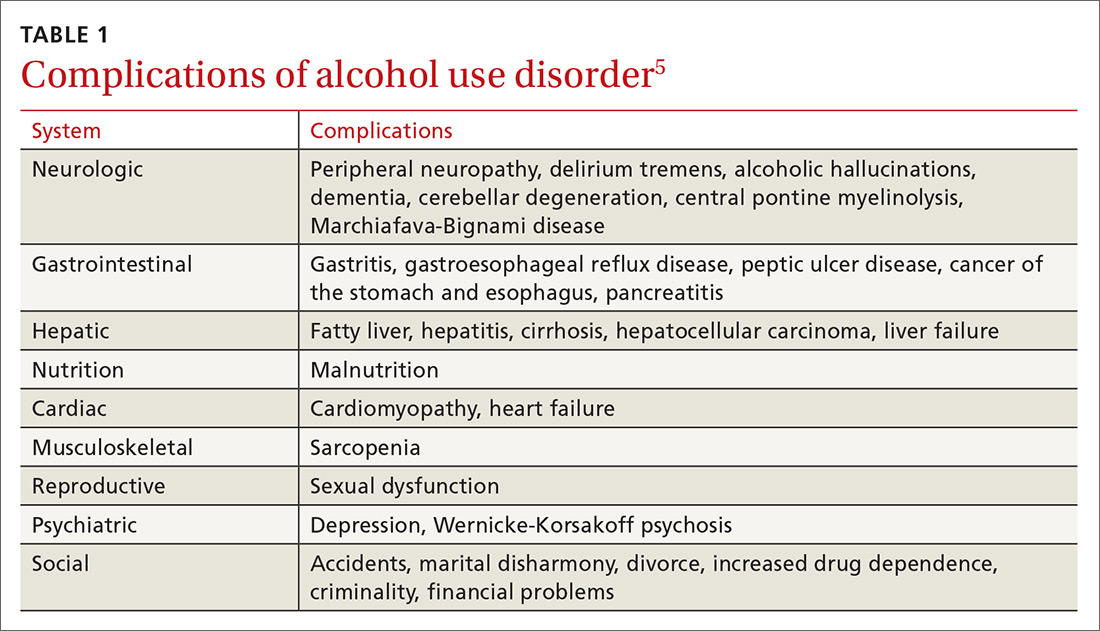

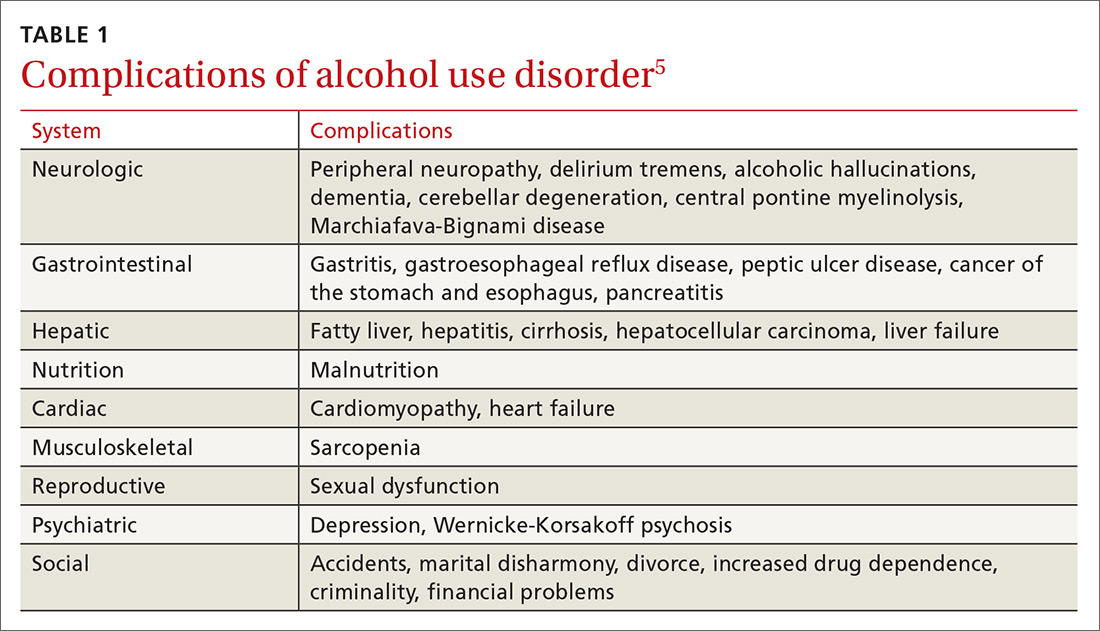

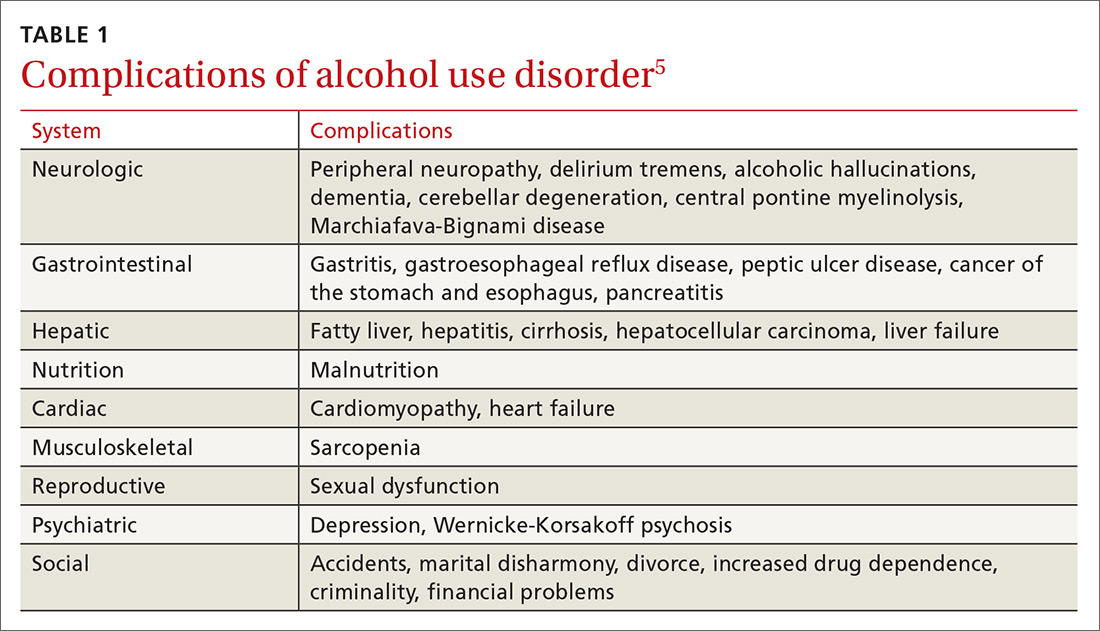

Alcohol use and AUD account for an estimated 3.8% of all global deaths and 4.6% of global disability-adjusted life years.4 AUD adversely affects several organ systems (TABLE 1),5 and patients with AUD are sicker and more likely to die younger than those without AUD.4 In the United States, prevalence of AUD has increased in recent years among women, older adults, racial minorities, and individuals with a low education level.6

Screening for AUD is reasonable and straightforward, although diagnosis and treatment of AUD in primary care settings may be challenging due to competing clinical priorities; lack of training, resources, and support; and skepticism about the efficacy of behavioral and pharmacologic treatments.7,8 However, family physicians are in an excellent position to diagnose and help address the complex biopsychosocial needs of patients with AUD, often in collaboration with colleagues and community organizations.

Signs and symptoms of AUD

In clinical practice, at least 2 of the following 11 behaviors or symptoms are required to diagnose AUD:2

- consuming alcohol in larger amounts or over a longer period than intended

- persistent desire or unsuccessful efforts to cut down or control alcohol use

- making a significant effort to obtain, use, or recover from alcohol

- cravings or urges to use alcohol

- recurrent failure to fulfill major work, school, or social obligations

- continued alcohol use despite recurrent social and interpersonal problems

- giving up social, occupational, and recreational activities due to alcohol

- using alcohol in physically dangerous situations

- continued alcohol use despite having physical or psychological problems

- tolerance to alcohol’s effects

- withdrawal symptoms.

Patients meet criteria for mild AUD severity if they exhibit 2 or 3 symptoms, moderate AUD with 4 or 5 symptoms, and severe AUD if there are 6 or more symptoms.2

Those who meet criteria for AUD and are able to stop using alcohol are deemed to be in early remission if the criteria have gone unfulfilled for at least 3 months and less than 12 months. Patients are considered to be in sustained remission if they have not met criteria for AUD at any time during a period of 12 months or longer.
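The severity and remission rules above reduce to simple thresholds. The minimal Python sketch below assumes the clinician has already counted how many of the 11 criteria are met and how many months have passed since the criteria were last met; the function names are hypothetical, and the remission check applies only to patients who previously met criteria for AUD.

```python
from typing import Optional

TOTAL_DSM5_AUD_CRITERIA = 11  # number of criteria listed above


def aud_severity(criteria_met: int) -> Optional[str]:
    """Map the number of DSM-5 criteria met to a severity level.

    Returns None when fewer than 2 criteria are met (AUD not diagnosed).
    """
    if not 0 <= criteria_met <= TOTAL_DSM5_AUD_CRITERIA:
        raise ValueError("criteria_met must be between 0 and 11")
    if criteria_met < 2:
        return None
    if criteria_met <= 3:
        return "mild"      # 2 or 3 symptoms
    if criteria_met <= 5:
        return "moderate"  # 4 or 5 symptoms
    return "severe"        # 6 or more symptoms


def remission_status(months_without_criteria: float) -> Optional[str]:
    """Classify remission for a patient who previously met AUD criteria.

    Early remission: criteria unmet for at least 3 but less than 12 months.
    Sustained remission: criteria unmet for 12 months or longer.
    Returns None if criteria have been unmet for less than 3 months.
    """
    if months_without_criteria < 0:
        raise ValueError("months_without_criteria cannot be negative")
    if months_without_criteria >= 12:
        return "sustained"
    if months_without_criteria >= 3:
        return "early"
    return None


print(aud_severity(4), remission_status(6))  # prints: moderate early
```

Returning None rather than a label for "no diagnosis" and "not yet in remission" keeps those cases distinct from the named categories.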

How to detect AUD

Several clues in a patient’s history can suggest AUD (TABLE 2).9,10 Most imbibers are unaware of the dangers and may consider themselves merely “social drinkers.” Binge drinking may be an early indicator of vulnerability to AUD and should be assessed as part of a thorough clinical evaluation.11 The US Preventive Services Task Force (USPSTF) recommends (Grade B) that clinicians screen adults ages 18 years or older for alcohol misuse.12

Studies demonstrate that both genetic and environmental factors play important roles in the development of AUD.13 A family history of excessive alcohol use increases the risk of AUD. Comorbidity of AUD and other mental health conditions is extremely common. For example, high rates of association between major depressive disorder and AUD have been observed.14

Tools to use in screening and diagnosing AUD

Screening for AUD during an office visit can be done fairly quickly. While 96% of primary care physicians screen for alcohol misuse in some way, only 38% use 1 of the 3 tools recommended by the USPSTF,15 namely the Alcohol Use Disorders Identification Test (AUDIT), the abbreviated AUDIT-C, or the National Institute on Alcohol Abuse and Alcoholism (NIAAA) single-question screen, all of which detect the full spectrum of alcohol misuse in adults.12 Although the commonly used CAGE questionnaire is one of the most studied self-report tools, it is less sensitive at lower levels of alcohol intake.16

The NIAAA single-question screen asks how many times in the past year the patient had ≥4 drinks (women) or ≥5 drinks (men) in a day.15 The sensitivity and specificity of single-question screening are 82% to 87% and 61% to 79%, respectively, and the test has been validated in several different settings.12 The AUDIT screening tool, freely available from the World Health Organization, is a 10-item questionnaire that probes an individual’s alcohol intake, alcohol dependence, and adverse consequences of alcohol use. Administration of the AUDIT typically requires only 2 minutes. The AUDIT-C,17 an abbreviated version of the AUDIT, asks 3 consumption questions to screen for AUD.

AUDIT scores of 8 to 15 indicate a medium-level alcohol problem, whereas a score of ≥16 indicates a high-level alcohol problem. The AUDIT-C is scored from 0 to 12, with a score of ≥4 indicating a problem in men and ≥3 in women.
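The screening cutoffs described above can be expressed as a few small functions. This minimal Python sketch uses hypothetical names and makes assumptions not stated in this article: that a positive NIAAA single-question screen is 1 or more heavy-drinking days in the past year (as in NIAAA clinician guidance), that the full AUDIT total ranges from 0 to 40 and each AUDIT-C item is scored 0 to 4, and that the AUDIT-C cutoff of ≥3 applies to women, as in standard AUDIT-C scoring.

```python
# Cutoffs restated from the text; all names here are hypothetical.
NIAAA_DRINKS_PER_DAY = {"female": 4, "male": 5}  # drinks in a single day
AUDIT_C_CUTOFF = {"female": 3, "male": 4}        # positive at or above this total


def _check_sex(sex: str) -> None:
    if sex not in ("female", "male"):
        raise ValueError("sex must be 'female' or 'male'")


def niaaa_question(sex: str) -> str:
    """Build the NIAAA single question with the sex-specific threshold."""
    _check_sex(sex)
    n = NIAAA_DRINKS_PER_DAY[sex]
    return f"How many times in the past year have you had {n} or more drinks in a day?"


def niaaa_positive(times_in_past_year: int) -> bool:
    # Assumption (NIAAA clinician guidance, not stated in this article):
    # 1 or more heavy-drinking days in the past year is a positive screen.
    return times_in_past_year >= 1


def audit_level(total: int) -> str:
    """Interpret a full AUDIT total (assumed range 0-40)."""
    if not 0 <= total <= 40:
        raise ValueError("AUDIT total must be between 0 and 40")
    if total >= 16:
        return "high"    # >=16: high-level alcohol problem
    if total >= 8:
        return "medium"  # 8-15: medium-level alcohol problem
    return "below medium"


def audit_c_positive(sex: str, item_scores: list[int]) -> bool:
    """AUDIT-C: 3 consumption items, each assumed scored 0-4 (total 0-12)."""
    _check_sex(sex)
    if len(item_scores) != 3 or not all(0 <= s <= 4 for s in item_scores):
        raise ValueError("AUDIT-C needs 3 item scores, each between 0 and 4")
    return sum(item_scores) >= AUDIT_C_CUTOFF[sex]


print(audit_level(17))  # prints: high
```

Applied to Ms. E’s AUDIT score of 17 (reported in the case that follows), `audit_level` returns "high", matching the high-level alcohol problem described there.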

THE CASE

The physician had used the NIAAA single-question screen to determine that Ms. E drank more than 4 beers per day during social events and weekends, which occurred 2 to 3 times per month over the past year. She lives alone and said that she’d been seeing less and less of her boyfriend lately. Her score on the Patient Health Questionnaire (PHQ), which screens for depression, was 11, indicating moderate impairment. Her response on the CAGE questionnaire was negative for a problem with alcohol. However, her AUDIT score was 17, indicating a high-level alcohol problem. Based on these findings, her physician expressed concern that her alcohol use might be contributing to her symptoms and difficulties.

Although she did not have a history of increasing usage per day, a persistent desire to cut down, significant effort to obtain alcohol, or cravings, she was having work troubles and continued to drink even though it was straining relationships, promoting weight gain, and causing abdominal pain.

The physician asked her to schedule a return visit and ordered several blood studies. He also offered to connect her with a colleague he collaborated with who could talk with her about a possible alcohol use disorder and depression.

Selecting blood work in screening for AUD

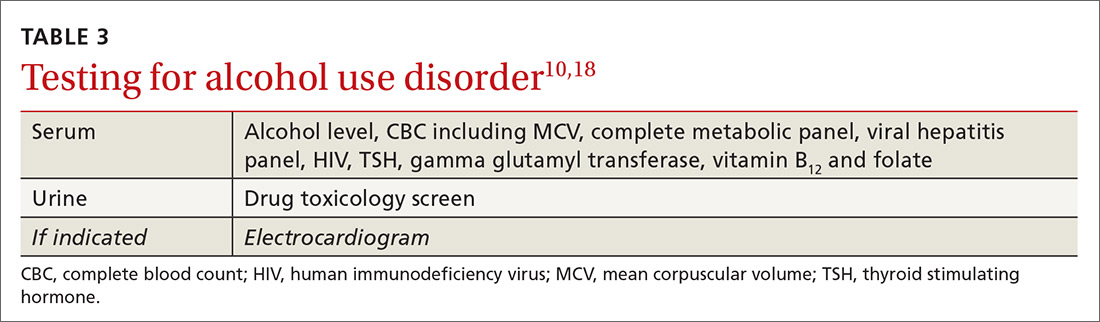

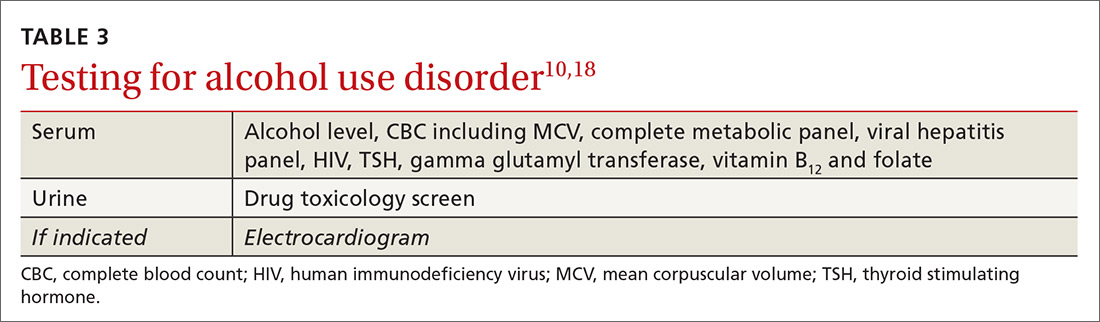

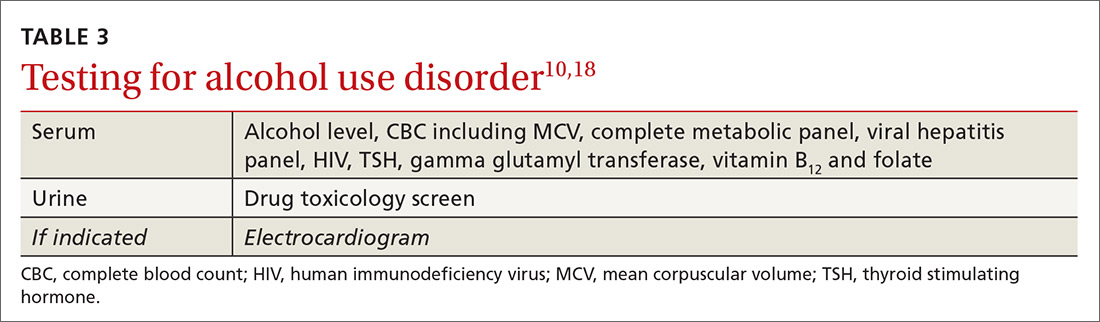

Lab tests used to measure hepatic injury due to alcohol include gamma-glutamyl transferase (GGT), aspartate aminotransferase (AST), alanine aminotransferase (ALT), and mean corpuscular volume (MCV), although these indices of hepatic damage have low specificity. Elevated serum ethanol levels can reveal recent alcohol use, and testing for vitamin deficiencies and other abnormalities can help differentiate other causes of hepatic inflammation and identify co-existing health issues (TABLE 3).10,18 A number of as-yet-unvalidated biomarkers are being studied to assist in screening, diagnosing, and treating AUD.18

What treatment approaches work for AUD?

Family physicians can efficiently and productively address AUD by using alcohol screening and brief intervention, which have been shown to reduce risky drinking. This service can be billed using codes such as CPT 99408 or 99409 or HCPCS H0049, or alongside an evaluation and management (E/M) code using modifier 25.

Treatment of AUD varies and should be customized to each patient’s needs, readiness, preferences, and resources. Individual and group counseling approaches can be effective, and medications are available for inpatient and outpatient settings. Psychotherapy options include brief interventions, 12-step programs (eg, Alcoholics Anonymous: https://www.aa.org/pages/en_US/find-aa-resources), motivational enhancement therapy, and cognitive behavioral therapy. Although it is beyond the scope of this article to describe these options in detail, resources are available for those who wish to learn more.19-21

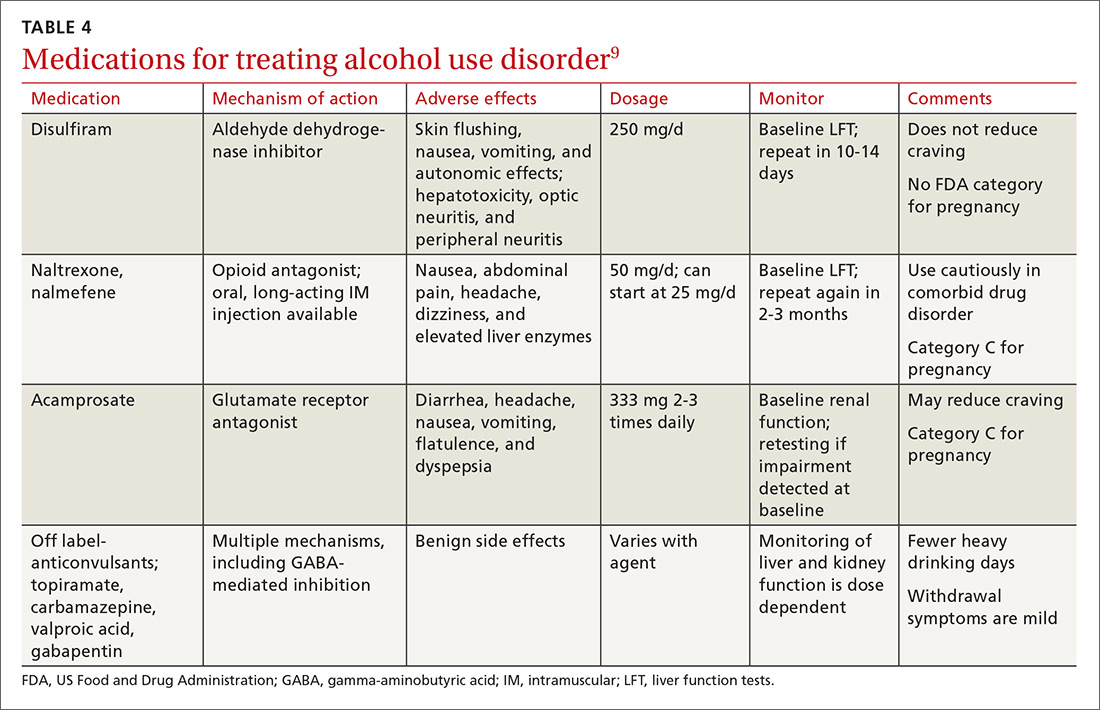

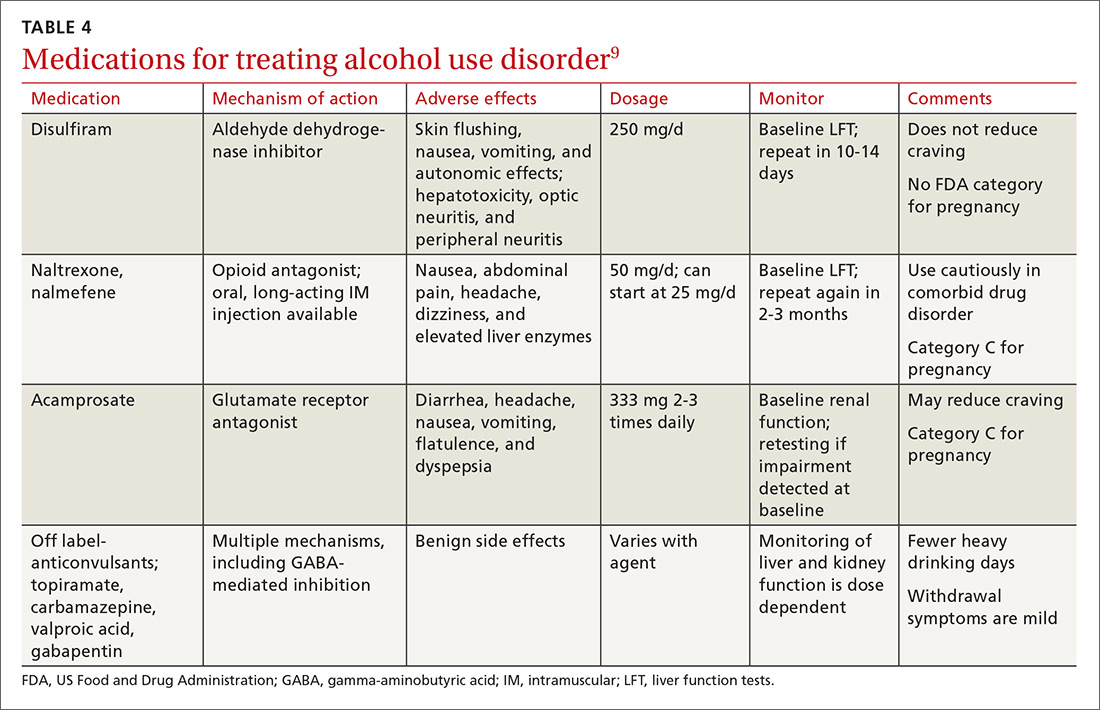

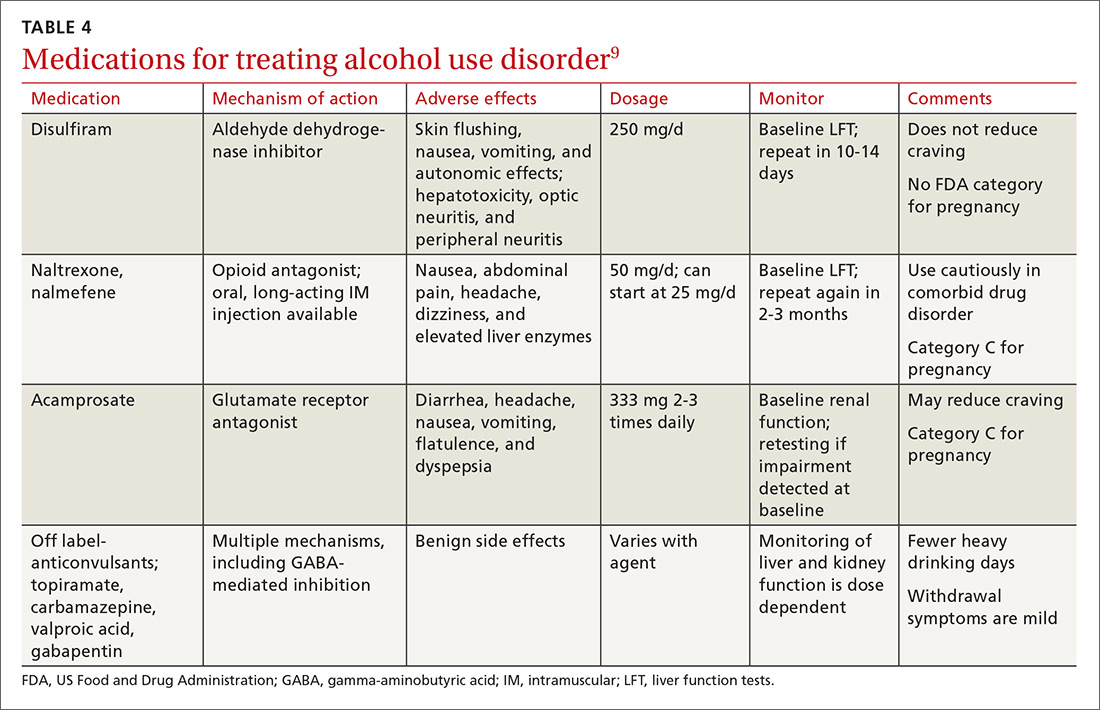

Psychopharmacologic management includes US Food and Drug Administration (FDA)-approved medications such as disulfiram, naltrexone, and acamprosate, and off-label uses of other medications (TABLE 4).9 Not enough empiric evidence is available to judge the effectiveness of these medications in adolescents, and the FDA has not approved them for such use. Evidence from meta-analyses comparing naltrexone and acamprosate has shown naltrexone to be more efficacious in reducing heavy drinking and cravings, while acamprosate is effective in promoting abstinence.22,23 Naltrexone combined with behavioral intervention reduces heavy drinking days and increases the percentage of abstinent days.24

Current guideline recommendations from the American Psychiatric Association25 include:

- Naltrexone and acamprosate are recommended to treat patients with moderate-to-severe AUD in specific circumstances (eg, when nonpharmacologic approaches have failed to produce an effect or when patients prefer to use one of these medications).

- Topiramate and gabapentin are also suggested as medications for patients with moderate-to-severe AUD, but typically after first trying naltrexone and acamprosate.

- Disulfiram generally should not be used as first-line treatment. It produces physical reactions (eg, flushing) if alcohol is consumed within 12 to 24 hours of medication use.

THE CASE

Ms. E was open to the idea of decreasing her alcohol use and agreed that she was depressed. Her lab tests at follow-up were normal other than elevated AST and ALT levels of 90 U/L and 80 U/L, respectively.

She continued to get counseling for her AUD and for her comorbid depression in addition to taking a selective serotonin reuptake inhibitor. She is now in early remission for her alcohol use.

CORRESPONDENCE

Jaividhya Dasarathy, MD, Department of Family Medicine, Metro Health Medical Center, 2500 MetroHealth Drive, Cleveland, OH 44109.

1. Grant BF, Goldstein RB, Saha TD, et al. Epidemiology of DSM-5 alcohol use disorder: results from the National Epidemiologic Survey on Alcohol and Related Conditions III. JAMA Psychiatry. 2015;72:757-766.

2. APA. Diagnostic and Statistical Manual of Mental Disorders, 5th ed. Washington DC; 2013.

3. HHS. Results from the 2015 National Survey on Drug Use and Health: summary of national findings. https://www.samhsa.gov/data/sites/default/files/NSDUH-DetTabs-2015/NSDUH-DetTabs-2015/NSDUH-DetTabs-2015.pdf. Accessed November 27, 2018.

4. Rehm J, Mathers C, Popova S, et al. Global burden of disease and injury and economic cost attributable to alcohol use and alcohol-use disorders. Lancet. 2009;373:2223-2233.

5. Chase V, Neild R, Sadler CW, et al. The medical complications of alcohol use: understanding mechanisms to improve management. Drug Alcohol Rev. 2005;24:253-265.

6. Grant BF, Chou SP, Saha TD, et al. Prevalence of 12-month alcohol use, high-risk drinking, and DSM-IV alcohol use disorder in the United States, 2001-2002 to 2012-2013: results from the National Epidemiologic Survey on Alcohol and Related Conditions. JAMA Psychiatry. 2017;74:911-923.

7. Williams EC, Achtmeyer CE, Young JP, et al. Barriers to and facilitators of alcohol use disorder pharmacotherapy in primary care: a qualitative study in five VA clinics. J Gen Intern Med. 2018;33:258-267.

8. Zhang DX, Li ST, Lee QK, et al. Systematic review of guidelines on managing patients with harmful use of alcohol in primary healthcare settings. Alcohol Alcohol. 2017;52:595-609.

9. Wackernah RC, Minnick MJ, Clapp P. Alcohol use disorder: pathophysiology, effects, and pharmacologic options for treatment. Subst Abuse Rehabil. 2014;5:1-12.

10. Kattimani S, Bharadwaj B. Clinical management of alcohol withdrawal: a systematic review. Ind Psychiatry J. 2013;22:100-108.

11. Gowin JL, Sloan ME, Stangl BL, et al. Vulnerability for alcohol use disorder and rate of alcohol consumption. Am J Psychiatry. 2017;174:1094-1101.

12. Moyer VA; Preventive Services Task Force. Screening and behavioral counseling interventions in primary care to reduce alcohol misuse: U.S. Preventive Services Task Force recommendation statement. Ann Intern Med. 2013;159:210-218.

13. Tarter RE, Alterman AI, Edwards KL. Vulnerability to alcoholism in men: a behavior-genetic perspective. J Stud Alcohol. 1985;46:329-356.

14. Brière FN, Rohde P, Seeley JR, et al. Comorbidity between major depression and alcohol use disorder from adolescence to adulthood [published online ahead of print, October 22, 2013]. Compr Psychiatry. 2014;55:526-533. doi: 10.1016/j.comppsych.2013.10.007.

15. Tan CH, Hungerford DW, Denny CH, et al. Screening for alcohol misuse: practices among U.S. primary care providers, DocStyles 2016. Am J Prev Med. 2018;54:173-180.

16. Aertgeerts B, Buntinx F, Kester A. The value of the CAGE in screening for alcohol abuse and alcohol dependence in general clinical populations: a diagnostic meta-analysis. J Clin Epidemiol. 2004;57:30-39.

17. Bush K, Kivlahan DR, McDonell MB, et al. The AUDIT alcohol consumption questions (AUDIT-C): an effective brief screening test for problem drinking. Ambulatory Care Quality Improvement Project (ACQUIP). Alcohol Use Disorders Identification Test. Arch Intern Med. 1998;158:1789-1795.

18. Nanau RM, Neuman MG. Biomolecules and biomarkers used in diagnosis of alcohol drinking and in monitoring therapeutic interventions. Biomolecules. 2015;5:1339-1385.

19. Raddock M, Martukovich R, Berko E, et al. 7 tools to help patients adopt healthier behaviors. J Fam Pract. 2015;64:97-103.

20. AHRQ. Whitlock EP, Green CA, Polen MR, et al. Behavioral Counseling Interventions in Primary Care to Reduce Risky/Harmful Alcohol Use. 2004. https://www.ncbi.nlm.nih.gov/books/NBK42863/. Accessed November 17, 2018.

21. Miller WR, Baca C, Compton WM, et al. Addressing substance abuse in health care settings. Alcohol Clin Exp Res. 2006;30:292-302.

22. Maisel NC, Blodgett JC, Wilbourne PL, et al. Meta-analysis of naltrexone and acamprosate for treating alcohol use disorders: when are these medications most helpful? Addiction. 2013;108:275-293.

23. Rosner S, Leucht S, Lehert P, et al. Acamprosate supports abstinence, naltrexone prevents excessive drinking: evidence from a meta-analysis with unreported outcomes. J Psychopharmacol. 2008;22:11-23.

24. Anton RF, O’Malley SS, Ciraulo DA, et al. Combined pharmacotherapies and behavioral interventions for alcohol dependence: the COMBINE study: a randomized controlled trial. JAMA. 2006;295:2003-2017.

25. Reus VI, Fochtmann LJ, Bukstein O, et al. The American Psychiatric Association Practice Guideline for the Pharmacological Treatment of Patients With Alcohol Use Disorder. Am J Psychiatry. 2018;175:86-90.

THE CASE

Ms. E, a 42-year-old woman, visited her new physician for a physical exam. When asked about alcohol intake, she reported that she drank 3 to 4 beers after work and sometimes 5 to 8 beers a day on the weekends. Occasionally, she exceeded those amounts, but she didn’t feel guilty about her drinking. She was often late to work and said her relationship with her boyfriend was strained. A review of systems was positive for fatigue, poor concentration, abdominal pain, and weight gain. Her body mass index was 41, pulse 100 beats/min, blood pressure 125/75 mm Hg, and she was afebrile. Her physical exam was otherwise within normal limits.

How would you proceed with this patient?

Alcohol use disorder (AUD) is a common and often untreated condition that is increasingly prevalent in the United States.1 The Diagnostic and Statistical Manual of Mental Disorders, 5th Edition (DSM-5) characterizes AUD as a combination of signs and symptoms typifying alcohol abuse and dependence (discussed below).2

Data from the 2015 National Survey on Drug Use and Health (NSDUH) showed 15.7 million Americans with AUD, affecting 6.2% of the population ages 18 years or older and 2.5% of adolescents ages 12 to 17 years.3

Alcohol use and AUD account for an estimated 3.8% of all global deaths and 4.6% of global disability-adjusted life years.4 AUD adversely affects several systems (TABLE 1),5 and patients with AUD are sicker and more likely to die younger than those without AUD.4 In the United States, the prevalence of AUD has increased in recent years among women, older adults, racial minorities, and individuals with a low education level.6

Screening for AUD is reasonable and straightforward, although diagnosis and treatment of AUD in primary care settings may be challenging due to competing clinical priorities; lack of training, resources, and support; and skepticism about the efficacy of behavioral and pharmacologic treatments.7,8 However, family physicians are in an excellent position to diagnose and help address the complex biopsychosocial needs of patients with AUD, often in collaboration with colleagues and community organizations.

Signs and symptoms of AUD

In clinical practice, at least 2 of the following 11 behaviors or symptoms, occurring within a 12-month period, are required to diagnose AUD:2

- consuming alcohol in larger amounts or over a longer period than intended

- persistent desire or unsuccessful efforts to cut down or control alcohol use

- spending a great deal of time obtaining, using, or recovering from alcohol

- cravings or urges to use alcohol

- recurrent failure to fulfill major obligations at work, school, or home

- continued alcohol use despite persistent or recurrent social and interpersonal problems caused or worsened by alcohol

- giving up or reducing important social, occupational, or recreational activities because of alcohol

- recurrent alcohol use in physically hazardous situations

- continued alcohol use despite knowing that it is likely causing or worsening a physical or psychological problem

- tolerance to alcohol’s effects

- withdrawal symptoms.

AUD severity is classified as mild if a patient exhibits 2 or 3 symptoms, moderate with 4 or 5 symptoms, and severe with 6 or more symptoms.2
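
The counting rule can be written as a small function. This is a minimal sketch, not a diagnostic tool: the criterion labels are hypothetical shorthand for the 11 items listed above, and the function assumes a clinician has already decided which criteria the patient meets within the same 12-month period.

```python
# Hypothetical shorthand labels for the 11 DSM-5 AUD criteria listed above.
DSM5_AUD_CRITERIA = (
    "larger_amounts_or_longer_than_intended",
    "unsuccessful_efforts_to_cut_down",
    "time_spent_obtaining_using_recovering",
    "craving",
    "failure_to_fulfill_obligations",
    "use_despite_social_problems",
    "activities_given_up",
    "hazardous_use",
    "use_despite_physical_or_psychological_problems",
    "tolerance",
    "withdrawal",
)


def aud_severity(criteria_met: set[str]) -> str:
    """Return 'none', 'mild', 'moderate', or 'severe' from the criteria met."""
    unknown = criteria_met - set(DSM5_AUD_CRITERIA)
    if unknown:
        raise ValueError(f"unrecognized criteria: {sorted(unknown)}")
    count = len(criteria_met)
    if count < 2:
        return "none"  # fewer than 2 criteria: diagnostic threshold not reached
    if count <= 3:
        return "mild"  # 2 or 3 criteria
    if count <= 5:
        return "moderate"  # 4 or 5 criteria
    return "severe"  # 6 or more criteria
```

For example, a patient meeting 3 criteria is classified as mild, while fewer than 2 criteria returns "none" because the threshold for an AUD diagnosis is not met.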

Patients who have previously met criteria for AUD are considered to be in early remission if none of the criteria (other than craving) have been met for at least 3 months but less than 12 months. They are considered to be in sustained remission if none of the criteria (other than craving) have been met at any time during a period of 12 months or longer.
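
The remission windows can be sketched the same way. This assumes a single input, the number of months since the patient last met any AUD criterion; the parameter name is hypothetical, and craving is left out of that count because DSM-5 allows it to persist during remission.

```python
def remission_status(months_since_criteria_met: float) -> str:
    """Classify remission for a patient who previously met AUD criteria.

    months_since_criteria_met (hypothetical input): months since any AUD
    criterion other than craving was last met.
    """
    if months_since_criteria_met < 0:
        raise ValueError("months_since_criteria_met must be non-negative")
    if months_since_criteria_met < 3:
        return "not in remission"
    if months_since_criteria_met < 12:
        return "early remission"  # at least 3 but less than 12 months
    return "sustained remission"  # 12 months or longer
```

The half-open intervals mirror the wording "at least 3 months and less than 12 months," so a patient at exactly 12 months moves from early to sustained remission.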

How to detect AUD

Several clues in a patient’s history can suggest AUD (TABLE 2).9,10 Many people who drink heavily are unaware of the dangers and may consider themselves merely “social drinkers.” Binge drinking may be an early indicator of vulnerability to AUD and should be assessed as part of a thorough clinical evaluation.11 The US Preventive Services Task Force (USPSTF) recommends (Grade B) that clinicians screen adults ages 18 years or older for alcohol misuse.12

Studies demonstrate that both genetic and environmental factors play important roles in the development of AUD.13 A family history of excessive alcohol use increases the risk of AUD. Comorbidity of AUD and other mental health conditions is extremely common; for example, major depressive disorder and AUD frequently co-occur.14

Tools to use in screening and diagnosing AUD

Screening for AUD during an office visit can be done fairly quickly. Although 96% of primary care physicians screen for alcohol misuse in some way, only 38% use 1 of the 3 tools recommended by the USPSTF:15 the Alcohol Use Disorders Identification Test (AUDIT), the abbreviated AUDIT-C, or the National Institute on Alcohol Abuse and Alcoholism (NIAAA) single-question screen. These tools detect the full spectrum of alcohol misuse in adults.12 The commonly used CAGE questionnaire is one of the most studied self-report tools, but it is less sensitive at lower levels of alcohol intake.16

The NIAAA single-question screen asks how many times in the past year the patient had ≥4 drinks (women) or ≥5 drinks (men) in a day.15 The sensitivity and specificity of single-question screening are 82% to 87% and 61% to 79%, respectively, and the test has been validated in several different settings.12 The AUDIT screening tool, freely available from the World Health Organization, is a 10-item questionnaire that probes an individual’s alcohol intake, alcohol dependence, and adverse consequences of alcohol use. Administering the AUDIT typically takes only 2 minutes. The AUDIT-C, an abbreviated version of the AUDIT, uses 3 consumption questions to screen for AUD.17
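
As a sketch of how the single-question screen’s sex-specific threshold works, the functions below count heavy-drinking days in a hypothetical record of drinks per day; in practice the patient is simply asked for the count. The function names are hypothetical, and the cutoff of 1 or more heavy-drinking days for a positive screen follows NIAAA’s clinician guidance rather than the text above, so treat it as an assumption.

```python
# Drinks in one day that count toward the NIAAA single-question screen.
HEAVY_DAY_THRESHOLD = {"female": 4, "male": 5}


def heavy_drinking_days(sex: str, drinks_per_day: list[int]) -> int:
    """Count days on which drinks met or exceeded the sex-specific threshold.

    drinks_per_day is a hypothetical log of daily drink counts for the
    past year; the actual screen relies on the patient's recall.
    """
    if sex not in HEAVY_DAY_THRESHOLD:
        raise ValueError("sex must be 'female' or 'male'")
    threshold = HEAVY_DAY_THRESHOLD[sex]
    return sum(1 for drinks in drinks_per_day if drinks >= threshold)


def single_question_positive(sex: str, drinks_per_day: list[int]) -> bool:
    """Assumed rule: 1 or more heavy-drinking days in the past year is positive."""
    return heavy_drinking_days(sex, drinks_per_day) >= 1
```

With the same record of [3, 4, 4], the screen is positive for a woman but not for a man, because only the women’s threshold of 4 drinks is reached.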

AUDIT scores of 8 to 15 indicate a medium-level alcohol problem, whereas a score of ≥16 indicates a high-level alcohol problem. The AUDIT-C is scored from 0 to 12, with a score of ≥4 indicating a problem in men and ≥3 indicating a problem in women.
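
The score bands above translate directly into threshold checks. In this minimal sketch the function names are hypothetical; the AUDIT total is assumed to range from 0 to 40 (10 items scored 0 to 4), and the women’s AUDIT-C cutoff of ≥3 is the commonly used value. Scores below 8 are reported only as falling below the medium-level band, since the text does not name that range.

```python
def interpret_audit(score: int) -> str:
    """Map a total AUDIT score (assumed range 0-40) to the bands in the text."""
    if not 0 <= score <= 40:
        raise ValueError("AUDIT total must be between 0 and 40")
    if score >= 16:
        return "high-level alcohol problem"
    if score >= 8:
        return "medium-level alcohol problem"
    return "below the medium-level range"


def audit_c_positive(score: int, sex: str) -> bool:
    """AUDIT-C (0-12): positive at >=4 for men and >=3 for women."""
    if not 0 <= score <= 12:
        raise ValueError("AUDIT-C total must be between 0 and 12")
    cutoffs = {"male": 4, "female": 3}
    if sex not in cutoffs:
        raise ValueError("sex must be 'female' or 'male'")
    return score >= cutoffs[sex]
```

For instance, the AUDIT score of 17 reported in the case that follows falls in the high-level band, and an AUDIT-C score of 3 is positive for a woman but not for a man.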

THE CASE

The physician had used the NIAAA single-question screen to determine that Ms. E drank more than 4 beers per day during social events and on weekends, which occurred 2 to 3 times per month over the past year. She lived alone and said that she had been seeing less and less of her boyfriend lately. Her score on the Patient Health Questionnaire (PHQ), which screens for depression, was 11, indicating moderate impairment. Her response on the CAGE questionnaire was negative for a problem with alcohol. However, her AUDIT score was 17, indicating a high-level alcohol problem. Based on these findings, her physician expressed concern that her alcohol use might be contributing to her symptoms and difficulties.

Although she did not have a history of increasing usage per day, a persistent desire to cut down, significant effort to obtain alcohol, or cravings, she was having work troubles and continued to drink even though it was straining relationships, promoting weight gain, and causing abdominal pain.

The physician asked her to schedule a return visit and ordered several blood studies. He also offered to connect her with a colleague he collaborated with, who could talk with her about possible alcohol use disorder and depression.

Selecting blood work in screening for AUD

Lab tests used to detect alcohol-related injury include gamma-glutamyl transferase (GGT), aspartate aminotransferase (AST), alanine aminotransferase (ALT), and mean corpuscular volume (MCV), although these markers have low specificity. An elevated serum ethanol level can reveal recent alcohol use, and testing for vitamin deficiencies and other abnormalities can help distinguish other causes of hepatic inflammation and identify coexisting health issues (TABLE 3).10,18 A number of as-yet-unvalidated biomarkers are being studied to assist in screening, diagnosing, and treating AUD.18

What treatment approaches work for AUD?

Family physicians can efficiently and productively address AUD by using alcohol screening and brief intervention, which have been shown to reduce risky drinking. This service can be reimbursed using CPT codes 99408 and 99409 or HCPCS code H0049, or billed with an evaluation and management (E/M) code by using modifier 25.

Treatment of AUD varies and should be customized to each patient’s needs, readiness, preferences, and resources. Individual and group counseling approaches can be effective, and medications are available for inpatient and outpatient settings. Psychotherapy options include brief interventions, 12-step programs (eg, Alcoholics Anonymous: https://www.aa.org/pages/en_US/find-aa-resources), motivational enhancement therapy, and cognitive behavioral therapy. Although it is beyond the scope of this article to describe these options in detail, resources are available for those who wish to learn more.19-21

Psychopharmacologic management includes US Food and Drug Administration (FDA)-approved medications such as disulfiram, naltrexone, and acamprosate, and off-label uses of other medications (TABLE 4).9 Not enough empiric evidence is available to judge the effectiveness of these medications in adolescents, and the FDA has not approved them for such use. Evidence from meta-analyses comparing naltrexone and acamprosate has shown naltrexone to be more efficacious in reducing heavy drinking and cravings, while acamprosate is effective in promoting abstinence.22,23 Naltrexone combined with behavioral intervention reduces heavy drinking days and increases the percentage of days abstinent.24

Current guideline recommendations from the American Psychiatric Association25 include:

- Naltrexone and acamprosate are recommended to treat patients with moderate-to-severe AUD in specific circumstances (eg, when nonpharmacologic approaches have not been effective or when patients prefer to use one of these medications).

- Topiramate and gabapentin are also suggested as medications for patients with moderate-to-severe AUD, but typically after first trying naltrexone and acamprosate.

- Disulfiram generally should not be used as first-line treatment. It produces physical reactions (eg, flushing) if alcohol is consumed within 12 to 24 hours of medication use.

THE CASE

Ms. E was open to the idea of decreasing her alcohol use and agreed that she was depressed. Her lab tests at follow-up were normal other than elevated AST and ALT levels of 90 U/L and 80 U/L, respectively.

She continued to get counseling for her AUD and for her comorbid depression in addition to taking a selective serotonin reuptake inhibitor. She is now in early remission for her alcohol use.

CORRESPONDENCE

Jaividhya Dasarathy, MD, Department of Family Medicine, Metro Health Medical Center, 2500 MetroHealth Drive, Cleveland, OH 44109.

1. Grant BF, Goldstein RB, Saha TD, et al. Epidemiology of DSM-5 alcohol use disorder: results from the National Epidemiologic Survey on Alcohol and Related Conditions III. JAMA Psychiatry. 2015;72:757-766.

2. APA. Diagnostic and Statistical Manual of Mental Disorders, 5th ed. Washington DC; 2013.

3. HHS. Results from the 2015 National Survey on Drug Use and Health: summary of national findings. https://www.samhsa.gov/data/sites/default/files/NSDUH-DetTabs-2015/NSDUH-DetTabs-2015/NSDUH-DetTabs-2015.pdf. Accessed November 27, 2018.

4. Rehm J, Mathers C, Popova S, et al. Global burden of disease and injury and economic cost attributable to alcohol use and alcohol-use disorders. Lancet. 2009;373:2223-2233.

5. Chase V, Neild R, Sadler CW, et al. The medical complications of alcohol use: understanding mechanisms to improve management. Drug Alcohol Rev. 2005;24:253-265.

6. Grant BF, Chou SP, Saha TD, et al. Prevalence of 12-month alcohol use, high-risk drinking, and DSM-IV alcohol use disorder in the United States, 2001-2002 to 2012-2013: results from the National Epidemiologic Survey on Alcohol and Related Conditions. JAMA Psychiatry. 2017;74:911-923.

7. Williams EC, Achtmeyer CE, Young JP, et al. Barriers to and facilitators of alcohol use disorder pharmacotherapy in primary care: a qualitative study in five VA clinics. J Gen Intern Med. 2018;33:258-267.

8. Zhang DX, Li ST, Lee QK, et al. Systematic review of guidelines on managing patients with harmful use of alcohol in primary healthcare settings. Alcohol Alcohol. 2017;52:595-609.

9. Wackernah RC, Minnick MJ, Clapp P. Alcohol use disorder: pathophysiology, effects, and pharmacologic options for treatment. Subst Abuse Rehabil. 2014;5:1-12.

10. Kattimani S, Bharadwaj B. Clinical management of alcohol withdrawal: a systematic review. Ind Psychiatry J. 2013;22:100-108.

11. Gowin JL, Sloan ME, Stangl BL, et al. Vulnerability for alcohol use disorder and rate of alcohol consumption. Am J Psychiatry. 2017;174:1094-1101.

12. Moyer VA; Preventive Services Task Force. Screening and behavioral counseling interventions in primary care to reduce alcohol misuse: U.S. Preventive Services Task Force recommendation statement. Ann Intern Med. 2013;159:210-218.

13. Tarter RE, Alterman AI, Edwards KL. Vulnerability to alcoholism in men: a behavior-genetic perspective. J Stud Alcohol. 1985;46:329-356.

14. Brière FN, Rohde P, Seeley JR, et al. Comorbidity between major depression and alcohol use disorder from adolescence to adulthood [published online ahead of print, October 22, 2013]. Compr Psychiatry. 2014;55:526-533. doi: 10.1016/j.comppsych.2013.10.007.

15. Tan CH, Hungerford DW, Denny CH, et al. Screening for alcohol misuse: practices among U.S. primary care providers, DocStyles 2016. Am J Prev Med. 2018;54:173-180.

16. Aertgeerts B, Buntinx F, Kester A. The value of the CAGE in screening for alcohol abuse and alcohol dependence in general clinical populations: a diagnostic meta-analysis. J Clin Epidemiol. 2004;57:30-39.

17. Bush K, Kivlahan DR, McDonell MB, et al. The AUDIT alcohol consumption questions (AUDIT-C): an effective brief screening test for problem drinking. Ambulatory Care Quality Improvement Project (ACQUIP). Alcohol Use Disorders Identification Test. Arch Intern Med. 1998;158:1789-1795.

18. Nanau RM, Neuman MG. Biomolecules and biomarkers used in diagnosis of alcohol drinking and in monitoring therapeutic interventions. Biomolecules. 2015;5:1339-1385.

19. Raddock M, Martukovich R, Berko E, et al. 7 tools to help patients adopt healthier behaviors. J Fam Pract. 2015;64:97-103.

20. AHRQ. Whitlock EP, Green CA, Polen MR, et al. Behavioral Counseling Interventions in Primary Care to Reduce Risky/Harmful Alcohol Use. 2004. https://www.ncbi.nlm.nih.gov/books/NBK42863/. Accessed November 17, 2018.

21. Miller WR, Baca C, Compton WM, et al. Addressing substance abuse in health care settings. Alcohol Clin Exp Res. 2006;30:292-302.

22. Maisel NC, Blodgett JC, Wilbourne PL, et al. Meta-analysis of naltrexone and acamprosate for treating alcohol use disorders: when are these medications most helpful? Addiction. 2013;108:275-293.

23. Rosner S, Leucht S, Lehert P, et al. Acamprosate supports abstinence, naltrexone prevents excessive drinking: evidence from a meta-analysis with unreported outcomes. J Psychopharmacol. 2008;22:11-23.

24. Anton RF, O’Malley SS, Ciraulo DA, et al. Combined pharmacotherapies and behavioral interventions for alcohol dependence: the COMBINE study: a randomized controlled trial. JAMA. 2006;295:2003-2017.

25. Reus VI, Fochtmann LJ, Bukstein O, et al. The American Psychiatric Association Practice Guideline for the Pharmacological Treatment of Patients With Alcohol Use Disorder. Am J Psychiatry. 2018;175:86-90.

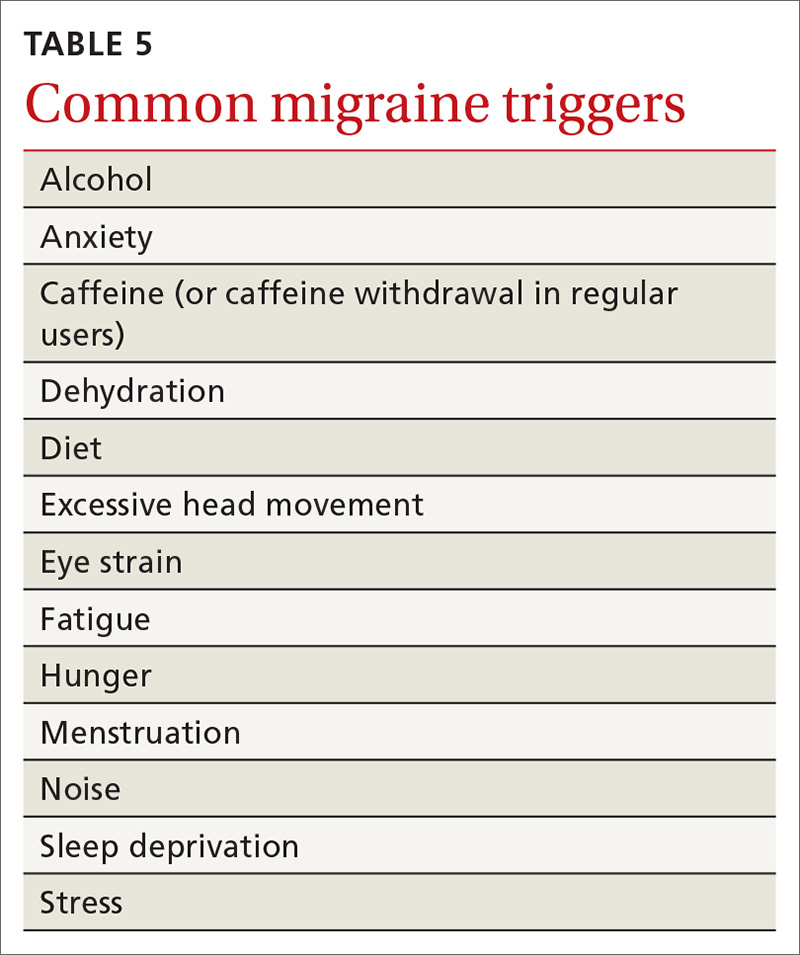

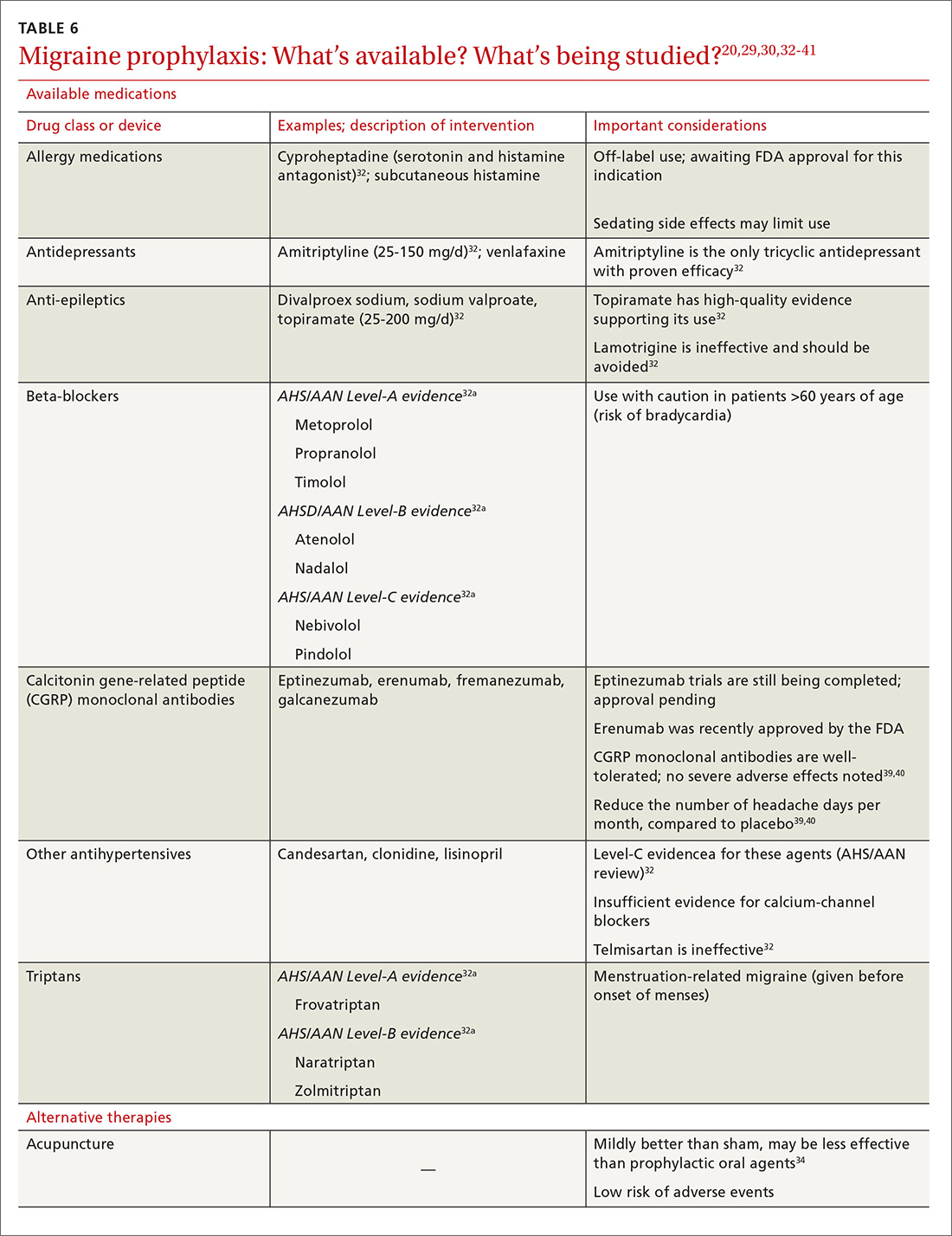

Migraine: Expanding our Tx arsenal

Migraine is a highly disabling primary headache disorder that affects more than 44 million Americans annually.1 The disorder causes pain, photophobia, phonophobia, and nausea that can last for hours or even days. Migraine headaches are 2 times more common in women than in men; although migraine is most common in people 30 to 39 years of age, all ages are affected.2,3 Migraine frequency varies; patients with chronic migraine experience 15 or more headache days a month.

Recent estimates put the annual cost of acute and chronic migraine in the United States at approximately $78 billion.4 This high burden of disease makes effective treatment options essential. Recent advances in our understanding of migraine pathophysiology have led to new therapeutic targets, and many novel treatment approaches are now on the horizon.

In this article, we review the diagnosis and management of migraine in detail. Our emphasis is on evidence-based approaches to acute and prophylactic treatment, including tried-and-true options and newly emerging therapies.

Neuronal dysfunction and a genetic predisposition

Although migraine was once thought to be caused by abnormalities of vasodilation, current research suggests that the disorder has its origins in primary neuronal dysfunction. There appears to be a genetic predisposition toward widespread neuronal hyperexcitability in migraineurs.5 In addition, hypothalamic neurons are thought to initiate migraine by responding to changes in brain homeostasis. Increased parasympathetic tone might activate meningeal pain receptors or lower the threshold for transmitting pain signals from the thalamus to the cortex.6

Prodromal symptoms and aura appear to originate from multiple areas across the brain, including the hypothalamus, cortex, limbic system, and brainstem. This widespread brain involvement might explain why some headache sufferers concurrently experience a variety of symptoms, including fatigue, depression, muscle pain, and an abnormal sensitivity to light, sound, and smell.6,7

Although the mechanisms behind each of these symptoms have yet to be precisely defined, waves of neuronal depolarization, known as cortical spreading depression, are suspected to cause migraine aura.8-10 Cortical spreading depression activates the trigeminal pain pathway and leads to the release of pro-inflammatory mediators such as calcitonin gene-related peptide (CGRP).6 A better understanding of these complex signaling pathways has helped identify potential therapeutic targets for new migraine drugs.

Diagnosis: Close patient inquiry is most helpful

The International Headache Society (IHS) criteria for primary headache disorders serve as the basis for the diagnosis of migraine and its subtypes, which include migraine without aura and migraine with aura. Due to variability of presentation, migraine with aura is further subdivided into migraine with typical aura (with and without headache), migraine with brainstem aura, hemiplegic migraine, and retinal migraine.11

How is migraine defined? Migraine is classically defined as a unilateral, pulsating headache of moderate to severe intensity lasting 4 to 72 hours and associated with photophobia and phonophobia, nausea and vomiting, or both.11 Often visual in nature, aura is a set of neurologic symptoms that lasts for minutes and precedes the onset of the headache. The visual aura is often described as a scintillating scotoma that begins near the point of visual fixation and then spreads to the left or right. Other aura symptoms include tingling or numbness (the second most common), speech disturbance (aphasia), motor changes and, in rare cases, several of these in succession. By definition, all of these symptoms fully resolve between attacks.11

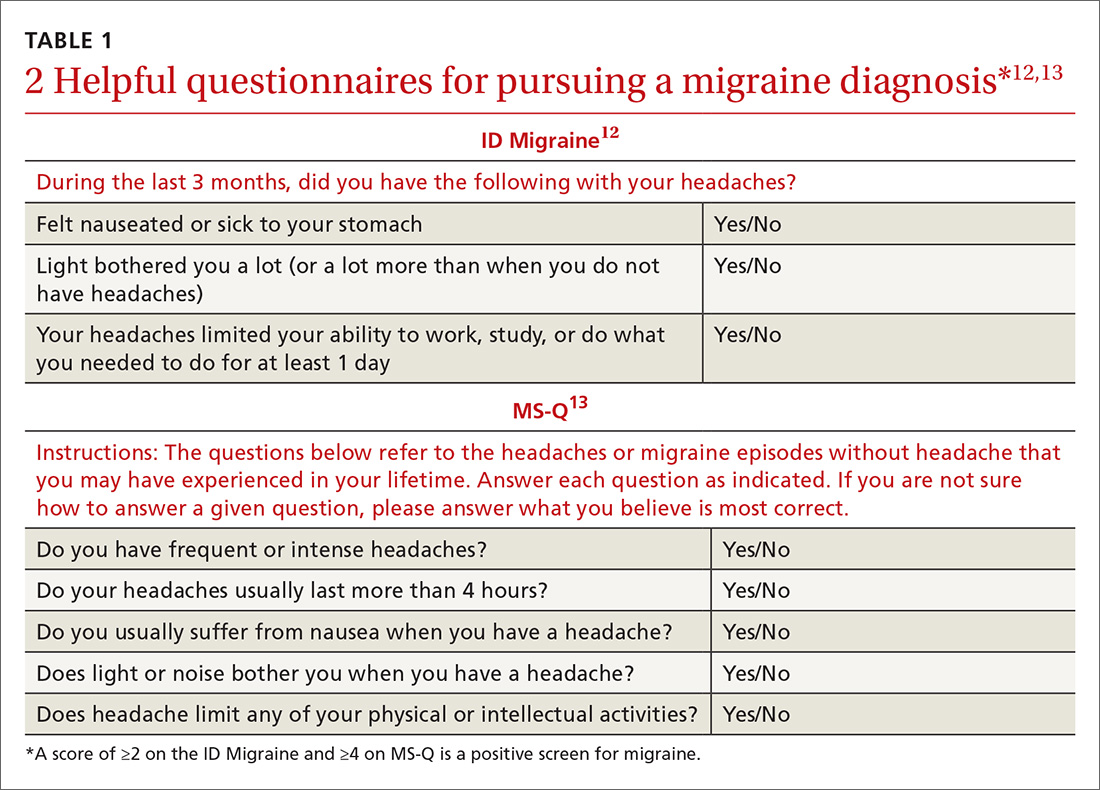

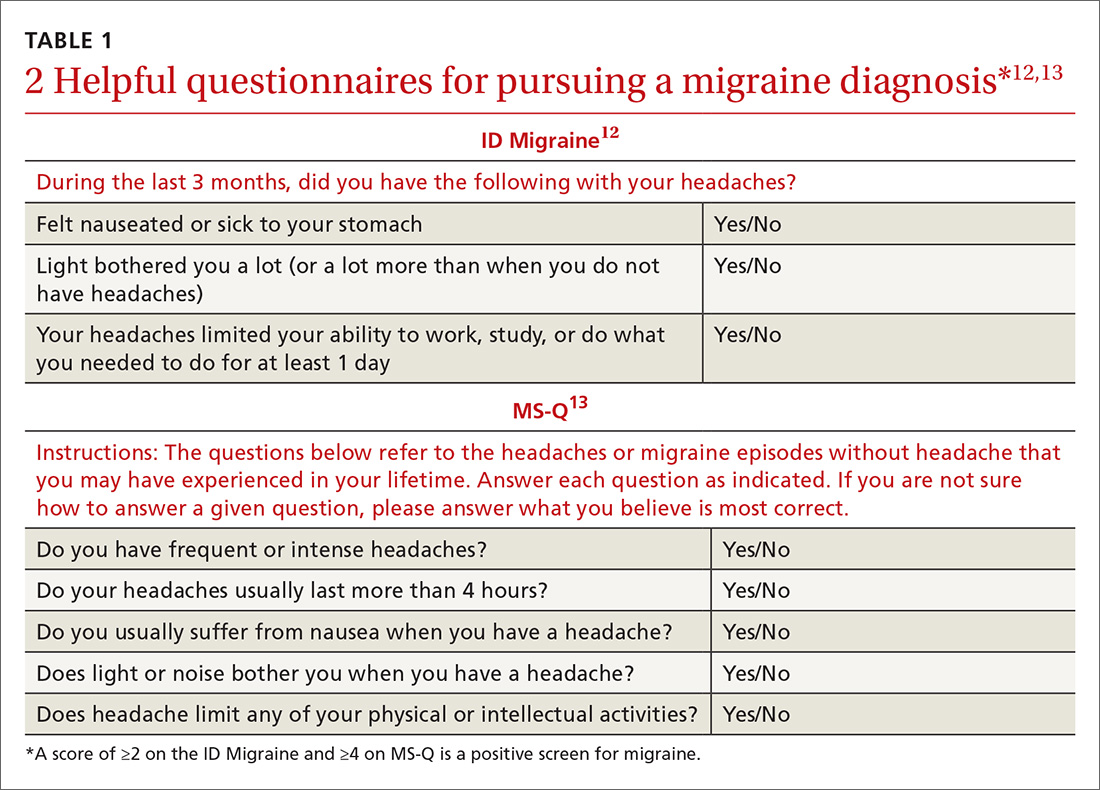

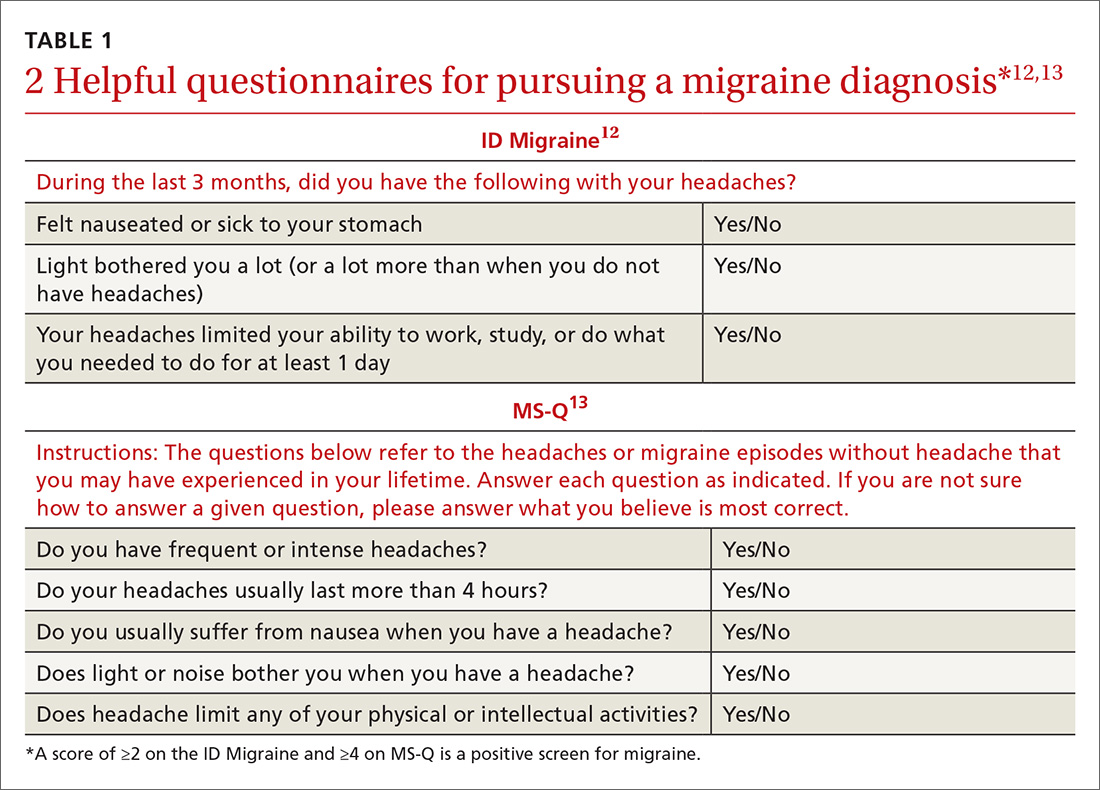

Validated valuable questionnaires. To help with accurate and timely diagnosis, researchers have developed and validated simplified questionnaires that patients presenting to primary care can complete independently12,13 (TABLE 1):

- ID Migraine is a set of 3 questions that scores positive when a patient endorses at least 2 of the 3 symptoms.12

- MS-Q is similar to ID Migraine but includes 5 items. A score of ≥4 is a positive screen.13
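Both screening rules above reduce to counting endorsed items against a threshold. The sketch below is a minimal Python illustration, not a validated clinical tool: the function names are hypothetical, the wording of the individual questionnaire items is deliberately left out, and scoring each MS-Q item as 1 point for "yes" is an assumption.

```python
from typing import Sequence


def count_yes(answers: Sequence[bool]) -> int:
    """Number of items the patient endorsed (True means 'yes')."""
    return sum(1 for a in answers if a)


def id_migraine_positive(answers: Sequence[bool]) -> bool:
    """ID Migraine: 3 yes/no items; positive when at least 2 are endorsed."""
    if len(answers) != 3:
        raise ValueError("ID Migraine has exactly 3 items")
    return count_yes(answers) >= 2


def ms_q_positive(answers: Sequence[bool]) -> bool:
    """MS-Q: 5 items, assumed here to score 1 point per 'yes'; positive at >= 4."""
    if len(answers) != 5:
        raise ValueError("MS-Q has exactly 5 items")
    return count_yes(answers) >= 4


if __name__ == "__main__":
    print(id_migraine_positive([True, False, True]))
    print(ms_q_positive([True, True, True, False, False]))
```

Validating the item count up front keeps a mis-entered questionnaire from silently producing a screening result.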

The sensitivity and specificity of MS-Q (0.93 and 0.81, respectively) are higher than those of ID Migraine (0.81 and 0.75).13
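One way to see what these sensitivity and specificity figures mean at the bedside is to convert them into likelihood ratios with the standard formulas LR+ = sensitivity / (1 - specificity) and LR- = (1 - sensitivity) / specificity. The source does not report likelihood ratios; the sketch below simply applies these textbook formulas to the figures quoted for MS-Q and ID Migraine.

```python
from typing import Tuple


def likelihood_ratios(sensitivity: float, specificity: float) -> Tuple[float, float]:
    """Return (LR+, LR-) for a test with the given sensitivity and specificity."""
    lr_pos = sensitivity / (1 - specificity)  # how much a positive screen raises the odds
    lr_neg = (1 - sensitivity) / specificity  # how much a negative screen lowers the odds
    return lr_pos, lr_neg


if __name__ == "__main__":
    for name, sens, spec in [("MS-Q", 0.93, 0.81), ("ID Migraine", 0.81, 0.75)]:
        lr_pos, lr_neg = likelihood_ratios(sens, spec)
        print(f"{name}: LR+ = {lr_pos:.2f}, LR- = {lr_neg:.2f}")
```

With these inputs, MS-Q works out to an LR+ of about 4.9 and an LR- of about 0.09, versus about 3.2 and 0.25 for ID Migraine, so a negative MS-Q lowers the odds of migraine considerably more than a negative ID Migraine does.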

Remember POUND. This mnemonic can also be used during history-taking to aid diagnostic accuracy. Migraine is highly likely (92%) in patients who endorse at least 4 of the following 5 features and unlikely (17%) in those who endorse 2 or fewer14: Pulsatile quality; hOurs (4 to 72 hours in duration); Unilateral location; Nausea or vomiting; and Disabling intensity.
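The POUND rule is also a feature count with two cut points. The Python sketch below is illustrative only: the class and function names are hypothetical, it assumes that endorsing all 5 features counts at least as strongly as endorsing 4, and because the text gives no interpretation for exactly 3 features, it reports that case as indeterminate rather than guessing a probability.

```python
from dataclasses import dataclass


@dataclass
class PoundFeatures:
    pulsatile: bool           # P: pulsatile quality
    hours_4_to_72: bool       # O: hOurs, lasting 4 to 72 hours
    unilateral: bool          # U: unilateral location
    nausea_or_vomiting: bool  # N: nausea or vomiting
    disabling: bool           # D: disabling intensity

    def count(self) -> int:
        """Number of POUND features the patient endorses (0 to 5)."""
        return sum([self.pulsatile, self.hours_4_to_72, self.unilateral,
                    self.nausea_or_vomiting, self.disabling])


def interpret_pound(features: PoundFeatures) -> str:
    """Map the feature count onto the two thresholds quoted in the text."""
    n = features.count()
    if n >= 4:
        return "migraine highly likely (about 92%)"
    if n <= 2:
        return "migraine unlikely (about 17%)"
    return "indeterminate (3 features; no threshold given)"


if __name__ == "__main__":
    patient = PoundFeatures(pulsatile=True, hours_4_to_72=True, unilateral=True,
                            nausea_or_vomiting=True, disabling=False)
    print(interpret_pound(patient))
```

Naming each field after its mnemonic letter keeps the data structure readable against the bedside checklist.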

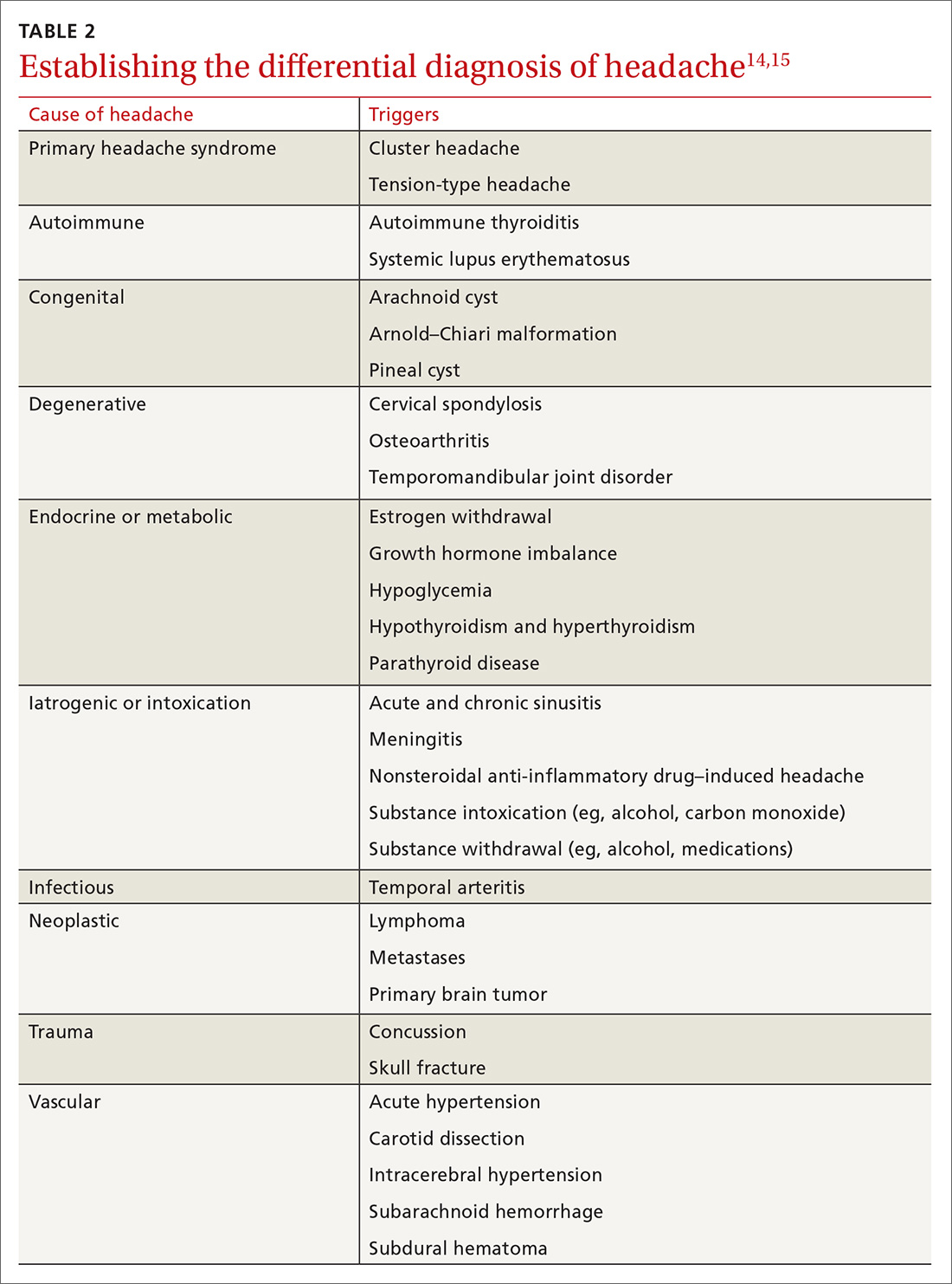

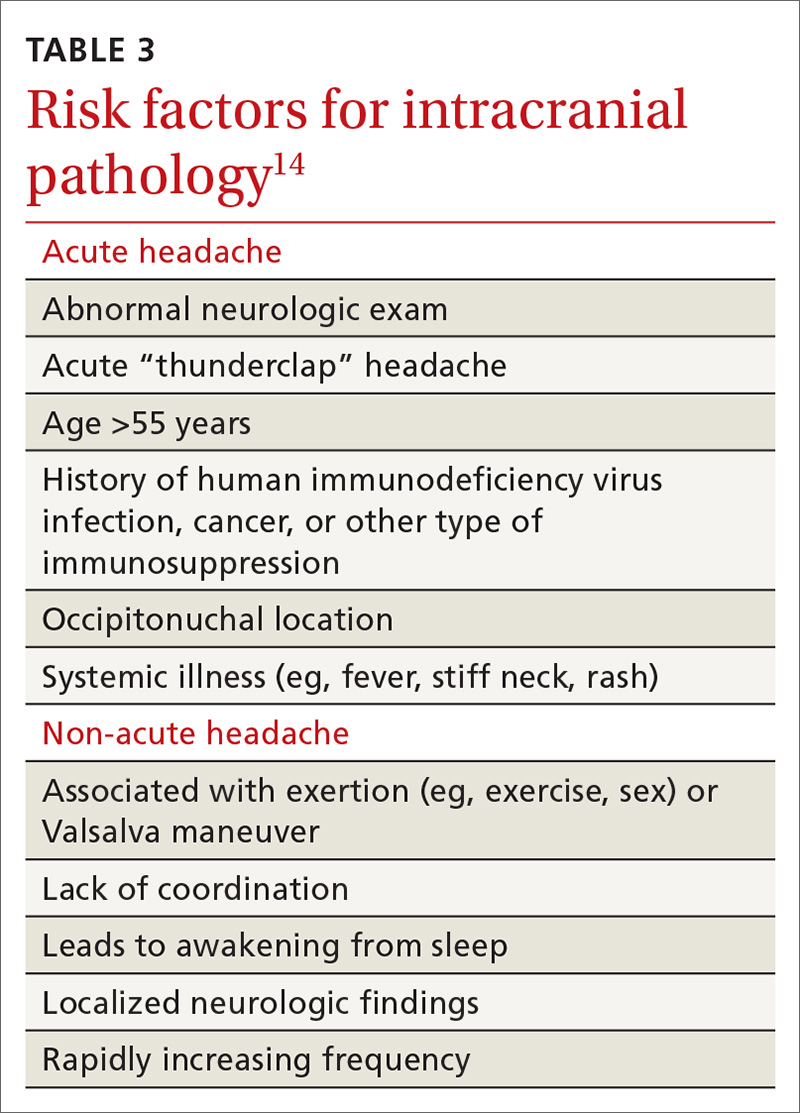

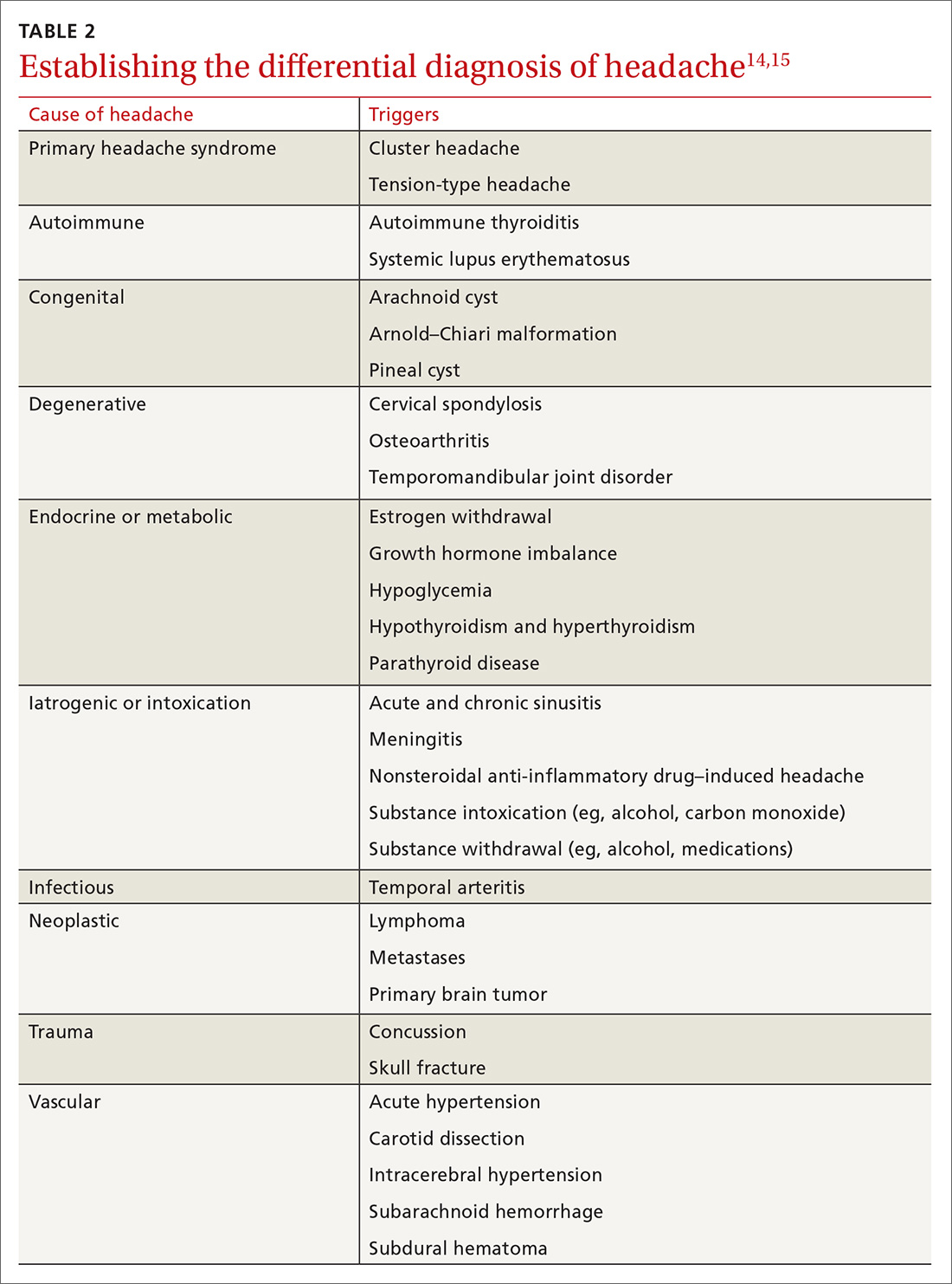

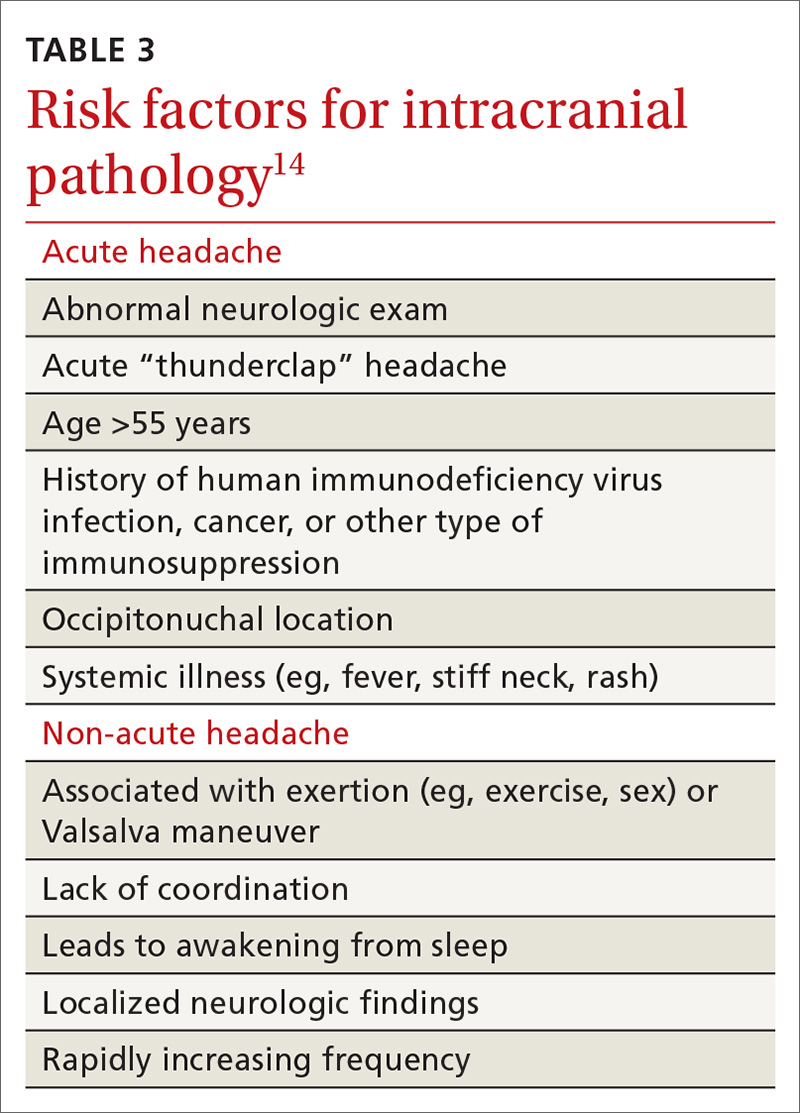

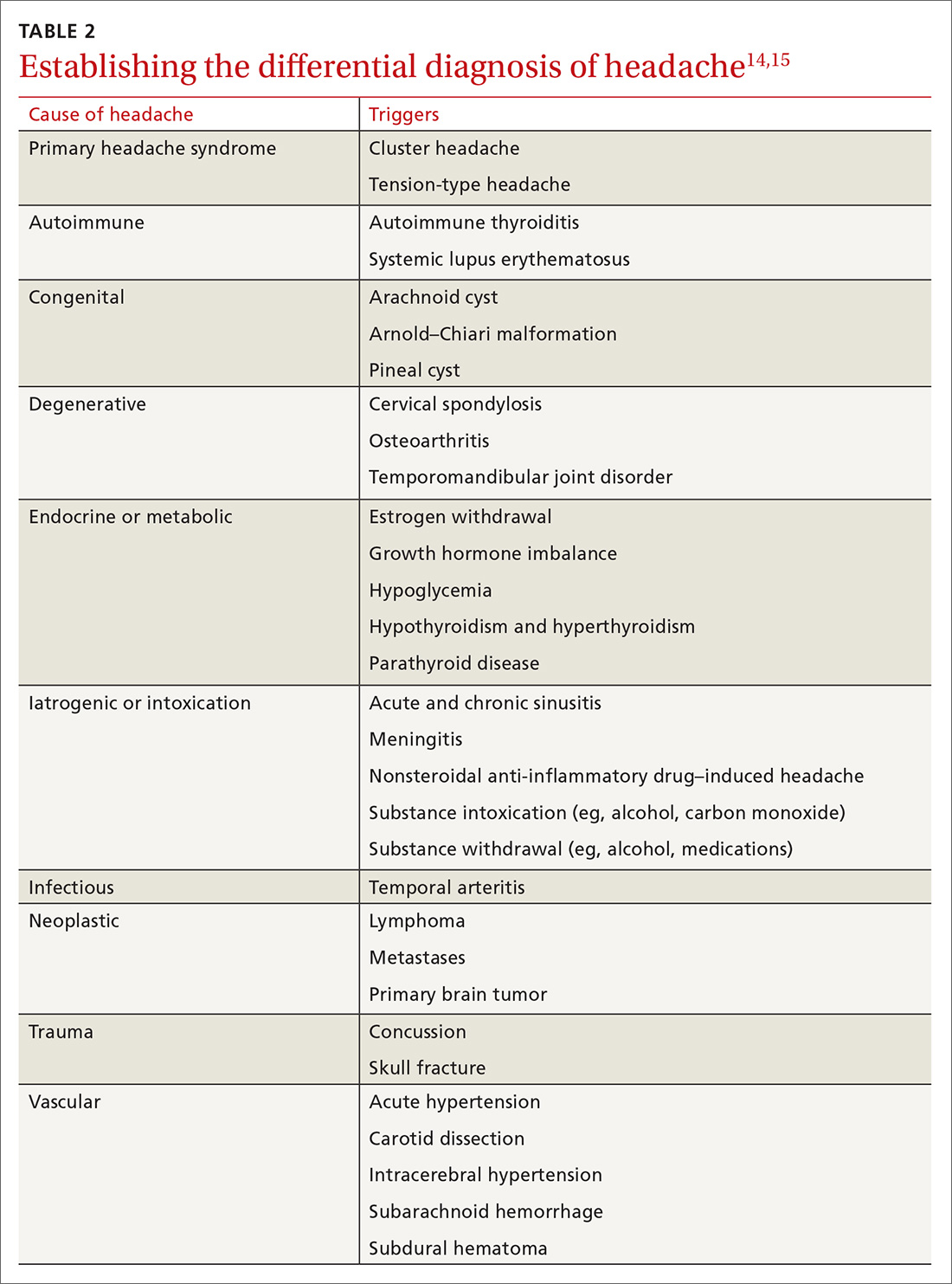

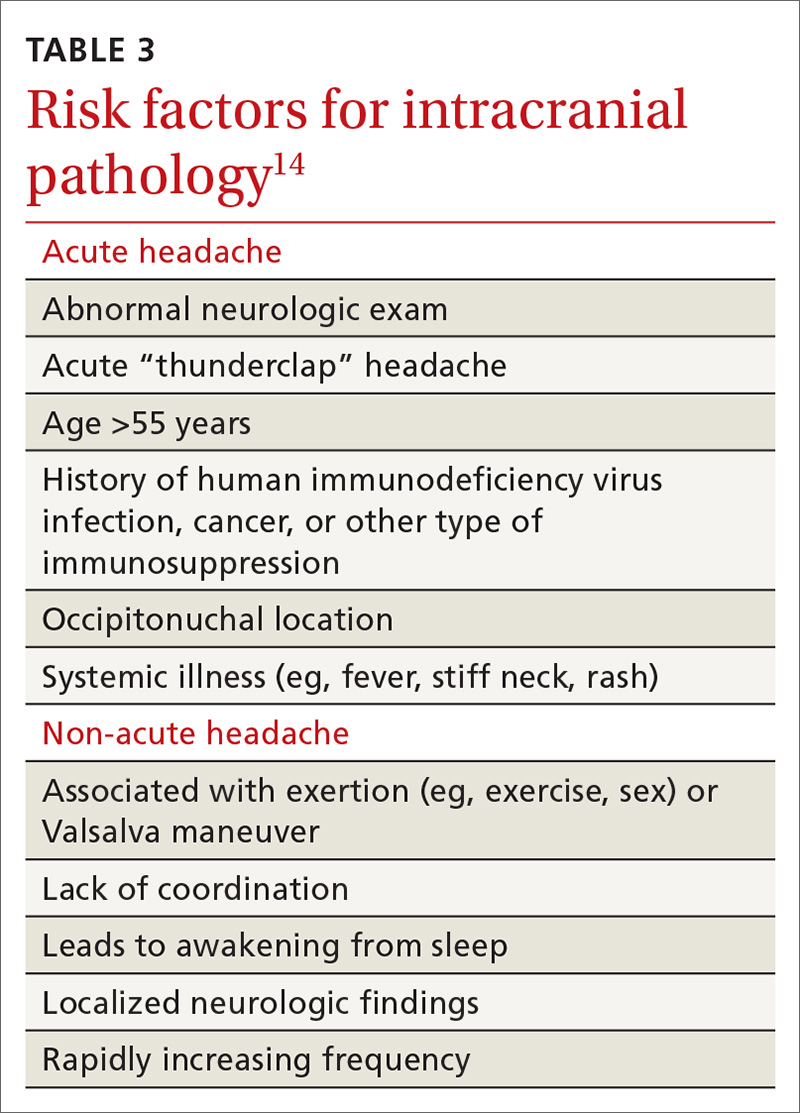

Differential Dx. Although the differential diagnosis of headache is broad14,15 (TABLE 2), the history alone can often guide clinicians toward the correct assessment. After taking the initial history (headache onset, location, duration, and associated symptoms), focus on assessing the risk of intracranial pathology. This is best accomplished by evaluating specific details of the history14 (TABLE 3) and findings on physical examination15:

- blood pressure measurement (seated, legs uncrossed, feet flat on the floor; having rested for 5 minutes; arm well supported)

- cranial nerve exam

- extremity strength testing

- eye exam (vision, extra-ocular muscles, visual fields, pupillary reactivity, and funduscopic exam)

- gait (tandem walk)

- reflexes.

Further testing needed? Neuroimaging should be considered only in patients with an abnormal neurologic exam, atypical headache features, or certain risk factors, such as an immune deficiency. There is no role for electroencephalography or other diagnostic testing in migraine.16

Take a multipronged approach to treatment

As with other complex, chronic conditions, migraine treatment should be multifaceted, addressing both acute symptoms and the prevention of future headaches. In 2015, the American Headache Society published a systematic review that specified treatment goals for patients with migraine.17 These goals include:

- headache reduction

- headache relief

- decreased disability from headache

- elimination of nausea and vomiting

- elimination of photophobia and phonophobia.

The review of therapeutic options that follows focuses on the management of migraine in adults. Approaches in special populations (older adults, pregnant women, and children) are discussed afterward.

Pharmacotherapy for acute migraine

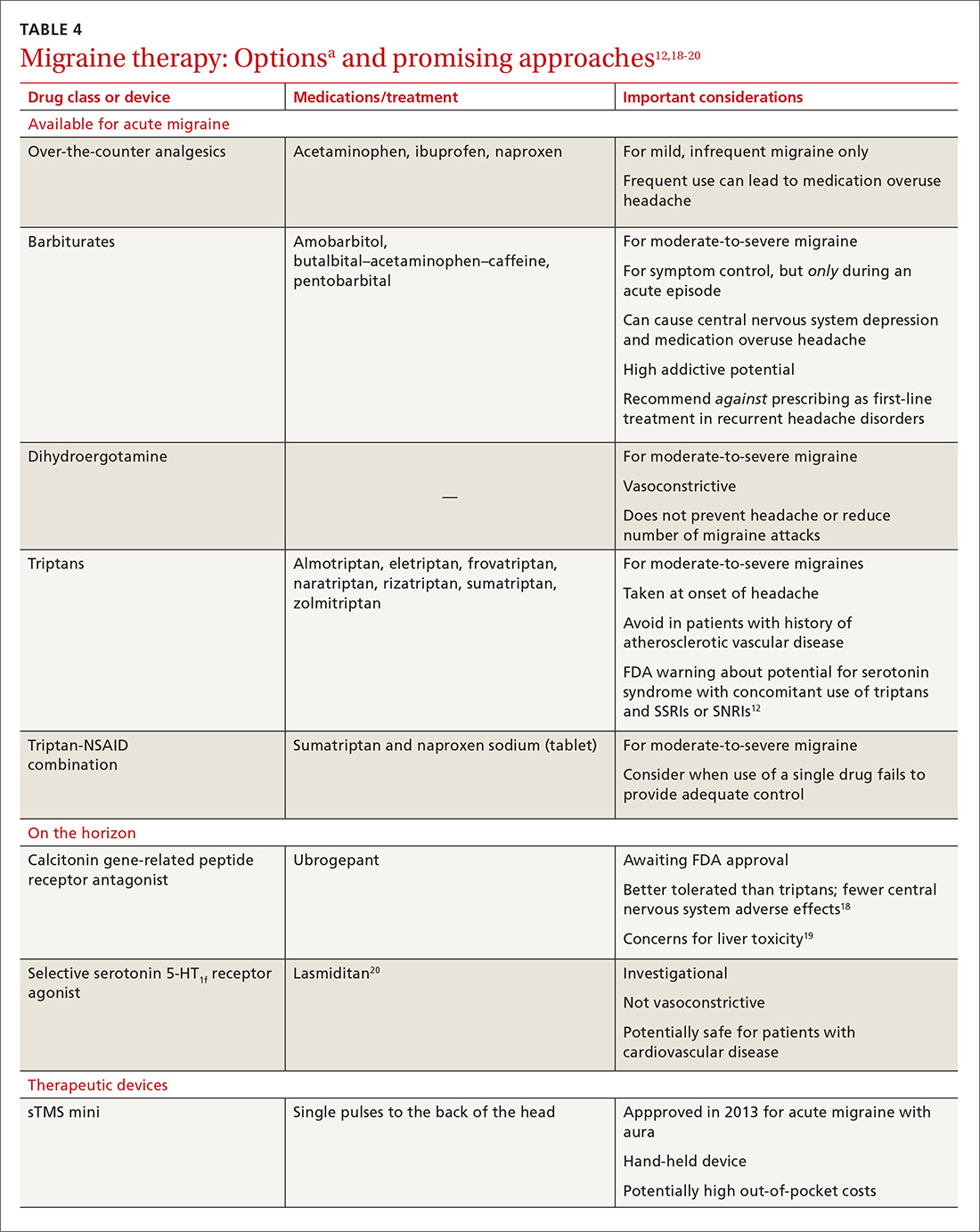

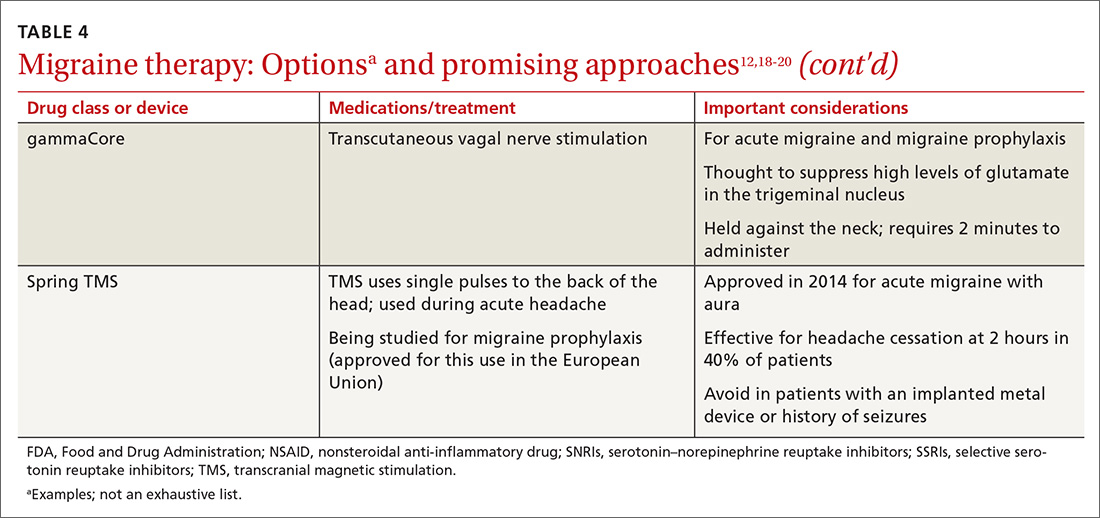

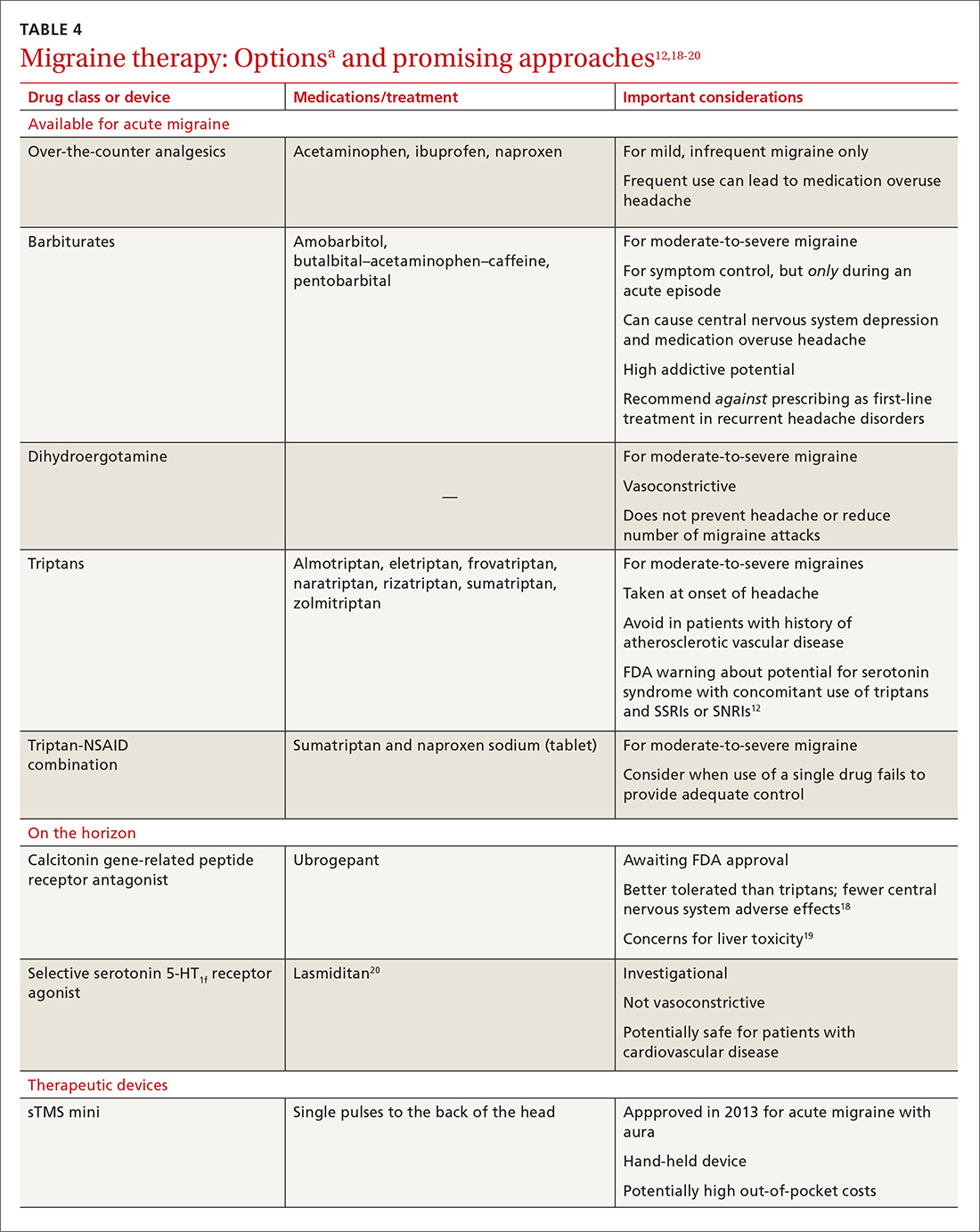

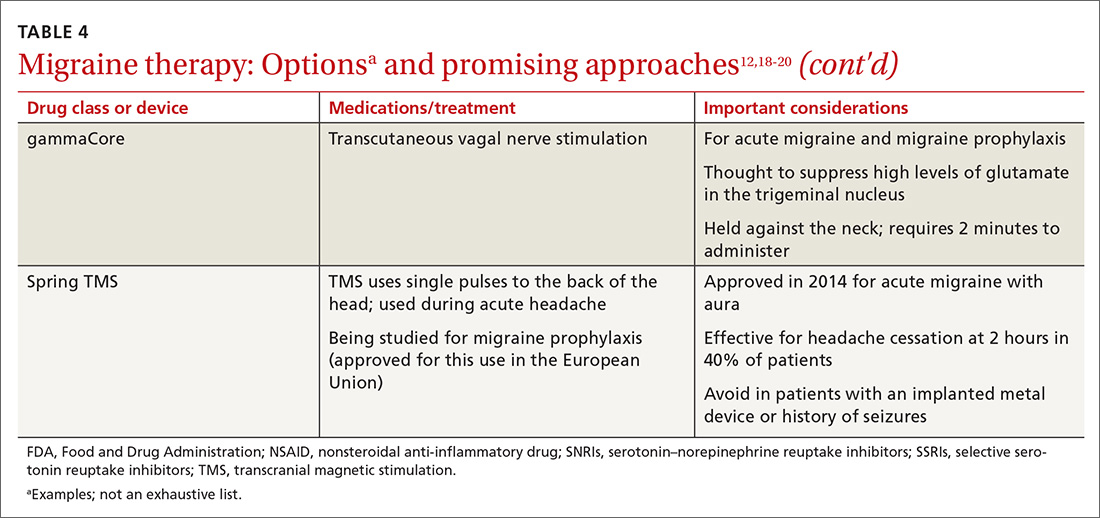

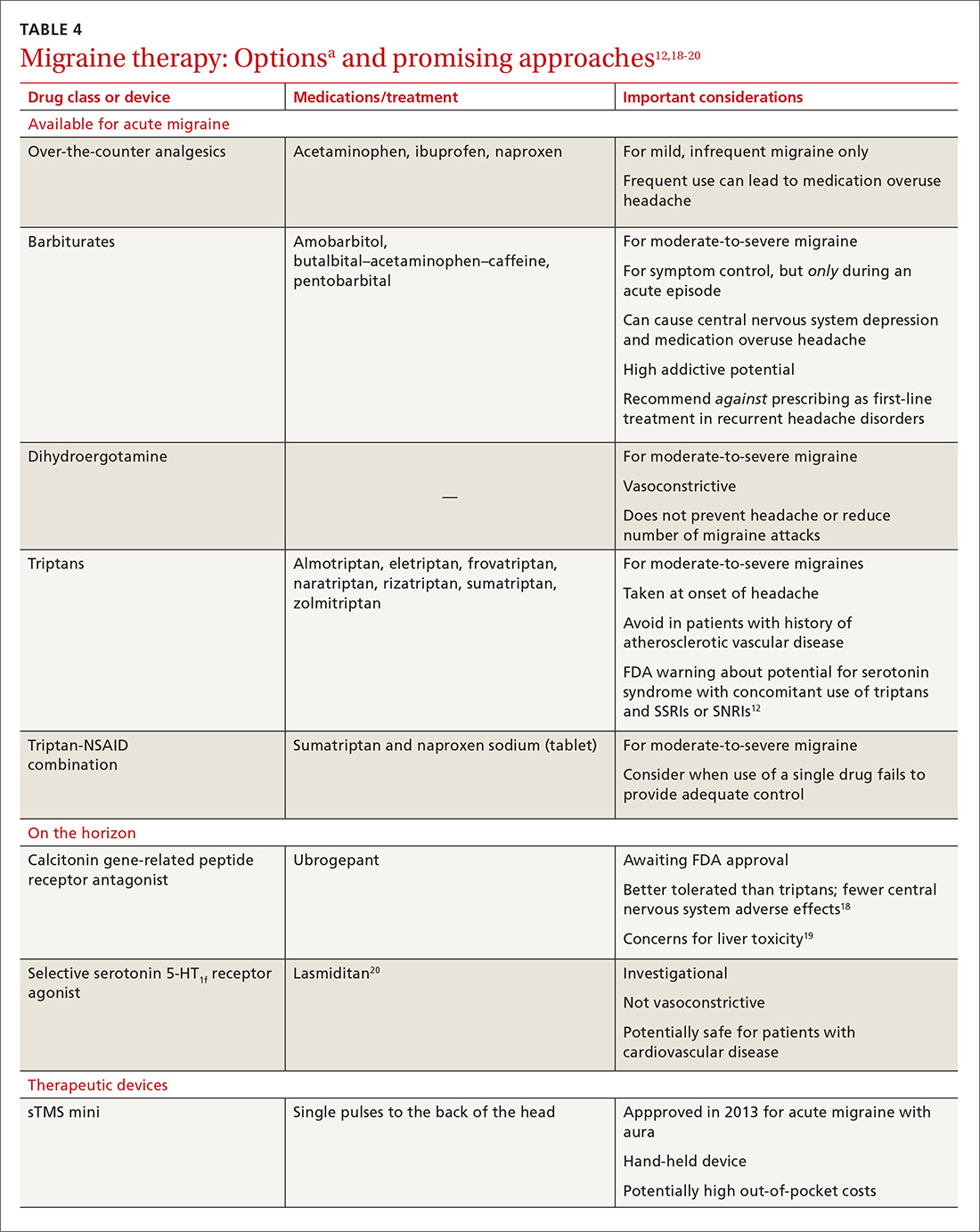

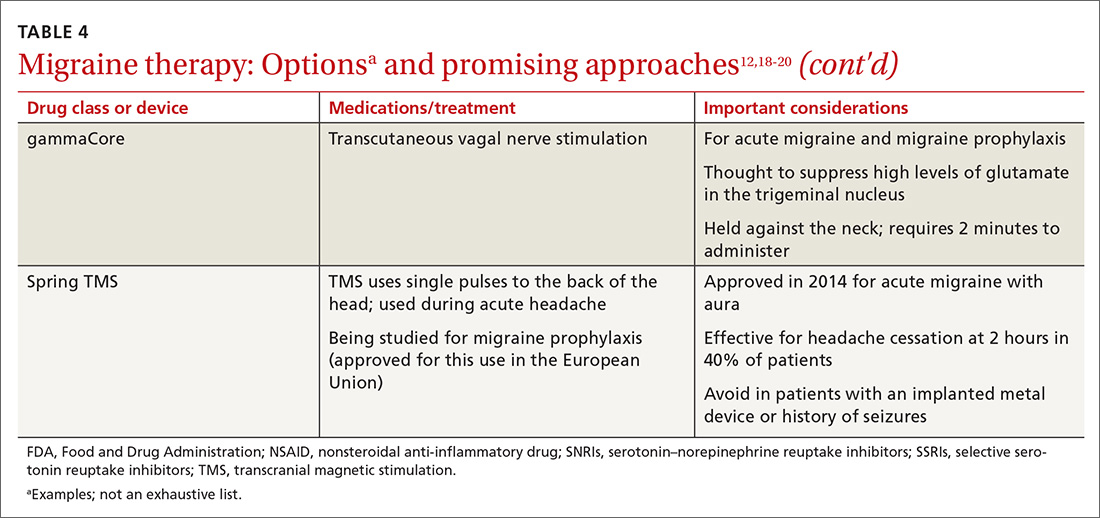

Acute migraine should be treated with an abortive medication at the onset of headache. The immediate goal is to relieve pain within 2 hours and prevent its recurrence within the subsequent 48 hours12,18-20 (TABLE 4).

In the general population, mild, infrequent migraines can be managed with acetaminophen and nonsteroidal anti-inflammatory drugs (NSAIDs).21

For moderate-to-severe migraine, triptans, which target serotonin receptors, are the drugs of choice for most patients.21 Triptans are superior to placebo in achieving a pain-free state at 2 and 24 hours after administration; eletriptan has the most desirable outcome, with 68% of patients pain free at 2 hours and 54% pain free at 24 hours.22 Triptans are available as sublingual tablets and nasal sprays, as well as subcutaneous injections for patients with significant associated nausea and vomiting. Avoid prescribing triptans for patients with known vascular disease (eg, history of stroke, myocardial infarction, peripheral vascular disease, uncontrolled hypertension, or signs and symptoms of these conditions) and for patients with severe hepatic impairment.

Importantly, although triptans all have a similar mechanism of action, patients might respond differently to different drugs within the class. If a patient does not get adequate headache relief from an appropriate dosage of a given triptan during a particular migraine episode, a different triptan can be tried during the next migraine.22 Additionally, if a patient experiences an adverse effect from one triptan, this does not necessarily mean that a trial of another triptan at a later time is contraindicated.

For patients who have an incomplete response to migraine treatment or who experience frequent recurrence, the combination formulation of sumatriptan, 85 mg, and naproxen, 500 mg, produced a higher rate of headache resolution within 2 hours than either drug alone.23 A similar result might be achieved by combining a triptan known to be effective for the patient with an NSAID other than naproxen. If a migraine persists despite initial treatment, a medication from a different class should be tried during the same attack to relieve its symptoms.21

When a patient is seen in an acute care setting (eg, emergency department, urgent care center) during a migraine, additional treatment options are available. Intravenous (IV) anti-emetics are useful for relieving both migraine pain and nausea, and can be used in combination with an IV NSAID (eg, ketorolac).21 The most effective anti-emetics are dopamine D2 receptor antagonists, including chlorpromazine, droperidol, metoclopramide, and prochlorperazine; prochlorperazine has the highest level of efficacy.24 These medications carry a risk of dystonic reaction, so diphenhydramine is often given in tandem to mitigate it.

Looking ahead. Although triptans are the current first-line therapy for acute migraine, their effectiveness is limited. Only 20% of patients report sustained relief of pain in the 2 to 24 hours after treatment, and the response can vary from episode to episode.25

With better understanding of the pathophysiology of migraine, a host of novel anti-migraine drugs are on the horizon.