M. Alexander Otto began his reporting career early in 1999 covering the pharmaceutical industry for a national pharmacists' magazine and freelancing for the Washington Post and other newspapers. He then joined BNA, now part of Bloomberg News, covering health law and the protection of people and animals in medical research. Alex next worked for the McClatchy Company. Based on his work, Alex won a year-long Knight Science Journalism Fellowship to MIT in 2008-2009. He joined the company shortly thereafter. Alex has a newspaper journalism degree from Syracuse (N.Y.) University and a master's degree in medical science -- a physician assistant degree -- from George Washington University. Alex is based in Seattle.

Supportive oncodermatology: Cancer advances spawn new subspecialty

Not too long ago at the Dana-Farber/Brigham and Women’s Cancer Center in Boston, a woman with widely metastatic melanoma, who had been planning her own funeral, was surprised when she had a phenomenal response to immunotherapy.

Her cancer was almost completely gone after 12 weeks, but then she developed a rash that made her oncologist think she needed to stop treatment.

Traditional cytotoxic chemotherapies caused a few well-defined skin side effects, which oncologists were comfortable managing on their own with steroids or by briefly reducing or pausing treatment.

Over the last decade, however, new cancer treatments have become available, most notably immunotherapies and targeted biologics. These agents are keeping some people alive longer, but they also cause cutaneous side effects never before seen in oncology, and such reactions are being reported frequently.

An urgent need

Currently, there are only a handful of dedicated supportive oncodermatology services in the United States, found at major academic cancer centers such as Dana-Farber/Brigham and Women’s. But the residents and fellows trained at these centers are starting to fan out across the country and set up new services.

One day, it’s likely that every major cancer institution will have “a toxicities team with expert dermatologists,” said Dr. LeBoeuf, who launched the supportive oncodermatology program at Dana-Farber in 2014 and now runs it with a team of dermatologists, with clinics held every week. Like the other dermatologists interviewed for this story, Dr. LeBoeuf is a leader in the field.

With all the new treatments and with even more on the way, “there’s an urgent need for dermatologists to be involved in care of cancer patients,” Dr. LeBoeuf said.

The problem

Immunotherapies like the PD-1 blocking agents pembrolizumab (Keytruda) and nivolumab (Opdivo) – both used for an ever-expanding list of tumors – amp up the immune system to fight cancer, but they also tend to cause adverse events that mimic autoimmune diseases such as lupus, psoriasis, lichen planus, and vitiligo. Dermatologists are familiar with those problems and how to manage them, but oncologists generally are not.

Meanwhile, the many targeted therapies approved over the past decade interfere with specific molecules needed for tumor growth, but they also are associated with a wide range of skin, hair, and nail side effects that include skin growths, itching, paronychia, and more.

Agents that target vascular endothelial growth factors, such as sorafenib (Nexavar) and bevacizumab (Avastin), can trigger a painful hand-foot skin reaction that’s different from the hand-foot syndrome reported with older cytotoxic agents.

Epidermal growth factor receptor (EGFR) inhibitors, such as erlotinib (Tarceva) or gefitinib (Iressa), often cause miserable acne-like eruptions, but such a rash can be a sign that the drug is working.

It’s hard for oncologists to know which of these reactions are life-threatening and which aren’t; that’s where dermatologists come in.

A solution

When problems come up, oncologists and patients need answers right away, she said. There’s no time to wait a month or two for a dermatology appointment to find out whether, for instance, a new mouth ulcer is a minor inconvenience or the first sign of Stevens-Johnson syndrome. And the last thing an exhausted cancer patient needs is to be told to go to yet another clinic for a dermatology consult.

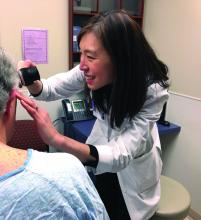

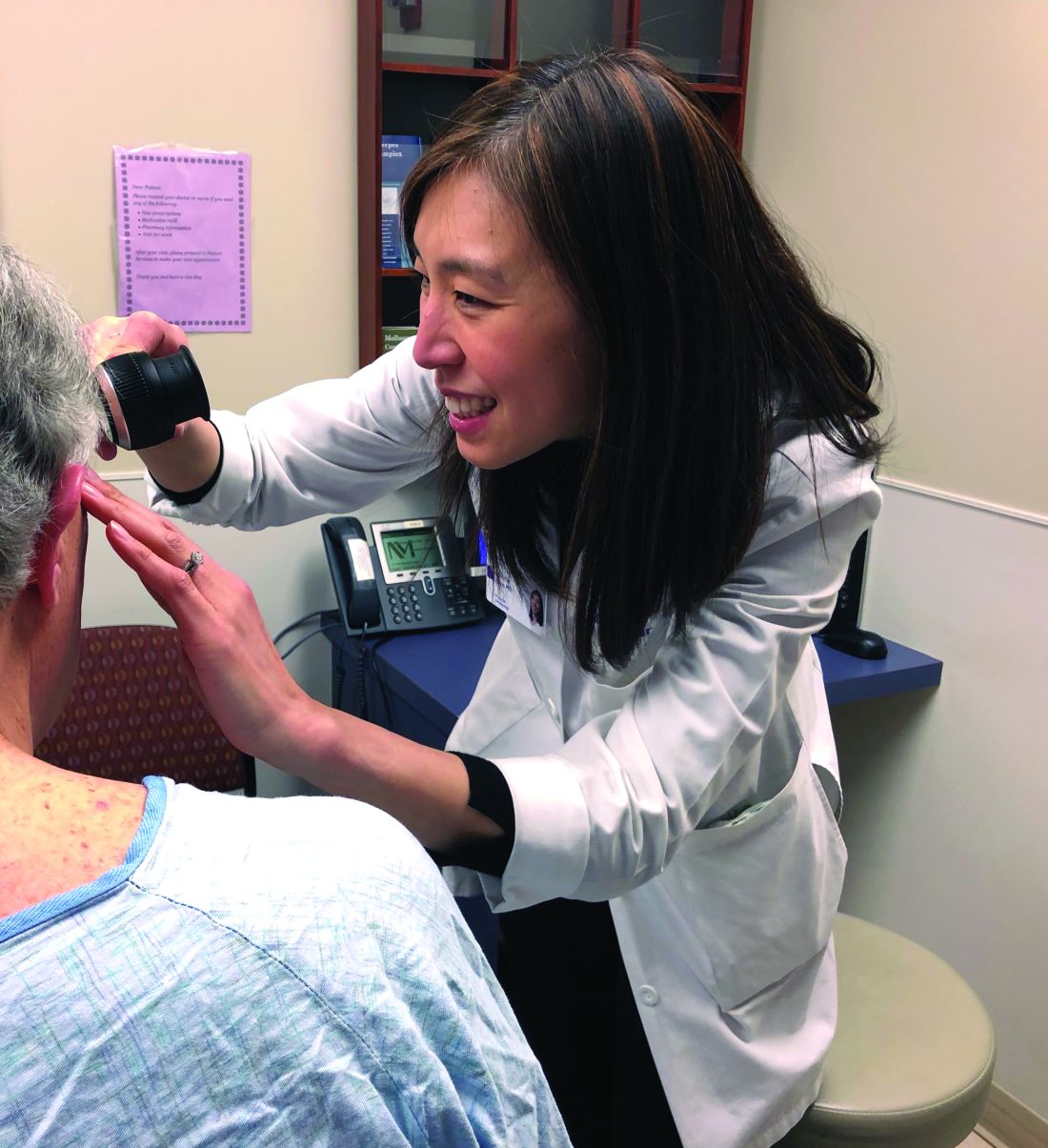

For supportive oncodermatology, that means being where the patients are: in the cancer centers. “Our clinic is situated on the same cancer floor as all the other oncology clinics,” which means easy access for both patients and oncologists, Dr. Choi said. “They just come down the hall.”

Build it, and they will come

The Stanford (Calif.) Cancer Center is a good example of what happens once a supportive oncodermatology service is up and running.

The program there was the brainchild of dermatologist Bernice Kwong, MD, who helped launch it in 2012 with 2 half-day outpatient clinics per week.

“Once people knew we were there seeing patients, we needed to expand it to 3 half days, and within 6 months, we knew we had to be” in the cancer center daily, she said. “The oncologists felt we were helping them keep their patients on treatment longer; they didn’t have to stop therapy to sort out a rash.”

Currently, the clinic sees about 15 to 20 patients a day, but “we have more need than that,” said Dr. Kwong, who is trying to recruit more dermatologists to help.

“The need is huge. There’s so much room for growth,” she noted, but first, “you need the oncologists to be on board.”

Dermatologist Adam Friedman, MD, director of supportive oncodermatology at the George Washington University Cancer Center, Washington, said his program is at the other end of the growth curve because it launched only in the spring of 2017. About 80 patients have been treated so far, and there is one dedicated clinic day a month, although he is on call for urgent cases, as are many of the other dermatologists interviewed for this story.

Dr. Friedman expects demand to pick up once word gets out, as it did at Dana-Farber/Brigham and Women’s, Stanford, and elsewhere. “The places with the greatest need are going to have these services first, and then you’ll see them pop up elsewhere. I think we are going to see more,” he said.

The birth of supportive oncodermatology

Dermatologist Mario Lacouture, MD, director of the oncodermatology program at Memorial Sloan Kettering Cancer Center, New York, is considered by many oncodermatologists to be the father of the field.

He started the very first program in 2005 at Northwestern University, Chicago, followed by the program at Sloan Kettering a few years later. He has helped train many of the leaders in the field and coined the phrase “supportive oncodermatology” as the senior author in the field’s seminal paper, published in 2011 (J Am Acad Dermatol. 2011 Sep;65[3]:624-35). That article, in turn, inspired at least a few young dermatologists to make supportive oncodermatology their career choice. Dr. Lacouture speaks regularly at oncology and dermatology meetings to raise awareness about how dermatologists can improve cancer care.

Dr. Lacouture is also concerned about cancer survivors. “Cancer treatment has improved so much that people are living longer, but the majority of survivors have either temporary or permanent cutaneous problems that would benefit from dermatologic care. However, the oncology community and patients are usually not aware that there are things we can do to help,” Dr. Lacouture said.

The message seems to have gotten out, however, among the hundreds of oncologists affiliated with Sloan Kettering. Dr. Lacouture now needs a team of supportive oncodermatologists, with walk-in clinics every day and round-the-clock call coverage, to meet the demand.

He anticipates a day when visiting a supportive oncodermatologist will be routine, even before the start of cancer treatment, just as people visit a dentist before bone marrow transplants or radiation treatment to the head and neck. The idea would be to prevent cutaneous toxicity, something Dr. Lacouture and his team are already doing at Sloan Kettering. In time, supportive oncodermatology “is something that is going to be instituted early on” in treatment, he said.

“It’s important for dermatologists to reach out to their local oncologists; they will see there are many, many cancer patients and survivors who would benefit immensely from their care,” he said.

Dr. Lacouture is a consultant for Galderma, Janssen, and Johnson & Johnson. The other dermatologists interviewed for this story had no relevant industry disclosures. La Roche-Posay, a subsidiary of L’Oreal, is helping fund the supportive oncodermatology program at George Washington University. The company is interested in using cosmetics to camouflage cancer treatment skin lesions, Dr. Friedman said. Dr. Friedman is a member of the Dermatology News advisory board.

Do not delay tranexamic acid for hemorrhage

Immediate tranexamic acid treatment improved survival from bleeding by more than 70% (odds ratio, 1.72; 95% confidence interval, 1.42-2.10; P less than .0001), but the survival benefit decreased by 10% for every 15 minutes of treatment delay up to 3 hours, after which tranexamic acid offered no benefit.

“Even a short delay in treatment reduces the benefit of tranexamic acid administration. Patients must be treated immediately,” said investigators led by Angele Gayet-Ageron, MD, PhD, of University Hospitals of Geneva.

“Trauma patients should be treated at the scene of injury and postpartum hemorrhage should be treated as soon as the diagnosis is made. Clinical audit should record the time from bleeding onset to tranexamic acid treatment, with feedback and best practice benchmarking,” they said in the Lancet.

The team found no increase in vascular occlusive events with tranexamic acid and no evidence of other adverse effects, so “it can be given safely as soon as bleeding is suspected,” they said.

Antifibrinolytics like tranexamic acid are known to reduce death from bleeding, but the effect of treatment delay has been less clear. To quantify it, the investigators conducted a meta-analysis of individual subjects from two large randomized tranexamic acid trials, one in trauma hemorrhage and the other in postpartum hemorrhage.

There were 1,408 deaths from bleeding among the 40,000-plus subjects. Most of the bleeding deaths (63%) occurred within 12 hours of onset; deaths from postpartum hemorrhage peaked at 2-3 hours after childbirth.

The authors had no competing interests. The trials were funded by Britain’s National Institute for Health Research and Pfizer, among others.

SOURCE: Gayet-Ageron A et al. Lancet. 2017 Nov 7. doi: 10.1016/S0140-6736(17)32455-8

Early administration of tranexamic acid appears to offer the best hope for a good outcome in a bleeding patient with hyperfibrinolysis. The effect of tranexamic acid on inflammation and other pathways in patients without active bleeding is less clear. It is also unclear whether thromboelastography will move out of the research laboratory and become a routine means of assessment for bleeding patients.

At present, the careful study by Dr. Gayet-Ageron and her coworkers suggests that early administration of this agent is applicable to patients with substantial bleeding from multiple causes. As data from additional trials of tranexamic acid become available, the full spectrum of applications for this agent should become clearer.

David Dries, MD, is a professor of surgery at the University of Minnesota, Minneapolis. He made his comments in an editorial (Lancet. 2017 Nov 7. doi: 10.1016/S0140-6736(17)32806-4) and had no competing interests.

FROM THE LANCET

USPSTF goes neutral on adolescent scoliosis screening

The U.S. Preventive Services Task Force neither recommended for nor recommended against routine screening for adolescent idiopathic scoliosis in new guidelines published Jan. 9 in JAMA.

The determination applies to asymptomatic adolescents 10-18 years old; it does not apply to children and adolescents who present with back pain, breathing difficulties, obvious spine deformities, or abnormal imaging.
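The scope of the statement amounts to a simple eligibility rule. The Python sketch below encodes it for illustration only: the class and function names are hypothetical, treating the 10-18 range as inclusive is an assumption, and it is not a clinical decision tool.

```python
from dataclasses import dataclass


@dataclass
class Adolescent:
    age_years: int
    back_pain: bool = False
    breathing_difficulty: bool = False
    obvious_spine_deformity: bool = False
    abnormal_imaging: bool = False


def statement_applies(person: Adolescent) -> bool:
    """True if the person falls within the scope of the USPSTF statement:
    aged 10-18 years (inclusive range assumed) with none of the presenting findings."""
    in_age_range = 10 <= person.age_years <= 18
    has_presenting_finding = (
        person.back_pain
        or person.breathing_difficulty
        or person.obvious_spine_deformity
        or person.abnormal_imaging
    )
    return in_age_range and not has_presenting_finding


print(statement_applies(Adolescent(age_years=12)))                  # True
print(statement_applies(Adolescent(age_years=12, back_pain=True)))  # False
print(statement_applies(Adolescent(age_years=9)))                   # False
```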

In 2004, the task force recommended against routine screening, concluding that the harms outweighed the benefits. Studies since then, however, have shifted the calculus so that the group “no longer has moderate certainty that the harms of treatment outweigh the benefits ... As a result, the USPSTF has determined that the current evidence is insufficient to assess the balance of benefits and harms of screening for adolescent idiopathic scoliosis,” wrote David C. Grossman, MD, MPH, of Kaiser Permanente Washington Health Research Institute, Seattle, and the other members of the task force. That conclusion led the group to issue an “I statement,” for “insufficient evidence.”

An I statement means that “if the service is offered, patients should understand the uncertainty about the balance of benefits and harms ... The USPSTF recognizes that clinical decisions involve more considerations than evidence alone. Clinicians should understand the evidence but individualize decision making to the specific patient or situation.”

The task force did find that screening with the forward bend test, a scoliometer, or both, followed by radiologic confirmation, accurately detects scoliosis. It also found a growing body of evidence that bracing can interrupt or slow scoliosis progression; “however, evidence on whether reducing spinal curvature in adolescence has a long-term effect on health in adulthood is inadequate,” and “evidence on the effects of exercise and surgery on health or spinal curvature in childhood or adulthood is insufficient.” Also, most individuals identified through screening will never require treatment, the task force said.

The guidance is based on a review of 14 studies including 448,276 subjects; more than half of the studies were published after the previous guidance.

The USPSTF noted that limited new evidence suggests curves “may respond similarly to physiotherapeutic, scoliosis-specific exercise treatment; if confirmed, this may represent a treatment option for mild curves before bracing is recommended.”

Meanwhile, “surgical treatment remains the standard of care for curves that progress to greater than 40-50 degrees; however, there are no controlled studies of surgical [versus] nonsurgical treatment in individuals with lower degrees of curvature,” the task force said in an evidence review that was also published Jan. 9 in JAMA and was led by pediatrician John Dunn, MD, of Kaiser Permanente Washington Health Research Institute, Seattle.

More than half of U.S. states either mandate or recommend school-based screening for scoliosis. The American Academy of Orthopaedic Surgeons, the Scoliosis Research Society, the Pediatric Orthopaedic Society of North America, and the American Academy of Pediatrics advocate screening girls at ages 10 and 12 years and boys once, at age 13 or 14 years, as part of medical home preventive services. The United Kingdom National Screening Committee does not recommend screening for scoliosis, given the uncertainty surrounding the effectiveness of screening and treatment.
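The societies’ schedule differs by sex: girls are screened twice and boys once. A minimal Python sketch encoding that schedule as data follows; the names are hypothetical, and the structure is just one way to represent the position described above.

```python
from typing import NamedTuple, Tuple


class ScreeningPlan(NamedTuple):
    ages: Tuple[int, ...]  # candidate ages, in years
    screenings: int        # number of screenings advocated


# Hypothetical encoding of the AAOS/SRS/POSNA/AAP position described above.
SOCIETY_POSITION = {
    "girls": ScreeningPlan(ages=(10, 12), screenings=2),  # at 10 and again at 12
    "boys": ScreeningPlan(ages=(13, 14), screenings=1),   # once, at 13 or 14
}


def is_screening_age(group: str, age_years: int) -> bool:
    """True if the age is one of the ages at which screening is advocated."""
    return age_years in SOCIETY_POSITION[group].ages


print(is_screening_age("girls", 12))  # True
print(is_screening_age("boys", 12))   # False
```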

The work was funded by the Agency for Healthcare Research and Quality. The authors had no relevant disclosures.

SOURCE: US Preventive Services Task Force. JAMA. 2018 Jan 9;319(2):165-72; Dunn J et al. JAMA. 2018;319(2):173-87.

Twenty or more states, including highly populous states such as California, New York, Ohio, and Texas, mandate or strongly recommend school-based screening for scoliosis ... Given the new USPSTF recommendations and the I statement [suggesting insufficient evidence], it would be appropriate for states to advise students and parents of the insufficient data about benefits and harms of screening, while also sharing more recent evidence that bracing and exercise therapies may be helpful if scoliosis is clinically diagnosed in screen-positive youth.

The broad lack of evidence regarding the short-term effect of screening for adolescents and long-term health outcomes in later adolescence and into adulthood is a clear obstacle to moving adolescent idiopathic scoliosis recommendations beyond the I rating. Consequently, the gaps in current understanding serve to highlight immediate opportunities for clinical and health services research. For example, a multisite, multiyear observational study could provide evidence about the association between reduction in spinal curvature in adolescence and long-term health outcomes.

John Sarwark, MD, is head of orthopedic surgery at Ann & Robert H. Lurie Children’s Hospital and a professor of orthopedic surgery at Northwestern University, both in Chicago. Matthew Davis, MD, is head of academic general pediatrics and primary care at Lurie and a pediatrics professor at Northwestern. They made their comments in an editorial published Jan. 9 in JAMA and were not involved with the work (JAMA. 2018;319(2):127-129).

FROM JAMA

Continue to opt for HDT/ASCT for multiple myeloma

High-dose therapy with melphalan followed by autologous stem cell transplant (HDT/ASCT) remains the best option for multiple myeloma, even after almost 2 decades of newer, highly effective induction agents, according to a recent systematic review and two meta-analyses.

Given the “unprecedented efficacy” of “modern induction therapy with immunomodulatory drugs and proteasome inhibitors (also called ‘novel agents’),” investigators “have sought to reevaluate the role of HDT/ASCT,” wrote Binod Dhakal, MD, of the Medical College of Wisconsin, and his colleagues. The report is in JAMA Oncology.

To address the question, they analyzed five randomized controlled trials reported since 2000 and concluded that HDT/ASCT is still the preferred treatment approach.

Despite the lack of a demonstrable overall survival benefit, HDT/ASCT in newly diagnosed multiple myeloma confers a significant progression-free survival (PFS) benefit, low treatment-related mortality, and potentially high rates of minimal residual disease negativity, the researchers noted.

The combined odds ratio for complete response with HDT/ASCT, compared with standard-dose therapy (SDT), was 1.27 (95% confidence interval, 0.97-1.65; P = .07). The combined hazard ratio (HR) for PFS was 0.55 (95% CI, 0.41-0.7; P less than .001) in favor of HDT/ASCT; the HR for overall survival was 0.76 (95% CI, 0.42-1.36; P = .20), a difference that was not statistically significant.
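Two quick checks make these results easier to read. For ratio measures such as odds ratios and hazard ratios, a 95% confidence interval that includes 1.0 generally corresponds to P greater than .05, and because such intervals are typically symmetric on the log scale, the point estimate is approximately the geometric mean of the bounds. The Python sketch below applies both checks to the complete response and overall survival results; the helper names are hypothetical.

```python
import math


def excludes_null(lower: float, upper: float) -> bool:
    """For a ratio measure (OR or HR), True if the 95% CI excludes 1.0."""
    return not (lower <= 1.0 <= upper)


def implied_point_estimate(lower: float, upper: float) -> float:
    """Geometric mean of the CI bounds; approximates the point estimate
    when the interval is symmetric on the log scale."""
    return math.sqrt(lower * upper)


# (point estimate, lower bound, upper bound), as reported above.
results = {
    "complete response (OR)": (1.27, 0.97, 1.65),
    "overall survival (HR)": (0.76, 0.42, 1.36),
}

for name, (estimate, lower, upper) in results.items():
    print(
        f"{name}: reported {estimate}, "
        f"CI excludes 1.0: {excludes_null(lower, upper)}, "
        f"geometric mean of bounds: {implied_point_estimate(lower, upper):.2f}"
    )
```

Both intervals include 1.0, consistent with the reported P values of .07 and .20, and the geometric means of the bounds round to the reported estimates.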

PFS was best with tandem HDT/ASCT (HR, 0.49; 95% CI, 0.37-0.65), followed by single HDT/ASCT with bortezomib, lenalidomide, and dexamethasone consolidation (HR, 0.53; 95% CI, 0.37-0.76) and single HDT/ASCT alone (HR, 0.68; 95% CI, 0.53-0.87), compared with SDT. However, none of the HDT/ASCT approaches had a significant impact on overall survival.
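A hazard ratio below 1.0 translates into a relative reduction in the hazard of progression or death of (1 − HR) × 100%. A minimal Python sketch ranking the three strategies by that measure follows; the strategy labels are shorthand and the function name is hypothetical. This is a relative measure and says nothing about absolute differences in PFS time.

```python
# Pooled PFS hazard ratios vs. standard-dose therapy, as reported above.
pfs_hazard_ratios = {
    "tandem HDT/ASCT": 0.49,
    "single HDT/ASCT + bortezomib/lenalidomide/dexamethasone consolidation": 0.53,
    "single HDT/ASCT alone": 0.68,
}


def relative_hazard_reduction_pct(hazard_ratio: float) -> float:
    """Relative reduction in the hazard of progression or death implied by an HR."""
    return (1.0 - hazard_ratio) * 100.0


# Lowest HR (largest relative reduction) first.
ranked = sorted(pfs_hazard_ratios.items(), key=lambda item: item[1])
for strategy, hr in ranked:
    print(f"{strategy}: HR {hr:.2f}, about {relative_hazard_reduction_pct(hr):.0f}% lower hazard")
```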

Meanwhile, treatment-related mortality with HDT/ASCT was minimal, at less than 1%.

“The achievement of high [minimal residual disease] rates with HDT/ASCT may render this approach the ideal platform for testing novel approaches (e.g., immunotherapy) aiming at disease eradication and cures,” the researchers wrote.

The researchers reported relationships with a number of companies, including Takeda, Celgene, and Amgen, that make novel induction agents.

SOURCE: Dhakal B et al. JAMA Oncol. 2018 Jan 4. doi: 10.1001/jamaoncol.2017.4600.

FROM JAMA ONCOLOGY

Key clinical point: HDT/ASCT remains the preferred treatment approach for newly diagnosed multiple myeloma.

Major finding: The combined odds ratio for complete response with HDT/ASCT, compared with standard-dose therapy (SDT), was 1.27 (95% CI, 0.97-1.65; P = .07).

Study details: A systematic review and two meta-analyses examining five phase 3 clinical trials reported since 2000.

Disclosures: The researchers reported relationships with a number of companies, including Takeda, Celgene, and Amgen, that make novel induction agents.

Source: Dhakal B et al. JAMA Oncol. 2018 Jan 4. doi: 10.1001/jamaoncol.2017.4600.

Risks identified for drug-resistant bacteremia in cirrhosis

Among patients hospitalized with cirrhosis, biliary cirrhosis, recent health care exposure, nonwhite race, and blood cultures taken more than 48 hours after admission all independently predicted that bacteremia would be caused by multidrug-resistant organisms (MDROs), according to a medical record review at CHI St. Luke’s Medical Center, an 850-bed tertiary care center in Houston.

“These variables along with severity of infection and liver disease may help clinicians identify patients who will benefit most from broader-spectrum empiric antimicrobial therapy,” wrote the investigators, led by Jennifer Addo Smith, PharmD, of St. Luke’s, in the Journal of Clinical Gastroenterology.

But local epidemiology remains important. “Although a gram-positive agent (e.g., vancomycin) and a carbapenem-sparing gram-negative agent (e.g., ceftriaxone, cefepime) are reasonable empiric agents at our center, other centers with different resistance patterns may warrant different empiric therapy. Given the low prevalence of VRE [vancomycin-resistant Enterococcus] in this study ... and E. faecium in other studies (4%-7%), an empiric agent active against VRE does not seem to be routinely required,” they said.

The team examined the issue because few U.S. studies have investigated the role of multidrug-resistant organisms in bacteremia among patients hospitalized with cirrhosis.

Thirty patients in the study had bacteremia caused by MDROs while 60 had bacteremia from non-MDROs, giving a 33% prevalence of MDRO bacteremia, which was consistent with previous, mostly European studies.
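The 33% figure is the share of bacteremia cases caused by MDROs; the 90 controls without bacteremia are not part of the denominator. A minimal Python check of the arithmetic:

```python
mdro_cases = 30
non_mdro_cases = 60

# Prevalence among bacteremia cases only; controls without bacteremia are excluded.
prevalence = mdro_cases / (mdro_cases + non_mdro_cases)
print(f"MDRO bacteremia prevalence: {prevalence:.0%}")  # MDRO bacteremia prevalence: 33%
```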

Enterobacteriaceae (43%), Staphylococcus aureus (18%), Streptococcus spp. (11%), Enterococcus spp. (10%), and nonfermenting gram-negative bacilli (6%) were the main causes of bacteremia overall.

Among the 30 MDRO cases, methicillin-resistant S. aureus was isolated in seven (23%); methicillin-resistant coagulase-negative staphylococci in four (13%); fluoroquinolone-resistant Enterobacteriaceae in nine (30%); extended-spectrum beta-lactamase–producing Enterobacteriaceae in three (10%); and VRE in two (7%). No carbapenemase-producing gram-negative bacteria were identified.
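Each percentage uses the 30 MDRO cases as its denominator. Recomputing them in Python also shows that the five listed categories account for 25 of the 30 cases; the article does not say which organisms made up the rest. The dictionary labels are abbreviations of the article’s wording (ESBL stands for extended-spectrum beta-lactamase).

```python
MDRO_CASES = 30  # denominator for the percentages above

mdro_isolates = {
    "methicillin-resistant S. aureus": 7,
    "methicillin-resistant coagulase-negative staphylococci": 4,
    "fluoroquinolone-resistant Enterobacteriaceae": 9,
    "ESBL-producing Enterobacteriaceae": 3,
    "vancomycin-resistant Enterococcus": 2,
}

for organism, count in mdro_isolates.items():
    print(f"{organism}: {count}/{MDRO_CASES} = {count / MDRO_CASES:.0%}")

listed = sum(mdro_isolates.values())
print(f"Cases covered by the listed categories: {listed} of {MDRO_CASES}")
```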

The predictors of MDRO bacteremia emerged on multivariate analysis and included biliary cirrhosis (adjusted odds ratio, 11.75; 95% confidence interval, 2.08-66.32); recent health care exposure (aOR, 9.81; 95% CI, 2.15-44.88); blood cultures obtained more than 48 hours after hospital admission (aOR, 6.02; 95% CI, 1.70-21.40); and nonwhite race (aOR, 3.35; 95% CI, 1.19-9.38).
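The width of each interval indicates how precisely the odds ratio was estimated. Assuming Wald-type intervals, which are symmetric on the log scale, the standard error of log(aOR) can be backed out as (ln upper − ln lower) / (2 × 1.96). A minimal Python sketch follows; the helper name is hypothetical, and the assumption about how the intervals were computed is not something the article reports.

```python
import math

Z_95 = 1.959964  # standard normal quantile for a two-sided 95% CI


def log_se_from_ci(lower: float, upper: float) -> float:
    """Approximate SE of log(aOR), assuming a Wald CI symmetric on the log scale."""
    return (math.log(upper) - math.log(lower)) / (2 * Z_95)


# (aOR, lower bound, upper bound), as reported above.
predictors = {
    "biliary cirrhosis": (11.75, 2.08, 66.32),
    "recent health care exposure": (9.81, 2.15, 44.88),
    "blood cultures >48 h after admission": (6.02, 1.70, 21.40),
    "nonwhite race": (3.35, 1.19, 9.38),
}

for name, (aor, lower, upper) in predictors.items():
    print(f"{name}: aOR {aor}, SE of log(aOR) ~ {log_se_from_ci(lower, upper):.2f}")

# The predictor whose interval is widest on the log scale.
least_precise = max(predictors, key=lambda k: log_se_from_ci(*predictors[k][1:]))
print("Least precise estimate:", least_precise)
```

Biliary cirrhosis has both the largest aOR and the widest interval, so its estimate is the least precise of the four.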

Blood cultures drawn more than 48 hours after admission and recent health care exposure (generally hospitalization within the past 90 days) were likely surrogates for nosocomial infection.

The link with biliary cirrhosis is unclear. “Compared with other cirrhotic patients, perhaps patients with PBC [primary biliary cholangitis] have had more cumulative antimicrobial exposure because of [their] higher risk for UTIs [urinary tract infections] and therefore are at increased risk for MDROs,” they wrote.

The median age in the study was 59 years. Half of the patients were white; 46% were women. Hepatitis C was the most common cause of cirrhosis, followed by alcohol.

In the study, an MDRO was defined as an organism not susceptible to at least one antibiotic in at least three antimicrobial categories; 90 patients with cirrhosis but without bacteremia served as controls.
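The MDRO definition is a counting rule over antimicrobial categories. The Python sketch below encodes it under two assumptions that are not from the article: each agent’s result is recorded as an S/I/R interpretation, and “not susceptible” means intermediate (I) or resistant (R). The example isolates are hypothetical.

```python
from typing import Mapping, Sequence

NON_SUSCEPTIBLE = {"I", "R"}  # assumption: intermediate or resistant counts as not susceptible


def is_mdro(results: Mapping[str, Sequence[str]]) -> bool:
    """Return True if the isolate is non-susceptible to at least one agent
    in at least three antimicrobial categories.

    `results` maps each antimicrobial category to the S/I/R interpretations
    for the agents tested in that category.
    """
    categories_hit = sum(
        1
        for interpretations in results.values()
        if any(r in NON_SUSCEPTIBLE for r in interpretations)
    )
    return categories_hit >= 3


# Hypothetical isolates for illustration.
isolate_a = {
    "fluoroquinolones": ["R"],
    "aminoglycosides": ["S", "R"],
    "extended-spectrum cephalosporins": ["I"],
    "carbapenems": ["S"],
}
isolate_b = {
    "fluoroquinolones": ["R"],
    "aminoglycosides": ["S"],
    "carbapenems": ["S"],
}
print(is_mdro(isolate_a))  # True: three categories with a non-susceptible result
print(is_mdro(isolate_b))  # False: only one such category
```

Resistance to several agents within a single category still counts as one category, which is what distinguishes this definition from a simple count of resistant results.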

The funding source was not reported. Dr. Addo Smith had no disclosures.

SOURCE: Smith JA et al. J Clin Gastroenterol. 2017 Nov 23. doi: 10.1097/MCG.0000000000000964.

*This story was updated on 1/10/2018.

In patients hospitalized with cirrhosis, biliary cirrhosis, recent health care exposure, nonwhite race, and cultures taken more than 48 hours after admission all independently predicted that bacteremia would be caused by multidrug-resistant organisms (MDROs), according to a medical record review at CHI St. Luke’s Medical Center, an 850-bed tertiary care center in Houston.

“These variables along with severity of infection and liver disease may help clinicians identify patients who will benefit most from broader-spectrum empiric antimicrobial therapy,” wrote the investigators, led by Jennifer Addo Smith, PharmD, of St. Luke’s, in the Journal of Clinical Gastroenterology.

But local epidemiology remains important. “Although a gram-positive agent (e.g., vancomycin) and a carbapenem-sparing gram-negative agent (e.g., ceftriaxone, cefepime) are reasonable empiric agents at our center, other centers with different resistance patterns may warrant different empiric therapy. Given the low prevalence of VRE [vancomycin-resistant Enterococcus] in this study ... and E. faecium in other studies (4%-7%), an empiric agent active against VRE does not seem to be routinely required,” they said.

The team looked into the issue because there hasn’t been much investigation in the United States of the role of multidrug resistant organisms in bacteremia among patients hospitalized with cirrhosis.

Thirty patients in the study had bacteremia caused by MDROs while 60 had bacteremia from non-MDROs, giving a 33% prevalence of MDRO bacteremia, which was consistent with previous, mostly European studies.

Enterobacteriaceae (43%), Staphylococcus aureus (18%), Streptococcus spp. (11%), Enterococcus spp. (10%), and nonfermenting gram-negative bacilli (6%) were the main causes of bacteremia overall.

Among the 30 MDRO cases, methicillin-resistant S. aureus was isolated in seven (23%); methicillin-resistant coagulase-negative Staphylococci in four (13%); fluoroquinolone-resistant Enterobacteriaceae in nine (30%); extended spectrum beta-lactamase–producing Enterobacteriaceae in three (10%), and VRE in two (7%). No carbapenemase-producing gram-negative bacteria were identified.

The predictors of MDRO bacteremia emerged on multivariate analysis and included biliary cirrhosis (adjusted odds ratio, 11.75; 95% confidence interval, 2.08-66.32); recent health care exposure (aOR, 9.81; 95% CI, 2.15-44.88); blood cultures obtained 48 hours after hospital admission (aOR, 6.02; 95% CI, 1.70-21.40) and nonwhite race (aOR , 3.35; 95% CI, 1.19-9.38).

Blood cultures past 48 hours and recent health care exposure – generally hospitalization within the past 90 days – were likely surrogates for nosocomial infection.

The link with biliary cirrhosis is unclear. “Compared with other cirrhotic patients, perhaps patients with PBC [primary biliary cholangitis] have had more cumulative antimicrobial exposure because of [their] higher risk for UTIs [urinary tract infections] and therefore are at increased risk for MDROs,” they wrote.

The median age in the study was 59 years. Half of the patients were white; 46% were women. Hepatitis C was the most common cause of cirrhosis, followed by alcohol.

MDRO was defined in the study as bacteria not susceptible to at least one antibiotic in at least three antimicrobial categories; 90 cirrhosis patients without bacteremia served as controls.

The funding source was not reported. Dr. Addo Smith had no disclosures.

SOURCE: Smith JA et al. J Clin Gastroenterol. 2017 Nov 23. doi: 10.1097/MCG.0000000000000964.

*This story was updated on 1/10/2018.

FROM THE JOURNAL OF CLINICAL GASTROENTEROLOGY

Key clinical point: In patients hospitalized with cirrhosis, biliary cirrhosis, recent health care exposure, nonwhite race, and blood cultures taken more than 48 hours after hospital admission all independently predicted that bacteremia would be caused by multidrug-resistant organisms.

Major finding: The predictors of multidrug-resistant organism bacteremia emerged on multivariate analysis and included biliary cirrhosis (aOR, 11.75; 95% CI, 2.08-66.32), recent health care exposure (aOR, 9.81; 95% CI, 2.15-44.88), blood cultures obtained more than 48 hours after hospital admission (aOR, 6.02; 95% CI, 1.70-21.40), and nonwhite race (aOR, 3.35; 95% CI, 1.19-9.38).

Study details: Review of 90 cirrhotic patients with bacteremia, plus 90 controls.

Disclosures: The lead investigator had no disclosures.

Source: Smith JA et al. J Clin Gastroenterol. 2017 Nov 23. doi: 10.1097/MCG.0000000000000964.
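Reported adjusted odds ratios and their 95% confidence intervals can be sanity-checked against each other. This assumes the intervals are Wald-type intervals computed on the log-odds scale. That is the usual approach for logistic regression, but the paper's exact method is not stated here. Under that assumption, the point estimate should sit near the geometric mean of the two limits, and the standard error of the log odds ratio can be recovered from the interval width. A minimal sketch using the figures reported for biliary cirrhosis, recent health care exposure, and late blood cultures:

```python
import math

Z_95 = 1.959964  # two-sided 95% standard normal quantile


def implied_point_estimate(lower, upper):
    """Geometric mean of the CI limits, i.e. exp of the log-scale midpoint."""
    return math.sqrt(lower * upper)


def implied_log_se(lower, upper, z=Z_95):
    """Standard error of log(OR) implied by a symmetric log-scale interval."""
    return (math.log(upper) - math.log(lower)) / (2 * z)


# (reported aOR, lower 95% limit, upper 95% limit)
reported = {
    "biliary cirrhosis": (11.75, 2.08, 66.32),
    "recent health care exposure": (9.81, 2.15, 44.88),
    "cultures >48 h after admission": (6.02, 1.70, 21.40),
}

for name, (aor, lower, upper) in reported.items():
    est = implied_point_estimate(lower, upper)
    se = implied_log_se(lower, upper)
    print(f"{name}: reported {aor}, implied {est:.2f}, SE(log OR) {se:.2f}")
```

Small discrepancies are expected because the published limits are rounded. The very wide intervals, such as roughly 2 to 66 for biliary cirrhosis, reflect how imprecise these estimates are in a study of this size.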

Cars that recognize hypoglycemia? Maybe soon

SAN DIEGO – When researchers at the University of Nebraska placed sensors in the cars of patients with type 1 diabetes, they found something interesting: About 3.4% of the time, the patients were driving with a blood glucose below 70 mg/dL.

Almost 10% of the time, it was above 300 mg/dL. Both hyper- and hypoglycemia, but particularly hypoglycemia, corresponded with erratic driving, especially at highway speeds.
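Figures like these are proportions of glucose readings taken while driving. The sketch below assumes evenly spaced continuous glucose monitor readings, so each reading stands for an equal slice of driving time. It uses the study's 70 mg/dL and 300 mg/dL cutoffs. The band names and the list of readings are made up for illustration.

```python
HYPO_CUTOFF = 70    # mg/dL; readings below this count as hypoglycemia
HYPER_CUTOFF = 300  # mg/dL; readings above this count as marked hyperglycemia


def glycemic_band(mg_dl):
    """Classify one reading as 'low', 'middle', or 'high' (hypothetical labels)."""
    if mg_dl < HYPO_CUTOFF:
        return "low"
    if mg_dl > HYPER_CUTOFF:
        return "high"
    return "middle"


def band_fractions(readings):
    """Share of readings (and, with even spacing, of driving time) in each band."""
    if not readings:
        raise ValueError("need at least one reading")
    counts = {"low": 0, "middle": 0, "high": 0}
    for value in readings:
        counts[glycemic_band(value)] += 1
    return {band: n / len(readings) for band, n in counts.items()}


# Hypothetical readings from one drive, not study data
readings = [65, 80, 110, 150, 210, 320, 140, 95, 60, 180]
print(band_fractions(readings))
```

Adding the "low" and "high" shares gives the overall fraction of time spent dangerously low or high.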

The findings may help explain why patients taking insulin for type 1 diabetes have a 12%-19% higher risk of crashing their cars, compared with the general population. But in a larger sense, the study speaks to a new possibility as cars become smarter: monitoring drivers' mental states and pulling over to the side of the road or otherwise taking control if there's a problem.

The "results show that vehicle sensor and physiologic data can be successfully linked to quantify individual driver performance and behavior in drivers with metabolic disorders that affect brain function. The work we are doing could be used to tune the algorithms that drive these automated vehicles. I think this is a very important area of study," said senior investigator Matthew Rizzo, MD, chair of the university's department of neurological sciences in Omaha.

Participants had the devices in their cars for a month, during which the patients with diabetes were also on continuous, 24-hour blood glucose monitoring. The investigators then synced the car data with the glucose readings and compared the results with data from the control drivers' cars. In all, the system recorded more than 1,000 hours of road time across 3,687 drives and 21,232 miles.
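Syncing two data streams like these is a time-alignment problem: each vehicle sensor sample is paired with the most recent glucose reading taken at or before it. The sketch below uses Python's standard `bisect` module. Several details are assumptions for illustration, not reported by the investigators: timestamps in seconds, a glucose reading every 5 minutes, and a hypothetical 10-minute limit beyond which a reading is treated as stale.

```python
from bisect import bisect_right


def align_to_glucose(vehicle_times, glucose_times, glucose_values, max_age=600):
    """Pair each vehicle timestamp with the latest glucose reading at or before it.

    glucose_times must be sorted ascending. A sample gets None when no reading
    falls within the preceding max_age seconds (hypothetical staleness limit).
    """
    aligned = []
    for t in vehicle_times:
        i = bisect_right(glucose_times, t) - 1  # index of last reading <= t
        if i >= 0 and t - glucose_times[i] <= max_age:
            aligned.append(glucose_values[i])
        else:
            aligned.append(None)
    return aligned


# Hypothetical data: glucose every 300 s, vehicle samples at arbitrary times
glucose_times = [0, 300, 600, 900]
glucose_values = [110, 95, 72, 64]
vehicle_times = [-10, 10, 299, 300, 450, 1000, 1600]
print(align_to_glucose(vehicle_times, glucose_times, glucose_values))
```

Matching to the last prior reading, rather than the nearest one, avoids pairing a maneuver with a glucose value measured after it. Interpolating between readings would be another reasonable design choice.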

“What we found was that the drivers with diabetes had trouble,” Dr. Rizzo said at the American Neurological Association annual meeting.

Glucose was dangerously high or low about 13% of the time when people with diabetes were behind the wheel. Their accelerometer profiles revealed riskier maneuvering and more variable pedal control even during periods of euglycemia and moderate hyperglycemia, but particularly when hypoglycemia occurred at highway speeds.
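The article does not describe how the investigators scored risky maneuvering. One hypothetical way to compare driving across glycemic states is to count accelerometer samples that exceed a "harsh" threshold within each band. In the sketch below, the 0.4 g threshold, the band labels, and the samples are all assumptions for illustration.

```python
from collections import Counter

HARSH_G = 0.4  # hypothetical threshold for a harsh maneuver, in g


def harsh_event_rates(samples, threshold=HARSH_G):
    """Share of samples with |acceleration| above threshold, per glycemic band.

    samples is a list of (glycemic_band, accel_g) pairs, for example produced
    by aligning accelerometer data with glucose readings.
    """
    totals = Counter()
    harsh = Counter()
    for band, accel in samples:
        totals[band] += 1
        if abs(accel) > threshold:
            harsh[band] += 1
    return {band: harsh[band] / totals[band] for band in totals}


# Hypothetical (band, longitudinal acceleration in g) samples
samples = [
    ("low", 0.5), ("low", -0.45), ("low", 0.1), ("low", 0.2),
    ("middle", 0.1), ("middle", -0.2), ("middle", 0.45), ("middle", 0.05),
    ("high", 0.3), ("high", -0.5),
]
print(harsh_event_rates(samples))
```

Per-band rates like these could then be compared across glycemic states, or against rates from control drivers' cars.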

One driver almost blacked out behind the wheel when his blood glucose fell below 40 mg/dL. "He might have been driving because he was not aware he had a problem," Dr. Rizzo said. He is aware now; he was shown the video.

The team also reviewed the participants' Department of Motor Vehicles records for the 2 years before the study. All three car crashes in the study population involved drivers with diabetes, who also received 11 of the 13 citations (85%).

The technology has many implications. In the short term, it’s a feedback tool to help people with diabetes stay safer on the road. But the work is also “a model for us to be able to approach all kinds of medical disorders in the real world. We want generalizable models that go beyond type 1 diabetes to type 2 diabetes and other forms of encephalopathy, of which there are many in neurology.” Those models could one day lead to “automated in-vehicle technology responsive to driver’s momentary neurocognitive state. You could have [systems] that alert the car that the driver is in no state to drive; the car could even take over. We are very excited about” the possibilities, Dr. Rizzo said.

Meanwhile, “just the diagnosis of diabetes itself is not enough to restrict a person from driving. But if you record their sugars over long periods of time, and you see the kind of changes we saw in some of the drivers, it means the license might need to be adjusted slightly,” he said.

Dr. Rizzo had no relevant disclosures. One of the investigators was an employee of the Toyota Collaborative Safety Research Center.
