ILD on the rise: Doctors offer tips for diagnosing deadly disease

“There is definitely a delay from the time of symptom onset to the time that they are even evaluated for ILD,” said Dr. Kulkarni of the department of pulmonary, allergy and critical care medicine at the University of Alabama, Birmingham. “Some patients have had a significant loss of lung function by the time they come to see us. By that point we are limited by what treatment options we can offer.”

Interstitial lung disease (ILD) is an umbrella term for a group of disorders involving progressive, typically irreversible scarring of the lungs, usually caused by long-term exposure to hazardous materials or by autoimmune processes. It includes idiopathic pulmonary fibrosis (IPF), a fairly rare disease for which therapies can be effective if it is caught early enough. The term pulmonary fibrosis refers to lung scarring. Another type of ILD is pulmonary sarcoidosis, in which small clumps of immune cells form in the lungs, sometimes in response to an environmental trigger; if this doesn’t resolve, it can lead to lung scarring.

Cases of ILD appear to be on the rise, and COVID-19 has made diagnosing it more complicated. One study found the prevalence of ILD and pulmonary sarcoidosis in high-income countries was about 122 of every 100,000 people in 1990 and rose to about 198 of every 100,000 people in 2017. The data were pulled from the Global Burden of Diseases, Injuries, and Risk Factors Study 2017. Globally, the researchers found a prevalence of 62 per 100,000 in 1990, compared with 82 per 100,000 in 2017.
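Taken at face value, those figures imply a relative rise of about 62% in high-income countries and about 32% worldwide over the 27-year span. The short Python sketch below only performs that arithmetic on the rounded prevalence values quoted above. The percentages are our own calculation, not numbers reported by the study.

```python
def percent_change(before: float, after: float) -> float:
    """Relative change from `before` to `after`, expressed as a percentage."""
    return (after - before) / before * 100


# Prevalence per 100,000 people (1990 -> 2017), as quoted in the article.
high_income = percent_change(122, 198)
global_rate = percent_change(62, 82)

print(f"High-income countries: {high_income:.1f}% increase")  # 62.3% increase
print(f"Global: {global_rate:.1f}% increase")                  # 32.3% increase
```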

If all of a patient’s symptoms appeared after COVID-19 and a physician is seeing the patient within 4-6 weeks of COVID symptoms, the symptoms are likely COVID related. But a full work-up is recommended if the patient has lung crackles, which are an indicator of lung scarring, Dr. Kulkarni said.

“The patterns that are seen on CT scan for COVID pneumonia are very distinct from what we expect to see with idiopathic pulmonary fibrosis,” Dr. Kulkarni said. “Putting all this information together is what is important to differentiate it from COVID pneumonia, as well as other types of ILD.”

A study published earlier this year found similarities between COVID-19 and IPF in gene expression, in their IL-15-heavy cytokine storms, and in the type of damage to alveolar cells. Both diseases might be driven by endoplasmic reticulum stress, the researchers found.

“COVID-19 resembles IPF at a fundamental level,” they wrote.

Jeffrey Horowitz, MD, a pulmonologist and professor of medicine at the Ohio State University, said the need for early diagnosis is in part a function of the therapies available for ILD.

“They don’t make the lung function better,” he said. “So delays in diagnosis mean that there’s the possibility of underlying progression for months, or sometimes years, before the diagnosis is recognized.”

In an area in which diagnosis is delayed and the prognosis is dire – survival of 3-5 years after diagnosis in untreated patients – “there’s a tremendous amount of nihilism out there” among patients, he said.

He said patients with long-term shortness of breath and unexplained cough are often told they have asthma and are prescribed inhalers, but then further assessment isn’t performed when those don’t work.

Diagnosing ILD in primary care

Many primary care physicians feel ill-equipped to discuss IPF. More than a dozen physicians contacted for this piece to talk about ILD either did not respond or said they felt unqualified to answer questions about the disease.

“Not my area of expertise” and “I don’t think I’m the right person for this discussion” were two of the responses provided to this news organization.

“For some reason, in the world of primary care, it seems like there’s an impediment to getting pulmonary function studies,” Dr. Horowitz said. “Anybody who has a persistent ongoing prolonged unexplained shortness of breath and cough should have pulmonary function studies done.”

Listening to the lungs alone might not be enough, he said, because early pulmonary fibrosis may produce no clear sign.

“There’s the textbook description of these Velcro-sounding crackles, but sometimes it’s very subtle,” he said. “And unless you’re listening very carefully it can easily be missed by somebody who has a busy practice, or it’s loud.”

William E. Golden, MD, professor of medicine and public health at the University of Arkansas, Little Rock, is the sole primary care physician contacted for this piece who spoke with authority on ILD.

For cases of suspected ILD, internist Dr. Golden, who also serves on the editorial advisory board of Internal Medicine News, suggested ordering a test of the diffusing capacity of the lungs for carbon monoxide (DLCO), which will be low in the case of IPF, along with a fine-cut (high-resolution) CT scan of the lungs to assess ongoing fibrotic changes.

It’s “not that difficult, but you need to have an index of suspicion for the diagnosis,” he said.

New initiative for helping diagnose ILD

Dr. Kulkarni is a committee member for a new effort under way to try to get patients with ILD diagnosed earlier.

The initiative, called Bridging Specialties: Timely Diagnosis for ILD Patients, has already produced an introductory podcast, and a white paper on the effort and its rationale is expected to be released soon, according to Dr. Kulkarni and her fellow committee members.

The American College of Chest Physicians (CHEST) and the Three Lakes Foundation, a foundation dedicated to pulmonary fibrosis awareness and research, are working together on this initiative. They plan to put together a suite of resources, to be rolled out gradually on the college’s website, to raise awareness about the importance of early diagnosis of ILD.

The full toolkit, expected to be rolled out over the next 12 months, will include a series of podcasts and resources on how to get patients diagnosed earlier and steps to take in cases of suspected ILD, Dr. Kulkarni said.

“The goal would be to try to increase awareness about the disease so that people start thinking more about it up front – and not after we’ve ruled out everything else,” she said. The main audience will be primary care providers, but patients and community pulmonologists would likely also benefit from the resources, the committee members said.

The urgency of the initiative stems from the way ILD treatments work. They are antifibrotic, meaning they help prevent scar tissue from forming, but they can’t reverse scar tissue that has already formed. If scarring is severe, the only option might be a lung transplant, and since the average age at ILD diagnosis is in the 60s, many patients have comorbidities that make them ineligible for transplant. According to the Global Burden of Disease Study mentioned earlier, the ILD death rate in 2017 was 1.93 per 100,000 people.

“The longer we take to diagnose it, the more chance that inflammation will become scar tissue,” Dr. Kulkarni explained.

William Lago, MD, another member of the committee and a family physician, said identifying ILD early is not a straightforward matter.

“When they first present, it’s hard to pick up,” said Dr. Lago, who is also a staff physician at Cleveland Clinic’s Wooster Family Health Center and medical director of the COVID Recover Clinic there. “Many of them, even themselves, will discount the symptoms.”

Dr. Lago said that patients might resist having a work-up even when a primary care physician identifies symptoms as possible ILD. In rural settings, they might have to travel quite a distance for a CT scan or other necessary evaluations, or they might just not think the symptoms are serious enough.

“Most of the time when I’ve picked up some of my pulmonary fibrosis patients, it’s been incidentally while they’re in the office for other things,” he said. He often has to “push the issue” for further work-up, he said.

The overlap of shortness of breath and cough with other, much more common disorders, such as heart disease or chronic obstructive pulmonary disease (COPD), makes ILD diagnosis a challenge, he said.

“For most of us, we’ve got sometimes 10 or 15 minutes with a patient who’s presenting with 5-6 different problems. And the shortness of breath or the occasional cough – that they think is nothing – is probably the least of those,” Dr. Lago said.

Dr. Golden said he suspected that a tool like the one being developed by CHEST would be useful for some and not for others. He added that “no one has the time to spend on that kind of thing.”

Instead, he suggested just reinforcing what the core symptoms are and what the core testing is, “to make people think about it.”

Dr. Horowitz seemed more optimistic that the CHEST tool would be used to help diagnose ILD.

Whether and how he would use the CHEST resource will depend on the final form it takes, Dr. Horowitz said. It’s encouraging that it’s being put together by a credible source, he added.

Dr. Kulkarni reported financial relationships with Boehringer Ingelheim, Aluda Pharmaceuticals and PureTech Lyt-100 Inc. Dr. Lago, Dr. Horowitz, and Dr. Golden reported no relevant disclosures.

Katie Lennon contributed to this report.

Crystal Bone algorithm predicts early fractures, uses ICD codes

The novel Crystal Bone (Amgen) algorithm predicted the 2-year risk of osteoporotic fractures in a large dataset with an accuracy consistent with that of FRAX 10-year risk predictions, researchers report.

The algorithm was built using machine learning and artificial intelligence to predict fracture risk based on International Classification of Diseases (ICD) codes, as described in an article published in the Journal of Medical Internet Research.

The current validation study was presented September 9 as a poster at the annual meeting of the American Society for Bone and Mineral Research (ASBMR).

The scientists validated the algorithm in more than 100,000 patients aged 50 and older (that is, at risk of fracture) who were part of the Reliant Medical Group dataset (a subset of Optum Care).

Importantly, the algorithm predicted increased fracture risk in many patients who did not have a diagnosis of osteoporosis.

The next steps are validation in other datasets to support the generalizability of Crystal Bone across U.S. health care systems, Elinor Mody, MD, Reliant Medical Group, and colleagues report.

“Implementation research, in which patients identified by Crystal Bone undergo a bone health assessment and receive ongoing management, will help inform the clinical utility of this novel algorithm,” they conclude.

At the poster session, Tina Kelley, Optum Life Sciences, explained: “It’s a screening tool that says: ‘These are your patients that maybe you should spend a little extra time with, ask a few extra questions.’ ”

However, further study is needed before it is used in clinical practice, she emphasized to this news organization.

‘A very useful advance’ but needs further validation

Invited to comment, Peter R. Ebeling, MD, outgoing president of the ASBMR, noted that “many clinicians now use FRAX to calculate absolute fracture risk and select patients who should initiate anti-osteoporosis drugs.”

With FRAX, clinicians input a patient’s age, sex, weight, height, previous fracture, [history of] parent with fractured hip, current smoking status, glucocorticoids, rheumatoid arthritis, secondary osteoporosis, alcohol (3 units/day or more), and bone mineral density (by DXA at the femoral neck) into the tool, to obtain a 10-year probability of fracture.

“Crystal Bone takes a different approach,” Dr. Ebeling, from Monash University, Melbourne, who was not involved with the research but who disclosed receiving funding from Amgen, told this news organization in an email.

The algorithm uses electronic health records (EHRs) to identify patients who are likely to have a fracture within the next 2 years, he explained, based on diagnoses and medications associated with osteoporosis and fractures. These include ICD-10 codes for fractures at various sites and secondary causes of osteoporosis (such as rheumatoid and other inflammatory arthritis, chronic obstructive pulmonary disease, asthma, celiac disease, and inflammatory bowel disease).

“This is a very useful advance,” Dr. Ebeling summarized, “in that it would alert the clinician to patients in their practice who have a high fracture risk and need to be investigated for osteoporosis and initiated on treatment. Otherwise, the patients would be missed, as currently often occurs.”

“It would need to be adaptable to other [EMR] systems and to be validated in a large separate population to be ready to enter clinical practice,” he said, “but these data look very promising with a good [positive predictive value (PPV)].”

Similarly, Juliet Compston, MD, said: “It provides a novel, fully automated approach to population-based screening for osteoporosis using EHRs to identify people at high imminent risk of fracture.”

Dr. Compston, emeritus professor of bone medicine, University of Cambridge, England, who was not involved with the research but who also disclosed being a consultant for Amgen, selected the study as one of the top clinical science highlights abstracts at the meeting.

“The algorithm looks at ICD codes for previous history of fracture, medications that have adverse effects on bone – for example glucocorticoids, aromatase inhibitors, and anti-androgens – as well as chronic diseases that increase the risk of fracture,” she explained.

“FRAX is the most commonly used tool to estimate fracture probability in clinical practice and to guide treatment decisions,” she noted. However, “currently it requires human input of data into the FRAX website and is generally only performed on individuals who are selected on the basis of clinical risk factors.”

“The Crystal Bone algorithm offers the potential for fully automated population-based screening in older adults to identify those at high risk of fracture, for whom effective therapies are available to reduce fracture risk,” she summarized.

“It needs further validation,” she noted, “and implementation into clinical practice requires the availability of high-quality EHRs.”

Algorithm validated in 106,328 patients aged 50 and older

Although guidelines recommend screening for osteoporosis in women aged 65 and older, men older than 70, and adults aged 50-79 with risk factors, real-world data suggest that screening rates are low, the researchers note.

The current validation study identified 106,328 patients aged 50 and older who had at least 2 years of consecutive medical history with the Reliant Medical Group from December 2014 to November 2020 as well as at least two EHR codes.
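The inclusion criteria above can be written as a simple filter. This is a sketch under stated assumptions: the `Patient` fields and the `is_eligible` function are hypothetical, "2 years" is approximated as 730 days, and "consecutive medical history" is modeled as a single continuous span clipped to the December 2014 to November 2020 window.

```python
from dataclasses import dataclass
from datetime import date

# Study window reported for the Reliant Medical Group data
WINDOW_START = date(2014, 12, 1)
WINDOW_END = date(2020, 11, 30)


@dataclass
class Patient:
    # Hypothetical fields for illustration only
    age: int
    history_start: date  # start of continuous care history
    history_end: date    # end of continuous care history
    n_ehr_codes: int


def is_eligible(p: Patient, min_age: int = 50, min_days: int = 730, min_codes: int = 2) -> bool:
    """Age >= 50, ~2+ years of continuous history inside the window, >= 2 EHR codes."""
    start = max(p.history_start, WINDOW_START)
    end = min(p.history_end, WINDOW_END)
    days_in_window = (end - start).days  # negative if the span misses the window
    return p.age >= min_age and days_in_window >= min_days and p.n_ehr_codes >= min_codes


print(is_eligible(Patient(67, date(2015, 1, 1), date(2018, 1, 1), 5)))
```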

The accuracy of predicting a fracture within 2 years, expressed as the area under the receiver operating characteristic curve (AUROC), was 0.77, where 1 is perfect, 0.5 is no better than random selection, 0.7 to 0.8 is acceptable, and 0.8 to 0.9 indicates excellent predictive accuracy.
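AUROC has a convenient interpretation: it is the probability that a randomly chosen patient who goes on to fracture receives a higher risk score than a randomly chosen patient who does not, with ties counted as half. The sketch below computes it directly from that pairwise definition; the scores and labels are made-up toy values, not study data.

```python
def auroc(scores: list[float], labels: list[int]) -> float:
    """AUROC as the share of (positive, negative) pairs ranked correctly; ties count 0.5."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative case")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


# Toy example: 1 = fractured within 2 years, 0 = did not
print(auroc([0.9, 0.8, 0.4, 0.3, 0.2], [1, 0, 1, 0, 0]))
```

The pairwise loop is quadratic and meant only for illustration; libraries compute the same quantity from sorted ranks.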

In the entire Optum Reliant population older than 50, the risk of fracture within 2 years was 1.95%.

The algorithm identified four groups with a greater risk: 19,100 patients had a threefold higher risk of fracture within 2 years, 9,246 patients had a fourfold higher risk, 3,533 patients had a sevenfold higher risk, and 1,735 patients had a ninefold higher risk.
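A quick way to read these fold increases is to convert them to approximate absolute risks using the 1.95% baseline. The sketch assumes each "n-fold higher risk" is relative to that cohort-wide baseline, which the report does not state explicitly; `absolute_risk` is a hypothetical helper.

```python
BASELINE_2YR_RISK = 0.0195  # 2-year fracture risk in the whole cohort, as reported

# (patients in group, reported fold increase); multiplying the baseline by the
# fold increase assumes the ratios are relative to this cohort-wide baseline.
GROUPS = [(19_100, 3), (9_246, 4), (3_533, 7), (1_735, 9)]


def absolute_risk(fold: float, baseline: float = BASELINE_2YR_RISK) -> float:
    """Convert an n-fold relative risk into an approximate absolute 2-year risk."""
    return fold * baseline


for size, fold in GROUPS:
    risk = absolute_risk(fold)
    print(f"{size:>6,} patients at {fold}x: ~{risk:.2%} 2-year risk, "
          f"~{size * risk:,.0f} expected fractures")
```

Under this assumption the groups span roughly 5.9% to 17.6% absolute 2-year risk, which is in line with the 6%-18% positive predictive value reported for the same groups, since PPV is the share of flagged patients who go on to fracture.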

Many of these patients had no prior diagnosis of osteoporosis

For example, among the 19,100 patients with a threefold greater risk of fracture in 2 years, 69% had not been diagnosed with osteoporosis: 49% of the group had no history of fracture and 20% had a history of fracture.

Across the four groups, the algorithm had a positive predictive value of 6%-18%, a negative predictive value of 98%-99%, a specificity of 81%-98%, and a sensitivity of 18%-59%.
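These four metrics all come from the same 2x2 table of flagged versus unflagged patients and fractured versus not fractured. The sketch below uses hypothetical counts (not study data) for 10,000 patients with a 1% fracture rate to show why a rare outcome tends to produce a low PPV alongside a very high NPV; `screening_metrics` is an illustrative helper.

```python
def screening_metrics(tp: int, fp: int, fn: int, tn: int) -> dict[str, float]:
    """Standard screening metrics from a 2x2 confusion matrix."""
    return {
        "ppv": tp / (tp + fp),          # flagged patients who did fracture
        "npv": tn / (tn + fn),          # unflagged patients who did not fracture
        "sensitivity": tp / (tp + fn),  # fractures that were flagged
        "specificity": tn / (tn + fp),  # non-fractures that were not flagged
    }


# Hypothetical counts for 10,000 patients with a 1% fracture rate (not study data)
metrics = screening_metrics(tp=60, fp=940, fn=40, tn=8_960)
for name, value in metrics.items():
    print(f"{name}: {value:.1%}")
```

Even with 60% sensitivity, most flagged patients in this toy example do not fracture within the window, because fractures are rare; the same base-rate effect keeps the NPV near 100%.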

The study was funded by Amgen. Dr. Mody and another author are Reliant Medical Group employees. Ms. Kelley and another author are Optum Life Sciences employees. One author is an employee at Landing AI. Two authors are Amgen employees and own Amgen stock. Dr. Ebeling has disclosed receiving research funding from Amgen, Sanofi, and Alexion, and his institution has received honoraria from Amgen and Kyowa Kirin. Dr. Compston has disclosed receiving speaking and consultancy fees from Amgen and UCB.

A version of this article first appeared on Medscape.com.

FROM ASBMR 2022

Prior psychological distress tied to ‘long-COVID’ conditions

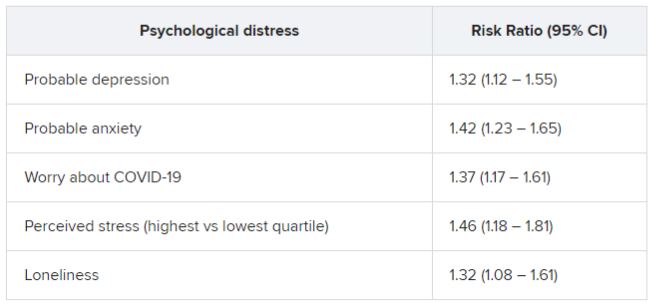

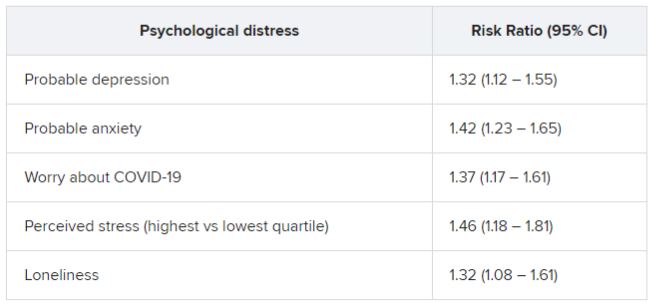

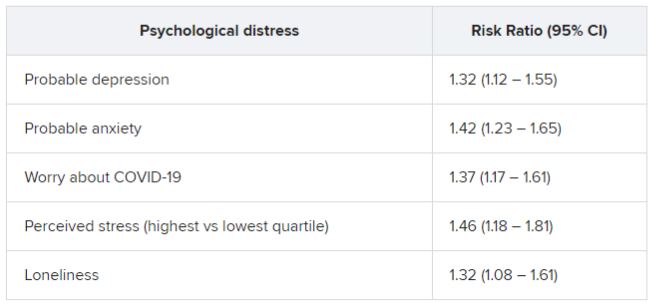

In an analysis of almost 55,000 adult participants in three ongoing studies, having depression, anxiety, worry, perceived stress, or loneliness early in the pandemic, before SARS-CoV-2 infection, was associated with a 50% increased risk for developing long COVID. These types of psychological distress were also associated with a 15% to 51% greater risk for impairment in daily life among individuals with long COVID.

Psychological distress was even more strongly associated with developing long COVID than were physical health risk factors, and the increased risk was not explained by health behaviors such as smoking or physical comorbidities, researchers note.

“Our findings suggest the need to consider psychological health in addition to physical health as risk factors of long COVID-19,” lead author Siwen Wang, MD, postdoctoral fellow, department of nutrition, Harvard T. H. Chan School of Public Health, Boston, said in an interview.

“We need to increase public awareness of the importance of mental health and focus on getting mental health care for people who need it, increasing the supply of mental health clinicians and improving access to care,” she said.

The findings were published online in JAMA Psychiatry.

‘Poorly understood’

Postacute sequelae of SARS-CoV-2 (“long COVID”), which are “signs and symptoms consistent with COVID-19 that extend beyond 4 weeks from onset of infection,” constitute “an emerging health issue,” the investigators write.

Dr. Wang noted that it has been estimated that 8-23 million Americans have developed long COVID. However, “despite the high prevalence and daily life impairment associated with long COVID, it is still poorly understood, and few risk factors have been established,” she said.

Although psychological distress may be implicated in long COVID, only three previous studies investigated psychological factors as potential contributors, the researchers note. Also, no study has investigated the potential role of other common manifestations of distress that have increased during the pandemic, such as loneliness and perceived stress, they add.

To investigate these issues, the researchers turned to three large ongoing longitudinal studies: the Nurses’ Health Study II (NHSII), the Nurses’ Health Study 3 (NHS3), and the Growing Up Today Study (GUTS).

They analyzed data on 54,960 total participants (96.6% women; mean age, 57.5 years). Of the full group, 38% were active health care workers.

Participants completed an online COVID-19 questionnaire from April 2020 to Sept. 1, 2020 (baseline), and monthly surveys thereafter. Beginning in August 2020, surveys were administered quarterly. The end of follow-up was in November 2021.

The COVID questionnaires included questions about positive SARS-CoV-2 test results, COVID symptoms and hospitalization since March 1, 2020, and the presence of long-term COVID symptoms, such as fatigue, respiratory problems, persistent cough, muscle/joint/chest pain, smell/taste problems, confusion/disorientation/brain fog, depression/anxiety/changes in mood, headache, and memory problems.

Participants who reported these post-COVID conditions were asked about the frequency of symptoms and the degree of impairment in daily life.

Inflammation, immune dysregulation implicated?

The Patient Health Questionnaire–4 (PHQ-4) was used to assess for anxiety and depressive symptoms in the past 2 weeks. It consists of a two-item depression measure (PHQ-2) and a two-item Generalized Anxiety Disorder Scale (GAD-2).
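Each PHQ-4 item is scored from 0 ("not at all") to 3 ("nearly every day"), so each two-item subscale ranges from 0 to 6. The sketch below scores the subscales and applies a cutoff of 3, the commonly used screening threshold; whether the study used exactly this cutoff to define "probable" depression and anxiety is an assumption, and `score_phq4` is a hypothetical helper.

```python
def score_phq4(interest: int, down: int, nervous: int, worrying: int, cutoff: int = 3) -> dict:
    """Score PHQ-4 items (each 0-3) into PHQ-2 and GAD-2 subscales.

    interest/down form the PHQ-2 (depression); nervous/worrying form the GAD-2
    (anxiety). A cutoff of 3 per subscale is assumed for "probable" cases.
    """
    items = (interest, down, nervous, worrying)
    if any(not 0 <= item <= 3 for item in items):
        raise ValueError("each PHQ-4 item must be scored 0-3")
    phq2 = interest + down
    gad2 = nervous + worrying
    return {
        "phq2": phq2,
        "gad2": gad2,
        "total": phq2 + gad2,
        "probable_depression": phq2 >= cutoff,
        "probable_anxiety": gad2 >= cutoff,
    }


print(score_phq4(interest=2, down=1, nervous=0, worrying=1))
```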

Non–health care providers completed two additional assessments of psychological distress: the four-item Perceived Stress Scale and the three-item UCLA Loneliness Scale.

As covariates, the researchers included demographic factors, weight, smoking status, marital status, socioeconomic factors, and medical conditions, including diabetes, hypertension, hypercholesterolemia, asthma, and cancer.

For each participant, the investigators calculated the number of types of distress experienced at a high level, including probable depression, probable anxiety, worry about COVID-19, being in the top quartile of perceived stress, and loneliness.
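The distress count described above can be sketched as follows: compute the cohort's top-quartile cutoff for perceived stress, then add up the high-level flags per participant. The field names, the use of Python's `statistics.quantiles` for the 75th percentile, and the treatment of missing stress or loneliness data (assessed only in non-health care workers) as "not high" are all assumptions of this sketch.

```python
from statistics import quantiles


def top_quartile_cutoff(stress_scores: list[float]) -> float:
    """75th percentile of perceived-stress scores across the cohort (needs >= 2 scores)."""
    return quantiles(stress_scores, n=4)[2]


def count_distress_types(p: dict, stress_cutoff: float) -> int:
    """Count how many of the five distress types a participant has at a high level."""
    stress = p.get("perceived_stress")
    flags = [
        p.get("probable_depression", False),
        p.get("probable_anxiety", False),
        p.get("worried_about_covid", False),
        # Stress and loneliness were only assessed in non-health care workers;
        # treating a missing value as "not high" is an assumption.
        stress is not None and stress >= stress_cutoff,
        p.get("lonely", False),
    ]
    return sum(bool(flag) for flag in flags)


cutoff = top_quartile_cutoff([2, 5, 8, 11, 14, 3, 6, 9])  # hypothetical cohort scores
participant = {"probable_depression": True, "worried_about_covid": True,
               "perceived_stress": 11, "lonely": False}
n_types = count_distress_types(participant, cutoff)
print(n_types, "two or more types:", n_types >= 2)
```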

During the 19 months of follow-up (1-47 weeks after baseline), 6% of respondents reported a positive result on a SARS-CoV-2 antibody, antigen, or polymerase chain reaction test.

Of these, 43.9% reported long-COVID conditions, most of whom said their symptoms lasted 2 months or longer; 55.8% of those with long-COVID conditions reported at least occasional impairment in daily life.

The most common post-COVID conditions were fatigue (reported by 56%), loss of smell or taste (44.6%), shortness of breath (25.5%), confusion/disorientation/brain fog (24.5%), and memory issues (21.8%).

Among patients who had been infected, there was a considerably higher rate of preinfection psychological distress after adjusting for sociodemographic factors, health behaviors, and comorbidities. Each type of distress was associated with post-COVID conditions.

In addition, participants who had experienced at least two types of distress prior to infection were at nearly 50% increased risk for post-COVID conditions (risk ratio, 1.49; 95% confidence interval, 1.23-1.80).
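For readers unfamiliar with how a risk ratio and its confidence interval relate, the sketch below computes a crude risk ratio with a Wald interval on the log scale from hypothetical 2x2 counts. The study's 1.49 was adjusted for covariates in a regression model, so this unadjusted calculation illustrates the quantity only and would not reproduce the published estimate; `risk_ratio_ci` is a hypothetical helper.

```python
import math


def risk_ratio_ci(events_exposed: int, n_exposed: int,
                  events_unexposed: int, n_unexposed: int,
                  z: float = 1.96) -> tuple[float, float, float]:
    """Crude risk ratio with a Wald confidence interval on the log scale."""
    risk_exposed = events_exposed / n_exposed
    risk_unexposed = events_unexposed / n_unexposed
    rr = risk_exposed / risk_unexposed
    se_log_rr = math.sqrt(1 / events_exposed - 1 / n_exposed
                          + 1 / events_unexposed - 1 / n_unexposed)
    lower = math.exp(math.log(rr) - z * se_log_rr)
    upper = math.exp(math.log(rr) + z * se_log_rr)
    return rr, lower, upper


# Hypothetical counts: 150/500 with >= 2 distress types vs 200/1,000 without
rr, lo, hi = risk_ratio_ci(150, 500, 200, 1000)
print(f"RR {rr:.2f} (95% CI {lo:.2f}-{hi:.2f})")
```

The interval is symmetric on the log scale, which is why published intervals such as 1.23-1.80 sit asymmetrically around the point estimate.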

Among those with post-COVID conditions, all types of distress were associated with increased risk for daily life impairment (RR range, 1.15-1.51).

Senior author Andrea Roberts, PhD, senior research scientist at the Harvard T. H. Chan School of Public Health, Boston, noted that the investigators did not examine biological mechanisms potentially underlying the association they found.

However, “based on prior research, it may be that inflammation and immune dysregulation related to psychological distress play a role in the association of distress with long COVID, but we can’t be sure,” Dr. Roberts said.

Contributes to the field

Commenting for this article, Yapeng Su, PhD, a postdoctoral researcher at the Fred Hutchinson Cancer Research Center in Seattle, called the study “great work contributing to the long-COVID research field and revealing important connections” with psychological stress prior to infection.

Dr. Su, who was not involved with the study, was previously at the Institute for Systems Biology, also in Seattle, and has written about long COVID.

He noted that the “biological mechanism of such intriguing linkage is definitely the important next step, which will likely require deep phenotyping of biological specimens from these patients longitudinally.”

Dr. Wang pointed to past research suggesting that some patients with mental illness “sometimes develop autoantibodies that have also been associated with increased risk of long COVID.” In addition, depression “affects the brain in ways that may explain certain cognitive symptoms in long COVID,” she added.

More studies are now needed to understand how psychological distress increases the risk for long COVID, said Dr. Wang.

The research was supported by grants from the Eunice Kennedy Shriver National Institute of Child Health and Human Development, the National Institutes of Health, the Dean’s Fund for Scientific Advancement Acceleration Award from the Harvard T. H. Chan School of Public Health, the Massachusetts Consortium on Pathogen Readiness Evergrande COVID-19 Response Fund Award, and the Veterans Affairs Health Services Research and Development Service funds. Dr. Wang and Dr. Roberts have reported no relevant financial relationships. The other investigators’ disclosures are listed in the original article. Dr. Su reports no relevant financial relationships.

A version of this article first appeared on Medscape.com.

In an analysis of almost 55,000 adult participants in three ongoing studies, having depression, anxiety, worry, perceived stress, or loneliness early in the pandemic, before SARS-CoV-2 infection, was associated with a 50% increased risk for developing long COVID. These types of psychological distress were also associated with a 15% to 51% greater risk for impairment in daily life among individuals with long COVID.

Psychological distress was even more strongly associated with developing long COVID than were physical health risk factors, and the increased risk was not explained by health behaviors such as smoking or physical comorbidities, researchers note.

“Our findings suggest the need to consider psychological health in addition to physical health as risk factors of long COVID-19,” lead author Siwen Wang, MD, postdoctoral fellow, department of nutrition, Harvard T. H. Chan School of Public Health, Boston, said in an interview.

“We need to increase public awareness of the importance of mental health and focus on getting mental health care for people who need it, increasing the supply of mental health clinicians and improving access to care,” she said.

The findings were published online in JAMA Psychiatry.

‘Poorly understood’

Postacute sequelae of SARS-CoV-2 (“long COVID”), which are “signs and symptoms consistent with COVID-19 that extend beyond 4 weeks from onset of infection” constitute “an emerging health issue,” the investigators write.

Dr. Wang noted that it has been estimated that 8-23 million Americans have developed long COVID. However, “despite the high prevalence and daily life impairment associated with long COVID, it is still poorly understood, and few risk factors have been established,” she said.

Although psychological distress may be implicated in long COVID, only three previous studies investigated psychological factors as potential contributors, the researchers note. Also, no study has investigated the potential role of other common manifestations of distress that have increased during the pandemic, such as loneliness and perceived stress, they add.

To investigate these issues, the researchers turned to three large ongoing longitudinal studies: the Nurses’ Health Study II (NSHII), the Nurses’ Health study 3 (NHS3), and the Growing Up Today Study (GUTS).

They analyzed data on 54,960 total participants (96.6% women; mean age, 57.5 years). Of the full group, 38% were active health care workers.

Participants completed an online COVID-19 questionnaire from April 2020 to Sept. 1, 2020 (baseline), and monthly surveys thereafter. Beginning in August 2020, surveys were administered quarterly. The end of follow-up was in November 2021.

The COVID questionnaires included questions about positive SARS-CoV-2 test results, COVID symptoms and hospitalization since March 1, 2020, and the presence of long-term COVID symptoms, such as fatigue, respiratory problems, persistent cough, muscle/joint/chest pain, smell/taste problems, confusion/disorientation/brain fog, depression/anxiety/changes in mood, headache, and memory problems.

Participants who reported these post-COVID conditions were asked about the frequency of symptoms and the degree of impairment in daily life.

Inflammation, immune dysregulation implicated?

The Patient Health Questionnaire–4 (PHQ-4) was used to assess for anxiety and depressive symptoms in the past 2 weeks. It consists of a two-item depression measure (PHQ-2) and a two-item Generalized Anxiety Disorder Scale (GAD-2).

Non–health care providers completed two additional assessments of psychological distress: the four-item Perceived Stress Scale and the three-item UCLA Loneliness Scale.

The researchers included demographic factors, weight, smoking status, marital status, and medical conditions, including diabetes, hypertension, hypercholesterolemia, asthma, and cancer, and socioeconomic factors as covariates.

For each participant, the investigators calculated the number of types of distress experienced at a high level, including probable depression, probable anxiety, worry about COVID-19, being in the top quartile of perceived stress, and loneliness.

During the 19 months of follow-up (1-47 weeks after baseline), 6% of respondents reported a positive result on a SARS-CoV-2 antibody, antigen, or polymerase chain reaction test.

Of these, 43.9% reported long-COVID conditions, with most reporting that symptoms lasted 2 months or longer; 55.8% reported at least occasional daily life impairment.

The most common post-COVID conditions were fatigue (reported by 56%), loss of smell or taste problems (44.6%), shortness of breath (25.5%), confusion/disorientation/ brain fog (24.5%), and memory issues (21.8%).

In an analysis of almost 55,000 adult participants in three ongoing studies, depression, anxiety, worry, perceived stress, and loneliness early in the pandemic, before SARS-CoV-2 infection, were each associated with developing long COVID, and having two or more of these types of distress was associated with a nearly 50% increased risk. These types of psychological distress were also associated with a 15% to 51% greater risk for impairment in daily life among individuals with long COVID.

Psychological distress was even more strongly associated with developing long COVID than were physical health risk factors, and the increased risk was not explained by health behaviors such as smoking or physical comorbidities, researchers note.

“Our findings suggest the need to consider psychological health in addition to physical health as risk factors of long COVID-19,” lead author Siwen Wang, MD, postdoctoral fellow, department of nutrition, Harvard T. H. Chan School of Public Health, Boston, said in an interview.

“We need to increase public awareness of the importance of mental health and focus on getting mental health care for people who need it, increasing the supply of mental health clinicians and improving access to care,” she said.

The findings were published online in JAMA Psychiatry.

‘Poorly understood’

Postacute sequelae of SARS-CoV-2 (“long COVID”), which are “signs and symptoms consistent with COVID-19 that extend beyond 4 weeks from onset of infection,” constitute “an emerging health issue,” the investigators write.

Dr. Wang noted that it has been estimated that 8-23 million Americans have developed long COVID. However, “despite the high prevalence and daily life impairment associated with long COVID, it is still poorly understood, and few risk factors have been established,” she said.

Although psychological distress may be implicated in long COVID, only three previous studies investigated psychological factors as potential contributors, the researchers note. Also, no study has investigated the potential role of other common manifestations of distress that have increased during the pandemic, such as loneliness and perceived stress, they add.

To investigate these issues, the researchers turned to three large ongoing longitudinal studies: the Nurses’ Health Study II (NHSII), the Nurses’ Health Study 3 (NHS3), and the Growing Up Today Study (GUTS).

They analyzed data on 54,960 total participants (96.6% women; mean age, 57.5 years). Of the full group, 38% were active health care workers.

Participants completed an online COVID-19 questionnaire from April 2020 to Sept. 1, 2020 (baseline), and monthly surveys thereafter. Beginning in August 2020, surveys were administered quarterly. The end of follow-up was in November 2021.

The COVID questionnaires included questions about positive SARS-CoV-2 test results, COVID symptoms and hospitalization since March 1, 2020, and the presence of long-term COVID symptoms, such as fatigue, respiratory problems, persistent cough, muscle/joint/chest pain, smell/taste problems, confusion/disorientation/brain fog, depression/anxiety/changes in mood, headache, and memory problems.

Participants who reported these post-COVID conditions were asked about the frequency of symptoms and the degree of impairment in daily life.

Inflammation, immune dysregulation implicated?

The Patient Health Questionnaire–4 (PHQ-4) was used to assess for anxiety and depressive symptoms in the past 2 weeks. It consists of a two-item depression measure (PHQ-2) and a two-item Generalized Anxiety Disorder Scale (GAD-2).

Non–health care providers completed two additional assessments of psychological distress: the four-item Perceived Stress Scale and the three-item UCLA Loneliness Scale.

As covariates, the researchers included demographic and socioeconomic factors, weight, smoking status, marital status, and medical conditions, including diabetes, hypertension, hypercholesterolemia, asthma, and cancer.

For each participant, the investigators calculated the number of types of distress experienced at a high level, including probable depression, probable anxiety, worry about COVID-19, being in the top quartile of perceived stress, and loneliness.
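As a rough illustration of how such a distress count works, the sketch below tallies how many of the five distress types a participant reports at a high level and flags those with two or more. It is not the investigators’ code, and several details are assumptions: the field names are hypothetical, a PHQ-2 or GAD-2 subscale score of 3 or more (the commonly used screening cutoff) is taken to mean probable depression or anxiety, worry and loneliness are treated as simple yes/no flags, and the toy scores are made up.

```python
from dataclasses import dataclass
from statistics import quantiles

# Hypothetical record layout: the article does not describe the
# study's actual variable names or how each item was coded.
@dataclass
class Participant:
    phq2: int            # PHQ-2 depression subscale score, 0-6
    gad2: int            # GAD-2 anxiety subscale score, 0-6
    worried_covid: bool  # high worry about COVID-19 (assumed yes/no)
    pss4: int            # 4-item Perceived Stress Scale score, 0-16
    lonely: bool         # high loneliness (assumed yes/no)


# Assumed cutoff: a subscale score of 3 or more is the commonly used
# screening threshold for probable depression (PHQ-2) or anxiety (GAD-2).
SCREEN_CUTOFF = 3


def stress_top_quartile_threshold(cohort):
    """Return the 75th-percentile perceived-stress score in the cohort."""
    # quantiles(..., n=4) returns the three quartile cut points.
    return quantiles([p.pss4 for p in cohort], n=4)[2]


def distress_count(p, stress_threshold):
    """Count how many of the five distress types are present at a high level."""
    flags = [
        p.phq2 >= SCREEN_CUTOFF,      # probable depression
        p.gad2 >= SCREEN_CUTOFF,      # probable anxiety
        p.worried_covid,              # worry about COVID-19
        p.pss4 >= stress_threshold,   # top quartile of perceived stress (ties counted in)
        p.lonely,                     # loneliness
    ]
    return sum(flags)


# Toy cohort with made-up scores, for illustration only.
cohort = [
    Participant(phq2=4, gad2=1, worried_covid=True, pss4=12, lonely=False),
    Participant(phq2=0, gad2=0, worried_covid=False, pss4=2, lonely=False),
    Participant(phq2=1, gad2=3, worried_covid=False, pss4=6, lonely=True),
    Participant(phq2=2, gad2=2, worried_covid=False, pss4=9, lonely=False),
]
threshold = stress_top_quartile_threshold(cohort)
counts = [distress_count(p, threshold) for p in cohort]
# Two or more distress types: the exposure the study linked to RR 1.49.
two_or_more = [c >= 2 for c in counts]
print(counts)       # [3, 0, 2, 0]
print(two_or_more)  # [True, False, True, False]
```

Counting types rather than summing raw scores mirrors the article’s description of “the number of types of distress experienced at a high level”; how the study handled ties or missing items is not reported.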

During the 19-month study period, 6% of respondents reported a positive result on a SARS-CoV-2 antibody, antigen, or polymerase chain reaction test, 1-47 weeks after baseline.

Of these, 43.9% reported long-COVID conditions, with most reporting that symptoms lasted 2 months or longer; 55.8% of those with long-COVID conditions reported at least occasional daily life impairment.

The most common post-COVID conditions were fatigue (reported by 56%), problems with smell or taste (44.6%), shortness of breath (25.5%), confusion/disorientation/brain fog (24.5%), and memory issues (21.8%).

Among patients who had been infected, there was a considerably higher rate of preinfection psychological distress after adjusting for sociodemographic factors, health behaviors, and comorbidities. Each type of distress was associated with post-COVID conditions.

In addition, participants who had experienced at least two types of distress prior to infection were at nearly 50% increased risk for post-COVID conditions (risk ratio, 1.49; 95% confidence interval, 1.23-1.80).

Among those with post-COVID conditions, all types of distress were associated with increased risk for daily life impairment (RR range, 1.15-1.51).

Senior author Andrea Roberts, PhD, senior research scientist at the Harvard T. H. Chan School of Public Health, Boston, noted that the investigators did not examine biological mechanisms potentially underlying the association they found.

However, “based on prior research, it may be that inflammation and immune dysregulation related to psychological distress play a role in the association of distress with long COVID, but we can’t be sure,” Dr. Roberts said.

Contributes to the field

Commenting for this article, Yapeng Su, PhD, a postdoctoral researcher at the Fred Hutchinson Cancer Research Center in Seattle, called the study “great work contributing to the long-COVID research field and revealing important connections” with psychological stress prior to infection.

Dr. Su, who was not involved with the study, was previously at the Institute for Systems Biology, also in Seattle, and has written about long COVID.

He noted that the “biological mechanism of such intriguing linkage is definitely the important next step, which will likely require deep phenotyping of biological specimens from these patients longitudinally.”

Dr. Wang pointed to past research suggesting that some patients with mental illness “sometimes develop autoantibodies that have also been associated with increased risk of long COVID.” In addition, depression “affects the brain in ways that may explain certain cognitive symptoms in long COVID,” she added.

More studies are now needed to understand how psychological distress increases the risk for long COVID, said Dr. Wang.

The research was supported by grants from the Eunice Kennedy Shriver National Institute of Child Health and Human Development, the National Institutes of Health, the Dean’s Fund for Scientific Advancement Acceleration Award from the Harvard T. H. Chan School of Public Health, the Massachusetts Consortium on Pathogen Readiness Evergrande COVID-19 Response Fund Award, and the Veterans Affairs Health Services Research and Development Service funds. Dr. Wang and Dr. Roberts have reported no relevant financial relationships. The other investigators’ disclosures are listed in the original article. Dr. Su reports no relevant financial relationships.

A version of this article first appeared on Medscape.com.

FROM JAMA PSYCHIATRY

A ‘big breakfast’ diet affects hunger, not weight loss

Eating a bigger breakfast may reduce hunger later in the day but does not lead to greater weight loss than eating a bigger dinner, according to a study from the University of Aberdeen published in Cell Metabolism. The idea that ‘front-loading’ calories early in the day might help dieting attempts was based on the belief that consuming the bulk of daily calories in the morning optimizes weight loss by burning calories more efficiently and quickly.

“There are a lot of myths surrounding the timing of eating and how it might influence either body weight or health,” said senior author Alexandra Johnstone, PhD, a researcher at the Rowett Institute, University of Aberdeen, who specializes in appetite control. “This has been driven largely by the circadian rhythm field. But we in the nutrition field have wondered how this could be possible. Where would the energy go? We decided to take a closer look at how time of day interacts with metabolism.”

Her team undertook a randomized crossover trial of 30 overweight and obese subjects recruited via social media ads. Participants – 16 men and 14 women – had a mean age of 51 years and a body mass index of 27-42 kg/m² but were otherwise healthy. The researchers compared two calorie-restricted but isoenergetic weight loss diets: a morning-loaded diet, with 45% of intake at breakfast, 35% at lunch, and 20% at dinner; and an evening-loaded diet, with the reverse proportions of 20%, 35%, and 45% at breakfast, lunch, and dinner, respectively.
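To make the “isoenergetic” design concrete, the short sketch below splits one daily calorie target according to the two meal patterns described above. The 1,600 kcal figure is purely hypothetical; in the trial, each participant’s intake was individualized from his or her measured resting metabolic rate.

```python
# Meal-by-meal shares of daily intake for the two diets, as described above.
MORNING_LOADED = {"breakfast": 0.45, "lunch": 0.35, "dinner": 0.20}
EVENING_LOADED = {"breakfast": 0.20, "lunch": 0.35, "dinner": 0.45}


def meal_plan(daily_kcal, shares):
    """Split a fixed daily calorie target across meals, rounded to whole kcal."""
    return {meal: round(daily_kcal * share) for meal, share in shares.items()}


# Hypothetical target: the trial set intake per person from measured
# resting metabolic rate, not from a single shared figure.
daily_kcal = 1600
morning = meal_plan(daily_kcal, MORNING_LOADED)
evening = meal_plan(daily_kcal, EVENING_LOADED)

print(morning)  # {'breakfast': 720, 'lunch': 560, 'dinner': 320}
print(evening)  # {'breakfast': 320, 'lunch': 560, 'dinner': 720}

# Same daily total on both diets: only the timing differs (isoenergetic).
assert sum(morning.values()) == sum(evening.values()) == daily_kcal
```

Because the daily total is identical, any difference in weight loss between the two diets would have to come from timing alone, which is the question the trial was designed to test.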

Each diet was followed for 4 weeks. A controlled baseline diet, with calories balanced across the day, was provided for 1 week at the outset and again during a 1-week washout period between the two intervention diets. Each person’s calorie intake was fixed relative to their individually measured resting metabolic rate, so that the effect of meal timing on weight loss and energy expenditure could be assessed under isoenergetic intake. Both diets were designed to provide the same nutrient composition of 30% protein, 35% carbohydrate, and 35% fat.

All food and beverages were provided, “making this the most rigorously controlled study to assess timing of eating in humans to date,” the team said, “with the aim of accounting for all aspects of energy balance.”

No optimum time to eat for weight loss

Results showed that both diets resulted in significant weight reduction at the end of each dietary intervention period, with subjects losing an average of just over 3 kg during each of the 4-week periods. However, there was no difference in weight loss between the morning-loaded and evening-loaded diets.

The relative size of breakfast and dinner – whether a person eats the largest meal early or late in the day – does not have an impact on metabolism, the team said. This challenges previous studies that have suggested that “evening eaters” – now a majority of the U.K. population – have a greater likelihood of gaining weight and greater difficulty in losing it.

“Participants were provided with all their meals for 8 weeks and their energy expenditure and body composition monitored for changes, using gold standard techniques at the Rowett Institute,” Dr. Johnstone said. “The same number of calories was consumed by volunteers at different times of the day, with energy expenditure measures using analysis of urine.

“This study is important because it challenges the previously held belief that eating at different times of the day leads to differential energy expenditure. The research shows that under weight loss conditions there is no optimum time to eat in order to manage weight, and that change in body weight is determined by energy balance.”

Meal timing reduces hunger but does not affect weight loss

However, the research also revealed that when subjects consumed the morning-loaded (big breakfast) diet, they reported feeling significantly less hungry later in the day. “Morning-loaded intake may assist with compliance to weight loss regime, through a greater suppression of appetite,” the authors said, adding that this “could foster easier weight loss in the real world.”

“The participants reported that their appetites were better controlled on the days they ate a bigger breakfast and that they felt satiated throughout the rest of the day,” Dr. Johnstone said.

“We know that appetite control is important to achieve weight loss, and our study suggests that those consuming the most calories in the morning felt less hungry, in contrast to when they consumed more calories in the evening period.

“This could be quite useful in the real-world environment, versus in the research setting that we were working in.”

‘Major finding’ for chrono-nutrition

Coauthor Jonathan Johnston, PhD, professor of chronobiology and integrative physiology at the University of Surrey, said: “This is a major finding for the field of meal timing (‘chrono-nutrition’) research. Many aspects of human biology change across the day and we are starting to understand how this interacts with food intake.

“Our new research shows that, in weight loss conditions, the size of breakfast and dinner regulates our appetite but not the total amount of energy that our bodies use,” Dr. Johnston said. “We plan to build upon this research to improve the health of the general population and specific groups, e.g., shift workers.”