Prevalence and Management of Veterans with Advanced Solid Tumors Harboring NTRK Gene Rearrangements

Background

Oncogenic fusions within neurotrophic tyrosine receptor kinase (NTRK) 1, 2, or 3 drive constitutive, hyperproliferative activity of tropomyosin receptor kinase (TRK) A, B, and C, respectively. Two TRK inhibitors have been approved for patients with advanced solid tumors bearing oncogenic fusions in NTRK1-3. We sought to describe the prevalence of NTRK fusions and rearrangements and to evaluate treatment outcomes among veterans treated with TRK inhibitors.

Methods

Patients with NTRK1-3 gene fusions or rearrangements were identified from the VA National Precision Oncology Program (NPOP) database. Separately, patients with orders for larotrectinib or entrectinib were identified from the Corporate Data Warehouse (CDW), and associated patient demographics and vital status were obtained. The prevalence of NTRK1-3 gene fusions and rearrangements was computed for all patients who had testing within NPOP. For patients who received either larotrectinib or entrectinib, duration of drug use, tumor response, reasons for drug discontinuation, and toxicities were abstracted from medical records. For patients not treated with either drug, medical records were used to identify why the drug was not used.

Results

Among 14,515 samples sequenced through NPOP (11,714 tissue DNA, 176 tissue DNA/RNA, and 2,625 liquid biopsy DNA tests), 14 (0.096%) had NTRK1-3 gene fusions or rearrangements (6 canonical fusions, 2 non-canonical fusions, and 6 non-fusion gene rearrangements). Two patients tested outside of NPOP had canonical fusions. Among the 16 patients, 5 had prostate, 4 lung, 2 thyroid, 2 sarcoma, 1 bladder, 1 gastric, and 1 colorectal cancer. Twelve patients had metastatic disease, and 4 had early-stage disease. Eight patients were prescribed a TRK inhibitor (larotrectinib 5, entrectinib 3). Median duration of treatment was 59 (29-88) days. No responses were observed in the 7 evaluable patients. One patient developed neurotoxicity requiring temporary cessation of larotrectinib, and one patient treated with entrectinib developed volume overload requiring hospitalization, which led to drug discontinuation.
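The prevalence figure above follows directly from the reported counts. As a minimal sketch of that arithmetic (the function and variable names are illustrative, not taken from the study), the three test types can be summed and the 14 positive samples expressed as a percentage of the total:

```python
# Counts reported in the Results paragraph above.
tissue_dna = 11_714
tissue_dna_rna = 176
liquid_biopsy_dna = 2_625
ntrk_positive = 14


def prevalence_percent(positives: int, total: int) -> float:
    """Return positives / total expressed as a percentage."""
    if total <= 0:
        raise ValueError("total must be positive")
    return 100.0 * positives / total


total_samples = tissue_dna + tissue_dna_rna + liquid_biopsy_dna
prevalence = prevalence_percent(ntrk_positive, total_samples)

print(total_samples)         # 14515
print(round(prevalence, 3))  # 0.096
```

The component counts sum to the stated 14,515 samples, and 14 of 14,515 rounds to the reported 0.096%.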

Conclusion

Among veterans tested in NPOP, oncogenic NTRK fusions and rearrangements are very uncommon, and no patient had a response to treatment with a TRK inhibitor. Reconsideration of NTRK1-3 testing methodology and recommendations is warranted.

Factors Associated with Survival and Epidemiology of Gastrointestinal Neuroendocrine Tumors in the US Department of Veteran Affairs

Introduction

Rectal carcinoid tumors are rare but are the second most common carcinoid of the gastrointestinal tract. They are usually found incidentally during endoscopic or rectal examination. They do not often produce carcinoid syndrome-like manifestations, although they may present with rectal bleeding. Patients with rectal carcinoid also have a higher risk of other cancers, such as stomach, small intestine, or secondary lung cancer.

Methods

We retrospectively explored factors associated with survival in Veterans with rectal carcinoid tumors over a ten-year period from 2007 to 2017. Cases were drawn from the National Veterans Affairs Cancer Cube Registry using specific histological ICD-O-3 coding. We identified 1110 cases of rectal carcinoid. Chi-squared tests were used for statistical analysis.

Results

In our cohort, 2.61% of patients were in the 40-50 age group, 14.0% in the 50-60 age group, 41.5% in the 60-70 age group, and 40.7% were older than 70. Most cases were stage 1 at diagnosis (86.3%). The majority of diagnoses occurred after age 50 (89.8%). A higher proportion of rectal carcinoid was identified in the 60-70 age group than in patients younger than 60 or older than 70. In the general VA population, 80.2% of patients are White and 12.8% are Black. We found a higher proportion of rectal carcinoid in Black patients (47.8%) than in White patients (42.8%, p=0.02), which differs significantly from the racial makeup of the VA population (12.8% Black vs 80.3% White). With respect to survival by age at diagnosis, 82.7% of patients diagnosed at ages 50-60 survived longer than 5 years, compared with 68.7% of those diagnosed at ages 60-70 (p<0.001).

Conclusions

Our data are consistent with SEER data in that the incidence and prevalence of rectal carcinoid are higher in Black patients than in White patients. Further analysis of the reasons for this racial disparity may improve our understanding of this malignancy in the Veteran population. Further research is needed to determine whether diagnosis at a younger age offers a survival advantage in rectal carcinoid.

Methods of Identifying Real World mCRPC Patients from the Veterans Health Administration System

Purpose

Prostate cancer is the fifth leading cause of death in the United States. Genomic testing is essential to guide treatment decisions in patients with metastatic castration-resistant prostate cancer (mCRPC), the most advanced stage of prostate cancer. However, identifying mCRPC patients from administrative data is challenging and hinders researchers’ ability to assess testing among these patients. This study aims to develop algorithms that use structured data, and unstructured data processed with natural language processing (NLP) methods, to identify veterans by disease stage and hormone sensitivity, and to assess patient characteristics as well as receipt of tumor next-generation sequencing (NGS) testing.

Methods

We used biopsy, pathology, and diagnosis codes to identify veterans with newly diagnosed prostate cancer (PC) within the Veterans Health Administration (VA) from January 1, 2017 to December 31, 2020. We developed and deployed (1) a structured algorithm that used medication and prostate-specific antigen (PSA) data to assess hormone sensitivity, and (2) NLP tools to extract disease stage and hormone sensitivity from clinical notes. We report descriptive statistics on patient demographics, clinical characteristics, disease status, androgen deprivation therapy (ADT), and receipt of tumor NGS testing.
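The abstract does not give the rule behind the structured algorithm, so the following is only a hypothetical sketch of how medication and PSA data might be combined to flag castration resistance. It assumes a patient is flagged when, after ADT starts, a PSA value rises above the running nadir by at least 25% and at least 2 ng/mL. The class, function, parameter names, and both thresholds are illustrative assumptions, not the study's definitions.

```python
from dataclasses import dataclass
from datetime import date
from typing import Iterable


@dataclass(frozen=True)
class PsaResult:
    drawn_on: date
    value: float  # ng/mL


def is_castration_resistant(
    psa_results: Iterable[PsaResult],
    adt_start: date,
    min_rise_fraction: float = 0.25,  # assumed threshold, not from the abstract
    min_absolute_rise: float = 2.0,   # assumed threshold (ng/mL), not from the abstract
) -> bool:
    """Hypothetical rule: PSA rises above its on-ADT nadir despite treatment."""
    # Only PSA values drawn on or after the ADT start date are considered.
    on_adt = sorted(
        (r for r in psa_results if r.drawn_on >= adt_start),
        key=lambda r: r.drawn_on,
    )
    if not on_adt:
        return False

    nadir = on_adt[0].value
    for result in on_adt[1:]:
        if result.value < nadir:
            nadir = result.value  # new lowest value while on ADT
        elif (
            result.value - nadir >= min_absolute_rise
            and result.value >= nadir * (1 + min_rise_fraction)
        ):
            return True
    return False


history = [
    PsaResult(date(2020, 1, 1), 10.0),  # before ADT, ignored
    PsaResult(date(2020, 3, 1), 1.0),
    PsaResult(date(2020, 6, 1), 0.5),
    PsaResult(date(2020, 9, 1), 3.0),   # rise of 2.5 ng/mL above the nadir of 0.5
]
print(is_castration_resistant(history, adt_start=date(2020, 1, 15)))
```

A production rule would also need to handle gaps in ADT coverage and confirmatory PSA measurements, which this sketch leaves out.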

Results

There were 42,485 veterans with newly diagnosed prostate cancer between 2017 and 2020. This represented ~0.18% of veterans served by the VA and consisted of White (57%), Black (33%), and other (10%) patients. During the study period, 3,113 (7.3%) patients had documentation of assessment for intraductal carcinoma, 5,160 (12.1%) had ADT treatment, 1,481 (3.5%) had CRPC, and 3,246 (7.6%) had metastatic disease. Among the 42,485 veterans, 422 received tumor NGS testing within VA, and 300 of them had metastatic disease. Together, the NLP tools and the structured data algorithm showed that 38% of the 422 tumor NGS testing recipients had mCRPC. Among all newly diagnosed PC patients, White patients had the highest rate of tumor-based testing (2.3%), followed by Native Hawaiian (1.7%), Asian and Black (1.2% each), and Native American patients (0.4%).

Implications

NLP tools alongside structured data algorithms successfully identified variables required to measure access to tumor NGS testing. Efforts to validate and apply this method are ongoing to assess receipt of precision prostate cancer care in VA.

Diagnosis of Prostate Cancer and Prostate-specific Antigen Level on Initial Prostate Biopsy: Does Race Matter?

Objective

To determine whether Black Veterans are at higher risk for prostate cancer diagnosis on their first prostate biopsy compared to non-Hispanic White (White) Veterans.

Background

Prostate-specific antigen (PSA) testing is widely used to screen for prostate cancer. Although men of African ancestry display an increased incidence of prostate cancer and more aggressive disease, specific PSA thresholds for biopsy referral have yet to be proposed for this population.

Methods

We used the VHA’s electronic medical record data to collect Veterans’ demographic and clinical characteristics including self-identified race/ethnicity, age, date of first prostate biopsy, PSA results, and prostate cancer diagnosis. Veterans’ ZIP code of residence was used to determine urban/rural status, income, and education. We estimated multivariable logistic regression models to predict the likelihood of prostate cancer diagnosis on the first biopsy using race, baseline PSA, age at first PSA test, age at initial biopsy, smoking status, use of statins, and socioeconomic factors as predictors. We calculated adjusted predicted probabilities of cancer detection on the first prostate biopsy from the logistic models at different PSA levels.

Results

We identified 246,056 White and 71,653 Black Veterans who underwent their first prostate biopsy through February 28, 2020 and who had no prostate cancer diagnosis or treatment prior to that biopsy. Black Veterans appeared to receive their first PSA test four years earlier and undergo their first prostate biopsy two years earlier than their White counterparts (median age of 57 vs. 61 and 63 vs. 65, respectively). After controlling for selected covariates, we found that Black Veterans had 52% higher odds of being diagnosed with prostate cancer on their first prostate biopsy compared to White Veterans (OR 1.52, 95% CI 1.49-1.55). Our model indicated that a Black Veteran with a PSA of 4.0 ng/mL has the same risk of prostate cancer detection as a White Veteran with a PSA of 9.7 ng/mL.
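To illustrate how an adjusted odds ratio can translate into an "equivalent PSA" statement like the one above, here is a simplified sketch. It is not the authors' fitted model: it assumes PSA enters the logistic model linearly, ignores every other covariate, and uses a hypothetical intercept. Under those assumptions the PSA slope is implied by the reported odds ratio and the two reported PSA values.

```python
import math


def logistic(x: float) -> float:
    """Standard logistic function mapping log-odds to a probability."""
    return 1.0 / (1.0 + math.exp(-x))


def odds(p: float) -> float:
    return p / (1.0 - p)


# Values reported in the Results paragraph above.
OR_BLACK_VS_WHITE = 1.52
PSA_BLACK = 4.0  # ng/mL
PSA_WHITE = 9.7  # ng/mL

beta_race = math.log(OR_BLACK_VS_WHITE)
# Assumption: PSA has a linear effect on the log-odds. The slope below is the
# one implied by the reported equivalence, not an estimate from the study.
beta_psa = beta_race / (PSA_WHITE - PSA_BLACK)
INTERCEPT = -1.0  # hypothetical value, chosen only for illustration


def predicted_probability(psa: float, is_black: bool) -> float:
    linear_predictor = INTERCEPT + beta_psa * psa + beta_race * int(is_black)
    return logistic(linear_predictor)


p_black = predicted_probability(PSA_BLACK, is_black=True)
p_white = predicted_probability(PSA_WHITE, is_black=False)
print(math.isclose(p_black, p_white))  # True under these assumptions

# At the same PSA, the ratio of odds recovers the reported odds ratio.
same_psa_ratio = odds(predicted_probability(4.0, True)) / odds(
    predicted_probability(4.0, False)
)
print(round(same_psa_ratio, 2))  # 1.52
```

The equality holds for any intercept, because the race coefficient is offset exactly by the PSA difference of 5.7 ng/mL multiplied by the implied slope.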

Implications

Our findings suggest that developing a risk-based PSA threshold for referral to prostate biopsy may lead to earlier diagnosis of clinically significant prostate cancer in a population of Veterans known to have an increased incidence and risk of aggressive disease.

Racial Disparities in Treatment and Survival for Early-Stage Non-Small Cell Lung Cancer: Is Equal Access Health Care System the Answer?

Background

Survival for early-stage non-small cell lung cancer (NSCLC) has dramatically improved with advances in surgical and radiation techniques over the last two decades, but recent studies of the general US population show a disparity, with African Americans (AA) having worse overall survival (OS). We studied this racial disparity in the Veteran population.

Methods

Data for 2,589 AA and 14,184 Caucasian Veterans diagnosed with early-stage (I, II) NSCLC between 2011 and 2017 were obtained from the Veterans Affairs Cancer Cube Registry (VACCR). IRB approval was obtained.

Results

The distribution of newly diagnosed cases of Stage I (73.92% AA vs 74.71% Caucasians) and Stage II (26.07% vs 25.29%) between the two races was comparable (p = .41). More Caucasians than AA were diagnosed above the age of 60 (92.22% vs 84.51%, p < .05). More AA than Caucasians had adenocarcinoma at diagnosis (56.01% vs 45.88%, p < .05) for both Stage I and II disease. For the limited number of Veterans with reported performance status (PS), a similar proportion of patients in the two races had a good PS, defined as ECOG 0-2 (93.70% AA vs 93.97% Caucasians, p = .73). There was no statistically significant difference in 5-year OS between AA and Caucasians (69.81% vs 70.78%, p = .33) for both Stage I and II NSCLC. Both groups had a similar rate of receipt of surgery as first-line treatment or in combination with other treatments (58.90% AA vs 59.07% Caucasians, p = .90). Similarly, the rate of receiving radiation therapy was comparable between AA and Caucasians (42.4% vs 42.3%, p = .96). Although both races showed improved 5-year OS after surgery, there was no statistically significant difference in survival benefit between AA and Caucasians (69.8% vs 70.8%, p = .33).

Conclusion

In contrast to studies assessing general US population trends, there was no racial disparity in 5-year OS for early-stage NSCLC in the Veteran population. This points to inequities in access to treatment and preventive healthcare services as a possible contributor to the increased mortality among AA in the general US population, and suggests more equitable healthcare delivery within the VHA system.

Background

Survival for early-stage non-small cell lung cancer (NSCLC) has dramatically improved with advancement in surgical and radiation techniques over last two decades but there exists a disparity for African Americans (AA) having worse overall survival (OS) in recent studies on the general US population. We studied this racial disparity in Veteran population.

Methods

Data for 2589 AA and 14184 Caucasian Veterans diagnosed with early-stage (I, II) NSCLC between 2011-2017 was obtained from the Cancer Cube Registry (VACCR). IRB approval was obtained.

Results

The distribution of newly diagnosed cases of Stage I (73.92% AA vs 74.71% Caucasians) and Stage II (26.07% vs 25.29%) between the two races was comparable (p = .41). More Caucasians were diagnosed above the age of 60 compared to AA (92.22% vs 84.51%, p < .05). More AA were diagnosed with adenocarcinoma at diagnosis (56.01% vs 45.88% Caucasians, p < .05) for both Stage I and II disease. For the limited number of Veterans with reported performance status (PS), similar proportion of patients had a good PS defined as ECOG 0-2 among the two races (93.70% AA vs 93.97% Caucasians, p = .73). There was no statistically significant difference between 5-year OS for AA and Caucasians (69.81% vs 70.78%, p = .33) for both Stage I and II NSCLC. Both groups had similar rate of receipt of surgery as first line treatment or in combination with other treatments (58.90% AA vs 59.07% Caucasians, p = .90). Similarly, the rate of receiving radiation therapy was comparable between AA and Caucasians (42.4% vs 42.3%, p = .96). Although both races showed improved 5-year OS after surgery, there was no statistical difference in survival benefit between AA and Caucasians (69.8% vs 70.8%, p = .33).

Conclusion

In contrast to studies of general US population trends, we found no racial disparity in 5-year OS for early-stage NSCLC in the Veteran population. This finding points to inequities in access to treatment and preventive health care services as a possible contributing cause of the increased mortality among AA in the general US population, and it suggests more equitable health care delivery within the VHA system.

Survival Analysis of Untreated Early-Stage Non-Small Cell Lung Cancer (NSCLC) in a Veteran Population

Introduction

Veterans with early-stage NSCLC who do not receive any form of treatment have been shown to have worse overall survival than those who receive treatment. Factors that may influence the decision to administer treatment, including age, performance status (PS), comorbidities, and racial disparity, have not been assessed on a national level in recent years.

Methods

Data for 31,966 Veterans diagnosed with early-stage (0, I) NSCLC between 2003 and 2017 were obtained from the Cancer Cube Registry (VACCR). IRB approval was obtained.

Results

Patients were divided into a treatment group (26,833/31,966, 83.16%) and a no-treatment group (3096/31,966, 9.68%). In the no-treatment group, 3004 patients were stage I and 92 were stage 0, whereas in the treatment group the distribution was 26,584 and 249, respectively. Gender, race, and histology distributions were comparable between the two groups. Patients with poor PS (defined as ECOG III and IV) were less likely to receive treatment with any modality than those with good PS (ECOG I and II) (15.07% in the no-treatment group vs 4.03% in the treatment group, p<0.05). The treatment group had better 5-year overall survival (OS) than the no-treatment group (43.1% vs 14.7%, p<0.05). Regardless of treatment, patients above the age of 60 (41% vs 13.4%, p<0.05) and those with poor PS (19.6% vs 5.8%, p<0.05) had worse 5-year survival, with the effect being greater in the treatment group. Adenocarcinoma had better 5-year survival than squamous cell carcinoma (SCC) in both groups (49.56% vs 39.1%, p<0.05). There was no clinically significant difference in OS by race (Caucasian or African American) or tumor location (upper, middle, or lower lobe) between the two groups. Our study was limited by the lack of patient-level data, including smoking status and the reason no treatment was given.

Conclusion

Patients with early-stage NSCLC who receive no treatment based on poor PS have worse overall survival than patients who receive treatment. Further investigation is required to assess what other criteria are used to decide treatment eligibility and whether these patients would be candidates for immunotherapy or targeted therapy in the future.

Atopic Dermatitis Oral Therapies: What Are Patients Learning on YouTube?

To the Editor:

Oral immunosuppressive therapies are prescribed for moderate to severe atopic dermatitis. Patients often consult YouTube to make informed decisions about these therapies. In the United States, most health-related online searches are initiated through a search engine, which frequently leads to social media sites such as YouTube. Recent studies have examined the reasons why users turn to the Internet for health-related information, indicating that users typically seek specific information regarding health concerns.1,2 Furthermore, social media platforms such as YouTube are a popular means of sharing health information with the public.3-5 Currently, YouTube has more than 1 billion registered users, and 30 million health-related videos are watched each day.6 Almost one-third of US consumers use YouTube, Facebook, and Twitter to obtain medical information.7 YouTube is a versatile tool because of its video-discovery mechanisms such as a keyword-based search engine, video-recommendation system, highlight feature for videos on home pages, and the capacity to embed YouTube videos on various web pages.8 Searchers use videos that are short, fast paced, emotion evoking, from credible sources, recently uploaded, and relevant to the searcher for aiding in health decisions.9 Furthermore, studies have demonstrated YouTube’s capacity to support a change in attitude and increase users’ knowledge. In fact, YouTube had higher impact on recall, attitudes, and behaviors when compared with written materials on other social media platforms, such as Facebook and Twitter.9 We conducted a cross-sectional study to examine the quality of YouTube videos on oral therapies for atopic dermatitis, such as cyclosporine, methotrexate, azathioprine, and mycophenolate mofetil.

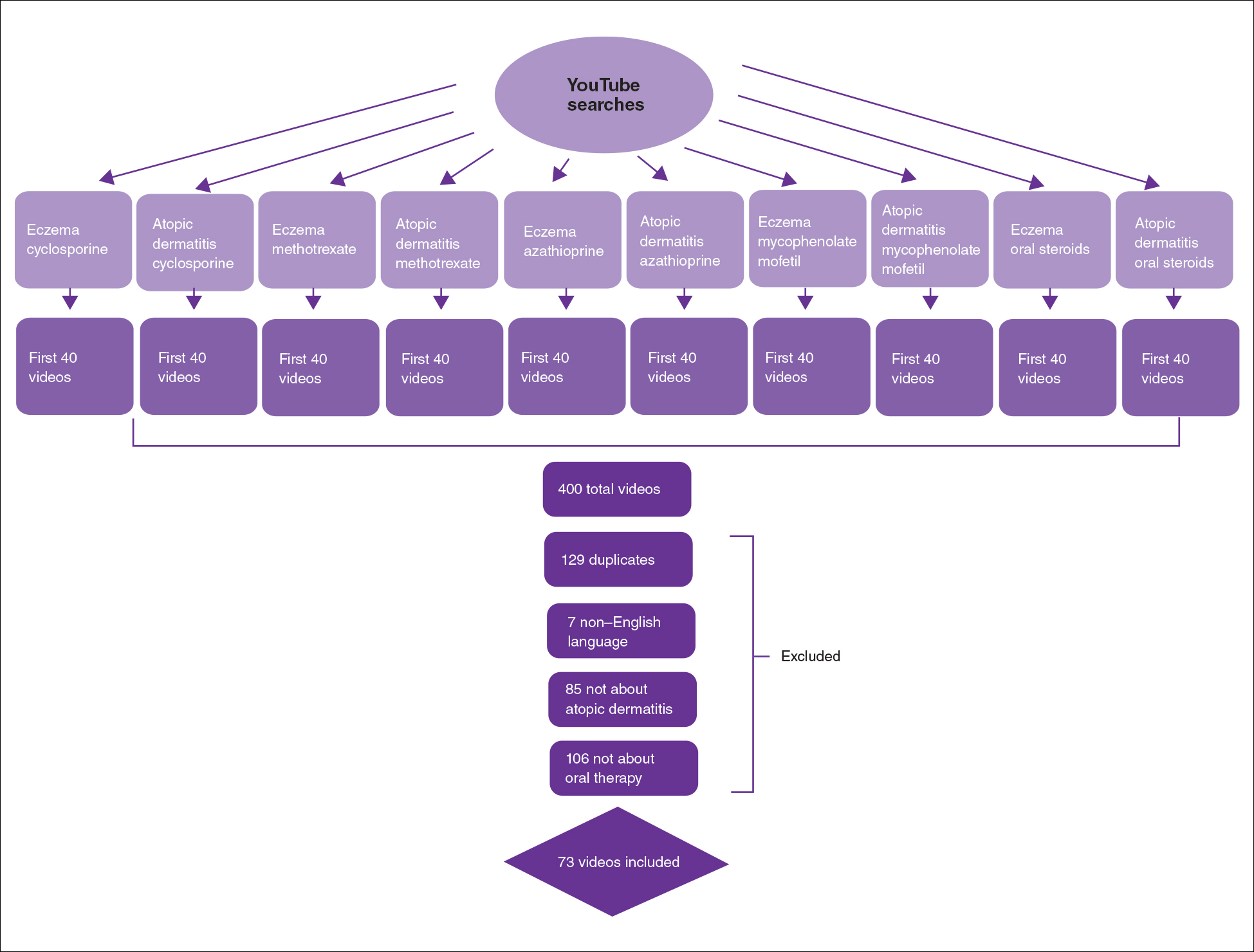

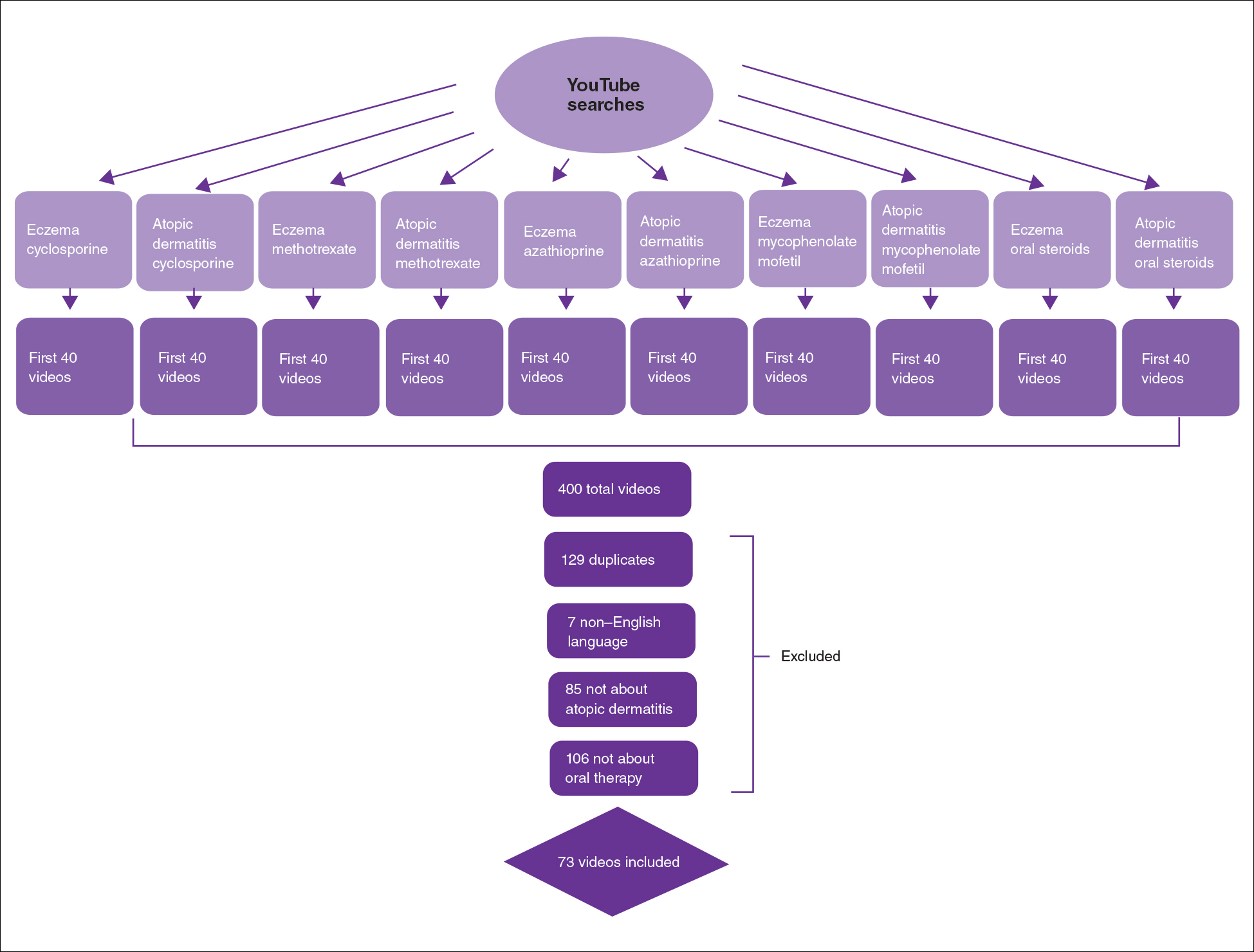

On April 23, 2020, we performed 8 searches using a private browser with default filters on YouTube (Figure). Injectables were not included in the analysis, as the YouTube experience on dupilumab previously has been investigated.10 The top 40 videos from each search were screened by 3 researchers. Duplicates, non–English-language videos, and videos that did not discuss atopic dermatitis or oral therapies were excluded, resulting in 73 videos included in this analysis. Testimonials generated by patients made up 39 of 73 (53.4%) videos. Health care professionals created 23 of 73 (31.5%) videos, and educators with financial interest created 11 of 73 (15.1%) videos. The dates of production for the videos spanned from 2008 to 2020.

The major topics addressed in the videos were symptomatic changes (63 [68.8% of all topics discussed]), adverse effects (52 [67.5%]), and quality-of-life changes (37 [48.1%]). Of the videos included, the majority (42/73 [57.5%]) contained a neutral tone about the medication, citing advantages and disadvantages with therapy, while 22 of 73 (30.1%) had an encouraging tone, and 9 of 73 (12.3%) had a discouraging tone. Regarding videos with positive tones, there were 17 videos on cyclosporine, 9 on azathioprine, 7 on methotrexate, 4 on oral steroids, and 2 on mycophenolate mofetil. Regarding videos with negative tones, there were 4 on cyclosporine, 3 on azathioprine, 2 on methotrexate, and 2 on mycophenolate mofetil.

Of the videos made with financial interest, the majority (28/34 [77.8%]) were more suitable for informing health care providers rather than patients, containing jargon as well as complex information on clinical trials, dosing, and mechanisms of action. From the videos discussing clinical recommendations, there were 9 of 73 (12.3%) Grade A recommendations (eg, citing evidence-based information and clinical trials) and 64 of 73 (87.7%) Grade B recommendations (eg, anecdotal information on patient experience). Thirty-seven of 73 (50.7%) videos were evidence based, and 36 of 73 (49.3%) were non–evidence based. Six videos were patient-oriented news broadcasts.

Patient-generated testimonials had the most views (mean, 9238.4) and the highest interaction ratio (the sum of likes, dislikes, and comments divided by the number of views) (mean, 0.027), while health care provider–generated videos had fewer views (mean, 9218.7) and a lower interaction ratio (mean, 0.011). Financial-based videos had 4233.4 views on average, with an average interaction ratio of 0.014. Based on these results, biased, patient-generated content comprised more than 50% of YouTube videos about oral therapies for atopic dermatitis and was quite likely to be engaged with by users. Thus, these patient testimonials have great potential to affect decision-making.
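As a small worked illustration of the interaction ratio defined above, the calculation for a single video might look like the following sketch. The function name and the example counts are hypothetical and are not taken from the study data.

```python
def interaction_ratio(likes: int, dislikes: int, comments: int, views: int) -> float:
    """Sum of likes, dislikes, and comments divided by the number of views."""
    if views <= 0:
        raise ValueError("views must be positive")
    return (likes + dislikes + comments) / views


# Hypothetical video: 200 likes, 10 dislikes, 40 comments, 10,000 views.
ratio = interaction_ratio(likes=200, dislikes=10, comments=40, views=10_000)
print(f"{ratio:.3f}")  # 250 / 10,000 = 0.025
```

Averaging this ratio over all videos in a category would give a group mean of the kind reported for each category above.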

The high number of patient-generated videos about oral therapies was consistent with prior studies of YouTube videos about therapies for numerous conditions.11-13 Dermatologists should consider utilizing YouTube for providing evidence-based, patient-oriented information about novel therapeutics. They may consider collaborating with patients to assist with their creation of YouTube videos and directing patients to credible resources by the American Academy of Dermatology and Canadian Dermatology Association for decision-making.

Importantly, this analysis is limited by its lack of quality-assessment tools for video-based resources such as JAMA score and DISCERN score.14,15 However, these metrics have limited ability to evaluate audiovisual elements, indicating the need for novel tools to score their validity.

1. Fox S, Duggan M. Health online 2013. January 15, 2013. Accessed August 15, 2021. https://www.pewresearch.org/internet/2013/01/15/health-online-2013/

2. Ní Ríordáin R, McCreary C. Dental patients’ use of the Internet. Br Dent J. 2009;207:583-586, 575.

3. Fergie G, Hilton S, Hunt K. Young adults’ experiences of seeking online information about diabetes and mental health in the age of social media. Health Expect. 2016;19:1324-1335.

4. Antheunis ML, Tates K, Nieboer TE. Patients’ and health professionals’ use of social media in health care: motives, barriers and expectations. Patient Educ Couns. 2013;92:426-431.

5. McGregor F, Somner JE, Bourne RR, et al. Social media use by patients with glaucoma: what can we learn? Ophthalmic Physiol Opt. 2014;34:46-52.

6. YouTube Statistics—15 Amazing Stats for 2015. Published April 30, 2015. Accessed August 27, 2021. YouTube.com/watch?v=9ZLBSPzY7GQ

7. Health Research Institute. Social media “likes” healthcare: from marketing to social business. April 2012. Accessed August 15, 2021. https://www.pwc.com/us/en/health-industries/health-research-institute/publications/pdf/health-care-social-media-report.pdf

8. Zhou R, Khemmarat S, Gao L, et al. How YouTube videos are discovered and its impact on videos views. Multimed Tools Appl. 2016;75:6035-6058.

9. Haslam K, Doucette H, Hachey S, et al. YouTube videos as health decision aids for the public: an integrative review. Can J Dent Hyg. 2019;53:53-66.

10. Pithadia D, Reynolds K, Lee E, et al. Dupilumab for atopic dermatitis: what are patients learning on YouTube [published online ahead of print April 16, 2020]? J Dermatolog Treat. doi: 10.1080/09546634.2020.1755418

11. Tolu S, Yurdakul OV, Basaran B, et al. English-language videos on YouTube as a source of information on self-administer subcutaneous anti-tumour necrosis factor agent injections. Rheumatol Int. 2018;38:1285-1292.

12. Reynolds KA, Pithadia DJ, Lee EB, et al. A cross-sectional study of YouTube videos about psoriasis biologics. Int J Dermatol. 2019;58:E61-E62.

13. Kocyigit BF, Akaltun MS. Does YouTube provide high quality information? assessment of secukinumab videos. Rheumatol Int. 2019;39:1263-1268.

14. Qi J, Trang T, Doong J, et al. Misinformation is prevalent in psoriasis-related YouTube videos. Dermatol Online J. 2016;22:13030/qt7qc9z2m5

15. Gokcen HB, Gumussuyu G. A quality analysis of disc herniation videos on YouTube. World Neurosurg. 2019;124:E799-E804.

To the Editor:

Oral immunosuppressive therapies are prescribed for moderate to severe atopic dermatitis. Patients often consult YouTube to make informed decisions about these therapies. In the United States, most health-related online searches are initiated through a search engine, which frequently leads to social media sites such as YouTube. Recent studies have examined the reasons why users turn to the Internet for health-related information, indicating that users typically seek specific information regarding health concerns.1,2 Furthermore, social media platforms such as YouTube are a popular means of sharing health information with the public.3-5 Currently, YouTube has more than 1 billion registered users, and 30 million health-related videos are watched each day.6 Almost one-third of US consumers use YouTube, Facebook, and Twitter to obtain medical information.7 YouTube is a versatile tool because of its video-discovery mechanisms such as a keyword-based search engine, video-recommendation system, highlight feature for videos on home pages, and the capacity to embed YouTube videos on various web pages.8 Searchers use videos that are short, fast paced, emotion evoking, from credible sources, recently uploaded, and relevant to the searcher for aiding in health decisions.9 Furthermore, studies have demonstrated YouTube’s capacity to support a change in attitude and increase users’ knowledge. In fact, YouTube had higher impact on recall, attitudes, and behaviors when compared with written materials on other social media platforms, such as Facebook and Twitter.9 We conducted a cross-sectional study to examine the quality of YouTube videos on oral therapies for atopic dermatitis, such as cyclosporine, methotrexate, azathioprine, and mycophenolate mofetil.

On April 23, 2020, we performed 8 searches using a private browser with default filters on YouTube (Figure). Injectables were not included in the analysis, as the YouTube experience on dupilumab previously has been investigated.10 The top 40 videos from each search were screened by 3 researchers. Duplicates, non–English-language videos, and videos that did not discuss atopic dermatitis or oral therapies were excluded, resulting in 73 videos included in this analysis. Testimonials generated by patients made up 39 of 73 (53.4%) videos. Health care professionals created 23 of 73 (31.5%) videos, and educators with financial interest created 11 of 73 (15.1%) videos. The dates of production for the videos spanned from 2008 to 2020.

The major topics addressed in the videos were symptomatic changes (63 [68.8% of all topics discussed]), adverse effects (52 [67.5%]), and quality-of-life changes (37 [48.1%]). Of the videos included, the majority (42/73 [57.5%]) contained a neutral tone about the medication, citing advantages and disadvantages with therapy, while 22 of 73 (30.1%) had an encouraging tone, and 9 of 73 (12.3%) had a discouraging tone. Regarding videos with positive tones, there were 17 videos on cyclosporine, 9 on azathioprine, 7 on methotrexate, 4 on oral steroids, and 2 on mycophenolate mofetil. Regarding videos with negative tones, there were 4 on cyclosporine, 3 on azathioprine, 2 on methotrexate, and 2 on mycophenolate mofetil.

Of the videos made with financial interest, the majority (28/34 [77.8%]) were more suitable for informing health care providers rather than patients, containing jargon as well as complex information on clinical trials, dosing, and mechanisms of action. From the videos discussing clinical recommendations, there were 9 of 73 (12.3%) Grade A recommendations (eg, citing evidence-based information and clinical trials) and 64 of 73 (87.7%) Grade B recommendations (eg, anecdotal information on patient experience). Thirty-seven of 73 (50.7%) videos were evidence based, and 36 of 73 (49.3%) were non–evidence based. Six videos were patient-oriented news broadcasts.

Patient-generated testimonials had the most views (mean, 9238.4) and highest interaction ratio (the sum of likes, dislikes, and comments divided by the number of views)(mean, 0.027), while health care provider–generated videos had fewer views (mean, 9218.7) and a lower interaction ratio (mean, 0.011). Financial-based videos had 4233.4 views on average, with an average interaction ratio of 0.014. Based on these results, biased, patient-generated content comprised greater than 50% of YouTube videos about oral therapies for atopic dermatitis and was quite likely to be engaged with by users. Thus, these patient testimonials have great potential to affect decision-making.

The high number of patient-generated videos about oral therapies was consistent with prior studies of YouTube videos about therapies for numerous conditions.11-13 Dermatologists should consider utilizing YouTube for providing evidence-based, patient-oriented information about novel therapeutics. They may consider collaborating with patients to assist with their creation of YouTube videos and directing patients to credible resources by the American Academy of Dermatology and Canadian Dermatology Association for decision-making.

Importantly, this analysis is limited by its lack of quality-assessment tools for video-based resources such as JAMA score and DISCERN score.14,15 However, these metrics have limited ability to evaluate audiovisual elements, indicating the need for novel tools to score their validity.

To the Editor:

Oral immunosuppressive therapies are prescribed for moderate to severe atopic dermatitis. Patients often consult YouTube to make informed decisions about these therapies. In the United States, most health-related online searches are initiated through a search engine, which frequently leads to social media sites such as YouTube. Recent studies have examined the reasons why users turn to the Internet for health-related information, indicating that users typically seek specific information regarding health concerns.1,2 Furthermore, social media platforms such as YouTube are a popular means of sharing health information with the public.3-5 Currently, YouTube has more than 1 billion registered users, and 30 million health-related videos are watched each day.6 Almost one-third of US consumers use YouTube, Facebook, and Twitter to obtain medical information.7 YouTube is a versatile tool because of its video-discovery mechanisms such as a keyword-based search engine, video-recommendation system, highlight feature for videos on home pages, and the capacity to embed YouTube videos on various web pages.8 Searchers use videos that are short, fast paced, emotion evoking, from credible sources, recently uploaded, and relevant to the searcher for aiding in health decisions.9 Furthermore, studies have demonstrated YouTube’s capacity to support a change in attitude and increase users’ knowledge. In fact, YouTube had higher impact on recall, attitudes, and behaviors when compared with written materials on other social media platforms, such as Facebook and Twitter.9 We conducted a cross-sectional study to examine the quality of YouTube videos on oral therapies for atopic dermatitis, such as cyclosporine, methotrexate, azathioprine, and mycophenolate mofetil.

On April 23, 2020, we performed 8 searches using a private browser with default filters on YouTube (Figure). Injectables were not included in the analysis, as the YouTube experience on dupilumab previously has been investigated.10 The top 40 videos from each search were screened by 3 researchers. Duplicates, non–English-language videos, and videos that did not discuss atopic dermatitis or oral therapies were excluded, resulting in 73 videos included in this analysis. Testimonials generated by patients made up 39 of 73 (53.4%) videos. Health care professionals created 23 of 73 (31.5%) videos, and educators with financial interest created 11 of 73 (15.1%) videos. The dates of production for the videos spanned from 2008 to 2020.

The major topics addressed in the videos were symptomatic changes (63 [68.8% of all topics discussed]), adverse effects (52 [67.5%]), and quality-of-life changes (37 [48.1%]). Of the videos included, the majority (42/73 [57.5%]) contained a neutral tone about the medication, citing advantages and disadvantages with therapy, while 22 of 73 (30.1%) had an encouraging tone, and 9 of 73 (12.3%) had a discouraging tone. Regarding videos with positive tones, there were 17 videos on cyclosporine, 9 on azathioprine, 7 on methotrexate, 4 on oral steroids, and 2 on mycophenolate mofetil. Regarding videos with negative tones, there were 4 on cyclosporine, 3 on azathioprine, 2 on methotrexate, and 2 on mycophenolate mofetil.

Of the videos made with financial interest, the majority (28/34 [77.8%]) were more suitable for informing health care providers rather than patients, containing jargon as well as complex information on clinical trials, dosing, and mechanisms of action. From the videos discussing clinical recommendations, there were 9 of 73 (12.3%) Grade A recommendations (eg, citing evidence-based information and clinical trials) and 64 of 73 (87.7%) Grade B recommendations (eg, anecdotal information on patient experience). Thirty-seven of 73 (50.7%) videos were evidence based, and 36 of 73 (49.3%) were non–evidence based. Six videos were patient-oriented news broadcasts.

Patient-generated testimonials had the most views (mean, 9238.4) and highest interaction ratio (the sum of likes, dislikes, and comments divided by the number of views)(mean, 0.027), while health care provider–generated videos had fewer views (mean, 9218.7) and a lower interaction ratio (mean, 0.011). Financial-based videos had 4233.4 views on average, with an average interaction ratio of 0.014. Based on these results, biased, patient-generated content comprised greater than 50% of YouTube videos about oral therapies for atopic dermatitis and was quite likely to be engaged with by users. Thus, these patient testimonials have great potential to affect decision-making.

The high number of patient-generated videos about oral therapies was consistent with prior studies of YouTube videos about therapies for numerous conditions.11-13 Dermatologists should consider utilizing YouTube for providing evidence-based, patient-oriented information about novel therapeutics. They may consider collaborating with patients to assist with their creation of YouTube videos and directing patients to credible resources by the American Academy of Dermatology and Canadian Dermatology Association for decision-making.

Importantly, this analysis is limited by its lack of quality-assessment tools for video-based resources such as JAMA score and DISCERN score.14,15 However, these metrics have limited ability to evaluate audiovisual elements, indicating the need for novel tools to score their validity.

- Fox S, Duggan M. Health online 2013. January 15, 2013. Accessed August 15, 2021. https://www.pewresearch.org/internet/2013/01/15/health-online-2013/

- Ní Ríordáin R, McCreary C. Dental patients’ use of the Internet. Br Dent J. 2009;207:583-586, 575.

- Fergie G, Hilton S, Hunt K. Young adults’ experiences of seeking online information about diabetes and mental health in the age of social media. Health Expect. 2016;19:1324-1335.

- Antheunis ML, Tates K, Nieboer TE. Patients’ and health professionals’ use of social media in health care: motives, barriers and expectations. Patient Educ Couns. 2013;92:426-431.

- McGregor F, Somner JE, Bourne RR, et al. Social media use by patients with glaucoma: what can we learn? Ophthalmic Physiol Opt. 2014;34:46-52.

- YouTube Statistics—15 Amazing Stats for 2015. Published April 30, 2015. Accessed August 27, 2021. YouTube.com/watch?v=9ZLBSPzY7GQ

- Health Research Institute. Social media “likes” healthcare: from marketing to social business. April 2012. Accessed August 15, 2021. https://www.pwc.com/us/en/health-industries/health-research-institute/publications/pdf/health-care-social-media-report.pdf

- Zhou R, Khemmarat S, Gao L, et al. How YouTube videos are discovered and its impact on videos views. Multimed Tools Appl. 2016;75:6035-6058.

- Haslam K, Doucette H, Hachey S, et al. YouTube videos as health decision aids for the public: an integrative review. Can J Dent Hyg. 2019;53:53-66.

- Pithadia D, Reynolds K, Lee E, et al. Dupilumab for atopic dermatitis: what are patients learning on YouTube [published online ahead of print April 16,2020]? J Dermatolog Treat. doi: 10.1080/09546634.2020.1755418

- Tolu S, Yurdakul OV, Basaran B, et al. English-language videos on YouTube as a source of information on self-administer subcutaneous anti-tumour necrosis factor agent injections. Rheumatol Int. 2018;38:1285-1292.

- Reynolds KA, Pithadia DJ, Lee EB, et al. A cross-sectional study of YouTube videos about psoriasis biologics. Int J Dermatol. 2019;58:E61-E62.

- Kocyigit BF, Akaltun MS. Does YouTube provide high quality information? assessment of secukinumab videos. Rheumatol Int. 2019;39:1263-1268.

- Qi J, Trang T, Doong J, et al. Misinformation is prevalent in psoriasis-related YouTube videos. Dermatol Online J. 2016;22:13030/qt7qc9z2m5

- Gokcen HB, Gumussuyu G. A quality analysis of disc herniation videos on YouTube. World Neurosurg. 2019;124:E799-E804.

- Fox S, Duggan M. Health online 2013. January 15, 2013. Accessed August 15, 2021. https://www.pewresearch.org/internet/2013/01/15/health-online-2013/

- Ní Ríordáin R, McCreary C. Dental patients’ use of the Internet. Br Dent J. 2009;207:583-586, 575.

- Fergie G, Hilton S, Hunt K. Young adults’ experiences of seeking online information about diabetes and mental health in the age of social media. Health Expect. 2016;19:1324-1335.

- Antheunis ML, Tates K, Nieboer TE. Patients’ and health professionals’ use of social media in health care: motives, barriers and expectations. Patient Educ Couns. 2013;92:426-431.

- McGregor F, Somner JE, Bourne RR, et al. Social media use by patients with glaucoma: what can we learn? Ophthalmic Physiol Opt. 2014;34:46-52.

- YouTube Statistics—15 Amazing Stats for 2015. Published April 30, 2015. Accessed August 27, 2021. YouTube.com/watch?v=9ZLBSPzY7GQ

- Health Research Institute. Social media “likes” healthcare: from marketing to social business. April 2012. Accessed August 15, 2021. https://www.pwc.com/us/en/health-industries/health-research-institute/publications/pdf/health-care-social-media-report.pdf

- Zhou R, Khemmarat S, Gao L, et al. How YouTube videos are discovered and its impact on videos views. Multimed Tools Appl. 2016;75:6035-6058.

- Haslam K, Doucette H, Hachey S, et al. YouTube videos as health decision aids for the public: an integrative review. Can J Dent Hyg. 2019;53:53-66.

- Pithadia D, Reynolds K, Lee E, et al. Dupilumab for atopic dermatitis: what are patients learning on YouTube? [published online ahead of print April 16, 2020]. J Dermatolog Treat. doi:10.1080/09546634.2020.1755418

- Tolu S, Yurdakul OV, Basaran B, et al. English-language videos on YouTube as a source of information on self-administer subcutaneous anti-tumour necrosis factor agent injections. Rheumatol Int. 2018;38:1285-1292.

Practice Points

- Patient-based YouTube videos comprised the majority of videos on oral therapies for atopic dermatitis, with the greatest views and interaction ratio.

- Most YouTube videos on this topic contained a neutral tone and Grade B recommendations, thus meriting production of more evidence-based videos in collaboration with patients on the YouTube platform.

Atopic Dermatitis Topical Therapies: Study of YouTube Videos as a Source of Patient Information

To the Editor:

Atopic dermatitis (eczema) affects approximately 20% of children worldwide.1 In atopic dermatitis management, patient education is crucial for optimal outcomes.2 The COVID-19 pandemic has impacted patient-physician interactions. To ensure safety of patients and physicians, visits may have been canceled, postponed, or conducted virtually, leaving less time for discussion and questions.3 As a consequence, patients may seek information about atopic dermatitis from alternative sources, including YouTube videos. We performed a cross-sectional study to analyze YouTube videos about topical treatments for atopic dermatitis.

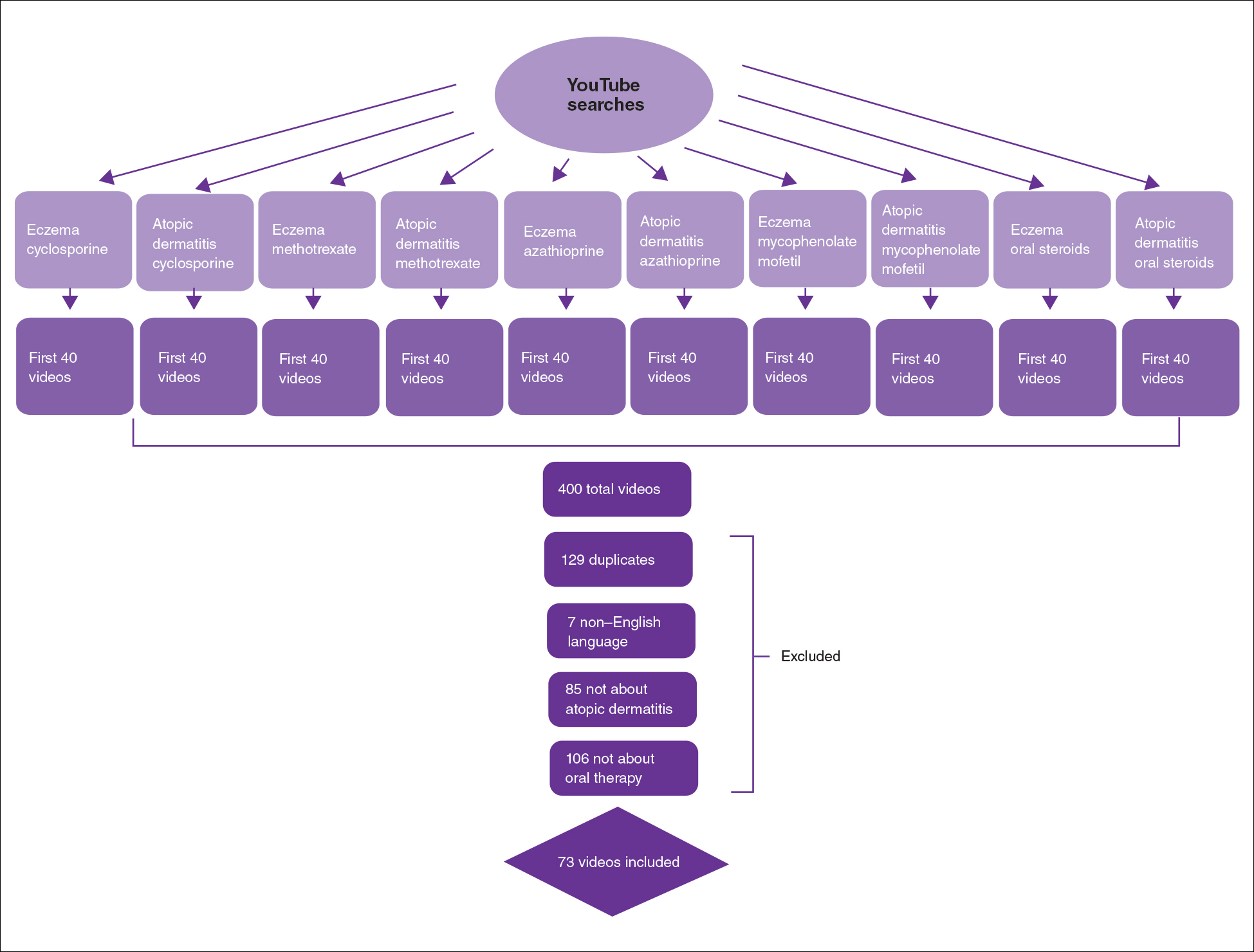

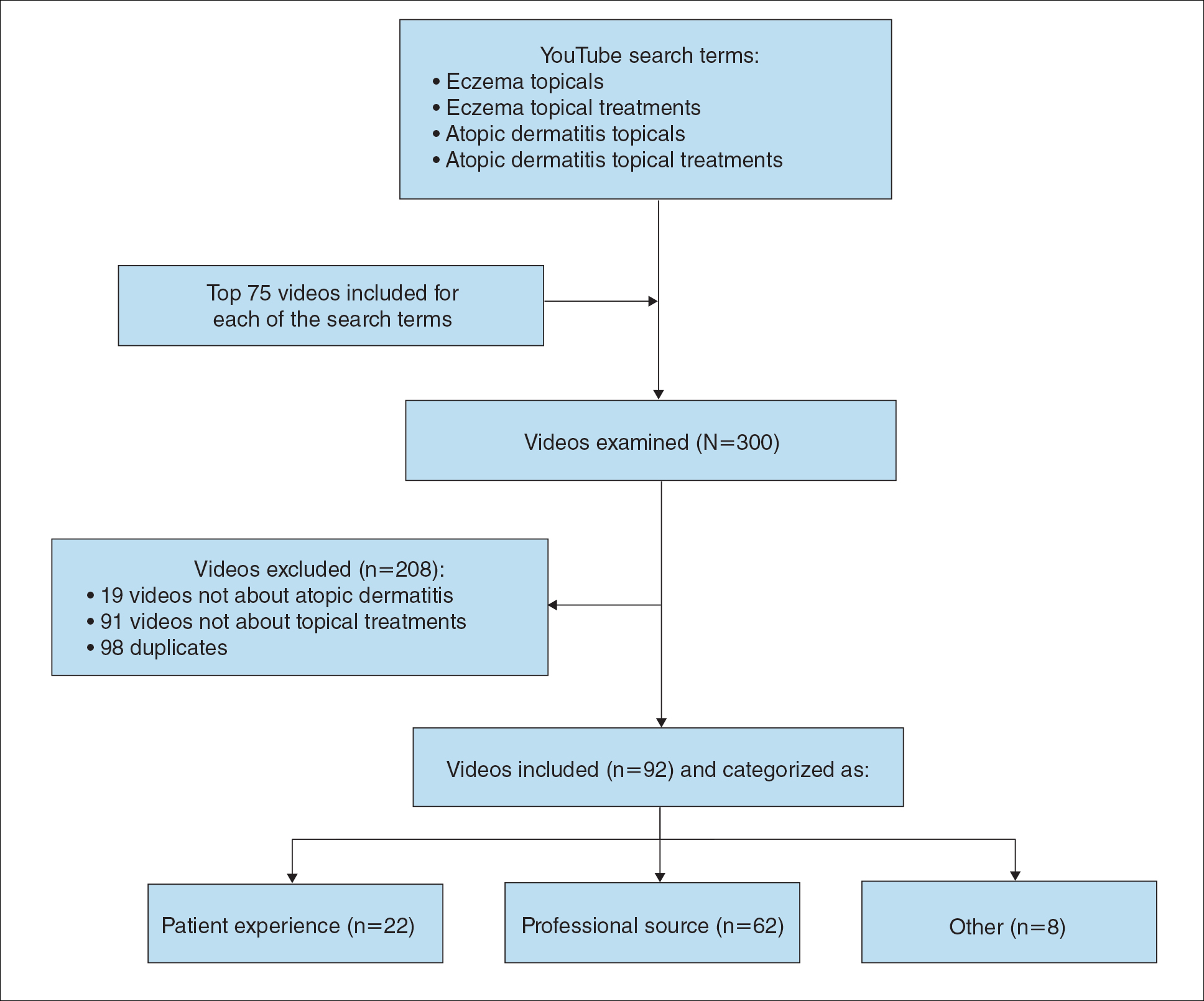

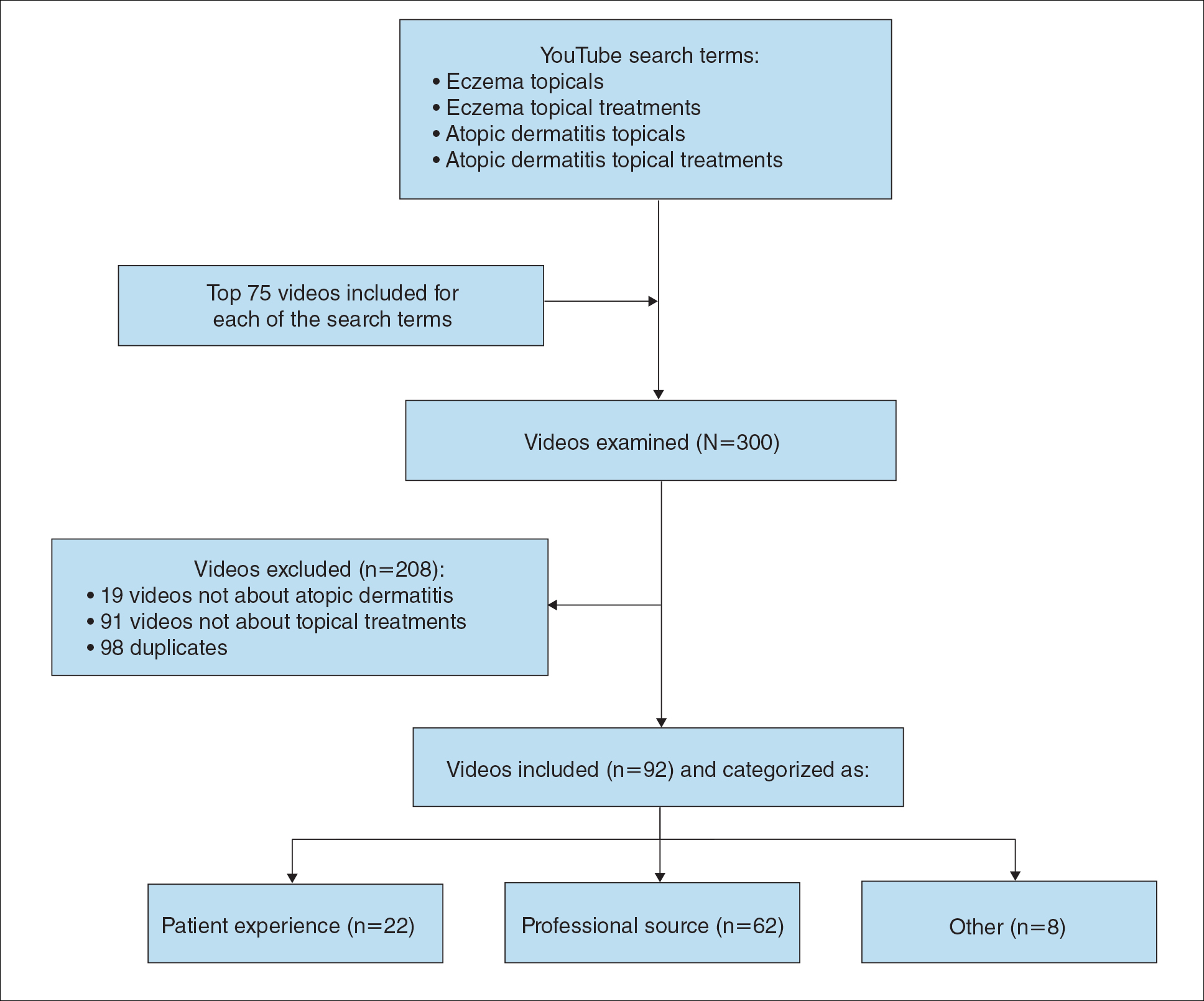

During the week of July 16, 2020, we performed 4 private browser YouTube searches with default filters using the following terms: eczema topicals, eczema topical treatments, atopic dermatitis topicals, and atopic dermatitis topical treatments. For video selection, we defined topical treatments as topical corticosteroids, topical calcineurin inhibitors, crisaborole, emollients, wet wraps, and any prospective treatment topically administered. For each of the 4 searches, 2 researchers (A.M. and A.T.) independently examined the top 75 videos, yielding a total of 300 videos. Of them, 98 videos were duplicates, 19 videos were not about atopic dermatitis, and 91 videos were not about topical treatments, leaving a total of 92 videos for analysis (Figure 1).
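The screening arithmetic above can be checked with a short sketch. The counts are those reported in the text; the variable names are illustrative and not part of the study.

```python
# Screening counts as reported in the text; names are illustrative only.
searches = 4
videos_per_search = 75
identified = searches * videos_per_search  # videos examined across all searches

exclusions = {
    "duplicate": 98,
    "not about atopic dermatitis": 19,
    "not about topical treatments": 91,
}

excluded = sum(exclusions.values())
included = identified - excluded
print(f"identified={identified}, excluded={excluded}, included={included}")
```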

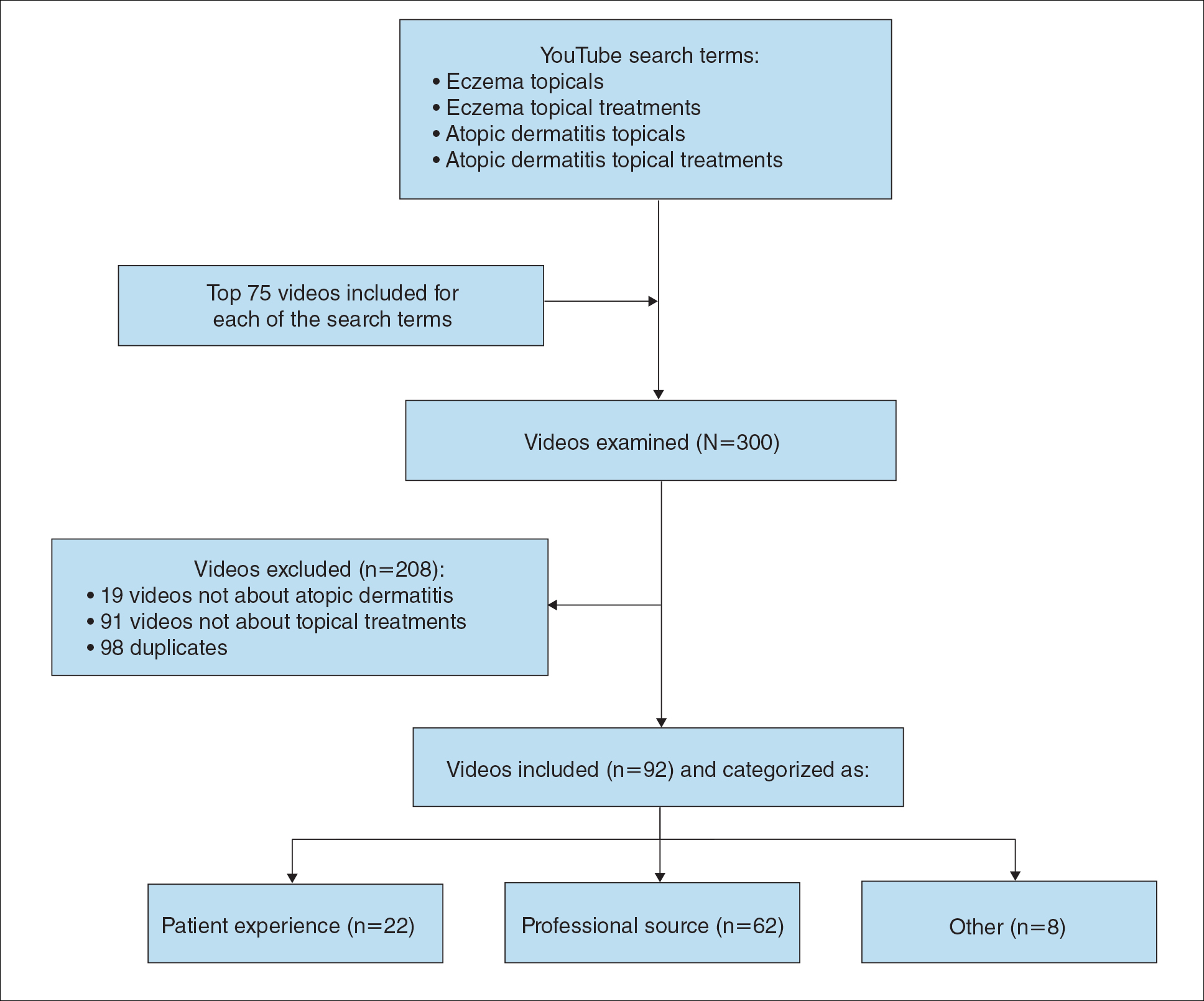

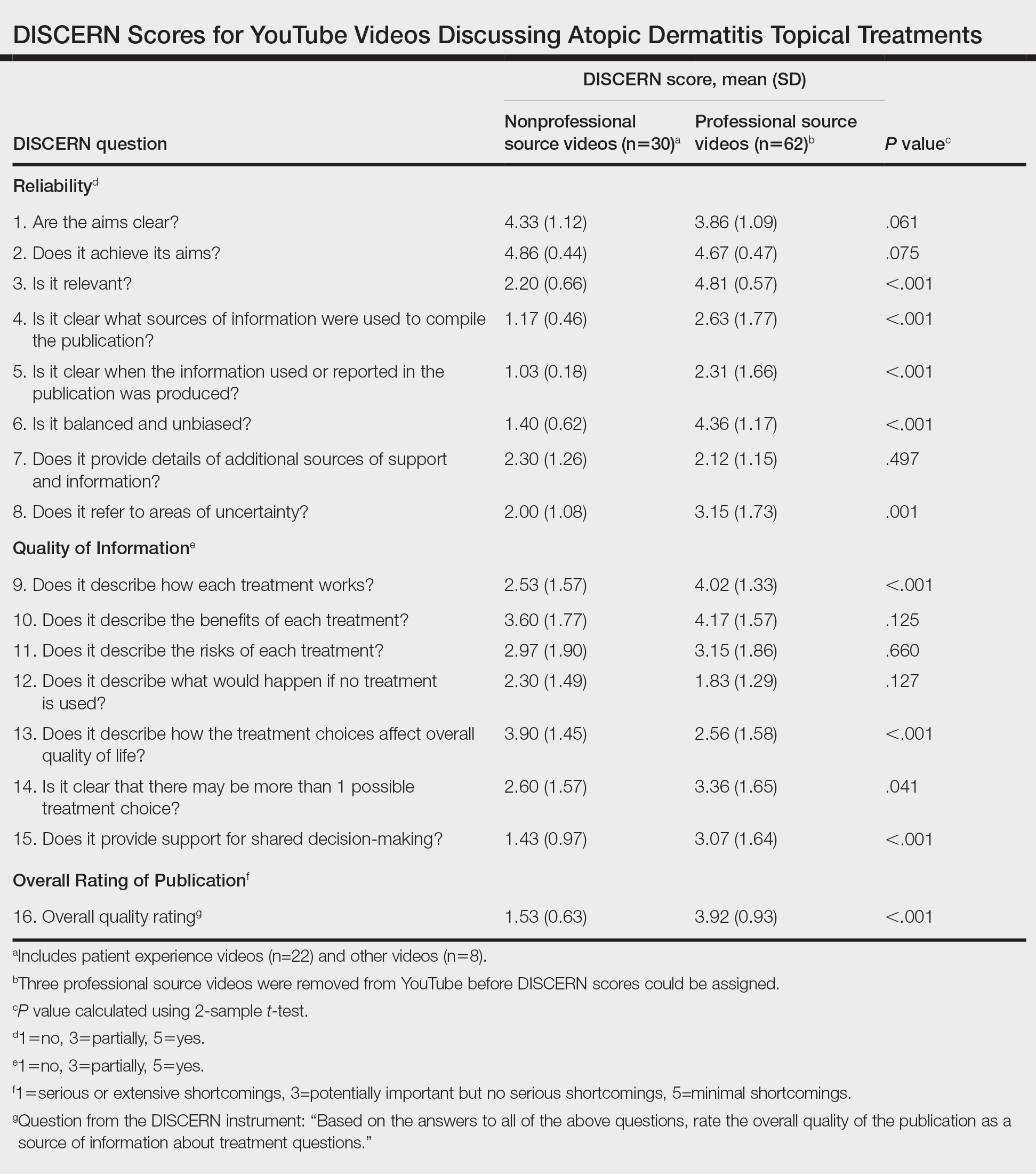

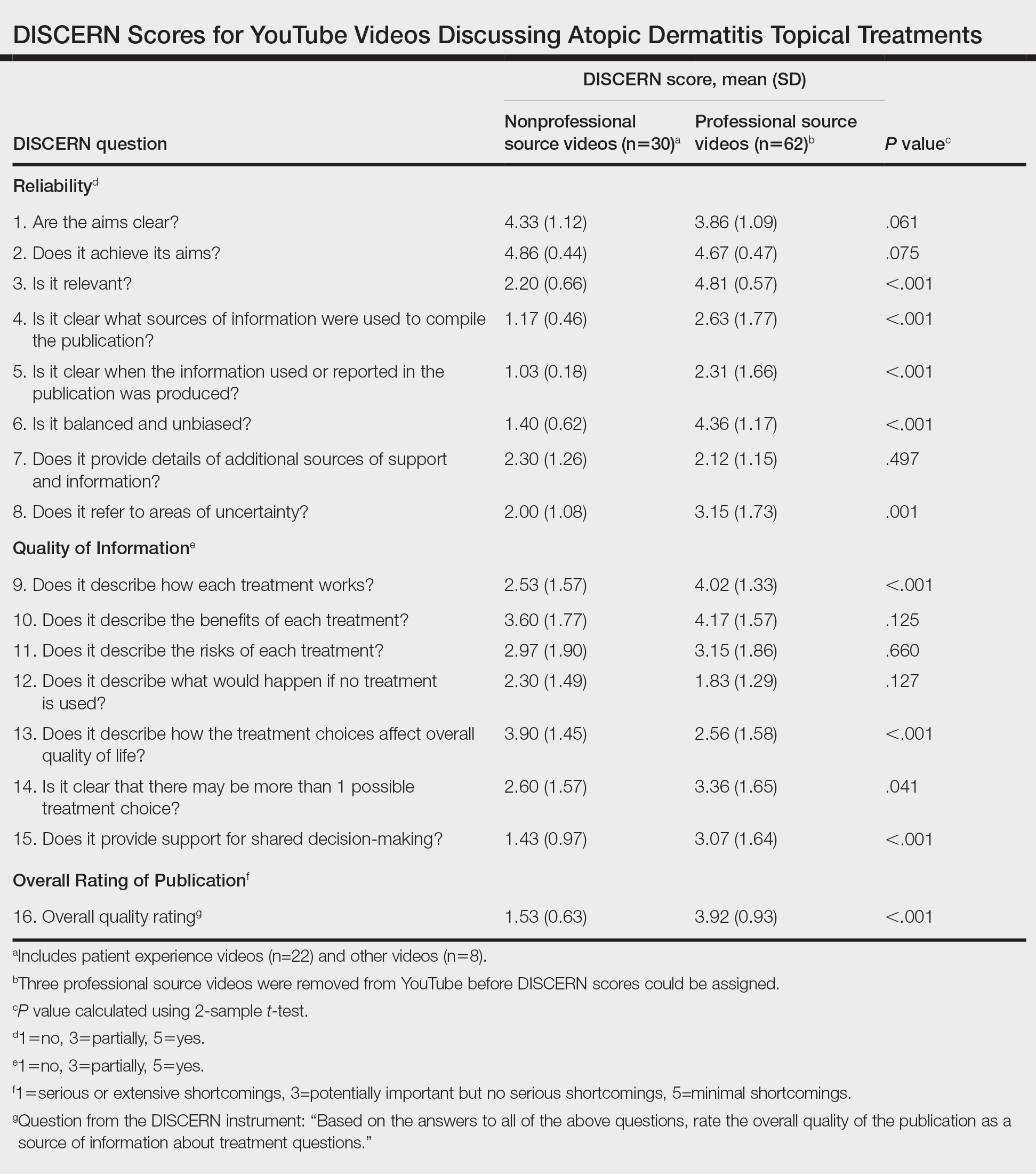

For the 92 included videos, the length; upload year; number of views, likes, dislikes, and comments; interaction ratio (IR) (the sum of likes, dislikes, and comments divided by the number of views); and video content were determined. The videos were placed into mutually exclusive categories as follows: (1) patient experience, defined as a video about patient perspective; (2) professional source, defined as a video featuring a physician, physician extender, pharmacist, or scientist, or produced by a formal organization; or (3) other. The DISCERN Instrument was used for grading the reliability and quality of the 92 included videos. This instrument consists of 16 questions with the responses rated on a scale of 1 to 5.4 For analysis of DISCERN scores, patient experience and other videos were grouped together as nonprofessional source videos. A 2-sample t-test was used to compare DISCERN scores between professional source and nonprofessional source videos.
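As a minimal sketch of the interaction ratio defined above, the function below divides the sum of likes, dislikes, and comments by the number of views. The function name, the guard against zero views, and the example video are assumptions for illustration; none of them come from the study.

```python
def interaction_ratio(likes: int, dislikes: int, comments: int, views: int) -> float:
    """Return (likes + dislikes + comments) / views, as defined in the text."""
    if views <= 0:
        # Assumption: the ratio is treated as undefined for a video with no views.
        raise ValueError("views must be positive")
    return (likes + dislikes + comments) / views


# Hypothetical video, not taken from the study data.
example_ir = interaction_ratio(likes=30, dislikes=2, comments=6, views=1000)
print(round(example_ir, 3))
```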

Most videos were uploaded in 2017 (n=19), 2018 (n=23), and 2019 (n=25), but 20 were uploaded in 2012-2016 and 5 were uploaded in 2020. The 92 videos had a mean length of 8 minutes and 35 seconds (range, 30 seconds to 62 minutes and 23 seconds).

Patient experience videos accounted for 23.9% (n=22) of videos. These videos discussed topical steroid withdrawal (TSW) (n=16), instructions for making emollients (n=2), and treatment successes (n=4). Professional source videos represented 67.4% (n=62) of videos. Of them, 40.3% (n=25) were physician oriented, defined as having extensive medical terminology or qualifying for continuing medical education credit. Three (4.8%) of the professional source videos were sponsored by a drug company. Videos in the other category constituted the remaining 8.7% (n=8). Patient experience videos had more views (median [interquartile range], 6865 [10,307]) and higher engagement (median IR [interquartile range], 0.038 [0.022]) than professional source videos (views: median [interquartile range], 1052.5 [10,610.5]; engagement: median IR [interquartile range], 0.006 [0.008]).
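The category percentages above follow directly from the reported counts, and the median and interquartile range summaries can be sketched in the same way. The text reports the interquartile range as a single value, which this sketch treats as the third quartile minus the first; that reading, the quartile method, and the example view counts are all assumptions, because the source does not describe how quartiles were computed.

```python
from statistics import median, quantiles

# Category counts reported in the text.
counts = {"patient experience": 22, "professional source": 62, "other": 8}
total = sum(counts.values())
shares = {name: round(100 * n / total, 1) for name, n in counts.items()}
print(shares)


def median_and_iqr(values):
    """Return (median, Q3 - Q1).

    The "inclusive" quartile method is an assumption; other methods
    give slightly different quartiles for small samples.
    """
    q1, _, q3 = quantiles(values, n=4, method="inclusive")
    return median(values), q3 - q1


# Hypothetical view counts, not study data.
views = [120, 450, 980, 1500, 7200, 15000, 40000]
print(median_and_iqr(views))
```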

Although less popular, professional source videos had a significantly higher DISCERN overall quality rating score (question 16) than nonprofessional source videos (3.92 vs 1.53; P<.001). In contrast, nonprofessional source videos scored significantly higher on the quality-of-life question (question 13) than professional source videos (3.90 vs 2.56; P<.001) (eTable). (Three professional source videos were removed from YouTube before DISCERN scores could be assigned.)
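A comparison of this kind can be sketched with a 2-sample t statistic. The text does not say whether a pooled-variance or unequal-variance test was used, so the sketch below assumes Welch's unequal-variance form. The scores are hypothetical and are not the study data, and the P value is omitted because it requires a t distribution that the Python standard library does not provide.

```python
from math import sqrt
from statistics import mean, variance


def welch_t(a, b):
    """Return (t, degrees of freedom) for Welch's unequal-variance t-test."""
    va = variance(a) / len(a)  # squared standard error of the mean for group a
    vb = variance(b) / len(b)  # squared standard error of the mean for group b
    t = (mean(a) - mean(b)) / sqrt(va + vb)
    # Welch-Satterthwaite approximation for the degrees of freedom.
    df = (va + vb) ** 2 / (va ** 2 / (len(a) - 1) + vb ** 2 / (len(b) - 1))
    return t, df


# Hypothetical DISCERN question 16 scores (scale of 1 to 5), not study data.
professional = [4, 5, 3, 4, 4, 3, 5, 4]
nonprofessional = [1, 2, 1, 2, 1, 3, 1, 2]
t_stat, dof = welch_t(professional, nonprofessional)
print(f"t = {t_stat:.2f}, df = {dof:.1f}")
```

A large positive t statistic indicates a higher mean in the first group; a statistics package would be needed to convert it into a P value.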

Notably, 20.7% (n=19) of the 92 videos discussed TSW, and most of them were patient experiences (n=16). Other categories included topical steroids excluding TSW (n=11), steroid phobia (n=2), topical calcineurin inhibitors (n=2), crisaborole (n=6), news broadcast (n=7), wet wraps (n=5), product advertisement (n=7), and research (n=11) (Figure 2). Interestingly, there were no videos focusing on the calcineurin inhibitor black box warning.

Similar to prior studies, our results indicate a viewer preference for patient-generated videos over videos produced by or including a professional source.5 Additionally, only 3 of 19 videos about TSW were from a professional source, increasing the potential for patient misconceptions about topical corticosteroids. Future studies should examine the educational impact of patient-generated videos as well as the features that make patient experience videos more desirable for viewing.

- Mueller SM, Hongler VNS, Jungo P, et al. Fiction, falsehoods, and few facts: cross-sectional study on the content-related quality of atopic eczema-related videos on YouTube. J Med Internet Res. 2020;22:e15599. doi:10.2196/15599

- Torres T, Ferreira EO, Gonçalo M, et al. Update on atopic dermatitis. Acta Med Port. 2019;32:606-613. doi:10.20344/amp.11963

- Vogler SA, Lightner AL. Rethinking how we care for our patients in a time of social distancing during the COVID-19 pandemic. Br J Surg. 2020;107:937-939. doi:10.1002/bjs.11636

- The DISCERN Instrument. discern online. Accessed January 22, 2021. http://www.discern.org.uk/discern_instrument.php

- Pithadia DJ, Reynolds KA, Lee EB, et al. Dupilumab for atopic dermatitis: what are patients learning on YouTube? [published online April 16, 2020]. J Dermatolog Treat. doi:10.1080/09546634.2020.1755418
