Is Red Meat Healthy? Multiverse Analysis Has Lessons Beyond Meat

Observational studies on red meat consumption and lifespan are prime examples of attempts to find signal in a sea of noise.

Randomized controlled trials are the best way to sort cause from mere correlation. But these are not possible in most matters of food consumption. So, we look back and observe groups with different exposures.

My most frequent complaint about these nonrandom comparison studies has been the chance that the two groups differ in important ways, and it’s these differences — not the food in question — that account for the disparate outcomes.

But selection biases are only one issue. There is also the matter of analytic flexibility. Observational studies are born from large databases. Researchers have many choices in how to analyze all these data.

A few years ago, Brian Nosek, PhD, and colleagues elegantly showed that analytic choices can affect results. His Many Analysts, One Data Set study had little uptake in the medical community, perhaps because he studied a social science question.

Multiple Ways to Slice the Data

Recently, a group from McMaster University, led by Dena Zeraatkar, PhD, has confirmed the analytic choices problem, using the question of red meat consumption and mortality.

Their idea was simple: Because there are many plausible and defensible ways to analyze a dataset, we should not choose one method; rather, we should choose thousands, combine the results, and see where the truth lies.

You might wonder how there could be thousands of ways to analyze a dataset. I surely did.

The answer lies in the choices that researchers face: which participants are eligible, which analytic model to use (logistic, Poisson, etc.), and which covariates to adjust for. Think exponents: every choice can be combined with every other, and each candidate covariate that may be included or left out doubles the number of possible analyses.
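To make "think exponents" concrete, here is a minimal Python sketch. The decisions and option counts are hypothetical illustrations, not the choices catalogued in the McMaster study. Crossing independent decisions multiplies their option counts, and each optional covariate doubles the total.

```python
from math import prod


def count_specifications(options_per_choice, n_optional_covariates):
    """Count distinct analyses when every analytic choice is crossed with every other.

    options_per_choice: number of options for each non-covariate decision
        (e.g., eligibility rule, model type, exposure definition).
    n_optional_covariates: candidate covariates that can each be included
        or left out, which gives 2 ** n possible adjustment sets.
    """
    return prod(options_per_choice) * 2 ** n_optional_covariates


# Hypothetical example: 3 eligibility rules x 2 model types x 4 exposure
# definitions, plus 10 optional covariates.
print(count_specifications([3, 2, 4], 10))  # 24 * 1024 = 24576

# Each extra optional covariate doubles the count.
for k in (10, 20, 30):
    print(k, count_specifications([3, 2, 4], k))
```

With only a few dozen candidate covariates, the count already runs into the trillions, which is how totals like the one reported below arise.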

Dr. Zeraatkar and colleagues are research methodologists, so, sadly, they are comfortable with the clunky name of this approach: specification curve analysis. Don’t be deterred. It means that they analyze the data in thousands of ways using computers. Each way is a specification. In the end, the specifications give rise to a curve of hazard ratios for red meat and mortality. Another name for this approach is multiverse analysis.
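For readers who want to see the mechanics, below is a toy specification curve in Python. It is a sketch under stated assumptions, not the study's code. The cohort is simulated: red meat has no effect on death by construction, while smoking raises both meat intake and mortality. Each specification is one eligibility rule crossed with one adjustment set. A Mantel-Haenszel risk ratio stands in for the Cox hazard ratios the study estimated. All names are hypothetical.

```python
import itertools
import random


def simulate_cohort(n, seed=1):
    """Hypothetical cohort in which red meat has NO effect on death.

    Smoking raises both the chance of high meat intake and the chance of
    death, so it confounds any comparison that ignores it.
    """
    rng = random.Random(seed)
    people = []
    for _ in range(n):
        older = rng.random() < 0.5
        smoker = rng.random() < 0.3
        high_meat = rng.random() < (0.6 if smoker else 0.4)
        p_death = 0.05 + 0.10 * older + 0.08 * smoker  # no meat term
        people.append({"older": older, "smoker": smoker,
                       "high_meat": high_meat, "died": rng.random() < p_death})
    return people


def mh_risk_ratio(rows, strata_keys):
    """Mantel-Haenszel risk ratio, high vs low meat, stratified on strata_keys.

    An empty strata_keys tuple yields the crude (unadjusted) risk ratio.
    """
    strata = {}
    for r in rows:
        strata.setdefault(tuple(r[k] for k in strata_keys), []).append(r)
    num = den = 0.0
    for group in strata.values():
        total = len(group)
        n1 = sum(r["high_meat"] for r in group)  # exposed
        n0 = total - n1                          # unexposed
        a = sum(r["died"] for r in group if r["high_meat"])
        c = sum(r["died"] for r in group if not r["high_meat"])
        num += a * n0 / total
        den += c * n1 / total
    return num / den if den else float("nan")


# Each specification = one eligibility rule x one adjustment set.
ELIGIBILITY = {
    "everyone": lambda r: True,
    "older only": lambda r: r["older"],
    "younger only": lambda r: not r["older"],
}
ADJUSTMENT_SETS = [(), ("smoker",), ("older",), ("smoker", "older")]


def specification_curve(people):
    """Run every specification and return results sorted by estimate."""
    results = []
    for (label, keep), adjust in itertools.product(ELIGIBILITY.items(), ADJUSTMENT_SETS):
        rows = [r for r in people if keep(r)]
        results.append((mh_risk_ratio(rows, adjust), label, adjust))
    return sorted(results)


for rr, label, adjust in specification_curve(simulate_cohort(20_000)):
    print(f"{rr:.2f}  {label:<12}  adjusted for: {', '.join(adjust) or 'nothing'}")
```

Plotting the sorted estimates gives the "curve." In this setup, specifications that ignore smoking should tend to sit above 1 purely because of confounding, while smoker-adjusted ones should cluster near 1.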

For their paper in the Journal of Clinical Epidemiology, aptly named “Grilling the Data,” they didn’t just conjure up the many analytic ways to study the red meat–mortality question. Instead, they used a published systematic review of 15 studies on unprocessed red meat and early mortality. The studies included in this review reported 70 unique ways to analyze the association.

Is Red Meat Good or Bad?

Their first finding was that the 70 published analyses yielded widely disparate effect estimates, from 0.63 (lower risk for early death) to 2.31 (higher risk). The median hazard ratio was 1.14, with an interquartile range (IQR) of 1.02-1.23. One might conclude from this that eating red meat is associated with a slightly higher risk for early mortality.

Their second step was to calculate how many ways (specifications) there were to analyze the data by counting every possible combination of the analytic choices used across the 70 published analyses.

They calculated a total of 10 quadrillion possible unique analyses. A quadrillion is 1 followed by 15 zeros, and computing power cannot yet handle that many analyses. So, they generated 20 random unique combinations of covariates, which narrowed the number of analyses to about 1400. About 200 of these were excluded because of implausibly wide confidence intervals.
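The narrowing step can be sketched the same way. This Python example is a hypothetical illustration: the decision names, options, and covariate list are invented, and drawing the subset size first and then the covariates is just one plausible sampling scheme, not necessarily the one the authors used. Every non-covariate choice is crossed with a fixed number of randomly drawn covariate sets rather than with all possible sets.

```python
import itertools
import random

# Hypothetical analytic choices (illustrative only, not the study's list).
OTHER_CHOICES = {
    "population": ["all adults", "no baseline disease"],
    "exposure": ["servings per day", "quartiles"],
    "model": ["Cox", "Poisson"],
}
CANDIDATE_COVARIATES = ["age", "sex", "smoking", "BMI", "alcohol", "exercise",
                        "income", "education", "diabetes", "energy intake"]


def sample_specifications(n_covariate_sets, seed=0):
    """Cross every non-covariate choice with n randomly drawn covariate sets."""
    if n_covariate_sets > 2 ** len(CANDIDATE_COVARIATES):
        raise ValueError("more covariate sets requested than exist")
    rng = random.Random(seed)
    covariate_sets = set()
    while len(covariate_sets) < n_covariate_sets:  # keep only unique sets
        k = rng.randint(0, len(CANDIDATE_COVARIATES))
        covariate_sets.add(frozenset(rng.sample(CANDIDATE_COVARIATES, k)))
    other = [dict(zip(OTHER_CHOICES, combo))
             for combo in itertools.product(*OTHER_CHOICES.values())]
    return [dict(o, covariates=sorted(c))
            for o in other
            for c in sorted(covariate_sets, key=sorted)]


n_other = len(list(itertools.product(*OTHER_CHOICES.values())))
full = n_other * 2 ** len(CANDIDATE_COVARIATES)
print(full, len(sample_specifications(20)))  # 8192 160
```

Sampling covariate sets caps the workload at (non-covariate combinations) × (sets drawn), no matter how many candidate covariates exist.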

Voilà. They now had about 1200 different ways to analyze a dataset, and they applied them to an NHANES longitudinal cohort study from 2007-2014. They deemed each of the more than 1200 approaches plausible because each was derived from peer-reviewed papers written by experts in epidemiology.

Specification Curve Analyses Results

Each analysis (or specification) yielded a hazard ratio for red meat exposure and death.

- The median HR was 0.94 (IQR, 0.83-1.05) for the association of red meat with all-cause mortality, ie, essentially null.

- The range of hazard ratios was wide, from 0.51 (a 49% lower risk for early mortality) to 1.75 (a 75% higher risk).

- Among all analyses, 36% yielded hazard ratios above 1.0 and 64% below 1.0.

- As for statistical significance, defined as P ≤ .05, only 4% (48 specifications) met this threshold. Dr. Zeraatkar reminded me that this is roughly what you’d expect by chance (about 5% at this threshold) if unprocessed red meat has no effect on longevity.

- Of the 48 statistically significant analyses, 40 associated red meat consumption with lower early mortality and eight associated it with higher mortality.

- Nearly half the analyses yielded unexciting point estimates, with hazard ratios between 0.90 and 1.10.
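Summaries like those in the list above are straightforward to compute once every specification has produced a hazard ratio and a P value. The Python sketch below is illustrative: the function name and dictionary keys are hypothetical, and it uses the same cutoffs as the list (P ≤ .05, and 0.90 to 1.10 as the "unexciting" band). It also reports alpha × n, a rough count of significant results expected by chance alone if there were no association.

```python
import statistics


def summarize_curve(hazard_ratios, p_values, alpha=0.05, null_band=(0.90, 1.10)):
    """Summarize a specification curve from per-specification HRs and P values."""
    if len(hazard_ratios) != len(p_values) or len(hazard_ratios) < 2:
        raise ValueError("need matching lists with at least two specifications")
    n = len(hazard_ratios)
    q1, _, q3 = statistics.quantiles(hazard_ratios, n=4)
    significant = [hr for hr, p in zip(hazard_ratios, p_values) if p <= alpha]
    low, high = null_band
    return {
        "median": statistics.median(hazard_ratios),
        "iqr": (q1, q3),
        "share_above_1": sum(hr > 1 for hr in hazard_ratios) / n,
        "share_below_1": sum(hr < 1 for hr in hazard_ratios) / n,
        "share_significant": len(significant) / n,
        "significant_below_1": sum(hr < 1 for hr in significant),
        "significant_above_1": sum(hr > 1 for hr in significant),
        "share_near_null": sum(low <= hr <= high for hr in hazard_ratios) / n,
        # Rough benchmark only: specifications share data, so they are not independent tests.
        "expected_by_chance": alpha * n,
    }


# Made-up inputs for illustration only.
summary = summarize_curve([0.8, 0.9, 1.0, 1.1, 1.2], [0.01, 0.5, 0.9, 0.5, 0.04])
print(summary["median"], summary["share_significant"])  # 1.0 0.4
```

With about 1200 specifications, 0.05 × 1200 = 60 is a rough yardstick against the 48 significant results reported.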

Paradigm Changing

As a user of evidence, I find this a potentially paradigm-changing study. Observational studies far outnumber randomized trials. For many medical questions, observational data are all we have.

Now think about every observational study published. The authors tell you — post hoc — which method they used to analyze the data. The key point is that it is one method.

Dr. Zeraatkar and colleagues have shown that there are thousands of plausible ways to analyze the data, and this can lead to very different findings. In the specific question of red meat and mortality, their many analyses yielded a null result.

Now imagine other cases where the researchers did many analyses of a dataset and chose to publish only the significant ones. Observational studies are rarely preregistered, so a reader cannot know how a result would vary depending on analytic choices. A specification curve analysis of a dataset provides a much broader picture. In the case of red meat, you see some significant results, but the vast majority hover around null.

What about the difficulty in analyzing a dataset 1000 different ways? Dr. Zeraatkar told me that it is harder than just choosing one method, but it’s not impossible.

The main barrier to adopting this multiverse approach to data, she noted, was not the extra work but the entrenched belief among researchers that there is a best way to analyze data.

I hope you read this paper and think about it every time you read an observational study that finds a positive or negative association between two things. Ask: What if the researchers were as careful as Dr. Zeraatkar and colleagues and did multiple different analyses? Would the finding hold up to a series of plausible analytic choices?

Nutritional epidemiology would benefit greatly from this approach. But so would any observational study of an exposure and outcome. I suspect that the number of “positive” associations would diminish. And that would not be a bad thing.

Dr. Mandrola, a clinical electrophysiologist at Baptist Medical Associates, Louisville, Kentucky, disclosed no relevant financial relationships.

A version of this article appeared on Medscape.com.

Video Games Marketing Food Impacts Teens’ Eating

, according to research presented on May 12, 2024, at the 31st European Congress on Obesity in Venice, Italy.

The presentation by Rebecca Evans, University of Liverpool, United Kingdom, included findings from three recently published studies and a submitted randomized controlled trial. At the time of the research, the top video game live-streaming platforms (VGLSPs) globally were Twitch (77% of the market share by hours watched), YouTube Gaming (15%), and Facebook Gaming Live (7%).

“Endorsement deals for prominent streamers on Twitch can be worth many millions of dollars, and younger people, who are attractive to advertisers, are moving away from television to these more interactive forms of entertainment,” Evans said. “These deals involve collaborating with brands and promoting their products, including foods that are high in fats, salt, and/or sugar.”

To examine the extent and consequences of VGLSP advertising for foods high in fat, salt, and/or sugar (HFSS), the researchers first analyzed 52 hour-long Twitch videos uploaded to gaming platforms by three popular influencers. They found that food cues appeared at an average rate of 2.6 per hour, and the average duration of each cue was 20 minutes.

Most cues (70.7%) were branded, and most (80.5%) were for HFSS foods, led by energy drinks (62.4%). Nearly all (97.7%) lacked an advertising disclosure. Most food cues took the form of product placement (44.0%) or looping banners (40.6%); others were features such as tie-ins, logos, or offers. Notably, these forms of advertising are always visible on the video game screen, so viewers cannot skip or close them.

Next, the team conducted a systematic review and meta-analysis of the relationship between exposure to digital game-based or influencer food marketing and food-related outcomes. They found that young people were twice as likely to prefer foods displayed via digital game-based marketing, and that influencer and digital game-based marketing was associated with increased HFSS food consumption of about 37 additional calories in one sitting.

Researchers then surveyed 490 youngsters (mean age, 16.8 years; 70% female) to explore associations between recall of food marketing on the top VGLSPs and food-related outcomes. Recall was associated with more positive attitudes toward HFSS foods and, in turn, with purchase and consumption of the marketed HFSS foods.

In addition, the researchers conducted a lab-based randomized controlled trial to explore associations between HFSS food marketing via a mock Twitch stream and subsequent snack intake. A total of 91 youngsters (average age, 18 years; 69% women) viewed the mock stream, which contained an advertisement (an image overlaid on the video featuring a brand logo and product) for either an HFSS food or a non-branded food. They were then offered a snack. Acute exposure to HFSS food marketing was not associated with immediate consumption, but more habitual use of VGLSPs was associated with increased intake of the marketed snack.

The observational studies could not prove cause and effect, and may not be generalizable to all teens, the authors acknowledged. They also noted that some of the findings are based on self-report surveys, which can lead to recall bias and may have affected the results.

Nevertheless, Ms. Evans said, “The high level of exposure to digital marketing of unhealthy food could drive excess calorie consumption and weight gain, particularly in adolescents who are more susceptible to advertising. It is important that digital food marketing restrictions encompass innovative and emerging digital media such as VGLSPs.”

The research formed Ms. Evans’ PhD work, which is funded by the University of Liverpool. Evans and colleagues declared no conflicts of interest.

A version of this article appeared on Medscape.com.

, according to research presented on May 12, 2024, at the 31st European Congress on Obesity in Venice, Italy.

The presentation by Rebecca Evans, University of Liverpool, United Kingdom, included findings from three recently published studies and a submitted randomized controlled trial. At the time of the research, the top VGLSPs globally were Twitch (with 77% of the market share by hours watched), YouTube Gaming (15%), and Facebook Gaming Live (7%).

“Endorsement deals for prominent streamers on Twitch can be worth many millions of dollars, and younger people, who are attractive to advertisers, are moving away from television to these more interactive forms of entertainment,” Evans said. “These deals involve collaborating with brands and promoting their products, including foods that are high in fats, salt, and/or sugar.”

To delve more deeply into the extent and consequences of VGLSP advertising for HFSS, the researchers first analyzed 52 hour-long Twitch videos uploaded to gaming platforms by three popular influencers. They found that food cues appeared at an average rate of 2.6 per hour, and the average duration of each cue was 20 minutes.

Most cues (70.7%) were for branded HFSS (80.5%), led by energy drinks (62.4%). Most (97.7%) were not accompanied by an advertising disclosure. Most food cues were either product placement (44.0%) and looping banners (40.6%) or features such as tie-ins, logos, or offers. Notably, these forms of advertising are always visible on the video game screen, so viewers cannot skip over them or close them.

Next, the team did a systematic review and meta-analysis to assess the relationship between exposure to digital game-based or influencer food marketing with food-related outcomes. They found that young people were twice as likely to prefer foods displayed via digital game-based marketing, and that influencer and digital game-based marketing was associated with increased HFSS food consumption of about 37 additional calories in one sitting.

Researchers then surveyed 490 youngsters (mean age, 16.8 years; 70%, female) to explore associations between recall of food marketing of the top VGLSPs and food-related outcomes. Recall was associated with more positive attitudes towards HFSS foods and, in turn, the purchase and consumption of the marketed HFSS foods.

In addition, the researchers conducted a lab-based randomized controlled trial to explore associations between HFSS food marketing via a mock Twitch stream and subsequent snack intake. A total of 91 youngsters (average age, 18 years; 69% women) viewed the mock stream, which contained either an advertisement (an image overlaid on the video featuring a brand logo and product) for an HFSS food, or a non-branded food. They were then offered a snack. Acute exposure to HFSS food marketing was not associated with immediate consumption, but more habitual use of VGLSPs was associated with increased intake of the marketed snack.

The observational studies could not prove cause and effect, and may not be generalizable to all teens, the authors acknowledged. They also noted that some of the findings are based on self-report surveys, which can lead to recall bias and may have affected the results.

Nevertheless, Ms. Evans said, “The high level of exposure to digital marketing of unhealthy food could drive excess calorie consumption and weight gain, particularly in adolescents who are more susceptible to advertising. It is important that digital food marketing restrictions encompass innovative and emerging digital media such as VGLSPs.”

The research formed Ms. Evans’ PhD work, which is funded by the University of Liverpool. Evans and colleagues declared no conflicts of interest.

A version of this article appeared on Medscape.com .

, according to research presented on May 12, 2024, at the 31st European Congress on Obesity in Venice, Italy.

The presentation by Rebecca Evans, University of Liverpool, United Kingdom, included findings from three recently published studies and a submitted randomized controlled trial. At the time of the research, the top VGLSPs globally were Twitch (with 77% of the market share by hours watched), YouTube Gaming (15%), and Facebook Gaming Live (7%).

“Endorsement deals for prominent streamers on Twitch can be worth many millions of dollars, and younger people, who are attractive to advertisers, are moving away from television to these more interactive forms of entertainment,” Evans said. “These deals involve collaborating with brands and promoting their products, including foods that are high in fats, salt, and/or sugar.”

To delve more deeply into the extent and consequences of VGLSP advertising for HFSS, the researchers first analyzed 52 hour-long Twitch videos uploaded to gaming platforms by three popular influencers. They found that food cues appeared at an average rate of 2.6 per hour, and the average duration of each cue was 20 minutes.

Most cues (70.7%) were for branded HFSS (80.5%), led by energy drinks (62.4%). Most (97.7%) were not accompanied by an advertising disclosure. Most food cues were either product placement (44.0%) and looping banners (40.6%) or features such as tie-ins, logos, or offers. Notably, these forms of advertising are always visible on the video game screen, so viewers cannot skip over them or close them.

Next, the team did a systematic review and meta-analysis to assess the relationship between exposure to digital game-based or influencer food marketing with food-related outcomes. They found that young people were twice as likely to prefer foods displayed via digital game-based marketing, and that influencer and digital game-based marketing was associated with increased HFSS food consumption of about 37 additional calories in one sitting.

Researchers then surveyed 490 youngsters (mean age, 16.8 years; 70%, female) to explore associations between recall of food marketing of the top VGLSPs and food-related outcomes. Recall was associated with more positive attitudes towards HFSS foods and, in turn, the purchase and consumption of the marketed HFSS foods.

In addition, the researchers conducted a lab-based randomized controlled trial to explore associations between HFSS food marketing via a mock Twitch stream and subsequent snack intake. A total of 91 youngsters (average age, 18 years; 69% women) viewed the mock stream, which contained either an advertisement (an image overlaid on the video featuring a brand logo and product) for an HFSS food, or a non-branded food. They were then offered a snack. Acute exposure to HFSS food marketing was not associated with immediate consumption, but more habitual use of VGLSPs was associated with increased intake of the marketed snack.

The observational studies could not prove cause and effect, and may not be generalizable to all teens, the authors acknowledged. They also noted that some of the findings are based on self-report surveys, which can lead to recall bias and may have affected the results.

Nevertheless, Ms. Evans said, “The high level of exposure to digital marketing of unhealthy food could drive excess calorie consumption and weight gain, particularly in adolescents who are more susceptible to advertising. It is important that digital food marketing restrictions encompass innovative and emerging digital media such as VGLSPs.”

The research formed Ms. Evans’ PhD work, which is funded by the University of Liverpool. Ms. Evans and colleagues declared no conflicts of interest.

A version of this article appeared on Medscape.com.

New and Emerging Treatments for Major Depressive Disorder

Outside of treating major depressive disorder (MDD) through the monoamine system with selective serotonin reuptake inhibitors and serotonin-norepinephrine reuptake inhibitors, exploration of other treatment pathways has opened the possibility of faster onset of action and fewer side effects.

In this ReCAP, Dr Joseph Goldberg, from Mount Sinai Hospital in New York, NY, outlines how a better understanding of the glutamate system has led to the emergence of ketamine and esketamine as important treatment options, as well as the combination therapy of dextromethorphan with bupropion.

Dr Goldberg also discusses new results for serotonin-system modulation via the 5-HT1A receptor with gepirone and via the 5-HT2A receptor with psilocybin. He also reports on a new compound, esmethadone (REL-1017). Finally, he discusses the first approval of a digital therapeutic app designed to augment pharmacotherapy, and the use of the dopamine partial agonist cariprazine as an adjunctive therapy.

--

Joseph F. Goldberg, MD, Clinical Professor, Department of Psychiatry, Icahn School of Medicine at Mount Sinai; Teaching Attending, Department of Psychiatry, Mount Sinai Hospital, New York, NY

Joseph F. Goldberg, MD, has disclosed the following relevant financial relationships:

Serve(d) as a director, officer, partner, employee, advisor, consultant, or trustee for: AbbVie; Genomind; Luye Pharma; Neuroma; Neurelis; Otsuka; Sunovion

Serve(d) as a speaker or a member of a speakers bureau for: AbbVie; Alkermes; Axsome; Intracellular Therapies

Receive(d) royalties from: American Psychiatric Publishing; Cambridge University Press

Darker Skin Tones Underrepresented on Skin Cancer Education Websites

“Given the known disparities patients with darker skin tones face in terms of increased skin cancer morbidity and mortality, this lack of representation further disadvantages those patients by not providing them with an adequate representation of how skin cancers manifest on their skin tones,” the study’s first author, Alana Sadur, who recently completed her third year at the George Washington University School of Medicine and Health Sciences, Washington, said in an interview. “By not having images to refer to, patients are less likely to self-identify and seek treatment for concerning skin lesions.”

For the study, which was published in the Journal of Drugs in Dermatology, Ms. Sadur and coauthors evaluated the inclusivity and representation of skin tones in photos of skin cancer on the following patient-facing websites: CDC.gov, NIH.gov, skincancer.org, americancancerfund.org, mayoclinic.org, and cancer.org. The researchers counted each individual person or image showing skin as a separate representation, and three independent reviewers used the 5-color Pantone swatch described in a dermatology atlas to categorize representations as “lighter-toned skin” (Pantones A-B or lighter) or “darker-toned skin” (Pantones C-E or darker).

Of the 372 total representations identified on the websites, only 49 (13.2%) showed darker skin tones. Of these, 44.9% depicted Pantone C, 34.7% depicted Pantone D, and 20.4% depicted Pantone E. The researchers also found that only 11% of nonmelanoma skin cancers (NMSC) and 5.8% of melanoma skin cancers (MSC) were shown on darker skin tones, while no cartoon portrayals of NMSC or MSC included darker skin tones.
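As a quick consistency check on these figures, the short Python sketch below recomputes the reported percentages. The article gives only percentages for the Pantone C, D, and E breakdown, so the per-category counts used here (22, 17, and 10) are an assumption, back-calculated from those percentages, and are not numbers reported by the study.

```python
# Figures reported in the article
total_representations = 372
darker_total = 49

# Per-category counts are NOT reported; they are back-calculated
# (assumption) from the published 44.9%, 34.7%, and 20.4% of 49.
darker_by_pantone = {"C": 22, "D": 17, "E": 10}

def pct(part: int, whole: int) -> float:
    """Return part/whole as a percentage rounded to one decimal place."""
    return round(100 * part / whole, 1)

# The assumed counts must add up to the reported darker-skin total.
assert sum(darker_by_pantone.values()) == darker_total

darker_share = pct(darker_total, total_representations)
pantone_shares = {tone: pct(n, darker_total) for tone, n in darker_by_pantone.items()}

print(darker_share)    # 13.2
print(pantone_shares)  # {'C': 44.9, 'D': 34.7, 'E': 20.4}
```

The recomputed values match the percentages in the article, which suggests the reported breakdown is internally consistent.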

In findings related to nondisease representations on the websites, darker skin tones were depicted in just 22.7% of stock photos and 26.1% of website front pages.

The study’s senior author, Adam Friedman, MD, professor and chair of dermatology at George Washington University, Washington, emphasized the need for trusted sources like national organizations and federally funded agencies to be purposeful with their selection of images to “ensure all visitors to the site are represented,” he told this news organization.

“This is very important when dealing with skin cancer as a lack of representation could easily be misinterpreted as epidemiological data, meaning this gap could suggest certain individuals do not get skin cancer because photos in those skin tones are not present,” he added. “This doesn’t even begin to touch upon the diversity of individuals in the stock photos or lack thereof, which can perpetuate the lack of diversity in our specialty. We need to do better.”

The authors reported having no relevant disclosures.

A version of this article first appeared on Medscape.com.

FROM JOURNAL OF DRUGS IN DERMATOLOGY

Throbbing headache and nausea

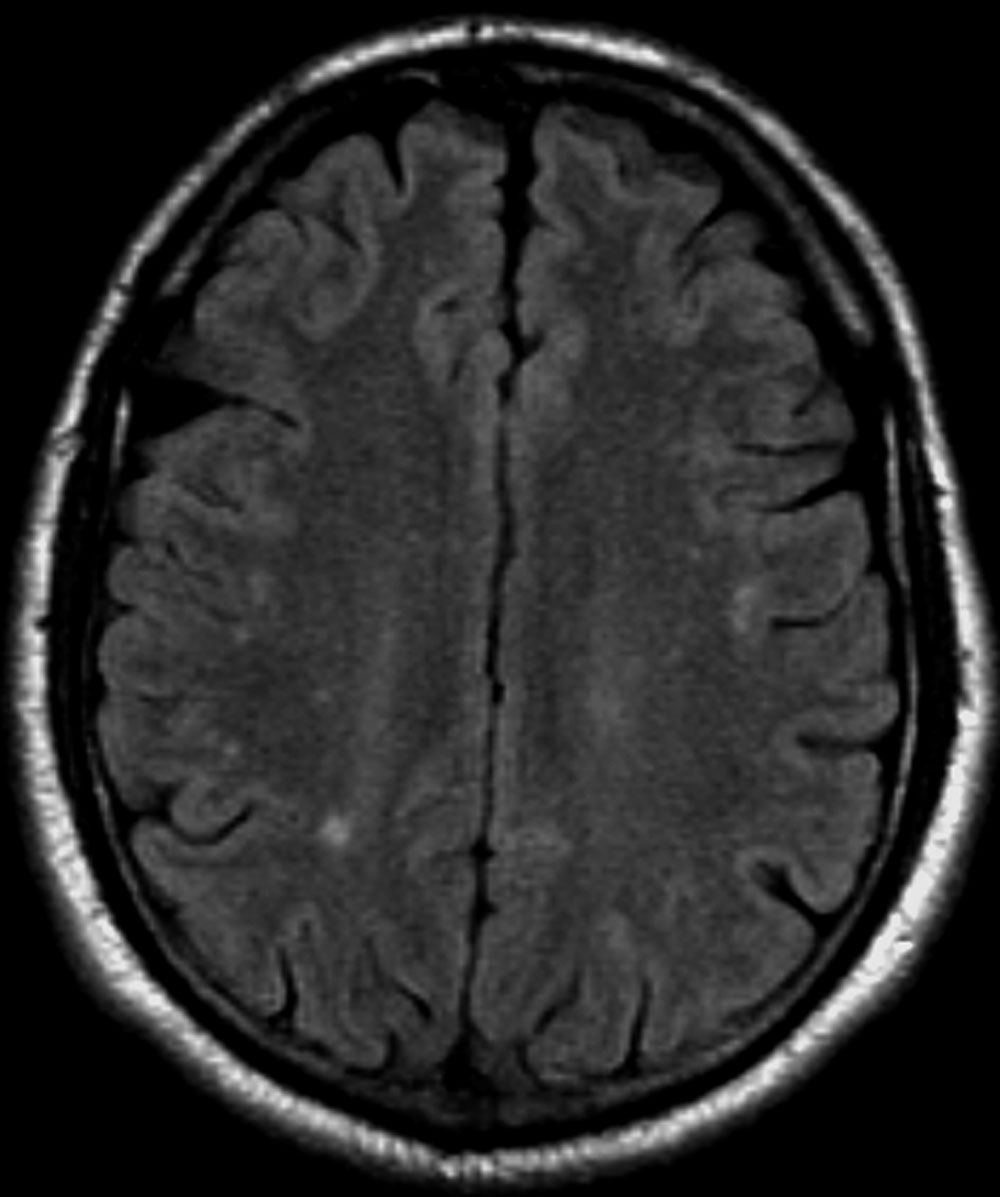

Migraine is a form of recurrent headache that can present with or without aura; migraine without aura is the more common form. As in this patient, migraine without aura is a chronic headache disorder of moderate to severe intensity; attacks usually last several hours but may persist for up to 3 days. Headache pain is typically unilateral and often aggravated by routine physical activity. The American Headache Society diagnostic criteria for migraine without aura include nausea and/or hypersensitivity to light and sound. This patient also described symptoms typical of the prodromal phase of migraine, which include yawning, temperature dysregulation, excessive thirst, and mood swings.

Patients who have migraine with aura also have unilateral headache pain of several hours' duration but experience visual (eg, dots or flashes) or sensory (prickly sensation on skin) symptoms, or may have brief difficulty with speech or motor function. These aura symptoms generally last 5 to 60 minutes before abating.

Migraine potentially affects as many as a billion people worldwide, and its prevalence is second only to that of tension-type headache. Migraine occurs in patients of all ages and affects women two to three times as often as men. Prevalence appears to peak in the third and fourth decades of life and tends to be lower among older adults. Migraine also disrupts patients' work, school, and social lives, and is associated with increased rates of depression and anxiety in adults. In patients prone to migraine, potential triggers include certain foods and beverages (including those that contain caffeine or alcohol), menstrual cycles, exposure to strobing or bright lights or loud sounds, stressful situations, unusual physical exertion, and too much or too little sleep.

Migraine is a clinical diagnosis based on the number of headaches (five or more episodes) plus two or more characteristic features (unilateral location, throbbing pain, pain intensity of ≥ 5 on a 10-point scale, and pain aggravated by routine physical activity, such as climbing stairs or bending over) plus nausea and/or both photosensitivity and phonosensitivity. Prodromal symptoms are reported by about 70% of adult patients. Diagnosis rarely requires neuroimaging; however, a complete laboratory and metabolic workup should be done before medication is prescribed.

Management of migraine without aura includes acute and preventive interventions. Acute interventions cited by the American Headache Society include nonsteroidal anti-inflammatory drugs and acetaminophen for mild pain, and migraine-specific therapies such as the triptans, ergotamine derivatives, gepants (rimegepant, ubrogepant), and lasmiditan. Because response to any of these therapies will differ among patients with migraine, shared decision-making with patients about benefits and potential side effects is necessary and should include flexibility to change therapy if needed.

Preventive therapy should be offered to patients experiencing six or more migraines a month (regardless of impairment) and those, like this patient, with three or more migraines a month that significantly impair daily activities. Preventive therapy can be considered for those with fewer monthly episodes, depending on the degree of impairment. Oral preventive therapies with established efficacy include candesartan, certain beta-blockers, topiramate, and valproate. Parenteral monoclonal antibodies that inhibit calcitonin gene-related peptide activity (eptinezumab, erenumab, fremanezumab, and galcanezumab) and onabotulinumtoxinA may be considered if oral therapies provide inadequate prevention.

Tension-type headache is the most common form of primary headache. These headaches are bilateral and characterized by a pressing or dull sensation that is often mild in intensity. They differ from migraine in that they are not accompanied by nausea and are not aggravated by routine physical activity; episodes can last from 30 minutes to 7 days. Fasting-related headache is characterized by diffuse, nonpulsating pain and is relieved by eating.

Heidi Moawad, MD, Clinical Assistant Professor, Department of Medical Education, Case Western Reserve University School of Medicine, Cleveland, Ohio.

Heidi Moawad, MD, has disclosed no relevant financial relationships.

Image Quizzes are fictional or fictionalized clinical scenarios intended to provide evidence-based educational takeaways.

A 30-year-old female patient (140 lb and 5 ft 7 in; BMI 21.9) presents to the emergency department with a throbbing headache that began after dinner and was accompanied by nausea. She reports going to bed immediately and sleeping through the night, but the pain and other symptoms persisted into the morning. The headache has now lasted nearly 15 hours. The patient describes the pain as throbbing and intense on the left side of her head.
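The stated BMI can be checked with the standard imperial formula (weight in pounds multiplied by 703, divided by the square of height in inches), converting 5 ft 7 in to 67 inches. This worked check is added for illustration and is not part of the original case.

```latex
\text{BMI} = \frac{703 \times 140\ \text{lb}}{(67\ \text{in})^2}
           = \frac{98{,}420}{4{,}489}
           \approx 21.9\ \text{kg/m}^2
```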

The patient has a demanding job in advertising and often works very long hours on little sleep; this latest headache developed after working through the night before. When asked, she admits to feeling lethargic, yawning (to the point where coworkers commented), and experiencing intervals of excessive sweating earlier in the day before the headache emerged. The patient attributed these to being tired and hungry because of skipped meals since the previous night's dinner.

She has no history of cardiovascular or other chronic illness, and her blood pressure is within the normal range. She describes having had about seven similar headaches of shorter duration over the past 2 months; in each case, the headache led to a missed workday or having to leave work early and/or cancel social plans.

Want a healthy diet? Eat real food, GI physician advises

What exactly is a healthy diet?

Scott Ketover, MD, AGAF, FASGE, will be the first to admit that’s not an easy question to answer. “As much research and information as we have, we don’t really know what a healthy diet is,” said Dr. Ketover, president and CEO of MNGI Digestive Health in Minneapolis, Minnesota. This year, the American Gastroenterological Association (AGA) recognized him with its Distinguished Clinician Award in Private Practice.

When patients ask questions about a healthy diet, Dr. Ketover responds with a dose of common sense: “If it’s food that didn’t exist in the year 1900, don’t eat it.” Your grandmother’s apple pie is fine in moderation, he said, but the apple pie you get at the McDonald’s drive-through could sit on your shelf for 6 months and look the same.

That is not something you should eat, he emphasized.

“I really do believe though, that what crosses our lips and gets into our GI tract really underlies our entire health. It’s just that we don’t have enough information yet to know how we can coach people in telling them: eat this, not that,” he added.

In an interview, Dr. Ketover spoke more about the link between the gut microbiome and health, and the young patient who inspired him to become a GI physician.

Q: Why did you choose GI?

Dr. Ketover: I was a medical student working on my pediatrics rotation at Children’s Minnesota (Minneapolis Pediatrics Hospital). A 17-year-old young man who had Crohn’s disease really turned this into my lifelong passion. The patient confided in me that when he was 11, he had an ileostomy. He wore an ileostomy bag for 6 years and kept it hidden from all his friends. He was petrified of their knowing. And he told me at the age of 17 that if he knew how hard it was going to be to keep that secret, he would’ve preferred to have died rather than have the ileostomy. That got me thinking a lot about Crohn’s disease, and certainly how it affects patients. It became a very motivating thing for me to be involved in something that could potentially prevent this situation for others.

Today, we have much better treatment for Crohn’s than we did 30 years ago. So that’s all a good thing.

Q: Wellness and therapeutic diets are a specific interest of yours. Can you talk about this?

Dr. Ketover: We talk about things like Cheetos, Twinkies — those are not real foods. I do direct patients to ‘think’ when they go to the grocery store. All the good stuff is in the perimeter of the store. When you walk down the aisles, it’s all the processed food with added chemicals. It’s hard to point at specific things and say, “This is bad for you,” but we do know that we should eat real food as often as we can. And I think that will contribute to our knowledge and learning about the intestinal microbiome. Again, we’re really in the infancy of this, even though there are lots of probiotics and other products out there that claim to make you healthier. We don’t really know yet. And it’s going to take more time.

Q: What role does diet play in improving the intestinal microbiome?

Dr. Ketover: When you look at people who are healthy and who have low incidence of chronic diseases or inflammatory conditions, obesity, cancer, we’re starting to study their microbiome to see how it differs from people who have those illnesses and conditions and try to understand what the different constituents are of the microbiome. And then the big question is: Okay, so once we know that, how do we take ‘the unhealthy microbiome’ and change it to the ‘healthy microbiome’?

The only method we currently have is fecal transplant, which is used for Clostridioides difficile infection. And that’s just not a feasible way to change the microbiome for most people.

Some studies are going on with this. There have been laboratory studies in animals showing that fecal transplant can reverse obesity.

Q: Describe your biggest practice-related challenge and what you are doing to address it.

Dr. Ketover: The biggest challenge these days for medical practices is the relationship with the payer world and prior authorization. Unfortunately, we’ve seen the greatest impact of prior authorization in the Medicare Advantage programs. Payers receive money from the federal government on the premise that they can manage patients better than traditional Medicare can. That results in a tremendous amount of prior authorization.

I get particularly incensed when I see that a lot of payers are practicing medicine without a license. They’re not relying on the professionals who are actually in the exam room with patients, taking the history and doing the physical examination, to determine the appropriate course of diagnosis or therapy for a patient.

It comes around every January. We have patients who are stable on meds, then their insurance gets renewed and the pharmacy formulary changes. Patients stable on various therapies are either kicked off them, or we have to go through the prior authorization process again for the same patient for the umpteenth time to keep them on a stable therapy.

How do I address that? It’s in conversations with payers and policymakers. There’s a lot going on in Washington about prior authorization. I’m not sure that non-practitioners fully feel the pain that it delivers to patients.

Q: What teacher or mentor had the greatest impact on you?

Dr. Ketover: Phillip M. Kibort, MD, the pediatric physician I worked with as a medical student, who really turned me on to GI medicine. We worked together on several patients, and I developed an appreciation for the breadth and depth of GI-related abnormalities, diseases, and therapies. And I got really excited by the spectrum of opportunities I would have as a physician to help treat patients with GI illness.

Q: What would you do differently if you had a chance?

Dr. Ketover: I’d travel more, both for work and for pleasure. I really enjoy the relationships I’ve created with lots of other gastroenterologists, as well as with non-physicians, around policy issues. I’m involved in a couple of national organizations that talk to politicians on Capitol Hill and at state houses about patient advocacy. I would have done more of that earlier in my career if I could have.

Q: What do you like to do in your free time?

Dr. Ketover: I like to run, bike, walk. I like being outside as much as possible and enjoy being active.

Lightning Round

Texting or talking?

Texting, very efficient

Favorite city in U.S. besides the one you live in?

Waikiki, Honolulu

Favorite breakfast?

Pancakes

Place you most want to travel to?

Australia and New Zealand

Favorite junk food?

Pretzels and ice cream

Favorite season?

Summer

How many cups of coffee do you drink per day?

2-3

If you weren’t a gastroenterologist, what would you be?

Public policy writer

Who inspires you?

My wife

Best Halloween costume you ever wore?

Cowboy

Favorite type of music?

Classic rock

Favorite movie genre?

Science fiction, space exploration

Cat person or dog person?

Dog

Favorite sport?

Football — to watch

What song do you have to sing along with when you hear it?

Bohemian Rhapsody

Introvert or extrovert?

Introvert

Optimist or pessimist?

Optimist

What exactly is a healthy diet?

Scott Ketover, MD, AGAF, FASGE, will be the first to admit that’s not an easy question to answer. “As much research and information as we have, we don’t really know what a healthy diet is,” said Dr. Ketover, president and CEO of MNGI Digestive Health in Minneapolis, Minnesota. He was recognized by AGA this year with the Distinguished Clinician Award in Private Practice.

When patients ask questions about a healthy diet, Dr. Ketover responds with a dose of common sense: “If it’s food that didn’t exist in the year 1900, don’t eat it.” Your grandmother’s apple pie is fine in moderation, he said, but the apple pie you get at the McDonald’s drive-through could sit on your shelf for 6 months and look the same.

That is not something you should eat, he emphasized.

“I really do believe though, that what crosses our lips and gets into our GI tract really underlies our entire health. It’s just that we don’t have enough information yet to know how we can coach people in telling them: eat this, not that,” he added.

In an interview, Dr. Ketover spoke more about the link between the gut microbiome and health, and the young patient who inspired him to become a GI physician.
