Alcohol dependence in teens tied to subsequent depression

TOPLINE

Alcohol dependence, but not consumption, at age 18 years increases the risk for depression at age 24 years.

METHODOLOGY

- The study included 3,902 mostly White adolescents, about 58% female, born in England from April 1991 to December 1992, who were part of the Avon Longitudinal Study of Parents and Children (ALSPAC) that examined genetic and environmental determinants of health and development.

- Participants completed the self-report Alcohol Use Disorders Identification Test (AUDIT) between the ages of 16 and 23 years, a period when average alcohol use increases rapidly.

- The primary outcome was probability for depression at age 24 years, using the Clinical Interview Schedule Revised (CIS-R), a self-administered computerized clinical assessment of common mental disorder symptoms during the past week.

- Researchers assessed frequency and quantity of alcohol consumption as well as alcohol dependence.

- Confounders included sex, housing type, maternal education and depressive symptoms, parents’ alcohol use, conduct problems at age 4 years, being bullied, and smoking status.

TAKEAWAYS

- After adjustments, alcohol dependence at age 18 years was associated with depression at age 24 years (unstandardized probit coefficient 0.13; 95% confidence interval, 0.02-0.25; P = .019).

- The relationship appeared to persist for alcohol dependence at each age of the growth curve (17-22 years).

- There was no evidence that frequency or quantity of alcohol consumption at age 18 was significantly associated with depression at age 24, suggesting these factors may not increase the risk for later depression unless there are also features of dependency.

IN PRACTICE

“Our findings suggest that preventing alcohol dependence during adolescence, or treating it early, could reduce the risk of depression,” which could have important public health implications, the researchers write.

STUDY DETAILS

The study was carried out by researchers at the University of Bristol; University College London; Critical Thinking Unit, Public Health Directorate, NHS; and University of Nottingham, all in the United Kingdom. It was published online in Lancet Psychiatry.

LIMITATIONS

There was substantial attrition in the ALSPAC cohort from birth to age 24 years. The sample was recruited from one U.K. region and most participants were White. Measures of alcohol consumption and dependence excluded some features of abuse. And as this is an observational study, the possibility of residual confounding can’t be excluded.

DISCLOSURES

The investigators report no relevant disclosures. The study received support from the UK Medical Research Council and Alcohol Research UK.

A version of this article first appeared on Medscape.com.

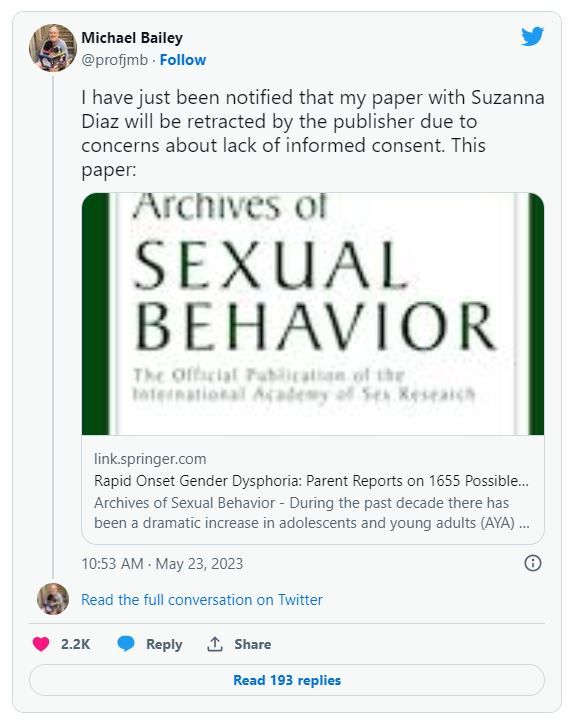

After backlash, publisher to retract article that surveyed parents of children with gender dysphoria, says coauthor

The move is “due to concerns about lack of informed consent,” according to tweets by one of the paper’s authors.

The article, “Rapid Onset Gender Dysphoria: Parent Reports on 1655 Possible Cases,” was published in March in the Archives of Sexual Behavior. It has not been cited in the scientific literature, according to Clarivate’s Web of Science, but Altmetric, which tracks the online attention papers receive, ranks the article in the top 1% of all articles of a similar age.

Rapid Onset Gender Dysphoria (ROGD) is, the article stated, a “controversial theory” that “common cultural beliefs, values, and preoccupations cause some adolescents (especially female adolescents) to attribute their social problems, feelings, and mental health issues to gender dysphoria,” and that “youth with ROGD falsely believe that they are transgender,” in part due to social influences.

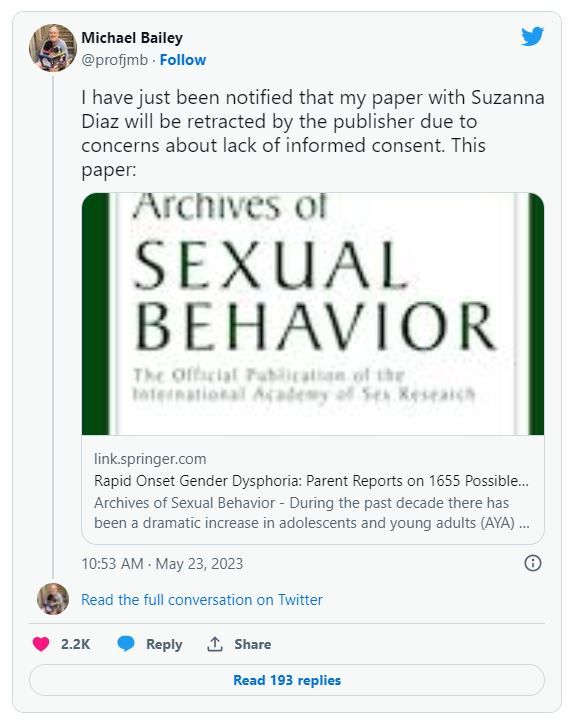

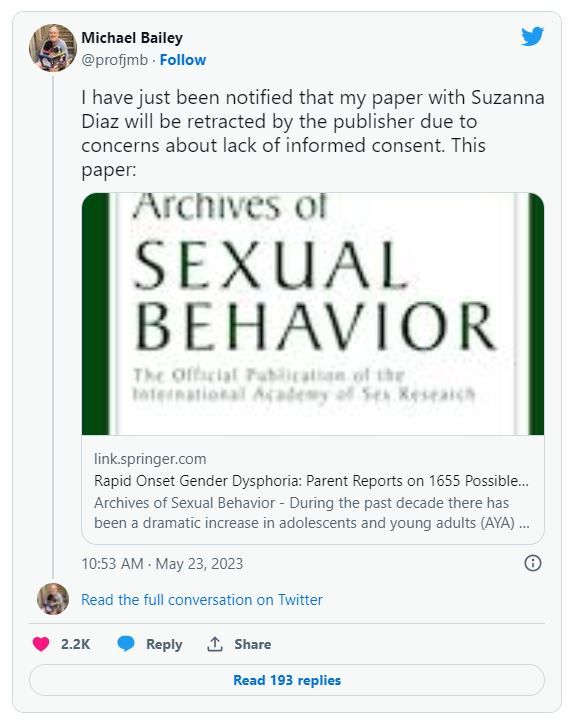

Michael Bailey, a psychology professor at Northwestern University in Evanston, Ill., and the paper’s corresponding author, tweeted:

Bailey told Retraction Watch that he would “respond when [he] can” to our request for comment, following “new developments on our end.” Neither Springer Nature nor Kenneth Zucker, editor in chief of Archives of Sexual Behavior, has responded to similar requests.

The paper reported the results of a survey of parents who contacted the website ParentsofROGDKids.com, with which the first author is affiliated. According to the abstract, the authors found:

“Pre-existing mental health issues were common, and youths with these issues were more likely than those without them to have socially and medically transitioned. Parents reported that they had often felt pressured by clinicians to affirm their AYA [adolescent and young adult] child’s new gender and support their transition. According to the parents, AYA children’s mental health deteriorated considerably after social transition.”

Soon after publication, the paper attracted criticism that its method of gathering study participants was biased, and that the authors ignored information that didn’t support the theory of ROGD.

Archives of Sexual Behavior is the official publication of the International Academy of Sex Research, which tweeted on April 19:

The episode prompted a May 5 “Open Letter in Support of Dr. Kenneth Zucker and the Need to Promote Robust Scientific Debate” from the Foundation Against Intolerance and Racism that has now been signed by nearly 2000 people.

On May 10, the following publisher’s note was added to the article:

“readers are alerted that concerns have been raised regarding methodology as described in this article. The publisher is currently investigating this matter and a further response will follow the conclusion of this investigation.”

Six days later, the publisher removed the article’s supplementary information “due to a lack of documented consent by study participants.”

The story may feel familiar to readers who recall what happened to another paper in 2018. In that paper, Brown University’s Lisa Littman coined the term ROGD. Following a backlash, Brown took down a press release touting the results, and the paper was eventually republished with corrections.

Bailey has been accused of mistreating transgender research participants, but an investigation by bioethicist Alice Dreger found that of the many accusations, “almost none appear to have been legitimate.”

In a post on UnHerd earlier this month, Bailey responded to the reported concerns that the study lacked approval by an Institutional Review Board (IRB) and that the way the participants were recruited biased the results.

IRB approval was not necessary, Bailey wrote, because Suzanna Diaz, the first author who collected the data, was not affiliated with an institution that required it. “Suzanna Diaz” is a pseudonym for “the mother of a gender dysphoric child she believes has ROGD” who wishes to remain anonymous for the sake of her family, Bailey wrote.

The paper included the following statement about its ethical approval:

“The first author and creator of the survey is not affiliated with any university or hospital. Thus, she did not seek approval from an IRB. After seeing a presentation of preliminary survey results by the first author, the second author suggested the data to be analyzed and submitted as an academic article (he was not involved in collecting the data). The second author consulted with his university’s IRB, who declined to certify the study because data were already collected. However, they advised that publishing the results was likely ethical provided data were deidentified. Editor’s note: After I reviewed the manuscript, I concluded that its publication is ethically appropriate, consistent with Springer policy.”

In his UnHerd post, Bailey quoted from the journal’s submission guidelines:

“If a study has not been granted ethics committee approval prior to commencing, retrospective ethics approval usually cannot be obtained and it may not be possible to consider the manuscript for peer review. The decision on whether to proceed to peer review in such cases is at the Editor’s discretion.”

“Regarding the methodological limitations of the study, these were addressed forthrightly and thoroughly in our article,” Bailey wrote.

Adam Marcus, a cofounder of Retraction Watch, is an editor at this news organization.

A version of this article first appeared on RetractionWatch.com.

Cognitive decline risk in adult childhood cancer survivors

Among more than 2,300 adult survivors of childhood cancer and their siblings, who served as controls, new-onset memory impairment emerged more often in survivors decades later.

The increased risk was associated with the cancer treatment that was provided as well as modifiable health behaviors and chronic health conditions.

Even 35 years after being diagnosed, cancer survivors who never received chemotherapies or radiation therapies known to damage the brain reported far greater memory impairment than did their siblings, first author Nicholas Phillips, MD, told this news organization.

What the findings suggest is that “we need to educate oncologists and primary care providers on the risks our survivors face long after completion of therapy,” said Dr. Phillips, of the epidemiology and cancer control department at St. Jude Children’s Research Hospital, Memphis, Tenn.

The study was published online in JAMA Network Open.

Cancer survivors face an elevated risk for severe neurocognitive effects that can emerge 5-10 years following their diagnosis and treatment. However, it’s unclear whether new-onset neurocognitive problems can still develop a decade or more following diagnosis.

Over a long-term follow-up, Dr. Phillips and colleagues explored this question in 2,375 adult survivors of childhood cancer from the Childhood Cancer Survivor Study and 232 of their siblings.

Among the cancer cohort, 1,316 patients were survivors of acute lymphoblastic leukemia (ALL), 488 were survivors of central nervous system (CNS) tumors, and 571 had survived Hodgkin lymphoma.

The researchers determined the prevalence of new-onset neurocognitive impairment between baseline (23 years after diagnosis) and follow-up (35 years after diagnosis). New-onset neurocognitive impairment – present at follow-up but not at baseline – was defined as having a score in the worst 10% of the sibling cohort.

A higher proportion of survivors had new-onset memory impairment at follow-up compared with siblings. Specifically, about 8% of siblings had new-onset memory trouble, compared with 14% of ALL survivors treated with chemotherapy only, 26% of ALL survivors treated with cranial radiation, 35% of CNS tumor survivors, and 17% of Hodgkin lymphoma survivors.

New-onset memory impairment was associated with cranial radiation among CNS tumor survivors (relative risk [RR], 1.97) and alkylator chemotherapy at or above 8,000 mg/m² among survivors of ALL who were treated without cranial radiation (RR, 2.80). The authors also found that smoking, low educational attainment, and low physical activity were associated with an elevated risk for new-onset memory impairment.

Dr. Phillips noted that current guidelines emphasize the importance of short-term monitoring of a survivor’s neurocognitive status on the basis of that person’s chemotherapy and radiation exposures.

However, “our study suggests that all survivors, regardless of their therapy, should be screened regularly for new-onset neurocognitive problems. And this screening should be done regularly for decades after diagnosis,” he said in an interview.

Dr. Phillips also noted the importance of communicating lifestyle modifications, such as not smoking and maintaining an active lifestyle.

“We need to start early and use the power of repetition when communicating with our survivors and their families,” Dr. Phillips said. “When our families and survivors hear the word ‘exercise,’ they think of gym memberships, lifting weights, and running on treadmills. But what we really want our survivors to do is stay active.”

What this means is engaging for about 2.5 hours a week in a range of activities, such as ballet, basketball, volleyball, bicycling, or swimming.

“And if our kids want to quit after 3 months, let them know that this is okay. They just need to replace that activity with another activity,” said Dr. Phillips. “We want them to find a fun hobby that they will enjoy that will keep them active.”

The study was supported by the National Cancer Institute. Dr. Phillips has disclosed no relevant financial relationships.

A version of this article first appeared on Medscape.com.

Among more than 2,300 adult survivors of childhood cancer and their siblings, who served as controls, new-onset memory impairment emerged more often in survivors decades later.

The increased risk was associated with the cancer treatment that was provided as well as modifiable health behaviors and chronic health conditions.

Even 35 years after being diagnosed, cancer survivors who never received chemotherapies or radiation therapies known to damage the brain reported far greater memory impairment than did their siblings, first author Nicholas Phillips, MD, told this news organization.

What the findings suggest is that “we need to educate oncologists and primary care providers on the risks our survivors face long after completion of therapy,” said Dr. Phillips, of the epidemiology and cancer control department at St. Jude Children’s Research Hospital, Memphis, Tenn.

The study was published online in JAMA Network Open.

Cancer survivors face an elevated risk for severe neurocognitive effects that can emerge 5-10 years following their diagnosis and treatment. However, it’s unclear whether new-onset neurocognitive problems can still develop a decade or more following diagnosis.

Over a long-term follow-up, Dr. Phillips and colleagues explored this question in 2,375 adult survivors of childhood cancer from the Childhood Cancer Survivor Study and 232 of their siblings.

Among the cancer cohort, 1,316 patients were survivors of acute lymphoblastic leukemia (ALL), 488 were survivors of central nervous system (CNS) tumors, and 571 had survived Hodgkin lymphoma.

The researchers determined the prevalence of new-onset neurocognitive impairment between baseline (23 years after diagnosis) and follow-up (35 years after diagnosis). New-onset neurocognitive impairment – present at follow-up but not at baseline – was defined as having a score in the worst 10% of the sibling cohort.

A higher proportion of survivors had new-onset memory impairment at follow-up compared with siblings. Specifically, about 8% of siblings had new-onset memory trouble, compared with 14% of ALL survivors treated with chemotherapy only, 26% of ALL survivors treated with cranial radiation, 35% of CNS tumor survivors, and 17% of Hodgkin lymphoma survivors.

New-onset memory impairment was associated with cranial radiation among CNS tumor survivors (relative risk [RR], 1.97) and alkylator chemotherapy at or above 8,000 mg/m2 among survivors of ALL who were treated without cranial radiation (RR, 2.80). The authors also found that smoking, low educational attainment, and low physical activity were associated with an elevated risk for new-onset memory impairment.

Dr. Phillips noted that current guidelines emphasize the importance of short-term monitoring of a survivor’s neurocognitive status on the basis of that person’s chemotherapy and radiation exposures.

However, “our study suggests that all survivors, regardless of their therapy, should be screened regularly for new-onset neurocognitive problems. And this screening should be done regularly for decades after diagnosis,” he said in an interview.

Dr. Phillips also noted the importance of communicating lifestyle modifications, such as not smoking and maintaining an active lifestyle.

“We need to start early and use the power of repetition when communicating with our survivors and their families,” Dr. Phillips said. “When our families and survivors hear the word ‘exercise,’ they think of gym memberships, lifting weights, and running on treadmills. But what we really want our survivors to do is stay active.”

That means spending about 2.5 hours a week on a range of activities, such as ballet, basketball, volleyball, bicycling, or swimming.

“And if our kids want to quit after 3 months, let them know that this is okay. They just need to replace that activity with another activity,” said Dr. Phillips. “We want them to find a fun hobby that they will enjoy that will keep them active.”

The study was supported by the National Cancer Institute. Dr. Phillips has disclosed no relevant financial relationships.

A version of this article first appeared on Medscape.com.

FROM JAMA NETWORK OPEN

Is ChatGPT a friend or foe of medical publishing?

AI-assisted tools such as ChatGPT should not be listed as authors, and researchers must denote how AI-assisted technologies were used, according to the International Committee of Medical Journal Editors (ICMJE).

These new guidelines are the latest effort by medical journals to define policies for using large language models (LLMs) in scientific publication. While these AI-assisted tools can help with tasks such as writing, analyzing data, catching mistakes, and much more, they are also prone to errors, noted Casey Greene, PhD, a professor of biomedical informatics at the University of Colorado at Denver, Aurora. It is also not totally clear how information is stored and processed in these kinds of tools, and who has access to that information, he noted.

At the same time, experts argue that these AI tools could have a positive impact on the field by limiting some of the linguistic disparities in scientific publishing as well as alleviating the burden of some monotonous or mechanical tasks that come along with manuscript writing.

What experts can agree on, though, is that the use of AI tools is here to stay. “This is going to become a common tool,” Dr. Greene said. “I don’t think there’s a way out of that at this point.”

A change in medical publishing

OpenAI released ChatGPT in November 2022. In its own words, ChatGPT is “a deep learning model that has been trained on a massive amount of text data to understand and generate humanlike text.” Enter a question or a prompt, and it will respond. For example, when asked how the AI tool can be used in scientific publishing, ChatGPT responded:

“ChatGPT can aid scientific publishing by generating ideas, clarifying concepts, conducting preliminary literature reviews, providing proofreading and editing suggestions, and assisting with outlining and organizing scientific papers. However, it should be used as a supportive tool alongside domain expertise and validation from trusted scientific sources.”

Just a few months after ChatGPT became available, researchers began using this tool in their own work. One individual, Som Biswas, MD, a radiologist at the University of Tennessee Health Science Center in Memphis, reportedly used ChatGPT to author 16 scientific articles in just 4 months, according to the Daily Beast. Five of these articles have been published in four different journals. Dr. Biswas declined to be interviewed for this article.

There were also reports of papers with ChatGPT as one of the listed authors, which sparked backlash. In response, JAMA, Nature, and Science all published editorials in January outlining their policies for using ChatGPT and other large language models in the scientific authoring process. Editors from the journals of the American College of Cardiology and the American College of Rheumatology also updated their policies to reflect the influence of AI authoring tools.

The consensus is that AI has no place on the author byline.

“We think that’s not appropriate, because coauthorship means that you are taking responsibility for the analysis and the generation of data that are included in a manuscript. A machine that is dictated by AI can’t take responsibility,” said Daniel Solomon, MD, MPH, a rheumatologist at Brigham and Women’s Hospital, Boston, and the editor in chief of the ACR journal Arthritis & Rheumatology.

Issues with AI

One of the big concerns around using AI in writing is that it can generate text that seems plausible but is untrue or not supported by data. For example, Dr. Greene and colleague Milton Pividori, PhD, also of the University of Colorado, were writing a journal article about new software they developed that uses a large language model to revise scientific manuscripts.

“We used the same software to revise that article and at one point, it added a line that noted that the large language model had been fine-tuned on a data set of manuscripts from within the same field. This makes a lot of sense, and is absolutely something you could do, but was not something that we did,” Dr. Greene said. “Without a really careful review of the content, it becomes possible to invent things that were not actually done.”

In another case, ChatGPT falsely stated that a prominent law professor had been accused of sexual assault, citing a Washington Post article that did not exist.

“We live in a society where we are extremely concerned about fake news,” Dr. Pividori added, “and [these kinds of errors] could certainly exacerbate that in the scientific community, which is very concerning because science informs public policy.”

Another issue is the lack of transparency around how large language models like ChatGPT process and store data used to make queries.

“We have no idea how they are recording all the prompts and things that we input into ChatGPT and their systems,” Dr. Pividori said.

OpenAI recently addressed some privacy concerns by allowing users to turn off their chat history with the AI chatbot, so conversations cannot be used to train or improve the company’s models. But Dr. Greene noted that the terms of service “still remain pretty nebulous.”

Dr. Solomon is also concerned about researchers using these AI tools in authoring without knowing how they work. “The thing we are really concerned about is the fact that [LLMs] are a bit of a black box – people don’t really understand the methodologies,” he said.

A positive tool?

Despite these concerns, many think that these types of AI-assisted tools could have a positive impact on medical publishing, particularly for researchers whose first language is not English, noted Catherine Gao, MD, a pulmonary and critical care instructor at Northwestern University, Chicago. She recently led research comparing scientific abstracts written by ChatGPT with real abstracts and discovered that reviewers found it “surprisingly difficult” to differentiate the two.

“The majority of research is published in English,” she said in an email. “Responsible use of LLMs can potentially reduce the burden of writing for busy scientists and improve equity for those who are not native English speakers.”

Dr. Pividori agreed, adding that as a non-native English speaker, he spends much more time working on the structure and grammar of sentences when authoring a manuscript, compared with people who speak English as a first language. He noted that these tools can also be used to automate some of the more monotonous tasks that come along with writing manuscripts and allow researchers to focus on the more creative aspects.

In the future, “I want to focus more on the things that only a human can do and let these tools do all the rest of it,” he said.

New rules

However individual researchers feel about LLMs, they agree that these AI tools are here to stay.

“I think that we should anticipate that they will become part of the medical research establishment over time, when we figure out how to use them appropriately,” Dr. Solomon said.

While the debate over how best to use AI in medical publications will continue, journal editors agree that all authors of a manuscript are solely responsible for the content of articles that used AI-assisted technology.

“Authors should carefully review and edit the result because AI can generate authoritative-sounding output that can be incorrect, incomplete, or biased,” the ICMJE guidelines state. “Authors should be able to assert that there is no plagiarism in their paper, including in text and images produced by the AI.” This includes appropriate attribution of all cited materials.

The committee also recommends that authors write in both the cover letter and submitted work how AI was used in the manuscript writing process. Recently updated guidelines from the World Association of Medical Editors recommend that all prompts used to generate new text or analytical work should be provided in submitted work. Dr. Greene also noted that if authors used an AI tool to revise their work, they can include a version of the manuscript untouched by LLMs.

It is similar to a preprint, he said, but rather than publishing a version of a paper prior to peer review, someone is showing a version of a manuscript before it was reviewed and revised by AI. “This type of practice could be a path that lets us benefit from these models,” he said, “without having the drawbacks that many are concerned about.”

Dr. Solomon has financial relationships with AbbVie, Amgen, Janssen, CorEvitas, and Moderna. Both Dr. Greene and Dr. Pividori are inventors on U.S. Provisional Patent Application No. 63/486,706, which the University of Colorado has filed with the U.S. Patent and Trademark Office for the “Publishing Infrastructure For AI-Assisted Academic Authoring” invention. Dr. Greene and Dr. Pividori also received a grant from the Alfred P. Sloan Foundation to improve their AI-based manuscript revision tool. Dr. Gao reported no relevant financial relationships.

A version of this article originally appeared on Medscape.com.

Survival similar with hearts donated after circulatory or brain death

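Transplantation of hearts donated after circulatory death (DCD) resulted in recipient survival similar to that seen with hearts donated after brain death (DBD) in the first randomized trial comparing the two approaches.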

“This randomized trial showing recipient survival with DCD to be similar to DBD should lead to DCD becoming the standard of care alongside DBD,” lead author Jacob Schroder, MD, surgical director, heart transplantation program, Duke University Medical Center, Durham, N.C., said in an interview.

“This should enable many more heart transplants to take place and for us to be able to cast the net further and wider for donors,” he said.

The trial was published online in the New England Journal of Medicine.

Dr. Schroder estimated that only around one-fifth of the 120 U.S. heart transplant centers currently carry out DCD transplants, but he is hopeful that the publication of this study will encourage more transplant centers to do these DCD procedures.

“The problem is there are many low-volume heart transplant centers, which may not be keen to do DCD transplants as they are a bit more complicated and expensive than DBD heart transplants,” he said. “But we need to look at the big picture of how many lives can be saved by increasing the number of heart transplant procedures and the money saved by getting more patients off the waiting list.”

The authors explain that heart transplantation has traditionally been limited to the use of hearts obtained from donors after brain death, which allows in situ assessment of cardiac function and of the suitability for transplantation of the donor allograft before surgical procurement.

But because the need for heart transplants far exceeds the availability of suitable donors, the use of DCD hearts has been investigated and this approach is now being pursued in many countries. In the DCD approach, the heart will have stopped beating in the donor, and perfusion techniques are used to restart the organ.

There are two different approaches to restarting the heart in DCD. The first approach involves the heart being removed from the donor and reanimated, preserved, assessed, and transported with the use of a portable extracorporeal perfusion and preservation system (Organ Care System, TransMedics). The second involves restarting the heart in the donor’s body for evaluation before removal and transportation under the traditional cold storage method used for donations after brain death.

The current trial was designed to compare clinical outcomes in patients who received a heart from a circulatory-death donor, using the portable extracorporeal perfusion method for DCD transplantation, with outcomes from the traditional method of heart transplantation using organs donated after brain death.

For the randomized, noninferiority trial, adult candidates for heart transplantation were assigned to receive a heart after the circulatory death of the donor or a heart from a donor after brain death if that heart was available first (circulatory-death group) or to receive only a heart that had been preserved with the use of traditional cold storage after the brain death of the donor (brain-death group).

The primary end point was the risk-adjusted survival at 6 months in the as-treated circulatory-death group, as compared with the brain-death group. The primary safety end point was serious adverse events associated with the heart graft at 30 days after transplantation.

A total of 180 patients underwent transplantation, 90 of whom received a heart donated after circulatory death and 90 of whom received a heart donated after brain death. A total of 166 transplant recipients were included in the as-treated primary analysis (80 who received a heart from a circulatory-death donor and 86 who received a heart from a brain-death donor).

The risk-adjusted 6-month survival in the as-treated population was 94% among recipients of a heart from a circulatory-death donor, as compared with 90% among recipients of a heart from a brain-death donor (P < .001 for noninferiority).

There were no substantial between-group differences in the mean per-patient number of serious adverse events associated with the heart graft at 30 days after transplantation.

Of 101 hearts from circulatory-death donors that were preserved with the use of the perfusion system, 90 were successfully transplanted according to the criteria for lactate trend and overall contractility of the donor heart, which resulted in an overall utilization percentage of 89%.

More patients who received a heart from a circulatory-death donor had moderate or severe primary graft dysfunction (22%) than those who received a heart from a brain-death donor (10%). However, graft failure that resulted in retransplantation occurred in two (2.3%) patients who received a heart from a brain-death donor versus zero patients who received a heart from a circulatory-death donor.

The researchers note that the higher incidence of primary graft dysfunction in the circulatory-death group is expected, given the period of warm ischemia that occurs in this approach. But they point out that this did not affect patient or graft survival at 30 days or 1 year.

“Primary graft dysfunction is when the heart doesn’t fully work immediately after transplant and some mechanical support is needed,” Dr. Schroder commented to this news organization. “This occurred more often in the DCD group, but this mechanical support is only temporary, and generally only needed for a day or two.

“It looks like it might take the heart a little longer to start fully functioning after DCD, but our results show this doesn’t seem to affect recipient survival.”

He added: “We’ve started to become more comfortable with DCD. Sometimes it may take a little longer to get the heart working properly on its own, but the rate of mechanical support is now much lower than when we first started doing these procedures. And cardiac MRI on the recipient patients before discharge have shown that the DCD hearts are not more damaged than those from DBD donors.”

The authors also report that six donor hearts in the DCD group had protocol deviations (a functional warm ischemic time greater than 30 minutes or continuously rising lactate levels), yet these hearts did not show primary graft dysfunction.

On this observation, Dr. Schroder said: “I think we need to do more work on understanding the ischemic time limits. The current 30 minutes time limit was estimated in animal studies. We need to look more closely at data from actual DCD transplants. While 30 minutes may be too long for a heart from an older donor, the heart from a younger donor may be fine for a longer period of ischemic time as it will be healthier.”

“Exciting” results

In an editorial, Nancy K. Sweitzer, MD, PhD, vice chair of clinical research, department of medicine, and director of clinical research, division of cardiology, Washington University in St. Louis, describes the results of the current study as “exciting,” adding that, “They clearly show the feasibility and safety of transplantation of hearts from circulatory-death donors.”

However, Dr. Sweitzer points out that the sickest patients in the study – those who were United Network for Organ Sharing (UNOS) status 1 and 2 – were more likely to receive a DBD heart and the more stable patients (UNOS 3-6) were more likely to receive a DCD heart.

“This imbalance undoubtedly contributed to the success of the trial in meeting its noninferiority end point. Whether transplantation of hearts from circulatory-death donors is truly safe in our sickest patients with heart failure is not clear,” she says.

However, she concludes, “Although caution and continuous evaluation of data are warranted, the increased use of hearts from circulatory-death donors appears to be safe in the hands of experienced transplantation teams and will launch an exciting phase of learning and improvement.”

“A safely expanded pool of heart donors has the potential to increase fairness and equity in heart transplantation, allowing more persons with heart failure to have access to this lifesaving therapy,” she adds. “Organ donors and transplantation teams will save increasing numbers of lives with this most precious gift.”

The current study was supported by TransMedics. Dr. Schroder reports no relevant financial relationships.

A version of this article first appeared on Medscape.com.
