Successful COVID-19 Surge Management With Monoclonal Antibody Infusion in Emergency Department Patients

From the Center for Artificial Intelligence in Diagnostic Medicine, University of California, Irvine, CA (Drs. Chow and Chang, Mazaya Soundara), University of California Irvine School of Medicine, Irvine, CA (Ruchi Desai), Division of Infectious Diseases, University of California, Irvine, CA (Dr. Gohil), and the Department of Medicine and Hospital Medicine Program, University of California, Irvine, CA (Dr. Amin).

Background: The COVID-19 pandemic has placed substantial strain on hospital resources and has been responsible for more than 733 000 deaths in the United States. The US Food and Drug Administration has granted emergency use authorization (EUA) for monoclonal antibody (mAb) therapy in the US for patients with early-stage high-risk COVID-19.

Methods: In this retrospective cohort study, we studied the emergency department (ED) during a massive COVID-19 surge in Orange County, California, from December 4, 2020, to January 29, 2021, as a potential setting for efficient mAb delivery by evaluating the impact of bamlanivimab use in high-risk COVID-19 patients. All patients included in this study had positive results on nucleic acid amplification detection from nasopharyngeal or throat swabs, presented with 1 or more mild or moderate symptoms, and met EUA criteria for mAb treatment. The primary outcome analyzed among this cohort of ED patients was overall improvement, measured by a composite of subsequent ED/hospital visits, inpatient hospitalization, and death related to COVID-19.

Results: We identified 1278 ED patients with COVID-19 not treated with bamlanivimab and 73 patients with COVID-19 treated with bamlanivimab during the treatment period. Of these patients, 239 control patients and 63 treatment patients met EUA criteria. Overall, 7.9% (5/63) of patients receiving bamlanivimab had a subsequent ED/hospital visit, hospitalization, or death compared with 19.2% (46/239) in the control group (P = .03).

Conclusion: Targeting ED patients for mAb treatment may be an effective strategy to prevent progression to severe COVID-19 illness and substantially reduce the composite end point of repeat ED visits, hospitalizations, and deaths, especially for individuals of underserved populations who may not have access to ambulatory care.

Keywords: COVID-19; mAb; bamlanivimab; surge management.

Since December 2019, the novel pathogen SARS-CoV-2 has spread rapidly, culminating in a pandemic that has caused more than 4.9 million deaths worldwide and claimed more than 733 000 lives in the United States.1 The scale of the COVID-19 pandemic has placed an immense strain on hospital resources, including personal protective equipment (PPE), beds, ventilators, and personnel.2,3 A previous analysis demonstrated that hospital capacity strain is associated with increased mortality and worsened health outcomes.4 A more recent analysis in light of the COVID-19 pandemic found that strains on critical care capacity were associated with increased COVID-19 intensive care unit (ICU) mortality.5 While more studies are needed to understand the association between hospital resources and COVID-19 mortality, efforts to decrease COVID-19 hospitalizations by early targeted treatment of patients in outpatient and emergency department (ED) settings may help to relieve the burden on hospital personnel and resources and decrease subsequent mortality.

Current therapeutic options focus on inpatient management of patients who progress to acute respiratory illness, while patients with mild presentations, even those at high risk for progression, are managed with outpatient monitoring. At the moment, only remdesivir, a viral RNA-dependent RNA polymerase inhibitor, has been approved by the US Food and Drug Administration (FDA) for treatment of hospitalized COVID-19 patients.6 However, in November 2020, the FDA granted emergency use authorization (EUA) for monoclonal antibodies (mAbs), as both monotherapy and combination therapy, in a broad range of early-stage, high-risk patients.7-9 Neutralizing mAbs include bamlanivimab (LY-CoV555), etesevimab (LY-CoV016), sotrovimab (VIR-7831), and casirivimab/imdevimab (REGN-COV2). These anti–spike protein antibodies prevent viral attachment to the human angiotensin-converting enzyme 2 receptor (hACE2) and subsequently prevent viral entry.10 mAb therapy has been shown to be effective in substantially reducing viral load, hospitalizations, and ED visits.11

Despite these promising results, uptake of mAb therapy has been slow, with more than 600 000 available doses remaining unused as of mid-January 2021, despite very high infection rates across the United States.12 In addition to the logistical challenges associated with intravenous (IV) therapy in the ambulatory setting, identifying, notifying, and scheduling appointments for ambulatory patients hamper efficient delivery to high-risk patients and limit access to underserved patients without primary care providers. For patients not treated in the ambulatory setting, the ED may serve as an ideal location for early implementation of mAb treatment in high-risk patients with mild to moderate COVID-19.

The University of California, Irvine (UCI) Medical Center is both the major academic medical center in Orange County, California, and the county's primary safety net hospital for vulnerable populations. During the surge period from December 2020 through January 2021, we were operating at over 100% capacity and had built an onsite mobile hospital to expand the number of beds available. Given the severity of the impact of COVID-19 on our resources, implementing a strategy to reduce hospital admissions, patient death, and subsequent ED visits was imperative. Our goal was to implement a strategy on the front end through the ED to optimize care for patients and reduce the strain on hospital resources.

We sought to study the ED during this massive surge as a potential setting for efficient mAb delivery by evaluating the impact of bamlanivimab use in high-risk COVID-19 patients.

Methods

We conducted a retrospective cohort study (approved by the UCI institutional review board) of sequential adult patients with COVID-19 who were evaluated in and discharged from the ED between December 4, 2020, and January 29, 2021. Patients who received bamlanivimab treatment (cases) were compared with a nontreatment group (control) of ED patients.

Using the UCI electronic medical record (EMR) system, we identified 1278 ED patients with COVID-19 not treated with bamlanivimab and 73 patients with COVID-19 treated with bamlanivimab during the months of December 2020 and January 2021. All patients included in this study met the EUA criteria for mAb therapy. According to the Centers for Disease Control and Prevention (CDC), during the period of this study, patients met EUA criteria if they had mild to moderate COVID-19, a positive direct SARS-CoV-2 viral test, and a high risk for progressing to severe COVID-19 or hospitalization.13 High risk for progressing to severe COVID-19 and/or hospitalization was defined as meeting at least 1 of the following criteria: a body mass index of 35 or higher, chronic kidney disease (CKD), diabetes, immunosuppressive disease, current immunosuppressive treatment, age 65 years or older, age 55 years or older with cardiovascular disease or hypertension, or chronic obstructive pulmonary disease (COPD)/other chronic respiratory diseases.13 All patients in the ED who met EUA criteria were offered mAb treatment; those who accepted the treatment were included in the treatment group, and those who refused were included in the control group.
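The eligibility logic described above can be sketched as a simple screening function. This is a minimal illustration only, not the study's actual screening tool: the `Patient` record, its field names, and both function names are hypothetical. The sketch also assumes the criteria list is read literally, so that COPD/other chronic respiratory disease counts as its own item rather than being tied to the age 55 condition; the source sentence allows either reading.

```python
from dataclasses import dataclass


@dataclass
class Patient:
    # Hypothetical record; field names are illustrative, not the study's EMR schema.
    age: int
    bmi: float
    ckd: bool = False
    diabetes: bool = False
    immunosuppressive_disease: bool = False
    on_immunosuppressive_treatment: bool = False
    cardiovascular_disease: bool = False
    hypertension: bool = False
    chronic_respiratory_disease: bool = False  # COPD or other chronic respiratory disease


def is_high_risk(p: Patient) -> bool:
    """Return True if at least 1 of the listed high-risk criteria is met."""
    return any([
        p.bmi >= 35,
        p.ckd,
        p.diabetes,
        p.immunosuppressive_disease,
        p.on_immunosuppressive_treatment,
        p.age >= 65,
        p.age >= 55 and (p.cardiovascular_disease or p.hypertension),
        # Assumption: treated as a standalone list item (literal reading of the text).
        p.chronic_respiratory_disease,
    ])


def meets_eua_criteria(p: Patient, mild_to_moderate: bool, positive_viral_test: bool) -> bool:
    """All three conditions named in the text must hold."""
    return mild_to_moderate and positive_viral_test and is_high_risk(p)
```

For example, under these assumptions a 56-year-old with hypertension qualifies as high risk, while a 40-year-old with hypertension and no other listed condition does not.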

All patients included in this study had positive results on nucleic acid amplification detection from nasopharyngeal or throat swabs and presented with 1 or more mild or moderate symptoms, defined as fever, cough, sore throat, malaise, headache, muscle pain, gastrointestinal symptoms, or shortness of breath. We excluded patients admitted to the hospital on that ED visit and those discharged to hospice. In addition, we excluded patients who presented 2 weeks after symptom onset and those who did not meet EUA criteria. Demographic data (age and gender) and comorbid conditions were obtained by EMR review. Comorbid conditions obtained included diabetes, hypertension, cardiovascular disease, coronary artery disease, CKD/end-stage renal disease (ESRD), COPD, obesity, and immunocompromised status.

Bamlanivimab infusion therapy in the ED followed CDC guidelines. Each patient received 700 mg of bamlanivimab diluted in 0.9% sodium chloride and administered as a single IV infusion. We established protocols to give patients IV immunoglobulin (IVIG) infusions directly in the ED.

The primary outcome analyzed among this cohort of ED patients was overall improvement, measured by a composite of subsequent ED/hospital visits, inpatient hospitalization, and death related to COVID-19 within 90 days of the initial ED visit. Each patient was counted only once. Data analysis and statistical tests were conducted using SPSS statistical software (SPSS Inc). Treatment effects were compared using the χ2 test, and a t test was used for continuous variables, including age. A P value of less than .05 (α level of 0.05) was considered significant.

Results

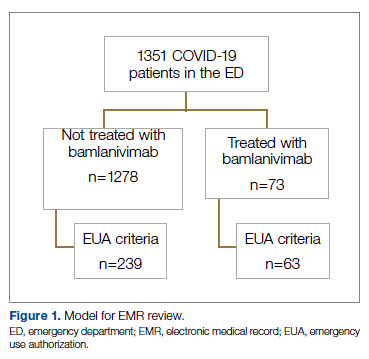

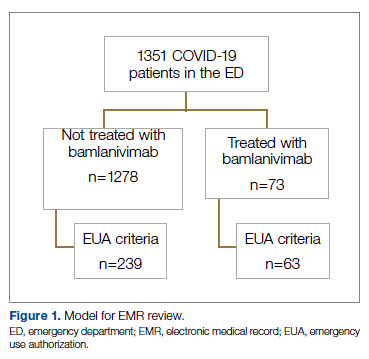

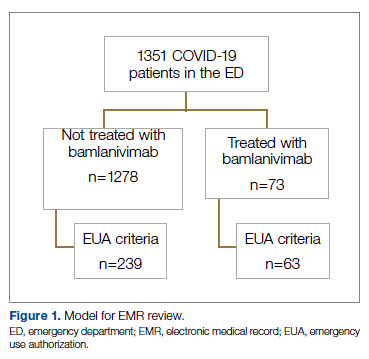

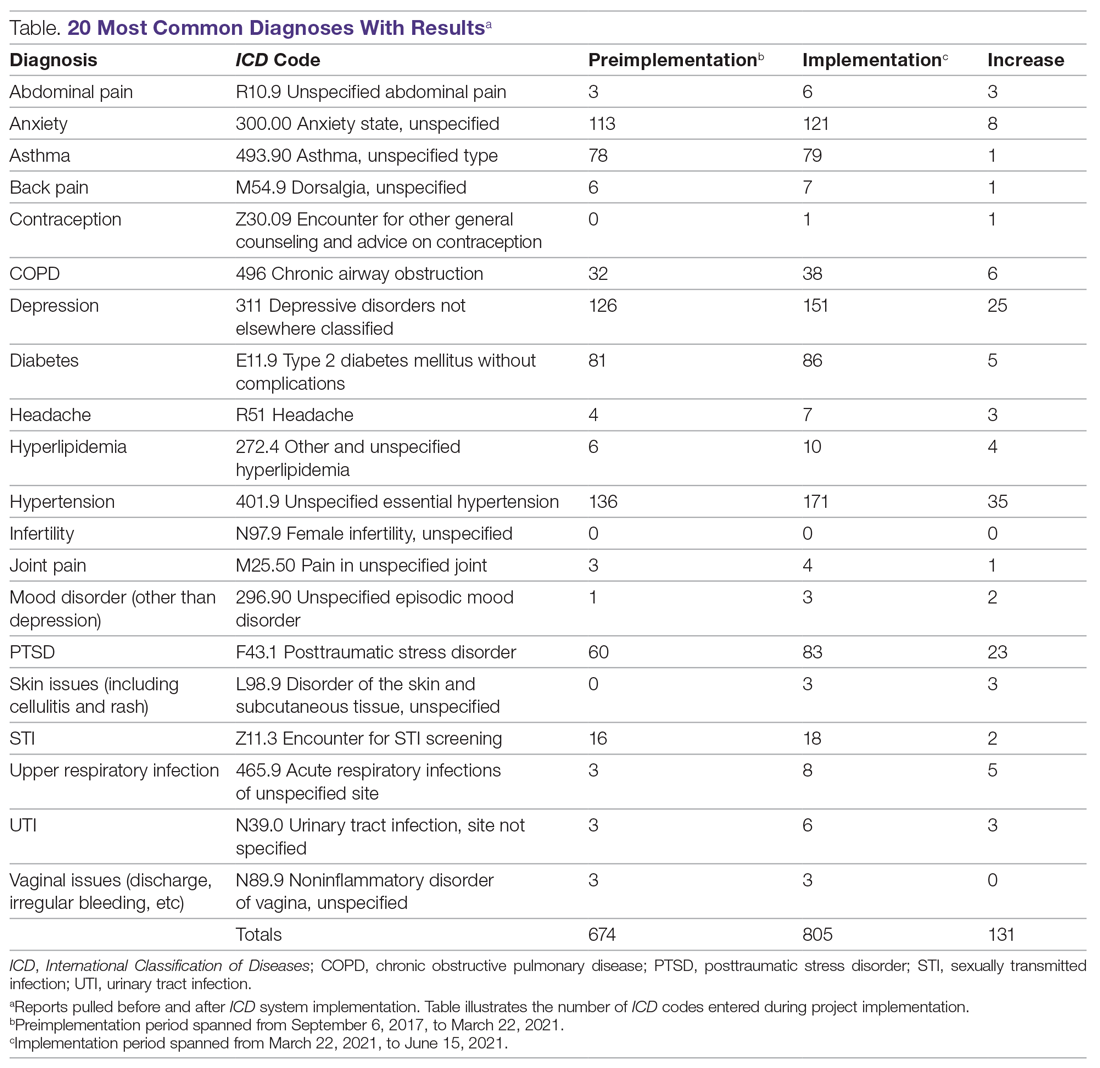

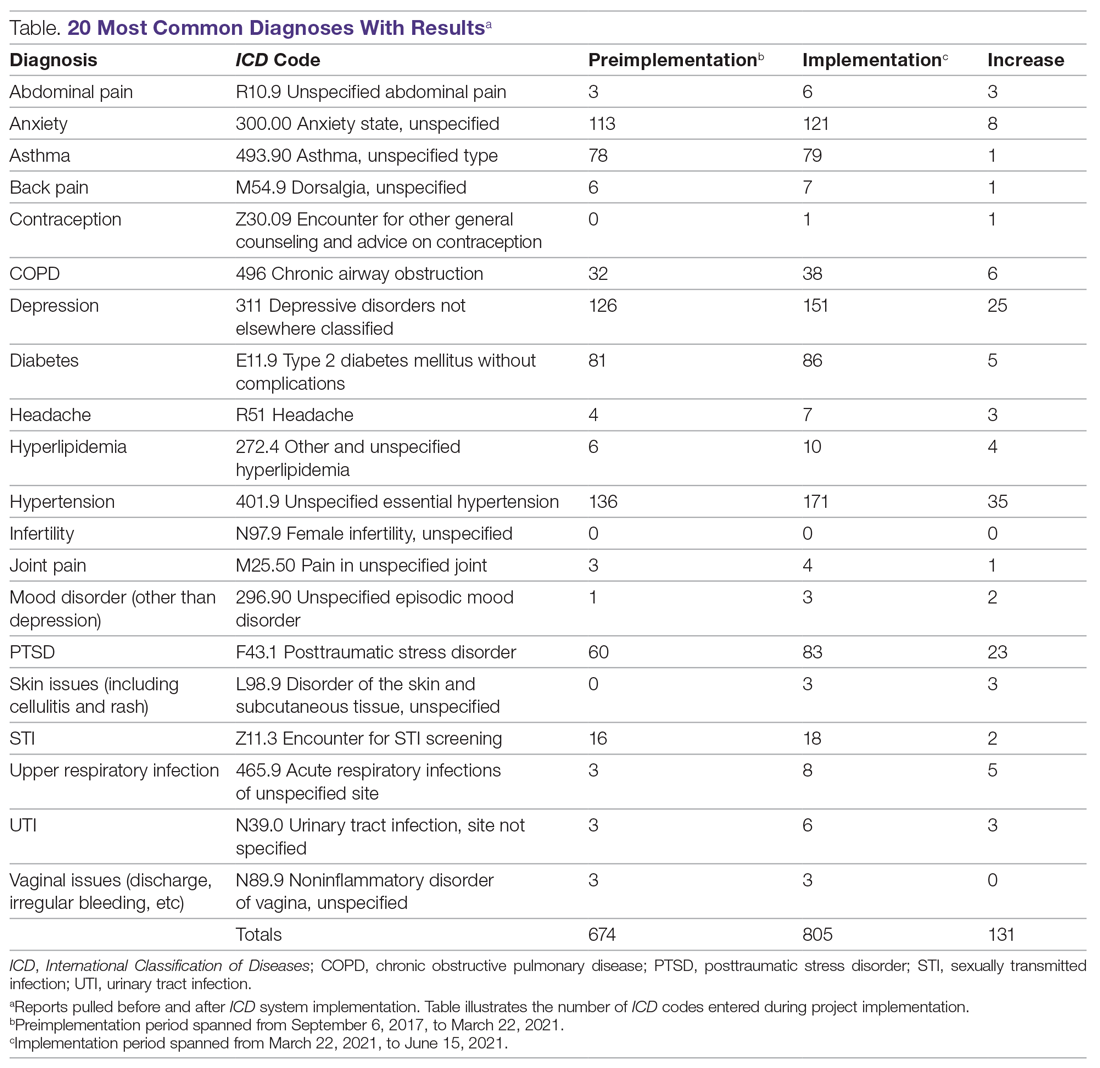

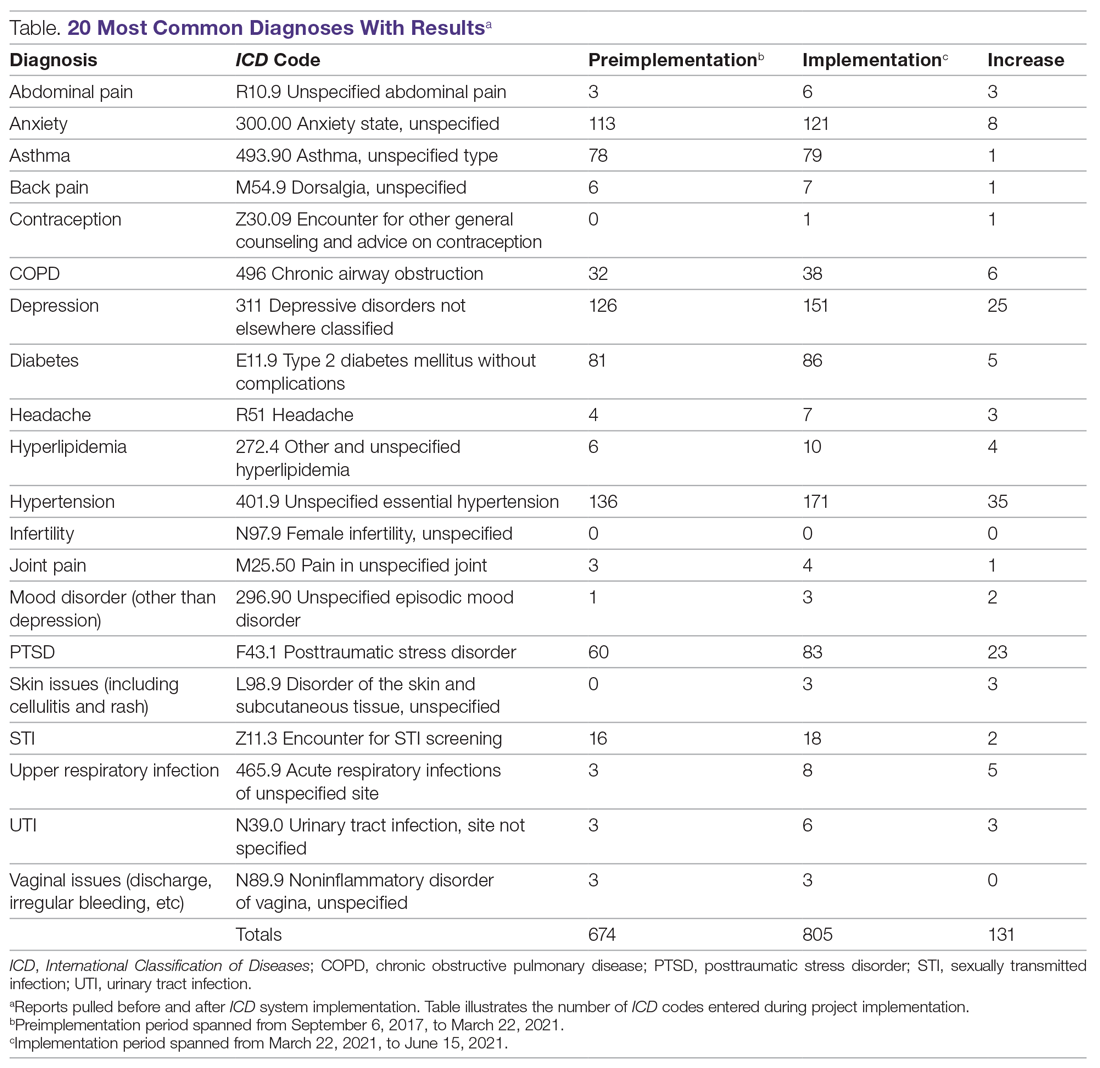

We screened a total of 1351 patients with COVID-19. Of these, 1278 patients did not receive treatment with bamlanivimab. Two hundred thirty-nine patients met inclusion criteria and were included in the control group. Seventy-three patients were treated with bamlanivimab in the ED; 63 of these patients met EUA criteria and comprised the treatment group (Figure 1).

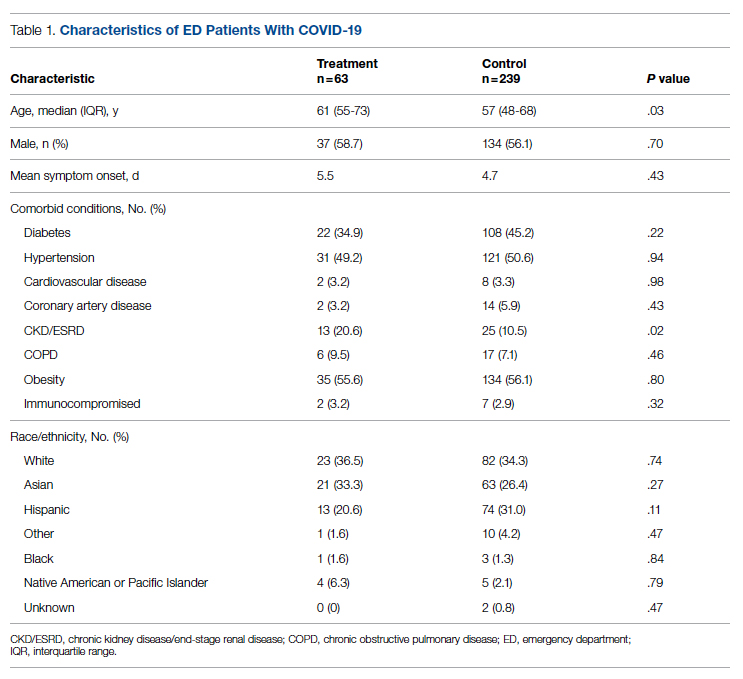

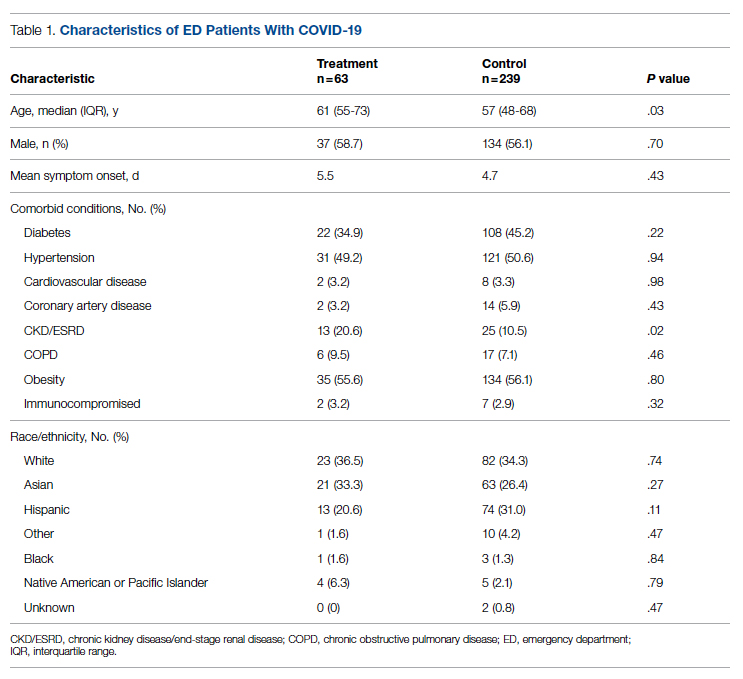

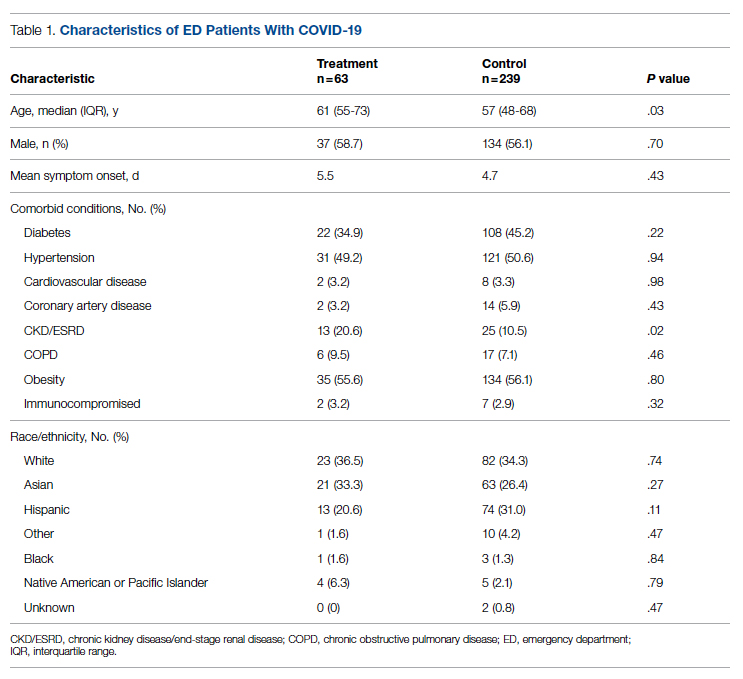

Demographic details and comorbidities of the study groups are provided in Table 1. The median age of the treatment group was 61 years (interquartile range [IQR], 55-73), while the median age of the control group was 57 years (IQR, 48-68); this difference was significant (P = .03). There was no significant difference in gender between the control and treatment groups (P = .07), and no significant difference was seen among racial and ethnic groups. The only comorbidity that differed significantly between the treatment and control groups was CKD/ESRD: among those treated with bamlanivimab, 20.6% (13/63) had CKD/ESRD compared with 10.5% (25/239) in the control group (P = .02).

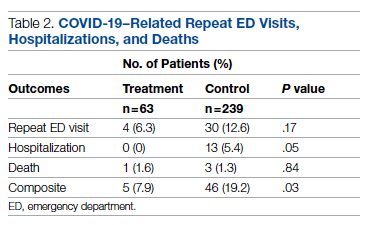

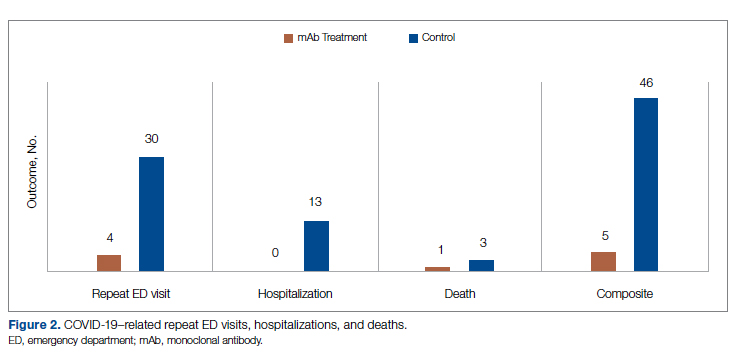

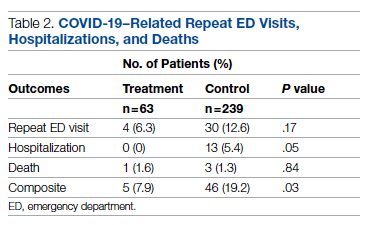

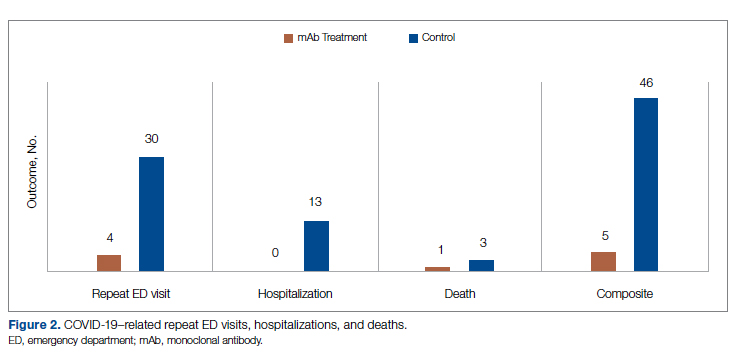

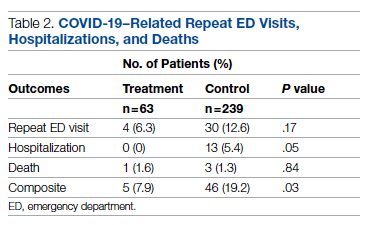

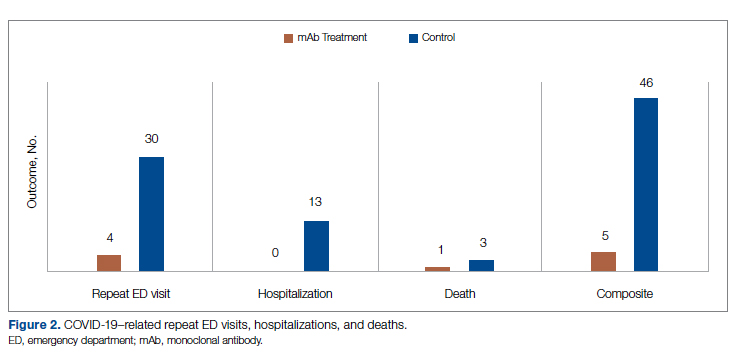

Overall, 7.9% (5/63) of patients receiving bamlanivimab had a subsequent ED/hospital visit, hospitalization, or death compared with 19.2% (46/239) in the control group (P = .03) (Table 2).
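As an illustration of how the comparison above can be checked, the following sketch computes a Pearson χ2 test on the reported 2x2 counts using only the Python standard library. It is not the authors' SPSS procedure: the function name is hypothetical, and the sketch assumes no continuity correction, which the text does not specify.

```python
import math


def pearson_chi2_2x2(events_a, total_a, events_b, total_b):
    """Pearson chi-square (no continuity correction) for a 2x2 table.

    Returns (statistic, p_value) for 1 degree of freedom.
    """
    a, b = events_a, total_a - events_a
    c, d = events_b, total_b - events_b
    n = total_a + total_b
    stat = n * (a * d - b * c) ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))
    # With 1 degree of freedom, the chi-square upper tail equals erfc(sqrt(x / 2)).
    p_value = math.erfc(math.sqrt(stat / 2))
    return stat, p_value


# Composite outcome counts as reported: 5/63 (treatment) vs 46/239 (control).
stat, p = pearson_chi2_2x2(5, 63, 46, 239)
print(f"treatment: {5 / 63:.1%}, control: {46 / 239:.1%}")
print(f"chi2 = {stat:.2f}, P = {p:.2f}")
```

Under these assumptions the statistic is about 4.54 with P of about .03, consistent with the value reported above.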

While the primary outcome of overall improvement was significantly different between the 2 groups, differences in the individual components (subsequent ED visits, hospitalizations, and death) were not significant. No treatment patients (0/63) were hospitalized, compared with 5.4% (13/239) in the control group (P = .05). In the treatment group, 6.3% (4/63) returned to the ED compared with 12.6% (30/239) of the control group (P = .17). Finally, 1.6% (1/63) of the treatment group had a subsequent death that was due to COVID-19 compared with 1.3% (3/239) in the control group (P = .84) (Figure 2).
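Because each patient was counted only once, the component counts should add up to the composite counts. The short sketch below checks that arithmetic and recomputes the reported percentages; the variable and function names are illustrative, and the counts are taken directly from the text.

```python
TREATMENT_N, CONTROL_N = 63, 239

# (events in treatment group, events in control group), as reported in the text.
components = {
    "hospitalization": (0, 13),
    "ED return": (4, 30),
    "death": (1, 3),
}
composite = (5, 46)


def pct(events, total):
    """Percentage rounded to 1 decimal place, matching the reporting style."""
    return round(100 * events / total, 1)


treated_sum = sum(t for t, _ in components.values())
control_sum = sum(c for _, c in components.values())

# Components are mutually exclusive here, so they should sum to the composite.
print(treated_sum == composite[0], control_sum == composite[1])

for name, (t, c) in components.items():
    print(f"{name}: {pct(t, TREATMENT_N)}% vs {pct(c, CONTROL_N)}%")
```

The sums match the composite counts (5 and 46), and the recomputed percentages agree with those reported above.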

Discussion

In this retrospective cohort study, we observed a significant difference in rates of COVID-19 patients requiring repeat ED visits, hospitalizations, and deaths among those who received bamlanivimab compared with those who did not. Our study focused on high-risk patients with mild or moderate COVID-19, a unique subset of individuals who would normally be followed and treated via outpatient monitoring. We propose that treating high-risk patients earlier in their disease process with mAb therapy can have a major impact on overall outcomes, as defined by decreased subsequent hospitalizations, ED visits, and death.

In contrast to clinical trials such as BLAZE-1 or REGN-COV2, every patient in this study had at least 1 high-risk characteristic.9,11 This may explain why a greater proportion of our patients in both the control and treatment groups had subsequent hospitalization, ED visits, and deaths. COVID-19 patients seen in the ED may be a uniquely self-selected population of individuals likely to benefit from mAb therapy, since they may be sicker, have more comorbidities, or have less readily available primary care access for testing and treatment.14

Despite conducting a thorough literature review, we were unable to find any similar studies describing the ED as an appropriate setting for mAb treatment in patients with COVID-19. Multiple studies have used outpatient clinics as a setting for mAb treatment, and 1 retrospective analysis found that neutralizing mAb treatment in COVID-19 patients in an outpatient setting reduced hospital utilization.15 However, many Americans do not have access to primary care, with 1 study finding that only 75% of Americans had an identified source of primary care in 2015.16 Obstacles to primary care access include disabilities, lack of health insurance, language-related barriers, race/ethnicity, and homelessness.17 Barriers to access for primary care services and timely care make these populations more likely to frequent the ED.17 This makes the ED a unique location for early and targeted treatment of COVID-19 patients with a high risk for progression to severe COVID-19.

During surge periods in the COVID-19 pandemic, many hospitals met or exceeded their capacity for patients, with 4423 hospitals reporting more than 90% of hospital beds occupied and 2591 reporting more than 90% of ICU beds occupied during the peak surge week of January 1, 2021, to January 7, 2021.18 The main goals of lockdowns and masking have been to decrease the transmission of COVID-19 and flatten the curve to alleviate the burden on hospitals and decrease patient mortality. However, in surge situations when hospitals have already been pushed to their limits, we need to find ways to circumvent these shortages. This was particularly true at our academic medical center during the surge period of December 2020 through January 2021, necessitating an innovative approach to improve patient outcomes and reduce the strain on resources. Utilizing the ED and implementing early treatment strategies with mAbs, especially during a surge crisis, can decrease severity of illness, hospitalizations, and deaths, as our results suggest.

This study had several limitations. First, it is plausible that some ED patients may have gone to a different hospital after discharge from the UCI ED rather than returning to our institution. Given the constraints of using the EMR, we were only able to assess hospitalizations and subsequent ED visits at UCI. Second, 2 confounding variables were identified when analyzing the demographic differences between the control and treatment groups among those who met EUA criteria. The median age in the treatment group was greater than that in the control group (P = .03), and the proportion of individuals with CKD/ESRD was also greater in the treatment group (P = .02). It is well known that older patients and those with renal disease have higher incidences of morbidity and mortality. Achieving statistically significant differences overall between control and treatment groups despite greater numbers of older individuals and patients with renal disease in the treatment group supports our strategy and the usage of mAb.19,20

Finally, on April 16, 2021, the FDA revoked the EUA for bamlanivimab when administered alone. However, alternative mAb therapies remain available under the EUA, including REGEN-COV (casirivimab and imdevimab), sotrovimab, and the combination therapy of bamlanivimab and etesevimab.21 This decision was made in light of the increased frequency of resistant variants of SARS-CoV-2 with bamlanivimab treatment alone.21 Our study was conducted prior to this announcement. However, as treatment with other mAbs is still permissible, we believe our findings can translate to treatment with mAbs in general. In fact, combination therapy with bamlanivimab and etesevimab has been found to be more effective than monotherapy, suggesting that our results may be even more robust with combination mAb therapy.11 Overall, while additional studies are needed with larger sample sizes and combination mAb treatment to fully elucidate the impact of administering mAb treatment in the ED, our results suggest that targeting ED patients for mAb treatment may be an effective strategy to prevent the composite end point of repeat ED visits, hospitalizations, or deaths.

Conclusion

Targeting ED patients for mAb treatment may be an effective strategy to prevent progression to severe COVID-19 illness and substantially reduce the composite end point of repeat ED visits, hospitalizations, and deaths, especially for individuals of underserved populations who may not have access to ambulatory care.

Corresponding author: Alpesh Amin, MD, MBA, Department of Medicine and Hospital Medicine Program, University of California, Irvine, 333 City Tower West, Ste 500, Orange, CA 92868; [email protected].

Financial disclosures: This manuscript was generously supported by multiple donors, including the Mehra Family, the Yang Family, and the Chao Family. Dr. Amin reported serving as Principal Investigator or Co-Investigator of clinical trials sponsored by NIH/NIAID, NeuroRX Pharma, Pulmotect, Blade Therapeutics, Novartis, Takeda, Humanigen, Eli Lilly, PTC Therapeutics, OctaPharma, Fulcrum Therapeutics, and Alexion, unrelated to the present study. He has served as speaker and/or consultant for BMS, Pfizer, BI, Portola, Sunovion, Mylan, Salix, Alexion, AstraZeneca, Novartis, Nabriva, Paratek, Bayer, Tetraphase, Achaogen La Jolla, Ferring, Seres, Millennium, PeraHealth, HeartRite, Aseptiscope, and Sprightly, unrelated to the present study.

1. Global map. Johns Hopkins University & Medicine Coronavirus Resource Center. Updated November 9, 2021. Accessed November 9, 2021. https://coronavirus.jhu.edu/map.html

2. Truog RD, Mitchell C, Daley GQ. The toughest triage — allocating ventilators in a pandemic. N Engl J Med. 2020;382(21):1973-1975. doi:10.1056/NEJMp2005689

3. Cavallo JJ, Donoho DA, Forman HP. Hospital capacity and operations in the coronavirus disease 2019 (COVID-19) pandemic—planning for the Nth patient. JAMA Health Forum. 2020;1(3):e200345. doi:10.1001/jamahealthforum.2020.0345

4. Eriksson CO, Stoner RC, Eden KB, et al. The association between hospital capacity strain and inpatient outcomes in highly developed countries: a systematic review. J Gen Intern Med. 2017;32(6):686-696. doi:10.1007/s11606-016-3936-3

5. Bravata DM, Perkins AJ, Myers LJ, et al. Association of intensive care unit patient load and demand with mortality rates in US Department of Veterans Affairs hospitals during the COVID-19 pandemic. JAMA Netw Open. 2021;4(1):e2034266. doi:10.1001/jamanetworkopen.2020.34266

6. Beigel JH, Tomashek KM, Dodd LE, et al. Remdesivir for the treatment of Covid-19 - final report. N Engl J Med. 2020;383(19):1813-1826. doi:10.1056/NEJMoa2007764

7. Coronavirus (COVID-19) update: FDA authorizes monoclonal antibody for treatment of COVID-19. US Food & Drug Administration. November 9, 2020. Accessed November 9, 2021. https://www.fda.gov/news-events/press-announcements/coronavirus-covid-19-update-fda-authorizes-monoclonal-antibody-treatment-covid-19

8. Chen P, Nirula A, Heller B, et al. SARS-CoV-2 neutralizing antibody LY-CoV555 in outpatients with Covid-19. N Engl J Med. 2021;384(3):229-237. doi:10.1056/NEJMoa2029849

9. Weinreich DM, Sivapalasingam S, Norton T, et al. REGN-COV2, a neutralizing antibody cocktail, in outpatients with Covid-19. N Engl J Med. 2021;384(3):238-251. doi:10.1056/NEJMoa2035002

10. Chen X, Li R, Pan Z, et al. Human monoclonal antibodies block the binding of SARS-CoV-2 spike protein to angiotensin converting enzyme 2 receptor. Cell Mol Immunol. 2020;17(6):647-649. doi:10.1038/s41423-020-0426-7

11. Gottlieb RL, Nirula A, Chen P, et al. Effect of bamlanivimab as monotherapy or in combination with etesevimab on viral load in patients with mild to moderate COVID-19: a randomized clinical trial. JAMA. 2021;325(7):632-644. doi:10.1001/jama.2021.0202

12. Toy S, Walker J, Evans M. Highly touted monoclonal antibody therapies sit unused in hospitals. The Wall Street Journal. December 27, 2020. Accessed November 9, 2021. https://www.wsj.com/articles/highly-touted-monoclonal-antibody-therapies-sit-unused-in-hospitals-11609087364

13. Anti-SARS-CoV-2 monoclonal antibodies. NIH COVID-19 Treatment Guidelines. Updated October 19, 2021. Accessed November 9, 2021. https://www.covid19treatmentguidelines.nih.gov/anti-sars-cov-2-antibody-products/anti-sars-cov-2-monoclonal-antibodies/

14. Langellier BA. Policy recommendations to address high risk of COVID-19 among immigrants. Am J Public Health. 2020;110(8):1137-1139. doi:10.2105/AJPH.2020.305792

15. Verderese JP, Stepanova M, Lam B, et al. Neutralizing monoclonal antibody treatment reduces hospitalization for mild and moderate COVID-19: a real-world experience. Clin Infect Dis. 2021;ciab579. doi:10.1093/cid/ciab579

16. Levine DM, Linder JA, Landon BE. Characteristics of Americans with primary care and changes over time, 2002-2015. JAMA Intern Med. 2020;180(3):463-466. doi:10.1001/jamainternmed.2019.6282

17. Rust G, Ye J, Daniels E, et al. Practical barriers to timely primary care access: impact on adult use of emergency department services. Arch Intern Med. 2008;168(15):1705-1710. doi:10.1001/archinte.168.15.1705

18. COVID-19 Hospitalization Tracking Project: analysis of HHS data. University of Minnesota. Carlson School of Management. Accessed November 9, 2021. https://carlsonschool.umn.edu/mili-misrc-covid19-tracking-project

19. Zarębska-Michaluk D, Jaroszewicz J, Rogalska M, et al. Impact of kidney failure on the severity of COVID-19. J Clin Med. 2021;10(9):2042. doi:10.3390/jcm10092042

20. Shahid Z, Kalayanamitra R, McClafferty B, et al. COVID‐19 and older adults: what we know. J Am Geriatr Soc. 2020;68(5):926-929. doi:10.1111/jgs.16472

21. Coronavirus (COVID-19) update: FDA revokes emergency use authorization for monoclonal antibody bamlanivimab. US Food & Drug Administration. April 16, 2021. Accessed November 9, 2021. https://www.fda.gov/news-events/press-announcements/coronavirus-covid-19-update-fda-revokes-emergency-use-authorization-monoclonal-antibody-bamlanivimab

From the Center for Artificial Intelligence in Diagnostic Medicine, University of California, Irvine, CA (Drs. Chow and Chang, Mazaya Soundara), University of California Irvine School of Medicine, Irvine, CA (Ruchi Desai), Division of Infectious Diseases, University of California, Irvine, CA (Dr. Gohil), and the Department of Medicine and Hospital Medicine Program, University of California, Irvine, CA (Dr. Amin).

Background: The COVID-19 pandemic has placed substantial strain on hospital resources and has been responsible for more than 733 000 deaths in the United States. The US Food and Drug Administration has granted emergency use authorization (EUA) for monoclonal antibody (mAb) therapy in the US for patients with early-stage high-risk COVID-19.

Methods: In this retrospective cohort study, we studied the emergency department (ED) during a massive COVID-19 surge in Orange County, California, from December 4, 2020, to January 29, 2021, as a potential setting for efficient mAb delivery by evaluating the impact of bamlanivimab use in high-risk COVID-19 patients. All patients included in this study had positive results on nucleic acid amplification detection from nasopharyngeal or throat swabs, presented with 1 or more mild or moderate symptom, and met EUA criteria for mAb treatment. The primary outcome analyzed among this cohort of ED patients was overall improvement, which included subsequent ED/hospital visits, inpatient hospitalization, and death related to COVID-19.

Results: We identified 1278 ED patients with COVID-19 not treated with bamlanivimab and 73 patients with COVID-19 treated with bamlanivimab during the treatment period. Of these patients, 239 control patients and 63 treatment patients met EUA criteria. Overall, 7.9% (5/63) of patients receiving bamlanivimab had a subsequent ED/hospital visit, hospitalization, or death compared with 19.2% (46/239) in the control group (P = .03).

Conclusion: Targeting ED patients for mAb treatment may be an effective strategy to prevent progression to severe COVID-19 illness and substantially reduce the composite end point of repeat ED visits, hospitalizations, and deaths, especially for individuals of underserved populations who may not have access to ambulatory care.

Keywords: COVID-19; mAb; bamlanivimab; surge management.

Since December 2019, the novel pathogen SARS-CoV-2 has spread rapidly, culminating in a pandemic that has caused more than 4.9 million deaths worldwide and claimed more than 733 000 lives in the United States.1 The scale of the COVID-19 pandemic has placed an immense strain on hospital resources, including personal protective equipment (PPE), beds, ventilators and personnel.2,3 A previous analysis demonstrated that hospital capacity strain is associated with increased mortality and worsened health outcomes.4 A more recent analysis in light of the COVID-19 pandemic found that strains on critical care capacity were associated with increased COVID-19 intensive care unit (ICU) mortality.5 While more studies are needed to understand the association between hospital resources and COVID-19 mortality, efforts to decrease COVID-19 hospitalizations by early targeted treatment of patients in outpatient and emergency department (ED) settings may help to relieve the burden on hospital personnel and resources and decrease subsequent mortality.

Current therapeutic options focus on inpatient management of patients who progress to acute respiratory illness while patients with mild presentations are managed with outpatient monitoring, even those at high risk for progression. At the moment, only remdesivir, a viral RNA-dependent RNA polymerase inhibitor, has been approved by the US Food and Drug Administration (FDA) for treatment of hospitalized COVID-19 patients.6 However, in November 2020, the FDA granted emergency use authorization (EUA) for monoclonal antibodies (mAbs), monotherapy, and combination therapy in a broad range of early-stage, high-risk patients.7-9 Neutralizing mAbs include bamlanivimab (LY-CoV555), etesevimab (LY-CoV016), sotrovimab (VIR-7831), and casirivimab/imdevimab (REGN-COV2). These anti–spike protein antibodies prevent viral attachment to the human angiotensin-converting enzyme 2 receptor (hACE2) and subsequently prevent viral entry.10 mAb therapy has been shown to be effective in substantially reducing viral load, hospitalizations, and ED visits.11

Despite these promising results, uptake of mAb therapy has been slow, with more than 600 000 available doses remaining unused as of mid-January 2021, despite very high infection rates across the United States.12 In addition to the logistical challenges associated with intravenous (IV) therapy in the ambulatory setting, identifying, notifying, and scheduling appointments for ambulatory patients hamper efficient delivery to high-risk patients and limit access to underserved patients without primary care providers. For patients not treated in the ambulatory setting, the ED may serve as an ideal location for early implementation of mAb treatment in high-risk patients with mild to moderate COVID-19.

The University of California, Irvine (UCI) Medical Center is not only the major premium academic medical center in Orange County, California, but also the primary safety net hospital for vulnerable populations in Orange County. During the surge period from December 2020 through January 2021, we were over 100% capacity and had built an onsite mobile hospital to expand the number of beds available. Given the severity of the impact of COVID-19 on our resources, implementing a strategy to reduce hospital admissions, patient death, and subsequent ED visits was imperative. Our goal was to implement a strategy on the front end through the ED to optimize care for patients and reduce the strain on hospital resources.

We sought to study the ED during this massive surge as a potential setting for efficient mAb delivery by evaluating the impact of bamlanivimab use in high risk COVID-19 patients.

Methods

We conducted a retrospective cohort study (approved by UCI institutional review board) of sequential COVID-19 adult patients who were evaluated and discharged from the ED between December 4, 2020, and January 29, 2021, and received bamlanivimab treatment (cases) compared with a nontreatment group (control) of ED patients.

Using the UCI electronic medical record (EMR) system, we identified 1278 ED patients with COVID-19 not treated with bamlanivimab and 73 patients with COVID-19 treated with bamlanivimab during the months of December 2020 and January 2021. All patients included in this study met the EUA criteria for mAb therapy. According to the Centers for Disease Control and Prevention (CDC), during the period of this study, patients met EUA criteria if they had mild to moderate COVID-19, a positive direct SARS-CoV-2 viral testing, and a high risk for progressing to severe COVID-19 or hospitalization.13 High risk for progressing to severe COVID-19 and/or hospitalization is defined as meeting at least 1 of the following criteria: a body mass index of 35 or higher, chronic kidney disease (CKD), diabetes, immunosuppressive disease, currently receiving immunosuppressive treatment, aged 65 years or older, aged 55 years or older and have cardiovascular disease or hypertension, or chronic obstructive pulmonary disease (COPD)/other chronic respiratory diseases.13 All patients in the ED who met EUA criteria were offered mAb treatment; those who accepted the treatment were included in the treatment group, and those who refused were included in the control group.

All patients included in this study had positive results on nucleic acid amplification detection from nasopharyngeal or throat swabs and presented with 1 or more mild or moderate symptom, defined as: fever, cough, sore throat, malaise, headache, muscle pain, gastrointestinal symptoms, or shortness of breath. We excluded patients admitted to the hospital on that ED visit and those discharged to hospice. In addition, we excluded patients who presented 2 weeks after symptom onset and those who did not meet EUA criteria. Demographic data (age and gender) and comorbid conditions were obtained by EMR review. Comorbid conditions obtained included diabetes, hypertension, cardiovascular disease, coronary artery disease, CKD/end-stage renal disease (ESRD), COPD, obesity, and immunocompromised status.

Bamlanivimab infusion therapy in the ED followed CDC guidelines. Each patient received 700 mg of bamlanivimab diluted in 0.9% sodium chloride and administered as a single IV infusion. We established protocols to give patients IV immunoglobulin (IVIG) infusions directly in the ED.

The primary outcome analyzed among this cohort of ED patients was overall improvement, which included subsequent ED/hospital visits, inpatient hospitalization, and death related to COVID-19 within 90 days of initial ED visit. Each patient was only counted once. Data analysis and statistical tests were conducted using SPSS statistical software (SPSS Inc). Treatment effects were compared using χ2 test with an α level of 0.05. A t test was used for continuous variables, including age. A P value of less than .05 was considered significant.

Results

We screened a total of 1351 patients with COVID-19. Of these, 1278 patients did not receive treatment with bamlanivimab. Two hundred thirty-nine patients met inclusion criteria and were included in the control group. Seventy-three patients were treated with bamlanivimab in the ED; 63 of these patients met EUA criteria and comprised the treatment group (Figure 1).

Demographic details and comorbidities of the treatment and control groups are provided in Table 1. The median age of the treatment group was 61 years (interquartile range [IQR], 55-73), while the median age of the control group was 57 years (IQR, 48-68). The difference in median age between the treatment and control groups was significant (P = .03). There was no significant difference in gender between the control and treatment groups (P = .07), and no significant difference was seen among racial and ethnic groups. The only comorbidity that differed significantly between the treatment and control groups was CKD/ESRD. Among those treated with bamlanivimab, 20.6% (13/63) had CKD/ESRD compared with 10.5% (25/239) in the control group (P = .02).

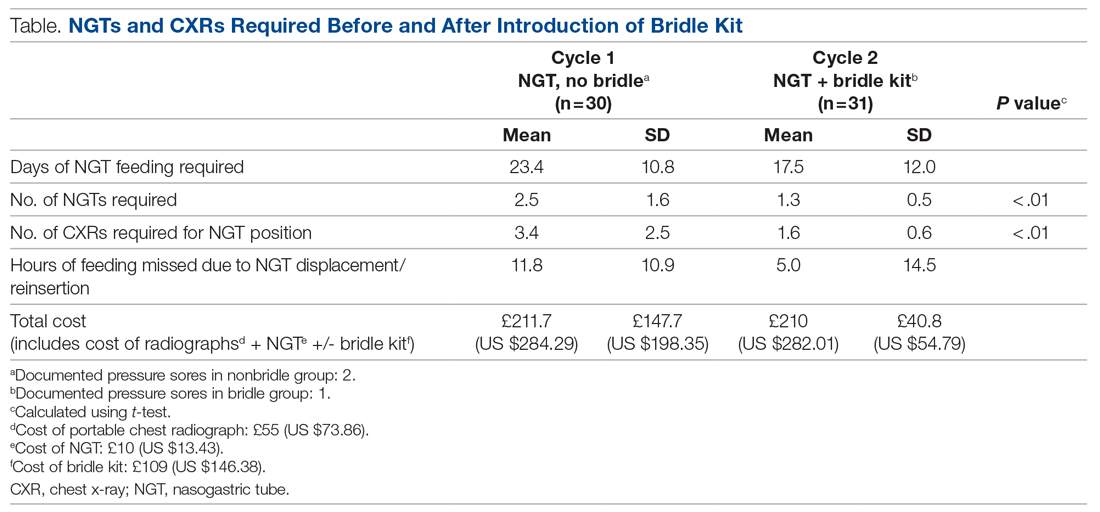

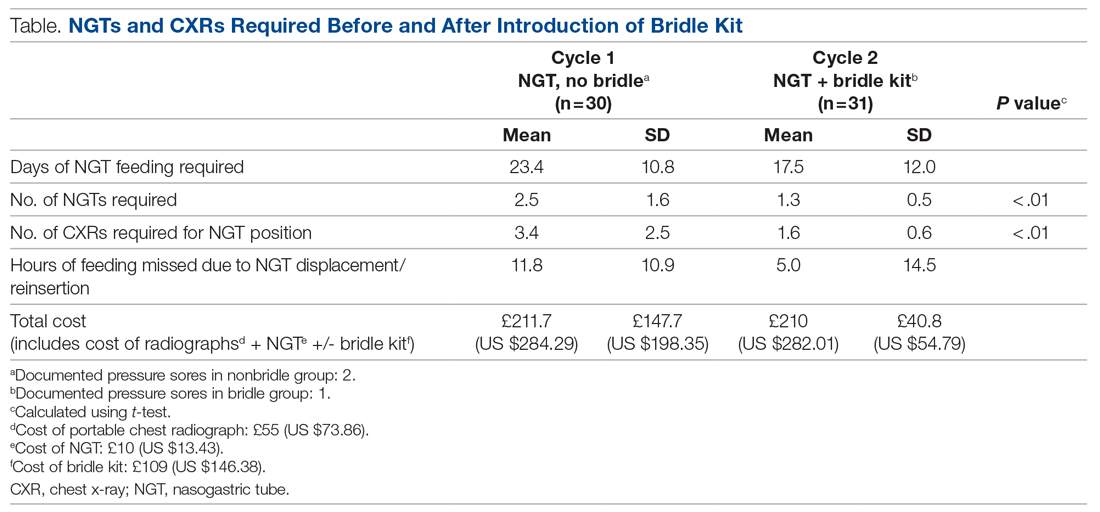

Overall, 7.9% (5/63) of patients receiving bamlanivimab had a subsequent ED/hospital visit, hospitalization, or death compared with 19.2% (46/239) in the control group (P = .03) (Table 2).
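For readers who wish to check the arithmetic, the comparison above can be approximately reproduced from the reported counts with a Pearson χ2 test on the 2 × 2 table. The following is a minimal sketch, not the SPSS procedure used in the study. The function name is illustrative, and the use of the uncorrected Pearson statistic is an assumption, since the text does not state whether a continuity correction was applied.

```python
import math


def pearson_chi2_2x2(a: int, b: int, c: int, d: int) -> tuple[float, float]:
    """Uncorrected Pearson chi-square test for the 2 x 2 table [[a, b], [c, d]].

    Returns (chi2, p). With 1 degree of freedom, the chi-square survival
    function equals erfc(sqrt(chi2 / 2)), so only the standard library is needed.
    """
    n = a + b + c + d
    chi2 = n * (a * d - b * c) ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))
    p = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p


# Rows: treatment, control. Columns: composite event, no event.
# 5 of 63 treated patients and 46 of 239 control patients had an event.
chi2, p_value = pearson_chi2_2x2(5, 63 - 5, 46, 239 - 46)
print(f"chi2 = {chi2:.2f}, P = {p_value:.3f}")
```

Under these assumptions the statistic is about 4.54 with a P value of about .03, in line with the reported result. A continuity-corrected test would yield a larger P value.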

While the primary outcome of overall improvement was significantly different between the 2 groups, the differences in the individual components (subsequent ED visits, hospitalizations, and death) were not significant. No treatment patients were hospitalized, compared with 5.4% (13/239) in the control group (P = .05). In the treatment group, 6.3% (4/63) returned to the ED compared with 12.6% (30/239) of the control group (P = .17). Finally, 1.6% (1/63) of the treatment group had a subsequent death that was due to COVID-19 compared with 1.3% (3/239) in the control group (P = .84) (Figure 2).

Discussion

In this retrospective cohort study, we observed a significant difference in the combined rate of repeat ED visits, hospitalizations, and deaths between COVID-19 patients who received bamlanivimab and those who did not. Our study focused on high-risk patients with mild or moderate COVID-19, a unique subset of individuals who would normally be followed and treated via outpatient monitoring. We propose that treating high-risk patients earlier in their disease process with mAb therapy can have a major impact on overall outcomes, as defined by decreased subsequent hospitalizations, ED visits, and death.

In contrast to clinical trials such as BLAZE-1 or REGN-COV2, every patient in this study had at least 1 high-risk characteristic.9,11 This may explain why a greater proportion of our patients in both the control and treatment groups had subsequent hospitalization, ED visits, and deaths. COVID-19 patients seen in the ED may be a uniquely self-selected population of individuals likely to benefit from mAb therapy, since they may be sicker, have more comorbidities, or have less readily available primary care access for testing and treatment.14

Despite conducting a thorough literature review, we were unable to find any similar studies describing the ED as an appropriate setting for mAb treatment in patients with COVID-19. Multiple studies have used outpatient clinics as a setting for mAb treatment, and 1 retrospective analysis found that neutralizing mAb treatment in COVID-19 patients in an outpatient setting reduced hospital utilization.15 However, many Americans do not have access to primary care, with 1 study finding that only 75% of Americans had an identified source of primary care in 2015.16 Obstacles to primary care access include disabilities, lack of health insurance, language-related barriers, race/ethnicity, and homelessness.17 Barriers to access for primary care services and timely care make these populations more likely to frequent the ED.17 This makes the ED a unique location for early and targeted treatment of COVID-19 patients with a high risk for progression to severe COVID-19.

During surge periods in the COVID-19 pandemic, many hospitals reached or exceeded their capacity for patients, with 4423 hospitals reporting more than 90% of hospital beds occupied and 2591 reporting more than 90% of ICU beds occupied during the peak surge week of January 1, 2021, to January 7, 2021.18 The main goals of lockdowns and masking have been to decrease the transmission of COVID-19 and flatten the curve to alleviate the burden on hospitals and decrease patient mortality. However, in surge situations when hospitals have already been pushed to their limits, we need to find ways to circumvent these shortages. This was particularly true at our academic medical center during the surge period of December 2020 through January 2021, necessitating an innovative approach to improve patient outcomes and reduce the strain on resources. Utilizing the ED and implementing early treatment strategies with mAbs, especially during a surge crisis, can decrease severity of illness, hospitalizations, and deaths, as demonstrated in our study.

This study had several limitations. First, it is plausible that some ED patients may have gone to a different hospital after discharge from the UCI ED rather than returning to our institution. Given the constraints of using the EMR, we were only able to assess hospitalizations and subsequent ED visits at UCI. Second, we identified 2 confounding variables when analyzing the demographic differences between the control and treatment groups among those who met EUA criteria. The median age in the treatment group was greater than that in the control group (P = .03), and the proportion of individuals with CKD/ESRD was also greater in the treatment group (P = .02). It is well known that older patients and those with renal disease have higher incidences of morbidity and mortality. Achieving statistically significant differences overall between control and treatment groups despite greater numbers of older individuals and patients with renal disease in the treatment group supports our strategy and the usage of mAb.19,20

Finally, on April 16, 2021, the FDA revoked the EUA for bamlanivimab when administered alone. This decision was made in light of the increased frequency of resistant variants of SARS-CoV-2 with bamlanivimab treatment alone.21 However, alternative mAb therapies remain available under the EUA, including REGEN-COV (casirivimab and imdevimab), sotrovimab, and the combination therapy of bamlanivimab and etesevimab.21 Our study was conducted prior to this announcement. Because treatment with other mAbs is still permissible, we believe our findings can translate to treatment with mAbs in general. In fact, combination therapy with bamlanivimab and etesevimab has been found to be more effective than monotherapy, suggesting that our results may be even more robust with combination mAb therapy.11 Overall, while additional studies are needed with larger sample sizes and combination mAb treatment to fully elucidate the impact of administering mAb treatment in the ED, our results suggest that targeting ED patients for mAb treatment may be an effective strategy to prevent the composite end point of repeat ED visits, hospitalizations, or deaths.

Conclusion

Targeting ED patients for mAb treatment may be an effective strategy to prevent progression to severe COVID-19 illness and substantially reduce the composite end point of repeat ED visits, hospitalizations, and deaths, especially for individuals of underserved populations who may not have access to ambulatory care.

Corresponding author: Alpesh Amin, MD, MBA, Department of Medicine and Hospital Medicine Program, University of California, Irvine, 333 City Tower West, Ste 500, Orange, CA 92868; [email protected].

Financial disclosures: This manuscript was generously supported by multiple donors, including the Mehra Family, the Yang Family, and the Chao Family. Dr. Amin reported serving as Principal Investigator or Co-Investigator of clinical trials sponsored by NIH/NIAID, NeuroRX Pharma, Pulmotect, Blade Therapeutics, Novartis, Takeda, Humanigen, Eli Lilly, PTC Therapeutics, OctaPharma, Fulcrum Therapeutics, and Alexion, unrelated to the present study. He has served as speaker and/or consultant for BMS, Pfizer, BI, Portola, Sunovion, Mylan, Salix, Alexion, AstraZeneca, Novartis, Nabriva, Paratek, Bayer, Tetraphase, Achaogen La Jolla, Ferring, Seres, Millennium, PeraHealth, HeartRite, Aseptiscope, and Sprightly, unrelated to the present study.

1. Global map. Johns Hopkins University & Medicine Coronavirus Resource Center. Updated November 9, 2021. Accessed November 9, 2021. https://coronavirus.jhu.edu/map.html

2. Truog RD, Mitchell C, Daley GQ. The toughest triage — allocating ventilators in a pandemic. N Engl J Med. 2020;382(21):1973-1975. doi:10.1056/NEJMp2005689

3. Cavallo JJ, Donoho DA, Forman HP. Hospital capacity and operations in the coronavirus disease 2019 (COVID-19) pandemic—planning for the Nth patient. JAMA Health Forum. 2020;1(3):e200345. doi:10.1001/jamahealthforum.2020.0345

4. Eriksson CO, Stoner RC, Eden KB, et al. The association between hospital capacity strain and inpatient outcomes in highly developed countries: a systematic review. J Gen Intern Med. 2017;32(6):686-696. doi:10.1007/s11606-016-3936-3

5. Bravata DM, Perkins AJ, Myers LJ, et al. Association of intensive care unit patient load and demand with mortality rates in US Department of Veterans Affairs hospitals during the COVID-19 pandemic. JAMA Netw Open. 2021;4(1):e2034266. doi:10.1001/jamanetworkopen.2020.34266

6. Beigel JH, Tomashek KM, Dodd LE, et al. Remdesivir for the treatment of Covid-19 - final report. N Engl J Med. 2020;383(19):1813-1826. doi:10.1056/NEJMoa2007764

7. Coronavirus (COVID-19) update: FDA authorizes monoclonal antibody for treatment of COVID-19. US Food & Drug Administration. November 9, 2020. Accessed November 9, 2021. https://www.fda.gov/news-events/press-announcements/coronavirus-covid-19-update-fda-authorizes-monoclonal-antibody-treatment-covid-19

8. Chen P, Nirula A, Heller B, et al. SARS-CoV-2 neutralizing antibody LY-CoV555 in outpatients with Covid-19. N Engl J Med. 2021;384(3):229-237. doi:10.1056/NEJMoa2029849

9. Weinreich DM, Sivapalasingam S, Norton T, et al. REGN-COV2, a neutralizing antibody cocktail, in outpatients with Covid-19. N Engl J Med. 2021;384(3):238-251. doi:10.1056/NEJMoa2035002

10. Chen X, Li R, Pan Z, et al. Human monoclonal antibodies block the binding of SARS-CoV-2 spike protein to angiotensin converting enzyme 2 receptor. Cell Mol Immunol. 2020;17(6):647-649. doi:10.1038/s41423-020-0426-7

11. Gottlieb RL, Nirula A, Chen P, et al. Effect of bamlanivimab as monotherapy or in combination with etesevimab on viral load in patients with mild to moderate COVID-19: a randomized clinical trial. JAMA. 2021;325(7):632-644. doi:10.1001/jama.2021.0202

12. Toy S, Walker J, Evans M. Highly touted monoclonal antibody therapies sit unused in hospitals. The Wall Street Journal. December 27, 2020. Accessed November 9, 2021. https://www.wsj.com/articles/highly-touted-monoclonal-antibody-therapies-sit-unused-in-hospitals-11609087364

13. Anti-SARS-CoV-2 monoclonal antibodies. NIH COVID-19 Treatment Guidelines. Updated October 19, 2021. Accessed November 9, 2021. https://www.covid19treatmentguidelines.nih.gov/anti-sars-cov-2-antibody-products/anti-sars-cov-2-monoclonal-antibodies/

14. Langellier BA. Policy recommendations to address high risk of COVID-19 among immigrants. Am J Public Health. 2020;110(8):1137-1139. doi:10.2105/AJPH.2020.305792

15. Verderese JP, Stepanova M, Lam B, et al. Neutralizing monoclonal antibody treatment reduces hospitalization for mild and moderate COVID-19: a real-world experience. Clin Infect Dis. 2021;ciab579. doi:10.1093/cid/ciab579

16. Levine DM, Linder JA, Landon BE. Characteristics of Americans with primary care and changes over time, 2002-2015. JAMA Intern Med. 2020;180(3):463-466. doi:10.1001/jamainternmed.2019.6282

17. Rust G, Ye J, Daniels E, et al. Practical barriers to timely primary care access: impact on adult use of emergency department services. Arch Intern Med. 2008;168(15):1705-1710. doi:10.1001/archinte.168.15.1705

18. COVID-19 Hospitalization Tracking Project: analysis of HHS data. University of Minnesota. Carlson School of Management. Accessed November 9, 2021. https://carlsonschool.umn.edu/mili-misrc-covid19-tracking-project

19. Zarębska-Michaluk D, Jaroszewicz J, Rogalska M, et al. Impact of kidney failure on the severity of COVID-19. J Clin Med. 2021;10(9):2042. doi:10.3390/jcm10092042

20. Shahid Z, Kalayanamitra R, McClafferty B, et al. COVID‐19 and older adults: what we know. J Am Geriatr Soc. 2020;68(5):926-929. doi:10.1111/jgs.16472

21. Coronavirus (COVID-19) update: FDA revokes emergency use authorization for monoclonal antibody bamlanivimab. US Food & Drug Administration. April 16, 2021. Accessed November 9, 2021. https://www.fda.gov/news-events/press-announcements/coronavirus-covid-19-update-fda-revokes-emergency-use-authorization-monoclonal-antibody-bamlanivimab

Evaluation of Intermittent Energy Restriction and Continuous Energy Restriction on Weight Loss and Blood Pressure Control in Overweight and Obese Patients With Hypertension

Study Overview

Objective. To compare the effects of intermittent energy restriction (IER) with those of continuous energy restriction (CER) on blood pressure control and weight loss in overweight and obese patients with hypertension during a 6-month period.

Design. Randomized controlled trial.

Settings and participants. The trial was conducted at the Affiliated Hospital of Jiaxing University from June 1, 2020, to April 30, 2021. Chinese adults were recruited using advertisements and flyers posted in the hospital and local communities. All participants gave informed consent before taking part in study activities and were compensated for their time with a $38 voucher at 3 and 6 months.

The main inclusion criteria were age between 18 and 70 years, hypertension, and a body mass index (BMI) of 24 to 40 kg/m². The exclusion criteria were systolic blood pressure (SBP) ≥ 180 mmHg or diastolic blood pressure (DBP) ≥ 120 mmHg, type 1 or 2 diabetes with a history of severe hypoglycemic episodes, pregnancy or breastfeeding, use of glucagon-like peptide 1 receptor agonists, weight loss > 5 kg within the past 3 months or previous weight loss surgery, and inability to adhere to the dietary protocol.
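As an illustration only, the numeric eligibility criteria above could be expressed as a simple screening check. The sketch below is not the investigators' screening tool; the `Candidate` fields and the `exclusion_flags` labels are hypothetical names introduced here, and the non-numeric exclusions (eg, pregnancy, recent weight loss) are represented only as free-text flags.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    """Hypothetical screening record; field names are assumptions."""
    age_years: int
    has_hypertension: bool
    weight_kg: float
    height_m: float
    sbp_mmhg: int
    dbp_mmhg: int
    # Any non-numeric exclusion that applies, eg {"pregnancy"} (labels are illustrative)
    exclusion_flags: frozenset = frozenset()


def bmi(weight_kg: float, height_m: float) -> float:
    """Body mass index in kg/m^2."""
    return weight_kg / height_m ** 2


def is_eligible(c: Candidate) -> bool:
    """Apply the inclusion and exclusion criteria as reported in the study overview."""
    meets_inclusion = (
        18 <= c.age_years <= 70
        and c.has_hypertension
        and 24 <= bmi(c.weight_kg, c.height_m) <= 40
    )
    bp_too_high = c.sbp_mmhg >= 180 or c.dbp_mmhg >= 120
    return meets_inclusion and not bp_too_high and not c.exclusion_flags
```

For example, a 50-year-old with hypertension, a weight of 80 kg, a height of 1.70 m (BMI of about 27.7), and a BP of 150/95 mmHg would pass this check, whereas the same person with an SBP of 180 mmHg would not.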

Of the 294 participants screened for eligibility, 205 were randomized in a 1:1 ratio to the IER group (n = 102) or the CER group (n = 103), stratified by sex and BMI (as overweight or obese). All participants were required to have a stable medication regimen and weight in the 3 months prior to enrollment and not to use weight-loss drugs or vitamin supplements for the duration of the study. Researchers and participants were not blinded to the study group assignment.
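The report states only that randomization was 1:1 and stratified by sex and BMI category; it does not describe the allocation mechanism. One common way to achieve this is permuted-block randomization within each stratum, sketched below. The block size of 4, the obesity cutoff of 28 kg/m² (a cutoff commonly used for Chinese adults), and all class and function names are assumptions made for illustration, not details taken from the trial.

```python
import random
from collections import defaultdict

ARMS = ("IER", "CER")


def bmi_category(bmi: float, obese_cutoff: float = 28.0) -> str:
    """Classify as overweight or obese; the cutoff is an assumption."""
    return "obese" if bmi >= obese_cutoff else "overweight"


class StratifiedBlockRandomizer:
    """Permuted-block 1:1 allocation within each (sex, BMI category) stratum."""

    def __init__(self, block_size: int = 4, seed=None):
        if block_size % len(ARMS) != 0:
            raise ValueError("block_size must be a multiple of the number of arms")
        self.block_size = block_size
        self.rng = random.Random(seed)
        # Remaining assignments of the current block, per stratum
        self.pending = defaultdict(list)

    def assign(self, sex: str, bmi: float) -> str:
        stratum = (sex, bmi_category(bmi))
        if not self.pending[stratum]:
            # Start a new block containing each arm equally often, in random order
            block = list(ARMS) * (self.block_size // len(ARMS))
            self.rng.shuffle(block)
            self.pending[stratum] = block
        return self.pending[stratum].pop()
```

Because every completed block contains each arm equally often, the two arms stay balanced within each stratum, which is the purpose of stratifying by sex and BMI category.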

Interventions. Participants randomly assigned to the IER group followed a 5:2 eating pattern: a very-low-energy diet of 500-600 kcal for 2 days of the week along with their usual diet for the other 5 days. The 2 days of calorie restriction could be consecutive or nonconsecutive, with a minimum of 0.8 g supplemental protein per kg of body weight per day, in accordance with the 2016 Dietary Guidelines for Chinese Residents. The CER group was advised to consume 1000 kcal/day for women and 1200 kcal/day for men on a 7-day energy restriction. That is, they were prescribed a daily 25% restriction based on the general principles of a Mediterranean-type diet (30% fat, 45-50% carbohydrate, and 20-25% protein).

Both groups received dietary education from a qualified dietitian and were recommended to maintain their current daily activity levels throughout the trial. Written dietary information brochures with portion advice and sample meal plans were provided to improve compliance in each group. All participants received a digital cooking scale to weigh foods to ensure accuracy of intake and were required to keep a food diary while following the recommended recipe on 2 days/week during calorie restriction to help with adherence. No food was provided. All participants were followed up by regular outpatient visits to both cardiologists and dietitians once a month. Diet checklists, activity schedules, and weight were reviewed to assess compliance with dietary advice at each visit.

Of note, participants were encouraged to measure and record their BP twice daily, and if 2 consecutive BP readings were < 110/70 mmHg and/or accompanied by hypotensive episodes with symptoms (dizziness, nausea, headache, and fatigue), they were asked to contact the investigators directly. Antihypertensive medication changes were then made in consultation with cardiologists. In addition, a medication management protocol (ie, doses of antidiabetic medications, including insulin and sulfonylurea) was designed to avoid hypoglycemia. Medication could be reduced in the CER group based on the basal dose at the endocrinologist’s discretion. In the IER group, insulin and sulfonylureas were discontinued on calorie restriction days only, and long-acting insulin was discontinued the night before the IER day. Insulin was not to be resumed until a full day’s caloric intake was achieved.
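The home BP monitoring rule described above can be read as a simple decision rule. The sketch below is one possible reading, not the study's protocol: it assumes that a reading counts as low when SBP is < 110 mmHg or DBP is < 70 mmHg, and that any symptomatic hypotensive episode on its own also warrants contact. The `Reading` type and function names are hypothetical.

```python
from typing import NamedTuple, Sequence


class Reading(NamedTuple):
    sbp: int
    dbp: int
    symptomatic: bool = False  # dizziness, nausea, headache, or fatigue


def is_low(r: Reading) -> bool:
    """Assumed interpretation of '< 110/70 mmHg': either component below its threshold."""
    return r.sbp < 110 or r.dbp < 70


def should_contact_investigators(readings: Sequence[Reading]) -> bool:
    """True if any reading is symptomatic or any 2 consecutive readings are low."""
    if any(r.symptomatic for r in readings):
        return True
    return any(is_low(a) and is_low(b) for a, b in zip(readings, readings[1:]))
```

Under these assumptions, readings of 120/80, 105/68, and 108/72 mmHg would trigger contact because the last 2 are both low, whereas a single low reading between 2 normal ones would not.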

Measures and analysis. The primary outcomes of this study were changes in BP and weight (measured using an automatic digital sphygmomanometer and an electronic scale), and the secondary outcomes were changes in body composition (assessed by dual-energy x-ray absorptiometry scanning), as well as glycosylated hemoglobin A1c (HbA1c) levels and blood lipids after 6 months. All outcome measures were recorded at baseline and at each monthly visit. Hypoglycemia was identified by a blood glucose level < 70 mg/dL and/or by symptoms (sweating, paleness, dizziness, and confusion). Two cardiologists who were blinded to the patients’ diet condition measured and recorded all pertinent clinical parameters and adjudicated serious adverse events.

Data were compared using independent-samples t-tests or the Mann–Whitney U test for continuous variables, and Pearson’s χ² test or Fisher’s exact test for categorical variables, as appropriate. Repeated-measures ANOVA via a linear mixed model was employed to test the effects of diet, time, and their interaction. In subgroup analyses, differential effects of the intervention on the primary outcomes were evaluated with respect to patients’ level of education, domicile, and sex based on the statistical significance of the interaction term for the subgroup of interest in the multivariate model. Analyses were performed based on completers and on an intention-to-treat principle.

Main results. Among the 205 randomized participants, 118 were women and 87 were men; mean (SD) age was 50.5 (8.8) years; mean (SD) BMI was 28.7 (2.6); mean (SD) SBP was 143 (10) mmHg; and mean (SD) DBP was 91 (9) mmHg. At the end of the 6-month intervention, 173 (84.4%) completed the study (IER group: n = 88; CER group: n = 85). Both groups had similar dropout rates at 6 months (IER group: 14 participants [13.7%]; CER group: 18 participants [17.5%]; P = .83) and were well matched for baseline characteristics except for triglyceride levels.
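The completion and dropout percentages above follow directly from the reported counts. The short check below uses only those numbers; the variable names are illustrative.

```python
# Counts as reported in the main results
randomized = {"IER": 102, "CER": 103}
completed = {"IER": 88, "CER": 85}


def pct(part: int, whole: int) -> float:
    """Percentage rounded to 1 decimal place."""
    return round(100 * part / whole, 1)


# Dropouts per arm are the randomized participants who did not complete
dropouts = {arm: randomized[arm] - completed[arm] for arm in randomized}

overall_completion = pct(sum(completed.values()), sum(randomized.values()))
dropout_rate = {arm: pct(dropouts[arm], randomized[arm]) for arm in randomized}

print(dropouts)            # {'IER': 14, 'CER': 18}
print(overall_completion)  # 84.4
print(dropout_rate)        # {'IER': 13.7, 'CER': 17.5}
```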

In the completers analysis, both groups experienced significant reductions in weight (mean [SEM]), but there was no difference between treatment groups (−7.2 [0.6] kg in the IER group vs −7.1 [0.6] kg in the CER group; diet by time P = .72). Similarly, the reductions in SBP and DBP were statistically significant over time, but there was no difference between the dietary interventions (SBP: −8 [0.7] mmHg in the IER group vs −8 [0.6] mmHg in the CER group, diet by time P = .68; DBP: −6 [0.6] mmHg in the IER group vs −6 [0.5] mmHg in the CER group, diet by time P = .53). Subgroup analyses of the association of the intervention with weight, SBP, and DBP by sex, education, and domicile showed no significant between-group differences.

All measures of body composition decreased significantly at 6 months, with both groups experiencing comparable reductions in total fat mass (−5.5 [0.6] kg in the IER group vs −4.8 [0.5] kg in the CER group, diet by time P = .08) and android fat mass (−1.1 [0.2] kg in the IER group vs −0.8 [0.2] kg in the CER group, diet by time P = .16). Of note, participants in the CER group lost significantly more total fat-free mass than did participants in the IER group (mean [SEM], −2.3 [0.2] kg vs −1.7 [0.2] kg; P = .03), and there was a trend toward a greater change in total fat mass in the IER group (P = .08). The secondary outcomes of mean (SEM) HbA1c (−0.2% [0.1%]) and blood lipid levels (triglyceride level, −1.0 [0.3] mmol/L; total cholesterol level, −0.9 [0.2] mmol/L; low-density lipoprotein cholesterol level, −0.9 [0.2] mmol/L; high-density lipoprotein cholesterol level, 0.7 [0.3] mmol/L) improved with weight loss (P < .05), with no differences between groups (diet by time P > .05).

The intention-to-treat analysis demonstrated that IER and CER are equally effective for weight loss and blood pressure control: both groups experienced significant reductions in weight, SBP, and DBP, with no difference between treatment groups. The mean (SEM) weight change was −7.0 (0.6) kg with IER vs −6.8 (0.6) kg with CER; the mean (SEM) SBP change was −7 (0.7) mmHg with IER vs −7 (0.6) mmHg with CER; and the mean (SEM) DBP change was −6 (0.5) mmHg with IER vs −5 (0.5) mmHg with CER (diet by time P = .62, .39, and .41, respectively). There were favorable improvements in

Conclusion. Compared with 7 days of CER, 2 days of severe energy restriction combined with 5 days of habitual eating provides an acceptable alternative for BP control and weight loss over 6 months in overweight and obese individuals with hypertension. IER may be a useful strategy for members of this population who find continuous weight-loss diets too difficult to maintain.

Commentary

Globally, obesity represents a major health challenge as it substantially increases the risk of diseases such as hypertension, type 2 diabetes, and coronary heart disease.1 Lifestyle modifications, including weight loss and increased physical activity, are recommended in major guidelines as a first-step intervention in the treatment of hypertensive patients.2 However, lifestyle and behavioral interventions aimed at reducing calorie intake through low-calorie dieting are challenging because they depend on individual motivation and adherence to a strict, continuous protocol. Further, CER strategies have limited effectiveness because complex and persistent hormonal, metabolic, and neurochemical adaptations defend against weight loss and promote weight regain.3,4 IER has drawn attention in the popular media as an alternative to CER due to its feasibility and even potential for higher rates of compliance.5

This study adds to the literature as it is the first randomized controlled trial (to the knowledge of the authors at the time of publication) to explore 2 forms of energy restriction, CER and IER, and their impact on weight loss, BP, body composition, HbA1c, and blood lipid levels in overweight and obese patients with high blood pressure. Results from this study showed that IER is as effective as, but not superior to, CER in terms of the outcome measures assessed. Specifically, findings highlighted that the 5:2 diet is an effective strategy and is noninferior to daily calorie restriction for BP and weight control. In addition, both weight loss and BP reduction were greater in the subgroup of obese participants than in overweight participants, which indicates that obese populations may benefit more from energy restriction. As the authors highlight, this study both aligns with and expands on current related literature.