Official news magazine of the Society of Hospital Medicine

Copyright by Society of Hospital Medicine or related companies. All rights reserved. ISSN 1553-085X

CDC releases update of 2013 Antibiotic Resistance Threats Report

“You and I are living in a time when some miracle drugs no longer perform miracles and families are being ripped apart by a microscopic enemy. The time for action is now and we can be part of the solution,” said Robert R. Redfield, MD, director of the Centers for Disease Control and Prevention, in his foreword to the new CDC report on antibiotic resistance.

This update of the 2013 report uses EHRs and other data sources obtained by the CDC for relevant infections, extrapolating from them to estimate national disease incidence. The report focuses on “the top 18 pathogens that require attention now,” recommends specific steps to address these pathogens, and puts into perspective the future of antibiotic development, the use and abuse of antibiotics, and the continuing threat of antibiotic resistance.

The CDC categorizes these 18 pathogens as urgent, serious, or concerning threats.

Urgent Threats

- Carbapenem-resistant Acinetobacter, which cause pneumonia and wound, bloodstream, and urinary tract infections; they tend to affect patients in ICUs. Of particular concern, some Acinetobacter are resistant to nearly all antibiotics, with few new drugs in development (8,500 hospital infections in 2017; 700 deaths).

- Candida auris, a drug-resistant fungus that was first identified in 2009 in Asia and has quickly become a cause of severe infections around the world; it is extremely difficult to eradicate from health care settings. It began spreading in the United States in 2015, with 323 cases reported in 2018 (90% resistant to at least one antifungal, and 30% resistant to at least two antifungals).

- Clostridioides difficile, which can cause life-threatening diarrhea, most often in people who have taken antibiotics for other conditions. It is the most common health care–associated infection, and although decreasing in the health care system, it has not decreased in community settings (223,900 hospital infections in 2017, and 12,800 estimated deaths).

- Carbapenem-resistant Enterobacteriaceae, which most frequently infect patients who require devices such as catheters and those taking long courses of some antibiotics. Of particular concern, these bacteria can carry transmissible plasmids that transfer their drug resistance to other pathogens (13,100 hospital infections in 2017, and 1,100 estimated deaths).

- Drug-resistant Neisseria gonorrhoeae, which causes gonorrhea, a sexually transmitted disease that can result in life-threatening ectopic pregnancy and infertility and can increase the risk of getting and giving HIV; it can also cause cardiovascular and neurological problems. N. gonorrhoeae has developed resistance to all but one class of antibiotics, and half of all infections are resistant to at least one antibiotic (550,000 drug-resistant infections yearly).

Serious Threats

- Drug-resistant Campylobacter.

- Drug-resistant Candida.

- Extended-spectrum beta-lactamase–producing Enterobacteriaceae.

- Vancomycin-resistant Enterococci.

- Multidrug-resistant Pseudomonas aeruginosa.

- Drug-resistant nontyphoidal Salmonella.

- Drug-resistant Salmonella serotype Typhi.

- Drug-resistant Shigella.

- Methicillin-resistant Staphylococcus aureus (MRSA).

- Drug-resistant Streptococcus pneumoniae.

- Drug-resistant tuberculosis.

Concerning Threats

These comprise erythromycin-resistant group A Streptococcus and clindamycin-resistant group B Streptococcus.

In addition, the CDC has established a Watch List of three pathogens to be wary of: azole-resistant Aspergillus fumigatus, drug-resistant Mycoplasma genitalium, and drug-resistant Bordetella pertussis.

Because antibiotic resistance is a global phenomenon caused by and affecting everyone, the CDC proposes solutions at every level of society. This “comprehensive and coordinated response implements the U.S. National Action Plan for Combating Antibiotic-Resistant Bacteria” and includes cooperation with the Department of Health and Human Services, Department of Veterans Affairs, Department of Defense, Department of State, and Department of Agriculture, according to the report.

The key components of this response include using data and new technologies to detect and track antibiotic resistance; preventing infections and containing resistance, especially through outbreak response; improving antibiotic use across populations (one success being a 16% decrease in outpatient antibiotic prescribing to children during 2011-2017); improving identification of and intervention in environmental sources, including water and soil, and in sanitation; and investing significantly in vaccines, diagnostics, and novel therapeutics (the CDC provided nearly $110 million to 96 institutions for work in these areas).

The report also offers some hope regarding the development of new antibiotics. As of June 2019, there were 42 new antibiotics in development, including 4 with new drug applications submitted, 17 with the potential to treat serious gram-negative bacterial infections, and 11 that could address the urgent threats of gonorrhea or C. difficile. Overall, a quarter of these new antibiotics represent a novel drug class or use a novel mechanism of action.

Furthermore, 84% of U.S. hospitals report a stewardship program meeting all seven of CDC’s Core Elements of Hospital Antibiotic Stewardship. Proper stewardship is central to preventing the emergence of new antibiotic-resistant pathogen strains.

In addition, the CDC noted a 5% overall decline in antibiotic prescribing in outpatient settings during 2011-2016.

“The problem will get worse if we do not act now, but we can make a difference,” according to Dr. Redfield. “Simply, here’s what works. Preventing infections protects everyone. Improving antibiotic use in people and animals slows the threat and helps preserve today’s drugs and those yet to come. Detecting threats and implementing interventions to keep germs from becoming widespread saves lives.”

In response to the release of the report, the American Medical Association (AMA) issued a statement of support and cited its collection of educational resources for physicians on antibiotic use, resistance, and stewardship.

Similarly, the Society for Healthcare Epidemiology of America (SHEA) stated that hospitals were “a bright spot” in the CDC report and pointed to its tools and resources for educating health care professionals about best practices in infection prevention and control, as well as antibiotic stewardship.

SOURCE: CDC. Antibiotic Resistance Threats in the United States 2019.

Going beyond the QI project

Role modeling for residents

Quality improvement (QI) education is increasingly seen as core content in graduate medical education, said Brian Wong, MD, FRCPC, of the University of Toronto. One of the most common strategies for teaching QI is to have residents participate in a QI project, in which hospitalists often take a leading role.

“Given the investment made and time spent carrying out these projects, it is important to know whether or not the training has led to the desired outcome from both a learning and a project standpoint,” Dr. Wong said, which is why he coauthored a recent editorial on the subject in BMJ Quality and Safety. QI educators have long recognized that it’s difficult to know whether the education was successful.

“For example, if the project was not successful, does it matter if the residents learned key QI principles that they were able to apply to their project work?” Dr. Wong noted. “Our perspective extends this discussion by asking, ‘What does success look like in QI education?’ We argue that rather than focusing on whether the project was successful or not, our real goal should be to create QI educational experiences that will ensure that residents change their behaviors in future practice to embrace QI as an activity that is core to their everyday work.”

Hospitalists have an important role to play in reaching that goal. “They can set the stage for learners to recognize just how important it is to incorporate QI into daily work. Through this role modeling, residents who carry out QI projects can see that the lessons learned contribute to lifelong engagement in QI.”

Dr. Wong hopes to focus on the types of QI experiences that foster long-term behavior change.

“We want residents, when they graduate, to embrace QI, to volunteer to participate in organizational initiatives, to welcome practice data and reflect on it for the purposes of continuous improvement, to collaborate interprofessionally to make small iterative changes to the care delivery system to ensure that patients receive the highest quality of care possible,” he said. “My hope is that we can start to think differently about how we measure success in QI education.”

Reference

1. Myers JS, Wong BM. Measuring outcomes in quality improvement education: Success is in the eye of the beholder. BMJ Qual Saf. 2019 Mar 18. doi: 10.1136/bmjqs-2018-008305.

Short Takes

E-cigarettes with behavioral support more effective than nicotine replacement for smoking cessation

A randomized trial of 886 attendees of United Kingdom National Health Service stop-smoking services showed a higher 1-year abstinence rate in the e-cigarette group (18.0%) than in the nicotine-replacement group (9.9%) (risk ratio, 1.83; 95% confidence interval, 1.30-2.58) when both groups received behavioral support.

Citation: Hajek P et al. A randomized trial of e-cigarettes versus nicotine-replacement therapy. N Engl J Med. 2019 Feb 14;380:629-37.

New scoring system more accurate in diagnosing sepsis than qSOFA

Using retrospective data from 2,759,529 ED patients in 49 urban hospitals and a supervised machine-learning process, the authors developed a Risk of Sepsis score, which demonstrated significantly higher sensitivity for detecting sepsis than the qSOFA (Quick Sequential Organ Failure Assessment) score.

Citation: Delahanty R et al. Development and evaluation of a machine learning model for the early identification of patients at risk for sepsis. Ann Emerg Med. 2019 Apr;73(4):334-44.

Shared decision making may decrease risk of legal action

A randomized, controlled simulation experiment using a clinical vignette with an adverse outcome showed that participants who engaged in shared decision making were less likely to consider taking legal action.

Citation: Schoenfeld EM et al. The effect of shared decision making on patients’ likelihood of filing a complaint or lawsuit: A simulation study. Ann Emerg Med. 2019 Jan 3. doi: 10.1016/j.annemergmed.2018.11.017.

ADA issues new inpatient diabetes care recommendations

The American Diabetes Association recommends that insulin therapy be initiated for inpatients with persistent hyperglycemia above 180 mg/dL, targeting a blood glucose range of 140-180 mg/dL for the majority of patients. The association recommends basal or basal-plus-bolus insulin regimens and discourages the sole use of sliding-scale insulin.

Citation: American Diabetes Association. 15. Diabetes care in the hospital: Standards of Medical Care in Diabetes-2019. Diabetes Care. 2019;42(Suppl. 1):S173-81.

Beta-blocker use may reduce risk of COPD hospitalization

In a retrospective longitudinal study of 301,542 patients newly prescribed beta-blockers and 1,000,633 patients newly prescribed any other antihypertensive drug, patients who were treated with beta-blockers continuously for more than 6 months had a significantly lower risk of chronic obstructive pulmonary disease (COPD) hospitalization, all-cause mortality, and COPD death than did patients who received alternative antihypertensives. Patients with a history of COPD hospitalization were excluded from this study.

Citation: Nielsen AO et al. Beta-blocker therapy and risk of chronic obstructive pulmonary disease: A Danish nationwide study of 1.3 million individuals. EClinicalMedicine. 2019;7:21-6. doi: 10.1016/j.eclinm.2019.01.004.

E-cigarettes with behavioral support more effective than nicotine replacement for smoking cessation

A study of 886 randomized United Kingdom National Health Service stop-smoking service attendees showed better 1-year abstinence rates in the e-cigarette (18%) vs. nicotine replacement product (9%) group (risk ratio,1.83; 95% confidence interval, 1.30-2.58) when both groups received behavioral support.

Citation: Hajek P et al. A randomized trial of e-cigarettes versus nicotine-replacement therapy. N Eng J Med. 2019 Feb 14;380:629-37.

New scoring system more accurate in diagnosing sepsis than qSOFA

Using retrospective data from 2,759,529 ED patients in 49 urban hospitals, and a supervised machine-learning process, the authors developed a Risk of Sepsis score, which demonstrated significantly higher sensitivity for detecting sepsis than the qSOFA (Quick Sequential Organ Failure Assessment) score.

Citation: Delahanty R et al. Development and evaluation of a machine learning model for the early identification of patients at risk for sepsis. Ann Emerg Med. 2019 Apr;73(4):334-44.

Shared decision making may decrease risk of legal action

A randomized, controlled simulation experiment using a clinical vignette with an adverse outcome showed that when engaged in shared decision making, participants were less likely to consider taking legal action.

Citation: Schoenfeld EM et al. The effect of shared decision making on patients’ likelihood of filing a complaint or lawsuit: A simulation study. Ann Emerg Med. 2019 Jan 3. doi: 10.1016/j.annemergmed.2018.11.017.

ADA issues new inpatient diabetes care recommendations

The American Diabetes Association recommends initiating insulin therapy for persistent hyperglycemia greater than 180 mg/dL and targeting a blood glucose range of 140-180 mg/dL for the majority of inpatients. It recommends basal or basal-plus-bolus insulin regimens and discourages the sole use of sliding-scale insulin.

Citation: American Diabetes Association. 15. Diabetes care in the hospital: Standards of Medical Care in Diabetes-2019. Diabetes Care. 2019;42(Suppl. 1):S173-81.

Storytelling tool can assist elderly in the ICU

SAN FRANCISCO – A “Best Case/Worst Case” (BCWC) framework tool has been adapted for use with geriatric trauma patients in the ICU, where it can help track a patient’s progress and improve communication with patients and loved ones. The tool combines graphics and text that surgeons update daily during rounds, creating a longitudinal view of the patient’s trajectory during the ICU stay.

– for example, after a complication has arisen.

“Each day during rounds, the ICU team records important events on the graphic aid that change the patient’s course. The team draws a star to represent the best case, and a line to represent prognostic uncertainty. The attending trauma surgeon then uses the geriatric trauma outcome score, their knowledge of the health state of the patient, and their own clinical experience to tell a story about treatments, recovery, and outcomes if everything goes as well as we might hope. This story is written down in the best-case scenario box,” Christopher Zimmerman, MD, a general surgery resident at the University of Wisconsin–Madison, said during a presentation about the BCWC tool at the annual clinical congress of the American College of Surgeons.

“We often like to talk to patients and their families [about best- and worst-case scenarios] anyway, but [the research team] have tried to formalize it,” said Tam Pham, MD, professor of surgery at the University of Washington, in an interview. Dr. Pham comoderated the session where the research was presented.

“When we’re able to communicate where the uncertainty is and where the boundaries are around the course of care and possible outcomes, we can build an alliance with patients and families that will be helpful when there is a big decision to make, say about a laparotomy for a perforated viscus,” said Dr. Zimmerman.

Dr. Zimmerman gave the example of a patient who was admitted to the ICU with multiple fractures after falling down a flight of stairs. The team created an initial BCWC with a hoped-for best-case scenario. Later, the patient developed hypoxemic respiratory failure and had to be intubated overnight. “This event is recorded on the graphic, and her star representing the best case has changed position, the line representing uncertainty has shortened, and the contents of her best-case scenario has changed. Each day in rounds, this process is repeated,” said Dr. Zimmerman.

Palliative care physicians, education experts, and surgeons at the University of Wisconsin–Madison developed the tool to reduce unwanted end-of-life care in the context of high-risk surgery. The researchers adapted it to the trauma setting by convening six focus groups of trauma practitioners at the University of Wisconsin; University of Texas, Dallas; and Oregon Health & Science University, Portland. After incorporating the groups’ comments, the team refined the tool iteratively in the ICU as part of a quality improvement initiative at the University of Wisconsin–Madison: they made a change to the tool, implemented it during subsequent rounds, collected observations and field notes, and then revised and repeated the process, streamlining the tool to fit the ICU environment, according to Dr. Zimmerman.

The back of the tool gives family members space to write important details about their loved ones, lending insight into the patient’s personality and desires, such as favorite music or affection for a family pet.

The work was supported by the National Institutes of Health. Dr. Zimmerman and Dr. Pham have no relevant financial disclosures.

SOURCE: Zimmerman C et al. Clinical Congress 2019, Abstract.

REPORTING FROM CLINICAL CONGRESS 2019

Risk stratification of syncope patients can help determine duration of telemetry monitoring

Background: About half of ED patients with syncope of unknown etiology are admitted for telemetry monitoring. No consensus exists regarding the optimal duration of telemetry monitoring in these patients to detect underlying arrhythmia.

Study design: Prospective cohort study.

Setting: Six EDs in Canada during September 2010–March 2015.

Synopsis: Using the Canadian Syncope Risk Score, 5,581 adults who presented to the ED within 24 hours of a syncopal event were risk-stratified as low, medium, or high risk for serious adverse events (arrhythmic vs. nonarrhythmic) and then followed for 30 days. Approximately half of arrhythmias were identified within 2 hours of telemetry monitoring among low-risk patients and within 6 hours among medium- and high-risk patients. In the low-risk group, no patient died or had a ventricular arrhythmia within 30 days. In the medium- and high-risk groups, 91.7% of underlying arrhythmias were identified within 15 days. The study was limited by the lack of a standardized approach to outpatient cardiac rhythm monitoring, which may have led to underdetection of arrhythmias.

Bottom line: Among ED patients with syncope of unknown etiology, approximately 47% of arrhythmias were detected within 2-6 hours of telemetry monitoring. Among medium- and high-risk patients, most serious arrhythmias were identified within 15 days. On the basis of these results, the authors recommend 15-day outpatient cardiac monitoring for medium- and high-risk patients.

Citation: Thiruganasambandamoorthy V et al. Duration of electrocardiographic monitoring of emergency department patients with syncope. Circulation. 2019 Mar 12;139(11):1396-406.

Dr. Roy is a hospitalist at Beth Israel Deaconess Medical Center and instructor in medicine at Harvard Medical School.

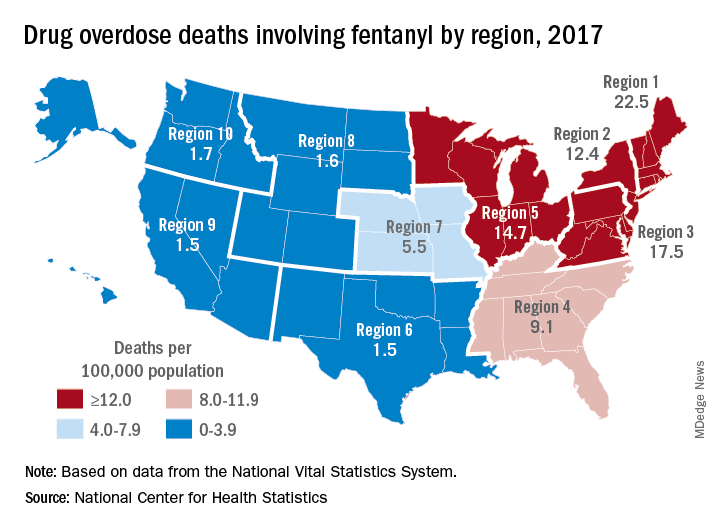

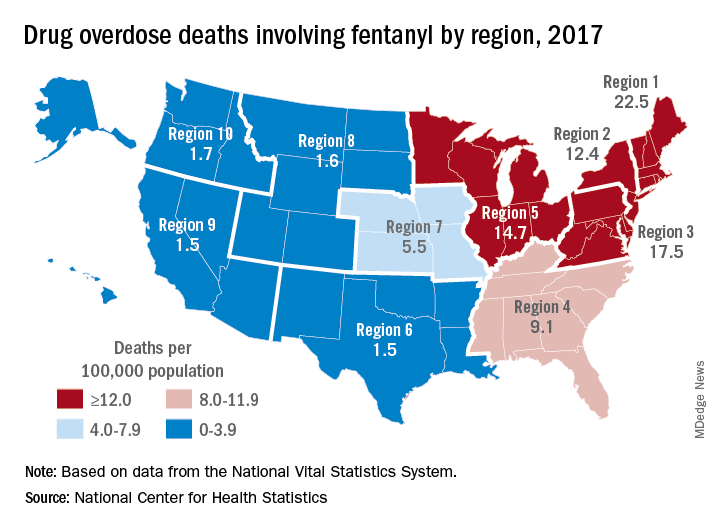

Fentanyl-related deaths show strong regional pattern

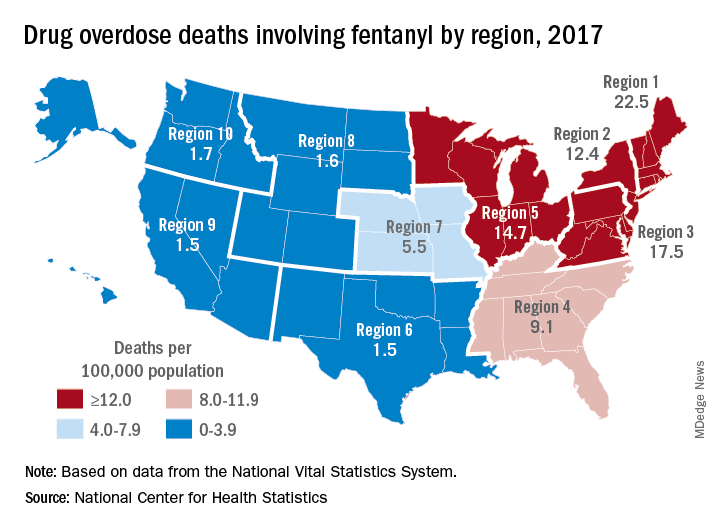

Fentanyl was involved in more overdose deaths than any other drug in 2017, and the death rate in New England was 15 times higher than in regions of the Midwest and West, according to the National Center for Health Statistics.

Nationally, fentanyl was involved in 39% of all drug overdose deaths and had an age-adjusted death rate of 8.7/100,000 standard population in 2017. In 2016, when fentanyl also was the most involved drug in the United States, the corresponding figures were 29% and 5.9/100,000, the agency said in a recent report.

Fentanyl was the most involved drug in overdose deaths for 6 of the country’s 10 public health regions in 2017, and its death rates showed a clear east-to-west decline. The highest death rate (22.5/100,000) occurred in Region 1 (New England) and the lowest (1.5/100,000) in Region 6 (Arkansas, Louisiana, New Mexico, Oklahoma, and Texas) and Region 9 (Arizona, California, Hawaii, and Nevada), the researchers said.

A somewhat similar pattern was seen for heroin, which was second nationally on the list of drugs most frequently involved in overdose deaths (23%), except that New England was somewhat below three other regions in the East and upper Midwest. The highest heroin death rate (8.6/100,000) was seen in Region 2 (New Jersey and New York) and the lowest (2.2) occurred in Region 9, they said, based on data from the National Vital Statistics System’s mortality files.

The fentanyl pattern was even more closely repeated with cocaine, third in involvement nationally at 21% of overdose deaths in 2017. The highest cocaine death rate (9.5/100,000) again occurred in Region 1, and the lowest (1.3/100,000) in Region 9, along with Region 7 (Iowa, Kansas, Missouri, and Nebraska) and Region 10 (Alaska, Idaho, Oregon, and Washington), the report showed.

The regional pattern of overdose deaths for methamphetamine, which was fourth nationally in involvement (13.3%), essentially reversed that of the other three drugs: highest in the West and lowest in the Northeast. Region 9 had the highest death rate (5.2/100,000) and Region 2 the lowest (0.4), with Region 1 just ahead at 0.6.

Smokers with PE have higher rate of hospital readmission

NEW ORLEANS – Smokers with pulmonary embolism (PE) have a higher rate of hospital readmission than nonsmokers, according to a retrospective study.

The rate of readmission was significantly higher among patients with tobacco dependence, and tobacco dependence was independently associated with an increased risk of readmission.

“This is the first study to quantify the increased rate of hospital readmission due to smoking,” said study investigator Kam Sing Ho, MD, of Mount Sinai St. Luke’s and Mount Sinai West, New York.

Dr. Ho and colleagues described this study and its results in a poster presented at the annual meeting of the American College of Chest Physicians.

The researchers analyzed data on 168,891 hospital admissions of adults with PE, 34.2% of whom had tobacco dependence. Patients with and without tobacco dependence were propensity matched for baseline characteristics (n = 24,262 in each group).

The 30-day readmission rate was significantly higher in patients with tobacco dependence than in those without it – 11.0% and 8.9%, respectively (P less than .001). The most common reason for readmission in both groups was PE.

Dr. Ho said the higher readmission rate among patients with tobacco dependence might be explained by smokers’ higher fibrinogen levels, which may affect blood viscosity and contribute to thrombus formation (Proc Am Thorac Soc. 2005;2[1]:71-7).

The investigators also found that tobacco dependence was an independent predictor of readmission (hazard ratio, 1.43; P less than .001). And the mortality rate was significantly higher after readmission than after index admission – 6.27% and 3.15%, respectively (P less than .001).

The increased risk of readmission and death among smokers highlights the importance of smoking cessation services. Dr. Ho cited previous research suggesting these services are underused in the hospital setting (BMJ Qual Improv Rep. 2014;3[1]:u204964.w2110).

“Given that smoking is a common phenomenon among patients admitted with pulmonary embolism, we suggest that more rigorous smoking cessation services are implemented prior to discharge for all active smokers,” Dr. Ho said. “[P]atients have the right to be informed on the benefits of smoking cessation and the autonomy to choose. Future research will focus on implementing inpatient smoking cessation at our hospital and its effect on local readmission rate, health resources utilization, and mortality.”

Dr. Ho has no relevant relationships to disclose.

SOURCE: Ho KS et al. CHEST 2019 October. doi: 10.1016/j.chest.2019.08.1551.

REPORTING FROM CHEST 2019

Oral vs. IV antibiotic therapy in early treatment of complex bone and joint infections

Background: The standard of care for complex bone and joint infections includes the use of IV antibiotics. A prior meta-analysis suggested that the outcomes for bone and joint infections treated with oral and IV antibiotics are similar.

Study design: Randomized, controlled trial.

Setting: Twenty-six U.K. sites during June 2010–October 2015.

Synopsis: The study enrolled 1,054 adults with bone or joint infections who would otherwise have received 6 weeks of IV antibiotics and randomized them to receive either IV or oral antibiotics. Treatment regimens were selected by infectious disease specialists. The primary endpoint was definite treatment failure at 1 year after randomization. It occurred in 14.6% of the IV group and 13.2% of the oral group. The difference in the risk of definite treatment failure between the two groups was –1.4 percentage points (95% confidence interval, –5.6 to 2.9), which met the predefined noninferiority criteria. Oral therapy was also associated with a shorter hospital stay and fewer complications. The trial's conclusions are limited by its open-label design. An accompanying editorial called for additional research before current treatment recommendations are widely changed.

Bottom line: For bone and joint infections, oral antibiotics may yield treatment failure rates similar to those of IV antibiotics.

Citation: Li HK et al. Oral versus intravenous antibiotics for bone and joint infection. N Engl J Med. 2019 Jan 31;380(5):425-36.

Dr. Roy is a hospitalist at Beth Israel Deaconess Medical Center and instructor in medicine at Harvard Medical School.

Glycemic Control eQUIPS yields success at Dignity Health Sequoia Hospital

Glucometrics database aids tracking, trending

In honor of Diabetes Awareness Month, The Hospitalist spoke recently with Stephanie Dizon, PharmD, BCPS, director of pharmacy at Dignity Health Sequoia Hospital in Redwood City, Calif. Dr. Dizon was the project lead for Dignity Health Sequoia’s participation in the Society of Hospital Medicine’s Glycemic Control eQUIPS program. The Northern California hospital was recognized as a top performer in the program.

SHM’s eQUIPS offers a virtual library of resources, including a step-by-step implementation guide, that addresses issues ranging from subcutaneous insulin protocols to care coordination and effective hypoglycemia management. The program also offers access to a data center for performance tracking and benchmarking.

Dr. Dizon shared her experience as a participant in the program and explained its impact on glycemic control at Dignity Health Sequoia Hospital.

Could you tell us about your personal involvement with SHM?

I started as the quality lead for glycemic control at Sequoia Hospital in 2017 while serving as the clinical pharmacy manager. Currently, I am the director of pharmacy.

What inspired your institution to enroll in the GC eQUIPS program? What were the challenges it helped you address?

Sequoia Hospital started on this journey to improve overall glycemic control in 2011, as part of a collaborative with eight other Dignity Health hospitals. At Sequoia Hospital, this effort was led by Karen Harrison, RN, MSN, CCRN. At the time, Dignity Health saw variations in insulin management and adverse events. This inspired the group to review their practices and look for a better way to standardize them. The hope was that sharing information and standardizing practices would lead to better glycemic control.

Enrollment in the GC eQUIPS program helped Sequoia Hospital efficiently analyze data that would otherwise be too large to manage. Tracking and trending these large data sets also helped us see where the hospital’s greatest challenges in glycemic control are and observe the impact of the changes we made. We were part of a nine-site study that proved the effectiveness of GC eQUIPS and highlighted the collective success across the health system.

What did you find most useful in the suite of resources included in eQUIPS?

The benchmarking webinars and informational webinars that have been provided by Greg Maynard, MD, over the years have been especially helpful. They have broadened my understanding of glycemic control. The glucometrics database is especially helpful for tracking and trending – we share these reports on a monthly basis with nursing and provider leadership. In addition, being able to benchmark ourselves with other hospitals pushes us to improve and keep an eye on glycemic control.

Are there any other highlights from your and your institution’s participation in the program that you feel would benefit others who may be considering enrollment?

Having access to the tools available in the GC eQUIPS program is very powerful for data analysis and benchmarking. As a result, it allows the people at an institution to focus on the day-to-day tasks, clinical initiatives, and building a culture that can make a program successful instead of focusing on data collection.

For more information on SHM’s Glycemic Control resources or to enroll in eQUIPS, visit hospitalmedicine.org/gc.