‘Striking’ increase in childhood obesity during pandemic

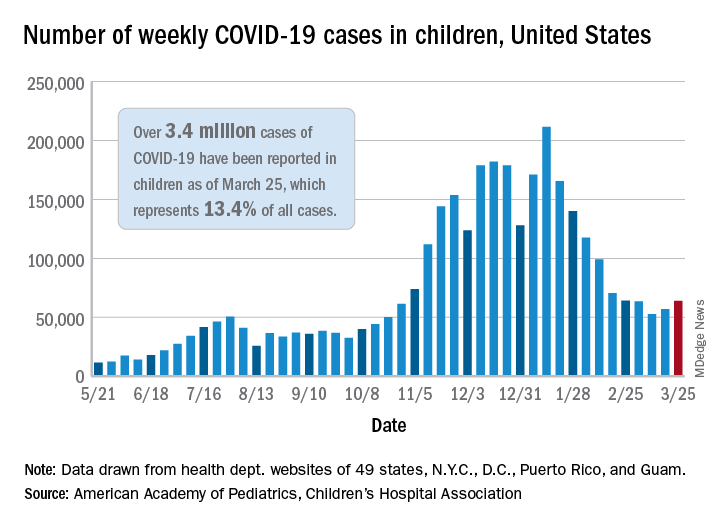

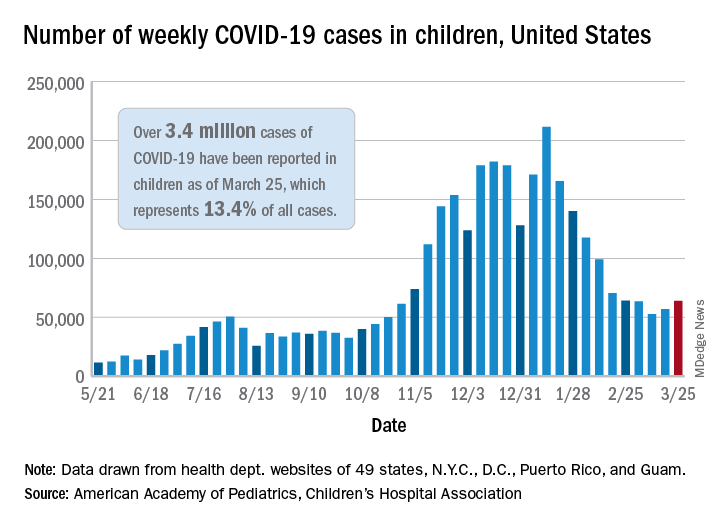

Obesity rates among children jumped substantially in the first months of the COVID-19 pandemic, according to a study published online in Pediatrics. Experts worry the excess weight will be a continuing problem for these children.

“Across the board in the span of a year, there has been a 2% increase in obesity, which is really striking,” lead author Brian P. Jenssen, MD, said in an interview.

The prevalence of obesity in a large pediatric primary care network increased from 13.7% to 15.4%, an absolute rise of 1.7 percentage points.

Preexisting disparities by race or ethnicity and socioeconomic status worsened, noted Dr. Jenssen, a primary care pediatrician affiliated with Children’s Hospital of Philadelphia (CHOP) and the University of Pennsylvania, Philadelphia.

Dr. Jenssen and colleagues compared the average obesity rate from June to December 2020 with the rate from June to December 2019 among patients in the CHOP Care Network, which includes 29 urban, suburban, and semirural clinics in the Philadelphia region. In June 2020, the volume of patient visits “returned to near-normal” after a dramatic decline in March 2020, the study authors wrote.

The investigators examined body mass index at all visits for patients aged 2-17 years whose height and weight were documented. Patients with a BMI at or above the 95th percentile for age and sex were classified as obese. The analysis included approximately 169,000 visits in 2019 and about 145,000 in 2020.

The average age of the patients was 9.2 years, and 48.9% were girls. In all, 21.4% were non-Hispanic Black, and about 30% were publicly insured.

Increases in obesity rates were more pronounced among patients aged 5-9 years and among patients who were Hispanic/Latino, non-Hispanic Black, publicly insured, or from lower-income neighborhoods.

The obesity rate rose 2.6 percentage points among patients aged 5-9 years, compared with 1 percentage point among those aged 13-17 years.

Nearly 25% of Hispanic/Latino or non-Hispanic Black patients seen during the pandemic were obese, compared with 11.3% of non-Hispanic White patients. Before the pandemic, the gap by race or ethnicity had been about 10-11 percentage points.

Limiting the analysis to preventive visits did not meaningfully change the results, wrote Dr. Jenssen and colleagues.

“Having any increase in the obesity rates is alarming,” said Sandra Hassink, MD, medical director for the American Academy of Pediatrics’ (AAP’s) Institute for Healthy Childhood Weight. “I think what we’re seeing is what we feared.”

Before the pandemic, children received appropriately portioned breakfasts and lunches at school, but during the pandemic they had less access to such meals, the academy noted. Disruptions to schooling, easier access to unhealthy snacks, increased screen time, and economic issues such as parents' job losses were further factors, Dr. Hassink said.

Tackling the weight gain

In December 2020, the AAP issued two clinical guidance documents highlighting the importance of addressing obesity during the pandemic. The recommendations included counseling families on healthy nutrition, minimizing sedentary time, and getting enough sleep and physical activity. They also called for assessing all patients for new-onset obesity and continuing treatment for patients who already have obesity.

In addition to clinical assessments and guidance, Dr. Jenssen emphasized that a return to routines may be crucial. Prepandemic studies have shown that many children, especially those insured by Medicaid, gain more weight during the summer when they are out of school, he noted. Many of the same factors are present during the pandemic, he said.

“One solution, and probably the most important solution, is getting kids back in school,” Dr. Jenssen said. School disruptions also have affected children’s learning and mental health, but those effects may be harder to quantify than BMI, he said.

Dr. Jenssen suggests that parents do their best to model good routines and habits. For example, they might decide that they and their children will stop drinking soda as a family, or opt for an apple instead of a bag of chips. They can walk around the house or up and down stairs when talking. “Those sorts of little things can make a big difference in the long run,” Dr. Jenssen said.

Clinicians should address obesity in a compassionate and caring way, be aware of community resources to help families adopt healthy lifestyles, and “look for the comorbidities of obesity,” such as type 2 diabetes, liver disease, sleep apnea, knee problems, and hypertension, Dr. Hassink said.

Policies that address other factors, such as the cost of healthy foods and the marketing of unhealthy foods, may also be needed, Dr. Hassink said.

“I’ve always thought of obesity as kind of the canary in the coal mine,” Dr. Hassink said. “It is important to keep our minds on the fact that it is a chronic disease. But it also indicates a lot of things about how we are able to support a healthy population.”

Potato chips, red meat, and sugary drinks

Other researchers have assessed how healthy behaviors tended to take a turn for the worse when routines were disrupted during the pandemic. Steven B. Heymsfield, MD, a professor in the metabolism and body composition laboratory at Pennington Biomedical Research Center, Louisiana State University System, Baton Rouge, and collaborators documented how diet and activity changed for children during the pandemic.

Dr. Heymsfield worked with researchers in Italy to examine changes in behavior among 41 children and adolescents with obesity in Verona, Italy, during an early lockdown.

As part of a longitudinal observational study, the researchers had baseline data on diet and physical activity from interviews conducted from May to July 2019. They repeated the interviews 3 weeks into a mandatory quarantine.

Intake of potato chips, red meat, and sugary drinks had increased, time spent in sports activities had decreased by more than 2 hours per week, and screen time had increased by more than 4 hours per day, the researchers found. Their study was published in Obesity.

Unpublished follow-up data indicate that “there was further deterioration in the diets and activity patterns” for some but not all of the participants, Dr. Heymsfield said.

He said he hopes that children who developed obesity during the pandemic will lose weight once routines return to normal, but he added that it is unclear whether that will happen.

“My impression from the limited written literature on this question is that for some kids who gain weight during the lockdown or, by analogy, the summer months, the weight doesn’t go back down again. It is not universal, but it is a known phenomenon that it is a bit of a ratchet,” he said. “They just sort of slowly ratchet their weights up, up to adulthood.”

Recognizing weight gain during the pandemic may be an important first step.

“The first thing is not to ignore it,” Dr. Heymsfield said. “Anything that can be done to prevent excess weight gain during childhood – not to promote anorexia or anything like that, but just being careful – is very important, because these behaviors are formed early in life, and they persist.”

CHOP supported the research. Dr. Jenssen and Dr. Hassink have disclosed no relevant financial relationships. Dr. Heymsfield is a medical adviser for Medifast, a weight loss company.

A version of this article first appeared on Medscape.com.

New guidelines on the diagnosis and treatment of adults with CAP

Background: More than a decade has passed since the last community-acquired pneumonia (CAP) guidelines were published in 2007. Since then, new trials and epidemiological studies have appeared, and the process for developing guidelines has changed. This guideline moves away from the narrative style of earlier guidelines to the GRADE format and the PICO framework. Its aim is to answer specific clinical questions based on the quality of the available evidence.

Study design: A multidisciplinary panel conducted pragmatic systematic reviews of high-quality studies.

Setting: The panel revised and built upon the 2007 guidelines. It addressed 16 clinical questions relevant to immunocompetent patients in the United States who have radiographic evidence of CAP and no recent foreign travel.

Synopsis: The changes from the 2007 guidelines are as follows:
- Sputum and blood cultures were previously recommended only in patients with severe CAP. They are now also recommended for inpatients being empirically treated for Pseudomonas or methicillin-resistant Staphylococcus aureus (MRSA), and for those who have received IV antibiotics in the previous 90 days.
- Procalcitonin is not recommended for deciding whether to withhold antibiotics.
- Corticosteroids are not recommended unless they are used to treat shock.
- The health care–associated pneumonia (HCAP) category should be abandoned. The need for empiric MRSA and Pseudomonas coverage should instead be based on local epidemiology and locally validated risk factors.
- β-lactam/macrolide therapy is favored over fluoroquinolone-based therapy for severe CAP.
- Routine follow-up chest x-ray is not recommended.

Other recommendations include the following:
- Do not routinely test for urinary pneumococcal or Legionella antigens in nonsevere CAP.
- Use the Pneumonia Severity Index (PSI) rather than CURB-65, together with clinical judgment, to determine the need for inpatient care.
- Use severe CAP criteria and clinical judgment to determine the need for ICU admission.
- Do not add anaerobic coverage for aspiration pneumonia.
- Treat most patients with uncomplicated CAP who are clinically stable for 5-7 days.

Bottom line: In light of new evidence, the updated guidelines aim to optimize the diagnosis and treatment of CAP.

Citation: Metlay JP et al. Diagnosis and treatment of adults with community-acquired pneumonia: An official clinical practice guideline of the American Thoracic Society and Infectious Diseases Society of America. Am J Respir Crit Care Med. 2019 Oct 1;200(7):e45-67.

Dr. Horton is a hospitalist and clinical instructor of medicine at the University of Utah, Salt Lake City.

Cutaneous Manifestation as Initial Presentation of Metastatic Breast Cancer: A Systematic Review

Breast cancer is the second most common malignancy in women (after primary skin cancer) and the second leading cause of cancer-related death in this population. In 2020, the American Cancer Society reported an estimated 276,480 new breast cancer diagnoses and 42,170 breast cancer–related deaths.1 Routine screening with mammography and sonography is standard. Even so, the incidence of advanced breast cancer at the time of diagnosis has remained stable over time, which suggests that life-threatening breast cancers are not being caught at an earlier stage. The number of breast cancers with distant metastases at the time of diagnosis also has not decreased.2 Screening tests, therefore, are valuable but imperfect.

Cutaneous metastasis is defined as the spread of malignant cells from an internal neoplasm to the skin, which can occur either by contiguous invasion or by distant metastasis through hematogenous or lymphatic routes.3 The diagnosis of cutaneous metastasis requires a high index of suspicion on the part of the clinician.4 Of the various internal malignancies in women, breast cancer most frequently results in metastasis to the skin,5 with up to 24% of patients with metastatic breast cancer developing cutaneous lesions.6

In recent years, there have been multiple reports of skin lesions prompting the diagnosis of a previously unknown breast cancer. In a study by Lookingbill et al,6 6.3% of patients with breast cancer presented with cutaneous involvement at the time of diagnosis, with 3.5% having skin symptoms as the presenting sign. Although there have been studies analyzing cutaneous metastasis from various internal malignancies, none thus far have focused on cutaneous metastasis as a presenting sign of breast cancer. This systematic review aimed to highlight the diverse clinical presentations of cutaneous metastatic breast cancer and their clinical implications.

Methods

Study Selection

This review followed the PRISMA guidelines for systematic reviews.7 The literature was searched in the following databases: MEDLINE/PubMed, EMBASE, Cochrane Library, CINAHL, and EBSCO.

Search Strategy and Analysis

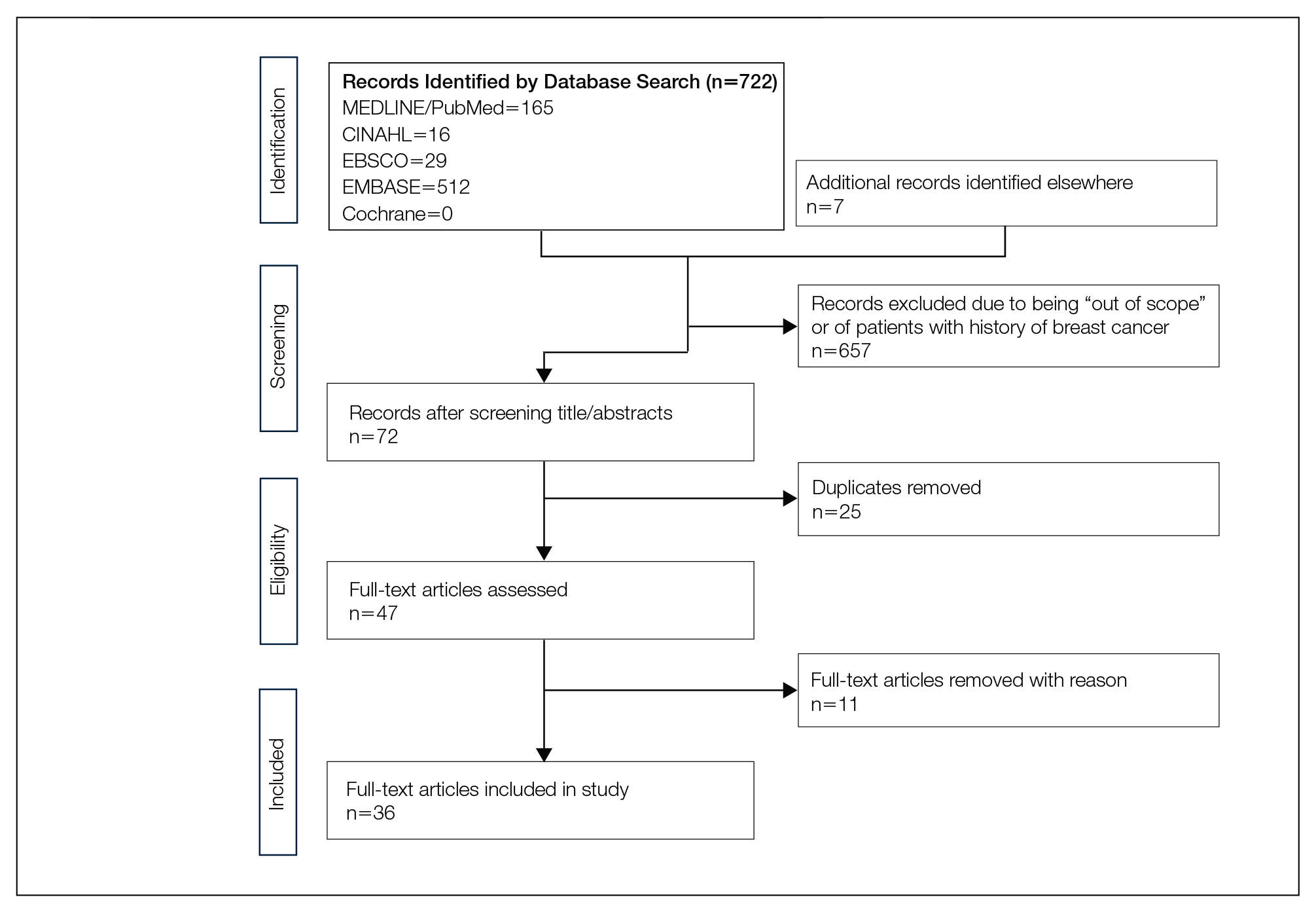

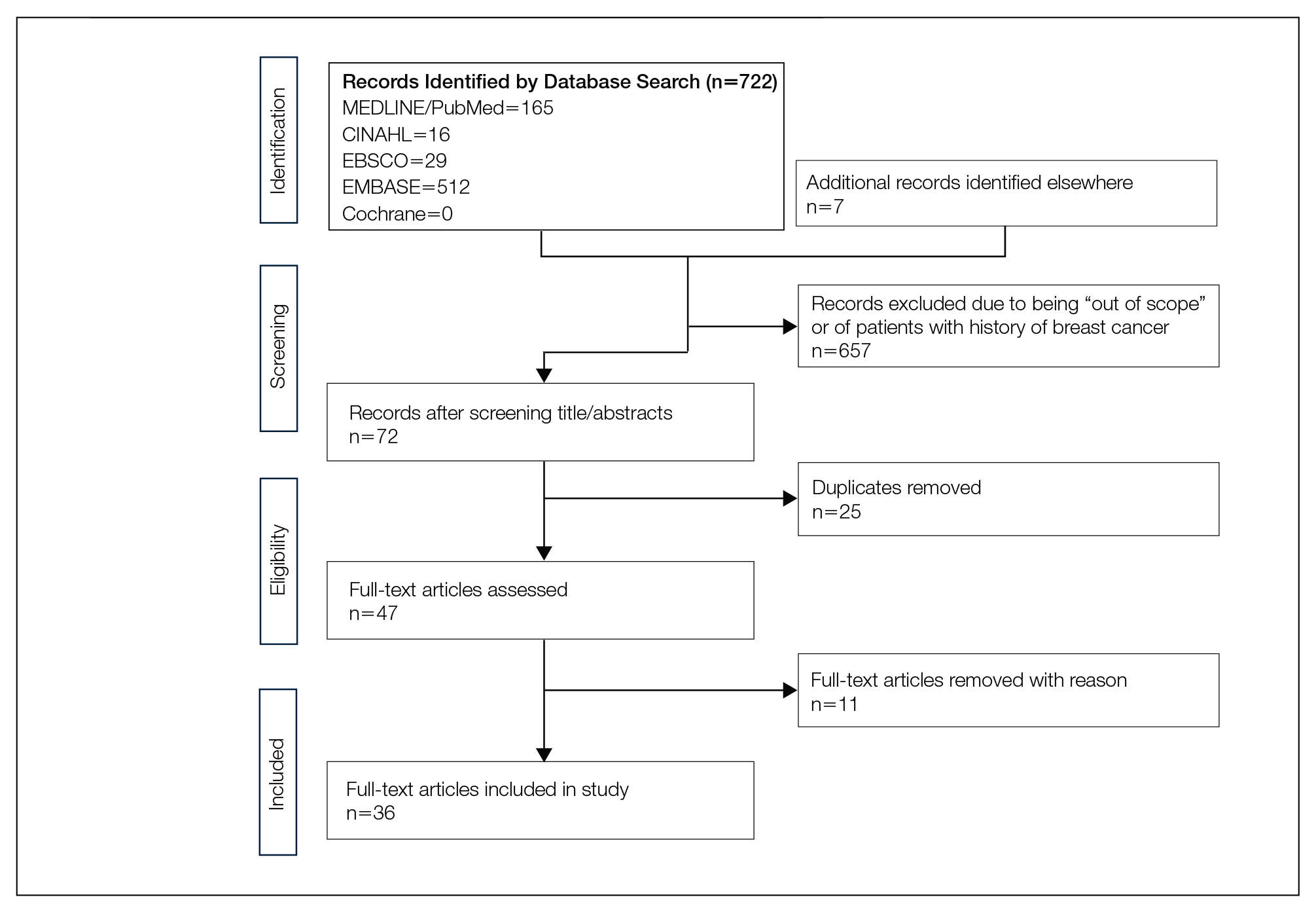

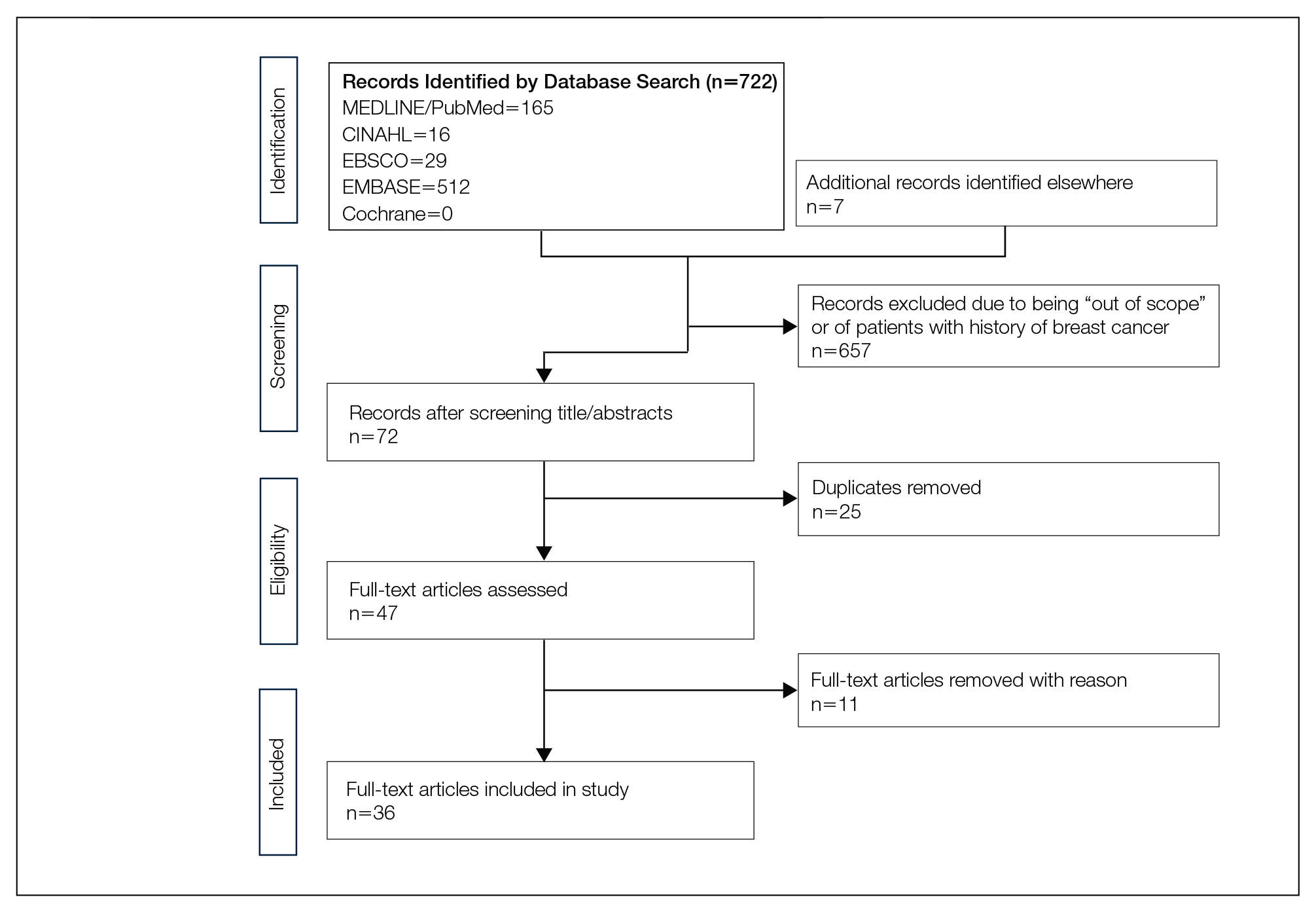

We completed our search of each database on December 16, 2017, using the terms “cutaneous metastasis” and “breast cancer” to identify relevant case reports and retrospective studies. Three authors (C.J., S.R., and M.A.) manually reviewed the resulting abstracts. If an abstract did not contain enough information to determine eligibility, 2 of the authors (C.J. and S.R.) reviewed the full-text version. Two of the authors (C.J. and M.A.) also assessed each source for relevance and included the articles deemed eligible (Figure 1).

Inclusion criteria were case reports and retrospective studies published in the prior 10 years (January 1, 2007, to December 16, 2017) involving human female patients who developed metastatic cutaneous lesions from a previously unknown primary breast malignancy. Studies published in languages other than English were included and translated into English by a human translator or a computer translation program (Google Translate). Exclusion criteria were male patients, patients with a known diagnosis of primary breast malignancy before the appearance of a metastatic cutaneous lesion, articles focusing on the treatment of breast cancer, and articles without sufficient detail to draw meaningful conclusions.

To be included, a retrospective review had to specify the number of breast cancer cases and the number of cutaneous metastases presenting before or simultaneously with the breast cancer diagnosis. Bansal et al8 defined a simultaneous diagnosis as a skin lesion presenting together with other concerns associated with the primary malignancy.

Results

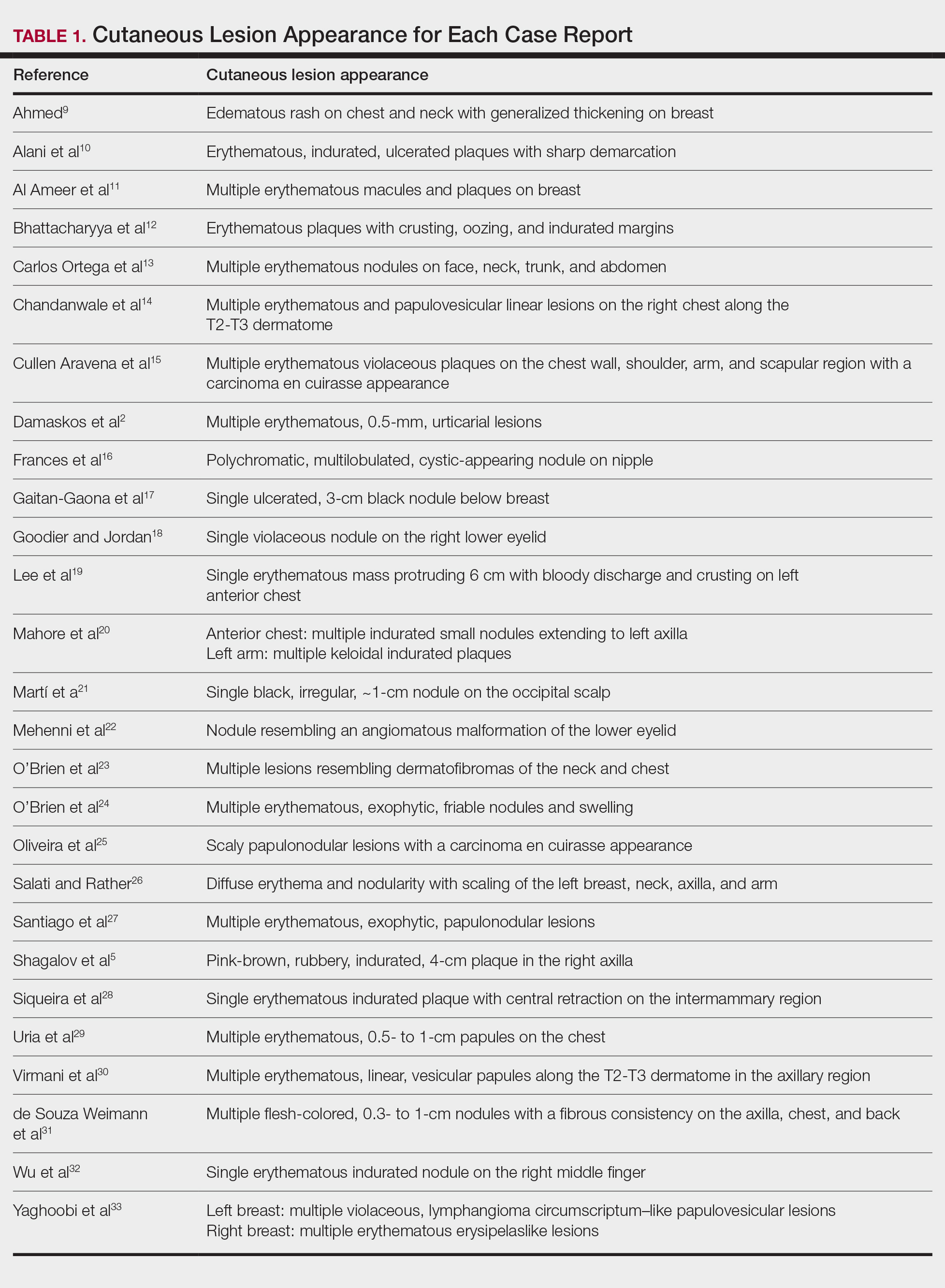

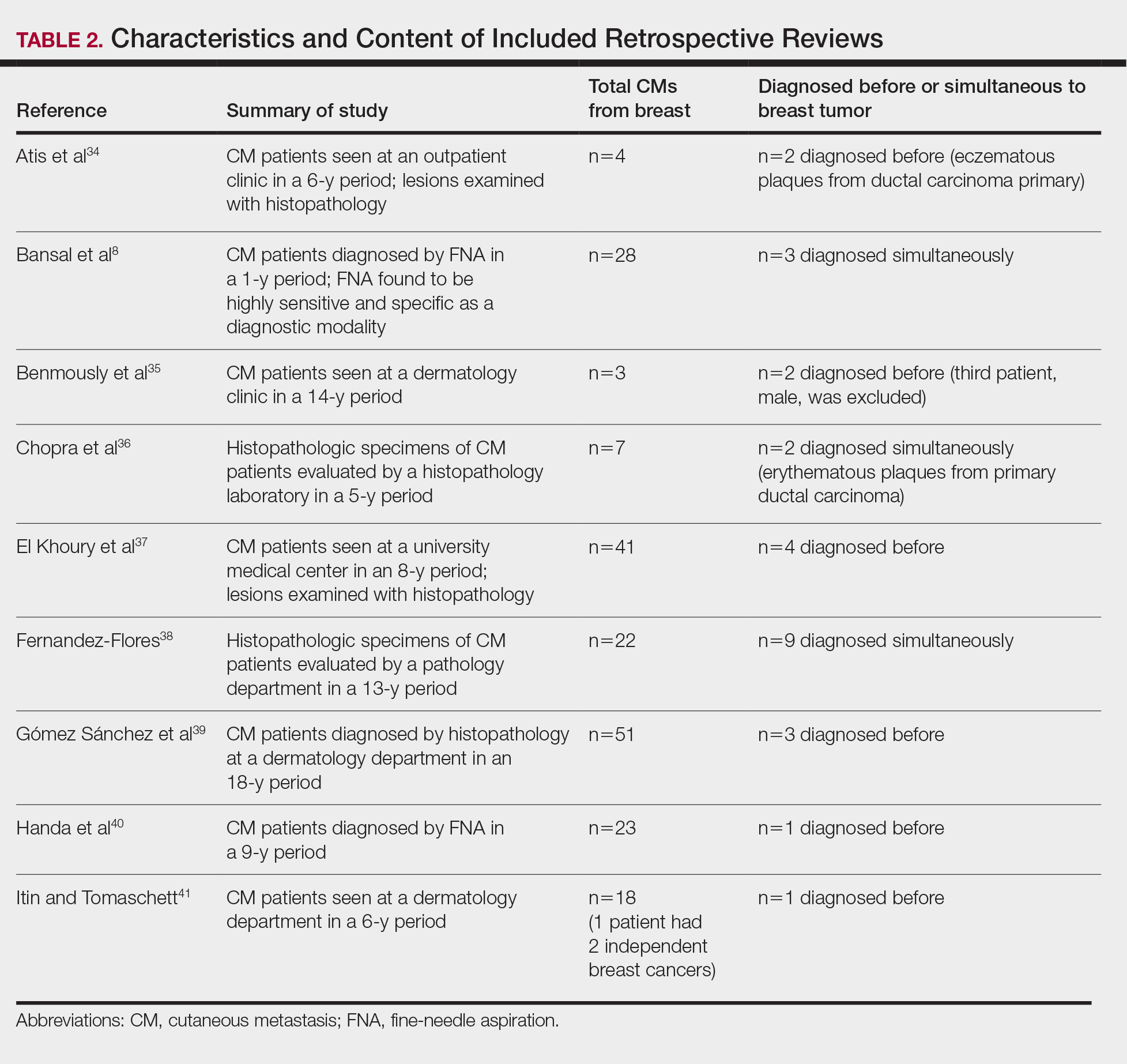

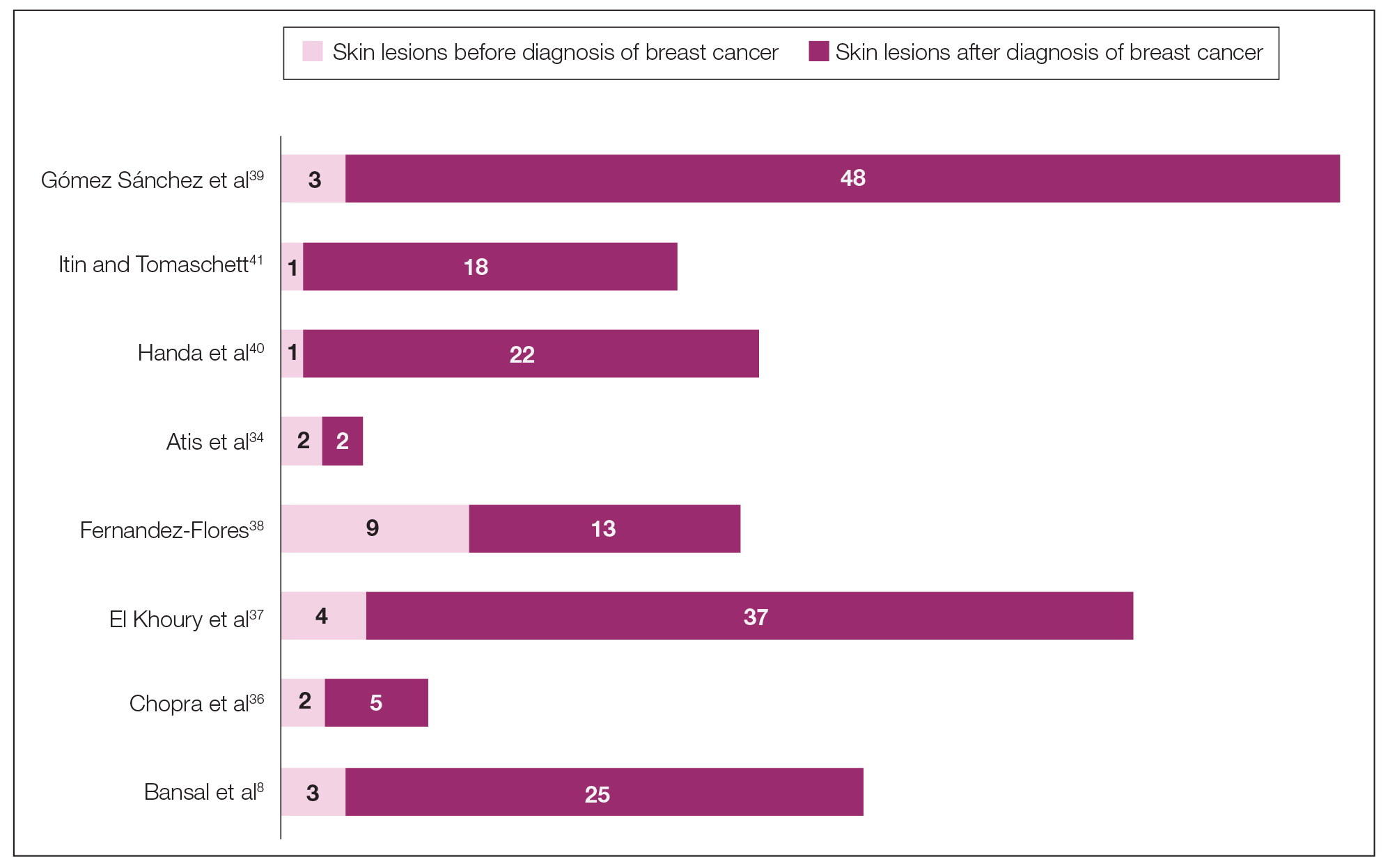

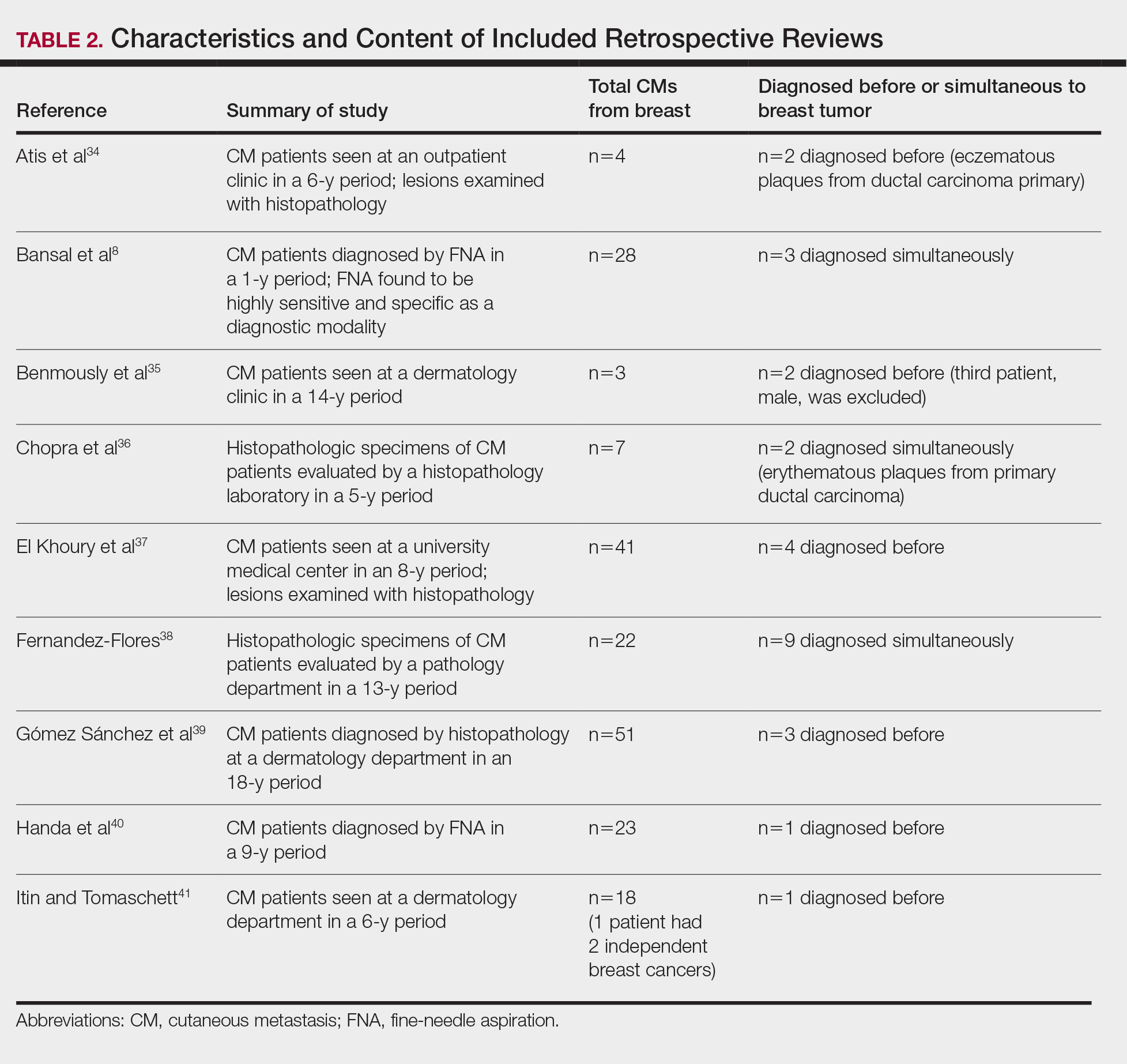

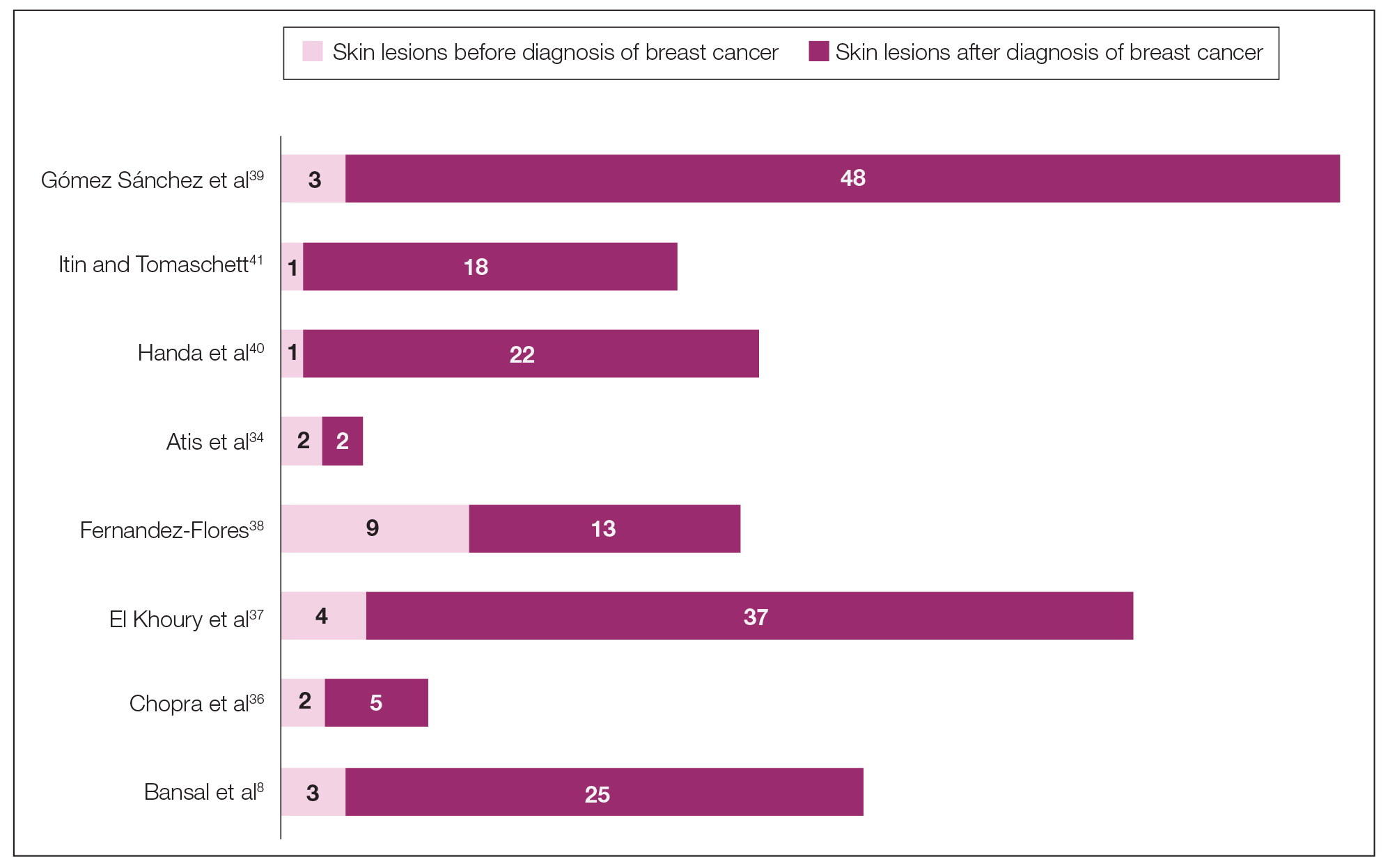

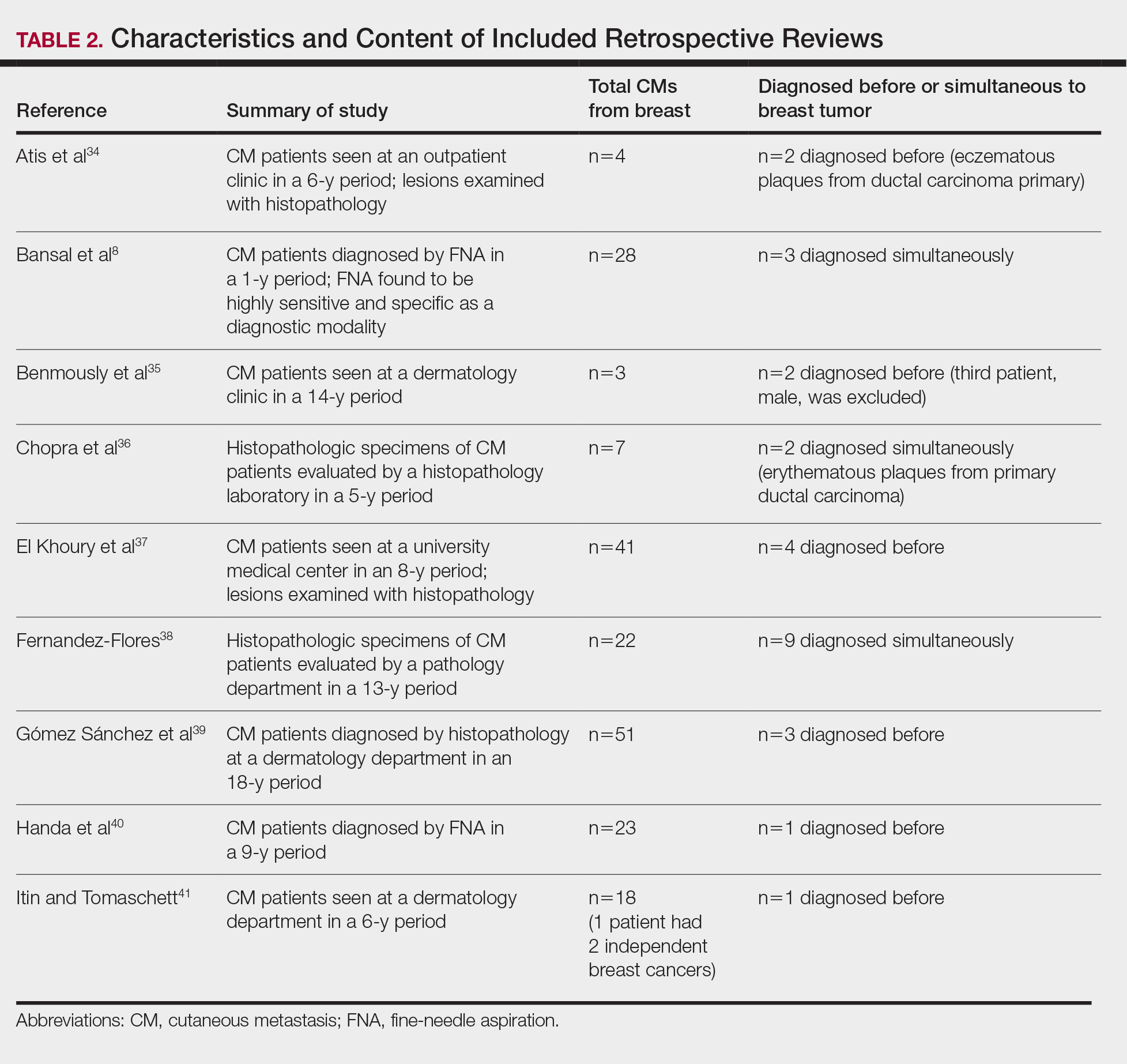

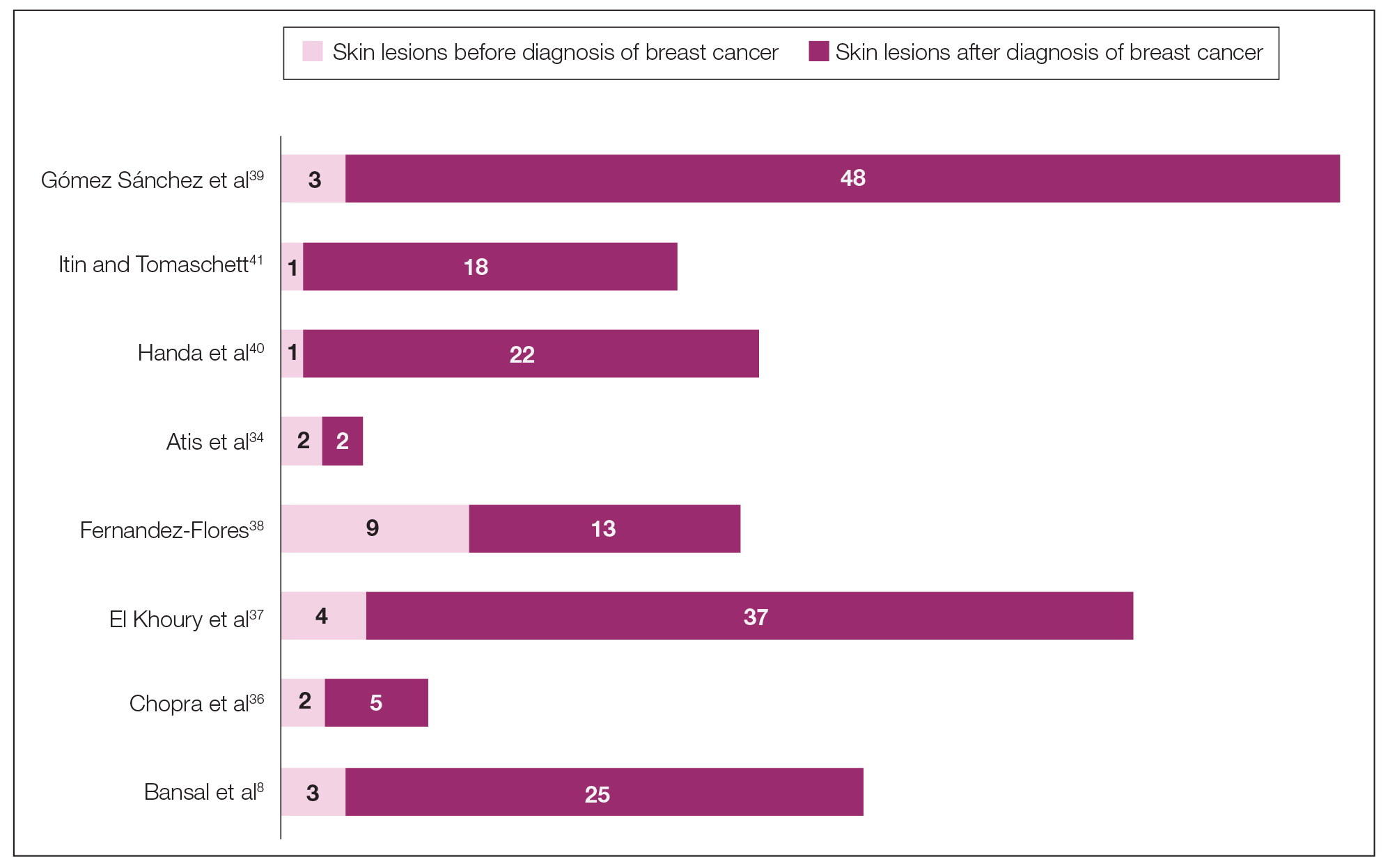

The initial search of MEDLINE/PubMed, EMBASE, Cochrane Library, CINAHL, and EBSCO yielded a total of 722 articles, and 7 additional articles identified separately during our initial research were added to this total. Abstracts were manually screened, and 657 articles were discarded for failing to meet the predetermined inclusion criteria. After removal of 25 duplicate articles, the full texts of the remaining 47 articles were reviewed, leading to the exclusion of an additional 11 articles that did not meet the necessary criteria. This resulted in 36 articles (Figure 1), including 27 individual case reports (Table 1) and 9 retrospective reviews (Table 2). Approximately 13.7% of patients in the 9 retrospective reviews presented with a skin lesion before or simultaneously with the diagnosis of breast cancer (Figure 2).
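As a consistency check on the selection counts reported above, the flow can be recomputed step by step. This is a minimal sketch; the variable names are illustrative, and the order of steps follows the text (abstract screening, then duplicate removal, then full-text review) rather than Figure 1, which is not reproduced here.

```python
# Article counts as reported in the Results text.
database_hits = 722          # MEDLINE/PubMed, EMBASE, Cochrane Library, CINAHL, EBSCO
other_sources = 7            # articles identified separately
excluded_on_abstract = 657   # failed the predetermined inclusion criteria
duplicates_removed = 25
excluded_on_full_text = 11
case_reports = 27            # Table 1
retrospective_reviews = 9    # Table 2

# Recompute each stage of the selection flow in the order the text describes.
identified = database_hits + other_sources
after_abstract_screen = identified - excluded_on_abstract
full_text_reviewed = after_abstract_screen - duplicates_removed
included = full_text_reviewed - excluded_on_full_text

# Each stage should agree with the counts stated in the text.
assert full_text_reviewed == 47
assert included == case_reports + retrospective_reviews

print(identified, after_abstract_screen, full_text_reviewed, included)
```

Every stage reconciles: 729 articles identified, 72 remaining after abstract screening, 47 reviewed in full text, and 36 included.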

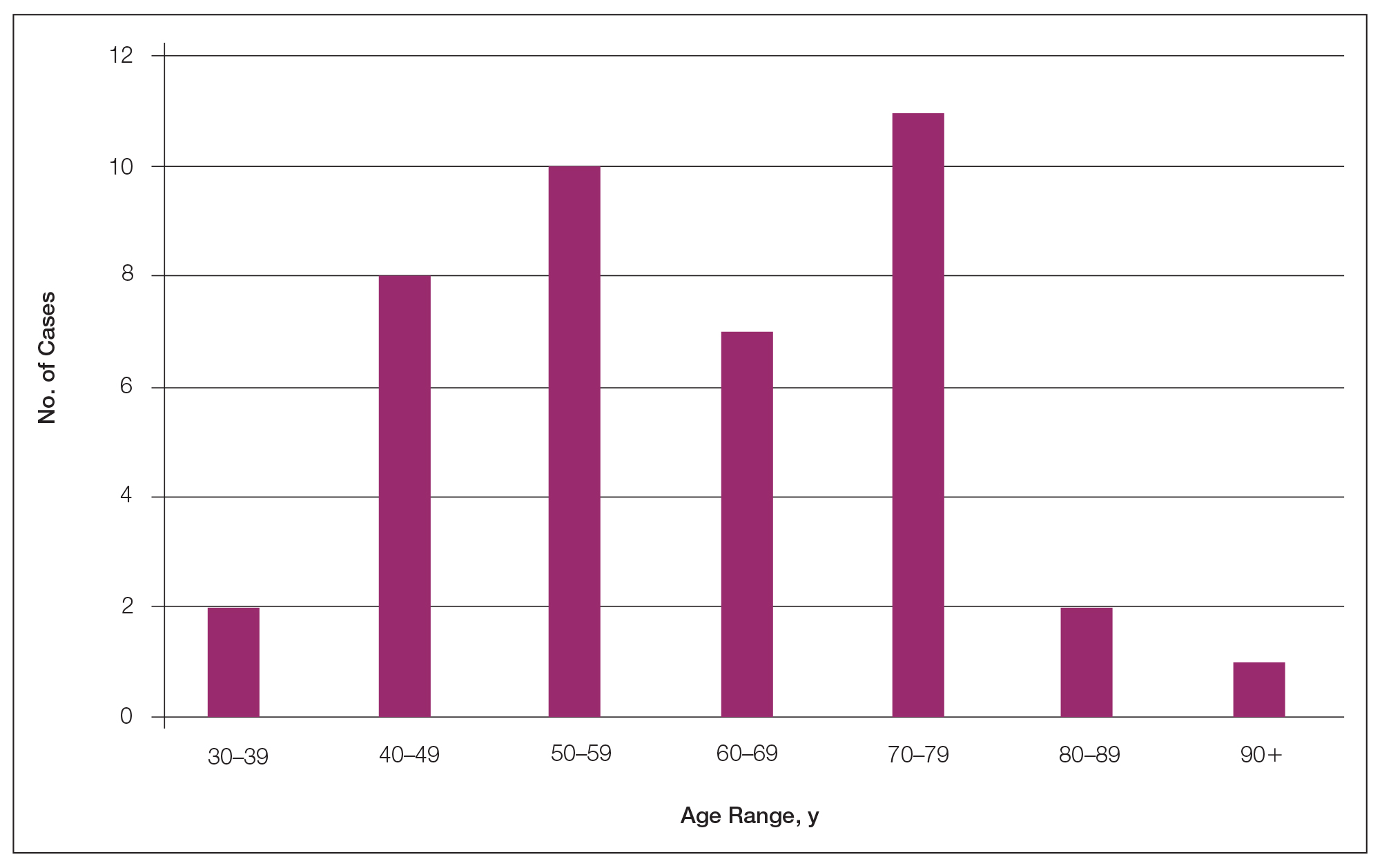

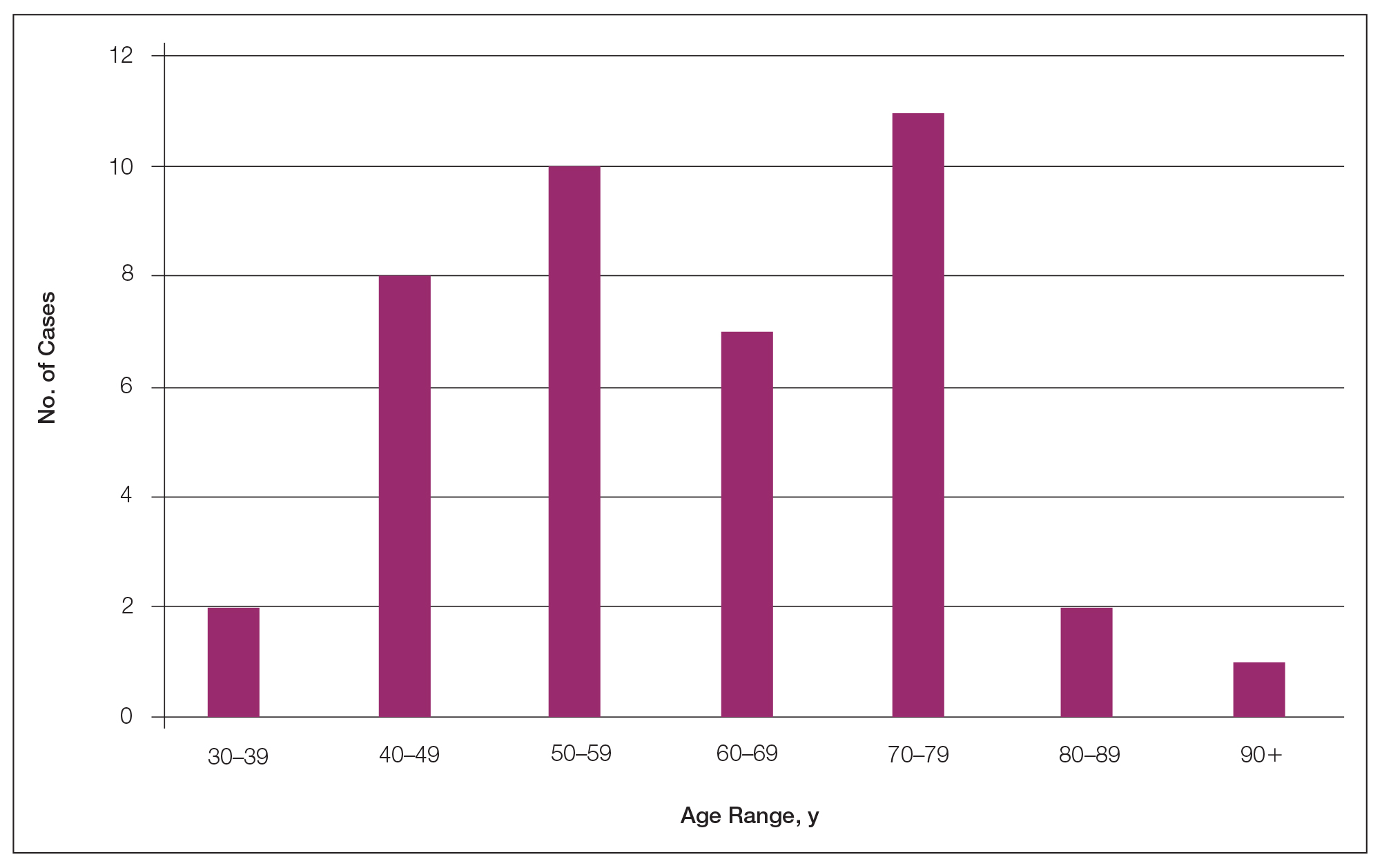

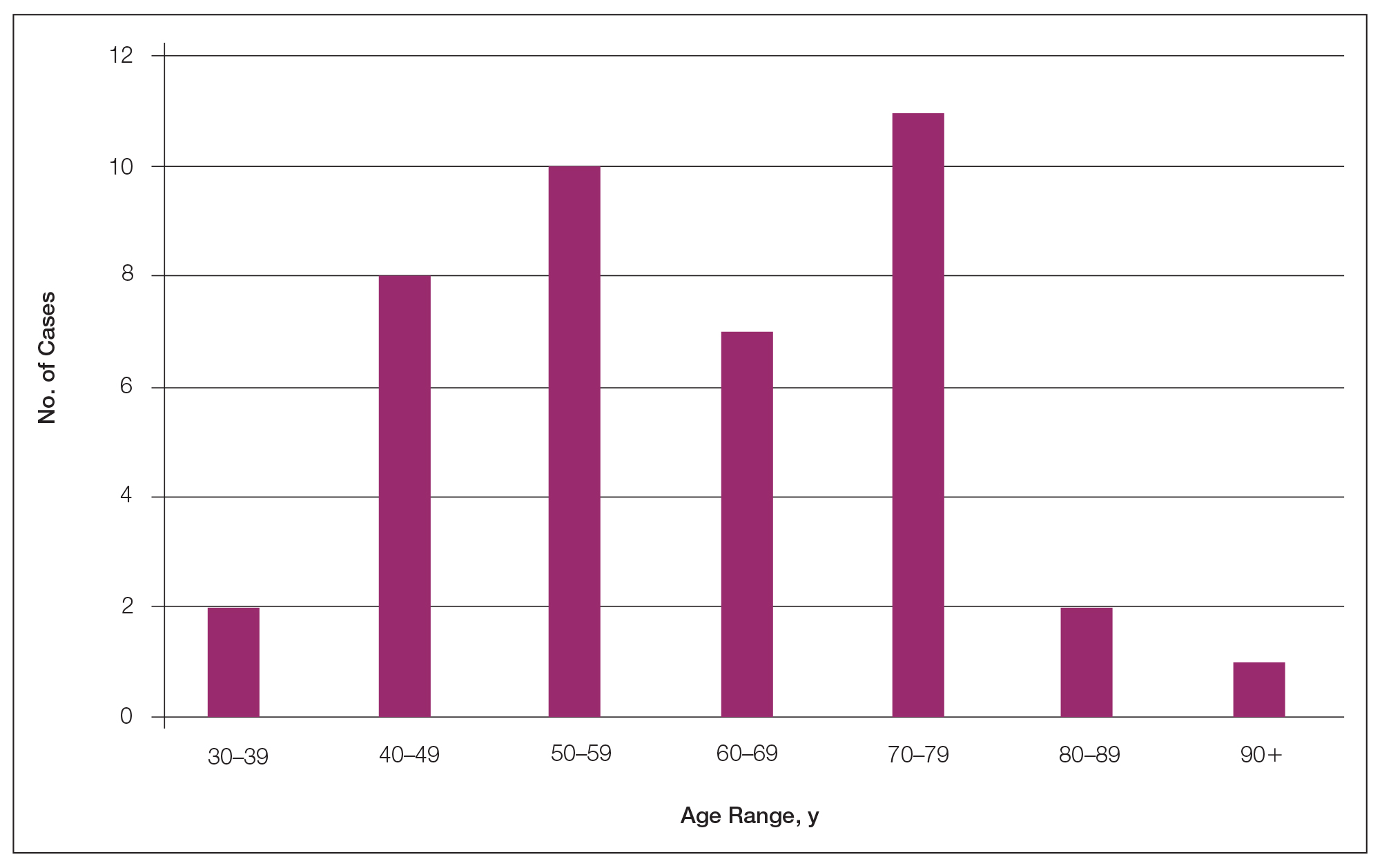

Forty-one percent (17/41) of the patients with cutaneous metastasis as a presenting feature of breast cancer fell outside the age range for breast cancer screening recommended by the US Preventive Services Task Force (50-74 years),42 with 24% of patients younger than 50 years and 17% older than 74 years (Figure 3).
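The age grouping above can be reproduced with a short sketch. It assumes the USPSTF screening window of 50 to 74 years implied by the text, and the patient counts of 10 and 7 are back-calculated from the rounded percentages (24% and 17% of 41), not taken from the source tables.

```python
# Screening window implied by the text (USPSTF: women aged 50-74 years).
SCREEN_MIN_AGE = 50
SCREEN_MAX_AGE = 74


def screening_category(age: int) -> str:
    """Place a patient's age relative to the 50-74 screening window."""
    if age < SCREEN_MIN_AGE:
        return "younger than 50"
    if age > SCREEN_MAX_AGE:
        return "older than 74"
    return "within 50-74"


# Assumption: counts back-calculated from the rounded percentages in the text.
total_patients = 41
younger, older = 10, 7
outside = younger + older

for label, count in [("younger than 50", younger),
                     ("older than 74", older),
                     ("outside window", outside)]:
    print(f"{label}: {count}/{total_patients} = {count / total_patients:.0%}")
```

With these counts the rounded shares come out at 24%, 17%, and 41%, matching the reported figures.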

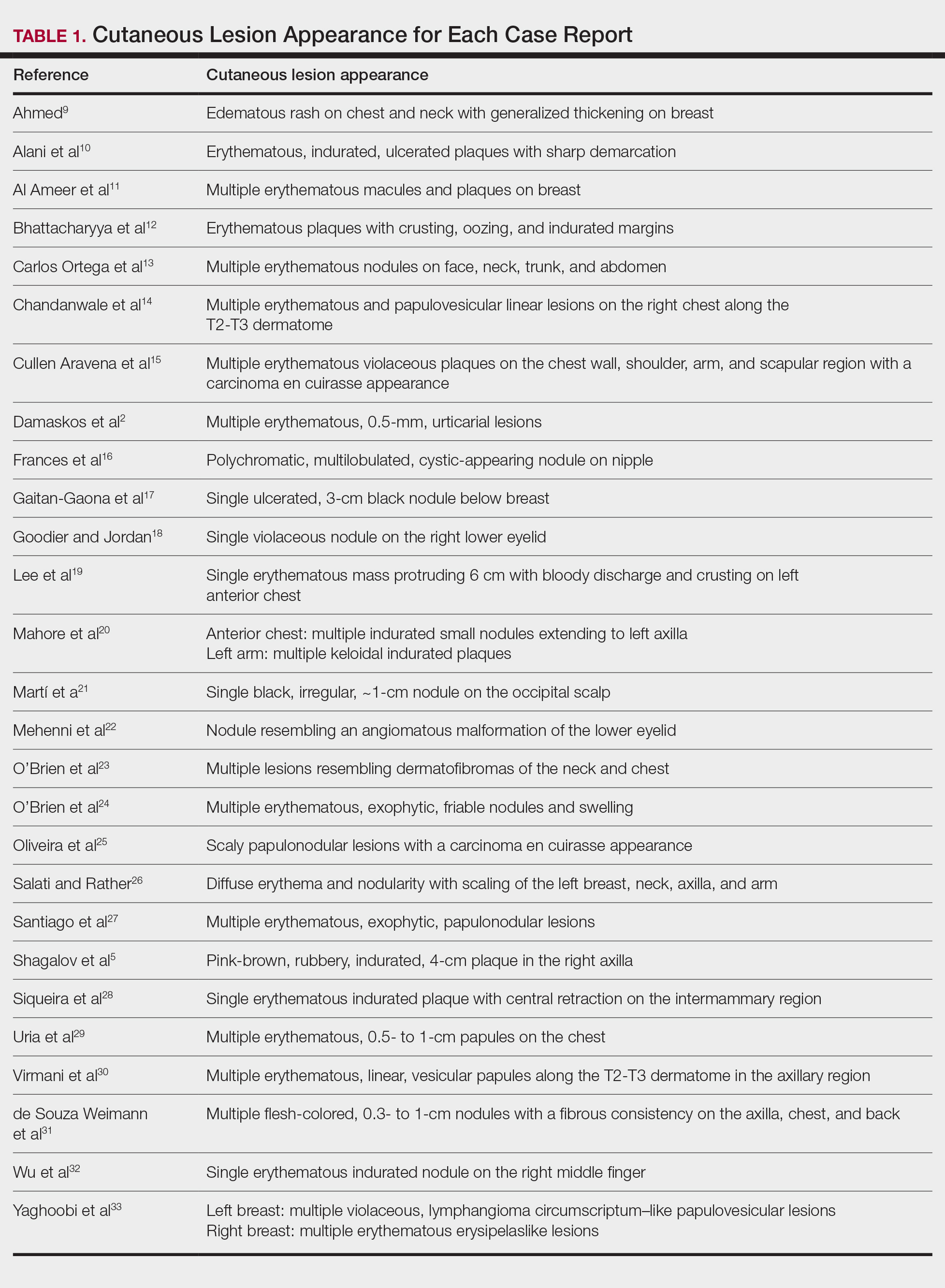

Lesion Characteristics

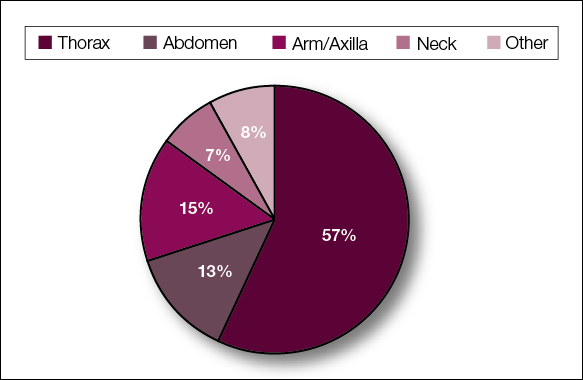

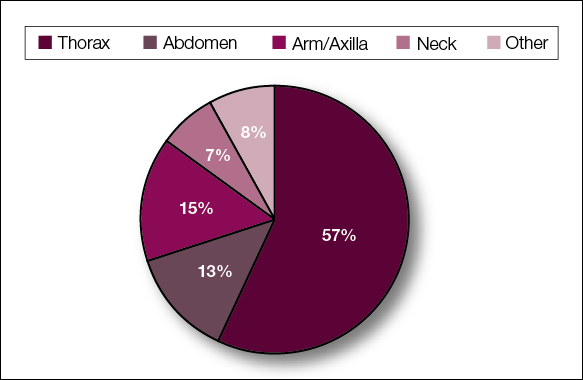

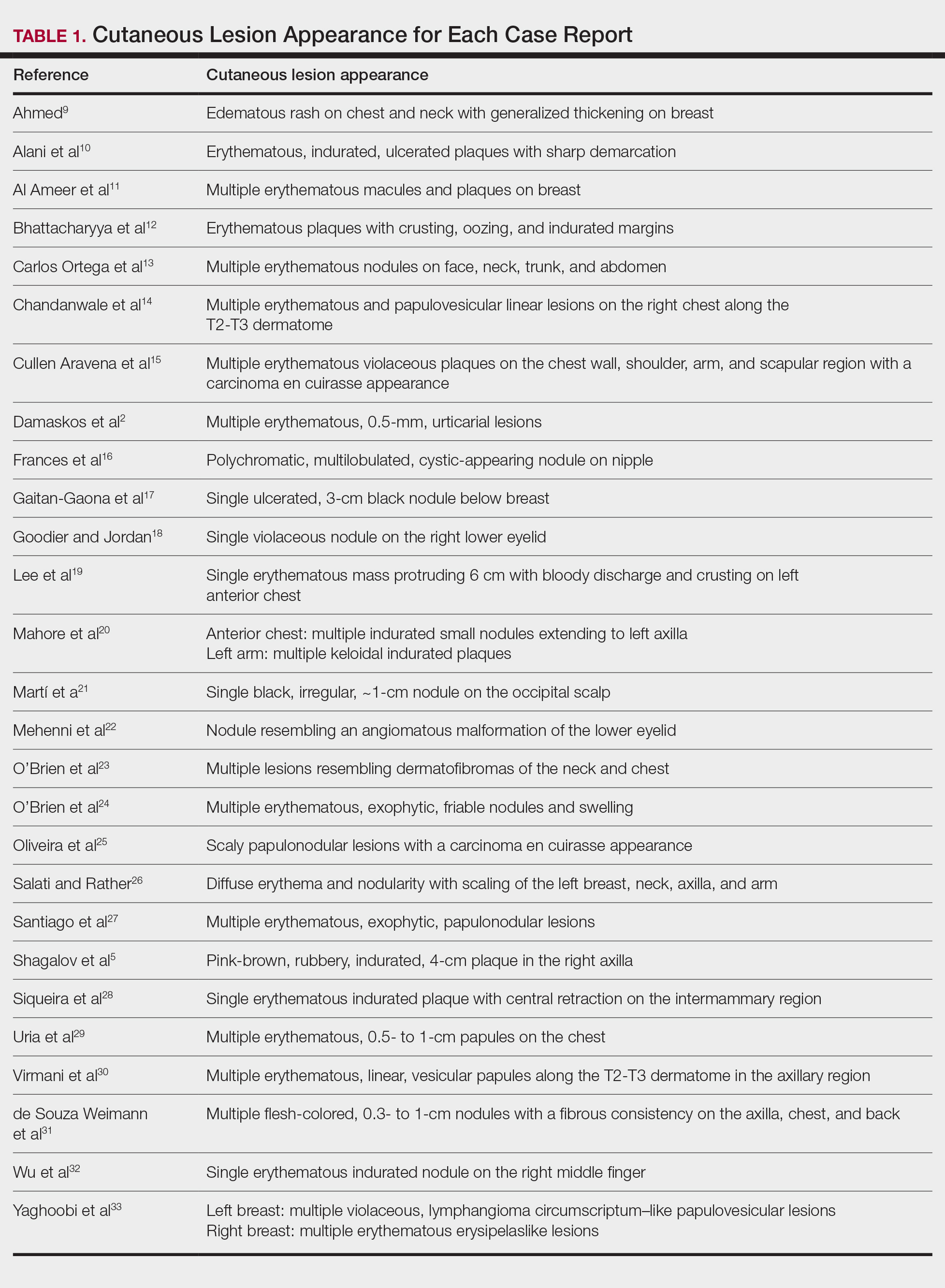

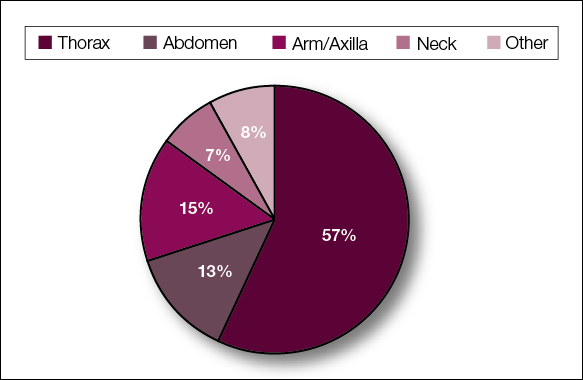

The most common cutaneous lesions were erythematous nodules and plaques, with a few reports of black17,21 or flesh-colored5,20,31 lesions, as well as ulceration.8,17,32 The most common location for skin lesions was the thorax (chest or breast), accounting for 57% of the cutaneous metastases, followed by the arms and axillae (15%) (Figure 4). When lesions extended to multiple regions, each involved region was recorded separately in the analysis. An additional 5 cases, shown as “Other” in Figure 4, involved the eyelids, occiput, and finger. Eight case reports described symptoms associated with the cutaneous lesions, with painful or tender lesions reported in 7 cases5,9,14,17,20,30,32 and pruritus in 2 cases.12,20 In addition, 6 case reports described patients who denied any systemic or associated symptoms related to their skin lesions.2,5,9,16,17,28 Several cases were initially misdiagnosed and treated as other conditions, including herpes zoster14,30 and dermatitis.11,12
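Because each involved region was recorded separately, the location percentages in Figure 4 are presumably shares of location records rather than of patients, so a patient with lesions in 2 regions contributes 2 records. The sketch below illustrates this counting rule; the case list is invented for illustration and is not the review's data.

```python
from collections import Counter

# Hypothetical case records (NOT the review's data): each case lists every
# body region its lesions involved.
cases = [
    ["thorax"],
    ["thorax", "arm/axilla"],
    ["thorax"],
    ["eyelid"],
]

# Each region is counted separately, so a multi-region case contributes one
# record per region it involves.
region_counts = Counter(region for regions in cases for region in regions)
total_records = sum(region_counts.values())

for region, count in region_counts.most_common():
    print(f"{region}: {count}/{total_records} = {count / total_records:.0%}")
```

Here 4 hypothetical patients yield 5 location records, so each percentage uses 5 as its denominator, not 4.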

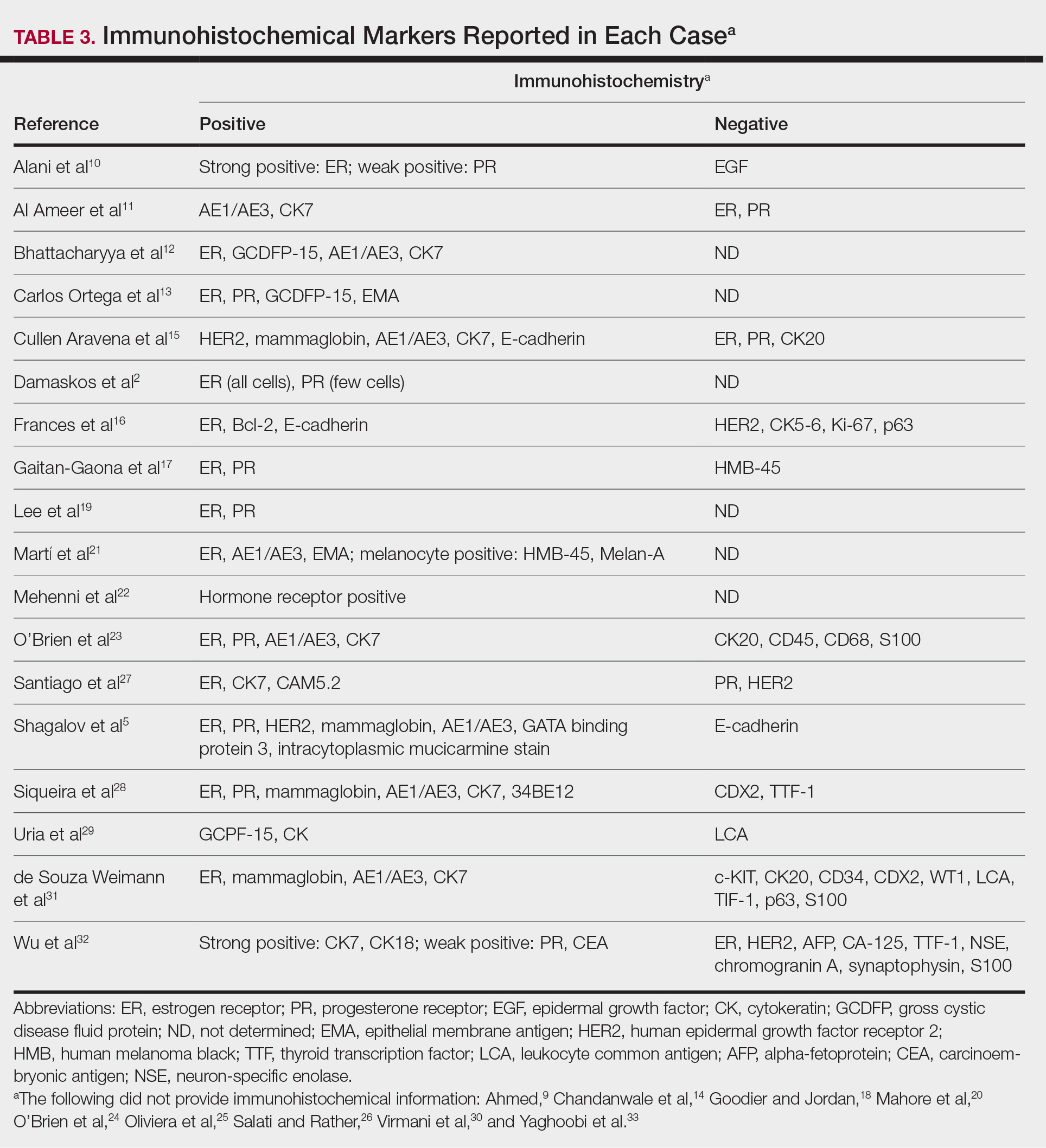

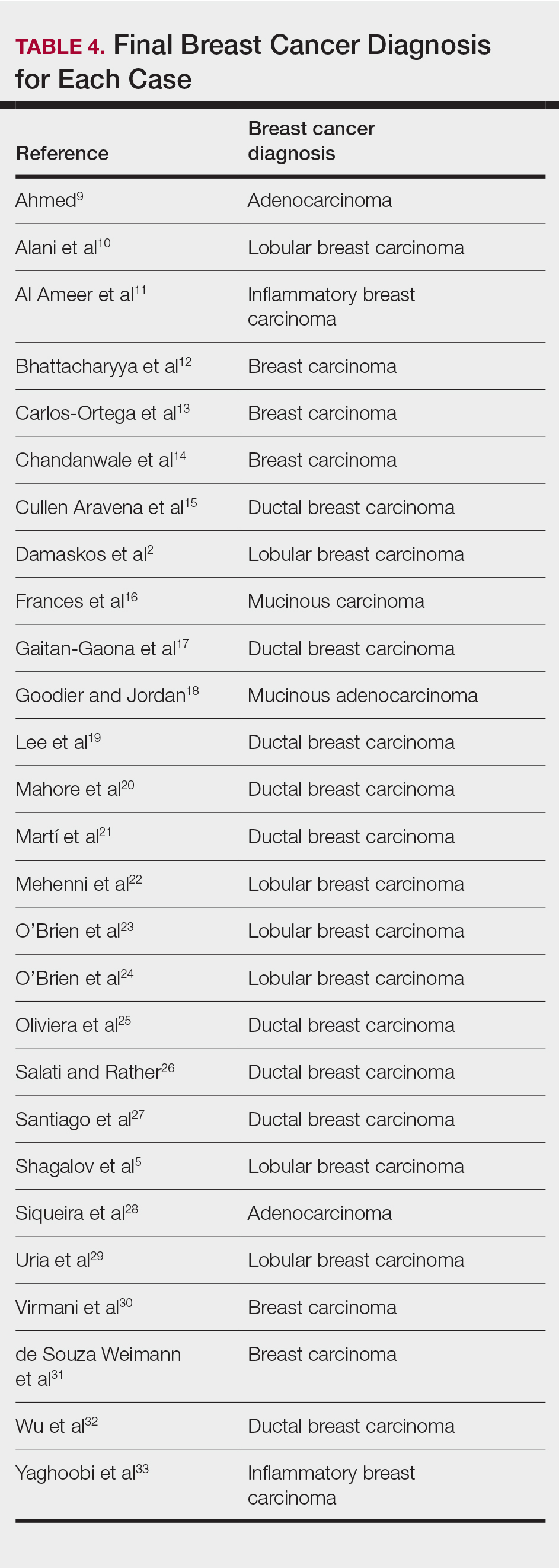

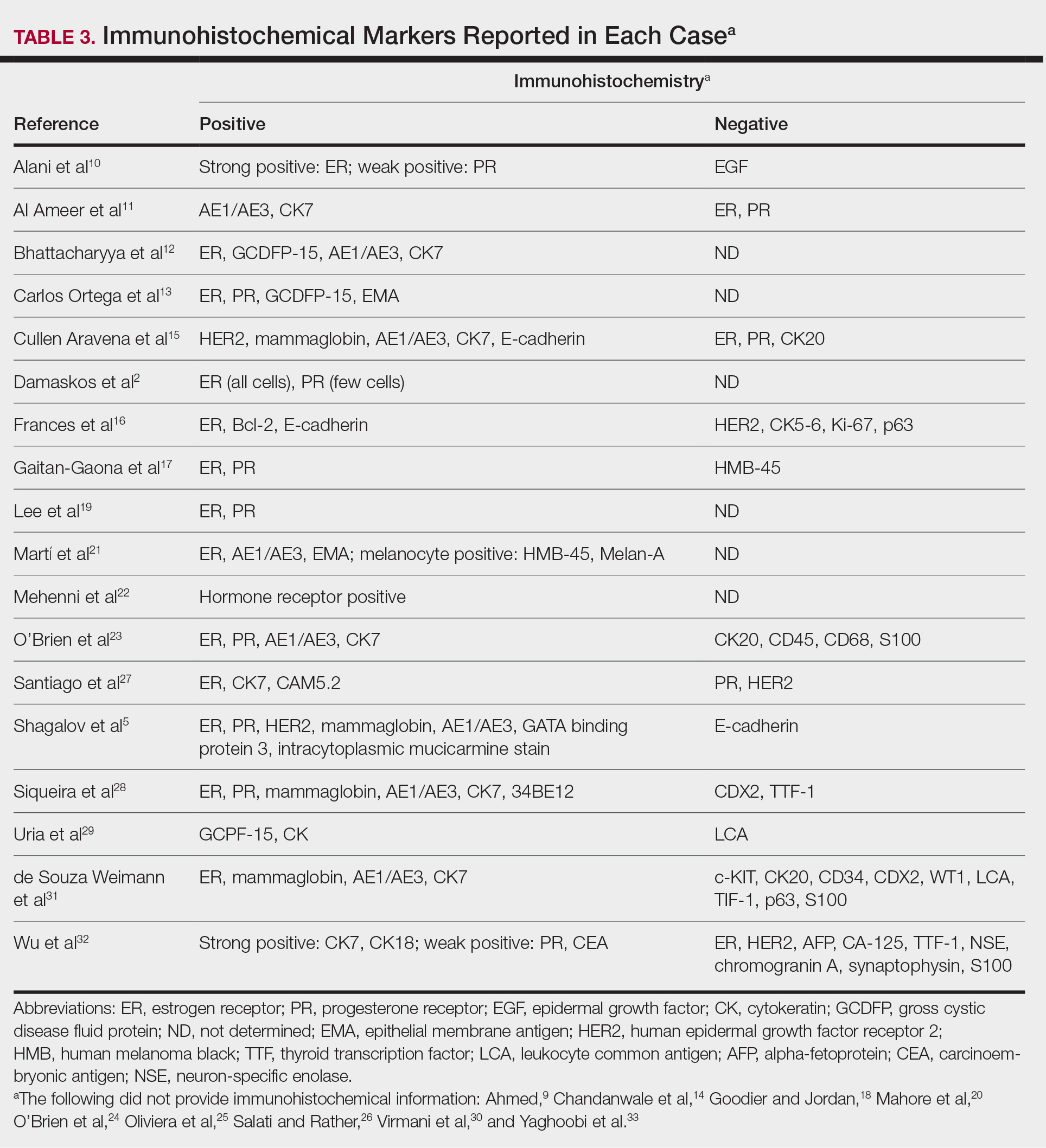

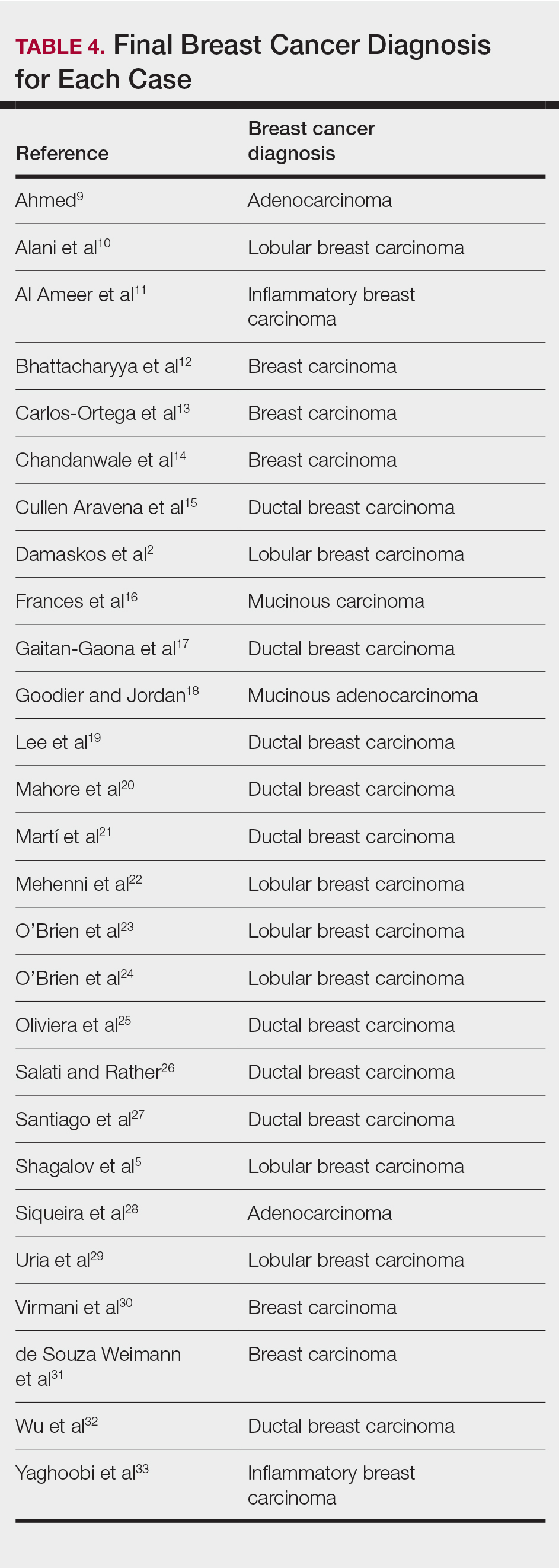

Diagnostic Data

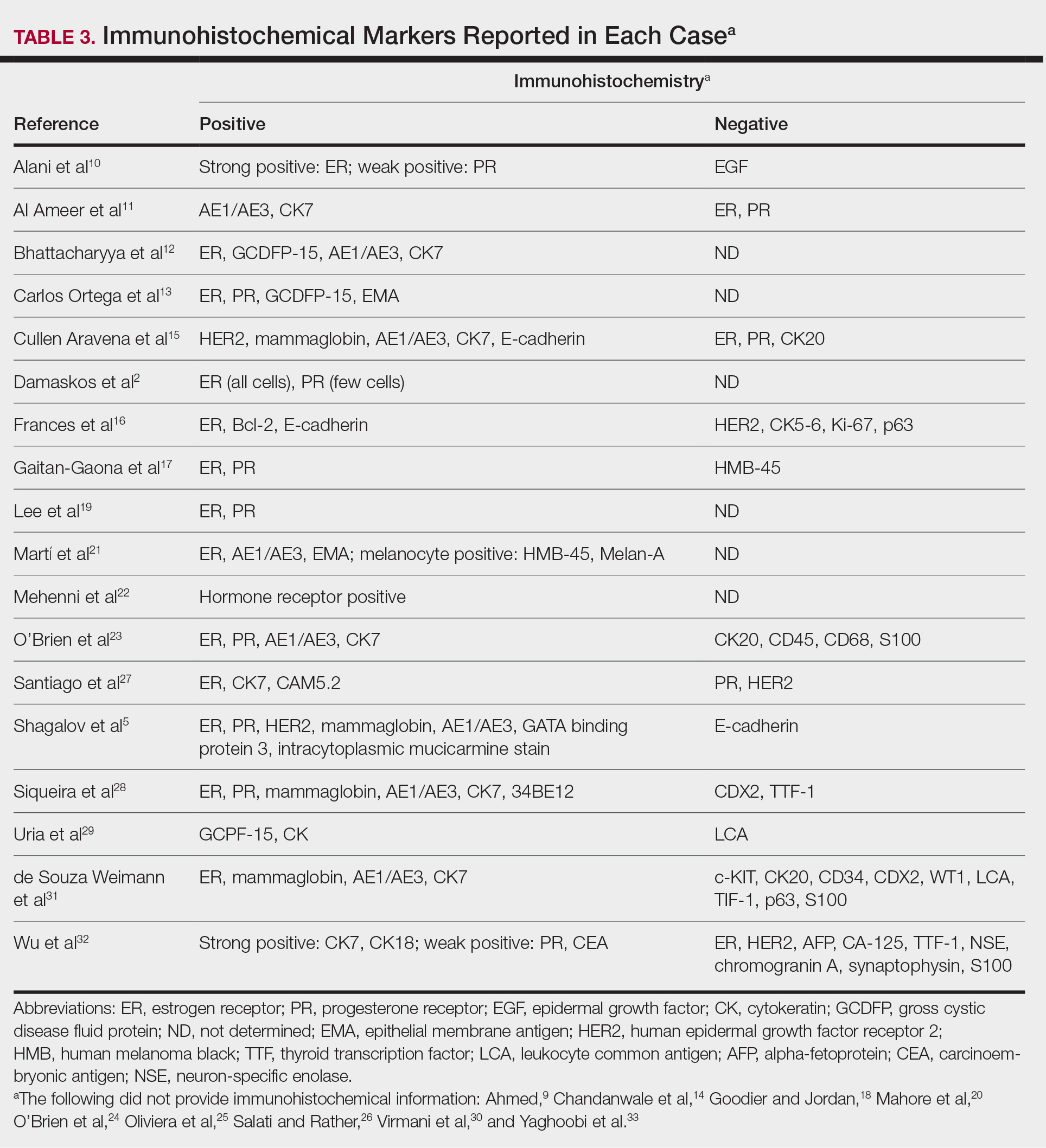

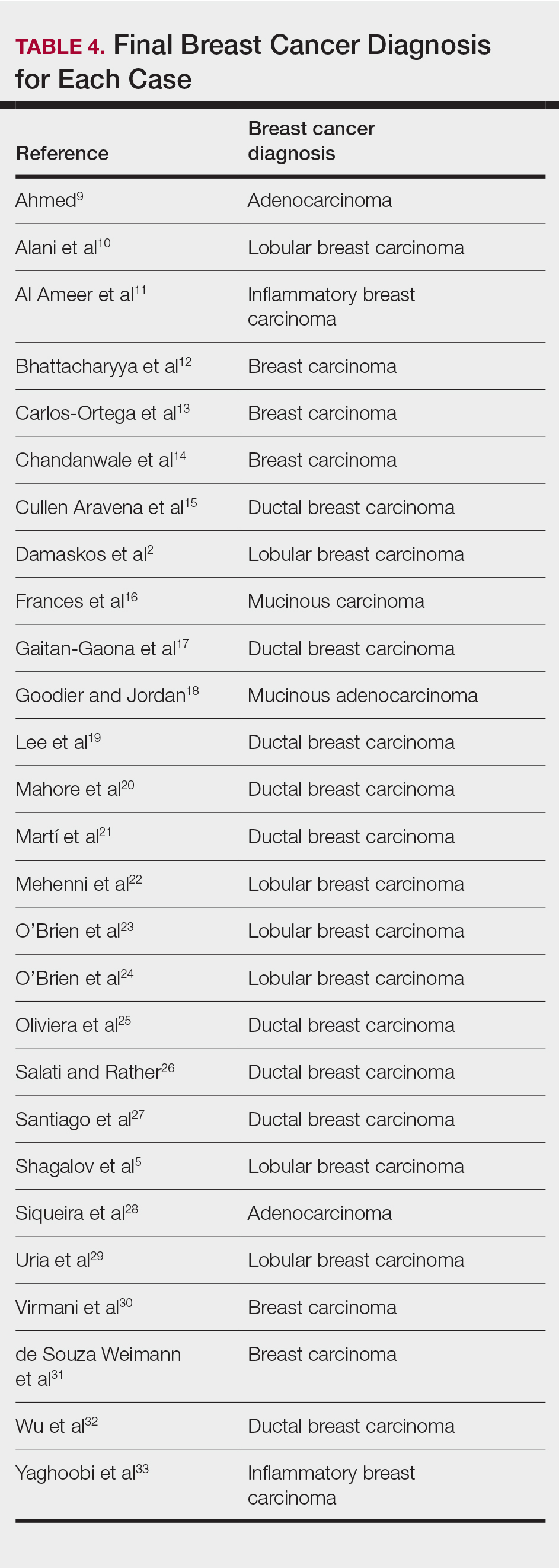

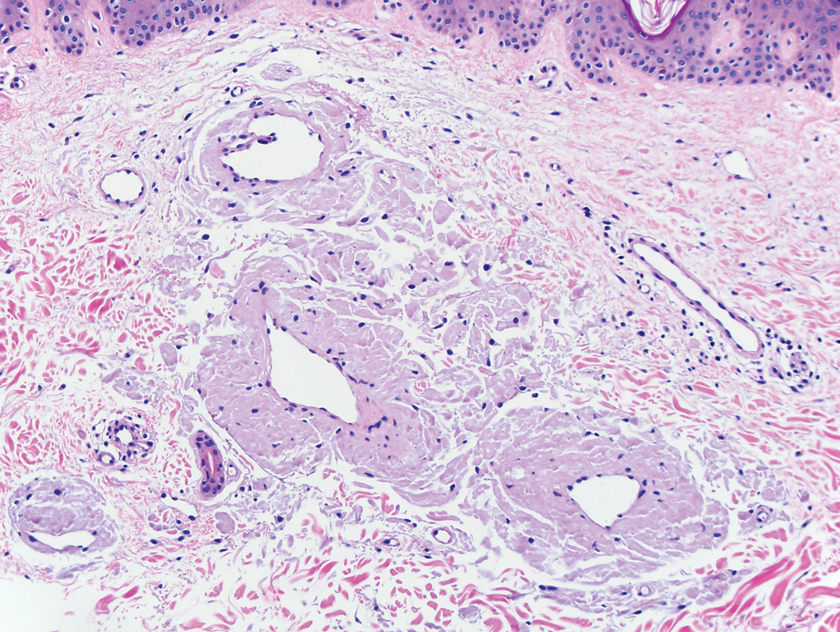

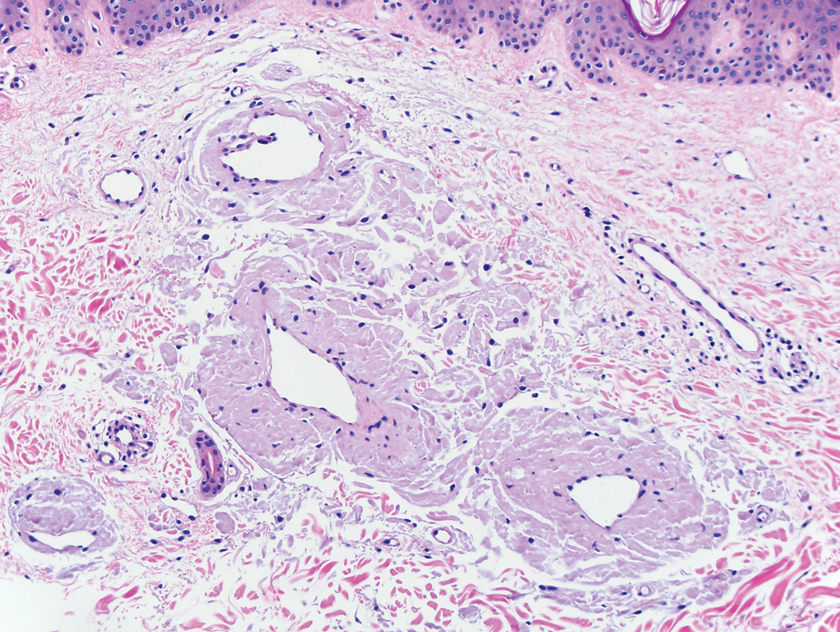

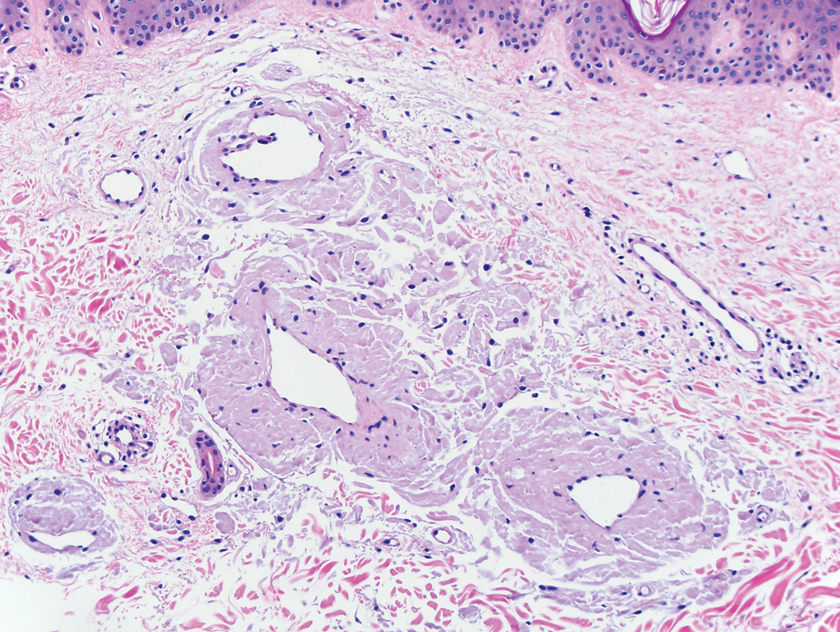

Eighteen cases reported positive immunohistochemistry on cutaneous biopsy (Table 3), a technique valued for its high specificity in determining the origin of cutaneous metastases, whereas 8 case reports performed only hematoxylin and eosin staining. One case reported neither hematoxylin and eosin nor immunohistochemical staining. Table 4 lists the final breast cancer diagnosis for each case.
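The three staining categories above appear to account for all 27 included case reports. A short check follows; it assumes each case report falls into exactly one category.

```python
# Staining methods reported across the case reports (counts from the text).
staining = {
    "immunohistochemistry (Table 3)": 18,
    "hematoxylin and eosin only": 8,
    "no staining reported": 1,
}
case_reports = 27  # individual case reports included (Table 1)

# If each case report falls into exactly one category, the counts sum to 27.
assert sum(staining.values()) == case_reports

for method, count in staining.items():
    print(f"{method}: {count}/{case_reports} = {count / case_reports:.0%}")
```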

In keeping with the standard of care, patients were evaluated with mammography or ultrasonography combined with fine-needle aspiration of a suspected primary tumor to establish a definitive diagnosis of breast cancer. However, 4 cases reported negative mammography and ultrasonography,13,22,28,31 and in 3 of these cases no primary tumor was ever found.13,22,31

Comment

Our systematic review demonstrated that cutaneous lesions may be the first clinical manifestation of an undetected primary malignancy.40 These lesions often occur on the chest but may involve the face, abdomen, or extremities. Although asymptomatic erythematous nodules and plaques are the most common clinical presentations, lesions may be tender or pruritic or may even resemble benign skin conditions, including dermatitis, cellulitis, urticaria, and papulovesicular eruptions, which can cause them to go unrecognized.

Nevertheless, cutaneous metastasis of a visceral malignancy is generally observed late in the disease course, often after the primary malignancy has been diagnosed.14 Breast cancer is the internal malignancy that most commonly spreads to the skin, with the largest case series reporting cutaneous metastases in 23.9% of women with metastatic breast carcinoma.6 Because of its proximity to the primary tumor, the chest wall is the most common location for cutaneous lesions of metastatic breast cancer.

Malignant cells from a primary breast tumor may spread to the skin via lymphatic, hematogenous, or contiguous tissue dissemination, or iatrogenically through direct implantation during surgical procedures.3 The mechanism of spread may also influence the clinical appearance of the resulting lesions. The localized lymphedema with a peau d’orange appearance of inflammatory metastatic breast carcinoma and the erythematous plaques of carcinoma erysipeloides are caused by embolized tumor cells obstructing dermal lymphatic vessels.3,11 In contrast, the indurated erythematous plaques of carcinoma en cuirasse are caused by diffuse cutaneous and subcutaneous infiltration of tumor cells, which also may be associated with a marked reduction in breast volume.3

A primary breast cancer is classically diagnosed with a combination of clinical breast examination, radiologic imaging (ultrasonography, mammography, breast magnetic resonance imaging, or computed tomography), and fine-needle aspiration or lesional biopsy with histopathology.9 Because the primary tumor may not be identified in 20% of metastatic breast cancers, a negative breast examination and negative imaging do not rule out breast cancer, especially if cutaneous biopsy reveals a malignancy consistent with a breast primary.43 Histopathology and immunohistochemistry can confirm the presence of metastatic cutaneous lesions and help characterize the type of breast cancer involved, with adenocarcinomas most commonly implicated.28 Although both ductal and lobular adenocarcinomas stain positive for cytokeratin 7, estrogen receptor, progesterone receptor, gross cystic disease fluid protein 15, carcinoembryonic antigen, and mammaglobin, only ductal adenocarcinoma is positive for E-cadherin.3 Inflammatory carcinoma stains positive for CD31 and podoplanin, telangiectatic carcinoma stains positive for CD31, and mammary Paget disease stains positive for cytokeratin 7 and mucin 1, cell surface associated (MUC1).3 In addition to cutaneous biopsy, fine-needle aspiration cytology can provide a simple and rapid method of diagnosis with high sensitivity and specificity.14
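The marker profiles summarized above can be expressed as a lookup table, which makes it easier to see why a partial panel may leave more than one pattern compatible. This is an illustrative sketch only, not a diagnostic algorithm: the profiles contain only the markers named in this paragraph, MUC1 denotes mucin 1, cell surface associated, and the matching rule (at least one shared marker and no contradicted marker) is an assumption made for illustration.

```python
# Marker profiles as summarized in the text (True = positive, False = negative).
# Illustrative only; not a diagnostic algorithm.
SHARED_ADENOCARCINOMA = {
    "CK7": True, "ER": True, "PR": True,
    "GCDFP-15": True, "CEA": True, "mammaglobin": True,
}

PROFILES = {
    "ductal adenocarcinoma": {**SHARED_ADENOCARCINOMA, "E-cadherin": True},
    "lobular adenocarcinoma": {**SHARED_ADENOCARCINOMA, "E-cadherin": False},
    "inflammatory carcinoma": {"CD31": True, "podoplanin": True},
    "telangiectatic carcinoma": {"CD31": True},
    "mammary Paget disease": {"CK7": True, "MUC1": True},
}


def compatible_profiles(observed: dict[str, bool]) -> list[str]:
    """Return profiles that share at least one tested marker with `observed`
    and contradict none of the shared markers."""
    matches = []
    for name, expected in PROFILES.items():
        shared = set(expected) & set(observed)
        if shared and all(expected[m] == observed[m] for m in shared):
            matches.append(name)
    return matches


# Example: a CK7-positive, ER-positive, E-cadherin-negative stain pattern.
print(compatible_profiles({"CK7": True, "ER": True, "E-cadherin": False}))
```

With this example pattern, the function returns both the lobular adenocarcinoma and mammary Paget disease profiles, because Paget disease is described here only by cytokeratin 7 and MUC1. The sketch therefore shows why markers alone do not settle the subtype; the histopathologic and clinical context would be needed to separate such overlaps.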

Conclusion

Although cutaneous metastasis as the presenting sign of a breast malignancy is rare, clinicians should maintain a high index of suspicion when encountering rapid-onset, unexplained nodules or plaques in female patients, particularly on the chest.

- Siegel R, Miller K, Jemal A. Cancer statistics, 2020 [published online January 8, 2020]. CA Cancer J Clin. 2020;70:7-30.

- Damaskos C, Dimitroulis D, Pergialiotis V, et al. An unexpected metastasis of breast cancer mimicking wheal rush. G Chir. 2016;37:136-138.

- Alcaraz I, Cerroni L, Rütten A, et al. Cutaneous metastases from internal malignancies: a clinicopathologic and immunohistochemical review. Am J Dermatopathol. 2012;34:347-393.

- Wong CYB, Helm MA, Kalb RE, et al. The presentation, pathology, and current management strategies of cutaneous metastasis. N Am J Med Sci. 2013;5:499-504.

- Shagalov D, Xu M, Liebman T, et al. Unilateral indurated plaque in the axilla: a case of metastatic breast carcinoma. Dermatol Online J. 2016;22:13030/qt8vw382nx.

- Lookingbill DP, Spangler N, Helm KF. Cutaneous metastases in patients with metastatic carcinoma: a retrospective study of 4020 patients. J Am Acad Dermatol. 1993;29:228-236.

- Liberati A, Altman DG, Tetzlaff J, et al. The PRISMA statement for reporting systematic reviews and meta-analyses of studies that evaluate health care interventions: explanation and elaboration. J Clin Epidemiol. 2009;62:e1-e34.

- Bansal R, Patel T, Sarin J, et al. Cutaneous and subcutaneous metastases from internal malignancies: an analysis of cases diagnosed by fine needle aspiration. Diagn Cytopathol. 2011;39:882-887.

- Ahmed M. Cutaneous metastases from breast carcinoma. BMJ Case Rep. 2011;2011:bcr0620114398.

- Alani A, Roberts G, Kerr O. Carcinoma en cuirasse. BMJ Case Rep. 2017;2017:bcr2017222121.

- Al Ameer A, Imran M, Kaliyadan F, et al. Carcinoma erysipeloides as a presenting feature of breast carcinoma: a case report and brief review of literature. Indian Dermatol Online J. 2015;6:396-398.

- Bhattacharyya A, Gangopadhyay M, Ghosh K, et al. Wolf in sheep’s clothing: a case of carcinoma erysipeloides. Oxf Med Case Rep. 2016;2016:97-100.

- Carlos Ortega B, Alfaro Mejia A, Gómez-Campos G, et al. Metástasis de carcinoma de mama que simula prototecosis. Dermatol Rev Mex. 2012;56:55-61.

- Chandanwale SS, Gore CR, Buch AC, et al. Zosteriform cutaneous metastasis: a primary manifestation of carcinoma breast, rare case report. Indian J Pathol Microbiol. 2011;54:863-864.

- Cullen Aravena R, Cullen Aravena D, Velasco MJ, et al. Carcinoma hemorrhagiectoides: case report of an uncommon presentation of cutaneous metastatic breast carcinoma. Dermatol Online J. 2017;23:13030/qt3hm3z850.

- Frances L, Cuesta L, Leiva-Salinas M, et al. Secondary mucinous carcinoma of the skin. Dermatol Online J. 2014;20:22361.

- Gaitan-Gaona F, Said MC, Valdes-Rodriguez R. Cutaneous metastatic pigmented breast carcinoma. Dermatol Online J. 2016;22:13030/qt0sv018ck.

- Goodier MA, Jordan JR. Metastatic breast cancer to the lower eyelid. Laryngoscope. 2010;120(suppl 4):S129.

- Lee H-J, Kim J-M, Kim G-W, et al. A unique cutaneous presentation of breast cancer: a red apple stuck in the breast. Ann Dermatol. 2016;28:499-501.

- Mahore SD, Bothale KA, Patrikar AD, et al. Carcinoma en cuirasse: a rare presentation of breast cancer. Indian J Pathol Microbiol. 2010;53:351-358.

- Martí N, Molina I, Monteagudo C, et al. Cutaneous metastasis of breast carcinoma mimicking malignant melanoma in scalp. Dermatol Online J. 2008;14:12.

- Mehenni NN, Gamaz-Bensaou M, Bouzid K. Metastatic breast carcinoma to the gallbladder and the lower eyelid with no malignant lesion in the breast: an unusual case report with a short review of the literature [abstract]. Ann Oncol. 2013;24(suppl 3):iii49.

- O’Brien OA, AboGhaly E, Heffron C. An unusual presentation of a common malignancy [abstract]. J Pathol. 2013;231:S33.

- O’Brien R, Porto DA, Friedman BJ, et al. Elderly female with swelling of the right breast. Ann Emerg Med. 2016;67:e25-e26.

- Oliveira GM de, Zachetti DBC, Barros HR, et al. Breast carcinoma en Cuirasse—case report. An Bras Dermatol. 2013;88:608-610.

- Salati SA, Rather AA. Carcinoma en cuirasse. J Pak Assoc Derma. 2013;23:452-454.

- Santiago F, Saleiro S, Brites MM, et al. A remarkable case of cutaneous metastatic breast carcinoma. Dermatol Online J. 2009;15:10.

- Siqueira VR, Frota AS, Maia IL, et al. Cutaneous involvement as the initial presentation of metastatic breast adenocarcinoma - case report. An Bras Dermatol. 2014;89:960-963.

- Uria M, Chirino C, Rivas D. Inusual clinical presentation of cutaneous metastasis from breast carcinoma. A case report. Rev Argent Dermatol. 2009;90:230-236.

- Virmani NC, Sharma YK, Panicker NK, et al. Zosteriform skin metastases: clue to an undiagnosed breast cancer. Indian J Dermatol. 2011;56:726-727.

- de Souza Weimann ET, Botero EB, Mendes C, et al. Cutaneous metastasis as the first manifestation of occult malignant breast neoplasia. An Bras Dermatol. 2016;91(5 suppl 1):105-107.

- Wu CY, Gao HW, Huang WH, et al. Infection-like acral cutaneous metastasis as the presenting sign of an occult breast cancer. Clin Exp Dermatol. 2009;34:e409-e410.

- Yaghoobi R, Talaizade A, Lal K, et al. Inflammatory breast carcinoma presenting with two different patterns of cutaneous metastases: carcinoma telangiectaticum and carcinoma erysipeloides. J Clin Aesthet Dermatol. 2015;8:47-51.

- Atis G, Demirci GT, Atunay IK, et al. The clinical characteristics and the frequency of metastatic cutaneous tumors among primary skin tumors. Turkderm. 2013;47:166-169.

- Benmously R, Souissi A, Badri T, et al. Cutaneous metastases from internal cancers. Acta Dermatovenerol Alp Pannonica Adriat. 2008;17:167-170.

- Chopra R, Chhabra S, Samra SG, et al. Cutaneous metastases of internal malignancies: a clinicopathologic study. Indian J Dermatol Venereol Leprol. 2010;76:125-131.

- El Khoury J, Khalifeh I, Kibbi AG, et al. Cutaneous metastasis: clinicopathological study of 72 patients from a tertiary care center in Lebanon. Int J Dermatol. 2014;53:147-158.

- Fernandez-Flores A. Cutaneous metastases: a study of 78 biopsies from 69 patients. Am J Dermatopathol. 2010;32:222-239.

- Gómez Sánchez ME, Martinez Martinez ML, Martín De Hijas MC, et al. Metástasis cutáneas de tumores sólidos. Estudio descriptivo retrospectivo. Piel. 2014;29:207-212.

- Handa U, Kundu R, Dimri K. Cutaneous metastasis: a study of 138 cases diagnosed by fine-needle aspiration cytology. Acta Cytol. 2017;61:47-54.

- Itin P, Tomaschett S. Cutaneous metastases from malignancies which do not originate from the skin. An epidemiological study. Article in German. Internist (Berl). 2009;50:179-186.

- Siu AL, U.S. Preventive Services Task Force. Screening for breast cancer: U.S. Preventive Services Task Force recommendation statement. Ann Intern Med. 2016;164:279-296.

- Torres HA, Bodey GP, Tarrand JJ, et al. Protothecosis in patients with cancer: case series and literature review. Clin Microbiol Infect. 2003;9:786-792.

Breast cancer is the second most common malignancy in women (after primary skin cancer) and is the second leading cause of cancer-related death in this population. In 2020, the American Cancer Society reported an estimated 276,480 new breast cancer diagnoses and 42,170 breast cancer–related deaths.1 Despite the fact that routine screening with mammography and sonography is standard, the incidence of advanced breast cancer at the time of diagnosis has remained stable over time, suggesting that life-threatening breast cancers are not being caught at an earlier stage. The number of breast cancers with distant metastases at the time of diagnosis also has not decreased.2 Therefore, although screening tests are valuable, they are imperfect and not without limitations.

Cutaneous metastasis is defined as the spread of malignant cells from an internal neoplasm to the skin, which can occur either by contiguous invasion or by distant metastasis through hematogenous or lymphatic routes.3 The diagnosis of cutaneous metastasis requires a high index of suspicion on the part of the clinician.4 Of the various internal malignancies in women, breast cancer most frequently results in metastasis to the skin,5 with up to 24% of patients with metastatic breast cancer developing cutaneous lesions.6

In recent years, there have been multiple reports of skin lesions prompting the diagnosis of a previously unknown breast cancer. In a study by Lookingbill et al,6 6.3% of patients with breast cancer presented with cutaneous involvement at the time of diagnosis, with 3.5% having skin symptoms as the presenting sign. Although there have been studies analyzing cutaneous metastasis from various internal malignancies, none thus far have focused on cutaneous metastasis as a presenting sign of breast cancer. This systematic review aimed to highlight the diverse clinical presentations of cutaneous metastatic breast cancer and their clinical implications.

Methods

Study Selection

This study utilized the PRISMA guidelines for systematic reviews.7 A review of the literature was conducted using the following databases: MEDLINE/PubMed, EMBASE, Cochrane library, CINAHL, and EBSCO.

Search Strategy and Analysis

We completed our search of each of the databases on December 16, 2017, using the phrases cutaneous metastasis and breast cancer to find relevant case reports and retrospective studies. Three authors (C.J., S.R., and M.A.) manually reviewed the resulting abstracts. If an abstract did not include enough information to determine inclusion, the full-text version was reviewed by 2 of the authors (C.J. and S.R.). Two of the authors (C.J. and M.A.) also assessed each source for relevancy and included the articles deemed eligible (Figure 1).

Inclusion criteria were the following: case reports and retrospective studies published in the prior 10 years (January 1, 2007, to December 16, 2017) with human female patients who developed metastatic cutaneous lesions due to a previously unknown primary breast malignancy. Studies published in other languages were included; these articles were translated into English using a human translator or computer translation program (Google Translate). Exclusion criteria were the following: male patients, patients with a known diagnosis of primary breast malignancy prior to the appearance of a metastatic cutaneous lesion, articles focusing on the treatment of breast cancer, and articles without enough details to draw meaningful conclusions.

For a retrospective review to be included, it must have specified the number of breast cancer cases and the number of cutaneous metastases presenting initially or simultaneously to the breast cancer diagnosis. Bansal et al8 defined a simultaneous diagnosis as a skin lesion presenting with other concerns associated with the primary malignancy.

Results

The initial search of MEDLINE/PubMed, EMBASE, Cochrane library, CINAHL, and EBSCO yielded a total of 722 articles. Seven other articles found separately while undergoing our initial research were added to this total. Abstracts were manually screened, with 657 articles discarded after failing to meet the predetermined inclusion criteria. After removal of 25 duplicate articles, the full text of the remaining 47 articles were reviewed, leading to the elimination of an additional 11 articles that did not meet the necessary criteria. This resulted in 36 articles (Figure 1), including 27 individual case reports (Table 1) and 9 retrospective reviews (Table 2). Approximately 13.7% of patients in the 9 retrospective reviews presented with a skin lesion before or simultaneous to the diagnosis of breast cancer (Figure 2).

Forty-one percent (17/41) of the patients with cutaneous metastasis as a presenting feature of their breast cancer fell outside the age range for breast cancer screening recommended by the US Preventive Services Task Force,42 with 24% of the patients younger than 50 years and 17% older than 74 years (Figure 3).

Lesion Characteristics

The most common cutaneous lesions were erythematous nodules and plaques, with a few reports of black17,21 or flesh-colored5,20,31 lesions, as well as ulceration.8,17,32 The most common location for skin lesions was on the thorax (chest or breast), accounting for 57% of the cutaneous metastases, with the arms and axillae being second most commonly involved (15%)(Figure 4). Some cases presented with skin lesions extending to multiple regions. In these cases, each location of the lesion was recorded separately when analyzing the data. An additional 5 cases, shown as “Other” in Figure 4, included the eyelids, occiput, and finger. Eight case reports described symptoms associated with the cutaneous lesions, with painful or tender lesions reported in 7 cases5,9,14,17,20,30,32 and pruritus in 2 cases.12,20 Moreover, 6 case reports presented patients denying any systemic or associated symptoms with their skin lesions.2,5,9,16,17,28 Multiple cases were initially treated as other conditions due to misdiagnosis, including herpes zoster14,30 and dermatitis.11,12

Diagnostic Data

Eighteen cases reported positive immunohistochemistry from cutaneous biopsy (Table 3), given its high specificity in determining the origin of cutaneous metastases, while 8 case reports only performed hematoxylin and eosin staining. One case did not report hematoxylin and eosin or immunohistochemical staining. Table 4 lists the final breast cancer diagnosis for each case.

As per the standard of care, patients were evaluated with mammography or ultrasonography, combined with fine-needle aspiration of a suspected primary tumor, to give a definitive diagnosis of breast cancer. However, 4 cases reported negative mammography and ultrasonography.13,22,28,31 In 3 of these cases, no primary tumor was ever found.13,22,31

Comment

Our systematic review demonstrated that cutaneous lesions may be the first clinical manifestation of an undetected primary malignancy.40 These lesions often occur on the chest but may involve the face, abdomen, or extremities. Although asymptomatic erythematous nodules and plaques are the most common clinical presentations, lesions may be tender or pruritic or may even resemble benign skin conditions, including dermatitis, cellulitis, urticaria, and papulovesicular eruptions, causing them to go unrecognized.

Nevertheless, cutaneous metastasis of a visceral malignancy generally is observed late in the disease course, often following the diagnosis of a primary malignancy.14 Breast cancer is the most common internal malignancy to feature cutaneous spread, with the largest case series revealing a 23.9% rate of cutaneous metastases in females with breast carcinoma.6 Because of its proximity, the chest wall is the most common location for cutaneous lesions of metastatic breast cancer.

Malignant cells from a primary breast tumor may spread to the skin via lymphatic, hematogenous, or contiguous tissue dissemination, as well as iatrogenically through direct implantation during surgical procedures.3 The mechanism of neoplasm spread may likewise influence the clinical appearance of the resulting lesions. The localized lymphedema with a peau d’orange appearance of inflammatory metastatic breast carcinoma or the erythematous plaques of carcinoma erysipeloides are caused by embolized tumor cells obstructing dermal lymphatic vessels.3,11 On the other hand, the indurated erythematous plaques of carcinoma en cuirasse are caused by diffuse cutaneous and subcutaneous infiltration of tumor cells that also may be associated with marked reduction in breast volume.3

A primary breast cancer is classically diagnosed with a combination of clinical breast examination, radiologic imaging (ultrasound, mammogram, breast magnetic resonance imaging, or computed tomography), and fine-needle aspiration or lesional biopsy with histopathology.9 Given that in 20% of metastasized breast cancers the primary tumor may not be identified, a negative breast examination and imaging do not rule out breast cancer, especially if cutaneous biopsy reveals a primary malignancy.43 Histopathology and immunohistochemistry can thereby confirm the presence of metastatic cutaneous lesions and help characterize the breast cancer type involved, with adenocarcinomas being most commonly implicated.28 Although both ductal and lobular adenocarcinomas stain positive for cytokeratin 7, estrogen receptor, progesterone receptor, gross cystic disease fluid protein 15, carcinoembryonic antigen, and mammaglobin, only the former shows positivity for e-cadherin markers.3 Conversely, inflammatory carcinoma stains positive for CD31 and podoplanin, telangiectatic carcinoma stains positive for CD31, and mammary Paget disease stains positive for cytokeratin 7 and mucin 1, cell surface associated.3 Apart from cutaneous biopsy, fine-needle aspiration cytology can likewise provide a simple and rapid method of diagnosis with high sensitivity and specificity.14

Conclusion

Although cutaneous metastasis as the presenting sign of a breast malignancy is rare, a high index of suspicion should be exercised when encountering rapid-onset, out-of-place nodules or plaques in female patients, particularly nodules or plaques presenting on the chest.

Breast cancer is the second most common malignancy in women (after primary skin cancer) and is the second leading cause of cancer-related death in this population. In 2020, the American Cancer Society reported an estimated 276,480 new breast cancer diagnoses and 42,170 breast cancer–related deaths.1 Despite the fact that routine screening with mammography and sonography is standard, the incidence of advanced breast cancer at the time of diagnosis has remained stable over time, suggesting that life-threatening breast cancers are not being caught at an earlier stage. The number of breast cancers with distant metastases at the time of diagnosis also has not decreased.2 Therefore, although screening tests are valuable, they are imperfect and not without limitations.

Cutaneous metastasis is defined as the spread of malignant cells from an internal neoplasm to the skin, which can occur either by contiguous invasion or by distant metastasis through hematogenous or lymphatic routes.3 The diagnosis of cutaneous metastasis requires a high index of suspicion on the part of the clinician.4 Of the various internal malignancies in women, breast cancer most frequently results in metastasis to the skin,5 with up to 24% of patients with metastatic breast cancer developing cutaneous lesions.6

In recent years, there have been multiple reports of skin lesions prompting the diagnosis of a previously unknown breast cancer. In a study by Lookingbill et al,6 6.3% of patients with breast cancer presented with cutaneous involvement at the time of diagnosis, with 3.5% having skin symptoms as the presenting sign. Although there have been studies analyzing cutaneous metastasis from various internal malignancies, none thus far have focused on cutaneous metastasis as a presenting sign of breast cancer. This systematic review aimed to highlight the diverse clinical presentations of cutaneous metastatic breast cancer and their clinical implications.

Methods

Study Selection

This study utilized the PRISMA guidelines for systematic reviews.7 A review of the literature was conducted using the following databases: MEDLINE/PubMed, EMBASE, Cochrane library, CINAHL, and EBSCO.

Search Strategy and Analysis

We completed our search of each of the databases on December 16, 2017, using the phrases cutaneous metastasis and breast cancer to find relevant case reports and retrospective studies. Three authors (C.J., S.R., and M.A.) manually reviewed the resulting abstracts. If an abstract did not include enough information to determine inclusion, the full-text version was reviewed by 2 of the authors (C.J. and S.R.). Two of the authors (C.J. and M.A.) also assessed each source for relevancy and included the articles deemed eligible (Figure 1).

Inclusion criteria were the following: case reports and retrospective studies published in the prior 10 years (January 1, 2007, to December 16, 2017) with human female patients who developed metastatic cutaneous lesions due to a previously unknown primary breast malignancy. Studies published in other languages were included; these articles were translated into English using a human translator or computer translation program (Google Translate). Exclusion criteria were the following: male patients, patients with a known diagnosis of primary breast malignancy prior to the appearance of a metastatic cutaneous lesion, articles focusing on the treatment of breast cancer, and articles without enough details to draw meaningful conclusions.

For a retrospective review to be included, it must have specified the number of breast cancer cases and the number of cutaneous metastases presenting initially or simultaneously to the breast cancer diagnosis. Bansal et al8 defined a simultaneous diagnosis as a skin lesion presenting with other concerns associated with the primary malignancy.

Results

The initial search of MEDLINE/PubMed, EMBASE, Cochrane library, CINAHL, and EBSCO yielded a total of 722 articles. Seven other articles found separately while undergoing our initial research were added to this total. Abstracts were manually screened, with 657 articles discarded after failing to meet the predetermined inclusion criteria. After removal of 25 duplicate articles, the full text of the remaining 47 articles were reviewed, leading to the elimination of an additional 11 articles that did not meet the necessary criteria. This resulted in 36 articles (Figure 1), including 27 individual case reports (Table 1) and 9 retrospective reviews (Table 2). Approximately 13.7% of patients in the 9 retrospective reviews presented with a skin lesion before or simultaneous to the diagnosis of breast cancer (Figure 2).

Forty-one percent (17/41) of the patients with cutaneous metastasis as a presenting feature of their breast cancer fell outside the age range for breast cancer screening recommended by the US Preventive Services Task Force,42 with 24% of the patients younger than 50 years and 17% older than 74 years (Figure 3).

Lesion Characteristics

The most common cutaneous lesions were erythematous nodules and plaques, with a few reports of black17,21 or flesh-colored5,20,31 lesions, as well as ulceration.8,17,32 The most common location for skin lesions was on the thorax (chest or breast), accounting for 57% of the cutaneous metastases, with the arms and axillae being second most commonly involved (15%)(Figure 4). Some cases presented with skin lesions extending to multiple regions. In these cases, each location of the lesion was recorded separately when analyzing the data. An additional 5 cases, shown as “Other” in Figure 4, included the eyelids, occiput, and finger. Eight case reports described symptoms associated with the cutaneous lesions, with painful or tender lesions reported in 7 cases5,9,14,17,20,30,32 and pruritus in 2 cases.12,20 Moreover, 6 case reports presented patients denying any systemic or associated symptoms with their skin lesions.2,5,9,16,17,28 Multiple cases were initially treated as other conditions due to misdiagnosis, including herpes zoster14,30 and dermatitis.11,12

Diagnostic Data

Eighteen cases reported positive immunohistochemistry from cutaneous biopsy (Table 3), given its high specificity in determining the origin of cutaneous metastases, while 8 case reports only performed hematoxylin and eosin staining. One case did not report hematoxylin and eosin or immunohistochemical staining. Table 4 lists the final breast cancer diagnosis for each case.

In accordance with the standard of care, patients were evaluated with mammography or ultrasonography combined with fine-needle aspiration of a suspected primary tumor to establish a definitive diagnosis of breast cancer. However, 4 cases reported negative mammography and ultrasonography.13,22,28,31 In 3 of these cases, no primary tumor was ever found.13,22,31

Comment

Our systematic review demonstrated that cutaneous lesions may be the first clinical manifestation of an undetected primary malignancy.40 These lesions often occur on the chest but may involve the face, abdomen, or extremities. Although asymptomatic erythematous nodules and plaques are the most common clinical presentations, lesions may be tender or pruritic or may even resemble benign skin conditions, including dermatitis, cellulitis, urticaria, and papulovesicular eruptions, causing them to go unrecognized.

Nevertheless, cutaneous metastasis of a visceral malignancy is generally observed late in the disease course, often following the diagnosis of a primary malignancy.14 Breast cancer is the most common internal malignancy to feature cutaneous spread, with the largest case series revealing a 23.9% rate of cutaneous metastases in females with breast carcinoma.6 Because of its proximity to the primary tumor, the chest wall is the most common location for cutaneous lesions of metastatic breast cancer.

Malignant cells from a primary breast tumor may spread to the skin via lymphatic, hematogenous, or contiguous tissue dissemination, as well as iatrogenically through direct implantation during surgical procedures.3 The route of spread may also influence the clinical appearance of the resulting lesions. The localized lymphedema with a peau d’orange appearance of inflammatory metastatic breast carcinoma and the erythematous plaques of carcinoma erysipeloides are caused by embolized tumor cells obstructing dermal lymphatic vessels.3,11 In contrast, the indurated erythematous plaques of carcinoma en cuirasse are caused by diffuse cutaneous and subcutaneous infiltration of tumor cells, which may also be associated with a marked reduction in breast volume.3

A primary breast cancer is classically diagnosed with a combination of clinical breast examination, radiologic imaging (ultrasonography, mammography, breast magnetic resonance imaging, or computed tomography), and fine-needle aspiration or lesional biopsy with histopathology.9 Because the primary tumor may not be identified in 20% of metastatic breast cancers, a negative breast examination and negative imaging do not rule out breast cancer, especially if the cutaneous biopsy points to an underlying primary malignancy.43 Histopathology and immunohistochemistry can then confirm the presence of metastatic cutaneous lesions and help characterize the type of breast cancer involved, with adenocarcinomas being most commonly implicated.28 Although both ductal and lobular adenocarcinomas stain positive for cytokeratin 7, estrogen receptor, progesterone receptor, gross cystic disease fluid protein 15, carcinoembryonic antigen, and mammaglobin, only the former stains positive for E-cadherin.3 By contrast, inflammatory carcinoma stains positive for CD31 and podoplanin, telangiectatic carcinoma stains positive for CD31, and mammary Paget disease stains positive for cytokeratin 7 and mucin 1, cell surface associated.3 Apart from cutaneous biopsy, fine-needle aspiration cytology also offers a simple, rapid diagnostic method with high sensitivity and specificity.14
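The staining profiles in the preceding paragraph can be summarized as a small lookup table. The sketch below is illustrative only, not a diagnostic algorithm: the names `PROFILES` and `ductal_or_lobular` are hypothetical, "GCDFP-15" and "MUC1" abbreviate gross cystic disease fluid protein 15 and mucin 1, cell surface associated, and the only decision rule it encodes is the one stated in the text, that E-cadherin positivity separates ductal from lobular adenocarcinoma.

```python
from __future__ import annotations

# Markers the text lists as positive in both ductal and lobular adenocarcinoma.
SHARED_ADENOCARCINOMA = frozenset({
    "cytokeratin 7", "estrogen receptor", "progesterone receptor",
    "GCDFP-15", "carcinoembryonic antigen", "mammaglobin",
})

# Hypothetical summary table of the profiles described in the text.
PROFILES: dict[str, frozenset[str]] = {
    "ductal adenocarcinoma": SHARED_ADENOCARCINOMA | {"E-cadherin"},
    "lobular adenocarcinoma": SHARED_ADENOCARCINOMA,
    "inflammatory carcinoma": frozenset({"CD31", "podoplanin"}),
    "telangiectatic carcinoma": frozenset({"CD31"}),
    "mammary Paget disease": frozenset({"cytokeratin 7", "MUC1"}),
}


def ductal_or_lobular(positive: set[str]) -> str | None:
    """Separate ductal from lobular adenocarcinoma by E-cadherin, per the text.

    Returns None if the shared adenocarcinoma markers are not all positive.
    """
    if not PROFILES["lobular adenocarcinoma"] <= positive:
        return None  # shared panel incomplete; the rule does not apply
    if PROFILES["ductal adenocarcinoma"] <= positive:
        return "ductal adenocarcinoma"  # shared markers plus E-cadherin
    return "lobular adenocarcinoma"  # shared markers, E-cadherin negative


print(ductal_or_lobular(set(SHARED_ADENOCARCINOMA) | {"E-cadherin"}))  # ductal adenocarcinoma
print(ductal_or_lobular(set(SHARED_ADENOCARCINOMA)))                   # lobular adenocarcinoma
```

Representing each profile as a set keeps the comparison a simple subset test; in practice, immunohistochemical panels and their interpretation are broader than this summary.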

Conclusion

Although cutaneous metastasis as the presenting sign of a breast malignancy is rare, a high index of suspicion should be exercised when encountering rapid-onset, out-of-place nodules or plaques in female patients, particularly nodules or plaques presenting on the chest.

- Siegel R, Miller K, Jemal A. Cancer statistics, 2020 [published online January 8, 2020]. CA Cancer J Clin. 2020;70:7-30.

- Damaskos C, Dimitroulis D, Pergialiotis V, et al. An unexpected metastasis of breast cancer mimicking wheal rush. G Chir. 2016;37:136-138.

- Alcaraz I, Cerroni L, Rütten A, et al. Cutaneous metastases from internal malignancies: a clinicopathologic and immunohistochemical review. Am J Dermatopathol. 2012;34:347-393.

- Wong CYB, Helm MA, Kalb RE, et al. The presentation, pathology, and current management strategies of cutaneous metastasis. N Am J Med Sci. 2013;5:499-504.

- Shagalov D, Xu M, Liebman T, et al. Unilateral indurated plaque in the axilla: a case of metastatic breast carcinoma. Dermatol Online J. 2016;22:13030/qt8vw382nx.

- Lookingbill DP, Spangler N, Helm KF. Cutaneous metastases in patients with metastatic carcinoma: a retrospective study of 4020 patients. J Am Acad Dermatol. 1993;29:228-236.

- Liberati A, Altman DG, Tetzlaff J, et al. The PRISMA statement for reporting systematic reviews and meta-analyses of studies that evaluate health care interventions: explanation and elaboration. J Clin Epidemiol. 2009;62:e1-e34.

- Bansal R, Patel T, Sarin J, et al. Cutaneous and subcutaneous metastases from internal malignancies: an analysis of cases diagnosed by fine needle aspiration. Diagn Cytopathol. 2011;39:882-887.

- Ahmed M. Cutaneous metastases from breast carcinoma. BMJ Case Rep. 2011;2011:bcr0620114398.

- Alani A, Roberts G, Kerr O. Carcinoma en cuirasse. BMJ Case Rep. 2017;2017:bcr2017222121.

- Al Ameer A, Imran M, Kaliyadan F, et al. Carcinoma erysipeloides as a presenting feature of breast carcinoma: a case report and brief review of literature. Indian Dermatol Online J. 2015;6:396-398.

- Bhattacharyya A, Gangopadhyay M, Ghosh K, et al. Wolf in sheep’s clothing: a case of carcinoma erysipeloides. Oxf Med Case Rep. 2016;2016:97-100.

- Carlos Ortega B, Alfaro Mejia A, Gómez-Campos G, et al. Metástasis de carcinoma de mama que simula prototecosis [Breast carcinoma metastasis mimicking protothecosis]. Dermatol Rev Mex. 2012;56:55-61.

- Chandanwale SS, Gore CR, Buch AC, et al. Zosteriform cutaneous metastasis: a primary manifestation of carcinoma breast, rare case report. Indian J Pathol Microbiol. 2011;54:863-864.

- Cullen Aravena R, Cullen Aravena D, Velasco MJ, et al. Carcinoma hemorrhagiectoides: case report of an uncommon presentation of cutaneous metastatic breast carcinoma. Dermatol Online J. 2017;23:13030/qt3hm3z850.

- Frances L, Cuesta L, Leiva-Salinas M, et al. Secondary mucinous carcinoma of the skin. Dermatol Online J. 2014;20:22361.

- Gaitan-Gaona F, Said MC, Valdes-Rodriguez R. Cutaneous metastatic pigmented breast carcinoma. Dermatol Online J. 2016;22:13030/qt0sv018ck.

- Goodier MA, Jordan JR. Metastatic breast cancer to the lower eyelid. Laryngoscope. 2010;120(suppl 4):S129.

- Lee H-J, Kim J-M, Kim G-W, et al. A unique cutaneous presentation of breast cancer: a red apple stuck in the breast. Ann Dermatol. 2016;28:499-501.

- Mahore SD, Bothale KA, Patrikar AD, et al. Carcinoma en cuirasse: a rare presentation of breast cancer. Indian J Pathol Microbiol. 2010;53:351-358.

- Martí N, Molina I, Monteagudo C, et al. Cutaneous metastasis of breast carcinoma mimicking malignant melanoma in scalp. Dermatol Online J. 2008;14:12.

- Mehenni NN, Gamaz-Bensaou M, Bouzid K. Metastatic breast carcinoma to the gallbladder and the lower eyelid with no malignant lesion in the breast: an unusual case report with a short review of the literature [abstract]. Ann Oncol. 2013;24(suppl 3):iii49.

- O’Brien OA, AboGhaly E, Heffron C. An unusual presentation of a common malignancy [abstract]. J Pathol. 2013;231:S33.

- O’Brien R, Porto DA, Friedman BJ, et al. Elderly female with swelling of the right breast. Ann Emerg Med. 2016;67:e25-e26.

- Oliveira GM de, Zachetti DBC, Barros HR, et al. Breast carcinoma en Cuirasse—case report. An Bras Dermatol. 2013;88:608-610.

- Salati SA, Rather AA. Carcinoma en cuirasse. J Pak Assoc Derma. 2013;23:452-454.

- Santiago F, Saleiro S, Brites MM, et al. A remarkable case of cutaneous metastatic breast carcinoma. Dermatol Online J. 2009;15:10.

- Siqueira VR, Frota AS, Maia IL, et al. Cutaneous involvement as the initial presentation of metastatic breast adenocarcinoma - case report. An Bras Dermatol. 2014;89:960-963.

- Uria M, Chirino C, Rivas D. Inusual clinical presentation of cutaneous metastasis from breast carcinoma. A case report. Rev Argent Dermatol. 2009;90:230-236.

- Virmani NC, Sharma YK, Panicker NK, et al. Zosteriform skin metastases: clue to an undiagnosed breast cancer. Indian J Dermatol. 2011;56:726-727.

- de Souza Weimann ET, Botero EB, Mendes C, et al. Cutaneous metastasis as the first manifestation of occult malignant breast neoplasia. An Bras Dermatol. 2016;91(5 suppl 1):105-107.

- Wu CY, Gao HW, Huang WH, et al. Infection-like acral cutaneous metastasis as the presenting sign of an occult breast cancer. Clin Exp Dermatol. 2009;34:e409-e410.

- Yaghoobi R, Talaizade A, Lal K, et al. Inflammatory breast carcinoma presenting with two different patterns of cutaneous metastases: carcinoma telangiectaticum and carcinoma erysipeloides. J Clin Aesthet Dermatol. 2015;8:47-51.

- Atis G, Demirci GT, Atunay IK, et al. The clinical characteristics and the frequency of metastatic cutaneous tumors among primary skin tumors. Turkderm. 2013;47:166-169.

- Benmously R, Souissi A, Badri T, et al. Cutaneous metastases from internal cancers. Acta Dermatovenerol Alp Pannonica Adriat. 2008;17:167-170.

- Chopra R, Chhabra S, Samra SG, et al. Cutaneous metastases of internal malignancies: a clinicopathologic study. Indian J Dermatol Venereol Leprol. 2010;76:125-131.

- El Khoury J, Khalifeh I, Kibbi AG, et al. Cutaneous metastasis: clinicopathological study of 72 patients from a tertiary care center in Lebanon. Int J Dermatol. 2014;53:147-158.

- Fernandez-Flores A. Cutaneous metastases: a study of 78 biopsies from 69 patients. Am J Dermatopathol. 2010;32:222-239.

- Gómez Sánchez ME, Martinez Martinez ML, Martín De Hijas MC, et al. Metástasis cutáneas de tumores sólidos. Estudio descriptivo retrospectivo [Cutaneous metastases of solid tumors: a retrospective descriptive study]. Piel. 2014;29:207-212.

- Handa U, Kundu R, Dimri K. Cutaneous metastasis: a study of 138 cases diagnosed by fine-needle aspiration cytology. Acta Cytol. 2017;61:47-54.

- Itin P, Tomaschett S. Cutaneous metastases from malignancies which do not originate from the skin. An epidemiological study. Article in German. Internist (Berl). 2009;50:179-186.

- Siu AL, U.S. Preventive Services Task Force. Screening for breast cancer: U.S. Preventive Services Task Force recommendation statement. Ann Intern Med. 2016;164:279-296.

- Torres HA, Bodey GP, Tarrand JJ, et al. Protothecosis in patients with cancer: case series and literature review. Clin Microbiol Infect. 2003;9:786-792.
