
Body-size awareness linked with BMI decrease in obese children, teens

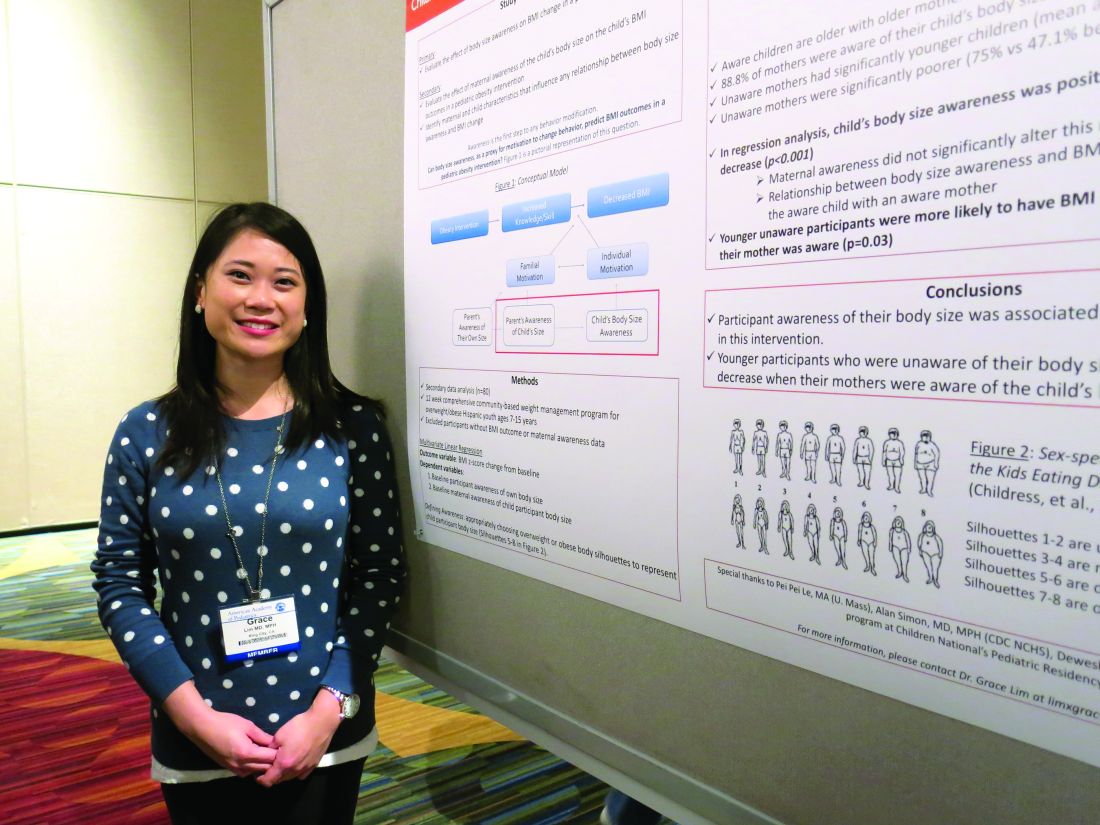

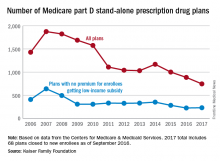

SAN FRANCISCO – Body-size awareness was associated with a decrease in BMI among Latino children and teens, said Grace Lim, MD, a pediatrician in King City, Calif.

Awareness of body size, used as a proxy for motivation to change behavior, was assessed by comparing each child's choice among sex-specific body silhouettes with his or her actual body mass index (BMI) z-score. Dr. Lim and her coauthor, Dr. Nazrat Mirza, conducted the study at Children’s National Medical Center in Washington among 80 overweight or obese Latino youths aged 7-15 years who were taking part in a 12-week, community-based weight management program. Overall, 68% of participants demonstrated awareness of overweight or obese body sizes. These children were more likely to be older (P less than .001) and to have older mothers (P = .02). Body-size awareness in the child was positively associated with a decrease in BMI during the intervention period (P less than .001).
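
The study compared silhouette choices with BMI z-scores. The sketch below illustrates, under stated assumptions, how a pediatric BMI z-score is commonly derived with the LMS method (the approach behind CDC growth-chart z-scores) and how an awareness flag could then be computed. The L, M, and S values are hypothetical placeholders rather than real reference values, the 85th/95th-percentile cutoffs are the conventional pediatric definitions of overweight and obesity, and `shows_awareness` is a hypothetical rule, not the classification the investigators used.

```python
import math

def bmi(weight_kg: float, height_m: float) -> float:
    """Body mass index in kg/m^2."""
    return weight_kg / height_m ** 2

def lms_z_score(value: float, L: float, M: float, S: float) -> float:
    """Convert a measurement to a z-score with the LMS method.

    L (Box-Cox power), M (median), and S (coefficient of variation) come
    from an age- and sex-specific reference table.
    """
    if L == 0:
        return math.log(value / M) / S
    return ((value / M) ** L - 1) / (L * S)

# z-scores at the 85th and 95th percentiles of a standard normal distribution
Z_85TH = 1.036
Z_95TH = 1.645

def weight_category(z: float) -> str:
    """Conventional pediatric cutoffs: >= 85th percentile overweight, >= 95th obese."""
    if z >= Z_95TH:
        return "obese"
    if z >= Z_85TH:
        return "overweight"
    return "not overweight"

def shows_awareness(chosen_silhouette: str, z: float) -> bool:
    """Hypothetical rule: the child picks an overweight or obese silhouette
    and the measured BMI z-score is also in the overweight or obese range."""
    heavy = {"overweight", "obese"}
    return chosen_silhouette in heavy and weight_category(z) in heavy

# Hypothetical child (60 kg, 1.50 m) and hypothetical LMS parameters (not CDC values).
z = lms_z_score(bmi(60.0, 1.50), L=-2.0, M=18.0, S=0.13)
print(weight_category(z), shows_awareness("obese", z))
```

Because all participants were overweight or obese by design, a rule like this reduces in practice to whether the child picked a heavier silhouette.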

Dr. Lim said that she had no relevant financial disclosures.

AT AAP 16

Key clinical point:

Major finding: 68% of the 80 children demonstrated awareness of overweight or obese body sizes, and awareness was associated with a decrease in BMI (P less than .001).

Data source: A study of 80 overweight or obese Latino youths aged 7-15 years taking part in a 12-week, community-based weight management program.

Disclosures: Dr. Lim said that she had no relevant financial disclosures.

He/O2 does not reduce NIV failure in severe COPD exacerbations

Inhaling He/O2 did not result in a lower NIV failure rate than inhaling Air/O2 in COPD patients requiring noninvasive ventilation, in a randomized, controlled study.

In the study, known as the E.C.H.O.ICU trial, patients received either He/O2 (a 78%/22% mixture blended with 100% O2) or a conventional Air/O2 mixture for up to 72 hours, during both noninvasive ventilation (NIV) and spontaneous breathing.

Previous research had demonstrated that during hypercapnic COPD exacerbations, the He/O2 mixture reduces airway resistance, partial pressure of carbon dioxide in arterial blood (PaCO2), intrinsic positive end-expiratory pressure, and work of breathing during both spontaneous breathing and NIV, compared with Air/O2, said Philippe Jolliet, MD, and his colleagues.

The two treatment groups in the E.C.H.O.ICU trial had similar NIV failure rates – defined as endotracheal intubation or death without intubation. The rates were 14.7% for the patients who received He/O2 and 14.5% for the patients who received Air/O2. The NIV failures for 31 of the patients in the He/O2 group resulted in intubation; the remaining 2 patients classified as having NIV failure died. All 32 of the patients in the Air/O2 group who had NIV failures were intubated.
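
The article gives failure counts and rates but not the size of each arm. As a hedged arithmetic check, the sketch below searches for splits of the 445 enrolled patients that reproduce both reported rates after rounding to one decimal place. The helper name is hypothetical, and the result is an inference from the published figures, not a number reported in the paper.

```python
HE_O2_FAILURES = 31 + 2   # 31 intubations plus 2 deaths without intubation
AIR_O2_FAILURES = 32      # all intubated

def consistent_splits(total: int, fail_a: int, rate_a: float,
                      fail_b: int, rate_b: float) -> list[tuple[int, int]]:
    """Return (n_a, n_b) pairs with n_a + n_b == total whose failure
    percentages round, to one decimal place, to the reported rates."""
    splits = []
    for n_a in range(1, total):
        n_b = total - n_a
        if (round(100 * fail_a / n_a, 1) == rate_a
                and round(100 * fail_b / n_b, 1) == rate_b):
            splits.append((n_a, n_b))
    return splits

splits = consistent_splits(445, HE_O2_FAILURES, 14.7, AIR_O2_FAILURES, 14.5)
print(splits)  # [(224, 221), (225, 220)]
```

Either way the arms were nearly equal in size, so the 14.7% vs. 14.5% gap reflects 33 vs. 32 failures.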

The length of ICU stay was also comparable between the two groups. In the subgroups of patients with severe acidosis (having a pH of less than 7.30) from both the He/O2 and Air/O2 groups, the NIV failure rates were again nearly identical (AJRCCM. 2016 Oct 13; doi: 10.1164/rccm.201601-0083OC).

The average time to NIV failure was 93 hours among the 33 patients with NIV failure in the He/O2 group and 52 hours among the 32 such patients in the Air/O2 group (P = .12). The He/O2 group achieved a significantly quicker improvement in respiratory acidosis, encephalopathy score, and respiratory rate.

Among patients intubated after NIV failure, those who had received He/O2 had a shorter duration of ventilation (7.4 vs. 13.6 days; P = .02) and a shorter ICU stay (15.8 vs. 26.7 days; P = .01) than did those who had received Air/O2.

No significant differences appeared in the safety profile of the two groups, and there were no significant differences in ICU, hospital, or 6-month mortality or in 6-month hospital readmission rates.

“[The] study was stopped prematurely after a futility analysis due [to] the low event rate identified by [an independent adjudication committee],” said Dr. Jolliet of the intensive care and burn unit at Le Centre Hospitalier Universitaire Vaudois (CHUV), in Lausanne, Switzerland, and his fellow researchers.

The study included 445 patients from ICUs or intermediate care units in six countries. Inclusion criteria were a current COPD exacerbation with hypercapnic acute respiratory failure, a PaCO2 of at least 45 mm Hg, an arterial pH of 7.35 or less, and at least one of the following: a respiratory rate of at least 25 breaths per minute, a PaO2 of 50 mm Hg or less, or an arterial oxygen saturation of 90% or less.
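
The inclusion rule combines two required thresholds with an any-of-three criterion. A minimal sketch of that logic follows, assuming the thresholds are applied inclusively exactly as stated. The class and function names are hypothetical, the clinical diagnosis of a hypercapnic COPD exacerbation is reduced to a boolean flag, and the severe-acidosis check mirrors the pH below 7.30 subgroup described in the article.

```python
from dataclasses import dataclass

@dataclass
class Measurements:
    """Hypothetical container for the values the inclusion rule checks."""
    paco2_mmhg: float   # arterial partial pressure of CO2, mm Hg
    ph: float           # arterial pH
    resp_rate: float    # breaths per minute
    pao2_mmhg: float    # arterial partial pressure of O2, mm Hg
    sao2_pct: float     # arterial oxygen saturation, %

def meets_inclusion(m: Measurements, hypercapnic_copd_exacerbation: bool) -> bool:
    """Both required criteria plus at least one of the three distress criteria."""
    if not hypercapnic_copd_exacerbation:
        return False
    required = m.paco2_mmhg >= 45 and m.ph <= 7.35
    any_of = m.resp_rate >= 25 or m.pao2_mmhg <= 50 or m.sao2_pct <= 90
    return required and any_of

def severe_acidosis(m: Measurements) -> bool:
    """Subgroup analyzed separately in the trial: pH below 7.30."""
    return m.ph < 7.30

# Hypothetical patient: qualifies through oxygen saturation alone.
patient = Measurements(paco2_mmhg=62, ph=7.28, resp_rate=22, pao2_mmhg=58, sao2_pct=88)
print(meets_inclusion(patient, True), severe_acidosis(patient))  # True True
```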

Half of the patients in each group were already receiving NIV before enrollment. Men made up two-thirds of all enrolled patients.

He/O2 administration was limited to 72 hours for each patient, and about a third of NIV failures occurred after He/O2 administration had ended, the researchers said.

“The main reason for the absence of observed benefit on outcome in the He/O2 [group] probably lies in the very low NIV failure rate now observed in both groups. One possible mechanism explaining the low intubation rate could be that some patients had received uncontrolled oxygen therapy prior to ICU admission, thereby worsening initial hypercapnia and acidosis, a problem that can easily be corrected by adequate titration,” the researchers said. “The 14.5% failure rate in the Air/O2 group was much lower than the 25% rate used in designing the study,” a figure that had been based on previous research.

The trial’s sponsor, Air Liquide Healthcare, provided input into the design and conduct of the study; oversaw the collection, management, and statistical analysis of data; and contributed to the manuscript’s preparation and review.

FROM AJRCCM

Key clinical point:

Major finding: The trial was stopped prematurely because of a low event rate. The NIV failure rates for patients who received Air/O2 and He/O2 were 14.5% (32 patients) and 14.7% (33 patients; P = .97), respectively.

Data source: A prospective, open-label, randomized, controlled trial of 445 patients.

Disclosures: Air Liquide Healthcare sponsored and contributed to the study.

Comorbidities common in COPD patients

LOS ANGELES – Comorbidities are common in patients with chronic obstructive pulmonary disease, especially cardiovascular disease, diabetes, anemia, and osteoporosis, results from a single-center analysis showed.

“These affect the course and outcome of COPD, so identification and treatment of these comorbidities is very important,” Hamdy Mohammadien, MD, said in an interview in advance of the annual meeting of the American College of Chest Physicians.

To estimate the prevalence of comorbidities in patients with COPD and to assess the relationship of comorbid diseases with age, sex, C-reactive protein (CRP) level, and COPD severity, Dr. Mohammadien and his associates at Sohag (Egypt) University retrospectively evaluated 400 COPD patients who were at least 40 years of age. Patients with bronchial asthma or other lung diseases were excluded from the analysis. The mean age of patients was 62 years, 69% were male, and 36% were current smokers. Their mean ratio of forced expiratory volume in 1 second to forced vital capacity (FEV1/FVC) was 48%, and 57% had two or more exacerbations in the previous year.

Dr. Mohammadien reported that all patients had at least one comorbidity. The most common were cardiovascular diseases (85%), diabetes (35%), dyslipidemia (23%), osteopenia (11%), anemia (10%), muscle wasting (9%), pneumonia (7%), osteoporosis (6%), gastroesophageal reflux disease (GERD, 2%), and lung cancer (2%).

He also noted that the association between cardiovascular events, dyslipidemia, diabetes, osteoporosis, muscle wasting, and anemia was highly significant in COPD patients aged 60 years and older, in men, and in patients with stage III and IV COPD. In addition, a significant relationship was observed between a positive CRP level and each comorbidity except GERD and lung cancer; the three comorbidities with the most significant associations were ischemic heart disease (P = .0001), dyslipidemia (P = .0001), and pneumonia (P = .0003). Finally, frequent exacerbators were significantly more likely to have two or more comorbidities (odds ratio, 2; P = .04) and to have had more hospitalizations in the past year (P less than .01).
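
Because a patient can have several comorbidities, the prevalences above add up to well over 100%. The sketch below converts the reported percentages into approximate patient counts for the 400-patient cohort and sums them. The dictionary only restates the reported figures; the counts are back-calculated approximations (the percentages are rounded), not numbers reported by the investigators, and the helper name is hypothetical.

```python
COHORT_SIZE = 400

# Reported prevalence (%) of each comorbidity in the cohort.
PREVALENCE_PCT = {
    "cardiovascular disease": 85,
    "diabetes": 35,
    "dyslipidemia": 23,
    "osteopenia": 11,
    "anemia": 10,
    "muscle wasting": 9,
    "pneumonia": 7,
    "osteoporosis": 6,
    "GERD": 2,
    "lung cancer": 2,
}

def approximate_counts(prevalence_pct: dict[str, int], n: int) -> dict[str, int]:
    """Back-calculate approximate patient counts from rounded percentages."""
    return {name: round(n * pct / 100) for name, pct in prevalence_pct.items()}

counts = approximate_counts(PREVALENCE_PCT, COHORT_SIZE)
# Summing prevalences gives the mean number of listed conditions per patient.
mean_listed = sum(PREVALENCE_PCT.values()) / 100

print(counts["cardiovascular disease"], counts["diabetes"], counts["dyslipidemia"])  # 340 140 92
print(f"{mean_listed:.1f} listed comorbidities per patient on average")  # 1.9
```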

“Comorbidities are common in patients with COPD, and have a significant impact on health status and prognosis, thus justifying the need for a comprehensive and integrating therapeutic approach,” Dr. Mohammadien said at the meeting. “In the management of COPD all these conditions need to be carefully evaluated and treated.”

He acknowledged certain limitations of the study, including its relatively small sample size and the fact that bone density was measured by ultrasound rather than by dual-energy x-ray absorptiometry. Dr. Mohammadien reported having no financial disclosures.

AT CHEST 2016

Key clinical point:

Major finding: The three most common comorbidities in COPD patients were cardiovascular diseases (85%), diabetes (35%), and dyslipidemia (23%).

Data source: A retrospective study of 400 patients with COPD.

Disclosures: Dr. Mohammadien reported having no financial disclosures.

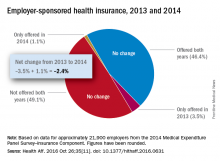

Employer-provided insurance stable after ACA implementation

Concerns that Affordable Care Act (ACA) provisions implemented in 2014 would lead large numbers of employers to drop health insurance coverage appear to have been unfounded, according to a study published in the journal Health Affairs.

More than 95% of employers did not change their insurance coverage policy between 2013 and 2014: 46.4% offered coverage in both years, and 49.1% offered it in neither year. Of the 21,900 private-sector employers included in the analysis, 3.5% provided coverage in 2013 but not in 2014, and 1.1% did not offer it in 2013 but did in 2014, reported Jean Abraham, PhD, of the University of Minnesota, Minneapolis, and her associates (Health Aff. 2016 Oct 26;35[11]. doi: 10.1377/hlthaff.2016.0631).
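
The four reported shares form a 2 × 2 table of coverage status in 2013 vs. 2014. The sketch below tallies that table to show the stable share, the share offering coverage in each year, and the rounding in the published figures. It works only with the published percentages and does not convert them to employer counts; the names are hypothetical.

```python
# Shares of employers (%) by coverage status in (2013, 2014), as reported.
TRANSITIONS = {
    ("offered", "offered"): 46.4,
    ("none", "none"): 49.1,
    ("offered", "none"): 3.5,
    ("none", "offered"): 1.1,
}

def share(predicate) -> float:
    """Sum the percentages of all (2013, 2014) cells that satisfy predicate."""
    return sum(pct for (y2013, y2014), pct in TRANSITIONS.items()
               if predicate(y2013, y2014))

unchanged = share(lambda a, b: a == b)
offered_2013 = share(lambda a, b: a == "offered")
offered_2014 = share(lambda a, b: b == "offered")
total = share(lambda a, b: True)

print(f"unchanged: {unchanged:.1f}%")                                    # 95.5%
print(f"offering coverage: {offered_2013:.1f}% -> {offered_2014:.1f}%")  # 49.9% -> 47.5%
print(f"all cells: {total:.1f}%")                                        # 100.1% (rounding)
```

On these figures, the share of employers offering coverage fell by about 2.4 percentage points between the two years.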

The analysis of data from the 2014 Medical Expenditure Panel Survey–Insurance Component, however, did show associations between coverage changes and several workforce and employer characteristics. “Small firms were more likely to drop coverage compared to large ones, as were those with more low-wage workers compared to those with fewer such workers, newer establishments compared to older ones, and those in the service sector compared to those in blue- and white-collar industries,” Dr. Abraham and her associates wrote.

The Robert Wood Johnson Foundation’s State Health Access Reform Evaluation program supported the study. No other financial disclosures were provided.

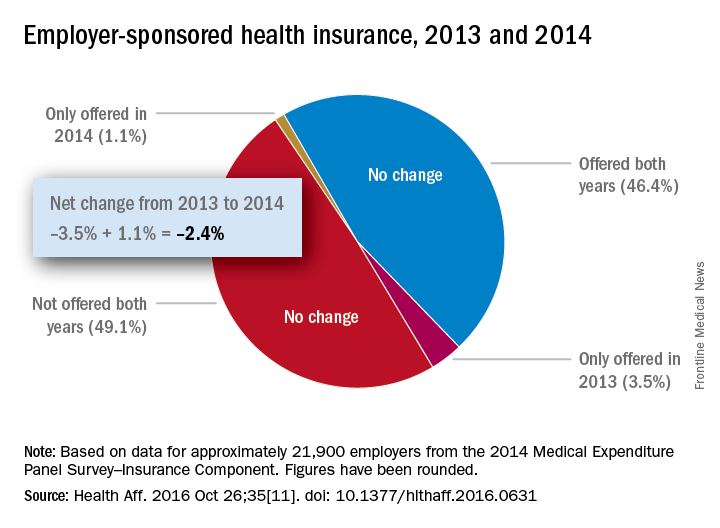

Number of Medicare part D drug plans continues to decline

There will be 16% fewer Medicare part D prescription drug plans available in 2017, compared with 2016, according to an analysis by the Kaiser Family Foundation.

In 2017, there will be 746 stand-alone prescription drug plans available to Medicare beneficiaries in the 50 states and the District of Columbia, which is 140 (16%) fewer than were available in 2016 and 1,129 (60%) fewer than at the peak in 2007, Kaiser reported.
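
The percentages follow from the plan counts: the 2016 and 2007 totals are implied by adding each reported decline back to the 2017 figure. A small sketch of that arithmetic, with hypothetical constant names:

```python
PLANS_2017 = 746
FEWER_THAN_2016 = 140
FEWER_THAN_2007_PEAK = 1129

plans_2016 = PLANS_2017 + FEWER_THAN_2016          # implied 2016 count
plans_2007 = PLANS_2017 + FEWER_THAN_2007_PEAK     # implied 2007 peak
pct_drop_since_2016 = 100 * FEWER_THAN_2016 / plans_2016
pct_drop_since_2007 = 100 * FEWER_THAN_2007_PEAK / plans_2007

print(plans_2016, f"{pct_drop_since_2016:.1f}%")   # 886 15.8%
print(plans_2007, f"{pct_drop_since_2007:.1f}%")   # 1875 60.2%
```

Rounded to whole numbers, these match the 16% and 60% declines Kaiser reported.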

Of the nearly 41 million people enrolled in Medicare part D plans in 2016, about 12 million receive the low-income subsidy. The Congressional Budget Office estimates that spending on part D benefits will be $94 billion in 2017, which is 15.6% of overall Medicare costs. Medicare actuaries have projected that the cost per enrollee will increase by 5.8% annually from 2015 to 2025, Kaiser reported.
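
Three derived figures follow from these numbers: the total Medicare spending implied by the 15.6% share, the fraction of enrollees receiving the low-income subsidy, and the cumulative effect of 5.8% annual growth over 2015-2025. The sketch below computes them, assuming the growth rate compounds once per year for 10 years. Because the inputs are rounded ("nearly 41 million," "about 12 million"), the outputs are approximations rather than reported values.

```python
PART_D_SPENDING_2017_BN = 94.0
PART_D_SHARE_OF_MEDICARE = 0.156
ENROLLEES_M = 41.0        # "nearly 41 million"
LIS_ENROLLEES_M = 12.0    # "about 12 million"
ANNUAL_GROWTH = 0.058
YEARS = 2025 - 2015       # assumption: 10 annual compounding steps

implied_total_medicare_bn = PART_D_SPENDING_2017_BN / PART_D_SHARE_OF_MEDICARE
lis_share = LIS_ENROLLEES_M / ENROLLEES_M
cumulative_growth = (1 + ANNUAL_GROWTH) ** YEARS

print(f"Implied total Medicare spending: ${implied_total_medicare_bn:.0f} billion")  # $603 billion
print(f"Share receiving low-income subsidy: {lis_share:.0%}")                         # 29%
print(f"Cost per enrollee, 2025 vs 2015: x{cumulative_growth:.2f}")                   # x1.76
```

Compounded this way, 5.8% a year works out to roughly a 76% increase in cost per enrollee over the decade.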

Woman with rash in groin

The family physician (FP) suspected that the patient had tinea cruris, but had never seen it spread so far beyond the inguinal area. The rash had central clearing, which is typical of tinea corporis but is seen less often with tinea cruris. The FP performed a potassium hydroxide (KOH) preparation, which was positive for branching septate hyphae.

The FP discussed the treatment options: topical vs oral antifungal medication. The patient was willing to try topical terbinafine and return for a follow-up appointment in a month. The FP told the patient that he would prescribe oral terbinafine if the topical terbinafine didn’t work. One month later, the skin had cleared and the patient was happy with the results.

Photos and text for Photo Rounds Friday courtesy of Richard P. Usatine, MD. This case was adapted from: Usatine R, Smith M. Tinea cruris. In: Usatine R, Smith M, Mayeaux EJ, et al, eds. Color Atlas of Family Medicine. 2nd ed. New York, NY: McGraw-Hill; 2013:795-798.

To learn more about the Color Atlas of Family Medicine, see: www.amazon.com/Color-Family-Medicine-Richard-Usatine/dp/0071769641/

The second edition of the Color Atlas of Family Medicine is also available as an app at usatinemedia.com.

Improving Hospital Telemetry Usage

Hospitalists often rely on inpatient telemetry monitoring to identify arrhythmias, ischemia, and QT prolongation, but research has shown that inappropriate use of telemetry increases costs to the healthcare system. An abstract presented at the 2016 meeting of the Society of Hospital Medicine examined one hospital’s telemetry use and how it might be improved.

The study centered on a progress note template, developed by the authors, that incorporated documentation of the indications for telemetry and of the need to continue telemetry on non-ICU internal medicine services. The authors also gave internal medicine residents an educational session on the American College of Cardiology and American Heart Association (ACC/AHA) telemetry use guidelines, with a pretest and posttest.

Application of the ACC/AHA guidelines was assessed with five scenarios before and after instruction on the guidelines. On the pretest, only 29% of trainees answered all five questions correctly; on the posttest, 63% did. A comparison of 2015 charts of admitted patients with telemetry orders with charts from 2013 indicated that both the appropriate initiation of telemetry and telemetry documentation improved significantly. Inappropriate continuation rates were cut in half.

Building on the study’s success, the authors plan further work.

“We plan expansion of telemetry utilization education to internal medicine faculty and nursing to encourage daily review of telemetry usage,” the authors write. “We are also working to develop telemetry orders that end during standard work hours to prevent inadvertent continuation by overnight providers.”

Reference

1. Kuehn C, Steyers CM III, Glenn K, Fang M. Resident-based telemetry utilization innovations lead to improved outcomes [abstract]. J Hosp Med. 2016;11(suppl 1). Accessed October 17, 2016.

Measuring Excellent Comportment among Hospitalists

The hospitalist’s performance is among the major determinants of a patient’s hospital experience. But what are the elements of a successful interaction? The authors of an article published in the Journal of Hospital Medicine set out to develop a way to answer that question and measure the answer, to assess hospitalists’ behaviors, and to establish norms and expectations.

“This study represents a first step to specifically characterize comportment and communication in hospital medicine,” the authors write.

Patient satisfaction surveys, they state, have some shortcomings in providing useful answers to that question.

“First, the attribution to specific providers is questionable,” the authors write. “Second, recall about the provider by the patients may be poor because surveys are sent to patients days after they return home. Third, the patients’ recovery and health outcomes are likely to influence their assessment of the doctor. Finally, feedback is known to be most valuable and transformative when it is specific and given in real time.”

Researchers asked the chiefs of hospital medicine divisions at five hospitals to identify their “most clinically excellent” hospitalists. Each hospitalist was observed during a routine clinical shift, and behaviors believed to be associated with excellent comportment and communication were recorded using the hospital medicine comportment and communication observation tool (HMCCOT), the final version of which has 23 variables. The physicians’ HMCCOT scores were associated with their patient satisfaction survey scores, suggesting that improved comportment might translate into enhanced patient satisfaction.

The results showed extensive variability in comportment and communication at the bedside. One variable that stood out to the researchers was that teach-back was employed in only 13% of the encounters.

“Previous studies have shown that teach-back corroborates patient comprehension and can be used to engage patients (and caregivers) in realistic goal setting and optimal health service utilization,” the researchers write. “Further, patients who clearly understand their post-discharge plan are 30% less likely to be readmitted or visit the emergency department. The data for our group have helped us to see areas of strengths, such as hand washing, where we are above compliance rates across hospitals in the United States, as well as those matters that represent opportunities for improvement such as connecting more deeply with our patients.”

The researchers call for future studies to determine whether hospitalists can improve their performance in response to feedback from this tool and whether enhancing comportment and communication can improve both patient satisfaction and clinical outcomes.

Reference

- Kotwal S, Khaliq W, Landis R, Wright S. Developing a comportment and communication tool for use in hospital medicine [published online ahead of print August 13, 2016]. J Hosp Med. doi:10.1002/jhm.2647.

Which Patients With Cancer Best Survive the ICU?

Because cancer is a complex disease, deciding whether to admit a patient with cancer to the intensive care unit (ICU) can be a challenging triage decision. Often the reason for admission is an acute complication related to the cancer or its treatment. Understanding how such complications might affect the patient’s outcome is critical to planning care, gauging use of ICU resources, and counseling patients and their families.

Although some studies have identified important determinants of mortality, the existing literature is “scarce,” say researchers who studied outcomes in ICU patients with cancer. They analyzed data from 2 cohort studies of 1,737 patients with solid tumors and 291 with hematologic malignancies. Of those, 456 (23%) had cancer-related complications at ICU admission, most frequently chemotherapy- and radiation therapy-related toxicity, venous thromboembolism (VTE), and respiratory failure due to tumor.

Patients with complications tended to have worse performance status scores and active disease. They also were more likely to have more severe organ dysfunction, a greater need for invasive support, and infection at ICU admission.

Complications occurred more often in patients with metastatic solid tumors, particularly lung and breast cancer (though less often in patients with gastrointestinal [GI] tumors), and in patients with more aggressive hematologic malignancies, especially acute leukemia and aggressive non-Hodgkin lymphoma.

Their study had several major findings, the researchers say. First, 1 in 4 patients with cancer admitted to the ICU had acute complications related to the underlying cancer or to adverse effects of treatment. And although the cancer-related complications were numerous and varied in prognostic impact, and mortality rates were high, outcomes in these patients were “better than perceived a priori,” they note.

A high Sequential Organ Failure Assessment score on the first day of ICU stay, worse performance status, and need for mechanical ventilation were independent predictors of mortality, all consistent with the current literature. However, among the individual cancer-related complications studied, only vena cava syndrome, GI involvement, and respiratory failure were independently associated with in-hospital mortality. The “substantial” mortality rate (73%) among patients with GI involvement underscores the importance of discussing whether ICU admission is appropriate for these patients, the researchers caution. Although VTE was one of the most common complications, it was not a major determinant of outcome.

Another important point, the researchers note, was the high frequency of chemotherapy- or radiation therapy-induced toxicity. Treatment-related neutropenia, however, is not a useful predictor of outcome, they say, citing research that has found it is not a relevant predictor of mortality.

Source:

Torres VBL, Vassalo J, Silva UVA, et al. PLoS One. 2016;11(10):e0164537. doi:10.1371/journal.pone.0164537.

Targeting CD98 to treat AML

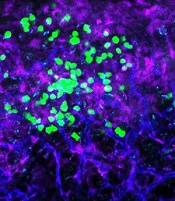

with blood vessels (blue). Image courtesy of UC San Diego Health

Preclinical research suggests the cell surface molecule CD98 promotes acute myeloid leukemia (AML), and the anti-CD98 antibody IGN523 can inhibit AML growth.

In AML patient cells and mouse models of the disease, IGN523 disrupted the interactions between leukemia cells and the surrounding blood vessels, thereby inhibiting the growth of AML.

Tannishtha Reya, PhD, of the University of California San Diego School of Medicine, and her colleagues reported these findings in Cancer Cell.

The team believes their results suggest IGN523 or other anti-CD98 antibodies might be useful for treating AML, particularly in children.

However, in a phase 1 study presented at the 2015 ASH Annual Meeting, IGN523 demonstrated only modest anti-leukemic activity in adults with AML.

Still, the researchers involved in the phase 1 study said IGN523 may prove effective in combination with other drugs used to treat AML.

Cancer Cell study

“To improve therapeutic strategies for [AML], we need to look not just at the cancer cells themselves but also at their interactions with surrounding cells, tissues, molecules, and blood vessels in the body,” Dr Reya said.

“In this study, we identified CD98 as a critical molecule driving AML growth. We showed that blocking CD98 can effectively reduce leukemia burden and improve survival by preventing cancer cells from receiving support from the surrounding environment.”

Dr Reya’s team engineered mouse models that lacked CD98 and found that loss of this molecule blocked AML growth and improved survival. Furthermore, CD98 loss largely spared normal blood cells, which the researchers said indicates a potential therapeutic window.

Additional experiments revealed that leukemia cells lacking CD98 had fewer stable interactions with the lining of blood vessels, interactions that were needed to fuel AML growth.

The researchers then tested the effects of blocking CD98 with a therapeutic inhibitor, IGN523, and found that it blocks CD98’s AML-promoting activity in mouse models and human AML cells.

The researchers also transplanted patient-derived AML cells into mice and treated the recipients with either IGN523 or a control antibody. Anti-CD98 treatment effectively eliminated AML cells, while AML in the control mice expanded more than 100-fold.

“This study suggests that human AML can’t get established without CD98 and that blocking the molecule with anti-CD98 antibodies could be beneficial for the treatment of AML in both adults and children,” Dr Reya said.

Moving forward, Dr Reya and her colleagues are working to further define whether targeting CD98 could be used to treat pediatric AML.

“Many of the models we used in this work were based on mutations found in childhood AML,” Dr Reya said. “While many childhood cancers have become very treatable, childhood AML continues to have a high rate of relapse and death.”

“We plan to work with pediatric oncologists to test if anti-CD98 agents can be effective against pediatric AML and whether it can improve responses to current treatments. I think this is particularly important to pursue since the anti-CD98 antibody has already been through phase 1 trials and could be more easily positioned to test in drug-resistant pediatric AML.”

Igenica Biotherapeutics Inc., the company developing IGN523, provided the drug for this study, and one of the study’s authors is an employee of the company.

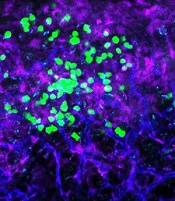

with blood vessels (blue).

Image courtesy of

UC San Diego Health

Preclinical research suggests the cell surface molecule CD98 promotes acute myeloid leukemia (AML), and the anti-CD98 antibody IGN523 can inhibit AML growth.

In AML patient cells and mouse models of the disease, IGN523 disrupted the interactions between leukemia cells and the surrounding blood vessels, thereby inhibiting the growth of AML.

Tannishtha Reya, PhD, of the University of California San Diego School of Medicine, and her colleagues reported these findings in Cancer Cell.

The team believes their results suggest IGN523 or other anti-CD98 antibodies might be useful for treating AML, particularly in children.

However, in a phase 1 study presented at the 2015 ASH Annual Meeting, IGN523 demonstrated only modest anti-leukemic activity in adults with AML.

Still, the researchers involved in the phase 1 study said IGN523 may prove effective in combination with other drugs used to treat AML.

Cancer Cell study

“To improve therapeutic strategies for [AML], we need to look not just at the cancer cells themselves but also at their interactions with surrounding cells, tissues, molecules, and blood vessels in the body,” Dr Reya said.

“In this study, we identified CD98 as a critical molecule driving AML growth. We showed that blocking CD98 can effectively reduce leukemia burden and improve survival by preventing cancer cells from receiving support from the surrounding environment.”

Dr Reya’s team engineered mouse models that lacked CD98 and found that loss of this molecule blocked AML growth and improved survival. Furthermore, CD98 loss largely spared normal blood cells, which the researchers said indicates a potential therapeutic window.

Additional experiments revealed that leukemia cells lacking CD98 had fewer stable interactions with the lining of blood vessels—interactions that were needed to fuel AML growth.

So the researchers decided to test the effects of blocking CD98 with a therapeutic inhibitor—IGN523. The team found that IGN523 blocks CD98’s AML-promoting activity in mouse models and human AML cells.

The researchers also transplanted patient-derived AML cells into mice and treated the recipients with either IGN523 or a control antibody. Anti-CD98 treatment effectively eliminated AML cells, while AML in the control mice expanded more than 100-fold.

“This study suggests that human AML can’t get established without CD98 and that blocking the molecule with anti-CD98 antibodies could be beneficial for the treatment of AML in both adults and children,” Dr Reya said.

Moving forward, Dr Reya and her colleagues are working to further define whether CD98 could be used to treat pediatric AML.

“Many of the models we used in this work were based on mutations found in childhood AML,” Dr Reya said. “While many childhood cancers have become very treatable, childhood AML continues to have a high rate of relapse and death.”

“We plan to work with pediatric oncologists to test if anti-CD98 agents can be effective against pediatric AML and whether it can improve responses to current treatments. I think this is particularly important to pursue since the anti-CD98 antibody has already been through phase 1 trials and could be more easily positioned to test in drug-resistant pediatric AML.”

Igenica Biotherapeutics Inc., the company developing IGN523, provided the drug for this study, and one of the study’s authors is an employee of the company. ![]()

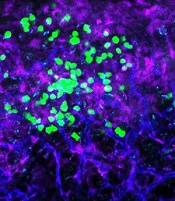

with blood vessels (blue).

Image courtesy of

UC San Diego Health

Preclinical research suggests the cell surface molecule CD98 promotes acute myeloid leukemia (AML), and the anti-CD98 antibody IGN523 can inhibit AML growth.

In AML patient cells and mouse models of the disease, IGN523 disrupted the interactions between leukemia cells and the surrounding blood vessels, thereby inhibiting the growth of AML.

Tannishtha Reya, PhD, of the University of California San Diego School of Medicine, and her colleagues reported these findings in Cancer Cell.

The team believes their results suggest IGN523 or other anti-CD98 antibodies might be useful for treating AML, particularly in children.

However, in a phase 1 study presented at the 2015 ASH Annual Meeting, IGN523 demonstrated only modest anti-leukemic activity in adults with AML.

Still, the researchers involved in the phase 1 study said IGN523 may prove effective in combination with other drugs used to treat AML.

Cancer Cell study

“To improve therapeutic strategies for [AML], we need to look not just at the cancer cells themselves but also at their interactions with surrounding cells, tissues, molecules, and blood vessels in the body,” Dr Reya said.

“In this study, we identified CD98 as a critical molecule driving AML growth. We showed that blocking CD98 can effectively reduce leukemia burden and improve survival by preventing cancer cells from receiving support from the surrounding environment.”

Dr Reya’s team engineered mouse models that lacked CD98 and found that loss of this molecule blocked AML growth and improved survival. Furthermore, CD98 loss largely spared normal blood cells, which the researchers said indicates a potential therapeutic window.

Additional experiments revealed that leukemia cells lacking CD98 had fewer stable interactions with the lining of blood vessels—interactions that were needed to fuel AML growth.

So the researchers decided to test the effects of blocking CD98 with a therapeutic inhibitor—IGN523. The team found that IGN523 blocks CD98’s AML-promoting activity in mouse models and human AML cells.

The researchers also transplanted patient-derived AML cells into mice and treated the recipients with either IGN523 or a control antibody. Anti-CD98 treatment effectively eliminated AML cells, while AML in the control mice expanded more than 100-fold.

“This study suggests that human AML can’t get established without CD98 and that blocking the molecule with anti-CD98 antibodies could be beneficial for the treatment of AML in both adults and children,” Dr Reya said.

Moving forward, Dr Reya and her colleagues are working to further define whether CD98 could be used to treat pediatric AML.

“Many of the models we used in this work were based on mutations found in childhood AML,” Dr Reya said. “While many childhood cancers have become very treatable, childhood AML continues to have a high rate of relapse and death.”

“We plan to work with pediatric oncologists to test if anti-CD98 agents can be effective against pediatric AML and whether it can improve responses to current treatments. I think this is particularly important to pursue since the anti-CD98 antibody has already been through phase 1 trials and could be more easily positioned to test in drug-resistant pediatric AML.”

Igenica Biotherapeutics Inc., the company developing IGN523, provided the drug for this study, and one of the study’s authors is an employee of the company. ![]()